114 results in 68Qxx

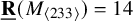

Polarised random $k$-SAT

- Journal: Combinatorics, Probability and Computing / Volume 32 / Issue 6 / November 2023

- Published online by Cambridge University Press: 20 July 2023, pp. 885-899

- Article

- Open access

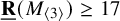

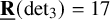

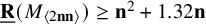

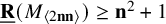

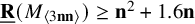

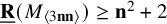

New lower bounds for matrix multiplication and $\operatorname{det}_3$

- Journal: Forum of Mathematics, Pi / Volume 11 / 2023

- Published online by Cambridge University Press: 29 May 2023, e17

- Article

- Open access

The first-order theory of binary overlap-free words is decidable

- Journal: Canadian Journal of Mathematics / Volume 76 / Issue 4 / August 2024

- Published online by Cambridge University Press: 26 May 2023, pp. 1144-1162

- Print publication: August 2024

- Article

- Open access

Stable finiteness of twisted group rings and noisy linear cellular automata

- Journal: Canadian Journal of Mathematics / Volume 76 / Issue 4 / August 2024

- Published online by Cambridge University Press: 22 May 2023, pp. 1089-1108

- Print publication: August 2024

- Article

- Open access

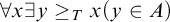

SOME CONSEQUENCES OF ${\mathrm{TD}}$ AND ${\mathrm{sTD}}$

- Journal: The Journal of Symbolic Logic / Volume 88 / Issue 4 / December 2023

- Published online by Cambridge University Press: 15 May 2023, pp. 1573-1589

- Print publication: December 2023

- Article

Rigid continuation paths II. structured polynomial systems

- Journal: Forum of Mathematics, Pi / Volume 11 / 2023

- Published online by Cambridge University Press: 14 April 2023, e12

- Article

- Open access

Asymptotic normality for $\boldsymbol{m}$-dependent and constrained $\boldsymbol{U}$-statistics, with applications to pattern matching in random strings and permutations

- Journal: Advances in Applied Probability / Volume 55 / Issue 3 / September 2023

- Published online by Cambridge University Press: 28 March 2023, pp. 841-894

- Print publication: September 2023

- Article

MULTIPLICATION TABLES AND WORD-HYPERBOLICITY IN FREE PRODUCTS OF SEMIGROUPS, MONOIDS AND GROUPS

- Journal: Journal of the Australian Mathematical Society / Volume 115 / Issue 3 / December 2023

- Published online by Cambridge University Press: 17 March 2023, pp. 396-430

- Print publication: December 2023

- Article

- Open access

An efficient method for generating a discrete uniform distribution using a biased random source

- Journal: Journal of Applied Probability / Volume 60 / Issue 3 / September 2023

- Published online by Cambridge University Press: 07 March 2023, pp. 1069-1078

- Print publication: September 2023

- Article

THE ZHOU ORDINAL OF LABELLED MARKOV PROCESSES OVER SEPARABLE SPACES

- Journal: The Review of Symbolic Logic / Volume 16 / Issue 4 / December 2023

- Published online by Cambridge University Press: 27 February 2023, pp. 1011-1032

- Print publication: December 2023

- Article

Functional norms, condition numbers and numerical algorithms in algebraic geometry

- Journal: Forum of Mathematics, Sigma / Volume 10 / 2022

- Published online by Cambridge University Press: 22 November 2022, e103

- Article

- Open access

On images of subshifts under embeddings of symbolic varieties

- Journal: Ergodic Theory and Dynamical Systems / Volume 43 / Issue 9 / September 2023

- Published online by Cambridge University Press: 01 August 2022, pp. 3131-3149

- Print publication: September 2023

- Article

- Open access

IS CAUSAL REASONING HARDER THAN PROBABILISTIC REASONING?

- Journal: The Review of Symbolic Logic / Volume 17 / Issue 1 / March 2024

- Published online by Cambridge University Press: 18 May 2022, pp. 106-131

- Print publication: March 2024

- Article

SOLENOIDAL MAPS, AUTOMATIC SEQUENCES, VAN DER PUT SERIES, AND MEALY AUTOMATA

- Journal: Journal of the Australian Mathematical Society / Volume 114 / Issue 1 / February 2023

- Published online by Cambridge University Press: 06 April 2022, pp. 78-109

- Print publication: February 2023

- Article

GAPS IN THE THUE–MORSE WORD

- Journal: Journal of the Australian Mathematical Society / Volume 114 / Issue 1 / February 2023

- Published online by Cambridge University Press: 25 January 2022, pp. 110-144

- Print publication: February 2023

- Article

A MINIMAL SET LOW FOR SPEED

- Journal: The Journal of Symbolic Logic / Volume 87 / Issue 4 / December 2022

- Published online by Cambridge University Press: 03 January 2022, pp. 1693-1728

- Print publication: December 2022

- Article

Tuning as convex optimisation: a polynomial tuner for multi-parametric combinatorial samplers

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 5 / September 2022

- Published online by Cambridge University Press: 15 December 2021, pp. 765-811

- Article

Topology of random $2$-dimensional cubical complexes

- Journal: Forum of Mathematics, Sigma / Volume 9 / 2021

- Published online by Cambridge University Press: 29 November 2021, e76

- Article

- Open access

NEW RELATIONS AND SEPARATIONS OF CONJECTURES ABOUT INCOMPLETENESS IN THE FINITE DOMAIN

- Journal: The Journal of Symbolic Logic / Volume 87 / Issue 3 / September 2022

- Published online by Cambridge University Press: 22 November 2021, pp. 912-937

- Print publication: September 2022

- Article

DEGREES OF RANDOMIZED COMPUTABILITY

- Journal: Bulletin of Symbolic Logic / Volume 28 / Issue 1 / March 2022

- Published online by Cambridge University Press: 27 September 2021, pp. 27-70

- Print publication: March 2022

- Article