Kumar & Premachandran, Flow film boiling on a sphere in the mixed and forced convection regimes (doi:10.1017/jfm.2024.514)

JFM Rapids

High-flexibility reconstruction of small-scale motions in wall turbulence using a generalized zero-shot learning

- Published online by Cambridge University Press: 14 August 2024, R1

Fake turbulence

- Published online by Cambridge University Press: 12 August 2024, R2
- Open access

On a similarity solution for lock-release gravity currents affected by slope, drag and entrainment

- Published online by Cambridge University Press: 13 August 2024, R3
- Open access

Focus on Fluids

It takes three to tangle

- Published online by Cambridge University Press: 12 August 2024, F1
- Open access

JFM Papers

Generalizing electroosmotic-flow predictions over charge-modulated periodic topographies: tuneable far-field effects

- Published online by Cambridge University Press: 12 August 2024, A1
- Open access

Turbulence modulation by charged inertial particles in channel flow

- Published online by Cambridge University Press: 12 August 2024, A2

Lagrangian-based simulation method using constrained Stokesian dynamics for particulate flows in microchannel

- Published online by Cambridge University Press: 12 August 2024, A3
- Open access

The impact of non-frozen turbulence on the modelling of the noise from serrated trailing edges

- Published online by Cambridge University Press: 12 August 2024, A4

Transport of inertial spherical particles in compressible turbulent boundary layers

- Published online by Cambridge University Press: 12 August 2024, A5

Pore-scale study of CO₂ desublimation and sublimation in a packed bed during cryogenic carbon capture

- Published online by Cambridge University Press: 12 August 2024, A6
- Open access

- HTML

- Export citation

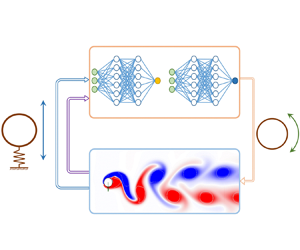

Deep reinforcement learning finds a new strategy for vortex-induced vibration control

- Published online by Cambridge University Press: 12 August 2024, A7
- Open access

Thermodynamically consistent diffuse-interface mixture models of incompressible multicomponent fluids

- Published online by Cambridge University Press: 12 August 2024, A8
- Open access

Perfect active absorption of water waves in a channel by a dipole source

- Published online by Cambridge University Press: 12 August 2024, A9
- Open access

Dynamics of particle aggregation in dewetting films of complex liquids

- Published online by Cambridge University Press: 12 August 2024, A10
- Open access

Dynamics of weighted flexible ribbons in a uniform flow

- Published online by Cambridge University Press: 14 August 2024, A11

Resonant standing surface waves excited by an oscillating cylinder in a narrow rectangular cavity

- Published online by Cambridge University Press: 14 August 2024, A12
- Open access

- HTML

- Export citation

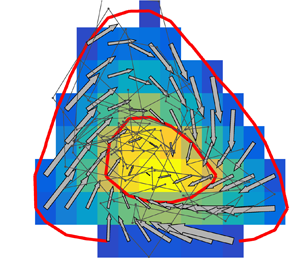

Inertial particle focusing in fluid flow through spiral ducts: dynamics, tipping phenomena and particle separation

- Published online by Cambridge University Press: 14 August 2024, A13
- Open access

Shear-induced depinning of thin droplets on rough substrates

- Published online by Cambridge University Press: 14 August 2024, A14
- Open access

Experimental study on the settling motion of coral grains in still water

- Published online by Cambridge University Press: 14 August 2024, A15
- Open access

Cracking of submerged beds

- Published online by Cambridge University Press: 14 August 2024, A16
