1 The blowup-polynomial of a metric space and its distance matrix

This work aims to provide novel connections between metric geometry, the geometry of (real) polynomials, and algebraic combinatorics via partially symmetric functions. In particular, we introduce and study a polynomial graph-invariant for each graph, which, to the best of our knowledge, is novel.

1.1 Motivations

The original motivation for our paper came from the study of distance matrices $D_G$ of graphs G – on both the algebraic and spectral sides:

• On the algebraic side, Graham and Pollak [Reference Graham and Pollak21] initiated the study of $D_G$ by proving: if $T_k$ is a tree on k nodes, then $\det D_{T_k}$ is independent of the tree structure and depends only on k. By now, many variants of such results are proved, for trees as well as several other families of graphs, including with q-analogs, weightings, and combinations of both of these. (See, e.g., [Reference Choudhury and Khare16] and its references for a list of such papers, results, and their common unification.)

• Following the above work [Reference Graham and Pollak21], Graham also worked on the spectral side, and with Lovász, studied in [Reference Graham and Lovász20] the distance matrix of a tree, including computing its inverse and characteristic polynomial. This has since led to the intensive study of the roots, i.e., the “distance spectrum,” for trees and other graphs. See, e.g., the survey [Reference Aouchiche and Hansen3] for more on distance spectra.
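The Graham–Pollak phenomenon recalled in the first bullet can be checked numerically on small cases. The following is a minimal illustrative sketch, not taken from the cited works: the helper names `det` and `distance_matrix` are hypothetical, and the three trees on five nodes are arbitrary choices. The common determinant it produces agrees with the closed form $(-1)^{k-1}(k-1)2^{k-2}$ usually quoted for this result.

```python
from collections import deque
from fractions import Fraction


def det(matrix):
    """Exact determinant via Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in matrix]
    n, result = len(m), Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result  # a row swap flips the sign
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def distance_matrix(num_vertices, edges):
    """Shortest-path distances of a connected graph on 0..num_vertices-1 (BFS)."""
    adj = {v: [] for v in range(num_vertices)}
    for u, w in edges:
        adj[u].append(w)
        adj[w].append(u)
    rows = []
    for s in range(num_vertices):
        dist = {s: 0}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        rows.append([dist[t] for t in range(num_vertices)])
    return rows


# Three non-isomorphic trees on k = 5 nodes (illustrative choices).
path = [(0, 1), (1, 2), (2, 3), (3, 4)]
star = [(0, 1), (0, 2), (0, 3), (0, 4)]
fork = [(0, 1), (1, 2), (2, 3), (2, 4)]
dets = [det(distance_matrix(5, tree)) for tree in (path, star, fork)]
k = 5
closed_form = (-1) ** (k - 1) * (k - 1) * 2 ** (k - 2)
```

All three entries of `dets` coincide with `closed_form`, even though the trees are pairwise non-isomorphic.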

A well-studied problem in spectral graph theory involves understanding which graphs are distance co-spectral – i.e., for which graphs $H' \not \cong K'$, if any, do $D_{H'}, D_{K'}$ have the same spectra. Many such examples exist; see, e.g., the references in [Reference Drury and Lin18]. In particular, the characteristic polynomial of $D_G$ does not “detect” the graph G. It is thus natural to seek some other byproduct of $D_G$ which does – i.e., which recovers G up to isometry. In this paper, we find such a (to the best of our knowledge) novel graph invariant: a multivariate polynomial, which we call the blowup-polynomial of G, and which does detect G. Remarkably, this polynomial turns out to have several additional attractive properties:

• It is multi-affine in its arguments.

• It is also real-stable, so that its “support” yields a hitherto unexplored delta-matroid.

• The blowup-polynomial simultaneously encodes the determinants of all graph-blowups of G (defined presently), thereby connecting with the algebraic side (see the next paragraph).

• Its “univariate specialization” is a transformation of the characteristic polynomial of $D_G$, thereby connecting with the spectral side as well.

Thus, the blowup-polynomial that we introduce connects distance spectra for graphs – and more generally, for finite metric spaces – to other well-studied objects, including real-stable/Lorentzian polynomials and delta-matroids.

On the algebraic side, a natural question involves asking if there are graph families $\{ G_i : i \in I \}$ (like trees on k vertices) for which the scalars $\det (D_{G_i})$ behave “nicely” as a function of $i \in I$. As stated above, the family of blowups of a fixed graph G (which helps answer the preceding “spectral” question) answers this question positively as well; moreover, the nature of the answer – multi-affine polynomiality – is desirable in conjunction with its real-stability. In fact, we will obtain many of these results, both spectral and algebraic, in greater generality: for arbitrary finite metric spaces.

The key construction required for all of these contributions is that of a blowup, and we begin by defining it more generally, for arbitrary metric spaces that are discrete (i.e., every point is isolated).

Definition 1.1 Given a metric space $(X,d)$ with all isolated points, and a function $\mathbf {n} : X \to \mathbb {Z}_{>0}$, the $\mathbf {n}$-blowup of X is the metric space $X[\mathbf {n}]$ obtained by creating $n_x := \mathbf {n}(x)$ copies of each point x (also termed blowups of x). Define the distance between copies of distinct points $x \neq y$ in X to still be $d(x,y)$, and between distinct copies of the same point to be $2 d(x,X \setminus \{x\}) = 2 \inf _{y \in X \setminus \{ x \}} d(x,y)$.

Also define the distance matrix $D_X$ and the modified distance matrix $\mathcal {D}_X$ of X via:

$$D_X := (d(x,y))_{x,y \in X}, \qquad \mathcal {D}_X := D_X + \operatorname {diag}(2 d(x, X \setminus \{ x \}))_{x \in X}.$$

Notice, for completeness, that the above construction applied to a non-discrete metric space does not yield a metric; and that blowups of X are “compatible” with isometries of X (see (1.3)). We also remark that this notion of blowup seems to be relatively less studied in the literature, and differs from several other variants in the literature – for metric spaces, e.g., [Reference Cheeger, Kleiner and Schioppa14] or for graphs, e.g., [Reference Liu29]. However, the variant studied in this paper was previously studied for the special case of unweighted graphs; see, e.g., [Reference Hatami, Hirst and Norine24–Reference Komlós, Sárközy and Szemerédi26] in extremal and probabilistic graph theory.
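To make the blowup construction of Definition 1.1 concrete, here is a minimal illustrative sketch for a finite metric space with at least two points, given by its distance matrix. The function name `blowup_distance_matrix` and the labeling of copies as pairs `(i, c)` are hypothetical conventions chosen for this example.

```python
def blowup_distance_matrix(D, n):
    """Distance matrix of X[n], given the distance matrix D of X and copy counts n.

    Assumes X has at least two points, so that d(x, X \\ {x}) is a minimum
    over a nonempty set.
    """
    k = len(D)
    # d(x, X \ {x}): distance from each point to its nearest other point.
    nearest = [min(D[i][j] for j in range(k) if j != i) for i in range(k)]
    # One label (i, c) per copy c of the point with index i.
    points = [(i, c) for i in range(k) for c in range(n[i])]
    B = []
    for (i, ci) in points:
        row = []
        for (j, cj) in points:
            if i != j:
                row.append(D[i][j])         # copies of distinct points
            elif ci != cj:
                row.append(2 * nearest[i])  # distinct copies of the same point
            else:
                row.append(0)               # a copy and itself
        B.append(row)
    return B


# Two points at distance 1, with the first point blown up into two copies.
D = [[0, 1], [1, 0]]
B = blowup_distance_matrix(D, [2, 1])
```

Here `B` is the distance matrix of three collinear points: the two copies of the first point sit at distance 2 from each other and at distance 1 from the second point.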

1.2 Defining the blowup-polynomial; Euclidean embeddings

We now describe some of the results in this work, beginning with metric embeddings. Recall that the complete information about a (finite) metric space is encoded into its distance matrix $D_X$ (or equivalently, in the off-diagonal part of $\mathcal {D}_X$). Metric spaces are useful in many sub-disciplines of the mathematical sciences, and have been studied for over a century. For instance, a well-studied question in metric geometry involves understanding metric embeddings. In 1910, Fréchet showed [Reference Fréchet19] that every finite metric space with $k+1$ points isometrically embeds into $\mathbb R^k$ with the supnorm. Similarly, a celebrated 1935 theorem of Schoenberg [Reference Schoenberg37] (following Menger’s works [Reference Menger32, Reference Menger33]) says the following.

Theorem 1.2 (Schoenberg [Reference Schoenberg37])

A finite metric space $X = \{ x_0, \dots , x_k \}$ isometrically embeds inside Euclidean space $(\mathbb R^r, \| \cdot \|_2)$ if and only if its modified Cayley–Menger matrix

$$(d(x_0,x_i)^2 + d(x_0,x_j)^2 - d(x_i,x_j)^2)_{i,j=1}^k$$

is positive semidefinite, with rank at most r.

As an aside, the determinant of this matrix is related to the volume of a polytope with vertices $x_i$ (beginning with classical work of Cayley [Reference Cayley13]), and the Cayley–Menger matrix itself connects to the principle of trilateration/triangulation that underlies the GPS system.

Returning to the present work, our goal is to study the distance matrix of a finite metric space vis-a-vis its blowups. We begin with a “negative” result from metric geometry. Note that every blowup of a finite metric space embeds into $\mathbb R^k$ (for some k) equipped with the supnorm, by Fréchet’s aforementioned result. In contrast, we employ Schoenberg’s Theorem 1.2 to show that the same is far from true when considering the Euclidean metric. Namely, given a finite metric space X, we characterize all blowups $X[\mathbf {n}]$ that embed in some Euclidean space $(\mathbb R^k, \| \cdot \|_2)$. Since X embeds into $X[\mathbf {n}]$, a necessary condition is that X itself should be Euclidean. With this in mind, we have the following.

Theorem A Suppose $X = \{ x_1, \dots , x_k \}$ is a finite metric subspace of Euclidean space $(\mathbb R^r, \| \cdot \|_2)$. Given positive integers $\{ n_{x_i} : 1 \leqslant i \leqslant k \}$, not all of which equal $1$, the following are equivalent:

(1) The blowup $X[\mathbf {n}]$ isometrically embeds into some Euclidean space $(\mathbb R^{r'}, \| \cdot \|_2)$.

(2) Either $k=1$ and $\mathbf {n}$ is arbitrary (then, by convention, $X[\mathbf {n}]$ is a simplex); or $k>1$ and there exists a unique $1 \leqslant j \leqslant k$ such that $n_{x_j} = 2$. In this case, we moreover have: (a) $n_{x_i} = 1\ \forall i \neq j$, (b) $x_j$ is not in the affine hull/span V of $\{ x_i : i \neq j \}$, and (c) the unique point $v \in V$ closest to $x_j$ lies in X.

If these conditions hold, one can take $r' = r$ and $X[\mathbf {n}] = X \sqcup \{ 2v - x_j \}$.
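Theorem A can be illustrated on the two-point space $X = \{ x_1, x_2 \}$ with $d(x_1,x_2) = 1$ by testing Schoenberg's criterion directly. The sketch below is illustrative only. It assumes the modified Cayley–Menger matrix in the standard form $(d(x_0,x_i)^2 + d(x_0,x_j)^2 - d(x_i,x_j)^2)_{i,j=1}^k$, it tests positive semidefiniteness through the classical criterion that all principal minors of a symmetric matrix be nonnegative, and the names `det`, `cayley_menger`, and `is_psd` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def det(matrix):
    """Exact determinant via Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in matrix]
    n, result = len(m), Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result  # a row swap flips the sign
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def cayley_menger(D):
    """Modified Cayley-Menger matrix of a distance matrix D, with base point index 0."""
    k = len(D) - 1
    return [[D[0][i] ** 2 + D[0][j] ** 2 - D[i][j] ** 2
             for j in range(1, k + 1)] for i in range(1, k + 1)]


def is_psd(M):
    """A symmetric matrix is PSD iff every principal minor is nonnegative."""
    size = len(M)
    return all(det([[M[i][j] for j in S] for i in S]) >= 0
               for r in range(1, size + 1)
               for S in combinations(range(size), r))


# Blowups of the two-point space, written out by hand from Definition 1.1.
blowup_21 = [[0, 2, 1], [2, 0, 1], [1, 1, 0]]           # n = (2, 1)
blowup_22 = [[0, 2, 1, 1], [2, 0, 1, 1],
             [1, 1, 0, 2], [1, 1, 2, 0]]                # n = (2, 2)
embeds_21 = is_psd(cayley_menger(blowup_21))
embeds_22 = is_psd(cayley_menger(blowup_22))
```

For $\mathbf {n} = (2,1)$ the criterion holds, matching condition (2): the extra point is the reflection $2x_2 - x_1$. For $\mathbf {n} = (2,2)$ two multiplicities exceed 1 and the criterion fails, since the $3 \times 3$ Cayley–Menger determinant is negative.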

Given the preceding result, we turn away from metric geometry, and instead focus on studying the family of blowups $X[\mathbf {n}]$ – through their distance matrices $D_{X[\mathbf {n}]}$ (which contain all of the information on $X[\mathbf {n}]$). Drawing inspiration from Graham and Pollak [Reference Graham and Pollak21], we focus on one of the simplest invariants of this family of matrices: their determinants, and the (possibly algebraic) nature of the dependence of $\det D_{X[\mathbf {n}]}$ on $\mathbf {n}$. In this paper, we show that the function $\mathbf {n} \mapsto \det D_{X[\mathbf {n}]}$ possesses several attractive properties. First, $\det D_{X[\mathbf {n}]}$ is a polynomial function in the sizes $n_x$ of the blowup, up to an exponential factor.

Theorem B Given $(X,d)$ a finite metric space, and a tuple of positive integers $\mathbf {n} := (n_x)_{x \in X} \in \mathbb {Z}_{>0}^X$, the function $\mathbf {n} \mapsto \det D_{X[\mathbf {n}]}$ is a multi-affine polynomial $p_X(\mathbf {n})$ in the $n_x$ (i.e., its monomials are squarefree in the $n_x$), times the exponential function

$$\prod _{x \in X} (-2 \; d(x, X \setminus \{ x \}))^{n_x - 1}.$$

Moreover, the polynomial $p_X(\mathbf {n})$ has constant term $p_X(\mathbf {0}) = \prod _{x \in X} (-2 \; d(x, X \setminus \{ x \}))$, and linear term $-p_X(\mathbf {0}) \sum _{x \in X} n_x$.
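For the two-point space with $d(x_1,x_2) = 1$, Theorem B pins down the blowup-polynomial completely: the constant term is $(-2)(-2) = 4$, the linear term is $-4(n_1 + n_2)$, and the remaining coefficient is forced by $p_X(1,1) = \det D_X = -1$, giving $p_X(n_1,n_2) = 4 - 4n_1 - 4n_2 + 3n_1n_2$. The illustrative sketch below compares this with blowup determinants, assuming the exponential factor is $\prod_{x} (-2\, d(x, X \setminus \{x\}))^{n_x - 1}$, which here equals $(-2)^{n_1+n_2-2}$. All function names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def det(matrix):
    """Exact determinant via Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in matrix]
    n, result = len(m), Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result  # a row swap flips the sign
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def blowup_distance_matrix(D, n):
    """Distance matrix of the blowup X[n] (Definition 1.1), for |X| >= 2."""
    k = len(D)
    nearest = [min(D[i][j] for j in range(k) if j != i) for i in range(k)]
    pts = [(i, c) for i in range(k) for c in range(n[i])]
    return [[D[i][j] if i != j else (0 if ci == cj else 2 * nearest[i])
             for (j, cj) in pts] for (i, ci) in pts]


def p_two_points(n1, n2):
    """Blowup-polynomial of two points at distance 1, as derived in the lead-in."""
    return 4 - 4 * (n1 + n2) + 3 * n1 * n2


D = [[0, 1], [1, 0]]
checks = []
for n1, n2 in product(range(1, 5), repeat=2):
    lhs = det(blowup_distance_matrix(D, [n1, n2]))
    # Assumed exponential factor: (-2)^(n1 - 1) * (-2)^(n2 - 1).
    rhs = p_two_points(n1, n2) * (-2) ** (n1 + n2 - 2)
    checks.append(lhs == rhs)
```

Every entry of `checks` is true on this grid. For instance, $\mathbf {n} = (2,2)$ gives the 4-cycle, whose distance matrix is singular, and indeed $p_X(2,2) = 0$.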

Theorem B follows from a stronger one proved below. See Theorem 2.3, which shows, in particular, that not only do the conclusions of Theorem B hold over an arbitrary commutative ring, but moreover, the blowup-polynomial $p_X(\mathbf {n})$ is a polynomial function in the variables $\mathbf {n} = \{ n_x : x \in X \}$ as well as the entries of the “original” distance matrix $D_X$ – and moreover, it is squarefree/multi-affine in all of these arguments (where we treat all entries of $D_X$ to be “independent” variables).

We also refine the final assertions of Theorem B by isolating, in Proposition 2.6, the coefficient of every monomial in $p_X(\mathbf {n})$. That proposition moreover provides a sufficient condition under which the coefficients of two monomials in $p_X(\mathbf {n})$ are equal.

Theorem B leads us to introduce the following notion, for an arbitrary finite metric space (e.g., every finite, connected, $\mathbb {R}_{>0}$-weighted graph).

Definition 1.3 Define the (multivariate) blowup-polynomial of a finite metric space $(X,d)$ to be $p_X(\mathbf {n})$, where the $n_x$ are thought of as indeterminates. We write out a closed-form expression in the proof of Theorem B – see equation (2.2).

In this paper, we also study a specialization of this polynomial. Define the univariate blowup-polynomial of $(X,d)$ to be $u_X(n) := p_X(n,n,\dots ,n)$, where n is thought of as an indeterminate.

Remark 1.4 Definition 1.3 requires a small clarification. The polynomial map (by Theorem B)

$$\mathbf {n} \mapsto \det D_{X[\mathbf {n}]} \cdot \prod _{x \in X} (-2 \; d(x, X \setminus \{ x \}))^{1 - n_x}, \qquad \mathbf {n} \in \mathbb {Z}_{>0}^k$$

can be extended from the Zariski dense subset $\mathbb {Z}_{>0}^k$ to all of $\mathbb R^k$. (Zariski density is explained during the proof of Theorem B.) Since $\mathbb R$ is an infinite field, this polynomial map on $\mathbb R^k$ may now be identified with a polynomial, which is precisely $p_X(-)$, a polynomial in $|X|$ variables (which we will denote by $\{ n_x : x \in X \}$ throughout the paper, via a mild abuse of notation). Now setting all arguments to be the same indeterminate yields the univariate blowup-polynomial of $(X,d)$.

1.3 Real-stability

We next discuss the blowup-polynomial $p_X(\cdot )$ and its univariate specialization $u_X(\cdot )$ from the viewpoint of root-location properties. As we will see, the polynomial $u_X(n) = p_X(n,n,\dots ,n)$ always turns out to be real-rooted in n. In fact, even more is true. Recall that in recent times, the notion of real-rootedness has been studied in a much more powerful avatar: real-stability. Our next result strengthens the real-rootedness of $u_X(\cdot )$ to the second attractive property of $p_X(\cdot )$ – namely, real-stability.

Theorem C The blowup-polynomial $p_X(\mathbf {n})$ of every finite metric space $(X,d)$ is real-stable in $\{ n_x \}$. (Hence, its univariate specialization $u_X(n) = p_X(n,n,\dots ,n)$ is always real-rooted.)

Recall that real-stable polynomials are simply ones with real coefficients, which do not vanish when all arguments are constrained to lie in the (open) upper half-plane $\Im (z)> 0$. Such polynomials have been intensively studied in recent years, with a vast number of applications. For instance, they were famously used in celebrated works of Borcea–Brändén (e.g., [Reference Borcea and Brändén5–Reference Borcea and Brändén7]) and Marcus–Spielman–Srivastava [Reference Marcus, Spielman and Srivastava30, Reference Marcus, Spielman and Srivastava31] to prove longstanding conjectures (including of Kadison–Singer, Johnson, Bilu–Linial, Lubotzky, and others), construct expander graphs, and vastly extend the Laguerre–Pólya–Schur program [Reference Laguerre27, Reference Pólya34, Reference Pólya and Schur35] from the turn of the 20th century (among other applications).

Theorem C reveals that for all finite metric spaces – in particular, for all finite connected graphs – the blowup-polynomial is indeed multi-affine and real-stable. The class of multi-affine real-stable polynomials has been characterized in [Reference Brändén11, Theorem 5.6] and [Reference Wagner and Wei43, Theorem 3]. (For a connection to matroids, see [Reference Brändén11, Reference Choe, Oxley, Sokal and Wagner15].) To the best of our knowledge, blowup-polynomials $p_X(\mathbf {n})$ provide novel examples/realizations of multi-affine real-stable polynomials.
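As a small sanity check of real-stability, consider the two-point space with $d(x_1,x_2) = 1$, for which Theorem B forces $p_X(z_1,z_2) = 4 - 4z_1 - 4z_2 + 3z_1z_2$. The sketch below is illustrative and rests on an assumption stated here rather than in the text: for a real multi-affine polynomial $a + bz_1 + cz_2 + dz_1z_2$ in two variables, real-stability is equivalent to $bc - ad \geqslant 0$ (the two-variable case of the Rayleigh-type criterion usually attributed to Brändén). The random sampling is only a numerical spot check, not a proof.

```python
import random

# Coefficients of p(z1, z2) = a + b*z1 + c*z2 + d*z1*z2 for two points at distance 1.
a, b, c, d = 4, -4, -4, 3


def p(z1, z2):
    """Evaluate the two-variable multi-affine polynomial at complex arguments."""
    return a + b * z1 + c * z2 + d * z1 * z2


# Assumed criterion: real-stable iff b*c - a*d >= 0 (two-variable multi-affine case).
rayleigh_difference = b * c - a * d

# Univariate specialization u(n) = p(n, n) = d*n^2 + (b + c)*n + a.
discriminant = (b + c) ** 2 - 4 * d * a

# Spot check: p should not vanish when both arguments lie in the open upper half-plane.
random.seed(0)
samples = [(complex(random.uniform(-5, 5), random.uniform(0.01, 5)),
            complex(random.uniform(-5, 5), random.uniform(0.01, 5)))
           for _ in range(1000)]
min_modulus = min(abs(p(z1, z2)) for z1, z2 in samples)
```

Here `rayleigh_difference` is positive, and the nonnegative `discriminant` reflects the real-rootedness of $u_X(n) = 3n^2 - 8n + 4 = (3n-2)(n-2)$.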

1.4 Graph metric spaces: symmetries, complete multipartite graphs

We now turn from the metric-geometric Theorem A, the algebraic Theorem B, and the analysis-themed Theorem C, to a more combinatorial theme, by restricting from metric spaces to graphs. Here, we present two “main theorems” and one proposition.

1.4.1 Graph invariants and symmetries

Having shown that $\det D_{X[\mathbf {n}]}$ is a polynomial in $\mathbf {n}$ (times an exponential factor), and that $p_X(\cdot )$ is always real-stable, our next result explains a third attractive property of $p_X(\cdot )$: The blowup-polynomial of a graph $X = G$ is indeed a (novel) graph invariant. To formally state this result, we begin by re-examining the blowup-construction for graphs and their distance matrices.

A distinguished sub-class of discrete metric spaces is that of finite simple connected unweighted graphs G (so, without parallel/multiple edges or self-loops). Here, the distance between two nodes $v,w$ is defined to be the (edge-)length of any shortest path joining $v,w$. In this paper, we term such objects graph metric spaces. Note that the blowup $G[\mathbf {n}]$ is a priori only defined as a metric space; we now adjust the definition to make it a graph.

Definition 1.5 Given a graph metric space $G = (V,E)$, and a tuple $\mathbf {n} = (n_v : v \in V)$, the $\mathbf {n}$-blowup of G is defined to be the graph $G[\mathbf {n}]$ – with $n_v$ copies of each vertex v – such that a copy of v and one of w are adjacent in $G[\mathbf {n}]$ if and only if $v \neq w$ are adjacent in G.

(For example, the $\mathbf {n}$-blowup of a complete graph is a complete multipartite graph.) Now note that if G is a graph metric space, then so is $G[\mathbf {n}]$ for all tuples $\mathbf {n} \in \mathbb {Z}_{>0}^{|V|}$. The results stated above thus apply to every such graph G – more precisely, to the distance matrices of the blowups of G.
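The compatibility between the graph blowup of Definition 1.5 and the metric blowup of Definition 1.1 can be checked on a small case. The illustrative sketch below builds $G[\mathbf {n}]$ as a graph, computes its shortest-path distances by breadth-first search, and compares them with the metric blowup of the distance matrix of G. The path on three vertices and the tuple $\mathbf {n} = (1,2,1)$ are arbitrary choices, and all function names are hypothetical.

```python
from collections import deque


def graph_blowup(num_vertices, edges, n):
    """Vertices and edges of G[n]: a copy of v and a copy of w are adjacent iff v ~ w in G."""
    vertices = [(v, c) for v in range(num_vertices) for c in range(n[v])]
    blown_edges = [((u, cu), (w, cw))
                   for (u, w) in edges
                   for cu in range(n[u]) for cw in range(n[w])]
    return vertices, blown_edges


def graph_distances(vertices, edges):
    """All-pairs shortest-path distances of a connected graph, by BFS from each vertex."""
    adj = {v: [] for v in vertices}
    for u, w in edges:
        adj[u].append(w)
        adj[w].append(u)
    rows = []
    for s in vertices:
        dist = {s: 0}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        rows.append([dist[t] for t in vertices])
    return rows


def metric_blowup(D, n):
    """Distance matrix of the metric blowup X[n] of Definition 1.1, for |X| >= 2."""
    k = len(D)
    nearest = [min(D[i][j] for j in range(k) if j != i) for i in range(k)]
    pts = [(i, c) for i in range(k) for c in range(n[i])]
    return [[D[i][j] if i != j else (0 if ci == cj else 2 * nearest[i])
             for (j, cj) in pts] for (i, ci) in pts]


# Path 0 - 1 - 2 with the middle vertex doubled; the blowup is a 4-cycle.
edges = [(0, 1), (1, 2)]
n = [1, 2, 1]
base = graph_distances([0, 1, 2], edges)
verts, blown = graph_blowup(3, edges, n)
as_graph = graph_distances(verts, blown)
as_metric = metric_blowup(base, n)
```

The two matrices `as_graph` and `as_metric` agree: the two copies of the middle vertex are non-adjacent but share a neighbor, so they are at graph distance 2, which equals $2 d(v, V \setminus \{ v \})$.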

To motivate our next result, now specifically for graph metric spaces, we first relate the symmetries of the graph with those of its blowup-polynomial $p_G(\mathbf {n})$. Suppose a graph metric space $G = (V,E)$ has a structural (i.e., adjacency-preserving) symmetry $\Psi : V \to V$ – i.e., an (auto-)isometry as a metric space. Denoting the corresponding relabeled graph metric space by $\Psi (G)$,

$$D_G = D_{\Psi (G)}, \qquad \mathcal {D}_G = \mathcal {D}_{\Psi (G)}, \qquad p_G(\mathbf {n}) \equiv p_{\Psi (G)}(\mathbf {n}). \tag{1.3}$$

It is thus natural to ask if the converse holds – i.e., if $p_G(\cdot )$ helps recover the group of auto-isometries of G. A stronger result would be if $p_G$ recovers G itself (up to isometry). We show that both of these hold.

Theorem D Given a graph metric space $G = (V,E)$ and a bijection $\Psi : V \to V$, the symmetries of the polynomial $p_G$ equal the isometries of G. In particular, any (equivalently all) of the statements in (1.3) hold if and only if $\Psi $ is an isometry of G. More strongly, the polynomial $p_G(\mathbf {n})$ recovers the graph metric space G (up to isometry). However, this does not hold for the polynomial $u_G$.

As the proof reveals, one in fact needs only the homogeneous quadratic part of $p_G$, i.e., its Hessian matrix $((\partial _{n_v} \partial _{n_{v'}} p_G)(\mathbf {0}_V))_{v,v' \in V}$, to recover the graph and its isometries. Moreover, this associates to every graph a partially symmetric polynomial, whose symmetries are precisely the graph-isometries.
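The recovery of a graph from the quadratic part of $p_G$ can be sketched numerically. The code below is illustrative and relies on an assumption not stated in this section: it takes $p_X(\mathbf {n}) = \det (\Delta _{\mathbf {a}} + \Delta _{\mathbf {n}} \mathcal {D}_X)$, where $\Delta $ denotes a diagonal matrix, $a_x = -2 d(x, X \setminus \{ x \})$, and $\mathcal {D}_X$ is $D_X$ with $-a_x$ placed on the diagonal. This is our reading of the closed form referred to as (2.2), and the code checks it against blowup determinants before using it. Under this assumption, the coefficient of $n_v n_w$ for a graph on k vertices is $(-2)^{k-2}(4 - d(v,w)^2)$. All function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def det(matrix):
    """Exact determinant via Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in matrix]
    n, result = len(m), Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result  # a row swap flips the sign
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def nearest(D):
    """d(x, X \\ {x}) for each point x."""
    k = len(D)
    return [min(D[i][j] for j in range(k) if j != i) for i in range(k)]


def p_X(D, n):
    """ASSUMED closed form: det(diag(a) + diag(n) * Dmod), with a_x = -2 d(x, X \\ {x})."""
    k, a = len(D), [-2 * t for t in nearest(D)]
    return det([[(a[i] if i == j else 0) + n[i] * (D[i][j] if i != j else -a[i])
                 for j in range(k)] for i in range(k)])


def blowup(D, n):
    """Distance matrix of the metric blowup X[n] (Definition 1.1)."""
    near = nearest(D)
    pts = [(i, c) for i in range(len(D)) for c in range(n[i])]
    return [[D[i][j] if i != j else (0 if ci == cj else 2 * near[i])
             for (j, cj) in pts] for (i, ci) in pts]


def quadratic_coefficient(D, v, w):
    """Coefficient of n_v * n_w in the multi-affine p_X, by inclusion-exclusion on 0/1 inputs."""
    def e(*idx):
        return [1 if i in idx else 0 for i in range(len(D))]
    return p_X(D, e(v, w)) - p_X(D, e(v)) - p_X(D, e(w)) + p_X(D, e())


# Distance matrix of the "paw" graph with edges 0-1, 1-2, 1-3, 2-3.
D = [[0, 1, 2, 2], [1, 0, 1, 1], [2, 1, 0, 1], [2, 1, 1, 0]]
k = len(D)

# Consistency of the assumed closed form with Theorem B on a few blowups.
consistent = all(det(blowup(D, n)) == p_X(D, n) * (-2) ** (sum(n) - k)
                 for n in ([1, 1, 1, 1], [2, 1, 1, 1], [1, 2, 1, 2], [3, 1, 2, 1]))

# Recover adjacency: v ~ w iff 4 - coefficient / (-2)^(k-2) equals d(v, w)^2 = 1.
recovered_edges = [(v, w) for v, w in combinations(range(k), 2)
                   if 4 - quadratic_coefficient(D, v, w) / (-2) ** (k - 2) == 1]
```

On this example `recovered_edges` lists exactly the four edges of the paw, read off from the quadratic coefficients alone.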

Our next result works more generally in metric spaces X, hence is stated over them. Note that the polynomial $p_X(\mathbf {n})$ is “partially symmetric,” depending on the symmetries (or isometries) of the distance matrix (or metric space). Indeed, partial symmetry is as much as one can hope for, because it turns out that “full” symmetry (in all variables $n_x$) occurs precisely in one situation.

Proposition 1.6 Given a finite metric space X, the following are equivalent:

(1) The polynomial $p_X(\mathbf {n})$ is symmetric in the variables $\{ n_x, \ x \in X \}$.

(2) The metric $d_X$ is a rescaled discrete metric: $d_X(x,y) = c \mathbf {1}_{x \neq y}\ \forall x,y \in X$, for some $c>0$.

1.4.2 Complete multipartite graphs: novel characterization via stability

The remainder of this section returns to graphs. We next present an interesting byproduct of the above results: a novel characterization of the class of complete multipartite graphs. Begin by observing from the proof of Theorem C that the polynomials $p_G(\cdot )$ are stable because of a determinantal representation (followed by inversion). However, they do not enjoy two related properties:

(1) $p_G(\cdot )$ is not homogeneous.

(2) The coefficients of the multi-affine polynomial $p_G(\cdot )$ are not all of the same sign; in particular, they cannot form a probability distribution on the subsets of $\{ 1, \dots , k \}$ (corresponding to the various monomials in $p_G(\cdot )$). In fact, even the constant and linear terms have opposite signs, by the final assertion in Theorem B.

These two (unavailable) properties of real-stable polynomials are indeed important and well-studied in the literature. Corresponding to the preceding numbering:

-

(1) Very recently, Brändén and Huh [Reference Brändén and Huh12] introduced and studied a distinguished class of homogeneous real polynomials, which they termed Lorentzian polynomials (defined below). Relatedly, Gurvits [Reference Gurvits, Kotsireas and Zima23] / Anari–Oveis Gharan–Vinzant [Reference Anari, Oveis Gharan and Vinzant2] defined strongly/completely log-concave polynomials, also defined below. These classes of polynomials have several interesting properties as well as applications (see, e.g., [Reference Anari, Oveis Gharan and Vinzant1, Reference Anari, Oveis Gharan and Vinzant2, Reference Brändén and Huh12, Reference Gurvits, Kotsireas and Zima23] and related/follow-up works).

-

(2) Recall that strongly Rayleigh measures are probability measures on the power set of $\{ 1, \dots , k \}$ whose generating (multi-affine) polynomials are real-stable. These were introduced and studied by Borcea, Brändén, and Liggett in the fundamental work [Reference Borcea, Brändén and Liggett8]. This work developed the theory of negative association/dependence for such measures, and enabled the authors to prove several conjectures of Liggett, Pemantle, and Wagner, among other achievements.

Given that $p_G(\cdot )$ is always real-stable, a natural question is whether one can characterize those graphs for which a certain homogenization of $p_G(\cdot )$ is Lorentzian, or a suitable normalization is strongly Rayleigh. The standard mathematical way to address obstacle (1) above is to “projectivize” using a new variable $z_0$, while for obstacle (2) we evaluate at $(-z_1, \dots , -z_k)$, where we use $z_j$ instead of $n_{x_j}$ to denote complex variables. Thus, our next result proceeds via homogenization at $-z_0$.

Theorem E Say $G = (V,E)$ with $|V|=k$. Define the homogenized blowup-polynomial

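$$\begin{align*}\widetilde{p}_G(z_0, z_1, \dots, z_k) := (-z_0)^k \, p_G \left( \frac{z_1}{-z_0}, \dots, \frac{z_k}{-z_0} \right) \in \mathbb{R}[z_0, z_1, \dots, z_k]. \end{align*}$$

Then the following are equivalent: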

-

(1) The polynomial $\widetilde {p}_G(z_0, z_1, \dots , z_k)$ is real-stable.

-

(2) The polynomial $\widetilde {p}_G(\cdot )$ has all coefficients (of the monomials $z_0^{k - |J|} \prod _{j \in J} z_j$) nonnegative.

-

(3) We have $(-1)^k p_G(-1,\dots ,-1)> 0$, and the normalized “reflected” polynomial

$$\begin{align*}(z_1, \dots, z_k) \quad \mapsto \quad \frac{p_G(-z_1, \dots, -z_k)}{p_G(-1,\dots,-1)} \end{align*}$$

is strongly Rayleigh. In other words, this (multi-affine) polynomial is real-stable and has nonnegative coefficients (of all monomials $\prod _{j \in J} z_j$), which sum up to $1$.

-

(4) The modified distance matrix $\mathcal {D}_G$ (see Definition 1.1) is positive semidefinite.

-

(5) G is a complete multipartite graph.

Theorem E is a novel characterization of complete multipartite graphs, in terms of real stability and the strong(ly) Rayleigh property. Moreover, given the remarks preceding Theorem E, we present three further equivalent characterizations.

Corollary 1.7 (Definitions in Section 4.2.) The assertions in Theorem E are further equivalent to:

-

(6) The polynomial $\widetilde {p}_G(z_0, \dots , z_k)$ is Lorentzian.

-

(7) The polynomial $\widetilde {p}_G(z_0, \dots , z_k)$ is strongly log-concave.

-

(8) The polynomial $\widetilde {p}_G(z_0, \dots , z_k)$ is completely log-concave.

We quickly explain the corollary. Theorem E(1) implies $\widetilde {p}_G$ is Lorentzian (see [Reference Brändén and Huh12, Reference Choe, Oxley, Sokal and Wagner15]), which implies Theorem E(2). The other equivalences follow from [Reference Brändén and Huh12, Theorem 2.30], which shows that – for any real homogeneous polynomial – assertions (7), (8) here are equivalent to $\widetilde {p}_G$ being Lorentzian.

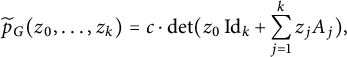

Remark 1.8 As we see in the proof of Theorem E, when $\mathcal {D}_G$ is positive semidefinite, the homogeneous polynomial $\widetilde {p}_G(z_0, \dots , z_k)$ has a determinantal representation, i.e.,

$$\begin{align*}\widetilde{p}_G(z_0, \dots, z_k) = c \cdot \det( z_0 \operatorname{\mathrm{Id}}_k + \sum_{j=1}^k z_j A_j), \end{align*}$$

with all $A_j$ positive semidefinite and $c \in \mathbb R$. In Proposition A.2, we further compute the mixed characteristic polynomial of these matrices $A_j$ (see (A.1) for the definition), and show that up to a scalar, it equals the “inversion” of the univariate blowup-polynomial, i.e., $z_0^k u_G(z_0^{-1})$.

Remark 1.9 We also show that the univariate polynomial $u_G(x)$ is intimately related to the characteristic polynomial of $D_G$ (i.e., the “distance spectrum” of G), whose study was one of our original motivations. See Proposition 4.2 and the subsequent discussion for precise statements.

1.5 Two novel delta-matroids

We conclude with a related byproduct: two novel constructions of delta-matroids, one for every finite metric space and the other for each tree graph. Recall that a delta-matroid consists of a finite “ground set” E and a nonempty collection of feasible subsets $\mathcal {F} \subseteq 2^E$, satisfying $\bigcup _{F \in \mathcal {F}} F = E$ as well as the symmetric exchange axiom: Given $A,B \in \mathcal {F}$ and $x \in A \Delta B$ (their symmetric difference), there exists $y \in A \Delta B$ such that $A \Delta \{ x, y \} \in \mathcal {F}$. Delta-matroids were introduced by Bouchet in [Reference Bouchet9] as a generalization of the notion of matroids.

Each (skew-)symmetric matrix $A_{k \times k}$ over a field yields a linear delta-matroid $\mathcal {M}_A$ as follows. Given any matrix $A_{k \times k}$, let $E := \{ 1, \dots , k \}$ and let a subset $F \subseteq E$ belong to $\mathcal {M}_A$ if either F is empty or the principal submatrix $A_{F \times F}$ is nonsingular. In [Reference Bouchet10], Bouchet showed that if A is (skew-)symmetric, then the set system $\mathcal {M}_A$ is indeed a delta-matroid, which is said to be linear.
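The construction of $\mathcal {M}_A$ can be made concrete with a small computation. The following is a minimal sketch, not code from the paper: the helper names `det`, `feasible_sets`, and `satisfies_symmetric_exchange` are hypothetical, indices run from 0 rather than 1, and the input matrix (the distance matrix of the path on three vertices) is only an illustrative choice of symmetric matrix.

```python
from fractions import Fraction
from itertools import combinations


def det(matrix):
    """Exact determinant via Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in matrix]
    n = len(m)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def feasible_sets(a):
    """Subsets F that are empty or index a nonsingular principal submatrix of a."""
    k = len(a)
    family = set()
    for size in range(k + 1):
        for idx in combinations(range(k), size):
            sub = [[a[i][j] for j in idx] for i in idx]
            if size == 0 or det(sub) != 0:
                family.add(frozenset(idx))
    return family


def satisfies_symmetric_exchange(family):
    """For all feasible A, B and x in A^B, look for y in A^B with A^{x, y} feasible."""
    for a_set in family:
        for b_set in family:
            diff = a_set ^ b_set
            for x in diff:
                if not any((a_set ^ frozenset({x, y})) in family for y in diff):
                    return False
    return True


# Illustrative input (an assumption of this sketch): distance matrix of the path P_3.
D_P3 = [[0, 1, 2],
        [1, 0, 1],
        [2, 1, 0]]
family = feasible_sets(D_P3)
print(sorted(sorted(f) for f in family))
print(satisfies_symmetric_exchange(family))
```

Note that `y` is allowed to equal `x`, in which case $A \Delta \{ x, y \}$ is simply $A \Delta \{ x \}$; this matches the axiom as recalled above. The brute-force check is exponential in k and is meant only for small examples.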

We now return to the blowup-polynomial. First, recall a 2007 result of Brändén [Reference Brändén11]: given a multi-affine real-stable polynomial, the set of monomials with nonzero coefficients forms a delta-matroid. Thus, from $p_X(\mathbf {n})$ we obtain a delta-matroid, which, as we will explain, is linear.

Corollary 1.10 Given a finite metric space $(X,d)$, the set of monomials with nonzero coefficients in $p_X(\mathbf {n})$ forms the linear delta-matroid $\mathcal {M}_{\mathcal {D}_X}$.

Definition 1.11 We term $\mathcal {M}_{\mathcal {D}_X}$ the blowup delta-matroid of $(X,d)$.

The blowup delta-matroid $\mathcal {M}_{\mathcal {D}_X}$ is – even for X a finite connected unweighted graph – a novel construction that arises out of metric geometry rather than combinatorics, and one that seems to be unexplored in the literature (and unknown to experts). Of course, it is a simple, direct consequence of Brändén’s result in [Reference Brändén11]. However, the next delta-matroid is less direct to show.

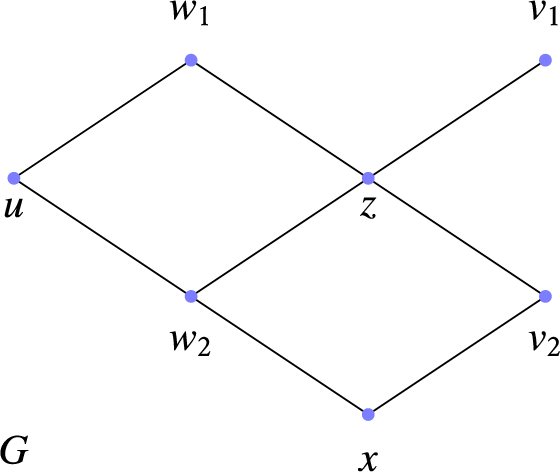

Theorem F Suppose $T = (V,E)$ is a finite connected unweighted tree with $|V| \geqslant 2$. Define the set system $\mathcal {M}'(T)$ to comprise all subsets $I \subseteq V$, except for the ones that contain two vertices $v_1 \neq v_2$ in I such that the Steiner tree $T(I)$ has $v_1, v_2$ as leaves with a common neighbor. Then $\mathcal {M}'(T)$ is a delta-matroid, which does not equal $\mathcal {M}_{D_T}$ for every path graph $T = P_k$, $k \geqslant 9$.
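For small trees, the set system $\mathcal {M}'(T)$ can be enumerated directly from its definition. The sketch below is an illustration under stated assumptions rather than the authors' method: it takes the Steiner tree $T(I)$ to be the minimal subtree of T containing I (computed by repeatedly pruning leaves outside I), stores the tree as a hypothetical adjacency dictionary, and uses the made-up helper names `steiner_tree`, `is_feasible`, `feasible_family`, and `satisfies_symmetric_exchange`.

```python
from itertools import combinations


def steiner_tree(adj, subset):
    """Vertex set of the minimal subtree of the tree `adj` that contains `subset`."""
    alive = set(adj)
    changed = True
    while changed:
        changed = False
        for v in list(alive):
            degree = sum(1 for w in adj[v] if w in alive)
            if v not in subset and degree <= 1:
                alive.discard(v)  # prune a leaf (or isolated vertex) outside subset
                changed = True
    return alive


def is_feasible(adj, subset):
    """False iff two leaves of the Steiner tree of `subset` share a neighbor."""
    nodes = steiner_tree(adj, subset)
    nbrs = {v: [w for w in adj[v] if w in nodes] for v in nodes}
    leaves = [v for v in nodes if len(nbrs[v]) == 1]
    return not any(nbrs[u] == nbrs[v] for u, v in combinations(leaves, 2))


def feasible_family(adj):
    """All feasible subsets of the vertex set, as frozensets."""
    vertices = sorted(adj)
    return {
        frozenset(c)
        for size in range(len(vertices) + 1)
        for c in combinations(vertices, size)
        if is_feasible(adj, frozenset(c))
    }


def satisfies_symmetric_exchange(family):
    """Brute-force check of the symmetric exchange axiom."""
    for a_set in family:
        for b_set in family:
            diff = a_set ^ b_set
            for x in diff:
                if not any((a_set ^ frozenset({x, y})) in family for y in diff):
                    return False
    return True


# Illustrative trees (assumptions of this sketch): the path P_3 and the star K_{1,3}.
P3 = {0: [1], 1: [0, 2], 2: [1]}
STAR = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
family_P3 = feasible_family(P3)
family_star = feasible_family(STAR)
print(sorted(sorted(f) for f in family_P3))
print(satisfies_symmetric_exchange(family_P3), satisfies_symmetric_exchange(family_star))
```

In the path $P_3$ with vertices 0, 1, 2, the subsets excluded by this procedure are exactly those containing both endpoints, since the endpoints are then leaves of the Steiner tree with the common neighbor 1.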

We further prove that this notion of (in)feasible subsets in $\mathcal {M}'(T)$ does not generalize to all graphs. Thus, $\mathcal {M}'(T)$ is a combinatorial (not matrix-theoretic) delta-matroid that is also unstudied in the literature to the best of our knowledge, and which arises from every tree, but interestingly, not from all graphs.

As a closing statement here: in addition to further exploring the real-stable polynomials $p_G(\mathbf {n})$, it would be interesting to obtain connections between these delta-matroids $\mathcal {M}_{\mathcal {D}_G}$ and $\mathcal {M}'(T)$, and others known in the literature from combinatorics, polynomial geometry, and algebra.

1.6 Organization of the paper

The remainder of the paper is devoted to proving the above Theorems A through F; this will require developing several preliminaries along the way. The paper is clustered by theme; thus, the next two sections and the final one respectively involve, primarily:

-

• (commutative) algebraic methods – to prove the polynomiality of $p_X(\cdot )$ (Theorem B), and to characterize those X for which it is a symmetric polynomial (Proposition 1.6);

-

• methods from real-stability and analysis – to show $p_X(\cdot )$ is real-stable (Theorem C);

-

• metric geometry – to characterize for a given Euclidean finite metric space X, all blowups that remain Euclidean (Theorem A), and to write down a related “tropical” version of Schoenberg’s Euclidean embedding theorem from [Reference Schoenberg37].

In the remaining Section 4, we prove Theorems D–F. In greater detail: we focus on the special case of $X = G$ a finite simple connected unweighted graph, with the minimum edge-distance metric. After equating the isometries of G with the symmetries of $p_G(\mathbf {n})$, and recovering G from $p_G(\mathbf {n})$, we prove the aforementioned characterization of complete multipartite graphs G in terms of $\widetilde {p}_G$ being real-stable, or $p_G(-\mathbf {n}) / p_G(-1, \dots , -1)$ being strongly Rayleigh. Next, we discuss a family of blowup-polynomials from this viewpoint of “partial” symmetry. We also connect $u_G(x)$ to the characteristic polynomial of $D_G$, hence to the distance spectrum of G. Finally, we introduce the delta-matroid $\mathcal {M}'(T)$ for every tree, and explore its relation to the blowup delta-matroid $\mathcal {M}_{\mathcal {D}_T}$ (for T a path), as well as extensions to general graphs. We end with Appendices A and B that contain supplementary details and results.

We conclude this section on a philosophical note. Our approach in this work adheres to the maxim that the multivariate polynomial is a natural, general, and more powerful object than its univariate specialization. This is of course famously manifested in the recent explosion of activity in the geometry of polynomials, via the study of real-stable polynomials by Borcea–Brändén and other researchers; but also shows up in several other settings – we refer the reader to the survey [Reference Sokal and Webb40] by Sokal for additional instances. (E.g., a specific occurrence is in the extreme simplicity of the proof of the multivariate Brown–Colbourn conjecture [Reference Royle and Sokal36, Reference Sokal39], as opposed to the involved proof in the univariate case [Reference Wagner42].)

2 Algebraic results: the blowup-polynomial and its full symmetry

We begin this section by proving Theorem B in “full” algebraic (and greater mathematical) generality, over an arbitrary unital commutative ring R. We require the following notation.

Definition 2.1 Fix positive integers $k, n_1, \dots , n_k> 0$, and vectors $\mathbf {p}_i, \mathbf {q}_i \in R^{n_i}$ for all $1 \leqslant i \leqslant k$.

-

(1) For these parameters, define the blowup-monoid to be the collection $\mathcal {M}_{\mathbf {n}}(R) := R^k \times R^{k \times k}$. We write a typical element as a pair $(\mathbf {a}, D)$, where in coordinates, $\mathbf {a} = (a_i)^T$ and $D = (d_{ij})$.

-

(2) Given $(\mathbf {a}, D) \in \mathcal {M}_{\mathbf {n}}(R)$, define $M(\mathbf {a},D)$ to be the square matrix of dimension $n_1 + \cdots + n_k$ with $k^2$ blocks, whose $(i,j)$-block for $1 \leqslant i,j \leqslant k$ is $\delta _{i,j} a_i \operatorname {\mathrm {Id}}_{n_i} + d_{ij} \mathbf {p}_i \mathbf {q}_j^T$. Also define $\Delta _{\mathbf {a}} \in R^{k \times k}$ to be the diagonal matrix with $(i,i)$ entry $a_i$, and

$$\begin{align*}N(\mathbf{a},D) := \Delta_{\mathbf{a}} + \operatorname{\mathrm{diag}}(\mathbf{q}_1^T \mathbf{p}_1, \dots, \mathbf{q}_k^T \mathbf{p}_k) \cdot D \ \in R^{k \times k}. \end{align*}$$

-

(3) Given $\mathbf {a}, \mathbf {a}' \in R^k$, define $\mathbf {a} \circ \mathbf {a}' := (a_1 a^{\prime }_1, \dots , a_k a^{\prime }_k)^T \in R^k$.

The set $\mathcal {M}_{\mathbf {n}}(R)$ is of course a group under addition, but we are interested in the following non-standard monoid structure on it.

Lemma 2.2 The set

![]() $\mathcal {M}_{\mathbf {n}}(R)$

is a monoid under the product

$\mathcal {M}_{\mathbf {n}}(R)$

is a monoid under the product

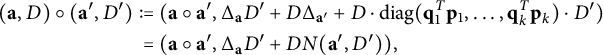

$$ \begin{align*} (\mathbf{a},D) \circ (\mathbf{a}',D') := &\ (\mathbf{a} \circ \mathbf{a}', \Delta_{\mathbf{a}} D' + D \Delta_{\mathbf{a}'} + D \cdot \operatorname{\mathrm{diag}}(\mathbf{q}_1^T \mathbf{p}_1, \dots, \mathbf{q}_k^T \mathbf{p}_k) \cdot D')\\ = &\ (\mathbf{a} \circ \mathbf{a}', \Delta_{\mathbf{a}} D' + D N(\mathbf{a}',D')), \end{align*} $$

$$ \begin{align*} (\mathbf{a},D) \circ (\mathbf{a}',D') := &\ (\mathbf{a} \circ \mathbf{a}', \Delta_{\mathbf{a}} D' + D \Delta_{\mathbf{a}'} + D \cdot \operatorname{\mathrm{diag}}(\mathbf{q}_1^T \mathbf{p}_1, \dots, \mathbf{q}_k^T \mathbf{p}_k) \cdot D')\\ = &\ (\mathbf{a} \circ \mathbf{a}', \Delta_{\mathbf{a}} D' + D N(\mathbf{a}',D')), \end{align*} $$

and with identity element

![]() $((1,\dots ,1)^T, 0_{k \times k})$

.

$((1,\dots ,1)^T, 0_{k \times k})$

.

With this notation in place, we now present the “general” formulation of Theorem B.

Theorem 2.3 Fix integers $k, n_1, \dots , n_k$ and vectors $\mathbf {p}_i, \mathbf {q}_i$ as above. Let $K := n_1 + \cdots + n_k$.

-

(1) The following map is a morphism of monoids:

$$\begin{align*}\Psi : (\mathcal{M}_{\mathbf{n}}(R), \circ) \to (R^{K \times K}, \cdot), \qquad (\mathbf{a},D) \mapsto M(\mathbf{a},D). \end{align*}$$

-

(2) The determinant of $M(\mathbf {a},D)$ equals $\prod _i a_i^{n_i - 1}$ times a multi-affine polynomial in $a_i, d_{ij}$, and the entries $\mathbf {q}_i^T \mathbf {p}_i$. More precisely,

$$ \begin{align} \det M(\mathbf{a},D) = \det N(\mathbf{a},D) \prod_{i=1}^k a_i^{n_i - 1}. \tag{2.1} \end{align} $$

-

(3) If all $a_i \in R^\times $ and $N(\mathbf {a},D)$ is invertible, then so is $M(\mathbf {a},D)$, and

$$\begin{align*}M(\mathbf{a},D)^{-1} = M((a_1^{-1}, \dots, a_k^{-1})^T, -\Delta_{\mathbf{a}}^{-1} D N(\mathbf{a},D)^{-1}). \end{align*}$$

Instead of using $N(\mathbf {a},D)$ which involves “post-multiplication” by D, one can also use $N(\mathbf {a},D^T)^T$ in the above results, to obtain similar formulas that we leave to the interested reader.
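Parts (1) and (2) of Theorem 2.3 can be sanity-checked on a small integer example. The sketch below is only an illustration: the helper names (`det`, `weights`, `build_M`, `build_N`, `monoid_product`, `matmul`) and the specific values of $\mathbf {a}$, D, $\mathbf {p}_i$, $\mathbf {q}_i$ are assumptions made for the example, with $k = 2$ and $(n_1, n_2) = (2, 3)$.

```python
from fractions import Fraction


def det(matrix):
    """Exact determinant via Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in matrix]
    n = len(m)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def weights(p, q):
    """The scalars q_i^T p_i, one per block."""
    return [sum(x * y for x, y in zip(qi, pi)) for pi, qi in zip(p, q)]


def build_M(a, D, p, q):
    """Block matrix M(a, D): the (i, j)-block is delta_ij a_i Id + d_ij p_i q_j^T."""
    k = len(a)
    rows = []
    for i in range(k):
        for s in range(len(p[i])):
            row = []
            for j in range(k):
                for t in range(len(q[j])):
                    entry = D[i][j] * p[i][s] * q[j][t]
                    if i == j and s == t:
                        entry += a[i]
                    row.append(entry)
            rows.append(row)
    return rows


def build_N(a, D, p, q):
    """N(a, D) = Delta_a + diag(q_i^T p_i) D, a k x k matrix."""
    k, w = len(a), weights(p, q)
    return [[(a[i] if i == j else 0) + w[i] * D[i][j] for j in range(k)] for i in range(k)]


def monoid_product(a, D, a2, D2, p, q):
    """(a, D) o (a', D') as in Lemma 2.2."""
    k, w = len(a), weights(p, q)
    new_a = [a[i] * a2[i] for i in range(k)]
    new_D = [[a[i] * D2[i][j] + D[i][j] * a2[j]
              + sum(D[i][t] * w[t] * D2[t][j] for t in range(k))
              for j in range(k)] for i in range(k)]
    return new_a, new_D


def matmul(x, y):
    """Plain matrix product of two lists of rows."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


# Illustrative data (assumptions of this sketch).
p = [[1, 2], [1, 0, 2]]
q = [[3, -1], [1, 1, 1]]
a, D = [2, 3], [[1, 2], [3, 4]]
a2, D2 = [5, -1], [[0, 1], [2, -3]]

lhs = matmul(build_M(a, D, p, q), build_M(a2, D2, p, q))
rhs = build_M(*monoid_product(a, D, a2, D2, p, q), p, q)
print(lhs == rhs)                                   # part (1): morphism property
scale = a[0] ** (len(p[0]) - 1) * a[1] ** (len(p[1]) - 1)
print(det(build_M(a, D, p, q)) == det(build_N(a, D, p, q)) * scale)  # part (2)
```

Exact rational arithmetic is used so that the comparisons are not affected by rounding; a single numerical instance is of course no substitute for the proof that follows.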

Proof The first assertion is easy, and it implies the third assertion via showing that $M(\mathbf {a},D)^{-1} M(\mathbf {a},D) = \operatorname {\mathrm {Id}}_K$. (We show these computations for completeness in the appendix.) Thus, it remains to prove the second assertion. To proceed, we employ Zariski density, as was done in, e.g., our previous work [Reference Choudhury and Khare16]. Namely, we begin by working over the field of rational functions in $k + k^2 + 2K$ variables
$$\begin{align*}\mathbb{F} := \mathbb{Q}\bigl( \{ A_i, \ D_{ij}, \ Q_i^{(l)}, \ P_i^{(l)} \} \bigr), \end{align*}$$

where $A_i, D_{ij}$ (with a slight abuse of notation), and $Q_i^{(l)}, P_i^{(l)}$ – with $1 \leqslant i,j \leqslant k$ and $1 \leqslant l \leqslant n_i$ – serve as proxies for $a_i, d_{ij}$, and the coordinates of $\mathbf {q}_i, \mathbf {p}_i$, respectively. Over this field, we work with
$$\begin{align*}\mathbf{A} := (A_1, \dots, A_k)^T, \qquad \mathbf{Q}_i := (Q_i^{(1)}, \dots, Q_i^{(n_i)})^T, \qquad \mathbf{P}_i := (P_i^{(1)}, \dots, P_i^{(n_i)})^T, \quad 1 \leqslant i \leqslant k, \end{align*}$$

and the matrix $\mathbf {D} = (D_{ij})$; note that $\mathbf {D}$ has full rank $r=k$, since $\det \mathbf {D}$ is a nonzero polynomial over $\mathbb {Q}$, hence is a unit in $\mathbb {F}$.

Let $\mathbf {D} = \sum _{j=1}^r \mathbf {u}_j \mathbf {v}_j^T$ be any rank-one decomposition. For each $1 \leqslant j \leqslant r$, write $\mathbf {u}_j = (u_{j1}, \dots , u_{jk})^T$, and similarly for $\mathbf {v}_j$. Then $D_{ij} = \sum _{s=1}^r u_{si} v_{sj}$ for all $i,j$. Now a Schur complement argument (with respect to the $(2,2)$ block below) yields:

We next compute the determinant on the right in a second way, by using the Schur complement with respect to the $(1,1)$ block instead. This yields:

$$\begin{align*}\det M(\mathbf{A}, \mathbf{D}) = \det ( \operatorname{\mathrm{Id}}_r + M ) \prod_{i=1}^k A_i^{n_i}, \end{align*}$$

where $M_{r \times r}$ has $(i,j)$ entry $\sum _{l=1}^k v_{il} \; (A_l^{-1} \mathbf {Q}_l^T \mathbf {P}_l) \; u_{jl}$. But $\det (\operatorname {\mathrm {Id}}_r + M)$ is also the determinant of

by taking the Schur complement with respect to its $(1,1)$ block. Finally, take the Schur complement with respect to the $(2,2)$ block of $M'$, to obtain

$$ \begin{align*} \det M(\mathbf{A}, \mathbf{D}) = &\ \det M' \prod_{i=1}^k A_i^{n_i} = \det \left(\operatorname{\mathrm{Id}}_k + \Delta_{\mathbf{A}}^{-1} \operatorname{\mathrm{diag}}( \mathbf{Q}_1^T \mathbf{P}_1, \dots, \mathbf{Q}_k^T \mathbf{P}_k) \mathbf{D} \right) \prod_{i=1}^k A_i^{n_i}\\ = &\ \det N(\mathbf{A}, \mathbf{D}) \prod_{i=1}^k A_i^{n_i - 1}, \end{align*} $$

and this is indeed $\prod _i A_i^{n_i - 1}$ times a multi-affine polynomial in the claimed variables.

The above reasoning proves the assertion (2.1) over the field $\mathbb {F}$ defined above. We now explain how Zariski density helps prove (2.1) over every unital commutative ring – with the key being that both sides of (2.1) are polynomials in the variables. Begin by observing that (2.1) actually holds over the polynomial (sub)ring

$$\begin{align*}R_0 := \mathbb{Q}\bigl[ \{ A_i, \ D_{ij}, \ Q_i^{(l)}, \ P_i^{(l)} \} \bigr], \end{align*}$$

but the above proof used the invertibility of the polynomials $A_1, \dots , A_k, \det (D_{ij})_{i,j=1}^k$.

Now use that $\mathbb {Q}$ is an infinite field; thus, the following result applies.

Proposition 2.4 The following are equivalent for a field $\mathbb {F}$.

-

(1) The polynomial ring $\mathbb {F}[x_1, \dots , x_n]$ (for some $n \geqslant 1$) equals the ring of polynomial functions from affine n-space $\mathbb {A}_{\mathbb {F}}^n \cong \mathbb {F}^n$ to $\mathbb {F}$.

-

(2) The preceding statement holds for every $n \geqslant 1$.

-

(3) $\mathbb {F}$ is infinite.

Moreover, the nonzero-locus $\mathcal {L}$ of any nonzero polynomial in $\mathbb {F}[x_1, \dots , x_n]$ with $\mathbb {F}$ an infinite field, is Zariski dense in $\mathbb {A}_{\mathbb {F}}^n$. In other words, if a polynomial in n variables equals zero on $\mathcal {L}$, then it vanishes on all of $\mathbb {A}_{\mathbb {F}}^n \cong \mathbb {F}^n$.

Proof-sketch Clearly $(2) \implies (1)$; and the contrapositive of $(1) \implies (3)$ holds because over a finite field $\mathbb {F}_q$, the nonzero polynomial $x_1^q - x_1$ equals the zero function. The proof of $(3) \implies (2)$ is by induction on $n \geqslant 1$, and is left to the reader (or see, e.g., standard textbooks, or even [Reference Choudhury and Khare16]) – as is the proof of the final assertion.
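The finite-field obstruction used in the proof-sketch can be seen numerically. The following sketch assumes q is prime, so that $\mathbb {F}_q$ can be modeled as the integers modulo q (prime powers would need a genuine finite-field implementation); the function names are hypothetical.

```python
def x_to_q_minus_x(q):
    """Coefficients (constant term first) of x**q - x, reduced modulo q."""
    coeffs = [0] * (q + 1)
    coeffs[1] = -1 % q
    coeffs[q] = 1
    return coeffs


def evaluate_mod(coeffs, x, q):
    """Evaluate the polynomial at x modulo q using Horner's rule."""
    value = 0
    for c in reversed(coeffs):
        value = (value * x + c) % q
    return value


def is_zero_function(coeffs, q):
    """True if the polynomial vanishes at every element of Z/qZ."""
    return all(evaluate_mod(coeffs, x, q) == 0 for x in range(q))


for q in (2, 3, 5, 7, 11):
    coeffs = x_to_q_minus_x(q)
    # Nonzero as a polynomial (it has nonzero coefficients), yet zero as a function.
    print(q, any(coeffs), is_zero_function(coeffs, q))
```

For prime q the vanishing is Fermat's little theorem; the point of the example is only that a polynomial with nonzero coefficients can induce the zero function when the field is finite.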

By the equivalence in Proposition 2.4, the above polynomial ring $R_0$ equals the ring of polynomial functions in the same number of variables, so (2.1) now holds over the ring of polynomial functions in the above $k + k^2 + 2K$ variables – but only on the nonzero-locus of the polynomial $(\det \mathbf {D}) \prod _i A_i$, since we used $A_i^{-1}$ and the invertibility of $\mathbf {D}$ in the above proof.

Now for the final touch: as $(\det \mathbf {D}) \prod _i A_i$ is a nonzero polynomial, its nonzero-locus is Zariski dense in affine space $\mathbb {A}_{\mathbb {Q}}^{k + k^2 + 2K}$ (by Proposition 2.4). Since the difference of the polynomials in (2.1) (this is where we use that $\det (\cdot )$ is a polynomial!) vanishes on the above nonzero-locus, it does so for all values of $A_i$ and the other variables. Therefore, (2.1) holds in the ring $R^{\prime }_0$ of polynomial functions with coefficients in $\mathbb {Q}$, hence upon restricting to the polynomial subring of $R^{\prime }_0$ with integer (not just rational) coefficients – since the polynomials on both sides of (2.1) have integer coefficients. Finally, the proof is completed by specializing the variables $A_i$ to specific scalars $a_i$ in an arbitrary unital commutative ring R, and similarly for the other variables.

Theorem 2.3, when specialized to $p_i^{(l)} = q_i^{(l)} = 1$ for all $1 \leqslant i \leqslant k$ and $1 \leqslant l \leqslant n_i$, reveals how to convert the sizes $n_{x_i}$ in the blowup-matrix $D_{X[\mathbf {n}]}$ into entries of the related matrix $N(\mathbf {a},D)$. This helps prove a result in the introduction: that $\det D_{X[\mathbf {n}]}$ is a polynomial in $\mathbf {n}$.

Proof of Theorem B

Everything but the final sentence follows from Theorem 2.3, specialized to

$$ \begin{align*} R = \mathbb{R}, \qquad n_i = n_{x_i}, \qquad d_{ij} = &\ d(x_i, x_j)\ \forall i \neq j, \qquad d_{ii} = 2 d(x_i, X \setminus \{ x_i \}) = -a_i, \\ D = \mathcal{D}_X = &\ (d_{ij})_{i,j=1}^k, \qquad p_i^{(l)} = q_i^{(l)} = 1\ \forall 1 \leqslant l \leqslant n_i. \end{align*} $$

(A word of caution: $d_{ii} \neq d(x_i, x_i)$, and hence $\mathcal {D}_X \neq D_X$: they differ by a diagonal matrix.)

In particular, $p_X(\mathbf {n})$ is a multi-affine polynomial in $\mathbf {q}_i^T \mathbf {p}_i = n_i$. We also write out the blowup-polynomial, useful here and below:

$$ \begin{align} p_X(\mathbf{n}) = \det &\ N(\mathbf{a}_X,\mathcal{D}_X), \quad \text{where} \quad \mathbf{a}_X = (D_X - \mathcal{D}_X) (1,1,\dots,1)^T,\\ \text{and so} \quad &\ N(\mathbf{a}_X,\mathcal{D}_X) = \operatorname{\mathrm{diag}}((n_{x_i} - 1) 2 d(x_i, X \setminus \{ x_i \}))_i + (n_{x_i} d(x_i, x_j))_{i,j=1}^k. \notag \end{align} $$
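As a sanity check on the displayed formula, the sketch below compares a brute-force determinant of the blowup distance matrix with the determinant of $N(\mathbf{a}_X,\mathcal{D}_X)$ for the two-point space with $d(x_1,x_2) = 1$. It rests on two assumptions that are not restated in this section: that $X[\mathbf{n}]$ has $n_x$ copies of each point x, with distinct copies of x at distance $2 d(x, X \setminus \{ x \})$ and copies of distinct points x, y at distance $d(x,y)$; and that Theorem B takes the form $\det D_{X[\mathbf{n}]} = \prod_x (-2 d(x, X \setminus \{ x \}))^{n_x - 1} \, p_X(\mathbf{n})$. All function names are illustrative.

```python
from itertools import permutations, product
from math import prod


def det(M):
    """Determinant via the Leibniz expansion (only meant for tiny matrices)."""
    size = len(M)
    total = 0
    for perm in permutations(range(size)):
        inversions = sum(
            1 for i in range(size) for j in range(i + 1, size) if perm[i] > perm[j]
        )
        sign = -1 if inversions % 2 else 1
        total += sign * prod(M[i][perm[i]] for i in range(size))
    return total


def nearest(d, i):
    """d(x_i, X minus {x_i}): distance from x_i to its nearest other point."""
    return min(d[i][j] for j in range(len(d)) if j != i)


def n_matrix(d, n):
    """The matrix N from the display: diag((n_i - 1) * 2 * nearest_i) + (n_i * d_ij)."""
    k = len(d)
    return [
        [n[i] * d[i][j] + ((n[i] - 1) * 2 * nearest(d, i) if i == j else 0)
         for j in range(k)]
        for i in range(k)
    ]


def blowup_distance_matrix(d, n):
    """Distance matrix of X[n], under the definition assumed in the lead-in."""
    owner = [i for i in range(len(d)) for _ in range(n[i])]
    return [
        [0 if a == b
         else 2 * nearest(d, owner[a]) if owner[a] == owner[b]
         else d[owner[a]][owner[b]]
         for b in range(len(owner))]
        for a in range(len(owner))
    ]


two_points = [[0, 1], [1, 0]]  # X = {x_1, x_2} with d(x_1, x_2) = 1
for n in product(range(1, 4), repeat=2):
    lhs = det(blowup_distance_matrix(two_points, n))
    scale = prod((-2 * nearest(two_points, i)) ** (n[i] - 1) for i in range(2))
    print(n, lhs, scale * det(n_matrix(two_points, n)))
```

For each tuple the last two printed numbers agree; for instance $\mathbf{n} = (2,1)$ gives 4 on both sides. The Leibniz expansion is used only to keep the sketch dependency-free and is unsuitable beyond very small matrices.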

Now the constant term is obtained by evaluating $\det N(\mathbf {a}_X, 0_{k \times k})$, which is easy since $N(\mathbf {a}_X, 0_{k \times k})$ is diagonal. Similarly, the coefficient of $n_{x_i}$ is obtained by setting all other $n_{x_{i'}} = 0$ in $\det N(\mathbf {a}_X,\mathcal {D}_X)$. Expand along the ith column to compute this determinant; now adding these determinants over all i yields the claimed formula for the linear term.
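The two evaluations just described can be traced on a small example. The sketch below is illustrative only: it takes three equally spaced points on a line as the metric space (our choice, not an example from the paper), builds N from the displayed formula for $N(\mathbf{a}_X,\mathcal{D}_X)$, and reads off the constant term and the coefficients of the $n_{x_i}$. Because $p_X$ is multi-affine, the coefficient of $n_{x_i}$ is the value at the ith unit vector minus the constant term.

```python
from itertools import permutations
from math import prod


def det(M):
    """Determinant via the Leibniz expansion (only meant for tiny matrices)."""
    size = len(M)
    total = 0
    for perm in permutations(range(size)):
        inversions = sum(
            1 for i in range(size) for j in range(i + 1, size) if perm[i] > perm[j]
        )
        sign = -1 if inversions % 2 else 1
        total += sign * prod(M[i][perm[i]] for i in range(size))
    return total


def nearest(d, i):
    """d(x_i, X minus {x_i}): distance from x_i to its nearest other point."""
    return min(d[i][j] for j in range(len(d)) if j != i)


def blowup_polynomial(d, n):
    """Evaluate p_X(n) = det N at an integer tuple n, using the displayed formula."""
    k = len(d)
    N = [
        [n[i] * d[i][j] + ((n[i] - 1) * 2 * nearest(d, i) if i == j else 0)
         for j in range(k)]
        for i in range(k)
    ]
    return det(N)


path = [[0, 1, 2], [1, 0, 1], [2, 1, 0]]  # three points on a line, unit spacing
k = len(path)

# Constant term: with every n_i = 0 the matrix N is diag(-2 * nearest_i).
constant = blowup_polynomial(path, (0,) * k)
print(constant, prod(-2 * nearest(path, i) for i in range(k)))  # -8 -8

# Coefficient of n_i: value at the i-th unit vector minus the constant term.
linear = [
    blowup_polynomial(path, tuple(int(j == i) for j in range(k))) - constant
    for i in range(k)
]
print(linear)  # [8, 8, 8]
```

In this example each determinant at a unit vector is zero, since the ith column of N then vanishes, so every linear coefficient is the negative of the constant term.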

As a further refinement of Theorem B, we isolate every term in the multi-affine polynomial $p_X(\mathbf {n})$. Two consequences follow: (a) a formula relating the blowup-polynomials for a metric space X and its subspace Y; and (b) a sufficient condition for two monomials in $p_X(\mathbf {n})$ to have equal coefficients. In order to state and prove these latter two results, we require the following notion.

Definition 2.5 We say that a metric subspace Y of a finite metric space $(X,d)$ is admissible if for every $y \in Y$, there exists $y' \in Y$ such that $d(y, X \setminus \{ y \}) = d(y,y')$.

For example, in every finite simple connected unweighted graph G with the minimum edge-distance as its metric, a subset Y of vertices is admissible if and only if the induced subgraph in G on Y has no isolated vertices.
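The graph characterization can be tested by brute force on a small case. The sketch below checks Definition 2.5 literally against shortest-path distances and compares the answer with the "no isolated vertices" criterion, over all vertex subsets of a path on four vertices. The adjacency-dictionary representation, the function names, and the choice of graph are assumptions made for illustration; the graph is taken to be connected with at least two vertices.

```python
from collections import deque
from itertools import combinations


def distances(adj):
    """All-pairs shortest-path (edge-count) distances, by BFS from each vertex."""
    dist = {}
    for source in adj:
        seen = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in seen:
                    seen[v] = seen[u] + 1
                    queue.append(v)
        dist[source] = seen
    return dist


def is_admissible(adj, Y):
    """Definition 2.5, checked directly on the graph metric."""
    d = distances(adj)
    for y in Y:
        # d(y, X minus {y}): distance from y to its nearest other vertex.
        nearest = min(d[y][x] for x in adj if x != y)
        if not any(d[y][z] == nearest for z in Y if z != y):
            return False
    return True


def has_no_isolated_vertex(adj, Y):
    """True if every vertex of Y has a neighbor inside Y."""
    return all(any(v in Y for v in adj[y]) for y in Y)


path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}  # the path 0 - 1 - 2 - 3
subsets = [set(c) for r in range(len(path) + 1) for c in combinations(path, r)]
agree = all(
    is_admissible(path, Y) == has_no_isolated_vertex(path, Y) for Y in subsets
)
print(agree)
print(is_admissible(path, {0, 1}), is_admissible(path, {0, 1, 3}))
```

Here `{0, 1}` is admissible, while `{0, 1, 3}` is not, because vertex 3 has no neighbor in the subset.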

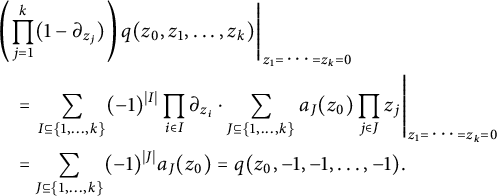

Proposition 2.6 Notation as above.

-

(1) Given any subset $I \subseteq \{ 1, \dots , k \}$, the coefficient in $p_X(\mathbf {n})$ of $\prod _{i \in I} n_{x_i}$