Policy Significance Statement

The results of this experimental study suggest that respondents perceive independent algorithmic decision-making (ADM) about the European Union (EU) budget to be illegitimate. EU policy-makers should exercise caution when incorporating ADM systems in the political decision-making process. ADM systems for far-reaching decisions, such as budgeting, may only be used to assist or inform human decision-makers rather than replacing them. An additional takeaway from this study is that the factual and perceived legitimacy of ADM do not necessarily correspond—that is, even ADM systems that produce high-quality outputs, and are implemented transparently and fairly, may still be perceived as illegitimate and might, therefore, be rejected by the electorate. To be socially acceptable, implementation of ADM systems must, therefore, take account of both factual and perceived legitimacy.

1 Introduction

The European Union (EU) currently faces a number of significant crises, most notably the European debt crisis, the distribution of refugees across EU member states, the so-called “Brexit” (withdrawal of the United Kingdom from the EU), and the social and economic consequences of the Covid-19 pandemic. As a result, right-wing populist parties promoting anti-EU messages have gained momentum and threaten the stability of the EU as a whole (Schmidt, 2015). To resolve these crises, the EU must demonstrate responsiveness to citizens’ concerns (input legitimacy), effective and transparent procedures (throughput legitimacy), and good governance performance (output legitimacy) (Weiler, 2012; Schmidt, 2013). However, the EU allegedly lacks legitimacy on all three counts because of a democratic deficit in the institution’s design, the lack of a European identity, the inadequacies of the European public sphere, and the intricacies of producing effective policies for all member states (Follesdal, 2006; Habermas, 2009; Risse, 2014; De Angelis, 2017).

To improve their legitimacy, EU political institutions have increasingly committed to data-driven forms of governance. By integrating digital data into political processes, the EU increasingly seeks to base decision-making on sound empirical evidence (e.g., the Data4Policy project). In particular, algorithmic decision-making (ADM) systems are used to identify pressing societal issues, to forecast potential policy outcomes, to inform the policy process, and to evaluate policy effectiveness (Poel et al., 2018; AlgorithmWatch, 2019). For instance, ADM systems have been shown to successfully support decision-making regarding the socially acceptable distribution of refugees. Trials suggest that this approach increases refugee employment rates by 40–70% as compared to human-led distribution practices (Bansak et al., 2018).

However, little is known about the specific impact of ADM on public perceptions of legitimacy. On the one hand, high public support for digitalization, in general, and autonomous systems, in particular, means that the use of ADM may increase perceived legitimacy (European Commission, 2017). Notably, ADM systems are commonly perceived as true, objective, and accurate and, therefore, capable of reducing human bias in the decision-making process (Lee, 2018). On the other hand, ADM-based policy-making poses a number of novel challenges in terms of perceived legitimacy: (a) Citizens may believe that they have little influence on ADM selection criteria, for instance, which digital data are collected, or on which indicators the algorithm ultimately bases decisions (input legitimacy). (b) Citizens may not understand the complex and often opaque technicalities of the ADM process (throughput legitimacy). (c) Citizens may doubt that ADM systems can make better decisions than humans, or they may question whether certain decisions produce the desired results (output legitimacy).

Few studies have investigated the effects of ADM on perceptions of legitimacy, especially with respect to political decisions. To date, empirical studies have tended to focus on public sector areas, such as education and health, evaluating the effects of ADM as compared to human decision-making (HDM) in terms of variables such as fairness and trust (Lee, 2018; Araujo et al., 2020; Marcinkowski et al., 2020). While those studies investigate decisions that affect individual citizens (e.g., decisions about loans or university admissions), the political context examined in this study refers to decisions that affect societal groups, or even society as a whole. To bridge this research gap, the present study investigates the extent to which the use of ADM influences the perceived legitimacy of policy-making at EU level. To that end, we use EU budgeting decisions as a case in point. Even though fully or semiautomated ADM is unlikely to be implemented in EU decision-making in the near future, it is key to gain empirical insights into its potential consequences. This point refers to the so-called “Collingridge dilemma,” which states that every new technology is accompanied by two competing concerns. “On one hand, regulations are difficult to develop at an early technological stage because their consequences are difficult to predict. On the other hand, if regulations are postponed until the technology is widely used, then the recommendations come too late” (Awad et al., 2020, p. 53). Addressing this dilemma, the study extends the existing literature in three respects. (1) It provides novel insights into the potential of ADM to exacerbate or alleviate the EU’s perceived legitimacy deficit. (2) It clarifies the effects of three distinct decision-making arrangements on perceptions of legitimacy: (a) independent decision-making by EU politicians or HDM; (b) independent decision-making by AI-based systems or ADM; and (c) hybrid decision-making (HyDM), where politicians select among decisions suggested by ADM systems. (3) Using structural means modeling (SMM) to analyze citizens’ perceptions, the study proposes a general measure of input, throughput, and output legitimacy.

2 A Crisis of EU Legitimacy? Input, Throughput, Output

In making effective decisions to resolve major crises, the EU’s actions depend on political legitimacy. According to Gurr, “governance can be considered legitimate in so far as its subjects regard it as proper and deserving of support” (Gurr, 1971, p. 185). In his seminal work on legitimacy, Scharpf (1999) distinguished between two dimensions of legitimacy: input legitimacy and output legitimacy. Input legitimacy is characterized as “responsiveness to citizen concerns as a result of participation by the people” (Schmidt, 2013, p. 2). It thus depends on free and fair elections, high voter turnout, and lively political debate in the public sphere (Scharpf, 1999). Output legitimacy refers to “the effectiveness of the EU’s policy outcomes for the people” (Schmidt, 2013, p. 2), that is, the EU’s problem-solving capacity in pursuing desired goals, such as preserving peace, ensuring security, protecting the environment, and fostering prosperity (Follesdal, 2006). Moving beyond this dichotomy, some scholars (Schmidt, 2013; Schmidt and Wood, 2019) have added throughput as a third dimension of legitimacy, referring to the accountability, efficacy, and transparency of EU policy-makers and their “inclusiveness and openness to consultation with the people” (Schmidt, 2013, p. 2). Also referred to as the “black box” (Steffek, 2019, p. 1), throughput legitimacy encompasses the political practices and processes of EU institutions in turning citizen input into policy output (Steffek, 2019; Schmidt and Wood, 2019).

Ever since the EU was founded, and especially since the failed Constitutional Treaty referenda in France and the Netherlands in 2005, European integration has been dogged by criticisms that the EU lacks legitimacy. Most scholars point to the democratic deficit, the lack of a European identity, and an inadequate public sphere as primary reasons for this alleged crisis of legitimacy (Follesdal and Hix, 2006; Habermas, 2009). The debate centers on four arguments (Follesdal, 2006; Follesdal and Hix, 2006; Holzhacker, 2007; De Angelis, 2017). First, among key EU political institutions, only the European Parliament (EP) is legitimized by European citizens by means of elections, but scholars argue that the EP is too weak in comparison to the European Commission (EC) (Follesdal and Hix, 2006). While continuous reform of EU treaties has substantially strengthened the EP’s role within the institutional design of the EU, it still lacks the power to initiate legislation (Holzhacker, 2007). Second, the EU’s institutional design gives national governments pivotal power over the Council of the EU and the EC. However, as those actors are somewhat exempt from parliamentary scrutiny by the EP and national parliaments, there is a deficit in democratic checks and balances (Follesdal and Hix, 2006). Third, the European elections are not sufficiently “European” (Follesdal, 2006); that is, “they are not about the personalities and parties at the European level or the direction of the EU policy agenda” (Follesdal and Hix, 2006, p. 536). Instead, national politicians, parties, and issues still dominate campaigns and remain crucial in citizens’ voting decisions (Hobolt and Wittrock, 2011). Finally, European citizens are arguably too detached from the EU (Follesdal and Hix, 2006). Public opinion research suggests that although a sense of European identity, trust in European institutions, and satisfaction with EU democracy are on the rise, these pale in comparison to the corresponding scores at national level (Risse, 2014; European Commission, 2019b). Consequently, scholars have argued that the EU lacks a European demos, that is, “a strong sense of community and loyalty among a political group” (Risse, 2014, p. 1207). In addition, the alleged lack of a European public sphere that would enable communication and debate around political issues lends further credence to the claim that the EU suffers from insufficient citizen participation (Habermas, 2009; Kleinen-von Königslöw, 2012).

As all four arguments primarily question the EU’s input and throughput legitimacy, many have argued that output is the stronghold of the EU’s legitimacy. According to Scharpf, “the EU has developed considerable effectiveness as a regulatory authority” (Scharpf, 2009, p. 177). In that regard, the EU enables member states to implement policies that they would otherwise be unable to advance, especially in relation to global policy issues (Menon and Weatherill, 2008). Weiler contended that output legitimacy “is part of the very ethos of the Commission” (Weiler, 2012, p. 828), but recent crises have also challenged this view; for instance, the austerity measures imposed on debtor states had detrimental effects on the lives of many European citizens (De Angelis, 2017). Debate about the EU’s alleged legitimacy crisis centers primarily on institutional shortcomings in the political system, and public perceptions of legitimacy are neglected. However, Jones (2009) claimed that subjective perceptions are often more important than the normative criteria themselves.

3 ADM for Policy-making in the EU?

In recent years, EU institutions have increasingly sought to address this perceived deficit of legitimacy through evidence-based policy-making: “Against the backdrop of multiple crises, policymakers seem ever more inclined to legitimize specific ways of action by referring to ‘hard’ scientific evidence suggesting that a particular initiative will eventually yield the desired outcomes” (Rieder and Simon, 2016, p. 1). This push for numerical evidence comes at a time when the computerization of society has precipitated the creation and storage of vast amounts of digital data. According to Boyd and Crawford, the so-called big data “offer a higher form of intelligence and knowledge that can generate insights that were previously impossible, with the aura of truth, objectivity, and accuracy” (Boyd and Crawford, 2012, p. 663). It is often presumed that the more data are analyzed (preferably all available data, as “N = all” trumps sampling), the greater the potential to gain insights and obtain the best result (Mayer-Schönberger and Cukier, 2013). Digital data are collected, accessed, and analyzed in real time, leading to substantial advances in analytics, modeling, and dynamic visualization (Craglia et al., 2018; Poel et al., 2018; Verhulst et al., 2019). This transformation of real-world phenomena into digital data is expected to provide a timely and undistorted view of societal mechanisms and institutions.

Lately, public discourse around the potential of computerization and big data has included a renewed focus on Artificial Intelligence (AI). According to Katz,

“AI stands for a confused mix of terms—such as ‘big data,’ ‘machine learning,’ or ‘deep learning’—whose common denominator is the use of expensive computing power to analyze massive centralized data. (…) It’s a vision in which truth emerges from big data, where more metrics always need to be imposed upon human endeavors, and where inexorable progress in technology can ‘solve’ humanity’s problems”

(Katz, 2017, p. 2).

Indeed, the increasing availability of digital data in combination with significant advances in computing power has underpinned the recent emergence of many successful AI applications, such as self-driving cars, natural language generation, and face recognition. This has, in turn, raised expectations regarding the use of AI for evidence-based or data-driven policy-making (Esty and Rushing, 2007; Giest, 2017; Poel et al., 2018). To exploit technological developments and increasing data availability for policy-making purposes, the EC contracted the Data4Policy project (Rubinstein et al., 2016), arguing that “data technologies are amongst the valuable tools that policymakers have at hand for informing the policy process, from identifying issues, to designing their intervention and monitoring results” (European Commission, 2019a, para. 1).

In that context, van Veenstra and Kotterink (2017, p. 101) noted that “data-driven policy making is not only expected to result in better policies, but also aims to create legitimacy.” Recent reports suggest that ADM systems are already in use throughout the EU to deliver public services, optimize traffic flows, or identify social fraud (Poel et al., 2018; AlgorithmWatch, 2019). Case studies confirm that ADM systems can indeed contribute to better policy (Bansak et al., 2018), using big data to identify emerging issues, to foresee demand for political action, to monitor social problems, and to design policy options (Poel et al., 2018; Verhulst et al., 2019). To that extent, data-driven systems can potentially contribute to the increased legitimacy of input (by enabling new forms of citizen participation), of throughput (by making the political process more transparent), and of output (by increasing the quality of policies and outcomes).

Yet, despite these promising indications, there are numerous examples of AI’s pitfalls in political decision-making. For instance, a recent report by the research institute AI Now revealed that ADM systems may falsely accuse citizens of fraud, arbitrarily exclude them from food support programs, or mistakenly reduce their disability benefits. Incorrect classification by ADM systems has led to a wave of lawsuits against the US government at federal and state levels, undermining both the much-vaunted cost efficiency of automated systems and the perceived legitimacy of political decision-making as a whole (Richardson et al., 2019). As a consequence, in tackling the issues that come with the implementation of AI systems in society, the EU has appointed an AI High-Level Expert Group that developed “Ethics Guidelines for Trustworthy AI” (Artificial Intelligence High-Level Expert Group, 2019). All seven key requirements introduced in the guidelines also address issues at the heart of potential legitimacy concerns of the public with regard to proposed ADM systems in governance. Most prominently, when it comes to policy-making, ADM raises important questions concerning the need for human agency and oversight, transparency, diversity, non-discrimination, and fairness, as well as accountability. In terms of the three dimensions of legitimacy, ADM systems pose the following challenges: (a) On the input dimension, citizens may lack insight into or influence over the criteria or data that intelligent algorithms use to make decisions. This may undermine fundamental democratic values such as civic participation or representation. (b) On the throughput dimension, citizens may be unable to comprehend the complex and often inscrutable logic that underpins algorithmic predictions, recommendations, or decisions. The corresponding opacity of the decision-making process may violate the due process principle, for example, that citizens receive explanations for political decisions and have the opportunity to file complaints or even go to court. (c) On the output dimension, citizens may fundamentally doubt whether ADM systems actually contribute to better and/or more efficient policy. This may conflict with key democratic principles, such as non-discrimination.

As with all technological innovations, success or failure depends greatly on all stakeholders’ participation and acceptance (Bauer, 1995). In the present context, those stakeholders include EU institutions, bureaucracies, and regulators who may favor the introduction of ADM systems in policy-making, and the electoral body of voters who legitimize proposed policies and implementation. While there are no existing accounts of citizens’ perceptions of ADM systems in the context of political decision-making in the EU, survey data provide some initial insights. Several Eurobarometer surveys have shown that public perception of digital technologies is broadly positive throughout the EU, especially when compared to perceptions of other mega-technologies, such as nuclear power, biotechnology, or gene editing (European Commission, 2015, 2017). According to a recent survey commissioned by the Center for the Governance of Change, “25% of Europeans are somewhat or totally in favor of letting an artificial intelligence make important decisions about the running of their country” (Rubio and Lastra, 2019, p. 10). On that basis, it seems likely that demands to embed AI in the political process will increase, and that political programs will respond to those demands.

4 Hypotheses

The key objective of this study was to investigate whether and to what extent ADM systems in policy-making influence public perceptions of EU input, throughput, and output legitimacy. Previous empirical studies have suggested that different decision-making arrangements (e.g., formal versus descriptive representation, direct voting versus deliberation) can differ significantly in terms of their perceived legitimacy (Esaiasson et al., 2012; Persson et al., 2013; Arnesen and Peters, 2018; Arnesen et al., 2019). However, as those studies did not specifically investigate the potential effects of ADM systems, the present study sought to distinguish between three different decision-making arrangements: (a) independent decision-making by EU politicians (HDM); (b) independent decision-making by ADM systems (ADM); and (c) HyDM by politicians, based on suggestions made by ADM systems. The reasoning for our hypotheses refers to the specific decision-making process tested in this study, namely the distribution of the EU budget to different policy areas.

With regard to perceived input legitimacy, we contend that respondents are likely to perceive the current decision-making process as more legitimate than processes that rely partly or completely on ADM. The primary reason for this assumption is that transferring some (HyDM) or all (ADM) authority over EU budgeting decisions to algorithms is likely to diminish the role of democratically elected institutions, which would undermine a fundamental pillar of representative democracies. As a result, algorithmic or hybrid decision systems would probably marginalize the opportunities for citizen participation and thereby decrease input legitimacy. This reasoning, of course, applies primarily to those technologies that directly decide on policy, instead of being involved in less far-reaching stages of the policy cycle, such as agenda setting or policy evaluation (Verhulst et al., 2019). Furthermore, as Barocas and Selbst (2016) point out, minorities and other disadvantaged social groups are often underrepresented in existing digital data, making them vulnerable to being disregarded by ADM. Even though initial evidence indicates that data science has some potential to increase citizen participation and representation by assessing policy preferences via opinion mining of social media data (Ceron and Negri, 2016; Sluban and Battiston, 2017), it is unlikely that citizens perceive such indirect and rather unknown forms of political participation to be more legitimate than currently existing democratic procedures. On that basis, we tested the following preregistered hypotheses (see preregistration at Open Science Framework (OSF)):

H1a: HDM leads to higher perceived input legitimacy as compared to ADM.

H1b: HDM leads to higher perceived input legitimacy as compared to HyDM.

H1c: HyDM leads to higher perceived input legitimacy as compared to ADM.

With regard to perceived throughput legitimacy, we argue that implementation of ADM leads to lower levels of perceived legitimacy as compared to the existing political process. Even though EU decision-making processes are often criticized for their lack of transparency, ADM systems suffer from the same deficiency, as they are themselves considered to be a “black box” (Wachter et al., 2018). The extent of transparency of self-learning systems, however, is a major driver of public perceptions of legitimacy (De Fine Licht and De Fine Licht, 2020). A recent EC report, therefore, stressed the urgent need to make ADM more explainable and transparent (Craglia et al., 2018) on the grounds that such systems are typically too complex for the layperson to understand and are largely unable to give proper justifications for decisions. Moreover, their ability to mitigate discrimination in decision-making processes is still contested in the literature. While some empirical evidence suggests that ADM may lead to more positive perceptions of procedural fairness (Marcinkowski et al., 2020), ADM is also prone to reproduce and even exacerbate existing societal biases (Barocas and Selbst, 2016). Furthermore, ADM systems lack public accountability because citizens do not know whom to turn to regarding policy or administrative failures. Indeed, preliminary empirical evidence suggests that activities that require human skills are perceived as fairer and more trustworthy when executed by humans rather than algorithms (Lee, 2018). On that basis, we formulated the following hypotheses.

H2a: HDM leads to higher perceived throughput legitimacy as compared to ADM.

H2b: HDM leads to higher perceived throughput legitimacy as compared to HyDM.

H2c: HyDM leads to higher perceived throughput legitimacy as compared to ADM.

Several scholars suggest that the EU already legitimizes itself primarily via the output dimension (Scharpf, 2009; Weiler, 2012) due to the aforementioned democratic deficit on the input dimension. Below, we argue that implementing algorithmic or hybrid decision systems would mean that the EU is doubling down on output legitimacy. Perceived output legitimacy comprises two key dimensions: citizens’ perceptions of whether political decisions can attain predefined goals (e.g., economic growth, environmental sustainability), and the subjective favorability of such decisions. Assessment of the perceived quality of political output involves both dimensions, and this is where ADM systems are said to have a distinct advantage over human decision-makers, as they can produce novel insights from vast amounts of digital data that would be impossible when relying solely on human intelligence (Boyd and Crawford, 2012). Empirical studies comparing public perceptions of ADM and HDM seem to support this assumption; looking at proxies for legitimacy, ADM systems are evaluated as fairer in distributive terms than HDM (Marcinkowski et al., 2020), especially in high-impact situations (Araujo et al., 2020). Building on these empirical findings, we further argue that citizens perceive ADM systems to be most legitimate when they operate under the scrutiny of democratically elected institutions. Thus, we formulated the following hypotheses.

H3a: HDM leads to lower perceived goal attainment as compared to ADM.

H3b: HDM leads to lower perceived goal attainment as compared to HyDM.

H3c: HyDM leads to higher perceived goal attainment as compared to ADM.

H4a: HDM leads to lower decision favorability as compared to ADM.

H4b: HDM leads to lower decision favorability as compared to HyDM.

H4c: HyDM leads to higher decision favorability as compared to ADM.

5 Method

To test these hypotheses, we conducted an online experiment, applying a between-subjects design using one factor with three levels: (a) EU politicians making decisions independently (condHDM); (b) ADM systems making decisions independently (condADM); and (c) ADM systems suggesting decisions to be passed by EU politicians (condHyDM) (see the preregistration at OSF; Footnote 1). All measurements and stimulus material and the questionnaire’s basic functionality were thoroughly tested in multiple pretests involving 321 respondents in total.

5.1 Sample

Respondents were recruited through the noncommercial SoSci Open Access Panel (OAP) during the period April 8–22, 2019. In accordance with German law, SoSci OAP registration involves a double opt-in process, in which panelists first sign up using an email address and must then activate their account and confirm pool membership (Leiner, 2016). Although the SoSci OAP is not representative in terms of sociodemographic variables, its key advantage is participant motivation; as respondents are not compensated for survey participation, their main motivation is topic interest, which is a crucial indicator of data quality (Brüggen et al., 2011). In addition, all questionnaires using the SoSci OAP must first undergo rigorous peer review, thus ensuring “major improvements to the instrument before data are collected” (Leiner, 2016, p. 373).

Using Soper’s (2019) a priori sample size calculator for structural equation modeling, we determined an optimal sample size of 520, based on the results from a pretest conducted 10 weeks before final data collection. Altogether, 3,000 members of the SoSci OAP were invited by e-mail to participate in the study. In total, 612 respondents completed the questionnaire. A thorough two-step cleaning process was applied for quality control purposes. The first step excluded respondents who failed an attention check regarding the target topic (n = 14). In the second step, using the DEG_Time variable (Leiner, 2019), each respondent accumulated minus points for completing single questions or the whole questionnaire too quickly. As the SoSci OAP administrators recommend a threshold score of 50 for rigorous filtering, all respondents with a minus point score of 50 or higher were excluded from the analysis (n = 26). After filtering, the sample comprised 572 respondents, a response rate of 19.1%.
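The sample flow described above can be re-derived with simple arithmetic. The following Python sketch uses only the counts reported in the text; the variable names are illustrative and do not come from the study materials.

```python
# Sample-flow arithmetic, using only the counts reported in the text.
invited = 3000          # SoSci OAP members invited by e-mail
completed = 612         # respondents who completed the questionnaire
failed_attention = 14   # step 1: failed the attention check
too_fast = 26           # step 2: DEG_Time minus-point score of 50 or higher
target_n = 520          # a priori target from the sample size calculator

final_n = completed - failed_attention - too_fast
response_rate = final_n / invited
meets_target = final_n >= target_n

print(f"final sample: {final_n}")
print(f"response rate: {response_rate:.1%}")
print(f"meets a priori target: {meets_target}")
```

Note that the reported rate of 19.1% corresponds to the cleaned sample divided by the number of invitations, not to the number of completed questionnaires.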

5.2 Treatment conditions (independent variable)

Respondents were randomly assigned to one of the three conditions and received a short text (ca. 250 words per condition) about the decision-making process regarding distribution of the annual EU budget. The stimulus material also included a pie chart, showing budget allocation for different policy areas to inform readers about the range of budgetary items and their distribution in the actual EU budget. For reasons of validity, the text was adapted from the official EU website (European Union, 2019). While the pie chart was identical for all three conditions, the closing paragraph of the text was edited to reflect manipulation of the independent variable: (a) decisions made by politicians of EU institutions only, that is, the status quo (condHDM; n = 182); (b) decisions made by ADM only (condADM; n = 204); and (c) decisions suggested by ADM and subsequently passed by politicians of EU institutions (condHyDM; n = 186) (see Footnote 2). In the two latter stimuli that make reference to ADM, we did not mention the specific criteria as to how the AI system would be instructed what an optimal distribution might look like. The wording, which makes reference to “all available data being used to deliver optimal results,” thus merely connects to the abovementioned popular trope that ever larger data sets offer superior results that are beyond human abilities and comprehension. Randomization checks suggest that no differences between the distribution of respondents to the conditions exist in terms of age (M = 47.26, SD = 15.99; F(2, 569) = .182, p = .834); gender (female = 45.8%, male = 53.5%, diverse = .7%; χ²(4) = 1.89, p = .757); education (non-tertiary education = 40.6%, tertiary education = 59.4%; χ²(2) = .844, p = .656); and political interest (M = 3.85, SD = .82; F(2, 569) = .491, p = .608) (see Footnote 3). Respondents were not deceived into thinking that condADM and condHyDM are existing decision-making procedures in the EU, as it was explicitly stressed that the scenario at hand was only a potential decision-making process. At the end of the survey, they were debriefed about the research interest of the study.
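The degrees of freedom reported for the randomization checks follow directly from the design. As a minimal sketch, the Python snippet below recomputes them from the group sizes and category counts given in the text; the helper functions `anova_df` and `chi2_df` are illustrative names, not part of the study's analysis code.

```python
# Degrees of freedom implied by the design, using figures reported in the text.
group_sizes = {"condHDM": 182, "condADM": 204, "condHyDM": 186}
k = len(group_sizes)                  # number of experimental conditions
n_total = sum(group_sizes.values())   # cleaned sample size


def anova_df(n, groups):
    """(between, within) degrees of freedom for a one-way ANOVA."""
    return groups - 1, n - groups


def chi2_df(rows, cols):
    """Degrees of freedom for a chi-square test of independence."""
    return (rows - 1) * (cols - 1)


print(n_total)                # 572
print(anova_df(n_total, k))   # (2, 569), as in F(2, 569) for age
print(chi2_df(3, k))          # 4: gender has three categories
print(chi2_df(2, k))          # 2: education has two categories
```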

5.3 Manipulation check

All respondents answered two items that served as manipulation checks to validate that respondents perceived the differences in the respective conditions. First, perceived technical automation of the decision-making process was assessed by responses (on a five-point Likert scale) to the question How technically automated was the decision-making process? The results indicated a significant difference among the three conditions (F(2, 524) = 389.71, p < .001). Using a Games-Howell post hoc test, condHDM (M = 2.11; SD = .97), condADM (M = 4.52; SD = .77), and condHyDM (M = 4.16; SD = .80) were found to differ significantly from each other, confirming that respondents recognized the extent to which the described decision-making processes were technically automated.

The perceived involvement of political actors and institutions in the different decision-making arrangements was measured by responses (on a five-point Likert scale) to the question: What role did politicians or political institutions play in the decision-making process? Again, there were significant differences among the three conditions (F(2, 548) = 161.98, p < .001). Using a Games-Howell post hoc test, condHDM (M = 4.45; SD = .88), condADM (M = 2.63; SD = .99), and condHyDM (M = 3.41; SD = 1.04), all were found to differ significantly from each other, confirming that respondents recognized the degree to which political actors and institutions were involved in each condition.
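The pairwise comparisons behind a Games-Howell test can be sketched from the summary statistics reported for the automation item. The sketch below is illustrative only: it assumes that each group's n equals the number of respondents assigned to that condition (the actual per-item n is smaller because of missing and "don't know" answers), and it computes only the test statistic and the Welch-Satterthwaite degrees of freedom, not the p-value. For three groups and large degrees of freedom, the 5% critical value of the studentized range is roughly 3.31.

```python
import math
from itertools import combinations

# Means and SDs as reported for the automation manipulation check.
# ASSUMPTION: group sizes are set to the assigned group sizes; the true
# per-item n is not reported and is smaller because of missing answers.
groups = {
    "condHDM": {"mean": 2.11, "sd": 0.97, "n": 182},
    "condADM": {"mean": 4.52, "sd": 0.77, "n": 204},
    "condHyDM": {"mean": 4.16, "sd": 0.80, "n": 186},
}


def games_howell(a, b):
    """Return the Games-Howell q statistic and Welch-Satterthwaite df."""
    va = a["sd"] ** 2 / a["n"]  # squared standard error of group a
    vb = b["sd"] ** 2 / b["n"]  # squared standard error of group b
    q = abs(a["mean"] - b["mean"]) / math.sqrt((va + vb) / 2)
    df = (va + vb) ** 2 / (va ** 2 / (a["n"] - 1) + vb ** 2 / (b["n"] - 1))
    return q, df


# One entry per pair of conditions, keyed by the two condition names.
results = {
    (x, y): games_howell(groups[x], groups[y])
    for x, y in combinations(groups, 2)
}

for (x, y), (q, df) in results.items():
    print(f"{x} vs {y}: q = {q:.2f}, df = {df:.1f}")
```

Each q would be compared against the studentized range distribution with three groups and the pair-specific df; unlike Tukey's test, this procedure does not assume equal variances or equal group sizes.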

5.4 Measures

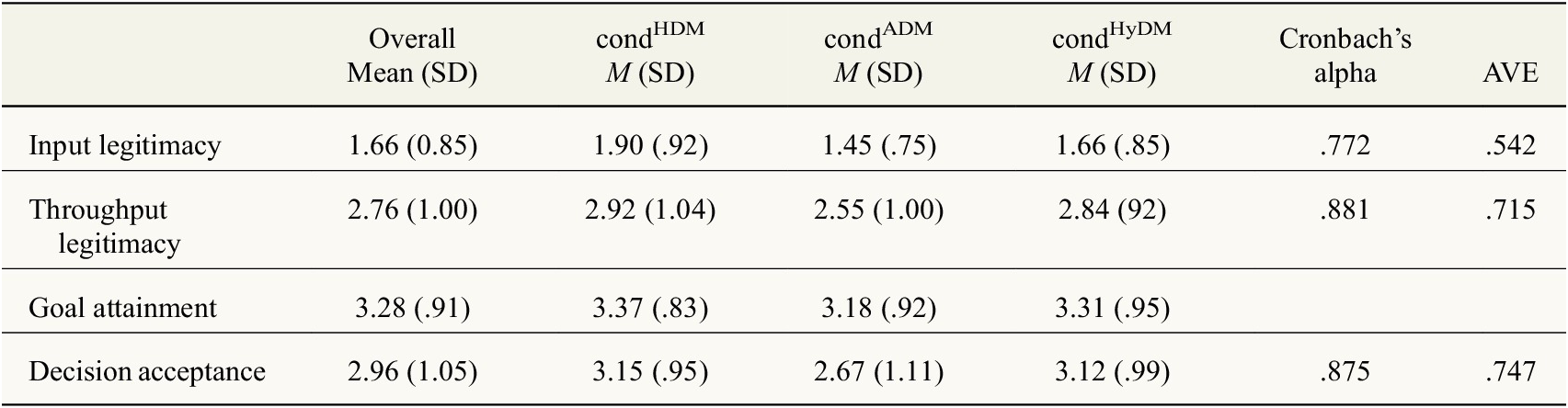

As Persson et al. (Reference Persson, Esaiasson and Gilljam2013, p. 391) rightly noted, “legitimacy is an inherently abstract concept that is hard to measure directly.” To account for this difficulty, measures for input legitimacy (dV1), throughput legitimacy (dV2), and output legitimacy, the latter captured by two dependent variables, goal attainment (dV3) and decision favorability (dV4), were thoroughly pretested and validated. All items used in the analysis were measured on a five-point Likert scale, ranging from 1 (do not agree) to 5 (agree) and including the residual category don’t know. Footnote 4 The factorial validity of all measures was assessed using Cronbach’s alpha (α) and average variance extracted (AVE) (Table 1).
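For readers unfamiliar with the two indices, the following minimal sketch shows how Cronbach's alpha and AVE are commonly computed. The responses and standardized loadings are hypothetical and serve only to illustrate the formulas; they are not the study's data, and the function names are illustrative.

```python
from statistics import mean, variance


def cronbach_alpha(items):
    """Cronbach's alpha; items is a list of equal-length score lists."""
    k = len(items)
    # Sum score of each respondent across the k items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance = sum(variance(column) for column in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


def average_variance_extracted(loadings):
    """AVE as the mean of the squared standardized factor loadings."""
    return mean(loading ** 2 for loading in loadings)


# HYPOTHETICAL responses of six respondents to three items (1-5 scale).
il1 = [1, 2, 2, 3, 4, 5]
il2 = [1, 1, 2, 3, 5, 5]
il3 = [2, 2, 3, 3, 4, 5]
alpha = cronbach_alpha([il1, il2, il3])

# HYPOTHETICAL standardized loadings from a confirmatory factor analysis.
ave = average_variance_extracted([0.80, 0.85, 0.75])

print(f"alpha = {alpha:.3f}, AVE = {ave:.3f}")
```

By common rules of thumb, α above roughly .70 and AVE above .50 are read as acceptable internal consistency and convergent validity, respectively.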

Table 1. Descriptives and factorial validity

Abbreviation: AVE, average variance extracted.

Input Legitimacy (dV1). Three items were used to measure perceived input legitimacy, using wording adapted Footnote 5 from previous studies (Lindgren and Persson, Reference Lindgren and Persson2010; Persson et al., Reference Persson, Esaiasson and Gilljam2013; Colquitt and Rodell, Reference Colquitt, Rodell, Cropanzano and Ambrose2015): (a) All citizens had the opportunity to participate in the decision-making process (IL1); (b) People like me could voice their opinions in the decision-making process (IL2); and (c) People like me could influence the decision-making process (IL3). All items were randomized and used as indicators of a latent variable in the analysis.

Throughput Legitimacy (dV2). To measure perceived throughput legitimacy, three items were adapted from Werner and Marien (Reference Werner and Marien2018). Respondents were asked to indicate to what extent they perceived the decision-making process described in the stimulus material as (a) fair (TL1); (b) satisfactory (TL2); and (c) appropriate (TL3). All items were randomized and used as indicators of a latent variable in the analysis.

Goal Attainment (dV3). To measure perceived goal attainment, which is considered an important pillar of output legitimacy (Lindgren and Persson, Reference Lindgren and Persson2010), respondents were asked to indicate to what extent they believed the decision-making process could achieve the goals referred to in the stimulus text (adapted from the official EU website): (a) Better development of transport routes, energy networks, and communication links between EU countries (GA1); (b) Improved protection of the environment throughout Europe (GA2); (c) An increase in the global competitiveness of the European economy (GA3); and (d) Promoting cross-border associations of European scientists and researchers (GA4) (European Union, 2019). The order of the items was randomized. As the four goals can be independently attained, the underlying construct is not one-dimensional and reflective. For that reason, we computed a mean index for goal attainment that was used as a manifest variable in the analysis.

Decision Favorability (dV4). In the existing literature, decision acceptance or favorability is commonly used as a measure of legitimacy (Esaiasson et al., Reference Esaiasson, Gilljam and Persson2012; Werner and Marien, Reference Werner and Marien2018). Conceptualizing decision favorability as the second key pillar of output legitimacy, we used three items to measure dV4. Two of these items were adopted from Werner and Marien’s (Reference Werner and Marien2018) four-item scale: (a) I accept the decision (DF1), and (b) I agree with the decision (DF2). As the other two items in their scale refer to the concept of reactance, we opted to formulate one additional item: (c) The decision satisfies me (DF3). All items were randomized and used as indicators of a latent variable in the analysis.

5.5 Data analysis

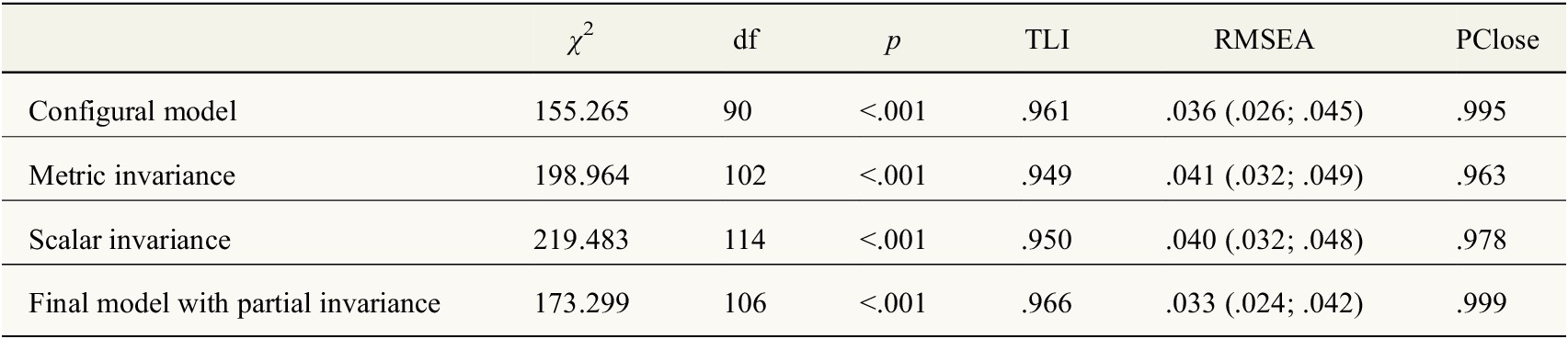

The analysis employed SMM, incorporating all four variables in a single model. As this approach takes account of measurement error due to latent variables, it was adopted in preference to traditional analysis of variance (Breitsohl, Reference Breitsohl2019). To test the hypotheses, we compared means between groups, using critical ratios for differences between parameters in the specified model. All statistical analyses were performed using AMOS 23. Because of missing data, Full Information Maximum Likelihood estimation was used in conjunction with estimation of means and intercepts (Kline, Reference Kline2016). Full model fit was assessed using a chi-square test and RMSEA (lower and upper bound of the 90% confidence interval, PClose value), along with the Tucker–Lewis-Index (TLI) measure of goodness of fit (Holbert and Stephenson, Reference Holbert and Stephenson2002; van de Schoot et al., Reference van de Schoot, Lugtig and Hox2012). Differences in means were investigated by obtaining critical ratios (CR); for CR > 1.96 or < −1.96, respectively, the parameter difference indicated two-sided statistical significance at the 5% level.
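The decision rule for critical ratios can be illustrated with a short sketch. It treats the critical ratio as a z statistic, that is, the difference between two parameter estimates divided by the standard error of that difference. The numbers are hypothetical and the function name is illustrative; this is not AMOS output.

```python
from statistics import NormalDist


def critical_ratio(diff, se_diff):
    """Return the critical ratio (a z statistic) and its two-sided p-value."""
    cr = diff / se_diff
    p = 2 * (1 - NormalDist().cdf(abs(cr)))
    return cr, p


# HYPOTHETICAL latent mean difference and standard error of the difference.
cr, p = critical_ratio(diff=-0.30, se_diff=0.10)
significant = abs(cr) > 1.96  # two-sided test at the 5% level

print(f"CR = {cr:.2f}, p = {p:.4f}, significant: {significant}")
```

The threshold of 1.96 is simply the standard normal quantile at which the two-sided p-value equals .05.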

As the experimental design compared three groups, we tested the measurement models of all latent factors for measurement invariance (van de Schoot et al., Reference van de Schoot, Lugtig and Hox2012; Kline, Reference Kline2016). This test was necessary to assess whether factor loadings (metric invariance) and item intercepts (scalar invariance) were equal across groups. This “strong invariance” is a necessary precondition to confirm that latent factors are measuring the same construct and can be meaningfully compared across groups (Widaman and Reise, Reference Widaman, Reise, Bryant, Windle and West1997). The chi-square-difference test for strong measurement invariance in Table 2 shows that the assumptions of metric and scalar invariance are violated. Subsequent testing of indicator items identified indicator IL2 as non-invariant. On that basis, a model with only partial invariance was estimated, freeing both the indicator loading and item intercept constraints of IL2. A chi-square-difference test for partial measurement invariance showed that this model did not fit significantly worse than the configural model (Δχ² = 18.034, Δdf = 16; p = .322). The final model with partial measurement invariance fit the data well (χ²(106) = 173.299, p < .001; RMSEA = .033 (.024; .042); PClose = .999; TLI = .966). The latent means of the specified model with partial invariance were constrained to zero in condHDM. On that basis, the first condition, in which only EU politicians made decisions about the EU budget, was used as the reference group when reporting the results of group comparisons.
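The reported fit statistics can be checked by hand. The sketch below computes the upper-tail probability of a chi-square statistic using only the standard library, and the RMSEA point estimate using the common single-sample formula. Two assumptions are made here: N is taken as the total number of assigned respondents (182 + 204 + 186), and no multi-group correction is applied to the RMSEA. The function names are illustrative.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    z = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-z)             # closed form for df = 2
    else:
        a, q = 0.5, math.erfc(math.sqrt(z))  # closed form for df = 1
    # Recurrence Q(a + 1, z) = Q(a, z) + z**a * exp(-z) / Gamma(a + 1),
    # evaluated on the log scale for numerical stability.
    while 2 * a < df:
        q += math.exp(a * math.log(z) - z - math.lgamma(a + 1))
        a += 1.0
    return q


def rmsea(chi2, df, n):
    """RMSEA point estimate (single-sample formula, ASSUMED here)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Chi-square difference test: partial invariance vs. configural model.
p_diff = chi2_sf(18.034, 16)

# Overall fit of the final model; N is ASSUMED to be 182 + 204 + 186.
p_model = chi2_sf(173.299, 106)
fit = rmsea(173.299, 106, 182 + 204 + 186)

print(f"p_diff = {p_diff:.3f}, p_model = {p_model:.5f}, RMSEA = {fit:.3f}")
```

Under these assumptions, the sketch yields values that round to the reported p = .322 for the difference test and RMSEA = .033 for the final model. A non-significant difference test indicates that the additional equality constraints do not significantly worsen model fit.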

Table 2. Tests for measurement invariance

6 Results

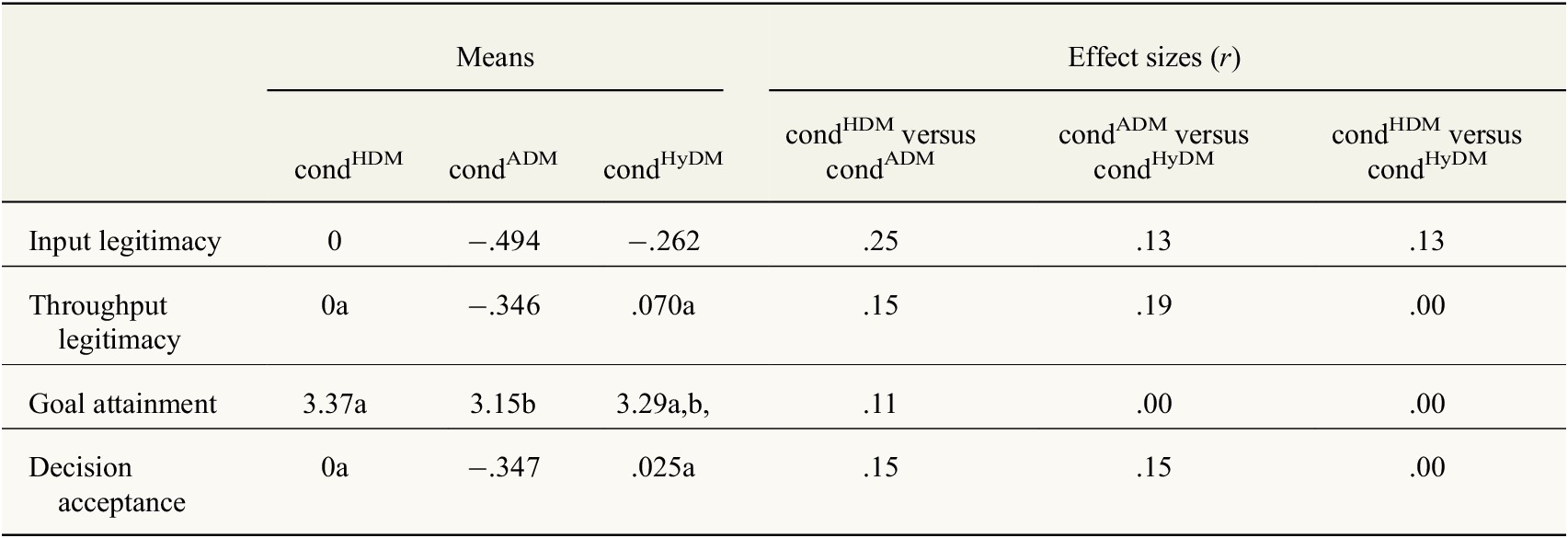

Construct means are shown in Table 3. In addition, a standardized effect size r, based on a transformation of Hedges’ g as proposed by Steinmetz et al. (Reference Steinmetz, Schmidt, Tina-Booh, Wieczorek and Schwartz2009), was calculated manually. This is also reported in Table 3.
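As an illustration of this kind of effect size calculation, the sketch below uses the textbook observed-score formulas: Hedges' g with its small-sample correction, followed by the standard conversion from a standardized mean difference to r. This may differ in detail from the latent-variable procedure described by Steinmetz et al. The two means are the goal attainment means of condADM and condHDM; the standard deviations are hypothetical, and the group sizes are assumed to equal the assigned group sizes.

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference with Hedges' small-sample correction."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    d = (m1 - m2) / math.sqrt(pooled_var)
    correction = 1 - 3 / (4 * (n1 + n2) - 9)
    return d * correction


def g_to_r(g, n1, n2):
    """Convert a standardized mean difference to a correlation r."""
    a = (n1 + n2) ** 2 / (n1 * n2)  # equals 4 when the groups are equal in size
    return g / math.sqrt(g ** 2 + a)


# Means for condADM (3.15) and condHDM (3.37) on goal attainment.
# ASSUMPTIONS: SD = 0.80 in both groups is HYPOTHETICAL; n is set to the
# assigned group sizes (204 and 182).
g = hedges_g(3.15, 0.80, 204, 3.37, 0.80, 182)
r = g_to_r(g, 204, 182)

print(f"g = {g:.3f}, r = {r:.3f}")
```

Expressing group differences as r places them on a bounded scale between −1 and 1, which eases comparison across the four dependent variables.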

Table 3. Comparisons of structured means of the legitimacy dimensions

Note. Means not sharing any letter are significantly different by the test of critical ratios at the 5% level of significance.

With regard to perceived input legitimacy, we assumed that this would be highest in condHDM (in which only EU politicians made budget decisions) and lowest in condADM (decisions based solely on ADM), with condHyDM (ADM and EU politicians combined) somewhere between the two. The results indicate that respondents perceived input legitimacy as significantly lower in condADM (ΔM = −.494, p < .001) and condHyDM (ΔM = −.262, p = .011) compared to condHDM. As the difference between these conditions was also significant (ΔM = −.232, p = .009), hypotheses H1a, H1b, and H1c were supported.

For perceived throughput legitimacy, the results indicate (as expected) that condADM was perceived as significantly less legitimate than condHDM (ΔM = −.346, p < .001). No difference was observed between condHDM and condHyDM (ΔM = −.070, p = .481), but condHyDM differed significantly from condADM (ΔM = −.276, p = .004). As a consequence, hypotheses H2a and H2b were supported while H2c was rejected.

In contrast to input and throughput legitimacy, we assumed that condHDM would score lower than the other two conditions for perceived goal attainment, and that condHyDM would score higher than the other two conditions. In fact, condHDM returned the highest mean (M = 3.37) and did not differ significantly from condHyDM (M = 3.29; ΔM = .083, p = .383). Again, condADM scored lowest (M = 3.15) and differed significantly from condHDM (ΔM = .223, p = .014) but not from condHyDM (ΔM = −.140, p = .151). These results provided no support for hypotheses H3a, H3b, or H3c and even ran counter to the assumptions of H3a.

We anticipated that perceived decision favorability would be highest for condHyDM and lowest for condHDM, with condADM somewhere between the two. In fact, condADM scored significantly lower than condHDM (ΔM = −.347, p < .001) and significantly lower than condHyDM (ΔM = −.372, p < .001). There was no significant difference between condHDM and condHyDM (ΔM = −.025, p = .809). As a result, H4a and H4b were rejected while H4c was accepted.

7 Discussion

This paper answers the call for more empirical research to understand the nexus of ADM for political decision-making and its perceived legitimacy. How does the integration of AI into policy-making influence people’s perceptions of the legitimacy of the decision-making process? In pursuit of preliminary answers to this question, the results of a preregistered online experiment that systematically manipulated levels of autonomy given to an algorithm in EU policy-making yielded three main insights. First, existing EU decision-making arrangements were considered the most participatory—that is, they scored highest on input legitimacy. Second, in terms of process quality (throughput legitimacy) and outcome quality (output legitimacy), no differences were observed between existing decision-making arrangements and HyDM. Finally, decision-making, informed solely by ADM, was perceived as the least legitimate arrangement across all three legitimacy dimensions. In the following sections, we consider the implications of these findings for EU legitimacy, data-driven policy-making, and avenues for future research.

7.1 Implications for the legitimacy of the EU

Our findings lend further credence to previous assertions that the EU lacks political legitimacy (Holzhacker, Reference Holzhacker2007), in that current decision-making arrangements, which solely involve EU politicians, score low on input legitimacy (M = 1.90 on a five-point Likert scale). This finding speaks to a previously noted democratic deficit (Follesdal, Reference Follesdal2006; Follesdal and Hix, Reference Follesdal and Hix2006). The present results further reveal that ADM systems do not seem to offer an appropriate remedy; on the contrary, it seems that such systems may even exacerbate the problem, as the existing process is still perceived as having greater input legitimacy than arrangements based wholly (ADM condition) or partly (HyDM condition) on ADM systems. It appears that ADM systems fail to engage citizens in the decision-making process or to make their voices heard. Implementing ADM technologies to assist or replace human political actors is seen as less democratic than the status quo, even though incumbent decision-makers such as the European Commission themselves lack democratic legitimacy. One plausible explanation for this finding is that ADM systems are perceived as even more technocratic and detached from voters than EU politicians. For that reason, citizens favor human decision-makers when dealing with human tasks, aligning with earlier findings by Lee (Reference Lee2018).

As the EU depends heavily on public approval, it seems important to explore alternative ways of increasing its legitimacy. Rather than leaving political decisions to ADM systems, less far-reaching forms of data-driven policy-making might help to achieve this goal. Beyond decision making, data-driven applications can help to address input legitimacy deficits by contributing to a much wider range of tasks that include foresight and agenda setting (Ceron and Negri, Reference Ceron and Negri2016; Poel et al., Reference Poel, Meyer and Schroeder2018). For instance, some existing applications already use public discourse and opinion poll data to predict issues that require political action before these become problematic (Ceron and Negri, Reference Ceron and Negri2016; Rubinstein et al., Reference Rubinstein, Meyer, Schroeder, Poel, Treperman, van Barneveld, Biesma-Pickles, Mahieu, Potau and Svetachova2016). Further empirical investigation is needed to assess how such applications might affect legitimacy perceptions. Our findings from a German OAP imply citizens’ skepticism regarding the potential of ADM systems to increase democratic participation and citizens’ representation (input legitimacy).

With regard to the quality of decision-making processes—throughput legitimacy—we found no difference between existing decision-making arrangements and hybrid regimes, involving ADM systems and EU politicians. However, citizens seem to view decision-making based solely on ADM systems as less fair or appropriate than the other two arrangements. Regarding existing EU procedures and practices, critics lament a lack of transparency, efficiency, and accountability (Schmidt and Wood, Reference Schmidt and Wood2019), yet ADM systems exhibit the same deficiency (Shin and Park, Reference Shin and Park2019). Inside the “black box,” ADM systems change and adapt decision-making criteria according to new inputs and elusive feedback loops that defy explanation even among AI experts. Under the umbrella term “explainable AI,” a significant strand of computer science literature seeks to enhance ADM’s transparency to users and the general public (Miller, Reference Miller2019; Mittelstadt et al., Reference Mittelstadt, Russell and Wachter2019). For instance, “counterfactual explanations” indicate which ADM criteria would need to be changed to arrive at a different decision (Wachter et al., Reference Wachter, Mittelstadt and Russell2018).

Regarding citizens’ perceptions of the effectiveness and favorability of decision-making outcomes (output legitimacy), we found no difference between the existing decision-making process and hybrid regimes incorporating ADM systems and EU politicians. ADM-based systems alone are considered unable to achieve the desired goals, and the respondents in our sample would not approve of the corresponding decisions. It is important to note that decision output was identical for all three experimental conditions, and that only the decision-making process varied. Nevertheless, the different processes resulted in differing perceptions of output legitimacy, implying that factual legitimacy (as in the actual quality of policies and their outcomes) and perceived legitimacy are not necessarily congruent. In relation to the European debt crisis, Jones (Reference Jones2009) suggested that political institutions must convince the public that they are performing properly, whatever their actual performance. As the interplay between actual and perceived performance also seems important in the case of ADM, we contend that both aspects warrant equal consideration when implementing such systems in policy-making.

Some of the present results run counter to our hypotheses. Given the largely positive attitude to AI in the EU (European Commission, 2015, 2017), and in light of recent empirical evidence (Araujo et al., Reference Araujo, Helberger, Kruikemeier and de Vreese2020; Marcinkowski et al., Reference Marcinkowski, Kieslich, Starke and Lünich2020), we expected ADM to score highly on output legitimacy. However, respondents expressed a more favorable view of HDM and HyDM outcomes, suggesting that they consider it illegitimate to leave important EU political decisions solely to automated systems. ADM systems were considered legitimate as long as humans remained in the loop, indicating that to maintain existing levels of perceived legitimacy, ADM systems should support or inform human policy-makers rather than replacing them. This finding is consistent with the recommendations for “trustworthy AI” by the HLEG calling for human oversight “through governance mechanisms such as a human-in-the-loop (HITL), human-on-the-loop (HOTL), or human-in-command (HIC) approach” (Artificial Intelligence High-Level Expert Group, 2019, p. 16). However, if the decision-making process includes the capability for human intervention or veto, respondents in our study perceived ADM systems as fairly legitimate even in far-reaching decision-making like distributing the EU budget. More research is needed to systematically investigate whether this finding is stable across different political decisions to zoom in on potential differences between collective decisions that affect the whole society (e.g., EU budget) and individual-level decisions that affect single citizens (e.g., loan granting).

Furthermore, the results suggest that implementing hybrid systems for EU policy-making would mean that the EU doubles down on focusing on output legitimacy instead of input legitimacy as citizens do not perceive those systems to solve the democratic deficit of the EU (input legitimacy), yet they consider them to produce equally good policy outcomes. The findings also indicate that increasing factual legitimacy (e.g., by improving the quality of policy outcomes) does not necessarily yield a corresponding increase in perceived legitimacy.

7.2 Implications for data-driven policy-making

Our findings also contribute to the current discussion around data-driven or algorithmic policy-making. To begin, ADM systems as sole decision-makers do not seem to enhance citizens’ assessment of decision-making procedures or outcomes in our sample. However, when such systems operate under the scrutiny of democratically elected institutions (as in the hybrid condition), they are seen to be as legitimate as the existing policy-making process. This suggests that including humans in the loop is a necessary precondition for implementing ADM (Goldenfein, Reference Goldenfein, Bertram, Gibson and Nugent2019), lending support to the EU’s call for trustworthy AI that highlights the crucial importance of human agency and oversight. With recent reports indicating that this may thus be the more plausible scenario in the immediate future (Poel et al., Reference Poel, Meyer and Schroeder2018; AlgorithmWatch, 2019), this finding has important implications for data-driven policy-making, as it shows that citizens view HITL, HOTL, or HIC decision-making as legitimate arguably because politicians can modify or overrule decisions made by ADM systems (Dietvorst et al., Reference Dietvorst, Simmons and Massey2018).

Of course, our study tests a far-reaching form of algorithmic policy-making, in which algorithms take important budgeting decisions under conditions of limited (HyDM condition) or no (ADM condition) democratic oversight. Yet, as Verhulst, Engin, and Crowcroft point out: “Data have the potential to transform every part of the policy-making life cycle—agenda setting and needs identification; the search for solutions; prototyping and implementation of solutions; enforcement; and evaluation” (Verhulst et al., Reference Verhulst, Engin and Crowcroft2019, p. 1). Public administration has only recently begun to exploit the potential of ADM to produce better outcomes (Wirtz et al., Reference Wirtz, Weyerer and Geyer2019). For instance, the Netherlands now uses an ADM system to detect welfare fraud, and in Poland, the Ministry of Justice has implemented an ADM system that randomly allocates court cases to judges (AlgorithmWatch, 2019). Given the increased data availability and computing power fueling powerful AI innovations, it is reasonable to assume that we have only scratched the surface of algorithmic policy-making and that more far-reaching forms of ADM will be implemented in the future. Moreover, first opinion polls suggest that significant shares of citizens (25% in the EU) agree with AI taking over important political decisions about their country (Rubio and Lastra, Reference Rubio and Lastra2019). The present findings suggest that implementation processes should be designed to facilitate synergies between ADM and HDM.

7.3 Implications for future empirical research

Four main limitations of this study outline avenues for future empirical research. First, our sample was not representative of the German population. As data were collected using the noncommercial SoSci OAP, the convenience sample was skewed in terms of education. This may have yielded slightly more positive perceptions of current EU legitimacy (HDM condition) as compared to the German population, as previous evidence suggests that higher levels of education are associated with more positive attitudes to the EU (Boomgaarden et al., Reference Boomgaarden, Schuck, Elenbaas and de Vreese2011). Furthermore, the sample may be over-sophisticated in terms of digital literacy and general interest in ADM, which are likely to be associated with perceived legitimacy of as well as trust in algorithmic processes (Cheng et al., Reference Cheng, Guo, Chen, Li, Zhang and Gao2019). To make stronger claims in terms of the generalizability of the results, future research should use representative national samples.

Second, due to the successful randomization check and better power and fit indices of the model, we did not further control for confounding variables. Future research needs to investigate the effects of individual factors (e.g., EU attitudes, digital literacy) on the perceived legitimacy of HDM, ADM, and HyDM in the EU context.

Third, our study was limited to Germany. While German citizens generally hold more positive views of the EU compared to the European average (European Commission, 2019b), they also favor ADM in politics more than the European average (Rubio and Lastra, Reference Rubio and Lastra2019). Future studies should investigate the relationship between AI-driven decision-making and perceptions of legitimacy in other national contexts and by means of cross-country comparisons. For instance, preliminary opinion polls suggest that Dutch citizens express much higher support for ADM in policy-making than citizens of Portugal (43% versus 19%) (Rubio and Lastra, Reference Rubio and Lastra2019).

Finally, two of the three decision-making arrangements tested here are hypothetical and are unlikely to be implemented in the immediate future—that is, ADM systems are unlikely to be authorized to allocate the EU’s annual budget. On that basis, future research should focus on the effects of less abstract data-driven applications on perceived legitimacy at different stages of the policy cycle and should include varying degrees of transparency of self-learning systems (De Fine Licht and De Fine Licht, Reference De Fine Licht and De Fine Licht2020). In light of the EU’s recent efforts to implement trustworthy AI systems in society, further empirical scrutiny needs to be devoted to assess whether proposed standards and guidelines are also in accordance with public demands and expectations. For instance, citizens may consider it more legitimate to employ AI-based systems to identify existing societal issues requiring political action or to evaluate the success of legislation based on extensive available data, on the condition that such systems will be able to give convincing justifications for their decisions.

8 Conclusion

This study sheds initial light on citizens’ perceptions of the legitimacy of using ADM in EU policy-making. Based on these empirical findings, we draw two conclusions. First, EU policy-makers should exercise caution when incorporating ADM systems in the decision-making process. To maintain the current levels of perceived legitimacy, ADM systems should only be used to assist or consult human decision-makers rather than replacing them, as excluding humans from the loop seems detrimental to perceived legitimacy. Second, the factual and perceived legitimacy of ADM do not necessarily correspond; that is, even ADM systems that produce high-quality outputs and are implemented transparently and fairly may still be perceived as illegitimate and might, therefore, be rejected. To be socially acceptable, implementation of ADM systems must, therefore, take account of both factual and perceived legitimacy. This study lays the groundwork for further research and will hopefully spark further investigations addressing the impact of specific nuances of ADM in data-driven policy-making.

Acknowledgments

We would like to thank Nils Köbis for his valuable comments on the manuscript. A preprint of the manuscript is available at arXiv:2003.11320.

Funding Statement

This research project was self-funded.

Competing Interests

The authors declare no competing interests exist.

Data Availability Statement

Replication data can be found in Zenodo: https://doi.org/10.5281/zenodo.3728207

Author Contributions

Both authors have contributed to the Conceptualization, Data curation, Formal Analysis, Methodology, Project administration, Visualization, Writing—original draft, Writing—review and editing. All authors approved the final submitted draft.

Ethical Standards

The research meets all ethical guidelines, including adherence to the legal requirements of the study country.

Supplementary Materials

To view supplementary material for this article, please visit http://dx.doi.org/10.1017/dap.2020.19.
