1. Introduction

Two points in a homogeneous and isotropic turbulent flow tend to move apart. As a result, a cloud of Lagrangian tracers spreads under the action of turbulent fluctuations. This fact, known since Taylor (1922) and Richardson (1926), is usually called turbulent diffusion or dispersion, and is often modelled using eddy diffusivities, which represent turbulent fluctuations in much the same way as molecular diffusivities represent molecular agitation and thermal fluctuations.

Formally, the above scenario is valid only in homogeneous and isotropic flows. In the ‘dynamic’ case of a stratified flow, where density differences actively modify the velocity field, the homogeneity and isotropy assumptions break down. Typically, in that case parcels of fluid – and, hence, Lagrangian tracers carried by these parcels – are subject to restoring buoyancy forces. At least when density is not allowed to mix irreversibly, i.e. when the molecular diffusivity $\kappa$ of the scalar setting density is zero, such buoyancy forces tend to bring vertically displaced parcels back to their initial (equilibrium) position, even in the presence of turbulent fluctuations that tend to disperse them. In this case, we expect (eddy) diffusion by turbulent motion to be limited, and a stationary probability distribution to emerge that describes the restricted spreading of tracers away from their initial position, in the same way that the diffusive spreading of Brownian particles is constrained by confining potential forces.

On the other hand, when $\kappa \neq 0$, (irreversible) mixing through molecular diffusion alters the density of a vertically perturbed parcel. As a result, the expected equilibrium position of such a parcel changes dynamically and irreversibly as it comes back to rest. The relevant time scale after which mixing substantially alters the density of a fluid parcel (the mixing time, denoted $t_{M}$ in what follows) is primarily controlled by the rate of kinematic deformation of the parcel (and, hence, by gradients in the macroscopic velocity field that stirs it), with molecular diffusion entering only through logarithmic or weak power-law corrections (Villermaux 2019). Stirring motions therefore increase the rate of mixing (which would otherwise be controlled by the relatively slow molecular diffusion time scale), and this mixing in turn affects the buoyancy forces that drive the stirring motions (Caulfield 2021). How does this intricate two-way coupling affect the dispersion of Lagrangian tracers? Kimura & Herring (1996) and Venayagamoorthy & Stretch (2006) showed empirically that a stationary distribution emerges for the vertical displacement of tracers in stratified turbulent flows with $\kappa \neq 0$. Lindborg & Brethouwer (2008) showed analytically that the root-mean-square vertical displacement in freely decaying stratified turbulent flows indeed has a finite limit as time increases, and predicted this limit up to a free parameter corresponding to the time-integrated fraction of potential energy that is dissipated. These studies focused on the effect of the stratification on the dispersion of Lagrangian tracers. Here, we focus on the effects of the molecular properties of the flow.

Our goal is to develop a minimal model describing the (stochastic) path of Lagrangian tracers in stratified turbulent flows. As discussed above, in addition to turbulent fluctuations, the dynamics of Lagrangian tracers is affected by restoring buoyancy forces and by mixing. Here we model restoring buoyancy forces as a resetting process (Evans & Majumdar 2011; Evans, Majumdar & Schehr 2020) that constrains the otherwise diffusive spread of a cloud of tracers. Mixing is modelled as a memory process (Boyer, Evans & Majumdar 2017). Indeed, as a parcel of fluid carrying Lagrangian tracers settles towards its equilibrium position, it mixes with the surrounding environment and, hence, keeps track of the density levels seen along its path, emphasising the fact that ‘history matters’ (Villermaux 2019). In particular, its density equilibrates with that of the surrounding fluid at the mixing time $t_{M}$, as shown experimentally by Petropoulos et al. (2023). As a result, the parcel tends to equilibrate at the position it had at $t_{M}$.

Various parameters arise when building the model, and we constrain them by studying a reduced-order model for the vertical displacement of an elementary (Lagrangian) density structure carrying Lagrangian tracers (similar to the one developed in Petropoulos et al. 2023). Importantly, the stochastic model arising from this analysis is simple enough to be studied analytically. More precisely, we show the emergence of a stationary probability distribution, and we derive scalings for the properties of this distribution as a function of the molecular properties of the fluid (via the Prandtl number $Pr := \nu/\kappa$, where $\nu$ is the kinematic viscosity) as well as of the turbulent characteristics of the flow. We compare the theoretical predictions of the model with numerical data, both from Riley & de Bruyn Kops (2003) and from new, previously unreported simulations at various $Pr \neq 1$.

Note that one-dimensional stochastic models have been used successfully in the past to reproduce turbulence characteristics in various flows. Notably, Kerstein (1999) formulated a concise model for turbulence in buoyancy-driven flows that accounts for the interplay of advection, molecular transport and buoyancy forces using random mappings applied to one-dimensional velocity and density profiles. The random mappings, whose characteristics are controlled by the flow energetics, model the effect of turbulent eddies on the velocity and density fields. The philosophy of the model presented here is similar. However, the tools differ, in particular the formulation of the interplay between advection, molecular diffusion and buoyancy forces in terms of stochastic resetting, and so does the purpose of this work.

The rest of this work is organised as follows. We first present a numerical experiment that shows the emergence of a stationary distribution of Lagrangian tracers’ vertical displacements in freely decaying stratified turbulent flows (§ 2). We then present a model for the vertical displacement of Lagrangian tracers in stratified turbulent flows that aims to describe the emergence of such a stationary distribution (§ 3). We also compare the model's output to the numerical data. We highlight some limitations of the model in § 4. Conclusions are drawn in § 5.

2. Numerical experiment

In this section we present the methodology used throughout the paper, starting with a description of the flow examined here. We consider fully resolved (three-dimensional) direct numerical simulations of stably stratified turbulence, building on the simulation campaign originally reported by Riley & de Bruyn Kops (2003). The flow field satisfies the following (dimensionless) Navier–Stokes equations under the Boussinesq approximation:

\begin{equation} \left. \begin{gathered} \partial_{t}\boldsymbol{u} + \boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u} ={-} \boldsymbol{\nabla} p + \frac{1}{Re_{L}}\nabla^{2}\boldsymbol{u} -\left(\frac{2{\rm \pi}}{Fr_{L}}\right)^{2} \rho^{\prime}\hat{\boldsymbol{e}}_{3}, \quad \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u} = 0,\\ \partial_{t}\rho^{\prime} + \boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{\nabla} \rho^{\prime} - u_{3} = \frac{1}{Pr Re_{L}}\nabla^{2}\rho^{\prime}. \end{gathered} \right\} \end{equation}


Here $\hat{\boldsymbol{e}}_{3}$ is the (upward) vertical unit vector, $\rho^{\prime}$ is the density deviation from a linear background density profile characterised by a buoyancy frequency $N$, $p$ is the pressure perturbation away from hydrostatic balance and $\boldsymbol{u} = (u_{1}, u_{2}, u_{3})$ is the velocity vector. Note that the (linear) background density profile is held constant throughout the simulation. The dimensionless parameters are the above-defined Prandtl number $Pr$, the Froude number $Fr_{L} := 2{\rm \pi} U/(NL)$ and the Reynolds number $Re_{L} := UL/\nu$, where $U$ and $L$ are characteristic velocity and length scales associated with the initial conditions. The initial velocity field is of the form

corresponding to Taylor–Green vortices ($(x_{1},x_{2},x_{3})$ is the position vector), where $L := 1/k$ determines the length scale of the initial flow field. A broad-banded noise (with a level of approximately $10\,\%$ of the initial Taylor–Green vortex energy) is added on top of the velocity field (2.2). Note that no density perturbation is initialised, so that density fluctuations only appear due to the action of the flow field on the ambient density gradient, which is initially uniform. No forcing is applied to sustain the turbulence generated by the initial condition, and the flow naturally restratifies after the initial turbulent burst. In this work, the Froude and Reynolds numbers are fixed to $Fr_{L} = 4$ and $Re_{L} = 3200$, and the Prandtl number varies from $Pr = 1$ to $Pr = 50$. The three simulations considered here are summarised in table 1. Boundary conditions are triply periodic.
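As a quick check of the magnitudes involved, the prefactors of the buoyancy, viscous and diffusive terms in the dimensionless equations above can be evaluated for the quoted parameters. The sketch below is illustrative only: the helper name `boussinesq_coefficients` is hypothetical, and only the two end-member Prandtl numbers quoted in the text are used (the intermediate value is listed in table 1, which is not reproduced here). By the definition of $Fr_{L}$, the buoyancy prefactor $(2{\rm \pi}/Fr_{L})^{2}$ equals $(NL/U)^{2}$.

```python
import math

# Dimensionless parameters quoted in the text.
FR_L = 4.0     # Froude number, Fr_L = 2*pi*U/(N*L)
RE_L = 3200.0  # Reynolds number, Re_L = U*L/nu


def boussinesq_coefficients(fr_l: float, re_l: float, pr: float) -> dict:
    """Prefactors of the buoyancy, viscous and diffusive terms (hypothetical helper)."""
    return {
        "buoyancy": (2.0 * math.pi / fr_l) ** 2,  # multiplies rho' e_3; equals (N L / U)^2
        "viscous": 1.0 / re_l,                     # multiplies the Laplacian of u
        "diffusive": 1.0 / (pr * re_l),            # multiplies the Laplacian of rho'
    }


# End-member Prandtl numbers quoted in the text.
for pr in (1.0, 50.0):
    c = boussinesq_coefficients(FR_L, RE_L, pr)
    print(f"Pr = {pr:4.0f}: buoyancy = {c['buoyancy']:.4f}, "
          f"viscous = {c['viscous']:.3e}, diffusive = {c['diffusive']:.3e}")
```

At $Pr = 50$ the diffusive prefactor is $1/160\,000$, fifty times smaller than the viscous one, so density gradients can be sustained at finer scales than velocity gradients.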

Table 1. Summary of the three simulations considered in this work.

We define the dissipation rate of turbulent kinetic energy $\epsilon$ as the volume average of the pointwise local dissipation rate of turbulent kinetic energy, expressed in terms of the strain-rate tensor $s_{ij}$ (the symmetric part of the velocity-gradient tensor) as (in dimensional form, where $\langle \cdot \rangle_{V}$ denotes a volume average)

\begin{equation} \epsilon := 2\nu \langle s_{ij}s_{ij} \rangle_{V}, \quad s_{ij} := \frac{1}{2}\left(\frac{\partial u_{i}}{\partial x_{j}} + \frac{\partial u_{j}}{\partial x_{i}}\right). \end{equation}

This quantity appears in the buoyancy Reynolds number $Re_{b}$, effectively a measure of the intensity of the stratified turbulence, i.e.

\begin{equation} Re_{b} := \left(\frac{L_{O}}{L_{K}}\right)^{4/3} = \frac{\epsilon}{\nu N^{2}}; \quad L_{K} := \left(\frac{\nu^{3}}{\epsilon}\right)^{1/4}, \quad L_{O} := \left(\frac{\epsilon}{N^{3}}\right)^{1/2}, \end{equation}


where $L_{K}$ is the Kolmogorov scale and $L_{O}$ the Ozmidov scale. We also define the (dimensional) destruction rate of buoyancy variance $\chi$ as the volume average

with $\rho_{0}$ denoting a reference density and $g$ the acceleration of gravity. Howland, Taylor & Caulfield (2021) noted that, scaled in this way (i.e. assuming that the appropriately averaged density gradient against which turbulence acts corresponds to the background density gradient), $\chi$ provides a good approximation to the mean diapycnal mixing rate (and, more precisely, to the destruction rate of available potential energy), even in flows with significant variations in local stratification.
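To make the two expressions for the buoyancy Reynolds number concrete, the minimal sketch below evaluates $L_{K}$, $L_{O}$ and $Re_{b}$ from dimensional inputs and checks that $(L_{O}/L_{K})^{4/3}$ and $\epsilon/(\nu N^{2})$ coincide. The input values are hypothetical, ocean-like numbers chosen for illustration (not values from the simulations), and `buoyancy_reynolds` is an illustrative helper name. With $\epsilon = 10^{-8}\,\mathrm{m^{2}\,s^{-3}}$, $\nu = 10^{-6}\,\mathrm{m^{2}\,s^{-1}}$ and $N = 10^{-2}\,\mathrm{s^{-1}}$, one finds $Re_{b} = 100$, $L_{O} = 0.1$ m and $L_{K} \approx 3.2$ mm.

```python
def buoyancy_reynolds(eps: float, nu: float, n: float) -> tuple:
    """Return (Re_b, L_K, L_O) from eps [m^2/s^3], nu [m^2/s] and N [1/s]."""
    l_k = (nu ** 3 / eps) ** 0.25   # Kolmogorov scale
    l_o = (eps / n ** 3) ** 0.5     # Ozmidov scale
    re_b = eps / (nu * n ** 2)      # buoyancy Reynolds number
    return re_b, l_k, l_o


# Hypothetical, ocean-like values (not taken from the simulations).
re_b, l_k, l_o = buoyancy_reynolds(eps=1e-8, nu=1e-6, n=1e-2)
print(f"Re_b = {re_b:.1f}, L_K = {l_k:.2e} m, L_O = {l_o:.2e} m")
# The two expressions for Re_b agree: (L_O / L_K)**(4/3) == eps / (nu * N**2).
print(f"(L_O/L_K)^(4/3) = {(l_o / l_k) ** (4 / 3):.1f}")
```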

The time evolution of $Re_{b}$, $\epsilon$, $\chi$ and the turbulent flux coefficient $\varGamma := \chi/\epsilon$ is presented in figure 1. As $Pr$ increases, $\epsilon$ increases, leading to an increase in the buoyancy Reynolds number $Re_{b}$. Conversely, $\chi$ decreases and, hence, so does the flux coefficient. Similar behaviours have been reported for both decaying and forced stratified turbulent flows (Riley, Couchman & de Bruyn Kops 2023; Petropoulos et al. 2024).

Figure 1. Time evolution of $Re_{b}$ (a), $\epsilon$ (b), $\chi$ (c) and $\varGamma$ (d) for the three simulations studied in this work.

We are interested here in a Lagrangian description of mixing and ask the following question: how do density mixing and its aforementioned dependence on the Prandtl number affect the vertical dispersion of Lagrangian tracers? To answer this question, $32\,000$ Lagrangian particles are released at $t = 7$. The initial positions of the particles are chosen randomly in the simulation domain. These positions determine the density of the parcels of fluid embedding the particles, which can subsequently change through (irreversible) diffusive mixing since $\kappa \neq 0$. A second-order Adams–Bashforth scheme is used to advance the particles in time. The time evolution of the three components of the mean square displacement $\langle \boldsymbol{x}^{2}(t) \rangle$ of the particles is presented in figure 2, where the displacement $\boldsymbol{x}(t)$ is defined as the position of a particle at time $t$ minus its initial position, and $\langle \cdot \rangle$ denotes a statistical average. Whereas the horizontal components of the displacement display a diffusive behaviour (with eddy diffusivities proportional to the slopes of the mean square displacement curves), the mean and variance of the vertical displacement seem to reach a steady state. This suggests the existence of a stationary probability distribution describing the vertical displacement of Lagrangian particles in decaying stratified turbulence. A similar result was observed by Kimura & Herring (1996, figure 5), and similar observations were made by Venayagamoorthy & Stretch (2006). These two studies focused on the effect of the stratification on the vertical dispersion of Lagrangian particles. Here, we focus on the effect of the molecular properties of the flow. In the next section we aim to describe and model the emergence of such a stationary distribution.
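Eddy diffusivities of the kind mentioned above can be extracted from particle trajectories by fitting a straight line to each component of the mean square displacement, using $\langle x_{i}^{2} \rangle \simeq 2K_{i}t$ for diffusive behaviour. The minimal sketch below assumes the trajectories are stored as a NumPy array of shape (times, particles, 3); the function names are illustrative, and the check uses synthetic Brownian trajectories with a hypothetical diffusivity `k_true`, not the DNS data.

```python
import numpy as np


def mean_square_displacement(positions: np.ndarray) -> np.ndarray:
    """positions has shape (n_times, n_particles, 3); returns <x_i^2>(t), shape (n_times, 3)."""
    displacement = positions - positions[0]      # position at t minus initial position
    return np.mean(displacement ** 2, axis=1)    # statistical average over particles


def eddy_diffusivity(t: np.ndarray, msd_component: np.ndarray) -> float:
    """Half the least-squares slope of <x_i^2> versus t, since <x_i^2> = 2 K t for diffusion."""
    slope, _intercept = np.polyfit(t, msd_component, 1)
    return 0.5 * slope


# Synthetic check: isotropic Brownian trajectories with a known (hypothetical) diffusivity.
rng = np.random.default_rng(0)
k_true, dt, n_steps, n_particles = 0.5, 0.01, 400, 2000
steps = rng.normal(0.0, np.sqrt(2.0 * k_true * dt), size=(n_steps, n_particles, 3))
positions = np.concatenate([np.zeros((1, n_particles, 3)), np.cumsum(steps, axis=0)])
t = dt * np.arange(n_steps + 1)
msd = mean_square_displacement(positions)
k_est = [eddy_diffusivity(t, msd[:, i]) for i in range(3)]
print("estimated diffusivities:", np.round(k_est, 3))
```

Applied to the vertical component over the late-time plateau seen in stratified turbulence, such a fit would return a slope close to zero, which is the signature of a stationary distribution rather than of eddy diffusion.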

Figure 2. Time evolution of the mean square displacement in (a) the horizontal and vertical directions and (b) the vertical (only) direction. Note the different vertical axes. A straight line is shown in panel (a) to demonstrate the (close to) diffusive behaviour in the horizontal. The different colours represent different Prandtl numbers.

3. Description of the model

3.1. Model without diffusion and a frozen density field

Let us first briefly consider the case without diffusion of density (i.e. $\kappa = 0$ or, equivalently, $Pr \rightarrow +\infty$). In that case, the density field is advected by the velocity field and, hence, the density carried by a Lagrangian parcel of fluid is constant in time. As a result, one could assume that a Lagrangian parcel in a linearly stratified turbulent flow undergoes Brownian motion in a quadratic potential (modelling the buoyancy forces on this parcel of constant density), provided that the background density profile that the parcel explores is and remains linear. Such dynamics admit a stationary state described by a Gaussian distribution. This method, however, has some drawbacks. It assumes that the density levels that the parcel sees on its stochastic path correspond to the density levels of the initial linear density profile, i.e. that the density field is frozen in time. This assumption might break down for vigorously turbulent flows and for $\kappa \neq 0$, i.e. when vigorous stirring and/or mixing obscure the initially linear background density field. Extending the above method to the case $\kappa \neq 0$ also requires a model for the time evolution of the density carried by the Lagrangian parcel of fluid, which would make the analysis considerably more involved. In the next section we therefore develop a simpler approach, based on stochastic processes subject to resetting.
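The frozen-density limit described above can be illustrated by an Ornstein–Uhlenbeck process, i.e. Brownian motion with diffusivity $D$ in a quadratic potential, $\mathrm{d}z = -\gamma z\,\mathrm{d}t + \sqrt{2D}\,\mathrm{d}W$, whose stationary distribution is Gaussian with variance $D/\gamma$. In the minimal sketch below, the relaxation rate `gamma` is a hypothetical stand-in for the strength of the buoyancy restoring force (it is not a parameter of the model developed in this work), and the process is integrated with the Euler–Maruyama scheme.

```python
import numpy as np


def simulate_ou(d: float, gamma: float, dt: float, n_steps: int,
                n_particles: int, seed: int = 0) -> np.ndarray:
    """Euler-Maruyama integration of dz = -gamma z dt + sqrt(2 d) dW, starting from z = 0."""
    rng = np.random.default_rng(seed)
    z = np.zeros(n_particles)
    for _ in range(n_steps):
        noise = rng.standard_normal(n_particles)
        z = z - gamma * z * dt + np.sqrt(2.0 * d * dt) * noise
    return z


# Hypothetical parameters; gamma * t_final = 10, so the initial condition is forgotten.
d, gamma = 1.0, 2.0
z = simulate_ou(d, gamma, dt=2e-3, n_steps=2500, n_particles=20000)
print(f"sample variance = {z.var():.3f}, stationary prediction D/gamma = {d / gamma:.3f}")
```

The Gaussian stationary state here relies entirely on the quadratic potential, i.e. on a frozen linear background profile, which is precisely the assumption that breaks down when $\kappa \neq 0$.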

3.2. Model with diffusion

In this section we model the stochastic trajectories of the Lagrangian parcels of fluid, bringing together ideas from stochastic processes subject to resetting (Evans & Majumdar 2011) and memory (Boyer et al. 2017). The main modelling assumption of this work is that buoyancy forces eventually act to collapse a fluid parcel's stochastic trajectory onto the neutrally buoyant position of the parcel. In terms of probabilities, this translates into an increased chance of finding a fluid parcel in the neighbourhood of its neutrally buoyant position after a given (resetting) time.

3.2.1. Stochastic process with resetting

Let us start by considering a tracked parcel of fluid whose vertical position ![]() $z(t)$ is subject to Brownian motion with diffusion coefficient

$z(t)$ is subject to Brownian motion with diffusion coefficient ![]() $D$ and is reset to its initial (neutrally buoyant) position

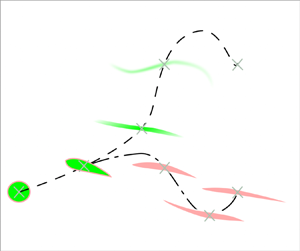

$D$ and is reset to its initial (neutrally buoyant) position ![]() $z_{0} = 0$ with a given probability (see pink trajectory on figure 3). The diffusion process models the ambient turbulence whereas the resetting events mimic the restoring effect of stratification in the absence of mixing (i.e.

$z_{0} = 0$ with a given probability (see pink trajectory on figure 3). The diffusion process models the ambient turbulence whereas the resetting events mimic the restoring effect of stratification in the absence of mixing (i.e. ![]() $\kappa = 0$ and, hence, the parcel's density remains constant). For simplicity, we assume that the resetting events follow a Poisson process. Hence, the probability of resetting the parcel between

$\kappa = 0$ and, hence, the parcel's density remains constant). For simplicity, we assume that the resetting events follow a Poisson process. Hence, the probability of resetting the parcel between ![]() $t$ and

$t$ and ![]() $t + \mathrm {d}t$ is equal to

$t + \mathrm {d}t$ is equal to ![]() $r\mathrm {d}t$ (where

$r\mathrm {d}t$ (where ![]() $r$ is the resetting rate) and the position of the parcel is updated as (Evans & Majumdar Reference Evans and Majumdar2011)

$r$ is the resetting rate) and the position of the parcel is updated as (Evans & Majumdar Reference Evans and Majumdar2011)

\begin{equation} z(t + {\rm d}t) = \begin{cases} z(t) + \xi(t)\,\mathrm{d}t, & \text{with probability } 1 - r\,\mathrm{d}t, \\ 0, & \text{with probability } r\,\mathrm{d}t, \end{cases} \end{equation}

where $\langle \xi(t) \rangle = 0$, $\langle \xi(t)\xi(t') \rangle = 2D\,\delta(t - t')$ and $\delta$ is the Dirac $\delta$ function. The associated ‘forward master’ equation for the probability density $p(z, t)$ of finding a parcel in a neighbourhood of $z$ at time $t$ is

\begin{equation} \partial_{t} p(z, t) = D\,\partial_{z}^{2} p(z, t) - r\,p(z, t) + r\,\delta(z). \end{equation}

On the right-hand side, the first term expresses the diffusive spread of probability, while the last two terms express, respectively, the loss of probability of finding the parcel at position $z$ and the gain of probability of finding it at its neutrally buoyant position.
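The resetting process can be simulated directly from its update rule. Over a step $\mathrm{d}t$, white noise with $\langle \xi(t)\xi(t') \rangle = 2D\,\delta(t - t')$ produces a Gaussian increment of variance $2D\,\mathrm{d}t$, and a reset to $z_{0} = 0$ occurs with probability $r\,\mathrm{d}t$. The stationary solution of the master equation for this process is known (Evans & Majumdar 2011) to be $p^{*}(z) = (\alpha_{0}/2)\exp(-\alpha_{0}|z|)$ with $\alpha_{0} = \sqrt{r/D}$, whose variance is $2D/r$; equivalently, the time elapsed since the last reset is exponentially distributed with mean $1/r$, and $z$ has variance $2D$ times that time. The minimal sketch below, with hypothetical parameter values and illustrative function names, compares a simulation with these predictions.

```python
import numpy as np


def simulate_resetting(d: float, r: float, dt: float, n_steps: int,
                       n_particles: int, seed: int = 0) -> np.ndarray:
    """Brownian motion with Poissonian resetting to z0 = 0 at rate r, starting from z0."""
    rng = np.random.default_rng(seed)
    z = np.zeros(n_particles)
    for _ in range(n_steps):
        reset = rng.random(n_particles) < r * dt                         # probability r dt
        kick = np.sqrt(2.0 * d * dt) * rng.standard_normal(n_particles)  # variance 2 D dt
        z = np.where(reset, 0.0, z + kick)
    return z


def stationary_density(z: np.ndarray, d: float, r: float) -> np.ndarray:
    """Stationary solution p*(z) = (alpha0 / 2) exp(-alpha0 |z|), alpha0 = sqrt(r / d)."""
    alpha0 = np.sqrt(r / d)
    return 0.5 * alpha0 * np.exp(-alpha0 * np.abs(z))


# Hypothetical parameters; r * t_final = 10, so almost every parcel has been reset.
d, r = 1.0, 1.0
z = simulate_resetting(d, r, dt=5e-3, n_steps=2000, n_particles=20000)
print(f"sample variance = {z.var():.3f}, prediction 2D/r = {2.0 * d / r:.3f}")

# Empirical density, normalised by the total number of particles.
counts, edges = np.histogram(z, bins=40, range=(-4.0, 4.0))
density = counts / (z.size * np.diff(edges))
centres = 0.5 * (edges[:-1] + edges[1:])
print("max |histogram - p*|:", np.max(np.abs(density - stationary_density(centres, d, r))))
```

The exponential (rather than Gaussian) tails are characteristic of resetting: the stationary state is a superposition of Gaussians of variance $2D\tau$, weighted by the exponential distribution of the time $\tau$ since the last reset.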

Figure 3. Schematic description of the model for the vertical displacement of Lagrangian particles in stratified turbulent flows, showing the two limiting regimes (i.e. ‘dispersing’ and ‘settling’) discussed in the text. A Lagrangian particle, represented by a grey cross, is initially in a density structure (depicted in green or pink). This structure is kinematically stretched by the flow. This stretching enhances the rate at which the density inside the structure adjusts to that of the surrounding fluid, hence defining a mixing time $t_{M}$. Because of restoring buoyancy forces, the structure also tends to come back to its initial position on a resetting time scale $t_{R}$. The competing effects of mixing and resetting influence the equilibrium position of the structure at $t_{R}$ (vertical dotted line). For the green structure, mixing (schematically represented by shades of green) happens before resetting and, hence, the structure comes to equilibrium at the height it has at mixing time, demonstrating the ‘dispersing’ behaviour of moving away from its initial position. For the pink structure, mixing does not have time to happen before resetting and, hence, the structure comes back to its initial position, demonstrating the ‘settling’ behaviour.

Let us now consider mixing. As the parcel moves along its stochastic path, its density changes at a rate that is controlled by the interplay between kinematic stretching and molecular diffusion (Villermaux 2019). This interplay is modelled through a mixing time $t_{M}$ at which the density of the parcel starts equilibrating with the density of the surrounding fluid, as shown by Petropoulos et al. (2023). Furthermore, the authors showed experimentally that the parcel's position at mixing time, $z(t_{M})$, provides a good approximation of its neutrally buoyant position after being mixed. Hence, instead of resetting the parcel to its initial position, it is natural to reset it to the position it had at $t_{M}$ (see the green trajectory in figure 3). This strategy allows us to bypass the modelling of the time evolution of the ‘background’ density field that the parcel sees along its stochastic path, at least if the density field does not evolve drastically between the mixing and resetting times. The mixing time $t_{M}$ is sampled from the probability density function $\mathcal{T}_{M}(t_{M})$, which we assume to be of exponential form with mean mixing time $\langle t_{M} \rangle$:

\begin{equation} \mathcal{T}_{M}(t_{M}) = \frac{1}{\langle t_{M} \rangle}\mathrm{e}^{-t_{M}/\langle t_{M} \rangle}. \end{equation}

Two reasons justify this choice. First, it makes the problem analytically tractable. Second, it has been shown to accurately describe the distribution of mixing times for passive scalar mixing in freely decaying turbulence (Duplat, Innocenti & Villermaux 2010). For completeness, we briefly recall their main arguments here. We assume that a fluid parcel carrying a given scalar content experiences successive random stretching events at decaying rates but over increasing periods of time, modelling the effect of decaying turbulence. Therefore, the time $t_{M}$ for this parcel to mix (i.e. for its scalar content to diminish significantly) is governed by an inhomogeneous Poisson process whose last step is the most important, in the sense that the distribution of $t_{M}$ can be well approximated by the distribution of waiting times during the last step of the process. Since waiting times within a single step of a Poisson process are exponentially distributed, it is reasonable to assume that $t_{M}$ follows an exponential distribution.

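Therefore, (3.2) needs to be modified as follows (Boyer et al. 2017):

\begin{equation} \partial_{t}p(z,t) = D\partial_{z}^{2}p(z,t) - rp(z,t) + r\int_{0}^{t}\mathcal{K}(t_{M}, t)p(z,t_{M})\,\mathrm{d}t_{M}. \end{equation}

Here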

\begin{equation} \mathcal{K}(t_{M}, t) := \frac{\mathcal{T}_{M}(t_{M})}{\int_{0}^{t}\mathcal{T}_{M}(\tau)\,\mathrm{d}\tau} \end{equation}

is the truncated version of $\mathcal{T}_{M}$ on the interval $[0, t]$, renormalised to unit integral, as the parcel cannot be reset to a position it has not yet explored. Note that the resetting time $1/r$ and the mean mixing time $\langle t_{M} \rangle$ are not necessarily equal here; inertia and ambient turbulence potentially allow parcels to overshoot their equilibrium.
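For the exponential $\mathcal{T}_{M}$ assumed in this section, the normalising integral in $\mathcal{K}$ is $1-\mathrm{e}^{-t/\langle t_{M} \rangle}$, so $\mathcal{K}$ has a closed form and can be sampled by inverting its cumulative distribution. The Python sketch below illustrates this; the function names `truncated_kernel` and `sample_truncated` are hypothetical and do not come from the authors' code.

```python
import math
import random


def truncated_kernel(t_m, t, mean_tm):
    """K(t_M, t): exponential pdf T_M restricted to [0, t] and renormalised."""
    if not 0.0 <= t_m <= t:
        return 0.0  # the parcel cannot be reset to a time it has not reached
    pdf = math.exp(-t_m / mean_tm) / mean_tm
    norm = 1.0 - math.exp(-t / mean_tm)  # integral of T_M over [0, t]
    return pdf / norm


def sample_truncated(t, mean_tm, rng):
    """Draw t_M from K(., t) by inverting its cumulative distribution.

    The CDF is (1 - exp(-s/m)) / (1 - exp(-t/m)); setting it equal to u
    and solving for s gives the expression below.
    """
    u = rng.random()
    return -mean_tm * math.log(1.0 - u * (1.0 - math.exp(-t / mean_tm)))
```

Sampling by inversion keeps every draw inside $[0, t]$, which is exactly the constraint that a parcel can only be reset to a position it has already visited.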

The above model (3.4) is, however, incomplete, since it now neglects the restoring action of buoyancy forces for long mixing times. More precisely, if the mixing time is larger than the natural time for the parcel to come back to its initial position in the absence of mixing, then the parcel will in fact come back to its initial position, since its density did not have time to change before the reset. This effect is modelled as follows: if the mixing time associated with a parcel is larger than the waiting time between two resets, it is reset to its initial position. As discussed later in § 4, this is an approximation. Because both the waiting times between resets (recall that the resetting process is assumed to be Poissonian) and the mixing times are exponentially distributed, a resetting event returns the parcel to its initial position with probability $l$ such that

\begin{equation} l = \int_{0}^{+\infty} r\mathrm{e}^{-r\tau}\,\mathrm{e}^{-\tau/\langle t_{M} \rangle}\,\mathrm{d}\tau = \frac{r\langle t_{M} \rangle}{1 + r\langle t_{M} \rangle}. \end{equation}

The overall forward master equation is the weighted linear combination of (3.2) (pink trajectory in figure 3) and (3.4) (green trajectory) with weights $l$ and $1-l$, respectively:

\begin{align} \partial_{t}p(z,t) &= \underbrace{D\partial_{z}^{2}p(z,t)}_{\text{Dispersion}} \underbrace{- rlp(z,t) + rl\delta(z)}_{\text{Settling back to initial position}}\nonumber\\ &\quad \underbrace{- r(1-l)p(z,t) + r(1-l)\int_{0}^{t}\mathcal{K}(t_{M}, t)p(z,t_{M})\,\mathrm{d}t_{M}}_{\text{Memory}}. \end{align}

Interestingly, this equation can be solved analytically and has a stationary solution of the form

\begin{equation} p_{\infty}(z) = \sum_{m=0}^{+\infty}A_{m}\mathrm{e}^{-\sqrt{({r + m/{\langle t_{M} \rangle}})/{D}}| z |}; \end{equation}

see the Appendix for the derivation and the coefficients $A_{m}$. We closely follow Boyer et al. (2017), who considered the case $l=0$. This stationary distribution behaves as $\mathrm{e}^{-\sqrt{r/D}\,\vert z \vert}$ for large $\vert z \vert$, where the $m=0$ term dominates. Hence, this model exhibits a screening length $\sqrt{D/r}$ that parcels, and hence Lagrangian tracers, rarely cross. The stationary mean square vertical displacement is

\begin{equation} \langle z^{2}(t \rightarrow +\infty) \rangle = 4\sum_{m=0}^{+\infty}A_{m}\left[\frac{D}{r + {m}/{\langle t_{M} \rangle}}\right]^{{3}/{2}}. \end{equation}
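The Python sketch below turns (3.7) into a particle-level Monte Carlo simulation, which may help build intuition for the two regimes. Each particle diffuses with diffusivity $D$ and is reset at rate $r$; at a reset it returns to $z=0$ with probability $l$ and otherwise jumps back to the position it occupied at a time $t_{M}$ drawn from $\mathcal{K}(t_{M},t)$, which is our reading of the settling and memory terms. This is not the authors' code: the closed form $l = r\langle t_{M} \rangle/(1+r\langle t_{M} \rangle)$ (two independent exponential clocks), the function name `simulate_stationary_z2` and the numerical settings (time step, particle number, final time) are all assumptions. Full trajectories are stored because the memory term needs each particle's past positions.

```python
import numpy as np


def simulate_stationary_z2(r, D, mean_tm, n_particles=2000, t_end=20.0, dt=0.01, seed=0):
    """Monte Carlo estimate of <z^2> at t_end for diffusion with settling and memory resets.

    Hypothetical particle-level sketch of the master equation with weights l and 1 - l.
    """
    rng = np.random.default_rng(seed)
    l = r * mean_tm / (1.0 + r * mean_tm)        # assumed settling probability
    n_steps = int(round(t_end / dt))
    z = np.zeros((n_steps + 1, n_particles))     # z[k, i]: position of particle i at time k*dt
    for k in range(1, n_steps + 1):
        t = k * dt
        # free diffusion over one step: <dz^2> = 2 D dt
        z[k] = z[k - 1] + np.sqrt(2.0 * D * dt) * rng.standard_normal(n_particles)
        # Poissonian resetting at rate r, approximated by one Bernoulli trial per step
        reset = rng.random(n_particles) < r * dt
        settle = reset & (rng.random(n_particles) < l)
        memory = np.flatnonzero(reset & ~settle)
        z[k, settle] = 0.0                        # settling: back to the initial position
        if memory.size:
            # t_M drawn from the exponential kernel truncated to [0, t] (inverse CDF)
            u = rng.random(memory.size)
            frac = -np.expm1(-t / mean_tm)        # = 1 - exp(-t/<t_M>)
            t_m = -mean_tm * np.log1p(-u * frac)
            j = np.minimum((t_m / dt).astype(int), k)
            z[k, memory] = z[j, memory]           # memory: jump back to the position held at t_M
    return float(np.mean(z[-1] ** 2))
```

In the limit $r\langle t_{M} \rangle \gg 1$ (so $l \to 1$) only resets to the origin remain, and the stationary law reduces to the Laplace distribution $\tfrac{1}{2}\sqrt{r/D}\,\mathrm{e}^{-\sqrt{r/D}\vert z \vert}$ with $\langle z^{2} \rangle = 2D/r$, i.e. the $m=0$ term of the sum above with $A_{0} = \tfrac{1}{2}\sqrt{r/D}$. For very small $\langle t_{M} \rangle$, memory resets also land at $z(0)=0$, so the same value is recovered. Scanning intermediate values of $r\langle t_{M} \rangle$ at fixed $\sqrt{D/r}$ is one way to explore the crossover between the two regimes numerically; memory cost grows as the number of particles times the number of time steps.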

3.2.2. Physical interpretation

The model presented above (3.7) involves various parameters: the resetting rate $r$, the mean mixing time $\langle t_{M} \rangle$ and the effective diffusivity $D$ of the Lagrangian tracers. Here, we establish connections between these abstract quantities and measurable properties of a real flow. To do so, it is instructive to first think about a simple case. Let us consider a Lagrangian tracer that is embedded in a density structure (a lamella) of initial size $s_{0}$, whose dynamics we assume to be viscously dominated. The lamella is stretched at a given rate $\gamma$, so that its length $\ell$ along the direction of stretching increases with time. This stretching rate determines its mixing time $t_{M} := \mathcal{F}(Pe)/\gamma$, where $Pe := \gamma s_{0}^{2}/\kappa$ is the Péclet number. The (increasing) function $\mathcal{F}$ is a ‘weak’ diffusive correction that depends on the stretching protocol at hand (Villermaux 2019). The (vertical) position $z_{b}$ of the lamella has been shown to satisfy the following (dimensionless) equation (see Petropoulos et al. 2023 for more details):

Here $\rho_{b}$ denotes the (maximal) density of the lamella, $\ell$ its length (which increases with time $t$, the functional form of $\ell(t)$ depending on the stirring velocity field) and $\alpha$ is an $O(1)$–$O(10)$ drag coefficient. Time $t$ has been scaled by the shear time $1/\gamma$, $z_{b}$ by $s_{0}$ and $\rho_{b}$ by the initial (maximal) density of the lamella and, hence, $Re := \gamma s_{0}^{2}/\nu$, $Fr := \gamma/N$ and $\beta := g/[s_{0}\gamma^{2}]$.

In the viscous regime $Re \lesssim 1$, the vertical trajectory of the lamella is well approximated by assuming that the density $\rho_{b}$ of the lamella is constant ($\rho_{b} = 1$ in dimensionless units) before the mixing time and then equal to the density of the surrounding fluid at the position $z_{b}(t_{M})$. If the mixing time is larger than the natural time for the lamella to return to its initial position (without mixing), it ‘settles’ back to its initial position, justifying the resetting strategy presented in the previous section. In the absence of mixing (i.e. $\rho_{b} = 1$ at all times), two important quantities can be inferred. First, considering the balance between friction and buoyancy and neglecting the inertial term (a balance that inevitably holds when $Re \lesssim 1$ and $\ell$ becomes large at late times), the natural (damping) time it takes for the lamella to settle (in the absence of mixing) is of order $\alpha Fr^{2}/(\gamma Re)$ in dimensional form (Petropoulos et al. 2023).

Second, considering the oscillatory behaviour due to the (early-time) balance between inertia and buoyancy forces, we find that the maximal excursion of the lamella (again, in the absence of mixing) is of order $\sqrt{2\mathcal{E}_{k}}\,Fr/\gamma$, where $\mathcal{E}_{k}$ is the initial kinetic energy per unit mass of the lamella. Since $1/r$ corresponds to the natural time it takes for a Lagrangian tracer to come back to its initial position and $\sqrt{D/r}$ defines the maximum excursion of the tracer, we can infer, in the viscous regime considered here, that

\begin{equation} r \sim \frac{\gamma Re}{\alpha Fr^{2}}, \qquad D \sim \frac{2\mathcal{E}_{k}Re}{\alpha\gamma}. \end{equation}

We now extend the above reasoning to the turbulent regime. In Petropoulos et al. (2023), the size $s_{0}$ of the lamellae was externally imposed in the experiment. In a turbulent flow, it is reasonable to expect that this size, or at least its statistical mean, emerges as a property of the turbulence. Here, we assume that the lamellae form on scales such that $Re \sim 1$, i.e. scales at which stretching balances viscous dissipation and, more specifically, scales at which vorticity starts to be viscously dissipated, so that the kinematics of the lamellae are mainly affected by local strain. Therefore, $s_{0} \sim \sqrt{\nu/\gamma}$ and $Pe = \gamma s_{0}^{2}/\kappa \sim \nu/\kappa = Pr$ (Duplat et al. 2010). Finally, an estimate of the shear rate $\gamma$ is still required. In a turbulent regime, this quantity is statistically distributed. However, recalling that it is defined as the norm of the strain-rate tensor (the symmetric part of the velocity gradient tensor), the mean shear rate scales as $\sqrt{\epsilon/\nu}$ (Batchelor 1959). Therefore,

and the parameters of the model presented above can be estimated from measurable quantities of the flow and molecular properties of the fluid. The length $L_{T}$ is the Taylor length scale, defined as $L_{T} \simeq \sqrt{10\nu\mathcal{E}_{k}/\epsilon}$. Equivalently, these estimates can be rephrased as functions of the buoyancy time scale $1/N$ and the buoyancy Reynolds number $Re_{b}$ as follows:

(We assume $\alpha$ to be an $O(1)$ quantity here and in what follows.)
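The chain of estimates in this subsection can be collected into a few lines of Python. The sketch below is our reading of it, not the authors' formulae: every $O(1)$ prefactor ($\alpha$ included) is set to one; the viscous estimates $1/r \sim \alpha Fr^{2}/(\gamma Re)$ and $\sqrt{D/r} \sim \sqrt{2\mathcal{E}_{k}}\,Fr/\gamma$ are used with $Re \sim 1$; $\mathcal{E}_{k}$ is recovered from $L_{T} \simeq \sqrt{10\nu\mathcal{E}_{k}/\epsilon}$; $Re_{b} = \epsilon/(\nu N^{2})$ is the usual definition, assumed here; and $\mathcal{F}(Pe) = \ln Pe$ is an assumed logarithmic correction that is only meaningful for $Pe > 1$. The function name `model_parameters` is hypothetical.

```python
import math


def model_parameters(eps, nu, kappa, N, L_T, alpha=1.0, F=math.log):
    """Order-of-magnitude sketch of the model parameters from flow and fluid properties.

    All O(1) prefactors are set to 1; F(Pe) = ln(Pe) is an assumed weak correction.
    """
    gamma = math.sqrt(eps / nu)                    # mean shear rate ~ sqrt(eps/nu)
    s0 = math.sqrt(nu / gamma)                     # lamella size at which Re ~ 1
    Re = gamma * s0**2 / nu                        # = 1 by construction
    Pe = gamma * s0**2 / kappa                     # = nu/kappa = Pr
    Fr = gamma / N
    E_k = eps * L_T**2 / (10.0 * nu)               # inverted from L_T ~ sqrt(10 nu E_k / eps)
    r = gamma * Re / (alpha * Fr**2)               # inverse of the damping time alpha Fr^2/(gamma Re)
    screening = math.sqrt(2.0 * E_k) * Fr / gamma  # maximal excursion, identified with sqrt(D/r)
    D = r * screening**2
    t_M = F(Pe) / gamma                            # mean mixing time F(Pe)/gamma
    Re_b = eps / (nu * N**2)                       # buoyancy Reynolds number (assumed definition)
    return {"gamma": gamma, "r": r, "D": D, "t_M": t_M,
            "screening": screening, "Re_b": Re_b, "r_tM": r * t_M}
```

With these choices the screening length reduces to $\sqrt{2\mathcal{E}_{k}}/N$, hence the $N^{-2}$ scaling of $\langle z^{2} \rangle$, and $r\langle t_{M} \rangle$ reduces to $\mathcal{F}(Pr)/(\alpha Re_{b})$, the combination that separates the ‘settling’ and ‘dispersive’ regimes in § 3.2.3.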

These estimates are presented in figure 4 for the three simulations. Note that the screening length $\sqrt{D/r}$ scales as $1/N$ and, hence, we expect the mean squared vertical displacement of the particles to vary as $N^{-2}$ (a scaling confirmed in figure 5b), as suggested empirically by Kimura & Herring (1996) and Venayagamoorthy & Stretch (2006) and derived theoretically by Lindborg & Brethouwer (2008) in the case of freely decaying stratified turbulence.

Figure 4. Parameters inferred from the scaling laws (3.11a,b): (a) shear time scale $\gamma^{-1}$, (b) resetting rate $r$, (c) eddy diffusivity $D$, (d) screening length $\sqrt{D/r}$.

Figure 5. (a) Mean square displacement $\langle z^{2} \rangle$ of the stationary probability distribution $p_{\infty}(z)$, the solution of (3.7), as a function of $r\langle t_{M} \rangle$, highlighting the differences between the ‘dispersive’ and ‘settling’ regimes discussed in the text. (d) The probability distribution $p_{\infty}$ in the regime $r\langle t_{M} \rangle \ll 1$ for various values of the mean mixing time $\langle t_{M} \rangle$ (the inset shows the same data in log-linear coordinates). As $\langle t_{M} \rangle$ increases, extreme events are (slightly) favoured. (e) The analogous plots to panel (d) in the regime $r\langle t_{M} \rangle \gg 1$ for various values of $\langle t_{M} \rangle$. As $\langle t_{M} \rangle$ increases, extreme displacements are suppressed and the particles cluster around $z=0$, i.e. no net displacement is favoured. To keep the screening length $\sqrt{D/r}$ fixed, $r=0.1$ and $D=10^{-4}$. (b–c) Mean square displacement $\langle z^{2} \rangle$ as a function of $N$ (b) or $Pr$ (c), all other parameters being fixed (here $\epsilon = 1$, $L_{T} = 1$ and $\nu = 1$).

3.2.3. Limit cases

In the model presented above (3.7), the product $r\langle t_{M} \rangle$ plays a key role: it controls the ratio $l/(1 - l)$ and, hence, the importance of the settling term relative to the memory term.
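The role of $r\langle t_{M} \rangle$ can be checked directly. If the waiting time between resets is exponential with rate $r$ and the mixing time is independently exponential with mean $\langle t_{M} \rangle$, then $l = P(t_{M} > \text{waiting time}) = r\langle t_{M} \rangle/(1+r\langle t_{M} \rangle)$, so that $l/(1-l) = r\langle t_{M} \rangle$ exactly. The Python sketch below compares this closed form with a Monte Carlo estimate; the function names are hypothetical.

```python
import math
import random


def settling_probability(r, mean_tm):
    """Closed form of l = P(t_M > waiting time between resets)."""
    return r * mean_tm / (1.0 + r * mean_tm)


def settling_probability_mc(r, mean_tm, n=200_000, seed=0):
    """Monte Carlo estimate of the same probability from two exponential clocks."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        wait = rng.expovariate(r)               # waiting time between resets, rate r
        t_m = rng.expovariate(1.0 / mean_tm)    # mixing time, mean <t_M>
        hits += t_m > wait                      # mixing too slow: the parcel settles back
    return hits / n
```

For example, $r\langle t_{M} \rangle = 0.01$ gives $l \approx 0.0099$, so memory resets dominate (‘dispersive’), whereas $r\langle t_{M} \rangle = 100$ gives $l \approx 0.990$ (‘settling’).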

Let us first consider the case $r\langle t_{M} \rangle \gg 1$, which we refer to as the ‘settling’ regime. If the mean time between resets $1/r$ is small compared with the mean mixing time $\langle t_{M} \rangle$, mixing statistically does not have time to happen between resets, and the particles (for which the density of the structures that carry them does not have time to change) are preferentially reset to their initial position and so ‘settle’ (back). As $\langle t_{M} \rangle$ increases, the condition $r\langle t_{M} \rangle \gg 1$ becomes stronger and the statistics of mixing times shift towards large values; we then expect more particles to settle back to their initial position before mixing and, hence, the probability distribution $p_{\infty}(z)$ to be more peaked around $z=0$. In terms of the physical parameters of the problem, the condition $r\langle t_{M} \rangle \gg 1$ can be rephrased as $\mathcal{F}(Pr)/Re_{b} \gg 1$. In that regime, the above arguments suggest that as $Pr$ increases (and, hence, $\langle t_{M} \rangle$ increases), more particles cluster around $z=0$.

Let us now consider the case $r\langle t_{M} \rangle \ll 1$, which we refer to as the ‘dispersive’ regime. In that regime, the mean time between resets $1/r$ is large compared with the mean mixing time $\langle t_{M} \rangle$, and the stochastic dynamics is mainly controlled by the memory term. As the particles evolve along their stochastic paths, they are preferentially reset (when that happens) to their position at mixing time rather than to their initial position, since mixing has time to occur between two resetting events. As a result, as $\langle t_{M} \rangle$ increases (while remaining small enough that $r\langle t_{M} \rangle \ll 1$), we expect particles to travel longer distances in a statistical sense, and so they are likely to ‘disperse’. Indeed, as particles are dispersed away from their initial position by turbulent fluctuations, those with large mixing times (an event that is more likely for large $\langle t_{M} \rangle$) have time to travel longer distances before mixing. Therefore, when a reset happens, they are reset (to their actual position at mixing time) further away from their initial position than particles with small mixing times. Reformulated in terms of the physical parameters of our problem, this means that in this ‘dispersive’ regime $\mathcal{F}(Pr)/Re_{b} \ll 1$, as $Pr$ increases (and, hence, $\langle t_{M} \rangle$ increases), we expect, everything else being kept equal, that the particles will statistically travel longer vertical distances, turbulent fluctuations winning over restoring buoyancy forces. In other words, in that regime, since the particles with large mixing times have more time to be dispersed by turbulent fluctuations away from their initial position before mixing, they are reset further away from their initial position when they start ‘feeling’ the stratification and are reset to the position they had at mixing time.

We can summarise these observations as follows. At a fixed screening length $\sqrt{D/r}$, as $r\langle t_{M} \rangle$ increases, we move from a ‘dispersive’ regime where the variance of the stationary process increases to a ‘settling’ (back) regime where the variance decreases, as shown in figure 5(a). In terms of the physical parameters of the problem, the Prandtl number $Pr$ plays a role (through the weak diffusive correction $\mathcal{F}(Pr)$) in defining the boundary between the ‘dispersive’ and ‘settling’ regimes; for fixed $N$ and $\epsilon$, there exists a sufficiently large $Pr$ beyond which the regime is ‘settling’.

3.2.4. Comparison with numerical data

Let us now compare the stationary probability distributions of vertical displacements predicted using (3.8) with the distributions determined empirically from our numerical data. Figure 6 summarises the numerical data and the model predictions for the three simulations considered here. The model parameters used here are $D \simeq 0.01$, $r \simeq 0.8$ and $\gamma^{-1} \simeq 0.3$, values that are in reasonable agreement with the theoretical predictions presented in figure 4 at the time when the turbulence is most energetic. The weak diffusive correction for the mean mixing time $\langle t_{M} \rangle$ is assumed to be logarithmic, corresponding to exponential stretching (Duplat et al. 2010; Villermaux 2019). This seems to be a reasonable choice in stratified turbulence, where fluid parcels are elongated at an exponential rate in the two horizontal directions. The model's prediction seems to fit the data relatively well, the main discrepancies arising for small displacements, $z \simeq 0$.
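The inset of figure 6(a) distinguishes exponential from Gaussian tails through a slope in log coordinates. One way to make this concrete, sketched below in Python, is to compute the slope of $\ln[p(0)/p(z)]$ against $\ln z$: it equals 1 for an exponential (Laplace) tail and 2 for a Gaussian one. Because the $m=0$ term of (3.8) dominates at large $\vert z \vert$, a Laplace law with decay rate $\sqrt{r/D}$ serves as a proxy for the model's tail, with the values $D \simeq 0.01$ and $r \simeq 0.8$ quoted above; the Gaussian is given the same variance. Normalising by $p(0)$ is our choice for illustration, not necessarily how the inset was built, and the function names are hypothetical.

```python
import numpy as np

D, r = 0.01, 0.8                  # model parameters quoted for figure 6
a = np.sqrt(r / D)                # tail decay rate; 1/a is the screening length sqrt(D/r)
sigma = np.sqrt(2.0) / a          # Gaussian with the same variance as the Laplace law (2/a**2)


def laplace(z):
    """Large-|z| proxy for p_inf: a single normalised exponential (m = 0 term only)."""
    return 0.5 * a * np.exp(-a * np.abs(z))


def gaussian(z):
    """Gaussian reference, e.g. a Brownian particle in a quadratic potential."""
    return np.exp(-z**2 / (2.0 * sigma**2)) / (sigma * np.sqrt(2.0 * np.pi))


def tail_exponent(p, z1, z2):
    """Log-log slope of ln(p(0)/p(z)) between z1 and z2: 1 for exponential, 2 for Gaussian tails."""
    y1 = np.log(p(0.0) / p(z1))
    y2 = np.log(p(0.0) / p(z2))
    return np.log(y2 / y1) / np.log(z2 / z1)
```

With these values the screening length $\sqrt{D/r}$ is about 0.11, so the model's tail decays by a factor $\mathrm{e}$ over roughly 0.11 in $z$.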

Figure 6. Stationary probability distribution of vertical displacement $p_{\infty}(z)$ for the simulation data (dots) and the models (3.7) (coloured lines) and (3.4) (black lines). The vertical axis is shown on both linear (panel a) and logarithmic (panel b) scales. A zoom on the region $z \simeq 0$ is shown in panel (c). Dotted lines correspond to $Pr=7$ and dashed lines to $Pr=50$. The inset in panel (a) shows the logarithm of the right tail of the stationary distribution, plotted in log coordinates. Our model predicts a purely exponential tail (slope $1$ in these coordinates), a prediction that seems consistent with the data. For reference, we also plot a line with slope $2$, corresponding to a Gaussian tail such as that arising for a Brownian process in a quadratic potential.
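The slopes used in the inset of figure 6 can be checked with a small sketch. It assumes a symmetric exponential (Laplace) density $(\alpha/2)\mathrm{e}^{-\alpha|z|}$ as a stand-in for an exponential tail and a centred Gaussian for the quadratic-potential case. Both are illustrative choices and are not fitted to the data. The local slope of $\ln(-\ln p)$ against $\ln z$ then tends to $1$ and $2$, respectively. Log-densities are used so that nothing underflows far in the tail.

```python
import math


def log_laplace(z, alpha=1.0):
    """ln of the symmetric exponential density (alpha/2) * exp(-alpha*|z|)."""
    return math.log(alpha / 2.0) - alpha * abs(z)


def log_gauss(z, sigma=1.0):
    """ln of the centred Gaussian density with standard deviation sigma."""
    return -0.5 * (z / sigma) ** 2 - 0.5 * math.log(2.0 * math.pi * sigma ** 2)


def tail_slope(log_p, z, h=1e-4):
    """Local slope d ln(-ln p) / d ln z, by a central difference in ln z.

    Only meaningful far enough in the tail that p(z) < 1, so that -ln p > 0.
    """
    def y(u):
        return math.log(-log_p(math.exp(u)))

    u = math.log(z)
    return (y(u + h) - y(u - h)) / (2.0 * h)


if __name__ == "__main__":
    for z in (10.0, 100.0, 1000.0):
        print(f"z={z:g}  exponential: {tail_slope(log_laplace, z):.4f}  "
              f"Gaussian: {tail_slope(log_gauss, z):.4f}")
```

The slopes only approach their limits asymptotically, because of the additive normalisation constants in $\ln p$. A finite plotting range therefore shows slightly smaller slopes.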

The following trends are observed. As $Pr$ increases from $Pr=1$ to $Pr=7$, smaller displacements are favoured, whereas from $Pr=7$ to $Pr=50$, larger displacements become more likely. Only a tentative explanation can be offered here, since, unfortunately, not only $Pr$ but also $\epsilon$ changes between simulations, which makes any comparative analysis challenging. As the Prandtl number $Pr$ increases, both $\mathcal{F}(Pr)$ and the turbulent kinetic energy dissipation rate $\epsilon$ increase. Increasing $\mathcal{F}(Pr)$ tends to increase the mean mixing time $\langle t_{M} \rangle$, hence allowing particles to travel longer vertical distances in the ‘dispersive’ regime described in § 3.2.3 and considered here, everything else being fixed. Conversely, increasing $\epsilon$ tends to decrease $\langle t_{M} \rangle$, thus preventing particles from travelling long distances. Further complicating the interpretation, an increase in $\epsilon$ also tends to decrease the resetting rate $r$ and, hence, potentially allows particles to travel further (at fixed $\langle t_{M} \rangle$). In summary, in the regime considered here, turbulent energetics ($\epsilon$) and the molecular properties of the fluid ($Pr$) have competing effects on dispersion. Note also that the eddy diffusivity $D$ decreases slightly. This decrease compensates for the decrease of $r$, so that the screening length $\sqrt{D/r}$ remains constant, as shown in figure 4(d). Therefore, diffusion by turbulent fluctuations is more limited in the case $Pr=7$ than in the case $Pr=1$. In other words, we are witnessing here the opposite effects of turbulence energetics on mixing times and on resetting rates. Increasing $\epsilon$ (and, hence, $Re_{b}$) decreases the mean mixing time, so that particles travel shorter vertical distances in the ‘dispersive’ regime considered here. At the same time, it increases the mean time between resets $1/r$, allowing particles to travel longer distances. The picture is further complicated by the fact that $r\langle t_{M} \rangle$ is ${O}(1)$, rather than lying in one of the limiting cases studied earlier (§ 3.2.3). Hence, the regime studied here in fact lies in the transition between the ‘settling’ and ‘dispersive’ regimes described in § 3.2.3. All in all, between $Pr=1$ and $Pr=7$, the combined increases in $Pr$ and $\epsilon$ lead particles to travel shorter distances in the $Pr=7$ case. When $Pr$ increases from $Pr=7$ to $Pr=50$, $\epsilon$ remains roughly constant, and the mixing time increases essentially because of the change in $Pr$, the other parameters remaining approximately constant. As a result, in the marginal case studied here, particles travel longer distances in the $Pr=50$ case than in the $Pr=7$ case.

4. Comments on the model

In this section we discuss the different assumptions used to build the model presented above.

4.1. Distribution of resetting times

The main assumption concerns the distribution of resetting events and mixing times. Resetting (or settling) events are assumed to follow a Poisson process with parameter $r$. More generally, we could have defined the resetting process through a waiting-time distribution between resetting events (Eule & Metzger 2016). However, it then becomes difficult to write down a forward master equation, as one must keep track of the time elapsed since the last reset. The resetting events are introduced to mimic the restoring forces that density differences exert on particles. A better model might therefore take as waiting time the time between two successive passages through the potential-energy minimum of a particle undergoing Brownian motion in a quadratic potential. For the sake of simplicity and analytical tractability, we restricted ourselves to the well-studied Poissonian resetting case. This simplification may explain the discrepancy between the model and the data around $z=0$. In particular, the data show a Gaussian-like distribution near the origin rather than the exponential-like distribution predicted by the model.
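The contrast between an exponential-like profile and a Gaussian-like one near $z=0$ can be illustrated with the textbook case of Brownian motion (diffusivity $D$) under Poissonian resetting to the origin at rate $r$. This case is simpler than model (3.7), since it has no mixing times. In the stationary state the time elapsed since the last reset is exponentially distributed with rate $r$, so positions can be sampled exactly. The result is the Laplace density $(\alpha/2)\mathrm{e}^{-\alpha|z|}$ with $\alpha = \sqrt{r/D}$, whose decay length is the screening length $\sqrt{D/r}$. For comparison, the sketch also samples the stationary Ornstein-Uhlenbeck process, i.e. Brownian motion in a quadratic potential of stiffness $k$ (an assumed parameter). The function names are hypothetical.

```python
import math
import random
import statistics


def sample_resetting(D, r, size, rng):
    """Stationary positions of Brownian motion (diffusivity D) reset to z = 0
    at the event times of a Poisson process of rate r.

    The age tau since the last reset is Exp(r) in the stationary state, and the
    displacement accumulated since then is Gaussian with variance 2*D*tau.
    """
    samples = []
    for _ in range(size):
        tau = rng.expovariate(r)
        samples.append(rng.gauss(0.0, math.sqrt(2.0 * D * tau)))
    return samples


def sample_quadratic_well(D, k, size, rng):
    """Stationary positions of dz = -k*z dt + sqrt(2*D) dW: Gaussian with variance D/k."""
    sd = math.sqrt(D / k)
    return [rng.gauss(0.0, sd) for _ in range(size)]


def kurtosis(xs):
    """Fourth moment over squared second moment, taken about z = 0 (both laws are centred)."""
    m2 = statistics.fmean(x * x for x in xs)
    m4 = statistics.fmean(x ** 4 for x in xs)
    return m4 / m2 ** 2


if __name__ == "__main__":
    rng = random.Random(1)
    D, r, k = 1.0, 1.0, 0.5  # k = r/(2D) gives both laws the same variance 2D/r
    z_reset = sample_resetting(D, r, 100_000, rng)
    z_well = sample_quadratic_well(D, k, 100_000, rng)
    print("mean |z| (resetting):", statistics.fmean(abs(z) for z in z_reset),
          "vs sqrt(D/r) =", math.sqrt(D / r))
    print("kurtosis (resetting):", kurtosis(z_reset))
    print("kurtosis (quadratic well):", kurtosis(z_well))
```

With matched variances, the resetting sample has a kurtosis close to $6$ (Laplace) and the quadratic-well sample a kurtosis close to $3$ (Gaussian). This reflects the sharper central peak and heavier tails produced by Poissonian resetting.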

4.2. Resetting strategy

On a similar note, we have assumed that if the mixing time associated with a particle is larger than the waiting time between two resets, then the particle should be reset to its initial position, i.e. it should ‘settle’ back. This is an oversimplification: it would be more accurate to reset the particle to the position it had at the last reset, i.e. its last equilibrium position. Doing so involves keeping track of the resetting times. In the case of Poissonian resetting this might be possible, since the number of resets up to time $t$ follows a Poisson distribution and the time of the $n$th reset follows a gamma distribution, but it adds significant complexity to the model.
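The two Poisson-process facts invoked here can be checked with a short Monte Carlo sketch. First, the number of resets up to time $t$ is Poisson distributed with mean $rt$. Second, the $n$th reset time is gamma distributed with shape $n$ and rate $r$. The sketch assumes independent, exponentially distributed waiting times of mean $1/r$ between resets. The function name and parameter values are illustrative.

```python
import math
import random
import statistics


def simulate_resets(r, t, n, trials, seed=0):
    """Monte Carlo of a Poisson resetting process of rate r.

    Returns the sample mean and variance of R(t) (number of resets in [0, t]),
    the fraction of runs with R(t) = 0, and the sample mean and variance of the
    n-th reset time.
    """
    rng = random.Random(seed)
    counts, nth_times = [], []
    for _ in range(trials):
        clock, k, count, nth = 0.0, 0, 0, 0.0
        while True:
            clock += rng.expovariate(r)  # i.i.d. exponential waiting time, mean 1/r
            k += 1
            if clock <= t:
                count += 1  # this reset occurred before time t
            if k == n:
                nth = clock  # time of the n-th reset
            if clock > t and k >= n:
                break  # later resets cannot fall in [0, t]
        counts.append(count)
        nth_times.append(nth)
    return {
        "count_mean": statistics.fmean(counts),
        "count_var": statistics.pvariance(counts),
        "p_no_reset": counts.count(0) / trials,
        "nth_mean": statistics.fmean(nth_times),
        "nth_var": statistics.pvariance(nth_times),
    }


if __name__ == "__main__":
    r, t, n = 2.0, 1.5, 3
    print(simulate_resets(r, t, n, 100_000))
    print("Poisson: mean = var =", r * t, " P(R=0) =", math.exp(-r * t))
    print("Gamma:   mean =", n / r, " var =", n / r ** 2)
```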

We could, for instance, replace the term $rl\delta(z)$ in (3.7) with

\begin{equation} r\left[l P(R(t) = 0)\delta(z) + \sum_{n=1}^{+\infty}l_{n}P(R(t) = n)\int_{0}^{t}\mathcal{K}_{r}(t_{r}, t; n, r)p(z, t_{r})\,\mathrm{d}t_{r}\right], \end{equation}

where $R(t)$ is the number of resets up to time $t$, which follows a Poisson distribution of parameter $rt$. Here $\mathcal{K}_{r}(t_{r}, t; n, r)$ is the truncated distribution of the $n$th reset time between times $0$ and $t$; recall that the $n$th reset time follows a gamma distribution with shape parameter $n$ and rate $r$. Finally, $l_{n}$ is the probability that the $n$th resetting time is smaller than the mixing time. The other terms in (3.7) should also be changed accordingly, i.e. weighted by $l_{n}P(R(t) = n)$ or $(1-l_{n})P(R(t) = n)$. Unlike the $rl\delta(z)$ resetting term, (4.1) does not force the particles to settle back to their initial position $z=0$. It might therefore correct the discrepancies between the predictions of (3.7) and the simulation data for small displacements $z \simeq 0$. Indeed, as a rule of thumb, the first term in (4.1) is of the form $P(R(t) = 0)\delta(z) = \mathrm{e}^{-rt}\delta(z)$, so that the particles lose memory of their initial position on a time scale $1/r$. It is therefore instructive to compare with the data the stationary solutions of models (3.7) and (3.4), i.e. the models with and without memory of the initial position, respectively. As can be seen in figure 6, the data are indeed (slightly) better fitted by the stationary solution of (3.4). This gives some hope that the full correction (4.1) would improve the model for small displacements $z$. However, this correction precludes any analytical treatment, and we therefore limit ourselves here to what is effectively the first step of the (resetting) Poisson process. Such added complexity might, for example, be needed in the case of forced turbulence, which is beyond the scope of this work.
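The weights entering (4.1) can be evaluated directly. The sketch below assumes one reading of $\mathcal{K}_r$: the gamma density (shape $n$, rate $r$) of the $n$th reset time, restricted to $[0, t]$ and renormalised by its cumulative probability. For integer $n$ that cumulative probability equals $P(R(t) \ge n)$. The sketch checks that this kernel integrates to one, that the Poisson weights $P(R(t)=n)$ sum to one, and that $P(R(t)=0) = \mathrm{e}^{-rt}$. The mixing probabilities $l_n$ and the density $p(z, t_r)$ are not modelled, and all function names are hypothetical.

```python
import math


def poisson_pmf(n, lam):
    """P(R(t) = n) when R(t) ~ Poisson(lam), lam = r*t > 0 (computed in log space)."""
    return math.exp(-lam + n * math.log(lam) - math.lgamma(n + 1))


def gamma_pdf(tr, n, r):
    """Density of the n-th reset time of a rate-r Poisson process: Gamma(shape n, rate r)."""
    if tr == 0.0:
        return r if n == 1 else 0.0
    return math.exp(n * math.log(r) + (n - 1) * math.log(tr) - r * tr - math.lgamma(n))


def gamma_cdf(t, n, r):
    """P(n-th reset <= t) = P(R(t) >= n), valid for integer shape n >= 1."""
    return 1.0 - sum(poisson_pmf(k, r * t) for k in range(n))


def truncated_kernel(tr, t, n, r):
    """Hypothetical reading of K_r(t_r, t; n, r): the Gamma(n, r) density
    restricted to 0 <= t_r <= t and renormalised to integrate to one."""
    if not 0.0 <= tr <= t:
        return 0.0
    return gamma_pdf(tr, n, r) / gamma_cdf(t, n, r)


def simpson(f, a, b, m=2000):
    """Composite Simpson rule on [a, b] with an even number m of sub-intervals."""
    h = (b - a) / m
    total = f(a) + f(b)
    for i in range(1, m):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


if __name__ == "__main__":
    r = 2.0
    for t in (0.5, 1.0, 2.0, 4.0):
        # Weight of the "no reset yet" term, P(R(t) = 0) = exp(-r t):
        # memory of z = 0 fades on the time scale 1/r.
        print(f"t={t}: P(R=0)={poisson_pmf(0, r * t):.4f}")
```

The identity $P(\Gamma_n \le t) = P(R(t) \ge n)$ avoids an incomplete gamma function. It can, however, lose precision through cancellation when $rt$ is small and $n$ is large.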

Another important question is whether the resetting term in our model is suitable for modelling the effect of buoyancy forces. Note that we are working here with the stochastic paths of the fluid parcels rather than their physical paths. It therefore seems reasonable to expect that restoring buoyancy forces will eventually (i.e. at a randomly sampled resetting time) collapse the stochastic paths of the fluid parcels onto their neutrally buoyant positions. This plausibility argument motivated our modelling assumptions.