Policy Significance Statement

Algorithms are becoming more important in society, and governments are increasingly asked to deploy these tools for public purposes. We do not know enough about the conditions under which agencies choose to deploy such tools. Crime laboratories have used one such tool for many years, yet its use remains unevenly distributed. We show that the constraints and pressures all agencies face (such as limited resources or expanding task environments) are also associated with their use of this expert system.

1. Introduction

Information systems researchers have long debated the prospect of delegating important public decisions to machines. The path from the 1956 announcement of the development of the first expert system (Watson and Mann, 1988) has led to pronouncements of a “second machine age” in which machines and algorithms will augment or even replace skilled and cognitive work (Brynjolfsson and McAfee, 2016; Schwab, 2016; Fry, 2018; Noble, 2018). Given a world inhabited by machines with advanced capabilities, a common narrative is that firms and government agencies will pursue such emerging technologies whenever possible.

While all organizations decide whether and how to engage with automation (Orlikowski, 2000; Roper, 2002; Dunleavy, 2005; Mergel, 2018), our knowledge of such innovations in government agencies remains limited. In general, innovation processes in government organizations differ from those in the private sector (e.g., Rainey et al., 1976; Perry and Rainey, 1988). Researchers have argued that government agencies can choose to “innovate with integrity” (Borins, 1998), but we also know that such innovation does not happen uniformly. A vast array of case studies on innovation in government agencies shows that “when” and “how” depend on many factors, and that our systematic knowledge of those factors remains coarse. One well-regarded large-scale systematic literature review specifically notes limitations in methods (qualitative research predominates over surveys and other approaches), in theory testing (most studies are exploratory), and in attention to location heterogeneity (studies do not account for variations in governance and state traditions; De Vries et al., 2016).

In this article, we offer quantitative evidence from a large sample of similar government agencies about how unevenly such agencies delegate important decisions to an expert system. These organizations are geographically dispersed and so are situated within different governance and state arrangements; we also observe these deployments over time. Our statistical model is informed by a strong theory of innovation rooted in the push-pull dichotomy (Dosi, 1982; Rennings, 2000; Taylor et al., 2005; Peters et al., 2012; Clausen et al., 2020).

In broad terms, the “when and where” of such innovations depends on “technology-push” and “demand-pull” dynamics that shape how the organization decides whether to deploy an advanced technology (Dosi, 1982). In government agencies, push and pull factors (and the public-sector equivalents of market signals) are theorized as working together with an agency’s innovation capabilities (Clausen et al., 2020). Theory has advanced and our data about such mechanisms have improved, but knowledge gaps remain about such factors and about the role of organizational capability across different domains (Peters et al., 2012). This theoretical approach to the mechanisms of innovation helps us better understand the conditions under which governments have delegated complex decisions requiring expertise and substantial knowledge to an expert system.

We focus on an expert system as an illustration of the “rise of the machines” (Buchanan and Smith, 1988; Watson and Mann, 1988). An expert system is a computer-based program or system that supports decision-making using a choice architecture combined with knowledge stored in a database; in short, an expert system is an inference engine joined with a knowledge base. Since Berry, Berry, and Foster (1998) first surveyed leaders about their willingness to use expert systems, few papers have assembled quantitative evidence about the use of expert systems or similar tools in government agencies. Most studies have centered instead on the use of traditional information technology applications, the advent of the Internet, the adoption of other communication network platforms, or the take-up of data science techniques (Moon et al., 2014). Even the broadest quantitative assessments of such algorithm-based decision-making are limited to counting instances (Long and Gil-Garcia, 2023) or to assessing individual perceptions in limited cases about the early or prospective use of systems like artificial intelligence (e.g., Sun and Medaglia, 2019). The most advanced assessments are usually designed as randomized controlled trials because they are intended to assess the tool’s effectiveness, not to determine where and when the tool was used (e.g., Döring et al., 2024). Similarly, the best studies of those implementations are usually case studies (e.g., Van Noordt and Tangi, 2023).
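To make the description of an expert system as “an inference engine joined with a knowledge base” concrete, the Python sketch below shows one common inference strategy, forward chaining over if/then rules. It is a toy illustration under our own assumptions: the `Rule` type, the `forward_chain` function, and every rule name are hypothetical and do not describe IBIS, NIBIN, or any actual laboratory workflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One if/then rule: if every premise is a known fact, assert the conclusion."""
    premises: frozenset
    conclusion: str


def forward_chain(facts, rules):
    """Inference engine: keep firing rules until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # A rule fires only when all of its premises are already known.
            if rule.premises <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


# Knowledge base: hypothetical rules for illustration only.
KNOWLEDGE_BASE = [
    Rule(frozenset({"case_recovered", "imaging_available"}), "acquire_image"),
    Rule(frozenset({"acquire_image"}), "search_database"),
    Rule(frozenset({"search_database", "strong_candidate"}), "refer_to_examiner"),
]

if __name__ == "__main__":
    derived = forward_chain({"case_recovered", "imaging_available"}, KNOWLEDGE_BASE)
    print(sorted(derived))
    # prints ['acquire_image', 'case_recovered', 'imaging_available', 'search_database']
```

Keeping the rules (the knowledge base) separate from the loop that applies them (the inference engine) reflects the distinction in the definition: the stored knowledge can be revised without rewriting the engine.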

Our evidence comes from the deployment of an expert system in forensic crime laboratories in the United States. These local, state, and regional laboratories assemble and process criminal justice evidence (McEwen, 2010; Hohl and Stanko, 2015; Campbell and Fehler-Cabral, 2020), so their use of expert systems affects how evidence is discovered and managed. Specifically, the Integrated Ballistic Identification System (“IBIS”) for matching bullets to crimes is an expert system that has been widely discussed, deployed, and debated (Nennstiel and Rahm, 2006; Braga and Pierce, 2011; King and Wells, 2015). Just as with DNA matching, which is also often done via expert system, ballistics imaging is often completed via expert system because the technical demands of the task exceed the capabilities of most laboratories.

We use data from two samples of forensic crime laboratories to test how the use of expert systems depends on key factors in the push-pull-capabilities framework. We offer hypotheses that reflect core understandings of the constraints and opportunities encountered by most government agencies, such as the agency’s task environment, its professionalism, its network relationships, its technology awareness, and its relative level of resources. Because we have two samples, we can examine how deployment processes change over time.

We find that the laboratories are early users if they have the capacity to do so. As time proceeds, use is largely associated with budget size, agency task environments, and network attributes, as well as with awareness of the use of a generic expert system. Stated use remains surprisingly low given the degree to which technologists have pushed for delegation to machines since the late 1950s. Even so, given these use rates, these factors are suggestive about the prospects for even more advanced technologies in government agencies. Early and late adoption, and consequently the inevitable inequities of uneven distribution, fundamentally shift the justice process. Of course, technology adoption is always more than a summary of factors; it is also a reflection of the lived experiences of professionals working in different organizations facing different challenges. The limited evidence seen in this case of expert systems suggests the need for greater attention to the conditions under which such factors affect adoption and use. More importantly, our approach joins a notable empirical case and a strong theory of innovation to provide broad evidence about the historical use of expert systems as algorithms in public sector applications, a missing piece in the puzzle of better understanding those prospects.

In the next section, we consider mechanisms that may drive technology use in the public sector. After that, we review the roles of expert systems within the forensic crime laboratories and the laboratories’ role in the justice process. The fourth section offers hypotheses and describes the variables. These sections are followed by our estimation approach and the results of our models. In the conclusion, we reconsider the uneven deployment of expert systems in government.

2. Push, pull, and capability forces in government innovation processes

This article asks whether push forces, pull forces, and organizational capabilities are associated with technology use in a sector of public organizations. Our focus on the specific use of a technology at the organizational level means we are bridging individual and organizational processes. The historically important literatures on the “technology acceptance model” (TAM) and the “diffusion of innovations” tell us that the use of such innovations depends on characteristics such as their perceived usefulness, complexity, and accessibility (e.g., Rogers, 1962; Davis, 1989; Legris et al., 2003).

At the individual (user) level, we know such decisions can be highly contingent. For instance, more complex versions of the TAM indicate that perceived usefulness itself depends on factors such as the perceived ease of use, subjective norms, and result demonstrability. Perceived usefulness, along with variables such as experience and voluntariness, affects intentions to use such technologies, which then helps determine actual usage. A broad array of empirical studies supports more (not less) complex interpretations of the acceptance of any given technology (Legris et al., 2003).

Likewise, for more than half a century we have recognized that organizational acceptance of an emerging technology is affected by innovation attributes, the relative advantages offered by innovations, and organizational fit; additional concerns include complexity, trialability, and observability (Rogers, 1962). Mixtures of these effects, at both the individual and organizational levels, contribute to a mosaic of motivations and incentives that are difficult to tease out in any broad-scale study.

In our specific research context, advanced technologies are widely seen as helping both governments and nonprofit organizations develop sufficient capacity for carrying out their missions (Fredericksen and London, 2000; Eisinger, 2002). This is mainly done through the automation of tasks common to such organizations, especially those that require high expertise and specialization. The general view has been that such innovations are useful even if they require investments, process changes, or additional training (e.g., Marler et al., 2006), although the relative benefits of technology use in public organizations are conditional: technology is not a universal good, and its utility depends on other aspects of the organizational context (e.g., Brudney and Selden, 1995; Nedovic-Budic and Godschalk, 1996; Moon, 2002; Norris and Moon, 2005; Jun and Weare, 2011; Mergel and Bretschneider, 2013; Moon et al., 2014; Brougham and Haar, 2018; Mergel, 2018). This conditionality flows from competing claims on time and resources within agencies, from conflicts resulting from new workflows and learning curves, and from disruption to routines and power relationships. Technological change is just one of the many competing demands managed by public agencies.

This complex of organizational forces is one reason it is difficult to study innovation in the public sector. In his 1998 book Innovating with Integrity, Sandford Borins assessed the relative balance of forces for and against adoption, concluding that government innovation often comes down to “it depends” (Borins, 1998). Because innovation usually depends on context, what we observe in the public sector depends on how mixtures of forces determine innovative strategies, the adoption of new technologies, and different management processes in each public organization. The relative balance of those forces shapes and constrains what we observe at the organizational level.

One main area of agreement, though, is that innovation processes in government organizations differ from those in the private sector (e.g., Rainey et al., 1976; Perry and Rainey, 1988). Agencies care about service delivery (Brown et al., 2006), so technology choice and use are entwined with goals such as transparency, engagement, and communication, along with more traditional concerns like efficiency and effectiveness (e.g., Bertot et al., 2010; Desouza and Bhagwatwar, 2012; Reddick and Turner, 2012; Gil-Garcia et al., 2014). This entanglement is especially common with technologies that affect data collection and processing. Even if agencies have supportive goals and leadership (Dawson et al., 2016), their risk-averse cultures can hinder the uptake of new and emerging technologies, especially given political oversight (Savoldelli et al., 2014).

This public-private distinction is particularly important in the study of innovation processes. Dosi (1982) centered attention on the dichotomy between “technology-push” and “demand-pull” as a central way of understanding the more proximate factors that drive the adoption and use of specific technologies in markets and firms. The dichotomy is a powerful framework for understanding innovation; more than just “for the sake of exposition”, it is “a fundamental distinction between the two approaches” that builds on how organizations process market signals (Dosi, 1982, 148).

Specifically, Dosi handles the dichotomy by focusing on processes of search and selection whereby firms navigate new technologies, arguing that “new technologies are selected through a complex interaction between some fundamental economic factors (search for new profit opportunities and for new markets, tendency toward cost saving and automation, etc.), together with powerful institutional factors (the interest and the structure of the existing firm, the effects of government agencies, etc.)” (Dosi, 1982, 157). In aggregate, this means that each firm has a different pathway for taking up and using new technologies that depends on how market and non-market forces interact, with market forces structuring the responses of most firms. The push-pull dichotomy literature has fundamentally shaped our understanding of private-sector innovation (Di Stefano et al., 2012).

Yet, while the broad distinction between push and pull forces remains a powerful way of understanding the factors driving organizational outcomes, recent advances have enriched this theoretical framework in ways that are especially useful for the study of public agencies. Building on our knowledge about the roles of organizational capabilities and resources (Nelson and Winter, 1977; Di Stefano et al., 2012), Clausen, Demircioglu, and Alsos argue that organizational capabilities are especially important for public sector organizations as they work hand-in-hand with the complex of demand-pull and technology-push forces for innovation (Clausen et al., 2020). In contrast to the private sector organizations that Dosi discusses, most public sector organizations are largely insulated from traditional market signals such as sales and profits. These broad roles for capabilities and resources are substantiated in the voluminous literature on the resource-based view of the organization (Barney, 1991; Teece, 2007), for which there is substantial evidence in the public sector (Pablo et al., 2007; Lee and Whitford, 2013). For Clausen, Demircioglu, and Alsos, the capabilities of public sector organizations (thought of in terms of resources) support their attempts to create public value (Moore, 2001; Jørgensen and Bozeman, 2007), although we reiterate that capabilities and resources are broad concepts for which there are many possible measurement paths in any given group of public sector organizations (Lee and Whitford, 2013).

We recognize that there has been a resurgence of interest in the roles of capabilities in studies of technology adoption and utilization in the public sector (Favoreu et al., 2024; Selten and Klievink, 2024), with an increasing focus on dynamic and specific capabilities as precursors for innovation (Birkinshaw et al., 2016) rather than more general dynamic capabilities (Teece, 2007). These are important questions that these recent case studies can help tease out in specific settings. In the empirical context we describe below, we focus on a specific capability, partly because it is measured in common across organizations and the resource is fungible (Lee and Whitford, 2013), and partly because fungible resources are especially important for organizations pursuing long-term evolutionary benefits (Li, 2016).

This differentiation about the roles of capabilities and resources is important for public sector organizations because the original technology-push and demand-pull classification from Dosi is deeply entwined with his view of market forces. While the “concept of technology-push takes a linear supply-side perspective on the innovation process” (i.e., due to advances in science and technology that are then made available to firms), the demand-pull view says that “innovation is fundamentally driven by (expected) market demand that influences the direction and rate of innovative activity” (Clausen et al., 2020, 161). Below we try to operationalize these broad concepts within the context of our sample of public agencies, given the service environment that they navigate, but even the highest-level treatment of these concepts is aided by considering the broader view of Dosi’s framework suggested by affiliated theoretical contributions (Nelson and Winter, 1977; Di Stefano et al., 2012).

3. Context and data: expert systems in the crime laboratories

We focus on expert systems as progenitors of the use of advanced algorithms (Buchanan and Smith, 1988; Rolston, 1988). While expert systems are just one variant, we view their adoption and use as an important indicator of what happens when decisions are delegated to algorithms. In many settings, expert systems are justifiable delegatees because they are already widely accepted in many applied fields (such as finance, accounting, and manufacturing) and because they provide a starting point for considering a wide array of increasingly familiar methodologies (e.g., genetic algorithms, model-based reasoning, fuzzy systems, neural networks, machine learning, distributed learning; Liebowitz, 1998).

Fields like criminal justice have long debated the use of expert systems in practical applications (Ratledge and Jacoby, 1989). For example, Baltimore County, Maryland (United States), implemented the Residential Burglary Expert System (REBES) in the 1980s, following the development and implementation of expert systems in the Devon and Cornwall constabulary in the United Kingdom. Drawing on data, those systems used heuristics to generate “if/then” rules that helped predict burglaries; deficiencies in data collection and analysis subsequently led to other advances in understanding complex databases (Holmes et al., 2007).

Similar expert systems have become key to data collection and analysis efforts throughout law enforcement. We focus on the IBIS in the United States, a widely used expert system for ballistics imaging (for comparing spent cartridge cases from guns). Proprietary algorithms convert digital images of those cartridge cases into digital signatures, which software then matches algorithmically (National Research Council (U.S.) and Cork, 2008). IBIS is implemented at the local organizational level via the National Integrated Ballistic Information Network (NIBIN), which combines machines with an operational structure of agencies and locations (King et al., 2013; King and Wells, 2015). Algorithms like IBIS, as implemented in NIBIN, give law enforcement officials specific mechanisms for saving time and energy in the pursuit of justice outcomes.
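Because the IBIS matching algorithms are proprietary, we cannot show them. As a purely illustrative stand-in, the Python sketch below represents each digital signature as a hypothetical numeric feature vector and ranks stored signatures by cosine similarity to a query. The feature vectors, case identifiers, `cosine_similarity`, and `rank_candidates` are assumptions for exposition only, not the IBIS method.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("signatures must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare an all-zero signature")
    return dot / (norm_a * norm_b)


def rank_candidates(query, database, top_k=3):
    """Return the top_k (case_id, score) pairs, most similar first."""
    scored = [(case_id, cosine_similarity(query, sig)) for case_id, sig in database.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy signatures; real ballistic signatures are not simple 3-element vectors.
    stored = {
        "case_A": [1.0, 0.0, 1.0],
        "case_B": [0.0, 1.0, 0.0],
        "case_C": [1.0, 1.0, 1.0],
    }
    for case_id, score in rank_candidates([1.0, 0.0, 1.0], stored, top_k=2):
        print(case_id, round(score, 3))
```

In this sketch the output is a ranked candidate list rather than a final determination, which captures the general idea of algorithmic screening followed by human review; it should not be read as a description of how NIBIN correlations are actually scored.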

Our data on expert systems come from a census of operating US crime laboratories. Crime laboratories are an important part of the “evidence assembly” process in criminal justice. They are complex and shifting work environments that analyze millions of pieces of evidence from criminal investigations each year. Due to the nature of their task environments, they operate more like academic laboratories than factories. The laboratories employ highly skilled professionals who work more like police detectives than patrol officers; their technicians are highly specialized and draw on complex portfolios of abilities.

The laboratories process and interpret physical evidence collected during criminal investigations. There are laboratories at all levels of government in the United States (e.g., federal, state, local), and they serve many different criminal justice agencies (e.g., the police, prosecutors, courts, correctional facilities). In 2014, the most recent year of data we use, US laboratories handled 3.8 million requests for help, mostly in state and local laboratories (Durose et al., 2016, 1). A given investigation usually involves multiple requests for processing evidence (e.g., fingerprints, DNA evidence). A significant proportion of laboratories use outside contractors (Durose et al., 2016, 4). Funding comes from budgets, grants, fees, and other sources.

We have two samples drawn from two censuses: the first was gathered in 2009 and originally released in 2010 (United States Department of Justice, Office of Justice Programs, Bureau of Justice Statistics, 2012); the second was gathered in 2014 and originally released in 2016 (United States Department of Justice, Office of Justice Programs, Bureau of Justice Statistics, 2017). Our data were collected by the Bureau of Justice Statistics (BJS) as part of the Census of Publicly Funded Forensic Crime Laboratories (CPFFCL). Although this is called a census, and it is the most authoritative data source we know of on these organizations, BJS employs a sampling frame that includes all state, county, municipal, and federal crime laboratories (not all of which respond). The public funding condition covers laboratories that are solely government-funded or whose parent organization is a government agency and that employ at least one FTE natural scientist for processing evidence in criminal matters. We note that the data do not include organizations that only collect or document evidence (e.g., fingerprints, photography) or other miscellaneous police-based identification units (e.g., predictive policing).

To the best of our knowledge, CPFFCL is the only accurate and comprehensive data source on the operation of crime laboratories in an advanced industrialized democracy. Moreover, these organizations provide these data only because the surveying institution is the peak national organization for research on criminal justice studies. The quality of the data, given the nature of this effort, allows us to pursue the goal of this article: to offer quantitative evidence from a large sample of similar government agencies about the uneven distribution of an expert system, where the agencies are dispersed geographically and face different governance and state arrangements (De Vries et al., 2016). The regularity of the census allows us to observe the roles of these factors over time.

Specifically, the laboratories employed around 14,300 full-time equivalents (FTEs) in 2014 and had a combined operating budget of $1.7 billion; large labs (more than 25 FTEs) accounted for over 80% of this combined budget (Durose et al., 2016, 5). The vast majority of labs are accredited by international professional organizations (Burch et al., 2016, 1). Almost all of the laboratories conduct proficiency testing through some combination of declared examinations, blind examinations, and random case reanalysis (Burch et al., 2016, 4). Most laboratories have written standards for performance expectations and maintain a written code of ethics (Burch et al., 2016, 5). Over time, laboratories have become more likely to employ external certification (Burch et al., 2016, 5–6). Depending on the survey year, 30%–50% of the laboratories participated in multi-lab systems.

One of the compelling aspects of the laboratories, at least as they are portrayed on television, is their adoption of emerging technologies. Many think of such technicians and laboratories as exemplars of what communities want from their criminal justice system: expertise, professionalism, and the relentless pursuit of the truth (Ramsland, 2006; Byers and Johnson, 2009). As “arbiters of evidence”, they are portrayed as a counterbalance to police officers or prosecutors pursuing their own incentives. We offer this case as a microcosm of government in a world of algorithms. As technologies like expert systems take hold in these laboratories, we learn more about the prospects for enhanced decision-making for broader purposes, and about what may happen to the overall performance of government. In this empirical context, we note that survey respondents are asked to report on both the general and the specific use of expert systems: the uptake of “expert systems” generally and the specific uptake of ballistic identification systems.

4. Hypotheses and variables

Given our literature review, we recognize that technology use is a deep question, but we see value in focusing on aspects shaping the experiences of groups of organizations (Fountain, 2001; Mergel, 2018). Humans will increasingly rely on machines for both recommendations and decision-making. Our focus in this section is on the roles of push, pull, and capability factors.

Many government organizations like the crime laboratories face demanding task loads from law enforcement organizations and other constituencies. Expert systems can reduce the strain of a complex and potentially overwhelming task environment (Jun and Weare, 2011). We focus on task demands from criminal justice agencies, a common measure of task environment complexity. Historically, humans have met those task demands, so increasing request loads lead either to hiring more personnel or to other efficiency-improving solutions. Expert systems are one such solution, reducing the need for additional hires. Of course, this hypothesis maps only imperfectly onto Dosi’s views about market forces. Requests Received is the total number of requests for assistance received by the laboratory in the calendar year of the survey (measured at the end of that calendar year). Our “pull-side” hypothesis about the task environment is as follows:

H1: The probability of use increases when task demands are greater (“demand pull”).

Because our independent variable Requests Received is skewed, we transformed it using a Box-Cox zero-skew transformation.
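A zero-skew Box-Cox transformation chooses the power parameter λ so that the transformed variable has zero sample skewness, where the transform is (x^λ − 1)/λ for λ ≠ 0 and ln x for λ = 0. The Python sketch below finds λ by bisection. It is a minimal illustration under stated assumptions: the search bracket [−3, 3], the function names, and the example counts are ours. The transform requires strictly positive values, and the text does not say how zero counts (if any) were handled or which software was used; Stata’s `bcskew0` command implements this kind of transformation.

```python
import math


def skewness(values):
    """Moment coefficient of skewness, m3 / m2**1.5."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    if m2 == 0:
        raise ValueError("skewness is undefined for constant data")
    return m3 / m2 ** 1.5


def box_cox(values, lam):
    """Box-Cox power transform; log transform when lam == 0."""
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive values")
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1) / lam for v in values]


def zero_skew_lambda(values, lo=-3.0, hi=3.0, iterations=200):
    """Bisect on lam until the transformed data have (near) zero skewness."""
    f_lo = skewness(box_cox(values, lo))
    f_hi = skewness(box_cox(values, hi))
    if f_lo * f_hi > 0:
        raise ValueError("skewness does not change sign on [lo, hi]; widen the bracket")
    for _ in range(iterations):
        mid = (lo + hi) / 2
        f_mid = skewness(box_cox(values, mid))
        if f_lo * f_mid <= 0:
            hi = mid  # the sign change lies in [lo, mid]
        else:
            lo, f_lo = mid, f_mid  # the sign change lies in [mid, hi]
    return (lo + hi) / 2


if __name__ == "__main__":
    # Illustrative right-skewed counts, not CPFFCL data.
    requests = [1, 2, 3, 5, 8, 13, 21, 34, 55, 89]
    lam = zero_skew_lambda(requests)
    print(round(lam, 4), round(skewness(box_cox(requests, lam)), 12))
```

Any of the usual sample-skewness estimators yields the same λ here, because they differ from m3/m2^1.5 only by a positive factor and therefore share the same zero.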

Our first push factors are rooted in the historical public administration literature on the influence of professionalism in government organizations. In general, more professional organizations are associated with enhanced efficiency and effectiveness (Brudney and Selden, 1995; Jun and Weare, 2011; Miller and Whitford, 2016). We focus on two pathways for professionalism in the context of the laboratories: accreditation and proficiency testing. Most laboratories examined are accredited and employ proficiency testing. Accreditation acts as an externally enforced professionalism standard, whereas proficiency testing acts as an internally imposed standard. We offer two hypotheses:

H2a: The probability of use increases in accredited agencies (“technology push”).

H2b: The probability of use increases in agencies with proficient workforces (“technology push”).

Accreditation is voluntary; accrediting professional organizations include the American Society of Crime Laboratory Directors/Laboratory Accreditation Board, International (ASCLD/LAB, International). We assess these hypotheses using Accreditation and Proficiency indices built from multiple underlying indicators. Accreditation is measured by the responses to four questions, each asking whether the laboratory received a specific form of accreditation (two types from the American Society of Crime Laboratory Directors, one from Forensic Quality Services, and “any other”). This variable ranges from zero to three in our data (no laboratory reports four). Proficiency testing takes different forms, and the laboratories use these different methods to assess whether personnel are following industry standards and how they perform relative to those standards (Burch et al., 2016, 3). The Proficiency Index records whether staff were tested in each of four different ways (blind test, declared examination, random case reanalysis, or any other). This variable ranges from zero to four in our data.
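The additive indices described above can be sketched as a simple count of yes/no items. The item names below are hypothetical placeholders (the census variable names are not given in the text), and the sketch assumes every item is coded 0 or 1 with no missing values; how missing responses were treated is not stated.

```python
# Hypothetical item names; the census's own variable names are not reproduced here.
ACCREDITATION_ITEMS = ("ascld_type_1", "ascld_type_2", "fqs", "other_accreditation")
PROFICIENCY_ITEMS = ("blind_test", "declared_exam", "random_reanalysis", "other_testing")


def additive_index(response, items):
    """Count the yes (1) answers among the listed 0/1 items."""
    total = 0
    for item in items:
        value = response[item]  # a missing item raises KeyError rather than being guessed
        if value not in (0, 1):
            raise ValueError(f"{item} must be coded 0 or 1, got {value!r}")
        total += value
    return total


if __name__ == "__main__":
    # One invented laboratory response for illustration.
    lab = {
        "ascld_type_1": 0, "ascld_type_2": 1, "fqs": 1, "other_accreditation": 0,
        "blind_test": 1, "declared_exam": 1, "random_reanalysis": 1, "other_testing": 0,
    }
    print(additive_index(lab, ACCREDITATION_ITEMS), additive_index(lab, PROFICIENCY_ITEMS))
    # prints 2 3
```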

Because laboratories interact with many different groups, including vendors, in their work, some outsource functions to improve their efficiency, especially given that commercial laboratories may be faster innovators and more likely to use industry-standard best practices (Minicucci and Donahue, 2004; Meier and O’Toole, 2009; Jun and Weare, 2011; Manoharan, 2013). Of course, it is possible that contracting out leads to lower or negligible technology adoption because of shifting responsibility; we therefore have competing predictions for this variable. Even so, we argue that laboratories that outsource are more likely to use expert systems to increase efficiency and effectiveness; our hypothesis is as follows:

H3: The probability of use increases in agencies that outsource (“technology push”).

This variable is reported as a dichotomous indicator, which makes it a coarse measure of outsourcing.

Our fourth hypothesis reflects a peculiarity of the data collected by BJS. Both censuses measure the use of algorithms generally and specifically. Expert System is a dichotomous explanatory variable based on the response to the question of whether the laboratory uses expert systems for any purpose. Our dependent variable, NIBIN, is also dichotomous but specifically addresses whether the laboratory uses the NIBIN system for ballistics matching; as noted, NIBIN is itself an expert system.

One important aspect of the TAM is its focus on individual-level perceptions—for instance of “ease of use” or “perceived usefulness”. This orientation toward perceptions suggests that technology awareness may help drive adoption and use. Accordingly, we should account for such broad perceptions as a potential factor in the use of a specific technology like IBIS via NIBIN. We consider this perceptual precursor as a potential “technology-push” factor. Our hypothesis is as follows:

H4: The probability of use increases when the respondent says that the agency uses expert systems (“technology push”).

We note that, in both 2009 and 2014, more organizations reported the specific use of an expert system (NIBIN) than reported the general use of expert systems. Logically, if a laboratory reports the use of NIBIN, then it should also report the use of an Expert System. Of course, agencies can know from practice that a tool like NIBIN is useful without recognizing it as an expert system. It is therefore testable whether awareness of expert systems also increases the likelihood of using a specific one.
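The logical check described above amounts to a cross-tabulation of the two dichotomous responses. A minimal sketch with made-up responses for five hypothetical laboratories; the function names are illustrative, not part of any census codebook.

```python
from collections import Counter


def crosstab(nibin: list, expert_system: list) -> Counter:
    """Count (NIBIN, Expert System) response pairs, each coded 0/1."""
    return Counter(zip(nibin, expert_system))


def inconsistent_reports(nibin: list, expert_system: list) -> int:
    """Labs reporting NIBIN use but no use of any expert system."""
    return sum(1 for n, e in zip(nibin, expert_system) if n == 1 and e == 0)


# Made-up responses for five hypothetical laboratories.
nibin = [1, 1, 0, 1, 0]
expert_system = [1, 0, 0, 0, 1]
table = crosstab(nibin, expert_system)
print(table[(1, 0)], inconsistent_reports(nibin, expert_system))  # 2 2
```

The (1, 0) cell counts laboratories that use NIBIN yet do not report using an expert system, which is the pattern the paragraph above describes.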

We also examine the impact of the forensic laboratory’s organizational context. If laboratories are members of larger, multiple lab consortia, we expect that increased size pushes them toward the adoption of new technologies and innovations (more so than their standalone counterparts) due to greater capacity or greater sensitivity (Moon, Reference Moon2002; Holden et al., Reference Holden, Norris and Fletcher2003; Jun and Weare, Reference Jun and Weare2011; Moon et al., Reference Moon, Lee and Roh2014; Connolly et al., Reference Connolly, Bode and Epstein2018). As with contracting out, we also have competing predictions for this variable given the possibility of shifting responsibility. Regardless, our hypothesis is as follows:

H5: The probability of use increases in agencies with multiple laboratories (“organizational capability”).

This variable is reported as a dichotomous indicator.

Our last explanatory variable centers on capability as measured by unit resources. Financial resources are a notable barrier to technology adoption in government agencies (Holden et al., Reference Holden, Norris and Fletcher2003, 339). Higher budgets are associated with innovative cultures and technology adoption (Demircioglu and Audretsch, Reference Demircioglu and Audretsch2017), at least in part because of the costs of potential innovations (Damanpour and Schneider, Reference Damanpour and Schneider2009). We expect that laboratories with robust budgets are more apt to use emerging technology (Brudney and Selden, Reference Brudney and Selden1995; Norris and Moon, Reference Norris and Moon2005; Lee and Whitford, Reference Lee and Whitford2013; Manoharan, Reference Manoharan2013). However, we recognize that budget constraints may also foster adoption of personnel-saving innovations (Kiefer et al., Reference Kiefer, Hartley, Conway and Briner2015). This problem of complex causation when considering resources is well known (Demircioglu and Audretsch, Reference Demircioglu and Audretsch2017). More generally, financial resources provide a commonly measured way of observing the role of fungible resources as supportive, dynamic capabilities (Teece, Reference Teece2007; Li, Reference Li2016). Our hypothesis is as follows:

H6: The probability of use increases when budgets are greater (“organizational capability”).

Our variable for resources is Budget; it is skewed, so we also transformed it using a Box-Cox zero-skew transformation.
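A zero-skew Box-Cox transformation uses the family (x^λ − 1)/λ, with log x when λ = 0, and chooses λ so that the transformed values have zero sample skewness (Stata's `bcskew0` command works on this idea). The sketch below finds λ by bisection, assuming strictly positive budgets and that skewness changes sign on the search interval; it illustrates the technique and is not necessarily the authors' procedure.

```python
import math


def box_cox(x: float, lam: float) -> float:
    """Box-Cox power transform; the log limit is used when lam is ~0."""
    if abs(lam) < 1e-8:
        return math.log(x)
    return (x ** lam - 1.0) / lam


def skewness(values: list) -> float:
    """Moment-based sample skewness m3 / m2**1.5."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


def zero_skew_lambda(data: list, lo: float = -2.0, hi: float = 2.0, iters: int = 100) -> float:
    """Bisection search for the lambda that gives zero skewness."""
    if any(x <= 0 for x in data):
        raise ValueError("Box-Cox requires strictly positive values")

    def skew_at(lam: float) -> float:
        return skewness([box_cox(x, lam) for x in data])

    s_lo, s_hi = skew_at(lo), skew_at(hi)
    if s_lo * s_hi > 0:
        raise ValueError("skewness does not change sign on [lo, hi]")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        s_mid = skew_at(mid)
        if s_mid == 0.0:
            return mid
        if (s_mid < 0) == (s_lo < 0):
            lo, s_lo = mid, s_mid  # root lies in [mid, hi]
        else:
            hi = mid               # root lies in [lo, mid]
    return 0.5 * (lo + hi)


# Made-up, log-symmetric "budgets": the log transform (lambda near 0) removes the skew.
data = [math.exp(z) for z in (-2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0)]
lam = zero_skew_lambda(data)
transformed = [box_cox(x, lam) for x in data]
```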

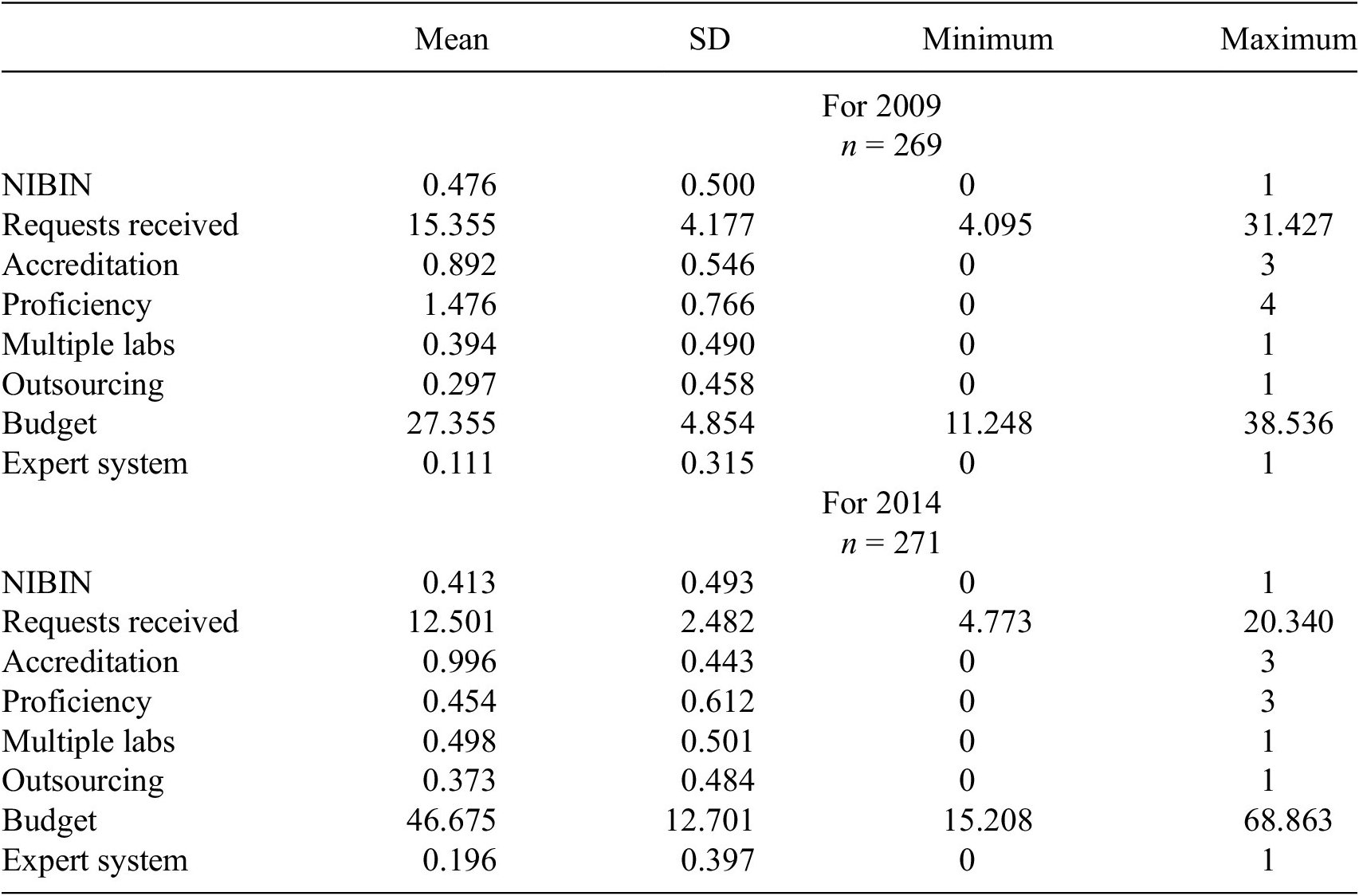

All descriptive statistics (by year) are located in Table 1. The variables suggest that it may be more difficult to measure “technology push” than “demand pull” or “capability” in our data.

Table 1. Descriptive statistics

5. Estimation methods and results

We have one dependent variable measured in two time periods (2009 and 2014) (see Footnote 1). Observing reported use at two points in time helps us assess how the influence of these factors changes across stages, an approach Borins argues for in observing “waves of innovation” (Borins, Reference Borins1998, 293). Moreover, our observations are at a macro (organizational) level (“the organization’s reported use of specific technologies”). In their approach to estimating such effects, Clausen, Demircioglu, and Alsos observe “any” innovation at a high level (Clausen et al., Reference Clausen, Demircioglu and Alsos2020); our model is similarly macro in its orientation.

We estimate two probit models, one for each time period (2009 and 2014). The probit model specifies the probability that the dependent variable equals one as the standard normal cumulative distribution function of a linear combination of the explanatory variables (Pindyck and Rubinfeld, Reference Pindyck and Rubinfeld1998; Greene, Reference Greene2018). Because the model is nonlinear, the effect of any one factor on the probability of use in a specific agency depends on the values of all the other factors. We fit the models with robust standard errors clustered by state; to a degree, this helps address unobservable heterogeneity across local geographies. For 2009, there are 51 clusters because one laboratory is located in Washington, DC; for 2014, there are 50 clusters (see Footnote 2). For both models, shown in Tables 2 and 3, the Wald χ2 test rejects (at a high level of significance) the null hypothesis that all slope coefficients are jointly equal to zero.
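To make the model concrete: the probit specifies P(y = 1 | x) = Φ(x′β), where Φ is the standard normal CDF. The sketch below fits β by Fisher scoring on made-up data using only the standard library. It is an illustration under stated assumptions, not the authors' estimation code: it yields point estimates only and does not compute the state-clustered robust standard errors or the Wald test reported in the tables.

```python
import math


def norm_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def norm_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def _clip(p: float) -> float:
    # Keep probabilities away from 0 and 1 to avoid log(0) and division by zero.
    return min(max(p, 1e-12), 1.0 - 1e-12)


def log_likelihood(beta, X, y):
    ll = 0.0
    for xi, yi in zip(X, y):
        p = _clip(norm_cdf(sum(b * x for b, x in zip(beta, xi))))
        ll += yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return ll


def score_and_information(beta, X, y):
    """Gradient of the log-likelihood and the expected (Fisher) information."""
    k = len(beta)
    score = [0.0] * k
    info = [[0.0] * k for _ in range(k)]
    for xi, yi in zip(X, y):
        xb = sum(b * x for b, x in zip(beta, xi))
        p, d = _clip(norm_cdf(xb)), norm_pdf(xb)
        w = d / (p * (1.0 - p))
        for a in range(k):
            score[a] += w * (yi - p) * xi[a]
            for c in range(k):
                info[a][c] += w * d * xi[a] * xi[c]
    return score, info


def solve(A, b):
    """Solve A v = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                for j in range(c, n + 1):
                    M[r][j] -= f * M[c][j]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit_probit(X, y, max_iter=100, tol=1e-10):
    """Fisher scoring: beta <- beta + I(beta)^-1 * score(beta)."""
    beta = [0.0] * len(X[0])
    for _ in range(max_iter):
        score, info = score_and_information(beta, X, y)
        step = solve(info, score)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta


# Made-up data: an intercept column plus one covariate.
X = [[1.0, float(v)] for v in range(10)]
y = [0, 0, 1, 0, 0, 1, 0, 1, 1, 1]
beta = fit_probit(X, y)
```

In applied work, a statistical package would normally be used so that cluster-robust standard errors and the joint Wald test come with the fit.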

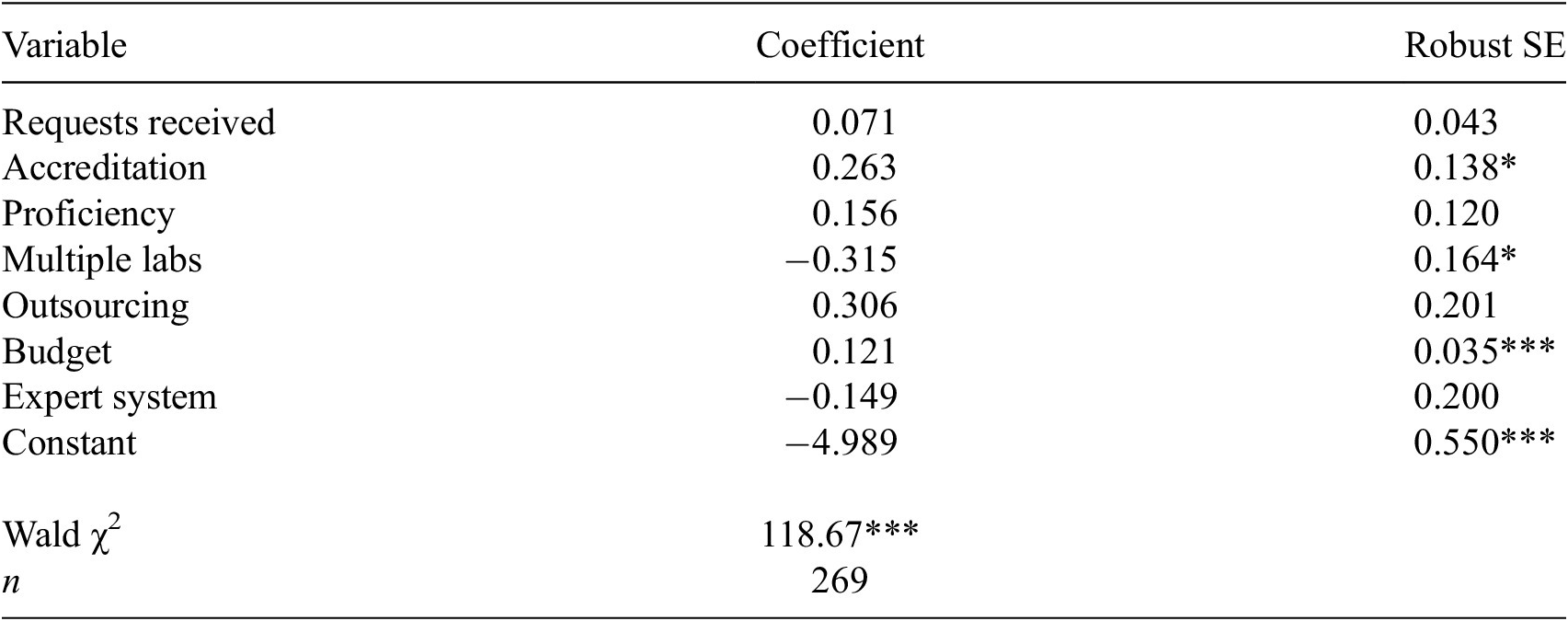

Table 2. Probit model for NIBIN as a dependent variable, 2009

Note: * indicates significance better than 0.10 (two-tailed test), ** indicates significance better than 0.05 (two-tailed test), and *** indicates significance better than 0.01 (two-tailed test).

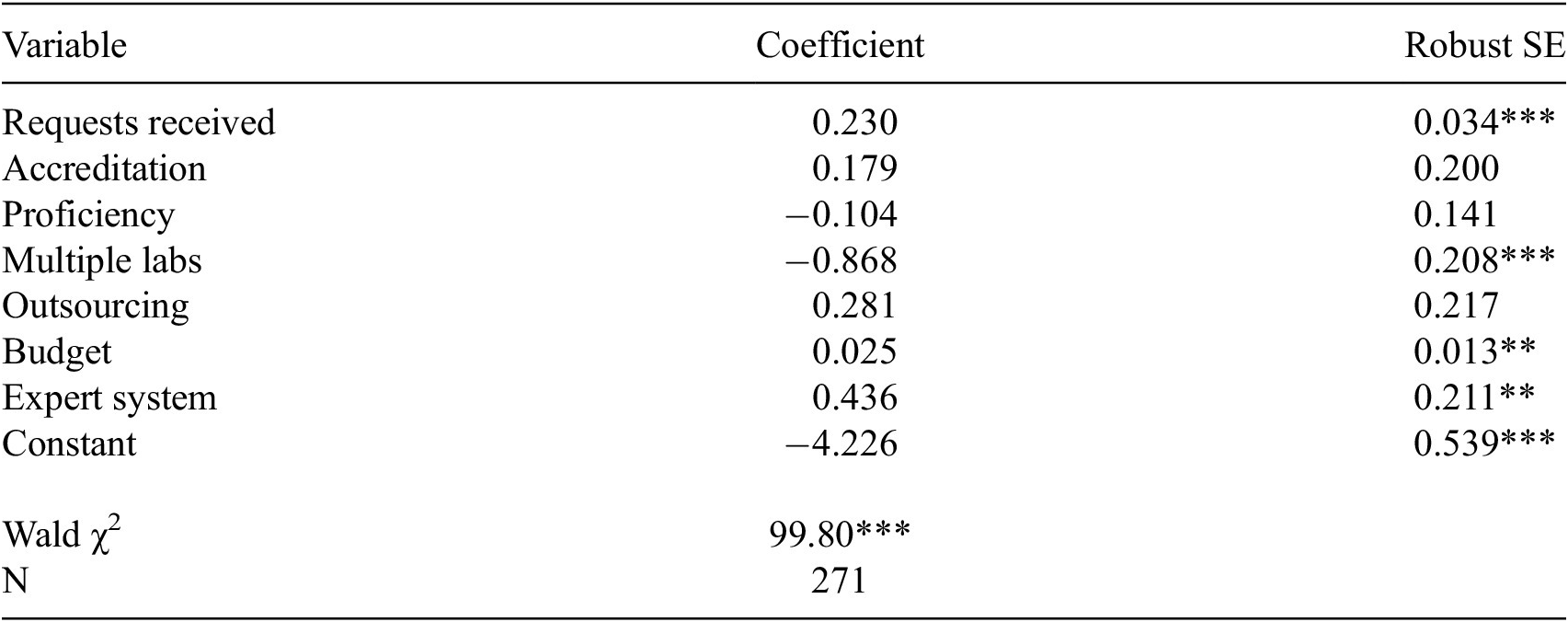

Table 3. Probit model for NIBIN as a dependent variable, 2014

Note: * indicates significance better than 0.10 (two-tailed test), ** indicates significance better than 0.05 (two-tailed test), and *** indicates significance better than 0.01 (two-tailed test).

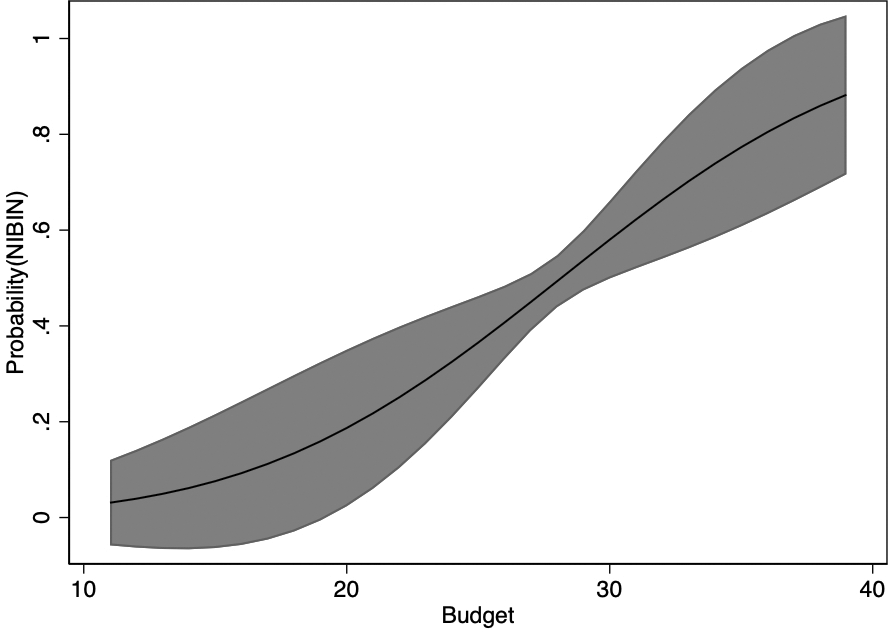

For the 2009 sample, the probit model shows that the probability of use is positively associated with larger budgets. Figure 1 shows the estimated effect of Budget (a measure of “organizational capability”) on the predicted probability of NIBIN use; the predicted probability rises steeply, from just above zero to about 0.80 as Budget increases from its minimum to its maximum value.

Figure 1. Estimated impact of budget, 2009.
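Curves like the one in Figure 1 trace predicted probabilities across one variable's range. One common way to compute such a curve from a fitted probit is sketched below: for each grid value, fix that variable for every observation, keep the other covariates at their observed values, and average the predicted probabilities. The coefficients, column order (intercept, standardized Budget, Accreditation), and data are hypothetical, and the figure may instead have been computed with the other covariates held at their means.

```python
import math


def norm_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def predictive_margins(beta, X, col, grid):
    """Average predicted probability with column `col` set to each grid value.

    All other columns keep their observed values for every observation.
    """
    margins = []
    for g in grid:
        total = 0.0
        for row in X:
            r = list(row)
            r[col] = g
            total += norm_cdf(sum(b * x for b, x in zip(beta, r)))
        margins.append(total / len(X))
    return margins


# Hypothetical coefficients: intercept, standardized Budget, Accreditation.
beta = [-1.0, 0.9, 0.15]
X = [[1.0, -1.2, 0], [1.0, -0.3, 1], [1.0, 0.4, 2], [1.0, 1.1, 3]]
grid = [-2.0, -1.0, 0.0, 1.0, 2.0]
curve = predictive_margins(beta, X, col=1, grid=grid)
```

With a positive Budget coefficient the curve rises monotonically, which is the shape the text describes.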

This is a primary effect in the 2009 model. In addition, there is a moderately significant but sizeable effect of Accreditation (a measure of “technology push”). Comparing laboratories with three accreditation types (the largest reported value in our data) with those without any, the model suggests that the probability of NIBIN use is just over 0.23 higher. Additionally, there is an unexpected but moderate effect of having multiple laboratories (a measure of “organizational capability”): having Multiple Labs decreases the probability of NIBIN use by just over 0.08. Both of these effects are estimated with more uncertainty than the effect of Budget. None of the coefficients for the other factors is estimated to be significantly different from zero.
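The Accreditation and Multiple Labs effects are discrete changes: the difference in predicted probability when a variable moves from one value to another (0 to 3, or 0 to 1). A sketch of an average discrete change for a fitted probit, with hypothetical coefficients and data; averaging over observations is one common convention and is not necessarily the one behind the reported 0.23 and 0.08.

```python
import math


def norm_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def average_discrete_change(beta, X, col, start, end):
    """Mean over observations of P(y=1 | x_col=end) - P(y=1 | x_col=start)."""
    total = 0.0
    for row in X:
        lo, hi = list(row), list(row)
        lo[col], hi[col] = start, end
        p_lo = norm_cdf(sum(b * x for b, x in zip(beta, lo)))
        p_hi = norm_cdf(sum(b * x for b, x in zip(beta, hi)))
        total += p_hi - p_lo
    return total / len(X)


# Hypothetical coefficients: intercept, standardized Budget, Accreditation, Multiple Labs.
beta = [-0.8, 0.7, 0.2, -0.3]
X = [[1.0, -0.5, 1, 0], [1.0, 0.2, 2, 1], [1.0, 1.0, 0, 0]]
accreditation_effect = average_discrete_change(beta, X, col=2, start=0, end=3)
multiple_labs_effect = average_discrete_change(beta, X, col=3, start=0, end=1)
```

Because the probit is nonlinear, each laboratory's change depends on its other covariates, which is why the changes are averaged rather than read directly off a coefficient.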

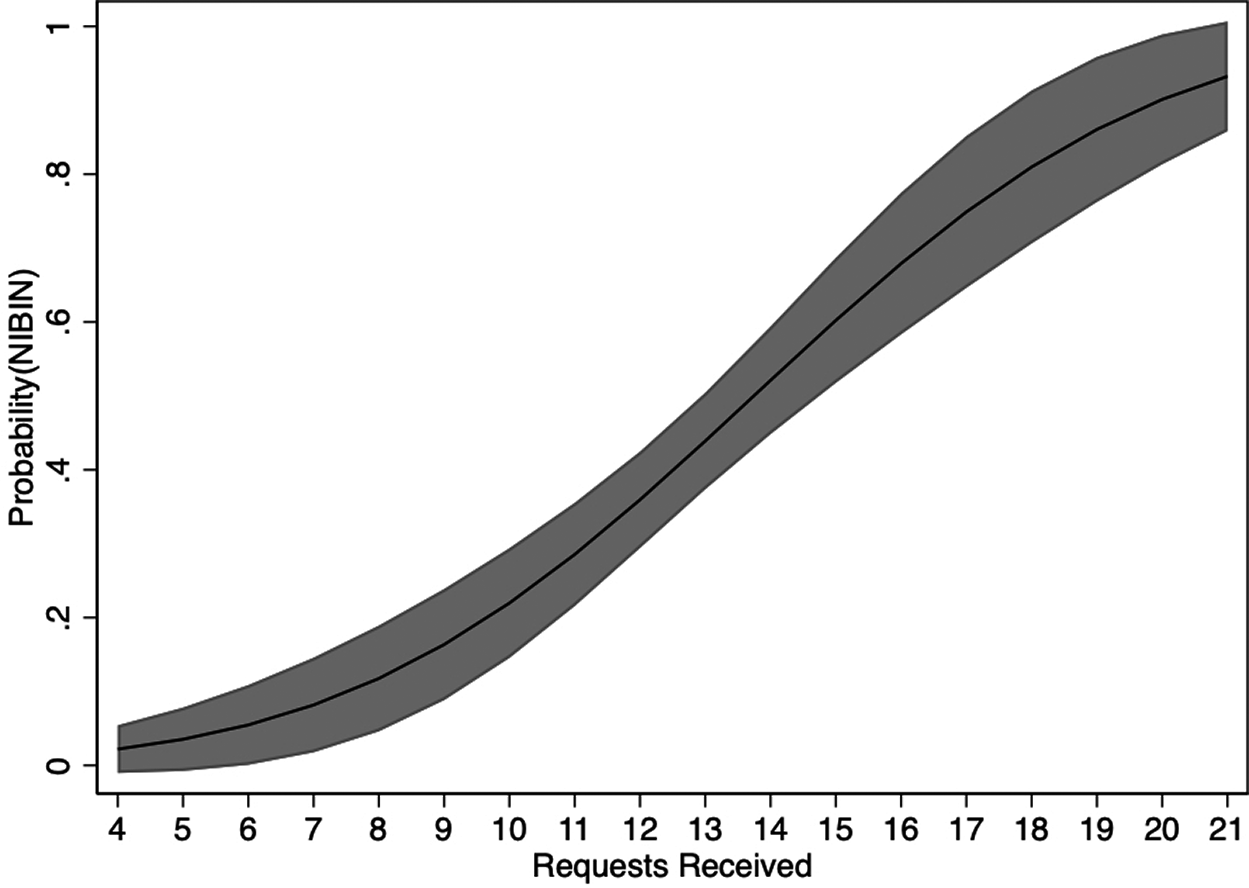

For 2014, the probit equation indicates that the probability of use is positively associated with more requests received (a measure of “demand pull”). Figure 2 shows the estimated effect of Requests Received on the predicted probability that a laboratory reports the use of NIBIN in 2014. The predicted probability rises from almost zero to about 0.90 as Requests Received increases from its minimum to its maximum value.

Figure 2. Estimated impact of requests received, 2014.

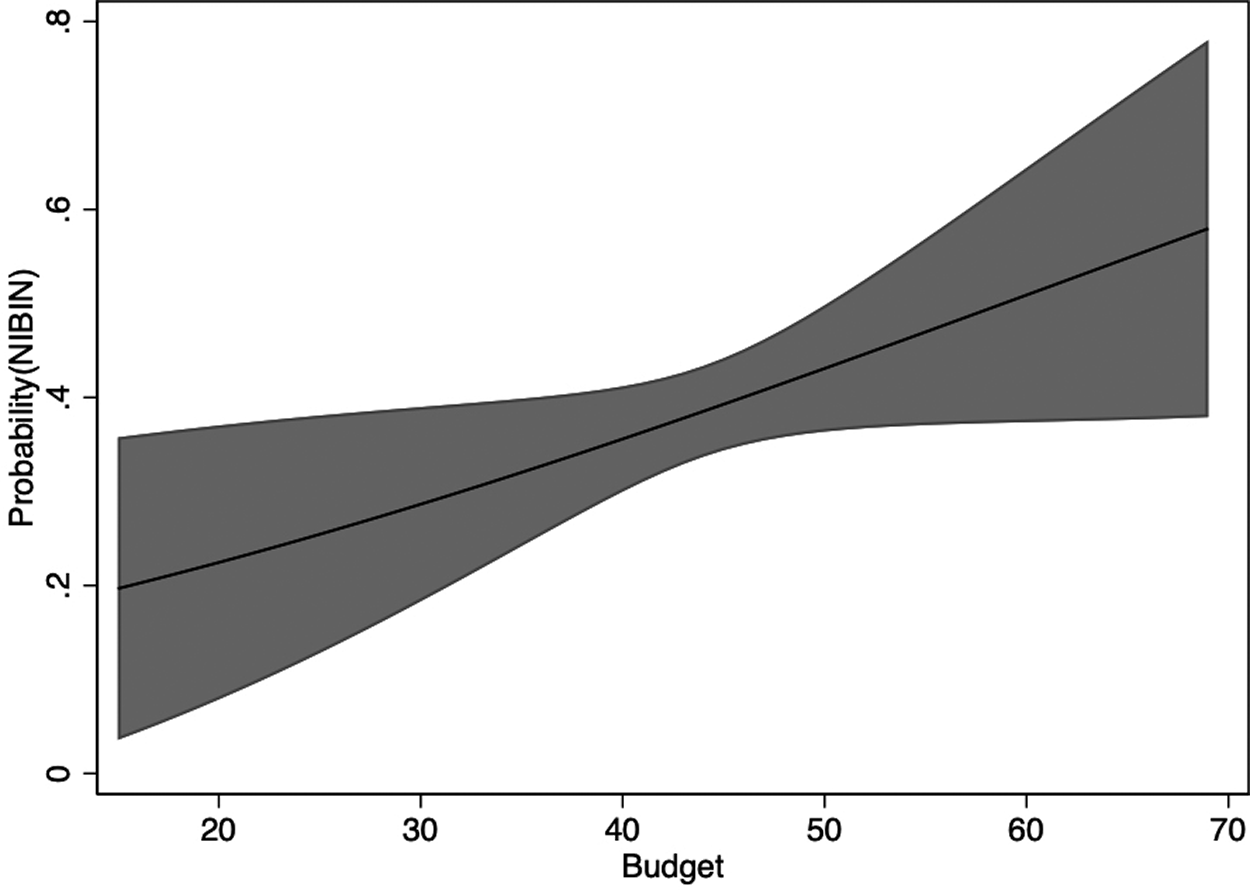

The 2014 data also reveal a different situation regarding the probability of use in agencies with larger budgets. Figure 3 shows the estimated effect of Budget on the predicted probability of NIBIN use. The effect of this variable appears weaker than in the 2009 data (although it remains significantly different from zero at the 0.05 level): the predicted probability now rises from just under 0.20 at the variable’s minimum to under 0.60 at its maximum value.

Figure 3. Estimated impact of budget, 2014.

These are the primary effects in the 2014 model. As with Table 2, there is an unexpected and sizeable effect of having multiple laboratories: having Multiple Labs decreases the probability of NIBIN use by just over 0.14, a larger effect than in 2009.

Perhaps more interestingly, in 2014 there is a sizeable positive effect for having also reported the use of a generic “expert system”. Respondents who reported this (the variable Expert System, a measure of “technology push”) were about 0.13 more likely (in probability terms) to also report the use of NIBIN. This is some evidence for a perceptual effect of technology awareness, although, since NIBIN is itself an expert system, it is intriguing that this effect is not larger.

6. Conclusion

In this article, our goal is to offer statistical evidence from a large sample of similar government agencies about factors associated with the uneven distribution of the delegation of important decisions to an expert system. These organizations are geographically dispersed throughout the United States and so are situated within different governance and state arrangements; we also observe these deployments at two points in time. Our statistical model is guided by a strong theory of innovation rooted in the push-pull-capabilities framework (Dosi, Reference Dosi1982; Clausen et al., Reference Clausen, Demircioglu and Alsos2020). We focus on drivers of the use of expert systems that we consider grounded in theoretical and practical foundations. Technology-push and demand-pull factors, along with organizational capabilities, have real-world analogs: the task environment, network attributes, and fungible assets (like budgets). Our statistical results indicate that use is associated with common, observable factors that are central to the lives of those charged with implementing these kinds of tools in government.

Our results also tell us about the specific use of expert systems given general awareness, about early and late adoption, and about how different factors contribute to the likelihood of use at different points in time. The use of these tools is uneven, but models like the ones presented here can help uncover why distribution varies across organizations and geography. Our evidence is indicative rather than causal, given the difficulty of causal inference in any practice setting; because we cannot randomize these factors across real-world crime laboratories, future analyses will be needed to help the research community better understand the prospects for such tools. Even if we could randomize, Dosi’s framework should make it clear that any given agency has its own unique path in these data.

Two themes warrant special emphasis. First, while expert systems have long been held out as progenitors for algorithms in government, we expect that the adoption and use of other algorithms in real-world public agencies will likely take a novel path. As Buchanan and Smith noted in 1988, “expert systems provide important feedback to the science about the strengths and limitations of those (artificial intelligence) methods” (Buchanan and Smith, Reference Buchanan and Smith1988, 23). We expect the same to be true for the organizational adoption and use of such tools: it will depend on the factors underpinning the innovation process. Whether or not we want to recognize it, the tools and their algorithms are already here. In 2009 many crime laboratories used NIBIN; few knew it is an expert system.

The second theme is more telling. We know that crime laboratories are largely ignored in the research literature on public agencies. Yet the laboratories as government agencies, and even more so police in general, are at the forefront of technology adoption. These agencies are pushing the boundaries. REBES was implemented four decades ago. NIBIN was established in 1999. While both happened long after the original Dartmouth conference, REBES and NIBIN are just two examples of a wide array of technology implementations in policing. ShotSpotter listens to communities for shots fired; predictive policing is now common.

We recognize that these themes bear on the general sense that public agencies are neither willing nor early adopters and users of emerging technologies. Fewer than half of the organizations profiled here reported using an expert system known to many viewers of American television police dramas. While crime scene investigators may appear technologically savvy, and indeed some are, they can be simultaneously less savvy than Silicon Valley startups and more savvy than other government agencies. This is why the distribution of a particular decision support technology within government, across agencies, geographies, public problems, or time, may be even more informative than the relative level of use when compared to the private sector. In this practice context, uneven use means uneven justice.

While the growing literature on randomized controlled trials (RCTs) for assessing the effectiveness of a technology like expert systems plays an important role, the best intentions in policy analysis and evaluation are often undone by the reality of policy implementation and administration. As such, it is helpful to know that factors consistent with broader theories of innovation are also present in a practice environment as narrow as the use of ballistics imaging technology. Moreover, across the very few push-pull-capabilities applications to the use of specific technologies that have appeared since Clausen et al. (Reference Clausen, Demircioglu and Alsos2020), demand-pull factors like the agency’s task environment and capabilities factors like its budget are common culprits in the story behind differential take-up. While RCTs remain primary evidence about effectiveness, Dosi’s factors reign supreme in driving the non-random assignment of such tools.

It is highly unlikely we will roll back such innovations. We can do better at implementing them, though, and the first step is understanding where, when, and under what conditions they have been implemented. This article contributes to that goal, and we hope it provides insight for future studies of other policing innovations that are part of the next machine age.

More importantly, we believe this paper pushes our understanding of such innovations forward by joining together a notable empirical case and a strong theory of innovation to provide broad evidence about the historical utilization of expert systems as algorithms in public sector applications. This connection has been a missing piece in the puzzle for better understanding those prospects in public administration settings. We hope that our approach spurs the adoption of the quantitative analysis of many different organizations working in many different geographic settings under many different constraints as a way of enriching the important understandings drawn from the world of case analysis. The world of algorithms in government is already rich and varied—and so too can be the studies we pursue for better understanding of their dynamics.

Data availability statement

The data that support the findings of this study are openly available in the Inter-university Consortium for Political and Social Research (ICPSR) repository at https://www.icpsr.umich.edu/web/ICPSR/series/258. The 2009 data are located in ICPSR 34340. The 2014 data are located in ICPSR 36759.

Author contributions

Andrew B. Whitford—Conceptualization, Data Curation, Investigation, Methodology, Supervision, Visualization, Writing. Anna M. Whitford—Conceptualization, Data Curation, Investigation, Writing.

Funding statement

This work received no specific grant from any funding agency, commercial or not-for-profit sectors.

Competing interest

The authors declare that no competing interests exist.
