1. Introduction

In the spatial Johnson–Mehl growth model, seeds arrive at random times $t_i$, $i \in \mathbb{N}$, at random locations $x_i$, $i \in \mathbb{N}$, in $\mathbb{R}^d$, according to a Poisson process $(x_i,t_i)_{i \in \mathbb{N}}$ on $\mathbb{R}^d \times \mathbb{R}_+$, where $\mathbb{R}_+\,:\!=\,[0,\infty)$. Once a seed is born at time t, it begins to form a cell by growing radially in all directions at a constant speed $v\geq 0$, so that by time $t^{\prime}$ it occupies the ball of radius $v(t'-t)$. The parts of the space claimed by the seeds form the so-called Johnson–Mehl tessellation; see [Reference Chiu, Stoyan, Kendall and Mecke7] and [Reference Okabe, Boots, Sugihara and Chiu16]. This is a generalization of the classical Voronoi tessellation, which is obtained if all births occur simultaneously at time zero.

The study of such birth–growth processes started with the work of Kolmogorov [Reference Kolmogorov11] to model crystal growth in two dimensions. Since then, this model has seen applications in various contexts, such as phase transition kinetics, polymers, ecological systems, and DNA replications, to name a few; see [Reference Bollobás and Riordan4, Reference Chiu, Stoyan, Kendall and Mecke7, Reference Okabe, Boots, Sugihara and Chiu16] and references therein. A central limit theorem for the Johnson–Mehl model with inhomogeneous arrivals of the seeds was obtained in [Reference Chiu and Quine5].

Variants of the classical spatial birth–growth model can be found, sometimes as a particular case of other models, in many papers. Among them, we mention [Reference Baryshnikov and Yukich2] and [Reference Penrose and Yukich17], where the birth–growth model appears as a particular case of a random sequential packing model, and [Reference Schreiber and Yukich20], which studied a variant of the model with non-uniform deterministic growth patterns. The main tools rely on the concept of stabilization by considering regions where the appearance of new seeds influences the functional of interest.

In this paper, we consider a generalization of the Johnson–Mehl model by introducing random growth speeds for the seeds. This gives rise to many interesting features in the model, most importantly, long-range interactions if the speed can take arbitrarily large values with positive probability. Therefore, the model with random speed is no longer stabilizing in the classical sense of [Reference Lachièze-Rey, Schulte and Yukich13] and [Reference Penrose and Yukich18], since distant points may influence the growth pattern if their speeds are sufficiently high. It should be noted that, even in the constant-speed setting, we substantially improve and extend limit theorems obtained in [Reference Chiu and Quine5].

We consider a birth–growth model, determined by a Poisson process $\eta$ in $\mathbb{X}\,:\!=\,\mathbb{R}^d\times\mathbb{R}_+\times\mathbb{R}_+$ with intensity measure $\mu\,:\!=\,\lambda\otimes\theta\otimes\nu$, where $\lambda$ is the Lebesgue measure on $\mathbb{R}^d$, $\theta$ is a non-null locally finite measure on $\mathbb{R}_+$, and $\nu$ is a probability distribution on $\mathbb{R}_+$ with $\nu(\{0\})<1$. Each point ${\boldsymbol{x}}$ of this point process $\eta$ has three components $(x,t_x,v_x)$, where $v_x \in \mathbb{R}_+$ denotes the random speed of a seed born at location $x \in \mathbb{R}^d$ and whose growth commences at time $t_x\in \mathbb{R}_+$. In a given point configuration, a point ${\boldsymbol{x}}\,:\!=\,(x,t_x,v_x)$ is said to be exposed if there is no other point $(y,t_y,v_y)$ in the configuration with $t_y <t_x$ and $\|x-y\| \le v_y(t_x-t_y)$, where $\|\cdot\|$ denotes the Euclidean norm. Notice that the event that a point $(x,t_x,v_x)\in \eta$ is exposed depends only on the point configuration in the region

\begin{equation} L_{x,t_x}\,:\!=\,\big\{(y,t_y,v_y)\in\mathbb{X} \;:\; t_y \le t_x,\ \|x-y\| \le v_y(t_x-t_y)\big\}. \tag{1.1} \end{equation}

Namely, ${\boldsymbol{x}}$ is exposed if and only if $\eta$ has no points (apart from ${\boldsymbol{x}}$) in $L_{x,t_x}$.
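
The exposure rule is easy to check on a finite configuration. The following Python sketch is only an illustration and not part of the argument of the paper: the function names `is_exposed` and `count_exposed` and the three-seed configuration are hypothetical, and a finite list of tuples stands in for a realization of $\eta$.

```python
import math


def is_exposed(point, config):
    """Check the exposure rule for `point` = (x, t_x, v_x) within `config`.

    The point is exposed if no other point (y, t_y, v_y) of the configuration
    satisfies t_y < t_x and ||x - y|| <= v_y * (t_x - t_y).
    """
    x, t_x, _ = point  # the speed of the point itself is irrelevant here
    for other in config:
        if other is point:
            continue
        y, t_y, v_y = other
        if t_y < t_x and math.dist(x, y) <= v_y * (t_x - t_y):
            return False
    return True


def count_exposed(config):
    """Number of exposed points in a finite configuration."""
    return sum(1 for p in config if is_exposed(p, config))


# Hypothetical configuration in R^2: (location, birth time, speed).
seeds = [
    ((0.0, 0.0), 0.0, 1.0),  # earliest seed, hence exposed
    ((0.5, 0.0), 1.0, 2.0),  # shaded: the first seed has radius 1 at time 1
    ((3.0, 0.0), 2.0, 0.5),  # exposed: at time 2 both earlier balls have radius 2
]

print(count_exposed(seeds))
```

Note that the second seed is not exposed, yet its ball is still taken into account when the third seed is tested, in line with the absence of exclusion in the model.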

The growth frontier of the model can be defined as the random field

\begin{equation} \min_{(x,t_x,v_x)\in\eta}\Big(t_x+\frac{1}{v_x}\|y-x\|\Big),\qquad y\in\mathbb{R}^d. \tag{1.2} \end{equation}

This is an example of an extremal shot-noise process; see [Reference Heinrich and Molchanov10]. Its value at a point $y \in \mathbb{R}^d$ corresponds to a seed from $\eta$ whose growth region covers y first. It should be noted here that this covering seed need not be an exposed one. In other words, because of random speeds, it may happen that the cell grown from a non-exposed seed shades a subsequent seed which would be exposed otherwise. This excludes possible applications of our model with random growth speed to crystallization, where a more natural model would be to not allow a non-exposed seed to affect any future seeds. But this creates a causal chain of influences that seems quite difficult to study with the currently known methods of stabilization for Gaussian approximation.
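
A minimal Python sketch of the growth frontier on a finite configuration may help here. It assumes, in line with the description above, that the value of the frontier at a location y is the first time at which the growth of some seed covers y, that is, the minimum of $t_x+\|y-x\|/v_x$ over the seeds. The helper `frontier` and the seed list are hypothetical.

```python
import math


def frontier(y, config):
    """Return (first covering time, index of the covering seed) at location y."""
    best_time, best_index = math.inf, None
    for i, (x, t_x, v_x) in enumerate(config):
        dist = math.dist(x, y)
        if dist == 0.0:
            arrival = t_x  # a seed covers its own location at birth
        elif v_x > 0.0:
            arrival = t_x + dist / v_x
        else:
            arrival = math.inf  # a seed with zero speed never grows
        if arrival < best_time:
            best_time, best_index = arrival, i
    return best_time, best_index


# Hypothetical configuration in R^2: (location, birth time, speed).
seeds = [
    ((0.0, 0.0), 0.0, 1.0),  # exposed
    ((0.5, 0.0), 1.0, 2.0),  # not exposed: covered by seed 0 at time 0.5
    ((3.0, 0.0), 2.0, 0.5),  # exposed
]

# At y = (2, 0) the arrival times of the three seeds are 2.0, 1.75 and 4.0.
print(frontier((2.0, 0.0), seeds))
```

In this toy configuration the location $(2,0)$ is first covered by seed 1, which is itself not exposed. This is the situation described in the text, where the covering seed need not be an exposed one.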

Nonetheless, models such as ours are natural in telecommunication applications, with the speed playing the role of the weight or strength of a particular transmission node, where the growth frontier defined above can be used as a variant of the additive signal-to-interference model from [Reference Baccelli and Błaszczyszyn1, Chapter 5]. Furthermore, similar models can be applied in the ecological or epidemiological context, where a non-visible event influences appearances of others. Suppose we have a barren land and a drone/machine is planting seeds from a mixture of plant species at random times and random locations for reforestation. Each seed, after falling on the ground, starts growing a bush around it at a random speed depending on its species. If a new seed falls on a part of the ground that is already covered in bushes, it is still allowed to form its own bush; i.e., there is no exclusion. Now the number of exposed points in our model above translates to the number of seeds that start a bush on a then barren piece of land, rather than starting on a piece of ground already covered in bushes. This, in some sense, can explain the efficiency of the reforestation process, i.e., what fraction of the seeds were planted on barren land, in contrast to being planted on already existing bushes.

Given a measurable weight function $h\;:\;\mathbb{R}^d \times \mathbb{R}_+ \to \mathbb{R}_+$, the main object of interest in this paper is the sum of h over the space–time coordinates $(x,t_x)$ of the exposed points in $\eta$. These can be defined as those points $(y,t_y)$ where the growth frontier defined at (1.2) has a local minimum (see Section 2 for a precise definition). Our aim is to provide sufficient conditions for Gaussian convergence of such sums. A standard approach for proving Gaussian convergence for such statistics relies on stabilization theory [Reference Baryshnikov and Yukich2, Reference Eichelsbacher, Raič and Schreiber8, Reference Penrose and Yukich17, Reference Schreiber and Yukich20]. While in the stabilization literature one commonly assumes that the so-called stabilization region is a ball around a given reference point, the region $L_{x,t_x}$ is unbounded and it seems that it is not expressible as a ball around ${\boldsymbol{x}}$ in some different metric. Moreover, our stabilization region is set to be empty if ${\boldsymbol{x}}$ is not exposed.

The main challenge when working with random unbounded speeds of growth is that there are possibly very long-range interactions between seeds. This makes the use of balls as stabilization regions vastly suboptimal and necessitates the use of regions of a more general shape. In particular, we only assume that the random growth speed in our model has finite moment of order 7d (see the assumption (C) in Section 2), and this allows for some power-tailed distributions for the speed.
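
To illustrate which power tails are compatible with the moment assumption, consider (as an assumption made only for this example) a Pareto speed distribution with tail index $\alpha$ and scale 1. Its moment of order u equals $\alpha/(\alpha-u)$ for $u<\alpha$ and is infinite otherwise, so a finite moment of order 7d requires $\alpha>7d$. The function name `pareto_moment` is hypothetical.

```python
import math


def pareto_moment(alpha, u, scale=1.0):
    """Moment of order u of a Pareto law on [scale, inf).

    The density is alpha * scale**alpha / v**(alpha + 1); the moment is
    finite only for u < alpha.
    """
    if u >= alpha:
        return math.inf
    return alpha * scale ** u / (alpha - u)


d = 2            # dimension used for the illustration
order = 7 * d    # moment order required by the assumption on the speed
for alpha in (10.0, 14.0, 15.0, 20.0):
    print(alpha, pareto_moment(alpha, order))
```

For $d=2$ the tail indices 10 and 14 give an infinite moment of order 14, while 15 and 20 give a finite one.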

The recent work [Reference Bhattacharjee and Molchanov3] introduced a new notion of region-stabilization which allows for more general regions than balls and, building on the seminal work [Reference Last, Peccati and Schulte14], provides bounds on the rate of Gaussian convergence for certain sums of region-stabilizing score functions. We will utilize this to derive bounds on the Wasserstein and Kolmogorov distances, defined below, between a suitably normalized sum of weights and the standard Gaussian distribution. For real-valued random variables X and Y, the Wasserstein distance between their distributions is given by

\begin{equation*} d_{\textrm{W}}(X,Y)\,:\!=\,\sup_{f \in \operatorname{Lip}_1} |\mathbb{E} f(X) - \mathbb{E} f(Y)|, \end{equation*}

where $\operatorname{Lip}_1$ denotes the class of all Lipschitz functions $f\;:\; \mathbb{R} \to \mathbb{R}$ with Lipschitz constant at most one. The Kolmogorov distance between the distributions is given by

\begin{equation*} d_{\textrm{K}}(X,Y)\,:\!=\,\sup_{t \in \mathbb{R}} |\mathbb{P}(X \le t) - \mathbb{P}(Y \le t)|. \end{equation*}
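
For intuition, both distances can be evaluated for empirical distributions. The sketch below is an illustration under stated assumptions and plays no role in the proofs: `kolmogorov_to_normal` computes the Kolmogorov distance between the empirical distribution of a sample and the standard Gaussian law, and `wasserstein_equal_size` computes the Wasserstein distance between the empirical distributions of two samples of equal size, using the fact that on the real line the optimal coupling matches order statistics. All function names are hypothetical.

```python
import math


def std_normal_cdf(t):
    """Distribution function of the standard Gaussian law."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def kolmogorov_to_normal(sample):
    """Sup-distance between the empirical CDF of `sample` and the Gaussian CDF."""
    xs = sorted(sample)
    n = len(xs)
    worst = 0.0
    for i, x in enumerate(xs):
        phi = std_normal_cdf(x)
        # the empirical CDF jumps from i/n to (i+1)/n at x
        worst = max(worst, abs((i + 1) / n - phi), abs(i / n - phi))
    return worst


def wasserstein_equal_size(a, b):
    """Wasserstein distance between two empirical laws with equally many atoms."""
    if len(a) != len(b):
        raise ValueError("samples must have the same size")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


print(kolmogorov_to_normal([-1.0, 0.0, 1.0]))
print(wasserstein_equal_size([0.0, 1.0], [1.0, 2.0]))
```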

The rest of the paper is organized as follows. In Section 2, we describe the model and state our main results. In Section 3, we prove a result providing necessary upper and lower bounds for the variance of our statistic of interest. Section 4 presents the proofs of our quantitative bounds.

2. Model and main results

Recall that we work in the space $\mathbb{X}\,:\!=\,\mathbb{R}^d\times\mathbb{R}_+\times\mathbb{R}_+$, $d \in \mathbb{N}$, with the Borel $\sigma$-algebra. The points from $\mathbb{X}$ are written as ${\boldsymbol{x}}\,:\!=\,(x,t_x,v_x)$, so that ${\boldsymbol{x}}$ designates a seed born in position x at time $t_x$, which then grows radially in all directions with speed $v_x$. For ${\boldsymbol{x}}\in \mathbb{X}$, the set

\begin{equation*} G_{\boldsymbol{x}}\,:\!=\,\big\{(y,t_y,v_y)\in\mathbb{X} \;:\; t_y \ge t_x,\ \|y-x\| \le v_x(t_y-t_x)\big\} \end{equation*}

is the growth region of the seed ${\boldsymbol{x}}$. Denote by $\textbf{N}$ the family of $\sigma$-finite counting measures $\mathcal{M}$ on $\mathbb{X}$ equipped with the smallest $\sigma$-algebra $\mathscr{N}$ such that the maps $\mathcal{M} \mapsto \mathcal{M}(A)$ are measurable for all Borel A. We write ${\boldsymbol{x}}\in\mathcal{M}$ if $\mathcal{M}(\{{\boldsymbol{x}}\})\geq 1$. For $\mathcal{M} \in \textbf{N}$, a point ${\boldsymbol{x}} \in \mathcal{M}$ is said to be exposed in $\mathcal{M}$ if it does not belong to the growth region of any other point ${\boldsymbol{y}}\in\mathcal{M}$, ${\boldsymbol{y}} \neq {\boldsymbol{x}}$. Note that the property of being exposed is not influenced by the speed component of ${\boldsymbol{x}}$.

The influence set $L_{\boldsymbol{x}}=L_{x,t_x}$, ${\boldsymbol{x}} \in \mathbb{X}$, defined at (1.1), is exactly the set of points that were born before time $t_x$ and which at time $t_x$ occupy a region that covers the location x, thereby shading it. Note that ${\boldsymbol{y}}\in L_{\boldsymbol{x}}$ if and only if ${\boldsymbol{x}}\in G_{\boldsymbol{y}}$. Clearly, a point ${\boldsymbol{x}}\in\mathcal{M}$ is exposed in $\mathcal{M}$ if and only if $\mathcal{M} (L_{\boldsymbol{x}}\setminus\{{\boldsymbol{x}}\})=0$. We write $(y,t_y,v_y)\preceq (x,t_x)$ or ${\boldsymbol{y}} \preceq {\boldsymbol{x}}$ if ${\boldsymbol{y}}\in L_{x,t_x}$ (recall that the speed component of ${\boldsymbol{x}}$ is irrelevant in such a relation), and so ${\boldsymbol{x}}$ is not an exposed point with respect to $\delta_{{\boldsymbol{y}}}$, where $\delta_{{\boldsymbol{y}}}$ denotes the Dirac measure at ${\boldsymbol{y}}$.

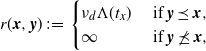

For $\mathcal{M} \in \textbf{N}$ and ${\boldsymbol{x}} \in \mathcal{M}$, define

\begin{equation*} H_{\boldsymbol{x}}(\mathcal{M})\,:\!=\,\unicode{x1d7d9}\{{\boldsymbol{x}} \text{ is exposed in } \mathcal{M}\} = \unicode{x1d7d9}\{\mathcal{M}(L_{\boldsymbol{x}}\setminus\{{\boldsymbol{x}}\})=0\}. \end{equation*}

A generic way to construct an additive functional on the exposed points is to consider the sum of weights of these points, where each exposed point ${\boldsymbol{x}}$ contributes a weight $h({\boldsymbol{x}})$ for some measurable $h\;: \; \mathbb{X} \to \mathbb{R}_+$. In the following we consider weight functions $h({\boldsymbol{x}})$ which are products of two measurable functions $h_1\; :\;\mathbb {R}^d \to \mathbb{R}_+$ and $h_2\;: \; \mathbb{R}_+ \to \mathbb{R}_+$ of the locations and birth times, respectively, of the exposed points. In particular, we let $h_1(x) = \unicode{x1d7d9}_W(x)=\unicode{x1d7d9}\{x \in W\}$ for a window $W \subset \mathbb{R}^d$, and $h_2(t) = \unicode{x1d7d9}\{t \le a\}$ for $a \in (0,\infty)$. Then

\begin{equation} F(\mathcal{M})\,:\!=\,\sum_{{\boldsymbol{x}} \in \mathcal{M}} \unicode{x1d7d9}\{x \in W\}\,\unicode{x1d7d9}\{t_x \le a\}\,\unicode{x1d7d9}\{\mathcal{M}(L_{\boldsymbol{x}}\setminus\{{\boldsymbol{x}}\})=0\} \tag{2.1} \end{equation}

is the number of exposed points from $\mathcal{M}$ located in W and born before time a. Note here that when we add a new point ${\boldsymbol{y}}=(y,t_y,v_y) \in \mathbb{R}^d \times \mathbb{R}_+ \times \mathbb{R}_+$ to a configuration $\mathcal{M} \in \textbf{N}$ not containing it, the change in the value of F is not a function of only ${\boldsymbol{y}}$ and some local neighborhood of it, but rather depends on points in the configuration that might be very far away. Indeed, for ${\boldsymbol{y}} \notin \mathcal{M}$ we have

\begin{equation*} F(\mathcal{M}+\delta_{\boldsymbol{y}})-F(\mathcal{M}) = \unicode{x1d7d9}\{y\in W\}\,\unicode{x1d7d9}\{t_y\le a\}\,\unicode{x1d7d9}\{\mathcal{M}(L_{\boldsymbol{y}})=0\} - \sum_{{\boldsymbol{x}}\in\mathcal{M}} \unicode{x1d7d9}\{x\in W\}\,\unicode{x1d7d9}\{t_x\le a\}\,\unicode{x1d7d9}\{\mathcal{M}(L_{\boldsymbol{x}}\setminus\{{\boldsymbol{x}}\})=0\}\,\unicode{x1d7d9}\{{\boldsymbol{y}}\in L_{\boldsymbol{x}}\}; \end{equation*}

that is, F may increase by one when ${\boldsymbol{y}}$ is exposed in $\mathcal{M} + \delta_{{\boldsymbol{y}}}$, while simultaneously, any point ${\boldsymbol{x}}\in\mathcal{M}$ which was previously exposed in $\mathcal{M}$ may not be so anymore after the addition of ${\boldsymbol{y}}$, if it happens to fall in the growth region $G_{\boldsymbol{y}}$ of ${\boldsymbol{y}}$. This necessitates the use of region-stabilization.
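
The long-range effect of adding a point can be seen on a toy configuration. The Python sketch below is a hedged illustration only: it assumes that F counts the exposed points located in W and born no later than a, as described above, and the names `count_F` and `in_window`, the one-dimensional window $[-20,20]$, and the configuration are all hypothetical.

```python
import math


def is_exposed(point, config):
    """True if no other point was born strictly earlier and covers `point`."""
    x, t_x, _ = point
    for other in config:
        if other is point:
            continue
        y, t_y, v_y = other
        if t_y < t_x and math.dist(x, y) <= v_y * (t_x - t_y):
            return False
    return True


def count_F(config, in_window, a):
    """Number of exposed points located in the window and born no later than a."""
    return sum(
        1
        for p in config
        if in_window(p[0]) and p[1] <= a and is_exposed(p, config)
    )


def in_window(x):
    """Hypothetical window W = [-20, 20] in dimension one."""
    return -20.0 <= x[0] <= 20.0


# Two seeds inside W, born simultaneously, so neither shades the other.
config = [((0.0,), 1.0, 1.0), ((10.0,), 1.0, 1.0)]
# A distant but very fast seed born earlier, located outside W.
far_fast = ((100.0,), 0.0, 200.0)

before = count_F(config, in_window, a=5.0)
after = count_F(config + [far_fast], in_window, a=5.0)
print(before, after, after - before)
```

Here the added seed lies far outside the window, yet by time 1 its ball of radius 200 covers both seeds in W, so the count drops from 2 to 0. No ball of fixed radius around the added point would capture this change.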

Recall that $\eta$ is a Poisson process in $\mathbb{X}$ with intensity measure $\mu$, being the product of the Lebesgue measure $\lambda$ on $\mathbb{R}^d$, a non-null locally finite measure $\theta$ on $\mathbb{R}_+$, and a probability measure $\nu$ on $\mathbb{R}_+$ with $\nu(\{0\})<1$. Note that $\eta$ is a simple random counting measure. The main goal of this paper is to find sufficient conditions for Gaussian convergence of $F\equiv F(\eta)$ as defined at (2.1). The functional $F(\eta)$ is a region-stabilizing functional, in the sense of [Reference Bhattacharjee and Molchanov3], and can be represented as $F(\eta)=\sum_{{\boldsymbol{x}} \in \eta} \xi({\boldsymbol{x}}, \eta)$, where the score function $\xi$ is given by

\begin{equation} \xi({\boldsymbol{x}},\mathcal{M})\,:\!=\,\unicode{x1d7d9}\{x\in W\}\,\unicode{x1d7d9}\{t_x\le a\}\,\unicode{x1d7d9}\{\mathcal{M}(L_{\boldsymbol{x}}\setminus\{{\boldsymbol{x}}\})=0\},\qquad {\boldsymbol{x}}\in\mathcal{M}, \tag{2.2} \end{equation}

with the region of stabilization being $L_{x,t_x}$ when ${\boldsymbol{x}}$ is an exposed point (see Section 4 for more details). As a convention, let $\xi({\boldsymbol{x}},\mathcal{M})=0$ if $\mathcal{M}=0$ or if ${\boldsymbol{x}}\notin\mathcal{M}$. Theorem 2.1 in [Reference Bhattacharjee and Molchanov3] yields ready-to-use bounds on the Wasserstein and Kolmogorov distances between F, suitably normalized, and a standard Gaussian random variable N upon validating Equation (2.1) and the conditions (A1) and (A2) therein. We consistently follow the notation of [Reference Bhattacharjee and Molchanov3].

Now we are ready to state our main results. First, we list the necessary assumptions on our model. In the sequel, we drop the $\lambda$ in Lebesgue integrals and simply write ${\textrm{d}} x$ instead of $\lambda({\textrm{d}} x)$. Our assumptions are as follows:

- (A) The window W is compact convex with non-empty interior.

- (B) For all $x>0$,

  \begin{equation*} \int_0^\infty e^{-x \Lambda(t)} \, \theta({\textrm{d}} t)<\infty, \end{equation*}

  where

  \begin{equation} \Lambda(t)\,:\!=\,\omega_d\int_0^t (t-s)^d \theta({\textrm{d}} s) \tag{2.3} \end{equation}

  and $\omega_d$ is the volume of the d-dimensional unit Euclidean ball.

- (C) The moment of $\nu$ of order 7d is finite, i.e., $\nu_{7d}<\infty$, where

  \begin{equation*}\nu_{u}\,:\!=\,\int_0^\infty v^u \nu({\textrm{d}} v),\quad u\geq 0. \end{equation*}

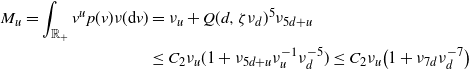

Note that the function $\Lambda(t)$ given at (2.3) is, up to a constant, the measure of the influence set of any point ${\boldsymbol{x}} \in \mathbb{X}$ with time component $t_x=t$ (the measure of the influence set does not depend on the location and speed components of ${\boldsymbol{x}}$). Indeed, the $\mu$-content of $L_{x,t_x}$ is given by

\begin{align} \mu(L_{x,t_x}) &=\int_{0}^\infty \int_0^{t_x} \int_{\mathbb{R}^d} \unicode{x1d7d9}_{y \in B_{v_y(t_x-t_y)}(x)} \,{\textrm{d}} y\,\theta({\textrm{d}} t_y)\nu({\textrm{d}} v_y)\\ & =\int_0^\infty \nu({\textrm{d}} v_y) \int_0^{t_x} \omega_d v_y^d(t_x-t_y)^d \theta({\textrm{d}} t_y)=\nu_{d} \Lambda(t_x),\end{align}

where $B_r(x)$ denotes the closed d-dimensional Euclidean ball of radius r centered at $x \in\mathbb{R}^d$. In particular, if $\theta$ is the Lebesgue measure on $\mathbb{R}_+$, then $\Lambda(t)=\omega_d\,t^{d+1}/(d+1)$.
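
The closed form for the Lebesgue case can be checked numerically. The sketch below is an illustration under stated assumptions: it approximates the integral in (2.3) with a midpoint rule for $\theta$ equal to the Lebesgue measure and compares it with $\omega_d\,t^{d+1}/(d+1)$, using the standard formula $\omega_d=\pi^{d/2}/\Gamma(d/2+1)$. The function names and the number of quadrature steps are hypothetical choices.

```python
import math


def unit_ball_volume(d):
    """Volume of the d-dimensional unit Euclidean ball."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


def lambda_lebesgue_numeric(t, d, steps=10_000):
    """Midpoint-rule approximation of omega_d * int_0^t (t - s)^d ds."""
    h = t / steps
    integral = sum((t - (k + 0.5) * h) ** d for k in range(steps)) * h
    return unit_ball_volume(d) * integral


def lambda_lebesgue_closed(t, d):
    """Closed form omega_d * t^(d+1) / (d+1) for the Lebesgue measure."""
    return unit_ball_volume(d) * t ** (d + 1) / (d + 1)


t, d = 1.5, 2
print(lambda_lebesgue_numeric(t, d), lambda_lebesgue_closed(t, d))
```

Multiplying either value by the moment $\nu_d$ of the speed distribution gives the $\mu$-content of the influence set of a point with time component t.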

The following theorem is our first main result. We denote by $(V_j(W))_{0 \le j \le d }$ the intrinsic volumes of W (see [Reference Schneider19, Section 4.1]), and let

\begin{equation*} V(W)\,:\!=\,\sum_{j=0}^{d} V_j(W). \end{equation*}

Theorem 2.1. Let $\eta$ be a Poisson process on $\mathbb{X}$ with intensity measure $\mu$ as above, such that the assumptions (A)–(C) hold. Then, for $F\,:\!=\,F(\eta)$ as in (2.1) with $a \in (0,\infty)$,

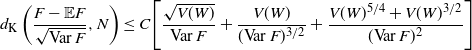

\begin{equation*} d_{\textrm{W}}\left(\frac{F - \mathbb{E} F}{\sqrt{\textrm{Var}\, F}},N\right) \le C \Bigg[\frac{\sqrt{V(W)}}{\textrm{Var}\, F} +\frac{V(W)}{(\textrm{Var}\, F)^{3/2}}\Bigg] \end{equation*}

and

\begin{equation*} d_{\textrm{K}}\left(\frac{F - \mathbb{E} F}{\sqrt{\textrm{Var}\, F}},N\right) \le C \Bigg[\frac{\sqrt{V(W)}}{\textrm{Var}\, F} +\frac{V(W)}{(\textrm{Var}\, F)^{3/2}} +\frac{V(W)^{5/4} + V(W)^{3/2}}{(\textrm{Var}\, F)^{2}}\Bigg] \end{equation*}

for a constant $C \in (0,\infty)$ which depends on a, d, the first 7d moments of $\nu$, and $\theta$.

To derive a quantitative central limit theorem from Theorem 2.1, a lower bound on the variance is needed. The following proposition provides general lower and upper bounds on the variance, which are then specialized for measures on $\mathbb{R}_+$ given by

\begin{equation} \theta({\textrm{d}} t)\,:\!=\,t^{\tau}\,{\textrm{d}} t,\qquad \tau\in(-1,\infty). \tag{2.5} \end{equation}

In the following, $t_1 \wedge t_2$ denotes $\min\{t_1,t_2\}$ for $t_1, t_2 \in \mathbb{R}$. For $a \in (0,\infty)$ and $\tau>-1$, define the function

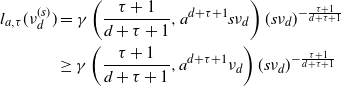

where $\gamma(p,z)\,:\!=\,\int_0^z t^{p-1}e^{-t}{\textrm{d}} t $ is the lower incomplete gamma function.

Proposition 2.1. Let the assumptions (A)–(C) be in force. For a Poisson process $\eta$ with intensity measure $\mu$ as above and $F\,:\!=\,F(\eta)$ as in (2.1),

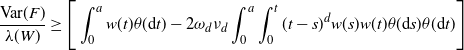

\begin{equation} \frac{\textrm{Var}(F)}{\lambda(W)} \ge \Bigg[\int_{0}^a w(t) \theta({\textrm{d}} t) - 2 \omega_d \nu_{d} \int_0^a \int_0^{t} (t-s)^d w(s) w(t) \theta({\textrm{d}} s) \theta({\textrm{d}} t)\Bigg] \end{equation}

and

\begin{multline} \frac{\textrm{Var}(F)}{\lambda(W)} \le \Bigg[2 \int_{0}^a w(t)^{1/2} \theta({\textrm{d}} t)\\ \quad + \omega_d^2 \nu_{2d} \int_{[0,a]^2} \int_{0}^{t_1 \wedge t_2} (t_1-s)^d (t_2-s)^d w(t_1)^{1/2} w(t_2)^{1/2} \theta({\textrm{d}} s) \theta^2({\textrm{d}}(t_1,t_2))\Bigg], \end{multline}

where

\begin{equation*} w(t)\,:\!=\,e^{-\nu_{d}\Lambda(t)}. \end{equation*}

If $\theta$ is given by (2.5), then

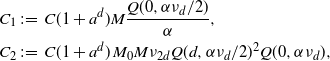

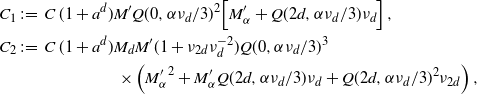

for constants $C_1, C_1', C_2$ depending on the dimension d and $\tau$, and $C_1,C_2>0$.

We remark here that the lower bound in (2.10) is useful only when $\tau \le d-1$. We believe that a positive lower bound still exists when $\tau>d-1$, even though our arguments in general do not apply for such $\tau$.

In the case of a deterministic speed v, Proposition 2.1 provides an explicit condition on $\theta$ ensuring that the variance scales like the volume of the observation window in the classical Johnson–Mehl growth model. The problem of finding such a condition, explicitly formulated in [Reference Chiu and Quine6, p. 754], arose in [Reference Chiu and Quine5], where asymptotic normality for the number of exposed seeds in a region, as the volume of the region approaches infinity, is obtained under the assumption that the variance scales properly. At that time, this had only been shown numerically for the case when $\theta$ is the Lebesgue measure and $d=1,2,3,4$. Subsequent papers [Reference Penrose and Yukich17, Reference Schreiber and Yukich20] derived the variance scaling for $\theta$ being the Lebesgue measure and some generalizations of it, but in a slightly different formulation of the model, in which seeds that do not appear in the observation window are automatically rejected and cannot influence the growth pattern in the region W.

It should be noted that it might also be possible to use [Reference Lachièze-Rey12, Theorem 1.2] to obtain a quantitative central limit theorem and variance asymptotics for statistics of the exposed points in a domain W which is the union of unit cubes around a subset of points in $\mathbb{Z}^d$. For this, one would need to check Assumption 1.1 from the cited paper, which ensures non-degeneracy of the variance, and a moment condition in the form of Equation (1.10) therein. It seems to us that checking Assumption 1.1 may be a challenging task and would involve further assumptions on the model, such as the one we also need in our Proposition 2.1. Controls on the long-range interactions would also be necessary to check [Reference Lachièze-Rey12, Equation (1.10)]. Thus, while this might indeed yield results similar to ours, the goal of the present work is to highlight the application of region-stabilization in this context, which in general is of a different nature from the methods in [Reference Lachièze-Rey12]. For example, the approach in [Reference Lachièze-Rey12] does not apply for Pareto-minimal points in a hypercube considered in [Reference Bhattacharjee and Molchanov3], since there is no polynomial decay in long-range interactions, while region-stabilization yields optimal rates for the Gaussian convergence.

The bounds in Theorem 2.1 can be specified under two different scenarios. When considering a sequence of weight functions, under suitable conditions Theorem 2.1 provides a quantitative central limit theorem for the corresponding functionals $(F_n)_{n \in \mathbb{N}}$. Keeping all other quantities fixed with respect to n, consider the sequence of non-negative location-weight functions on $\mathbb{R}^d$ given by $h_{1,n} = \unicode{x1d7d9}_{n^{1/d} W}$ for a fixed convex body $W \subset \mathbb{R}^d$ satisfying (A). In view of Proposition 2.1, this provides the following quantitative central limit theorem.

Theorem 2.2. Let the assumptions (A)–(C) be in force. For $n \in \mathbb{N}$ and $\eta$ as in Theorem 2.1, let $F_n\,:\!=\,F_n(\eta)$, where $F_n$ is defined as in (2.1) with a independent of n and $h_1=h_{1,n} = \unicode{x1d7d9}_{n^{1/d}W}$. Assume that $\theta$ and $\nu$ satisfy

where w(t) is given at (2.9). Then there exists a constant $C \in (0,\infty)$, depending on a, d, the first 7d moments of $\nu$, $\theta$, and W, such that

for all $n\in\mathbb{N}$. In particular, (2.11) is satisfied for $\theta$ given at (2.5) with $\tau \in ({-}1,d-1]$.

Furthermore, the bound on the Kolmogorov distance is of optimal order; i.e., when (2.11) holds, there exists a constant $0 < C' \le C$ depending only on a, d, the first 2d moments of $\nu$, $\theta$, and W, such that

When (2.11) is satisfied, Theorem 2.2 yields a central limit theorem for the number of exposed seeds born before time $a\in (0,\infty)$, with rate of convergence of order $n^{-1/2}$. This extends the central limit theorem for the number of exposed seeds from [Reference Chiu and Quine5] in several directions: the model is generalized to random growth speeds, there is no constraint of any kind on the shape of the window W except convexity, and a logarithmic factor is removed from the rate of convergence.
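To make the counted quantity concrete, the following sketch simulates the number of exposed seeds in the simplest setting we could think of: dimension $d=1$, window $W=[0,\text{length}]$, $\theta$ the Lebesgue measure on $[0,a]$, and a deterministic speed v. These choices, and the names `simulate_exposed_count` and `expected_exposed_count`, are our own assumptions for illustration. A seed ${\boldsymbol{x}}$ is counted as exposed when there is no other seed ${\boldsymbol{y}}$ with $\|y-x\| \le v_y(t_x-t_y)$. Under the stated assumptions, the mean formula of Section 3 reduces to $\lambda(W)\int_0^a e^{-vt^2}{\textrm{d}} t$, which the simulation can be compared against.

```python
import math
import random

def simulate_exposed_count(length, a, v, rng):
    """One sample of F in d = 1: exposed seeds located in [0, length], born by time a."""
    pad = v * a  # a seed farther than v*a from the window cannot reach it by time a
    lo, hi = -pad, length + pad
    # Unit-intensity Poisson process on [lo, hi] x [0, a]: locations form a rate-a
    # Poisson process on the line (exponential gaps); birth times are i.i.d. uniform.
    seeds = []
    x = lo + rng.expovariate(a)
    while x < hi:
        seeds.append((x, rng.uniform(0.0, a)))
        x += rng.expovariate(a)
    count = 0
    for (x, t) in seeds:
        if not (0.0 <= x <= length):
            continue  # padding seeds influence the window but are not counted
        covered = any(s < t and abs(x - y) <= v * (t - s) for (y, s) in seeds)
        if not covered:
            count += 1
    return count

def expected_exposed_count(length, a, v):
    """length * int_0^a exp(-v t^2) dt, expressed through the error function."""
    return length * math.sqrt(math.pi / v) / 2.0 * math.erf(a * math.sqrt(v))

rng = random.Random(2024)
samples = [simulate_exposed_count(50.0, 2.0, 1.0, rng) for _ in range(200)]
print(sum(samples) / len(samples), expected_exposed_count(50.0, 2.0, 1.0))
```

The padding by $va$ on each side reflects that, in the formulation considered here, seeds outside the window still influence the growth pattern inside it.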

In a different scenario, if

![]() $\theta$

has a power-law density (2.5) with

$\theta$

has a power-law density (2.5) with

![]() $\tau \in ({-}1,d-1]$

, it is possible to explicitly specify the dependence of the bound in Theorem 2.1 on the moments of

$\tau \in ({-}1,d-1]$

, it is possible to explicitly specify the dependence of the bound in Theorem 2.1 on the moments of

![]() $\nu$

, as stated in the following result. Note that for the above choice of

$\nu$

, as stated in the following result. Note that for the above choice of

![]() $\theta$

, the assumption (B) is trivially satisfied. Define

$\theta$

, the assumption (B) is trivially satisfied. Define

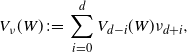

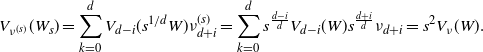

\begin{align}V_\nu(W)\,:\!=\,\sum_{i=0}^d V_{d-i}(W) \nu_{d+i},\end{align}

which is the sum of the intrinsic volumes of W weighted by the moments of the speed.
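As an illustration of this definition, the sketch below evaluates $V_\nu(W)$ for a cube and a deterministic speed. It relies on the standard fact that the cube $[0,r]^d$ has intrinsic volumes $V_j=\binom{d}{j}r^j$; the function names and the choice of example are ours and are not taken from the paper.

```python
from math import comb

def cube_intrinsic_volumes(d, side):
    """Intrinsic volumes V_0, ..., V_d of the cube [0, side]^d: V_j = C(d, j) * side^j."""
    return [comb(d, j) * side ** j for j in range(d + 1)]

def v_nu(intrinsic_volumes, speed_moments):
    """V_nu(W) = sum_{i=0}^d V_{d-i}(W) * nu_{d+i}.

    intrinsic_volumes[j] holds V_j(W) for j = 0..d;
    speed_moments[k] holds the k-th moment nu_k of the speed law for k = 0..2d.
    """
    d = len(intrinsic_volumes) - 1
    return sum(intrinsic_volumes[d - i] * speed_moments[d + i] for i in range(d + 1))

# Example: d = 2, side 3, deterministic speed v = 0.5, so nu_k = v^k.
d, side, v = 2, 3.0, 0.5
moments = [v ** k for k in range(2 * d + 1)]
print(v_nu(cube_intrinsic_volumes(d, side), moments))
```

For a cube and a deterministic speed the sum collapses, by the binomial theorem, to $v^d(r+v)^d$, which gives a quick sanity check of the implementation.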

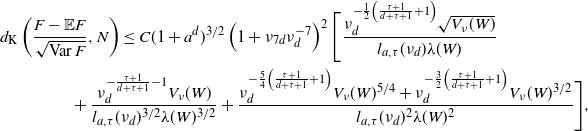

Theorem 2.3. Let the assumptions (A) and (C) be in force. For

![]() $\theta$

given at (2.5) with

$\theta$

given at (2.5) with

![]() $\tau \in ({-}1,$

$\tau \in ({-}1,$

![]() $d-1]$

, consider

$d-1]$

, consider

![]() $F=F(\eta)$

, where

$F=F(\eta)$

, where

![]() $\eta$

is as in Theorem 2.1 and F is defined as in (2.1) with

$\eta$

is as in Theorem 2.1 and F is defined as in (2.1) with

![]() $a \in (0,\infty)$

. Then there exists a constant

$a \in (0,\infty)$

. Then there exists a constant

![]() $C \in (0,\infty)$

, depending only on d and

$C \in (0,\infty)$

, depending only on d and

![]() $\tau$

, such that

$\tau$

, such that

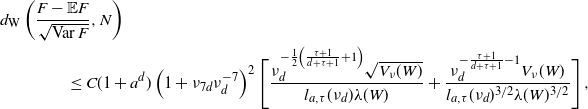

\begin{multline*} d_{\textrm{W}}\left(\frac{F - \mathbb{E} F}{\sqrt{\textrm{Var}\, F}},N\right)\\ \leq C (1+a^d) \left(1+\nu_{7d}\nu_{d}^{-7}\right)^{2} \Bigg[ \frac {\nu_{d}^{-\frac{1}{2}\left(\frac{\tau+1}{d+\tau+1}+1\right)} \sqrt{V_\nu(W)}}{l_{a,\tau}(\nu_d) \lambda(W)} + \frac{\nu_{d}^{-\frac{\tau+1}{d+\tau+1}-1} V_\nu(W)} {l_{a,\tau}(\nu_d)^{3/2}\lambda(W)^{3/2}} \Bigg]\,, \end{multline*}

and

\begin{multline*} d_{\textrm{K}}\left(\frac{F - \mathbb{E} F}{\sqrt{\textrm{Var}\, F}},N\right) \leq C (1+a^d)^{3/2} \left(1+\nu_{7d}\nu_{d}^{-7}\right)^{2} \Bigg[ \frac {\nu_{d}^{-\frac{1}{2}\left(\frac{\tau+1}{d+\tau+1}+1\right)} \sqrt{V_\nu(W)}}{l_{a,\tau}(\nu_d) \lambda(W)} \\ + \frac{\nu_{d}^{-\frac{\tau+1}{d+\tau+1}-1} V_\nu(W)} {l_{a,\tau}(\nu_d)^{3/2}\lambda(W)^{3/2}} + \frac {\nu_{d}^{-\frac{5}{4}\left(\frac{\tau+1}{d+\tau+1}+1\right)}V_\nu(W)^{5/4} + \nu_{d}^{-\frac{3}{2}\left(\frac{\tau+1}{d+\tau+1}+1\right)} V_\nu(W)^{3/2}} {l_{a,\tau}(\nu_d)^2 \lambda(W)^{2}} \Bigg], \end{multline*}

where $l_{a,\tau}$ is defined at (2.6).

Note that our results for the number of exposed points can also be interpreted as quantitative central limit theorems for the number of local minima of the growth frontier, which is of independent interest. As an application of Theorem 2.3, we consider the case when the intensity of the underlying point process grows to infinity. The quantitative central limit theorem for this case is contained in the following result.

Corollary 2.1. Let the assumptions (A) and (C) be in force. Consider $F(\eta_s)$ defined at (2.1) with $a \in (0,\infty)$, evaluated at the Poisson process $\eta_s$ with intensity $s\lambda\otimes\theta\otimes\nu$ for $s \ge 1$ and $\theta$ given at (2.5) with $\tau \in ({-}1,d-1]$. Then there exists a finite constant $C \in (0,\infty)$ depending only on W, d, a, $\tau$, $\nu_{d}$, and $\nu_{7d}$, such that, for all $s\ge 1$,

Furthermore, the bound on the Kolmogorov distance is of optimal order.

3. Variance estimation

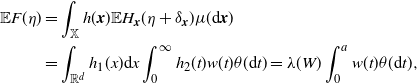

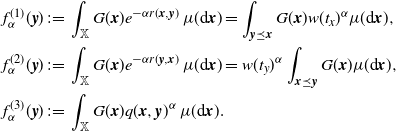

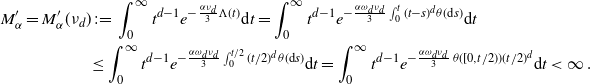

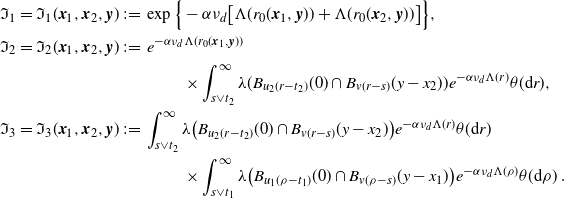

In this section, we estimate the mean and variance of the statistic F, thus providing a proof of Proposition 2.1. Recall the weight function

![]() $h({\boldsymbol{x}})\,:\!=\,h_1(x) h_2(t_x)$

, where

$h({\boldsymbol{x}})\,:\!=\,h_1(x) h_2(t_x)$

, where

![]() $h_1(x)=\unicode{x1d7d9}\{x \in W\}$

and

$h_1(x)=\unicode{x1d7d9}\{x \in W\}$

and

![]() $h_2(t) = \unicode{x1d7d9}\{t \le a\}$

. Notice that by the Mecke formula, the mean of F is given by

$h_2(t) = \unicode{x1d7d9}\{t \le a\}$

. Notice that by the Mecke formula, the mean of F is given by

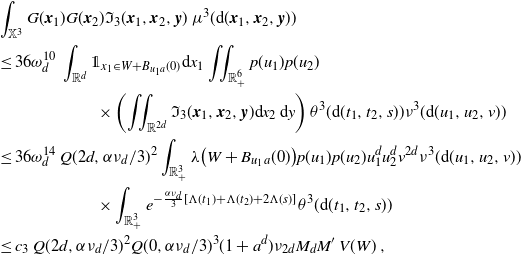

\begin{align} \mathbb{E} F(\eta)&=\int_\mathbb{X} h({\boldsymbol{x}})\mathbb{E} H_{\boldsymbol{x}}(\eta+\delta_{\boldsymbol{x}})\mu({\textrm{d}} {\boldsymbol{x}}) \nonumber\\ &=\int_{\mathbb{R}^d}h_1(x) {\textrm{d}} x \int_0^\infty h_2(t) w(t) \theta({\textrm{d}} t) =\lambda(W)\int_0^a w(t) \theta({\textrm{d}} t),\end{align}

where w(t) is defined at (2.9). In many instances, we will use the simple inequality

Also notice that for $x \in \mathbb{R}^d$,

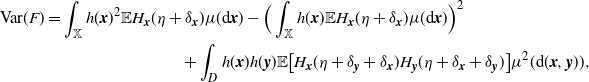

The multivariate Mecke formula (see, e.g., [Reference Last and Penrose15, Theorem 4.4]) yields that

\begin{multline*} \textrm{Var}(F)=\int_\mathbb{X} h({\boldsymbol{x}})^2 \mathbb{E} H_{{\boldsymbol{x}}}(\eta+\delta_{\boldsymbol{x}}) \mu({\textrm{d}} {\boldsymbol{x}}) -\Big(\int_\mathbb{X} h({\boldsymbol{x}}) \mathbb{E} H_{{\boldsymbol{x}}}(\eta+\delta_{\boldsymbol{x}}) \mu({\textrm{d}} {\boldsymbol{x}})\Big)^2 \\ +\int_{D} h({\boldsymbol{x}})h({\boldsymbol{y}}) \mathbb{E} \big[H_{{\boldsymbol{x}}}(\eta+\delta_{{\boldsymbol{y}}}+\delta_{\boldsymbol{x}}) H_{{\boldsymbol{y}}}(\eta+\delta_{{\boldsymbol{x}}}+\delta_{\boldsymbol{y}})\big] \mu^2({\textrm{d}}( {\boldsymbol{x}}, {\boldsymbol{y}})),\end{multline*}

where the double integration is over the region $D\subset \mathbb{X}^2$ where the points ${\boldsymbol{x}}$ and ${\boldsymbol{y}}$ are incomparable (${\boldsymbol{x}} \not \preceq {\boldsymbol{y}}$ and ${\boldsymbol{y}} \not \preceq {\boldsymbol{x}}$), i.e.,

It is possible to get rid of one of the Dirac measures in the inner integral, since on D the points are incomparable. Thus, using the translation-invariance of $\mathbb{E} H_{{\boldsymbol{x}}}(\eta)$, we have

where

and

Finally, we will use the following simple inequality for the incomplete gamma function:

which holds for all

![]() $x \in \mathbb{R}_+$

and

$x \in \mathbb{R}_+$

and

![]() $b,y>0$

.

$b,y>0$

.

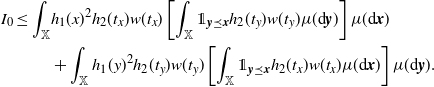

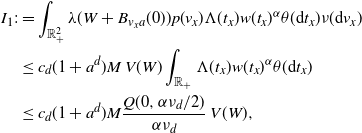

Proof of Proposition 2.1. First, notice that the term $I_1$ in (3.3) is non-negative, since

Furthermore, (3.1) yields that

\begin{align} I_0 \le \int_\mathbb{X} & h_1(x)^2 h_2(t_x) w(t_x) \left[\int_{\mathbb{X}} \unicode{x1d7d9}_{{\boldsymbol{y}} \preceq {\boldsymbol{x}}} h_2(t_y) w(t_y) \mu({\textrm{d}} {\boldsymbol{y}})\right] \mu({\textrm{d}} {\boldsymbol{x}})\\ & + \int_\mathbb{X} h_1(y)^2 h_2(t_y) w(t_y) \left[\int_{\mathbb{X}} \unicode{x1d7d9}_{{\boldsymbol{y}} \preceq {\boldsymbol{x}}} h_2(t_x) w(t_x) \mu({\textrm{d}} {\boldsymbol{x}})\right]\mu({\textrm{d}} {\boldsymbol{y}}). \end{align}

Since

![]() ${\boldsymbol{y}} \preceq {\boldsymbol{x}}$

is equivalent to

${\boldsymbol{y}} \preceq {\boldsymbol{x}}$

is equivalent to

![]() $\|y-x\| \le v_y(t_x-t_y)$

, the first summand on the right-hand side above can be simplified as

$\|y-x\| \le v_y(t_x-t_y)$

, the first summand on the right-hand side above can be simplified as

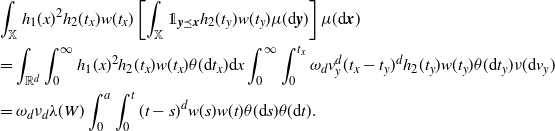

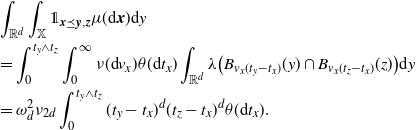

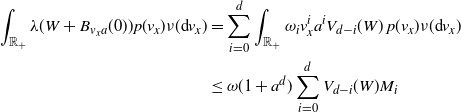

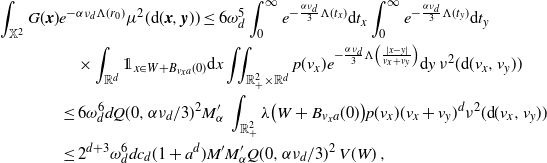

\begin{align} &\int_\mathbb{X} h_1(x)^2 h_2(t_x) w(t_x) \left[\int_{\mathbb{X}} \unicode{x1d7d9}_{{\boldsymbol{y}} \preceq {\boldsymbol{x}}} h_2(t_y) w(t_y) \mu({\textrm{d}} {\boldsymbol{y}})\right] \mu({\textrm{d}} {\boldsymbol{x}}) \\ &=\int_{\mathbb{R}^d}\int_{0}^\infty h_1(x)^2 h_2(t_x) w(t_x) \theta({\textrm{d}} t_x) {\textrm{d}} x \int_0^\infty \int_{0}^{t_x} \omega_d v_y^d (t_x-t_y)^d h_2(t_y) w (t_y) \theta({\textrm{d}} t_y) \nu({\textrm{d}} v_y)\\ & =\omega_d \nu_{d} \lambda(W) \int_0^a \int_0^{t} (t-s)^dw(s) w(t) \theta({\textrm{d}} s) \theta({\textrm{d}} t). \end{align}

The second summand in the bound on

![]() $I_0$

, upon interchanging integrals for the second step, turns into

$I_0$

, upon interchanging integrals for the second step, turns into

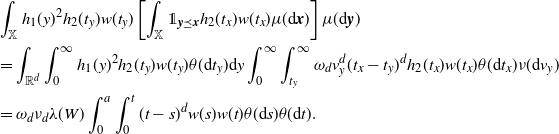

\begin{align} &\int_\mathbb{X} h_1(y)^2 h_2(t_y) w(t_y) \left[\int_{\mathbb{X}} \unicode{x1d7d9}_{{\boldsymbol{y}} \preceq {\boldsymbol{x}}} h_2(t_x) w(t_x) \mu({\textrm{d}} {\boldsymbol{x}})\right]\mu({\textrm{d}} {\boldsymbol{y}})\\ &=\int_{\mathbb{R}^d}\int_{0}^\infty h_1(y)^2 h_2(t_y) w(t_y) \theta({\textrm{d}} t_y) {\textrm{d}} y \int_0^\infty \int_{t_y}^{\infty} \omega_d v_y^d (t_x-t_y)^d h_2(t_x) w (t_x) \theta({\textrm{d}} t_x) \nu({\textrm{d}} v_y)\\ & =\omega_d \nu_{d} \lambda(W) \int_0^a \int_0^{t} (t-s)^d w(s) w(t) \theta({\textrm{d}} s) \theta({\textrm{d}} t). \end{align}

Combining, by (3.3) we obtain (2.7).

To prove (2.8), note that by the Poincaré inequality (see [Reference Last and Penrose15, Section 18.3]),

Observe that $\eta$ is simple, and for $x\notin\eta$,

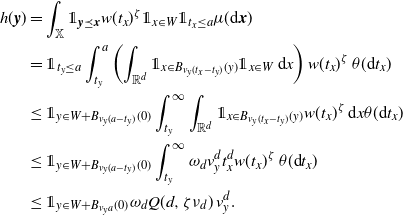

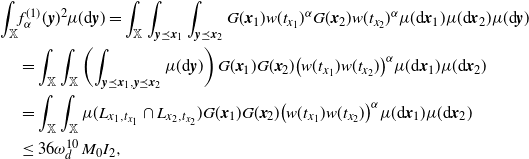

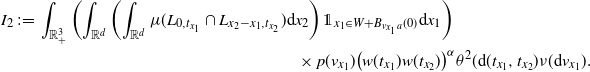

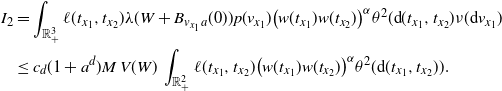

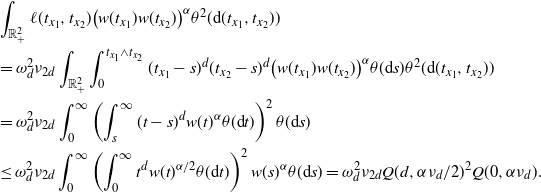

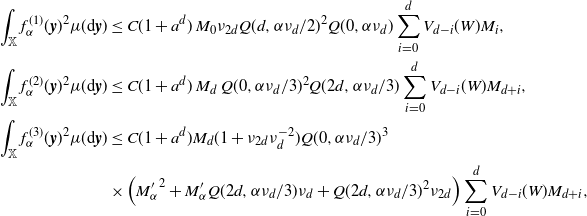

The inequality

in the first step and the Mecke formula in the second step yield that

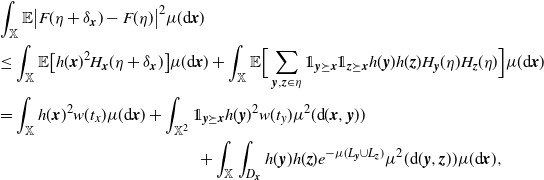

\begin{align} &\int_\mathbb{X} \mathbb{E}\big|F(\eta + \delta_{{\boldsymbol{x}}})- F(\eta)\big|^2 \mu({\textrm{d}} {\boldsymbol{x}})\nonumber\\ &\leq \int_{\mathbb{X}} \mathbb{E}\big[h({\boldsymbol{x}})^2 H_{\boldsymbol{x}}(\eta+\delta_{\boldsymbol{x}})\big]\mu({\textrm{d}}{\boldsymbol{x}}) +\int_{\mathbb{X}} \mathbb{E}\Big[\sum_{{\boldsymbol{y}},{\boldsymbol{z}} \in \eta} \unicode{x1d7d9}_{{\boldsymbol{y}} \succeq {\boldsymbol{x}}}\unicode{x1d7d9}_{{\boldsymbol{z}} \succeq {\boldsymbol{x}}} h({\boldsymbol{y}})h({\boldsymbol{z}}) H_{\boldsymbol{y}}(\eta)H_{\boldsymbol{z}}(\eta)\Big]\mu({\textrm{d}}{\boldsymbol{x}})\nonumber \\ &=\int_\mathbb{X} h({\boldsymbol{x}})^2 w(t_x) \mu({\textrm{d}} {\boldsymbol{x}}) + \int_{\mathbb{X}^2} \unicode{x1d7d9}_{{\boldsymbol{y}} \succeq {\boldsymbol{x}}} h({\boldsymbol{y}})^2 w(t_y) \mu^2({\textrm{d}} ({\boldsymbol{x}},{\boldsymbol{y}}))\nonumber \\ &\qquad\qquad\qquad\qquad \qquad\qquad+ \int_\mathbb{X} \int_{D_{\boldsymbol{x}}} h({\boldsymbol{y}}) h({\boldsymbol{z}}) e^{-\mu(L_{{\boldsymbol{y}}} \cup L_{{\boldsymbol{z}}})} \mu^2({\textrm{d}} ({\boldsymbol{y}},{\boldsymbol{z}})) \mu({\textrm{d}} {\boldsymbol{x}}), \end{align}

where

Using that

![]() $x e^{-x/2} \le 1$

for

$x e^{-x/2} \le 1$

for

![]() $x \in \mathbb{R}_+$

, observe that

$x \in \mathbb{R}_+$

, observe that

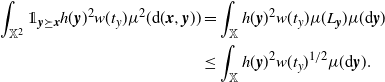

\begin{align} \int_{\mathbb{X}^2} \unicode{x1d7d9}_{{\boldsymbol{y}} \succeq {\boldsymbol{x}}} h({\boldsymbol{y}})^2 w(t_y) \mu^2({\textrm{d}} ({\boldsymbol{x}},{\boldsymbol{y}}))&= \int_\mathbb{X} h({\boldsymbol{y}})^2 w(t_y) \mu(L_{\boldsymbol{y}}) \mu({\textrm{d}} {\boldsymbol{y}}) \nonumber\\ &\le \int_\mathbb{X} h({\boldsymbol{y}})^2 w(t_y)^{1/2} \mu({\textrm{d}} {\boldsymbol{y}}). \end{align}

Next, using that $\mu(L_{{\boldsymbol{y}}} \cup L_{{\boldsymbol{z}}}) \ge (\mu(L_{{\boldsymbol{y}}}) + \mu(L_{{\boldsymbol{z}}}))/2$ and that $D_{\boldsymbol{x}} \subseteq \{{\boldsymbol{y}},{\boldsymbol{z}} \succeq {\boldsymbol{x}}\}$ for the first inequality, and (3.1) for the second one, we have

\begin{align} &\int_\mathbb{X} \int_{D_{\boldsymbol{x}}} h({\boldsymbol{y}}) h({\boldsymbol{z}}) e^{-\mu(L_{{\boldsymbol{y}}} \cup L_{{\boldsymbol{z}}})} \mu^2({\textrm{d}} ({\boldsymbol{y}},{\boldsymbol{z}})) \mu({\textrm{d}} {\boldsymbol{x}}) \nonumber\\ &\le \int_\mathbb{X} \int_{\mathbb{X}^2} \unicode{x1d7d9}_{{\boldsymbol{y}},{\boldsymbol{z}} \succeq {\boldsymbol{x}}} h({\boldsymbol{y}}) h({\boldsymbol{z}}) w(t_y)^{1/2}w(t_z)^{1/2} \mu^2({\textrm{d}} ({\boldsymbol{y}},{\boldsymbol{z}})) \mu({\textrm{d}} {\boldsymbol{x}})\nonumber\\ &\le \int_{[0,a]^2} w(t_y)^{1/2}w(t_z)^{1/2} \int_{\mathbb{R}^{2d}} h_1(z)^2 \left(\int_{\mathbb{X}} \unicode{x1d7d9}_{{\boldsymbol{x}} \preceq {\boldsymbol{y}},{\boldsymbol{z}}} \mu({\textrm{d}} {\boldsymbol{x}})\right) {\textrm{d}}(y,z) \theta^2({\textrm{d}}(t_y,t_z)). \end{align}

\begin{align} &\int_\mathbb{X} \int_{D_{\boldsymbol{x}}} h({\boldsymbol{y}}) h({\boldsymbol{z}}) e^{-\mu(L_{{\boldsymbol{y}}} \cup L_{{\boldsymbol{z}}})} \mu^2({\textrm{d}} ({\boldsymbol{y}},{\boldsymbol{z}})) \mu({\textrm{d}} {\boldsymbol{x}}) \nonumber\\ &\le \int_\mathbb{X} \int_{\mathbb{X}^2} \unicode{x1d7d9}_{{\boldsymbol{y}},{\boldsymbol{z}} \succeq {\boldsymbol{x}}} h({\boldsymbol{y}}) h({\boldsymbol{z}}) w(t_y)^{1/2}w(t_z)^{1/2} \mu^2({\textrm{d}} ({\boldsymbol{y}},{\boldsymbol{z}})) \mu({\textrm{d}} {\boldsymbol{x}})\nonumber\\ &\le \int_{[0,a]^2} w(t_y)^{1/2}w(t_z)^{1/2} \int_{\mathbb{R}^{2d}} h_1(z)^2 \left(\int_{\mathbb{X}} \unicode{x1d7d9}_{{\boldsymbol{x}} \preceq {\boldsymbol{y}},{\boldsymbol{z}}} \mu({\textrm{d}} {\boldsymbol{x}})\right) {\textrm{d}}(y,z) \theta^2({\textrm{d}}(t_y,t_z)). \end{align}

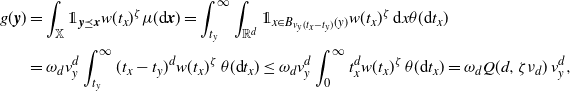

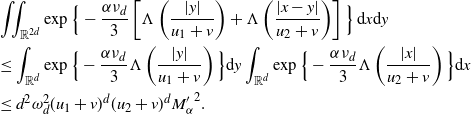

By (3.2),

\begin{align} &\int_{\mathbb{R}^d} \int_{\mathbb{X}} \unicode{x1d7d9}_{{\boldsymbol{x}} \preceq {\boldsymbol{y}},{\boldsymbol{z}}} \mu({\textrm{d}} {\boldsymbol{x}}) {\textrm{d}} y \\ &=\int_{0}^{t_y \wedge t_z} \int_{0}^\infty \nu({\textrm{d}} v_x) \theta({\textrm{d}} t_x) \int_{\mathbb{R}^d} \lambda\big(B_{v_x(t_y-t_x)}(y) \cap B_{v_x(t_z-t_x)}(z)\big) {\textrm{d}} y\\ &=\omega_d^2 \nu_{2d} \int_{0}^{t_y \wedge t_z}(t_y-t_x)^d (t_z-t_x)^d \theta({\textrm{d}} t_x). \end{align}

\begin{align} &\int_{\mathbb{R}^d} \int_{\mathbb{X}} \unicode{x1d7d9}_{{\boldsymbol{x}} \preceq {\boldsymbol{y}},{\boldsymbol{z}}} \mu({\textrm{d}} {\boldsymbol{x}}) {\textrm{d}} y \\ &=\int_{0}^{t_y \wedge t_z} \int_{0}^\infty \nu({\textrm{d}} v_x) \theta({\textrm{d}} t_x) \int_{\mathbb{R}^d} \lambda\big(B_{v_x(t_y-t_x)}(y) \cap B_{v_x(t_z-t_x)}(z)\big) {\textrm{d}} y\\ &=\omega_d^2 \nu_{2d} \int_{0}^{t_y \wedge t_z}(t_y-t_x)^d (t_z-t_x)^d \theta({\textrm{d}} t_x). \end{align}

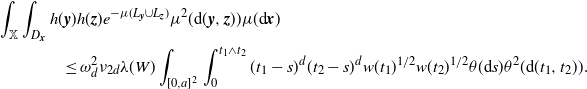

Plugging in (3.7), we obtain

\begin{multline*} \int_\mathbb{X} \int_{D_{\boldsymbol{x}}} h({\boldsymbol{y}}) h({\boldsymbol{z}}) e^{-\mu(L_{{\boldsymbol{y}}} \cup L_{{\boldsymbol{z}}})} \mu^2({\textrm{d}} ({\boldsymbol{y}},{\boldsymbol{z}})) \mu({\textrm{d}} {\boldsymbol{x}}) \\ \le \omega_d^2 \nu_{2d} \lambda(W) \int_{[0,a]^2} \int_{0}^{t_1 \wedge t_2}(t_1-s)^d (t_2-s)^d w(t_1)^{1/2}w(t_2)^{1/2} \theta({\textrm{d}} s) \theta^2({\textrm{d}}(t_1,t_2)). \end{multline*}

This in combination with (3.5) and (3.6) proves (2.8).

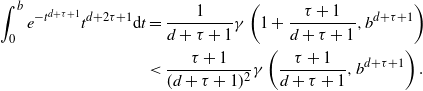

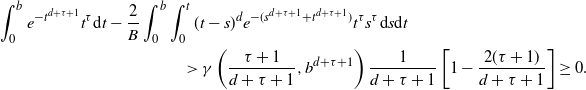

Now we move on to prove (2.10). We first confirm the lower bound. Fix $\tau \in ({-}1,d-1]$, as otherwise the bound is trivial, and $a \in (0,\infty)$. Then

\begin{equation*} \int_0^t (t-s)^d \theta({\textrm{d}} s) = \int_0^t (t-s)^d s^{\tau} {\textrm{d}} s = B\, t^{d+\tau+1}, \end{equation*}

where

![]() $B\,:\!=\,B(d+1,\tau+1)$

is a value of the beta function. Hence, we have

$B\,:\!=\,B(d+1,\tau+1)$

is a value of the beta function. Hence, we have

![]() $w(t)=\exp\{-B \,\omega_d \nu_{d} t^{d+\tau + 1}\}$

. Plugging in, we obtain

$w(t)=\exp\{-B \,\omega_d \nu_{d} t^{d+\tau + 1}\}$

. Plugging in, we obtain

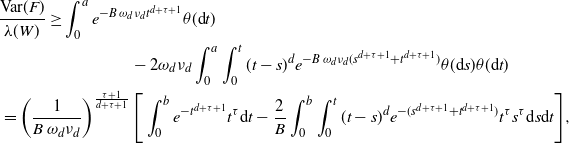

\begin{align} &\frac{\textrm{Var}(F)}{\lambda(W)} \ge \int_{0}^a e^{-B \,\omega_d \nu_{d} t^{d+\tau + 1}} \theta({\textrm{d}} t) \nonumber\\ & \qquad \qquad\qquad \qquad - 2 \omega_d \nu_{d} \int_0^a \int_0^{t} (t-s)^d e^{-B \,\omega_d \nu_{d} (s^{d+\tau +1}+t^{d+\tau+1})} \theta({\textrm{d}} s) \theta({\textrm{d}} t)\nonumber\\ &\, =\left(\frac{1}{B\,\omega_d \nu_{d} }\right)^{\frac{\tau+1}{d+\tau+1}} \Bigg[\int_{0}^b e^{- t^{d+\tau+1}}t^{\tau} {\textrm{d}} t -\frac{2}{B} \int_0^b \int_0^{t} (t-s)^d e^{-(s^{d+\tau+1}+t^{d+\tau+1})} t^{\tau} s^{\tau} {\textrm{d}} s {\textrm{d}} t\Bigg], \end{align}

where

![]() $b\,:\!=\,a(B \,\omega_d \nu_{d})^{1/(d+\tau+1)}$

. Writing

$b\,:\!=\,a(B \,\omega_d \nu_{d})^{1/(d+\tau+1)}$

. Writing

![]() $s=tu$

for some

$s=tu$

for some

![]() $u \in [0,1]$

, we have

$u \in [0,1]$

, we have

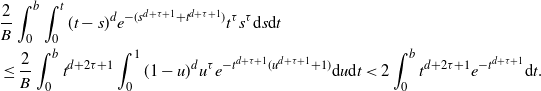

\begin{align} &\frac{2}{B} \int_0^b \int_0^{t} (t-s)^d e^{-(s^{d+\tau+1}+t^{d+\tau+1})} t^{\tau} s^{\tau}{\textrm{d}} s {\textrm{d}} t\\ & \le \frac{2}{B} \int_0^b t^{d+2\tau+1} \int_0^{1} (1-u)^d u^{\tau} e^{-t^{d+\tau+1}(u^{d+\tau+1}+1)} {\textrm{d}} u {\textrm{d}} t < 2\int_0^b t^{d+2\tau+1} e^{-t^{d+\tau+1}} {\textrm{d}} t.\end{align}

\begin{align} &\frac{2}{B} \int_0^b \int_0^{t} (t-s)^d e^{-(s^{d+\tau+1}+t^{d+\tau+1})} t^{\tau} s^{\tau}{\textrm{d}} s {\textrm{d}} t\\ & \le \frac{2}{B} \int_0^b t^{d+2\tau+1} \int_0^{1} (1-u)^d u^{\tau} e^{-t^{d+\tau+1}(u^{d+\tau+1}+1)} {\textrm{d}} u {\textrm{d}} t < 2\int_0^b t^{d+2\tau+1} e^{-t^{d+\tau+1}} {\textrm{d}} t.\end{align}
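
As a check on the power of $t$ in the last display (a sketch added for the reader, not part of the original argument): with $s=tu$ and ${\textrm{d}} s = t\,{\textrm{d}} u$, the integrand and the exponent transform as

\begin{align*} (t-s)^d\, t^{\tau} s^{\tau}\, {\textrm{d}} s &= t^d(1-u)^d \cdot t^{\tau} \cdot t^{\tau}u^{\tau} \cdot t\, {\textrm{d}} u = t^{d+2\tau+1}(1-u)^d u^{\tau}\, {\textrm{d}} u,\\ s^{d+\tau+1}+t^{d+\tau+1} &= t^{d+\tau+1}\big(u^{d+\tau+1}+1\big). \end{align*}

The final strict inequality then uses $e^{-t^{d+\tau+1}u^{d+\tau+1}}<1$ for $t,u>0$ together with $\int_0^1 (1-u)^d u^{\tau}\,{\textrm{d}} u \le B$. The latter bound is an assumption here, since $B$ is not defined in this passage; it holds with equality if $B$ denotes the beta function value $B(\tau+1,d+1)$.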

By substituting $t^{d+\tau+1}=z$, it is easy to check that for any $\rho>-1$,

\begin{equation*} \int_{0}^b t^{\rho} e^{- t^{d+\tau+1}} {\textrm{d}} t=\frac{1}{d+\tau+1} \gamma\left(\frac{\rho+1}{d+\tau+1},b^{d+\tau+1}\right), \end{equation*}

where $\gamma$ is the lower incomplete gamma function. In particular, using that $x\gamma(x,y)>\gamma(x+1,y)$ for $x,y>0$, we have

\begin{align} \int_{0}^b e^{- t^{d+\tau+1}}t^{d+2\tau+1} {\textrm{d}} t&=\frac{1}{d+\tau+1} \gamma\left(1+\frac{\tau+1}{d+\tau+1},b^{d+\tau+1}\right)\\ &<\frac{\tau+1}{(d+\tau+1)^2} \gamma\left(\frac{\tau+1}{d+\tau+1},b^{d+\tau+1}\right).\end{align}
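
The inequality $x\gamma(x,y)>\gamma(x+1,y)$ used above can be verified by integration by parts. The following short derivation is included as a reminder and assumes the standard definition $\gamma(x,y)=\int_0^y z^{x-1}e^{-z}\,{\textrm{d}} z$:

\begin{equation*} \gamma(x+1,y)=\int_0^y z^{x}e^{-z}\,{\textrm{d}} z = \big[-z^{x}e^{-z}\big]_0^y + x\int_0^y z^{x-1}e^{-z}\,{\textrm{d}} z = x\gamma(x,y)-y^{x}e^{-y} < x\gamma(x,y), \qquad x,y>0. \end{equation*}

Applying this with $x=(\tau+1)/(d+\tau+1)$ and $y=b^{d+\tau+1}$ gives the second line of the preceding display.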

\begin{align} \int_{0}^b e^{- t^{d+\tau+1}}t^{d+2\tau+1} {\textrm{d}} t&=\frac{1}{d+\tau+1} \gamma\left(1+\frac{\tau+1}{d+\tau+1},b^{d+\tau+1}\right)\\ &<\frac{\tau+1}{(d+\tau+1)^2} \gamma\left(\frac{\tau+1}{d+\tau+1},b^{d+\tau+1}\right).\end{align}

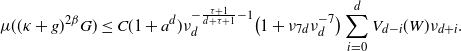

Thus, since $\tau\in ({-}1,d-1]$,

\begin{align*} &\int_{0}^b e^{- t^{d+\tau+1}}t^\tau {\textrm{d}} t -\frac{2}{B} \int_0^b \int_0^{t} (t-s)^d e^{-(s^{d+\tau+1}+t^{d+\tau+1})} t^\tau s^\tau {\textrm{d}} s {\textrm{d}} t\\ &\qquad >\gamma\left(\frac{\tau+1}{d+\tau+1},b^{d+\tau+1}\right) \frac{1}{d+\tau+1}\left[1- \frac{2(\tau+1)}{d+\tau+1} \right]\ge 0.\end{align*}

By (3.8) and (3.4), we obtain the lower bound in (2.10).
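
The ingredients of the lower-bound argument can be checked numerically. The sketch below is not part of the proof and uses only the Python standard library. The function names `lower_gamma`, `integral_power_exp` and `bracket` are illustrative choices, and the incomplete gamma function is evaluated through its standard power series under the definition $\gamma(a,x)=\int_0^x z^{a-1}e^{-z}\,{\textrm{d}} z$.

```python
import math


def lower_gamma(a: float, x: float, terms: int = 200) -> float:
    """Lower incomplete gamma function via its power series:
    gamma(a, x) = x^a e^{-x} * sum_{n>=0} x^n / (a (a+1) ... (a+n)).
    """
    total = 0.0
    term = 1.0 / a  # n = 0 term of the series
    for n in range(terms):
        total += term
        term *= x / (a + n + 1)  # next term: multiply by x / (a + n + 1)
    return x**a * math.exp(-x) * total


def integral_power_exp(rho: float, k: float, b: float, steps: int = 100_000) -> float:
    """Midpoint-rule approximation of int_0^b t^rho exp(-t^k) dt."""
    h = b / steps
    acc = 0.0
    for i in range(steps):
        t = (i + 0.5) * h
        acc += t**rho * math.exp(-(t**k))
    return h * acc


def bracket(d: int, tau: float) -> float:
    """The factor 1 - 2(tau+1)/(d+tau+1) appearing in the final display."""
    return 1.0 - 2.0 * (tau + 1.0) / (d + tau + 1.0)


if __name__ == "__main__":
    d, tau, b = 2, 0.5, 1.5          # arbitrary test values with tau in (-1, d-1]
    k = d + tau + 1
    rho = d + 2 * tau + 1
    lhs = integral_power_exp(rho, k, b)
    rhs = lower_gamma((rho + 1) / k, b**k) / k
    print("integral:", lhs, "gamma formula:", rhs)
    print("bracket:", bracket(d, tau))
```

The bracket vanishes exactly at $\tau=d-1$ and becomes negative for larger $\tau$, which is where the restriction $\tau\in({-}1,d-1]$ enters.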

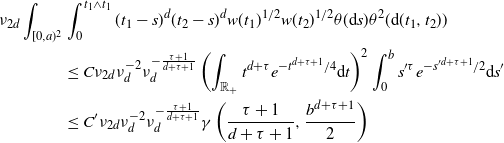

For the upper bound in (2.10), for $\theta$ as in (2.5), arguing as above we have

Finally, substituting $s'=(B \,\omega_d \nu_{d})^{\frac{1}{d+\tau+1}} s$ and similarly for $t_1$ and $t_2$, it is straightforward to see that

\begin{align} \nu_{2d} \int_{[0,a)^2} &\int_{0}^{t_1 \wedge t_2} (t_1-s)^d (t_2-s)^d w(t_1)^{1/2}w(t_2)^{1/2} \theta({\textrm{d}} s) \theta^2({\textrm{d}}(t_1,t_2)) \\ &\le C \nu_{2d} \nu_d^{-2} \nu_d^{-\frac{\tau+1}{d+\tau+1}} \left(\int_{\mathbb{R}_+} t^{d+\tau} e^{-t^{d+\tau+1}/4} {\textrm{d}} t\right)^2 \int_{0}^{b} s'^\tau e^{-s'^{d+\tau+1}/2} {\textrm{d}} s'\\ & \le C' \nu_{2d} \nu_d^{-2} \nu_d^{-\frac{\tau+1}{d+\tau+1}} \gamma\left(\frac{\tau+1}{d+\tau+1},\frac{b^{d+\tau+1}}{2}\right)\end{align}

for some constants $C,C^{\prime}$ depending only on d and $\tau$. The upper bound in (2.10) now follows from (2.8) upon using the above computation and (3.4).

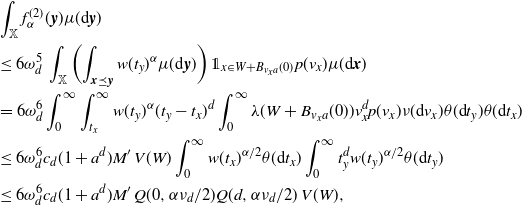

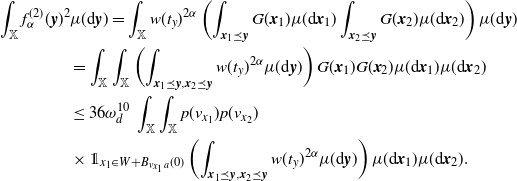

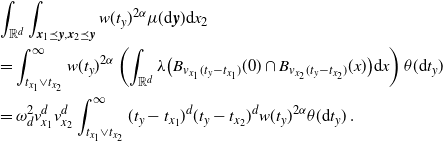

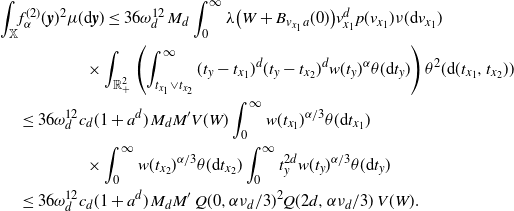

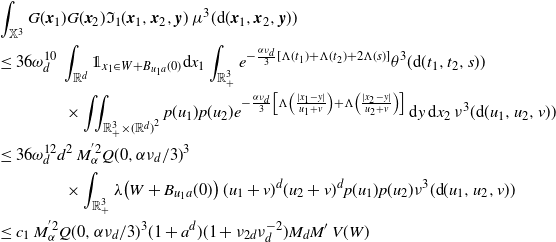

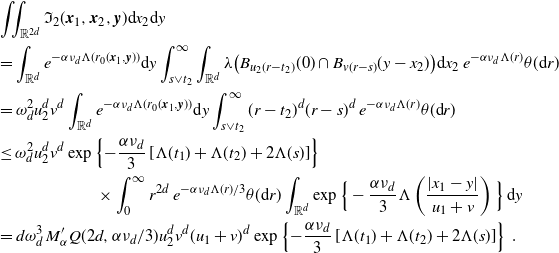

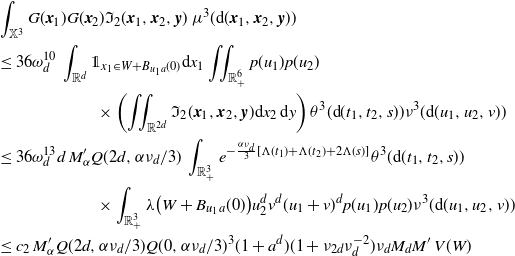

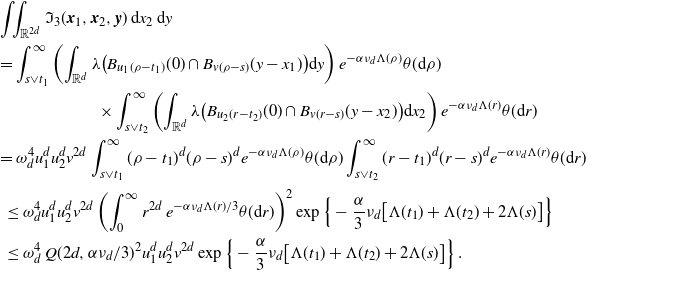

4. Proofs of the theorems

In this section, we derive our main results using [Reference Bhattacharjee and Molchanov3, Theorem 2.1]. While we do not restate this theorem here, referring the reader to [Reference Bhattacharjee and Molchanov3, Section 2], it is important to note that the Poisson process considered in [Reference Bhattacharjee and Molchanov3, Theorem 2.1] has the intensity measure $s\mathbb{Q}$ obtained by scaling a fixed measure $\mathbb{Q}$ on $\mathbb{X}$ with s. Nonetheless, the main result is non-asymptotic, and while in the current paper we consider a Poisson process with fixed intensity measure $\mu$ (without a scaling parameter), we can still use [Reference Bhattacharjee and Molchanov3, Theorem 2.1] with $s=1$ and the measure $\mathbb{Q}$ replaced by $\mu$. While still following the notation from [Reference Bhattacharjee and Molchanov3], we drop the subscript s for ease of notation.

Recall that for $\mathcal{M} \in \textbf{N}$, the score function $\xi({\boldsymbol{x}},\mathcal{M})$ is defined at (2.2). It is straightforward to check that if $\xi ({\boldsymbol{x}}, \mathcal{M}_1)=\xi ({\boldsymbol{x}}, \mathcal{M}_2)$ for some $\mathcal{M}_1,\mathcal{M}_2 \in \textbf{N}$ with $0\neq \mathcal{M}_1 \leq \mathcal{M}_2$ (meaning that $\mathcal{M}_2-\mathcal{M}_1$ is a nonnegative measure) and ${\boldsymbol{x}} \in \mathcal{M}_1$, then $\xi ({\boldsymbol{x}}, \mathcal{M}_1)=\xi ({\boldsymbol{x}}, \mathcal{M})$ for all $\mathcal{M}\in\textbf{N}$ such that $\mathcal{M}_1\leq \mathcal{M}\leq \mathcal{M}_2$, so that [Reference Bhattacharjee and Molchanov3, Equation (2.1)] holds. Next we check the assumptions (A1) and (A2) in [Reference Bhattacharjee and Molchanov3].

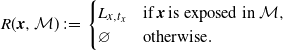

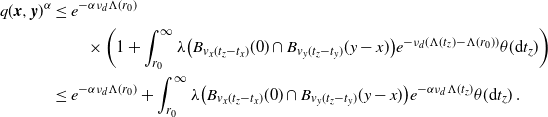

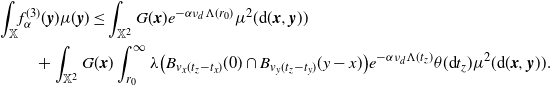

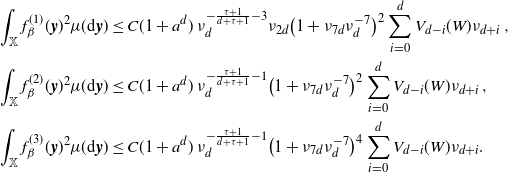

For $\mathcal{M} \in \textbf{N}$ and ${\boldsymbol{x}}\in\mathcal{M}$, define the stabilization region

\begin{equation*}R({\boldsymbol{x}},\mathcal{M})\,:\!=\,\begin{cases}L_{x,t_x} & \text{if}\; {\boldsymbol{x}} \;\text{is exposed in\ $\mathcal{M}$},\\\varnothing & \text{otherwise}.\end{cases}\end{equation*}

\begin{equation*}R({\boldsymbol{x}},\mathcal{M})\,:\!=\,\begin{cases}L_{x,t_x} & \text{if}\; {\boldsymbol{x}} \;\text{is exposed in\ $\mathcal{M}$},\\\varnothing & \text{otherwise}.\end{cases}\end{equation*}

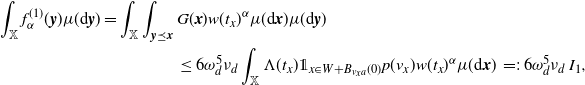

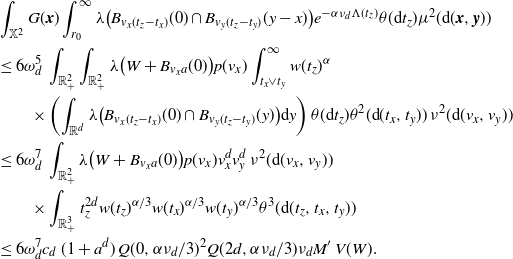

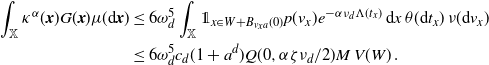

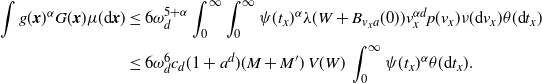

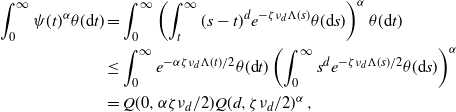

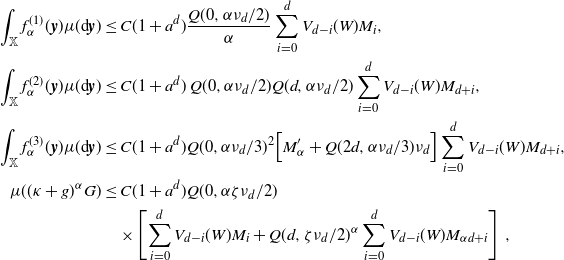

Notice that

and that

and

are measurable functions of $({\boldsymbol{x}},{\boldsymbol{y}})\in\mathbb{X}^2$ and $({\boldsymbol{x}},{\boldsymbol{y}},{\boldsymbol{z}})\in\mathbb{X}^3$ respectively, with w(t) defined at (2.9). It is not hard to see that R is monotonically decreasing in the second argument, and that for all $\mathcal{M}\in\textbf{N}$ and ${\boldsymbol{x}}\in\mathcal{M}$, $\mathcal{M}(R({\boldsymbol{x}},\mathcal{M}))\geq 1$ implies that ${\boldsymbol{x}}$ is exposed, so that $(\mathcal{M}+\delta_{\boldsymbol{y}})(R({\boldsymbol{x}},\mathcal{M}+\delta_{\boldsymbol{y}}))\geq1$ for all ${\boldsymbol{y}}\not\in R({\boldsymbol{x}},\mathcal{M})$. Moreover, the function R satisfies

where $\mathcal{M}_{R({\boldsymbol{x}},\mathcal{M})}$ denotes the restriction of the measure $\mathcal{M}$ to the region $R({\boldsymbol{x}},\mathcal{M})$. It is important to note here that this holds even when ${\boldsymbol{x}}$ is not exposed in $\mathcal{M}$