Policy Significance Statement

For public officials and non-governmental organisations working on AI, this paper recommends exploring existing policy and legislation related to algorithms, automation, data, and digital technology. There are opportunities to draw from previous experiences with multi-stakeholder forums, accountability and human oversight, and model management, amongst others, and to use existing regulatory capabilities for realising responsible AI outcomes.

1. Introduction

There has been significant progress with digital governance in African countries over the past decade, from the passing of personal data protection and cybercrime legislation to the development of national data strategies (Ndubueze, 2020; Daigle, 2021). More recently, a range of frontier technologies have garnered special attention, usually as part of a wider “fourth industrial revolution” (4IR) agenda (Ayentimi and Burgess, 2019). Distributed ledger technology, the Internet of Things, and unmanned aerial vehicles have been especially prominent, and in some cases have been addressed through dedicated strategies.Footnote 1 However, it is artificial intelligence (AI) that has come to dominate much of this emerging technology narrative. This is mainly because AI, along with data, is seen as a “general purpose technology” that is embedded in, or transforming, other digital domains and society broadly.Footnote 2

As a result, African states are developing national AI strategies and establishing AI advisory bodies as a vanguard for driving technology development and use. Whilst much of the emphasis is on the positive benefits arising from AI use, there is also concern about potential negative effects and a desire to chart a more “responsible” approach to AI adoption (Gwagwa, 2021; Shilongo et al., 2022). The notion of “responsible AI” has emerged as a widely used heuristic for ethical AI governance. The term refers to the ethical development, deployment, and use of AI systems in ways that prioritise fairness, accountability, transparency, and societal benefit. The concept underpins a variety of international frameworks for AI governance, including the Organisation for Economic Co-operation and Development (OECD) AI PrinciplesFootnote 3, the European Union (EU) Ethics Guidelines for Trustworthy AIFootnote 4, and the Institute of Electrical and Electronics Engineers (IEEE) Global Initiative on Ethics of Autonomous and Intelligent Systems.Footnote 5

Under the rubric of responsible AI, there is a sense that the wide social impact associated with AI adoption requires an elevated level of policy and legislative action to accelerate progress and coordinate a governance response across many sectors. However, these approaches also carry the risk of an “exceptionalism” (McQuillan, 2015; Calo, 2015) in AI governance. Such exceptionalism overlooks existing institutional and policy roles and capabilities and does not fully consider local digital and data histories. Perceptions that AI possesses unique qualities or capabilities that set it apart from other forms of technology can lead to exaggerated expectations or fears about its impact on society. These, in turn, can drive policy processes that are disconnected from their broader environment. This is likely to result in various unintended consequences. Amongst others, we may see resistance to proposed laws, a duplication of governance roles and resource requirements, and increased complexity of decision-making and regulation across the digital governance ecosystem. All of this is particularly concerning for technology governance in African countries. It can reinforce elite dominance of policy activity and deepen public officials’ dependence on consultants, potentially stifling beneficial innovation and increasing the risk of harm (Guihot et al., 2017; Larsson and Heintz, 2020; Smuha, 2021; Plantinga, 2024). Moreover, the current approach disregards existing policy and regulatory capabilities that already address the use of data, algorithms, automation, and information and communication technology (ICT). These capabilities offer opportunities for policy learning and adaptation to emerging needs.

Building a responsible AI ecosystem requires a broad range of capabilities, amongst which interdisciplinary collaboration is particularly important. For responsible AI policy to be developed, policymakers require domain-specific governance capabilities with respect to legislation and regulation. However, the challenges of AI also require cross-cutting technical expertise, for example, a sufficient understanding of machine learning (ML) and algorithms. This needs to be matched with capabilities for engaging with social and ethical issues. These draw on insights from the social sciences regarding the implications of AI for culture, behaviour, employment, education, and human interactions. They also draw on debates about ethical issues such as fairness, transparency, accountability, privacy, and autonomy (Filgueiras et al., 2023).

The aim of this paper is to develop a richer picture of where these capabilities exist and to consider pathways for facilitating policy learning around a responsible way forward for AI in Africa. It is critical that policy actors acquire knowledge and insights from past experiences, data, experimentation, and adjacent fields to inform the development and refinement of AI governance and regulation. Such policy learning is particularly important in the African context, as policy stakeholders need to address multidimensional societal challenges whilst navigating distinctive institutional environments and political aspirations. A widely promoted objective on the African continent is to “leapfrog” technology adoption. This is often justified by examples from mobile telephony and fintech, where the conventional infrastructure and services of fixed-line telephones and branch-based banking were “skipped” over (Swartz et al., 2023). At the same time, building sufficient state capacity remains a challenge. Such capacity is needed to effectively support and regulate emerging industries and to realise leapfrogging or other social and economic goals, yet there is a significant dependence on international donor funding and consultants (Heeks, 2002). A contextually appropriate approach to AI governance requires deeper reflection on technology possibilities and how available capacities can be strengthened or prioritised in pursuit of certain technical objectives. But there is also a more fundamental need to consider what values and ethics constitute our societies and to allow these to open up alternative pathways to AI governance and adoption (Mhlambi and Tiribelli, 2023).

To map existing capabilities, and to identify potential areas for policy learning, we first review existing literature to consider whether and how a more “moderate” (Calo, 2015) or contextually anchored form of exceptionalism (if any) may be applied to AI governance on the African continent. Then, we use the UNESCO Recommendation on the Ethics of AI (“UNESCO Recommendation”) (UNESCO, 2021a) as a relatively holistic framework to identify domains and subdomains relevant to AI governance. Finally, we apply (and add to) this framework through a study of emerging and established AI, algorithm, automation, data, and ICT governance examples from twelve African countries and consider how they could be relevant to AI governance going forward.

2. Policy learning for AI governance

In seeking a more granular view of AI-relevant governance capabilities, there is a need to better understand what AI, algorithms, and automation are, who is involved in policy and legislation activities, and how governance takes place. Unfortunately, science fiction depictions of AI continue to reinforce its exceptional character and lead to vague narratives about its operation and (future) impact on society. When the scope of a definition is too broad, the potential for developing impactful policy instruments or enforcing regulation is low. As Buiten suggests, “the narrative of AI as an inscrutable concept may reinforce the idea that AI is an uncontrollable force shaping our societies” (2019: 46). Even our attempts to support a more responsible approach to AI can reinforce this thinking. For example, in seeking to develop regulations that can “control” an “intelligent” technology’s “behaviour” or ensure it is “trustworthy,” we risk associating it with human characteristics and creating an impression of autonomy that is not there. Similar considerations apply to the use of metaphors, a key tool for policy actors involved in cyberlaw and related fields (Calo, 2015; Sawhney and Jayakar, 1999). It is therefore important to develop more specific language and appropriate metaphors around how AI is developed and used.

2.1 Defining AI and implementation principles

We can start by looking at how AI is defined in current policy narratives. Whilst the UNESCO Recommendation points to an AI system’s “capacity to learn” as a core capability, it also identifies two broad sets of methods: more human-configured, “symbolic” machine reasoning and more data-driven, “neural” ML (UNESCO, 2021a: 10; Sarker et al., 2021). Similarly, the OECD suggests that AI models may be built using data, human knowledge, or both (OECD, 2019a; OECD, 2022). Data-built models are updated automatically as new data are received, whereas human knowledge tends to be more static, taking the form of fixed rules that determine automated responses or actions. The OECD and UNESCO policy statements therefore encompass data-driven and symbolic forms of AI, separately or in hybrid form, and centre on the more or less automatic operation of a computer system. For the regulation of more symbolic forms of AI, the focus is likely to be on how humans configure fixed rules and whether the system operates as expected. For more neural approaches to AI, much of the regulatory attention will be on the type of data used for training models, how the training is performed, the way new data are used to update the model, and the accuracy (and fairness) of predictions or automated actions (Mehrabi et al., 2021). In the second case, our understanding of “automatic” is potentially much broader, applying both to the operation of the model and to how the model is continually updated.
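For readers less familiar with the two families of methods, the following Python sketch may make the distinction more concrete. It is a hypothetical illustration only. It contrasts a fixed, human-configured rule with a simple data-driven model whose parameters update automatically as new labelled cases arrive. The loan-eligibility scenario, the names `symbolic_decision` and `OnlinePerceptron`, the thresholds, and the choice of a perceptron as the learning rule are all assumptions, not drawn from the UNESCO or OECD texts.

```python
from dataclasses import dataclass, field


def symbolic_decision(income: float, debt: float) -> bool:
    """Human-configured ("symbolic") rule: behaviour is fixed until a person edits it.

    The thresholds are hypothetical values chosen for illustration only.
    """
    return income >= 1000 and debt / income < 0.4


@dataclass
class OnlinePerceptron:
    """Data-driven model whose weights change automatically as labelled cases arrive.

    A single perceptron is an assumption made for brevity; real systems are far larger.
    """
    weights: list[float] = field(default_factory=lambda: [0.0, 0.0])
    bias: float = 0.0
    learning_rate: float = 0.1

    def predict(self, features: list[float]) -> bool:
        # Weighted sum of the (hypothetical, pre-scaled) input features plus bias.
        score = sum(w * x for w, x in zip(self.weights, features)) + self.bias
        return score > 0

    def update(self, features: list[float], label: bool) -> None:
        # Perceptron learning rule: parameters move only when the prediction was wrong,
        # so the model's future decisions depend on the data it has already seen.
        error = int(label) - int(self.predict(features))
        if error != 0:
            self.weights = [
                w + self.learning_rate * error * x
                for w, x in zip(self.weights, features)
            ]
            self.bias += self.learning_rate * error


if __name__ == "__main__":
    case = [1.0, 0.2]  # hypothetical scaled features for one applicant
    model = OnlinePerceptron()
    print(symbolic_decision(2000, 500), model.predict(case))  # prints: True False
    model.update(case, True)  # a new labelled case arrives
    print(symbolic_decision(2000, 500), model.predict(case))  # prints: True True
```

A regulator reviewing `symbolic_decision` can read the rule directly and check whether the system behaves as configured. For `OnlinePerceptron`, the same input can yield a different decision after `update` is called. This is why oversight of data-driven systems extends to the training data and the update process itself.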

Defining “responsible” AI requires us to look beyond the technology at the wider network of people and infrastructure in which it is deployed, and at the values or principles informing its development and use (Raso, 2021). This is particularly important in African contexts. There, gaps between system design and local values or culture have been identified as a key reason for the failure of many information technology projects on the continent (Heeks, 2005). Transparency, accountability, and fairness are examples of ethical principles that are now widely promoted and adopted (OECD, 2021). However, AI principles (and their definitions) can vary across contexts. Autonomy is one example. Reflecting on the implications of AI for autonomy, Mhlambi and Tiribelli (2023) note that this principle is usually conceived of in an individual, rational sense. However, they argue, in many African contexts autonomy is relational, grounded in the idea that an “individual cannot exercise autonomy for an individual is connected to and is a person through others” (Mhlambi and Tiribelli, 2023: 875). Reflecting this perspective, the African Charter on Human and Peoples’ Rights (ACHPR) is distinctive amongst international treaties in including “third generation” collective or solidarity rights in addition to individual rights. It therefore provides a potential basis for a more relational form of AI governance. However, whilst much has been made of grounding AI adoption in relational African values, there is a need for concrete engagement with what those values look like and how individual and collective rights are balanced with respect to AI (Metz, 2021).

2.2 Governance stakeholders and structures

As noted earlier, the general-purpose nature of AI and its expected impact on society have been used to justify a more centralised approach to its governance. However, AI policy and regulation have both horizontal and vertical dimensions. Horizontal dimensions apply to AI in general, regardless of the sector or domain of application. Typical horizontal questions include those of data privacy, bias, intellectual property rights, infrastructure, skills and capabilities, and societal risk. At the same time, the application and regulation of AI differ markedly across sectors, which constitutes the vertical dimension. In the OECD’s 2021 update on AI policy, almost all of the evaluated countries focused on health care, with transportation, agriculture, energy, and public administration also prominent (OECD, 2021: 42). These are similar to the sector priorities identified by African public officials in a recent survey (Sibal and Neupane, 2021). They also provide an indication of who the key stakeholders in AI governance may have been so far.

In seeking to frame AI governance through an African lens, Wairegi et al. (2021) suggest that stakeholders may be understood through the different normative claims they make with respect to the technology’s implementation. These claims are centred on three basic stakes: economic opportunity, political equality, and authenticity. Much of the early governance space on AI was occupied by private sector entities prioritising economic opportunity for the firm, whilst governments have sought to assert leadership and shift the focus towards political equality. As a result, AI governance has taken a hybrid form, with governments sharing responsibility with academia and the private sector (Radu, 2021). Hybrid models are seen as valuable to governments because much of the knowledge about the technology is concentrated in private sector or academic organisations (Taeihagh, 2021).

In reality, the drive for responsible AI governance has enrolled a diverse spectrum of expertise, spanning legal, technology, business, and social domains (Filgueiras et al., 2023). Clearly, technology and operational expertise are pivotal for explaining how systems operate and identifying opportunities and risks that need technology, legal, or social responses. Social and governance expertise is essential for understanding the societal impacts and ethical implications of AI technologies, and for fostering inclusive dialogue to address concerns related to equity, bias, and fairness. Going further, there have been attempts to facilitate bottom-up engagement in policy formulation. Here, the wider “public” may be involved in a “social dialogue” about what should form part of a national AI strategy (OECD, 2021: 20). This dovetails with UNESCO’s support for more bottom-up approaches to AI policy and oversight in Africa, which encourages broad public involvement to ensure that AI adoption is “rooted” in local reality (UNESCO, 2022). Ultimately, this underscores the interdisciplinary nature of AI governance and emphasises the importance of fostering collaboration across diverse fields of knowledge and practice.

In general, however, the technical nature of AI, the pace of policy activity, and the breadth of expected policy impact mean that only a very small proportion of potentially affected stakeholders take part in policy deliberations (Radu, 2021). In addition, there is a significant asymmetry in access to information amongst the different actors, such as between technology companies and local regulators (Taeihagh, 2021). In AI, this asymmetry is exacerbated by the opaque character of the technology (Rudin, 2019). As a result, many African states are unable to assemble sufficient internal policy and administrative capacity to effectively navigate emerging technologies and engagements with global platform companies (Heeks, 2021).

2.3 Policy learning

One way in which African states are seeking to build governance expertise (and to address the uncertainty about AI impact and the effectiveness of governance actions) is by adopting adaptive policy and regulatory approaches (Taeihagh, 2021). These are usually anchored in one or more “soft” law approaches. They typically involve iterative policy development and adjustment as information is received about the effectiveness (or not) of interventions. Some of these strategies include temporary and experimental legislation with sunset clauses; anticipatory rulemaking that draws on decentralised, institution-specific feedback; and increased use of data collection and analysis to assess regulatory impact. Others include iterative development of common law, and legal foresighting to explore future legal developments and to develop a shared understanding and language about future possibilities (Guihot et al., 2017: 443–444).

A widely promoted instrument in African AI governance is the regulatory sandbox. This interest in sandboxes may stem from previous exposure to them in the fintech space, but also in public administration settings.Footnote 6 As the World Economic Forum (WEF) suggests, sandboxes allow for technology to be tested in as “real an environment as possible before being released to the world” (WEF, 2021: 20). A sandbox aims to support cooperation between regulatory authorities and with other stakeholders, and to create a controlled environment for testing and understanding the regulatory implications of AI (OECD, 2023: 19).

Another mechanism of interest is an independent evaluation or assurance organisation that can assess the suitability of different AI-based technologies for adoption. For example, the ITU-WHO Focus Group on Artificial Intelligence for Health aims to establish a “standardized assessment framework for the evaluation of AI-based methods for health, diagnosis, triage or treatment decisions.”Footnote 7 At the same time, there are calls for independently produced empirical evidence to demonstrate the safety and efficacy of these technologies, which is critical for their introduction into certain sectors (see, for example, Seneviratne et al., 2019).

Whilst sandboxes and independent assessment may help policy officials grow their understanding of emerging technologies and the possible impact of a specific product on consumers, they provide more limited insights into the systemic effects associated with the introduction of data-driven digital services. In addition, sandboxes are generally small, closed environments. Unless they are carefully designed to incorporate a broader community of participants, along with rules on transparency or disclosure, they can further obscure the already opaque character of technology-regulatory relationships (Wechsler et al., 2018). The same applies to pilot projects and public–private partnerships (PPPs), in which governments involve “investor” partners to share the costs and risks. These arrangements often use proprietary technologies or operate under confidential terms, and in the presence of immature or non-existent regulatory safeguards. All of this can increase the risk of regulatory capture and lock-in to certain business models (Guihot et al., 2017). Again, these critiques reinforce the concern that, via these types of partnership models, AI is coming into use through a “state of exception” (McQuillan, 2015: 568).

Therefore, understanding how AI implementation is (and can be) embedded in existing values and rights-based frameworks and legal safeguards is critical for its ongoing legitimacy and sustainable adoption on the African continent. Such frameworks include country constitutions, public service charters, and international commitments; such safeguards include public procurement and data protection regulations. We must also be aware of path dependence, that is, how established processes and capabilities (or the lack thereof) shape future outcomes. Equally, various supportive institutional and governance preconditions must be met for adaptive or dynamic forms of policymaking to succeed (Moodysson et al., 2017; Guihot et al., 2017). A key concern of this paper is the lack of awareness of what exists or has been done previously. Often, with emerging technologies, new “advocacy communities” develop that may not be familiar with work taking place in adjacent sectors and in previous generations of governance. In addition, the institutional memory held by public officials and politicians may be lost when they change roles or committees across election cycles (Rayner, 2004). To address these issues, policy actors may look to support “institutional entrepreneurship” and policy learning across the wider socio-economic system (Labory and Bianchi, 2021: 1836).

3. Methodology

The objective of this paper is to contribute to a greater awareness of established and emerging policy and legislative capabilities relevant to Africa’s AI governance. To do so, we conducted a desktop-based, qualitative review of content contained in public policy documents, legislation, and government websites across twelve African countries. The research process involved two main steps that were implemented and updated iteratively as information sourcing and analysis progressed.

3.1 Development of initial framework

The first step involved the development of an initial framework based on a content analysis of the Policy Areas section of the UNESCO Recommendation. Several other global and regional initiatives provide guidance on AI governance. However, the UNESCO Recommendation aligns closely with the main basis of our research: that AI development and use should be sensitive to regional diversity, context, and impact, with “due regard to the precedence and universality of human rights” (UNESCO, 2021a: 28) and the primacy of the rule of law (Adams, 2022). The Policy Areas of the UNESCO Recommendation tend to focus on themes relevant to the organisation’s mandate, such as education and culture. Even so, the text goes further in considering a range of social and economic instruments and outcomes. In addition, we drew on other frameworks and statements, such as the OECD’s Recommendation of the Council on Artificial Intelligence (OECD, 2019b), to expand on the sub-Policy Areas discussed below.

3.2 Sample country and content analysis, with framework review

The second step involved desktop-based policy and legislation sourcing and content analysis, according to the following inclusion criteria:

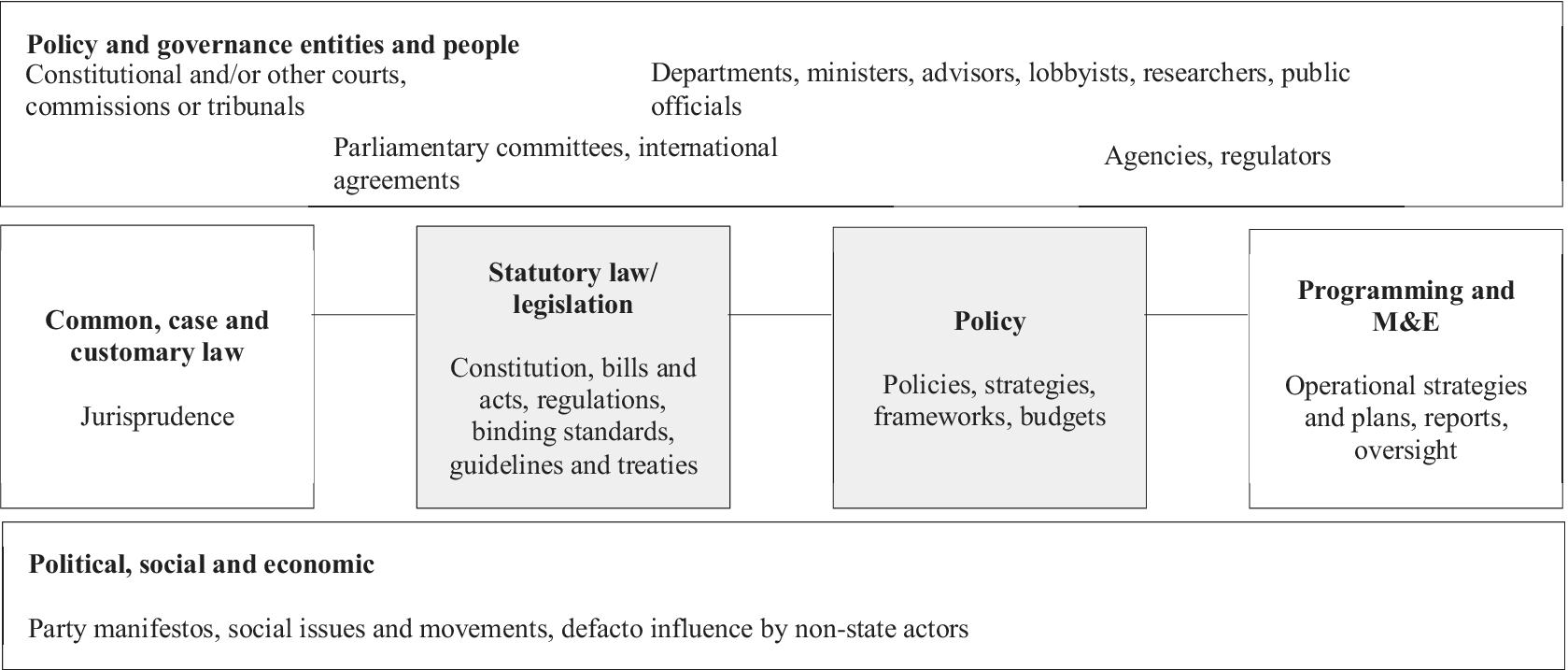

• Type of information sources: As indicated by the grey shading in Figure 1, information sourcing was focused on public interest policy and legislation documents published by executive and legislative branches of government—both approved or enacted, and draft or tabled. This means that we excluded material on jurisprudence, intra-organisational or departmental policies, the status of implementation of policy or governance interventions, and evidence of non-governmental or de facto influence on public interest decision-making.

• Jurisdiction: Only content from the national level of government was included. However, this encompassed national government references to, and activities at, the international and sub-national levels of governance.

• Date range: The focus of content sourcing was on material published between 2018 and 2023. However, influential policy or legislation published earlier, such as constitutional provisions on human rights, was also included or referenced.

• Policy areas: At first, all policy areas from the UNESCO Recommendation framework were explored at a high level to identify domains in which there were potentially interesting cases of existing algorithm, automation, data, ICT, and AI-related governance. Then, a limited number of focus Policy Areas and sub-Policy Areas were selected for in-depth content analysis. The final sub-Policy Areas included were the following:

1. Strategy and multi-stakeholder engagement: This is a sub-Policy Area of Ethical governance and stewardship and typically included AI, 4IR, ICT, and digital strategies developed out of a ministry of ICT or president’s office; usually with the aim of initiating a cross-cutting programme of work across several sectors and attempting to involve multiple stakeholders in governance activities.

2. Human dignity and autonomy: This is a cross-cutting sub-Policy Area of Communication and information, Health and social well-being, and Gender. Here, the focus was on electronic transactions legislation that enables, and defines the boundaries of, automated information processing, including requirements for accountability and human oversight.

3. Sector-specific governance: This focuses on sub-Policy Areas related to Economy and labour, Public sector adoption, and Environment and ecosystems.

• Countries: Ten African countries were included in the analysis based on the location of work by research partners, largely around Southern and East Africa: Botswana, Kenya, Malawi, Mauritius, Mozambique, Namibia, Rwanda, South Africa, Zambia, and Zimbabwe. Two additional countries were added to ensure the sample included cases from West and North Africa: Benin and Egypt. Whilst the included countries are biased towards the southern and eastern regions and a single language (English), they do cover a broad spectrum of population and economic sizes and are therefore a useful starting point for comparative analysis.

Figure 1. Policy and legislative landscape with scope of data collection highlighted in grey.

The process of desktop information collection involved identifying and browsing key government department or agency websites for each country, usually the ministry of ICT, ICT regulatory authority, state ICT agency, and personal data protection authority. We also consulted regional and global legislation databases, including ICT Policy AfricaFootnote 8, International Labour Organisation (ILO) NATLEX,Footnote 9 and World Intellectual Property Organization (WIPO) Lex Database.Footnote 10 Finally, we performed an open internet search for AI-related policy or legislative activities for each country. In this way, we were able to locate the majority of relevant documents and, by triangulating across these sources, determine whether we had saturated document sourcing on the most relevant or recent material.

To explore the retrieved documents, and to support inclusion or exclusion decisions, we started with a search for algorithm, automation, and intelligence-related keywords. This allowed us to quickly identify text that had some direct relevance to AI policy or regulatory capabilities. We then performed more in-depth scanning and reading of documents to understand the context of policy or legislation and to identify other relevant sections of text. This was used in the final analysis and compilation of findings below. Initial actions were therefore largely deductive, guided by the UNESCO Recommendation and our initial framework, and became more inductive as content was analysed and the framework was updated.
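The paper does not state whether this keyword screen was performed manually or with software. The Python sketch below is a hypothetical illustration of how such a first pass could be scripted. It flags paragraphs containing algorithm-, automation-, or intelligence-related terms for closer reading. The keyword stems, the blank-line paragraph-splitting rule, and the function name `screen_document` are assumptions, not the authors' procedure.

```python
import re

# Hypothetical keyword stems; the paper mentions "algorithm, automation, and
# intelligence-related keywords" but does not list the exact search terms.
KEYWORD_PATTERN = re.compile(r"\b(algorithm\w*|automat\w*|intelligen\w*)", re.IGNORECASE)


def screen_document(text: str) -> list[tuple[int, str]]:
    """Return (paragraph index, matched term) pairs for paragraphs worth close reading.

    Paragraphs are assumed to be separated by blank lines.
    """
    hits = []
    for index, paragraph in enumerate(text.split("\n\n")):
        for match in KEYWORD_PATTERN.finditer(paragraph):
            hits.append((index, match.group(0).lower()))
    return hits


if __name__ == "__main__":
    sample = (
        "Data protection applies to all processing.\n\n"
        "A decision based solely on automated processing is restricted.\n\n"
        "The Authority may use artificial intelligence."
    )
    print(screen_document(sample))  # prints: [(1, 'automated'), (2, 'intelligence')]
```

Flagged paragraphs would still require the in-depth reading described above. A keyword match alone says nothing about whether the text actually bears on AI governance, and relevant provisions may use none of the chosen terms.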

In the following sections, we provide an overview of the UNESCO Recommendation and our initial framework. We then present results from the content extraction and analysis from the selected countries.

4. Learning from the UNESCO Recommendation

The UNESCO Recommendation was adopted by all 193 member states in November 2021 as “a globally accepted normative instrument” to “guide States in the formulation of their legislation, policies or other instruments regarding AI, consistent with international law” (UNESCO, 2021a: 14). Adoption of this type of recommendation by member states is voluntary. Nonetheless, because such recommendations are endorsed at the highest level, they are seen as “possessing great authority” and “intended to influence the development of national laws and practices.”Footnote 11 The intention is clearly that the statements in the UNESCO Recommendation set a direction for national AI policy and legislation.

Both the voluntary nature and the explicit intent to influence are important to keep in mind, as the UNESCO Recommendation, like other recommendations, instructs states to adopt quite specific values, principles, and policy actions. This can seem at odds with calls for, and the reality of, the more bottom-up governance processes that governments and citizens may be pursuing.

In structure, the UNESCO Recommendation is similar to, for example, the UNESCO Recommendation on Open Science, also published in 2021 (UNESCO, 2021b). The core of the document outlines a set of values that inspire “desirable behaviour,” followed by a set of principles that “unpack the values underlying them more concretely” so that “the values can be more easily operationalized” in the third part: a set of policy statements and actions that states should or must take (UNESCO, 2021a: 18).

In developing its rationale, the UNESCO Recommendation suggests that ethical values and principles “can help develop and implement rights-based policy measures and legal norms” (UNESCO, 2021a: 6). It therefore treats ethics as a vehicle for shaping the human rights-based policy instruments and law that it sees as the main drivers of responsible AI outcomes. This paper sets aside questions about the role of ethics in AI, especially as it relates to other critical themes such as decolonisation, autonomy, and the politics of intelligence (Adams, 2021; Mhlambi and Tiribelli, 2023). Even so, it is notable that the UNESCO Recommendation draws on ethics as a “holistic,” “comprehensive” framework of “interdependent values, principles and actions” to guide society’s response to AI (UNESCO, 2021a: 10). It is therefore ambitious in the breadth of its attempt to define an agenda for responsible AI governance.

Following the discussion in the previous sections of this paper, it is interesting to see how the UNESCO Recommendation approaches some of these themes. For example, as noted earlier, the definition of AI in this document is similar to that used by the OECD, including a broad spectrum of approaches between more symbolic/human-configured and more neural/data-driven approaches.

From a sector perspective, as may be expected, the UNESCO Recommendation focuses on AI implications in relation to “the central domains of UNESCO: education, science, culture, and communication and information” (UNESCO, 2021a: 10). Nonetheless, significant weight is given to economic and environmental sectors, amongst others.

Of particular relevance to this paper is whether and/or how the UNESCO Recommendation provides guidance on governance processes and arrangements. There is a strong emphasis on alignment with international human rights law. The Recommendation also suggests that the “complexity of the ethical issues surrounding AI necessitates the cooperation of multiple stakeholders” (UNESCO, 2021a: 14), and accordingly it repeatedly encourages multi-stakeholder cooperation and public consultation. In some areas, the guidance is more specific, such as proposing that states establish a “national commission for the ethics of AI” (UNESCO, 2021a: 41). Similarly, with regard to the use of AI by public authorities, governments “should” adopt a regulatory framework that sets out the procedure for ethical impact assessment, with associated auditability and transparency, including multidisciplinary and multi-stakeholder oversight (UNESCO, 2021a: 26). Otherwise, aside from references to personal data protection and the emphasis on international human rights law, there is only occasional mention of existing policy or legislation, or of associated governance arrangements or policy learning processes. Most significant is a paragraph under Policy Area 2: Ethical Governance and Stewardship that gives countries a sense of the governance journey, from establishing norms to developing legislation, and of learning while doing through policy prototypes and regulatory sandboxes:

In order to establish norms where these do not exist, or to adapt the existing legal frameworks, Member States should involve all AI actors (including, but not limited to, researchers, representatives of civil society and law enforcement, insurers, investors, manufacturers, engineers, lawyers and users). The norms can mature into best practices, laws and regulations. Member States are further encouraged to use mechanisms such as policy prototypes and regulatory sandboxes to accelerate the development of laws, regulations and policies, including regular reviews thereof, in line with the rapid development of new technologies and ensure that laws and regulations can be tested in a safe environment before being officially adopted (UNESCO, 2021a: 28).

More recently, UNESCO has published a Readiness Assessment Methodology (RAM) to help member states assess their current skills and infrastructure readiness to develop and adopt AI, as well as their institutional and regulatory capacity to “implement AI ethically and responsibly for all their citizens” (UNESCO, 2023: 6). The RAM goes on to explore whether countries have, for example, developed an AI strategy, or whether there are indirect influences on AI-related regulation. It also reflects on a range of potentially relevant legislation and policy, starting with data protection but extending to due process and public sector capacity.

Whilst acknowledging that there are likely to be concerns and questions about the content of the UNESCO Recommendation and RAM, and about how they are to be interpreted or implemented locally, these documents do provide a relatively comprehensive narrative from which to start exploring the landscape of policy and legislation that AI initiatives may intersect with.

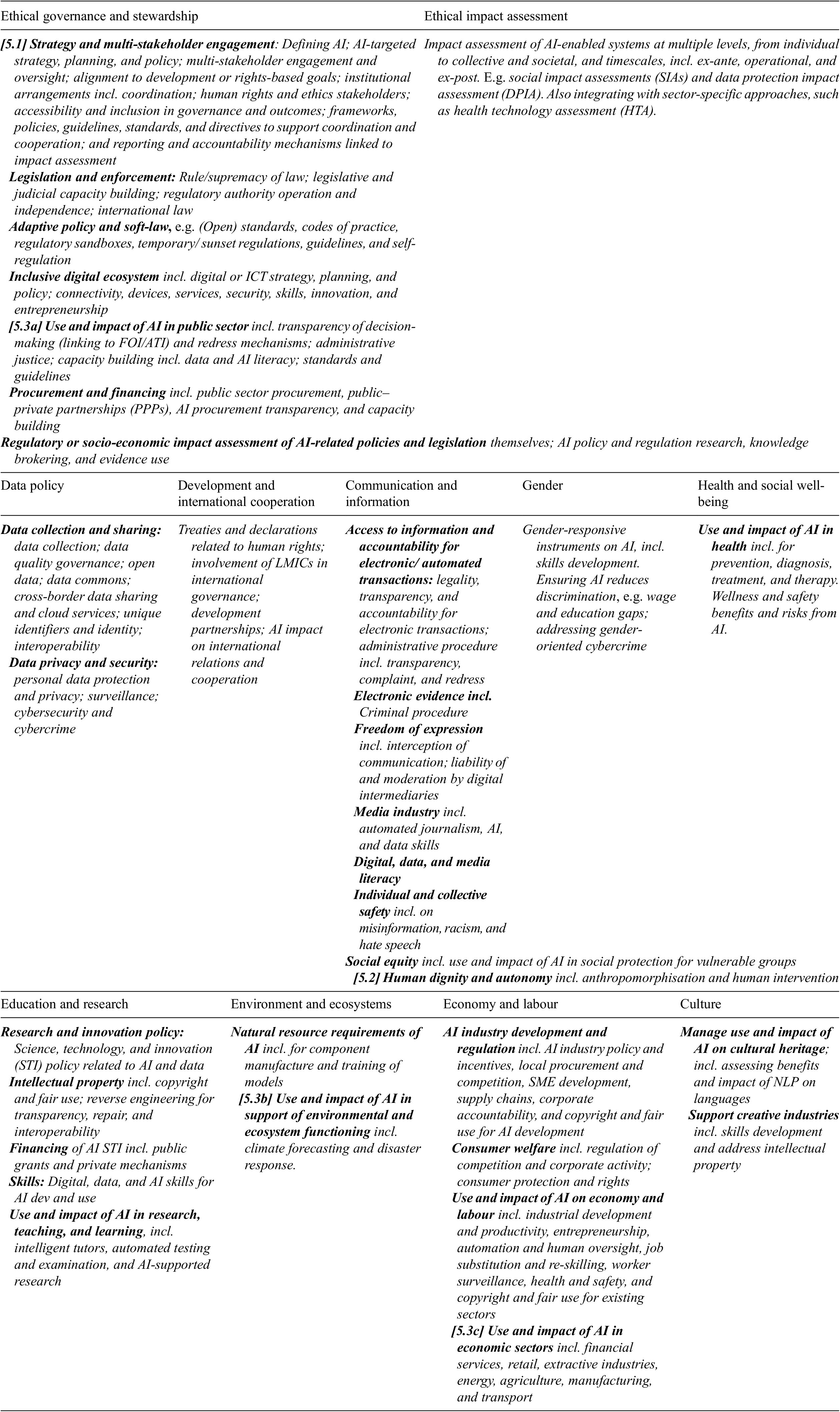

Based on this narrative, we developed an initial framework, which was then refined during the country analysis; the final version is outlined in Table 1. The framework was mainly used to organise the Policy Areas of the UNESCO Recommendation into a quick reference for sourcing, screening, and analysis, and as a placeholder for adding sub-Policy Areas and associated content as the analysis progressed. The following section presents the results of our analysis and highlights potential opportunities for policy learning.

Table 1. Legislation and policy activities identified via Policy Areas in UNESCO Recommendation on the Ethics of AI

5. Learning from policy and legislation in Africa

There is an established and growing history of technology governance in African countries that will be critical to AI adoption going forward. To better leverage existing capabilities for AI, we may look to the experiences and critiques covering decades of information and decision-support system implementation, often in niche applications; from the medical expert systems of the 1980s (Forster, Reference Forster1992) to the growth of personal data protection legislation (Daigle, Reference Daigle2021), the expansion of digital and biometric national identity systems (Breckenridge, Reference Breckenridge, Dalberto and Banégas2021), and wider e-government and statistical or open data programmes (Davies et al., Reference Davies, Walker, Rubinstein and Perini2019).

More recently, there have been investigations into how existing law applies to automated data processing (Makulilo, Reference Makulilo2013) and now more explicitly to AI (Shilongo et al., Reference Shilongo, Gaffley, Plantinga, Adams, Olorunju, Mudongo, Schroeder, Chanthalangsy, Khodeli and Xu2022). For example, a common provision in personal data protection legislation is that data subjects cannot be subject to legal or similar effects arising from a decision based solely on automatic data processing. However, this constraint is limited to decisions and to the use of personal data, which means that other types of data and forms of data use are not covered (Razzano et al., Reference Razzano, Gillwald, Aguera, Ahmed, Calandro, Matanga, Rens and van der Spuy2020: 45).

The interest in AI has elevated discussions about algorithm, data, and technology ethics and helped deepen our understanding of their often unequal impact on different populations. The current window of AI-related activity is therefore also an opportunity to improve our awareness of algorithm, automation, and data policy and to critically reflect on which governance capabilities need to be strengthened (or created).

5.1 Strategy and multi-stakeholder engagement

The first Policy Area explored is Strategy and multi-stakeholder engagement. A growing number of countries are pursuing national AI strategies. Their character is reflected in Smart Africa’s AI BlueprintFootnote 12, which suggests that an AI strategy is important for demonstrating leadership and setting an inclusive vision at a national level. Because such a vision cuts across government, one of the key tensions to manage is the role of, and cooperation between, different national ministries. Nonetheless, ministries of ICT seem to be the main home for AI-related policy, from EgyptFootnote 13 and BeninFootnote 14 to South AfricaFootnote 15 and Rwanda.Footnote 16

These strategies have involved different stakeholders in similar ways. As in Mauritius,Footnote 17 Egypt’s strategy was developed by a national council made up of government officials and “independent experts,” with private sector input.Footnote 18 South Africa’sFootnote 19 and Kenya’sFootnote 20 reports addressing AI policy direction were also developed by government-academia-private sector advisory bodies.

Soft law and multi-stakeholder mechanisms are at the centre of these countries’ proposed approach to AI governance. Sandboxes are mentioned regularly in strategies and are active in a number of countries, mainly in the fintech space.Footnote 21 The sandbox idea is noted in the Benin National AI and Big Data Strategy where one of the specific objectives is to “update” the institutional and regulatory framework for AI by establishing “a controlled environment for the development of AI initiatives.”Footnote 22 Often these types of initiatives involve private sector or donor support in the form of finance, skills, and technology.

In general, public-private partnerships (PPPs) and donor partnerships are seen as critical to the development of ICT infrastructure, services, skills, and governance capabilities on the continent. Rwanda is establishing various mechanisms in this direction, such as a “shared risk fund” for government AI projects and a co-investment fund for AI start-ups and incubators.Footnote 23 Beyond AI, in Malawi, PPPs are important both to the financial services regulatory sandbox hosted by the Reserve Bank of MalawiFootnote 24 and to the development of government e-services.Footnote 25 In addition, the World Bank-financed Digital Malawi Project is coordinated by the PPP Commission (PPPC) and governed by the PPP Act,Footnote 26 the Public Procurement Act,Footnote 27 and the World Bank’s procurement rules. This arrangement seems to support a relatively transparent procurement and implementation approach, with regular updates on the project website about bidding and award activities. Members of the public can also raise concerns through a Grievance Redress Mechanism.Footnote 28 There is some recognition, too, of the need for public officials to be equipped to manage PPPs. Malawi’s Digital Government Strategy emphasises the need to build capabilities in government departments and agencies “for the purpose of managing sourcing of ICT systems/services […] including Vendor & Contract Management, Quality Assurance, Service Level Management.”Footnote 29 Mozambique’s Strategic Plan for the Information Society similarly recognises the need to develop guidelines for public officials on the procurement of ICT programmes and equipment.Footnote 30

Although “multi-stakeholder,” the above mechanisms are largely confined to national technology and finance actors and focus on economic or technology goals. Nonetheless, many of the emerging AI strategies are also explicit about broader ethics principles and the involvement of a more diverse spectrum of entities. Mauritius calls for a “code of ethics about what AI can and can’t do.”Footnote 31 Egypt’s National AI Strategy considers bias that may arise when transferring a model from one context to another, as well as the unequal impact on employees.Footnote 32 In fact, the country’s National Council for AI has gone on to publish a Charter for Responsible AI that seeks to articulate “Egypt’s interpretation of the various guidelines on ethical and responsible AI [including UNESCO and the OECD], adapted to the local context and combined with actionable insights.”Footnote 33 Rwanda is also explicit in its AI Policy about placing “responsible and inclusive AI” at the centre of its mission and in seeking to embed AI ethics in government operations. This is to be supported by “society consultations,” perhaps pointing to a role for more diverse publics to provide input or oversight.Footnote 34

The Benin Strategy makes an interesting point about accountability in suggesting that all government, private sector, and end user stakeholders

…will need to be involved in the design, implementation, and ongoing monitoring and evaluation of the strategy. This principle includes mutual accountability between duty bearers (State, Local Government, Private Sector Leaders, Civil Society Leaders) and rights holders (the general public and other specific beneficiaries).Footnote 35

Many of these countries are, or have been, active in the Open Government Partnership (OGP), which has been a source of learning both on technology use, especially around data sharing, and on how to implement multi-stakeholder governance (and the challenges associated with it). For example, the OGP handbook on managing multi-stakeholder forums includes practical examples of different membership and decision-making models, including cases from Ghana and other developing countries.Footnote 36

Malawi has previously signalled support for multi-stakeholder and public participation in its Digital Government StrategyFootnote 37, and Zambia’s Information and Communications Technology Authority has led initiatives focusing on gender inclusion, such as collecting sex-disaggregated data, establishing a Gender and ICT Portfolio, and developing a draft Gender and ICT Strategy.Footnote 38

In South Africa, there seems to be an attempt to drive inclusion at a more structural level through the 2019 White Paper on Science, Technology and Innovation (STI), which has direct relevance to AI. In addition to supporting investment in core technology research around AI, the White Paper is concerned about potential exclusion or harm resulting from AI, such as “the risk of gender biases being perpetuated through incorporation into AI applications.”Footnote 39 South Africa’s engagement with AI ethics dates back more than a decade, to the establishment of the Centre for Artificial Intelligence Research (CAIR), and a CAIR unit went on to play a central role in the development of the UNESCO Recommendation.Footnote 40

Across the continent, there are many other policies and authorities that take a direct stance on inclusion. For example, in Egypt, the National Academy of Information Technology for Persons with Disabilities (NAID) is an affiliate of the Ministry of Communications and Information Technology and a relatively unique entity on the continent. NAID has been exploring the link between AI and assistive technologies.Footnote 41 Moreover, the country’s National Human Rights Strategy was released in 2021 and addresses the emerging impact of technology on human rights (such as cybercrime) as well as the role of technology in supporting the inclusion of women and increasing awareness about human rights.Footnote 42 Similarly, in South Africa, the Human Rights Commission has been supporting work and facilitating workshops on AI since 2021Footnote 43, and there is a range of legislation and entities addressing discrimination and inclusion that could speak to responsible AI adoption.Footnote 44 Rwanda’s Child Online Protection Policy was published four years ago, but it is not clear whether any of its extensive set of recommendations have been implemented or could be applied to AI going forward.Footnote 45

5.2 Human dignity and autonomy

The second Policy Area examined was Human dignity and autonomy. The UNESCO Recommendation outlines quite broad concerns about human interactions with AI. It calls on member states to apply ethical AI principles to brain–computer interfaces, to ensure that users can easily determine whether they are interacting with a living person or an AI system imitating a person, and to allow users to refuse interaction with an AI system and request human intervention (UNESCO, 2021a: 37–38).

Perhaps the most mature area of policy or legislation concerning human–technology interaction in African countries is around electronic transactions and evidence. This was driven by the relatively mundane need to ensure that the exchange of email, digital documents, and electronic signatures is legally recognised. For example, Botswana’s 2014 Electronic Communications and Transactions Act (ECTA) determines that a contract is formed “by the interaction of an automated message system and a person, or by the interaction of automated message systems”Footnote 46 and recognises the admissibility of electronic records as evidence in legal proceedings, subject to the Electronic Records (Evidence) Act.Footnote 47 Similarly, Mozambique’s equivalent of an ECTA establishes the legal effect of electronic messages and signatures, including interactions between automated messaging systems “even if no person has verified or intervened.”Footnote 48 Namibia’s ECTA also provides for the admissibility of electronic evidence and procedures for determination of its evidential weight.Footnote 49 Importantly, across most of these countries’ ECTAs, a natural person has the right to correct or withdraw a data message.

In many countries, there are also transaction-related themes in personal data protection legislation. Zambia’s Data Protection Act is typical in defining the rights of data subjects, including rights related to interactions between natural persons and programmed or data-driven systems. Specifically, data subjects cannot be subject to legal or similar effects arising from a decision based solely on automatic data processing. In Rwanda, this explicitly extends to “profiling,” which is closer to how current AI systems work:

use of personal data to evaluate certain personal aspects relating to a natural person, in particular to analyse and predict aspects concerning that natural person’s performance at work, economic situation, health, personal preferences, interests, reliability, behaviour, location or movements. Footnote 50

Zambia’s Act is similar to Rwanda’s in being relatively explicit about an obligation for human intervention. Data controllers must

implement suitable measures to safeguard the data subject’s rights and freedoms and legitimate interests, including the right to obtain human intervention on the part of the data controller for purposes of enabling the data subject to express the data subject’s point of view and contest the decision. Footnote 51

We see similar sentiments in emerging AI governance. A general guideline in Egypt’s Charter for Responsible AI states:

Final Human Determination is always in place. This means that ultimately, humans are in charge of making decisions, and are able to modify, stop, or retire the AI system if deemed necessary. Individuals with that power must be decided upon by the owner of the system. Footnote 52

The importance of human oversight and determination—both individual and inclusive public oversight—is reiterated throughout the UNESCO Recommendation, as expressed below:

accountability must always lie with natural or legal persons and that AI systems should not be given legal personality themselves (UNESCO, 2021a: 28).

How existing legislation addresses accountability in electronic transactions could again be of interest or relevance to AI. Like equivalent legislation in other countries, Botswana’s ECTA has a specific section aimed at protecting consumers (usually the “addressees”) who engage in automated online transactions with an “originator.” This includes attributing accountability to the “originator” for messages sent by an “information system programmed by, or on behalf of the originator to operate automatically unless it can be proved that the information system did not properly execute such programming.”Footnote 53 Related provisions on the liability of internet service providers (ISPs) may also be relevant to AI. As with MozambiqueFootnote 54 and other countries, Botswana’s ECTA treats electronic communications service providers and similar intermediaries as a “mere conduit” if they do not select or modify data received from an originator. In this case, these entities will not bear civil or criminal liability for the communication.Footnote 55

Closely linked to the preservation of human dignity and autonomy is the need to address the anthropomorphisation of AI technologies, especially in the “language used to mention them.”Footnote 56 Anthropomorphic language is usually most evident in the micro-level descriptions of how AI works. This often starts with a definition of AI as mimicking the human brain (as in one definition of deep learningFootnote 57) or as performing “tasks that require intelligence without additional programming; in other words, AI involves human-like thought processes and behaviours.”Footnote 58 Elsewhere, the human-like character of AI is depicted across a range of use cases. The Mauritius strategy considers the impact of AI on government services where it is seen to “emulate human performance, for example, by learning, coming to conclusions or engaging in dialogues with people” and being able to “learn, reason and decide at levels similar to that of a human.”Footnote 59 The suggestion that AI “decides” is evident in Egypt’s classification of use cases according to levels of human or technology autonomy. For example:

“B. AI decides, human implements

• Identifying Risks in pipelines (Leakage, breakage, etc.)
• Staffing & Headcount projections
• Commodity Price Prediction, and procurement.”Footnote 60

In general, it seems that AI is being depicted in a more deterministic form than most other technologies, with high levels of autonomy and associated risk. Efforts to “control” AI are therefore a prominent theme. As with most countries, Mauritius expects the legal framework to provide “safeguards” or “guardrails” to guide the development and use of technology. Ultimately this is to ensure “that humans have the ability to override artificial intelligence decisions and maintain control over the technology.”Footnote 61 Of broader interest, though, are attempts to assert regional influence and “values” onto AI, which point to a growing agenda to define AI and its use locally at a more fundamental level.Footnote 62

5.3 Sector-specific governance

Ultimately, much of the impact of AI governance will be realised in sector verticals. In many policy areas, cross-cutting legislation will be used to initiate sector-specific regulations—whether determined by government or through self-regulation by stakeholders. For example, South Africa’s Information Regulator is approving codes of conduct as a “voluntary accountability tool” to support (not replace) the requirements of the Protection of Personal Information Act (POPIA).Footnote 63 In other countries and policy domains, there are similar examples of sectors having tailored technology governance to their specific needs that could be relevant to AI governance going forward.

Retail and consumer protection

As a relatively early technology adopter, the retail sector has been navigating a range of increasingly complex and significant governance issues related to e-commerce, data, and automation over the past decade. Whilst ECTA-like legislation laid the foundations for the legality of online purchasing, the growing dominance of international accommodation, taxi, and delivery platforms raises questions about consumer welfare and the viability of local competitors. Importantly, the welfare of consumers may fall within the remit of several, often overlapping, authorities and pieces of legislation, including technology (legality and privacy in electronic transactions), data protection (mainly privacy), competition (mainly pricing), and consumer protection (transparency and complaints handling). In addition, there are potentially many other sector-specific consumer protection actors.

From a competition perspective, a report by South Africa’s Competition Commission on the digital economy notes various areas of concern related to the global platform economy, one of which is control over data assets. Another is profit-maximising AI algorithms leading to (possibly unintentional) collusion on pricing.Footnote 64 Finally, there is a concern that platform recommendation algorithms are biased (“self-preferencing”) towards their own goods and services.Footnote 65 All of these competition issues are relevant to the value provided to consumers, and for addressing potential exploitation. In some countries, competition authorities may even be better resourced than data protection authorities to address privacy issues.Footnote 66

From a consumer protection perspective, in Namibia, a National Consumer Protection Policy aims to eliminate unfair and deceptive practices “directed at the poorest and most vulnerable members of society.”Footnote 67 Significantly, the policy recognises the diversity of existing legislation aimed at promoting consumer welfare and protection—from financial services to health and food—and is therefore developing a harmonised legal framework. Implementation would still be by sector regulators but with support from a central Consumer Protection Office. Similarly, the ECTA in Zambia acknowledges the need for cooperation between ICT regulators and overlapping entities, including consumer protection commissions, on issues such as “unfair trading” associated with electronic transactions.Footnote 68

Financial services

The financial services industry has been an advanced technology user on the continent, which has also meant exploring adaptive policy approaches, such as regulatory sandboxes around fintech.Footnote 69 In addition, financial service industry associations have been actively seeking to interpret and apply (or resist) regulations relevant to responsible or ethical AI. For example, the two approved codes of conduct on POPIA are for the Banking Association of South Africa (BASA) and the Credit Bureau Association (CBA).

BASA recognises that its members use automated decision-making “to provide a profile of the data subject” and outlines commitments on transparency and complaints procedures.Footnote 70 In its code, the CBA suggests that its members, such as Experian and TransUnion, “do not provide decisions or even the basis for a decision on credit in terms of Regulation 23A of the [National Credit Act, 34 of 2005 (NCA)]”Footnote 71 and does not mention the relationship between automated decision-making and profiling. The NCA and associated regulations describe the steps and methods for calculating affordability. Similar considerations apply to insurance regulation, which prohibits discrimination based on race, age, and gender, amongst other criteria that could form part of an AI-based risk rating.Footnote 72 Nuances of interpretation in the context of existing legislation and new laws, codes, and guidelines will become more important as AI adoption and impacts increase.

From a practice perspective, the expansion of model use in the banking sector (from financial risk modelling to human resources decision-making; Deloitte, 2021) and new international guidelines and regulations introduced after the financial crisis have meant that model risk management (MRM) is now an established capability in many African banks. The impact of AI on MRM on the continent is an emerging issue (Richman et al., Reference Richman, von Rummell and Wuthrich2019). The associated governance frameworks, although developed in a heavily regulated space, may offer opportunities for learning in other sectors.

Environment and ecosystems

Another field in which there is relatively strong evidence of public interest model governance is environmental impact assessments (EIAs). EIAs are based on regulated procedures of data collection and consultation with affected stakeholders and are therefore a potentially interesting space in which to consider the relationship between models and other forms of knowledge. In South Africa, the regulations around EIAs aim to ensure transparency around the method being used (and associated predictions of impact), whilst giving key stakeholders opportunities to understand the EIA process and outcomes and to make representations to relevant authorities where they have a disagreement.Footnote 73 Closely connected to EIAs are air quality impact assessments that are governed by a “code of practice” on air dispersion modelling. This code provides guidance on approved models that can be used. The code also requires that sufficient information is made available to allow authorities to make appropriate decisions.Footnote 74 EIAs are seen as likely targets for AI in other parts of Africa too. Concerns about AI impact are leading to a renewed interrogation of established algorithmic practices in French-speaking countries of sub-Saharan Africa, with researchers pointing out biases in the Leopold matrix and Fecteau grid as popular EIA tools (Yentcharé and Sedami, Reference Yentcharé, Sedami, Ncube, Oriakhogba, Schonwetter and Rutenberg2023).

More broadly, the environment and ecosystems sector may also provide insights into how data collection and use, as a key element of AI implementation, can be made more responsible. One of these insights relates to the empowerment of local stakeholders. For example, a recent commentary has considered how Namibia’s Nature Conservation Amendment Act of 1996 may be applied to data governance (Shilongo, Reference Shilongo2023). The Act makes provision for community conservation governance via the establishment of a Conservancy Committee that seeks to ensure not only that members of a community derive benefits from the use and sustainable management of conservancies and their resources but also that community members within a specific conservation constituency know their rights. Learning from such structures and legislation, an equivalent community data committee and/or worker could broker relationships with external technology providers and governance actors.

Public sector adoption

Ultimately, many of these sectors are themselves shaped by the more generic practices and rules of public administration, which can be treated as a sector in its own right. A key public sector policy action in emerging AI strategies is the training of public officials. To ensure “Trustworthy AI adoption in the public sector,” Rwanda is establishing capacity-building programmes for civil servants in regulatory, policymaking, or legislative roles, for those expected to use AI, and for those likely to be procuring AI solutions. This is to be supported by matching funding initiatives, hackathons, procurement guidelines, and maturity assessment.Footnote 75 Finally, there are plans to embed “AI Ethics” functions in ministry and agency digital governance environments.

At the same time, it is important to note that many countries already have established frameworks, policies, and legislation that provide direction to government entities and public officials on ethical issues. In South Africa, the Batho Pele principles in the 1997 White Paper on Transforming Public Service DeliveryFootnote 76 call on public service providers to ensure better provision of information, increased openness and transparency, and opportunities for the public to remedy mistakes and failures; all of which provide a framework for how the rights of people may be protected or fulfilled with increased AI use. Moreover, the law governing administrative justice speaks to the right of persons to “administrative action that is lawful, reasonable and procedurally fair” and provides that “everyone whose rights have been adversely affected by administrative action has the right to be given written reasons.”Footnote 77 This is becoming increasingly important for both private and public entities because of the expanding role of (and potential abuse by) digital and AI service providers in South Africa’s public sector (Razzano, Reference Razzano2020).

It is for these reasons that access to information (ATI) legislation has a key role to play in helping the public to understand what and how personal or other information was used in (algorithm-assisted or not) decision-making. African states continue to grapple with the passing of ATI legislation. As recently as 2022, the Namibian National Assembly passed an Access to Information Bill.Footnote 78 Where legislation has been enacted, implementation and enforcement continue to be a challenge, partly due to conflicts with secrecy or similar laws and norms, but also because of the administrative requirements for classifying and releasing information.

Going forward, coordination and accountability are seen as key issues for the public sector. Because AI and ICT more broadly are seen as cross-cutting issues, the ICT ministries and authorities are expected to play a central role in public sector projects and in supporting accountability. For example, in Egypt, the Charter for Responsible AI requires that

Government AI projects, similar to Digital Transformation projects, should be commissioned and supervised by Ministry of Communications and Information Technology (MCIT) in order to ensure compliance with these guidelines and the credibility and quality of data and developers involved in the development of AI systems. MCIT presents periodically on status of those projects to the National AI Council. [Accountability]Footnote 79.

Another example of efforts to assert central oversight and coordination is Kenya’s 2023 Government ICT Standards that define requirements that AI systems deployed in government entities must follow, including aligning with International Organization for Standardization (ISO) standards. This is to “meet critical objectives for functionality, interoperability, and trustworthiness.”Footnote 80

6. Discussion

When looking at AI governance in African countries, it is hard to say how much AI exceptionalism is warranted, and where dedicated policies, legislation, and regulatory institutions are needed (Calo, Reference Calo2015). However, by reflecting on existing capabilities, we can start to identify opportunities for policy learning between sectors and countries.

A key starting point is the role and operation of multi-stakeholder arrangements. These structures can enhance the legitimacy of policy processes, help governments access financial or technical resources, expand access to relevant information, and support oversight. They also carry risks and challenges. Malawi and Mozambique's experience with PPPs, including their efforts to ensure transparency and build the contract management skills of public officials, seems relevant to the management of joint AI initiatives. This is especially applicable to AI because of uncertainty about how to procure AI-based technology (Nagitta et al., Reference Nagitta, Mugurusi, Obicci and Awuor2022) and the lack of transparency inherent in its implementation (Rudin, Reference Rudin2019). There also continue to be challenges in establishing and managing multi-stakeholder forums as policy formulation or oversight structures. More explicit attention could be directed to learning from experiences with, for example, national OGP implementations, and to how these balance governments' political and legal responsibility with meaningful involvement of other actors. There are also initiatives aimed more directly at achieving ethical outcomes, such as Egypt's Charter for Responsible AI, which calls for "mutual accountability" between expert leaders and the wider public. More practically, recent activities by the Human Rights Commission in South Africa, supported by discrimination-related legislation, and work by NAID in Egypt suggest that there are opportunities to strengthen the existing work (and oversight and enforcement activities) of inclusion- and rights-focused entities on digital, data, and AI issues.

In other policy areas, we see similar opportunities for policy learning. One of these is at the intersection of electronic transactions, evidence, and personal data protection legislation; mainly around the legality of automated processes, accountability (and liability) for outcomes, and the role of human oversight. In some cases, there is more direct reference to current AI-related practices such as Rwanda’s limits on profiling and predicting behaviour. As noted earlier, the extent to which these areas of legislation need to be explicit about current AI activities and risks versus allowing for a more incremental common or soft law response is a key question (Guihot et al., Reference Guihot, Matthew and Suzor2017; Calo, Reference Calo2015).

In addition, certain sector-specific legislation and policy has already engaged quite extensively with these issues. Consumer protection around electronic commerce, as well as in financial services—especially in credit—have long been concerned with unfair profiling and manipulation of consumer behaviour. Moreover, for many years now the financial sector has needed to address risk related to the use of models. Whilst a very different field, environmental sector EIAs and air quality assessment provide an interesting example of how model management regulation is integrated with wider transparency requirements. The health sector was not included in our analysis, but there is a growing body of Africa-centric research around AI governance that is likely to provide useful insights around specific issues, such as accountability and liability, which could be relevant to other sectors (Bottomley and Thaldar, Reference Bottomley and Thaldar2023).

The environmental and ecosystems policy area also seems to offer opportunities for learning from community-based governance arrangements, as proposed for data governance in Namibia (Shilongo, Reference Shilongo2023). In a similar vein, further investigation could be directed at bottom-up data science and AI initiatives seeking to shape responsible practices, mainly within the developer community. For example, the South African digital and data social enterprises Lelapa AIFootnote 81 and OpenUpFootnote 82 have recently published guidance on responsible design practices, and an Egyptian AI start-up has been exploring the intersection between society and technology from a local perspective.Footnote 83

In general, data governance is one of several areas critical to current ML-oriented AI implementation and is a key topic for further research. There are many issues to address around data collection, sharing, analysis, privacy, and security. Possible policy actions could include bottom-up and commons-based models of governance, as explored in the African agriculture space (Baarbé et al., Reference Baarbé, Blom and De Beer2019). There are also established data governance capabilities in national statistical offices (NSOs). NSOs have processes for managing data quality and have been building expertise in the management of unstructured administrative data, which would be relevant to AI data pipelines.Footnote 84

Other topics for further research include the role and effectiveness of adaptive policy and regulation methods in African technology governance, including regulatory sandboxes, and whether they could be relevant to AI (Guihot et al., Reference Guihot, Matthew and Suzor2017). In addition, the language in many of the emerging AI policies seems to reinforce ideas of technological autonomy, which can undermine the sense of agency amongst (local) governance actors. By looking at how automation, algorithms, and data are talked about in other sectors and countries, we may be able to find better definitions and metaphors to inform our policy and legislative activities (Buiten, Reference Buiten2019). Finally, the role of international norms and law is significant and a key focus (and objective) of the UNESCO Recommendation. The impact of the European Union's General Data Protection Regulation (GDPR) on African data protection legislation has been widely documented (e.g. Makulilo, Reference Makulilo2013) and has reinforced the role of local DPAs in responsible AI governance (Gwagwa et al., Reference Gwagwa, Kachidza, Siminyu and Smith2021). More recently, African states have requested to accede to other AI-relevant European instruments, such as the Council of Europe's Convention 108+.Footnote 85 Also significant are the African Continental Free Trade Agreement (AfCFTA) becoming operational in 2021Footnote 86 and the entry into force of the African Union (AU) Convention on Cyber Security and Personal Data Protection in June 2023.Footnote 87

7. Conclusion

The aim of this paper was to develop a richer picture of where existing governance capabilities may exist and to consider pathways for facilitating policy learning around a responsible way forward for AI in Africa. This was done through a close reading of the UNESCO Recommendation on the Ethics of AI and a sample of African data, algorithm, ICT, and AI policy and legislation examples.

As AI intersects with various policy domains, claims of influence emerge around areas of expertise or credibility. From this limited investigation, we see potentially important governance capabilities across a range of cross-cutting areas, such as human intervention in electronic transactions, rights and discrimination-focused legislation, transparent management of digital PPPs, and technology procurement capacity building. We also see established capabilities in sector verticals, such as model management frameworks for banking and air quality monitoring, and consumer protection in e-commerce.

This does not mean there are no challenges with formulating, implementing, or enforcing policy and legislation in these areas. It is for this reason that we should not let the attention on AI distract us from investing in the core digital and data governance capabilities (and associated infrastructures, skills, etc.) that enable the development and adoption of innovative technologies. We can then critically consider whether and when dedicated AI policy, legislation, and institutional arrangements may be appropriate.

More work is therefore needed to understand the language, mechanisms, and processes of policy and institutional learning through comparative policy analysis and in-country research. This will help policy actors understand potential pathways for improving governance capabilities around data, automation, algorithms, and AI.

Acknowledgements

N/A

Author contribution

Conceptualisation and methodology: P.P. and K.S. Investigation: P.P., A.U., and O.M. Writing original draft: P.P., K.S., O.M., M.G., and G.R. All authors approved the final submitted draft.

Data availability statement

N/A

Provenance

This article is part of the Data for Policy 2024 Proceedings and was accepted in Data & Policy on the strength of the Conference’s review process.

Funding statement

This research was supported by a grant to Research ICT Africa from the International Development Research Centre (IDRC) for the African Observatory on Responsible Artificial Intelligence. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interest

The authors declare none.
