1 Introduction

Let q denote a large prime, and

![]() $\chi $

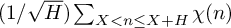

a non-principal Dirichlet character modulo q. In this paper, we will be interested in the statistical behaviour of sums

$\chi $

a non-principal Dirichlet character modulo q. In this paper, we will be interested in the statistical behaviour of sums

where

![]() $H = H(q)$

is some function.

$H = H(q)$

is some function.
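To make the definition concrete, here is a minimal Python sketch (our own illustration, not taken from the works cited) that evaluates $S_{\chi,H}(x)$ directly when $\chi$ is the Legendre symbol, computed by Euler's criterion $\left(\frac{n}{q}\right) \equiv n^{(q-1)/2} \pmod q$. The names `legendre` and `short_sum` are hypothetical helpers introduced only for this illustration.

```python
def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r  # r is 0, 1 or q - 1


def short_sum(x: int, H: int, q: int) -> int:
    """S_{chi,H}(x) = sum of (n/q) over x < n <= x + H."""
    return sum(legendre(n, q) for n in range(x + 1, x + H + 1))


# q = 7 has quadratic residues 1, 2, 4, so S_{chi,3}(0) = 1 + 1 - 1.
print(short_sum(0, 3, 7))  # 1
```

Summing over any full period (H = q) gives 0, as it must for a non-principal character.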

Since

![]() $\chi $

has period q, we may restrict attention to

$\chi $

has period q, we may restrict attention to

![]() $1 \leq H \leq q$

. The case of long sums, where

$1 \leq H \leq q$

. The case of long sums, where

![]() $H(q) \asymp q$

as

$H(q) \asymp q$

as

![]() $q \rightarrow \infty $

, has been quite extensively studied. See, for example, the work of Granville and Soundararajan [Reference Granville and Soundararajan7] and of Bober, Goldmakher, Granville and Koukoulopoulos [Reference Bober, Goldmakher, Granville and Koukoulopoulos2] investigating the largest possible values of character sums, and the recent work of Hussain [Reference Hussain12] on the behaviour of the paths

$q \rightarrow \infty $

, has been quite extensively studied. See, for example, the work of Granville and Soundararajan [Reference Granville and Soundararajan7] and of Bober, Goldmakher, Granville and Koukoulopoulos [Reference Bober, Goldmakher, Granville and Koukoulopoulos2] investigating the largest possible values of character sums, and the recent work of Hussain [Reference Hussain12] on the behaviour of the paths

![]() $t \mapsto \sum _{n \leq qt} \chi (n)$

. In this paper, we focus instead on short sums, where

$t \mapsto \sum _{n \leq qt} \chi (n)$

. In this paper, we focus instead on short sums, where

![]() $H(q) = o(q)$

as

$H(q) = o(q)$

as

![]() $q \rightarrow \infty $

. Our primary focus shall be on the situation where

$q \rightarrow \infty $

. Our primary focus shall be on the situation where

![]() $\chi $

is fixed for given q, and the start point

$\chi $

is fixed for given q, and the start point

![]() $x \in \{0,1,\ldots ,q-1\}$

varies, although we will touch on what happens when

$x \in \{0,1,\ldots ,q-1\}$

varies, although we will touch on what happens when

![]() $\chi $

varies as well.

$\chi $

varies as well.

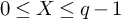

This problem was studied by Davenport and Erdős [5], who proved that if $\chi = \left(\frac{\cdot}{q}\right)$ is the Legendre symbol, if the function H satisfies $H \rightarrow \infty$ but $(\log H)/\log q \rightarrow 0$ as $q \rightarrow \infty$, and if $X \in \{0,1,\ldots,q-1\}$ is uniformly random, then one has convergence in distribution to a standard Gaussian,

$$ \begin{align*}\frac{S_{\chi,H}(X)}{\sqrt{H}} \stackrel{d}{\rightarrow} N(0,1) \quad \text{as } q \rightarrow \infty .\end{align*} $$

Recall this means that for each fixed $w \in \mathbb{R}$, we have

$$ \begin{align*}\mathbb{P}\left( \frac{S_{\chi,H}(X)}{\sqrt{H}} \leq w \right) \rightarrow \Phi(w) \quad \text{as } q \rightarrow \infty ,\end{align*} $$

where $\Phi(w) := (1/\sqrt{2\pi}) \int_{-\infty}^{w} e^{-z^{2}/2} dz$ is the standard Gaussian cumulative distribution function. Lamzouri [14] recently extended this to more general Dirichlet characters. He showed that if one chooses a non-real character $\chi$ modulo each prime q (in any way), then under the same conditions on H as Davenport and Erdős [5], one has

$$ \begin{align*}\frac{S_{\chi,H}(X)}{\sqrt{H}} \stackrel{d}{\rightarrow} Z_1 + iZ_2 \quad \text{as } q \rightarrow \infty ,\end{align*} $$

where $Z_1, Z_2$ are independent $N(0,1/2)$ random variables. Lamzouri [14] also obtained a quantitative rate of convergence (in the sense of Kolmogorov distance). We also mention slightly earlier work of Mak and Zaharescu [15], who proved separate distributional convergence results for the real and imaginary parts of $\frac{S_{\chi,H}(X)}{\sqrt{H}}$, and more generally for the projections of various kinds of moving character sum onto lines through the origin.
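The Kolmogorov distance mentioned above can be computed exactly for the empirical law of a finite sample. The following Python sketch, a hypothetical illustration of our own, does this only in the real case $\chi = \left(\frac{\cdot}{q}\right)$ (the non-real case would need a two-dimensional analogue, which we do not attempt). It lists the q values $S_{\chi,H}(x)/\sqrt{H}$, $0 \leq x \leq q-1$, and returns $\sup_{w} |\mathbb{P}(S_{\chi,H}(X)/\sqrt{H} \leq w) - \Phi(w)|$, using $\Phi(w) = \frac{1}{2}(1 + \mathrm{erf}(w/\sqrt{2}))$. The parameter values are arbitrary and no claim is made about the size of the printed distance.

```python
import math


def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r


def normalised_sums(q: int, H: int) -> list[float]:
    """The q values S_{chi,H}(x)/sqrt(H), 0 <= x <= q-1, for chi = (./q)."""
    chi = [legendre(n, q) for n in range(q)]
    s = sum(chi[h % q] for h in range(1, H + 1))  # S(0)
    values = []
    for x in range(q):
        values.append(s / math.sqrt(H))
        s += chi[(x + H + 1) % q] - chi[(x + 1) % q]  # slide to S(x + 1)
    return values


def Phi(w: float) -> float:
    """Standard Gaussian cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(w / math.sqrt(2.0)))


def kolmogorov_distance(samples: list[float]) -> float:
    """sup over w of |(empirical CDF of samples)(w) - Phi(w)|."""
    xs = sorted(samples)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        # The empirical CDF jumps from (i - 1)/n to i/n at x; ties are
        # handled correctly because every index i is examined.
        d = max(d, i / n - Phi(x), Phi(x) - (i - 1) / n)
    return d


q, H = 10007, 30  # 10007 is prime; values chosen only for illustration
print(kolmogorov_distance(normalised_sums(q, H)))
```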

All of these results, and many related ones (e.g., the work of Perret-Gentil [20] on short sums of $\ell$-adic trace functions), ultimately depend on a moment method. For example, in the case $\chi = \left(\frac{\cdot}{q}\right)$, Davenport and Erdős calculated

$$ \begin{align*}\frac{1}{q} \sum_{0 \leq x \leq q-1} \left(\frac{S_{\chi,H}(x)}{\sqrt{H}} \right)^j = \frac{1}{q H^{j/2}} \sum_{1 \leq h_1 , \ldots, h_j \leq H} \sum_{0 \leq x \leq q-1} \left(\frac{x + h_1}{q}\right) \left(\frac{x + h_2}{q}\right) \cdots \left(\frac{x + h_j}{q}\right) ,\end{align*} $$

showing that for each fixed $j \in \mathbb{N}$, this converges to the standard normal moment $(1/\sqrt{2\pi}) \int_{-\infty}^{\infty} z^j e^{-z^{2}/2} dz$ as $q \rightarrow \infty$. It is well known that the normal distribution is sufficiently nice that moment convergence implies convergence in distribution. The key to performing the moment calculation is that for a given tuple $(h_1, \ldots, h_j)$ of shifts, if any shift h occurs with odd multiplicity, then the sum over x is $\ll_{j} \sqrt{q}$, by the Weil bound. Under the condition $(\log H)/\log q \rightarrow 0$, all these terms together give a contribution

$$ \begin{align*}\ll_{j} \frac{1}{\sqrt{q} H^{j/2}} \sum_{\substack{1 \leq h_1 , \ldots, h_j \leq H , \\ \text{a shift occurs with odd multiplicity}}} 1 \leq \frac{H^{j/2}}{\sqrt{q}} \rightarrow 0 \quad \text{as } q \rightarrow \infty .\end{align*} $$
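The following Python sketch (our own illustration; helper names are hypothetical) evaluates the left-hand side of the moment identity above for a given prime and checks two ingredients of the argument. First, the diagonal analysis can be done exactly when j = 2: since $\sum_{x} \left(\frac{x+a}{q}\right)\left(\frac{x+b}{q}\right)$ equals $-1$ for $a \not\equiv b \pmod q$ and $q-1$ for $a \equiv b$, the second moment equals $1 - H/q$ whenever $1 \leq H \leq q$. Second, for three distinct shifts (each of odd multiplicity), the Weil bound gives $|\sum_{x} \prod_{i} \left(\frac{x+h_i}{q}\right)| \leq 2\sqrt{q}$.

```python
import math


def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r


def normalised_moment(q: int, H: int, j: int) -> float:
    """(1/q) * sum_{0 <= x <= q-1} (S_{chi,H}(x)/sqrt(H))^j for chi = (./q)."""
    chi = [legendre(n, q) for n in range(q)]
    s = sum(chi[h % q] for h in range(1, H + 1))  # S(0)
    total = 0.0
    for x in range(q):
        total += (s / math.sqrt(H)) ** j
        s += chi[(x + H + 1) % q] - chi[(x + 1) % q]  # slide to S(x + 1)
    return total / q


def shifted_product_sum(q: int, shifts: tuple[int, ...]) -> int:
    """sum_{0 <= x <= q-1} prod_i ((x + h_i)/q), the inner sum over x."""
    total = 0
    for x in range(q):
        prod = 1
        for h in shifts:
            prod *= legendre(x + h, q)
        total += prod
    return total


q, H = 10007, 30  # illustrative values; 10007 is prime
for j in range(1, 5):
    print(j, normalised_moment(q, H, j))  # compare with 0, 1, 0, 3
print(1 - H / q)  # the exact value of the j = 2 moment
print(abs(shifted_product_sum(q, (1, 2, 3))), 2 * math.sqrt(q))  # Weil bound
```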

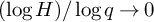

If one drops the condition

![]() $(\log H)/\log q \rightarrow 0$

, then this method seems to break down.

$(\log H)/\log q \rightarrow 0$

, then this method seems to break down.

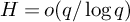

Lamzouri [Reference Lamzouri14] made the following conjecture about what happens for larger H.

Conjecture 1 (Lamzouri, 2013).

Suppose that $H \rightarrow \infty$ but $H = o(q/\log q)$ as the prime $q \rightarrow \infty$. Then if $\chi = \left(\frac{\cdot}{q}\right)$ is the Legendre symbol, and if $X \in \{0,1,\ldots,q-1\}$ is uniformly random, we have

$$ \begin{align*}\frac{S_{\chi,H}(X)}{\sqrt{H}} \stackrel{d}{\rightarrow} N(0,1) \quad \text{as } q \rightarrow \infty .\end{align*} $$

If we choose a non-real character $\chi$ modulo each prime q (in any way), then on the same range of H we have

$$ \begin{align*}\frac{S_{\chi,H}(X)}{\sqrt{H}} \stackrel{d}{\rightarrow} Z_1 + iZ_2 \quad \text{as } q \rightarrow \infty ,\end{align*} $$

where $Z_1, Z_2$ are independent $N(0,1/2)$ random variables.

Our goal here is the further investigation of Lamzouri’s conjecture. Prior to this, we briefly explain the origins of the conjecture, and in particular of the condition $H = o(q/\log q)$. For each prime p, let $f(p)$ be an independent random variable taking values $\pm 1$ with probability 1/2 each (i.e., a Rademacher random variable), and then for each $n \in \mathbb{N}$, define

$$ \begin{align*}f(n) := \prod_{p^{\alpha} ||n} f(p)^{\alpha} ,\end{align*} $$

where $p^{\alpha} || n$ means that $p^{\alpha}$ is the highest power of p that divides n. We shall refer to such f as an extended Rademacher random multiplicative function and think of f as a random model for the Legendre symbol $\left(\frac{n}{q}\right)$ as q varies. Similarly, to model a complex Dirichlet character $\chi(n)$, we let $f(p)$ be uniformly distributed on the complex unit circle (i.e., Steinhaus random variables) and again define $f(n) := \prod_{p^{\alpha} ||n} f(p)^{\alpha}$, a Steinhaus random multiplicative function. Chatterjee and Soundararajan [4] showed that for a very similar$^{1}$ kind of real random function f, one has

$$ \begin{align*}\frac{\sum_{x < n \leq x+y} f(n)}{\sqrt{\mathbb{E} \left(\sum_{x < n \leq x+y} f(n) \right)^2 }} \stackrel{d}{\rightarrow} N(0,1) \quad \text{as } x \rightarrow \infty\end{align*} $$

provided the interval length $y = y(x)$ satisfies $x^{1/5}\log x \ll y = o(x/\log x)$. Lamzouri’s conjectured condition $H = o(q/\log q)$ is analogous to Chatterjee and Soundararajan’s upper bound on y.
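To illustrate the random model, the following Python sketch (a hypothetical illustration; the function names are ours) samples extended Rademacher or Steinhaus random multiplicative functions up to N by drawing independent values at primes and extending via $f(n) = f(p) f(n/p)$, where p is the smallest prime factor of n; this agrees with the product formula above. It also computes, exactly and without simulation, the variance $\mathbb{E}\left(\sum_{x < n \leq x+y} f(n)\right)^2$ in the extended Rademacher model, using the fact that $\mathbb{E} f(n)f(m)$ is 1 if nm is a perfect square (equivalently, n and m have the same squarefree part) and 0 otherwise. We make no claim that this is the exact random function or normalisation used by Chatterjee and Soundararajan, whose model differs slightly.

```python
import cmath
import math
import random
from collections import Counter


def smallest_prime_factors(N: int) -> list[int]:
    """spf[n] = smallest prime factor of n, for 2 <= n <= N."""
    spf = list(range(N + 1))
    for p in range(2, math.isqrt(N) + 1):
        if spf[p] == p:  # p is prime
            for m in range(p * p, N + 1, p):
                if spf[m] == m:
                    spf[m] = p
    return spf


def random_multiplicative(N: int, kind: str = "rademacher", seed: int = 0) -> list:
    """f[1..N] (f[0] unused) for a random completely multiplicative f with
    independent f(p): +-1 ('rademacher') or uniform on |z| = 1 ('steinhaus')."""
    rng = random.Random(seed)
    spf = smallest_prime_factors(N)
    f = [0] * (N + 1)
    f[1] = 1
    for n in range(2, N + 1):
        p = spf[n]
        if p == n:  # n is prime: draw f(p) independently
            if kind == "rademacher":
                f[n] = rng.choice((-1, 1))
            else:
                f[n] = cmath.exp(2j * math.pi * rng.random())
        else:  # complete multiplicativity: f(n) = f(p) * f(n/p)
            f[n] = f[p] * f[n // p]
    return f


def squarefree_part(n: int, spf: list[int]) -> int:
    """Product of the primes dividing n to an odd power."""
    s = 1
    while n > 1:
        p, e = spf[n], 0
        while n % p == 0:
            n //= p
            e += 1
        if e % 2 == 1:
            s *= p
    return s


def rademacher_variance(x: int, y: int) -> int:
    """E(sum_{x < n <= x+y} f(n))^2 for extended Rademacher f: the number
    of pairs (n, m) in the interval with n * m a perfect square."""
    spf = smallest_prime_factors(x + y)
    classes = Counter(squarefree_part(n, spf) for n in range(x + 1, x + y + 1))
    return sum(c * c for c in classes.values())


f = random_multiplicative(10**4, "rademacher", seed=1)
print(sum(f[1:]), rademacher_variance(0, 10**4))  # one sample, exact E(sum)^2
```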

There are at least two issues that need to be understood when considering whether the random multiplicative model is a good one for $S_{\chi,H}(X)$. The first is whether a random multiplicative function captures all of the important structure of a Dirichlet character, which in particular has an additional periodicity property. The second is whether one can infer things about $S_{\chi,H}(X)$, where the function $\chi$ is fixed (for given q) and the start point X of the interval varies randomly, from things about $\sum_{x < n \leq x+y} f(n)$, where the interval is fixed (for given x) and the function f varies randomly. One might think that if the latter is a good model for character sums, it would rather be for the case of a fixed interval for given q and a randomly varying character $\chi$ mod q.

1.1 Statement of results

Our main results are negative, showing that Conjecture 1 is not correct as stated.

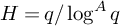

Theorem 1. Let $A > 0$ be arbitrary but fixed, and set $H(q) = q/\log^{A} q$. Then as q varies over all large primes, with $\chi = \left(\frac{\cdot}{q}\right)$ denoting the unique corresponding quadratic character, we have

$$ \begin{align*}\frac{S_{\chi,H}(X)}{\sqrt{H}} \stackrel{d}{\nrightarrow} N(0,1) .\end{align*} $$

Theorem 2. Let $A > 0$ be arbitrary but fixed, and set $H(q) = q/\log^{A} q$. Then as q varies over all large primes, there exists a corresponding sequence of non-real characters $\chi$ modulo q for which

$$ \begin{align*}\frac{S_{\chi,H}(X)}{\sqrt{H}} \stackrel{d}{\nrightarrow} Z_1 + iZ_2 ,\end{align*} $$

where $Z_1, Z_2$ are independent $N(0,1/2)$ random variables.

It may not be very illuminating just to say that something does not converge to a specified limit object. In fact, in the real case covered by Theorem 1, we will show that there exists an infinite sequence of primes q along which $\frac{S_{\chi,H}(X)}{\sqrt{H}}$ has properties that forbid it from closely approaching the $N(0,1)$ limit. This special sequence consists of primes q for which $\left(\frac{\cdot}{q}\right)$ is ‘highly biased’, in the sense that its partial sums up to about $q/H$ are not small. Similarly, in the non-real case covered by Theorem 2, the bad character $\chi$ that we select for each prime q is such that its partial sum up to about $q/H$ has large modulus.
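The kind of ‘highly biased’ prime described above can be illustrated numerically. Because $\left(\frac{\cdot}{q}\right)$ is completely multiplicative, if every prime $p \leq K$ is a quadratic residue mod q, then $\left(\frac{k}{q}\right) = 1$ for all $1 \leq k \leq K < q$, so the partial sum up to K takes its maximal value K. The Python sketch below is our own naive search (names hypothetical) for such a prime above a given starting point. It only illustrates the phenomenon for a fixed small K; it says nothing about how such primes are produced in the proofs, which concern partial sums up to about $q/H = \log^{A} q$, a length growing with q.

```python
def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r


def is_prime(n: int) -> bool:
    """Trial division; adequate for the small numbers used here."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def first_biased_prime(K: int, start: int) -> int:
    """Smallest prime q > max(K, start, 2) such that every prime p <= K is a
    quadratic residue mod q, so that (k/q) = 1 for all 1 <= k <= K.
    Such primes exist (e.g. primes q = 1 mod 8 * prod of odd p <= K),
    so the loop terminates."""
    small_primes = [p for p in range(2, K + 1) if is_prime(p)]
    q = max(K, start, 2) + 1
    while not (is_prime(q) and all(legendre(p, q) == 1 for p in small_primes)):
        q += 1
    return q


K = 10
q = first_biased_prime(K, 10**4)
print(q, sum(legendre(k, q) for k in range(1, K + 1)))  # partial sum equals K
```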

To explain further, if $\chi$ is primitive mod q (so for q prime, any non-principal character is admissible), then Pólya’s Fourier expansion for character sums implies that

$$ \begin{align*}S_{\chi, H}(x) = \frac{\tau(\chi)}{2\pi i} \sum_{0 < |k| < q/2} \frac{\overline{\chi}(-k)}{k} e(kx/q) (e(kH/q) - 1) + O(\log q) .\end{align*} $$

Here, $\tau(\chi)$ denotes the Gauss sum, of absolute value $\sqrt{q}$, and $e(\cdot) = e^{2\pi i \cdot}$ denotes the complex exponential. When $|k| \leq q/H$, we have $(1/k) (e(kH/q) - 1) \approx 2\pi i (H/q)$, and it turns out that (on average over x) these are essentially the only terms that make a significant contribution, so

$$ \begin{align} \frac{S_{\chi, H}(x)}{\sqrt{H}} \approx \frac{\tau(\chi)\sqrt{H}}{q} \sum_{0 < |k| < q/H} \overline{\chi}(-k) e(kx/q). \end{align} $$
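Pólya’s expansion can be checked numerically. For primitive $\chi$ mod an odd prime q, the finite Fourier expansion $\chi(n) = \frac{\tau(\chi)}{q} \sum_{0 < |k| < q/2} \overline{\chi}(-k) e(kn/q)$ gives an exact version of the expansion above, in which $\frac{e(kH/q) - 1}{2\pi i k}$ is replaced by $\frac{1}{q}\sum_{h=1}^{H} e(kh/q) = \frac{e(kH/q) - 1}{q(1 - e(-k/q))}$; Pólya’s form comes from the approximation $1 - e(-k/q) \approx 2\pi i k/q$. The Python sketch below (ours, for the Legendre symbol only; helper names are hypothetical) compares both versions with a direct evaluation of $S_{\chi,H}(x)$. Its checks confirm the exact identity and only a trivial $O(\sqrt{q})$ bound on the difference from Pólya’s form, not the sharper $O(\log q)$ error term.

```python
import cmath
import math


def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r


def e(t: float) -> complex:
    """The complex exponential e(t) = exp(2 pi i t)."""
    return cmath.exp(2j * math.pi * t)


def gauss_sum(q: int) -> complex:
    """tau(chi) = sum_{n mod q} chi(n) e(n/q) for chi = (./q)."""
    return sum(legendre(n, q) * e(n / q) for n in range(1, q))


def fourier_side(q: int, H: int, x: int, polya: bool) -> complex:
    """Right-hand side of the expansion, summed over 0 < |k| < q/2 (q odd)."""
    tau = gauss_sum(q)
    total = 0j
    for k in range(-(q // 2), q // 2 + 1):
        if k == 0:
            continue
        if polya:  # Polya's weight (e(kH/q) - 1) / (2 pi i k)
            weight = (e(k * H / q) - 1) / (2j * math.pi * k)
        else:  # exact weight (1/q) * sum_{h=1}^{H} e(kh/q)
            weight = (e(k * H / q) - 1) / (q * (1 - e(-k / q)))
        # The character is real here, so conj(chi)(-k) = (-k/q).
        total += legendre(-k, q) * e(k * x / q) * weight
    return tau * total


q, H, x = 1009, 37, 123  # illustrative values; 1009 is prime
direct = sum(legendre(n, q) for n in range(x + 1, x + H + 1))
print(direct, fourier_side(q, H, x, polya=False), fourier_side(q, H, x, polya=True))
print(math.log(q))  # the scale of the O(log q) error term in the text
```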

Now if $H = q/\log^{A} q$, and so $q/H = \log^{A} q$, we can find characters $\chi$ for which $|\sum_{0 < k < q/H} \chi(k)| \gg_{A} q/H$. For such characters, we can think of $S_{\chi,H}(x)/\sqrt{H}$ as having a significant piece resembling the scaled Dirichlet kernel $(\tau(\chi)\sqrt{H}/q) \sum_{0 < |k| < q/H} e(kx/q)$. The Dirichlet kernel certainly does not have Gaussian behaviour as x varies and $q \rightarrow \infty$; in fact (since it has relatively small $L^{1}$ norm), it converges to 0 in probability, which suggests it is unlikely that $S_{\chi,H}(x)/\sqrt{H}$ can converge to the desired Gaussian. This argument can be made rigorous by subtracting a suitable multiple of the Dirichlet kernel from $S_{\chi,H}(x)/\sqrt{H}$, which makes no difference to the putative convergence in distribution but reduces the variance of the sum.

Note that the use of Pólya’s Fourier expansion imports information about the periodicity of $\chi$ mod q into our analysis.
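The claim that the scaled Dirichlet kernel has small $L^{1}$ norm, while its $L^{2}$ norm does not tend to zero, can be seen numerically. Since $|\tau(\chi)| = \sqrt{q}$, the factor $\tau(\chi)\sqrt{H}/q$ has modulus $N^{-1/2}$ with $N := q/H$; ignoring its phase and writing $\theta = x/q$, the relevant function is $K_N(\theta) := N^{-1/2} \sum_{0 < |k| < N} e(k\theta)$. By Parseval, $\int_0^1 |K_N|^2 = 2(N-1)/N$, whereas $\int_0^1 |K_N|$ is of order $(\log N)/\sqrt{N}$. The Python sketch below (ours; it assumes N is an integer, and the names are hypothetical) approximates both integrals by the midpoint rule, using the closed form $\sum_{|k| < N} e(k\theta) = \sin((2N-1)\pi\theta)/\sin(\pi\theta)$. For the $L^{2}$ norm the midpoint rule is exact up to rounding, since $|K_N|^2$ is a trigonometric polynomial of degree less than the number of nodes.

```python
import math


def scaled_kernel(theta: float, N: int) -> float:
    """K_N(theta) = N^{-1/2} * sum_{0 < |k| < N} e(k theta), which is real."""
    dirichlet = math.sin((2 * N - 1) * math.pi * theta) / math.sin(math.pi * theta)
    return (dirichlet - 1.0) / math.sqrt(N)  # remove the k = 0 term


def kernel_norms(N: int, points_per_N: int = 64) -> tuple[float, float]:
    """Midpoint-rule values of the L^1 and L^2 norms of K_N on [0, 1]."""
    M = points_per_N * N
    l1 = l2_squared = 0.0
    for j in range(M):
        v = scaled_kernel((j + 0.5) / M, N)  # midpoints avoid theta = 0
        l1 += abs(v)
        l2_squared += v * v
    return l1 / M, math.sqrt(l2_squared / M)


for N in (10, 100, 1000):
    l1, l2 = kernel_norms(N)
    print(N, round(l1, 4), round(l2, 4), round(math.sqrt(2 * (N - 1) / N), 4))
```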

The characters used in the proofs of Theorems 1 and 2 are quite special, suggesting that Lamzouri’s conjecture might be true for almost all q for real characters, or for almost all characters for each q for non-real characters. Another reason for believing this comes from thinking more carefully about the representation (1.1). In a famous classical paper, Salem and Zygmund [21] showed that for almost all sequences of independent $\pm 1$ coefficients, the partial Fourier series with those coefficients satisfy a central limit theorem when the ‘frequency’ (corresponding to $x/q$ in our setup) is chosen uniformly at random. Thus, if we believe that the values of a typical Dirichlet character are somewhat ‘random looking’, we might expect to have a central limit theorem as the length $q/H$ tends to infinity. This translates into a condition $H = o(q)$ rather than the condition $H = o(q/\log q)$ proposed by Lamzouri [14].

In this positive ‘almost all’ direction, we establish the following.

Theorem 3. Let $H = H(q)$ satisfy $\frac{\log(q/H)}{\log q} \rightarrow 0$ and $H = o(q)$ as the prime $q \rightarrow \infty$. Then there exists a subset $\mathcal{P}_{H}$ of primes, which satisfies

$$ \begin{align*}\frac{\#(\mathcal{P}_{H} \cap [Q,2Q])}{\#\{ Q \leq q \leq 2Q : \; q \; \text{prime}\}} \geq 1 - O(e^{-\min_{Q \leq q \leq 2Q}\log^{3/4}(q/H)})\end{align*} $$

(say) for all $Q = 2^j, j \in \mathbb{N}$, such that if $\chi = \left(\frac{\cdot}{q}\right)$, we have

$$ \begin{align*}\frac{S_{\chi,H}(X)}{\sqrt{H}} \stackrel{d}{\rightarrow} N(0,1) \quad \text{as } q \rightarrow \infty , \; q \in \mathcal{P}_{H} .\end{align*} $$

Theorem 4. Let $H = H(q)$ satisfy $\frac{\log(q/H)}{\log q} \rightarrow 0$ and $H = o(q)$ as the prime $q \rightarrow \infty$. Then there exist sets $\mathcal{G}_{q,H}$ of characters mod q, satisfying $\#\mathcal{G}_{q,H} \geq q(1 - O(e^{-\log^{3/4}(q/H)}))$, such that for any choice of $\chi \in \mathcal{G}_{q,H}$, we have

$$ \begin{align*}\frac{S_{\chi,H}(X)}{\sqrt{H}} \stackrel{d}{\rightarrow} Z_1 + iZ_2 \quad \text{as } q \rightarrow \infty ,\end{align*} $$

where $Z_1, Z_2$ are independent $N(0,1/2)$ random variables.

The proofs of Theorems 3 and 4 again use the trigonometric series approximation to $S_{\chi,H}(x)/\sqrt{H}$, which can be reworked slightly into a form (roughly speaking) like

$$ \begin{align} \frac{S_{\chi, H}(x)}{\sqrt{H}} \approx \frac{2\sqrt{q}}{\pi \sqrt{H}} \sum_{0 < k < q/H} \frac{\overline{\chi}(k) \sin(\pi k H/q)}{k} \cos(2 \pi k x/q). \end{align} $$

In fact, looking at $S_{\chi,H}(X)/\sqrt{H}$ for $X \in \{0,1,\ldots,q-1\}$ uniformly random turns out to be roughly equivalent to looking at $\frac{2\sqrt{q}}{\pi \sqrt{H}} \sum_{0 < k < q/H} \frac{\overline{\chi}(k) \sin(\pi k H/q)}{k} \cos(2 \pi k \theta)$ for $\theta \in [0,1]$ uniformly random. This last small change is not really important, but it neatens the writing.
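The following Python sketch (our own; the names are hypothetical) evaluates the trigonometric polynomial $T_q(\theta) := \frac{2\sqrt{q}}{\pi\sqrt{H}} \sum_{1 \leq k < q/H} \frac{\left(\frac{k}{q}\right)\sin(\pi k H/q)}{k}\cos(2\pi k\theta)$, that is, the expression above with $\overline{\chi}(k) = \left(\frac{k}{q}\right)$, and computes its moments $\int_0^1 T_q(\theta)^j \, d\theta$ by the midpoint rule, for comparison with the Gaussian moments $\mathbb{E} Z^j$, which equal $(j-1)!! = (j-1)(j-3)\cdots 1$ for even j and 0 for odd j. Since $T_q^j$ is a trigonometric polynomial of degree less than $j \cdot q/H$, the midpoint rule with more nodes than this is exact up to rounding. We make no claim about how close the printed moments are to the Gaussian ones for any particular q and H.

```python
import math


def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r


def gaussian_moment(j: int) -> int:
    """E Z^j for Z ~ N(0, 1): (j - 1)!! for even j, 0 for odd j."""
    return 0 if j % 2 else math.prod(range(j - 1, 0, -2))


def model_coefficients(q: int, H: int) -> list[tuple[int, float]]:
    """Pairs (k, a_k), 1 <= k < q/H, with T(theta) = sum a_k cos(2 pi k theta)."""
    scale = 2 * math.sqrt(q) / (math.pi * math.sqrt(H))
    return [(k, scale * legendre(k, q) * math.sin(math.pi * k * H / q) / k)
            for k in range(1, (q - 1) // H + 1)]  # exactly the k with k * H < q


def model_moments(q: int, H: int, js, M: int = 8192) -> dict[int, float]:
    """Midpoint-rule values of int_0^1 T(theta)^j d theta for each j in js."""
    coeffs = model_coefficients(q, H)
    sums = {j: 0.0 for j in js}
    for i in range(M):
        theta = (i + 0.5) / M
        t = sum(a * math.cos(2 * math.pi * k * theta) for k, a in coeffs)
        for j in js:
            sums[j] += t ** j
    return {j: s / M for j, s in sums.items()}


q, H = 10007, 100  # illustrative values; 10007 is prime
for j, m in model_moments(q, H, range(1, 7)).items():
    print(j, round(m, 4), gaussian_moment(j))
```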

Since moment convergence implies distributional convergence to the Gaussian, to prove Theorem 3 it would suffice (roughly speaking) to show the existence of a subsequence $\mathcal{P}_H$ of primes such that, for each fixed $j \in \mathbb{N}$, we have

$$ \begin{align*}\int_{0}^{1} \left( \frac{2\sqrt{q}}{\pi \sqrt{H}} \sum_{1 \leq k < q/H} \frac{\left(\frac{k}{q}\right) \sin(\pi k H/q)}{k} \cos(2\pi k\theta) \right)^{j} d\theta \rightarrow (1/\sqrt{2\pi}) \int_{-\infty}^{\infty} z^j e^{-z^{2}/2} dz\end{align*} $$

as $q \rightarrow \infty$, $q \in \mathcal{P}_{H}$. To do this, we can try to calculate the average (square) discrepancy between the actual and the Gaussian moments as q varies in each dyadic interval, namely

$$ \begin{align*}& \frac{\log Q}{Q} \sum_{\substack{Q \leq q \leq 2Q, \\ q \; \text{prime}}} \\ &\quad \times\left| \int_{0}^{1} \left( \frac{2\sqrt{q}}{\pi \sqrt{H}} \sum_{1 \leq k < q/H} \frac{\left(\frac{k}{q}\right) \sin(\pi k H/q)}{k} \cos(2\pi k\theta) \right)^{j} d\theta - \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} z^j e^{-z^{2}/2} dz \right|^2 .\end{align*} $$

If this tends to zero at a sufficient rate as $Q \rightarrow \infty$, on a range of j that grows to infinity as $Q \rightarrow \infty$ (recall that we need convergence of all fixed integer moments to guarantee convergence to the Gaussian), then we can form $\mathcal{P}_H$ from all the many primes in each interval $[Q,2Q]$ where the discrepancy is simultaneously small for a suitable range of j.

Provided that $(q/H)^j$ is small compared with Q, so that the periodicity of the characters $\left(\frac{k}{q}\right)$ does not intervene, one expects the left-hand side in the above display to be close to the corresponding one where $\left(\frac{k}{q}\right)$ is replaced by an extended Rademacher random multiplicative function $f(k)$, and the normalised sum $\frac{\log Q}{Q} \sum_{\substack{Q \leq q \leq 2Q, \\ q \; \text{prime}}}$ is replaced by an expectation $\mathbb{E}$. There are technical challenges in establishing this because $q/H(q)$ might also vary with q in the sum, and averaging over primes q entails non-trivial issues with the distribution of primes, but these problems can be overcome (see Number Theory Result 3 and Section 6.3 below; essentially one needs to show that the $\left(\frac{k}{q}\right)$ for varying q have similar correlation/orthogonality properties to the random $f(k)$). Unfortunately, the condition that $(q/H)^j$ is small compared with Q, for each fixed j, forces the unwanted condition $\frac{\log(q/H)}{\log q} \rightarrow 0$ in Theorem 3. This is similar to the condition $(\log H)/\log q \rightarrow 0$ that appeared in the work of Davenport and Erdős [5], Lamzouri [14] and others.
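The correlation property referred to above can be illustrated in its simplest, two-point case. For the extended Rademacher model, $\mathbb{E} f(k_1) f(k_2)$ is 1 if $k_1 k_2$ is a perfect square and 0 otherwise. The Python sketch below (ours; names hypothetical) computes the analogous average of $\left(\frac{k_1}{q}\right)\left(\frac{k_2}{q}\right)$ over primes $Q \leq q \leq 2Q$. For simplicity it divides by the number of such primes rather than by $Q/\log Q$; by the prime number theorem the two normalisations agree asymptotically. This is only a single numerical check, far from the uniform estimates the argument actually needs.

```python
import math


def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r


def primes_between(Q: int) -> list[int]:
    """Primes q with Q <= q <= 2Q, by the sieve of Eratosthenes."""
    N = 2 * Q
    sieve = bytearray([1]) * (N + 1)
    sieve[0] = sieve[1] = 0
    for p in range(2, math.isqrt(N) + 1):
        if sieve[p]:
            sieve[p * p :: p] = bytes(len(range(p * p, N + 1, p)))
    return [q for q in range(Q, N + 1) if sieve[q]]


def prime_average(k1: int, k2: int, Q: int) -> float:
    """Average of (k1/q)(k2/q) over primes Q <= q <= 2Q. Assumes
    Q > max(k1, k2, 2), so each q is odd and coprime to k1 * k2."""
    qs = primes_between(Q)
    return sum(legendre(k1, q) * legendre(k2, q) for q in qs) / len(qs)


Q = 10**4
print(prime_average(2, 8, Q))  # 2 * 8 = 16 is a square: the model predicts 1
print(prime_average(2, 3, Q))  # 6 is not a square: the model predicts 0
```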

Finally, we need upper bounds for quantities like

$$ \begin{align*}\mathbb{E} \left| \int_{0}^{1} \left( \frac{2\sqrt{Q}}{\pi \sqrt{H}} \sum_{1 \leq k < Q/H} \frac{f(k) \sin(\pi k H/Q)}{k} \cos(2\pi k\theta) \right)^{j} d\theta - \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} z^j e^{-z^{2}/2} dz \right|^2 ,\end{align*} $$

where $f(k)$ is a random multiplicative function. This arithmetic input can be extracted from a nice recent paper of Benatar, Nishry and Rodgers [Reference Benatar, Nishry and Rodgers1]. They were interested in almost sure central limit theorems and size bounds for random trigonometric polynomials $\frac {1}{\sqrt {N}} \sum _{n \leq N} f(n) e(n\theta )$, and directly calculated such expectations using a point counting argument drawing on work of Vaughan and Wooley [Reference Vaughan and Wooley23]. Ultimately, one needs to count tuples $(n_1, \ldots , n_{2j})$ satisfying a small collection of linear and multiplicative equations.
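To make the displayed quantity concrete, here is a hedged numerical sketch. It is our own illustration and not the computation of Benatar, Nishry and Rodgers.

- **Model for $f$:** it assumes a completely multiplicative $\pm 1$ model (an "extended Rademacher" function), which is only one natural choice in the real case.
- **Names:** `extended_rademacher_rmf`, `coefficients`, `theta_moment` and `gaussian_moment` are hypothetical helpers.
- **What it computes:** for a single realisation of $f$, it computes the $\theta$-integral of the $j$-th power exactly, using an equally spaced grid that is fine enough for a trigonometric polynomial of that degree. It then compares the result with the Gaussian moment.
- **What it does not do:** it does not estimate the expectation over $f$, nor reproduce any point counting.

```python
import math
import random


def extended_rademacher_rmf(n_max: int, rng: random.Random) -> list[int]:
    """One realisation f(0..n_max) of a completely multiplicative +-1 function:
    f(p) = +1 or -1 with equal probability, independently over primes p.
    (f(0) is unused and set to 0.)"""
    spf = list(range(n_max + 1))  # smallest-prime-factor sieve
    for p in range(2, math.isqrt(n_max) + 1):
        if spf[p] == p:
            for m in range(p * p, n_max + 1, p):
                if spf[m] == m:
                    spf[m] = p
    f = [0] * (n_max + 1)
    if n_max >= 1:
        f[1] = 1
    sign = {}
    for n in range(2, n_max + 1):
        p = spf[n]
        if p not in sign:
            sign[p] = rng.choice((-1, 1))
        f[n] = sign[p] * f[n // p]  # complete multiplicativity
    return f


def coefficients(f: list[int], Q: float, H: float) -> list[float]:
    """c_k = (2 sqrt(Q) / (pi sqrt(H))) * f(k) sin(pi k H / Q) / k for 1 <= k < Q/H; c_0 = 0."""
    k_max = math.ceil(Q / H) - 1  # largest integer strictly below Q/H
    scale = 2 * math.sqrt(Q) / (math.pi * math.sqrt(H))
    return [0.0] + [scale * f[k] * math.sin(math.pi * k * H / Q) / k
                    for k in range(1, k_max + 1)]


def theta_moment(c: list[float], j: int) -> float:
    """Integral over [0, 1] of (sum_k c_k cos(2 pi k theta))^j.

    The integrand is a trigonometric polynomial of degree at most j * k_max, so the
    average over M = j * k_max + 1 equally spaced points equals the integral
    (up to floating-point rounding)."""
    k_max = len(c) - 1
    M = j * k_max + 1
    total = 0.0
    for m in range(M):
        theta = m / M
        p = sum(c[k] * math.cos(2 * math.pi * k * theta) for k in range(1, k_max + 1))
        total += p ** j
    return total / M


def gaussian_moment(j: int) -> float:
    """(1/sqrt(2 pi)) * integral of z^j exp(-z^2/2) dz: 0 for odd j, (j-1)!! for even j."""
    if j % 2 == 1:
        return 0.0
    return float(math.prod(range(j - 1, 0, -2)))


rng = random.Random(2024)
Q, H = 10_000.0, 100.0
f = extended_rademacher_rmf(int(Q / H) + 1, rng)
c = coefficients(f, Q, H)
for j in (2, 3, 4):
    print(j, theta_moment(c, j), gaussian_moment(j))
```

The exact grid rule is used instead of numerical quadrature because the aliasing argument guarantees exactness for trigonometric polynomials. As a result, any discrepancy from the Gaussian moment reflects the particular realisation of $f$, not integration error.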

As Benatar, Nishry and Rodgers [Reference Benatar, Nishry and Rodgers1] comment, one can also analyse the distribution of $\frac {1}{\sqrt {N}} \sum _{n \leq N} f(n) e(n\theta )$ using martingale methods, and this was done in unpublished work of the present author (see the paper [Reference Harper9] for an application of martingales to a different distributional problem for random multiplicative functions, and the recent paper [Reference Soundararajan and Xu22] of Soundararajan and Xu for various such applications, including to trigonometric polynomials with random multiplicative coefficients). But to transfer these conclusions to character sums, one would seem to again need moment estimates on the random multiplicative side, not just distributional convergence. These could be obtained (e.g., one can use Burkholder’s inequalities [Reference Burkholder3] and some calculation to show that all moments remain bounded as $N \rightarrow \infty $, and this combined with distributional convergence implies they must all converge to the desired Gaussian moments), but it seems simpler to rely on the existing calculations of Benatar, Nishry and Rodgers [Reference Benatar, Nishry and Rodgers1].

In the complex case in Theorem 4, one proceeds in exactly the same way, studying the square discrepancy from the moments of the complex Gaussian $Z_1 + iZ_2$, but now averaging over all $\chi $ mod q rather than over $Q \leq q \leq 2Q$. Provided that $1 \leq n_1, n_2 < q$, say, we have the identity $\frac {1}{q-1} \sum _{\chi \; \text {mod} \; q} \chi (n_1) \overline {\chi }(n_2) = \textbf {1}_{n_1 \equiv n_2 \; \text {mod} \; q} = \textbf {1}_{n_1 = n_2} = \mathbb {E} f(n_1) \overline {f}(n_2)$, where $f(\cdot )$ denotes a Steinhaus random multiplicative function. This exact equality makes it much easier to establish the connection with random multiplicative functions than in Theorem 3, but the condition $1 \leq n_1, n_2 < q$ ultimately forces the same unwanted constraint $\frac {\log (q/H)}{\log q} \rightarrow 0$.
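The character side of this identity is the orthogonality relation for Dirichlet characters modulo a prime. The Steinhaus side holds for a different reason: when $n_1 \neq n_2$, the product $f(n_1)\overline{f}(n_2)$ is a product of independent uniform phases $f(p)$ raised to powers that are not all zero, so its expectation vanishes.

The character side can be checked numerically with the hedged sketch below. It is our own illustration, and the helpers `primitive_root`, `characters` and `orthogonality_error` are hypothetical names. The sketch builds all $q-1$ characters from a primitive root $g$ via $\chi_j(g^m) = e(jm/(q-1))$, and measures the largest deviation from $\textbf{1}_{n_1 = n_2}$ over $1 \leq n_1, n_2 < q$.

```python
import cmath
import math


def primitive_root(q: int) -> int:
    """Smallest primitive root modulo the prime q (brute force; fine for small q)."""
    phi = q - 1
    prime_factors = {p for p in range(2, phi + 1)
                     if phi % p == 0 and all(p % d for d in range(2, math.isqrt(p) + 1))}
    for g in range(2, q):
        if all(pow(g, phi // p, q) != 1 for p in prime_factors):
            return g
    raise ValueError("q must be an odd prime")


def characters(q: int) -> list[list[complex]]:
    """All q - 1 Dirichlet characters mod the prime q, each as a list chi[0..q-1].

    With g a primitive root, chi_j(g^m) = e(j m / (q - 1)), and chi_j(0) = 0."""
    g = primitive_root(q)
    log = {}  # discrete logarithm table: log[g^m mod q] = m
    x = 1
    for m in range(q - 1):
        log[x] = m
        x = x * g % q
    chars = []
    for j in range(q - 1):
        chi = [0j] * q
        for n in range(1, q):
            chi[n] = cmath.exp(2j * math.pi * j * log[n] / (q - 1))
        chars.append(chi)
    return chars


def orthogonality_error(q: int) -> float:
    """max over 1 <= n1, n2 < q of |(1/(q-1)) sum_chi chi(n1) conj(chi(n2)) - 1_{n1 = n2}|."""
    chars = characters(q)
    worst = 0.0
    for n1 in range(1, q):
        for n2 in range(1, q):
            avg = sum(chi[n1] * chi[n2].conjugate() for chi in chars) / (q - 1)
            worst = max(worst, abs(avg - (1.0 if n1 == n2 else 0.0)))
    return worst


print(orthogonality_error(31))
```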

1.2 Discussion and open questions

Our results leave open several problems about the behaviour of $S_{\chi , H}(x)/\sqrt {H}$, and related issues.

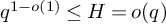

The results of Davenport and Erdős [Reference Davenport and Erdős5] and of Lamzouri [Reference Lamzouri14] establish a central limit theorem for all characters provided $H \rightarrow \infty $ but $H = q^{o(1)}$, and our results establish a central limit theorem for almost all characters provided $q^{1-o(1)} \leq H = o(q)$. Moreover, we have shown that one cannot hope to prove a central limit theorem for all characters when $\log (q/H)/\log \log q$ is bounded. Given this state of affairs, one can ask the following:

- (i) How does $S_{\chi , H}(x)/\sqrt {H}$ behave on the missing range $q^{o(1)} \leq H \leq q^{1-o(1)}$?
- (ii) Indeed, should it be possible to prove a central limit theorem for all characters provided $H \rightarrow \infty $ and $\log (q/H)/\log \log q \rightarrow \infty $?

The author tentatively conjectures that the answer to (ii) is Yes. In view of Corollary A of Granville and Soundararajan [Reference Granville and Soundararajan6], if the Generalised Riemann Hypothesis is true, then $\sum _{n \leq x} \chi (n) = o(x)$ whenever $\chi $ is a non-principal character mod q and $(\log x)/\log \log q \rightarrow \infty $. This means that, assuming GRH, there would be no construction along the lines of Theorems 1 and 2 available once $\log (q/H)/\log \log q \rightarrow \infty $. So if one believes this is the only barrier to a central limit theorem holding, as is somewhat suggested by the representation (1.1) together with the classical work of Salem and Zygmund [Reference Salem and Zygmund21] on random Fourier series, then one arrives at this conjecture.

However, proving such a result seems difficult. First, the best unconditional estimates we have of the form $\sum _{n \leq x} \chi (n) = o(x)$, where $\chi $ is any non-principal character modulo a prime q (one can sometimes do better for special non-prime moduli), are Burgess-type estimates requiring that $x \geq q^{1/4 - o(1)}$. Thus, we would need to assume results like GRH merely to rule out constructions of the kind used in Theorems 1 and 2. But even allowing such unproved arithmetical results, there is no clear way to go on and establish a central limit theorem on the full range of H in (ii). The problem of understanding the distribution of $(\tau (\chi )\sqrt {H}/q) \sum _{0 < |k| < q/H} \overline {\chi }(-k) e(k\theta )$, where $\theta = X/q$ is random but the coefficients $\overline {\chi }(-k)$ are deterministic, is just one example of the important general problem of understanding the distribution of $\sum _{k} a_k e(k\theta )$, where the $a_k$ are interesting deterministic coefficients. See, for example, the work of Hughes and Rudnick [Reference Hughes and Rudnick11] on lattice points in annuli. They encounter similar sums where the $a_k$ involve the number of representations of k as a sum of two squares. The range of their main theorem involves a (conjecturally unnecessary) restriction similar to those in Theorems 3 and 4, imposed to allow a proof by the method of moments.

Indeed, even extending our ‘almost all’ results to a wider range of H would be very interesting, and does not seem easily attackable.

Another, perhaps rather specialised, question is the following:

- (iii) What can be said about the distribution of $S_{\chi , H}(X)/\sqrt {H}$, for those characters $\chi $ and interval lengths H where it does not satisfy the expected central limit theorem?

We can also return to the random multiplicative functions $f(n)$ that motivated Lamzouri’s conjecture [Reference Lamzouri14] and played a role in the proofs of Theorems 3 and 4. As discussed earlier, and perhaps demonstrated by Theorems 1 and 2, the author does not believe that Chatterjee and Soundararajan’s work [Reference Chatterjee and Soundararajan4] on $\sum _{x < n \leq x+y} f(n)$ provides a natural model for $S_{\chi , H}(X)$. But the study of $\sum _{x < n \leq x+y} f(n)$ is very interesting in its own right. Although Chatterjee and Soundararajan only obtained (Footnote 2) a Gaussian limiting distribution when $x^{1/5}\log x \ll y = o(x/\log x)$, they did not show that their upper bound on y is optimal, and recent work of Soundararajan and Xu [Reference Soundararajan and Xu22] extends the range to

However, it follows directly from work of the author [Reference Harper10] that if $\frac {y \sqrt {\log \log x}}{x} \rightarrow \infty $ as $x \rightarrow \infty $, then

$$ \begin{align*}\frac{\mathbb{E}|\sum_{x < n \leq x+y} f(n)|}{\sqrt{y}} \leq \frac{\mathbb{E}|\sum_{n \leq x} f(n)| + \mathbb{E}|\sum_{n \leq x+y} f(n)|}{\sqrt{y}} \rightarrow 0 .\end{align*} $$

This implies (by Markov’s inequality) that $\sum _{x < n \leq x+y} f(n)$, when renormalised by its standard deviation, converges in probability to 0 rather than to a standard Gaussian. As Soundararajan and Xu [Reference Soundararajan and Xu22] remark, by looking inside the proofs from [Reference Harper10], one can show that $\mathbb {E} \frac {|\sum _{x < n \leq x+y} f(n)|}{\sqrt {y}} \rightarrow 0$ even for somewhat smaller y. Thus, there is at least one qualitative transition in the distributional behaviour of $\sum _{x < n \leq x+y} f(n)$ as y approaches x, and the exact location and nature of this transition remain to be understood.
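The Markov step can be spelled out as follows. This is a routine expansion included only for convenience, not a quotation from the cited works. For any fixed $\epsilon > 0$,

$$ \begin{align*}\mathbb{P}\left( \frac{|\sum_{x < n \leq x+y} f(n)|}{\sqrt{y}} > \epsilon \right) \leq \frac{1}{\epsilon} \cdot \frac{\mathbb{E}|\sum_{x < n \leq x+y} f(n)|}{\sqrt{y}} \rightarrow 0 ,\end{align*} $$

whereas a standard (real or complex) Gaussian $Z$ satisfies $\mathbb{P}(|Z| > \epsilon) > 0$ for every $\epsilon > 0$. So no Gaussian limit is possible in this range.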

As also noted earlier, the author believes that $\sum _{x < n \leq x+y} f(n)$ will be a good model for the behaviour of $\sum _{x < n \leq x+y} \chi (n)$, where $x, y(x)$ are fixed and the character $\chi $ varies mod q, at least provided $x \leq \sqrt {q}$, say (for x close to q, one will again need to be more careful to account for the periodicity of $\chi $). It would be very interesting to obtain rigorous results on the distribution of $\sum _{x < n \leq x+y} \chi (n)$ for varying $\chi $.

Finally, we might wonder the following.

- (iv) When $f(n)$ is a realisation of a Steinhaus or (extended) Rademacher random multiplicative function, what is the distribution of $\sum _{x < n \leq x+H} f(n)$ as x varies over a long interval?

Although the proofs of Theorems 3 and 4 use the random multiplicative model $f(n)$ for $\chi (n)$, they do not address (iv), because they only use this after first passing to the representation (1.2), the truth of which depends on special properties of Dirichlet characters. Our arguments say nothing directly about the ‘model’ object $\sum _{x < n \leq x+H} f(n)$. Of course, a little care is required to sensibly interpret question (iv). For example, the function $f(n)$ that is 1 for all n on some long initial segment is a realisation of a random multiplicative function and has rather exceptional behaviour, but it is a realisation that occurs with extremely small probability. A natural problem might be to investigate the distribution of $\sum _{x < n \leq x+H} f(n)$ for ‘most’ realisations of f, somewhat analogously to Theorems 3 and 4. Relevant work in the literature includes Najnudel’s paper [Reference Najnudel18], which explores the joint distribution of the tuple $(f(x),f(x+1), \ldots , f(x+H))$ for x varying and H fixed (or slowly growing).

Remark added in review, March 2024. Following the release of a preprint version of the present article, question (iv) was investigated by Pandey, Wang and Xu [Reference Pandey, Wang and Xu19]. Using a moment method, they can show that $\frac {1}{\sqrt {H}} \sum _{x < n \leq x+H} f(n)$ typically (i.e., for most realisations of Steinhaus random multiplicative $f(n)$) has an approximately Gaussian distribution as $x \leq X$ varies, provided that $H = H(X) \rightarrow \infty $ but $\log (X/H)/\log \log X \rightarrow \infty $ as $X \rightarrow \infty $.
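For readers who want to experiment with question (iv), the following hedged sketch samples a single realisation of a Steinhaus random multiplicative function. It then lists the normalised short sums $\frac{1}{\sqrt{H}} \sum_{x < n \leq x+H} f(n)$ for $0 \leq x < X$, which is the object whose empirical distribution over $x$ the question asks about.

- **Names and parameters:** `steinhaus_rmf`, `normalised_short_sums`, the seed and the sizes $X$, $H$ are illustrative assumptions.
- **What it does not do:** it only generates samples and establishes nothing about limiting distributions.

```python
import cmath
import math
import random


def steinhaus_rmf(n_max: int, rng: random.Random) -> list[complex]:
    """One realisation f(1..n_max) of a Steinhaus random multiplicative function:
    f(p) independent and uniform on the unit circle, extended completely multiplicatively.
    (f(0) is unused and set to 0.)"""
    spf = list(range(n_max + 1))  # smallest-prime-factor sieve
    for p in range(2, math.isqrt(n_max) + 1):
        if spf[p] == p:
            for m in range(p * p, n_max + 1, p):
                if spf[m] == m:
                    spf[m] = p
    f = [0j] * (n_max + 1)
    if n_max >= 1:
        f[1] = 1 + 0j
    phase = {}
    for n in range(2, n_max + 1):
        p = spf[n]
        if p not in phase:
            phase[p] = cmath.exp(2j * math.pi * rng.random())
        f[n] = phase[p] * f[n // p]
    return f


def normalised_short_sums(f: list[complex], X: int, H: int) -> list[complex]:
    """(1/sqrt(H)) * sum_{x < n <= x+H} f(n) for x = 0, 1, ..., X - 1 (sliding window)."""
    assert len(f) >= X + H, "need f(n) for n up to X - 1 + H"
    window = sum(f[1:H + 1])          # x = 0: n = 1, ..., H
    out = [window / math.sqrt(H)]
    for x in range(1, X):
        window += f[x + H] - f[x]     # add n = x + H, drop n = x
        out.append(window / math.sqrt(H))
    return out


rng = random.Random(0)
X, H = 20_000, 50
f = steinhaus_rmf(X + H, rng)
sums = normalised_short_sums(f, X, H)
print(sum(abs(s) ** 2 for s in sums) / X)  # empirical mean of |sum|^2 / H over x
```

The sliding window keeps the cost linear in $X$. For very long ranges one would periodically recompute the window from scratch to limit the accumulation of floating-point error.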

2 Tools for Theorems 1 and 2

The proofs of Theorems 1 and 2 rest on the following simple principle.

Probability Result 1. Let $0 \leq \tau < 1$, and suppose $(V_n)_{n=1}^{\infty }$ is a sequence of real or complex valued random variables satisfying $\mathbb {E}|V_n|^2 \leq \tau $ for all n. Then if Z is any real or complex valued random variable such that $\mathbb {E}|Z|^2 = 1$, we have

$$ \begin{align*}V_n \stackrel{d}{\not\rightarrow} Z \quad \text{as } n \rightarrow \infty .\end{align*} $$

Proof of Probability Result 1.

Choose $a \in \mathbb {R}$ such that $\mathbb {E}\min \{|Z|^2, a^2\} \geq (1+\tau )/2$. Such an a exists by the monotone convergence theorem, since $\mathbb {E}\min \{|Z|^2, a^2\} \rightarrow \mathbb {E}|Z|^2 = 1 > (1+\tau )/2$ as $a \rightarrow \infty $. Since $v \mapsto \min \{|v|^2 , a^2\}$ is a continuous bounded function on $\mathbb {C}$, if we had $V_n \stackrel {d}{\rightarrow } Z$, then we would have

$$ \begin{align*}\lim_{n \rightarrow \infty} \mathbb{E} \min\{|V_n|^2 , a^2\} = \mathbb{E} \min\{|Z|^2 , a^2\} \geq \frac{1+\tau}{2} .\end{align*} $$

But this is impossible, since clearly $\mathbb {E} \min \{|V_n|^2 , a^2\} \leq \mathbb {E}|V_n|^2 \leq \tau < (1+\tau )/2$ for all n.

We remark that although Probability Result 1 is simple, it involves a non-trivial issue that it is important to recognise. Indeed, the analogous statement in which, for some $\nu> 1$, our sequence satisfied $\mathbb {E} |V_n|^2 \geq \nu $ for all n would be false, as can easily be shown by examples. In general, the failure of moments to converge to the moments of a supposed limit distribution need not, by itself, imply that convergence in distribution fails. Moments may be inflated by events whose probabilities tend to zero, and such events are therefore irrelevant to convergence in distribution.
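Here is one simple example of our own choosing (not taken from the paper), showing why the analogue with $\mathbb{E}|V_n|^2 \geq \nu > 1$ fails. Let $Z$ be a standard real Gaussian and let $\nu > 1$. Let $B_n$ be independent of $Z$, with $\mathbb{P}(B_n = 1) = 1/n$ and $\mathbb{P}(B_n = 0) = 1 - 1/n$. Setting

$$ \begin{align*}V_n := (1 - B_n) Z + B_n \sqrt{n\nu} ,\end{align*} $$

we get

$$ \begin{align*}\mathbb{E}|V_n|^2 = \left(1 - \frac{1}{n}\right) \mathbb{E}|Z|^2 + \frac{1}{n} \cdot n\nu = 1 - \frac{1}{n} + \nu \geq \nu .\end{align*} $$

At the same time, $\mathbb{P}(V_n \neq Z) \leq \mathbb{P}(B_n = 1) = 1/n \rightarrow 0$, so $V_n \stackrel{d}{\rightarrow} Z$ even though $\mathbb{E}|Z|^2 = 1$. All of the excess second moment comes from the event $\{B_n = 1\}$, whose probability tends to zero.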

As explained in the Introduction, Theorems 1 and 2 will also rely on the existence of non-principal characters with large partial sums. In the non-real case, the existence of such characters follows immediately from work of Granville and Soundararajan [Reference Granville and Soundararajan6].

Number Theory Result 1 (see Theorem 3 of Granville and Soundararajan [Reference Granville and Soundararajan6], 2001).

Let $A> 0$, and suppose q is a prime (say) that is sufficiently large in terms of A. Then for any $2 \leq x \leq \log ^{A}q$, there exist at least $q^{1 - \frac {2}{\log x}}$ characters $\chi $ mod q for which

$$ \begin{align*}\left| \sum_{n \leq x} \chi(n) \right| \geq x \rho(A) \left(1 + O \left(\frac{1}{\log x} + \frac{\log x (\log\log\log q)^2}{(\log\log q)^2}\right) \right) ,\end{align*} $$

where $\rho $ denotes the Dickman function (so that $\rho (A)> 0$).
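The Dickman function is determined by $\rho(u) = 1$ for $0 \leq u \leq 1$ and the delay differential equation $u\rho'(u) = -\rho(u-1)$ for $u > 1$. It is positive, and decays roughly like $u^{-u}$.

To get a feel for the size of the constant $\rho(A)$ in the bound above, here is a small numerical sketch.

- **Method:** it uses a standard trapezoidal scheme of our own choosing, not anything from the cited paper.
- **Names:** the function name `dickman_rho` and its step size are assumptions.
- **Check:** it reproduces the classical value $\rho(2) = 1 - \log 2$.

```python
import math


def dickman_rho(u: float, steps_per_unit: int = 1000) -> float:
    """Approximate the Dickman function rho(u).

    rho(u) = 1 on [0, 1] and u * rho'(u) = -rho(u - 1) for u > 1. We integrate
    rho'(u) = -rho(u - 1) / u with the trapezoidal rule on a grid of spacing
    h = 1 / steps_per_unit, so the delayed value rho(u - 1) is always a grid value."""
    if u < 0:
        return 0.0
    if u <= 1:
        return 1.0
    n_per = steps_per_unit
    h = 1.0 / n_per
    n_total = math.ceil(u * n_per)
    rho = [1.0] * (n_total + 1)  # rho[i] approximates rho(i * h); exactly 1 for i <= n_per
    for i in range(n_per + 1, n_total + 1):
        u_prev, u_cur = (i - 1) * h, i * h
        rho[i] = rho[i - 1] - 0.5 * h * (rho[i - 1 - n_per] / u_prev + rho[i - n_per] / u_cur)
    # linear interpolation between neighbouring grid points when u is not on the grid
    x = u * n_per
    lo = math.floor(x)
    hi = min(lo + 1, n_total)
    w = x - lo
    return (1 - w) * rho[lo] + w * rho[hi]


for A in (1, 2, 3, 4):
    print(A, dickman_rho(A))
```

The grid spacing is chosen so that $u - 1$ lands exactly on a grid point. This avoids interpolating the delayed term, and the kinks of $\rho$ at integers fall on grid points.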

In the real case, Granville and Soundararajan (see Theorem 9 of [Reference Granville and Soundararajan6]) also proved that for any fixed A, if q is large and $x = ((1/3)\log q)^A$, then there exists a fundamental discriminant $q \leq |D| \leq 2q$ for which

$$ \begin{align*}\sum_{n \leq x} \left(\frac{D}{n}\right) \geq x (\rho(A) + o(1)) .\end{align*} $$

Unfortunately, this is not quite sufficient for our purposes, because we need to find biased real characters $\left (\frac {\cdot }{q}\right )$ where q is prime. But by reorganising Granville and Soundararajan’s proof a little, and inserting information about the zero-free region and exceptional zeros of Dirichlet L-functions, one can prove such a statement. This has been done by Kalmynin [Reference Kalmynin13].

Number Theory Result 2 (see Theorem 3 of Kalmynin [Reference Kalmynin13], 2019).

For any fixed $B> 0$, there exists a small constant $c(B)> 0$ such that the following is true. If Q is sufficiently large in terms of B, then for any $1 \leq x \leq \log ^{B}Q$, there exists a prime $Q < q \leq 2Q$ such that

$$ \begin{align*}\sum_{n \leq x} \left(\frac{n}{q}\right) \geq c(B) x .\end{align*} $$

Proof of Number Theory Result 2.

Theorem 3 of Kalmynin [Reference Kalmynin13] directly implies Number Theory Result 2 provided that $B \geq 1$ and $x = \log ^{B}Q$. However, the lower bound for $S_{0}(Q)$ obtained in Kalmynin’s proof implies that one can find primes $Q < q \leq 2Q$ such that $\left (\frac {n}{q}\right ) = 1$ for all $n \leq \log ^{1/3}Q$. This means that if $x \leq \log ^{1/3}Q$, then one can make $\sum _{n \leq x} \left (\frac {n}{q}\right )$ maximally large, namely equal to $\lfloor x \rfloor$. And if $\log ^{1/3}Q < x \leq \log ^{B}Q$, then one can run Kalmynin’s proof with the sum over $n \leq \log ^{B}Q$ replaced by a sum over $n \leq x$ without changing anything, giving the desired conclusion.
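The first case of this argument concerns primes $q$ for which $\left(\frac{n}{q}\right) = 1$ for every $n \leq x$. It can be illustrated by a brute-force search over a small range. The hedged sketch below is not Kalmynin's method, which is needed to guarantee such primes for $x$ as large as $\log^{1/3} Q$. It simply scans $Q < q \leq 2Q$ and tests each symbol with Euler's criterion $\left(\frac{n}{q}\right) \equiv n^{(q-1)/2} \pmod q$. The helper names `is_prime`, `legendre` and `biased_prime`, and the sample sizes, are hypothetical choices.

```python
import math


def is_prime(n: int) -> bool:
    """Trial division; adequate for the small moduli used here."""
    if n < 2:
        return False
    return all(n % d for d in range(2, math.isqrt(n) + 1))


def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return 0 if r == 0 else (1 if r == 1 else -1)


def biased_prime(Q: int, x: int):
    """First prime Q < q <= 2Q with (n/q) = 1 for every 1 <= n <= x, or None if none exists."""
    for q in range(Q + 1, 2 * Q + 1):
        if q > 2 and is_prime(q) and all(legendre(n, q) == 1 for n in range(1, x + 1)):
            return q
    return None


Q, x = 10_000, 5
q = biased_prime(Q, x)
print(q, sum(legendre(n, q) for n in range(1, x + 1)))  # the sum equals x for such a q
```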

3 Proof of Theorem 1

Let $K \geq 1$. If $\chi $ is a primitive character modulo q, then Pólya’s Fourier expansion yields that

$$ \begin{align*}\sum_{n \leq x} \chi(n) = \frac{\tau(\chi)}{2\pi i} \sum_{0 < |k| \leq K} \frac{\overline{\chi}(-k)}{k} (e(kx/q) - 1) + O \left(1 + \frac{q\log q}{K}\right) .\end{align*} $$

(See, for example, display (9.19) of Montgomery and Vaughan [Reference Montgomery and Vaughan17]; the restriction $K \leq q^{1-\epsilon }$ there is unnecessary if one is happy with a general error term $\frac {q\log q}{K}$ rather than $\frac {\phi (q) \log q}{K}$.) In particular, applying this at $x+H$ and at $x$ with $K = q/2$, we obtain

$$ \begin{align} S_{\chi, H}(x) = \frac{\tau(\chi)}{2\pi i} \sum_{0 < |k| < q/2} \frac{\overline{\chi}(-k)}{k} e(kx/q) (e(kH/q) - 1) + O(\log q). \end{align} $$

Here, $\tau (\chi )$ denotes the Gauss sum, which has absolute value $\sqrt {q}$ for primitive $\chi $.

Recall that X denotes a random variable having the discrete uniform distribution on $\{0,1,\ldots ,q-1\}$ (this is the randomness with respect to which we will shortly calculate expectations $\mathbb {E}$), that $H = H(q) = q/\log ^{A}q$, and that $\chi = \left (\frac {\cdot }{q}\right )$ is real-valued in Theorem 1. Next, let $0 < \delta \leq 1$ be a parameter that will be fixed later, and define $\alpha = \alpha (\delta ) \in \mathbb {R}$ by

$$ \begin{align*}\sum_{1 \leq k \leq \delta q/H} \overline{\chi}(-k) = \sum_{1 \leq k \leq \delta q/H} \chi(-k) = \alpha \sum_{1 \leq k \leq \delta q/H} 1 ,\end{align*} $$

and define

$$ \begin{align*}G_{\chi, H}(x) := \frac{\alpha \tau(\chi) H}{q} \sum_{1 \leq k \leq \delta q/H} e(kx/q) .\end{align*} $$

As discussed in the Introduction, $G_{\chi , H}(x)$ is the scaled Dirichlet kernel that we shall strategically subtract from $S_{\chi , H}(x)$. (The small parameter $\delta $ is only present for technical reasons, to control lower order terms in Taylor expansions of the complex exponential.)

Before embarking on our main computations, we record some basic observations. We expand the square and use the fact that $\mathbb {E} e(k_1 X/q) e(-k_2 X/q) = \mathbb {E} e((k_1 - k_2)X/q) = 0$ when $-q/2 < k_1 \neq k_2 < q/2$. We also use $|\tau (\chi )|^2 = q$ and, for the final inequality, $|\alpha | \leq 1$ and $\delta \leq 1$. This gives

$$ \begin{align*}\mathbb{E}|G_{\chi, H}(X)|^2 = \frac{\alpha^2 H^2}{q} \mathbb{E}|\sum_{1 \leq k \leq \delta q/H} e(kX/q)|^2 = \frac{\alpha^2 H^2}{q} \sum_{1 \leq k \leq \delta q/H} 1 \leq H ,\end{align*} $$

as well as

$$ \begin{align*}\mathbb{E}\Bigg|\frac{\tau(\chi)}{2\pi i} \sum_{0 < |k| < q/2} \frac{\overline{\chi}(-k)}{k} e(kX/q) (e(kH/q) - 1) \Bigg|^2 = \frac{q}{(2\pi)^2} \sum_{0 < |k| < q/2} \frac{1}{k^2} |e(kH/q) - 1|^2 .\end{align*} $$
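Both orthogonality computations can be checked numerically with the small sketch below. It is our own illustration, and the function name `second_moments` is hypothetical. It takes $\chi$ to be the Legendre symbol modulo a small prime and uses an integer $H$ and a value of $\delta$ chosen purely for illustration; in Theorem 1, $H = q/\log^A q$.

- **Gauss sum:** computed numerically.
- **$\alpha$ and $G_{\chi,H}$:** built directly from their definitions.
- **Fourier sum:** the one appearing in (3.1), taken without its $O(\log q)$ error term.
- **Expected outcome:** averaging over $x \in \{0, \ldots, q-1\}$ should reproduce the right-hand sides above, up to rounding.

```python
import cmath
import math


def e(t: float) -> complex:
    """e(t) = exp(2 pi i t)."""
    return cmath.exp(2j * math.pi * t)


def legendre(n: int, q: int) -> int:
    """Legendre symbol (n/q) for an odd prime q, via Euler's criterion."""
    r = pow(n % q, (q - 1) // 2, q)
    return 0 if r == 0 else (1 if r == 1 else -1)


def second_moments(q: int, H: int, delta: float) -> tuple[float, float, float, float]:
    """Return (mean |G|^2, predicted, mean |F|^2, predicted) over x = 0, ..., q - 1."""
    chi = [legendre(n, q) for n in range(q)]           # real character, so conj(chi) = chi
    tau = sum(chi[a] * e(a / q) for a in range(1, q))  # Gauss sum, |tau|^2 = q
    ks = [k for k in range(1, q) if k <= delta * q / H]
    alpha = sum(chi[(-k) % q] for k in ks) / len(ks)
    G = [alpha * tau * H / q * sum(e(k * x / q) for k in ks) for x in range(q)]
    K = [k for k in range(-(q // 2), q // 2 + 1) if k != 0]  # 0 < |k| < q/2 for odd q
    F = [tau / (2j * math.pi) * sum(chi[(-k) % q] / k * e(k * x / q) * (e(k * H / q) - 1)
                                    for k in K)
         for x in range(q)]
    mean_G2 = sum(abs(g) ** 2 for g in G) / q
    mean_F2 = sum(abs(v) ** 2 for v in F) / q
    pred_G2 = alpha ** 2 * H ** 2 / q * len(ks)
    pred_F2 = q / (4 * math.pi ** 2) * sum(abs(e(k * H / q) - 1) ** 2 / k ** 2 for k in K)
    return mean_G2, pred_G2, mean_F2, pred_F2


print(second_moments(101, 10, 0.5))
```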

Since

![]() $\mathbb {E}|S_{\chi , H}(X)|^2 = \mathbb {E}|\sum _{X < n \leq X+H} \chi (n)|^2$

is

$\mathbb {E}|S_{\chi , H}(X)|^2 = \mathbb {E}|\sum _{X < n \leq X+H} \chi (n)|^2$

is

(explaining why

![]() $\frac {S_{\chi , H}(X)}{\sqrt {H}}$

is the natural renormalisation to consider), we deduce that

$\frac {S_{\chi , H}(X)}{\sqrt {H}}$

is the natural renormalisation to consider), we deduce that

$$ \begin{align*} \frac{q}{(2\pi)^2} \sum_{0 < |k| < q/2} \frac{1}{k^2} |e(kH/q) - 1|^2 & = \mathbb{E}|S_{\chi, H}(X) + O(\log q)|^2 \nonumber \\ & = \mathbb{E}|S_{\chi, H}(X)|^2 + O(\log q \mathbb{E}|S_{\chi, H}(X)| + \log^{2}q) \nonumber \\ & = (1+o(1))H \nonumber \end{align*} $$

when $H = H(q) = q/\log^{A}q$ (and indeed on a much larger range of $H$ as well).
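As a sanity check on this normalisation, both sides can be computed numerically for the Legendre symbol. Assume $X$ is uniform on $\{0, 1, \ldots, q-1\}$ and $H < q$. The classical evaluation $\sum_{x \bmod q} \chi(x+a)\chi(x+b) = -1$ for $a \not\equiv b \pmod q$ then gives exactly $\mathbb{E}|S_{\chi, H}(X)|^2 = H(1 - H/q)$. Separately, Parseval's identity for the indicator function of $[0, H/q]$ shows that the untruncated series $\frac{q}{(2\pi)^2} \sum_{k \neq 0} \frac{1}{k^2}|e(kH/q) - 1|^2$ equals the same quantity, so truncating at $|k| < q/2$ loses only $O(1)$. The sketch below checks both facts. The values of $q$ and $H$ and the helper names are illustrative choices.

```python
import cmath
import math


def legendre(a: int, q: int) -> int:
    """Legendre symbol (a/q) for an odd prime q, via Euler's criterion."""
    r = pow(a % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r  # r is 0, 1 or q - 1


def second_moment(q: int, H: int) -> float:
    """Exact E|S_{chi,H}(X)|^2 for chi = (./q) and X uniform on {0, ..., q-1}."""
    chi = [legendre(n, q) for n in range(q)]
    total = 0
    for x in range(q):
        # S(x) = sum of chi(n) over x < n <= x + H, using periodicity mod q.
        s = sum(chi[(x + j) % q] for j in range(1, H + 1))
        total += s * s
    return total / q


def fourier_side(q: int, H: int) -> float:
    """(q/(2 pi)^2) * sum over 0 < |k| < q/2 of |e(kH/q) - 1|^2 / k^2."""
    acc = 0.0
    for k in range(1, (q - 1) // 2 + 1):
        term = abs(cmath.exp(2j * math.pi * k * H / q) - 1) ** 2 / k ** 2
        acc += 2 * term  # k and -k contribute equally
    return q / (2 * math.pi) ** 2 * acc


q, H = 1009, 40  # q is prime
print(second_moment(q, H), H * (1 - H / q), fourier_side(q, H))
```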

Next, using (3.1), expanding the square, and calculating as above (and using the Cauchy–Schwarz inequality and the above estimates of $\mathbb{E}|G_{\chi, H}(X)|^2$ and $\mathbb{E}|S_{\chi, H}(X)|^2$ to control the contribution from the $O(\log q)$ term), we find

$$ \begin{align} & \mathbb{E}|S_{\chi, H}(X) - G_{\chi, H}(X)|^2 \nonumber \\ &\quad = \mathbb{E}\Bigg|\tau(\chi) \sum_{1 \leq k \leq \delta q/H} e(kX/q) \left(\frac{\overline{\chi}(-k)}{k} \frac{(e(kH/q) - 1)}{2\pi i} - \frac{\alpha H}{q} \right) + \nonumber \\ &\qquad + \frac{\tau(\chi)}{2\pi i} \sum_{\substack{0 < |k| < q/2 , \\ k \notin [1,\delta q/H]}} \frac{\overline{\chi}(-k)}{k} e(kX/q) (e(kH/q) - 1) + O(\log q) \Bigg|^2 \nonumber \\ &\quad = q \sum_{1 \leq k \leq \delta q/H} \left|\frac{\overline{\chi}(-k)}{k} \frac{(e(kH/q) - 1)}{2\pi i} - \frac{\alpha H}{q} \right|^2 + \frac{q}{(2\pi)^2} \sum_{\substack{0 < |k| < q/2 , \\ k \notin [1,\delta q/H]}} \frac{1}{k^2} |e(kH/q) - 1|^2 + \nonumber \\ &\qquad + O(\sqrt{H} \log q + \log^{2}q ). \tag{3.2} \end{align} $$

Using the Taylor expansion $e(kH/q) = 1 + 2\pi i kH/q + O((kH/q)^2)$, the first sum here is seen to be

$$ \begin{align*} q \sum_{1 \leq k \leq \delta q/H} \left|\frac{\overline{\chi}(-k)H}{q} + O\left(\frac{\delta H}{q}\right) - \frac{\alpha H}{q} \right|^2 & = \frac{H^2}{q} \sum_{1 \leq k \leq \delta q/H} \left|\overline{\chi}(-k) + O(\delta) - \alpha \right|^2 \nonumber \\ & = \frac{H^2}{q} \sum_{1 \leq k \leq \delta q/H} \left|\overline{\chi}(-k) - \alpha \right|^2 + O(\delta^2 H). \nonumber \end{align*} $$

Moreover, since we chose $\alpha$ to be the mean value of $\overline{\chi}(-k)\ (= \chi(-k))$ over the interval $1 \leq k \leq \delta q/H$, this simplifies to

$$ \begin{align*}(1- \alpha^2) \frac{H^2}{q} \Bigg( \sum_{1 \leq k \leq \delta q/H} 1 \Bigg) + O(\delta^2 H) ,\end{align*} $$

which we can rewrite (again using the Taylor expansion of the exponential) as

$$ \begin{align*} & \frac{H^2}{q} \Bigg( \sum_{1 \leq k \leq \delta q/H} 1 \Bigg) - \delta \alpha^2 H + O \left(\delta^2 H + \frac{H^2}{q}\right) \\ &\quad = \frac{q}{(2\pi)^2} \sum_{1 \leq k \leq \delta q/H} \frac{1}{k^2} |e(kH/q) - 1|^2 - \delta \alpha^2 H + O \left(\delta^2 H + \frac{H^2}{q}\right) .\end{align*} $$
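The two simplifications above can be checked numerically. Write $N = \lfloor \delta q/H \rfloor$. The Legendre symbol is real and $\chi(-k)^2 = 1$ for $q \nmid k$, so choosing $\alpha$ as the mean gives the exact identity $\sum_{k \leq N}(\chi(-k) - \alpha)^2 = (1 - \alpha^2)N$. For the second step, the elementary inequalities $y^2 - y^4/3 \leq \sin^2 y \leq y^2$ show that replacing $\frac{H^2}{q}$ by $\frac{q}{(2\pi)^2 k^2}|e(kH/q) - 1|^2$ for $k \leq N$ costs at most $\frac{\pi^2}{3} \frac{H^4}{q^3}\sum_{k \leq N} k^2 \leq \frac{\pi^2}{3}\delta^3 H$ in total. This error is absorbed into the $O(\delta^2 H)$ term. The values of $q$, $H$ and $\delta$ below are arbitrary illustrative choices.

```python
import cmath
import math


def legendre(a: int, q: int) -> int:
    """Legendre symbol (a/q) for an odd prime q, via Euler's criterion."""
    r = pow(a % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r


q = 10007               # a prime (illustrative choice)
H = q // 50             # so that q/H is roughly 50
delta = 0.3             # a fixed small delta (illustrative choice)
N = int(delta * q / H)  # number of integers 1 <= k <= delta*q/H

# Variance identity: alpha is the mean of chi(-k), and chi(-k)^2 = 1 here.
vals = [legendre(-k, q) for k in range(1, N + 1)]
alpha = sum(vals) / N
variance_sum = sum((v - alpha) ** 2 for v in vals)
print(variance_sum, (1 - alpha ** 2) * N)

# Taylor step: (H^2/q) * N versus the exact exponential sum over 1 <= k <= N.
exact = sum(q / (2 * math.pi) ** 2 / k ** 2
            * abs(cmath.exp(2j * math.pi * k * H / q) - 1) ** 2
            for k in range(1, N + 1))
gap = H ** 2 / q * N - exact
bound = math.pi ** 2 / 3 * H ** 4 / q ** 3 * sum(k * k for k in range(1, N + 1))
print(gap, bound, delta ** 3 * H)
```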

Inserting this in (3.2), and using our earlier calculation that $\frac{q}{(2\pi)^2} \sum_{0 < |k| < q/2} \frac{1}{k^2} |e(kH/q) - 1|^2 = (1+o(1))H$, we deduce

$$ \begin{align*}\mathbb{E}|S_{\chi, H}(X) - G_{\chi, H}(X)|^2 = (1+o(1))H - \delta \alpha^2 H + O\left(\delta^2 H + \frac{H^2}{q} + \sqrt{H} \log q + \log^{2}q\right) .\end{align*} $$

In particular, note that if $H(q) = q/\log^{A}q$, then we have $\delta q/H \leq q/H = \log^{A}q$. Thus, by Number Theory Result 2, there exist arbitrarily large primes $q$ for which, with $\chi = \left(\frac{\cdot}{q}\right)$, we have

$$ \begin{align*}\Bigg| \sum_{1 \leq k \leq \delta q/H} \overline{\chi}(-k) \Bigg| = \Bigg| \sum_{1 \leq k \leq \delta q/H} \chi(k) \Bigg| \geq c(A) \delta q/H .\end{align*} $$
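Number Theory Result 2 is not reproduced here, but the mechanism behind such a lower bound is easy to see in a toy case. Suppose $\left(\frac{p}{q}\right) = 1$ for every prime $p \leq 13$. Every $k \leq 16$ factors over those primes, so $\chi(k) = 1$ for all $1 \leq k \leq 16$, and the partial sums up to $16$ are as large as possible. By quadratic reciprocity and Dirichlet's theorem there are infinitely many such primes; for instance, every prime $q \equiv 1 \pmod{8 \cdot 3 \cdot 5 \cdot 7 \cdot 11 \cdot 13}$ qualifies. The Python sketch below, with hypothetical helper names, simply searches for a few of them. It illustrates the phenomenon on a fixed range only, not the uniformity in $\log^{A} q$ that the argument requires.

```python
def is_prime(n: int) -> bool:
    """Deterministic trial division; adequate for the small range searched."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def legendre(a: int, q: int) -> int:
    """Legendre symbol (a/q) for an odd prime q, via Euler's criterion."""
    r = pow(a % q, (q - 1) // 2, q)
    return -1 if r == q - 1 else r


SMALL_PRIMES = (2, 3, 5, 7, 11, 13)


def biased_primes(limit: int) -> list:
    """Primes 17 <= q < limit with (p/q) = 1 for every prime p <= 13."""
    return [q for q in range(17, limit, 2)
            if is_prime(q) and all(legendre(p, q) == 1 for p in SMALL_PRIMES)]


found = biased_primes(100_000)
for q in found[:5]:
    # Every 1 <= k <= 16 is a product of primes <= 13, so chi(k) = 1 throughout.
    print(q, sum(legendre(k, q) for k in range(1, 17)))
```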

In other words, for such $q$, we will have $|\alpha| \geq c(A)$. So if we fix the choice $\delta = c_0 c(A)^2$, for a suitably small absolute constant $c_0 > 0$ to neutralise the implicit constant in the $O(\delta^2)$ term, we will have

$$ \begin{align} \mathbb{E}\left| \frac{S_{\chi, H}(X) - G_{\chi, H}(X)}{\sqrt{H}} \right|^2 = 1 - \delta\alpha^2 + O(\delta^2) + o(1) \leq 1 - (c_{0}/2) c(A)^4 \tag{3.3} \end{align} $$

whenever q is a large enough prime coming from Number Theory Result 2.
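To make the choice of $\delta$ explicit, write $K > 0$ for the implicit constant in the $O(\delta^2)$ term, so that this term is at most $K\delta^2$ in absolute value. The name $K$ is introduced only for this check. With $\delta = c_0 c(A)^2$ and $\alpha^2 \geq c(A)^2$, and assuming $c_0 \leq 1/(4K)$, one has

$$ \begin{align*} 1 - \delta\alpha^2 + K\delta^2 + o(1) & \leq 1 - c_0 c(A)^4 + K c_0^2 c(A)^4 + o(1) \\ & \leq 1 - \tfrac{3}{4} c_0 c(A)^4 + o(1) \leq 1 - \tfrac{1}{2} c_0 c(A)^4 ,\end{align*} $$

where the last step holds once $q$ is large enough that the $o(1)$ term is at most $\frac{1}{4} c_0 c(A)^4$. This is legitimate because $\delta$, and hence $c_0$ and $c(A)$, are fixed before letting $q \rightarrow \infty$.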

Now, on the other hand, using the formula for summing a geometric progression, we may calculate explicitly that, with $|| \cdot ||$ denoting distance to the nearest integer,

$$ \begin{align*}\mathbb{E}|G_{\chi, H}(X)| &= \frac{|\alpha| H}{\sqrt{q}} \mathbb{E}\left|\sum_{1 \leq k \leq \delta q/H} e(kX/q) \right| \\ &\ll \frac{H}{\sqrt{q}} \mathbb{E} \min\left\{\frac{\delta q}{H}, \frac{1}{||X/q||} \right\} \ll \frac{H}{\sqrt{q}} (1 + \log(\delta q/H)) ,\end{align*} $$

and therefore,

$$ \begin{align*}\mathbb{E}\left|\frac{G_{\chi, H}(X)}{\sqrt{H}} \right| \ll \frac{(1 + \log(\delta q/H))}{\sqrt{q/H}} \ll \frac{\log(q/H)}{\sqrt{q/H}} \rightarrow 0 \quad \text{as } q \rightarrow \infty .\end{align*} $$
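The two inequalities in the bound for $\mathbb{E}|G_{\chi, H}(X)|$ can also be checked numerically. Summing the geometric progression gives $|\sum_{1 \leq k \leq N} e(kx/q)| \leq \min\{N, 1/(2||x/q||)\}$, and the average of the majorant over $x$ grows only logarithmically in $N$. The sketch below assumes $X$ is uniform on $\{0, 1, \ldots, q-1\}$ and uses a free parameter $N$ in place of $\delta q/H$. The constant $3$ in the final check is a convenient numerical bound for these parameters, not a constant taken from the argument.

```python
import cmath
import math


def mean_abs_sum(q: int, N: int) -> float:
    """Exact E|sum_{1<=k<=N} e(kX/q)| for X uniform on {0, 1, ..., q-1}."""
    total = float(N)  # x = 0: every term equals 1
    for x in range(1, q):
        z = cmath.exp(2j * math.pi * x / q)
        # Geometric progression: z + z^2 + ... + z^N = z (z^N - 1) / (z - 1).
        total += abs(z * (z ** N - 1) / (z - 1))
    return total / q


def mean_min_majorant(q: int, N: int) -> float:
    """E min{N, 1/||X/q||}, with ||.|| the distance to the nearest integer."""
    total = float(N)  # x = 0, where ||X/q|| = 0
    for x in range(1, q):
        dist = min(x, q - x) / q
        total += min(N, 1 / dist)
    return total / q


q = 10007  # a prime
for N in (10, 100, 1000):
    print(N, mean_abs_sum(q, N), mean_min_majorant(q, N), 1 + math.log(N))
```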

By Markov’s inequality, it follows that $\frac{G_{\chi, H}(X)}{\sqrt{H}}$ converges in probability to zero as $q \rightarrow \infty$. So, by Slutsky's theorem, if $\frac{S_{\chi, H}(X)}{\sqrt{H}} \stackrel{d}{\rightarrow} N(0,1)$, then we must also have $\frac{S_{\chi, H}(X) - G_{\chi, H}(X)}{\sqrt{H}} \stackrel{d}{\rightarrow} N(0,1)$.

But combining Probability Result 1 with (3.3), we see this convergence in distribution is impossible, which proves Theorem 1.

4 Proof of Theorem 2

The proof of Theorem 2 is extremely similar to that of Theorem 1, so we simply make a few remarks to reassure the reader that no additional difficulties arise.

Indeed, this time, we define $\alpha = \alpha(\delta) \in \mathbb{C}$ by

$$ \begin{align*}\sum_{1 \leq k \leq \delta q/H} \overline{\chi}(-k) = \alpha \sum_{1 \leq k \leq \delta q/H} 1 ,\end{align*} $$

and again we set