1. Introduction

Surface degradation in flow-related engineering applications can take various forms, such as wear or fouling, resulting in roughness on the solid surfaces. The most significant practical effect of surface roughness is an increase in skin-friction drag under turbulent flow conditions. As an example, uncertainties in the prediction of roughness-induced skin friction on ship hulls subjected to bio-fouling can cause billions of dollars of energy waste every year (Schultz et al. 2011; Chung et al. 2021). Understandably, the study of turbulent flow over rough surfaces has been an active area of research for nearly a century (Nikuradse 1933; Schlichting 1936; Perry, Schofield & Joubert 1969; Krogstad, Antonia & Browne 1992; Raupach 1992; Bhaganagar, Kim & Coleman 2004; Busse, Thakkar & Sandham 2017; Jouybari et al. 2022).

The seminal work by Nikuradse (1933) has provided subsequent researchers with a common ‘currency’ to measure roughness-induced drag, namely the equivalent sand-grain roughness size $k_s$, defined as the sand-grain size in Nikuradse's experiments producing the same skin-friction coefficient as a rough surface of interest in the fully rough regime. Equivalent sand-grain size is related to the downward shift in the logarithmic region of the inner-scaled mean velocity profile observed widely on rough walls, which is referred to as the roughness function $\Delta U^+$ (Hama 1954). In the fully rough regime, where the skin-friction coefficient is independent of Reynolds number,

\begin{equation} \Delta U^+=\frac{1}{\kappa}\ln(k_s^+)+B-8.48, \tag{1.1} \end{equation}

where $\kappa$ is the von Kármán constant, and $B$ is the smooth-wall log-law intercept (Jiménez 2004).
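As a hedged illustration of how $k_s^+$ and $\Delta U^+$ are tied together in the fully rough regime, the sketch below uses the commonly quoted form $\Delta U^+=\kappa^{-1}\ln(k_s^+)+B-8.48$ and inverts it. The numerical values of `KAPPA`, `B` and the fully rough intercept `C_FR`, as well as the function names, are assumptions made for this example and are not taken from the text.

```python
import math

# Assumed constants (illustrative values, not taken from the paper).
KAPPA = 0.4   # von Karman constant
B = 5.2       # smooth-wall log-law intercept
C_FR = 8.48   # fully rough (Nikuradse) intercept


def delta_u_plus(ks_plus: float) -> float:
    """Roughness function for a given k_s^+ in the fully rough regime."""
    return math.log(ks_plus) / KAPPA + B - C_FR


def ks_plus_from_delta_u(du_plus: float) -> float:
    """Invert the fully rough relation to recover k_s^+ from Delta U^+."""
    return math.exp(KAPPA * (du_plus - B + C_FR))


# Round trip: converting k_s^+ to Delta U^+ and back returns the input.
ks_roundtrip = ks_plus_from_delta_u(delta_u_plus(100.0))
```

Because the relation is logarithmic, a fixed increment in $\Delta U^+$ corresponds to a fixed multiplicative change in $k_s^+$.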

One must note that $k_s$ is, by definition, a flow variable and not a geometric one. As a result, for any ‘new’ rough surface, it needs to be determined through a (physical or high-fidelity numerical) experiment in which the skin-friction drag is measured. Such an exercise is not practical in many applications; therefore, a great amount of effort in the past few decades has been devoted to determining $k_s$ of an arbitrary roughness a priori, i.e. based merely on its geometry (see e.g. van Rij, Belnap & Ligrani 2002; Flack & Schultz 2010; Chan et al. 2015; Forooghi et al. 2017; Thakkar, Busse & Sandham 2017; Flack, Schultz & Barros 2020). A comprehensive description of these efforts can be found in the reviews by Chung et al. (2021) and Flack & Chung (2022). Essentially, they can be summarized as attempts to regress correlations between $k_s$ (or $\Delta U^+$) and a few statistical parameters of roughness geometry based on available data. Some widely used parameters in this context are the skewness of the roughness height probability density function (p.d.f.) (Flack & Schultz 2010), the effective (or mean absolute) slope (Napoli, Armenio & DeMarchis 2008), and the correlation length of the rough surface geometry (Thakkar et al. 2017).

As a result of increased computational capacities in recent years, direct numerical simulations (DNS) have become a source of data for development of accurate roughness correlations, as pointed out by Flack (2018). In this regard, the idea of DNS in minimal channels, proposed by Chung et al. (2015), has enabled characterizing larger numbers of roughness samples with a given computational resource. Availability of more data, on the one hand, has opened the door to utilization of machine learning (ML) based regression tools, and on the other hand, enables inclusion of more roughness information (beyond only a few parameters) as the input to such tools. The latter point is particularly important since there is increasing evidence that both statistical and spectral information on roughness geometry are required for prediction of flow response to a multi-scale roughness (Alves Portela, Busse & Sandham 2021). In this regard, it has been shown that $k_s$ for multi-scale random roughness can be determined nearly uniquely with a combined knowledge of the roughness height p.d.f. and its power spectrum (PS) (Yang et al. 2022, 2023).

The first ML-based ‘data-driven’ tool for prediction of $k_s$ has been reported recently by Jouybari et al. (2021). These authors used deep neural networks and Gaussian process regression to train models with 17 inputs, including widely used roughness parameters and their products. The training data for their model are obtained from DNS of flow over certain types of artificially generated roughness, which were also used to evaluate the model. Lee et al. (2022) used a neural network similar to that of Jouybari et al. (2021) and showed that improvements in predictive performance can be achieved if the network is ‘pre-trained’ on existing empirical correlations. While these pioneering works deliver promising results, the data-driven approach arguably has the potential to realize truly universal models, which can generalize beyond a certain class of roughness. The present work is an attempt to explore this potential. To this end, a model is trained on a wide variety of multi-scale irregular roughness samples, selected based on an adaptive approach (explained shortly), which is aimed at enhancing the universality of the predictions. The model is evaluated using ‘unseen’ roughness from testing data sets of different natures. Moreover, unlike the previous efforts, the present model incorporates the complete p.d.f. and PS of roughness as inputs rather than a finite set of predetermined parameters.

Considerable attention has been paid in recent literature to the multi-scale nature of realistic roughness and the significance of its ‘spectral content’. It has been suggested that beyond a certain threshold, large roughness wavelengths may impact the roughness-induced drag less significantly (Barros, Schultz & Flack 2018; Yang et al. 2022). While parametric studies of roughness PS (Anderson & Meneveau 2011; Barros et al. 2018) or Fourier filtering (Busse, Lützner & Sandham 2015; Alves Portela et al. 2021) can shed light on this matter, in the present work we explore the possibility of directly evaluating the contributions of different roughness scales using the information embedded in the data-driven model developed in this study. This is motivated by the fact that the (discretized) PS is a direct input to the model, which hints at the potential to extract information about the role of different wavelengths through interpretation of the model.

In order to train the data-driven roughness model, $k_s$ for several roughness samples should be determined. This is referred to as ‘labelling’ those samples, borrowing the term from ML terminology. Moreover, each roughness sample along with its $k_s$ value is called a training ‘data point’. One should note that labelling is a computationally expensive process due to the need to perform DNS. In dealing with such scenarios, ML methods classified under active learning (AL), also known as query-based learning (Abe & Mamitsuka 1998) or optimal experimental design (Fedorov 1972) in different contexts, have proven particularly advantageous (Zhu et al. 2005; Settles & Craven 2008; Bangert et al. 2021). In AL, selection of the training data is steered such that the information gain from a given amount of available data is maximized (Settles 2009). The ‘informativeness’ of a potential data point is commonly measured by the uncertainty in its prediction, which needs to be determined without labelling, e.g. through the standard deviation of the predictive distribution of a Bayesian model (Gal & Ghahramani 2016) or the variation of the predictions among a number of individual models (Raychaudhuri & Hamey 1995).

Two major AL categories can be identified in the literature (Lang & Baum 1992; Lewis & Gale 1994; Angluin 2004). The methods based on membership query synthesis expand an existing data set by creating and labelling new samples that the model is most curious about. In contrast, the methods based on pool-based sampling utilize a ‘bounded’ unlabelled data set (also called a repository) $\mathcal{U}$, select and label the most informative samples from $\mathcal{U}$, and include them in the labelled training data set $\mathcal{L}$. In the present work, pool-based sampling is deemed more suitable as it can prevent creating unrealistic samples (Lang & Baum 1992). Moreover, identification of the most informative samples follows a query-by-committee (QBC) strategy (Seung, Opper & Sompolinsky 1992), in which variance in the outputs of an ensemble of individual models (the committee) is the basis for the next query. A detailed description of the implemented QBC is provided in § 2.
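The pool-based QBC idea described above can be sketched in a few lines. This is a minimal illustration only: the committee members here are linear least-squares fits on bootstrap resamples, used as a stand-in for whatever regressors form the actual committee, and all names (`fit_committee`, `select_queries`, `toy_label`) and the toy data are hypothetical.

```python
import numpy as np


def _with_bias(X):
    """Append a column of ones so each member can fit an intercept."""
    return np.hstack([X, np.ones((X.shape[0], 1))])


def fit_committee(X, y, n_members=8, seed=0):
    """Fit one linear least-squares member per bootstrap resample of (X, y)."""
    rng = np.random.default_rng(seed)
    Xb = _with_bias(X)
    weights = []
    for _ in range(n_members):
        idx = rng.integers(0, len(y), size=len(y))  # sample with replacement
        w, *_ = np.linalg.lstsq(Xb[idx], y[idx], rcond=None)
        weights.append(w)
    return np.array(weights)  # shape: (n_members, n_features + 1)


def committee_variance(weights, X_pool):
    """Variance of the member predictions for every pool sample."""
    preds = _with_bias(X_pool) @ weights.T  # shape: (n_pool, n_members)
    return preds.var(axis=1)


def select_queries(weights, X_pool, n_query):
    """Indices (into X_pool) of the samples the committee disagrees on most."""
    var = committee_variance(weights, X_pool)
    return np.argsort(var)[::-1][:n_query]


def toy_label(X):
    """Stand-in for the expensive labelling step (DNS in the paper)."""
    return np.sin(3.0 * X[:, 0]) + X[:, 1] ** 2


# Toy repository U of unlabelled feature vectors and a small labelled set L.
rng = np.random.default_rng(1)
X_repo = rng.uniform(-1.0, 1.0, size=(200, 3))
labelled = np.arange(10)
unlabelled = np.setdiff1d(np.arange(len(X_repo)), labelled)

weights = fit_committee(X_repo[labelled], toy_label(X_repo[labelled]))
picked = unlabelled[select_queries(weights, X_repo[unlabelled], n_query=5)]
# `picked` would be labelled next and moved from U to L before refitting.
```

In an actual AL loop, fitting, querying and labelling would be repeated until the labelling budget is spent or the committee variance over the pool becomes small.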

In summary, the present work aims to answer two questions. The first is whether ‘universal’ data-driven predictions of $k_s$ can be approached using a complete statistical-spectral representation of roughness (i.e. with p.d.f. and PS as inputs). We leverage AL to facilitate achieving this goal. The second question is whether and how the information embedded in a data-driven model can provide insight into the contributions of different roughness scales to the added drag. Following this introduction, the roughness generation approach, DNS and the ML methodology are described in § 2. In § 3, first the results and performance of the model are discussed, then the analysis of drag-relevant scales is presented. Section 4 summarizes the main conclusions.

2. Methodology

2.1. Roughness repository

The (unlabelled) roughness ‘repository’ $\mathcal{U}$ is constructed from a collection of 4200 artificial irregular rough surfaces. These surfaces are generated through a mathematical roughness generation method where the PS and p.d.f. of each roughness can be prescribed (Pérez-Ràfols & Almqvist 2019). For creation of the present repository, p.d.f. and PS are parametrized, as described shortly, and their parameters are varied randomly within a realistic range to generate a variety of roughness samples while imitating the random nature of roughness formation in practical applications.

In total, three types of p.d.f. (namely Gaussian, Weibull and bimodal) are used, and for each new roughness added to the repository, one type is randomly selected. The Weibull distribution of the random variable $k$, here the roughness height, follows

\begin{equation} f(k)=K\beta^{K}k^{K-1}\,{\rm e}^{-(\beta k)^{K}}, \end{equation}

where the shape parameter $0.7<K<1.7$ is selected randomly with $\beta=1.0$. In the present notation, $k$ denotes the local roughness height as a function of wall-parallel coordinates $(x,z)$. The bimodal distribution is obtained by combining two Gaussian distributions through (Peng & Bhushan 2000)

where $f_{G}(x|\mu,\sigma)$ is the p.d.f. of the Gaussian distribution with randomized mean $0<\mu<0.5$ and randomized standard deviation $0<\sigma<0.5$. The p.d.f. variable $k$ is then scaled from 0 to the roughness peak-to-trough height $k_{t}=\max(k)-\min(k)$, whose value is determined randomly in the range $0.06<k_{t}/H<0.18$, where $H$ is the channel half-height.
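The randomized height statistics can be illustrated with a short sketch that draws Weibull-distributed heights and rescales them to a prescribed peak-to-trough height. It assumes the unit-scale Weibull form (consistent with $\beta=1.0$) provided by NumPy's `Generator.weibull`, and it only samples a set of heights; it does not produce a surface with a prescribed PS, which requires the generation method cited in the text. The function name and the sample count are hypothetical.

```python
import numpy as np


def sample_weibull_heights(n, shape_K, k_t, seed=0):
    """Draw n Weibull heights (unit scale) and rescale them to [0, k_t]."""
    rng = np.random.default_rng(seed)
    k = rng.weibull(shape_K, size=n)          # p.d.f. K k^(K-1) exp(-k^K)
    k = (k - k.min()) / (k.max() - k.min())   # map to [0, 1]
    return k * k_t                            # peak-to-trough height k_t


# Randomize the parameters within the ranges quoted in the text (H = 1).
rng = np.random.default_rng(42)
shape_K = rng.uniform(0.7, 1.7)
k_t = rng.uniform(0.06, 0.18)
heights = sample_weibull_heights(10_000, shape_K, k_t)
```

Rescaling by the sample minimum and maximum guarantees that `heights.max() - heights.min()` equals the chosen `k_t`, mirroring the definition $k_t=\max(k)-\min(k)$.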

The PS of the roughness samples in the repository is controlled by two randomized parameters, namely the roll-off length $L_r$ (Jacobs, Junge & Pastewka 2017) and the power-law decline rate $\theta_{PS}$ (Lyashenko, Pastewka & Persson 2013), whose values are selected in the ranges $0.1<L_r/(\log(\lambda_0/\lambda_1))<0.6$ and $-3<\theta_{PS}<-0.1$. Here, $\lambda_0$ and $\lambda_1$ represent the upper and lower bounds of the PS, i.e. the largest and smallest wavelengths forming the roughness topography. Random perturbations are added to the PS to increase its randomness. The lower bound of the roughness wavelength is set to $\lambda_1=0.04H$ to ensure that the finest structures can be discretized by an adequate number of grid points. The upper bound of the roughness wavelength $\lambda_0$ is selected randomly in the range $0.5H<\lambda_0<2H$. As will be discussed later, the roughness sample size and the simulation domain size should both be adjusted to accommodate this wavelength.
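To make the roles of the band limits, the roll-off and the power-law decline concrete, the following sketch evaluates a generic band-limited PS model: flat between the largest wavelength and a roll-off wavelength, and decaying as a power law at smaller wavelengths. The functional form, the use of a plain roll-off wavelength `lam_r` (rather than the normalized $L_r$ of the text), and all names are assumptions for illustration; the exact parametrization used for the repository is not reproduced here.

```python
import numpy as np


def model_ps(q, lam0, lam1, lam_r, theta):
    """Band-limited PS: flat for q < q_r, power law (q/q_r)**theta above.

    q      : wavenumber magnitude(s), q = 2*pi/wavelength
    lam0   : largest wavelength in the topography
    lam1   : smallest wavelength in the topography
    lam_r  : hypothetical roll-off wavelength, lam1 < lam_r < lam0
    theta  : power-law decline rate (negative)
    """
    q = np.asarray(q, dtype=float)
    q0, q1, qr = 2 * np.pi / lam0, 2 * np.pi / lam1, 2 * np.pi / lam_r
    # max(q, qr)/qr equals 1 below the roll-off and q/qr above it.
    ps = (np.maximum(q, qr) / qr) ** theta
    ps[(q < q0) | (q > q1)] = 0.0  # no content outside [lam1, lam0]
    return ps


# Example with H = 1: lam0 = 2H, lam1 = 0.04H, roll-off at 0.5H, theta = -2.
qr = 2 * np.pi / 0.5
ps_values = model_ps([1.0, 0.5 * qr, 2.0 * qr, 200.0], 2.0, 0.04, 0.5, -2.0)
```

With these inputs the first and last wavenumbers fall outside the band and give zero, the second lies on the flat plateau, and the third is attenuated by the factor $2^{-2}$.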

Eventually, 4200 separate pairs of p.d.f. and PS are generated using the described random process, each leading to one rough surface added to the repository $\mathcal{U}$. A representation of the parameter space covered by these samples is illustrated in § 3.1. Moreover, examples of the generated samples can be seen in Appendix A.

2.2. Direct numerical simulations

Direct numerical simulations are employed to solve the turbulent flow over selected rough surfaces from the repository in a plane channel driven by a constant pressure gradient. Each simulation leads to determination of the $k_s$ value for the respective roughness sample, a practice referred to as ‘labelling’ in this paper. The DNS are performed with the pseudo-spectral Navier–Stokes solver SIMSON (Chevalier et al. 2007). Fourier and Chebyshev series are employed for the discretization in the wall-parallel and wall-normal directions, respectively. Time integration is carried out using a third-order Runge–Kutta method for the advective and forcing terms, and a second-order Crank–Nicolson method for the viscous terms. The roughness representation in the fluid domain is based on the immersed boundary method (IBM) of Goldstein, Handler & Sirovich (1993). The code and the IBM have been validated previously and used in several publications (Forooghi et al. 2018a; Vanderwel et al. 2019; Yang et al. 2022). The solved Navier–Stokes equations read

\begin{equation} \boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{u}=0,\quad \frac{\partial\boldsymbol{u}}{\partial t}+\boldsymbol{\nabla}\boldsymbol{\cdot}(\boldsymbol{u}\boldsymbol{u})=-\frac{1}{\rho}\,\boldsymbol{\nabla}p+\nu\,\nabla^2\boldsymbol{u}-\frac{1}{\rho}\,P_x\boldsymbol{e}_x+\boldsymbol{f}_{IBM}, \end{equation}

where $\boldsymbol{u}=(u,v,w)^{\rm T}$ is the velocity vector, and $P_x$ is the mean pressure gradient in the flow direction added as a constant and uniform source term to the momentum equation to drive the flow. Moreover, $p$, $\boldsymbol{e}_x$, $\rho$, $\nu$ and $\boldsymbol{f}_{IBM}$ denote pressure fluctuation, streamwise unit vector, density, kinematic viscosity and external body force term due to the IBM, respectively. Periodic boundary conditions are applied in the streamwise and spanwise directions. The friction Reynolds number is defined as $Re_\tau=u_\tau(H-k_{md})/\nu$, where $u_\tau=\sqrt{\tau_w/\rho}$ and $\tau_w=-P_x(H-k_{md})$ are the friction velocity and the wall shear stress, respectively. Here, $H$ and $H-k_{md}$ are the channel half-heights without and with roughness, $k_{md}$ being the mean (meltdown) roughness height. In the present work, all simulations are performed at $Re_\tau=800$.

Due to the high computational demand of many DNS, the concept of DNS in minimal channels (Chung et al. 2015; MacDonald et al. 2016) is adopted for the considered simulations. Recently, Yang et al. (2022) showed the applicability of this concept for flow over irregular roughness subject to certain criteria. Accordingly, a roughness function over a rough surface can be predicted accurately by a comparison of mean velocity profiles in smooth and rough minimal channels if the size of the channels satisfies the following conditions:

\begin{equation} L_z^+\geq \max\left(100,\frac{\tilde{k}^+}{0.4},\lambda_0^+\right),\quad L_x^+\geq \max\left(1000,3L_z^+,\lambda_0^+\right). \end{equation}

Here, $L_z$ and $L_x$ are the spanwise and streamwise extents of the minimal channel, respectively, $\lambda_0$ is the largest wavelength in the roughness spectrum, and $\tilde{k}$ is the characteristic physical roughness height. The plus superscript indicates viscous scaling hereafter. The above condition suggests that the minimal channel size of each roughness should be determined based on $\lambda_0$ (which in practice defines the strictest constraint). As described before, $\lambda_0$ is known for each generated roughness sample. Table 1 summarizes the simulation set-up for all DNS based on the respective $\lambda_0$ value. Due to the different sizes of the simulation domains, the chosen numbers of grid points differ according to the domain size, but in all cases, $\varDelta_{x,z}^+\leq 4$. In the wall-normal direction, a cosine-stretched mesh is adopted for the Chebyshev discretization. The mesh independence is confirmed in a set of additional tests.
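The minimal-channel size conditions can be evaluated directly. The sketch below returns the smallest spanwise and streamwise extents (in viscous units) that satisfy both inequalities; the function name and the example input values are hypothetical.

```python
def minimal_channel_size(k_tilde_plus: float, lambda0_plus: float):
    """Smallest (L_z^+, L_x^+) satisfying the minimal-channel conditions."""
    lz_plus = max(100.0, k_tilde_plus / 0.4, lambda0_plus)
    lx_plus = max(1000.0, 3.0 * lz_plus, lambda0_plus)
    return lz_plus, lx_plus


# Example: a large lambda_0^+ dominates both constraints.
size_large = minimal_channel_size(k_tilde_plus=80.0, lambda0_plus=1600.0)
# Example: for small roughness scales the fixed lower bounds take over.
size_small = minimal_channel_size(k_tilde_plus=20.0, lambda0_plus=50.0)
```

In the first example $\lambda_0^+$ sets the spanwise extent and $3L_z^+$ sets the streamwise extent, which illustrates why $\lambda_0$ is in practice the controlling constraint.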

Table 1. Simulation set-ups.

For each investigated roughness, $\Delta U^+$ is determined from the offset in the logarithmic velocity profile by comparing corresponding rough and smooth DNS. Notably, when plotting mean velocity profiles, a zero-plane displacement $y_0$ is applied in order to achieve parallel velocity profiles in the logarithmic layer, where $y_0$ is determined as the moment centroid of the drag profile on the rough surface following Jackson's method (Jackson 1981). It is worth noting that in the extensive literature on rough wall-bounded turbulent flows, various definitions of $y_0$ have been proposed, and furthermore, the choice of virtual wall position can affect the predicted rough-wall shear stress $\tau_w$ and thus the resulting $k_s$ value (Chan-Braun, García-Villalba & Uhlmann 2011). Therefore, it is important to recognize this as a possible source of uncertainty, and to take into account the definitions of $\tau_w$ and $y_0$ when comparing data from different sources.

It is also important to determine if the flow has reached the fully rough regime in each simulation. To this end, $\Delta U^+$ is combined with (1.1) to yield a testing value of $k_s^+$. Then, following the threshold adopted by Jouybari et al. (2021), a roughness with $k_s^+\geq 50$ is deemed to be in the fully rough regime, and all samples not matching this criterion are excluded from the training or testing process. The selected threshold $k_s^+\geq 50$ is somewhat lower than the common threshold of $k_s^+\geq 70$ (Flack & Schultz 2010) and thus may introduce into the database some data points with limited transitionally rough behaviour. This threshold is, however, chosen deliberately as a trade-off to maximize the number of training data given the limited computational resources. One should note that an increase in the threshold value of $k_s^+$ while maintaining the same parameter space would be possible by increasing $Re_\tau$. This would, however, lead to an obvious compromise in the final performance of the model by reducing the number of training data points at a given computational cost.
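The fully rough check described above can be sketched as follows. This is a minimal illustration that assumes (1.1) takes the usual fully rough form $\Delta U^+=(1/\kappa )\ln k_s^+ + B - 8.5$; the constants `KAPPA` and `B_SMOOTH` and both function names are assumptions made for this sketch and are not taken from the paper.

```python
import math

KAPPA = 0.4      # von Karman constant (assumed value)
B_SMOOTH = 5.2   # smooth-wall log-law intercept (assumed value)
B_ROUGH = 8.5    # fully rough intercept in Nikuradse's scaling (assumed value)


def ks_plus_from_delta_u(delta_u_plus):
    """Invert the assumed relation dU+ = ln(ks+)/KAPPA + B_SMOOTH - B_ROUGH."""
    return math.exp(KAPPA * (delta_u_plus - B_SMOOTH + B_ROUGH))


def is_fully_rough(delta_u_plus, threshold=50.0):
    """Return True if the testing value of ks+ reaches the chosen threshold."""
    return ks_plus_from_delta_u(delta_u_plus) >= threshold
```

With these assumed constants, the threshold $k_s^+=50$ corresponds to $\Delta U^+\approx 6.5$, so a sample with a smaller roughness function would be excluded from training and testing.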

Overall, 85 roughness samples are DNS-labelled and eventually included in the labelled data set $\mathcal {L}$ to train the final AL-based model. The procedure for selection of these training samples is explained in detail in the following. Eight out of the 85 labelled samples are located in the range of $50\leqslant k_s^+\leqslant 70$. We observe that incorporating these samples into the training process improves model performance. This improvement can be attributed both to the incorporation of more informative samples according to AL and to the regularization effect of data diversity introduced by including transitionally rough training samples, which makes the model more robust and mitigates over-fitting (Bishop 1995; Reed & Marks 1999).

2.3. Machine learning

The ML model in the present work is constructed in a QBC fashion by building an ensemble neural network (ENN) model consisting of 50 independent neural networks (NNs) with identical architecture as the ‘committee’ members. Similar to the methods proposed by Raychaudhuri & Hamey (1995) and Burbidge, Jem & King (2007), the prediction uncertainty of the ENN model is defined as the variance of the predictions among the members, $\sigma _{k_r}$.

The workflow of the AL framework is sketched in figure 1. Two collections of roughness samples are included in the framework. These are the (unlabelled) repository $\mathcal {U}$ and the (labelled) training data set $\mathcal {L}$. As a starting point in the AL framework, 30 samples are selected randomly from the repository, labelled (i.e. their $k_s$ is calculated) through DNS, and used to train a first ENN model, which is referred to as the ‘base model’. This preliminary base model is subsequently improved throughout multiple AL iterations. In each AL iteration, approximately 20 new roughness samples from $\mathcal {U}$ are DNS-labelled and added to $\mathcal {L}$ for training of the ENN. These are the samples in $\mathcal {U}$ with the highest prediction variances according to the most recent ENN. This QBC strategy leads to an effective exploration of the repository and adds the new data in the most uncertain regions of the parameter space.
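The selection step of one AL iteration can be sketched as below. This is an illustrative outline only: the function name `select_most_uncertain` and the array layout (one row per committee member, one column per sample in $\mathcal {U}$) are assumptions, and the DNS labelling and retraining that follow the selection are not shown.

```python
import numpy as np


def select_most_uncertain(member_predictions, n_new=20):
    """Pick the samples on which the committee members disagree most.

    member_predictions: array of shape (n_members, n_samples) holding the
    k_r prediction of every member for every unlabelled sample.
    Returns the indices of the n_new samples with the largest spread,
    together with the spread of all samples.
    """
    preds = np.asarray(member_predictions, dtype=float)
    sigma = preds.std(axis=0)            # spread across members, per sample
    order = np.argsort(sigma)[::-1]      # sample indices, high to low spread
    return order[:n_new], sigma
```

The returned indices identify the samples that would be sent to DNS for labelling and then moved from $\mathcal {U}$ to $\mathcal {L}$.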

Figure 1. Schematic of the AL framework.

The function of the ENN model is to regress the (dimensionless) equivalent sand-grain roughness $k_r=k_s/k_{99}$, and to calculate the variance of the predictions $\sigma _{k_r}$ as a basis for QBC ($k_{99}$ is the 99 % confidence interval of the roughness p.d.f., which is used as the representative physical scale of roughness height in this paper). The ENN is composed of multiple NNs with the same structure, which is shown in figure 2. The input vector $\boldsymbol {I}$ of the NN contains the discretized roughness p.d.f. and PS along with three additional characteristic features of the rough surface, i.e. $k_{t}/k_{99}$ and the normalized largest and smallest roughness wavelengths $\lambda _0^*=\lambda _0/k_{99}$ and $\lambda _1^*=\lambda _1/k_{99}$, respectively. The input elements in $\boldsymbol {I}$ that represent the roughness p.d.f. and PS are obtained by discretizing equidistantly the roughness p.d.f. and PS each into 30 values within the height range $0< k< k_{t}$ and the wavenumber range $2{\rm \pi} /\lambda _1>2{\rm \pi} /\lambda >2{\rm \pi} /\lambda _0$.

Each NN in the ensemble is constructed with one input layer with 63 ($3+30+30$) input elements, three hidden layers with 64, 128 and 32 nonlinear neurons with rectified linear unit (ReLU) activation ($\max \{0,x\}$), and one linear neuron in the output layer. The optimal number of neurons at each layer is determined through a grid search of a range of numbers that achieves the lowest model prediction error on $\mathcal {T}_{inter}$. L2-regularization is applied to the loss function. Adaptive moment estimation (Adam) is employed to train the model. The final prediction of the ENN is defined as the mean prediction over the 50 NNs, namely $\mu _{k_r}=\sum _{i=1}^{50}\hat {k}_{r,i}/50$, where $\hat {k}_{r,i}$ represents the prediction of a single NN, and $i$ is the index of the NN. The prediction variance is calculated as $\sigma _{k_r}=\sqrt {\sum _{i=1}^{50}(\hat {k}_{r,i}-\mu _{k_r})^2/50}$.

It is worth noting that each NN in the ENN model is trained individually on a randomly selected 90 % of the samples in the labelled data set $\mathcal {L}$, while the rest of the samples are used for validation. The initial weights of the neurons in each NN are assigned randomly at the beginning of the training process. In such a way, the diversity among the QBC members is ensured, which is an important factor in determining the generalization of the ENN model (Melville & Mooney 2003). It is important to note that the current ensemble members used in the model are deterministic NNs, and the uncertainty of the training data from DNS is assumed to be minimal. However, when considering experimental training data, where (aleatoric) uncertainties arise from possible measurement errors, the performance of the current ENN approach may be compromised due to its limited capability in handling such uncertainties. In these scenarios, the utilization of probabilistic models – such as Bayesian NNs – may be more suitable as they allow for the explicit incorporation of measurement uncertainties.
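The layer sizes and ensemble statistics described above can be illustrated with a short NumPy sketch. It is not the authors' implementation: training (Adam, L2-regularization, the 90 %/10 % split) is omitted, the weight initialization is an assumed He-style scheme, the ordering of the 63 input elements is assumed, and all function names are hypothetical.

```python
import numpy as np

LAYER_SIZES = [63, 64, 128, 32, 1]   # input, three hidden layers, output


def build_input(kt_star, lambda0_star, lambda1_star, pdf30, ps30):
    """Assemble the 63-element input (assumed order: 3 scalars, p.d.f., PS)."""
    pdf30 = np.asarray(pdf30, dtype=float)
    ps30 = np.asarray(ps30, dtype=float)
    if pdf30.shape != (30,) or ps30.shape != (30,):
        raise ValueError("p.d.f. and PS must each be discretized into 30 values")
    return np.concatenate(([kt_star, lambda0_star, lambda1_star], pdf30, ps30))


def init_member(rng):
    """Random initial weights for one committee member (assumed He-style)."""
    return [(rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)),
             np.zeros(n_out))
            for n_in, n_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:])]


def forward(params, x):
    """ReLU in the hidden layers, a single linear neuron at the output."""
    h = np.asarray(x, dtype=float)
    for w, b in params[:-1]:
        h = np.maximum(0.0, h @ w + b)
    w, b = params[-1]
    return float((h @ w + b)[0])


def ensemble_predict(members, x):
    """Mean prediction and spread across members, as in the formulas above."""
    preds = np.array([forward(p, x) for p in members])
    mu = preds.mean()
    sigma = np.sqrt(np.mean((preds - mu) ** 2))
    return mu, sigma
```

Because each member starts from different random weights, untrained members already disagree; in the framework above, that disagreement after training is what drives the selection of new samples.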

Figure 2. Schematic of a single NN in an ENN.

2.4. Testing data sets

In the present work, three distinct testing data sets are introduced to evaluate the model performance and its universality. The difference among the data sets lies in the nature and origin of the samples that they contain. The first data set, $\mathcal {T}_{inter}$, is composed of 20 samples chosen randomly from $\mathcal {U}$ that have never been seen by the model during the training process.

Despite the fact that the employed roughness generation method can generate irregular, multi-scale surfaces resembling realistic roughness, we test the model separately for additional rough surfaces extracted from scans of naturally occurring roughness, which form the second testing data set, $\mathcal {T}_{{ext,1}}$. There are five samples in this ‘external’ data set. These include roughness generated by ice accretion (Velandia & Bansmer 2019), deposits in an internal combustion engine (Forooghi et al. 2018b), and a grit-blasted surface (Thakkar et al. 2017). In addition to that, we test the model against a second external data set, $\mathcal {T}_{{ext,2}}$, which contains irregular roughness samples from the database provided by Jouybari et al. (2021). In this data set, many roughness samples are generated by placing ellipsoidal elements of different sizes and orientations on a smooth wall, making them rather distinct from the type of roughness used to train the model. We separate this testing data set from the other two as it contains a specific type of artificial roughness.

3. Results

3.1. Assessment of the AL framework

In this subsection, we explore if the AL framework enhances the training behaviour of the model. To do so, we compare a model trained with AL-selected data points to one trained with an arbitrary selection of data points. To avoid the computational cost of running many eventually unused DNS, the comparison is made for only one AL iteration. Figure 3 shows all p.d.f. and PS pairs contained in the repository $\mathcal {U}$ (grey) and those randomly selected for the initial base model (green), as well as those selected for further training (other colours). The wide range of available roughness can be understood from the area covered by grey curves. As explained before, once the base model is trained using the initial randomly selected data set, it is used to determine which samples from the repository $\mathcal {U}$ should be selected for the next round of training. In figure 4(a), the green line shows the prediction variance $\sigma _{k_r}$ of all roughness samples in the repository based on the base model. Here, the abscissa is the sample number sorted from high to low $\sigma _{k_r}$ values. According to the AL framework, the samples selected for the next round are the ones with the largest $\sigma _{k_r}$. These are shown in red in figure 3. For comparison, a second sampling strategy (denoted as EQ) is employed in which the same number of samples as in AL are selected, but they are distributed equidistantly along the abscissa of figure 4(a). These samples are shown in blue in figure 3. It is observed clearly in figure 3 that the AL model explores surfaces that are least similar to those in the initial data set (green) and tends to cover the entire repository, with a higher weight given to the marginal cases.

Furthermore, the parameter distributions as well as the corresponding $k_r$ values of the roughness selected by means of AL and EQ are compared in the insets of figure 4. It can be seen that both the AL and EQ models generally prioritize selecting samples within the waviness regime, i.e. effective slope $ES<0.35$ (Napoli et al. 2008). This preference may arise from the fact that the resulting drag in the waviness regime ($ES<0.35$) is sensitive to changes in $ES$ (Schultz & Flack 2009). Conversely, beyond this regime ($ES > 0.35$), the resulting $\Delta U^+$ saturates in relation to increasing $ES$, making these samples less interesting for both labelling strategies. On the other hand, the AL model particularly tends to sample the roughness with positive skewness and low correlation length. This can similarly be a result of the roughness effect being highly sensitive to the variations in roughness statistics within these ranges of parameters, which is in line with previous findings (Schultz & Flack 2009; Busse & Jelly 2023).
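The EQ reference strategy can be sketched as follows, for comparison with the AL rule of taking the largest $\sigma _{k_r}$ values. The sketch assumes that the equidistant positions include both ends of the sorted abscissa, which the text does not specify; the function name `eq_indices` is hypothetical.

```python
import numpy as np


def eq_indices(sigma, n_new):
    """Pick n_new samples spaced equidistantly along the sigma-sorted abscissa.

    sigma: prediction spread of every sample in the repository.
    Returns the indices (into sigma) of the selected samples.
    """
    sigma = np.asarray(sigma, dtype=float)
    order = np.argsort(sigma)[::-1]                      # high to low sigma
    positions = np.linspace(0, len(order) - 1, n_new)    # assumed: ends included
    return order[np.round(positions).astype(int)]
```

In contrast to the AL selection, which takes only the leading entries of the sorted list, this rule spreads the new samples over the whole range of prediction spread.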

Figure 3. Plots of (a) PS and (b) p.d.f. of 4200 roughness samples in the roughness repository (grey). The samples selected for training are distinguished with different colours. While the AL model tends to explore the PS and p.d.f. domain, the EQ model contains samples that lie close to the known initial database.

Figure 4. (a) Prediction variance $\sigma _{k_r}$ obtained by three different models for all the samples in repository $\mathcal {U}$. (b) The average error obtained by the three models for 10 high-variance samples and 10 low-variance samples in $\mathcal {T}_{inter}$ (sorted based on the variance of the base model). The total averaged errors are displayed in the legend. Insets show the distribution of the statistical parameters as well as the corresponding $k_r$ of the new samples with AL and EQ sampling strategies with identical colour code.

Subsequently, two separate models are trained based on the AL and EQ strategies. These models are applied separately to determine the variance of prediction for roughness in the repository, and the results are depicted in figure 4(a) using red and blue lines. It is evident from the results that both the AL and EQ models generally reduce the prediction variance. However, a more substantial decline in the values of $\sigma _{k_r}$ is achieved by the AL model. This is the expected behaviour as AL is designed to reduce the prediction uncertainty by targeting regions of the parameter space where the uncertainty is the largest. Interestingly, some increase in $\sigma _{k_r}$ of the EQ model can be observed for a number of samples with very high $\sigma _{k_r}$, which can be a sign that the performance of the EQ model in the ‘difficult’ tasks deteriorates as it is not trained well for those tasks due to ineffective selection of its training data. Moreover, the prediction errors (calculated based on correct $k_s$ values of testing data set $\mathcal {T}_{inter}$ obtained by DNS) are illustrated in figure 4(b). The averaged prediction errors, $Err$, achieved by the base model, the AL model and the EQ model for the entire $\mathcal {T}_{inter}$ are 19.1 %, 16.0 % and 22.0 %, respectively. While the AL model yields a meaningful reduction in $Err$, the overall performance of the EQ model deteriorates, possibly due to over-fitting, which in our case refers to the condition where the model is trained to fit a limited number of relatively similar data points so precisely that its ability to extrapolate to dissimilar testing data is degraded (Hastie, Tibshirani & Friedman 2009). To better analyse this observation, the testing data set $\mathcal {T}_{inter}$ is split evenly into two subsets according to their $\sigma _{k_r}$, namely the high- and low-variance subsets. The $Err$ values for both high- and low-variance subsets are illustrated in the figure. It is clear that while the EQ strategy improves the model performance for the already low-variance test data, its error increases for high-variance test data, which can be taken as an indication of over-fitting as described above. The AL sampling strategy, in contrast, seems to protect the model from over-fitting – especially in the circumstance of a small training data set – hence the error is reduced for both high- and low-variance test data as a result of effective selection of training data.

3.2. Performance of the final model

Having demonstrated the advantage of AL over random sampling, three additional AL iterations are carried out. The distributions of the PS and p.d.f. of the selected roughness from the second to the fourth AL iterations are displayed in figure 3 with black lines. A number of roughness maps from each AL round are also displayed in Appendix A.

The total number of data points for training of the model after four iterations adds up to 85; these are the data that form $\mathcal {L}$. The scatter plots of some widely investigated roughness parameters in $\mathcal {L}$ as well as in the unlabelled repository $\mathcal {U}$ are displayed in the lower left part of figure 5(a). In the figure, $k(x,z)$ is the elevation map of the roughness, $Sk=1/(S\,k_{rms}^3)\int _S(k-k_{md})^3\,\mathrm {d}S$ represents the skewness, where $S$ is the wall-projected surface area, and $k_{md}=(1/S)\int _Sk\,\mathrm {d}S$ is the meltdown height of the roughness. The effective slope is defined as $ES =(1/S)\int _S|\partial k/\partial x|\,\mathrm {d}S$. Here, $L^{Corr}$ is the correlation length representing the horizontal separation at which the roughness height autocorrelation function drops under 0.2. An inverse correlation can be observed between $L^{Corr}$ and $ES$, which is expected as roughness with larger dominant wavelength tends to have lower mean slope. The distribution of other statistics in $\mathcal {U}$ appears to be reasonably random.
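The statistics defined above can be evaluated on a discrete elevation map as in the following sketch. It assumes a uniformly sampled, periodic height map with the streamwise direction $x$ along the first array axis, and it takes the correlation length along $x$ only; these choices and the function names are assumptions for illustration.

```python
import numpy as np


def roughness_statistics(k, dx):
    """Return (k_md, Sk, ES) for a height map k[x, z] with grid spacing dx."""
    k = np.asarray(k, dtype=float)
    k_md = k.mean()                                  # meltdown height
    fluct = k - k_md
    k_rms = np.sqrt(np.mean(fluct ** 2))
    sk = np.mean(fluct ** 3) / k_rms ** 3            # skewness
    # Periodic central difference for dk/dx (periodicity is assumed)
    dkdx = (np.roll(k, -1, axis=0) - np.roll(k, 1, axis=0)) / (2.0 * dx)
    es = np.mean(np.abs(dkdx))                       # effective slope
    return k_md, sk, es


def correlation_length(k, dx, threshold=0.2):
    """Smallest x-separation at which the autocorrelation drops below threshold."""
    k = np.asarray(k, dtype=float)
    fluct = k - k.mean()
    # Circular autocorrelation along x via FFT, averaged over z
    spectrum = np.abs(np.fft.fft(fluct, axis=0)) ** 2
    acf = np.fft.ifft(spectrum, axis=0).real.mean(axis=1)
    acf = acf / acf[0]
    below = np.nonzero(acf < threshold)[0]
    return below[0] * dx if below.size else float("nan")
```

For a purely sinusoidal surface of unit amplitude and wavelength $\lambda$, this gives $Sk\approx 0$ and $ES\approx 4/\lambda$, which is a convenient sanity check before applying the functions to irregular roughness.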

Figure 5. (a) Pair plots of roughness statistics. Lower left: the distributions of the samples in $\mathcal {U}$ (grey) and $\mathcal {L}$ (green). Diagonal: histograms of single roughness statistics in $\mathcal {U}$. Upper right: joint probability distributions of statistics overlaid by test data in $\mathcal {T}_{inter}$ (orange) and $\mathcal {T}_{{ext,1\&2}}$ (purple). (b) Values of $k_r=k_s/k_{99}$ obtained from DNS (ground truth) as a function of the selected statistics. Colour code is the same as in (a).

For the sake of comparison, the test data are additionally represented in the upper right part of figure 5(a), with orange (for $\mathcal {T}_{inter}$) and purple (for $\mathcal {T}_{{ext, 1\&2}}$) symbols. It is worth noting that only the roughness samples that lie in the fully rough regime at the currently investigated $Re_\tau$ are included in $\mathcal {L}$ and shown in the figure. Figure 5(b) shows the values of $k_r$ (from DNS) against the three roughness statistics for all labelled data in the training and testing data sets. As can be observed clearly in the figure, while equivalent sand-grain roughness shows some general correlation with each of these statistics (increasing with $Sk$ and $ES$, decreasing with $L^{Corr}$), the collapse of data is far from perfect. Clearly, no single roughness statistic can capture entirely the effect of an irregular multi-scale roughness topography on drag, which is essentially a motivation behind seeking an NN-based model to find the functional relation between $k_s$ and a higher-order representation of roughness (here p.d.f. and PS).

Eventually, the final model is trained on the entire labelled data set $\mathcal {L}$. The mean and maximum error values achieved by this model on all three testing data sets, as well as those errors after each training round, are displayed separately in figure 6. The figure shows a generally decreasing trend in both mean and maximum error as the model is trained progressively for more AL rounds, despite some exceptions to the general trend in the first two rounds when the number of data points is low. It is notable that the AL model is particularly successful in bringing down the maximum error, and hence can be considered reliable over a wide range of scenarios.

Figure 6. The arithmetically averaged $Err$ (%) as well as maximum $Err$ of the model after different training rounds on each of the testing data sets $\mathcal {T}_{inter}$, $\mathcal {T}_{ext,1}$ and $\mathcal {T}_{ext,2}$. The mean $Err$ is represented with a closed circle, while the maximum $Err$ is displayed with an open circle of corresponding colour. The maximum $Err$ for $\mathcal {T}_{{ext,2}}$ at AL round 1 is out of the plot range.

Notably, the model performs consistently well on three testing data sets of different natures. While the data set $\mathcal {T}_{inter}$ covers an extensive parameter space – hence containing more extreme cases – it is generated employing the same method as the training data. Therefore, to avoid a biased evaluation of the model, two ‘external’ testing data sets from the literature are also included. The data set $\mathcal {T}_{{ext,2}}$ is believed to be particularly challenging for the model, since it is formed by roughness generated artificially using discrete elements (Jouybari et al. 2021), which is fundamentally different from the target roughness of this study. Nevertheless, the final model yields very similar errors for all data sets, which can be taken as an indication of its generalizability. The averaged errors of the final model within the data sets $\mathcal {T}_{inter}$, $\mathcal {T}_{{ext,1}}$ and $\mathcal {T}_{{ext,2}}$ are approximately 9.3 %, 5.2 % and 10.2 %, respectively.

It is crucial to acknowledge that the present model is developed under the assumption of statistical surface homogeneity. Beyond this assumption, surface heterogeneity introduces additional complexity to the problem that cannot be represented adequately by the current training samples. As a consequence, the effect of heterogeneous roughness structures (Hinze 1967; Stroh et al. 2020) cannot be accounted for adequately by the current model.

3.3. Data-driven exploration of drag-relevant roughness scales

The fact that naturally occurring roughness usually has a multi-scale nature with a continuous spectrum is well established (Sayles & Thomas 1978). How the spectral content of roughness affects skin-friction drag, and whether a certain range of length scales dominates it, are, however, questions that have received attention more recently (Anderson & Meneveau 2011; Mejia-Alvarez & Christensen 2010; Barros et al. 2018; Medjnoun et al. 2021). In this sense, Busse et al. (2015) applied low-pass Fourier filtering to a realistic roughness and observed no significant effect on skin-friction drag when the filtered wavelengths were shorter than a certain threshold. On the other hand, Barros et al. (2018) used high-pass filtering and suggested that very large length scales may not contribute significantly to drag. Alves Portela et al. (2021) examined three filtered surfaces, each maintaining one-third of the original spectral content associated with large, intermediate or small scales. In all cases, the filtered scales were shown to include ‘drag-relevant’ information. While both lower and upper limits of drag-relevant scales (if they exist) can be a matter of discussion, the present study focuses mainly on the latter. Possibly related to that question, Schultz & Flack (2009) documented that the equivalent sand-grain size of pyramid-like roughness with wavelengths above a certain value (hence lower effective slopes) does not scale in the same way as that of roughness with smaller wavelengths. These authors coined the term ‘wavy’ for the high-wavelength roughness behaviour. Later, Yuan & Piomelli (2014a) revealed that the wavy regime may emerge at a different threshold (in terms of effective slope) in a multi-scale roughness compared to the single-scale pyramid-like roughness. Recently, Yang et al. (2022) showed that the spectral coherence of roughness topography and time-averaged drag force on a rough wall drops at large streamwise wavelengths, which, in line with the finding of Barros et al. (2018), suggests decreasing drag relevance of large scales.
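The low-pass and high-pass filtering operations mentioned above can be illustrated with a generic sharp spectral cutoff. The sketch below is not the procedure of any of the cited studies; it assumes a periodic height map with equal grid spacing `dx` in both directions, and the function name `fourier_filter` and its parameters are hypothetical.

```python
import numpy as np


def fourier_filter(k, dx, cutoff_wavelength, mode="low"):
    """Sharp spectral filter of a periodic height map.

    mode="low" keeps wavelengths longer than or equal to the cutoff;
    mode="high" keeps wavelengths shorter than the cutoff (plus the mean).
    """
    ny, nx = k.shape
    qx = np.fft.fftfreq(nx, d=dx)  # cycles per unit length
    qy = np.fft.fftfreq(ny, d=dx)
    q = np.hypot(*np.meshgrid(qx, qy))  # radial wavenumber, shape (ny, nx)
    qc = 1.0 / cutoff_wavelength
    keep = (q <= qc) if mode == "low" else ((q > qc) | (q == 0))
    return np.fft.ifft2(np.fft.fft2(k) * keep).real


# Two-scale test surface: a long and a short streamwise wave
n = 64
dx = 1.0 / n
x = np.arange(n) * dx
long_wave = np.tile(np.sin(2 * np.pi * x / 0.5), (n, 1))      # wavelength 0.5
short_wave = np.tile(np.sin(2 * np.pi * x / 0.0625), (n, 1))  # wavelength 0.0625
surface = long_wave + short_wave

low_passed = fourier_filter(surface, dx, cutoff_wavelength=0.2, mode="low")
high_passed = fourier_filter(surface, dx, cutoff_wavelength=0.2, mode="high")
```

With the cutoff placed between the two wavelengths, the low-pass result retains only the long wave and the high-pass result only the short wave, which is how such filtering isolates the contribution of a given range of scales.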

In the present work, we are particularly interested in exploring the possibility of extracting the drag-relevant scales from the knowledge embedded in the data-driven model. In doing so, we employ the layer-wise relevance propagation (LRP) technique (Bach et al. 2015), which has proven successful previously in other contexts as a way to interpret decisions of NN models (Samek et al. 2017; Arras et al. 2017). LRP is an instance-based technique, which can be used to quantify the contribution of each input feature (here points in discretized p.d.f. and PS) to the output of the model (here $k_r=k_s/k_{99}$) for a single test case (here a roughness sample). According to this technique, the contribution score (or relevance) of neuron $j$ at each layer of the deep NN can be expressed as

\begin{equation} R_j=\sum_l \left (\frac{a_jw_{jl}}{\sum_{j}a_jw_{jl}} \right )R_l, \tag{3.1} \end{equation}

where $R_l$ is the contribution score of neuron $l$ in the subsequent layer. In (3.1), $w$ and $a$ are the weight and activation of the neuron that are obtained when the model is used to predict one instance (here the $k_r$ for the roughness sample of interest). Note that in our NN, the last layer corresponds to the predicted output, and the first layer to the input roughness information. For better interpretability, we assign the value 1 to the contribution score (or relevance) of the output neuron. As a result, the sum of contribution scores of all inputs must be 1. Note that the contribution scores shown in this section are averaged over the 50 NN members.
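The propagation rule (3.1) can be sketched for a small fully connected network as follows. This is an illustrative toy, not the architecture or code of the present model: it assumes bias-free layers with ReLU hidden activations and a single linear output, the function name `lrp_dense` and the weights are hypothetical, and a guard against a zero denominator is added as an extra assumption.

```python
import numpy as np


def lrp_dense(weights, x):
    """Input relevance scores for a bias-free ReLU network with one linear output."""
    # Forward pass, storing the activations a of every layer
    activations = [np.asarray(x, dtype=float)]
    for i, W in enumerate(weights):
        z = activations[-1] @ W
        is_last = i == len(weights) - 1
        activations.append(z if is_last else np.maximum(z, 0.0))

    # Backward pass: the relevance of the output neuron is set to 1
    R = np.ones_like(activations[-1])
    for W, a in zip(reversed(weights), reversed(activations[:-1])):
        z = a @ W                        # sum_j a_j w_jl for each neuron l
        z = np.where(z == 0.0, 1.0, z)   # guard against 0/0 (assumption)
        R = a * (W @ (R / z))            # R_j = sum_l (a_j w_jl / z_l) R_l
    return R


# Toy network: 3 inputs -> 2 hidden neurons -> 1 output (hypothetical weights)
W1 = np.array([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]])
W2 = np.array([[2.0], [0.5]])
scores = lrp_dense([W1, W2], [1.0, 2.0, 3.0])
total = scores.sum()
```

In this toy case the first input receives a negative score while the scores still sum to 1, consistent with the normalization described above. For an ensemble, the scores of the individual members would simply be averaged.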

In order to extract drag-relevant scales, we consider the following idea. A wavelength that does not affect ![]() $k_s$ (which is a measure of added drag) still contributes to an increasing variance of the roughness height, and hence

$k_s$ (which is a measure of added drag) still contributes to an increasing variance of the roughness height, and hence ![]() $k_{99}$. Therefore, the related output of the NN, which is the ratio

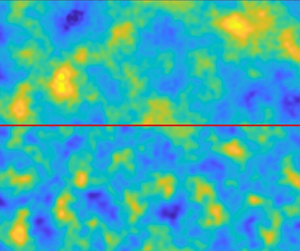

$k_{99}$. Therefore, the related output of the NN, which is the ratio ![]() $k_s/k_{99}$, is decreased. An input that decreases the output shows a negative LRP contribution score. With that in mind, figure 7 shows three exemplary roughness samples (named A, B and C) and their discretized PS. Each discrete wavenumber in a PS is an input to the model, thus has a contribution score, which is indicated using the specified colour code. The spectra are shown in pre-multiplied form, and the p.d.f. of each roughness is also displayed. Samples with both Gaussian and non-Gaussian p.d.f.s are included. It is observed in figure 7 that the small wavenumbers (i.e. large wavelengths) generally have more negative contribution scores, which is in accordance to the suggestion of Barros et al. (Reference Barros, Schultz and Flack2018). Indeed, the most negative contributions belong consistently to the largest wavelengths for all samples. On the other hand, smaller wavelengths generally show larger contribution scores, but the trend is not monotonic. This might indicate that drag-relevant scales reside within a certain range of the spectral content.

$k_s/k_{99}$, is decreased. An input that decreases the output shows a negative LRP contribution score. With that in mind, figure 7 shows three exemplary roughness samples (named A, B and C) and their discretized PS. Each discrete wavenumber in a PS is an input to the model, thus has a contribution score, which is indicated using the specified colour code. The spectra are shown in pre-multiplied form, and the p.d.f. of each roughness is also displayed. Samples with both Gaussian and non-Gaussian p.d.f.s are included. It is observed in figure 7 that the small wavenumbers (i.e. large wavelengths) generally have more negative contribution scores, which is in accordance to the suggestion of Barros et al. (Reference Barros, Schultz and Flack2018). Indeed, the most negative contributions belong consistently to the largest wavelengths for all samples. On the other hand, smaller wavelengths generally show larger contribution scores, but the trend is not monotonic. This might indicate that drag-relevant scales reside within a certain range of the spectral content.