1 Introduction

Since the first report of the use of microscopes for observation in the 17th century, optical microscopes have played a central role in helping to untangle complex biological mysteries. Numerous scientific advancements and manufacturing innovations over the past three centuries have led to advanced optical microscope designs with significantly improved image quality. However, due to the physical nature of wave propagation and diffraction, there is a fundamental diffraction barrier in optical imaging systems, which is called the resolution limit. This resolution limit is one of the most important characteristics of an imaging system. In the 19th century, Rayleigh [Reference Rayleigh35] gave a well-known criterion for determining the resolution limit (Rayleigh’s diffraction limit) for distinguishing two point sources, which is extensively used in optical microscopy for analyzing the resolution. The problem of resolving point sources separated by less than the Rayleigh diffraction limit is called super-resolution and is widely regarded as very challenging when only a single snapshot is available. However, Rayleigh’s criterion is based on intuitive notions and is more applicable to observations with the human eye. It also neglects the effect of noise in the measurements and of aberrations in the modeling. Due to the rapid advancement of technologies, modern imaging data is generally captured using intricate imaging techniques and sensitive cameras, and may also be subject to analysis by complex processing algorithms. Thus, Rayleigh’s deterministic resolution criterion is not well adapted to current quantitative imaging techniques, necessitating new and more rigorous definitions of the resolution limit with respect to the noise, model and imaging methods [Reference Ram, Ward and Ober34].

Our previous works [Reference Liu and Zhang27, Reference Liu and Zhang26, Reference Liu and Zhang25, Reference Liu and Ammari22] have made progress in this direction and enable us to understand the performance of some super-resolution algorithms. Nevertheless, the estimates derived there are not yet sharp enough to give practical guidance on when super-resolution is possible.

In this paper, we introduce new and direct insights into the stability of super-resolution problems and sharpen many estimates to the point where they have practical significance. Our findings reveal several new facts; for example, we theoretically demonstrate that super-resolution from a single snapshot is indeed feasible in practice.

1.1 Resolution limit, super-resolution and diffraction limit

1.1.1 Classical criteria for the resolution limit

The resolution of an optical microscope is usually defined as the shortest distance between two points on a specimen that can still be distinguished by the observer or camera system as separate entities, but this definition is in some sense ambiguous. In fact, it does not specify whether only the source number should be detected or whether the source locations should also be stably reconstructed. From the mathematical perspective, recovering the source number and stably reconstructing the source locations are actually two different tasks [Reference Liu and Zhang26] demanding different separation distances.

Historically, the resolution of optical microscopy has focused mainly on correctly detecting the number of sources, rather than on stably recovering their locations. This can be seen from the discussion below of the classical and semi-classical results on the resolution.

In the 18th and 19th centuries, many classical criteria were proposed to determine the resolution limit. For example, Rayleigh regarded two observed point sources as just resolved when the principal diffraction maximum of one Airy disk coincides with the first minimum of the other, as shown in Figure 1. Since the separation of the sources is still relatively large, not only can the source number be detected, but the source locations can also be stably recovered.

Figure 1 Rayleigh’s criterion and Rayleigh’s diffraction limit.

However, Rayleigh’s choice of resolution limit is based on the presumed resolving ability of the human visual system, which at first glance seems arbitrary. In fact, Rayleigh said about his criterion that

‘This rule is convenient on account of its simplicity and it is sufficiently accurate in view of the necessary uncertainty as to what exactly is meant by resolution.’

As is shown in Figure 1, the Rayleigh diffraction limit results in a $\sim 20\%$ dip in intensity between the two peaks of Airy disks [Reference Demmerle, Wegel, Schermelleh and Dobbie9]. Schuster pointed out in 1904 [Reference Schuster38] that the dip in intensity necessary to indicate resolution is a physiological phenomenon and there are other forms of spectroscopic investigation besides that of eye observation. Many alternative criteria were proposed by other physicists as illustrated in Figure 2.
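The size of the dip at the Rayleigh separation can be checked numerically. The sketch below is only an illustration under a simplifying assumption: it uses the one-dimensional intensity profile $\mathrm{sinc}(x)^2$ with $\mathrm{sinc}(x)=\sin(x)/x$ (first zero at $x=\pi$) and two equal incoherent sources, rather than the Airy pattern of a circular aperture, for which the value differs somewhat. The function names are hypothetical.

```python
import numpy as np

def sinc2_psf(x):
    """Intensity profile sinc(x)^2 with the unnormalized sinc(x) = sin(x)/x."""
    # np.sinc is the normalized sinc sin(pi t)/(pi t), so the argument is rescaled.
    return np.sinc(x / np.pi) ** 2

def rayleigh_dip(separation=np.pi):
    """Relative intensity dip at the midpoint between two equal incoherent sources."""
    at_source = sinc2_psf(0.0) + sinc2_psf(-separation)  # intensity at one source position
    midpoint = 2.0 * sinc2_psf(separation / 2.0)         # intensity halfway between the sources
    return 1.0 - midpoint / at_source

dip = rayleigh_dip()
print(f"relative dip at the Rayleigh separation: {dip:.3f}")
```

For this profile the midpoint intensity is $8/\pi^2$ of the peak, so the dip is a little under $20\%$, consistent with the order of magnitude quoted above.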

Figure 2 Different resolution limits.

A more mathematically rigorous criterion was proposed by Sparrow [Reference Sparrow42], who advocated that the resolution limit should be the distance between two point sources at which the image no longer has a dip between the central peaks of the two Airy disks (as illustrated by Figure 2). Under Sparrow’s criterion, the two point sources are so close that the source locations may not be stably resolved although the source number is detected. Indeed, the Sparrow resolution limit is less relevant to practical use [Reference Chen and Moitra6, Reference Demmerle, Wegel, Schermelleh and Dobbie9] because it is very signal-to-noise dependent and has no easy comparison to a readily measured value in real images. Therefore, these classical resolution criteria focus on the smallest distance between two sources above which we can be sure that there are two sources, regardless of whether their locations can be resolved stably or not.

The classical resolution criteria mentioned above deal with calculated images that are described by a known and exact mathematical model of the intensity distribution. However, if one has perfect access to the intensity profile of the diffraction image of two point sources, one could locate the sources exactly despite the diffraction. There would be no resolution limit for the reconstruction. This simple fact has been noticed by many researchers in the field [Reference Toraldo Di Francia12, Reference Den Dekker and van den Bos10, Reference Chen and Moitra6]. Moreover, an imaging system constructed without any aberration or irregularity is not practical because the shape of the point-spread function is never known exactly and the measurement noise is inevitable [Reference Ronchi36, Reference Den Dekker and van den Bos10]. Therefore, a rigorous and practically meaningful resolution limit can only be set when taking into account the aberrations and measurement noise [Reference Ronchi36, Reference Goodman16]. In this setting, the images (detected by detectors in practice) were categorized as detected images by Ronchi [Reference Ronchi36], and their resolution was advocated to be more important to investigate than the resolution defined by those classical criteria. Inspired by this, many researchers have analyzed the two-point resolution from the perspective of statistical inference [Reference Helstrom18, Reference Helstrom19, Reference Lucy29, Reference Lucy28, Reference Goodman16, Reference Shahram and Milanfar39, Reference Shahram and Milanfar40, Reference Shahram and Milanfar41]. For instance, in [Reference Helstrom19], Helstrom has shown that the resolution of two identical objects depends on deciding whether they are both present in the field of view or only one of them is there, and their resolvability is measured by the probability of making this decision correctly. In all the papers mentioned above, the authors have derived quasi-explicit formulas or estimates for the minimum SNR that is required to discriminate two point sources or for the probability of a correct decision. Although the resolution (or the requirement for it) was thoroughly explored in these works, which span several decades, the results are rarely, if ever, used in practical applications due to their complexity.

1.1.2 Concept of super-resolution

We next introduce the concept of super-resolution. Super-resolution microscopy is a series of techniques in optical microscopy that allow images to have resolutions higher than those imposed by the diffraction limit (Rayleigh resolution limit). Due to the breakthroughs in super-resolution techniques in recent years, this topic has become increasingly popular in various fields, and the concept of super-resolution has become very general. Some of the literature claims super-resolution even though, in theory, the sources are required to be separated by a distance above the Rayleigh limit. Bounds on the recovery of the amplitudes (or intensities) of the sources have been derived. Nevertheless, the original concept of super-resolution actually focuses mainly on both detecting the source number and recovering the source locations.

To the best of our knowledge, there is no unique and mathematically rigorous definition of super-resolution. As we have said, number detection and location recovery are two inherently different [Reference Liu and Zhang26] but important tasks in super-resolution; thus, we consider two different notions of super-resolution in the current paper. One is achieving resolution better than the Rayleigh diffraction limit in detecting the correct source number and is named ‘super-resolution in number detection’. The other is achieving resolution better than the Rayleigh diffraction limit in stably recovering the source locations and is named ‘super-resolution in location recovery’.

1.2 Previous mathematical contributions

Before introducing the mathematical contributions of this work, let us first introduce the mathematical model for the imaging problem in k-dimensional space. We consider the collection of point sources as a discrete measure $\mu =\sum_{j=1}^{n}a_{j}\delta_{\mathbf{y}_j}$, where $\mathbf{y}_j \in \mathbb R^k, j=1,\cdots ,n$ represent the locations of the point sources and the $a_j$’s their amplitudes. The imaging problem is to recover the sources $\mu$ from its noisy Fourier data,

$$ \begin{align} \mathbf Y(\boldsymbol{{\omega}}) = \mathscr F[\mu] (\boldsymbol{{\omega}}) + \mathbf W(\boldsymbol{{\omega}})= \sum_{j=1}^{n}a_j e^{i \mathbf{y}_j\cdot \boldsymbol{{\omega}}} + \mathbf W(\boldsymbol{{\omega}}), \ \boldsymbol{{\omega}} \in \mathbb R^k, \ ||\boldsymbol{{\omega}}||_2\leqslant \Omega, \end{align} $$

where $\mathscr F[\cdot ]$ denotes the Fourier transform in the k-dimensional space, $\Omega$ is the cut-off frequency, and $\mathbf W$ represents the total effect of noise and aberrations. We assume that

with $\sigma$ being the noise level. We denote, respectively, the magnitude of the signal and the minimum separation distance between sources by

As most of our analyses are carried out in one-dimensional space, throughout the paper, we use $y_j, \omega$ to denote the one-dimensional source locations and frequencies and reserve $\mathbf{y}_j, \boldsymbol{\omega}$ for the problem in spaces of general dimensionality. In particular, we denote the one-dimensional sources as $\mu =\sum_{j=1}^{n}a_{j}\delta_{y_j}$ and the noisy measurement as

$$\begin{align*}\mathbf Y(\omega) = \sum_{j=1}^{n}a_j e^{i y_j\omega} + \mathbf W(\omega),\quad \omega \in [-\Omega, \Omega]. \end{align*}$$

Model (1) is commonly used in the field of applied mathematics for theoretically analyzing the imaging problem [Reference Donoho13, Reference Candès and Fernandez-Granda5, Reference Batenkov, Goldman and Yomdin3]. It is directly the model in the frequency domain for the imaging modalities with $\mathrm{sinc}(||\mathbf{x}||_2)$ being the point spread function [Reference Den Dekker and van den Bos10]. Its discrete form is also a standard model in array signal processing. Moreover, even for imaging with general point spread functions or optical transfer functions, some imaging enhancements such as the inverse filtering method [Reference Frieden15] will modify the measurements in the frequency domain to (1). These facts ensure the generality of the model (1) in the fields of imaging and array signal processing.
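To make the one-dimensional measurement model concrete, the following minimal sketch samples $\mathbf Y(\omega)=\sum_{j}a_j e^{i y_j\omega}+\mathbf W(\omega)$ on a uniform grid in $[-\Omega,\Omega]$. It is an illustration only: the function name, the uniform frequency grid and the choice of a perturbation drawn uniformly from a disk of radius $\sigma$ are assumptions made here for the example, not part of the model described above.

```python
import numpy as np

def noisy_fourier_data(locations, amplitudes, omega_cut, sigma, num_samples=101, seed=0):
    """Sample Y(omega) = sum_j a_j exp(i y_j omega) + W(omega) on [-omega_cut, omega_cut]."""
    rng = np.random.default_rng(seed)
    omega = np.linspace(-omega_cut, omega_cut, num_samples)
    y = np.asarray(locations, dtype=float)
    a = np.asarray(amplitudes, dtype=complex)
    # clean[k] = sum_j a_j * exp(i * y_j * omega_k)
    clean = np.exp(1j * np.outer(omega, y)) @ a
    # Assumed perturbation: uniform on the open disk of radius sigma, so |W(omega)| < sigma.
    radius = sigma * np.sqrt(rng.uniform(0.0, 1.0, num_samples))
    phase = rng.uniform(0.0, 2.0 * np.pi, num_samples)
    noise = radius * np.exp(1j * phase)
    return omega, clean + noise, clean

# Two unit-amplitude sources separated by half of pi / omega_cut.
omega, measured, clean = noisy_fourier_data([0.0, 0.5 * np.pi], [1.0, 1.0], omega_cut=1.0, sigma=0.1)
print(np.max(np.abs(measured - clean)))  # stays below sigma by construction
```

Any other bounded perturbation could be substituted for the disk-uniform one; only the bound on its modulus matters for this illustration.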

Based on Rayleigh’s criterion, the corresponding resolution limit for imaging with the point spread function $\mathrm{sinc}(||\mathbf{x}||_2)^2$ is $\frac{\pi}{\Omega}$. It was shown by many mathematical studies that $\frac{\pi}{\Omega}$ is also the critical limit for the imaging model (1). To be more specific, in [Reference Donoho13], Donoho demonstrated that for sources on grid points spaced by $\Delta \geqslant \frac{\pi}{\Omega}$, stable recovery is possible from (1) in dimension one, but the stability becomes much worse in the case when $\Delta < \frac{\pi}{\Omega}$. Therefore, in the same way as [Reference Donoho13], we regard $\frac{\pi}{\Omega}$ as the Rayleigh limit in this paper, and super-resolution refers to obtaining a better resolution than $\frac{\pi}{\Omega}$.

For the mathematical theory of super-resolving n point sources or infinitely many point sources, to the best of our knowledge, the first result was derived by Donoho in 1992 [Reference Donoho13]. He developed a theory from the optimal recovery point of view to explain the possibility and difficulties of super-resolution via a sparsity constraint. He considered discrete measures $\mu$ supported on a lattice $\{k\Delta \}_{k=-\infty }^{\infty }$ and regularized by a so-called ‘Rayleigh index’ R. The available measurement is the noisy Fourier data of $\mu$, as in model (1) in a one-dimensional space. He showed that the minimax error $E^*$ for the amplitude recovery with noise level $\sigma$ was bounded as

$$ \begin{align*} \beta_1(R,\Omega)\left(\frac{1}{\Delta}\right)^{2R-1}\sigma\leqslant E^* \leqslant \beta_2(R,\Omega) \left(\frac{1}{\Delta}\right)^{4R+1}\sigma \end{align*} $$

for certain small $\Delta$. His results emphasize the importance of sparsity in super-resolution. In recent years, due to the impressive development of super-resolution modalities in biological imaging [Reference Hell and Wichmann17, Reference Westphal, Rizzoli, Lauterbach, Kamin, Jahn and Hell47, Reference Hess, Girirajan and Mason20, Reference Betzig, Patterson, Sougrat, Lindwasser, Olenych, Bonifacino, Davidson, Lippincott-Schwartz and Hess4, Reference Rust, Bates and Zhuang37] and super-resolution algorithms in applied mathematics [Reference Candès and Fernandez-Granda5, Reference Duval and Peyré14, Reference Poon and Peyré33, Reference Tang, Bhaskar, Shah and Recht45, Reference Tang, Bhaskar and Recht44, Reference Morgenshtern and Candes32, Reference Morgenshtern31, Reference Denoyelle, Duval and Peyré11], the inherent super-resolving capacity of the imaging problem has attracted increasing attention, and the one-dimensional case has been well studied. In [Reference Demanet and Nguyen8], the authors considered resolving the amplitudes of n-sparse point sources supported on a grid and improved the results of Donoho. Concretely, they showed that the scaling of the noise level for the minimax error should be $\mathrm{SRF}^{2n-1}$, where $\mathrm{SRF}:= \frac{1}{\Delta \Omega}$ is the super-resolution factor. Similar results for multi-clump cases were also derived in [Reference Batenkov, Demanet, Goldman and Yomdin2, Reference Li and Liao21]. Recently in [Reference Batenkov, Goldman and Yomdin3], the authors derived sharp minimax errors for the location and the amplitude recovery of off-the-grid sources. They showed that for $\sigma \lesssim (\mathrm{SRF})^{-2p+1}$, where p is the number of nodes that form a cluster of a certain type, the minimax error rate for reconstruction of the clustered nodes is of the order $(\mathrm{SRF})^{2p-2} \frac{\sigma}{\Omega}$, while for recovering the corresponding amplitudes, the rate is of the order $(\mathrm{SRF})^{2p-1}\sigma$. Moreover, the corresponding minimax rates for the recovery of the non-clustered nodes and amplitudes are $\frac{\sigma}{\Omega}$ and $\sigma$, respectively. This was recently generalized to the case of resolving positive sources in [Reference Liu and Ammari23]. We also refer the readers to [Reference Moitra30, Reference Chen and Moitra6] for understanding the resolution limit from the perspective of sample complexity and to [Reference Tang43, Reference Ferreira Da Costa and Chi7] for the resolving limit of some algorithms.

In order to characterize the exact resolution rather than the minimax error in recovering multiple point sources, in the earlier works [Reference Liu and Zhang27, Reference Liu and Zhang26, Reference Liu and Zhang25, Reference Liu and Ammari22, Reference Liu, He and Ammari24], we have defined the so-called ‘computational resolution limits’, which characterize the minimum required distance between point sources so that their number and locations can be stably resolved under a certain noise level. By developing a nonlinear approximation theory in so-called Vandermonde spaces, we have derived sharp bounds for computational resolution limits in the one-dimensional super-resolution problem (1). In particular, we have shown in [Reference Liu and Zhang26] that the computational resolution limits for the number and location recoveries should be bounded above by, respectively, $\frac{4.4e\pi}{\Omega}\left(\frac{\sigma}{m_{\min}}\right)^{\frac{1}{2n-2}}$ and $\frac{5.88 e\pi}{\Omega}\left(\frac{\sigma}{m_{\min}}\right)^{\frac{1}{2n-1}}$, where the noise level $\sigma$ and signal magnitude $m_{\min}$ are defined as in (2) and (3), respectively. In the present paper, we substantially improve these estimates to have practical significance.
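To see why the earlier upper bounds offer limited practical guidance, one can evaluate them against the Rayleigh limit $\frac{\pi}{\Omega}$. The sketch below simply codes the two expressions quoted above; the function names are hypothetical. Setting each bound equal to $\frac{\pi}{\Omega}$ shows that it drops below the Rayleigh limit only once $\frac{m_{\min}}{\sigma}$ exceeds $(4.4e)^{2n-2}$ or $(5.88e)^{2n-1}$, respectively.

```python
import math

def previous_number_bound(sigma, m_min, n, omega_cut):
    """Earlier upper bound for the resolution limit in number detection (n >= 2)."""
    return 4.4 * math.e * math.pi / omega_cut * (sigma / m_min) ** (1.0 / (2 * n - 2))

def previous_location_bound(sigma, m_min, n, omega_cut):
    """Earlier upper bound for the resolution limit in location recovery (n >= 1)."""
    return 5.88 * math.e * math.pi / omega_cut * (sigma / m_min) ** (1.0 / (2 * n - 1))

n = 2
snr_number = (4.4 * math.e) ** (2 * n - 2)     # SNR at which the number bound equals pi / Omega
snr_location = (5.88 * math.e) ** (2 * n - 1)  # SNR at which the location bound equals pi / Omega
print(snr_number, snr_location)
print(previous_number_bound(1.0, snr_number, n, 1.0) / math.pi)  # ratio to the Rayleigh limit
```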

1.3 Our main contributions in this paper

Our first contribution is two location-amplitude identities characterizing the relations between locations and amplitudes of true and recovered sources in the one-dimensional super-resolution problem. These identities allow us to directly derive the super-resolution capability for number, location and amplitude recovery in the super-resolution problem and improve state-of-the-art estimations to an unprecedented level to have practical significance. Although these nonlinear inverse problems are known to be very challenging, we now have a clear and simple picture of all of them, which allows us to solve them in a unified way in just a few pages. To be more specific, we prove that, under model (1) in dimension one, it is definitely possible to detect the correct source number when the sources are separated by

where $\frac{\sigma}{m_{\min}}$ represents the inverse of the signal-to-noise ratio ($\mathrm{SNR}$). This substantially improves the estimate in [Reference Liu and Zhang26] and indicates that super-resolution in detecting the correct source number (i.e., surpassing the diffraction limit $\frac{\pi}{\Omega}$) is definitely possible when $\frac{m_{\min}}{\sigma}\geqslant (2e)^{2n-2}$. Moreover, in the case of resolving two sources, the requirement for the separation is improved to

$$\begin{align*}d_{\min}\geqslant \frac{2\arcsin\left(2\left(\frac{\sigma}{m_{\min}}\right)^{\frac{1}{2}}\right)}{\Omega}, \end{align*}$$

indicating that surpassing the Rayleigh limit in distinguishing two sources is possible when $\mathrm{SNR}>4$. This is the first theoretical demonstration that super-resolution (‘in number detection’) is actually possible in practice. For the stable location recovery, the estimate is improved to

as compared to the previous result in [Reference Liu and Zhang26], indicating that the location recovery is stable when $\frac{m_{\min}}{\sigma}\geqslant (2.36 e)^{2n-1}$. Moreover, under the same separation condition, we also obtain stability results for amplitude recovery and a certain $l_0$ minimization algorithm in the super-resolution problem. These results provide us with a quantitative understanding of the super-resolution of multiple sources. Since our method is rather direct, it is very hard to substantially improve the estimates further, and we even roughly know to what extent the constant factor in the estimates can be improved.
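The thresholds stated in this paragraph can be tabulated directly. The sketch below codes the two-source separation requirement $d_{\min}\geqslant \frac{2\arcsin\left(2(\sigma/m_{\min})^{1/2}\right)}{\Omega}$ and the SNR thresholds $(2e)^{2n-2}$ and $(2.36e)^{2n-1}$ quoted above; the function names are hypothetical, and $\mathrm{SNR}$ is taken to mean $\frac{m_{\min}}{\sigma}$ as in the text.

```python
import math

def two_source_detection_separation(snr, omega_cut):
    """Separation 2*arcsin(2*sqrt(1/snr))/Omega sufficient to detect two sources."""
    arg = 2.0 / math.sqrt(snr)
    if arg > 1.0:
        raise ValueError("the formula requires snr >= 4")
    return 2.0 * math.asin(arg) / omega_cut

def snr_threshold_number(n):
    """SNR above which number detection below pi/Omega is guaranteed for n sources."""
    return (2.0 * math.e) ** (2 * n - 2)

def snr_threshold_location(n):
    """SNR above which stable location recovery below pi/Omega is guaranteed for n sources."""
    return (2.36 * math.e) ** (2 * n - 1)

rayleigh = math.pi / 1.0  # Rayleigh limit for Omega = 1
for snr in (4.0, 16.0, 100.0):
    print(snr, two_source_detection_separation(snr, 1.0) / rayleigh)
print([snr_threshold_number(n) for n in (2, 3, 4)])
```

At $\mathrm{SNR}=4$ the required separation equals the Rayleigh limit, and it decreases as the SNR grows, which is the sense in which the limit is surpassed for $\mathrm{SNR}>4$.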

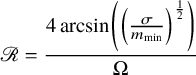

Our second crucial result is the theoretical proof of a two-point resolution limit in multi-dimensional spaces under only an assumption on the noise level. It is given by

$$ \begin{align} \frac{4\arcsin \left(\left(\frac{\sigma}{m_{\min}}\right)^{\frac{1}{2}} \right)}{\Omega} \end{align} $$

for $\frac{\sigma}{m_{\min}}\leqslant \frac{1}{2}$. In the case when $\frac{\sigma}{m_{\min}}>\frac{1}{2}$, there is no super-resolution under certain circumstances. Our results show that, for resolving any two point sources, the resolution can exceed the Rayleigh limit when $\mathrm{SNR}>2$. When $\mathrm{SNR}>4$, one can achieve a $1.5$-fold improvement over the Rayleigh limit. This finding indicates that obtaining a resolution far better than the Rayleigh limit in practical imaging and direction-of-arrival problems is possible with refined sensors. By comparison, former works on the two-point resolution [Reference Helstrom18, Reference Helstrom19, Reference Lucy29, Reference Lucy28, Reference Goodman16, Reference Shahram and Milanfar39, Reference Shahram and Milanfar40, Reference Shahram and Milanfar41] consider the model in the physical domain with the imaging process or the noise being random. In addition, the derived estimates are relatively complicated, as the models considered are more complex than (1). For example, the $\mathrm{SNR}$ governing object detectability in [Reference Helstrom19] is given by

$$\begin{align*}\mathrm{SNR} = (E/N)(TW)^{-\frac{1}{2}} \left|\int_A \phi_s(\mathbf{r}, \mathbf{r}) d^2 \mathbf{r}\right|{}^{-1}\times\left[\int_A \int_A\left|\phi_s\left(\mathbf{r}_1, \mathbf{r}_2\right)\right|{}^2 d^2 \mathbf{r}_1 d^2 \mathbf{r}_2\right]^{\frac{1}{2}}, \end{align*}$$

where

![]() $\phi _s(\cdot )$

denotes the autocovariance function of the field in the aperture plane and

$\phi _s(\cdot )$

denotes the autocovariance function of the field in the aperture plane and

![]() $E, N, T, W, A$

represent other factors.

$E, N, T, W, A$

represent other factors.

The estimate of two-point resolution can be directly extended to the following more general setting:

$$ \begin{align} \mathbf Y(\boldsymbol{{\omega}}) = \chi(\boldsymbol{{\omega}})\mathscr F[\mu] (\boldsymbol{{\omega}}) + \mathbf W(\boldsymbol{{\omega}})= \sum_{j=1}^{n}a_j \chi(\boldsymbol{{\omega}}) e^{i \mathbf{y}_j\cdot \boldsymbol{{\omega}}} + \mathbf W(\boldsymbol{{\omega}}), \ \boldsymbol{{\omega}} \in \mathbb R^k, \ ||\boldsymbol{{\omega}}||_2\leqslant \Omega, \end{align} $$

where $\chi(\boldsymbol{\omega})=0$ or $1$, $\chi(\mathbf{0})=1$ and $\chi(\boldsymbol{\omega})=1$ for $||\boldsymbol{\omega}||_2 =\Omega$. This enables the application of our results to line spectral estimation and direction-of-arrival estimation in signal processing. Moreover, our findings can be applied to imaging systems with general optical transfer functions. A new fact revealed in this paper is that the two-point resolution is actually determined by the boundary points of the transfer function and depends little on the interior frequency information. Also, as revealed in Section 5, the measurements at $\boldsymbol{\omega} =\mathbf{0}$ and $\left|\left|\boldsymbol{\omega}\right|\right|=\Omega$ are already enough for the algorithm which provably achieves the resolution limit.
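To make the general setting above concrete, the following Python sketch simulates one-dimensional measurements in which the mask $\chi$ keeps only the frequencies $\omega=0$ and $|\omega|=\Omega$. It is only an illustration: the function name `measurement`, the bounded noise model and all numerical values are assumptions made here and are not taken from the paper.

```python
import cmath
import random

def measurement(amps, locs, omegas, chi, sigma, rng):
    """Y(w) = chi(w) * sum_j a_j * exp(i * y_j * w) + W(w), with |W(w)| < sigma."""
    data = []
    for w in omegas:
        signal = chi(w) * sum(a * cmath.exp(1j * y * w) for a, y in zip(amps, locs))
        # Assumed noise model: uniformly random phase, modulus in [0, sigma).
        noise = sigma * rng.random() * cmath.exp(2j * cmath.pi * rng.random())
        data.append(signal + noise)
    return data

OMEGA = 1.0                      # cutoff frequency (illustrative value)
omegas = [-OMEGA, 0.0, OMEGA]    # only the origin and the boundary are sampled

def chi(w):
    """Binary mask: 1 at w = 0 and |w| = OMEGA, 0 elsewhere."""
    return 1.0 if w == 0.0 or abs(w) == OMEGA else 0.0

amps, locs = [1.0, 1.0], [-0.4, 0.4]   # two hypothetical sources
Y = measurement(amps, locs, omegas, chi, sigma=1e-3, rng=random.Random(0))
for w, val in zip(omegas, Y):
    print(w, val)
```

The mask is written for exactly the three sampled frequencies; a denser frequency grid would need a tolerance in the comparison `abs(w) == OMEGA`.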

In the last part of the paper, we present an algorithm that achieves the optimal resolution when distinguishing two sources and conduct numerical experiments to demonstrate its optimal performance and phase transition. Although the noise and the aberration are inevitable and the point source is not an exact delta point, our results still indicate that super-resolving two sources in practice is possible for general imaging modalities, due to the excellent noise tolerance. We plan to examine the practical feasibility of our method in the near future.

To summarize, this paper sheds light on understanding quantitatively when super-resolution is definitely possible and when it is not. Our results show that super-resolution in distinguishing two sources is far more achievable than was commonly recognized.

1.4 Organization of the paper

The paper is organized in the following way. In Section 2, we present the theory of location-amplitude identities. In Section 3, we derive stability results for recovering the number, locations and amplitudes of sources in the one-dimensional super-resolution problem. In Section 4, we derive the exact formula of the two-point resolution limit, and, in Section 5, we devise algorithms achieving exactly the optimal resolution in distinguishing images from one and two sources. The Appendix consists of some useful inequalities.

2 Location-amplitude identities

In this section, we intend to derive two location-amplitude identities that characterize the relations between source locations and amplitudes in the one-dimensional super-resolution problem. We start from the following elementary model in dimension one:

$$ \begin{align} \mathscr F[\widehat \mu](\omega) = \mathscr F[\mu](\omega) + \mathbf{w}(\omega), \quad \omega \in [0, \Omega], \end{align} $$

where $\widehat \mu, \mu$ are discrete measures, $\mathscr F[f]=\int_{\mathbb R} e^{iy\omega} f(y)dy$ denotes the Fourier transform, and $\mathbf{w}(\omega) = \mathscr F[\widehat \mu](\omega) - \mathscr F[\mu](\omega)$. To be more specific, we set $\mu =\sum_{j=1}^n a_j\delta_{y_j}$ and $\widehat \mu = \sum_{j=1}^d \widehat a_j\delta_{\widehat y_j}$ with $a_j, \widehat a_j$ being the source amplitudes and $y_j, \widehat y_j$ the source locations.

2.1 Statement of the identities

Based on the above model, we have the following location-amplitude identities.

Theorem 2.1 (Location-amplitude identities).

Consider the model

$$ \begin{align*} \mathscr F[\widehat \mu](\omega) = \mathscr F[\mu](\omega) + \mathbf{w}(\omega), \quad \omega \in [0, \Omega], \end{align*} $$

where $\widehat \mu = \sum_{j=1}^d \widehat a_j\delta_{\widehat y_j}$ and $\mu =\sum_{j=1}^n a_j\delta_{y_j}$. For any fixed $y_t$ and $\widehat y_{t'}$, define the set $S_t$ containing all $y_j$’s and $\widehat y_j$’s except $y_t, \widehat y_{t'}$, that is,

$$ \begin{align*} S_t := \left\{y_1, \cdots, y_{t-1}, y_{t+1}, \cdots, y_n, \widehat y_1, \cdots, \widehat y_{t'-1}, \widehat y_{t'+1}, \cdots, \widehat y_d\right\}. \end{align*} $$

Let $\# S_t$ be the number of elements in $S_t$ (i.e., $n+d-2$). Then, for any $0< \omega^*\leqslant \frac{\Omega}{\#S_t}$, we have the following relation:

$$ \begin{align} &\widehat {a}_{t^{\prime}}\prod_{q\in S_t}\left(e^{i\widehat y_{t^{\prime}}\omega^*}-e^{i q\omega^*}\right)- a_t \prod_{q\in S_t} \left(e^{i y_t\omega^*}- e^{i q\omega^*} \right)= \mathbf{w}_1^{\top}\mathbf{v}. \end{align} $$

Moreover, for any $0< \omega^*\leqslant \frac{\Omega}{\#S_t+1}$, we have

$$ \begin{align} a_t \prod_{q\in S_t\cup \{\widehat y_{t^{\prime}}\}}\left(e^{iy_t\omega^*} - e^{iq\omega^*}\right) = \left(e^{i \widehat y_{t^{\prime}}\omega^*}\mathbf{w}_1- \mathbf{w}_2\right)^{\top}\mathbf{v}. \end{align} $$

Here, $\mathbf{w}_1 = (\mathbf{w}(0), \mathbf{w}(\omega^*), \cdots, \mathbf{w}(\#S_t\omega^*))^{\top}$, $\mathbf{w}_2 = (\mathbf{w}(\omega^*), \mathbf{w}(2\omega^*), \cdots, \mathbf{w}((\#S_t+1)\omega^*))^{\top}$ and the vector $\mathbf{v}$ is given by

$$\begin{align*}\left((-1)^{\# S_t}\sum_{\{q_1,\cdots, q_{\#S_t}\}\in S_{t, \# S_t}} e^{iq_1\omega^*}\cdots e^{iq_{\#S_t}\omega^*}, \ \cdots,\ (-1)^2\sum_{\{q_1, q_2\}\in S_{t,2}} e^{iq_1\omega^*}e^{iq_2\omega^*}, \ (-1)\sum_{\{q_1\}\in S_{t,1}} e^{iq_1\omega^*}, 1 \right)^{\top}, \end{align*}$$

where $S_{t,p}:=\left\{\{q_1, \cdots, q_p\}\bigg| q_j\in S_t, 1\leqslant j\leqslant p, q_{j^{\prime}} \ \text{and}\ q_{j^{\prime\prime}}\ \text{are different elements in}\ S_t\ \text{when}\ j^{\prime}\neq j^{\prime\prime} \right\}, p=1,\cdots, \#S_t$.
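One way to read the two identities is that the entries of $\mathbf{v}$ are the coefficients of the polynomial $\prod_{q\in S_t}(x-e^{iq\omega^*})$, which vanishes at every node in $S_t$, so that only the terms attached to $y_t$ and $\widehat y_{t'}$ survive. As a hedged numerical sanity check, the following Python sketch evaluates both sides of the two identities for arbitrary test data. The helper names (`fourier`, `elem_sym`, `identity_residuals`), the zero-based indices and the sample values are illustrative assumptions and do not come from the paper.

```python
import cmath
from itertools import combinations
from math import prod

def fourier(amps, locs, omega):
    """F[mu](omega) = sum_j a_j * exp(i * y_j * omega) for a discrete measure."""
    return sum(a * cmath.exp(1j * y * omega) for a, y in zip(amps, locs))

def elem_sym(values, p):
    """Elementary symmetric polynomial of degree p (equal to 1 for p = 0)."""
    return sum(prod(c) for c in combinations(values, p))

def identity_residuals(a, y, a_hat, y_hat, t, t_prime, omega_star):
    """Return |LHS - RHS| for the first and the second identity (0-based t, t')."""
    # S_t: every location except y_t and y_hat_{t'}.
    S = [q for j, q in enumerate(y) if j != t]
    S += [q for j, q in enumerate(y_hat) if j != t_prime]
    m = len(S)                                   # m = #S_t = n + d - 2
    z = [cmath.exp(1j * q * omega_star) for q in S]
    zt = cmath.exp(1j * y[t] * omega_star)
    zh = cmath.exp(1j * y_hat[t_prime] * omega_star)

    # w(omega) = F[mu_hat](omega) - F[mu](omega) sampled at 0, w*, ..., (m+1) w*.
    w = [fourier(a_hat, y_hat, p * omega_star) - fourier(a, y, p * omega_star)
         for p in range(m + 2)]
    w1, w2 = w[:m + 1], w[1:]

    # v_p = (-1)^(m-p) * e_{m-p}(z) for p = 0, ..., m; the last entry equals 1.
    v = [(-1) ** (m - p) * elem_sym(z, m - p) for p in range(m + 1)]

    lhs1 = (a_hat[t_prime] * prod(zh - q for q in z)
            - a[t] * prod(zt - q for q in z))
    rhs1 = sum(wp * vp for wp, vp in zip(w1, v))

    lhs2 = a[t] * (zt - zh) * prod(zt - q for q in z)
    rhs2 = sum((zh * x1 - x2) * vp for x1, x2, vp in zip(w1, w2, v))
    return abs(lhs1 - rhs1), abs(lhs2 - rhs2)

a, y = [1.0, 0.7 - 0.2j, -0.5j], [-0.4, 0.1, 0.55]       # n = 3 test sources
a_hat, y_hat = [0.9 + 0.1j, 0.6], [-0.3, 0.35]           # d = 2 test sources
r1, r2 = identity_residuals(a, y, a_hat, y_hat, t=1, t_prime=0, omega_star=0.8)
print(r1, r2)   # both residuals should be at the level of rounding error
```

Because $\mathbf{w}$ is defined here as the exact difference of the two Fourier transforms, the identities hold algebraically for any choice of amplitudes and locations, which is why the check does not depend on a particular noise level.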

For the convenience of the applications of our location-amplitude identities, we derive the following corollary, as a direct consequence of Theorem 2.1.

Corollary 2.2. Consider the model

$$ \begin{align*} \mathscr F[\widehat \mu](\omega) = \mathscr F[\mu](\omega) + \mathbf{w}(\omega), \quad \omega \in [0, \Omega], \end{align*} $$

where $\widehat \mu = \sum_{j=1}^d \widehat a_j\delta_{\widehat y_j}$ and $\mu =\sum_{j=1}^n a_j\delta_{y_j}$ and assume that $|\mathbf{w}(\omega)|< \sigma, \omega \in [0, \Omega]$. For any fixed $y_t$ and $\widehat y_{t'}$, define the set $S_t$ as

$$ \begin{align*} S_t := \left\{y_1, \cdots, y_{t-1}, y_{t+1}, \cdots, y_n, \widehat y_1, \cdots, \widehat y_{t'-1}, \widehat y_{t'+1}, \cdots, \widehat y_d\right\}. \end{align*} $$

Let $\# S_t$ be the number of elements in $S_t$ (i.e., $n+d-2$). Then, for any $0< \omega^*\leqslant \frac{\Omega}{\#S_t}$, we have

$$ \begin{align} \left|\widehat {a}_{t'}\prod_{q\in S_t}\left(e^{i\widehat y_{t'}\omega^*}-e^{i q\omega^*}\right)- a_t \prod_{q\in S_t} \left(e^{i y_t\omega^*}- e^{i q\omega^*} \right)\right| < 2^{\#S_t}\sigma. \end{align} $$

Moreover, for any $0< \omega^*\leqslant \frac{\Omega}{\#S_t+1}$, we have

$$ \begin{align} \left|a_t \prod_{q\in S_t\cup \{\widehat y_{t'}\}}\left(e^{iy_t\omega^*} - e^{iq\omega^*}\right) \right|< 2^{\#S_t+1}\sigma. \end{align} $$
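The constants $2^{\#S_t}$ and $2^{\#S_t+1}$ in the corollary can be traced to the size of the vector $\mathbf{v}$ of Theorem 2.1: each entry is, up to sign, an elementary symmetric polynomial in $\#S_t$ numbers of unit modulus, so its $\ell_1$ norm is at most $\sum_{p}\binom{\#S_t}{p}=2^{\#S_t}$. The Python sketch below checks this bound on random configurations and shows that it is attained when all nodes coincide. The helper names `v_vector` and `l1_norm`, the random sampling range and the seed are illustrative assumptions.

```python
import cmath
import random
from itertools import combinations
from math import prod

def v_vector(locations, omega_star):
    """Entries (-1)^(m-p) * e_{m-p}(z), p = 0..m, with z_q = exp(i * q * omega_star)."""
    z = [cmath.exp(1j * q * omega_star) for q in locations]
    m = len(z)
    return [(-1) ** (m - p) * sum(prod(c) for c in combinations(z, m - p))
            for p in range(m + 1)]

def l1_norm(vec):
    """Sum of the moduli of the entries."""
    return sum(abs(x) for x in vec)

rng = random.Random(0)
worst = 0.0
for m in range(1, 7):
    for _ in range(200):
        S = [rng.uniform(-3.0, 3.0) for _ in range(m)]
        worst = max(worst, l1_norm(v_vector(S, omega_star=1.0)) / 2 ** m)
print(worst)                      # largest observed ratio ||v||_1 / 2^m

# When all nodes coincide the bound is attained: ||v||_1 = 2^m.
print(l1_norm(v_vector([0.3] * 4, omega_star=1.0)))
```

Combined with $|\mathbf{w}(\omega)|<\sigma$, this bound gives $|\mathbf{w}_1^{\top}\mathbf{v}|<2^{\#S_t}\sigma$, and the extra factor of two in the second estimate comes from the two noise vectors $\mathbf{w}_1$ and $\mathbf{w}_2$.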

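Proof. This is a direct consequence of Theorem 2.1 in view of

$$\begin{align*} \left\|\mathbf{v}\right\|_1 \leqslant \sum_{p=0}^{\#S_t}\binom{\#S_t}{p} = 2^{\#S_t}, \end{align*}$$

which holds because each entry of $\mathbf{v}$ is, up to sign, an elementary symmetric polynomial in $\#S_t$ numbers of unit modulus. Together with $|\mathbf{w}(\omega)|<\sigma$, this gives $\left|\mathbf{w}_1^{\top}\mathbf{v}\right| < 2^{\#S_t}\sigma$ and $\left|\left(e^{i \widehat y_{t'}\omega^*}\mathbf{w}_1- \mathbf{w}_2\right)^{\top}\mathbf{v}\right| < 2^{\#S_t+1}\sigma$.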

2.2 Proof of Theorem 2.1

Before starting the proof, we first introduce some notation and lemmas. Denote by

$$ \begin{align} \phi_{p, q}(t) = \left(t^p, t^{p+1}, \cdots, t^q\right)^{\top}. \end{align} $$

The following lemma on the inverse of the Vandermonde matrix is standard.

Lemma 2.3. Let $V_k$ be the Vandermonde matrix $\left(\phi_{0, k-1}(t_1), \cdots, \phi_{0, k-1}(t_k)\right)$. Then its inverse $V_k^{-1}=B$ can be specified as follows:

$$\begin{align*}B_{j q}=\left\{\begin{array}{cc}(-1)^{k-q}\left(\frac{\sum_{\substack{1 \leqslant m_1<\ldots<m_{k-q} \leqslant k \\ m_1, \ldots, m_{k-q} \neq j}} t_{m_1} \cdots t_{m_{k-q}}}{\prod_{\substack{1 \leqslant m \leqslant k \\ m \neq j}}\left(t_j-t_m\right)}\right), & \quad 1 \leqslant q<k, \\ \frac{1}{\prod_{\substack{1 \leqslant m \leqslant k \\ m \neq j}}\left(t_j-t_m\right)}, & \quad q=k. \end{array}\right. \end{align*}$$

The following lemma can be deduced from the inverse of the Vandermonde matrix; see Lemma 5 in [Reference Liu and Zhang26] for a simple proof, although the numbers there are real.

Lemma 2.4. Let $t_1, \cdots, t_k$ be k different complex numbers. For $t\in \mathbb C$, we have

$$\begin{align*}\left(V_k^{-1}\phi_{0,k-1}(t)\right)_{j}=\prod_{1\leqslant q\leqslant k,q\neq j}\frac{t- t_q}{t_j- t_q}, \end{align*}$$

where $V_k:= \big(\phi_{0,k-1}(t_1),\cdots,\phi_{0,k-1}(t_k)\big)$ with $\phi_{0, k-1}(\cdot)$ being defined by (12).
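Lemma 2.4 states that the entries of $V_k^{-1}\phi_{0,k-1}(t)$ are the Lagrange basis polynomials evaluated at $t$. Since $V_k$ is invertible for distinct nodes, this is equivalent to $\sum_j L_j(t)\, t_j^p = t^p$ for $p=0,\cdots,k-1$, which the following Python sketch verifies numerically for complex nodes. The function names `lagrange_weights` and `vandermonde_residual` and the sample nodes are illustrative assumptions.

```python
from math import prod

def lagrange_weights(nodes, t):
    """Right-hand side of Lemma 2.4: prod_{q != j} (t - t_q) / (t_j - t_q)."""
    return [prod((t - tq) / (tj - tq) for q, tq in enumerate(nodes) if q != j)
            for j, tj in enumerate(nodes)]

def vandermonde_residual(nodes, t):
    """max_p |sum_j L_j(t) * t_j^p - t^p| over p = 0..k-1, i.e. |V_k L(t) - phi(t)|."""
    k = len(nodes)
    weights = lagrange_weights(nodes, t)
    return max(abs(sum(Lj * tj ** p for Lj, tj in zip(weights, nodes)) - t ** p)
               for p in range(k))

nodes = [1 + 0j, 0.5 + 0.5j, -0.3j, -1.2 + 0.1j]   # k = 4 distinct complex nodes
print(vandermonde_residual(nodes, t=0.25 - 0.7j))   # rounding-level residual

# At a node, the weights reduce to a coordinate vector.
print(lagrange_weights(nodes, nodes[2]))
```

The check avoids forming $V_k^{-1}$ explicitly, which keeps the sketch free of any linear algebra library.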

Now we start the main proof.

Proof. We only prove the theorem for $\omega^* \leqslant \frac{\Omega}{\#S_t+1}$. The case when $\omega^* \leqslant \frac{\Omega}{\#S_t}$ for (7) is obvious afterwards. We fix $t\in \{1,\cdots, n\}$ in the following derivations. From (6), we can write

$$ \begin{align} \widehat A \widehat a = A a + W, \end{align} $$

where $\widehat a = (\widehat a_1, \cdots, \widehat a_d)^{\top}$, $a = (a_1, \cdots, a_n)^{\top}$, $W =\left(\mathbf{w}(0), \mathbf{w}(\omega^*), \cdots, \mathbf{w}((\#S_t+1)\omega^*)\right)^{\top}$ and

$$ \begin{align*} &\widehat A = \left( \phi_{0,\#S_t+1}(e^{i \widehat y_1 \omega^*}), \ \phi_{0, \#S_t+1}(e^{i \widehat y_2 \omega^*}), \ \cdots, \ \phi_{0,\#S_t+1}(e^{i \widehat y_d \omega^*})\right), \\ & A = \left( \phi_{0,\#S_t+1}(e^{i y_1 \omega^*}), \ \phi_{0,\#S_t+1}(e^{i y_2 \omega^*}), \ \cdots, \ \phi_{0,\#S_t+1}(e^{i y_n \omega^*})\right), \end{align*} $$

with $0< \omega^*\leqslant \frac{\Omega}{\#S_t+1}$. We further decompose (13) into the following two equations:

$$ \begin{align} \begin{aligned} &\widehat {a}_{t'} \phi_{0, \#S_t}(e^{i \widehat y_{t'}\omega^*}) = B_1 b + \mathbf{w}_1,\\ &\widehat {a}_{t'} \phi_{1, \#S_t+1}(e^{i \widehat y_{t'}\omega^*}) = B_2 b + \mathbf{w}_2, \end{aligned} \end{align} $$

where $\mathbf{w}_1 = (\mathbf{w}(0), \mathbf{w}(\omega^*), \cdots, \mathbf{w}(\#S_t \omega^*))^{\top}$, $\mathbf{w}_2 = (\mathbf{w}(\omega^*), \cdots, \mathbf{w}((\#S_t+1)\omega^*))^{\top}$ and

$$ \begin{align*} B_1 &= \left( \phi_{0, \#S_t}(e^{i y_{1}\omega^*}),\ \cdots,\ \phi_{0, \#S_t}(e^{i y_{n}\omega^*}),\ \phi_{0, \#S_t}(e^{i\widehat y_{1}\omega^*}),\ \cdots, \phi_{0, \#S_t}(e^{i\widehat y_{t'-1}\omega^*}),\right. \\ & \qquad \qquad \ \left. \phi_{0, \#S_t}(e^{i\widehat y_{t'+1}\omega^*}),\ \cdots, \ \phi_{0, \#S_t}(e^{i \widehat y_{d}\omega^*}) \right),\\ B_2 &= \left( \phi_{1, \#S_t+1}(e^{i y_{1}\omega^*}),\ \cdots,\ \phi_{1, \#S_t+1}(e^{i y_{n}\omega^*}),\ \phi_{1, \#S_t+1}(e^{i\widehat y_{1}\omega^*}),\ \cdots, \phi_{1, \#S_t+1}(e^{i\widehat y_{t'-1}\omega^*}),\right. \\ & \qquad \qquad \ \left. \phi_{1, \#S_t+1}(e^{i\widehat y_{t'+1}\omega^*}),\ \cdots, \ \phi_{1, \#S_t+1}(e^{i \widehat y_{d}\omega^*}) \right). \end{align*} $$

We first consider the case when all the $e^{i y_j \omega^*}$’s and $e^{i \widehat y_j\omega^*}$’s are distinct. Here, $b=(b_1, \cdots, b_{\#S_t+1})$ in (14) is such that

$$\begin{align*}b_{l} = \left\{\begin{array}{ll} a_l, & 1\leqslant l\leqslant n, \\ - \widehat a_{l-n}, & n<l\leqslant n+t'-1,\\ - \widehat a_{l-n+1}, & n+t'-1<l. \end{array}\right. \end{align*}$$

Observe that

$$ \begin{align*} &B_2 = B_1 \mathrm{diag}\left(e^{iy_1\omega^*} ,\cdots, e^{i y_n \omega^*}, e^{i \widehat y_{1} \omega^*}, \cdots, e^{i \widehat y_{t'-1} \omega^*}, e^{i \widehat y_{t'+1} \omega^*},\cdots, e^{i \widehat y_{d} \omega^*}\right),\\ & \phi_{1, \#S_t+1}(e^{i y_{l}\omega^*}) = e^{i y_{l}\omega^*}\phi_{0, \#S_t}(e^{i y_{l}\omega^*}). \end{align*} $$

We rewrite (14) as

$$ \begin{align} \begin{aligned} &\widehat {a}_{t'} \phi_{0, \#S_t}(e^{i \widehat y_{t'}\omega^*}) = B_1 b + \mathbf{w}_1,\\ &e^{i \widehat y_{t'}\omega^*}\widehat {a}_{t'} \phi_{0, \#S_t}(e^{i \widehat y_{t'}\omega^*}) = B_1\mathrm{diag}\left(e^{i y_1\omega^*} ,\cdots, e^{i y_n \omega^*}, e^{i \widehat y_{1} \omega^*}, \cdots, e^{i \widehat y_{t'-1} \omega^*}, e^{i \widehat y_{t'+1} \omega^*},\cdots, e^{i \widehat y_{d} \omega^*}\right)b + \mathbf{w}_2. \end{aligned} \end{align} $$

Since all the $e^{iy_j\omega^*}$’s and $e^{i\widehat y_j \omega^*}$’s are pairwise distinct, $B_1$ is a regular matrix. We multiply both sides of the above equations by the inverse of $B_1$ to get from Lemma 2.4 that

$$ \begin{align} \widehat {a}_{t'}\prod_{q\in S_t}\frac{e^{i\widehat y_{t'}\omega^*}-e^{i q\omega^*}}{e^{i y_t\omega^*}- e^{i q\omega^*}} &= a_t + (B_1^{-1})_{t} \mathbf{w}_1, \end{align} $$

$$ \begin{align} e^{i \widehat y_{t'}\omega^*} \widehat {a}_{t'}\prod_{q\in S_t}\frac{e^{i\widehat y_{t'}\omega^*}-e^{i q\omega^*}}{e^{i y_t\omega^*}- e^{i q\omega^*}} &= e^{i y_{t}\omega^*} a_t + (B_1^{-1})_{t} \mathbf{w}_2, \end{align} $$

where $(B_1^{-1})_{t}$ is the t-th row of $B_1^{-1}$. By Lemma 2.3, it follows that

$$ \begin{align} (B_1^{-1})_{t}\mathbf{w}_1 = \frac{\sum_{p=0}^{\#S_t-1}\left(\mathbf{w}(p \omega^*) (-1)^{\#S_t-p}\sum_{\{q_1,\cdots, q_{\#S_{t}-p}\}\in S_{t, \#S_t-p}} e^{iq_1\omega^*}\cdots e^{iq_{\#S_t-p}\omega^*}\right)+ \mathbf{w}(\#S_t \omega^*)}{\prod_{q\in S_t}(e^{iy_t\omega^*} - e^{i q \omega^*})}. \end{align} $$

Thus, multiplying both sides of (16) by $\prod_{q\in S_t}\left(e^{i y_t\omega^*}- e^{i q\omega^*}\right)$ proves (7) in the case when all the $e^{iy_j\omega^*}$’s and $e^{i\widehat y_j \omega^*}$’s are pairwise distinct. Furthermore, equation (16) times $e^{i \widehat y_{t'} \omega^*}$ minus (17) yields

$$ \begin{align*} a_t\left(e^{i y_t\omega^*} - e^{i \widehat y_{t'}\omega^*}\right) = (B_1^{-1})_{t}\left(e^{i \widehat y_{t'} \omega^*}\mathbf{w}_1-\mathbf{w}_2\right). \end{align*} $$

Similarly, further expanding $(B_1^{-1})_{t}\left(e^{i \widehat y_{t'} \omega^*}\mathbf{w}_1-\mathbf{w}_2\right)$ explicitly by Lemma 2.3 and multiplying both sides above by $\prod_{q\in S_t}\left(e^{i y_t\omega^*}- e^{i q\omega^*}\right)$ yields (8).

Finally, we consider the case when the $e^{iy_j\omega^*}$’s and $e^{i\widehat y_j \omega^*}$’s are not pairwise distinct. Since both sides of (7) and (8) depend continuously on the source locations and this case is a limiting case of the one above, (7) and (8) still hold. This completes the proof.

3 Stability of super-resolution in dimension one

In this section, based on our location-amplitude identities, we analyze the super-resolution capability of reconstructing the number, locations and amplitudes of off-the-grid sources in the one-dimensional super-resolution problem. Note that these problems have been analyzed in [Reference Liu and Zhang26, Reference Batenkov, Goldman and Yomdin3] from different perspectives, but the proofs there run to several tens of pages. With our method, we obtain a direct and clear picture of all these problems, which allows us to prove them in a unified way and in less than ten pages.

We consider the imaging model (1) and focus on the one-dimensional case in this section. Since the source locations $\mathbf{y}_j$’s are the supports of the Dirac masses in $\mu$, we use ‘support recovery’ in place of ‘location reconstruction’ throughout the paper.

Since we focus on the resolution limit case, we consider the case when the point sources are tightly spaced and form a cluster. To be more specific, we denote the ball in the k-dimensional space by

$$ \begin{align} B_\delta^k(\mathbf{x}):=\left\{\mathbf{y} \ \bigg| \mathbf{y} \in \mathbb{R}^k,\|\mathbf{y}-\mathbf{x}\|_2<\delta\right\}, \end{align} $$

and assume $\mathbf{y}_j \in B_{\frac{(n-1) \pi}{2\Omega}}^k(\mathbf{0}), j=1, \ldots, n$, or equivalently $\left\|\mathbf{y}_j\right\|_2<\frac{(n-1) \pi}{2 \Omega}$. This assumption is a common assumption for super-resolving the off-the-grid sources [Reference Liu and Zhang26, Reference Batenkov, Goldman and Yomdin3] and is necessary for the analysis. Since we are interested in resolving closely-spaced sources, it is also reasonable. We remark that our results for sources in $B_{\frac{(n-1) \pi}{2\Omega}}^k(\mathbf{0})$ can be directly generalized to sources in $B_{\frac{(n-1) \pi}{2\Omega}}^k(\mathbf{x}),\ \mathbf{x}\in \mathbb R^k$.

The reconstruction process usually targets some specific solutions in a so-called admissible set, which comprises discrete measures whose Fourier data are sufficiently close to $\mathbf{Y}$. In general, every admissible measure is possibly the ground truth, and it is impossible to distinguish which one is closer to the ground truth without any additional prior information. In our problem, we introduce the following concept of $\sigma$-admissible discrete measures. For simplicity, we also call them $\sigma$-admissible measures.

Definition 3.1. Given the measurement $\mathbf Y$ in (1), $\widehat \mu =\sum_{j=1}^{d} \widehat a_j \delta_{\mathbf{\widehat y}_j}$ is said to be a $\sigma$-admissible discrete measure of $\mathbf Y$ if

If further $\widehat a_j>0, j=1, \cdots, d$, then $\widehat \mu$ is said to be a positive $\sigma$-admissible discrete measure of $\mathbf Y$.

3.1 Stability of number detection

In this section, we estimate the super-resolving capability of number detection in the super-resolution problem. We introduce the concept of computational resolution limit for number detection [Reference Liu and Zhang27, Reference Liu and Zhang26, Reference Liu and Zhang25] and present a sharp bound for it.

Note that the set of $\sigma$-admissible measures of $\mathbf Y$ characterizes all possible solutions to our super-resolution problem with the given measurement $\mathbf Y$. Detecting the source number n is possible only if all of the admissible measures have at least n supports; otherwise, it is impossible to detect the correct source number without additional a priori information. Thus, following definitions similar to those in [Reference Liu and Zhang26, Reference Liu and Zhang27, Reference Liu and Zhang25], we define the computational resolution limit for the number detection problem as follows.

Definition 3.2. The computational resolution limit to the number detection problem in the super-resolution of sources in $\mathbb R^k$ is defined as the smallest nonnegative number $\mathscr D_{num}(k, n)$ such that for all n-sparse measures $\sum_{j=1}^{n}a_{j}\delta_{\mathbf{y}_j}, a_j\in \mathbb C,\mathbf{y}_j \in B_{\frac{(n-1) \pi}{2\Omega}}^k(\mathbf{0})$ and the associated measurement $\mathbf{Y}$ in (1), if

then there does not exist any $\sigma$-admissible measure of $\mathbf Y$ with fewer than n supports. In particular, when considering positive sources and positive $\sigma$-admissible measures, the corresponding computational resolution limit is denoted by $\mathscr D_{num}^+(k, n)$.

The definition of ‘computational resolution limit’ emphasizes the impossibility of correctly detecting the number of very close sources by any means. It depends crucially on the signal-to-noise ratio and the sparsity of the sources, which is fundamentally different from all the classical resolution limits [Reference Abbe1, Reference Volkmann46, Reference Rayleigh35, Reference Schuster38, Reference Sparrow42] that depend only on the cutoff frequency.

Our first result is a sharp estimate for the upper bound of the computational resolution limit in the one-dimensional super-resolution problem. As we have said, we will use $y_j$’s to denote the one-dimensional source locations.

Theorem 3.3. Let the measurement $\mathbf Y$ in (1) be generated by any one-dimensional source $\mu =\sum _{j=1}^{n}a_j\delta _{y_j}$ with $y_j \in B_{\frac {(n-1) \pi }{2\Omega }}^1(0), j=1,\cdots , n$. Let $n\geqslant 2$ and assume that the following separation condition is satisfied:

$$\begin{align*}\min_{p\neq j}\Bigg|y_p-y_j\Bigg|\geqslant \frac{2e\pi}{\Omega }\Big(\frac{\sigma}{m_{\min}}\Big)^{\frac{1}{2n-2}}, \end{align*}$$

where $\sigma , m_{\min }$ are defined as in (2), (3), respectively. Then there does not exist any $\sigma $-admissible measure of $\mathbf Y$ with fewer than n supports. Moreover, for the cases when $n=2$ and $n=3$, if

$$\begin{align*}\min_{p\neq j}\Bigg|y_p-y_j\Bigg|\geqslant \frac{2\arcsin\left(2\left(\frac{\sigma}{m_{\min}}\right)^{\frac{1}{2}}\right)}{\Omega}, \quad \min_{p\neq j}\Bigg|y_p-y_j\Bigg|\geqslant \frac{2\pi}{\Omega }\Big(\frac{8\sigma}{m_{\min}}\Big)^{\frac{1}{4}}, \quad \text{respectively}, \end{align*}$$

then there does not exist any $\sigma $-admissible measure of $\mathbf Y$ with fewer than n supports.

Theorem 3.3 gives a sharper upper bound for the computational resolution limit $\mathscr D_{num}(1, n)$ compared to the one in [Reference Liu and Zhang26]. By the new estimate (3.3), it is already possible to surpass the Rayleigh limit $\frac {\pi }{\Omega }$ in detecting source number when $\frac {m_{\min }}{\sigma }\geqslant (2e)^{2n-2}$. Moreover, this upper bound is shown to be sharp by a lower bound provided in [Reference Liu, He and Ammari24]. Thus, we can conclude that

$$\begin{align*}\frac{2e^{-1}}{\Omega }\Big(\frac{\sigma}{m_{\min}}\Big)^{\frac{1}{2n-2}}< \mathscr D_{num}(1, n) \leqslant \frac{2e\pi}{\Omega }\Big(\frac{\sigma}{m_{\min}}\Big)^{\frac{1}{2n-2}}. \end{align*}$$
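As a quick numerical illustration of the two-sided estimate for $\mathscr D_{num}(1, n)$, the following Python sketch evaluates both bounds and the value of $\frac{m_{\min}}{\sigma}$ at which the upper bound coincides with the Rayleigh limit $\frac{\pi}{\Omega}$. The function names and the sample parameter values are illustrative assumptions and do not come from the paper.

```python
import math


def num_detection_bounds(omega, sigma, m_min, n):
    """Lower and upper bounds on D_num(1, n) from the two-sided estimate."""
    if n < 2:
        raise ValueError("the estimate is stated for n >= 2")
    # Common factor (sigma / m_min)^(1 / (2n - 2)).
    factor = (sigma / m_min) ** (1.0 / (2 * n - 2))
    lower = 2.0 * math.exp(-1.0) / omega * factor
    upper = 2.0 * math.e * math.pi / omega * factor
    return lower, upper


def rayleigh_threshold(n):
    """Value of m_min / sigma at which the upper bound equals pi / Omega."""
    return (2.0 * math.e) ** (2 * n - 2)


if __name__ == "__main__":
    omega, sigma = 1.0, 1e-3  # illustrative values only
    for n in (2, 3, 4):
        # Take m_min / sigma ten times larger than the threshold.
        m_min = sigma * 10.0 * rayleigh_threshold(n)
        lower, upper = num_detection_bounds(omega, sigma, m_min, n)
        print(n, lower, upper, upper < math.pi / omega)
```

The threshold grows like $(2e)^{2n-2}$, so the ratio $\frac{m_{\min}}{\sigma}$ needed to guarantee number detection below the Rayleigh limit increases rapidly with the number of sources.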

It is also easy to generalize the estimates in Theorem 3.3 to high-dimensional spaces by methods in [Reference Liu and Zhang25, Reference Liu and Ammari22], whereby we can obtain that

$$\begin{align*}\frac{2e^{-1}}{\Omega }\Big(\frac{\sigma}{m_{\min}}\Big)^{\frac{1}{2n-2}}< \mathscr D_{num}(k, n) \leqslant \frac{C_1(k,n)}{\Omega }\Big(\frac{\sigma}{m_{\min}}\Big)^{\frac{1}{2n-2}} \end{align*}$$

for a constant $C_1(k,n)$.

In particular, for the case when $n=2$, our estimate demonstrates that when the signal-to-noise ratio $\mathrm {SNR}>4$, the resolution is better than the Rayleigh limit, so that ‘super-resolution in number detection’ can be achieved. This result is already practically important. As we will see later, our estimate is very sharp and close to the true two-point resolution limit.
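The threshold $\mathrm{SNR}>4$ can be read off from the $n=2$ bound in Theorem 3.3, since $2\arcsin(x)<\pi$ exactly when $x<1$. The sketch below checks this numerically. It assumes that $\mathrm{SNR}$ stands for $\frac{m_{\min}}{\sigma}$, and the function names are hypothetical.

```python
import math


def two_point_bound(omega, snr):
    """Upper bound 2 * arcsin(2 * snr**(-1/2)) / omega for the case n = 2.

    Assumes snr = m_min / sigma; the arcsin is only defined for snr >= 4.
    """
    arg = 2.0 / math.sqrt(snr)
    if arg > 1.0:
        raise ValueError("arcsin argument exceeds 1; the bound needs snr >= 4")
    return 2.0 * math.asin(arg) / omega


def beats_rayleigh(omega, snr):
    """True when the n = 2 bound is strictly below the Rayleigh limit pi / omega."""
    return two_point_bound(omega, snr) < math.pi / omega


if __name__ == "__main__":
    for snr in (4.01, 9.0, 16.0, 100.0):
        print(snr, two_point_bound(1.0, snr), beats_rayleigh(1.0, snr))
```

As $\mathrm{SNR}$ decreases to 4, the bound increases to $\frac{\pi}{\Omega}$, which is why the strict inequality appears in the statement.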

Remark. Our new techniques also provide a way to analyze the stability of number detection for sources with multi-cluster patterns. Our former method (also the only one we know of) for analyzing the stability of number detection cannot handle such cases, and the technique here is the first known method that can tackle the issue. Since the current paper focuses on understanding the resolution limits in super-resolution, the multi-cluster case is out of scope, and we leave it for future work.

We now present the proof of Theorem 3.3. The problem is essentially a nonlinear approximation problem where we have to optimize the approximation over the coupled factors: source number d, source locations $\widehat y_j$’s and amplitudes $\widehat a_j$’s. Here, by leveraging the location-amplitude identities, we prove it in a rather simple and direct way.

We first denote for an integer $k\geqslant 1$,

$$ \begin{align} \begin{aligned} &\zeta(k)= \left\{ \begin{array}{cc} (\frac{k-1}{2}!)^2,& \text{if } k \text{ is odd,}\\ (\frac{k}{2})!(\frac{k-2}{2})!,& \text{if } k \text{ is even,} \end{array} \right. \quad \xi(k)=\left\{ \begin{array}{cc} \frac{1}{2}, & \text{if } k=1,\\ \frac{(\frac{k-1}{2})!(\frac{k-3}{2})!}{4},& \text{if } k \text{ is odd},\,\, k\geqslant 3,\\ \frac{(\frac{k-2}{2}!)^2}{4},& \text{if } k \text{ is even}. \end{array} \right. \end{aligned} \end{align} $$
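To make the piecewise definitions of $\zeta(k)$ and $\xi(k)$ concrete, here is a minimal Python sketch that tabulates both. It reads $(\frac{k-1}{2}!)^2$ as the square of $(\frac{k-1}{2})!$, which is an interpretation of the notation, and the function names are illustrative.

```python
from fractions import Fraction
from math import factorial


def zeta(k):
    """zeta(k): ((k-1)/2)!^2 for odd k, (k/2)! * ((k-2)/2)! for even k."""
    if k < 1:
        raise ValueError("k must be a positive integer")
    if k % 2 == 1:
        return factorial((k - 1) // 2) ** 2
    return factorial(k // 2) * factorial((k - 2) // 2)


def xi(k):
    """xi(k) as an exact fraction, following the three cases of the definition."""
    if k < 1:
        raise ValueError("k must be a positive integer")
    if k == 1:
        return Fraction(1, 2)
    if k % 2 == 1:
        # Odd k >= 3.
        return Fraction(factorial((k - 1) // 2) * factorial((k - 3) // 2), 4)
    # Even k.
    return Fraction(factorial((k - 2) // 2) ** 2, 4)


if __name__ == "__main__":
    for k in range(1, 7):
        print(k, zeta(k), xi(k))
```

For $k=1,\dots,6$ this gives $\zeta(k)=1,1,1,2,4,12$ and $\xi(k)=\frac12,\frac14,\frac14,\frac14,\frac12,1$.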

We also define for positive integers $p, q$, and $z_1, \cdots , z_p, \widehat z_1, \cdots , \widehat z_q \in \mathbb C$, the following vector in $\mathbb {R}^p$:

$$ \begin{align} \eta_{p,q}(z_1,\cdots,z_{p}, \widehat z_1,\cdots,\widehat z_q)=\left(\begin{array}{c} |(z_1-\widehat z_1)|\cdots|(z_1-\widehat z_q)|\\ |(z_2-\widehat z_1)|\cdots|(z_2-\widehat z_q)|\\ \vdots\\ |(z_{p}-\widehat z_1)|\cdots|(z_{p}-\widehat z_q)| \end{array}\right). \end{align} $$
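The vector $\eta_{p,q}$ is simple to compute: its $i$-th entry is the product of the distances from $z_i$ to every $\widehat z_j$. A minimal Python sketch, with a hypothetical function name and argument layout, is:

```python
import math


def eta(z, z_hat):
    """Return eta_{p,q} as a list of p nonnegative reals.

    z     : sequence of p complex (or real) numbers z_1, ..., z_p
    z_hat : sequence of q complex (or real) numbers z_hat_1, ..., z_hat_q
    Entry i is |z_i - z_hat_1| * ... * |z_i - z_hat_q|.
    """
    return [math.prod(abs(zi - wj) for wj in z_hat) for zi in z]


if __name__ == "__main__":
    # p = 3 points on the real line against q = 2 points.
    print(eta([1, 2, 3], [0, 1]))
    # Complex inputs work the same way.
    print(eta([0j, 1 + 0j], [1j]))
```

An entry vanishes exactly when the corresponding $z_i$ coincides with one of the $\widehat z_j$, so a lower bound on the largest entry for $p>q$ quantifies how badly $q$ points can fail to match $p$ separated ones.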

We recall the following useful lemmas.

Lemma 3.4. Let $-\frac {\pi }{2}\leqslant \theta _1<\theta _2<\cdots <\theta _{k} \leqslant \frac {\pi }{2}$ with $\min _{p\neq j}|\theta _p-\theta _j|=\theta _{\min }$. We have the estimate

$$\begin{align*}\prod_{1\leqslant p\leqslant k,p\neq j}{\left|e^{i\theta_j}-e^{i\theta_p}\right|}\geqslant \zeta(k)\left(\frac{2\theta_{\min}}{\pi}\right)^{k-1},\ j=1,\cdots, k, \end{align*}$$

where $\zeta (k)$ is defined in (21).

Proof. Note that

$$\begin{align*}\left|e^{i\theta_j}-e^{i\theta_p}\right|\geqslant \frac{2}{\pi}\left|\theta_j-\theta_p\right|,\quad \text{for all } \theta_j, \theta_p\in \left[-\frac{\pi}{2}, \frac{\pi}{2}\right]. \end{align*}$$

Then we have

$$ \begin{align*} \prod_{1\leqslant p\leqslant k,p\neq j}{\left|e^{i\theta_j}-e^{i\theta_p}\right|}\ \geqslant \left(\frac{2}{\pi}\right)^{k-1} \prod_{1\leqslant p\leqslant k,p\neq j}{\left|\theta_j-\theta_p\right|} \geqslant \zeta(k)\left(\frac{2\theta_{\min}}{\pi}\right)^{k-1}. \end{align*} $$
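The estimate of Lemma 3.4 can be sanity-checked numerically. The sketch below draws random angles in $[-\frac{\pi}{2},\frac{\pi}{2}]$ and compares both sides of the inequality for every $j$. This is only an illustrative check under the stated reading of $\zeta(k)$, not a substitute for the proof, and the helper names are assumptions.

```python
import cmath
import math
import random


def zeta(k):
    """zeta(k) from (21), reading ((k-1)/2)!^2 for odd k."""
    if k % 2 == 1:
        return math.factorial((k - 1) // 2) ** 2
    return math.factorial(k // 2) * math.factorial((k - 2) // 2)


def lemma_3_4_gap(thetas):
    """Smallest value of (left side - right side) over j = 1, ..., k.

    Expects k >= 2 angles; a nonnegative result means the estimate holds
    for this configuration.
    """
    k = len(thetas)
    theta_min = min(
        abs(a - b) for i, a in enumerate(thetas) for b in thetas[i + 1:]
    )
    rhs = zeta(k) * (2.0 * theta_min / math.pi) ** (k - 1)
    gaps = []
    for j, tj in enumerate(thetas):
        lhs = math.prod(
            abs(cmath.exp(1j * tj) - cmath.exp(1j * tp))
            for p, tp in enumerate(thetas)
            if p != j
        )
        gaps.append(lhs - rhs)
    return min(gaps)


if __name__ == "__main__":
    random.seed(0)
    worst = min(
        lemma_3_4_gap(
            sorted(random.uniform(-math.pi / 2, math.pi / 2) for _ in range(k))
        )
        for k in range(2, 8)
        for _ in range(100)
    )
    print("smallest observed gap:", worst)
```

For $k=2$ with $\theta_1=-\frac{\pi}{2}$, $\theta_2=\frac{\pi}{2}$, both sides equal 2, so the estimate cannot be improved in that case.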

Lemma 3.5. Let $-\frac {\pi }{2}\leqslant \theta _1<\theta _2<\cdots <\theta _{k+1} \leqslant \frac {\pi }{2}$. Assume that $\min _{p\neq j}|\theta _p-\theta _j|=\theta _{\min }$. Then for any $\widehat \theta _1,\cdots , \widehat \theta _k\in \mathbb R$, we have the following estimate:

$$\begin{align*}\left|\left|{\eta_{k+1,k}(e^{i\theta_1},\cdots,e^{i\theta_{k+1}},e^{i \widehat \theta_1},\cdots,e^{i\widehat \theta_k})}\right|\right|{}_{\infty}\geqslant \xi(k)\left(\frac{2\theta_{\min}}{\pi}\right)^k, \end{align*}$$

where $\eta _{k+1,k}$ is defined as in (22).

Proof. See Corollary 7 in [Reference Liu and Zhang26].
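Lemma 3.5 can likewise be probed numerically. The following sketch evaluates $\|\eta_{k+1,k}\|_\infty$ on the unit circle for random $\theta_j$ and random $\widehat\theta_j$ and compares it with $\xi(k)\left(\frac{2\theta_{\min}}{\pi}\right)^k$. Random sampling does not search for worst-case configurations, so this is an illustration rather than a verification, and all names are hypothetical.

```python
import cmath
import math
import random


def xi(k):
    """xi(k) from (21), returned as a float."""
    if k == 1:
        return 0.5
    if k % 2 == 1:
        return math.factorial((k - 1) // 2) * math.factorial((k - 3) // 2) / 4.0
    return math.factorial((k - 2) // 2) ** 2 / 4.0


def eta_inf_norm(thetas, thetas_hat):
    """Infinity norm of eta_{p,q} evaluated at e^{i theta} and e^{i theta_hat}."""
    z = [cmath.exp(1j * t) for t in thetas]
    z_hat = [cmath.exp(1j * t) for t in thetas_hat]
    return max(math.prod(abs(zi - wj) for wj in z_hat) for zi in z)


def lemma_3_5_gap(thetas, thetas_hat):
    """Left side minus right side of the estimate; nonnegative if it holds."""
    k = len(thetas_hat)
    if len(thetas) != k + 1:
        raise ValueError("need k + 1 angles theta and k angles theta_hat")
    theta_min = min(
        abs(a - b) for i, a in enumerate(thetas) for b in thetas[i + 1:]
    )
    return eta_inf_norm(thetas, thetas_hat) - xi(k) * (2.0 * theta_min / math.pi) ** k


if __name__ == "__main__":
    random.seed(1)
    k = 3
    thetas = sorted(random.uniform(-math.pi / 2, math.pi / 2) for _ in range(k + 1))
    thetas_hat = [random.uniform(-math.pi, math.pi) for _ in range(k)]
    print(lemma_3_5_gap(thetas, thetas_hat))
```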

Proof. We are now ready to prove Theorem 3.3. Suppose that $\widehat \mu =\sum _{j=1}^{d}\widehat a_j \delta _{\widehat y_j}, d\leqslant n-1$ is a $\sigma $-admissible measure of $\mathbf{Y}$. By Definition 3.1 and the model (1), we have

for some $\mathbf{W}_1$ with $|\mathbf{W}_1(\omega )|<2\sigma $