Policy Significance Statement

This work’s policy implications are for decision-makers and practitioners of data-driven innovation in the public sector committed to ethical practice. We join those who argue for flexible and reasonable policymaking that considers the context. We suggest that a virtue-based approach can support their efforts to connect abstract and unchanging principles with contextual and shifting demands of day-to-day practice.

1. Introduction

Researchers have long recognized the potential and realized risks involved in data-driven innovation (e.g., Shattuck, 1984). In response, administrations and governments have been developing policies and tools to manage the social effects of these innovative systems (Footnote 1). According to a study by the Ada Lovelace Institute (2021), by far the most common type of policy instrument chosen for this purpose is “principles and guidelines.”

However, defining principles is not sufficient to make artificial intelligence (AI), or data-driven innovation in general, ethically sustainable (for criticism of principlism in general, see, e.g., Ten Have, 2001; Page, 2012; Groves, 2015). Reasons identified in the literature include the lack of tools and methods to translate principles into practice (Mittelstadt, 2019; Vakkuri et al., 2019), the oversimplification of complex social constructs into idealized measures (Hagendorff, 2022), and the susceptibility of ethical guidelines to manipulation by industry actors (Rességuier and Rodrigues, 2020).

In this paper, we propose and illustrate a method for conducting this translation, inspired by our reading of Aristotelian virtue ethics. We examine two instances of existing processes of data-driven innovation in the city of Helsinki and describe them through the concepts of virtue and phronêsis, or practical reason. Using a dialogic case-study approach (Rule and John, 2015), we describe how data scientists, as practitioners of data-driven innovation, must and do engage their phronêsis in order to make ethically charged decisions. We give illustrative examples of four “bridging” virtues of data-driven innovation that support this engagement by connecting the principles of the organization with the practical decisions made by innovation practitioners.

We situate ourselves in an emerging field of practicing ethics in research and innovation. This body of literature was comprehensively reviewed recently by Reijers et al. (2018). As they write, “the complexity and ambivalence of ethical issues emerging from the design and outcomes of contemporary R&I [research and innovation] call for the development of comprehensive methods that can be used by ethicists, researchers, policymakers and various other stakeholders (technology users, companies, etc.)” (p. 1439).

Following the typology suggested by Reijers et al. (2018), our focus is primarily on contributing to the development of “intra” (as opposed to “ex ante” or “ex post”) methods of practicing ethics in innovation processes. That is, we focus on practices that come into play in the design and testing stages of the data-driven innovation process. The literature identifies two common challenges for these “intra” methods. The first is their inability to (help) integrate ethics into the work of the practitioners themselves (Borning and Muller, 2012; for critiques along these lines, see, e.g., Brey, 2000; Le Dantec et al., 2009). The second is a lack of theoretical grounding in practices designed to embed values into the innovation process (for critiques along these lines, see, e.g., Albrechtslund, 2007; Manders-Huits, 2011; van de Poel, 2013).

With this commentary, we want to advance a theoretically grounded, virtue-based approach, which is practice-oriented and can be linked to the daily work of data scientists and other practitioners of data-driven innovation, especially in the context of the public sector. Such an approach allows us to describe how practitioners engage their phronêsis in ethical decision-making in a context-sensitive and dynamic environment, where the appropriate application of abstract principles will be different from case to case. This flexibility is particularly important in the context of innovation, which always entails novelty.

The structure of the paper is as follows. First, the section “Virtue and the Smart City” briefly reviews the existing literature and describes our interpretation of virtue ethics in the context of data-driven innovation in the smart city. Second, the section “Dialogic Case Study Approach” briefly describes our methodological approach and the cases studied. Third, the section “Phronêsis for the Data Scientist” analyzes the two cases and proposes four examples of how thinking through virtues can help bridge the gap between abstract ethical principles and the algorithmic choices made by the data scientist. Finally, we provide concluding remarks in the “Discussion” section.

2. Virtue and the Smart City

We next briefly describe our understanding of two key concepts of Aristotle’s ethics (Footnote 2), virtue and phronêsis, which will help us sketch a theoretical frame for translating ethical principles into more practical terms.

Our choice to apply Aristotelian language and concepts to the ethics of innovation and technology is by no means unprecedented. Virtue ethical approaches to these domains have been explored and convincingly defended in recent years, especially by Vallor (2016) and others (such as Blok et al., 2015; Sand, 2018; Barford, 2019; Costello, 2019; Reijers, 2020; Frigo et al., 2021; Astola et al., 2022). As Barford (2019, p. 1) summarizes: “the human qualities studied by virtue ethics—e.g., personal character and habits of acting and choosing with practical wisdom so as to promote human flourishing and not only one’s personal goals or those of one’s employer, to judge and to prioritize—are required successfully to engage in values-based ethical design [of ICT systems].”

Having said that, virtue ethical theories are frequently criticized for their alleged inability to guide action effectively (see, e.g., Louden, 1997). In response to this criticism, Sand (2018) argues that virtue ethics can successfully contribute to addressing problems of applied ethics by generating moral knowledge. Further, as Sand also points out, effective action guidance is a more general problem for all normative theories when applied to wicked problems, not one specific to virtue theories. Therefore we, alongside Annas (2011) and Vallor (2016), find it useful to apply analysis inspired by virtue ethics in the context of technological innovation.

In Aristotelian thinking, virtue is a deliberate practice, a way of acting, that enables its possessor consistently to excel in their function. While virtues are more than mere “simple dispositions to engage in certain behaviors stereotypical of the virtues” (Russell, 2014, p. 203), it is critical to note that they do come about as the result of habituation (êthos), or “the repeated performance and practice of the actions typical of the virtues” (Basilio, 2021, p. 531). Further, there is a distinction between virtues of character and intellectual virtues. The exercise of virtues of character specifically is what enables one to perform one’s function well, while the exercise of intellectual virtues enables one to arrive at the truth. It is important to stress here that this notion of virtue crucially involves activity and not merely being (explored by, e.g., Broadie, 1993). Merely possessing a virtue is not sufficient; rather, it must be accompanied by activity.

Being virtuous in one’s practice is a deliberate balance. For Aristotle, virtue of character is a mean state that is destroyed by the extremes of deficiency or excess. For example, courage is the virtue (mean) that lies at the right distance from foolhardiness (excess) and cowardice (deficiency): it is the balance of drive and caution appropriate to the situation at hand. Virtue does not necessarily mean being equidistant from the extremes, and the appropriate position shifts from situation to situation. Determining the appropriate balance or mean between deficiency and excess for one’s specific situation is a matter of judgement, or what Aristotle refers to as the intellectual virtue of phronêsis, practical reason.

A city’s ethical principles for data or AI use can be understood as representations of the (aspirational) virtues of the smart city. The successful exercise of phronêsis can then be understood as finding the right balance and a way to apply the abstract ethical principles in the concrete practices and processes. So understood, it becomes imperative for our aim—which is to bridge the gap between principle and practice—to consider in more detail the exercise of phronêsis, to which task we turn next through employing a dialogic case study.

3. Dialogic Case Study Approach

Our goal in this paper is to illustrate how a virtue-based approach can bridge the gap between high-level principles for the city as a whole and the hands-on work of data scientists and others as they engage in the practices of data-driven innovation. In what follows, we aim to illustrate how virtues operate on a practical level, namely with regard to the individuals (in our cases, data scientists) whose actions form part of the practices that constitute data-driven innovation. To this end, we explore two data-driven innovation cases being developed by the data and analytics team in the city of Helsinki.

To study these cases, we use a bidirectional dialogic case study approach (Rule and John, 2015). Our purpose here is to comment on the theory using the two concrete example cases and, more generally, to show how to bridge the gap between theory and practice. With this approach, the cases and theory engage in two ways, both by building the theory from the cases and by testing it with the cases, enabling a two-way commentary.

The first case involves advanced data analytics developed to provide better-quality services for the long-term unemployed. The context for this case is a large pilot project in Finland in which responsibility for employment services is being transferred from a national agency to individual municipal governments (Footnote 3). This case involves personal data collected about individuals and their use of employment and other services provided by the city. The purpose of the algorithm developed is to find a personalized path for job-seekers towards employment or other occupation. The innovative aspect of this case lies in its aim to include, in addition to basic employment service use data, data from complementary sources that the city controls. This combination would allow for more personalized and predictive service provision, with stated goals that include better serving city residents.

The second case involves developing a machine learning algorithm to enable third-party event organizers to connect more effectively with residents and visitors of the city. This case involves event description data, and the purpose of the algorithm is to classify and categorize the events automatically and meaningfully. The innovative aspect here is that, instead of a more typical big data approach where access to resources largely determines success, the aim is more equitable and non-discriminatory treatment of both groups involved: event organizers and potential audiences. For example, on the organizer side, the goal includes providing equal visibility to different parties regardless of characteristics such as the size of their marketing budget. Meanwhile, on the audience side, the goal includes deliberate exposure to events (through search results and recommendations) that are accurately tailored to an individual’s specific interests, given that those interests may appear as negligible niche outliers in a mass data analysis approach and, as a result, be filtered out of view.

For the purposes of this theoretical commentary, we relied on a single informant in the city of Helsinki, a data scientist representing the project coordination and data science perspectives, with whom we held three interview sessions of approximately 90 minutes each. We also used project documentation and publicly available online materials to gather additional perspectives. In addition, the authors are former employees of the city of Helsinki with first-hand experience and knowledge of the data governance, management, strategy, and data policy perspectives, both in general and regarding these specific cases (Footnote 4).

To analyze the case materials, we used an iterative approach. Between interview sessions, we re-examined our case materials and developed an updated synthesis bridging the practices evidenced in the cases and the theory. In particular, we analyzed the examples of algorithmic choices described by the informant and mapped them onto Vallor’s (2016) technomoral virtues. We then engaged again in dialogue with the informant to gather additional information and missing perspectives. After three iterations, our analysis reached saturation, resulting in the identification of the four common themes presented in Table 1.

Table 1. Four data-driven innovation virtues linking technomoral virtues and technological choices

It is important to note here that cities are typically rather conservative organizations that are also highly regulated by national and/or European Union legislation. This is especially the case with regard to the use of (personal) data and its combination from distinct registers. While our chosen cases may at first sound like fairly simple or ordinary data science projects, in practice they require novel and innovative approaches involving technologies, service design, legal interpretation, recruitment strategy, procurement standards, overall policy, and management in the city government.

4. Phronêsis for the Data Scientist

We now turn to the results of our study. Overall, we find that data scientists, as practitioners of data-driven innovation, must engage their practical reason, phronêsis, to make ethically charged decisions or, in the language of virtue, to find the appropriate balance between deficiency and excess in the context of those decisions.

Merely exercising one’s phronêsis does not, of course, guarantee optimal or even good results, nor is it intended to do so. Further, individuals may at times be constrained by their institutional environment in ways that hinder them from acting upon what they deem appropriate. While phronêsis is helpful when speaking about the ethics of individuals, more work is needed to understand the ethics of organizations, which comprise more than just individuals (for a similar conclusion, see Mittelstadt, 2019, p. 555).

Analysis of the two cases studied led to the identification of four common themes, described as examples of translational or bridging virtues of data-driven innovation (see the middle column of Table 1). These virtues can be considered specific instances of one or more of Vallor’s more general technomoral virtues, which are listed in the leftmost column. Vallor’s still fairly generic virtues turned out to be most useful as an approximation of, and a stand-in for, a set of data ethics principles at the city. Via the more specific, bridging virtues of data-driven innovation, we are able to make much more direct and immediate connections to the particular technical and algorithmic choices that the data scientist faces on a day-to-day basis (visually, the middle column is the bridge between the two ends). Examples of these choices present in our cases are given in the rightmost column.

It bears emphasizing again here that each row of this table represents an illustrative example of the kind of bridge we seek, and each cell in the middle column is likewise an example that we happened upon in the course of this particular research effort. Neither the set of rows nor the set of what we call “virtues of data-driven innovation” in the middle column is to be considered exhaustive or comprehensive, nor are the virtues mentioned exempt from the need for (further) moral justification. Our purpose here is only to visualize the missing layer between principles and practices and to show how the space between the leftmost and rightmost columns might be filled out in the instance of this specific data scientist and these two specific cases. Next, we briefly discuss each of the four virtues of data-driven innovation mentioned in the middle column.

4.1. Responsible use of personal data

This virtue concerns practically all cases involving personal data and, due to the requirements of the EU General Data Protection Regulation (GDPR), it is one of the best-known topics in data-driven innovation in Europe. Personal data have considerable value and utility potential while being subject to significant privacy protection requirements that safeguard against abuse (see, e.g., Politou et al., 2018). Further, responsibility and the closely linked notion of accountability are key aspects of nearly all existing sets of AI ethics principles.

In terms of the general technomoral virtues, this virtue relates to holding on to the moral whole (perspective): the focus must not narrow too much to either maximizing value (data abuse, or excessively exploitative data practices) or minimizing potential harm (denial of data, or refusal to process personal data at all). It also relates to compassionate concern for others (empathy): it must always be kept in mind that the data are intimately connected with real, flesh-and-blood people.

The technical process of adjusting the level of anonymization, made more challenging by the plurality of available techniques, is an example of a data scientist’s exercise of phronêsis. By choosing an appropriate level and type of anonymization, the data scientist can balance the information requirements of the algorithm in question against preserving the privacy of data subjects.
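To make this balance concrete, the following minimal Python sketch uses k-anonymity, one common (and admittedly limited) privacy measure, as a stand-in for whichever anonymization techniques the city project actually used. The records, the candidate settings, and the helper names (`generalize`, `k_anonymity`, `choose_setting`) are all hypothetical. Each setting coarsens age and postal code; the sketch picks the finest setting that still reaches a target k, so that as much information as possible is kept for the algorithm.

```python
from collections import Counter

# Hypothetical quasi-identifiers (age, postal code); no real data.
RECORDS = [
    (23, "00100"), (27, "00120"), (31, "00130"), (34, "00150"),
    (38, "00100"), (41, "00170"), (45, "00180"), (49, "00120"),
    (52, "00500"), (58, "00510"), (63, "00530"), (67, "00550"),
]

# Hypothetical settings (age bin width, postal-code digits kept), finest first.
SETTINGS = [(1, 5), (5, 5), (10, 3), (20, 3), (50, 2)]


def generalize(record, age_width, zip_digits):
    """Coarsen one record: bin the age and mask trailing postal-code digits."""
    age, postal = record
    low = (age // age_width) * age_width
    age_range = f"{low}-{low + age_width - 1}"
    masked = postal[:zip_digits] + "*" * (len(postal) - zip_digits)
    return (age_range, masked)


def k_anonymity(records, age_width, zip_digits):
    """Size of the smallest group of records with identical generalized values."""
    groups = Counter(generalize(r, age_width, zip_digits) for r in records)
    return min(groups.values())


def choose_setting(records, target_k):
    """Return the least coarse setting whose k meets target_k, else None."""
    for age_width, zip_digits in SETTINGS:
        if k_anonymity(records, age_width, zip_digits) >= target_k:
            return (age_width, zip_digits)
    return None


for setting in SETTINGS:
    print(setting, "k =", k_anonymity(RECORDS, *setting))
print("target k=2 ->", choose_setting(RECORDS, 2))  # (10, 3)
print("target k=3 ->", choose_setting(RECORDS, 3))  # (50, 2)
```

Raising the target k protects data subjects more but discards more detail that the algorithm might need; where to set it is exactly what the code cannot decide.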

4.2. Evidence-based decisions

Data-driven management in a public-sector organization involves dealing with heterogeneous, incomplete, and/or poor-quality data, as well as with approximations and probabilities. The practitioners responsible for choosing, processing, and visualizing the relevant data as evidence for decision-makers, or for developing automated models, must therefore exercise this virtue to deliver the best possible results, that is, the evidence together with a description of its limitations.

In terms of the general technomoral virtues, this relates to respecting truth (honesty), in that evidence is sought and given due weight in decision-making processes, and to knowing what we do not know (humility), in that limitations of any data presented as evidence are included in that presentation.

One method at the data scientist’s disposal in preparing data and developing models is adjusting the generalizability of the model used to generate evidence. Excess in this case is hyper-precision through overfitting the model: overfitting makes the model too specific, which hinders its generalizability and so limits its utility for decision-makers. Deficiency in this regard is underfitting, which fails to capture the essential trends in the data and so produces results so general as to be meaningless. Phronêsis is needed here to find the appropriate fit.
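The trade-off between overfitting and underfitting can be illustrated with a minimal Python sketch. It assumes synthetic data (a noisy sine curve, not the city’s data) and uses polynomial degree as the single knob for model complexity; `make_data` and `select_degree` are hypothetical names. Training error keeps falling as the degree rises, while error on held-out validation data typically falls and then levels off or rises again; the validation curve is one common aid for locating an appropriate fit.

```python
import numpy as np
from numpy.polynomial import Polynomial


def make_data(n, rng, noise=0.1):
    """Synthetic stand-in data: a sine curve plus Gaussian noise."""
    x = rng.uniform(-1.0, 1.0, n)
    y = np.sin(3.0 * x) + rng.normal(0.0, noise, n)
    return x, y


def select_degree(x_train, y_train, x_val, y_val, max_degree=10):
    """Fit polynomials of degree 0..max_degree; return the degree with the
    lowest validation error plus both mean-squared-error curves."""
    train_err, val_err = [], []
    for degree in range(max_degree + 1):
        model = Polynomial.fit(x_train, y_train, degree)
        train_err.append(float(np.mean((model(x_train) - y_train) ** 2)))
        val_err.append(float(np.mean((model(x_val) - y_val) ** 2)))
    return int(np.argmin(val_err)), train_err, val_err


rng = np.random.default_rng(0)
x_tr, y_tr = make_data(40, rng)
x_va, y_va = make_data(40, rng)
best, train_err, val_err = select_degree(x_tr, y_tr, x_va, y_va)
for d, (tr, va) in enumerate(zip(train_err, val_err)):
    print(f"degree {d:2d}: train MSE {tr:.4f}, validation MSE {va:.4f}")
print("degree with lowest validation error:", best)
```

Low degrees underfit (both errors stay high), while the highest degrees can start chasing noise in the 40 training points. A validation score only narrows the choice; how much precision the evidence should claim remains a judgement.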

4.3. Fair treatment of all actors

An inherent challenge with data science, and its application in data-driven innovation, is that biased data lead to biased results. Bias, while inevitable, cannot be allowed to result in discriminatory, inequitable, or unfair practices or policies. One of the challenges facing the data scientist is therefore recognizing bias where it appears (in the data and in its results) and responding appropriately so that the bias does not cause unwanted treatment of the people involved. More broadly, ensuring both individual and group fairness at the same time is challenging (Dwork et al., 2012), but the two are not necessarily in conflict; the apparent conflict may often be a mere misconception that can be resolved through a nuanced consideration of the sources of unfairness (Binns, 2020).

In terms of the general technomoral virtues, this relates to upholding rightness (justice), in that the goal is to prevent injustice and discriminatory effects, and having compassionate concern for others (empathy), in that the social and other context of the people involved will require adjusting for.

To practice this virtue, the data scientist, for example, has to understand the possible skewness of training data and use normalization, over-sampling, or other means to balance class representation. Finding the right corrective measures, in the service of the vulnerable in particular, is key here. This virtue is a prime example of how important the individual exercise of phronêsis is in realizing the larger goals of data-driven innovation, as there is no single exact mathematical measure that one can rely on.
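Random over-sampling, one of the means named above, can be sketched in a few lines of Python. The event categories and counts below are hypothetical, and `random_oversample` is an illustrative helper rather than code from the cases. Minority classes are padded with randomly duplicated examples until every class matches the largest one.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate random examples of each minority class until every class
    has as many examples as the largest class. Originals are kept first."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(members) for members in by_class.values())

    out_samples, out_labels = list(samples), list(labels)
    for label, members in by_class.items():
        extra = target - len(members)
        out_samples.extend(rng.choices(members, k=extra))
        out_labels.extend([label] * extra)
    return out_samples, out_labels


# Hypothetical, skewed training set of event descriptions.
samples = [f"music event {i}" for i in range(6)] + [
    "knitting circle", "pottery class", "chess tournament"]
labels = ["music"] * 6 + ["crafts"] * 2 + ["chess"]

print(Counter(labels))
balanced_samples, balanced_labels = random_oversample(samples, labels)
print(Counter(balanced_labels))
```

Duplicating a handful of minority examples can make a model overfit to them, which is one reason no single correction is always right; reweighting classes or gathering more data are alternatives.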

4.4. Service to individuals in society

When designing and developing public services, one needs to weigh the interests of the individual against those of society or the larger community. For a public-sector body, resources are limited, and individually tailored care and services are often not possible within the bounds of those resources. The task in these cases is to ensure that most people are adequately served while no one, regardless of the complexity of their needs, is left behind.

In terms of the general technomoral virtues, this relates to holding on to the moral whole (perspective), in that one must retain the big picture of societal well-being while caring for specific people and their needs, and to skillful adaptation to change (flexibility), in that the right approach to any given case will have to be dynamic and react to changes in the environment and society as well as to emerging individual needs.

Excess in this virtue is a kind of erasure of individual experience and difference, while deficiency is prioritizing the individual above all other concerns. For this virtue, which could be described as the right consideration for the collective with respect to the individual and vice versa, we did not observe a single common mechanism for the data scientist to deploy in exercising their phronêsis, as we did for the three preceding virtues. We did, however, observe two particular actions taken.

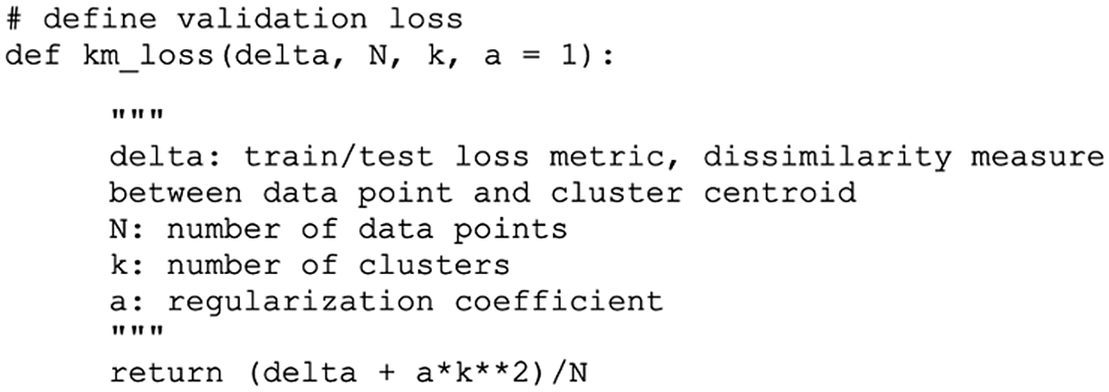

The first was to parametrize the loss function (Footnote 5) in a clustering algorithm (see the code excerpt from our case in Figure 1). By choosing different values for the parameters, a data scientist can balance between a preference for larger or smaller clusters. In practice, preferring larger clusters means providing a more generic outcome that approximates the needs of more people at once, whereas preferring smaller clusters produces more precisely targetable groups.

Figure 1. Code excerpt for balancing between community and individual utility.
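The code in Figure 1 is not reproduced in text here, so the following Python sketch is not the case’s code. It is a hypothetical illustration of the same idea: plain k-means, with a loss that adds a per-cluster penalty to the within-cluster sum of squares. The names `kmeans`, `choose_k`, and `size_penalty`, and the synthetic data, are our own assumptions. A larger `size_penalty` makes each extra cluster more expensive and so favors fewer, larger clusters; a smaller one favors more, smaller, and more precisely targetable clusters.

```python
import numpy as np


def kmeans(points, k, n_iter=100, seed=0):
    """Plain Lloyd's k-means. Returns (labels, within-cluster sum of squares)."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        dists = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to its cluster mean; keep it if the cluster is empty.
        new_centers = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    dists = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    labels = dists.argmin(axis=1)
    sse = float(dists[np.arange(len(points)), labels].sum())
    return labels, sse


def choose_k(points, size_penalty, max_k=8):
    """Pick k minimising SSE + size_penalty * k (a hypothetical loss)."""
    losses = [kmeans(points, k)[1] + size_penalty * k for k in range(1, max_k + 1)]
    return int(np.argmin(losses)) + 1


# Synthetic data: three groups of 30 points around different centres.
rng = np.random.default_rng(1)
centres = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
points = np.vstack([c + rng.normal(0.0, 0.5, size=(30, 2)) for c in centres])

for penalty in (0.5, 10.0, 50.0, 1000.0):
    print(f"size_penalty={penalty}: k = {choose_k(points, penalty)}")
```

Because the same deterministic SSE values are compared at every penalty, raising the penalty can never increase the chosen number of clusters. Which penalty serves both the collective and the individual is left to the data scientist.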

The second action we observed responded to the tendency of algorithms to favor the general masses, with the result that rare and exceptional instances are not found. This is a fundamental problem in all reasoning based on statistical analysis, but it takes various forms in different contexts, and so multiple approaches to addressing it are possible. The action we observed was to add randomness. By increasing randomness, a data scientist can shift the balance toward niche categories by also suggesting some unexpected and rare instances.
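One simple way to add such randomness is an epsilon-greedy style of exploration, sketched below in Python. We do not know which mechanism the case used; the event names, scores, and the `recommend` helper are hypothetical. Each of the n slots is filled, with probability `epsilon`, by a random item from outside the top-n ranking, and otherwise by the next highest-scoring item.

```python
import random


def recommend(scores, n, epsilon, seed=0):
    """Return n items. Each slot is, with probability epsilon, a random item
    from outside the top n (the 'long tail'); otherwise the next top item."""
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get, reverse=True)
    head, tail = iter(ranked[:n]), ranked[n:]
    picks = []
    for _ in range(n):
        if tail and rng.random() < epsilon:
            # Draw without replacement so no event is suggested twice.
            picks.append(tail.pop(rng.randrange(len(tail))))
        else:
            picks.append(next(head))
    return picks


# Hypothetical popularity scores for events.
scores = {
    "concert": 0.95, "festival": 0.90, "theatre": 0.80, "museum": 0.70,
    "poetry reading": 0.20, "chess meetup": 0.15, "folk dance": 0.10,
    "bird walk": 0.05,
}
print(recommend(scores, 3, epsilon=0.0))  # ['concert', 'festival', 'theatre']
print(recommend(scores, 3, epsilon=0.3))
```

A higher `epsilon` trades some expected relevance for exposure of niche events; how much to trade is, again, a matter of judgement rather than of the code.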

We conclude this section with some summary remarks. In the course of our work, we saw that our informant was continuously exercising their phronêsis, in addition to their technical skills and knowledge, to be able to do their work. We conceptualize this as their activity of attempting to find the appropriate balance between deficiency and excess, that is, navigating the landscape of virtue with phronêsis. In the first case study, we noticed that a balance was being sought between sufficient anonymization of the personal data of people using employment services and the usefulness of the data in terms of information richness and utility. In the second case, the balance to be struck involved the generalizability of the machine learning-based classification model and how well the model finds specific solutions.

5. Discussion

In this commentary, we have described theoretical and practical ways in which we can use virtue ethics to understand and to inform practices of data-driven innovation in the public sector. We have described a theoretical grounding for our approach that is inspired by our reading of Aristotle, and an illustrative application of that approach.

Our motivation in developing our approach has been to bridge the gap between abstract ethical principles, which are increasingly common for organizations practicing data-driven innovation, and the practical choices that data scientists and other practitioners make every day in order to do their jobs. We have done so by using a dialogic case study approach (Rule and John, 2015) to apply conceptual tools from virtue ethics in our study of two concrete cases of data-driven innovation at the city of Helsinki. Through this approach, we identified four themes and described four corresponding, illustrative “virtues of data-driven innovation.” In doing so, we showed how our approach might bridge the gap between general principles and the concrete practices of civil servants as they engage their phronêsis to produce virtuous outcomes.

A major insight gleaned from exposing our theory to our practitioner’s first-hand experience was a deepened appreciation of how essential the individual practitioner’s moral sensitivity and judgement, phronêsis, is, especially in contexts where explicit and acknowledged structures and processes for translating values and principles into practice are largely absent, as is most often the case in organizations today. This importance is further heightened in data-driven innovation, which by definition explores novel and unknown contexts. We believe that making the processes of data-driven innovation more visible, putting them into words, and making them more transparent will help us not only to understand them but also to govern them better.

Our work contributes to the field of practicing ethics in research and innovation, and it addresses two commonly cited issues facing methods that attempt to introduce or strengthen ethical thinking and doing in the phases of innovation design and testing (so-called intra methods): their lack of theoretical grounding and their inability to incorporate ethics into the day-to-day work of innovation practitioners (Reijers et al., 2018).

Further, our work relates to the ethical discussion around innovation that focuses specifically on the notion of responsibility (e.g., Coeckelbergh, 2006; Swierstra and Jelsma, 2006; Grinbaum and Groves, 2013; Simakova and Coenen, 2013; Von Schomberg, 2013; Ferrari and Marin, 2014; Grunwald, 2014; Pavie, 2014; Sand, 2016). While we have refrained from framing our work explicitly around the term “responsibility,” understood as “the implementation of ethical values, transparency, reflexivity, participation and more generally bottom-up governance of innovation” (Sand, 2018, p. 92), we consider the notion integral to our work, which uses the language of virtue.

Finally, we have observed a particular interest in the relevant literature in individual innovators and pioneering developers of new technologies (e.g., Gardner, 1993; Sand, 2018). Our research differs from this approach in that the practitioner of innovation in our focus is not the once-in-a-generation genius but rather the scientifically skilled civil servant. Our discussion of individual virtues therefore looks very different and has a different scope of applicability.

Our work comes with limitations. First, as the main data source, we have chosen to focus on an individual person with a specific role in these innovation processes. We recognize that processes and practices of innovation, perhaps especially in the public sector, are highly collaborative, complex, and collective. This limitation also provides an opportunity for future research. We suggest that our method can equally be applied to understand other actors playing different roles in these complex systems, such as chief information officers, data architects, policy experts, data protection lawyers, procurement specialists, service designers, or elected political leaders. Additionally, it is possible to explore applications of this approach to collective processes, not only to the individuals participating in them.

Second, the four data-driven innovation virtues we discussed are by no means an exhaustive or comprehensive list. Some of the relevant literature is motivated in part by the identification and even classification of specific virtues that are especially relevant for innovation, such as creativity (Sand, 2018; Astola et al., 2022). In contrast, rather than focusing on specific virtues, we want to point out that there is a need to identify the layer between abstract, general ethical principles and concrete, practical algorithmic choices.

Further, the examples we presented are not meant to be generalizable, abstractable, or universally applicable: that would be sliding back into principlism. Instead, they are the results of applying the language and theoretical tools provided by virtue ethics to actual practice, for example, a data scientist writing code. This application is provided to demonstrate that virtue theoretical tools can fruitfully be used to render visible and graspable what is usually considered opaque or “black box” about innovation processes, namely what goes on between having a principle (like “responsibility”) on the one hand and the individual data scientist’s actual practice on the other (like the decision to opt for a straightforward substitution technique for data masking rather than adopting a full-blown encryption approach).

The policy implications of this work are for both decision-makers and practitioners of data-driven innovation in the public-sector context who are committed to ethical practice. We join Hemerly (2013) and Meyer (2015), both of whom argue for flexible and reasonable policymaking that takes the context into account, and suggest that a virtue-based approach can support their efforts to connect abstract and unchanging principles with contextual and shifting demands of day-to-day practice.

Acknowledgments

The authors are grateful for the case studies generously described to us by Nuutti Kytö (né Sten).

Funding statement

The authors declare none.

Competing interest

Both authors have been previously employed by the city of Helsinki.

Author contribution

Conceptualization: V.L., K.K.; Methodology: V.L., K.K.; Writing original draft: V.L., K.K. All authors approved the final submitted draft.

Data availability statement

Data availability is not applicable to this article as no new data were created or analyzed in this study.
