Policy Significance Statement

The need for, and significance of, a practice of digital self-determination (DSD) is evident across a number of sectors and specific case studies. The increasing datafication of international migration, in which the lives and experiences of migrants are increasingly quantified, often without their knowledge, offers a good example. Operationalizing DSD in this (and other) contexts so as to maximize the potential of data while limiting its harms requires a number of steps. In particular, a responsible operationalization of DSD would consider four key prongs or categories of action: processes, people and organizations, policies, and products and technologies.

1. Introduction

Our world is awash in data. Every day, some 2.5 quintillion bytes of data are generated (Vuleta, 2021), and in 2020, approximately 64.2 ZB of data was created or replicated (Rydning, 2021). According to the International Data Corporation, the amount of data created or replicated is growing at a compound annual growth rate of 23%, driven by the proliferation of Internet of Things devices, remote sensors, and other data collection methods that are now deeply intertwined with virtually every aspect of people’s professional and social lives (Rydning, 2021).

We have transitioned to a new era of datafication, in which human life is increasingly quantified, often without the knowledge of data subjects, and frequently transformed into intelligence that can be monetized for private or public benefit. At the same time, this new era offers tremendous opportunities: the responsible use and reuse of data can help address a host of apparently intractable societal and environmental problems, in large part by improving scientific research, public policymaking, and decision-making (Verhulst et al., 2020). Yet it has also become increasingly clear that datafication is simultaneously marked by a number of asymmetries, silos, and imbalances that restrict the potential of data. This tussle between potential and limits is emerging as one of the central public policy challenges of our times.

In what follows, we outline a number of ways in which asymmetries are limiting the potential of datafication. In particular, we explore the notion of agency asymmetry, arguing that power imbalances in the data ecology are in effect disempowering key stakeholders, and as such undermining trust in how data are handled. We suggest that in order to address agency asymmetry (along with other forms of asymmetry), we need a new principle and practice of digital self-determination. This principle is built on the foundations of long-established philosophical, psychological, and legal principles of self-determination but updated for the digital era.

In Section 2, we explore how datafication has come about, and some of the asymmetries it has led to. Existing methods of addressing these asymmetries, we suggest, are insufficient; we need a new concept and practice of digital self-determination (DSD). We examine DSD in Section 3, showing how it builds on a pre-existing intellectual tradition pertaining to self-determination. Section 4 contains a case study (on migration) that illustrates the notion of DSD, and Section 5 contains some specific recommendations for operationalizing the practice of DSD.Footnote 1

2. Context: Digital Transformation and Datafication

The notion of datafication is sometimes conflated with big data. As we have written elsewhere, the two phenomena may be said to exist on a spectrum, but they are in fact distinct (Verhulst, 2021). In particular, datafication extends beyond a mere technical phenomenon and has what may be considered a sociological dimension. As Mejias and Couldry argue in the Internet Policy Review, datafication includes “the transformation of human life into data through processes of quantification,” and this transformation, the authors further argue, has “major social consequences … [for] disciplines such as political economy, critical data studies, software studies, legal theory, and—more recently—decolonial theory” (Mejias and Couldry, 2019).

One aspect of datafication that is particularly relevant for our discussion here is that it is often intermingled with hierarchy and relationships of power. It exists, as Mejias and Couldry suggest, at “the intersection of power and knowledge” (Mejias and Couldry, 2019). This has tremendous implications for how data are accessed and distributed, and in particular for the creation of data silos and asymmetries. We return to these challenges below. First, we consider the potential offered by datafication.

2.1. Potential of datafication: Reuse for public interest purposes

In order to understand the potential of datafication, we need to explore the possibilities offered by data reuse. Data reuse takes place when information gathered for one purpose is repurposed (often in anonymized or aggregated form) for another purpose, generally with an intended public benefit. For example, location data collected by private telecommunication operators can be reused to understand human mobility patterns, which can inform public aid responses to mass migration events or ecological crises. Likewise, data collected from wearables and smart thermometers were used to predict outbreaks of COVID-19 in different areas across the USA ahead of the Centers for Disease Control and Prevention (Chafetz et al., 2022).

Such benefits are often realized through two key vehicles:

• Open data, which involves data holders (typically in governmental and academic sectors) releasing data publicly, so that it can be “freely used, reused, and redistributed by anyone” (Open Knowledge Foundation, 2015).

• Data collaboratives, which are an emerging form of partnership, often between the public, academic, and private sectors, that allow for data to be pooled and reused across datasets and sectors (Young and Verhulst, 2020). Data collaboratives can take a number of forms and allow data holders to give other stakeholders access to their data for the public benefit without necessarily losing control or giving up a competitive advantage (which may be of particular concern to companies). In the example cited above, for instance, telecoms firms can give third-party researchers and responders access to their data while still maintaining their own stakeholder interests in the data.

2.2. Key challenge: Data asymmetries

Open data and data collaboratives offer real potential to address some of the most intractable problems faced by society. When used responsibly and in the right data ecology, they can help policymakers by improving situational awareness, drawing clearer connections between cause and effect, enhancing predictive capabilities, and improving our understanding of the impact of critical decisions and policies (Verhulst et al., 2019). All of this, when combined, can make a real and tangible difference in public decision-making. Similar benefits exist in the scientific community, where greater access to data can lead to new research while allowing experiments to be reproduced and verified by anyone (Yong, 2015).

At the moment, though, this potential is often held back. The key restriction stems from asymmetries in the way data are collected and, especially, stored (or hoarded). An era marked by unprecedented abundance of data and other potential public goods is also marked by vast disparities and hierarchies in how that abundance is distributed and accessed. Today, much of our data exists in silos, hidden from public view or usage, thus limiting the ability of policymakers, researchers, or other actors to benefit from its possibilities. In addition, the public is often left in the dark about how data are being collected, for what purpose, and how they are being used.

Three forms of asymmetry are worth highlighting:

• Data asymmetries, in which those who could benefit from access to data, or draw out its potential, are denied that access;

• Information asymmetries, in which there is a mismatch in transparency or understanding of how data are being handled between data holders (such as companies or organizations), data subjects (the individuals whose data are being collected and used), and potential users of the data (such as researchers or policymakers). In these cases, the data holder may not disclose to the data subject or user what data exist. This mismatch in awareness can lead to distrust, or to situations in which valuable data are never sought or deployed even though they could inform critical decisions or improve outcomes for individuals or society as a whole. This highlights the importance of promoting greater transparency and awareness in the handling and use of personal data; and

• Agency asymmetries, where data relationships between parties are marked by imbalances and hierarchies, meaning that one party (typically one that is already vulnerable and disenfranchised) is further disempowered. For instance, large quantities of data are collected on children daily, tracking their movements, communications, and more. Yet they (and their caregivers) have little to no agency over their data and how those data are used and later reused (Young and Verhulst, 2020).

The persistence of such asymmetries has a number of negative consequences. Most obviously, the potential public benefits of access to and reuse of data (e.g., through improved research or policymaking) are not fully realized. Lack of access to data may also contribute to bias in analysis, especially if data hoarding leads to the exclusion of certain populations from datasets.Footnote 2 In addition, the power imbalances mean that an extractive relationship often exists between data subjects (e.g., citizens) and data holders (e.g., large companies), with a number of practical and ethical consequences (observers have written of a sense of “data colonization”).

Further, while data collaboratives are a means to unlock data, it is important to design them in a way that does not exacerbate existing asymmetries. For example, a data collaborative designed in a way that benefits only the more powerful partners may reinforce existing power imbalances rather than address them. In the context of Open Finance, for instance, Joshua Macey and Dan Awrey have shown that data collaboration may in the short term promote greater competition, spur innovation, and enhance consumer choice, yet “in the longer term, the economics of data aggregation are likely to yield a highly concentrated industry structure, with one or more data aggregators wielding enormous market power” (Awrey and Macey, 2022). Similarly, open data policies can be a powerful tool to democratize access to data and promote greater transparency. However, if not designed carefully, open data policies can also exacerbate data asymmetries: when implemented in a way that benefits only certain groups, such as those with greater technological or financial resources, they may further marginalize groups that are already marginalized.

All of this leads to a number of less obvious and more psychological, but no less insidious, consequences. The asymmetries and the sense of colonization lead to a feeling of disempowerment and a lack of autonomy, especially among populations that are already vulnerable, and this in turn erodes public trust in both technology and institutions—one of the defining problems of our times.

For all these reasons, it is essential that steps be taken to address the asymmetries that are at the heart of our data economy, in the process helping to unlock the value of the data age and spurring new forms of innovation in public decision-making. This includes designing data collaboratives and open data policies in a way that promotes equitable access and benefits for all stakeholders, rather than exacerbating existing power imbalances. In Section 3, we examine a principle and practice of digital self-determination that we believe is central to this process.

2.3. Existing methods of rebalancing asymmetries—And their limitations

First, though, we examine some existing methods either being deployed or considered to address these asymmetries. While these methods are well-intentioned and do sometimes have at least a marginal effect, we suggest that each has limitations and that, individually and collectively, they fail to address the underlying magnitude or scope of the problem.

2.3.1. Consent

Today, the default approach to addressing information and power asymmetries involves the concept of informed consent. In this method, information about data handling policies is shared with data subjects, who then have the option of deciding whether or not to allow their data to be collected, accessed, and (re)used. This has been the primary vehicle for providing data subjects with a “choice” since the widespread adoption of the Fair Information Practice Principles, approximately 30 years ago (Landesberg et al., 1998). Yet despite its widespread use, and despite the fact that it provides the bedrock for many legislative efforts concerning data management,Footnote 3 as well as for contemporary practices such as clickwrap end-user license agreements (Preston and McCann, 2011)Footnote 4 and the lengthy terms and conditions (T&C) users must accept before using social media platforms, informed consent has a number of shortcomings:

• Binary: Generally, opt-in or opt-out regimes dominate the practice of consent (de Man et al., 2023). Yet such approaches tend to be binary (for or against collection or sharing) and thus inappropriately reductive. Some versions do allow for a greater level of granularity (i.e., more boxes to be checked), but even these fail to capture the true nuances and complexity of how data are collected, used, and reused.

• Informational shortcomings: To truly confer agency, informed consent practices would need to convey a robust understanding of the nature, significance, implications, and risks of data collection, use and reuse (European Commission – Research Directorate-General, 2021). For example, data subjects should be made aware of the immediate uses of their data and also potential future uses. Such “rich information” is generally lacking, thus compromising citizens’ ability to provide genuine consent.

• Collective versus individual: Informational shortcomings are exacerbated by the fact that consent policies are typically aimed at informing individuals about how their data will be used. In truth, however, datasets are often combined and repurposed in ways that have significant consequences for groups or communities. More responsible forms of consent would pay greater attention to the interests of communities (Francis and Francis, 2017).

• Limited scope: Finally, existing consent mechanisms are limited because much of the ethical and policy debate focuses on the scope of the original consent and whether reuse is permissible in light of that scope (Francis and Francis, 2017). As a result, most consent regimes have difficulty handling repurposing, which is essential to fulfilling the potential of data. Recent years have witnessed the development of more open-ended consent models (Francis and Francis, 2017), and several groups, such as the World Economic Forum (Bella et al., 2021), have tried to improve on existing methods of consent by proposing new ones. However, these too contain many ethical limitations and can even act as bottlenecks to qualitative studies (Mannheimer, 2021).

2.3.2. Alternative consent mechanisms—And their shortcomings

In part due to these shortcomings, some have suggested “post-consent privacy,” while others have suggested the establishment of alternative rights and technologies. In addition, legislation such as the European Union’s (EU) General Data Protection Regulation (GDPR), which took effect in May 2018, includes other lawful bases for processing personal data besides consent, including contract, legal obligation, vital interests, public interest, and legitimate interests (Gil González and de Hert, 2019). However, each of these also has certain limitations (Barocas and Nissenbaum, 2014). Below, we focus on three alternatives that have gained substantial traction in the literature:

• Data ownership rights: One approach is to treat data as the private property of data subjects. While in theory this could enhance agency, it poses the serious problem of undermining the public good properties of data. Data are non-rivalrous, non-excludable, and non-depletable, making them by definition a public good (Hummel et al., 2021). While ownership of data may appear to solve problems related to consent and control, it raises serious concerns regarding the marketization and commodification of data (Montgomery, 2017). In truth, a lack of clarity regarding the notion of ownership when it comes to data means that data cannot be treated solely as either a public or a private good.

• Collective ownership: Ownership of data can be at the level of the individual, the community, or the group. Group-level ownership has most commonly been explored under the rubric of “data sovereignty,” which places data under the jurisdictional control of a single political entity (Christakis, 2020). Collective ownership poses many of the same challenges as individual ownership, notably those associated with the privatization of a public good. In addition, the lack of clear and enforceable legal frameworks around data ownership makes it difficult to establish or operationalize data sovereignty (Hummel et al., 2021). National and subnational jurisdictions are often in conflict, and different areas of law operate differently. For example, while intellectual property rights (Mannheimer, 2021) protect certain aspects of data reuse, criminal law (Liddell et al., 2021) may interpret reuse as a form of theft. Overall, the absence of a legal framework allows data subjects to be exploited, while also limiting the effective reuse of data for the public good.

• Personal information management systems (PIMS): PIMS (EDPA, 2021) are sometimes proposed as alternative systems of data management to empower individuals with greater control over their personal data. PIMS are typically centralized or decentralized systems through which individuals can choose to share (or not share) their personal data. This method also faces some important limitations.

First, there is a danger that, rather than conferring control on individual subjects, PIMS will simply transfer control to the owners and operators of large PIMS platforms. On this view, a PIMS-based system of consent may end up replicating (and perhaps aggravating) existing hierarchies and asymmetries.

Second, PIMS remain highly susceptible to the many vulnerabilities of the existing data ecosystem. In particular, they are prone to hacking and breaches and are only as robust as the surrounding legal and policy ecosystem that protects how data are collected, stored, and shared (IAPP, 2019).

Finally, the adoption of PIMS has been stunted by a lack of adequate use cases and, consequently, an insufficiently proven business case (Janssen and Singh, 2022). Without stronger models and stress-tested best practices, the potential of PIMS remains more conceptual than proven.

3. Need for New Principle: Digital Self-determination

All these shortcomings, of both existing and hypothetical methods of conferring agency, call for a new approach to addressing the asymmetries of our era. Our proposed solution rests on the principle of DSD. As noted, DSD is built on the foundations of existing practices and principles of self-determination. As a working definition, we propose the following:

Digital Self-Determination is defined as the principle of respecting, embedding, and enforcing people’s and peoples’ agency, rights, interests, preferences, and expectations throughout the digital data life cycle in a mutually beneficial manner Footnote 5 for all parties Footnote 6 involved.

3.1. The concept of self-determination

The above definition may be relatively new, but it stems from a long history of exploration spanning philosophy, psychology, and human rights jurisprudence. The term “self-determination” is often attributed to the German philosopher Immanuel Kant, who wrote in the 18th century about the importance of seeing humans as “moral agents” who would respect rules over their own needs and emotions because of an innate feeling of social “duty” (Remolina and Findlay, 2021). Regardless of personal feelings, he argued, humans “have the duty to respect dignity and autonomy” (that is, the self-determination) of others (Kant, 1997). More generally, Kant’s philosophy affirmed the importance of treating individuals as ends rather than means, and of individuals being able to remain eigengesetzlich (autonomous) (Hutchings, 2000). Others, such as George Herbert Mead, have focused on the social development of the self through interactions with others (Mead, 1934; Aboulafia, 2010). In this sense, self-determination is not just a matter of making decisions based on one’s own desires and goals but also involves taking into account the perspectives and expectations of others. Simone de Beauvoir, on the other hand, approached self-determination from an existentialist perspective (de Beauvoir, 1948), emphasizing the importance of individual agency and the need for individuals to actively shape their own lives and identities, rather than simply accepting the roles and expectations imposed on them by society.

Self-determination can also be explored through the prism of psychology, where the ability to make decisions for oneself is often considered central to people’s motivation, well-being, and fulfillment (Ryan and Deci, 2006). For instance, Deci and Ryan (1980) explore self-determination theory, which distinguishes between “automated,” instinctive behaviors and consciously “self-determined behaviors” aimed at achieving a specific outcome.

Finally, it is also worth considering international law, which upholds the notion of self-determination as it applies both to states and to their constitutive members, that is, individuals. Self-determination is, for instance, closely associated with the decolonization movement, as well as with movements for the autonomy and independence of indigenous peoples. In 2007, the UN Declaration on the Rights of Indigenous Peoples acknowledged the right of peoples to practice customs and cultures “without outside interference” while also taking “part in the conduct of public affairs at any level” (Thürer and Burri, 2008), thereby asserting the fundamental importance of autonomy for individuals and groups. The basis for this principle can be traced even further back, to Article 1 of both the 1966 International Covenant on Economic, Social and Cultural Rights and the International Covenant on Civil and Political Rights, which states: “All peoples have the right to self-determination. By virtue of that right they freely determine their political status and freely pursue their economic, social, and cultural development” (UN General Assembly, 1966).

3.2. Digital self-determination

The increased digitization and datafication of society lead us to extend these notions of self-determination to a concept of DSD. DSD includes the following key aspects and components.

3.2.1. DSD is mainly concerned with agency about data

First, with digital technologies rapidly advancing the collection, storage, and use of data, the need for DSD has become increasingly pressing in recent years. As a concept, DSD rests on the understanding that, in a digital society, data and individuals are not separate entities but mutually constitutive.Footnote 7 Thus, control over one’s data representation is fundamentally a matter of individual agency and liberty.

3.2.2. DSD has both an individual and collective dimension

Second, like physical self-determination, DSD has an external collective dimension that accounts for the influence that other people, peoples, and communities have on the virtual social self. Conversely, DSD also has an internal individual dimension that defines the whole online self as the sum of three elements: one’s virtual persona, one’s data, and data about oneself. Each of these elements is essential to considering the notion of DSD and how best to apply it.

3.2.3. DSD can especially benefit the vulnerable, marginalized, and disenfranchised

Third, DSD is ethically desirable because it protects the rights of data subjects, whether individually or collectively. It is also worth noting that DSD is particularly important for protecting the rights of society’s most marginalized and disenfranchised, who are typically less included in, and less aware of, the emerging processes of social datafication. These populations are often the most vulnerable to digital asymmetries and are already excluded from various aspects of social and economic life in the digital era. As such, there is a strong redistributive dimension to DSD.

3.2.4. DSD can leverage existing practices of principled negotiation

Fourth, the notion of self-determination creates a new avenue for negotiation beyond traditional institutional levers. Drawing on principled negotiation theory, DSD can help establish objective criteria, focus on specific individual and collective interests, and unite common options (Alfredson and Cungu, 2008). Negotiations framed around objective criteria are more efficient and productive, as they highlight mutual gain and help balance power asymmetries. DSD plays a role in establishing objective criteria by empowering individuals to articulate and advocate for their pre-existing interests in a broader negotiation process (Alfredson and Cungu, 2008). This not only keeps the focus on important interests that might otherwise be ignored but also helps frame negotiations in a way that unites common interests to achieve fairer outcomes.

3.2.5. DSD will need to be flexible and context-specific, yet enforceable

Finally, it is important to emphasize that DSD cannot be achieved in a strictly pro-forma or prescriptive way but will often need to be approached in a voluntary, contextual, and participatory manner (in this sense, it is conceptually reminiscent of notions of self-regulation). Such an approach of “productive ambiguity” aligns more closely with the principles of self-determination and can help to ensure the successful adoption of DSD in the long run. How DSD is implemented will depend on what data are being handled, the stage of the data life cycle that is being considered, who the actors are, and how interests are being addressed. Each context will call for its own set of stakeholders, processes, and systems. At the same time, because of the well-established weaknesses of enforcement in self-regulatory contexts, special attention will need to be given to how to enforce the negotiated conditions of DSD.

4. Case Study: Migrants

The concept of DSD can be productively explored through case studies. In this section, we explore an example related to migrant populations, the challenges they face concerning data, and how DSD can protect and help them flourish.Footnote 8

Migrant populations today account for an estimated 3.6% of the world’s population, a share that continues to grow as global crises increase (McAuliffe and Khadria, 2020). As of 2022, the COVID-19 pandemic, the war in Ukraine, and the floods in Pakistan had displaced millions of people. As the number of migrants around the world increases, so too does the number of technologies associated with their journeys. These tools generate and use huge amounts of data, often without the express consent of the data subjects (Cukier and Mayer-Schoenberger, 2013; Berens et al., 2016). Consider the following examples:

• In 2013, at a refugee camp in Malawi, the UNHCR launched the Biometric Identity Management System. This system holds “body-based” identifiers, including fingerprints, iris scans, and facial scans, which are used to accredit refugees and grant them access to services such as food rations, housing, and spending allowances (UNHCR, 2015). Additionally, UNHCR employs blockchain to link individuals with transaction data.

• The EUMigraTool uses data about migrants sourced from video content, web news, and social media text to generate modeling and forecasting tools that help manage migrants’ arrival and support needs in a new country (IT Flows, 2022). Through its algorithms, the tool can help predict migration flows and detect risks and tensions related to migration, allowing migration service organizations to prepare the appropriate amount of human and material resources needed when responding to a migration event.

• X2AI, a mental healthcare app, developed “Karim,” a chatbot to provide virtual psychotherapy (Romeo, 2016) to Syrians in the Zaatari refugee camp. The non-profit Refunite (2023) assists refugees in locating missing family members via mobile phone or computer, and currently has over 1 million registered users. And Mazzoli et al. (2020) demonstrate how geolocated Twitter data can help identify specific routes taken, as well as areas of resettlement, by migrants during migrant crises.

These are just a few examples that illustrate how data are both generated by migrant movements and also used to channel aid, resettle populations, and generally inform the policy response. Without a doubt, there are many potential benefits to such usage. Much of the generated data can be leveraged in the pursuit of evidence-based policymaking to alleviate the suffering and marginalization of this vulnerable population.

But as in virtually every other aspect of our digital era, data also pose a threat to migrant populations, notably by potentially infringing upon their rights and creating new power structures and inequalities (Bither and Ziebarth, 2020; European Migration Network, 2022). Migrants face power imbalances when it comes to agency over their data, choice in how their data are used, and control over who has access to their data (Verhulst et al., 2022). These asymmetries are often further exacerbated by a lack of digital literacy, limiting migrants’ ability to use digital tools to achieve self-determination. For example, with little to no agency over the use of their data, migrant populations are often exploited as test subjects for new technologies rather than benefiting from these rapid developments (Molnar, 2019; Martin et al., 2022). In addition, in many use cases, only a very weak framework governs how data are collected, stored, and generally used, leaving ample scope for abuse.

DSD may offer some solutions to these growing asymmetries. Applied responsibly, DSD can help address power and agency asymmetries between migrants and various stakeholders by giving migrants control over how their data are collected, stored, and used. It also creates avenues for negotiation, in which trusted intermediaries can advocate for migrants and for other stakeholders in shared ecosystems. DSD’s focus on the “self” directs discussions and frameworks toward the distinct experiences of different migrant populations, making DSD more effective at addressing the specific vulnerabilities and contextual factors each population faces today. DSD is also useful because it can engage migrants in the generation, collection, use, and reuse of data, opening avenues for their participation in the policy process and widening the range of insights brought to bear on data policy (Chafetz et al., 2022).

These are just some of the ways in which DSD can be useful in addressing a pressing global socio-economic problem. In the next section, we examine how these insights can be operationalized more generally, across sectors and domains.

4. Operationalizing Digital Self-determination

For DSD to have a social impact and help mitigate the asymmetries of our era, theory must be translated into practice; this is a critical step in moving from concept to concrete policy implementation. As always within the data ecology, the task is not simply to apply the concepts explored above blindly but to do so responsibly: in a manner that maximizes agency and balances the potential benefits of any policy or technical intervention against its possible harms.

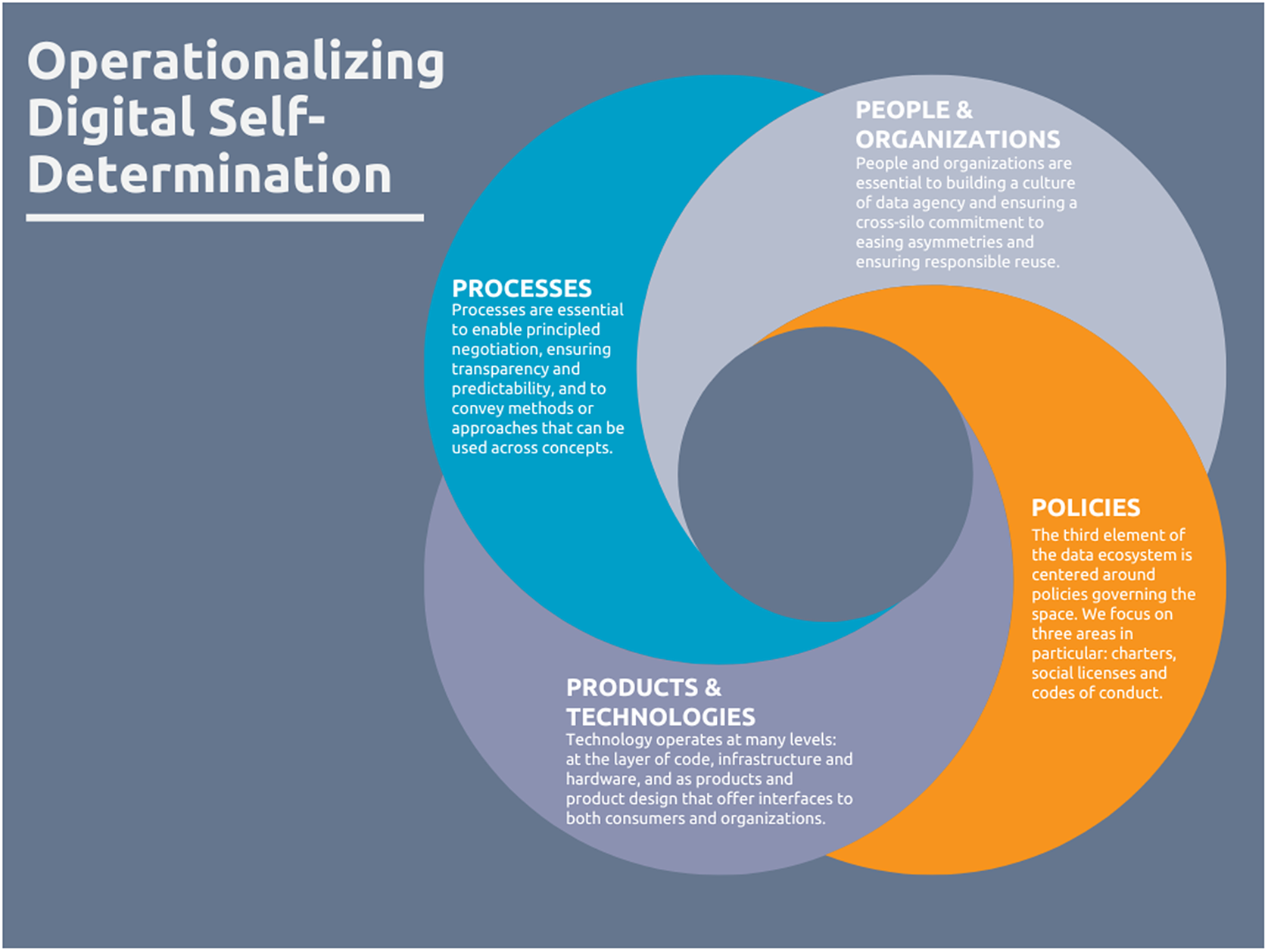

Responsible implementation of DSD can be explored through a four-pronged framework: processes, people and organizations, policies, and products and technologies (Figure 1).

Figure 1. Operationalizing digital self-determination: A four-pronged framework, Stefaan Verhulst.

4.1. Processes: Exploring the role of data assemblies

Processes are essential for enabling principled negotiation, ensuring transparency and predictability, and conveying methods or approaches that can be used across concepts. Key processes to consider in operationalizing DSD include citizen data commons (Kamruzzaman, 2023), citizen engagement programs (GIZ, 2021), public deliberations, and participatory impact assessments (Patel et al., 2021).

One process that holds particular potential is the data assembly: a citizens’ assembly or jury convened around the reuse of data. Data assemblies bring together policymakers, data practitioners, and key community members to co-design the conditions under which data can be reused, along with related issues (The GovLab, 2020). For example, in 2020 the GovLab launched The Data Assembly, a citizens’ assembly initiative based in New York City. Through this approach, participants came to understand how different stakeholders perceive the challenges and risks of data reuse, as well as the diverse value propositions data reuse promises each actor. A key lesson of the project was that data assemblies not only create space for public engagement but also offer avenues through which data practitioners can secure responsibly informed consent from the public, an essential step toward a more trusted and engaged data ecology (Zahuranec et al., 2021).

4.2. People and organizations

People and organizations also play a key role in operationalizing DSD. Among other functions, they are essential to building a culture of data agency and securing a cross-silo commitment to easing asymmetries and enabling responsible reuse. People and organizations are, in essence, the building blocks of responsible data use and reuse.

To operationalize DSD, two critical functions or roles for individuals and groups need to be highlighted.

4.2.1. Data stewards

Individuals or groups occupying the emerging function of data steward within organizations play an important role in facilitating DSD and responsible data reuse. A data steward is a leader or team “empowered to create public value by reusing their organization’s data (and data expertise); identifying opportunities for productive cross-sector collaboration and responding proactively to external requests for functional access to data, insights or expertise” (Verhulst et al., 2020). Their responsibilities include engaging and nurturing collaborations with internal and external stakeholders, promoting responsible practices, implementing governance processes, and communicating insights to broader audiences (Verhulst, 2021). Data stewards are key to operationalizing DSD because they can promote the adoption of processes and practices that empower data subjects to assert agency effectively.

4.2.2. Data intermediaries

If data stewards facilitate responsible data reuse, data intermediaries are emerging as potential solutions to the challenges posed by unbalanced collective bargaining. These individuals or teams mediate between the supply of and demand for data. For example, they may match a private sector organization that stores large (siloed) datasets with a non-profit organization that can apply those data toward the public good. In the context of DSD, data intermediaries can help data subjects maintain agency over their own data while also enabling a robust data-sharing ecosystem. Data intermediaries do pose challenges, notably the risk of higher transaction costs and of new power asymmetries. These risks can be mitigated by regulatory frameworks that keep data intermediaries neutral, fair, and secure (EUI, 2022).

4.3. Policies

The third element needed to operationalize DSD relates to governance and policies. We focus on three areas in particular: charters, social licenses, and codes of conduct.

4.3.1. Charter

DSD is, in essence, about balancing power and agency asymmetries. These imbalances center on agency, choice, and participation. A charter (or statement of intent), tailored to the types of actors, data collection, and data usage involved, may provide a policy-based solution to these imbalances. Existing data charters, such as the International Open Data Charter (2023) or the Inclusive Data Charter (Global Partnership for Sustainable Development Data, 2018), could serve as models for a DSD charter. Such a charter would be a unifying step to define, scope, and establish DSD for data actors. To be most effective, any DSD charter should take a life cycle, multi-stakeholder approach. It should also be context specific and human centered to ensure that the rights of data subjects are protected.

4.3.2. Social license

Social licenses are another policy tool that can help operationalize DSD. A social license, or social license to operate, captures multiple stakeholders’ acceptance of standard practices and procedures across sectors and industries (Kenton, 2021). Social licenses facilitate responsible data reuse by establishing standards of practice for a sector as a whole. They also empower individual actors to exert more proactive control over their data, which is critical to the adoption of DSD.

Broadly, there are three approaches to securing social licenses for data reuse: public engagement, data stewardship, and regulatory frameworks (Verhulst and Saxena, 2022). In the context of DSD, data stewards play an especially important role, given their position as facilitators of responsible data reuse.

4.3.3. Codes of conduct

Codes of conduct are another policy element that can help operationalize DSD. A code of conduct interprets a policy and lays the foundation for its implementation in a specific sector (Vermeulen, 2021). By bringing together diverse stakeholders, or “code owners,” a code of conduct can account for the many different interests, as well as the technical and logistical requirements, at play in the ecosystem. Moreover, a code of conduct can lead to the creation of a monitoring body responsible for ensuring compliance, reviewing and adapting procedures, and sanctioning members who break the code. Such a monitoring body not only helps implement the code dynamically and effectively but can also foster secure data sharing by acting as a third-party intermediary.

4.4. Products and technological tools

While the preceding elements focus largely on human or human-initiated processes, technology also plays an important role in operationalizing DSD. Technology operates at many levels: code, infrastructure and hardware, and products and product design that offer interfaces to both consumers and organizations. User-led experience design (Patel et al., 2021), informed by the needs of both consumers and wider beneficiaries of data, can help put DSD principles into practice by promoting digital access and action across stakeholders (Ponzanesi, 2019).

One technological product that can play a significant role is the trusted data space. A data space can be defined as an “organizational structure with technical and physical components that connects data users and data providers with sources of data” (DETEC and FDFA, 2022). A trusted data space gives stakeholders a degree of control over the space, and thus over their data, while still encouraging data sharing. This balance between agency and the right to reuse is a step toward DSD, as it aims to protect and empower data subjects without hampering open data.

5. Considerations and Reflections

In addition to outlining these four areas of operationalization, we wish to offer some additional considerations and observations on the operationalization of DSD—both in its current incipient state and in the more fleshed-out version that may yet emerge.

5.1. Life cycle approach

For that more fleshed-out, operational version to take shape, it will be essential to identify the opportunities for DSD that exist at each stage of the data life cycle. The data life cycle follows data from creation to transformation into an asset across five stages: collection, processing, sharing, analysis, and use (Young et al., 2021). Each stage could benefit from DSD. As seen above, the collection and sharing stages must overcome challenges posed by agency asymmetries, which the principle and practice of DSD can mitigate. Similarly, during processing, analysis, and use, opportunities for DSD emerge in the use (and reuse) of data and the sharing of insights.

By taking a data life cycle approach to DSD, stakeholders will also be well positioned to account for the variety of asymmetries, both of data and of power, that exist across different levels of the ecosystem. From the individual to the national level, each actor defines self-determination differently to address its own asymmetries. The life cycle approach allows stakeholders to reflect on and respond to the asymmetries present at each stage and so achieve a greater balance of power.

5.2. Symmetric relationships

As we have seen, DSD offers many benefits. One of the most important is its role in building symmetric relationships between stakeholders by rebalancing existing power and agency asymmetries, as outlined in Section 2.2. Symmetric relationships matter ethically, but they also offer the potential for greater long-term stability and help prevent the exploitation of weaker parties by stronger ones (Pfetsch, 2011).

In the context of a data ecosystem, symmetric relationships can help data subjects leverage their self-determination more effectively to exert greater control over how their data are used and reused. This is especially important for vulnerable minorities, who may also be disempowered in other ways. Future systems ought to be designed with these permanent minorities in mind, within the broader context of human rights, justice, and democracy (Mamdani, 2020).

5.3. DSD and disintermediation

Finally, DSD can help limit new power asymmetries by preventing the emergence of new chokepoints and loci of control in the form of new intermediaries. By returning power and agency to individual stakeholders, DSD minimizes the need for dominant intermediaries. One important consequence is a lower risk of new power imbalances, which so often stem from the disproportionate power of intermediaries.

Removing dominant intermediaries also simplifies the development of symmetric relationships, which are easier to achieve without middlemen. In this way, disintermediation can play a vital role in increasing data subjects’ agency and empowering them to exert control over their own data, while also promoting safe data sharing.

6. Further Research and Action

Self-determination is a historical concept with an impressive intellectual and juridical pedigree. It is imperative that this concept, like so many others, be updated for the digital era. The notion of DSD outlined here is preliminary: more exploratory and conjectural than final. It lays the foundations for a fuller exploration of DSD and of the vital roles of agency, asymmetries, and other power dynamics within the data ecology. Our hope is that this paper sets an agenda for further research into, action on, and ultimately operationalization of DSD across sectors and industries in our era of rapid and unrelenting datafication.

In conclusion, we offer some key questions and areas for further enquiry that may help shape a DSD research agenda. A non-exhaustive list includes the following.

6.1. Conceptual and operational

• How does DSD differ or align across sectors, geographies, communities, and contexts?

• What can be learned from other (self-)governance practices for the further development and enforcement of DSD?

• What are the conditions and drivers that can enable a principled implementation of DSD?

6.2. Processes

• What design principles should inform the creation and implementation of “data assemblies” or other deliberative processes?

• What can be learned from recent deliberative democracy practices and/or innovations in collective bargaining processes to operationalize DSD?

6.3. Policies

• What are the components of a possible charter or statement of intent regarding DSD? And who should be involved in drafting it?

• How can the concept of “social license” be deepened and operationalized across contexts and sectors?

• What can be learned from existing codes of conduct in developing a DSD code of conduct?

6.4. People and organizations

• How can “data stewards” who are responsible for defining and complying with DSD conditions be trained?

• What is the role of existing institutions and intermediaries (such as unions, community organizations, and others) in representing vulnerable groups when DSD is negotiated or determined?

• How can we ensure that new disintermediaries do not themselves become new chokepoints?

6.5. Products and tools

• What guidelines should be in place to steer transnational trustworthy data spaces that can provide legal certainty and accountability?

• How can products and tools be used to bridge gaps in digital literacy to empower DSD?

Funding statement

The GovLab received support from the Swiss Federal Government to hold two studios on migration and digital self-determination, and I had the great fortune of spending a month at the Rockefeller Foundation’s Bellagio Center where the paper was written.

Competing interest

The author declares none.

Author contribution

Writing—original draft: S.G.V.

Data availability statement

Data availability is not applicable to this article as no new data were created or analyzed in this study.
