Book contents

- Frontmatter

- Dedication

- Contents

- Preface

- Introduction

- Part I Inverse Problems

- 1 Bayesian Inverse Problems and Well-Posedness

- 2 The Linear-Gaussian Setting

- 3 Optimization Perspective

- 4 Gaussian Approximation

- 5 Monte Carlo Sampling and Importance Sampling

- 6 Markov Chain Monte Carlo

- Exercises for Part I

- Part II Data Assimilation

- 7 Filtering and Smoothing Problems and Well-Posedness

- 8 The Kalman Filter and Smoother

- 9 Optimization for Filtering and Smoothing: 3DVAR and 4DVAR

- 10 The Extended and Ensemble Kalman Filters

- 11 Particle Filter

- 12 Optimal Particle Filter

- Exercises for Part II

- Part III Kalman Inversion

- 13 Blending Inverse Problems and Data Assimilation

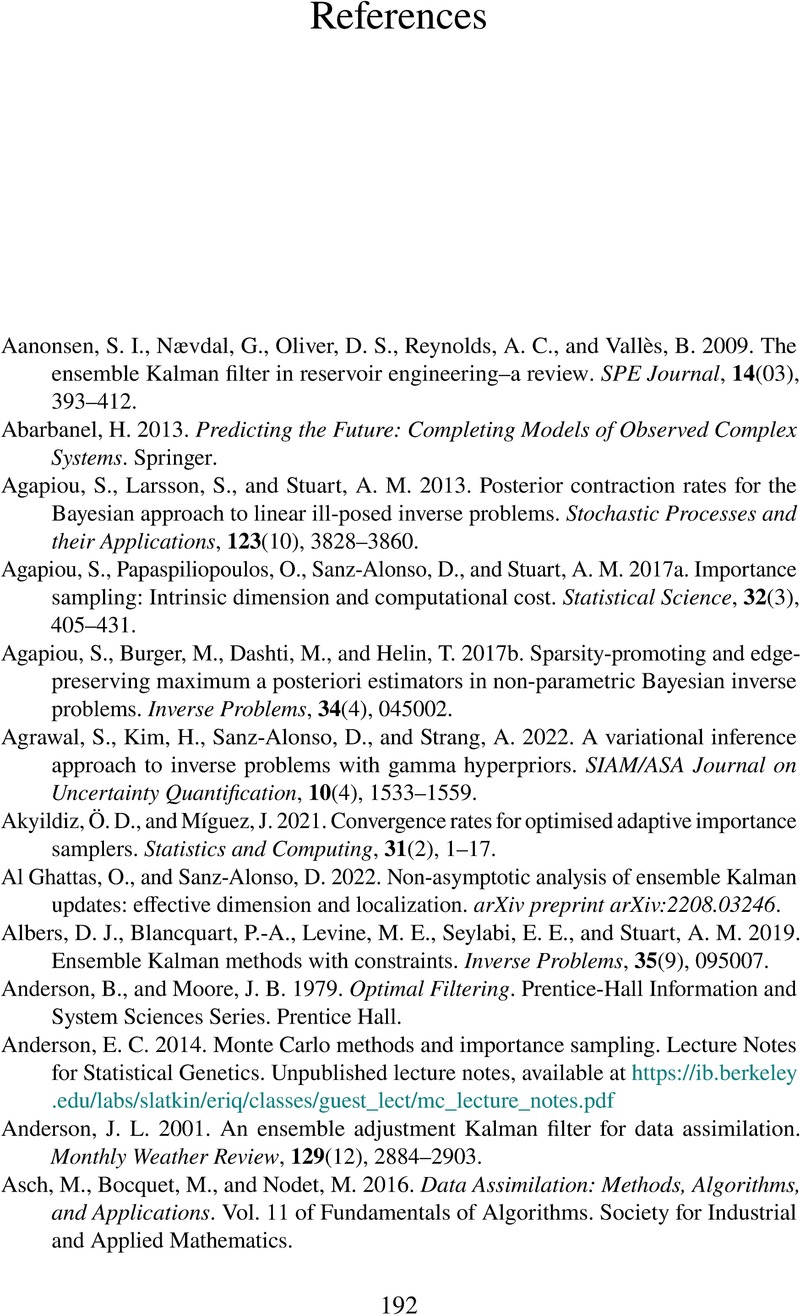

- References

- Index

References

Published online by Cambridge University Press: 27 July 2023

- Type: Chapter

- Information: Inverse Problems and Data Assimilation, pp. 192-204. Publisher: Cambridge University Press. Print publication year: 2023