11.1 Introduction

As the quality of AIFootnote 1 improves, it is increasingly applied to support decision-making processes, including in public administration.Footnote 2 This has many potential advantages: faster response time, better cost-effectiveness, more consistency across decisions, and so forth. At the same time, implementing AI in public administration also raises a number of concerns: bias in the decision-making process, lack of transparency, and elimination of human discretion, among others.Footnote 3 Sometimes, these concerns are raised to a level of abstraction that obscures the legal remedies that exist to curb those fears.Footnote 4 Such abstract concerns, when not coupled with concrete remedies, may lead to paralysis and thereby unduly delay the development of efficient systems because of an overly conservative approach to the implementation of ADM. This conservative approach may hinder the development of even safer systems that would come with wider and diverse adoption. The fears surrounding the adoption of ADM systems, while varied, can be broadly grouped into three categories: the argument of control, the argument of dignity, and the argument of contamination.Footnote 5

The first fear is the loss of control over systems and processes and thus of a clear link to responsibility when decisions are taken.Footnote 6 In a discretionary system, someone must be held responsible for those decisions and be able to give reasons for them. There is a legitimate fear that a black box system used to produce a decision, even when used in coordination with a human counterpart or oversight, creates a system that lacks responsibility. This is the fear of the rubber stamp: that, even if a human is in the loop, the deference given to the machine is so much that it creates a vacancy of accountability for the decision.Footnote 7

The second fear of ADM systems is that they may lead to a loss of human dignity.Footnote 8 If legal processes are replaced with algorithms, there is a fear that humans will be reduced to mere ‘cogs in the machine’.Footnote 9 Rather than being in a relationship with other humans to which you can explain your situation, you will be reduced to a digital representation of a sum of data. Since machines cannot reproduce the whole context of the human and social world, but only represent specific limited data about a human (say age, marital status, residence, income, etc.), the machine cannot understand you. Removing this ability to understand and to communicate freely with another human and the autonomy which this represents can lead to alienation and a loss of human dignity.Footnote 10

Third, there is the well-documented fear of ‘bad’ data being used to make decisions that are false and discriminatory.Footnote 11 This fear is related to the ideal that decision-making in public administration (among others) should be neutral, fair, and based on accurate and correct factual information.Footnote 12 If ADM is implemented in a flawed data environment, it could lead to systematic deficiencies such as false profiling or self-reinforcing feedback loops that accentuate irrelevant features that can lead to a significant breach of law (particularly equality law) if not just societal norms.Footnote 13

While we accept that these fears are not unsubstantiated, they need not prevent existing legal remedies from being acknowledged and used. Legal remedies should be used rather than the more cursory reach towards general guidelines or grand and ambiguous ethical press releases, that are not binding, not likely to be followed, and do not provide much concrete guidance to help solve the real problems they hope to address. In order to gain the advantages of AI-supported decision-making,Footnote 14 these concerns must be met by indicating how AI can be implemented in public administration without undermining the qualities associated with contemporary administrative procedures. We contend that this can be done by focusing on how ADM can be introduced in such a way that it meets the requirement of explanation as set out in administrative law at the standard calibrated by what we expect legally out of human explanation.Footnote 15 In contradistinction to much recent literature, which focuses on the right to an explanation solely under the GDPR,Footnote 16 we add and consider the more well-established traditions in administrative law. With a starting point in Danish law, we draw comparisons to other jurisdictions in Europe to show the common understanding in administrative law across these jurisdictions with regard to assuring administrative decisions are explained in terms of the legal reasoning on which the decision is based.

The chapter examines the explanation requirement by first outlining how the explanation should be understood as a legal explanation rather than a causal explanation (Section 11.2). We dismiss the idea that the legal requirement to explain an ADM-supported decision can be met by or necessarily implies mathematical transparency.Footnote 17 To illustrate our point about legal versus causal explanations, we use a scenario based on real-world casework.Footnote 18 We consider that our critique concerns mainly a small set of decisions that focus on legal decision-making: decisions that are based on written preparation and past case retrieval. These are areas where a large number of similar cases are dealt with and where previous decision-making practice plays an important role in the decision-making process (e.g., land use cases, consumer complaint cases, competition law cases, procurement complaint cases, applications for certain benefits, etc.). This scenario concerns an administrative decision under the Danish law requiring municipalities to provide compensation for loss of earnings to a parent (we will refer to them as Parent A) who provides care to a child with permanently reduced physical or mental functioning (in particular, whether an illness would be considered ‘serious, chronic or long-term’). The relevant legislative text reads:

Persons maintaining a child under 18 in the home whose physical or mental function is substantially and permanently impaired, or who is suffering from serious, chronic or long-term illness [shall receive compensation]. Compensation shall be subject to the condition that the child is cared for at home as a necessary consequence of the impaired function, and that it is most expedient for the mother or father to care for the child.Footnote 19

We will refer to the example of Parent A to explore explanation in its causal and legal senses throughout.

In Section 11.3, we look at what the explanation requirement means legally. We compare various national (Denmark, Germany, France, and the UK) and regional legal systems (EU law and the European Convention on Human Rights) to show the well-established, human standard of explanation. Given the wide range of legal approaches and the firm foundation of the duty to give reasons, we argue that the requirements attached to the existing standards of explanation are well-tested, adequate, and sufficient to protect the underlying values behind them. Moreover, the requirement enjoys democratic support in those jurisdictions where it is derived from enacted legislation. In our view, ADM can and should be held accountable under those existing legal standards, and we consider it unnecessary for this standard to be changed or supplemented by other standards or requirements that apply to ADM alone and not to all decision makers, whether human or machine. ADM, in our view, should meet the same minimum explanation threshold that applies to human decision-making. Rather than introducing new requirements designed for ADM, a more dynamic communicative process aimed at citizen engagement with the algorithmic processes employed by the administrative agency in question will be, in our view, more suitable to protecting against the ills of using ADM technology in public administration. ADM in public administration is a phenomenon that comes in a wide range of formats: from automatic information processing used as one part of basic administrative procedures, through semi-automated decision-making, to fully automated decision-making that uses AI to link information about facts to legal rules via machine learning.Footnote 20 While in theory a full spectrum of approaches is possible, and fully automated models have attracted a lot of attention,Footnote 21 in practice most forms of ADM are a type of hybrid system.
As a prototype of what a hybrid process that would protect against many of the fears associated with ADM might look like, we introduce a novel solution, that we, for lack of a better term, call the ‘administrative Turing test’ (Section 11.4). This test could be used to continually validate and strengthen AI-supported decision-making. As the name indicates, it relies on comparing solely human and algorithmic decisions, and only allows the latter when a human cannot immediately tell the difference between the two. The administrative Turing test is an instrument to ensure that the existing (human) explanation requirement is met in practice. Using this test in ADM systems aims at ensuring the continuous quality of explanations in ADM and advancing what some research suggests is the best way to use AI for legal purposes – namely, in collaboration with human intelligence.Footnote 22

11.2 Explanation: Causal versus Legal

As mentioned previously, we focus on legal explanation – that is, a duty to give reasons/justifications for a legal decision. This differs from causal explainability, which speaks to an ability to explain the inner workings of that system beyond legal justification. Much of the literature on black-box AI has focused on the perceived need to open up the black box.Footnote 23 We can understand that this may be because it is taken for granted that a human is by default explainable, where algorithms in their many forms are not, at least in the same way. We propose, perhaps counter-intuitively, that even if we take the blackest of boxes, it is the legal requirement of explanation in the form of sufficient reasons that matters for the protection of citizens. It is, in our view, the ability to challenge, appeal, and assess decisions against their legal basis that ensures the protection of citizens. It is not a feature of being able to look into the minutiae of the inner workings of a human mind (its neuronal mechanisms) or a machine (its mathematical formulas). The general call for explainability in AI – often conflated with complete transparency – is not required for the contestation of the decision by a citizen. This does not mean that we think that the quest for transparent ADM should be abandoned. On the contrary, we consider transparency to be desirable, but we see this as a broader and more general issue that links more to overall trust in AI technology as a wholeFootnote 24 rather than something that is necessary to meet the explanation requirement in administrative law. The requirement of explanation for administrative decisions can be found, in one guise or another, in most legal systems. In Europe, it is often referred to as the ‘duty to give reasons’ – that is, a positive obligation on administrative agencies to provide an explanation (‘begrundelse’ in Danish, ‘Begründung’ in German, and ‘motivation’ in French) for their decisions.
The explanation is closely linked to the right to legal remedies. Some research indicates that its emergence throughout history has been driven by the need to enable the citizen affected by an administrative decision to effectively challenge it before a court of law.Footnote 25 This, in turn, required the provision of sufficient reasons for the decision in question: both towards the citizen, who as the immediate recipient should be given a chance to understand the main reasoning behind the decision, and the judges, who will be charged with examining the legality of the decision in the event of a legal challenge. The duty to give reasons has today become a self-standing legal requirement, serving a multitude of other functions beyond ensuring effective legal remedies, such as ensuring better clarification, consistency, and documentation of the decisions, self-control of the decision-makers, internal and external control of the administration as a whole, as well as general democratic acceptance and transparency.Footnote 26

The requirement to provide an explanation should be understood in terms of the law that regulates the administrative body’s decision in the case before it. It is not a requirement that any kind of explanation must or should be given but rather a specific kind of explanation. This observation has a bearing on the kind of explanation that may be required for administrative decision-making relying on algorithmic information analysis as part of the process towards reaching a decision. Take, for instance, our example of Parent A. An administrative body issues a decision to Parent A in the form of a rejection explaining that the illness the child suffers from does not qualify as serious within the meaning of the statute. The constituents of this explanation would generally cover a reference to the child’s disease and the qualifying components of the category of serious illness being applied. This could be, for example, a checklist system of symptoms or a reference to an authoritative list of formal diagnoses that qualify combined with an explanation of the differences between the applicant disease and those categorised as applicable under the statute. In general, the decision to reject the application for compensation of lost income would explain the legislative grounds on which the decision rests, the salient facts of the case, and the most important connection points between them (i.e., the discretionary or interpretive elements that are attributed weight in the decision-making process).Footnote 27 It is against this background that the threshold for what an explanation requires should be understood.

In a human system, at no point would the administrative body be required to describe the neurological activity of the caseworkers that have been involved in making the decision in the case. Nor would they be required to provide a psychological profile and biography of the administrator involved in making the decision, giving a history of the vetting and training of the individuals involved, their educational backgrounds, or other such information, to account for all the inputs that may have been explicitly or implicitly used to consider the application. When the same process involves an ADM system, must the explanation open up the opaqueness of its mathematical weighting? Must it provide a technical profile of all the inputs into the system? We think not. In the case of a hybrid system with a human in the loop, must the administrators set out – in detail – the electronic circuits that connect the computer keyboard to the computer hard drive and the computer code behind the text-processing program used? Must it describe the interaction between the neurological activity of the caseworker’s brain and the manipulation of keyboard tabs leading to the text being printed out, first on a screen, then on paper, and finally sent to the citizen as an explanation of how the decision was made? Again, we think not.

The provided examples illustrate the point that causal explanation can be both insufficient and superfluous. Even though it may be empirically fully accurate, it does not necessarily meet the requirement of legal explanation. It gives an explanation – but it likely does not give the citizen the explanation he or she is looking for. The problem, more precisely, is that the explanation provided by causality does not, in itself, normatively connect the decision to its legal basis. It is, in other words, not possible to see the legal reasoning leading from the facts of the case and the law to the legal decision, unless, of course, such legal reasoning is explicitly coded in the algorithm. The reasons that make information about the neurological processes inside the brains of decision-makers irrelevant to the legal explanation requirement are the same that can make information about the algorithmic processes in an administrative support system similarly irrelevant. This is not as controversial a position as it might seem at first glance.

Retaining the existing human standard for explanation, rather than introducing a new standard devised specifically for AI-supported decision-making, has the extra advantage that the issuing administrative agency remains fully responsible for the decision no matter how it has been produced. From this also follows that the administrative agency issuing the decision can be queried about the decision in ordinary language. This then assures a focus on the rationale behind the explanation being respected, even if the decision has been arrived at through some algorithmic calculation that is not transparent. If the analogy is apt in comparing algorithmic processes to human neurology or psychological history, then requiring algorithmic transparency in legal decisions that rely on AI-supported decision-making would fail to address the explanation requirement at the right level. Much in line with Rahwan et al., who argue for a new field of research – the study of machine behaviour akin to human behavioural researchFootnote 28 – we argue that the inner workings of an algorithm are not what is in need of explanation but, rather, the human interaction with the output of the algorithm and the biases that lie in the inputs. What is needed is not that algorithms should be made more transparent, but that the standard for intelligibility should remain undiminished.

11.3 Explanation: The Legal Standard

A legal standard for the explanation of administrative decision-making exists across all main jurisdictions in Europe. We found, when looking at different national jurisdictions (Germany, France, Denmark, and the UK) and regional frameworks (EU law and European Human Rights law), that explanation requirements differ slightly among them but still hold as a general principle that never requires the kind of full transparency advocated for. While limited in scope, the law we investigated includes a variety of different legal cultures across Europe at different stages of developing digitalised administrations (i.e., both front-runners and late-comers in that process). They also diverge on how they address explanation: in the form of a general duty in administrative law (Denmark and Germany) or a patchwork of specific legislation and procedural safeguards, partly developed in legal practice (France and the UK). Common for all jurisdictions is that the legal requirement put on administrative agencies to provide reasons for their decisions has a threshold level (minimum requirement) that is robust enough to ensure that if black box technology is used as part of the decision-making process, recipients will not be any worse off than if decisions were made by humans only. In the following discussion, we will give a brief overview of how the explanation requirement is set out in various jurisdictions.Footnote 29

In Denmark, the Danish Act on Public Administration contains a section on explanation (§§22-24).Footnote 30 In general, the explanation can be said to entail that the citizen to whom the decision is directed must be given sufficient information about the grounds of the decision. This means that the explanation must fully cover the decision and not just explain parts of the decision. The explanation must also be truthful and in that sense correctly set forth the grounds that support the decision. Explanations may be limited to stating that some factual requirement in the case is not fulfilled. For example, in our Parent A example, perhaps a certain age has not been reached, a doctor’s certificate is not provided, or a spouse’s acceptance has not been delivered in the correct form. Explanations may also take the form of standard formulations that are used frequently in the same kind of cases, but the law always requires a certain level of concreteness in the explanation that is linked to the specific circumstances of the case and the decision being made. It does not seem to be possible to formulate any specific standards with regard to how deep or broad an explanation should be in order to fulfil the minimum requirement under the law. The requirement is generally interpreted as meaning explanations should reflect the most important elements of the case relevant to the decision. Similarly, in Germany, the general requirement to explain administrative decisions can be found in the Administrative Procedural Code of 1976.Footnote 31 Generally speaking, every written (or electronic) decision requires an explanation or a ‘statement of grounds’; it should outline the essential factual and legal reasons that gave rise to the decision.

Where there was not a specific requirement for explanation,Footnote 32 we found – while perhaps missing the overarching general administrative duty – a duty to give reasons as a procedural safeguard. For example, French constitutional law does not by itself impose a general duty on administrative bodies to explain their decisions. Beyond sanctions of a punitive character, administrative decisions need to be reasoned, as provided by a 1979 statuteFootnote 33 and the 2016 Code des Relations entre le Public et l’Administration (CRPA). The CRPA requires a written explanation that includes an account of the legal and factual considerations underlying the decision.Footnote 34 The rationale behind the explainability requirement is to strengthen transparency and trust in the administration, and to allow for its review and challenge before a court of law.Footnote 35 Similarly, in the UK, a recent study found, unlike many statements to the contrary and even without a general duty, in most cases, ‘the administrative decision-maker being challenged [regarding a decision] was under a specific statutory duty to compile and disclose a specific statement of reasons for its decision’.Footnote 36 This research is echoed by Jennifer Cobbe, who found that ‘the more serious the decision and its effects, the greater the need to give reasons for it’.Footnote 37

In the UK as well as the countries discussed above, there are ample legislative safeguards that provide specific calls for reason giving. What is normally at stake is the adequacy of reasons that are given. As Marion Oswald has pointed out, the case law in the UK has a significant history in spelling out what is required when giving reasons for a decision.Footnote 38 As she recounts from Dover District Council, ‘the content of [the duty to give reasons] should not in principle turn on differences in the procedures by which it is arrived at’.Footnote 39 What is paramount in the UK conception is not a differentiation between man and machine but one that stands by enshrined and tested principles of being able to mount a meaningful appeal: ‘administrative law principles governing the way that state actors take decisions via human decision-makers, combined with judicial review actions, evidential processes and the adversarial legal system, are designed to counter’ any ambiguity in the true reasons behind a decision.Footnote 40

The explanation requirement in national law is echoed and further hardened in the regional approaches, where for instance Art. 41 of the Charter of Fundamental Rights of the European Union (CFR) from 2000 provides for a right to good administration, where all unilateral acts that generate legal consequences – and qualify for judicial review under Art. 263 TFEU – require an explanation.Footnote 41 It must ‘contain the considerations of fact and law which determined the decision’.Footnote 42 Perhaps the most glaring difference that would arise between automated and non-automated scenarios is the direct application of Art. 22 of the General Data Protection Regulation (GDPR), which applies specifically to ‘Automated individual decision making, including profiling.’ Art. 22 stipulates that a data subject ‘shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her’,Footnote 43 unless it is authorised by law with ‘sufficient safeguards’ in place,Footnote 44 or by ‘direct consent.’Footnote 45 These sufficient safeguards range from transparency in the input phase (informing and getting consent) to the output-explanation phase (review of the decision itself).Footnote 46 The GDPR envisages this output phase in the form of external auditing through Data Protection Authorities (DPAs), which have significant downsides in terms of effectiveness and efficiency.Footnote 47 Compared to this, we find the explanation standard in administrative law to be much more robust, for it holds administrative agencies to a standard for intelligibility irrespective of whether they use ADM or not. Furthermore, under administrative law, the principle of ‘the greater interference on the recipient’s life a decision has, the greater the need to give reasons in justification of the decision’ applies.
Furthermore, the greater the discretionary power of the decision maker, the more thorough the explanation has to be.Footnote 48 Focusing on the process by which a decision is made rather than the gravity of its consequences seems misplaced. By holding on to these principles, the incentive should be to develop ADM technology that can be used under this standard, rather than inventing new standards that fit existing technologies.Footnote 49

ADM in public administration does not and should not alter existing explanation requirements. The explanation is not different now that it is algorithmic. The duty of explanation, although constructed differently in different jurisdictions, provides a robust foundation across Europe for ensuring that decision-making in public administration remains comprehensible and challengeable, even when ADM is applied. What remains is asking how ADM could be integrated into the decision-making procedure in the organisation of a public authority to ensure this standard.

11.4 Ensuring Explanation through Hybrid Systems

Introducing a machine-learning algorithm in public administration and using it to produce drafts of decisions rather than final decisions to be issued immediately to citizens, we suggest, would be a useful first step. In this final section of the chapter, we propose an idea that could be developed into a proof of concept for how ADM could be implemented in public authorities to support decision-making.

In contemporary public administration, much drafting takes place using templates. ADM could be coupled to such templates in various ways. Different templates require different kinds of information. Such information could be collected and inserted into the template automatically, as choices are made by a human about what kind of information should be filled into the template. Another way is to rely on automatic legal information retrieval. Human administrators often look to previous decisions of the same kind as inspiration for deciding new cases. Such processes can be labour intensive, and caseworkers within the same public authority may not all have the same skills in finding a relevant former decision. Natural Language Processing technology may be applied to automatically retrieve relevant former decisions, if the authority’s decisions are available in electronic form in a database. This requires, of course, that the data the algorithm is learning from is sufficiently large and that the decisions in the database are generally considered to still be relevant ‘precedent’Footnote 50 for new decisions. Algorithmically learning from historical cases and reproducing their language in new cases by connecting legal outcomes to given fact descriptions is not far from what human civil servants would do anyway: whenever a caseworker is attending to a new case, he or she will seek out former cases of the same kind to use as a compass to indicate how the new case should be decided.
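The retrieval step described above can be illustrated with a minimal sketch. It is not a description of any system used by a public authority: the function names, the case identifiers, and the short decision texts are all hypothetical, and plain word-count cosine similarity stands in for whatever Natural Language Processing technique an authority might actually choose.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case a text and split it into simple word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[term] * b[term] for term in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_similar(new_case, past_decisions, top_k=2):
    """Return the ids of the top_k past decisions most similar to the new case."""
    query = Counter(tokenize(new_case))
    scored = [
        (cosine_similarity(query, Counter(tokenize(text))), case_id)
        for case_id, text in past_decisions.items()
    ]
    # Highest similarity first; ties broken by case id for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [case_id for _, case_id in scored[:top_k]]


# Hypothetical database of former decisions (invented for illustration).
past_decisions = {
    "case-001": "compensation for loss of earnings granted, child with chronic illness cared for at home",
    "case-002": "building permit refused, land use plan does not allow construction",
    "case-003": "compensation refused, illness of the child not considered serious or long-term",
}
new_case = "parent applies for compensation for loss of earnings, child has long-term illness"
print(retrieve_similar(new_case, past_decisions))
```

In this toy example the unrelated land use decision shares no vocabulary with the new application and is therefore not retrieved. A real system would need a far larger database and a check that the retrieved decisions still count as relevant precedent.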

One important difference between a human and an algorithm is that humans have the ability to respond more organically to past cases because they have a broader horizon of understanding: They are capable of contextualizing the understanding of their task to a much richer extent than algorithms, and humans can therefore adjust their decisions to a broader spectrum of factors – including ones that are hidden from the explicit legislation and case law that applies to the case at hand.Footnote 51 Resource allocation, policy signals, and social and economic change are examples of this. This human contextualisation of legal text precisely explains why new practices sometimes develop under the same law.Footnote 52 Algorithms, on the other hand, operate without such context and can only relate to explicit texts. Hence they cannot evolve in the same way. Paradoxically, then, having humans in the legal loop serves the purpose of relativizing strict rule-following by allowing sensitivity to context.

This limited contextualization of algorithmic ‘reasoning’ will create a problem if all new decisions are drafted on the basis of a machine learning algorithm that reproduces the past, and if those drafts are only subjected to minor or no changes by its human collaborator.Footnote 53 Once the initial learning stage is finalized and the algorithm is used in output mode to produce decision drafts, then new decisions will be produced in part by the algorithm. One of two different situations may now occur: One, the new decisions are fed back into the machine-learning stage. In this case, a feedback loop is created in which the algorithm is fed its own decisions.Footnote 54 Or, two, the machine-learning stage is blocked after the initial training phase. In this case, every new decision is based on what the algorithm picked up from the original training set, and the output from the algorithm will remain statically linked to this increasingly old data set. Neither of these options is, in our opinion, optimal for maintaining an up-to-date algorithmic support system.

There are good reasons to think that a machine learning algorithm will only keep performing well in changing contexts (which in this case is measured by the algorithm’s ability to issue usable drafts of a good legal quality) if it is constantly maintained by fresh input which reflects those changing contexts. This can be done in a number of different ways, depending on how the algorithmic support system is implemented in the overall organization of the administrative body and its procedures for issuing decisions. As mentioned previously, our focus is on models that engage AI and human collaboration. We propose two such models for organizing algorithmic support in an administrative system that issues decisions. We think these models are particularly helpful because they address the need for intelligible explanations that meet the legal standard outlined above.

In our first proposed model, the caseload in an administrative field that is supported by ADM assistance is randomly split into two loads, such that one load is fed to an algorithm for drafting and another load is fed to a human team, also for drafting. Drafts from both algorithms and humans are subsequently sent to a senior civil servant (say a head of office), who finalizes and signs off on the decisions. All final decisions are pooled and used to regularly update the algorithm used.
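The workflow of this first model can be sketched in a few lines of code. The sketch is only illustrative and rests on several assumptions that are not part of the proposal itself: an even split between the two tracks, and placeholder functions (`draft_by_algorithm`, `draft_by_human`, `finalize`) standing in for the ADM system, the human drafting team, and the senior civil servant.

```python
import random


def split_caseload(case_ids, algorithm_share=0.5, seed=None):
    """Randomly split cases into an algorithm track and a human-only track."""
    rng = random.Random(seed)
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * algorithm_share)
    return shuffled[:cut], shuffled[cut:]


def run_cycle(case_ids, draft_by_algorithm, draft_by_human, finalize,
              training_pool, seed=None):
    """Draft on both tracks, have every draft finalized, and pool the results."""
    algorithm_cases, human_cases = split_caseload(case_ids, seed=seed)
    drafts = [(case, draft_by_algorithm(case)) for case in algorithm_cases]
    drafts += [(case, draft_by_human(case)) for case in human_cases]
    # Every draft, whatever its origin, passes through the same human sign-off.
    final_decisions = [(case, finalize(case, draft)) for case, draft in drafts]
    # All final decisions are pooled for the next update of the algorithm.
    training_pool.extend(final_decisions)
    return final_decisions


# Hypothetical stand-ins for the ADM system, the human team, and the reviewer.
training_pool = []
cases = [f"case-{n:03d}" for n in range(1, 11)]
decisions = run_cycle(
    cases,
    draft_by_algorithm=lambda case: f"algorithmic draft for {case}",
    draft_by_human=lambda case: f"human draft for {case}",
    finalize=lambda case, draft: draft + " (reviewed and signed)",
    training_pool=training_pool,
    seed=42,
)
print(len(decisions), "final decisions pooled for the next update")
```

The point of the design is visible in `run_cycle`: the pool used for retraining never contains an unreviewed algorithmic draft, and a share of it always originates from the human-only track.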

By having an experienced civil servant interact with algorithmic drafting in this way, and feeding decisions, all checked by human intelligence, back into the machine-learning process, the algorithm will be kept fresh with new original decisions, a percentage of which will be written by humans from scratch. The effect of splitting the caseload and leaving one part to go through a ‘human only’ track is that the previously mentioned sensitivity to broader contextualization is fed back into the algorithm and hence allows a development in the case law that could otherwise not happen. To use our Parent A example as an illustration: Over time, it might be that new diseases and new forms of handicaps are identified or recognized as falling under the legislative provision because they are being diagnosed differently. If every new decision is produced by an ADM system that is not updated with new learning on cases that reflect this kind of change, then the system cannot evolve to take the renewed diagnostic practices into account. To avoid this ‘freezing of time’, a hybrid system in which the ADM is constantly being surveyed and challenged is necessary. Furthermore, if drafting is kept anonymous, and all final decisions are signed off by a human, recipients of decisions (like our Parent A) may not know how their decision was produced. Still, the explanation requirement assures that recipients can at any time challenge the decision, by inquiring further into the legal justification.Footnote 55 We think this way of introducing algorithmic support for administrative decisions could advance many of the efficiency and consistency (equality) gains sought by introducing algorithmic support systems, while preserving the legal standard for explanation.

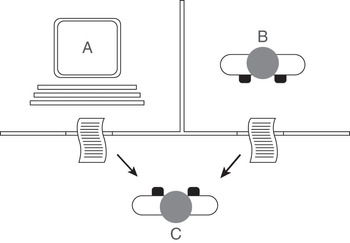

An alternative method – our second proposed model – is to build into the administrative system itself a kind of continuous administrative Turing test. Alan Turing, in a paper written in 1950,Footnote 56 sought to identify a test for artificial intelligence. The test he devised consisted of a setup in which (roughly explained) two computers were installed in separate rooms. One computer was operated by a person; the other was operated by an algorithmic system (a machine). In a third room, a human ‘judge’ was sitting with a third computer. The judge would type questions on his computer, and the questions would then be sent to both the human and the machine in the two other rooms for them to read. They would then in turn write replies and send those back to the judge. If the judge could not identify which answers came from the person and which came from the machine, then the machine would be said to have shown the ability to think. A model of Turing’s proposed experimental setup is seen in Figure 11.1:

Akin to this, an administrative body could implement algorithmic decision support in a way that would imitate the setup described by Turing. This could be done by giving the same case to both a human administrator and an ADM system. Both the human and the ADM would produce a decision draft for that case. Both drafts would be sent to a human judge (i.e., a senior civil servant who finalizes and signs off on the decision). In this setup, the human judge would not know which draft came from the ADM and which came from the human,Footnote 57 but would proceed to finalize the decision based on which draft was most convincing for deciding the case and providing a satisfactory explanation to the citizen. This final decision would then be fed back to the data set from which the ADM system learns.
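The blind-review step of this second model can be sketched in a similar way. Again, this is a hedged illustration under stated assumptions rather than a prescribed design: `BlindReview`, `prepare_blind_review`, `finalize`, and `adm_selection_rate` are hypothetical names, and the audit log is an assumed addition that simply records, out of the judge's sight, which draft was chosen.

```python
import random
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class BlindReview:
    case_id: str
    drafts: Tuple[str, str]                        # what the human judge sees
    sources: Tuple[str, str] = field(repr=False)   # hidden origin labels, for audit only


def prepare_blind_review(case_id: str, human_draft: str, adm_draft: str,
                         rng: random.Random) -> BlindReview:
    """Shuffle the two drafts so their order reveals nothing about their origin."""
    labelled = [("human", human_draft), ("adm", adm_draft)]
    rng.shuffle(labelled)
    sources = (labelled[0][0], labelled[1][0])
    drafts = (labelled[0][1], labelled[1][1])
    return BlindReview(case_id, drafts, sources)


def finalize(review: BlindReview, chosen_index: int, final_text: str,
             training_pool: List[Tuple[str, str]],
             audit_log: List[Tuple[str, str]]) -> None:
    """Record the judge's final decision and, separately, the chosen draft's origin."""
    # The finalized decision is fed back to the data the ADM system learns from.
    training_pool.append((review.case_id, final_text))
    # The origin of the preferred draft is logged for later review only.
    audit_log.append((review.case_id, review.sources[chosen_index]))


def adm_selection_rate(audit_log: List[Tuple[str, str]]) -> float:
    """Share of cases in which the judge preferred the ADM draft."""
    if not audit_log:
        return 0.0
    return sum(1 for _, source in audit_log if source == "adm") / len(audit_log)
```

A selection rate close to 1.0 over a long period would not by itself show rubber-stamping, but under these assumptions it gives a reviewer a concrete figure to examine when comparing human and machine drafts.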

The two methods described previously are both hybrid models and can be used either alone or in combination to assure that ADM models are implemented in a way that is both productive (because drafting is usually a very time-consuming process) and safe (even if not mathematically transparent), because there is a human overseeing the final product and continuous human feedback to the data set from which the ADM system learns. Moreover, using this hybrid approach helps overcome the legal challenges that a fully automated system would face from both EU law (GDPR) and some domestic legislation.

11.5 Conclusion

Relying on the above models keeps the much-sought-after ‘human in the loop’ and does so in a way that is systematic and meaningful because our proposed models take a specific form: they are built around the idea of continuous human-AI collaboration in producing explainable decisions. Relying on these models makes it possible to develop ADM systems that can be introduced to enhance effectiveness and consistency (equality) without diminishing the quality of explanation. The advantage of our model is that it allows ADM to be continuously developed and fitted to the legal environment in which it is supposed to serve. Furthermore, such an approach may have further advantages. Using ADM for legal information retrieval allows for analysis across large numbers of decisions that have been handed down across time. This could grow into a means for assuring better detection of hidden biases and other structural deficiencies that would otherwise not be discoverable. This approach may help allay the fears of the black box.

In terms of control and responsibility, our proposed administrative Turing test allows for a greater scope of review of rubber stamp occurrences, because a human arbiter is able to compare purely human and purely machine-produced drafts. Therefore, the model may also help in addressing the concern raised about ‘retrospective justifications’.Footnote 58 Because decisions in the setup we propose are produced in collaboration between ADM and humans, the decisions issued are likely to be more authentic than either pure ADM or pure human decision-making, since the use of ADM allows for a more efficient and comprehensive inclusion of existing decision-making practice as input to new decision-making through automated information retrieval and recommendation. With reference to human dignity, our proposed model retains human intelligibility as the standard for decision-making. The proposed administrative Turing model also continually adds new information into the system, and undergoes a level of supervision that can protect against failures that are frequently associated with ADM systems. Applying the test developed in this chapter to develop a proof of concept for the implementation of ADM in public administration today is the most efficient way of overcoming the weaknesses of purely human decision-making tomorrow.

ADM does not solve the inequalities built into our societal and political institutions, nor is it their original cause. There are real questions to be asked of our systems, and we would rather not bury those questions with false enemies. To rectify those inequalities, we must be critical of our human failings and not hold hostage the principles we have developed to counter injustice. If those laws are deficient, it is not the fault of a new technology. We are, however, aware that this technology can not only reproduce but even heighten injustice if it is used thoughtlessly. But we would also like to flag that the technology offers an opportunity to bring legal commitments like the duty of explanation up to a standard that is demanded by every occurrence of injustice: a human-based standard.