1 Introduction

One of the core challenges in creating a reliable radiocarbon (14C) calibration curve is ensuring that the statistical approach used for the curve’s production is able to accurately synthesize the various datasets which make up the curve, specifically recognizing and incorporating their diverse and unique aspects in doing so. This is particularly critical in light of the recent increase in the availability of high-precision radiocarbon determinations, and the consequent demand by users for ever more precise and accurate calibration. For IntCal20, not only is the underlying data available for use in curve construction considerably more numerous and detailed than in previous curves but, due to the advances in our wider understanding of the Earth’s systems, our insight into the specific and individual characteristics of much of that data has also improved. If we are able to harness and more accurately represent these specific features within our curve construction then this will hopefully improve the resultant calibration. To achieve this, for the new calibration curve we have completely revised the statistical methodology from a random walk to a Bayesian spline based approach. This new approach allows us to take better advantage of the underlying data and also to provide output which is more useful and relevant for calibration users.

Over the years, the IntCal statistical methodology has developed and improved alongside our understanding of the constituent data and as we gain more knowledge of the underlying Earth Systems processes. For IntCal98 (Stuiver et al. Reference Stuiver, Reimer, Bard, Beck, Burr, Hughen, Kromer, McCormac, van der Plicht and Spurk1998), the curve was produced via a combination of linear interpolation of windowed tree-ring averages and frequentist splines. In 2004, the approach was updated to a random walk model (Buck and Blackwell Reference Buck and Blackwell2004) which introduced an approximate Bayesian method, and began to incorporate some of the important additional aspects of the constituent data such as potential uncertainty in the calendar ages for the older determinations, and that many tree-ring observations related to multiple years of metabolization. This random walk was developed further for IntCal09 (Blackwell and Buck Reference Blackwell and Buck2008; Heaton et al. Reference Heaton, Blackwell and Buck2009) and IntCal13 (Niu et al. Reference Niu, Heaton, Blackwell and Buck2013) into a fully Bayesian MCMC approach that enabled the inclusion of more of the unique structures in the calibration datasets such as floating sequences of tree rings and more complex covariances in the calendar age estimates provided by wiggle-matching or palaeoclimate tie-pointing.

The random walk approach however had several disadvantages. While in principle, it allowed for modeling flexibility, the details of the implementation required a very large number of parameters that, despite being highly dependent, were predominantly updated individually. This made it extremely slow to run; difficult to ensure it explored the full space of possible curves; and also hard to assess whether the MCMC had reached its equilibrium distribution. Furthermore, this restricted the ability to explore the impact of specific modeling assumptions or individual data on the final curve. As the volume of the data used to generate IntCal has increased, alongside the need for further bespoke modeling of key data structures, this random walk approach to curve creation has consequently become computationally impractical for further use.

For the updated IntCal20 curve, the IntCal working group therefore requested a new approach to curve creation be developed. This new approach needed to be equally rigorous, from a statistical perspective, as the previous methodology; to incorporate the advances in both geoscientific understanding and radiocarbon precision that have occurred since 2013; and yet overcome the implementational difficulties of the previous random walk approach. Specifically, to increase confidence in the curve’s robustness and reliability the new approach needed to run at a speed which allowed investigation of the effect of key modeling choices not possible with the previous methodology. The new approach is based upon Bayesian splines with errors-in-variables as introduced by Berry et al. (Reference Berry, Carroll and Ruppert2002) but requires multiple bespoke innovations to accurately adapt to the specific data complexities. We believe it offers a significant improvement over previous approaches not just in the modeling but also the additional output it can provide for both calibration users and geoscientific users more widely.

The paper is set out as follows. In Section 2 we list the main advances made in the statistical methodology, data understanding and modeling since the last set of IntCal curves (Reimer et al. Reference Reimer, Bard, Bayliss, Beck, Blackwell, Bronk Ramsey, Buck, Cheng, Edwards, Friedrich, Grootes, Guilderson, Haflidason, Hajdas, Hatté, Heaton, Hoffmann, Hogg, Hughen, Kaiser, Kromer, Manning, Niu, Reimer, Richards, Scott, Southon, Staff, Turney and van der Plicht2013; Hogg et al. Reference Hogg, Hua, Blackwell, Niu, Buck, Guilderson, Heaton, Palmer, Reimer, Reimer, Turney and Zimmerman2013). We then provide, in Section 3, a short non-technical introduction to three key practical ideas for calibration users: an explanation of Bayesian splines; the importance of recognizing potential calendar age uncertainty in curve construction; and the modeling of potential over-dispersion in the data. Section 4 then describes the main technical details of curve construction. The IntCal20 curve is created in two sections with somewhat different statistical concerns. The more recent part of the curve, back to approximately 14 cal kBP, is based entirely upon tree-ring determinations, that are predominantly dendrodated with exact, known calendar ages. Here the main challenges are to incorporate the large amount of data, provide high resolution in the curve with appropriate uncertainties, and accurately incorporate blocked determinations that represent the measurement of multiple years. This is described in Section 4.3. Further back in time, the curve is based upon a wider range of 14C material where, as well as tree rings, we use direct and indirect records of atmospheric 14C in the form of corals, macrofossils, forams and speleothems. We describe the modifications required to incorporate these kinds of data in Section 4.4. This being a Bayesian approach we are able to incorporate prior information into our model and also provide posterior information on particular outputs of independent interest. In Section 5 we provide an indication of the kind of additional output the new methodology is able to provide. In particular the new approach generates not only pointwise means and variances for the calibration curve but, for the first time, sets of complete posterior realizations from 0–55 cal kBP which also allow access to covariance information. This covariance has the potential to improve future calibration of multiple radiocarbon determinations, for example in wiggle matches or more complex modeling. We also gain information on the level of additional variation (beyond laboratory reported uncertainty) present in tree-ring 14C determinations arising from the same calendar year. Finally, we discuss potential future work in Section 6 along with areas we have identified for further improvement for the next IntCal iteration. Note that the statistical approaches to the creation of SHCal20 (Hogg et al. Reference Hogg, Heaton, Hua, Palmer, Turney, Southon, Bayliss, Blackwell, Boswijk, Bronk Ramsey, Pearson, Petchey, Reimer, Reimer and Wacker2020 in this issue) and Marine20 (Heaton et al. Reference Heaton, Köhler, Butzin, Bard, Reimer, Austin, Bronk Ramsey, Grootes, Hughen, Kromer, Reimer, Adkins, Burke, Cook, Olsen and Skinner2020 in this issue) are presented within those papers and not discussed in detail here.

Notation

All ages in this paper and the database are reported relative to mid-1950 AD (= 0 BP, before present). Conventional, pre-calibration, 14C ages are given in units “![]() $^{\it14}{\it{C}}$yrs BP.” Calendar, or calibrated, ages are denoted as “cal BP” or “cal kBP” (thousands of calibrated years before present).

$^{\it14}{\it{C}}$yrs BP.” Calendar, or calibrated, ages are denoted as “cal BP” or “cal kBP” (thousands of calibrated years before present).

Data and Code

As in previous updates to the curve, the constituent data is available from the IntCal database http://www.intcal.org/. Other inputs are available on request, for example covariance matrices for the calendar ages of the tie-pointed records of the Cariaco Basin (Hughen and Heaton Reference Hughen and Heaton2020 in this issue), Pakistan and Iberian margin (Bard et al. Reference Bard, Ménot, Rostek, Licari, Böning, Edwards, Cheng, Wang and Heaton2013); and Lake Suigetsu (Bronk Ramsey et al. Reference Bronk Ramsey, Heaton, Schlolaut, Staff, Bryant, Brauer, Lamb, Marshall and Nakagawa2020 in this issue). Coding was performed in R (R Core Team 2019), using the fda (Ramsay et al. Reference Ramsay, Wickham, Graves and Hooker2018), mvtnorm (Genz et al. Reference Genz, Bretz, Miwa, Mi, Leisch, Scheipl and Hothorn2019), mvnfast (Fasiolo Reference Fasiolo2016) and Matrix (Bates and Maechler Reference Bates and Maechler2019) packages to efficiently create and fit the spline bases; and doParallel (Corporation and Weston Reference Corporation and Weston2019) to implement parallelization for the MCMC tempering. This code is available on request from the first author.

2 Developments in the IntCal20 statistical methodology

The main differences/improvements in the updated IntCal20 methodology over the previous random walk can be split into three broad categories: those relating to improvements in the statistical implementation itself; progress in the detailed modeling of unique data aspects that are enabled by the updated methodology; and finally, advances in the curve output that are both relevant to users of the calibration curve and of potential interest in their own right.

Improvements in Statistical Implementation

1. Bayesian Splines—the change from the random walk of Niu et al. (Reference Niu, Heaton, Blackwell and Buck2013) to splines allows a much more computationally feasible fitting algorithm. We retain the Bayesian aspect since it allows us to incorporate the various unique aspects of the calibration data; provides useful posterior information, e.g. the posterior calendar ages of the constituent data; and maintains consistency with calibration itself which is now universally implemented under that paradigm. This Bayesian spline approach is equally conceptually rigorous as the random walk but considerably more flexible and can be run much more quickly.

2. Change in modeling and fitting domain—function estimation via splines is based on a trade-off between creating a curve that passes close to the observed data yet is not too rough. Previously all aspects of the curve’s construction occurred in the radiocarbon age domain. However, this is not the natural space in which to either model the curve roughness or decide on fidelity of the data to the curve. In the older section of the 14C record, the measurement uncertainties on the radiocarbon age scale become non-symmetric. This causes difficulties in fairly assessing the fit of a model to observed data if we judge it in this radiocarbon age domain. Instead, it is more natural to assess model fit in F 14C where measurement uncertainty remains symmetric. Similarly, a more natural domain in which to model the curve roughness is in Δ 14C space. We therefore change our modeling domain to

${\Delta ^{14}}{\rm{C}}$ and our fitting domain to

${\Delta ^{14}}{\rm{C}}$ and our fitting domain to  ${F^{14}}{\rm{C}}$ when creating the curve. See Section 3.1.2 for definitions of the radiocarbon age,

${F^{14}}{\rm{C}}$ when creating the curve. See Section 3.1.2 for definitions of the radiocarbon age,  ${F^{14}}{\rm{C}}$ and

${F^{14}}{\rm{C}}$ and  ${\Delta ^{14}}{\rm{C}}$ domains.

${\Delta ^{14}}{\rm{C}}$ domains.3. Over-dispersion in dendrodated trees—as the volume of data entering IntCal increases, it is key to make sure we do not produce a curve which is over-precise as this would give inaccurate calibration for a user. While laboratories attempt to quantify all

$^{\rm14}{\rm{C}}$ uncertainty in their measurements, including through intercomparison exercises such as Scott et al. (Reference Scott, Naysmith and Cook2017), there remain some sources of additional

$^{\rm14}{\rm{C}}$ uncertainty in their measurements, including through intercomparison exercises such as Scott et al. (Reference Scott, Naysmith and Cook2017), there remain some sources of additional  $^{\rm14}{\rm{C}}$ variation which are difficult for any laboratory to capture. For tree rings, potential examples include variation between local region, species, or growing season. Consequently, when we bring together

$^{\rm14}{\rm{C}}$ variation which are difficult for any laboratory to capture. For tree rings, potential examples include variation between local region, species, or growing season. Consequently, when we bring together  $^{\rm14}{\rm{C}}$ measurements from the same calendar year, they may potentially be over-dispersed, i.e. more widely spread than would be expected given their laboratory quoted uncertainty. The new approach incorporates a term allowing for potential over-dispersion within 14C determinations of the tree rings so that, if additional variability is seen in the underlying IntCal data, the method will account for it and prevent excessive confidence in the resultant curve.

$^{\rm14}{\rm{C}}$ measurements from the same calendar year, they may potentially be over-dispersed, i.e. more widely spread than would be expected given their laboratory quoted uncertainty. The new approach incorporates a term allowing for potential over-dispersion within 14C determinations of the tree rings so that, if additional variability is seen in the underlying IntCal data, the method will account for it and prevent excessive confidence in the resultant curve.4. Heavy tailed errors—in the older portion of the curve, where data come from a range of different

$^{\rm14}{\rm{C}}$ reservoirs, we aim to reduce the influence of potential outliers by permitting each dataset to have heavier tailed errors. These tails are adaptively estimated during curve construction.

$^{\rm14}{\rm{C}}$ reservoirs, we aim to reduce the influence of potential outliers by permitting each dataset to have heavier tailed errors. These tails are adaptively estimated during curve construction.5. Improved model mixing and parallel tempering—when using any MCMC method, it is crucial to ensure that the chain has reached its equilibrium distribution and that it is not stuck in one part of the model space. This was a significant concern with the previous random walk approach since the curve was updated one calendar year at a time. Conversely, Bayesian splines enable updates of the complete curve simultaneously via Gibbs sampling. To address any additional concerns about mixing of the new MCMC and to ensure we explore the space of possible curves more freely, we also implement parallel tempering whereby we run multiple modified/tempered chains simultaneously in such a way that some can move around the space more easily. By appropriate switching between these tempered chains we can further improve model mixing.

Improvements in Data Modeling

These steps forward in the statistical implementation, while still maintaining computational feasibility, also enable us both to incorporate new data structures and to advance our modeling of the processes from which the data arise:

1. Large increase in volume of data throughout the curve—the new IntCal20 is based upon a much greater number of

$^{\rm14}{\rm{C}}$ determinations. These include many annual tree-ring determinations such as remeasurement within various laboratories of data from the period of Thera (e.g. Pearson et al. Reference Pearson, Brewer, Brown, Heaton, Hodgins, Jull, Lange and Salzer2018); new wiggle-matched and floating sequences of late-glacial tree rings (e.g. Capano et al. Reference Capano, Miramont, Shindo, Guibal, Marschal, Kromer, Tuna and Bard2020 in this issue); and new measurements of Hulu Cave extending further back in time (Cheng et al. Reference Cheng, Edwards, Southon, Matsumoto, Feinberg, Sinha, Zhou, Li, Li, Xu, Chen, Tan, Wang, Wang and Ning2018). In total, the new IntCal20 curve is based upon 12,904 raw 14C measurements compared to the 7019 used for IntCal13 (Reimer et al. Reference Reimer, Bard, Bayliss, Beck, Blackwell, Bronk Ramsey, Buck, Cheng, Edwards, Friedrich, Grootes, Guilderson, Haflidason, Hajdas, Hatté, Heaton, Hoffmann, Hogg, Hughen, Kaiser, Kromer, Manning, Niu, Reimer, Richards, Scott, Southon, Staff, Turney and van der Plicht2013). This rapid increase means a faster curve construction method is essential.

$^{\rm14}{\rm{C}}$ determinations. These include many annual tree-ring determinations such as remeasurement within various laboratories of data from the period of Thera (e.g. Pearson et al. Reference Pearson, Brewer, Brown, Heaton, Hodgins, Jull, Lange and Salzer2018); new wiggle-matched and floating sequences of late-glacial tree rings (e.g. Capano et al. Reference Capano, Miramont, Shindo, Guibal, Marschal, Kromer, Tuna and Bard2020 in this issue); and new measurements of Hulu Cave extending further back in time (Cheng et al. Reference Cheng, Edwards, Southon, Matsumoto, Feinberg, Sinha, Zhou, Li, Li, Xu, Chen, Tan, Wang, Wang and Ning2018). In total, the new IntCal20 curve is based upon 12,904 raw 14C measurements compared to the 7019 used for IntCal13 (Reimer et al. Reference Reimer, Bard, Bayliss, Beck, Blackwell, Bronk Ramsey, Buck, Cheng, Edwards, Friedrich, Grootes, Guilderson, Haflidason, Hajdas, Hatté, Heaton, Hoffmann, Hogg, Hughen, Kaiser, Kromer, Manning, Niu, Reimer, Richards, Scott, Southon, Staff, Turney and van der Plicht2013). This rapid increase means a faster curve construction method is essential.2. Blocking in dendrodated trees—with the new radiocarbon revolution providing an increased ability to measure annual tree rings, there has been a concern that the inclusion within IntCal of determinations relating to decadal or bi-decadal averages alongside these new annual measurements could mean we lose critical information on short-term variation in atmospheric radiocarbon levels. Our approach fully recognizes the number of years each determination represents, meaning there is no loss of information as a result of such blocked determinations.

3. Variable marine reservoir ages—IntCal09 (Reimer et al. Reference Reimer, Baillie, Bard, Bayliss, Beck, Blackwell, Bronk Ramsey, Buck, Burr, Edwards, Friedrich, Grootes, Guilderson, Hajdas, Heaton, Hogg, Hughen, Kaiser, Kromer, McCormac, Manning, Reimer, Richards, Southon, Talamo, Turney, van der Plicht and Weyhenmeyer2009) and IntCal13 (Reimer et al. Reference Reimer, Bard, Bayliss, Beck, Blackwell, Bronk Ramsey, Buck, Cheng, Edwards, Friedrich, Grootes, Guilderson, Haflidason, Hajdas, Hatté, Heaton, Hoffmann, Hogg, Hughen, Kaiser, Kromer, Manning, Niu, Reimer, Richards, Scott, Southon, Staff, Turney and van der Plicht2013) modeled marine reservoir ages beyond the Holocene as constant over time. For IntCal20, we incorporate time-varying marine reservoir ages using the LSG model of Butzin et al. (Reference Butzin, Heaton, Köhler and Lohmann2020 in this issue) and a separate adaptive spline for the Cariaco Basin.

4. Inclusion of new floating tree-ring sequences—the new curve includes several new late-glacial trees which have calendar age estimates obtained via wiggle matching (Reinig et al. Reference Reinig, Nievergelt, Esper, Friedrich, Helle, Hellmann, Kromer, Morganti, Pauly, Sookdeo, Tegel, Treydte, Verstege, Wacker and Büntgen2018; Capano et al. Reference Capano, Miramont, Shindo, Guibal, Marschal, Kromer, Tuna and Bard2020 in this issue), as well as three entirely floating tree chronologies around the time of the Bølling-AllerødFootnote 1 (Adolphi et al. Reference Adolphi, Muscheler, Friedrich, Güttler, Wacker, Talamo and Kromer2017) and two older Southern Hemisphere floating kauri tree-ring sequences (Turney et al. Reference Turney, Fifield, Hogg, Palmer, Hughen, Baillie, Galbraith, Ogden, Lorrey and Tims2010, Reference Turney, Jones, Phipps, Thomas, Hogg, Kershaw, Fogwill, Palmer, Bronk Ramsey and Adolphi2017) on which we have no absolute dating information and which need to be placed accurately amongst the other data.

5. Rapid

$^{\rm14}{\rm{C}}$ excursions—there are several specific times when the level of atmospheric

$^{\rm14}{\rm{C}}$ excursions—there are several specific times when the level of atmospheric  $^{\rm14}{\rm{C}}$ is known to vary extremely rapidly. For IntCal20 we have identified three such events around 774–5 AD, 993–4 AD and

$^{\rm14}{\rm{C}}$ is known to vary extremely rapidly. For IntCal20 we have identified three such events around 774–5 AD, 993–4 AD and  $\sim$660 BC (Miyake et al. Reference Miyake, Nagaya, Masuda and Nakamura2012, Reference Miyake, Masuda and Nakamura2013; O’Hare et al. Reference O’Hare, Mekhaldi, Adolphi, Raisbeck, Aldahan, Anderberg, Beer, Christl, Fahrni, Synal, Park, Possnert, Southon, Bard and Muscheler2019). Such rapid 14C changes will typically not be modeled well by standard regression which will tend to smooth them out. However, by increasing the density of knots forming our spline basis in the vicinity of these rapid excursions we can better represent these significant features.

$\sim$660 BC (Miyake et al. Reference Miyake, Nagaya, Masuda and Nakamura2012, Reference Miyake, Masuda and Nakamura2013; O’Hare et al. Reference O’Hare, Mekhaldi, Adolphi, Raisbeck, Aldahan, Anderberg, Beer, Christl, Fahrni, Synal, Park, Possnert, Southon, Bard and Muscheler2019). Such rapid 14C changes will typically not be modeled well by standard regression which will tend to smooth them out. However, by increasing the density of knots forming our spline basis in the vicinity of these rapid excursions we can better represent these significant features.

Improvements in Output

The statistical innovations also mean that we can now provide several new facets to our output:

1. Annualized output—we are able to provide curve estimates on an annual grid enabling more detailed calibration. This will be needed in light of the increased demand to calibrate annual radiocarbon determinations. While we do not discuss the implications for calibration itself in this paper, such a detailed annual calibration curve will likely increase the potential for multimodal calendar age estimates which require significant care in interpretation, especially during periods of plateaus in the calibration curve. See the IntCal20 companion paper (van der Plicht et al. Reference van der Plicht, Bronk Ramsey, Heaton, Scott and Talamo2020 in this issue) for more details and an illustrative example with the calibration of the Minoan Santorini/Thera eruption.

2. Predictive intervals—if the IntCal20

$^{\rm14}{\rm{C}}$ data contain additional sources of variation beyond their laboratory quantified uncertainties (due to potential regional, species or growing season differences), i.e. they appear more widely spread around the calibration curve than can be explained by their quoted uncertainties, we will need to take this into account for calibration of new determinations too. Specifically, any

$^{\rm14}{\rm{C}}$ data contain additional sources of variation beyond their laboratory quantified uncertainties (due to potential regional, species or growing season differences), i.e. they appear more widely spread around the calibration curve than can be explained by their quoted uncertainties, we will need to take this into account for calibration of new determinations too. Specifically, any  $^{\rm14}{\rm{C}}$ determination a user calibrates against the curve is likely to contain similar levels of unseen additional variation. If we can assess the level of additional variation seen within the IntCal20 data, then we can incorporate this into calibration for a user by providing a predictive interval on the curve. Intuitively this predictive interval aims to incorporate both uncertainty in the value of the curve itself and potential additional uncertainty sources such as regional, species or growing season effects beyond the laboratory quoted uncertainty. These predictive intervals are therefore more relevant for calibration than curve intervals which do not incorporate or adapt to potential additional sources of variability.

$^{\rm14}{\rm{C}}$ determination a user calibrates against the curve is likely to contain similar levels of unseen additional variation. If we can assess the level of additional variation seen within the IntCal20 data, then we can incorporate this into calibration for a user by providing a predictive interval on the curve. Intuitively this predictive interval aims to incorporate both uncertainty in the value of the curve itself and potential additional uncertainty sources such as regional, species or growing season effects beyond the laboratory quoted uncertainty. These predictive intervals are therefore more relevant for calibration than curve intervals which do not incorporate or adapt to potential additional sources of variability.3. Posterior information arising from curve construction—the Bayesian implementation allows us to provide posterior estimates for many aspects of interest. For example, all of the records with uncertainties in their calendar timescales (the various floating tree-ring sequences, marine sediments, Lake Suigetsu and the speleothems) will be calibrated during the curve’s construction. We can provide these posterior calibrated age estimates along with other information such as the level of over-dispersion seen in the data, and posterior estimates of marine reservoir ages and dead carbon fractions.

4. Complete realizations of the curve from 0–55 cal kBP—historically IntCal output has consisted of pointwise posterior means and variances. However, the Bayesian approach provides a set of underlying curve realizations which provide covariance information on the value of the curve at any two calendar ages. When calibrating single determinations, this covariance does not affect the calendar age estimates obtained, i.e. calibrating against the pointwise posterior means and variances provides the same inference as calibrating against the set of individual curve realizations. However, when calibrating multiple determinations simultaneously, for example if we are seeking to determine the length of time elapsed between two determinations or fit a more complex model, use of these complete realizations in consequent calibration offers potential to improve insight. Work is planned by the group to explore how this may be best incorporated into existing calibration software.

We discuss some of these aspects in more detail, and present some of the output available, in Section 5 and the Supplementary Information.

3 A Brief Summary of Bayesian Splines, Errors-in-Variables and Predictive Intervals

For a typical calibration user the key elements of the new methodology consist of the change to Bayesian splines with the accompanying shift in the modeling and fitting domains; the continued recognition that many of the data have uncertainties in their calendar ages and the significant effect that has on curve estimation; and the use of predictive intervals on the published curve for calibration. We therefore commence with an intuitive explanation of these three elements. The technical details can be found later in Section 4. We note that, in our intuitive descriptions, the observational model may be further complicated by 14C determinations representing multiple, as opposed to single, calendar years and the inclusion of a reservoir age or dead carbon fraction for those observations which are not directly atmospheric. However, for clarity of exposition, we do not consider either of these factors here, and refer to Section 4 for details on how these additions can be incorporated.

3.1 Bayesian Splines and Choice of Modeling Domain

3.1.1 Frequentist Ideas

Suppose that we observe a function ![]() $f$, subject to noise, at a series of times

$f$, subject to noise, at a series of times ![]() ${\theta _i}$,

${\theta _i}$, ![]() $i = 1, \ldots ,n$,

$i = 1, \ldots ,n$,

where, for the time being, we assume that the times ![]() ${\theta _i}$ are known absolutely. To obtain a spline estimate for the unknown function we seek to find a function that provides a satisfactory compromise between going close to the data but yet does not overfit. This can be done by choosing the estimate

${\theta _i}$ are known absolutely. To obtain a spline estimate for the unknown function we seek to find a function that provides a satisfactory compromise between going close to the data but yet does not overfit. This can be done by choosing the estimate ![]() $\hat f$ that minimizes, over a suitable set of functions,

$\hat f$ that minimizes, over a suitable set of functions,

where ![]() ${\texttt{FIT}}(\,f,Y)$ measures the lack of agreement between a potential

${\texttt{FIT}}(\,f,Y)$ measures the lack of agreement between a potential ![]() $f$ and the observed

$f$ and the observed ![]() $Y$; and

$Y$; and ![]() ${\texttt{PEN}}(\,f)$ represents a penalty for functions that might be overfitting. Typically,

${\texttt{PEN}}(\,f)$ represents a penalty for functions that might be overfitting. Typically, ![]() ${\texttt{FIT}}(\,f,Y)$ consists of the sum-of-squares difference between

${\texttt{FIT}}(\,f,Y)$ consists of the sum-of-squares difference between ![]() $f({\theta _i})$ and

$f({\theta _i})$ and ![]() ${Y_i}$, while

${Y_i}$, while ![]() ${\texttt{PEN}}(\,f)$ assesses the roughness of a proposed

${\texttt{PEN}}(\,f)$ assesses the roughness of a proposed ![]() $f$ ensuring that the more variable f the larger the penalty given to it with the aim of preventing the spline estimate from overfitting the data. The parameter

$f$ ensuring that the more variable f the larger the penalty given to it with the aim of preventing the spline estimate from overfitting the data. The parameter ![]() $\lambda$ determines the relative trade-off between how one values fidelity to the observed data, i.e.

$\lambda$ determines the relative trade-off between how one values fidelity to the observed data, i.e. ![]() ${\texttt{FIT}}(\,f,Y)$, compared with function roughness,

${\texttt{FIT}}(\,f,Y)$, compared with function roughness, ![]() ${\texttt{PEN}}(\,f)$. A large

${\texttt{PEN}}(\,f)$. A large ![]() $\lambda$ will heavily penalize roughness and typically results in a smooth curve that is less close to the data; while a small

$\lambda$ will heavily penalize roughness and typically results in a smooth curve that is less close to the data; while a small ![]() $\lambda$ will mean the spline goes closer to the data but at the expense of being more variable.

$\lambda$ will mean the spline goes closer to the data but at the expense of being more variable.

3.1.2 Selection of Appropriate Fitting and Modeling Domains for Radiocarbon

Within the radiocarbon community there are three commonly used domainsFootnote 2: ![]() ${\Delta ^{14}}{\rm{C}}$,

${\Delta ^{14}}{\rm{C}}$, ![]() ${F^{14}}{\rm{C}}$ and the radiocarbon age. Given

${F^{14}}{\rm{C}}$ and the radiocarbon age. Given ![]() $g(\theta )$, the historical level of

$g(\theta )$, the historical level of ![]() ${\Delta ^{14}}{\rm{C}}$ in year

${\Delta ^{14}}{\rm{C}}$ in year ![]() $\theta$ cal BP, we can freely convert between the domains as follows:

$\theta$ cal BP, we can freely convert between the domains as follows:

${F^{14}}{\rm{C}}$ domain:

${F^{14}}{\rm{C}}$ domain:  $f(\theta ) = \left( {{1 \over {1000}}g(\theta ) + 1} \right){e^{ - \theta /8267}}.$ The value of

$f(\theta ) = \left( {{1 \over {1000}}g(\theta ) + 1} \right){e^{ - \theta /8267}}.$ The value of  ${F^{14}}{\rm{C}}$, or fraction modern (Reimer et al. Reference Reimer, Brown and Reimer2004b), denotes the relative proportion of radiocarbon to stable carbon in a sample compared to that of a sample with a conventional radiocarbon age of 0 14C yrs BP (i.e. mid-1950 AD) after accounting for isotopic fractionation. It is a largely linear calculation from the instrumental measurements meaning its uncertainties are approximately normal.

${F^{14}}{\rm{C}}$, or fraction modern (Reimer et al. Reference Reimer, Brown and Reimer2004b), denotes the relative proportion of radiocarbon to stable carbon in a sample compared to that of a sample with a conventional radiocarbon age of 0 14C yrs BP (i.e. mid-1950 AD) after accounting for isotopic fractionation. It is a largely linear calculation from the instrumental measurements meaning its uncertainties are approximately normal.Radiocarbon age domain:

$h(\theta ) = - 8033\ln f(\theta ).$ The radiocarbon age, in 14C yrs BP, is obtained from the fraction modern using Libby’s original half-life and without any calibration. Since this is a non-linear mapping of

$h(\theta ) = - 8033\ln f(\theta ).$ The radiocarbon age, in 14C yrs BP, is obtained from the fraction modern using Libby’s original half-life and without any calibration. Since this is a non-linear mapping of  ${F^{14}}{\rm{C}}$, the uncertainties are no longer even approximately normally distributed as we approach the limit of the technique.

${F^{14}}{\rm{C}}$, the uncertainties are no longer even approximately normally distributed as we approach the limit of the technique.

When fitting a smoothing spline, we have some flexibility in the precise choice of ![]() ${\texttt{FIT}}(\,f,Y)$, our assessment of fit, and our roughness penalty

${\texttt{FIT}}(\,f,Y)$, our assessment of fit, and our roughness penalty ![]() ${\texttt{PEN}}(\,f)$. For radiocarbon purposes, the natural domain to assess the quality of fit of a proposed calibration curve to observations is the

${\texttt{PEN}}(\,f)$. For radiocarbon purposes, the natural domain to assess the quality of fit of a proposed calibration curve to observations is the ![]() ${F^{14}}{\rm{C}}$ domain, the raw scale on which the determinations are obtained. In this

${F^{14}}{\rm{C}}$ domain, the raw scale on which the determinations are obtained. In this ![]() ${F^{14}}{\rm{C}}$ domain, our measurement uncertainties are symmetric. Conversely, in the radiocarbon age domain these uncertainties become asymmetric as we progress back in time. We therefore choose

${F^{14}}{\rm{C}}$ domain, our measurement uncertainties are symmetric. Conversely, in the radiocarbon age domain these uncertainties become asymmetric as we progress back in time. We therefore choose

where ![]() ${F_i}$ are the observed F 14C values of the determinations and

${F_i}$ are the observed F 14C values of the determinations and ![]() ${\sigma _i}$ their associated uncertainties in the

${\sigma _i}$ their associated uncertainties in the ![]() ${F^{14}}{\rm{C}}$ domain.

${F^{14}}{\rm{C}}$ domain.

![]() ${F^{14}}{\rm{C}}$ is not however the most appropriate domain in which to assess roughness since it exhibits exponential decay over time making equitable penalization of potential calibration curves more difficult. Instead a more natural choice is the

${F^{14}}{\rm{C}}$ is not however the most appropriate domain in which to assess roughness since it exhibits exponential decay over time making equitable penalization of potential calibration curves more difficult. Instead a more natural choice is the ![]() ${\Delta ^{14}}{\rm{C}}$ domain where, a priori, one might expect a calibration curve to display approximately equal roughness over its length. For our penalty function we therefore choose

${\Delta ^{14}}{\rm{C}}$ domain where, a priori, one might expect a calibration curve to display approximately equal roughness over its length. For our penalty function we therefore choose

where ![]() $g''(\theta )$ is the second derivative of the proposed level of

$g''(\theta )$ is the second derivative of the proposed level of ![]() ${\Delta ^{14}}{\rm{C}}$, a standard penalty for function roughness.

${\Delta ^{14}}{\rm{C}}$, a standard penalty for function roughness.

We summarize this by saying that we perform modeling in the Δ 14C domain as it is here we penalize roughness; while we perform fitting in the ![]() ${F^{14}}{\rm{C}}$ domain since it is here we assess fidelity of the curve to the raw observations. Since, given

${F^{14}}{\rm{C}}$ domain since it is here we assess fidelity of the curve to the raw observations. Since, given ![]() $\theta$, the transformation from

$\theta$, the transformation from ![]() ${F^{14}}{\rm{C}}$ to

${F^{14}}{\rm{C}}$ to ![]() ${\Delta ^{14}}{\rm{C}}$ is affine we can utilize these different modeling and fitting domains while still maintaining a practical spline estimation approach.

${\Delta ^{14}}{\rm{C}}$ is affine we can utilize these different modeling and fitting domains while still maintaining a practical spline estimation approach.

3.1.3 Bayesian Reframing

To reinterpret the above idea in a Bayesian framework we can split our functional

into two distinct parts. The penalty component ![]() $\int {\left\{ {g''(\theta )} \right\}^2}d\theta$ can be considered as specifying the prior distribution on the space of calibration curves; more precisely, it gives the negative log density of the prior. Intuitively this summarizes our prior beliefs about the form of the calibration curve before we observe any actual data. We then wish to update this prior belief, in light of the observed data, to obtain our posterior. This updating is achieved via the fitting component

$\int {\left\{ {g''(\theta )} \right\}^2}d\theta$ can be considered as specifying the prior distribution on the space of calibration curves; more precisely, it gives the negative log density of the prior. Intuitively this summarizes our prior beliefs about the form of the calibration curve before we observe any actual data. We then wish to update this prior belief, in light of the observed data, to obtain our posterior. This updating is achieved via the fitting component ![]() $\sum\nolimits_{i = 1}^n {1 \over {\sigma _i^2}}{\left(\, {f({\theta _i}) - {F_i}} \right)^2}$ which represents the negative log-likelihood of the observed data under an assumption that each

$\sum\nolimits_{i = 1}^n {1 \over {\sigma _i^2}}{\left(\, {f({\theta _i}) - {F_i}} \right)^2}$ which represents the negative log-likelihood of the observed data under an assumption that each ![]() ${F_i} \sim N(\,f({\theta _i}),\sigma _i^2)$. The value of

${F_i} \sim N(\,f({\theta _i}),\sigma _i^2)$. The value of ![]() $S(\,f)$ then represents the negative posterior log-density for a potential calibration curve

$S(\,f)$ then represents the negative posterior log-density for a potential calibration curve ![]() $f$.

$f$.

This idea is illustrated in Figure 1. In panel (a) we present three potential calibration curves in the ![]() ${\Delta ^{14}}{\rm{C}}$ domain. Using just our prior, we give each curve an initial weight corresponding to their roughness. The black curve is the least rough and so would be given highest prior weight as a plausible potential calibration curve. The green curve is the roughest and so has least weight according to our prior. Each of these

${\Delta ^{14}}{\rm{C}}$ domain. Using just our prior, we give each curve an initial weight corresponding to their roughness. The black curve is the least rough and so would be given highest prior weight as a plausible potential calibration curve. The green curve is the roughest and so has least weight according to our prior. Each of these ![]() ${\Delta ^{14}}{\rm{C}}$ curves is then converted into the symmetric

${\Delta ^{14}}{\rm{C}}$ curves is then converted into the symmetric ![]() ${F^{14}}{\rm{C}}$ space and compared with our observations as shown in panel (b). The plausibility of each curve is then updated to incorporate the fit to this data. Based upon this, the red curve would have a higher posterior density as it fits relatively closely while not being overly rough. Both the green and black curves would have a low posterior density and so be considered highly implausible as they do not pass near the data. Use of MCMC allows us to generate any number of plausible calibration curves drawn from the posterior that provide a satisfactory trade-off between the roughness prior and fidelity to the observations. Some such realizations are shown in panels (c) and (d) in both the

${F^{14}}{\rm{C}}$ space and compared with our observations as shown in panel (b). The plausibility of each curve is then updated to incorporate the fit to this data. Based upon this, the red curve would have a higher posterior density as it fits relatively closely while not being overly rough. Both the green and black curves would have a low posterior density and so be considered highly implausible as they do not pass near the data. Use of MCMC allows us to generate any number of plausible calibration curves drawn from the posterior that provide a satisfactory trade-off between the roughness prior and fidelity to the observations. Some such realizations are shown in panels (c) and (d) in both the ![]() ${\Delta ^{14}}{\rm{C}}$ and

${\Delta ^{14}}{\rm{C}}$ and ![]() ${F^{14}}{\rm{C}}$ domains respectively. These realizations are then summarized to produce the final IntCal20 curve.

${F^{14}}{\rm{C}}$ domains respectively. These realizations are then summarized to produce the final IntCal20 curve.

Figure 1 An illustration of Bayesian splines. Panel (a) shows some potential calibration curves in the ![]() ${\Delta ^{14}}{\rm{C}}$ domain drawn from the prior. These are then compared with the observed data in the F 14C domain as shown in panel (b) to form our Bayesian posterior. The bottom two panels (c) and (d) show posterior realizations of potential curves (shown in Δ 14C and

${\Delta ^{14}}{\rm{C}}$ domain drawn from the prior. These are then compared with the observed data in the F 14C domain as shown in panel (b) to form our Bayesian posterior. The bottom two panels (c) and (d) show posterior realizations of potential curves (shown in Δ 14C and ![]() ${F^{14}}{\rm{C}}$ space respectively) obtained via MCMC that provide a satisfactory trade-off between agreement with our prior penalizing over-roughness and the fit to our observed data.

${F^{14}}{\rm{C}}$ space respectively) obtained via MCMC that provide a satisfactory trade-off between agreement with our prior penalizing over-roughness and the fit to our observed data.

3.1.4 Variable Smoothing and Knot Selection

A further choice to be made when spline smoothing is the set of functions (or potential calibration curves) to search over for our functional ![]() $S(\,f)$. This set is called a basis. We use cubic splines where this basis is determined by specifying what are known as knots at distinct calendar times. The more knots one has in a particular time period, the more the spline can vary. One common option, known as a smoothing spline, is to place a knot at every calendar age

$S(\,f)$. This set is called a basis. We use cubic splines where this basis is determined by specifying what are known as knots at distinct calendar times. The more knots one has in a particular time period, the more the spline can vary. One common option, known as a smoothing spline, is to place a knot at every calendar age ![]() ${\theta _i}$ for

${\theta _i}$ for ![]() $i = 1, \ldots ,n$. However, since we have a very large number of observations

$i = 1, \ldots ,n$. However, since we have a very large number of observations ![]() $n$ this is computationally impractical. Instead we use P-splines (see Berry et al. Reference Berry, Carroll and Ruppert2002, for details) where we select a smaller number of knots; this is equivalent to restricting the potential calibration curves to a somewhat smaller subspace of functions.

$n$ this is computationally impractical. Instead we use P-splines (see Berry et al. Reference Berry, Carroll and Ruppert2002, for details) where we select a smaller number of knots; this is equivalent to restricting the potential calibration curves to a somewhat smaller subspace of functions.

In implementing P-splines we need to make sure that the curves we consider are still able to provide sufficient detail for a calibration user and identify fine scale features such as solar cycles where we have the ability to do so. The data on which we base our curve have highly variable density. In some periods, such as recent dendrodated trees, we have a great density of determinations while in others the underlying data is more sparse. To adapt to this and keep required detail, we choose a large number of knots and place them at calendar age quantiles of the data to provide variable smoothing. This approach of locating the knots at observed quantiles is standard in the regression literature (e.g. Harrell Reference Harrell2001). Where our data is dense, we can pick out the required fine detail but where the data is more sparse we smooth more significantly. An example can be seen in Figure 2. This figure also highlights how we pack additional knots around known Miyake-type events, narrow spikes (sub-annual) of increased ![]() $^{\rm14}{\rm{C}}$ production, to enable us to better retain these events in the final curve. For more details on choice of knots and placement see Sections 4.3.4 and 4.4.3.

$^{\rm14}{\rm{C}}$ production, to enable us to better retain these events in the final curve. For more details on choice of knots and placement see Sections 4.3.4 and 4.4.3.

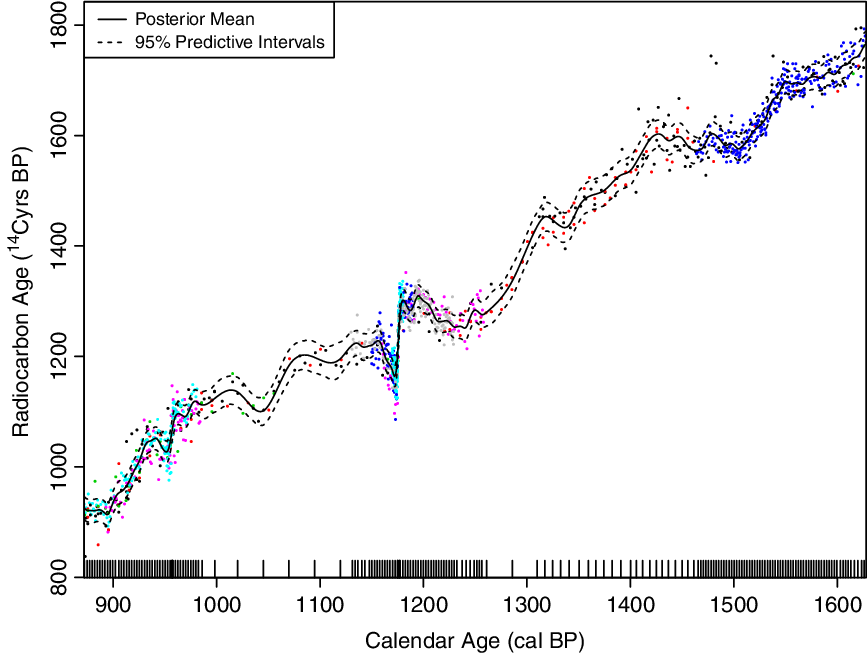

Figure 2 Variable smoothing and knot selection. Shown as a rug of tick marks along the bottom are the locations of the knots for the cubic spline. These are placed at quantiles of the observed calendar ages to provide variable smoothing. In dense regions, we can identify more detail in the calibration curve; while where the underlying data is less dense we perform more smoothing. In particular, note the additional knots placed around the two Miyake-type events (i.e. 957 and 1176 cal BP) which allow the curve to vary much more rapidly at these times. The points from different datasets within the IntCal database are shown in different colors to distinguish them.

3.2 Errors-in-Variables Regression

3.2.1 Calendar Age Uncertainty

Within the IntCal database (http://www.intcal.org/), many of the determinations have calendar ages which are not known absolutely. This is particularly the case as we progress further back in time and calendar age estimates are constructed from uranium thorium (U-Th) dating, e.g. speleothems (Southon et al. Reference Southon, Noronha, Cheng, Edwards and Wang2012; Cheng et al. Reference Cheng, Edwards, Southon, Matsumoto, Feinberg, Sinha, Zhou, Li, Li, Xu, Chen, Tan, Wang, Wang and Ning2018; Beck et al. Reference Beck, Richards, Edwards, Silverman, Smart, Donahue, Hererra-Osterheld, Burr, Calsoyas, Jull and Biddulph2001; Hoffmann et al. Reference Hoffmann, Beck, Richards, Smart, Singarayer, Ketchmark and Hawkesworth2010) and corals (Bard et al. Reference Bard, Hamelin, Fairbanks and Zindler1990, Reference Bard, Arnold, Hamelin, Tisnerat-Laborde and Cabioch1998, Reference Bard, Ménot-Combes and Rostek2004; Cutler et al. Reference Cutler, Gray, Burr, Edwards, Taylor, Cabioch, Beck, Cheng and Moore2004; Durand et al. Reference Durand, Deschamps, Bard, Hamelin, Camoin, Thomas, Henderson, Yokoyama and Matsuzaki2013; Fairbanks et al. Reference Fairbanks, Mortlock, Chiu, Cao, Kaplan, Guilderson, Fairbanks, Bloom, Grootes and Nadeau2005; Edwards et al. Reference Edwards, Beck, Burr, Donahue, Chappell, Druffel and Taylor1993; Burr et al. Reference Burr, Beck, Taylor, Recy, Edwards, Cabioch, Correge, Donahue and O’Malley1998, Reference Burr, Galang, Taylor, Gallup, Edwards, Cutler and Quirk2004); varve counting, e.g. parts of Cariaco Basin (Hughen et al. Reference Hughen, Southon, Bertrand, Frantz and Zermeño2004) and Lake Suigetsu (Bronk Ramsey et al. Reference Bronk Ramsey, Heaton, Schlolaut, Staff, Bryant, Brauer, Lamb, Marshall and Nakagawa2020 in this issue); and palaeoclimate tuning/tie-pointing, e.g. other parts of Cariaco Basin (Hughen and Heaton Reference Hughen and Heaton2020 in this issue), and the Pakistan and Iberian Margins (Bard et al. Reference Bard, Ménot, Rostek, Licari, Böning, Edwards, Cheng, Wang and Heaton2013). Furthermore, in the case of several of the floating tree-ring sequences, we have only a relative set of calendar ages and no absolute age estimate. Regression in this situation is called errors-in-variables since we have errors/uncertainties in both the calendar age and radiocarbon determination variables. For these observations we therefore observe pairs ![]() ${\left\{ {({F_i},{T_i})} \right\}_{i \in {\cal I}}}$ where:

${\left\{ {({F_i},{T_i})} \right\}_{i \in {\cal I}}}$ where:

Here ![]() $f( \cdot )$ is our calibration curve of interest in the F 14C domain;

$f( \cdot )$ is our calibration curve of interest in the F 14C domain; ![]() ${\varepsilon _i} \sim N(0,\sigma _i^2)$ independently; and

${\varepsilon _i} \sim N(0,\sigma _i^2)$ independently; and ![]() ${\psi _i}$ describes the uncertainty in our calendar age estimate. The form of this calendar age uncertainty varies between the datasets. While there is no restriction on the distribution of

${\psi _i}$ describes the uncertainty in our calendar age estimate. The form of this calendar age uncertainty varies between the datasets. While there is no restriction on the distribution of ![]() ${\psi _i}$ for our Bayesian spline approach, we model all these calendar age uncertainties as normally distributed but with appropriate covariances. For some sets they are considered independent, e.g. corals dated by U-Th; while for others, e.g. floating tree-ring sequences and those records dated by varve counting or palaeoclimate tie-pointing (Heaton et al. Reference Heaton, Bard and Hughen2013), there is considerable dependence between the observations. For more detail on the various covariance structures in the calendar age uncertainties see Niu et al. (Reference Niu, Heaton, Blackwell and Buck2013).

${\psi _i}$ for our Bayesian spline approach, we model all these calendar age uncertainties as normally distributed but with appropriate covariances. For some sets they are considered independent, e.g. corals dated by U-Th; while for others, e.g. floating tree-ring sequences and those records dated by varve counting or palaeoclimate tie-pointing (Heaton et al. Reference Heaton, Bard and Hughen2013), there is considerable dependence between the observations. For more detail on the various covariance structures in the calendar age uncertainties see Niu et al. (Reference Niu, Heaton, Blackwell and Buck2013).

3.2.2 Importance of Recognizing Calendar Age Uncertainty

Incorporation of calendar age uncertainty in the construction of the IntCal calibration curves has occurred since IntCal04 (Reimer et al. Reference Reimer, Baillie, Bard, Bayliss, Beck, Bertrand, Blackwell, Buck, Burr, Cutler, Damon, Edwards, Fairbanks, Friedrich, Guilderson, Hogg, Hughen, Kromer, Manning, Bronk Ramsey, Reimer, Remmele, Southon, Stuiver, Talamo, Taylor, van der Plicht and Weyhenmeyer2004a) and is key for reliable curve construction. It was therefore crucial to retain within the new methodology. A range of statistical methods have been developed to deal with errors-in-variables regression. From the frequentist, non-Bayesian, perspective Fan and Truong (Reference Fan and Truong1993) introduced a kernel-based approach which achieves global consistency; Cook and Stefanski (Reference Cook and Stefanski1994) proposed a more general approach known as SIMEX. These approaches were not however considered suitable for our application due to the genuine prior information we have on certain aspects of the data; the wider, almost universal, use of Bayesian methodology within radiocarbon calibration; and the independent interest in several aspects of the calibration data which could be provided more simply through a Bayesian method. We therefore began the development of our method using the Bayesian splines of Berry et al. (Reference Berry, Carroll and Ruppert2002).

The effect of not recognizing calendar age uncertainty, where it is present, in regression is quite varied. In the case that the calendar age uncertainties are entirely independent of one another, such as U-Th dating, not recognizing calendar age uncertainty will typically result in curve estimates that are overly smooth and do not attain the peaks and troughs of the true underlying function. See Samworth and Poore (Reference Samworth and Poore2005) for an illustrative case study. However, in the case of IntCal, the situation is made more complicated by the shared dependence of the calendar age estimates within particular datasets. Specifically, the entire timescale for, e.g. Hulu Cave, is not the same as the Suigetsu or Cariaco timescale. As a consequence, all of these individual datasets may show the same overall features but at slightly different times. We require our method to recognize that these different timescales may need to be aligned, within their respective uncertainties, to keep these shared features. This requires us to shift multiple ages jointly by stretching/squashing the timescales accordingly. A failure to adapt to this joint calendar age uncertainty can give curve estimates which either introduce spurious wiggles as the curve flips between the different datasets or lose major features entirely.

Illustration

We provide in Figure 3 an illustrative example of the need to incorporate calendar age uncertainty. For simplicity, in this example, we fit and model our spline in the same domain. We consider a straightforward underlying function which we wish to reconstruct from 100 noisy observations ![]() $\left\{ {({Y_i},{T_i})} \right\}_{i = 1}^{100}$

$\left\{ {({Y_i},{T_i})} \right\}_{i = 1}^{100}$

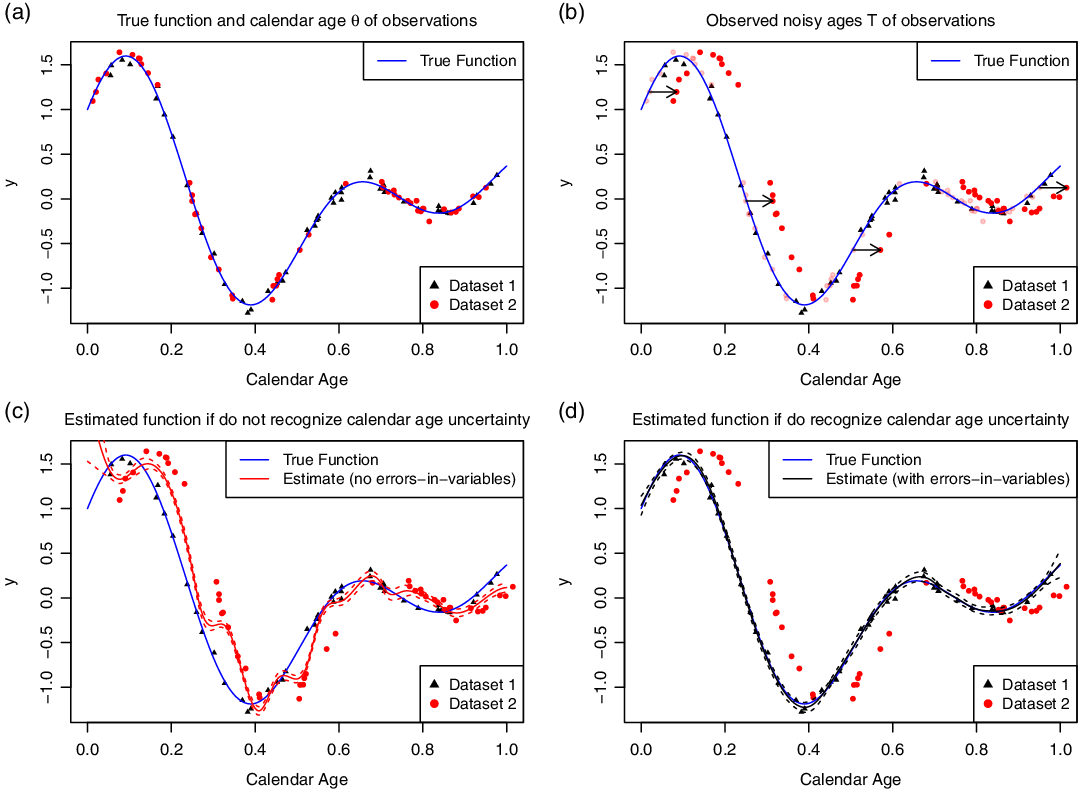

Figure 3 The importance of recognizing calendar age uncertainty (and correctly representing covariance within that uncertainty) when constructing a curve based on data arising from records with different observed timescales. Panel (a) shows the true, underlying, calendar ages of the data in two different records; while panel (b) shows a joint shift in the observed calendar ages within record 2. In such a case that observed timescales in two records are offset from one another, if we ignore this calendar age uncertainty then our spline estimate will introduce spurious variation as it flips between the records as in panel (c). Conversely if we incorporate such calendar age uncertainty and accurately represent it then we can still recover the underlying function accurately, as shown in (d).

where our underlying ![]() ${\theta _i} \sim Beta(1.1,1.1)$ and observed

${\theta _i} \sim Beta(1.1,1.1)$ and observed ![]() ${Y_i} \sim N(\,f({\theta _i}{),0.05^2})$ for

${Y_i} \sim N(\,f({\theta _i}{),0.05^2})$ for ![]() $i = 1, \ldots ,100$. Further, let us assume that these observations arise from two different sediment cores, 50 from core 1 (shown as black triangles) and 50 from core 2 (shown as red dots) as seen in panel (a). Within core 1, the calendar ages are known absolutely. However, within core 2 the observed timescale is somewhat shifted/biased so that when we observe the calendar ages

$i = 1, \ldots ,100$. Further, let us assume that these observations arise from two different sediment cores, 50 from core 1 (shown as black triangles) and 50 from core 2 (shown as red dots) as seen in panel (a). Within core 1, the calendar ages are known absolutely. However, within core 2 the observed timescale is somewhat shifted/biased so that when we observe the calendar ages ![]() $T$ in this core they all share the same joint shift from their true values, i.e.

$T$ in this core they all share the same joint shift from their true values, i.e.

where ![]() $\psi \sim N{(0,0.1^2})$. The observed pairs

$\psi \sim N{(0,0.1^2})$. The observed pairs ![]() $\left\{ {({Y_i},{T_i})} \right\}_{i = 1}^{100}$ are presented in panel (b) showing the shift in timescale within core 2. If we attempt to reconstruct the function using splines based upon our observed

$\left\{ {({Y_i},{T_i})} \right\}_{i = 1}^{100}$ are presented in panel (b) showing the shift in timescale within core 2. If we attempt to reconstruct the function using splines based upon our observed ![]() $\left\{ {({Y_i},{T_i})} \right\}_{i = 1}^{100}$ without recognizing the calendar age uncertainty, i.e. assuming both cores are on the same timescale, then we obtain the estimate in the panel (c). The estimate is spuriously variable as the spline will try to pass near all of the data on their non-comparable timescales. Conversely, if we recognize that the timescale in core 2 may need to be shifted onto the timescale of core 1, we obtain the estimate shown in panel (d). If we inform our Bayesian method that the calendar age observations in core 2 are subject to uncertainty, it will estimate the size of the joint shift needed to register core 2 onto the true timescale of core 1 and simultaneously reconstruct the true underlying function.

$\left\{ {({Y_i},{T_i})} \right\}_{i = 1}^{100}$ without recognizing the calendar age uncertainty, i.e. assuming both cores are on the same timescale, then we obtain the estimate in the panel (c). The estimate is spuriously variable as the spline will try to pass near all of the data on their non-comparable timescales. Conversely, if we recognize that the timescale in core 2 may need to be shifted onto the timescale of core 1, we obtain the estimate shown in panel (d). If we inform our Bayesian method that the calendar age observations in core 2 are subject to uncertainty, it will estimate the size of the joint shift needed to register core 2 onto the true timescale of core 1 and simultaneously reconstruct the true underlying function.

Implications for Visually Assessing Curve Fit

While it is important to incorporate errors-in-variables in curve construction, and accurately represent any dependence in the calendar age uncertainties, this does cause some difficulty in visually assessing the quality of fit between the raw constituent data and the final IntCal curve. Since the proposed method can shift the calendar ages of the observed data left and right within their uncertainties as described, one cannot estimate the final curve’s goodness-of-fit by eye using only the radiocarbon age axis if the raw data are only plotted at their initial, observed calendar ages. The method will have tried to align shared features even if they occur at somewhat different observed times within the different sets. This should be taken into consideration when viewing the final curve against the raw data. We provide the posterior calendar age estimates for the true calendar ages ![]() ${\theta _i}$ for all the data. The difficulty of assessing the fit of the curve by eye is further compounded by the offsets in

${\theta _i}$ for all the data. The difficulty of assessing the fit of the curve by eye is further compounded by the offsets in ![]() $^{\rm14}{\rm{C}}$ described in Section 4.4, in particular the marine reservoir ages which vary significantly over time and so will change as the MCMC updates the calendar ages of the data.

$^{\rm14}{\rm{C}}$ described in Section 4.4, in particular the marine reservoir ages which vary significantly over time and so will change as the MCMC updates the calendar ages of the data.

3.3 Predictive Intervals and Over-Dispersion

3.3.1 Background and Motivation

As well as creating a curve which has the correct posterior mean, it is key to make sure we provide appropriate intervals on the curve to ensure that, when used for calibration, the calendar age estimates produced are not over- or under-precise. This becomes particularly relevant for IntCal20 due to the large increase in data available to create the curve, and also the increased measurement precision provided by current laboratories. In addition to the laboratory reported uncertainty, there are a wide range of possible further sources of variation in the recorded ![]() $^{\rm14}{\rm{C}}$ within objects of the same age, for example determinations come from different locations; have different local environments; tree rings may be of different species; and have different periods of growth due to local weather and so may have differing elements of wood from late/early growth. Even when the same sample is measured in different laboratories, we have evidence of a greater level of observation spread (i.e. over-dispersion) in the

$^{\rm14}{\rm{C}}$ within objects of the same age, for example determinations come from different locations; have different local environments; tree rings may be of different species; and have different periods of growth due to local weather and so may have differing elements of wood from late/early growth. Even when the same sample is measured in different laboratories, we have evidence of a greater level of observation spread (i.e. over-dispersion) in the ![]() $^{\rm14}{\rm{C}}$ measurements within objects from the same calendar year than the laboratory reported uncertainties would support (see e.g. Scott et al. Reference Scott, Naysmith and Cook2017). Recognizing this potential over-dispersion, whatever its cause, is important both for curve estimation and resultant calibration for IntCal20. Specifically, if there is more variation in determinations from the same calendar year than the uncertainties reported by the laboratory, we need to make sure we incorporate that in our modeling, and also recognize its existence in the objects users will then calibrate against the IntCal20 curve.

$^{\rm14}{\rm{C}}$ measurements within objects from the same calendar year than the laboratory reported uncertainties would support (see e.g. Scott et al. Reference Scott, Naysmith and Cook2017). Recognizing this potential over-dispersion, whatever its cause, is important both for curve estimation and resultant calibration for IntCal20. Specifically, if there is more variation in determinations from the same calendar year than the uncertainties reported by the laboratory, we need to make sure we incorporate that in our modeling, and also recognize its existence in the objects users will then calibrate against the IntCal20 curve.

We achieve this through the inclusion of a term to quantify the level of over-dispersion which we adaptively estimate, based on the high-quality and dense IntCal data, within our curve construction. This ensures that our IntCal20 curve does not become over-precise as an estimate of the Northern Hemispheric average. However, this alone is not sufficient for calibration since any additional sources of variation seen within the IntCal data are also likely to be present in uncalibrated determinations. We must therefore propagate this over-dispersion through to the calibration process itself. This is achieved using predictive curve intervals which incorporate not only uncertainty in the Northern Hemispheric average atmospheric curve but also the potential additional sources of variation seen in individual ![]() $^{\rm14}{\rm{C}}$ determinations. Importantly, if no such additional variability exists then our model will estimate this appropriately, however if such additional sources of variability do seem present in the calibration data, our method will also recognize this and adapt accordingly. Without such an over-dispersion term then, as we get more data, the calibration curve will have intervals that become narrower and narrower even if the underlying data suggests considerably higher variability. Eventually, this would mean that the data entering the curve itself would potentially not calibrate to their known ages.

$^{\rm14}{\rm{C}}$ determinations. Importantly, if no such additional variability exists then our model will estimate this appropriately, however if such additional sources of variability do seem present in the calibration data, our method will also recognize this and adapt accordingly. Without such an over-dispersion term then, as we get more data, the calibration curve will have intervals that become narrower and narrower even if the underlying data suggests considerably higher variability. Eventually, this would mean that the data entering the curve itself would potentially not calibrate to their known ages.

Such an idea of irreducible uncertainty was introduced in Niu et al. (Reference Niu, Heaton, Blackwell and Buck2013) but here we extend the idea further and generate predictive curve intervals which are more relevant for calibration users. Importantly, this does not however mean that we produce a calibration tool that any measurement (no matter its quality) can be calibrated against—we wish to maintain a calibration curve which has basic minima for data quality for both what goes into its construction and also what can be reliably calibrated using it.

3.3.2 The Over-Dispersion Model: Additive Errors Scaling with  $\sqrt {{F^{14}}{\rm{C}}}$

$\sqrt {{F^{14}}{\rm{C}}}$

The basic tree-ring model for an annual measurement assumes that a determination of calendar age ![]() $\theta$ arises from a hemispherically uniform atmospheric level of

$\theta$ arises from a hemispherically uniform atmospheric level of ![]() $^{\rm14}{\rm{C}}$ shared by all determinations of the same calendar age. Under this model, the only uncertainty is that reported by the laboratory so that, in the F 14C domain, the observed value is

$^{\rm14}{\rm{C}}$ shared by all determinations of the same calendar age. Under this model, the only uncertainty is that reported by the laboratory so that, in the F 14C domain, the observed value is

where ![]() ${\theta _i}$ is the year the determination represents; and

${\theta _i}$ is the year the determination represents; and ![]() ${\varepsilon _i} \sim N(0,\sigma _i^2)$ with

${\varepsilon _i} \sim N(0,\sigma _i^2)$ with ![]() ${\sigma _i}$ the uncertainty reported by the laboratory. The function

${\sigma _i}$ the uncertainty reported by the laboratory. The function ![]() $f(\theta )$ is our unique estimate of the hemispheric level of

$f(\theta )$ is our unique estimate of the hemispheric level of ![]() ${F^{14}}{\rm{C}}$ present in the atmosphere at calendar age

${F^{14}}{\rm{C}}$ present in the atmosphere at calendar age ![]() $\theta$.

$\theta$.

Currently it is not feasible to identify the specific sources that may potentially contribute extra variability beyond that reported by the laboratory (e.g. regional, species, growing season differences) and so we aim to cover them all in a unified approach. We therefore modify the above model to allow any determination to have an additional and independent source of potential variability ![]() ${\eta _i}$ beyond that reported by the laboratory, i.e.

${\eta _i}$ beyond that reported by the laboratory, i.e.

where ![]() ${\eta _i} \sim N(0,\tau _i^2)$ are independent random effects with unknown variance

${\eta _i} \sim N(0,\tau _i^2)$ are independent random effects with unknown variance ![]() $\tau _i^2$. To create the curve we simultaneously estimate both

$\tau _i^2$. To create the curve we simultaneously estimate both ![]() ${\tau _i}$, the level of over-dispersion, and the calibration curve

${\tau _i}$, the level of over-dispersion, and the calibration curve ![]() $f( \cdot )$.

$f( \cdot )$.

After investigation of several options, see Section 5.1 and the Supplementary Information, we model the level of over-dispersion (i.e. the level of any additional variability) to scale proportionally with the underlying value of ![]() $\sqrt {f(\theta )}$ i.e.

$\sqrt {f(\theta )}$ i.e. ![]() ${\tau _i} = \tau \sqrt {f({\theta _i})}$ so that

${\tau _i} = \tau \sqrt {f({\theta _i})}$ so that ![]() ${\eta _i} \sim N(0,{\tau ^2}f(\theta ))$. We choose a prior for the constant of proportionality

${\eta _i} \sim N(0,{\tau ^2}f(\theta ))$. We choose a prior for the constant of proportionality ![]() $\tau$ based upon data taken from the SIRI inter-comparison project (Scott et al. Reference Scott, Naysmith and Cook2017), and update this prior within curve construction based upon the IntCal data. The information provided by the very large volume of IntCal data dominates the SIRI prior and so the posterior estimate for the level of over-dispersion is primarily based upon the high-quality IntCal data.

$\tau$ based upon data taken from the SIRI inter-comparison project (Scott et al. Reference Scott, Naysmith and Cook2017), and update this prior within curve construction based upon the IntCal data. The information provided by the very large volume of IntCal data dominates the SIRI prior and so the posterior estimate for the level of over-dispersion is primarily based upon the high-quality IntCal data.

3.3.3 Implications for Calibration

A user seeking to calibrate a high-quality 14C determination against the IntCal20 curve is likely to have a measurement that has been subject to similar additional sources of potential variation to whatever is seen in the IntCal data, and so have a similar level of over-dispersion. However, such a user typically has no way of assessing this over-dispersion themselves. Consequently, they should calibrate their determination using predictive curve estimates which additionally account for potential over-dispersion in their own determination. These predictive estimates are based upon the posterior of

where ![]() $\eta (\theta ) \sim N(0,{\tau ^2}f(\theta ))$ and

$\eta (\theta ) \sim N(0,{\tau ^2}f(\theta ))$ and ![]() $\tau$ is the posterior estimate of over-dispersion based upon the IntCal data. For IntCal20 we therefore report this predictive interval since it is more relevant for calibration. It is slightly wider than the corresponding credible interval for

$\tau$ is the posterior estimate of over-dispersion based upon the IntCal data. For IntCal20 we therefore report this predictive interval since it is more relevant for calibration. It is slightly wider than the corresponding credible interval for ![]() $f$ but more likely to give accurate calibrated dates.

$f$ but more likely to give accurate calibrated dates.

Note that the level of over-dispersion, and hence the predictive intervals, we incorporate into the IntCal20 curve is based upon the high-quality, and screened, IntCal database. If a new uncalibrated ![]() $^{\rm14}{\rm{C}}$ determination has additional sources of variability beyond those present in the IntCal tree-ring database (e.g. tree-ring species or locations not represented in IntCal, or a non-tree-ring sample) then this may mean that the level of over-dispersion for that uncalibrated determination is higher than that incorporated within the IntCal prediction intervals. This would result in a potentially over-precise calibrated age estimate. Users are advised to be cautious in such circumstances.

$^{\rm14}{\rm{C}}$ determination has additional sources of variability beyond those present in the IntCal tree-ring database (e.g. tree-ring species or locations not represented in IntCal, or a non-tree-ring sample) then this may mean that the level of over-dispersion for that uncalibrated determination is higher than that incorporated within the IntCal prediction intervals. This would result in a potentially over-precise calibrated age estimate. Users are advised to be cautious in such circumstances.

4 Creating the curve

As in previous versions of IntCal, the IntCal20 curve itself is created in two linked sections. Firstly we create the more recent part of the curve (extending from 0 cal kBP back to approximately 14 cal kBP) which is predominantly based upon dendrodated tree-ring determinations. Secondly we create the older part of the curve (from approximately 14 cal kBP back to 55 cal kBP) which is based upon a wider range of material, e.g. corals, macrofossils, forams, speleothems as well as five floating tree-ring chronologies, that are of uncertain calendar ages. Furthermore, these older ![]() $^{\rm14}{\rm{C}}$ determinations are often not direct atmospheric measurements and hence have marine reservoir ages or dead carbon fractions. We therefore split our technical description of the approach accordingly. The two curve sections are stitched together to ensure smoothness and continuity through appropriate design of spline basis and conditioning the older part of the curve on the, already estimated, more recent section. This means that we can still produce sets of complete curve realizations from 0–55 cal kBP. Future work will aim to adapt the approach into a single step, updating the entire 0–55 cal kBP range as one.

$^{\rm14}{\rm{C}}$ determinations are often not direct atmospheric measurements and hence have marine reservoir ages or dead carbon fractions. We therefore split our technical description of the approach accordingly. The two curve sections are stitched together to ensure smoothness and continuity through appropriate design of spline basis and conditioning the older part of the curve on the, already estimated, more recent section. This means that we can still produce sets of complete curve realizations from 0–55 cal kBP. Future work will aim to adapt the approach into a single step, updating the entire 0–55 cal kBP range as one.

4.1 Notation

Calibration Curve by Domain

We can represent the atmospheric history of ![]() $^{\rm14}{\rm{C}}$, as a function of calendar age

$^{\rm14}{\rm{C}}$, as a function of calendar age ![]() $\theta$, in three domains equivalently:

$\theta$, in three domains equivalently: ![]() ${\Delta ^{14}}{\rm{C}}$,

${\Delta ^{14}}{\rm{C}}$, ![]() ${F^{14}}{\rm{C}}$, and radiocarbon age. For any proposed history, i.e. calibration curve, we switch between these domains as appropriate within our statistical methodology. Let us denote:

${F^{14}}{\rm{C}}$, and radiocarbon age. For any proposed history, i.e. calibration curve, we switch between these domains as appropriate within our statistical methodology. Let us denote:

$g(\theta )$—the 14C calibration curve represented in Δ 14C space, i.e. the level of

$g(\theta )$—the 14C calibration curve represented in Δ 14C space, i.e. the level of  ${\Delta ^{14}}{\rm{C}}$ at

${\Delta ^{14}}{\rm{C}}$ at  $\theta$ cal BP. We model

$\theta$ cal BP. We model  $g(\theta )$ in our spline basis and penalize roughness of the curve in this domain.

$g(\theta )$ in our spline basis and penalize roughness of the curve in this domain. $f(\theta )$—the

$f(\theta )$—the  $^{\rm14}{\rm{C}}$ calibration curve represented in

$^{\rm14}{\rm{C}}$ calibration curve represented in  ${F^{14}}{\rm{C}}$ space. Given

${F^{14}}{\rm{C}}$ space. Given  $g(\theta )$ then

$g(\theta )$ then $$f(\theta ) = \left( {{1 \over {1000}}g(\theta ) + 1} \right){e^{ - \theta /8267}}.$$

$$f(\theta ) = \left( {{1 \over {1000}}g(\theta ) + 1} \right){e^{ - \theta /8267}}.$$It is this

${F^{14}}{\rm{C}}$ domain that is used to assess goodness-of-fit to the observed data.

${F^{14}}{\rm{C}}$ domain that is used to assess goodness-of-fit to the observed data.•

$h(\theta )$—the

$h(\theta )$—the  $^{\rm14}{\rm{C}}$ calibration curve represented in radiocarbon age:

$^{\rm14}{\rm{C}}$ calibration curve represented in radiocarbon age: $$h(\theta ) = - 8033\ln f(\theta ).$$

$$h(\theta ) = - 8033\ln f(\theta ).$$This is the domain in which the calibration curve is plotted in the main IntCal20 paper (Reimer et al. Reference Reimer, Austin, Bard, Bayliss, Blackwell, Bronk Ramsey, Butzin, Cheng, Edwards, Friedrich, Grootes, Guilderson, Hajdas, Heaton, Hogg, Hughen, Kromer, Manning, Muscheler, Palmer, Pearson, van der Plicht, Reimer, Richards, Scott, Southon, Turney, Wacker, Adolphi, Büntgen, Capano, Fahrni, Fogtmann-Schulz, Friedrich, Köhler, Kudsk, Miyake, Olsen, Reinig, Sakamoto, Sookdeo and Talamo2020 in this issue).

Observed Data

We consider all of our observations in ![]() ${F^{14}}{\rm{C}}$ since, as explained in Section 3.1, in this domain our 14C measurement uncertainties are symmetric. We define:

${F^{14}}{\rm{C}}$ since, as explained in Section 3.1, in this domain our 14C measurement uncertainties are symmetric. We define:

${F_i}$—the observed F 14C value of the data used to create the IntCal20 curve.

${F_i}$—the observed F 14C value of the data used to create the IntCal20 curve. ${\sigma _i}$—the laboratory-reported uncertainty on the observed

${\sigma _i}$—the laboratory-reported uncertainty on the observed  ${F^{14}}{\rm{C}}$.

${F^{14}}{\rm{C}}$. ${m_i}$—the number of annual years of metabolization that a determination represents. The determination is considered to represent the mean of this block.

${m_i}$—the number of annual years of metabolization that a determination represents. The determination is considered to represent the mean of this block. ${T_i}$—the observed calendar age of a determination, which could either be the true calendar age (in the case of absolutely dendrodated determinations) or an estimate with uncertainty (in the case of e.g. corals, varves, speleothems).

${T_i}$—the observed calendar age of a determination, which could either be the true calendar age (in the case of absolutely dendrodated determinations) or an estimate with uncertainty (in the case of e.g. corals, varves, speleothems).

Model Parameters

Within the model we update:

${\beta _j}$—the spline coefficients which describe the calibration curve.

${\beta _j}$—the spline coefficients which describe the calibration curve. $\lambda$—the smoothing parameter for the spline estimate.

$\lambda$—the smoothing parameter for the spline estimate. ${\theta _i}$—the true calendar age of a determination or, in the case of a block-average determination, the most recent year of metabolization included in the block.

${\theta _i}$—the true calendar age of a determination or, in the case of a block-average determination, the most recent year of metabolization included in the block. $\tau$—the level of over-dispersion in the observed

$\tau$—the level of over-dispersion in the observed  ${F^{14}}{\rm{C}}$ under a model whereby the potential additional variability for determination

${F^{14}}{\rm{C}}$ under a model whereby the potential additional variability for determination  ${F_i}$ is

${F_i}$ is  ${\eta _i} \sim N(0,{\tau ^2}f({\theta _i}))$.