Paradigmatic advances in machine learning techniques have greatly expanded the capabilities of artificial intelligence (AI). These systems mimic functions typically associated with humans and augment them at scale via software, including not only vision and speech but also language processing, learning, and problem solving. On the basis of these burgeoning capabilities, AIs can exercise an ever-increasing degree of autonomy in decision-making in crucial spheres, including government (Coglianese & Lehr, 2016), health care (Norgeot, Glicksberg, & Butte, 2019), management (Kellogg, Valentine, & Christin, 2020), and policing (Kaufmann, Egbert, & Leese, 2019). Despite their considerable promise, AI systems can fail, as humans can, to achieve their intended goals, either because the training data they use may be biased or because their recommendations, decisions, and actions yield unintended and negative consequences (Crawford & Calo, 2016; Martin, 2019; Mittelstadt, Allo, Taddeo, Wachter, & Floridi, 2016). Through such failures, AIs can have wide-ranging adverse effects on public goods such as justice, equity, and privacy, potentially even undermining the processes of fair democratic elections (Calo, 2017; Eubanks, 2018; Tutt, 2016; Zarsky, 2016). Most governments have therefore now committed to addressing innovations in AI as global challenges to the safeguarding of public goods (Cath, Wachter, Mittelstadt, Taddeo, & Floridi, 2018).

Many of the challenges entailed in establishing responsible innovation in AI are not entirely new, as they closely resemble issues in other fields, such as bioethics (Floridi et al., 2018). However, the literature on responsible AI has identified, and continues to discuss, the unique role of epistemic challenges ensuing from the poor “traceability” (Mittelstadt et al., 2016) and “explicability” (Floridi et al., 2018) of “opaque” (Burrell, 2016) AI systems. Broadly speaking, such epistemic challenges arise from the self-learning capacities of algorithms and the autonomy that these capacities confer on AI systems. This can make it difficult even for AI developers themselves to forecast or reconstruct how data inputs are handled within such systems, how decisions are made, and how these decisions affect their domains of application in the long term (Mittelstadt et al., 2016). This in turn significantly limits the effectiveness of government regulation in protecting societies and the environment from the harmful impacts of AI (Buhmann & Fieseler, 2021b; Morley, Elhalal, Garcia, Kinsey, Mökander, & Floridi, 2021). Attempts to address this problem have led to a surge in guidelines for “ethical AI” over the past five years, issued by governments, nongovernmental organizations, and corporations (Schiff, Borenstein, Biddle, & Laas, 2021).
Recent scholarship has endeavored to synthesize these guidelines within a meta-framework of principles for ethical AI (Floridi & Cowls, 2019) and to move beyond principles (or “what” questions) to the creation of translational tools (or “how” questions) for tackling ethical challenges in practice, that is, within the process of AI design (Morley, Floridi, Kinsey, & Elhalal, 2020). To date, this discussion has been directed mostly at AI practitioners, such as designers, engineers, and controllers, and has focused on making principles applicable for diagnosing ethical issues in specific microcontexts. Less attention has so far been paid to linking such principles and translational tools with questions of societal and corporate governance (Morley et al., 2021). Most principles and translational tools currently being developed envisage the active and collaborative involvement of the AI industry, specifically the organizations that develop and employ semiautonomous systems, together with actors from the public, private, and civil society sectors, as a means of overcoming the limitations of government regulation (Buhmann & Fieseler, 2021b; Buhmann, Paßmann, & Fieseler, 2020; Morley et al., 2020; Rahwan, 2018; Veale & Binns, 2017). Yet which specific actors to involve in such solutions, and how precisely to involve them, is rarely elaborated in detail.
This raises the question of what role actors from within the AI industry should play in contributing to the governance of responsible AI innovation, specifically in addressing both the need for the collaborative involvement of the AI industry and the need to tackle the epistemic challenges pertaining to the governance of AI. This question highlights several further open questions in the ethical AI and AI governance literatures.

The first of these outstanding questions is how, and under which conditions, societal and corporate governance structures can gainfully interact with translational tools for ethical AI (and with the principles on which these tools are based). As Morley et al. (2021: 241) observed, “there is, as of yet, little evidence that the use of any of these translational tools/methods has an impact on the governability of algorithmic systems.” This unresolved question highlights the fact that the governability of systems is ultimately decided in the context of concrete models of governance. This in turn implies that any further discussion of tools for ethical AI needs to address their application at the levels of both system design and governance, that is, to clarify not only their “technical implementation” along the AI development pipeline but also their “administrative implementation” within mechanisms of societal and organizational decision-making.

A second question is which specific form of governance would best help actors in the AI industry to identify and implement legitimate approaches to responsible innovation while also allowing for and fostering technically and economically efficient processes of AI innovation. Addressing this question calls for the development of steering mechanisms that would allow the AI industry to innovate while taking societal needs and fears into due consideration. For example, such consideration would involve balancing the potential of AI systems for accuracy (including their power to do good) against the need for these systems to be accountable (Goodman & Flaxman, 2017).

A third issue to be addressed is that implementing ethical AI entails a realistic appraisal of the prospects for, and the challenges involved in, bringing about the active and collaborative engagement of the AI industry in the process of responsible innovation. This in turn calls for problematizing the power imbalances between different stakeholders, including the epistemic challenges and knowledge inequalities between AI experts and the general public, and for questioning the arguably ambivalent role of the AI industry as a gatekeeper of such endeavors.

Fourth and finally, and related to all the preceding questions: what are the most appropriate political visions and values for the governance of responsible AI innovation? This question highlights the need to feed macro-ethical considerations into current micro-ethical discussions that focus on the design specifications of algorithms and the AI development process. Although this macro–micro connection is currently being explored in relation to data ethics more broadly (Taddeo & Floridi, 2016; Tsamados et al., 2021), it has rarely been examined from a business ethics perspective (Häußermann & Lütge, 2021).

As Whittaker et al. (2018: 4) succinctly concluded in their AI Now Report of 2018, “the AI industry urgently needs new approaches to governance.” Responding to this call and to the questions outlined earlier, we develop our argument as follows. First, building on the work of Mittelstadt et al. (2016) and Tsamados et al. (2021), we identify three types of ethical concerns specific to AI innovation: evidence, outcome, and epistemic concerns. We then interrelate these concerns with a recent normative concept of responsible innovation (Voegtlin & Scherer, 2017) to propose a new framework of responsibilities for innovation in AI. In developing this framework, we foreground the importance of facilitating governance that addresses epistemic concerns as a meta-responsibility. Second, we discuss the rationale and possibilities for involving the AI industry in broader collective efforts to enact such governance. Here we argue from the perspective of political corporate social responsibility (PCSR) (Scherer & Palazzo, 2007, 2011), focusing on the potential of deliberation for addressing questions of legitimation, contributions to collective goals, and organizational learning, and we outline the challenges in applying this perspective to responsible AI innovation. Subsequently, we set out the prospects of a “distributed deliberation” approach as a means of overcoming these challenges. We elaborate this approach by proposing a model of distributed deliberation for responsible innovation in AI, identifying different venues of deliberation and specifying the role and responsibilities of the AI industry in these different fora.
Finally, we discuss prospects and challenges of the proposed approach and model and highlight avenues for further research on deliberation and the governance of responsible innovation in AI.

TOWARD A FRAMEWORK OF RESPONSIBILITIES FOR THE INNOVATION OF ARTIFICIAL INTELLIGENCE

Three Sets of Challenges for Responsible Innovation in AI

Broadly speaking, responsible innovation refers to the exercise of collective care for the future by way of stewardship of innovation in the present (Owen, Bessant, & Heintz, 2013: 36). Such stewardship calls for informed anticipation of key challenges and concerns regarding the purposes, processes, and outcomes of innovation (Barben, Fisher, Selin, & Guston, 2008; Stilgoe, Owen, & Macnaghten, 2013). From the ongoing debate on the ethics of AI and algorithms (Mittelstadt et al., 2016; Tsamados et al., 2021), three sets of challenges can be summarized (see also Buhmann et al., 2020).

Evidence concerns relate to the mechanisms by which self-learning systems transform massive quantities of data into “insights” that inform an AI system’s decisions, recommendations, and actions. Such concerns arise because AIs are designed to reach conclusions on the basis of probabilities rather than conclusive evidence of certain outcomes. These probabilities are derived from seemingly meaningful patterns detected within vast collections of data, often involving inferences of causality from mere correlations within such data. Moreover, the decisions reached by AIs may be based on misguided evidence, as when algorithmic conclusions rely on incomplete or incorrect data or when decisions are based on unethical or otherwise inadequate inputs (Mittelstadt et al., 2016; Tsamados et al., 2021; Veale & Binns, 2017). In short, flawed AI decisions can arise both from poor-quality data and from (intended or unintended) properties of data sets, models, or entire systems.

Outcome concerns relate to the potentially adverse consequences of decisions reached by AI systems, including both directly and indirectly harmful outcomes. Directly harmful outcomes may take the form of discrimination against certain entities or groups of people, for example, when data-driven decision-support systems perpetuate existing injustices related to ethnicity or gender, either because these systems are biased in their design or because human biases are picked up in the training data used for algorithms (Tufekci, 2015). Poorly designed AI may further generate feedback loops that reinforce inequalities, as in predictive policing (Kaufmann, Egbert, & Leese, 2019) or in predictions of creditworthiness that make it difficult for individuals to escape vicious cycles of poverty (O’Neill, 2014). Indirectly harmful outcomes of AIs can arise from the application of AI technologies more generally, often with long-term consequences, such as large-scale technological unemployment (Korinek & Stiglitz, 2018). Such outcomes can also take the form of so-called latent, secondary, and transformative effects (Mittelstadt et al., 2016) that occur when AI outcomes change the ways that people perceive situations. Examples include profiling algorithms that powerfully ontologize the world in particular ways and trigger new patterns of behavior (Pasquale, 2015), as well as content curation and news recommendation algorithms that lead people to be unwittingly socialized in “filter bubbles” (Berman & Katona, 2020).

Epistemic concerns relate to issues stemming from the “opacity” of AI (Burrell, 2016), including both the inscrutability of algorithmic inputs and their processing and the poor traceability of potentially latent and long-term harmful consequences of AIs.Footnote 1 These concerns arise when AIs are not readily open to explication and scrutiny and when the outcomes of their application cannot be related in any straightforward way to the vast sets of data on which AIs draw to reach their conclusions (Miller & Record, 2013). Moreover, harmful outcomes may be difficult to trace to a particular system’s operations because of the fluid and diffuse, that is, networked, ways in which such systems evolve (Sandvig, Hamilton, Karahalios, & Langbort, 2014). As software artifacts applied in data processing, AIs give rise to ethical issues that are incorporated into their very design as well as into the data used to test and train models (Mittelstadt et al., 2016). Epistemic concerns thus relate to all technical and sociotechnical factors that make it difficult to detect the potential harm caused by algorithms and to identify the causes of, and responsibilities for, such harm. Indeed, epistemic concerns are arguably what truly sets AI innovation apart from other ethically complex fields, such as biotechnology, and marks it as a “grand challenge” (Buhmann & Fieseler, 2021a), especially in the case of AIs that are “truly opaque” (Floridi et al., 2018), as we argue next.

Types of Epistemic Concerns about AI

Epistemic concerns can be further differentiated into three broad categories: strategic, expert, and true opacity. Strategic opacity refers to inscrutability and poor traceability resulting from deliberate intent on the part of the designers of a certain AI. In this case, algorithms that might otherwise be interpretable, and whose effects might be traceable, are intentionally kept secret, obfuscated, or “black-boxed.” Typical motives for strategic opacity include relatively noncontroversial aims, such as optimizing the functionality of an AI, ensuring its competitiveness, or protecting the privacy of user data (Ananny & Crawford, 2018; Glenn & Monteith, 2014; Leese, 2014; Stark & Fins, 2013), but also avoiding accountability and evading regulation (Ananny & Crawford, 2018; Martin, 2019).

Epistemic concerns regarding expert opacity relate to the issue of “popular comprehensibility.” Whereas the design, development, and outcomes of an AI may be explicable and interpretable among experts, these aspects of AI remain largely inscrutable, uninterpretable, and untraceable for laypeople. Expert opacity can thus be described in broad terms as arising at the intersection of system complexity and “technical literacy” (Burrell, 2016). Common themes identified in the literature on expert opacity include so-called epistemic vices, such as AI “gullibility,” “dogmatism,” and “automation bias” (Tsamados et al., 2021). Expert opacity can also arise inadvertently through attempts at disclosure and transparency that overwhelm citizens with the sheer volume and complexity of the information made available to them (Ananny & Crawford, 2018). Any intentional obfuscation through such disclosure “overload,” however, would instead constitute strategic opacity (Aïvodji, Arai, Fortineau, Gambs, Hara, & Tapp, 2019).

The third group of epistemic concerns relates to AI processes and outcomes that are difficult to scrutinize and trace not only for laypeople but also for AI experts and developers themselves. We refer to this as true opacity, which arises from the ways in which AIs are developed and evolve as emergent phenomena in practice: AIs and algorithms are not simply mathematical entities but also constitute “technology in action.” This perpetually evolving character of AI, together with the fact that AI developers often reuse and repurpose code from libraries, which widely disperses and thereby obscures responsibility for particular code and outcomes, leads even software designers to “regularly treat part of their work as black boxes” (Mittelstadt et al., 2016: 15). True opacity is especially problematic because it is not merely a matter of insufficient popular comprehension and technical literacy that could potentially be addressed directly through explanation and training. In the face of true opacity, AIs can only be understood by way of an iterative process, not merely by studying an AI system’s properties and mathematical ontology (Burrell, 2016).

True opacity can relate to evidence, outcomes, or both. At the level of evidence, for example, such opacity can take the form of uncertainty in identifying potentially problematic and sensitive variables used by AIs (Veale & Binns, 2017). At the level of outcomes, examples of true opacity include uncertainty about the latent impacts of AIs (Sandvig et al., 2014) and about the appropriateness of extant social evaluations of these impacts (Baum, 2020; Buhmann et al., 2020). In relation to evidence and outcomes in combination, true opacity can take the form of uncertainty about the allocation of responsibilities across vast and poorly transparent networks of human, software, and hybrid agents (Floridi, 2016) or uncertainty about norms that are incorporated within automated systems and thereby excluded from the sphere of social reflexivity (D’Agostino & Durante, 2018). As such, the term true opacity is not an ontologization but rather denotes phenomena that AI experts themselves refer to as “opaque.”

A Framework of Responsibilities for AI Innovation

For all the numerous guidelines on “ethical AI” published by governments, private corporations, and nongovernmental organizations, especially over the past five years (Schiff et al., 2021), the lack of consensus that still surrounds key areas threatens to delay the development of a clear model of governance to ensure the responsible design, development, and deployment of AI (Jobin, Ienca, & Vayena, 2019). More promisingly, some recent research has begun to offer meta-analyses, and agreement appears to be emerging around a five-dimensional framework of principles for ethical AI. This framework comprises beneficence (AI that benefits and respects people and the environment), nonmaleficence (AI that is cautious, robust, and secure), autonomy (AI that conserves and furthers human values), justice (AI that is fair), and explicability (AI that is explainable, comprehensible, and accountable) (Floridi & Cowls, 2019). Nevertheless, these efforts to attain one common framework still include some inconsistencies. It remains unclear, for instance, why certain aspects of justice (such as “avoiding unfairness”) or of autonomy (such as “protecting people’s power to decide”) are not simply subsumed within the dimension of nonmaleficence and why other aspects of justice (such as “promoting diversity and inclusion”) or autonomy (such as “furthering human autonomy”) are not positioned as elements of beneficence. Furthermore, and more importantly, “explicability” appears in this framework both as a stand-alone dimension and as a necessary element of all other dimensions, because explicability is required to enable AI beneficence, justice, and so on.
In its current version, moreover, the framework appears to replicate a central omission in AI ethics guidelines concerning the role of governance: as a recent study of twenty-two guidelines shows (Hagendorff, 2020), questions of governance are rarely addressed in codes and principles for ethical AI. The framework developed by Floridi and Cowls (2019), founded on a meta-review of such guidelines, likewise falls short of interrelating principles for ethical AI with principles for governance. Governance, however, is key to responsible processes of innovation (Jordan, 2008).

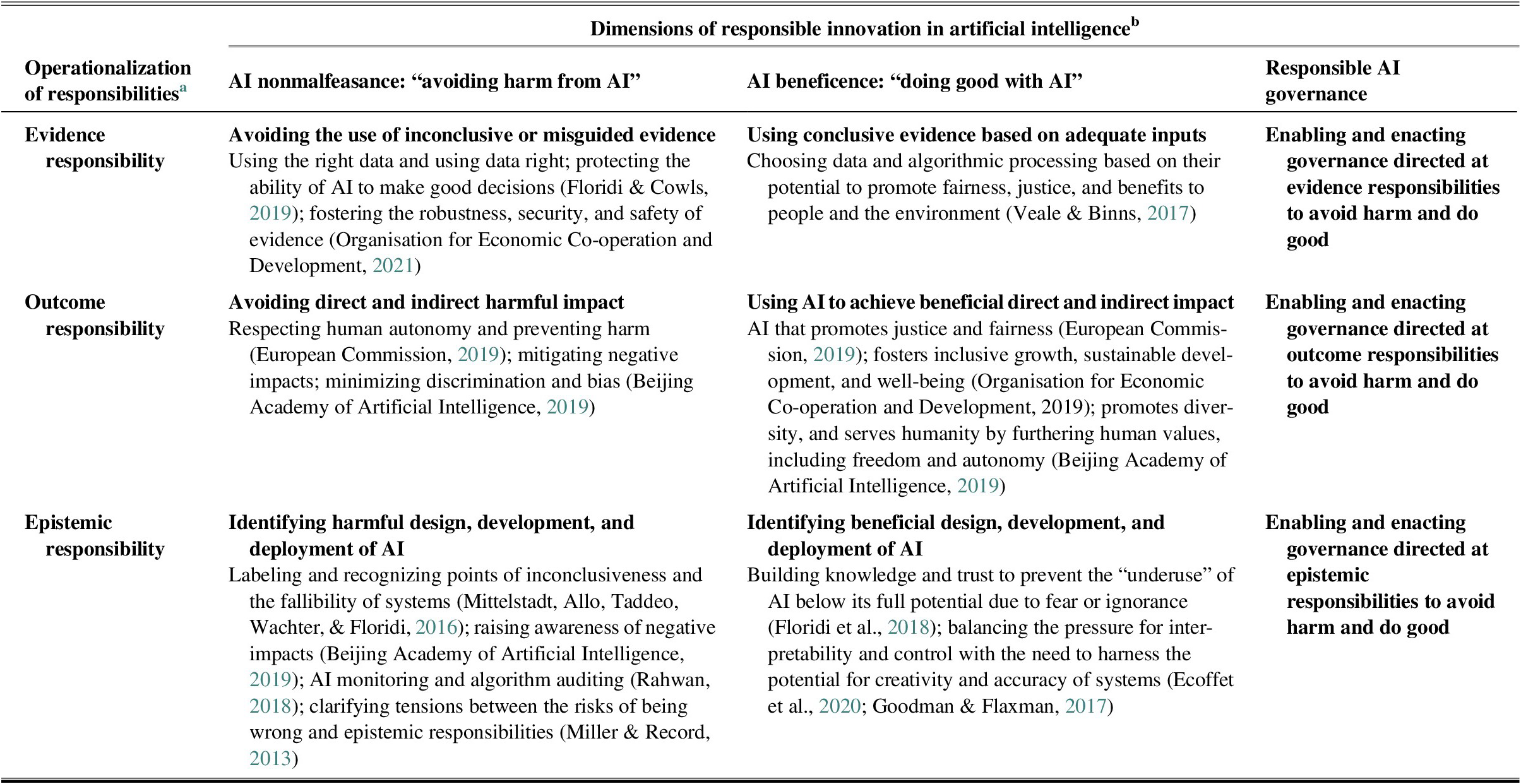

To address these issues, we suggest working toward a framework of responsibilities that interrelates the three sets of challenges reviewed earlier with a normative concept of responsible innovation (Voegtlin & Scherer, 2017), which involves three basic types of responsibilities: 1) responsibilities to do no harm, 2) responsibilities to do good, and 3) responsibilities for governance that enables the first two. This three-dimensional setup has recently been applied to principles in ethical AI (Buhmann & Fieseler, 2021b); it closely corresponds to the basic distinction between AI risks and opportunities used earlier by Floridi et al. (2018) and, more importantly, adds the key dimension of governance. We thus propose a matrix of three responsibility dimensions, each operationalized through three constitutive responsibilities, one for each of the challenges reviewed earlier. (See Table 1 for an overview with illustrative examples from the current AI ethics literature.)

Table 1: A Framework of Responsibilities for the Innovation of Artificial Intelligence

a Based on three sets of challenges for responsible innovation in AI. b Adapted from Voegtlin and Scherer (2017) and Floridi and Cowls (2019).

The dimension of AI nonmaleficence (avoiding harm from AI) refers to responsibilities for managing risks and controlling potentially harmful consequences. These include the evidence responsibility for avoiding harm by using the right data, and using data in the right way, so as to ensure robust evidence and protect security, safety, and integrity in algorithmic processing; the outcome responsibility for avoiding harm by protecting human autonomy and avoiding discriminatory effects, such as biases; and the epistemic responsibility for avoiding harm by identifying any inconclusiveness and fallibilities of AI systems and creating awareness and knowledge of any negative impacts of AI.

The dimension of AI beneficence (doing good with AI) refers to responsibilities for improving living conditions in accordance with agreed principles or aims, such as the United Nations Sustainable Development Goals (SDGs). These include the evidence responsibility for doing good by assessing data and their algorithmic processing according to their potential to promote fairness, justice, and well-being for people and the environment; the outcome responsibility for doing good by furthering justice through AI and applying AI to achieve agreed aims, such as the SDGs, and to tackle “grand challenges”; and the epistemic responsibility for doing good by building knowledge and trust to maximize the potential social utility of AI and prevent the “underuse” of AI systems owing to fear or ignorance.

The dimension of responsible AI governance refers to responsibilities for the development and support of institutions, structures, and mechanisms aimed at facilitating responsible innovation in AI. Specifically, this entails enabling and enacting governance of the evidence responsibility for preventing the use of potentially inconclusive and misguided evidence in algorithmic processing, governance of the outcome responsibility for monitoring the direct and indirect effects of AI, and governance of the epistemic responsibility for scrutinizing algorithmic processes and enabling traceability of AI.

Responsible AI governance must be addressed at two levels in parallel: at the technological level of AI design and at the level of translational tools that are meant to operationalize responsible AI design by enhancing the evaluation, understanding, and legitimation of AI. In other words, translational tools (including their development and implementation) need to be explained and justified together with the technology they are meant to help govern (Morley et al., 2020). Furthermore, the governance of evidence, outcome, and epistemic responsibilities merits particular attention because it constitutes the key dimension of responsible AI innovation: as a governance responsibility, it operates at a meta-level, facilitating responsible innovation on the other two dimensions (Voegtlin & Scherer, 2017). Within responsible AI governance, the governance of epistemic responsibilities plays a pivotal role, for two main reasons. First, among the three meta-responsibilities, it itself operates on a meta-level, as detecting and governing potential harm, as well as opportunities to do good, at the levels of evidence and outcomes depends on scrutable and traceable systems. In other words, epistemic challenges like poor scrutability and traceability may significantly hinder effective apprehension of the purposes, processes, and outcomes of AI. Second, the high demand for transparency that results from prevalent epistemic concerns can potentially divert resources away from important advances in AI performance and accuracy (Ananny & Crawford, 2018), which means that the governance of epistemic responsibility needs to support business and society in seeking legitimate solutions to prevalent tensions in AI.
Addressing these tensions includes balancing the pressure for interpretability, accountability, and control of AI systems with the need to avoid hindering the potential of AI systems for greater creativity and accuracy (Ecoffet, Clune, & Lehman, 2020; Goodman & Flaxman, 2017).

ENACTING RESPONSIBLE AI GOVERNANCE: A POLITICAL CORPORATE SOCIAL RESPONSIBILITY APPROACH

The Prospects of Political Corporate Social Responsibility

The framework of responsibilities for the innovation of artificial intelligence described in the preceding sections accentuates responsible AI governance as a meta-responsibility. Within this dimension, we have pointed to the particular importance of enacting governance directed at epistemic responsibilities. As we argue subsequently, both these emphases in the responsible innovation of AI point toward the prospects of deliberation for governing AI innovation.

Following common frameworks on responsible innovation (Owen, Bessant, & Heintz, 2013; Stilgoe et al., 2013), we argue that responsible AI governance needs to be enacted through a deliberative control process. This entails open and well-informed “deep democratic” debate (Michelman, 1997) aimed at generating broadly agreed-upon opinions and decisions (Chambers, 2003). Such deliberation for responsible innovation necessitates “structures at various levels (e.g., global, societal, corporate) that facilitate an inclusive process of collective will formation on the goals and means and the societal acceptability of innovation” (Scherer & Voegtlin, 2020: 184). Recent scholarship on responsible innovation within the management and business ethics literatures has discussed the capacity of different corporate governance models for responsible innovation and explored the prospects of approaches that treat nonstate entities like corporations as political actors (Brand & Blok, 2019; Scherer & Voegtlin, 2020). Rather than focusing corporate responsibilities on shareholders or stakeholders, this scholarship has developed a program of PCSR that assigns nonstate actors an active role in the collaborative endeavor of producing and protecting public goods (Scherer & Palazzo, 2007, 2011).Footnote 2 To this end, PCSR builds on ideals of deliberative democracy (Habermas, 1998; Thompson, 2008), foregrounding the collaborative engagement of state and nonstate actors in collective decisions through a rational process of principled communication that “draws in” the diverse knowledges and perspectives of all those potentially affected by such decisions.

From a PCSR perspective, achieving responsible innovation is a challenge embedded in complex and globalized business environments, one that requires nonstate actors to participate actively in public governance and to support deliberation aimed at alleviating institutional deficits (Voegtlin & Scherer, 2017). This perspective has strong similarities with the debate on responsible innovation, especially in the fundamental importance it places on deliberative democracy (Brand & Blok, 2019; Scherer, 2018; Scherer & Voegtlin, 2020). We see three main ways in which the PCSR approach is particularly well suited to tackling the challenges of achieving responsible innovation in AI. First, widespread outcome concerns about the potential negative impacts of AI, together with epistemic concerns related to this technology, provide a relevant context and basis for considering organizations in the AI industry as public actors with a responsibility for social well-being and the collective good. PCSR’s focus on innovation as a “political activity” and its positioning of nongovernmental actors as subject to democratic governance resonate directly with calls for politicizing the debate on responsible AI innovation (Green & Viljoen, 2020; Helbing et al., 2019; Wong, 2020; Yun, Lee, Ahn, Park, & Yigitcanlar, 2016). These calls highlight the need to draw a clear connection between discussions about AI governance and questions related to the public good, including the duties and contributions of the AI industry with respect to the public good (Hartley, Pearce, & Taylor, 2017; Wong, 2020).

Second, we believe that by highlighting and addressing the limitations of merely formal compliance with legal regulations and social expectations (Scherer & Palazzo, 2007, 2011), the PCSR approach takes into account the challenges that arise from the opacity of AI. Because of this opacity, corporate AI developers cannot rely merely on extant laws and regulations for legitimation and accountability but also need to consider communicative and discursive strategies. In particular, true opacity as well as expert opacity constitute a permanent concern for the AI industry’s legitimacy and reputation, especially as the industry may struggle to give immediate explanations and provide satisfactory accounts when critical stakeholders demand information and transparency (Buhmann et al., 2020). Where external demands concerning opaque information systems are unclear, the kind of “discursive engagement” advocated in the PCSR approach for facilitating legitimate outcomes (Scherer, Palazzo, & Seidl, 2013) is highly relevant and appropriate (Mingers & Walsham, 2010). This is because important knowledge about the workings of AI systems and their wide-ranging ramifications does not reside exclusively with AI industry actors but must emerge from open deliberation with other actors that use and are affected by these systems (Lubit, 2001).

Third, by emphasizing the role of organizational learning (Scherer & Palazzo, 2010), the PCSR approach takes account of the dynamic nature of AI and of the related potential for corporate routines, goals, and governance structures to be revised and shifted over time, either to achieve competitive (first-mover) advantages in AI (Horowitz, 2018) or as a means of proactively managing compliance, accountability, and reputation in the AI industry (Buhmann et al., 2020). Such concerns about organizational learning may push AI industry actors toward discursive approaches and compel them to enter into proactive deliberative debates. In practice, however, the impossibility of attaining complete “AI transparency” can be used as an excuse for organizations not to fulfill their ethical duty to deliver conventional explanations and straightforward accounts based on fixed legal frameworks. In this regard, PCSR highlights not only the necessity of managing reputation and facilitating learning but also the ethical obligation of organizations to enable, and participate actively in, joint deliberation with other actors from government and civil society to mitigate the impediments to responsible AI innovation that arise from expert and true opacity. As a governance approach, PCSR is thus highly compatible with current work on translational tools for ethical design, which aims to compensate for the limits of hard regulation by proposing mechanisms for effectively opening up AI design and development to social scrutiny (Morley et al., 2020; Rahwan, 2018; Veale & Binns, 2017).
In the following section, we discuss key challenges related to deliberation and PCSR in opening AI design and development to social scrutiny, and based on this discussion, we argue for “distributed deliberation” as an approach to help offset these challenges.

The Challenges Related to Deliberation in Governing Responsible AI Innovation

Specific limits to deliberation involving AI industry actors can be demonstrated with reference to three operational principles of deliberation (see especially Nanz & Steffek, 2005; Steenbergen, Bächtiger, Sporndli, & Steiner, 2003; for a discussion and application of these principles in the AI ethics literature, see Buhmann et al., 2020). The first principle, participation, holds that subjects who may suffer negative effects should have equal access to communicative fora that aim to spotlight potential issues and facilitate argumentation with the goal of reaching broadly acceptable decisions. The second, comprehension, holds that participants should have access to all necessary information about the issues at stake and about proposed solutions, including the ramifications of those solutions. The third, multivocality, means that participants need a chance to voice their concerns and exchange arguments freely, including the opportunity to revise their positions in light of stronger and better-informed arguments.

In terms of widening participation to achieve responsible innovation in AI, the challenge lies not only in access itself but also in ensuring sufficient permanency of access, especially where systems evolve in dynamic and fluid ways (Sandvig et al., 2014; Buhmann & Fieseler, 2021a; Buhmann et al., 2020). Indeed, one of the key challenges evident in the literature on principled AI and translational tools is how to move beyond the currently prevalent “one-off” approaches to ethical AI, which lack sufficient continuity in the validation, verification, and evaluation of systems (Morley et al., 2021). While some scholars have suggested cooperative and procedural audits of algorithms to address this issue (Mittelstadt et al., 2016; Sandvig et al., 2014), such scholarship has so far focused mostly on expert settings. Although these approaches would enable developers, engineers, and other “industry insiders” to diagnose ethical issues, they lack mechanisms to ensure the inclusion of external actors and stakeholders who could “plug in” social views and evaluations from outside the industry. Moreover, studies that do explicitly envisage external evaluation (e.g., Rahwan, 2018; Veale & Binns, 2017) tend to treat laypeople (or “the public”) as a monolithic entity, without addressing ways to augment public and private engagement. No consideration is given, for example, to the possibility of establishing fora or venues for involving actors in deliberation based on different kinds of knowledge and expertise.
Without such ties to external actors and venues, translational tools will remain a decontextualized technical exercise that potentially distorts or neglects social injustices (Wong, 2020). The problem of participation concerns not only how these approaches and translational tools are applied but also how the tools themselves are evaluated. For while practical applications proposed for ethical AI do incorporate and promote standards for assessing algorithmic practices, there is rarely any discussion of ways to subject these tools themselves to evaluation (Fazelpour & Lipton, 2020). In the absence of such meta-evaluation, the choice of translational tools is left to developers, increasing the likelihood of convenient rather than ethical solutions, that is, approaches that favor the functionality and accuracy of for-profit systems over pro-ethical systems that bolster explicability and control in support of societal needs (Morley et al., 2021).

Lack of social evaluation is not merely an issue of participation but also one of comprehension. The ideal whereby informed citizens’ judgments should have a critical bearing on AI design and regulation (Kemper & Kolkman, 2019) seems to be undermined not only, in the most fundamental sense, by the way AIs are developed and evolve as emergent phenomena in practice (true opacity) but also by steep knowledge inequalities between AI industry actors, policy makers, and citizens (expert opacity). While expert opacity can be addressed at least in part through efforts to replace black-box models with interpretable ones (Rudin, 2019), such solutions do not address important tensions related to expert and civic engagement in deliberation. This is because these efforts do not take place in a social vacuum but in specific cultural and organizational settings (Felzmann, Villaronga, Lutz, & Tamò-Larrieux, 2019; Kemper & Kolkman, 2019; Miller, 2019); they are therefore performative and may have unintended consequences and downsides (Albu & Flyverbom, 2019). This is the case, for example, with attempts at AI explicability that in fact obfuscate further through disclosure (Aïvodji et al., 2019; Ananny & Crawford, 2018).

Moreover, limits to comprehension are not confined to laypeople: even AI experts and industry insiders necessarily lack insight into latent interests, newly arising issues, and tensions between public goals. Such insights are vital for understanding and managing sensitive variables in the processing of algorithmic evidence and the latent impacts of AI, and their absence impedes experts’ ability to reflect on and reconfigure their approaches (Dryzek & Pickering, 2017). Arguably, the only way to compensate for this limitation is to develop a diverse knowledge base through citizen participation (Meadowcroft & Steurer, 2018).

Finally, the limitations of deliberation involving the AI industry are also evident in the often limited means available to citizens for formulating and deliberating on their concerns. For example, although public code repositories may foster open access (participation) and provide extensive information on code and interpretable models (comprehension), actual engagement via such platforms remains hierarchical and dominated by experts (Buhmann et al., 2020). This makes it especially difficult to render accountable all the “unknowns” of algorithmic actions (Paßmann & Boersma, 2017), because exploring different forms of opacity requires inclusive observation and debate. Arguably, this lack of multivocality can be ameliorated to some extent by journalistic media, for example, through “data journalism” (Diakopoulos, 2019). Such media-backed public scrutiny of AI is possible, however, only in cases of sufficient magnitude to attract the attention of “watchdog” journalism (see the examples of “criminal justice algorithms” discussed in Buhmann et al., 2020).

Although scholars have highlighted the challenges of ethical AI in relation to technical opacity and to obfuscation arising from efforts to provide explanations, as well as the need for “citizen insight” (Tsamados et al., 2021), studies rarely address what exactly should be disclosed to whom, which actors should be engaged and how, and where the boundaries of particular discussions and information should be drawn to enable “bigger picture” governance of AI. User participation and comprehension have so far been discussed largely as a “micro issue,” in the form of technical tools or procedures with which to test and audit systems. Such approaches thus fall short of envisaging ways to increase comprehension across different expert and citizen fora and venues, and hence of enabling the broader deliberative process needed for a socially situated traceability and explicability of AI systems.

A MODEL OF DISTRIBUTED DELIBERATION FOR GOVERNING RESPONSIBLE AI INNOVATION

A Systems Perspective: The Prospects of Distributed Deliberation

Discussions of knowledge inequalities in deliberation (Moore, 2016) suggest that decisions and actions resulting from processes with strong boundaries between experts and nonexperts undermine trust in deliberative processes of governing AI for several important reasons, including 1) the inability of citizens to comprehend the matters being discussed, 2) their inability to trace and evaluate the internal process through which AI experts reach decisions and recommendations, and 3) epistemic deficits that arise because diverse assessments and evaluations are not sufficiently “fed into” deliberation. The preceding considerations regarding participation, comprehension, and multivocality indicate a need to address the tensions around knowledge inequalities between the AI industry (and its expertise) and other actors (see also the discussions in Stirling, 2008, on the governance of technology and in Dryzek & Pickering, 2017, on environmental governance, as well as the subsequent uptake of these discussions in the corporate governance literature by Scherer & Voegtlin, 2020). Such knowledge inequalities are inherent in any process of analyzing, regulating, and managing complex technological and societal problems (Mansbridge et al., 2012).

Viewed from a systems perspective, knowledge inequalities are distributed across various venues, including AI expert committees, civil society organizations, public fora, and individual contemplation and reflection about AI and its governance. Examples of such venues include initiatives like the Ethics and Governance of Artificial Intelligence Initiative launched by MIT’s Media Lab and Harvard University’s Berkman Klein Center, professional association initiatives like the Institute of Electrical and Electronics Engineers’ Global Initiative on Ethics of Autonomous and Intelligent Systems, open source activist initiatives like the Open Ethics Initiative, and corporate forays like Google’s recent efforts to help its customers better understand and interpret the predictions of its machine learning models. Each of these venues could in principle assume a different function in “interacting” single deliberative moments (Thompson, 2008: 515) in support of wider public judgment. The idea here is that although none of these venues can by itself fully enact the deliberative virtues of participation, comprehension, and multivocality, each can still support public reasoning at large by fulfilling a distinct function in a wider network of deliberation (Parkinson, 2006; Thompson, 2008: 515). This view recognizes that a division of labor in the deliberative process is inevitable, given the differing types of expertise of actors inside and outside the AI industry. Instead of focusing on “true” single-actor venues for deliberation, this approach emphasizes that different parts of a system can complement one another in supporting “deliberative rationality” for the governance of responsible AI innovation.
What matters most about the judgments or outputs of a deliberative venue is not so much whether that venue is conducive to a truly rational process “within” but whether its particular discourse leads to a useful output that can be further “processed” by other venues.

Building on the important arguments advanced by Alfred Moore (2016) on deliberative democracy and epistemic inequalities, we hold that citizens outside a particular expert venue for deliberating AI and its governance need to be able to exercise judgment on the closed deliberations of AI developers and other experts on the inside. However, as Moore (2016) discusses, meeting this need presents a complex challenge, since outsiders can be expected neither to possess the knowledge required to trace and follow the subjects deliberated upon in closed AI expert discourse nor to be able to verify whether this discourse follows a process of fair and principled deliberation. Furthermore, the sharpness of the boundary between expert and nonexpert venues points to an important difference in reasoning within these venues: whereas experts reason among themselves to deliver evaluations of the design, development, and impacts of particular systems or to decide on proposals for translational tools and policies to govern AI (as outputs of their deliberation), nonexpert “outsiders” need to form well-informed opinions on whether to accept (trust) or reject (resist or contest) these outputs. Moreover, the reasons outsiders might have for accepting experts’ evaluations and decisions may be quite different from those that first led the experts to develop and support these outputs (Moore, 2016).

If the internal reasoning of AI expert discourse is detached in this way from the concerns and reasoning of citizens, expert venues will be both secluded from public oversight and scrutiny (a legitimation issue) and cut off from public feedback as an important source of creative fact finding and of the articulation of new public issues emerging from AI in practice (an epistemic sourcing issue). For the division of labor to function effectively in support of wider public reasoning, there needs to be both a real possibility of contesting and withdrawing legitimation and a real opportunity to influence the content of expert deliberation (Moore, 2016). Building on these reflections by Moore, the following section discusses how this division of labor might be productively distributed among different deliberative venues and across the whole AI innovation pipeline.

Modeling Distributed Deliberation across the AI Innovation Pipeline

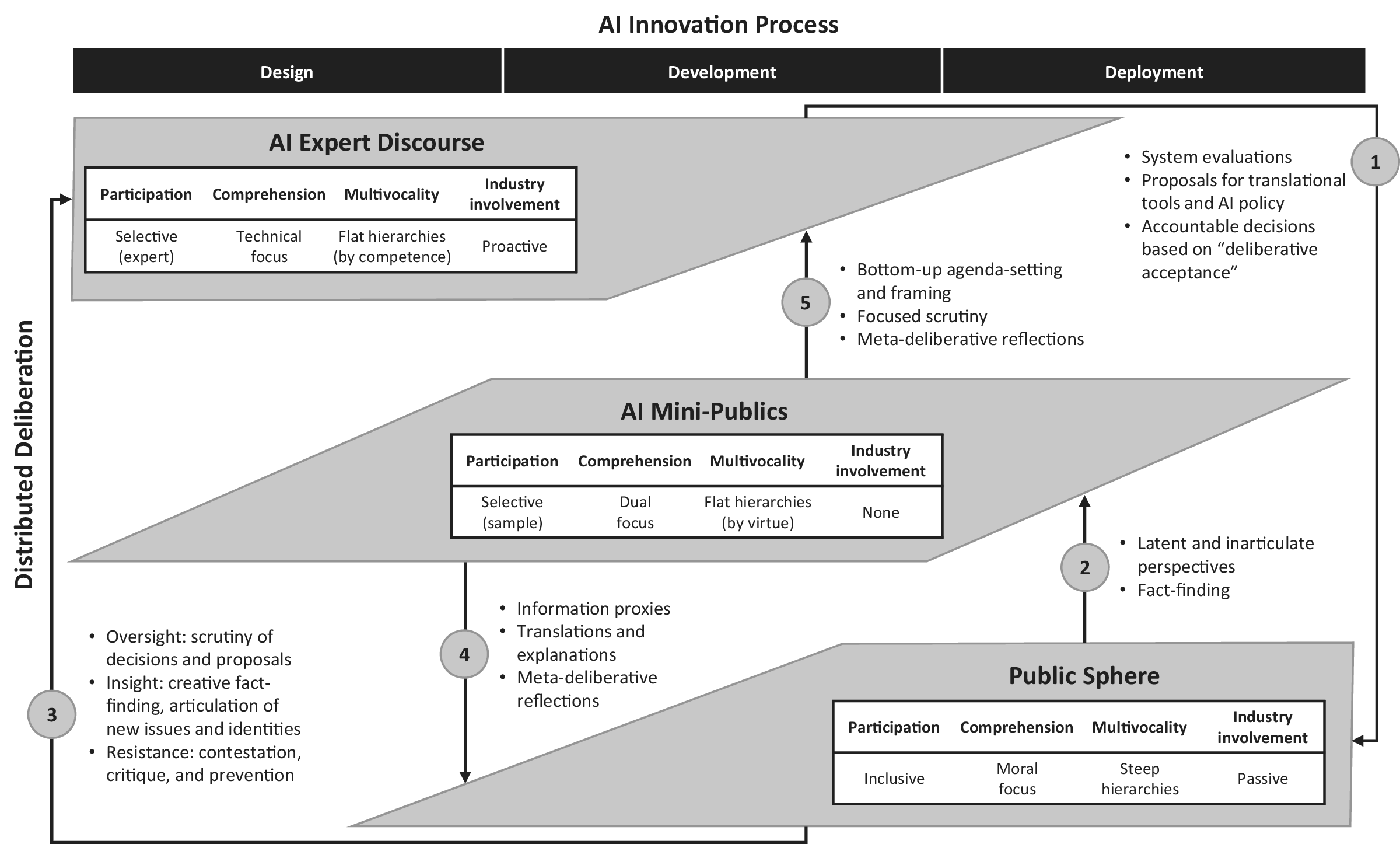

The AI innovation pipeline is an iterative process that ranges from design (e.g., business and use-case development, where problems are defined and uses of AI are proposed), through development (e.g., data procurement, programming, and the translation of business cases into concrete design requirements applied to training data sets), to deployment (where AI “goes live,” is used, and has its performance monitored in practice) (Saltz & Dewar, 2019). This pipeline is a useful reference point because various ethical challenges and related translational tools for ethical AI can be better understood by plotting them across the pipeline and addressing their implications at its different stages (Morley et al., 2020). Similarly, the role of different deliberative venues and their potential for mitigating the epistemic challenges of responsible AI innovation can best be elucidated by discussing them in relation to this process (see Figure 1).

Figure 1: A Model of Distributed Deliberation for Responsible Innovation in Artificial Intelligence

The Role of AI Expert Discourse

From a systems perspective, the core function of AI expert discourse is to deliver assessments and evaluations of AI systems to the wider public and to suggest new translational tools and policies for AI governance. Possible venues for such discourse include AI committees, commissions, and councils. Insofar as participation in these expert venues is based on competence, however, they fall short of the virtues of inclusive and open participation. The mode of engagement here is “technical expertise” (Fung, 2006), and actors from the AI industry should have an active and structured role in such expert deliberations. Selective access to these venues, combined with this proactive role of the AI industry, inevitably limits the focus of deliberation in AI expert discourse. Unlike broader public deliberation, these venues lack the ability to relate concerns about AI to broader questions of moral norms and the public good. Instead, experts’ efforts are more usefully focused on rather “narrow” technical issues and judgments (see “deliberative output 1” in the model presented in Figure 1). Such issues could include addressing system limitations arising from evidence concerns about AI (e.g., trade-offs between reliability and costs, i.e., quantifying risks) or considering ways of making systems more intelligible by linking data inputs to conclusions, so as to afford a better understanding of outcomes in relation to data, that is, which data are used (their scope, quality, etc.) and how data points are used for learning (Miller & Record, 2013). Among experts and system developers, the focus should be primarily on “how” explanations, that is, on the interpretability of systems as a means of qualitatively assessing whether they meet other desiderata, such as fairness, privacy, reliability, robustness, causality, usability, and trust (Doshi-Velez & Kim, 2017: 3).
In principle, such how explanations can be both prospective and retrospective, that is, “How will the system operate and use data?” and “How and why were decisions reached?” (Preece, 2018). Toward outsiders, by contrast, the focus should be on why explanations, that is, on providing end users with reasons why a particular course of action was taken (Dwivedi et al., 2019; Rahwan, 2018). The focus of AI expert discourse accordingly tends to lie more at the front end of the AI development pipeline, where a larger share of issues can be meaningfully addressed at a technical level. Toward the back end of the pipeline, however, where issues emerge as long-term transformative effects during system deployment, discourse needs to focus on moral judgments and decisions regarding the public good. AI expert discourse is much less conducive to this, because inclusive participation is compromised. In AI expert discourse, the virtue focus is strongly on comprehension and multivocality. Unlike in wider public debate, this is possible because knowledge about AI is equally distributed among participants in these secluded venues, which affords good opportunities for achieving a shared commitment to the equality of participants and for facilitating diverse reason giving. This allows a strong and transparent relationship to be established between the multitude of arguments weighed against one another and the decisions reached as the outcome of such deliberation (Moore, 2016).
These decisions may take the form of an expert consensus arising from unanimity of beliefs and evaluations (cf. “scientific consensus” in Turner, 2003) or of an equally collective decision that spans other differences and incorporates a willing suspension of possible disagreement (cf. “active consensus” in Beatty & Moore, 2010). In the latter case, the assumption is that potential disagreements can be suspended more readily precisely because of the tendency toward flat hierarchies (owing to participants’ mutually high competence) and the strong multivocality among experts.

Such informed suspension of disagreement in turn gives people outside secluded AI expert discourse good reasons and confidence to accept expert system evaluations and proposals for translational tools and policy. To support this kind of trust in expertise, Moore (2016) proposes that expert discourse should work toward “deliberative acceptance” by signaling the deliberative quality of the expert venue to the outside. This could be achieved, for example, by an expert vote that must secure not only a majority but also confirmation from those who disagree that their concerns and criticisms have been appropriately taken into account and that they have had ample opportunity to challenge and prevent the final expert decision.

The Public Sphere as the Main Venue for AI Scrutiny

Although most citizens and everyday users of AI and algorithms lack the formal knowledge and qualifications to participate in AI expert discourse, they can nonetheless be considered potential “citizen experts” (Fischer, 2000) inasmuch as they have experiential knowledge accumulated from varied and particular contexts of AI systems in use. Such citizen expertise must therefore be considered an important part of the overall process and dynamic of mitigating epistemic challenges in AI through distributed deliberation. For the public sphere, which is tasked with deliberating on “AI in practice” and scrutinizing the system evaluations and AI policy proposals produced by expert venues, the focus should primarily be on normative concerns about AI that require broad judgments regarding moral norms and the public good. (This corresponds to “deliberative output 2” in our model, which serves as an input for AI “mini-publics,” introduced below as a separate venue.) While such public scrutiny of AI may be of limited value for certain more technical evaluations regarding design and development, it is indispensable at the back end of the AI innovation pipeline, that is, in the stages of AI testing, deployment, and monitoring. This highlights the important role of the public sphere, especially in tackling what Mittelstadt et al. (2016: 5) term “strictly ethical” (as opposed to more technical) problems with AI and in assessing the “observer-dependent fairness of the (AI) action and its effects.” Tackling these problems could include addressing the potentially biased outcomes of AI applications and identifying and weighing up the potential long-term transformative effects of applying AI in particular social domains.
While public-sphere deliberation should thus place greater emphasis on such back-end concerns, it is also needed to address certain aspects of the design and development stages, for example, through deliberation on potentially unethical and discriminatory variables (Veale & Binns, 2017).

In line with Moore’s (2016) conclusions, the public sphere can perform at least three important functions vis-à-vis AI expert discourse: 1) overseeing and scrutinizing AI developers and experts from “lifeworld-bound” perspectives, 2) stimulating expert discourse by articulating new issues and identities that emerge from the everyday use of AI applications, and 3) empowering and exercising resistance against problematic AI systems and policies. These functions correspond to “deliberative output 3” in our model in Figure 1.

AI Mini-publics as Mediating and Moderating Venues

Drawing on the concept of “mini-publics” (Setälä & Smith, 2018), we use the term “AI mini-publics” for deliberative venues that comprise a “sample of citizens” and are situated at the intersection of closed AI expert venues and the wider public sphere. Such venues may take the form of purposeful associations, citizen panels, AI think tanks, and interest groups. As Moore (2016) notes, the idea of “minipopuli” was proposed early on as a way of bringing public judgment to bear on expert discourse. The literature on responsible innovation has described such venues as an important means of ensuring inclusion and of “upstreaming” public debate into the “technical parts” of governing innovation (Stilgoe et al., 2013: 1571). In agreement with arguments advanced by, for example, Moore (2016), Niemeyer (2011), and Brown (2009), we posit that AI mini-publics constitute a central means of enabling and supporting rational public judgment of both AI systems and AI policy. Situated between the “expert layer” and lay citizens, AI mini-publics can concern themselves with all stages of the AI innovation pipeline, from assessing proposals for AI use (during the design phase) to monitoring and evaluating the long-term transformative effects of AI in practice (during the deployment phase). As elucidated in what follows, mini-publics as venues of AI scrutiny serve at least three related functions.

First, AI mini-publics mediate between secluded AI expert venues and the public sphere by providing “palatable expertise,” serving as “information proxies” (MacKenzie & Warren, 2012) that offer translations and explanations of poorly scrutable and traceable systems, AI policies, and expert arguments and decisions (corresponding to “deliberative output 4” in our model). For example, when AI industry actors frame principles for AI governance (Schiff et al., 2021) or provide interpretative tools to help users and implementers understand their machine learning systems in action (Mitchell et al., 2019), these industry-led efforts could be productively contextualized and evaluated through the work of AI mini-publics. By supplementing these efforts with alternative assessments and explanations, AI mini-publics could strengthen users’ understanding of otherwise mainly industry-based framings, thereby bolstering public resilience to potentially biased accounts. In this way, AI mini-publics can augment the comparatively low capacity of public deliberation for comprehension and multivocality.

As “mediators,” AI mini-publics need a dual focus of judgment that relates narrow technical issues in AI to broader normative concerns, and vice versa. In this sense, they are particularly well equipped to address “traceability issues” in AI, that is, to answer questions about the causes of such issues and the responsibilities of actors where broader questions of accountability relate to narrow technical issues in data sets or code design. AI mini-publics are therefore key long-term agents of traceability (Mittelstadt et al., 2016) and explicability (Floridi et al., 2018), because they can support what Morley et al. (2020) call “the development of a common language” beyond any single expert community, linking terminologies and interpretations across diverse and dispersed deliberative venues with different knowledge, experiences, and virtue foci. This translating function seems especially important for enabling the public at large to reflect upon and stay alert to the long-term impacts and transformative effects of AI. Unlike the biases of specific applications or other more direct harmful outcomes of AI systems, such long-term effects are far less obvious, yet their harmful consequences can be wide-ranging.

Second, AI mini-publics can moderate the distributed efforts of deliberation on AI and its governance by reflecting on the division of deliberative labor needed to mitigate the epistemic challenges around AI. This is essential because, for deliberative venues to interact constructively, their division of labor must itself be a potential object of justification through deliberation (Mansbridge et al., 2012). Epistemic inequalities resulting from the knowledge of AI developers and other experts, and from how that knowledge is distributed across deliberative venues, need to be accompanied by the possibility of “meta-deliberation” on the procedures and functional differentiation of the deliberative system itself. Such meta-deliberative reflection can be enacted through the work of mini-publics (Moore, 2016) and is thus included in our model in Figure 1 as outputs 4 and 5.

Third, AI mini-publics are important for generating and directing media attention to otherwise latent issues around AI. Such attention, which corresponds to “deliberative output 5” in our model, is often necessary for framing issues, widening popular mobilization, and deepening support for arguments or points of critique (Fung, 2003). Media reporting on discrimination and unfairness, errors and mistakes, violations of social and legal norms, and human misuse of AI can help expose the contours of algorithmic power (Diakopoulos, 2019). Because the media system is itself embedded in a hierarchical arena of communicative actors (Habermas, 2006), however, it is difficult to penetrate, especially for unorganized civil society interests. Yet only functioning media can, through their latent potential for escalation, counteract the tendency within expert discourses to keep concerns latent and suppress public dissent (Moore, 2016).

In conclusion, we argue that venues currently being set up by associations such as the Institute of Electrical and Electronics Engineers, the Royal Society, and groups hosted by the Future of Life Institute to discuss the workings and desiderata of autonomous systems can be positioned to perform the role and functions of AI mini-publics. As we have seen, these functions include explaining, translating, and contextualizing the outputs of closed expert discourse for the public sphere. This could involve, for example, developing contents and formats for “documentary procedures” that increase the transparency of systems, providing meta-deliberative reflections on the necessity and particular “location” of boundaries between expert and nonexpert venues, and increasing the capacity for media attention to support public scrutiny of otherwise latent issues. For AI mini-publics to exercise these roles, however, they need to exclude actors with organized particular interests, or what Moore (2016: 201) calls “partisans.” This entails “cutting” the involvement of the AI industry wherever possible at this level.

DISCUSSION

The Promise and Peril of a Deliberatively Engaged AI Industry

We began our article by proposing a new framework of responsibilities for innovation in AI. As an addition to extant frameworks for ethical AI (Floridi & Cowls, 2019), our framework highlights the importance of governance that draws on deliberation to address epistemic concerns as a meta-responsibility in AI innovation. On the basis of this framework, we have advocated for PCSR as an approach to such AI governance because it foregrounds the role of the AI industry in deliberative processes of principled communication and collective decision-making. Beyond the upsides of the PCSR approach and the challenges related to deliberation in responsible AI innovation discussed above (see section “Enacting Responsible AI Governance”), open participation and deliberation by AI industry actors can create agency problems, for instance, when the disclosure and sharing of information give competitors disproportionate advantages (Hippel & Van Krogh, 2003). For reasons of self-interest, therefore, including the desire to maintain power imbalances and information advantages, AI industry actors, just like other corporate actors (Hussain & Moriarty, 2018), would seem unlikely to be willing to solve challenges deliberatively. In fact, the AI industry is often accused of disregarding participation and user consent in favor of “closed-door” decision-making and of prioritizing frictionless functionality in accordance with profit-driven business models (Campolo, Sanfilippo, Whittaker, & Crawford, 2017).

In addition to the poor scrutability and traceability of AI, the private interests and power of AI industry actors would seem to challenge the optimistic notion that the epistemic power of “deep democracy” could foster responsible AI governance by deliberatively engaging AI developers and other AI industry actors in a wider network of empowered actors from the public, private, and civil society sectors. Indeed, there are good reasons for rejecting proposals to extend the political role of businesses, not least that this could turn corporations into “supervising authorities” and thus lead to a democratic deficit rather than the desired increase in informed deliberation (Hussain & Moriarty, 2018). Such a normative approach to AI governance can further be criticized for shifting ethical expectations away from corporate conduct that adapts to external demands and concerns, proposing instead a discursive negotiation of ethical conduct that risks ultimately serving corporate interests and further suppressing already marginalized publics (Ehrnström-Fuentes, 2016; Whelan, 2012; Willke & Willke, 2008). For corporate actors, prioritizing “mutual dialogue” to allegedly resolve issues may be a much easier option than changing or simply abandoning contested conduct (Banerjee, 2010). In such cases, normative stakeholder engagement would amount merely to a means of deflection.

All this may inspire little optimism about a deliberatively engaged AI industry, both with regard to openness in addressing evidence, outcome, and epistemic concerns about AI, especially in cases of what we earlier labeled “strategic opacity,” and, at a meta-level, with regard to the development of guidelines for soft governance. Consider, for instance, the recent criticism that the AI industry uses soft governance merely for “ethics washing” and for delaying regulation (Butcher & Beridze, 2019; Floridi, 2019b). However, we believe that our discussion of PCSR as an approach to enacting responsible AI governance, together with the related arguments regarding collective goals, legitimation, and organizational learning, provides some promising grounds for positioning the role of the AI industry as less adversarial and more communicative than is often assumed for corporate innovators more generally (Brand, Blok, & Verweij, 2020; Hussain & Moriarty, 2018). In this article, we have presented and discussed instances in which fostering and participating in deliberation is not simply an “easier option” for the AI industry but the best available approach to managing responsible AI innovation, given the poor scrutability and traceability of AI systems. Current instances of apparent “ethics washing” may well be an early stage on a longer path toward the substantive adoption and institutionalization of corporate social responsibility, which often begins with ceremonial forms that can look like ethics washing (Haack, Martignoni, & Schoeneborn, 2020).
In particular, the need to manage concerns about “expert” and “true” opacity in AI may eventually lead organizations to look less favorably on strategic approaches to risk management and merely instrumental stakeholder engagement (Van Huijstee & Glasbergen, 2008), and toward more open, prosocial, and consensus-oriented approaches. (On this aspect, see Scherer & Palazzo, 2007, and, in relation to AI developers specifically, Buhmann et al., 2020.)

Augmenting Distributed Deliberation for Responsible Innovation in AI

Building on the PCSR perspective and a discussion of the challenges entailed in deliberation for responsible AI innovation, we have argued for the prospects of a “distributed deliberation” approach as a means of overcoming these challenges. We have then considered ways in which different venues can reach deliberative conclusions that support a broader, distributed process of deliberative governance for responsible AI innovation. Beyond the need for further empirical exploration of how different internal decision procedures within AI expert discourse and AI mini-publics (on concrete AI systems and translational tools) can support citizens’ ability to make informed judgments in accepting or rejecting expert decisions, important theoretical questions remain.

First and foremost among these is the concern that the approach of distributed deliberation we have proposed is convincing only to the extent that AI mini-publics do indeed supplement, rather than replace, the critical public sphere and its judgments. This important criticism has previously been leveled against the concept of mini-publics on the grounds that it could lead to “deliberative elitism” (Lafont, 2015), effectively displacing important instances of public-sphere scrutiny, including social movements. From this critical perspective, the outcomes of deliberation by AI mini-publics are seen not as vital information proxies directed at the broader public sphere in support of rational public discourse but as dominant elite recommendations that undermine public-sphere rationality (see the discussion of this critique in Moore, 2016). If this were the case in practice, distributed deliberation mediated by AI mini-publics would indeed sharpen epistemic inequalities and further exacerbate ethical problems related to AI opacity. In agreement with other proponents of mini-publics (e.g., Brown, 2009; Fischer, 2000; Fung, 2003; Moore, 2016), however, we hold that AI mini-publics can meaningfully supplement and enhance public-sphere-level judgments on AI systems and policies. Accordingly, we propose that further research on responsible AI innovation and governance should include empirical exploration of how and to what extent this happens in practice. More specifically, this calls for a closer look at how the efforts of mini-publics can support public-sphere deliberations on the creation and evaluation of translational tools, especially because the creation of such tools has been proposed as a key focus for the machine learning expert community (Morley et al., 2020).
These efforts could be explored at the level of professional associations, think tanks, advocacy groups, and looser, time-bound workshop groups, conferences, and collaborations, such as those that produced the Asilomar AI Principles and the Montréal Declaration for a Responsible Development of AI.³

Second, we have suggested that AI expert discourse constitutes a venue type with a narrow focus on technical judgments rather than on moral norms or the common good. Although this seems an apt conceptualization of the work and dynamics of most closed AI expert venues, such as the Organisation for Economic Co-operation and Development’s Expert Group on AI or the European Union’s High-Level Expert Group on Artificial Intelligence, at least one important type of AI expert venue does not fit this definition in any straightforward manner: AI ethics councils. Although these are distinctly expert deliberative venues, they also necessarily have an orientation toward questions of moral norms and the common good. The special role of AI ethics councils thus warrants particular theoretical and empirical attention in further developing the proposed distributed approach. (For related discussions on the role of ethics councils, see Wynne, 2001; Moore, 2010.)