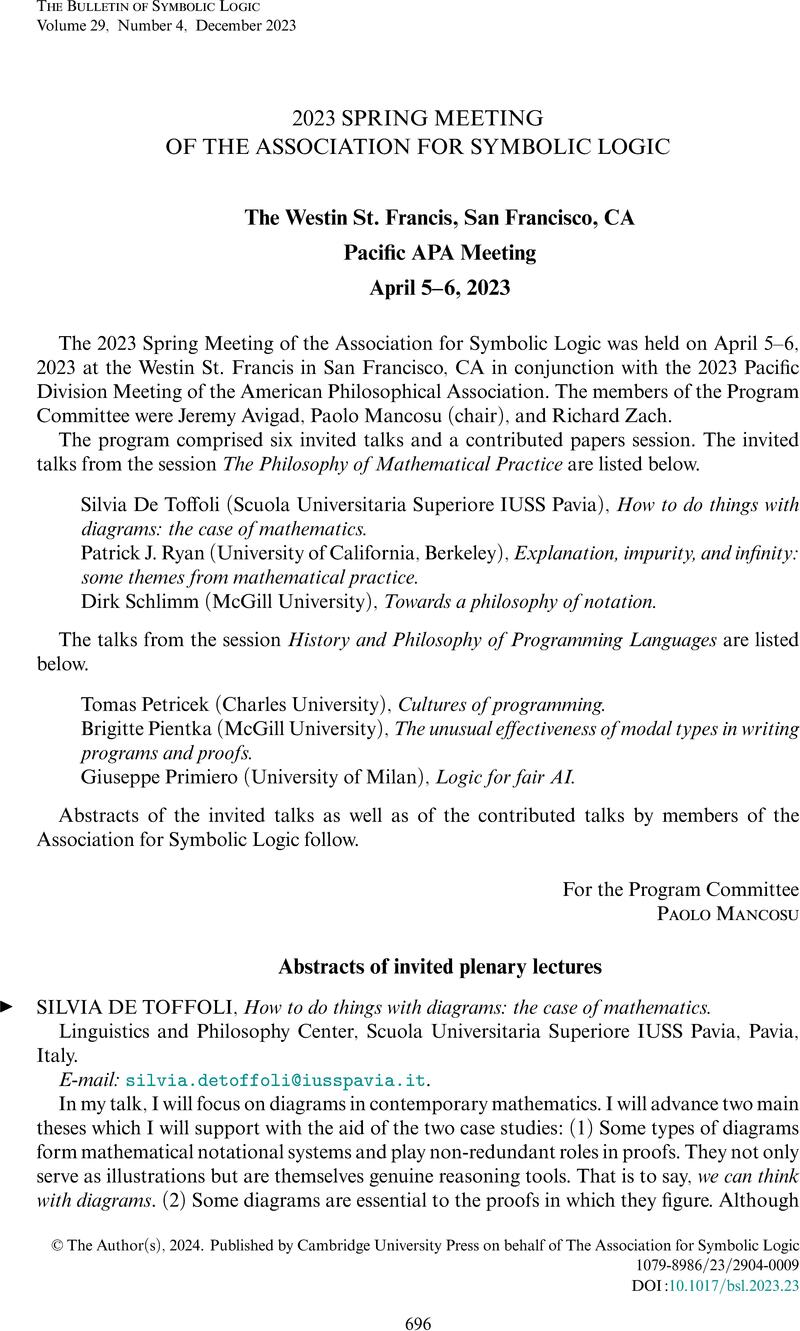

2023 SPRING MEETING OF THE ASSOCIATION FOR SYMBOLIC LOGIC The Westin St. Francis, San Francisco, CA Pacific APA Meeting April 5–6, 2023

Published online by Cambridge University Press: 23 February 2024

- Type: Meeting Report
- Copyright: © The Author(s), 2024. Published by Cambridge University Press on behalf of The Association for Symbolic Logic