There is no single, generally accepted, overarching definition of a portfolio; rather, the definition depends on the portfolio's use and purpose. Six uses, which may overlap, have been identified in the published literature (Box 1). Reference Pitts and Swanwick1

Portfolios have evolved from a paper-based format to an electronic one (e-portfolios), and their content and purpose have changed too (see Box 2 for various models of portfolios). For psychiatry trainees in the North West, portfolios were initially a log of activities, achievements and assessments. Since then, portfolios have become more trainee-centred, with the aim of aiding learning and development, and greater emphasis has been placed on self-reflection. The competencies required of psychiatry trainees in the North West of England were initially derived from the General Medical Council's (GMC's) Good Medical Practice3 but are now taken from the Canadian model, the CanMEDS Physician Competency Framework (2005).4 This framework addresses knowledge, skills and attitudes across a variety of domains, viewing the doctor as, among other roles, a communicator, collaborator, manager, scholar, health advocate and medical expert.

Box 1 Uses of portfolios Reference Pitts and Swanwick1

• Continuing professional development
• Enhanced learning
• Assessment
• Evaluation
• Certification and re-certification
• Career advancement

Box 2 Models of portfolios Reference Webb2

Shopping trolley

The portfolio contains everything the student considers appropriate. There are no predetermined sections and rarely any strategy for linking the components.

Toast rack

The portfolio contains predetermined ‘slots’ that must be filled for each module, such as reflective pieces, action plans or a list of skills required.

Cake mix

The portfolio sections are blended or integrated. Students are expected to provide evidence to demonstrate they have achieved their learning outcomes. There is a collection of individual ingredients, which are ‘mixed’ (such as using reflective pieces with formative assessments) and what emerges as the ‘cake’ is more than the sum of its parts.

Spinal column

A series of competency statements forms the central column (‘vertebrae’) of assessment. The pieces of evidence collected are the ‘nerve roots’ entering the ‘vertebrae’. One piece of evidence can be used against multiple statements.

Studies in the USA have found that raters showed good reliability when judging the overall quality of psychiatrists' portfolios, Reference O'Sullivan, Reckase, McClain, Savidge and Clardy5 and that psychiatry residents rated portfolios as the best way to assess their medical knowledge, feeling that portfolios could effectively measure their competencies in patient care and practice-based learning. Reference Cogbill, O'Sullivan and Clardy6

In the UK, postgraduate medical trainees spend their first 2 years in general or foundation training (FY1 and FY2). Trainees who choose psychiatry then spend 3 years as a core trainee (CT1-3), followed by another 3 years as a specialty trainee (ST4-6). All psychiatry trainees in the North Western Deanery have used the electronic portfolio system known as METIS, introduced in 2008; before this, a paper-based system was used. As an indication of how quickly medical education changes, a survey of psychiatry trainees in London in 2005 revealed that the majority of respondents did not have portfolios, and half had never heard of them. Reference Seed, Davies and McIvor7 At the time of writing, North West psychiatry trainees using METIS store evidence in the sections listed in Box 3. This evidence can be mapped onto the competencies expected for their level of training.

Through roles as a medical education representative for the Pennine Care NHS Foundation Trust and a medical education fellow for the North Western Deanery, and from attending North West trainee meetings, N.H. had anecdotal evidence that some trainees held strong views, both positive and negative, about the current portfolio system. Some viewed portfolios as a ‘tick-box’ exercise, a bureaucratic process ultimately required for the summative end-of-year assessment (Annual Review of Competence Progression, ARCP). Other authors have expressed similar views about portfolio use by nurses, midwives and health visitors. Webb asked, ‘Does a portfolio provide a real insight into a practitioner's clinical ability, or does it simply show that its author is good at writing about what he or she does?’. Reference Webb2 In addition, a systematic review of portfolios published in 2009 reported that ‘no studies objectively tested the implication that time was a barrier to the practicality of portfolio use’. Reference Tochel, Haig, Hesketh, Cadzow, Beggs and Colthart8

Box 3 Sections within the METIS e-portfolio system

• Personal development (incorporating self-appraisal and personal development plan)
• Educational appraisal (incorporating induction, mid- and end-point reviews and educational supervisor's report)
• Reflective practice (for critical incidents or difficult situations, or self-appraisal of learning or clinical experiences)
• Workplace-based assessments
• Psychotherapy experience
• Other training (includes record of research, audit, teaching, courses, management, on-call, supervision, electroconvulsive therapy, ethical dilemmas and other experiences not mentioned elsewhere)
• Mandatory training (including record of cardiopulmonary resuscitation, breakaway, National Health Service appraisal, School of Psychiatry quality and safety surveys, General Medical Council survey)

To our knowledge, there have been no large-scale studies evaluating UK psychiatry trainees’ views of portfolios since their use became compulsory. We therefore conducted the first study in the North West region to formally examine trainees’ views about portfolios: what they use them for, how long they spend on them, whether they feel they have adequate time to do so, and what could be done to improve the process of preparing portfolios.

Method

N.H., following consultation with G.S. and D.L., devised a questionnaire (Box 4) using the electronic survey tool LimeSurvey. The questionnaire included questions requiring respondents to rank options from a list, and free-text questions with no word limit.

The questionnaire was linked to the School of Psychiatry's Mandatory Quality Survey, which was sent by email to all psychiatry trainees in the North Western Deanery (228 trainees) in February 2010. Trainees received an explanation of the purpose of the survey before completing it. The software collated the results anonymously into an Excel spreadsheet 1 month after the initial email. Trainees could submit their answers only once (the software did not allow multiple entries from the same trainee), and reminders were sent automatically to non-responders after a set interval.

The questions evaluated trainees’ priorities and which educational tools they found most useful, with options taken from the sections contained in METIS as of November 2009. They also evaluated how much time trainees were spending on their portfolios, and included free-text boxes asking what trainees felt were the best and worst aspects of the portfolio and how it could be improved to meet their learning needs. The responses were analysed using SPSS version 16 for Windows. N.H. read all free-text answers and collated recurrent themes.

Box 4 Questionnaire

1. What are your top priorities regarding portfolio use?
2. Which educational tools within the portfolio do you learn most by?
3. How could the portfolio be improved to meet your learning needs?
4. What are the best aspects of the portfolio?
5. What are the worst aspects of the portfolio?
6. (a) How much time do you spend on your portfolio weekly? … hours … minutes
   (b) Do you feel you have enough time to use your portfolio? Y/N

Results

The survey was completed by 207 of 228 trainees, giving a response rate of 90.8%. Of all trainees within the North West, 20.2% were CT1 trainees (n = 46), 15.8% were CT2 trainees (n = 36), 22.8% were CT3 trainees (n = 52), 14.9% were ST4 trainees (n = 34), 13.6% were ST5 trainees (n = 31) and 11.0% were ST6 trainees (n = 25). The distribution of trainee grades is shown in Fig. 1. A small number (n = 4) were still classified as specialist registrars (SpRs) under the old training system rather than as STs.

FIG 1 Trainee grade of respondents. ST, specialty trainee; CT, core trainee.

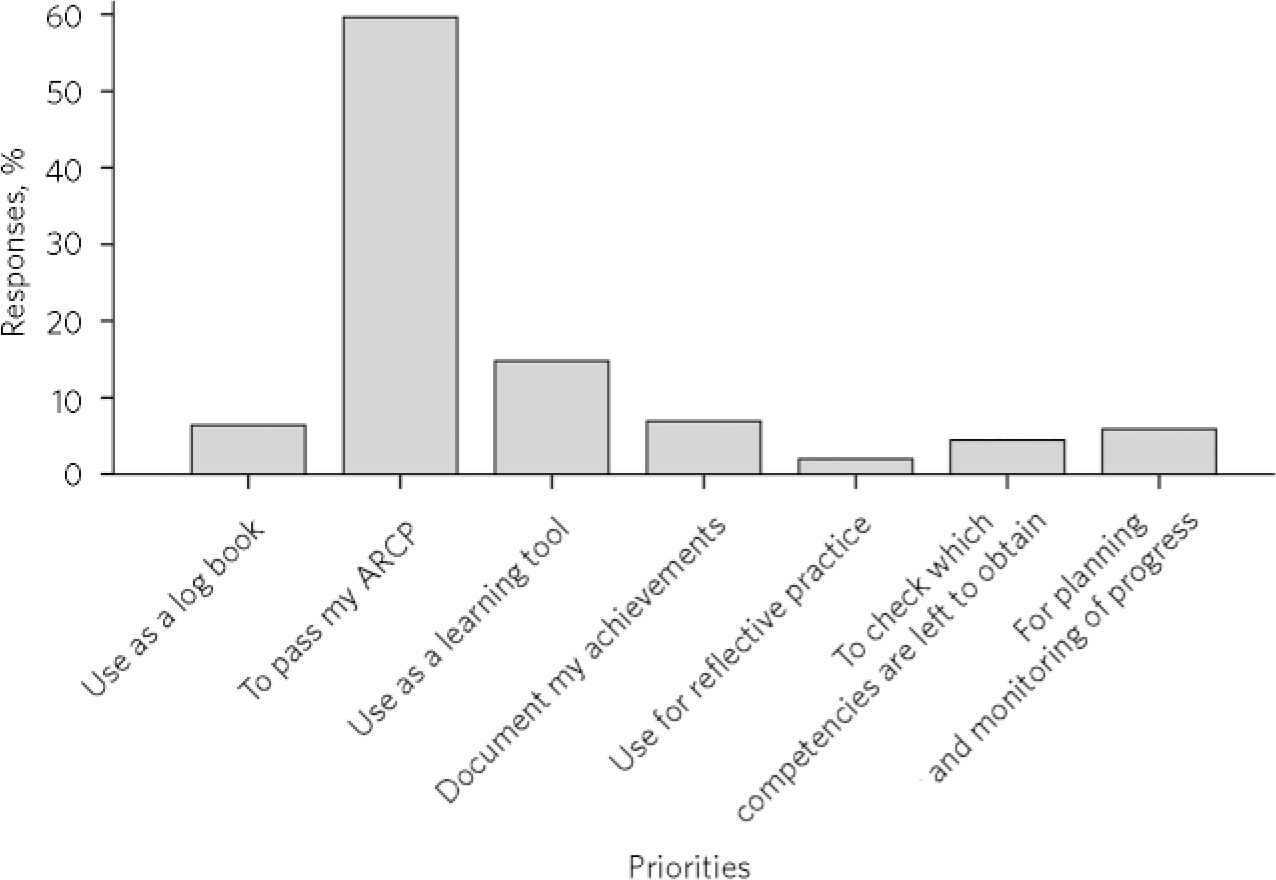

The majority (58.4%, n = 121) rated ‘Passing my ARCP’ as their top priority for portfolio use (Fig. 2). For this question, trainees were invited to rank options from a predetermined list in order of priority. Of the trainees who gave this answer, 71 were CT1-3 and 49 were ST4-6. For their second and third priorities, the most common answers were ‘Checking which competencies are left to obtain’ (26.6%, n = 55) and ‘To document my achievements’ (24.6%, n = 51) respectively.

FIG 2 First-ranked responses to: ‘What are your top priorities regarding portfolio use?’. ARCP, Annual Review of Competence Progression.

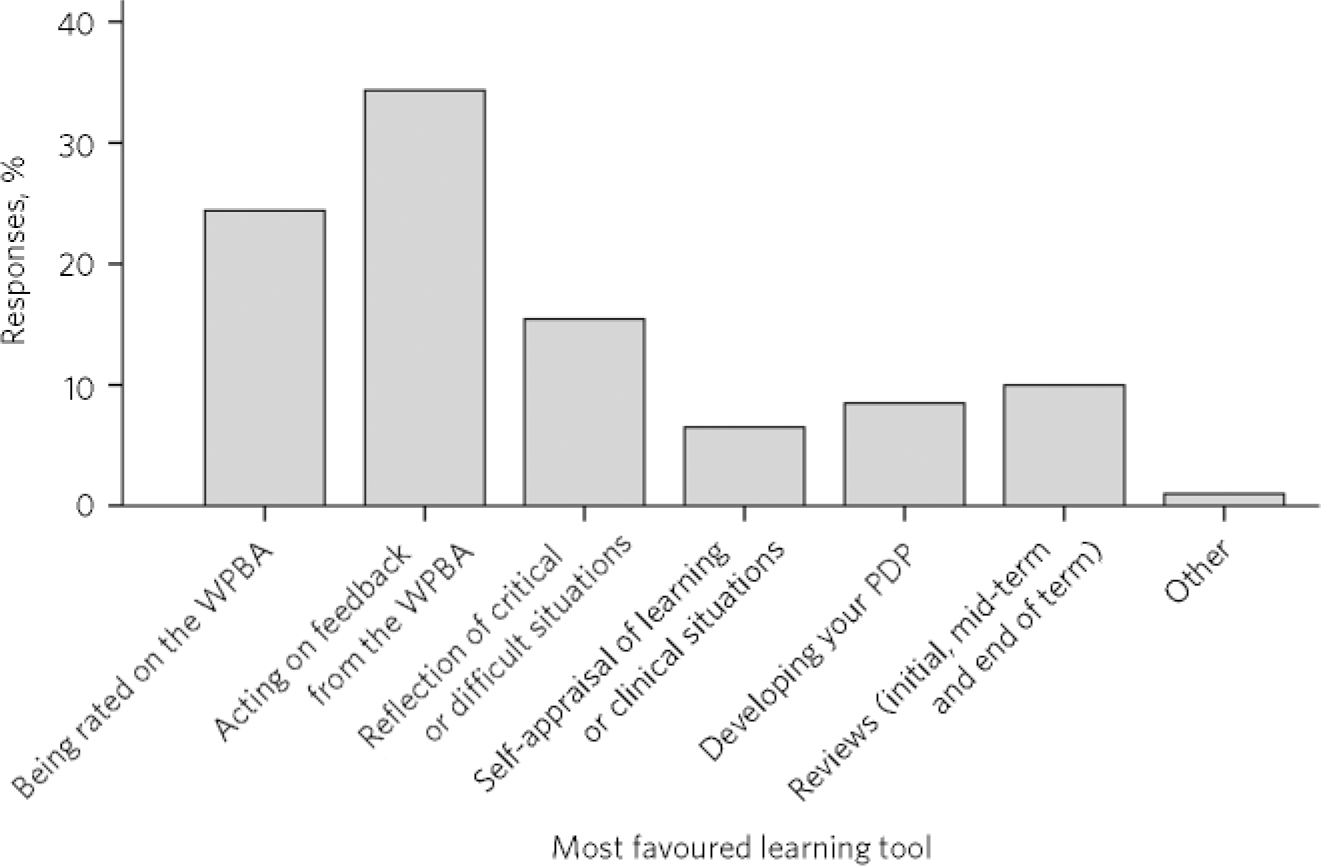

The largest proportion of trainees responded that, within the portfolio, they learnt most from the feedback on their workplace-based assessments (WPBAs; 33.3%, n = 69). Other results are shown in Fig. 3 (the categories on the x-axis are based on the sections contained within the e-portfolio).

FIG 3 Responses to: ‘Which educational tools within the portfolio do you learn most by?’. WPBA, workplace-based assessment; PDP, personal development plan.

Time spent on portfolios ranged from 5 min to 12 h a week; the most common answer was 1-2 h a week. Thirty-eight trainees either did not answer or gave answers that could not be interpreted accurately (e.g. ‘I don't tend to do it weekly’, ‘I let it accumulate and then panic’). The answers are shown in Table 1. In total, 50.7% (n = 105) stated that they did not feel they had enough time to spend on their portfolios, and 49.3% (n = 102) felt that they did.

TABLE 1 Time spent on portfolios (n = 228)

| Time spent per week | Trainees, n (%) |
|---|---|
| 0-30 min | 45 (19.7) |
| 30 min to 1 h | 50 (21.9) |
| 1-2 h | 61 (26.8) |
| >2 h | 34 (14.9) |
| Not answered/missing data | 38 (16.7) |
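The percentages in this section are calculated against two different denominators: all 228 trainees in the Deanery for the grade breakdown and Table 1, and the 207 respondents for the answers to the ranking and time-adequacy questions. As a minimal sketch to make this explicit, the Python below recomputes a selection of the reported figures using only counts given in the text. The helper name `pct` and the dictionary labels are illustrative assumptions; the study's own analysis was carried out in SPSS.

```python
# Recompute some of the reported percentages from the published counts.
# `pct` and the dictionary labels are illustrative names, not from the study.

ALL_TRAINEES = 228  # psychiatry trainees sent the survey
RESPONDENTS = 207   # trainees who completed it


def pct(n: int, total: int) -> float:
    """Return n as a percentage of total, rounded to one decimal place."""
    return round(100 * n / total, 1)


# Denominator: all 228 trainees (grade breakdown, including the 4 SpRs)
grades = {"CT1": 46, "CT2": 36, "CT3": 52, "ST4": 34, "ST5": 31, "ST6": 25, "SpR": 4}

# Denominator: all 228 trainees (Table 1)
table_1 = {
    "0-30 min": 45,
    "30 min to 1 h": 50,
    "1-2 h": 61,
    ">2 h": 34,
    "Not answered/missing data": 38,
}

# Denominator: the 207 respondents
respondent_answers = {
    "Learnt most from WPBA feedback": 69,
    "Not enough time for portfolio": 105,
    "Enough time for portfolio": 102,
}

print(f"Response rate: {pct(RESPONDENTS, ALL_TRAINEES)}%")
for label, counts, total in [
    ("Grade", grades, ALL_TRAINEES),
    ("Table 1", table_1, ALL_TRAINEES),
    ("Respondents", respondent_answers, RESPONDENTS),
]:
    for item, n in counts.items():
        print(f"{label:<12} {item:<32} n = {n:>3} ({pct(n, total)}%)")
```

Keeping the two denominators as named constants, rather than repeating the raw numbers, makes it harder to divide a respondent-only count by the total number of trainees by mistake.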

Free-text answers

All free-text answers were read by N.H. The seven most frequent themes, ranked by the number of comments made about each, are listed in Box 5 with the number of comments, and are described below. Direct quotes are followed by the respondent number in brackets.

Time-consuming and anxiety-provoking

Many mentioned that portfolios were having an impact on their clinical work, as trainees were ‘rewarded more for sitting in front of the computer and filling out portfolios rather than doing clinical/other work’ (130). Others mentioned a need for protected portfolio time, as many were spending hours of their own personal time compiling the portfolio. This perhaps reflected there being ‘too many mandatory sections’ (58) and too many competencies to obtain. One trainee echoed the views of many others: ‘Candidates spend more time trying to jazz up their portfolios than improving their clinical assessments and spending time on the ward with patients’ (149). For some trainees, the time taken to complete their portfolios appeared to be closely linked to anxiety, making the process, as one put it, ‘very stressful, scary and threatening’ (206). This anxiety was fuelled by the possibility of having to appear before the ARCP panel if their portfolio was inadequate: ‘There is an immense fear of being called in front of the committee and made to feel like a guilty convict’ (58).

Ease of use

There were 61 positive and 30 negative comments on ease of use. In the positive comments, trainees said they liked having all the documentation relating to their training, achievements and competencies in one place, and found the online system user-friendly. Several trainees commented specifically on the practical advantages of an electronic portfolio. It is ‘accessible from anywhere with internet connection’ (34), including internet-enabled mobile telephones and tablet computers, and educational supervisors can also access their trainees’ portfolios, so ‘you don't need to carry heavy hard copies around’ (178). Almost all the negative comments centred on difficulties scanning in documents, uploading documents saved in various formats (such as GIF and PDF files), and a 300 KB limit on uploaded files. At the time of writing, many of these problems had been rectified.

Box 5 Top 7 recurrent themes from free-text answers

• Time-consuming, linked to anxiety (105 comments)
• Ease of use (91 comments)
• More clarity needed (57 comments)
• Encourages reflection (49 comments)
• Pride and ownership (48 comments)
• Training required (41 comments)
• Tick-box exercise (20 comments)

Clarity

Although trainees stated that the subsections within their portfolios were laid out clearly, they wanted more transparency about the minimum requirements for the ARCP. Some expressed annoyance and anger at changes made midway through the academic year to the fields and sections that had to be completed (e.g. the introduction of a new WPBA form or a record of supervision).

Encourages reflection

All WPBAs have to be accompanied by a reflective piece. There were positive and negative comments about this. Many wrote that reflection is important for self-learning but there were opposing views regarding whether it needed to be written down: ‘In reality, reflective practice occurs in conversations with peers, supervisors, while cycling home, having a gin in front of the TV…’ (216). Some trainees did not like being ‘forced’ to reflect: ‘Those trainees who tend not to self-reflect are not likely to improve just because they have to fill in a form telling them to reflect!’ (79).

Pride and ownership

Some trainees took great pride in their portfolios, seeing them as something they had created for their own benefit. Some felt it was an ‘excellent portfolio - ahead of other Deaneries by far’ (28).

Training

Trainees stated that training was needed on how to use the portfolio effectively, and that medical and non-medical staff needed training on the use of WPBAs. Many wanted training on how to reflect, what to reflect on, and how to document their reflective pieces. Some trainees were unsure how to demonstrate competency in certain areas, such as probity. Trainees also mentioned that some supervisors needed training on the purpose and uses of portfolios, so that supervisors could show genuine interest in trainees’ learning needs rather than viewing portfolios as a chore. Some went a step further back and asked for basic computer training, as there are ‘computer illiterate supervisors out there who can't validate forms’ (105).

Tick-box exercise

The feedback on this theme was overwhelmingly negative and included the most vociferous comments. Many comments concerned how the process takes trainees away from ‘real’ learning: ‘The system penalises doctors who want to do their job and not spend all their time jumping through hoops’ (149) and ‘it creates a tick-box reductionist approach to clinical medicine’ (179). Some questioned the portfolio's validity:

‘It is a tick-box exercise that decides on the competency of the doctor based on what is in the portfolio. Unfortunately the trainee decides what goes into the portfolio. The system is set up for favourable feedbacks, and the reality is masked by attrition bias and poor validity for the portfolio’ (91).

Discussion

Although some trainees took great pride in their portfolios, others viewed them as tools mainly for the benefit of others, a means of monitoring rather than of learning: ‘I cannot help seeing it as a tool that exists to police my training rather than develop it’ (89). Many saw the portfolio as a summative tool: ‘It is of no use except for passing or failing ARCP’ (139). Herein lies the conflict between those who see it primarily as a developmental tool to promote further self-learning and those who see it more as an assessment tool for registering competencies monitored by external agencies. Of course, the distinction may not be so clear-cut: although most respondents said that passing the ARCP was their main priority, this does not mean that they do not also see the portfolio as a learning tool. The development v. monitoring debate is nothing new, nor is it restricted to the medical profession. Similar views were expressed by teachers and corporate managers when portfolios were introduced over a decade ago. Reference Smith and Tillema9 Indeed, many of their comments are remarkably similar to those made by the psychiatry trainees in this study regarding time spent, reflection, and the need for further training and clarity.

One solution might be to have an optional or private section of the portfolio, reserved mainly for reflection and development and accessible only to the trainee, alongside a mandatory section containing what trainers consider essential for progression to the next stage. Indeed, some trainees in this survey suggested that the ‘ability to keep reflective notes private’ (191) would improve the system. This may reduce the number of comments about being ‘policed’ and perhaps encourage more open and honest reflection when mistakes occur. However, would trainees make regular entries if this section were optional? One London-based study showed that over 75% of psychiatry trainees did not keep portfolios or store reflective pieces before portfolios became mandatory. Reference Seed, Davies and McIvor7

Perhaps the solution is not to try to separate these two important functions of the portfolio, but to combine them so that trainees view it as a developmental assessment tool. Whether the assessment comes from a WPBA or the ARCP, it yields valuable information that can give insight into current practice and areas of need and, with support and feedback, can be used to promote self-awareness and future development. This highlights the importance of trainees’ perceptions of what the portfolio is for, and of training that shapes those perceptions. Smith & Tillema's study Reference Smith and Tillema9 showed that people who think more favourably of self-directed learning use portfolios as an instrument for personal development more easily and readily.

The responses in Fig. 3 are more widely spread than those in Fig. 2. This may imply that trainees agreed more about what portfolios are for than about how to use them in practice. Training could help with this, and many trainees requested it. Since 2008, all new trainees have received an induction on portfolio use, which has included learning theory to put the use of the portfolio in context, and nearly all trainers have been trained. The free-text comments about the need for training may therefore reflect a gap between trainees’ perceptions and what is actually happening. Good communication is important to prevent the ‘them and us’ mentality that came through in some answers, for example trainees perceiving some portfolio requirements as time-consuming measures designed to take them away from their core work. Open and transparent channels of communication between trainees and those in charge of any portfolio system are crucial. To this end, since 2007 the North Western Deanery has appointed trainee representatives who meet regularly with those responsible for portfolios and their implementation. The authors believe the METIS structure is sound and that, rather than changing the portfolio, the focus should be on further training for users so that they understand its prime purpose as a reflective, iterative tool that shows past achievements and future aspirations. D.L. has found that when users understand this fully, the quality of portfolios improves vastly. The METIS structure should also lend itself well to consultant portfolios, provided the regulatory framework is appropriate.

Portfolios do take time to complete, as Table 1 shows. However, data for 16.7% of trainees could not be coded quantitatively, including answers such as ‘Tend to do it in fits and bursts, not regularly’ (223) and ‘Don't tend to do it weekly… tend to accumulate stuff and then panic’ (113). It is difficult to know in which direction this would skew the data. The non-responders may have been those who spent the most time on their portfolios (and so would not want to spend more time answering questions); on the other hand, one could argue that these are the very people who would want their voices heard. Many trainees said portfolios were taking time away from clinical duties or their personal time. Portfolios also appeared to be affecting supervision time: ‘Supervision is taken over by what WPBAs need to be done rather than spending time talking about things the trainee really wants, such as career advice, etc’ (34). However, directors of medical education and their equivalents are also under pressure from regulatory bodies such as the GMC to ensure that certain standards are met and that regulatory guidelines are adhered to. Helping trainees to feel that time spent on portfolios is time well spent can be a challenge, but it is important to do so before misunderstandings and cynicism set in. For some, it is already too late:

‘The portfolio is far removed from clinical practice; trainees who spend time making their portfolio look good are not spending time interviewing patients and writing in the notes. This is surely the core of psychiatric training and we learn most from our patients, not from scanning documents, clicking virtual buttons and finding evidence to prove we have gained mastery of offering to make the secretaries cups of tea from time to time.’ (101)

A few felt the previous system was better: ‘This apprenticeship type of learning and appraisal is being lost’ (101). To balance this argument, others stated that WPBAs had, for the first time, made consultants actually observe their clinical practice and give feedback on it. Interestingly, WPBA feedback was the most highly regarded learning tool, more so than the rating scales used. Unless assessors use the rating scales consistently, there is an argument for not using them in practice. Some trainees voiced concern about the rating scales:

‘The rating scheme seems to be very differently understood by different assessors. One consultant considers ‘6’ to be good; another gives ‘3’ for exactly the same outcome. The result is that the marks are almost meaningless, and we go solely by the assessor's comments instead. This being the case, why not just have comments rather than ratings?’ (158)

This study is timely, as the Royal College of Psychiatrists has recently released its online portfolio system for UK trainees. Trainees within the North Western Deanery are in the unique position of having used an online portfolio system since 2008, and their views may provide useful feedback for trainees in the rest of the UK. Although the questions asked applied to any portfolio system and did not specifically assess online issues, several of the trainees’ answers nevertheless touched on this area.

The results are in keeping with work in other specialties. Senior house officers (equivalent to CT1 and CT2 trainees in the current UK postgraduate training scheme) in an emergency department and an obstetric unit who used ‘personal learning logs’ stated that more training and protected time were needed, and many said that the logs did not increase their learning. Reference Kelly and Murray10 Some authors question whether the portfolio is too prescriptive, and propose alternative models based on educational development, clinical practice, leadership, innovation, professionalism and personal experience. Reference Cheung11 However, other studies have found that medical students and general practice registrars find portfolios useful. Reference Grant, Ramsey and Bain12, Reference Snadden, Thomas, Griffin and Hudson13 The results also chime with Pitts’ ideas about how to introduce a portfolio successfully Reference Pitts and Swanwick1 (Box 6), all of which the North Western Deanery believes are in place. Trainees may need time to get used to incorporating portfolios into their working lives, which represents a huge change from their previous everyday practice, and this survey was carried out shortly after portfolios were introduced. It is hoped that trainees’ comments will continue to help the system evolve, and it will be interesting to repeat the survey after a few years.

Box 6 How to introduce a portfolio successfully Reference Pitts and Swanwick1

• Be clear about the purpose of the portfolio
• In design, consider together content, purpose and assessment
• Understand the level of experience and maturity of the learner
• Maintain the centrality of the ‘reflection on practice’
• Base on individual professional practice
• Provide clear instructions for use
• Develop and implement a well-resourced training strategy
• Provide institutional support and leadership

The expansion of portfolios within the changing National Health Service requires careful planning, training and perhaps dedicated time for completion, so that trainees can derive maximum benefit from the portfolio's intended purpose. It is hoped that, as a result, more trainees will come to echo one trainee who, through completing the survey, appears to have reflected on the true purpose of the portfolio:

‘I didn't know the importance of using it as an educational tool. I saw it as record-keeping exercise to pass the ARCP. I didn't see [any] clinical relevance to my development, but now I know’ (43).

Strengths

As far as we are aware, this is the first large-scale survey of UK postgraduate psychiatry trainees since portfolios became mandatory, so we found no existing questionnaires from which questions could be used or adapted. Because the survey was attached to the mandatory survey, the response rate was extremely high. It included a large number of open-ended free-text boxes, as these were felt most likely to elicit a wider and richer range of answers. Questions on trainees’ priorities used a ranking system, which we felt would focus the mind and make trainees think more carefully about their answers than a list of tick-boxes with the instruction ‘tick all that apply’.

Although the survey was carried out in the North Western Deanery, many of the findings could be applied nationally. We feel the trainees are broadly representative of those in the rest of the country and, given that the great majority of trainees responded, many of the results could be generalised.

As far as the authors know, this is also the first large-scale survey to look at time spent on portfolios and whether this was perceived as a barrier. Given that this was the most frequent theme, it was important to quantify how long trainees spend on their portfolios, an important consideration for anyone designing portfolios in future. The survey software prevented any trainee from answering more than once, an advantage over other online survey tools such as SurveyMonkey.

Weaknesses

One disadvantage of the ranking system is that it cannot accommodate options of equal importance. If, for example, getting through the ARCP and using the portfolio as a learning tool were equally important, the trainee is forced to place one above the other, and there is no way of quantifying whether the second choice only narrowly missed being the top choice.

The questions in this study were included within a much larger mandatory survey requesting feedback from trainees on various aspects of their training (including safety issues and the physical work environment). This ensured very high response rates. However, the questions in this study came at the end of a survey comprising approximately 60 questions and taking about 45 min to complete. By the time trainees reached them, many may have experienced ‘survey fatigue’ and may not have devoted as much time to them as they would have done had they been asked at the beginning. N.H. was allowed a maximum of six questions, which limited the amount of data that could be collected.

The questionnaire could have been more robust had a pilot study been conducted, followed by a focus group to discuss and add relevant questions. Alternatively, key people involved in the use and design of portfolios could have been asked to rate independently the most important questions to include in a survey of this sort, using the Delphi technique. However, these steps were not taken because of time constraints. One person read all the free-text answers and grouped them into emerging themes. This could have been made more robust by having two reviewers analyse the free text independently, or by using established coding techniques or software to help with the analysis.

Further research

It is envisaged that the questions asked of trainees in this study (Box 4) could form the basis of more detailed and specific qualitative work, perhaps with focus groups, to explore this area further. Another area of interest would be the views of supervisors on the utility of portfolios, as some respondents raised the issue of whether trainers understood the importance of the portfolio and suggested that some needed further training. Other research could focus on demonstrating the validity and reliability of portfolios and of the WPBAs contained within them.

Demonstrating the validity of portfolio systems is difficult, as some of the evidence in portfolios is descriptive and judgement-based rather than quantifiable. This is probably especially true of psychiatry portfolios, in which reflective pieces are central. Some authors propose using qualitative research evaluation criteria. Reference Webb, Endacott, Gray, Jasper, McMullen and Scholes14 Others have developed a Portfolio Analysis Scoring Inventory for use with portfolios in a medical setting. Reference Driessen, Overeem, van Tartwijk, van der Vleuten and Muijtjens15 This complex area is beyond the scope of this article but warrants further research.

Acknowledgements

We thank the North Western Deanery for allowing us access to the data supplied in this study.
