The development of writing and critical-thinking skills is a key objective and a core part of assessment in many undergraduate political science courses (Brown, Nordyke, and Thies 2022; Franklin, Weinberg, and Reifler 2014; Pennock 2011). As instructors, we prepare students for careers inside and outside of academia, where writing and the critical analysis of different types of evidence (whether for policy briefs, research, law reviews, political theory, or op-ed pieces) are likely to make up a significant part of their work.

The release of ChatGPT on November 30, 2022, changed everything. In a matter of seconds, ChatGPT could generate a response to almost any assessment prompt in any discipline. The uncomfortable part? The responses are good. For example, ChatGPT achieved the equivalent of a passing score for a third-year medical student on the United States Medical Licensing Examination (Gilson et al. 2023). ChatGPT scored 90% on logic-reasoning questions from the Law School Admission Test (Fowler 2023a) and earned passing grades on law school exams at the University of Minnesota Law School (Choi et al. 2023).

The emergence of Artificial Intelligence (AI) tools such as ChatGPT creates an obvious dilemma. Analytical writing is hard, and data analysis is even harder, especially for the many students who identify as math phobic (Oldmixon 2018). Students now have a technology that can do much of this difficult work for them in seconds. Many of the traditional assignments that we use to assess writing and critical-thinking skills, including essays, research papers, and short-answer reflections, are vulnerable to AI misuse (Faverio and Tyson 2023).

Despite the impact that AI has had on our world, we know little about students’ skills with the technology or their perceptions of it. We argue that pedagogical practices should be informed by a better understanding of students’ interactions with and attitudes toward AI. This article presents the results of a survey of students currently enrolled in political science courses at a large American public university. We asked students (1) how they are currently using AI tools, as well as how usage varies across student race/ethnicity, gender, GPA, and first-generation status; (2) how competent they feel using AI technology; and (3) how they perceive the appropriateness of AI and its effect on their future career. The results show that AI usage is widespread among undergraduate students, including for writing assistance on papers and essays, and that students are not confident in their skills for using AI in the ways they view as most appropriate for their learning (e.g., obtaining feedback on their writing and preparing for exams). The article discusses the pedagogical implications of the findings, including ideas for integrating AI in the classroom using research-informed practices that also are tailored to students’ perceptions of AI.

USE OF AI TOOLS IS BECOMING MORE WIDESPREAD, ESPECIALLY AMONG YOUNG ADULTS

It is becoming difficult to ignore ChatGPT and similar AI tools because their use is increasingly widespread, particularly among younger demographics. For example, a July 2023 survey by the Pew Research Center suggests that people enrolled in higher education are among the groups most likely to use ChatGPT (Park and Gelles-Watnick 2023). In the Pew survey, more than 40% of young adults aged 18 to 29 who had heard of ChatGPT had used it at least once, compared to only 5% of adults aged 65 and older. A May 2023 survey by Common Sense Media found that 58% of students aged 12 to 18 had used ChatGPT for academic purposes (Klein 2023).

AI usage also is increasing in the workforce, particularly in the legal sector. Because many political science students aspire to enter the legal profession, even those who do not use AI regularly may see their future employment prospects shaped by it. A Brookings Institution report argues that law firms that can use AI technology effectively will have an advantage because they can offer more services at lower cost (Villasenor 2023). Dell’Acqua et al. (2023) found that consultants who used AI significantly outperformed their counterparts who did not in terms of efficiency, quality, and quantity on various work-related tasks.

With AI literacy becoming increasingly valuable, it also is important to consider potential gender disparities in skill development with AI technology. Wikipedia, a commonly used classroom tool, has a significant gender imbalance in both the content-creation process and content output (Ford and Wajcman 2017; Young, Wajcman, and Sprejer 2023). Without careful instructor intervention (Kalaf-Hughes and Cravens 2021), this can result in gender gaps in women’s self-efficacy and engagement with technical tools in the classroom (Hargittai and Shaw 2015; Shaw and Hargittai 2018). We anticipated a similar gendered difference in usage and self-efficacy for AI tools such as ChatGPT. We also examined gaps in AI usage and competence across race/ethnicity and among first-generation college students, who confront unique barriers in acquiring the skills demanded in higher education (Stebleton and Soria 2012).

MANY ASSESSMENTS ARE VULNERABLE TO MISUSE OF CHATGPT

Students are using AI tools in ways that potentially undermine the learning objectives of assessments, whether by having the tools complete assignments or by relying on output that contains false information (Faverio and Tyson 2023). Instructors may find it impossible to determine with certainty whether an essay was written by a student or by AI, because many common detection tools have reliability problems (Fowler 2023b) and disproportionately flag non-native English speakers’ work as AI produced (Liang et al. 2023). AI technology also can produce misleading results when asked for information, sources, references, and citations. Although hallucination rates vary across AI platforms (Mollick 2023a), ChatGPT is especially prone to confidently providing false or fabricated information. Moreover, large machine-learning models trained on material from the Internet, such as ChatGPT, overrepresent hegemonic viewpoints and exhibit biases against minority groups (Bender et al. 2021). Critical AI literacy, therefore, has serious ramifications for academic integrity: students may be unknowingly spreading misinformation and reproducing societal biases in their work.

INTERN VERSUS TUTOR: AI AS A USEFUL CLASSROOM TOOL WITH INSTRUCTOR GUIDANCE

Despite the potential risks, AI can be an effective classroom tool for both instructors and students. Mollick (2023b) offers an interesting perspective on ChatGPT: “[S]everal billion people just got interns. They are weird, somewhat alien interns that work infinitely fast and sometimes lie to make you happy, but interns nonetheless.” Successfully “onboarding” this intern, by learning its strengths and weaknesses, is associated with a 55% increase in productivity on some writing and coding tasks (Noy and Zhang 2023; Peng et al. 2023). AI tools such as ChatGPT also can serve as a free, low-stakes, and accessible tutor by explaining concepts and theories to students in multiple ways, providing feedback on writing, helping to generate ideas, and debugging code.

Although AI misuse has significant ramifications for academic integrity and societal implications of perpetuating biases, there also is the reality that AI can be an essential classroom tool that improves student outcomes and career readiness. We argue that we need a better understanding of student usage of AI technologies so we can make more informed pedagogical decisions about how to incorporate AI literacy in the classroom and reduce the potential growth of technology gaps (Trucano 2023).

METHODS

We conducted an online survey in September 2023 among a sample of 106 students currently enrolled in political science courses at a large public university (Cahill and McCabe 2024).¹ Students were informed that their participation in the survey would not be tracked or required as part of their courses and that their responses were anonymous.² The sample included a mix of class years (17% freshmen, 35% sophomores, 35% juniors, 12% seniors, and 1% other), consisted mostly of social science majors or intended majors (88%), and was balanced across gender, with 54% identifying as female and 43% as male. Approximately 26% of respondents reported that neither of their parents or legal guardians had graduated from a four-year college or university. The sample was diverse in terms of race/ethnicity: 39% identified as non-Hispanic white, 28% as Hispanic, 8% as non-Hispanic Black, 21% as Asian/Pacific Islander, and 4% as another race/ethnicity.

The survey asked students how often they used AI tools such as ChatGPT; their skills using those tools; and their perceptions of the appropriateness, benefits, and harms of these tools. Key outcomes analyzed in the results included the following.

- ChatGPT Usage. Students were asked how often they use ChatGPT for 12 different academic and nonacademic tasks, such as finding new music and recipes, exploring new ideas, finding sources for papers, and analyzing data. Response options were “never,” “rarely,” “a few times per month,” “several times per week,” and “at least once per day.” We classified students as ChatGPT users for each task if they used the tool at least rarely; even if students did not use ChatGPT regularly, using it at least once may indicate potential future use. We compared these ChatGPT users to students who stated that they have never used the tool.

- Skill Levels. The survey asked respondents to rate their skills with AI tools such as ChatGPT by indicating their agreement with six statements on a 5-point scale from “strongly disagree” to “strongly agree.” The statements covered overall competence with AI; the ability to use AI in ways that adhere to university integrity policies and that do not violate plagiarism policies; and the ability to use AI for help with writing, data analysis and visualization, and preparation for quizzes and exams.

- Appropriate Use of AI. The survey asked students to what extent they think it is appropriate to use AI technology for eight different academic purposes, including writing components of research papers and/or personal essays, finding reliable sources, solving math problems, visualizing data, studying for exams, providing writing feedback, and generating research questions. Responses were on a 5-point scale from “extremely inappropriate” to “extremely appropriate.” The survey also asked students to indicate whether various potential AI uses in society were a “good idea,” a “bad idea,” or “it depends.”

- Effect of AI on Future Career. Students were asked how concerned, if at all, they are about how AI might affect their future career, followed by an open-ended question asking them to explain their reasoning.
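The “at least rarely” coding rule for the usage items can be stated in a few lines. The Python sketch below is illustrative only: the enum names, the label-to-code mapping, and the helper functions `is_user` and `share_of_users` are hypothetical and are not drawn from the authors’ replication materials. It orders the five response options and codes a respondent as a user for a task if the response is “rarely” or more frequent.

```python
from enum import IntEnum


class Frequency(IntEnum):
    """Ordinal response options for the ChatGPT usage items, in survey order."""
    NEVER = 0
    RARELY = 1
    FEW_TIMES_PER_MONTH = 2
    SEVERAL_TIMES_PER_WEEK = 3
    AT_LEAST_ONCE_PER_DAY = 4


# Hypothetical mapping from response labels to ordinal codes.
LABELS = {
    "never": Frequency.NEVER,
    "rarely": Frequency.RARELY,
    "a few times per month": Frequency.FEW_TIMES_PER_MONTH,
    "several times per week": Frequency.SEVERAL_TIMES_PER_WEEK,
    "at least once per day": Frequency.AT_LEAST_ONCE_PER_DAY,
}


def is_user(response: str) -> bool:
    """Code a respondent as a user for a task if they chose 'rarely' or more often."""
    return LABELS[response.strip().lower()] >= Frequency.RARELY


def share_of_users(responses: list[str]) -> float:
    """Proportion of respondents coded as users for a single task."""
    if not responses:
        raise ValueError("no responses to summarize")
    # Booleans sum as 0/1, so this counts the users.
    return sum(is_user(r) for r in responses) / len(responses)
```

For instance, four hypothetical responses of “never,” “rarely,” “never,” and “several times per week” would yield a user share of 0.5. Treating the options as an ordered scale keeps the threshold in one place, so a stricter cutoff (e.g., monthly use) would require changing only the comparison in `is_user`.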

RESULTS

Respondents were asked first about the types of AI tools they use on a regular basis. Most respondents (58%) reported using Grammarly and/or Siri, suggesting familiarity with modern digital tools, and almost 90% reported that they were at least slightly familiar with ChatGPT. Students in the sample routinely rely on several tools to assist with their academic work, defined as studying, writing papers, and working on assignments (see online appendix figure A1). Tools such as Spell Check and Grammar Check are extremely common, with 73% and 64% of students reporting using them, respectively. More students reported using ChatGPT for their academic work (33%) than tutoring (14%) or taking advantage of university writing and learning centers (15%).

Students Use ChatGPT for a Variety of Academic Tasks

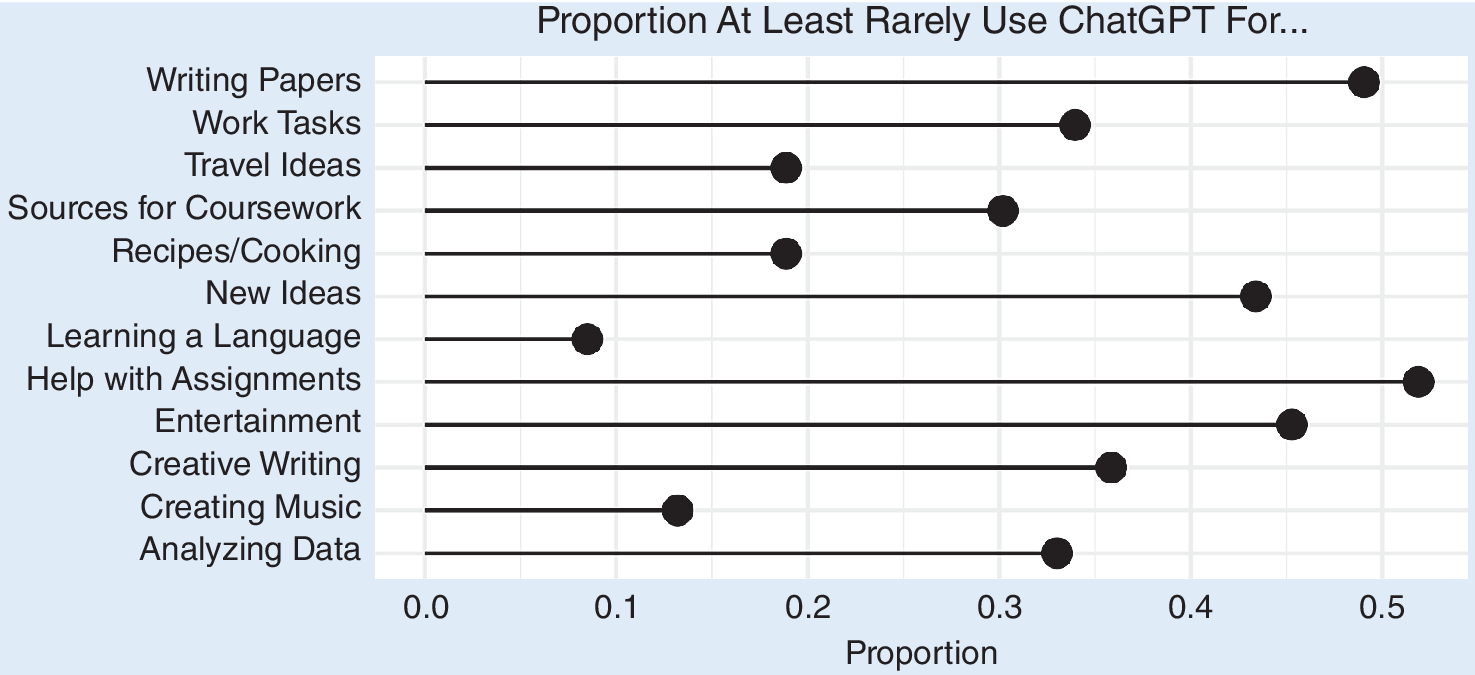

Figure 1 lists the proportion of respondents who used ChatGPT for different tasks. Almost 50% reported using ChatGPT for assistance with writing papers, and 52% more generally reported using the tool for help with assignments. Less common academic uses included finding sources (33%) and analyzing and visualizing data (30%). Many respondents also used ChatGPT outside of the classroom for entertainment (45%).

Figure 1 Proportion of Respondents Using ChatGPT for Different Purposes

The survey also made it possible to examine demographic differences in self-reported ChatGPT use across several dimensions: whether students’ parents were college graduates, gender, and race/ethnicity (figure 2). These demographics may be correlated with digital literacy. We also examined differences by GPA to gauge whether ChatGPT serves as an additional tool for students who already are doing well academically or as support for those who are struggling.

Among those in our sample, first-generation respondents (i.e., those who reported that neither parent had graduated from college) were more likely than non-first-generation respondents to report using ChatGPT for a variety of tasks, especially for writing papers (p<0.01) and help on assignments (p<0.05). Consistent with the literature on gendered differences in usage of and self-efficacy with technical tools such as Wikipedia, we also observed that men used ChatGPT at higher rates than women for almost all usage types. However, these differences in means did not reach conventional levels of statistical significance, except for entertainment purposes (p<0.05).
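The article reports differences in means without naming the test used. Because each usage measure is a 0/1 indicator, a difference in means equals a difference in proportions, and one common way to assess it is a pooled two-proportion z-test. The Python sketch below illustrates that approach under this assumption; it is not necessarily the procedure behind the reported p-values, and the function name `two_proportion_z_test` is hypothetical.

```python
import math


def two_proportion_z_test(users_a: int, n_a: int, users_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in proportions, using the pooled standard error.

    Returns (p_a - p_b, two-sided p-value).
    """
    if min(n_a, n_b) <= 0:
        raise ValueError("group sizes must be positive")
    p_a, p_b = users_a / n_a, users_b / n_b
    # Pooled proportion under the null hypothesis that both groups share one rate.
    pooled = (users_a + users_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Everyone (or no one) is a user in both groups: no variation to test.
        return p_a - p_b, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, p_value
```

The inputs are counts of users and group sizes for one task, for example first-generation versus non-first-generation respondents. With subgroups of a few dozen respondents, as in a sample of 106, the normal approximation can be rough, and an exact alternative such as Fisher’s exact test may be preferable.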

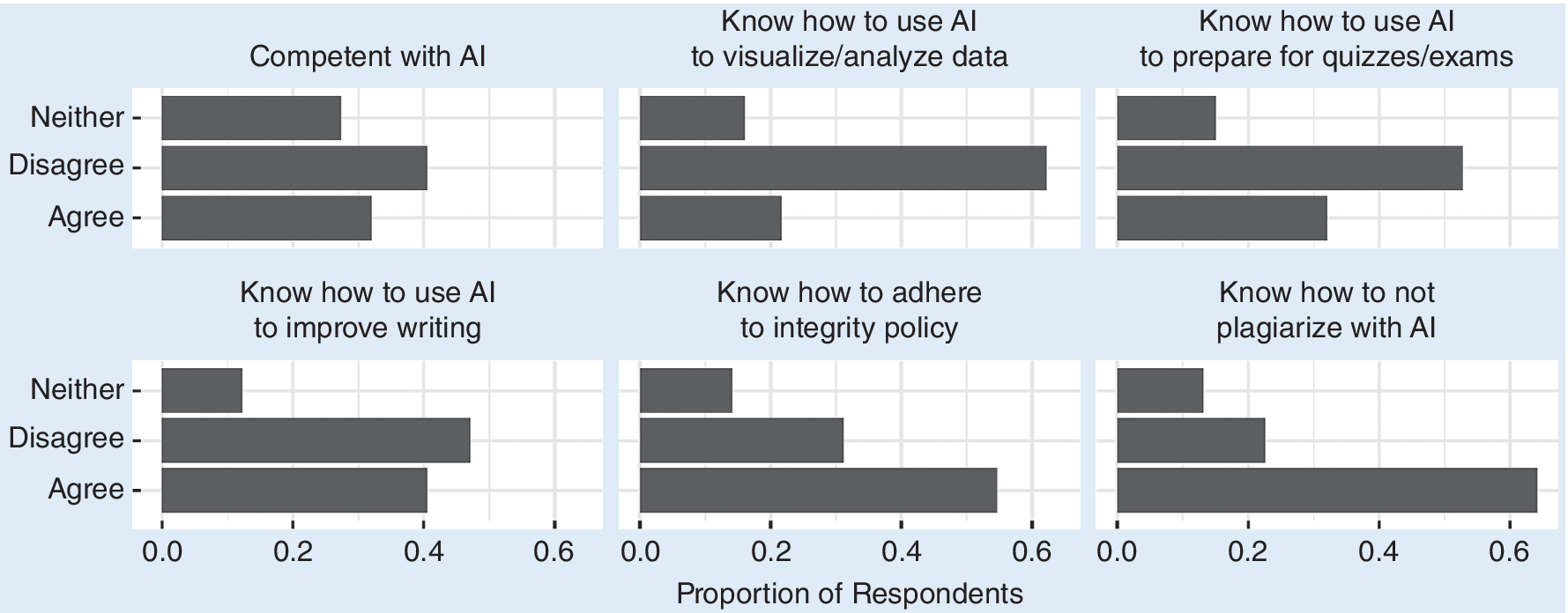

We next compared how students rated their own skills with AI. Whereas students generally were confident that they can use the tools in ways that avoid plagiarism and otherwise do not violate university integrity policies, they were less confident in their skills more generally (figure 3). That is, 40% of respondents “somewhat” or “strongly” disagreed that they were competent with AI technology. Students disagreed more than they agreed that they possess the skills to know how to use AI to improve their writing, prepare for quizzes and exams, and analyze and visualize data. Only 8% of respondents “strongly agreed” that they know how to use AI to prepare for exams or analyze and visualize data, and only 11% “strongly agreed” that they know how to use AI to improve their writing.

Figure 3 AI Skills Self-Assessment

Not all students reported feeling equally skilled with AI technology. Male students reported higher average skill assessments than female students (p<0.05), including for overall competence (p<0.10). Consistent with their greater reported use of ChatGPT, first-generation students self-reported higher skills than students with at least one parent who is a college graduate (p<0.10; see online appendix figure A3). First-generation students face unique challenges to academic success, including perceived weak writing, math, and study skills (Stebleton and Soria 2012). AI technology can serve as an accessible and low-stakes tutor to help students overcome these challenges. Future work is needed to validate our findings and to better understand whether first-generation students are using AI tools such as ChatGPT appropriately and effectively. If they are, this technology has the potential to break down barriers and reduce achievement gaps for first-generation students.

Not All AI Tasks Are Viewed as Equally Appropriate

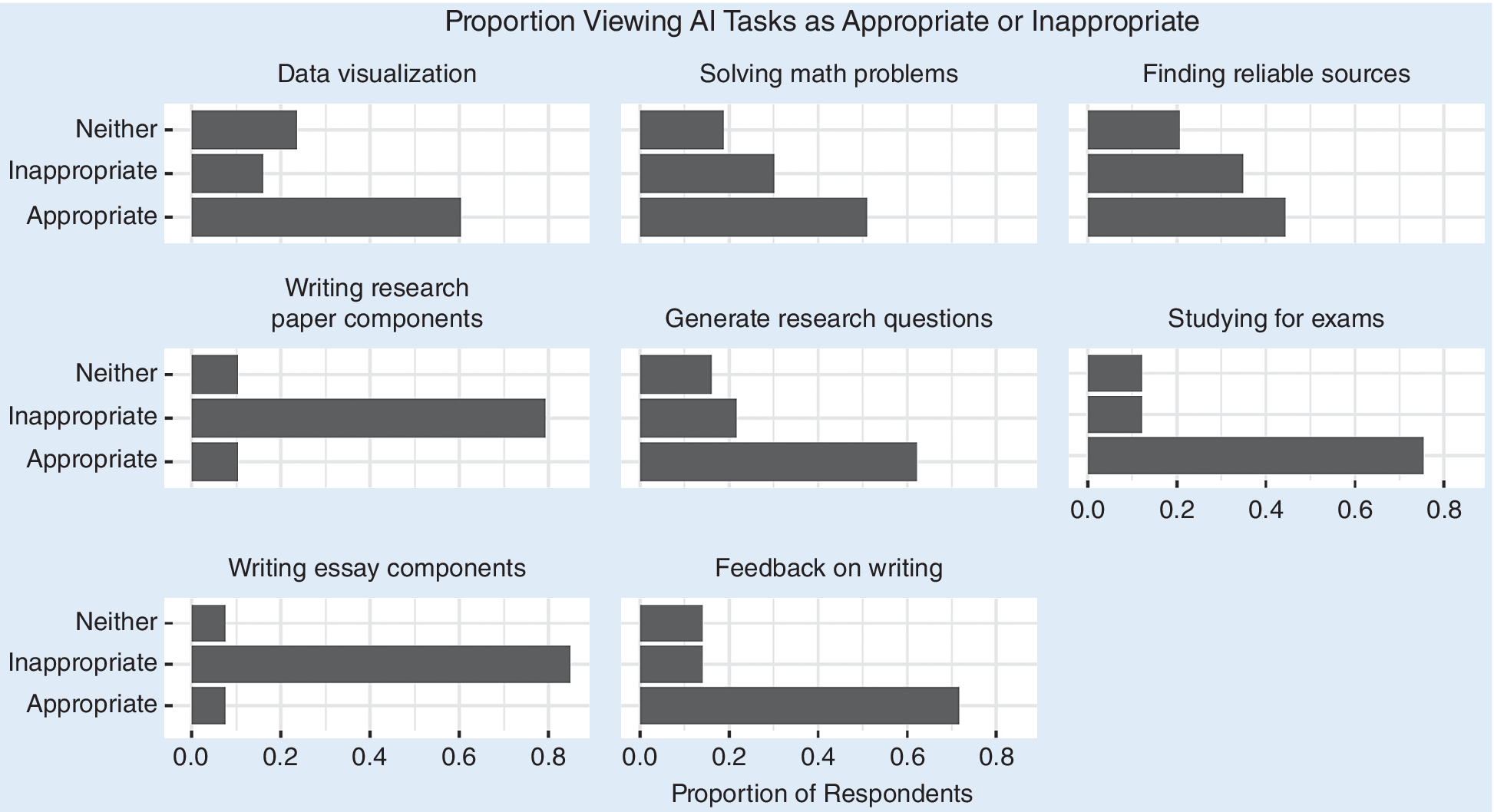

Students had nuanced perceptions of the role of AI, viewing some of its functions as much more appropriate than others. On average, respondents viewed using AI to write components of research papers and essays as very inappropriate. In contrast, they perceived using AI to help with studying for exams, providing feedback on writing, and visualizing data as much more appropriate. It is worth noting that the areas where students are not confident in their skills for using AI (figure 3) are the same areas where students tend to find AI to be the most appropriate for classroom usage (figure 4).

Figure 4 Perception of AI Appropriateness for Different Academic Tasks

Students Are Ambivalent About the Growth of AI

Students also were skeptical about the growth of AI in different parts of society. The only area where students viewed the use of AI as more of a good idea than a bad idea was machines that perform risky jobs (e.g., coal mining) (see online appendix figure A2). Within academic contexts, respondents were particularly concerned about AI being used to write letters of recommendation or to write papers and complete assignments. When asked more generally about the growth of AI in academic settings, a majority of students (58%) believed that AI is equally harmful and beneficial to them; approximately 25% believed it will be more harmful.

Nonetheless, students acknowledged that AI is here to stay, with more than 80% reporting that they were at least somewhat concerned about the effect of AI on their future career. Among these students, the most common concern was potential job replacement (49%), particularly in the legal field, which 53% identified as their desired future profession. Other concerns included potential misuse of the technology in the workplace and society (18%), the fear of not being adequately prepared to use AI technology in their future field (10%), and the worry that individuals fluent with AI will have an unfair advantage over others (9%). Table 1 summarizes these concerns and presents sample responses to the open-ended question that asked students to explain why they are concerned about AI’s effect on their future career.

Table 1 Sample Open-Ended Responses About How AI Will Influence Employment

Note: N = 92 respondents who stated that they are at least somewhat concerned about the effect of AI technology on their future career.

PEDAGOGICAL IMPLICATIONS

Our first major takeaway from the survey is that the wariness of many instructors toward ChatGPT is warranted: roughly half of respondents reported using ChatGPT for help with writing papers or completing assignments. This high rate of usage also indicates that instructors must clearly communicate classroom policies about the use of AI tools.

Second, the types of AI tasks that students find most appropriate also are those that survey respondents felt less competent using. Few students felt confident in using AI to improve their writing, prepare for exams, and analyze data; however, they believed that these are the most appropriate uses for AI. Encouraging the exploration of AI technology during class activities and in course assessments can help students to gain skills in using AI technology as an intern or tutor (Mollick 2023b). A simple activity is to give students a quiz in class and then demonstrate how to ask an AI tool such as ChatGPT to help them understand why their answer was incorrect. Learning how to ask ChatGPT questions is a skill much like learning how to search for peer-reviewed sources; it is not always intuitive, and it requires practice.

Third, almost 50% of respondents believed that using AI tools to find reliable sources is at least a somewhat appropriate use of AI. As mentioned previously, there are serious risks in relying on machine-learning models due to their tendency to hallucinate false or misleading information. Failure to teach this critical AI literacy component could have serious ramifications for students as they prepare for their future career.

Fourth, although most demographic differences in AI use did not reach conventional levels of statistical significance, interesting patterns appear in our sample and should be explored in future research. For example, first-generation students were more likely to use AI technology. Are these students using AI in a way that would reduce or increase potential achievement gaps, and can instructors help students apply AI tools in ways that may improve equity? Our survey was limited in that it represents only a snapshot of how a diverse sample of political science students viewed and applied AI tools in Fall 2023. These findings may change over time as more students become familiar with AI, as instructors begin to formalize policies on AI usage, and as AI tools themselves change. We recommend that future researchers continue to measure student perceptions and usage of AI tools on a regular basis in ways that are tailored to their own student demographics. Moreover, future research can examine the underlying psychological motivations and institutional incentives that lead students to opt in or out of using AI technologies.

Finally, we emphasize that students believe their future career will be affected by AI technology, and the evidence reviewed earlier, from the legal sector to consulting, supports this belief. As instructors, we should reflect on our course objectives to ensure that we are adequately “raising the bar” to prepare students for these changes. We should critically and creatively evaluate our course assessments to consider how AI technology could enhance student learning outcomes while also helping our students to acquire the necessary skills for remaining competitive in the changing landscape of higher education and careers.

SUPPLEMENTARY MATERIAL

To view supplementary material for this article, please visit http://doi.org/10.1017/S1049096524000155.

DATA AVAILABILITY STATEMENT

Research documentation and data that support the findings of this study are openly available at the PS: Political Science & Politics Harvard Dataverse at https://doi.org/10.7910/DVN/1QA5PC.

CONFLICTS OF INTEREST

The authors declare that there are no ethical issues or conflicts of interest in this research.