Critical thinking, according to the well-accepted succinct definition of Ennis (1985, 45), is “reflective and reasonable thinking … focused on deciding what to believe or do.” The complex process of critical thinking involves a wide range of skills. Among these, the most essential are identifying the logical structure of an argument, assessing whether a claim is made on sound empirical grounds, weighing opposing arguments and evidence fairly, and seeing beneath the surface and through false assumptions (Cottrell 2017, 2). These skills are vital in enabling students to make sense of important issues in and beyond the discipline of political science (Atwater 1991; Cohen 1993). Providing students with an intellectual tool kit of critical thinking skills has been widely accepted as an essential function of modern higher education (Hanscomb 2015). Moreover, the abilities to deconstruct a narrative, to question the assumptions that underpin a claim, to explore the relevance and reliability of the sources of information provided, and to appreciate the logic and reasons behind an argument different from one’s own are crucial for responsible citizens’ engagement in politics (Lamy 2007; ten Dam and Volman 2004). This is particularly so when responding to the recent resurgence of populism, racism, and hate discourses.

Yet for many educators, including ourselves, developing students’ critical thinking skills is a challenging task (Çavdar and Doe 2012). It is sometimes assumed that students will somehow “absorb” the skills of critical thinking through “immersing” themselves in the environment of higher education, observing their peers, or reading the literature associated with their degree programs (Ennis 1989). Having taught in a wide range of higher education settings, however, we have observed that not all students are able to pick up critical thinking skills through their normal university experiences and class participation. This observation, combined with feedback we frequently received from students regarding the difficulties they had with grasping the fundamental tenets of critical thinking, motivated us to look beyond the conventional immersion approach and seek strategies that are more explicit and effective in helping students develop their critical thinking skills.

Existing research suggests that issue-based live debates are effective in explicitly demonstrating some of the most essential critical thinking skills (Roy and Macchiette 2005). Pedagogical experiments have shown that a “crossfire-style” live debate between two instructors performed in front of a class can effectively heighten students’ interest and engagement in the academic discipline of political science; such a performance can also demonstrate the feasibility of disagreement or critique in a civil manner, dispelling a common misperception that political disagreement is necessarily conflictual (Baumgartner and Morris 2015). Inspired by these findings, we piloted a pedagogical experiment with a group of 45 final-year undergraduates taking a class on politics and international development in East Asia. During the experiment, we performed a regular segment of issue-based live debates between ourselves during the weekly lectures and explicitly debriefed the critical thinking skills employed during our debates. We also assessed the students’ critical thinking skills before and after the interventions through a series of standardized short-answer question exercises (SQEs), which formed part of the students’ summative assessment for the course. The empirical results demonstrate a positive correlation between our experimental interventions and our students’ performance in the SQEs designed to test their critical thinking skills. This suggests that live debates on current political issues, accompanied by immediate and explicit debriefs on the critical thinking skills used, are indeed effective in improving students’ critical thinking skills, at least in the short term and in certain higher education settings.

INTERVENTIONS

We conducted our pedagogical experiment during a 12-week final-year undergraduate course titled “Development and Change in the Asia Pacific” during the 2016–17 academic year. This course is designed to deepen the students’ understanding of the processes of political and economic development in the Asia-Pacific region, with a particular focus on China, Japan, and Korea. In addition to the subject-specific knowledge, critical thinking skills are also among the course’s learning outcomes, as is commonly the case in British universities. This semester-long course had two 2-hour sessions in each teaching week, and all students were taught together in the same group.

Existing research has posited a direct link between critical thinking skills and the act of questioning knowledge bases (Cuccio-Schirripa and Steiner 2000). Live debates, in this regard, are effective tools in teaching critical thinking skills, because they create arenas in which participants have to apply a variety of critical thinking skills to question the premises of opposing arguments and to ascertain the most convincing explanation. Moreover, training in critical thinking skills in political science requires educators to “bring students into contact with the world outside their own unchallenged perceptions of it” (Hoefler 1994, 544), and live debates on current political affairs can vividly demonstrate to them the necessity of admitting “in principle that the possibility that one’s premises do not always constitute good grounds for one’s conclusion” (Johnson and Blair 2006, 50–51).

To demonstrate how to apply critical thinking skills, in late 2016 we intervened in the normal teaching and learning activities of our course with a regular segment of live debates between us on current political issues. Each of our intervention sessions lasted approximately 15 minutes and consisted of (1) a brief introduction in which we identified the topic for the session, clarified the rules including how we would take sides in the live debate, and explicitly reminded our students that the main purpose of our live debate was to demonstrate the critical thinking skills that were to be evaluated through formal assessments; (2) a live debate during which we questioned, critiqued, or critically concurred with each other’s ideas; and (3) a short after-debate debrief during which we explicitly commented on the lessons (and sometimes the mistakes) from our application of critical thinking skills during our debates.

Each live debate lasted approximately 10 minutes and was focused on a current political issue that was relevant to but not specifically a part of the curriculum. For example, in November 2016, we focused our second debate on the United States’ withdrawal from the Trans-Pacific Partnership shortly after then president-elect Donald Trump announced that he would honor the promise he made to do so during the election campaign.Footnote 1 Before the class, we briefly discussed the possible ramifications of this action. When the lecture started, we flipped a coin in front of the class to decide which position each of us would take in the debate. We did this deliberately, with the hope of demonstrating explicitly to the students that critical thinking skills are needed and helpful regardless of one’s position in an academic argument or debate. This intention, along with a description of the skills that we would like students to observe during the debates, was clearly communicated to them before the actual debates.

During our debates, we made an effort to demonstrate a variety of critical thinking skills that are widely identified as essential for students in and beyond the discipline of political science. These included questioning the definitions of terms, identifying pertinent ideas and factors, reasoning, adapting to context, and, especially, distinguishing opinions from facts (Fitzgerald and Baird 2011). In addition, from our previous teaching experience we were aware that some students may confuse critical thinking with criticism. To demonstrate that critical thinking skills can, and should, be applied to deepen and enrich discussions in which the participants fundamentally agree, in our final discussion we deliberately chose to take the same side on the following proposition: the issue of climate change presents an opportunity for the Asia-Pacific region to deepen international cooperation.

Our skepticism regarding the assumption that students can somehow “naturally” grasp critical thinking skills by immersing themselves in the environment of higher education led us to make targeted efforts to articulate explicitly what critical thinking skills are and how to apply them. To ensure that our students were completely aware of what we were trying to teach them through the live debates, after each one we always spent a few minutes elaborating the lessons (and sometimes the mistakes) from our application of critical thinking skills. Students were also invited to participate in these debriefs by asking questions and offering comments on the critical thinking skills we employed during the debates.

MEASURES

Altogether, we introduced three interventions (live debates) during the experiment period. To measure the effectiveness of these interventions, we introduced a series of five SQEs as a component of the formal assessment for the class: they were spaced out across the semester at two-week intervals. Each SQE gave the students a choice of two academic articles or book chapters to assess critically.Footnote 2 The students were required to write no more than 200 words articulating why they agreed, disagreed, or partially agreed with the main argument presented in the selected text.

The students were informed that there were no “right” or “wrong” answers to the questions and that their grade depended only on the level of competence they displayed in applying critical thinking skills to the tasks set. Furthermore, it was made clear to them that they were expected to learn these skills from observing the live debates, listening to our introductions, and participating actively in the debrief sessions. Following the standard procedure for summative assessments at the university in which the experiment was conducted, all answers were marked anonymously by a main examiner who used a grading rubric that focused on critical thinking skills.Footnote 3 For each SQE, a random sample of answers in each grade band was independently second marked, following the same rubric used by the main examiner. The university procedure requires that any disputed cases should be discussed between the two examiners, and whenever the first examiner is successfully challenged during such a discussion, the answers should be re-marked in their entirety. In the year in which we conducted this experiment, no such action was necessary. Finally, at the end of the semester, an external examiner from another university also randomly selected several answers in each marking band of all SQEs to review the grades in the context of the rubric and to benchmark them against the relevant national academic quality assurance framework. In the particular year in which we conducted our experiment, the external examiner was not only satisfied with the marks but also praised the quality and consistency of the marking process.

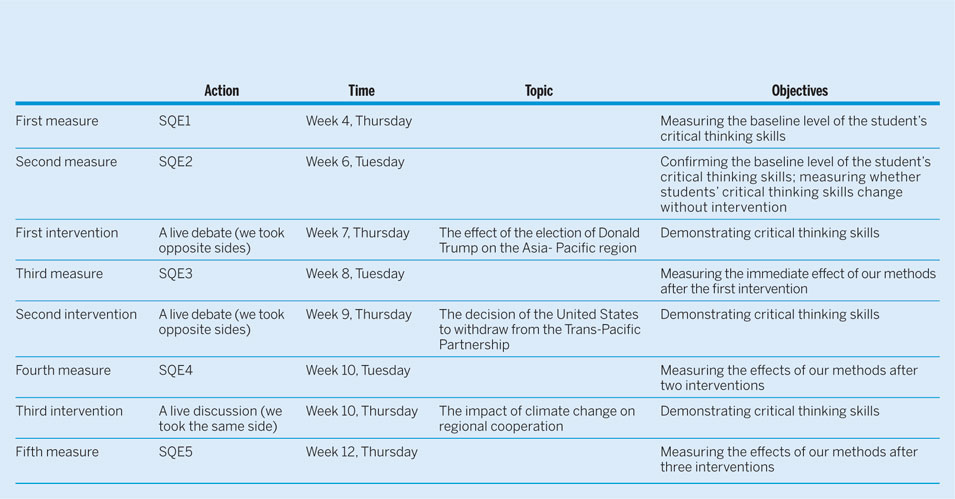

We outline the experiment sequence in table 1. After an initial period in which we introduced the course and went over some basic knowledge regarding critical thinking skills, we introduced the first SQE in week 4 to obtain baseline information regarding the critical thinking skills of our students. As a pilot experiment, we did not separate our students into a treatment group and a control group. Therefore, we did not introduce any intervention between the first two SQEs, so that a comparison between their results could enable us to identify the “normal” trend of academic performance when the students are exposed to ordinary teaching and learning sessions. We introduced our first intervention shortly before SQE3, and we took opposite positions in that debate. A similar intervention, during which we once again took opposite positions, was introduced between SQE3 and SQE4. Our final intervention was conducted between SQE4 and SQE5, and on this occasion we deliberately chose to concur with each other.

Table 1 Experiment Arrangements

RESULTS

The empirical results of the SQEs show that our pilot experiment was a success, suggesting that demonstrating critical thinking skills explicitly through live debates on current political issues can indeed significantly improve these skills in students. Generally, the overall performance of the class in SQE4 and SQE5 was noticeably better than in the previous three: this upturn followed our second and third interventions. The SQE3 result stands out as having, by far, the widest spread of scores. Although the median score of SQE3 was similar to that of SQE1 and even slightly lower than that of SQE2, its upper quartile is noticeably higher than those of both previous measures, suggesting that at least some students started grasping the critical thinking skills that we hoped to teach them immediately after the first intervention.Footnote 4
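The comparison of medians and quartiles described above can be illustrated with a minimal sketch. The scores below are invented for illustration and are not the study's data; the function name `quartile_summary` is likewise hypothetical. The sketch shows how two sets of scores can share a median while one has a clearly higher upper quartile.

```python
import statistics


def quartile_summary(scores):
    """Return the quartiles and interquartile range of a list of scores."""
    # statistics.quantiles with n=4 returns the three cut points Q1, Q2, Q3.
    q1, q2, q3 = statistics.quantiles(scores, n=4)
    return {
        "lower_quartile": q1,
        "median": q2,
        "upper_quartile": q3,
        "iqr": q3 - q1,
    }


# Hypothetical scores only: same median, different spread.
baseline_scores = [52, 55, 58, 60, 62, 64, 66]
post_intervention_scores = [45, 52, 57, 60, 68, 72, 75]

baseline = quartile_summary(baseline_scores)
post = quartile_summary(post_intervention_scores)

print("baseline:", baseline)
print("post-intervention:", post)
```

In this invented example the medians coincide, but the second list has a higher upper quartile and a wider interquartile range, which is the kind of pattern the text reports for SQE3.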

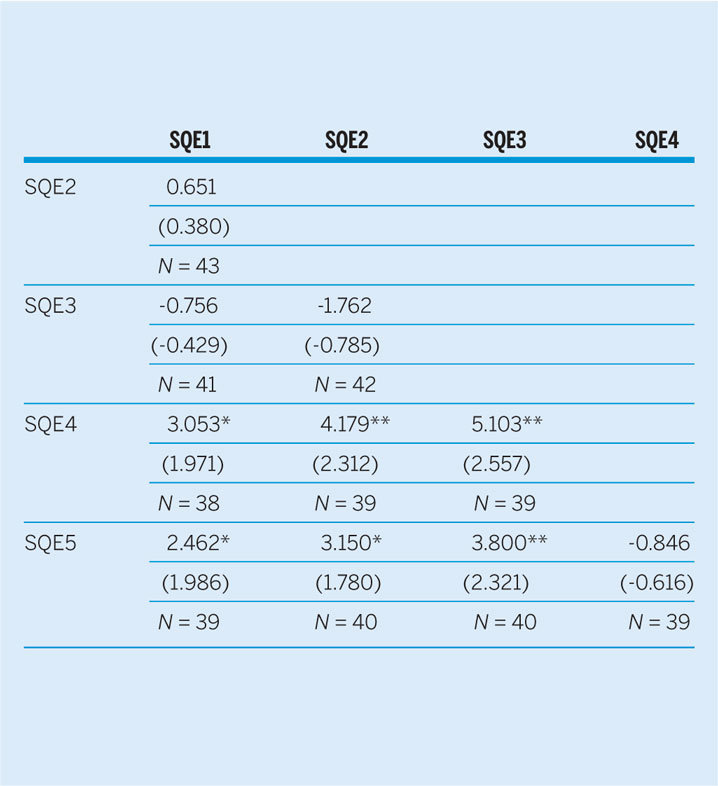

Because the aggregated scores may be affected by the presence or absence of certain students, we examined the impacts of our interventions on the individual level by conducting a series of paired t-tests to compare each student’s performance in different SQEs. As shown in table 2, although, on average, many students performed slightly better in SQE2 and slightly worse in SQE3, the difference in their performance during the first three SQEs is not statistically significant. However, after being exposed to at least one purposely designed issue-based live debate in the full cycle of preparing for their assignment, on average each student scored 3 to 5 points (or between 4.7% and 7.8%) higher in SQE4 than in the previous three exercises, and these results are statistically significant. The results of SQE5 followed the same pattern, confirming that the performance of students significantly improved after we explicitly demonstrated essential critical thinking skills through live debates based on current political issues.Footnote 5

Table 2 Paired t-Test Results in the 2016–17 Cohort

Notes: In each non-header cell, the number in the first line displays the paired difference (the mean score of the earlier SQE subtracted from the mean score of the later SQE; e.g., SQE2-SQE1); the bracketed number in the second line displays the t value; and the N in the third line displays the number of pairs included in a particular t-test. *p < 0.1; **p < 0.05.
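For readers unfamiliar with the three quantities reported in each cell (paired difference, t value, and N), the following is a minimal sketch of how they can be computed. It assumes scores are stored per student and that only students who completed both exercises form a pair; the student labels, scores, and the function name `paired_t` are all hypothetical, and the text does not state which software was actually used.

```python
import math
import statistics


def paired_t(earlier, later):
    """Return (mean paired difference, t value, number of pairs).

    `earlier` and `later` map a student identifier to a score.
    Only students with a score in both exercises are paired.
    """
    shared = sorted(set(earlier) & set(later))
    # Difference is later minus earlier, e.g. SQE4 - SQE1.
    diffs = [later[s] - earlier[s] for s in shared]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two pairs are needed")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are equal")
    t_value = mean_diff / (sd_diff / math.sqrt(n))
    return mean_diff, t_value, n


# Hypothetical scores only; student "e" missed the later exercise
# and student "f" missed the earlier one, so neither forms a pair.
earlier_sqe = {"a": 60, "b": 55, "c": 62, "d": 58, "e": 65}
later_sqe = {"a": 64, "b": 58, "c": 67, "d": 60, "f": 70}

mean_diff, t_value, n_pairs = paired_t(earlier_sqe, later_sqe)
print(f"paired difference = {mean_diff:.2f}, t = {t_value:.3f}, N = {n_pairs}")
```

The t value would then be compared against a t distribution with N - 1 degrees of freedom to obtain the significance levels marked with asterisks. Pairing by student is what lets N differ from cell to cell when attendance varies.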

We also performed paired t-tests in the subgroups of male, female, domestic, and international students. The pattern of the dynamics of the students’ performance in different SQEs appears to be mostly similar among these subgroups, and between them and the whole sample, suggesting that the findings reported in table 2 are robust.Footnote 6

The empirical results reported in table 2 also show that there is no linear progression in the students’ performance from SQE1 to SQE5: their performance improved in SQE2 and SQE4, but decreased in SQE3 and SQE5, despite the general trend of improvement during our experiment. This suggests that improvement in the students’ performance cannot simply be explained as resulting from their increased familiarity with the task or the topics of the course.

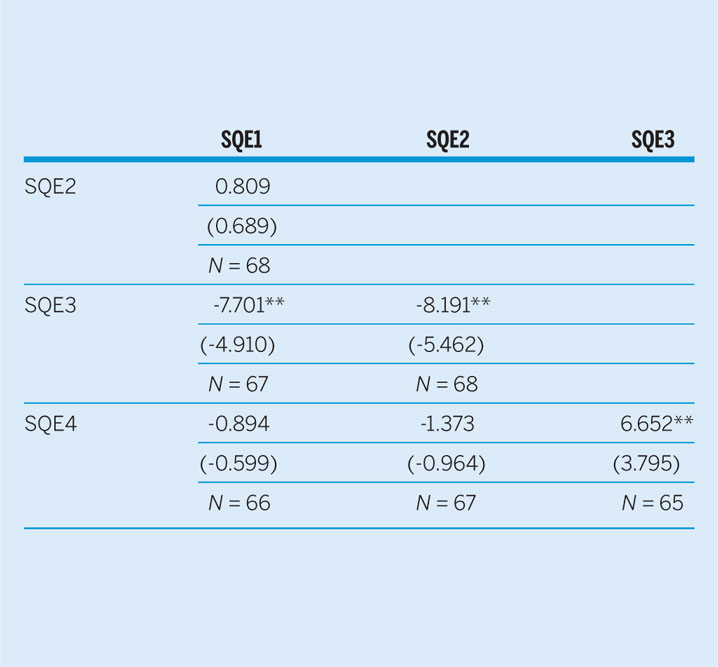

To further check the robustness of our results, we also examined the SQE results of the same course taught in the 2017–18 academic year. Although the requirements and marking processes for the SQEs were identical in the two academic years, we were not able to perform issue-based live debates in 2017–18 because one of us had moved to another country and the replacement had not yet been appointed when the course was taught. The student cohort of 2017–18 was about 50% larger than that of the previous year, but otherwise the two cohorts were similar. Therefore, although not a deliberate design, the 2017–18 cohort serves as a decent de facto control group in our pilot experiment.

As demonstrated in table 3, when the debate intervention was not performed, the students’ performance in SQEs did not naturally increase over time. Apart from the significantly worse result of SQE3, there is no significant difference between the students’ performance in the other SQEs.Footnote 7 Our robustness test further confirms that such a pattern also exists in the subgroups of female, male, domestic, and international students.Footnote 8 These results not only enhance our confidence that the improvement of the students’ performance in the 2016–17 academic year was indeed a consequence of the interventions but also vividly demonstrate that simply immersing students in the normal teaching and learning activities in the university does not automatically lead to development of their critical thinking skills.

Table 3 Paired t-Test Results in the 2017–18 Cohort

Notes: In each non-header cell, the number in the first line displays the paired difference (the mean score of the earlier SQE subtracted from the mean score of the later SQE; e.g., SQE2-SQE1); the bracketed number in the second line displays the t value; and the N in the third line displays the number of pairs included in a particular t-test. *p < 0.1; **p < 0.05.

LESSONS LEARNED

The encouraging results of our pilot pedagogical experiment show that training students in critical thinking skills is an achievable task, despite its challenging nature, and that even a modest number of explicit demonstrations of critical thinking skills through purposely designed live debates on current political issues can have a noticeable and immediate positive impact on students’ academic performance.

Our results add to the body of literature indicating that students learn critical thinking skills much more effectively through explicit rather than implicit training (Halpern 1998). Before this experiment, our previous attempts to incorporate critical thinking skills into the curriculum met with little success. We had selected reading materials that were not only relevant to the curriculum but also exemplary in applying critical thinking skills, but they appeared to be insufficient to enable the students to “naturally” gain the necessary skillset to understand and apply critical thinking through reading literature and in-class discussions. The contrast between our previous experience and the results of this pilot experiment has led us to believe that it is more efficient to teach critical thinking skills through explicit demonstration than through the conventional immersion or infusion approaches, at least in settings similar to the large, diverse, modern public university in which we conducted the experiment.

Our results further suggest that different strategies of explicitly teaching critical thinking skills vary in their effectiveness. We had previously attempted to be explicit in articulating critical thinking skills to our students through stand-alone workshops and training sessions, most of which centered on straightforward introductions of the abstract concepts and epistemological foundations of critical thinking skills, which were predominantly illustrated through examples we created. Despite the considerable extra time and energy that we invested in organizing these events (which in many cases were not recognized in our workload), it was difficult to secure either a satisfactory turnout rate (when these sessions were made optional) or a decent level of attention and enthusiasm (when these sessions were made compulsory). The level of success achieved through the pilot experiment presented in this article far exceeds any that we achieved through those other methods. To ensure that students’ interest in our live debates remained high, we drew topics from current affairs that had tangible connections to the areas being addressed in class. This proved useful: during the live debates, we could clearly sense that most of the students were enthusiastic and engaged. In the anonymous course evaluation at the end of the semester, several students identified our live debates as the aspect of the class that they enjoyed the most.

Our success was achieved with a moderate amount of resources. Once the fundamental design of our pedagogy was determined, we spent only about a half-hour before each intervention session going through both the possible scenarios in our upcoming debate and the key critical thinking skills that we wanted to cover. We normally did this as part of our routine casual exchange of ideas during coffee breaks. The fact that we had been working together in the same course team for several years likely helped reduce the time required for preparation, but we feel that even a newly formed course team could easily replicate what we did as long as there is a healthy working relationship between the two instructors co-delivering the live debates.

Given the relatively modest amount of time and energy we spent in preparing and executing the interventions, this pedagogy requires a low investment in human resources. The effort we made to design and deliver the issue-based live debates was part of the general preparation and delivery process of our course and hence did not noticeably increase our workload. Furthermore, despite the need for the training to be explicit and for a period of time to be designated for its completion, our live debates did not reduce the time spent on the subject matter in class. Our students were able to benefit from witnessing an informed discussion of issues that were relevant to their curriculum (and assessments) while simultaneously improving their critical thinking skills.

It is worth emphasizing that one objective we hoped to achieve through our live debates and debrief sessions was to exemplify that critical analysis does not need to be hostile in nature. This is an essential aspect of the students absorbing the critical thinking skills into their habitual behavior and enabling them to be reasonable and responsible citizens. We believe this objective, although not explicitly measured, was also achieved. This was reflected in comments we received from students, who observed that our debates, although robust and rigorous, were always good-natured and ended with us either demonstrating where common ground had been found or accepting the differences that we had identified between the philosophical roots of our respective viewpoints.

REFLECTIONS

As a pilot project, our experiment was not without shortcomings. For example, although we carefully examined the dynamics of SQE scores in each subgroup defined by students’ gender and country of origin, because of the size of our sample we were not able to directly measure whether these personal characteristics actually have a significant influence on how our pedagogy affects students on the individual level. In addition, although our students clearly benefited from the purposely designed interventions in a measurable way, it is not yet clear if this rate of improvement could continue to be delivered if a longer period or a larger number of similar interventions were employed. It should also be mentioned that most of our students come from nonselective, state-funded secondary schools, and very few had been exposed to extensive training in critical thinking skills through debates or other engaging forms before this experiment. Unfortunately, we were not able to obtain data regarding each individual student’s socioeconomic background to enable a specific investigation into this issue. One may question whether our pedagogy would generate a similar scale of success when applied to those who are very familiar with and practiced at debating. Certainly, further research in this area would be valuable. Yet, all these shortcomings generate testable hypotheses for subsequent investigation and experiments, which is itself an objective for pilot experiments.

The nature of our pilot experiment was exploratory, and our findings remain encouraging in this regard. Our success came despite having a class of students with varying abilities, and it was achieved with just a few sessions of issue-based live debates. This suggests that our pedagogy could easily be deployed in similar settings for significant benefits, at least in the short term. We hope that the methods and findings reported in this article offer some insight and inspiration for fellow educators of political science to take on the commonly faced challenge of developing students’ critical thinking skills in higher education.

SUPPLEMENTARY MATERIAL

To view supplementary material for this article, please visit https://doi.org/10.1017/S104909651900115X

ACKNOWLEDGMENTS

We are very grateful for the inspiring and helpful comments from the editor and reviewers, as well as the kind support from all students who participated in this pedagogical experiment. All remaining errors are our own.