1 Introduction

Moral dilemmas often force us to decide between so-called deontological and utilitarian considerations. Imagine, for example, that by torturing a captured terrorist we can obtain critical information that allows us to prevent a planned attack that would kill dozens of innocent citizens. Unfortunately, this example is not so hypothetical. We wrote this paper in the aftermath of the Paris and Brussels terror attacks. One of the members of the ISIS terrorist cell that planned both attacks, Salah Abdeslam, was arrested a couple of days before his partners put their plans into action and killed 32 people in suicide bombings at Brussels airport and metro. There was quite some outrage, voiced among others by Donald J. Trump, over the fact that authorities had not used “all means possible” to get Abdeslam to confess the upcoming attack (Diamond, 2016). But even if we assume that torturing Abdeslam would have prevented the attack, would it therefore be morally acceptable? Someone who takes a utilitarian point of view would say “yes”. The moral principle of utilitarianism implies that the morality of an action is determined by its expected consequences. Therefore, harming an individual will be considered acceptable if it prevents comparable harms to a greater number of other people. Although torture might be intrinsically bad, it will nevertheless be judged morally acceptable in this case because of the lives it saves (i.e., one chooses the “greater good”). Alternatively, the moral perspective of deontology implies that the morality of an action depends on the intrinsic nature of the action. Here harming an individual is considered wrong regardless of its consequences and potential benefits. Hence, from a deontological point of view, the use of torture would always be judged unacceptable.

In the last two decades psychologists, philosophers, and economists have started to focus on the cognitive mechanisms underlying utilitarian and deontological reasoning (e.g., Białek & Terbeck, 2016; Białek, Terbeck & Handley, 2014; Greene, 2015; Conway & Gawronski, 2013; Kahane, 2015; Moore, Stevens & Conway, 2011; Nichols, 2002; Valdesolo & DeSteno, 2006). A lot of this work has been influenced by the popular dual-process model of thinking (Evans, 2008; Kahneman, 2011), which describes cognition as an interplay of fast, effortless, intuitive (i.e., so-called “System 1”) processing and slow, working-memory dependent, deliberate (i.e., so-called “System 2”) processing. Inspired by this dichotomy the dual process model of moral reasoning (Greene, 2014; Greene & Haidt, 2002) has associated utilitarian judgments with deliberate System 2 processing and deontological judgments with intuitive System 1 processing. The basic idea is that giving a utilitarian response to moral dilemmas usually requires System 2 thinking and allocation of cognitive resources to override an intuitively cued deontological System 1 response that primes us not to harm others.

In support of the dual process model of moral reasoning it has been shown that people higher in working memory capacity tend to be more likely to make utilitarian judgments (Moore, Clark & Kane, 2008). Also, experimental manipulations that limit response time (Suter & Hertwig, 2011) or cognitive resources (Conway & Gawronski, 2013; Trémolière, De Neys, & Bonnefon, 2012) sometimes make utilitarian judgments less likely. These findings give some backing to the basic dual process claim that utilitarian responding typically results from slow and demanding System 2 processing whereas deontological responding typically results from fast and more effortless System 1 processing (but see, for failed attempts and doubts, Baron, 2017; Baron, Scott, Fincher & Metz, 2015; Kahane, 2015; Klein, 2011; Tinghög et al., 2016; Trémolière & Bonnefon, 2014).

However, the precise nature of the interaction between the two types of processes is not clear (Białek & De Neys, 2016; Gürçay & Baron, 2017; Koop, 2013). To illustrate this point, consider the question of whether or not deontological responders also detect that there are conflicting responses at play in moral dilemmas. That is, do deontological responders blindly rely on the intuitively cued deontological System 1 response without taking utilitarian considerations into account? Or do they also realize that there is an alternative to the cued deontological response, consider the utilitarian view, but simply decide against it in the end? These two possibilities can be linked to two different views on the nature of the interaction between System 1 and System 2 in dual process models: a serial or parallel one.

In a serial model (often also called a “default-interventionist” model, Evans & Stanovich, 2013) it is assumed that people initially rely exclusively on the intuitive System 1 to make judgments. This default System 1 processing might be followed by deliberate System 2 processing in a later stage but this is optional. Indeed, serial dual process models often characterize human reasoners as cognitive misers who try to minimize cognitive effort and refrain from demanding System 2 processing as much as possible (Kahneman, 2011; Evans & Stanovich, 2013). Under this interpretation only utilitarian responders will engage in the optional System 2 processing. Deontological responders will stick to the intuitively cued System 1 response. Since taking utilitarian considerations into account is assumed to require System 2 processing, this implies that deontological responders will not have experienced any conflict from the utilitarian side of the dilemma. However, in a parallel dual process model (e.g., Epstein, 1994; Sloman, 1996) both processes are assumed to be engaged simultaneously from the start. Under this interpretation, deontological and utilitarian responders alike would always engage System 1 and 2. Although deontological responders will not manage to complete the demanding System 2 process and override the intuitive System 1 response, the fact that they engaged System 2 would at least allow them to detect that they are faced with conflicting responses.Footnote 1

A number of recent empirical studies have started to test these different conflict predictions of the serial and parallel dual process model of moral reasoning. In one study Białek and De Neys (2016) contrasted subjects’ processing of conflict and no-conflict dilemmas. Moral reasoning studies typically present subjects with dilemmas in which they are asked whether they would be willing to sacrifice a small number of persons in order to save several more (e.g., kill one to save five). In these classic scenarios utilitarian and deontological considerations cue conflicting responses (hence, conflict dilemmas). Białek and De Neys also reversed the dilemmas by asking subjects whether they would be willing to sacrifice more people to save fewer (e.g., kill five to save one). In these no-conflict or control dilemmas both deontological and utilitarian considerations cue the exact same decision to refrain from making the sacrifice. By contrasting processing measures such as response latencies and response confidence when solving both types of dilemmas, one can measure subjects’ conflict sensitivity (e.g., Botvinick, 2007; De Neys, 2012). If deontological responders are not considering utilitarian principles, then the presence or absence of intrinsic conflict between utilitarian and deontological considerations should not have an impact on their decision making process. However, Białek and De Neys observed that deontological responders were significantly slower and less confident about their decision when solving the conflict dilemmas. In contrast with the serial model predictions, this suggests that they were considering both deontological and utilitarian aspects of their decision.

Related evidence against the serial model comes from the mouse-tracking studies by Koop (2013; see also Gürçay & Baron, 2017, for a recent replication). After having read a moral dilemma, subjects had to move the mouse pointer from the centre of the screen towards the utilitarian or deontological response option presented in the upper left and right corner to indicate their decision. In the mouse-tracking paradigm researchers typically examine the curvature in the mouse movement to test whether the non-chosen response exerts some competitive “pull” or attraction over the chosen response (Spivey, Grosjean & Knoblich, 2005). One can use this attraction as a measure of conflict detection. That is, if deontological reasoners are not considering the utilitarian response option they can be expected to go straight towards the deontological response option. If they are also considering the utilitarian perspective, they will tend to slightly move towards it, resulting in a more curved mouse trajectory. Bluntly put, people will reverse from one direction to the other, reflecting their “doubt”. Koop observed that mouse trajectories were indeed more curved when solving conflict dilemmas vs control problems. But critically, this effect was equally strong for utilitarian and deontological responders. This suggests that, just like utilitarian responders, deontological responders were considering and attracted by both perspectives. As Koop argued, this pattern is more consistent with a parallel than with a serial dual process model (also argued by Gürçay & Baron, 2017).

The evidence for deontological reasoners’ utilitarian sensitivity argues against the serial dual process model. However, the evidence does not suffice to declare the parallel model the winner. Note that both the serial and parallel model are built on the assumption that utilitarian reasoning is demanding and requires System 2 thinking. The parallel model entails that it will be this deliberate thinking that allows people to consider the utilitarian aspects of the dilemma and detect the conflict with the intuitively cued deontological response. But, clearly, the fact that deontological responders feel conflict does not in and by itself imply that this experience results from System 2 engagement. An alternative possibility is that deontological responders experience conflict between two different System 1 intuitions. In other words, under this interpretation taking utilitarian considerations into account might also be an intuitive System 1 process that does not require deliberation. Hence, rather than a System 1/System 2 conflict between an intuitive and more deliberated response that the parallel model envisages, the experienced conflict would reflect a System 1/System 1 clash between two different types of intuitions, one deontological in nature and the other utilitarian in nature.

Interestingly, there have been a number of theoretical suggestions that alluded to the possibility of such intuitive utilitarianism in the moral reasoning field (e.g., Dubljević & Racine, 2014; Kahane et al., 2012; Trémolière & Bonnefon, 2014). In addition, generic dual process research in the reasoning and decision-making field has recently pointed to a need for so-called hybrid dual process models as an alternative to purely serial or parallel models (De Neys, 2012; Handley & Trippas, 2015; Pennycook, Fugelsang & Koehler, 2015). The gist of these models is that operations that are traditionally assumed to be at the pinnacle of System 2 (e.g., taking logical or probabilistic principles into account) can also be cued by System 1. At a very general level, these findings lend some minimal credence to the possible generation of a utilitarian System 1 intuition in classic moral dilemmas. If people do have such alleged utilitarian intuitions, they could experience conflict between the competing moral aspects of a dilemma without a need to engage in active deliberation. The present paper tests this possibility and will thereby present a critical test of the dual process model of moral reasoning.

In our studies we adopted Białek and De Neys’ (2016) paradigm and presented subjects with a set of classic moral conflict dilemmas and no-conflict control versions to measure their conflict sensitivity. Critically, while subjects were solving the problems their cognitive resources were burdened with a demanding secondary task. The basic rationale is simple. A key defining characteristic of System 2 thinking is that it draws on our limited executive working memory resources. Imposing an additional load task that burdens these resources will hamper or “knock-out” System 2. Hence, if subjects’ potential moral conflict sensitivity results from deliberate System 2 processing, it should become less likely under load. That is, under load it should be less likely that subjects manage to consider the alleged demanding utilitarian aspects of the dilemma and this should decrease the likelihood that a conflict with the intuitive deontological response will be experienced. Consequently, the previously reported conflict detection effects such as an increased response doubt for conflict vs no-conflict problems should no longer be observed under load. Alternatively, intuitive System 1 processes are assumed to operate effortlessly. Hence, if subjects’ conflict sensitivity results from the competing output of two intuitive System 1 processes, it should not be affected by load.

We tested the predictions in Study 1 and assessed the robustness of the findings in Studies 2 and 3. Taken together, the studies will test whether reasoners intuitively take the utilitarian aspects of a moral dilemma into account. The answer will allow us to advance the specification of the dual process model of moral cognition.

2 Study 1

2.1 Method

2.1.1 Subjects

A total of 261 individuals (108 female, mean age = 35.8, SD = 12.5, range 18–72) recruited on the Amazon Mechanical Turk platform participated in the study. Only native English speakers from the USA or Canada were allowed to participate. Subjects were paid a fee of $0.75.

2.2 Material and procedure

Moral reasoning task.

We used four classic moral dilemmas: the trolley, plane, cave, and hospital problem. These were adopted from the work of Royzman and Baron (2002), Foot (1978), and Cushman, Young and Hauser (2006). All problems had the same core structure and required subjects to decide whether or not to sacrifice the lives of one of two groups of persons (all with a 1:5 ratio, see Table 1). Each subject solved a total of four dilemmas. Two dilemmas were presented in a conflict version and the other two in a no-conflict version. In the conflict versions subjects were asked whether they were willing to sacrifice a small number of persons in order to save several more. In the no-conflict versions subjects were asked whether they were willing to sacrifice more people to save fewer. For each subject it was randomly determined which dilemmas were presented as conflict and no-conflict problems. Presentation order of the four dilemmas was also randomized for each subject. Each dilemma was presented in each version an equal number of times.
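The per-subject assignment described above can be illustrated with a minimal sketch. The function name `assign_dilemmas` and the use of Python's `random` module are assumptions made for illustration; the original experiment software is not described in the text. Note that this simple version randomizes independently for each subject and does not by itself enforce that each dilemma appears in each version an equal number of times across the sample.

```python
import random

DILEMMAS = ["trolley", "plane", "cave", "hospital"]

def assign_dilemmas(rng):
    """Return a randomized list of (dilemma, version) pairs for one subject.

    Hypothetical helper: two dilemmas get the conflict version, the other
    two the no-conflict version, and presentation order is shuffled.
    """
    conflict = set(rng.sample(DILEMMAS, 2))  # pick the two conflict dilemmas
    trials = [(d, "conflict" if d in conflict else "no-conflict")
              for d in DILEMMAS]
    rng.shuffle(trials)  # randomize presentation order
    return trials

# Example: one subject's trial list (seed chosen arbitrarily)
trials = assign_dilemmas(random.Random(1))
```

Exact counterbalancing across subjects would require an additional constraint, for example cycling through the six possible conflict/no-conflict splits.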

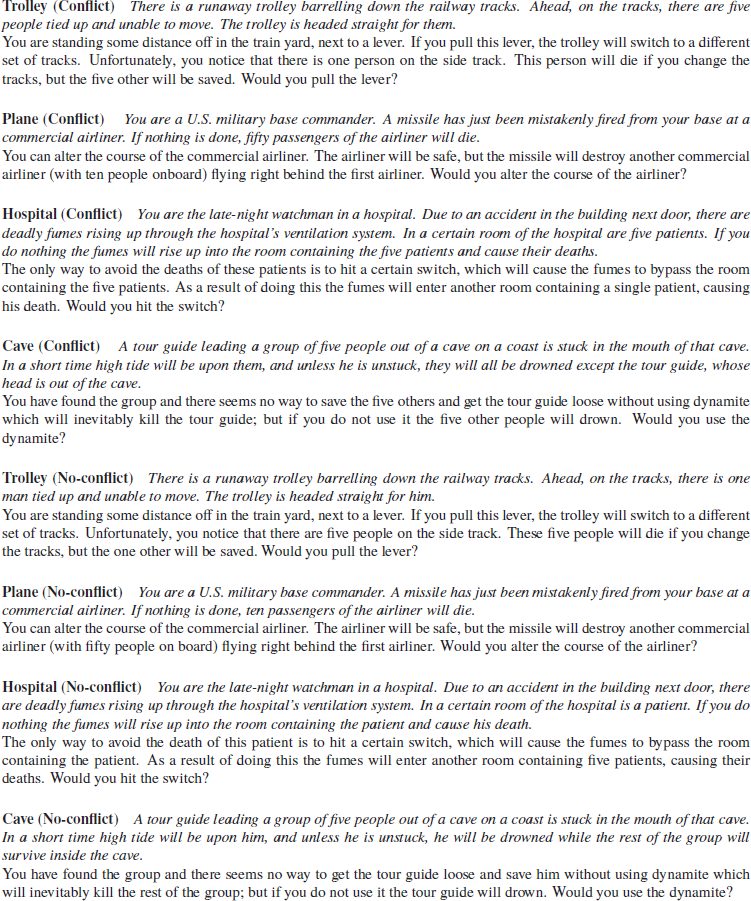

Table 1. Exact wording of the conflict and no-conflict versions of the dilemmas that were used in Study 1. Dilemmas were presented in two parts. The first part containing the background information is shown in italics.

The dilemmas were presented in two parts. First, the general background information was presented (italicized text in Table 1) and subjects clicked on a confirmation button when they finished reading it. Subsequently, subjects were shown the second part of the problem, which contained the critical conflicting or non-conflicting dilemma information and asked about their personal willingness to act and make the described sacrifice themselves (“Would you do X?”). Hence, on the conflict versions the utilitarian response is to answer “yes” and the deontological response is to answer “no”. On the no-conflict problems both utilitarian and deontological considerations cue a “no” answer. Subjects entered their answer by clicking on a corresponding bullet point (“Yes” or “No”). The first part of the problem remained on the screen when the second part was presented.

Confidence measure.

Immediately after subjects had entered their dilemma response, a new screen with the confidence measure popped up. Subjects were asked to indicate their response confidence (“How confident are you in your decision?”) on a 7-point rating scale ranging from 1 (not confident at all) to 7 (extremely confident).Footnote 2 Relatively lower confidence for the conflict vs. no-conflict decision, in particular for those reasoners who provide a deontological response (see further), can be taken as an indicator that subjects considered both the utilitarian and deontological aspects of the dilemma and were affected by the conflict between them (Białek & De Neys, 2016).

Dot memorization load task.

The secondary load task was based on the work of Miyake, Friedman, Rettinger, Shah and Hegarty (2001) and Trémolière et al. (2012). Subjects had to memorize a dot pattern (like the one shown in Figure 1) during the moral reasoning task. After subjects had read the first part of a dilemma, the dot pattern was briefly presented. Next, subjects read the second part of the dilemma, indicated their decision, and entered their response confidence. After subjects had indicated their confidence they were shown four matrices with different dot patterns and they had to select the correct, to-be-memorized matrix (Figure 1). Finally, subjects were given feedback as to whether they recalled the correct matrix and were instructed to focus more closely on the memorization in case they erred.

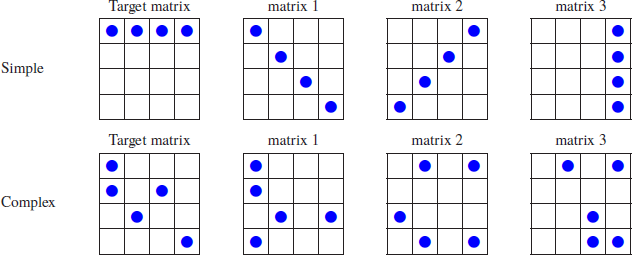

Figure 1. Examples of the matrices and recall options that were used in the low (Simple matrix) and high load (Complex matrix) conditions.

Half of the subjects were randomly allocated to the high load condition, the other half were allocated to a low load control condition. In the high load condition the matrix consisted of five dots arranged in a complex interspersed pattern in a 4 x 4 grid, which has been established to interfere specifically with effort-demanding executive resources (Miyake et al., 2001). Previous studies established that this demanding secondary task effectively burdens System 2 during reasoning (De Neys, 2006; Johnson et al., 2016; Trémolière et al., 2012). In the low load condition the matrix consisted of a simple pattern of four aligned dots, which should place only a minimal burden on executive resources (De Neys, 2006; De Neys & Verschueren, 2006). Presentation time for the grid in the low load condition was set to 1000 ms. To make sure that subjects could perceive the extra complex pattern in the high load condition so that storage would effectively burden executive resources, presentation time was increased to 2000 ms in this condition (e.g., see Trémolière et al., 2012, for a related approach).

As in Trémolière et al. (2012) we also used a multiple choice recognition format for the dot memorization task. In the high load condition all response options showed an interspersed pattern with 5 dots. There was always one incorrect matrix among the four options that shared 3 out of the 5 dots with the correct matrix (e.g., see Figure 1, matrix 1). The two other incorrect matrices shared one of the dots with the correct matrix (e.g., see Figure 1, matrix 2 and 3). In the low load condition all response options showed four dots aligned on a straight line. Subjects were presented with two dot memorization practice trials to familiarize them with the procedure. In the first trial they only needed to memorize a (simple) matrix. The second practice trial mimicked the full procedure, but instead of a moral dilemma it used a simple arithmetic problem as primary task.
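The structure of the high load recognition options can be sketched as follows. This is only an illustration under stated assumptions: the helper names `make_foil` and `make_recall_options` are hypothetical, dot positions are drawn uniformly at random rather than constrained to look "interspersed", and nothing prevents the two one-dot foils from coinciding by chance. It simply builds a five-dot target in a 4 x 4 grid, one foil sharing three dots with it, and two foils sharing one dot.

```python
import random

# All cells of a 4 x 4 grid as (row, column) pairs
CELLS = [(r, c) for r in range(4) for c in range(4)]

def make_foil(target, n_shared, rng):
    """Build a pattern of the same size sharing exactly n_shared cells with target."""
    kept = rng.sample(sorted(target), n_shared)            # cells copied from target
    others = [cell for cell in CELLS if cell not in target]
    new = rng.sample(others, len(target) - n_shared)       # cells outside target
    return frozenset(kept + new)

def make_recall_options(rng):
    """Return (target, foils): one 3-dot-overlap foil and two 1-dot-overlap foils."""
    target = frozenset(rng.sample(CELLS, 5))
    foils = [make_foil(target, 3, rng),
             make_foil(target, 1, rng),
             make_foil(target, 1, rng)]
    return target, foils

# Example with an arbitrary seed
target, foils = make_recall_options(random.Random(0))
```

The overlap of a selected foil with the target is what the later filtering step relies on: a subject who errs by choosing the three-dot foil has still retained most of the pattern.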

The point at which the dot matrix was introduced in the experimental trial sequence was specifically chosen to optimize the load procedure. On one hand, one wants to minimize the amount of reading under load to minimize the possibility that the load will interfere with basic scenario reading and comprehension processes. This argues against introduction of the load before the scenario is presented (i.e., if subjects cannot read the scenario, responding will be random and uninformative). On the other hand, if the load is introduced after the full scenario has been presented, subjects can pre-empt and sidestep the load by starting to reflect on their decision during the scenario “reading” phase. Our two stage presentation minimizes these problems. It allows reducing the amount of reading under load and avoids the pre-empting confound since the critical alternative choice and the resulting potential conflict between the utilitarian and deontological considerations are introduced after the load has been introduced.

To avoid any confusion it should be stressed that our load procedure was not designed to interfere with subjects’ reading and comprehension of the second part of the dilemma either. More generally, one should keep in mind that all dual process models entail that System 1 processing can cue a response only after the problem material has been properly encoded. Bluntly put, if you can’t read the different alternative dilemma choices, there will not be any content that can trigger an intuitive answer. Testing whether the load interferes with basic reading and comprehension is straightforward. If it did, people would not understand the problem and could only guess when responding. Hence, we would get a similar rate of random responding on conflict and no-conflict problems. If people cannot encode the preambles, then by definition their cognitive processing of both problem versions cannot differ. As we will show, in all our studies the observed pattern of dilemma choices on the conflict and no-conflict problems establishes that this is not the case (i.e., “yes” responses are much rarer on the no-conflict problems than on the conflict problems). Because the same task has been extensively used in previous dual process work on deductive/probabilistic reasoning (e.g., De Neys, 2006) and even moral reasoning (Trémolière et al., 2012), we already knew that it was quite unlikely that the task would interfere with basic comprehension processes. At the same time, extensive prior evidence in the reasoning field establishes that the task does disrupt System 2 deliberation (e.g., De Neys, 2006, with syllogistic reasoning problems; Johnson et al., 2016, with the bat-and-ball problem; De Neys & Verschueren, 2006, with the Monty Hall Dilemma; Franssens & De Neys, 2009, with base-rate neglect problems; Trémolière et al., 2012, with moral reasoning problems). In sum, there is good evidence for the soundness of our load approach.

We recorded the time subjects needed to read the first part of the dilemma (i.e., time between presentation of the first part of the dilemma and subject’s confirmation) before the dot matrix was presented. We refer to this measure as the initial dilemma reading time. After the matrix was presented, we also measured the time that elapsed between the presentation of the second part of the dilemma and subjects’ answer selection. We refer to this measure as the dilemma decision time, although, because of the two-stage dilemma presentation, the decision time also includes some additional reading time.Footnote 3

2.3 Results and discussion

2.3.1 Dot memorization load task

Overall, subjects were able to select the correct matrix in more than 3 out of 4 cases. On average, accuracy reached 81% (mean = 3.24, SD = 0.97) in the high load condition and 84% (mean = 3.36, SD = 0.88) in the low load condition. This indicates that in general the load task was performed properly, according to the instructions. However, although most subjects recalled the dot locations correctly, some subjects made more recall errors. In cases where the dot matrix is not recalled correctly, one might argue that we cannot be certain that the load task was effectively burdening executive resources (i.e., the subject might be neglecting the load task, thereby minimizing the experienced load). A possible lack of a load effect on conflict detection could then be attributed to this possible confound. To sidestep this potential problem we used a two-stage filtering procedure. First, we discarded any subject who had made more than one recall error (i.e., less than 75% accuracy, n = 44; 16.9% of the sample). Next, from the remaining group we also removed subjects from the high load condition who had selected an incorrect matrix that shared only 1 dot with the correct pattern (n = 26; 9.96% of the sample). Hence, subjects who were included in the analyses had at least 75% accuracy and were able to recognize 3 out of 5 dots correctly when they did err under high load. The final sample consisted of 191 individuals (71 females, mean age = 35.9, SD = 12.3, range 18–72). Resulting average memorization performance was 94% (mean = 3.75, SD = 0.44) in the high load condition and 93% (mean = 3.73, SD = 0.45) in the low load condition.Footnote 4
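The two-stage filter and the resulting sample size can be restated as a short sketch. The function `keep_subject` and its argument names are hypothetical and only encode the rule as described in the text; the counts are the ones reported above.

```python
def keep_subject(n_correct, load, shared_dots_on_error=None):
    """Apply the two-stage inclusion rule to one subject (hypothetical helper).

    n_correct: number of correctly recalled matrices out of 4 trials.
    load: "high" or "low".
    shared_dots_on_error: for a subject with a recall error, how many dots
        the selected incorrect matrix shared with the correct one.
    """
    # Stage 1: discard subjects with more than one recall error
    if n_correct < 3:
        return False
    # Stage 2: in the high load group, discard subjects whose error was a
    # matrix sharing only 1 dot with the correct pattern
    if load == "high" and shared_dots_on_error == 1:
        return False
    return True

# Sample-size arithmetic using the reported counts
n_total, n_stage1, n_stage2 = 261, 44, 26
n_final = n_total - n_stage1 - n_stage2          # remaining subjects
pct_stage1 = round(100 * n_stage1 / n_total, 1)  # share removed in stage 1
pct_stage2 = round(100 * n_stage2 / n_total, 2)  # share removed in stage 2
```

With these inputs the arithmetic reproduces the reported final sample of 191 subjects and the exclusion percentages of 16.9% and 9.96%.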

2.3.2 Dilemma decision choices

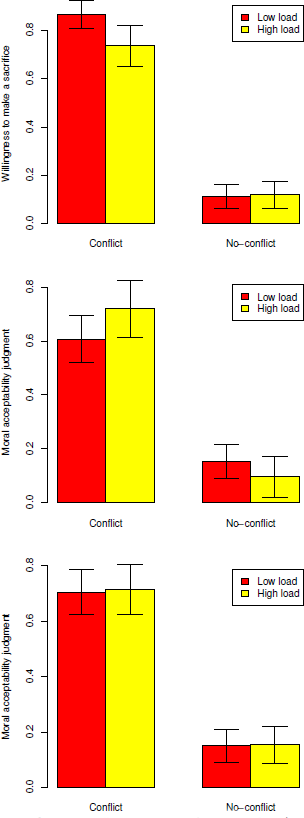

Subjects were asked to decide whether they were willing to make a sacrifice and perform the action that was described in each dilemma. For each subject we calculated the average willingness to make a sacrifice (i.e., percentage “yes” responses) in the conflict and no-conflict dilemmas.Footnote 5 These averages were entered in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Recall that on the conflict versions the utilitarian response is to answer “yes” and the deontological response is to answer “no”. On the no-conflict problems both utilitarian and deontological considerations lead to a “no” answer. Figure 2 (top panel) gives an overview of the results. Not surprisingly there was a main effect of the Conflict factor, F(1, 189) = 499.61, p < .001, ηp2 = .726. As Figure 2 shows, overall, subjects were willing to make a sacrifice in 80% of the cases on the conflict versions. Hence, in line with previous studies (e.g., Lotto, Manfrinati & Sarlo, 2014; Gold, Colman & Pulford, 2014; Royzman & Baron, 2002) subjects gave utilitarian responses to the conflict dilemmas in the majority of cases. Obviously, the average willingness to make a sacrifice was much lower (i.e., 11.6%) on the no-conflict problems. The main effect of load was not significant, F(1, 189) = 3.61, p = .059, but the two factors interacted, F(1, 189) = 4.74, p < .035, ηp2 = .024. Planned contrasts showed that load had no impact on no-conflict decisions, F(1, 189) < 1. However, willingness to make a sacrifice decreased under load on the conflict problems, F(1, 189) = 6.49, p < .015, ηp2 = .033. Hence, in line with previous observations, under high load subjects were less likely to make utilitarian decisions on conflict dilemmas (Conway & Gawronski, 2013; Trémolière et al., 2012).Footnote 6

Figure 2. Average willingness to make a sacrifice (% “yes” responses) in Study 1 (top panel) and average moral acceptability judgments (% “yes” responses) in Study 2 (middle panel) and Study 3 (bottom panel) on conflict and no-conflict dilemmas as a function of load. Error bars represent 95% confidence intervals.
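As a reading aid for the effect sizes reported with the F tests in this section, partial eta squared can be recovered from an F value and its degrees of freedom with the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity to the reported F values; the function name is ours, and this is a consistency check rather than a description of how the original analysis software computed the values.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Reported tests for the dilemma decision choices: (F, df_effect, df_error)
conflict_effect = partial_eta_squared(499.61, 1, 189)
interaction = partial_eta_squared(4.74, 1, 189)
load_on_conflict = partial_eta_squared(6.49, 1, 189)
```

Rounded to three decimals, these give .726, .024, and .033, matching the values reported for the Conflict main effect, the Conflict x Load interaction, and the load contrast on conflict problems.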

2.3.3 Decision confidence

Obviously, our primary interest lies in subjects’ decision confidence as this allows us to measure their moral conflict detection sensitivity. The key contrast concerns the confidence ratings for conflict vs. no-conflict problems. If subjects take utilitarian considerations into account and detect that they conflict with the cued deontological response, response confidence should be lower on the conflict vs no-conflict problems. We first focus on the contrast analyses for deontological (“no”) conflict responders. Next, we present the analyses for utilitarian (“yes”) conflict responders.Footnote 7 Note that we use only those no-conflict responses in which subjects refuse to make a sacrifice (i.e., “no” responses). No-conflict decisions to sacrifice many to save few are cued by neither deontological nor utilitarian considerations. These “other” responses were rare but were excluded from the contrast analyses to give us the purest possible test of subjects’ conflict sensitivity.
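The contrast described above can be made concrete with a small sketch. The function `conflict_confidence_drop`, the trial format, and the example ratings are all hypothetical and purely illustrative (they are not data from the study). For one subject, the function averages confidence on conflict trials with a given response and subtracts it from the average confidence on no-conflict trials answered “no”, dropping the rare “other” no-conflict responses.

```python
from statistics import mean

def conflict_confidence_drop(trials, conflict_response):
    """Mean no-conflict ("no") confidence minus mean conflict confidence.

    trials: list of (version, response, confidence) tuples for one subject.
    conflict_response: "no" for deontological, "yes" for utilitarian
        conflict responses.
    Returns None if the subject has no usable trial of either type.
    """
    conflict = [c for v, r, c in trials
                if v == "conflict" and r == conflict_response]
    # Only no-conflict refusals count; "yes" responses here are excluded
    control = [c for v, r, c in trials
               if v == "no-conflict" and r == "no"]
    if not conflict or not control:
        return None
    return mean(control) - mean(conflict)

# Made-up ratings for one hypothetical deontological responder
example = [("conflict", "no", 5), ("conflict", "no", 4),
           ("no-conflict", "no", 6), ("no-conflict", "yes", 2)]
drop = conflict_confidence_drop(example, "no")
```

A positive value indicates lower confidence on conflict than on no-conflict decisions, which is the pattern taken as a sign of conflict detection.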

Deontological responders.

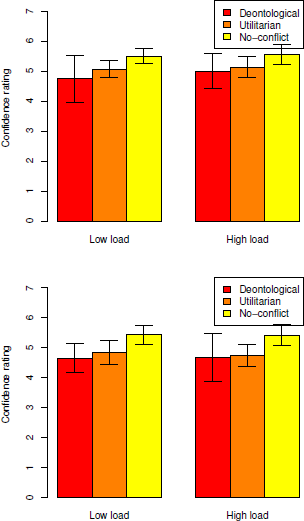

We entered subjects’ average confidence ratings in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Results are illustrated in Figure 3 (top panel). There was a main effect of the conflict factor, F(1, 46) = 6.8, p < .015, ηp2 = .129. Overall, deontological responders’ confidence decreased by about 12% (mean decrease = 0.66 scale points) when solving conflict problems. In line with previous findings this increased decision doubt indicates that deontological responders take both utilitarian and deontological considerations into account and are sensitive to conflict between them. However, the critical finding is that this sensitivity was not affected by load. Neither the main effect of load, F(1, 46) < 1, nor the interaction with the conflict factor reached significance, F(1, 46) < 1 (see the results of Study 3 for an overall Bayes factor analysis further supporting this conclusion).

Figure 3. Average confidence ratings (7-point scale) for utilitarian and deontological decisions on the conflict problems and no-conflict control decisions (“no” responses) under high and low cognitive load in Study 1 (top panel) and Study 2 (bottom panel). Error bars represent 95% confidence intervals.

Utilitarian responders.

Our main theoretical interest concerned the conflict detection findings for deontological conflict responders. For completeness, we also analysed the conflict contrast for utilitarian conflict responders. It should be noted that these findings face a potential fundamental methodological problem (e.g., Conway & Gawronski, 2013). Deontological considerations cue “no” responses on the conflict and no-conflict problems. Utilitarian considerations cue a “no” response on no-conflict problems, but a “yes” response (i.e., willingness to make a sacrifice) on conflict problems. Hence, in the case of utilitarian responders, conflict and no-conflict responses not only differ in the presence or absence of conflict but also in terms of the decision made (i.e., willingness to take action or not). Consequently, when contrasting the conflict and no-conflict detection indexes, results might be confounded by the decision factor. Any potential processing difference might be attributed to the differential decision rather than to conflict sensitivity. Therefore, we present the utilitarian conflict contrast for completeness. If one wants to eliminate the potential decision factor confound completely, one can focus exclusively on the analysis for deontological responders.

We entered utilitarian responders’ average confidence ratings in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Results are also illustrated in Figure 3 (top panel, orange bars). As the figure indicates, findings were similar to the trends we observed for deontological responders. There was a main effect of the conflict factor, F(1, 158) = 11.98, p < .005, ηp2 = .07. Overall, utilitarian responders’ confidence decreased by about 6.5% (mean decrease = 0.33 scale points) when solving conflict problems. The conflict effect was not affected by load. Neither the main effect of load, F(1, 158) < 1, nor the interaction with the conflict factor, F(1, 158) < 1, reached significance.

2.3.4 Reading and decision latencies

We recorded subjects’ dilemma reading (i.e., time between presentation of the first part of the dilemma and subject’s confirmation) and decision times (i.e., time that elapsed between the presentation of the second part of the dilemma and subjects’ answer selection). Because of skewness all latencies were log transformed prior to analysis. An overview can be found in Table 2. Average reading times were entered in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Neither for deontological responders [all F(1, 46) < 1, all p > .431], nor utilitarian responders [all main effects F(1, 158) < 1, all p > .337; interaction F(1, 158) = 1.07, p = .303] did any of the factors reach significance. As one might expect this establishes that subjects did not process the problems differently before the introduction of load.

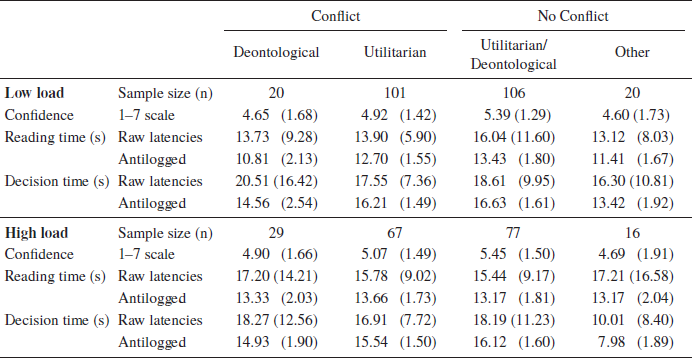

Table 2. Mean ratings, decision time, and reading time for conflict and no-conflict dilemmas as a function of the dilemma response decision in Study 1. Standard deviations are shown in parentheses.

We also ran a similar 2 (Conflict, within-subjects) x 2 (Load, between-subjects) ANOVA on the decision times. As with the reading times, neither for deontological responders [all F(1, 46) < 1, p > .419] nor utilitarian responders [conflict, F(1, 158) = 2.28, p = .133; all other F(1, 158) < 1, p > .541] did any of the factors reach significance. The absence of a load effect and of a load by conflict interaction suggests that subjects in the high load condition did not try to compensate for the load by taking more time to solve the (conflict) problems. Note that we also did not observe a main effect of the conflict factor. Białek and De Neys (2016) previously used decision time as a conflict detection index and observed that the doubt associated with conflict detection also resulted in longer conflict (vs no-conflict) decision times. However, unlike the present study, that study measured decision time after the full scenario had been read. It is possible that the separation of reading and decision time in the present two-stage presentation format obscured this effect.
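To make the phrase "antilogged units" concrete, the following minimal sketch shows how a mean of log-transformed latencies can be converted back to seconds. The function name and the example latencies are hypothetical, and the use of the natural logarithm is an assumption (the base does not matter as long as the matching antilog is applied). The antilogged mean of logs equals the geometric mean, which is less sensitive to a few very slow responses than the arithmetic mean.

```python
import math


def antilogged_mean(latencies_s):
    """Average the log-transformed latencies, then convert back to seconds."""
    logs = [math.log(t) for t in latencies_s]  # natural log is an assumption
    return math.exp(sum(logs) / len(logs))


# Hypothetical decision times in seconds (illustrative, not data from the study).
times = [10.0, 12.0, 15.0, 60.0]

arithmetic = sum(times) / len(times)
antilogged = antilogged_mean(times)

# The single slow response (60 s) pulls the arithmetic mean up much more
# than it pulls up the antilogged mean of the log latencies.
print(round(arithmetic, 2), round(antilogged, 2))
```

Because of this property, latencies reported in antilogged units will generally be somewhat lower than the raw arithmetic means of the same data.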

3 Study 2

Subjects’ decreased decision confidence when solving conflict dilemmas indicates that they are taking utilitarian considerations into account and are sensitive to conflict between the competing utilitarian and deontological aspects of moral dilemmas. The fact that this conflict sensitivity effect was not affected by load indicates that it operates effortlessly and results from intuitive System 1 processing. However, Study 1 is but the first study to test this hypothesis. In Study 2 we therefore tried to replicate the findings and test the robustness of the effects. The study also allowed us to eliminate a potential remaining confound. Study 1 asked subjects to indicate their personal willingness to act (e.g., “would you pull the switch?”) in the moral dilemmas. Although this question format is not uncommon, most studies in the moral reasoning field ask subjects to judge whether they find the described action morally acceptable (e.g., “do you think it is morally acceptable to pull the switch?”). Our choice reflected a personal preference to question people about their actual behaviour rather than about their moral beliefs. However, recent studies indicate that this design choice might not be trivial (Francis et al., 2016; Patil, Cogoni, Zangrando, Chittaro & Silani, 2014; Tassy et al., 2012; Tassy, Oullier, Mancini & Wicker, 2013). When people are tested with both questions there tends to be a discrepancy in their answers. That is, people often indicate they believe they have to make a sacrifice, although they still judge the action to be unacceptable. Consequently, subjects tend to be slightly more “utilitarian” when questioned about their personal willingness to act (but see also Baron, 1992, for a small opposite effect).
What is important in the present context is that the personal-willingness-to-act question might elicit stronger conflict than the acceptability question (possibly because people take a more egocentric perspective and are more emotionally engaged in the task, e.g., Patil et al., 2014, and Tassy et al., 2012, 2013). This points to a potential confound. By asking subjects about their personal willingness to act we might have made the utilitarian/deontological conflict more salient than it is with the traditional moral acceptability question. Hence, with the more traditional acceptability format, conflict might be less clear, and deontological responders might not manage to (effortlessly) detect conflict between the utilitarian and deontological aspects. Therefore, in Study 2 we simply changed our question format and tested whether there was still evidence for deontological responders’ utilitarian sensitivity under load.

3.1 Method

3.1.1 Subjects

A total of 189 individuals (94 female, mean age = 40.2, SD = 12.7, range 19–75) recruited on the online Crowdflower platform participated in the study. Only native English speakers from the USA or Canada were allowed to participate. Subjects were paid a fee of $.75.

3.1.2 Material and Procedure

The exact same material and procedure as in Study 1 were adopted. The only difference was the way that the dilemma decision question was phrased (i.e., the last sentence of the dilemma). Whereas subjects in Study 1 were asked to indicate their personal willingness to act (“Would you do X?”), subjects in Study 2 were asked to judge whether or not they found the action morally acceptable (“Do you think it is morally acceptable to do X?”).

3.2 Results and discussion

3.2.1 Dot memorization load task

Overall, subjects selected the correct matrix in more than 3 out of 4 cases. On average, accuracy reached 88% (mean = 3.52, SD = 0.63) in the high load condition and 82% (mean = 3.28, SD = 0.84) in the low load condition. We used the same two-stage filtering procedure as in Study 1 to screen for individuals who might have neglected the load task. First, all subjects who had made more than one error were discarded (i.e., less than 75% accuracy, n = 24; 12.7% of the sample). Next, subjects in the high load condition who selected an incorrect matrix that shared only one dot with the correct matrix were also discarded (n = 24, 12.7% of the initial sample). The final sample consisted of 141 individuals (64 females, mean age = 40.3, SD = 13.1, range 19–75). The resulting average memorization performance in the high load condition was 95% (mean = 3.81, SD = 0.40) and 92% (mean = 3.68, SD = 0.47) in the low load condition.
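The two-stage screening described above can be sketched as follows. This is only an illustration under stated assumptions: the `Subject` record, its field names, and the example entries are hypothetical and do not reflect the authors' actual data format. The sketch assumes four load trials per subject, as implied by the reported accuracy means.

```python
from dataclasses import dataclass, field


@dataclass
class Subject:
    load: str       # "high" or "low" load condition
    n_correct: int  # number of correct matrix selections out of 4 trials
    # For each error: how many dots the chosen matrix shared with the correct one.
    shared_dots_on_errors: list = field(default_factory=list)


def keep(subject, n_trials=4):
    """Return True if the subject passes both screening stages."""
    # Stage 1: discard anyone who made more than one error.
    if n_trials - subject.n_correct > 1:
        return False
    # Stage 2: in the high load condition only, discard anyone whose
    # incorrect choice shared only one dot with the correct matrix.
    if subject.load == "high" and any(d == 1 for d in subject.shared_dots_on_errors):
        return False
    return True


# Hypothetical subjects (illustrative only).
sample = [
    Subject("high", 4),
    Subject("high", 3, [3]),
    Subject("high", 3, [1]),    # fails stage 2
    Subject("low", 3, [1]),     # stage 2 does not apply to low load
    Subject("low", 2, [2, 3]),  # fails stage 1
]
final_sample = [s for s in sample if keep(s)]
print(len(final_sample))
```

Applying the stages in this order mirrors the reported counts, where the second exclusion is expressed as a share of the initial sample.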

3.2.2 Dilemma acceptability judgments

Subjects were asked to judge whether it was morally acceptable to make the sacrifice that was described in each dilemma. For each subject we calculated the average acceptability (i.e., percentage “yes” responses) in the conflict and no-conflict dilemmas. For clarity, note that, just as in Study 1, on the conflict versions the utilitarian response is to answer “yes” and the deontological response is to answer “no”. On the no-conflict problems both utilitarian and deontological considerations lead to a “no” answer. The average acceptability judgments were entered in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Figure 2 (middle panel) shows the results. Not surprisingly, there was again a main effect of the conflict factor, F(1, 139) = 195.23, p < .001, ηp2 = .57. Subjects found it far more acceptable to make a sacrifice on the conflict problems (i.e., 66.3%) than on the no-conflict problems (i.e., 12.6%). The main effect of load was not significant, F(1, 139) < 1, but the two factors interacted, F(1, 139) = 4.91, p < .035, ηp2 = .034. As Figure 2 shows, there seemed to be a trend towards more frequent utilitarian “yes” judgments under load, especially on the conflict problems. Follow-up tests showed that the load impact did not reach significance on the conflict problems, F(1, 139) = 2.58, p = .11, ηp2 = .018, nor on the no-conflict problems, F(1, 139) = 1.37, p = .24, ηp2 = .01. However, the interesting point here is that there is clearly no evidence for the decreased utilitarian responding under load for conflict problems that was observed in Study 1. Hence, this suggests that giving a utilitarian acceptability judgment (in contrast to indicating that one is personally willing to act) does not necessarily require demanding System 2 computations (Footnote 8).

Finally, note that we also contrasted the rate of utilitarian responses on the conflict dilemmas in Study 2 and Study 1. Average rate of utilitarian responses was 66% in Study 2 whereas it reached 80% in Study 1, univariate ANOVA, F(1, 329) = 18.61, p < .001, ηp2 = .05. Hence, in line with previous studies we also observed that utilitarian responding is less likely when people are asked about the moral acceptability of an action than when they are asked about their personal willingness to do the action (e.g., Tassy et al., 2012).

3.2.3 Acceptability decision confidence

Our primary interest again lies in subjects’ decision confidence as this allows us to measure their moral conflict detection sensitivity. As in Study 1, we ran separate analyses for deontological (“no”) and utilitarian (“yes”) conflict responders and only used no-conflict responses in which subjects indicate that they find it unacceptable to make a sacrifice (i.e., “no” responses).

Deontological responders.

We entered subjects’ average confidence ratings in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Results are illustrated in Figure 3. There was a main effect of the conflict factor, F(1, 62) = 18.72, p < .001, ηp2 = .232. Overall, deontological responders’ confidence decreased by 12% (mean decrease = 0.64 scale points) when solving conflict problems. The critical finding is that, in line with Study 1, this sensitivity was not affected by load. Neither the main effect of load, F(1, 62) < 1, nor the interaction with the conflict factor, F(1, 62) < 1, reached significance. Hence, results replicate the key finding of Study 1: Cognitive load does not hamper deontological responders’ detection of conflict between utilitarian and deontological considerations in classic moral dilemmas.

Utilitarian responders.

We entered subjects’ average confidence ratings in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Results are also illustrated in Figure 3. There was a main effect of the conflict factor, F(1, 97) = 22.08, p < .001, ηp2 = .185. Utilitarian responders’ confidence decreased by 11% (mean decrease = 0.60 scale points) when solving conflict problems. Neither the main effect of load, F(1, 97) < 1, nor the interaction with the conflict factor, F(1, 97) < 1, reached significance. These findings are also consistent with the Study 1 results.

3.2.4 Reading and decision latencies

We also ran analyses on subjects’ dilemma reading and decision times (log transformed, as in Study 1). Table 3 presents an overview. Average reading times were entered in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Neither for deontological responders [main effects all F(1, 62) < 1; interaction, F(1, 62) = 1.12, p = .294] nor utilitarian responders [all F(1, 97) < 1] did any of the factors reach significance. As in Study 1, this establishes that subjects did not process the problems differently before the introduction of load.

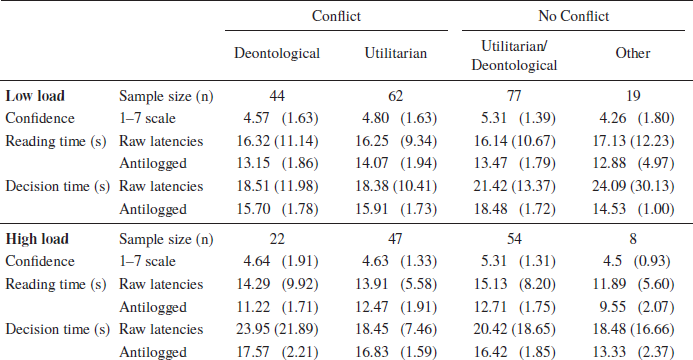

Table 3. Mean ratings, decision time, and reading time for conflict and no-conflict dilemmas as a function of the dilemma response decision in Study 2. Standard deviations are shown in parentheses.

Next, we ran a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA on the decision times. In line with the Study 1 findings, for deontological responders none of the factors reached significance [conflict, F(1, 62) = 2.36, p = .129; load, F(1, 62) < 1; interaction, F(1, 62) = 1.81, p = .18]. For utilitarian responders, we found a significant interaction between conflict and load, F(1, 97) = 10.06, p < .005, ηp2 = .094, whereas the main effects of load, F(1, 97) < 1, and conflict, F(1, 97) = 3.83, p = .053, did not reach significance. Follow-up tests showed that utilitarian responders had longer decision latencies on conflict than on no-conflict problems (20.82 s vs 17.09 s in antilogged units) in the low load condition, F(1, 54) = 18.73, p < .001, ηp2 = .258, but not in the high load condition, F(1, 43) < 1.

4 Study 3

The critical confidence findings in Study 2 are consistent with the Study 1 results. This supports the robustness of our findings. Nevertheless, a critic might object to the use of a confidence rating to measure conflict detection during moral reasoning per se. That is, although the confidence measure has been frequently used to assess conflict detection in the cognitive control, memory, and reasoning field (e.g., Botvinick, 2007; De Neys, 2012; Thompson & Johnson, 2014), paradigms in these fields typically concern cases in which a response can be understood by everyone as normatively right or wrong. In the moral cognition case, people are asked a question about what they may see as a matter of personal conviction. Indeed, subjects in moral reasoning studies are typically explicitly informed that there are no “wrong” or “right” answers. In and by itself, this ambiguity might bias the confidence measure (Footnote 9). In Study 3 we address this issue. Note that one assumption underlying the use of a confidence rating as a conflict detection index is that the measure allows us to track the experienced processing difficulty associated with the conflict. It is this processing difficulty that is believed to lead to a lowered decision confidence. Hence, instead of measuring subjects’ confidence one might try to question them more directly about the experienced difficulty and/or conflict. For example, studies on conflict detection in economic cooperation games have started to simply ask people to rate their feeling of conflict (“How conflicted do you feel about your decision?”, e.g., Evans, Dillon & Rand, 2015; Nishi, Christakis, Evans, O’Malley & Rand, 2016). In Study 3 we adopted a similar approach and asked subjects to indicate how difficult they found the problem and how conflicted they felt while making their judgment. Thereby, Study 3 will allow us to further validate the findings.

4.1 Method

4.1.1 Subjects

We recruited 200 individuals (110 female, mean age = 36.9, SD = 11.7, range 19–67) on the Crowdflower platform. Only native English speakers from the USA or Canada were allowed to participate. Subjects were paid a fee of $.75.

4.1.2 Material and procedure

The same material and procedure as in Study 2 was adopted (i.e., subjects were asked to judge the moral acceptability of the action). The only difference was that subjects were not asked for a confidence rating but were instead presented with two questions that were aimed to more specifically track any experienced conflict related processing difficulty. The difficulty rating question stated “How hard did you find it to make a decision? (1-Very easy → 7-Very hard)”. The conflictedness rating question stated “Did you feel conflicted when making your decision (e.g., did you consider to make a different decision)? (1-Not at all → 7-Very much so)”. The difficulty and conflictedness rating questions were presented below each other. For both questions subjects selected a rating on a 7-point scale.

As can be expected, results showed that ratings on both questions were highly correlated, r = .83, p < .001. We calculated a conflict composite by averaging each subject’s ratings on the two questions. For completeness, we will also report the analyses for each individual question separately.
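As an illustration of these two steps, the sketch below computes a Pearson correlation between the two rating scales and the per-subject composite as their unweighted mean. The function names and the example ratings are hypothetical and are not taken from the study data.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)


def composite(difficulty, conflictedness):
    """Per-subject conflict composite: mean of the two 7-point ratings."""
    return [(d + c) / 2 for d, c in zip(difficulty, conflictedness)]


# Hypothetical ratings on the 7-point scales (one value per subject).
difficulty_ratings = [2, 5, 6, 3, 7]
conflictedness_ratings = [1, 5, 7, 4, 6]

print(round(pearson_r(difficulty_ratings, conflictedness_ratings), 2))
print(composite(difficulty_ratings, conflictedness_ratings))
```

Averaging is a natural choice here because both questions use the same 7-point response format, so no rescaling is needed before combining them.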

4.2 Results and discussion

4.2.1 Dot memorization load task

Average accuracy reached 83% (mean = 3.33, SD = 0.81) in the high load condition and 86% (mean = 3.44, SD = 0.77) in the low load condition. We used the same two-stage filtering procedure as in Study 1 and 2 to screen for individuals who might have neglected the load task. First, all subjects who had made more than one error were discarded (i.e., less than 75% accuracy, n = 28; 14% of the sample). Next, subjects in the high load condition who selected an incorrect matrix that shared only one dot with the correct matrix were also discarded (n = 21, 10.5% of the sample). The final sample consisted of 151 individuals (82 females, mean age = 36.3, SD = 11.3, range 19–67). The resulting average memorization performance in the high load condition was 94% (mean = 3.75, SD = 0.44) and 93% (mean = 3.71, SD = 0.46) in the low load condition.

4.2.2 Dilemma acceptability judgments

As in Study 2, subjects were asked to judge whether it was morally acceptable to make the sacrifice that was described in each dilemma. For each subject we calculated the average acceptability (i.e., percentage “yes” responses) and entered these in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Figure 2 (bottom panel) shows the results. As in the first two studies, there was again a main effect of the conflict factor, F(1, 149) = 231.76, p < .001, ηp2 = .61. Subjects found it far more acceptable to make a sacrifice on the conflict problems (i.e., 70.9%) than on the no-conflict problems (i.e., 15.3%). The main effect of load, F(1, 149) < 1, and the interaction, F(1, 149) < 1, were not significant. Hence, as in Study 2 there was no evidence for the decreased utilitarian responding under load that was observed in Study 1 when subjects were asked about their willingness to act. As in Study 2, we also observed that the overall rate of utilitarian responses on the conflict dilemmas in Study 3 (71%) was lower than in Study 1 (80%), univariate ANOVA, F(1, 340) = 6.92, p = .009, ηp2 = .02. In sum, the pattern of acceptability judgments is consistent with Study 2 and differs from the pattern observed in Study 1 where subjects were questioned about their willingness to act rather than the action’s moral acceptability. As we noted in Study 2, this result is consistent with recent claims that have pointed to the impact of the framing of the dilemma question in moral reasoning studies (e.g., Francis et al., 2016; Körner & Volk, 2014).

4.2.3 Moral conflict detection indexes

Our primary interest in Study 3 concerns the alternative conflict detection indexes. Each subject was asked to rate the experienced problem difficulty and conflict. Both measures were combined into a conflict composite. For completeness, we analysed both the results on the individual scales and the composite measure. Note that as with the confidence analyses in Study 1 and 2, we ran separate analyses for deontological (“no”) and utilitarian (“yes”) conflict responders and only used no-conflict responses in which subjects indicate that they find it unacceptable to make a sacrifice (i.e., “no” responses).

Deontological responders.

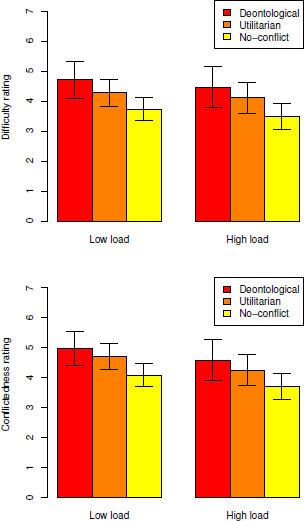

We entered subjects’ average ratings on the difficulty, conflictedness, and composite scales separately in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Results are illustrated in Figure 4. As Figure 4 suggests, results for the difficulty and conflictedness scales were very coherent and fully in line with the confidence findings in Study 1 and 2. For the difficulty analysis we found a main effect of the conflict factor, F(1, 61) = 7.66, p = .007, ηp2 = .112. Overall, deontological responders judged conflict problems as about 10% harder (mean increase = 0.57 scale points) than no-conflict problems. But neither the main effect of load, F(1, 61) < 1, nor the interaction with the conflict factor, F(1, 61) < 1, reached significance. For conflictedness, there was also a main effect of the conflict factor, F(1, 61) = 8.33, p = .005, ηp2 = .120. Overall, deontological responders felt about 10% more conflicted (mean increase = 0.62 scale points) when solving conflict problems. Again, neither the main effect of load, F(1, 61) < 1, nor the interaction with the conflict factor, F(1, 61) < 1, reached significance. Finally, when both scales were combined, the composite analysis also pointed to a main effect of the conflict factor, F(1, 61) = 8.96, p = .004, ηp2 = .128. Overall, deontological responders’ composite conflict rating increased by 10% (mean increase = 0.60 scale points) when solving conflict problems. Neither the main effect of load, F(1, 61) < 1, nor the interaction with the conflict factor, F(1, 61) < 1, reached significance.

Figure 4. Average difficulty rating (top panel) and conflictedness rating (bottom panel) for utilitarian and deontological decisions on the conflict problems and no-conflict control decisions (“no” responses) under high and low cognitive load in Study 3. All ratings were made on a 7-point scale. Error bars represent 95% confidence intervals.

In sum, for all scales there is a clear effect of the conflict factor but this effect is not affected by cognitive load. This pattern replicates the confidence measure findings in Study 1 and 2. Interestingly, the size of the observed conflict effect is also quite consistent across measures and studies (i.e., it typically hovers at around 10%-12%). Taken together these findings validate the moral conflict sensitivity findings we observed in Study 1 and 2.
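For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F value and its degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies this identity to the three conflict main effects reported above for deontological responders; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Conflict main effects for deontological responders in Study 3, all F(1, 61).
reported = {"difficulty": 7.66, "conflictedness": 8.33, "composite": 8.96}

for scale, f_value in reported.items():
    print(scale, round(partial_eta_squared(f_value, 1, 61), 3))
```

The recomputed values agree with the effect sizes reported in the text (.112, .120, and .128) to three decimals.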

Utilitarian responders.

We entered subjects’ average rating on each of the measures in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Results are also illustrated in Figure 4. As in Study 1 and 2, results were in line with the pattern we observed for deontological responders. In the analysis on difficulty ratings, there was a main effect of the conflict factor, F(1, 118) = 23.86, p < .001, ηp2 = .168. Utilitarian responders’ difficulty rating increased by 11% (mean increase = 0.67 scale points) when solving conflict problems. But neither the main effect of load, F(1, 118) < 1, nor the interaction with the conflict factor, F(1, 118) < 1, reached significance. For conflictedness, there was again a main effect of the conflict factor, F(1, 118) = 14.72, p < .001, ηp2 = .111. Utilitarian responders felt about 10% more conflicted (mean increase = 0.59 scale points) when solving conflict problems. The effect of load, F(1, 118) = 3.11, p = .08, ηp2 = .026, and the interaction with the conflict factor, F(1, 118) < 1, did not reach significance. Finally, in the composite analysis, in which both scales were combined, there was also a main effect of the conflict factor, F(1, 118) = 20.60, p < .001, ηp2 = .149. Utilitarian responders’ composite conflict ratings increased by 11% (mean increase = 0.64 scale points) when solving conflict problems. Neither the main effect of load, F(1, 118) < 1, nor the interaction with the conflict factor, F(1, 118) < 1, reached significance.

4.2.4 Reading and decision latencies

We also analyzed dilemma reading and (log transformed) decision times. Table 4 presents an overview. Average reading times were entered in a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA. Neither for deontological responders [all main effects and interaction, F(1, 61) < 1] nor utilitarian responders [main load, F(1, 118) = 1.14, p = .288; main conflict and interaction, F(1, 118) < 1] did any of the factors reach significance. As in Study 1 and 2, this suggests that subjects did not process the problems differently before the introduction of load.

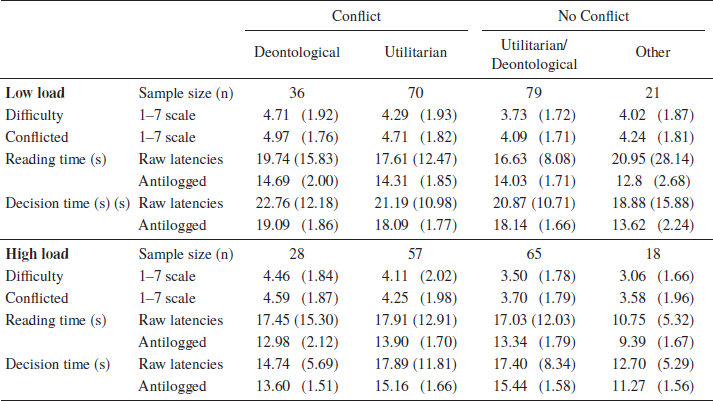

Table 4. Mean ratings, decision time, and reading time for conflict and no-conflict dilemmas as a function of the dilemma response decision in Study 3. Standard deviations are shown in parentheses.

We also ran a 2 (Conflict, within-subjects) x 2 (Load, between-subjects) mixed model ANOVA on the decision times. Deontological responders answered faster under high load than under low load (14.10 s vs 18.48 s in antilogged units), F(1, 61) = 4.48, p = .038, ηp2 = .068, but were equally fast for conflict and no-conflict problems, F(1, 61) < 1 (interaction, F(1, 61) = 3.04, p = .086, ηp2 = .047). A similar pattern of results was found for utilitarian responders, for whom load significantly reduced decision times (15.74 s under high load vs 19.03 s under low load, in antilogged units), F(1, 118) = 5.65, p = .019, ηp2 = .046, but all other effects were nonsignificant (all F(1, 118) < 1).

4.2.5 Pooled conflict sensitivity and Bayes factor analysis

The critical conflict sensitivity findings with our alternative difficulty and conflictedness ratings in Study 3 are consistent with the Study 1 and 2 results we obtained with confidence ratings. Subjects show sensitivity to moral conflict and this effect is not affected by cognitive load. To obtain an even more powerful test of the hypothesis we also pooled the individual rating data from the three studies in a final set of analyses and again tested whether there was evidence for an impact of load on the conflict sensitivity findings. These pooled analyses used the reversed composite conflict score (i.e., the average of the difficulty and conflictedness ratings) from Study 3 as confidence proxy (i.e., the higher the experienced processing difficulty, the lower the score, as with confidence ratings).
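The reversal step can be illustrated with a minimal sketch. It assumes the conventional reverse-scoring rule for a bounded rating scale (scale minimum plus scale maximum, minus the score); the text does not spell out the exact formula used, so this rule and the function name are assumptions made for illustration.

```python
def reverse_score(score, scale_min=1, scale_max=7):
    """Reverse-score a rating so that high conflict maps to a low value.

    Uses the conventional rule (min + max - score); this is an assumption,
    not a formula stated in the text.
    """
    return scale_min + scale_max - score


# Hypothetical composite conflict scores (mean of difficulty and conflictedness).
composites = [1.0, 3.5, 7.0]
confidence_proxy = [reverse_score(c) for c in composites]
print(confidence_proxy)
```

Under this rule the reversed composite stays on the same 1 to 7 range as the confidence ratings, which is what allows the three studies to be pooled on a common index.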

Results of the pooled analysis were consistent with the individual studies. Deontological responders showed a main effect of conflict, F(1, 173) = 32.622, p < .001, ηp2 = .159. Overall, the index decreased by 12% (mean decrease = 0.63 scale points) when solving conflict dilemmas in the pooled test. However, the effect of load, F(1, 173) < 1, and the interaction, F(1, 173) < 1, were still not significant.

For completeness we also ran a pooled analysis for utilitarian responders. Here too results were consistent with the individual studies. There was a main effect of conflict (mean 9% decrease = 0.51 scale points), F(1, 377) = 53.16, p < .001, ηp2 = .124, but no significant effect of load, F(1, 377) < 1, or interaction effect, F(1, 377) < 1.

As we clarified, we mainly focused on deontological responders’ conflict sensitivity to obtain the purest possible test of our hypothesis. However, in all our Study 1, Study 2, and Study 3 tests, the conflict sensitivity rating findings were similar for deontological and utilitarian responders. Therefore, in a final analysis we also pooled the utilitarian and deontological responders in the three studies to get the strongest possible test of the load hypothesis. In this final analysis we obtained a sample size of n = 458. Results were still consistent with the previous analyses. There was a main effect of conflict (mean 7% decrease = 0.40 scale points), F(1, 456) = 70.47, p < .001, ηp2 = .134, but no load effect, F(1, 456) < 1, or interaction, F(1, 456) < 1.

Finally, although our conflict sensitivity findings seem to be robust, our key conclusion always relies on acceptance of the null hypothesis (i.e., the absence of a conflict x load interaction). The null-hypothesis significance testing approach that we followed so far does not make it possible to quantify the degree of support for the null hypothesis. An alternative in this case is Bayesian hypothesis testing using Bayes factors (e.g., Masson, 2011; Morey, Rouder, Verhagen & Wagenmakers, 2014; Wagenmakers, 2007). We used the JASP package (JASP Team, 2016) to run 2 (Conflict, within-subjects) x 2 (Load, between-subjects) Bayesian ANOVAs with default priors on our pooled data. Analyses were run for deontological responders, utilitarian responders, and deontological and utilitarian responders combined. The full JASP output tables are presented in the Supplementary Material. In all cases the model that received the most support against the null model was the one with a main effect of conflict only (deontological responders BF10 = 1.60e+65; utilitarian responders BF10 = 3457.41; combined BF10 = 2031.95). In all cases adding the interaction to the model decreased the degree of support against the null model (deontological responders BF10 = 3.74e+63; utilitarian responders BF10 = 33.48; combined BF10 = 61.8). Hence, the model with a main effect of Conflict only was preferred to the interaction model by a Bayes factor of at least 32 (deontological responders BF10 = 42.81; utilitarian responders BF10 = 103.26; combined BF10 = 32.88). Following the classification of Wetzels et al. (2011), these data provide strong evidence against the hypothesis that cognitive load is affecting deontological responders’ utilitarian sensitivity.
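The model comparison above rests on the fact that two Bayes factors computed against the same null model can be divided to compare the two models directly. The sketch below reproduces that arithmetic from the BF10 values reported in the text; the dictionary layout and function name are hypothetical, and small discrepancies from the reported ratios are to be expected because the inputs are rounded.

```python
# (BF10 for conflict-only model, BF10 for model that adds the interaction),
# taken from the values reported in the text.
bf10 = {
    "deontological": (1.60e65, 3.74e63),
    "utilitarian": (3457.41, 33.48),
    "combined": (2031.95, 61.8),
}


def bf_conflict_vs_interaction(bf_conflict_only, bf_with_interaction):
    """Bayes factor favouring the conflict-only model over the interaction model.

    Both inputs must be Bayes factors against the same null model.
    """
    return bf_conflict_only / bf_with_interaction


ratios = {
    group: bf_conflict_vs_interaction(*values) for group, values in bf10.items()
}
for group, ratio in ratios.items():
    print(group, round(ratio, 2))
```

The recomputed ratios fall within a few hundredths of the reported values (42.81, 103.26, and 32.88), and all exceed 32.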

5 General discussion

The present studies established that moral reasoners show an intuitive sensitivity to conflict between deontological and utilitarian aspects of classic moral dilemmas. In line with previous findings, subjects showed increased doubt (or experienced processing difficulty) about their decisions when solving dilemmas in which utilitarian and deontological considerations cued conflicting responses. Critically, this moral conflict sensitivity was observed irrespective of the amount of cognitive load that burdened reasoners’ cognitive resources. This implies that reasoners are considering the utilitarian aspects of the dilemma intuitively without engaging in demanding deliberation.

In terms of the dual process model of moral cognition the findings support a hybrid model in which System 1 is simultaneously generating a deontological and utilitarian intuition when faced with a classic moral scenario. For the serial model it is hard to explain that deontological reasoners show utilitarian sensitivity. For the parallel model it is hard to explain that this sensitivity (both for utilitarian and deontological responders) is observed without deliberate, System 2 thinking. The present data imply that the operation of considering the utilitarian aspects of one’s moral decision and detecting the conflict with deontological considerations is effortless and happens intuitively. This supports the hypothesis that the process is achieved by System 1.

Our load conflict findings lend credence to the concept of an intuitive utilitarianism. As we noted, in and by itself the idea that utilitarian reasoning can be intuitive is not new. For example, at least since J. S. Mill various philosophers have portrayed utilitarianism as a heuristic intuition or rule of thumb (Sunstein, 2004). At the empirical level, Kahane (2012, 2015; Kahane, Wiech, Shackel, Farias, Savulescu & Tracey, 2012) demonstrated this by simply changing the severity of the deontological transgression. He showed that in cases where the deontological duty is trivial and the consequence is large (e.g., when one needs to decide whether it is acceptable to tell a lie in order to save someone’s life) the utilitarian response is made intuitively. Likewise, Trémolière and Bonnefon (2014) showed that when the kill-save ratios (e.g., kill 1 to save 5 vs kill 1 to save 5000) were made extreme, people also effortlessly made the utilitarian decision. Hence, one could argue that these earlier empirical studies established that at least in some exceptional or extreme scenarios utilitarian responding can be intuitive. What the present findings indicate is that there is really nothing exceptional about intuitive utilitarianism. People’s sensitivity to utilitarian-deontological conflict in the present studies indicates that intuitive utilitarian processing occurs in the standard dilemmas with conventional kill-save ratios (e.g., 1 to 5) and severe deontological transgressions (e.g., killing) that were used to validate the standard dual process model of moral cognition. This implies that utilitarian intuitions are not a curiosity that results from extreme or trivial scenario content but lie at the very core of the moral reasoning process. In this sense, one might want to argue that our observations fit with earlier theoretical suggestions that stress the primacy of intuitive processes in moral judgments (e.g., Białek & Terbeck, 2016; Dubljević & Racine, 2014; Greene & Haidt, 2002; Haidt, 2001).

To avoid confusion it is important to underline that our hybrid dual process model proposal does not argue against the idea that there also exists a type of demanding, deliberate utilitarian System 2 thinking as suggested by the traditional dual process model of moral cognition. Note that at least in Study 1 we observed, in line with the load studies of Conway and Gawronski (2013) and Trémolière et al. (2012), that people gave fewer utilitarian responses under load. This implies that although people might be intuitively detecting conflict between utilitarian and deontological dimensions of a moral dilemma, resolving the conflict in favour of a utilitarian decision can require executive resources. Hence, we do not contest that there might be a type of utilitarian thinking that is driven by System 2 (see Footnote 10). However, the key point here is that simply taking utilitarian considerations into account does not necessarily require deliberation or System 2 thinking. Mere System 1 thinking suffices to have people grasp the utilitarian dimensions of a dilemma and appreciate the conflict with competing deontological considerations. It is this observation that forces us to postulate that System 1 is also generating a utilitarian intuition. In other words, in the hybrid model intuitive and deliberate utilitarianism are complementary rather than mutually exclusive.

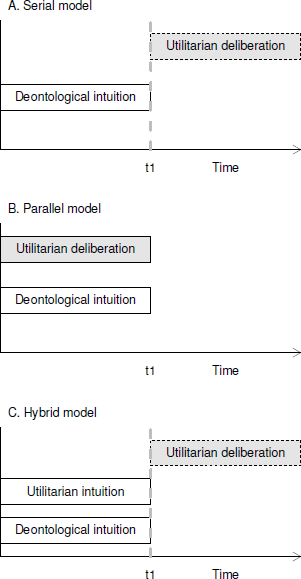

To illustrate the core differences between the hybrid model of moral cognition that we propose and the alternative serial or parallel models, we present a schematic illustration in Figure 5. In the serial model people initially start with System 1 processing which will generate an intuitive deontological response. This System 1 processing might be followed by optional generation of a utilitarian response based on deliberate System 2 processing in a later stage (represented by time t1 in the figure). In the parallel model these System 1 and System 2 processes are assumed to be activated simultaneously from the start. In the hybrid model, there is also parallel initial activation but this activation concerns cueing of a deontological and utilitarian System 1 intuition rather than the parallel activation of the two reasoning systems. But note that, as in the serial model, the initial System 1 processing can be followed by additional System 2 processing in a later stage. Indeed, making a final utilitarian decision and resolving the conflict between competing intuitions in favour of the utilitarian response is expected to be favoured by the explicit reflection and justification that is provided by System 2. However, the key point is that this System 2 deliberation is not needed to simply take the utilitarian view into account and experience the conflict with the cued deontological response.

Figure 5. Schematic illustration of three possible views on the interplay between System 1 and System 2 processing in dual process models of moral cognition. Deliberate System 2 processing is represented by gray bars and intuitive System 1 processing by white bars. The horizontal axis represents the time flow. In the serial model (A) reasoners initially only rely on System 1 processing that will cue an intuitive deontological response. In the parallel model (B) the two systems are both activated from the start. In the hybrid model (C) initial System 1 activation will cue both a deontological and utilitarian intuition. The dashed lines represent the optional nature of System 2 deliberation in the serial and hybrid model that can follow the initial System 1 processing in a later stage (represented as t1).
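
The contrast between the three architectures can also be written down as a toy decision table. The sketch below is only an illustration of the argument, not a model fitted to our data: the function and variable names are hypothetical, the serial model is simplified to the case of a responder who stays with the System 1 response, and `OBSERVED` encodes only the qualitative pattern described in the text (deontological responders show conflict sensitivity both with and without load), not any actual measurements.

```python
# Toy encoding of the qualitative predictions of the three architectures.
# All names are hypothetical and chosen for illustration only.
MODELS = ("serial", "parallel", "hybrid")


def predicts_sensitivity(model: str, system2_available: bool) -> bool:
    """Does the model predict that a deontological responder registers
    the conflict with the utilitarian view?"""
    if model == "serial":
        # Simplifying assumption: the utilitarian view is only computed if
        # System 2 is engaged later, and a responder who stays with the
        # System 1 (deontological) response never computes it.
        return False
    if model == "parallel":
        # The utilitarian view comes from System 2, so sensitivity should
        # disappear when System 2 is burdened by load.
        return system2_available
    if model == "hybrid":
        # System 1 cues both intuitions, so sensitivity survives load.
        return True
    raise ValueError(f"unknown model: {model}")


# Qualitative pattern described in the text (an encoding, not data):
# key = System 2 available (no load), value = sensitivity observed.
OBSERVED = {True: True, False: True}


def consistent_models(observed: dict) -> list:
    """Return the models whose predictions match the observed pattern."""
    return [
        m for m in MODELS
        if all(predicts_sensitivity(m, s2) == seen for s2, seen in observed.items())
    ]


print(consistent_models(OBSERVED))  # prints ['hybrid']
```

Under these simplifying assumptions only the hybrid architecture matches the pattern, which mirrors the verbal argument: the serial reading predicts no sensitivity among deontological responders, and the parallel reading predicts that sensitivity vanishes under load.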

It should be noted that the hybrid model that we present does not make any specific claims about the nature of the System 2 deliberation that follows the initial System 1 processing. What we refer to as “utilitarian deliberation” might involve a justification process that allows people to provide explicit justifications for the initially cued intuitive utilitarian response. Alternatively, it might involve demanding inhibitory processing to override one of the conflicting responses, some deliberate weighting of the utilitarian and deontological responses, or a combination of all these different processes. Likewise, the hybrid model does not entail that System 2 deliberation is necessarily restricted to the utilitarian view. It is not excluded that in some cases people will allocate System 2 resources to deliberate about the deontological response (see Footnote 11). Moreover, given that the impact of load on moral decisions was not robust across our three studies, one might even question whether there is still a need to postulate that making a utilitarian decision necessarily requires System 2 deliberation to start with. Hence, pinpointing the precise role and nature of the postulated System 2 deliberation phase is clearly one aspect of the model (or any other dual-process model) that will need further testing. Clearly, the key contribution of our model and work lies in the specification of the initial processing stage and the postulation of a parallel cueing of a deontological and utilitarian System 1 intuition.

It is also worthwhile to highlight that there might be some confusion in the field as to which specific interaction architecture the original dual process model of Greene and colleagues (e.g., Greene, 2014; Greene & Haidt, 2002) entails. Often the model has been interpreted as postulating a serial processing architecture, although it is acknowledged that the model is also open to a parallel reading (e.g., Baron et al., 2015; Koop, 2013; Tinghög et al., 2016). However, note that it might also be the case that the Greene dual process model was conceived with a hybrid processing architecture in mind. Under this interpretation of the Greene model, mere reading of the utilitarian dilemma option, without any further deliberation, suffices to intuitively grasp the utilitarian implications of a dilemma and detect the conflict with the deontological view. The idea here is that people cannot be said to have understood a moral “dilemma” without some understanding of the fact that there are competing considerations at play. Such understanding would, by definition, imply that people automatically give some minimal weight to each perspective in their judgment process. Under this reading, the Greene model always entailed that moral dilemmas cue both deontological and utilitarian intuitions and it should indeed be conceived as a hybrid model. Consequently, rather than a revision of the Greene dual process model our present work should be read as a correction of the received interpretation of the model. We prefer to keep a neutral position in this debate. Whatever the precise model Greene and colleagues might have had in mind, the key point is that the different possible instantiations have never been empirically tested against each other. As even the most fervent proponents of the dual process model in cognitive science have acknowledged, without a specification of the interaction, any dual process model is vacuous (e.g., Evans, 2007; Evans & Stanovich, 2003). It is here that the main contribution of our paper lies: We test the predictions of three possible architectures directly against each other and present robust evidence for the hybrid model.

We believe it is interesting to point to the possible wider implications of the present findings for dual process models of human cognition, outside of the specific moral reasoning field. As we noted, hybrid dual process models have already been put forward in studies on logical and probabilistic reasoning (and the related Heuristics and Biases literature, e.g., De Neys, 2012; Handley & Trippas, 2015; Pennycook, Fugelsang & Koehler, 2015). In a nutshell, the traditional serial or parallel dual process models in these fields posit that, when people are faced with a logical or probabilistic reasoning task, System 1 will cue an intuitive, so-called “heuristic” response based on stored associations. Sound reasoning along traditional logical and probabilistic principles is typically assumed to require deliberate, System 2 processing (e.g., Kahneman, 2011; Evans & Stanovich, 2013). However, empirical studies have demonstrated that even reasoners who give the intuitively cued heuristic System 1 answer show sensitivity to the conflict between cued heuristics and logical or probabilistic principles in classic reasoning tasks – as expressed, for example, by an increased response doubt (De Neys, Rossi & Houdé, 2013; Thompson & Johnson, 2014; Pennycook, Trippas, Handley & Thompson, 2014; Stupple, Ball & Ellis, 2013; Trippas, Handley, Verde & Morsanyi, 2016). Critically, this sensitivity is also observed when System 2 is “knocked out” under load or time pressure (e.g., Bago & De Neys, 2017; Franssens & De Neys, 2009; Johnson, Tubau & De Neys, 2016; Pennycook et al., 2014; Thompson & Johnson, 2014). This result suggests that people consider the logical and probabilistic principles intuitively, and it was one of the reasons to posit a hybrid view in which System 1 simultaneously cues a heuristic and a logical intuition (e.g., De Neys, 2012; Pennycook et al., 2015). The simple fact that we want to highlight here is that these findings from the logical/probabilistic reasoning field show an interesting similarity with the current findings in the moral reasoning field. Both in the case of moral and logical/probabilistic reasoning there is evidence that reasoning processes that are often attributed to System 2 can also be achieved by System 1. To put it bluntly, in both cases it seems that System 1 is less oblivious and more informed than has been assumed. Although this point remains somewhat speculative, we hope that the present study will at least stimulate a closer interaction between dual process research on moral cognition and logical/probabilistic reasoning (e.g., Trémolière, De Neys & Bonnefon, 2017). We believe that such interaction holds great potential for each of the fields and the possible development of a general, all-purpose dual process model of human thinking.

To conclude, the results of the present studies indicate that reasoners show an intuitive sensitivity to the utilitarian aspects of classic moral dilemmas without engaging in demanding deliberation. These findings lend credence to a hybrid dual process model of moral reasoning in which System 1 is simultaneously generating a deontological and utilitarian intuition. Taken together, the available evidence strongly suggests that the core idea of an intuitive utilitarianism needs to be incorporated in any viable dual process model of moral cognition.