1. Introduction

We will start with a brief mention of a few examples of risks/trouble. Of course, there is much more than this. Could there be a single root cause for much of this trouble? And are we (partially) responsible?

After mentioning some of this trouble, and summaries thereof in the press (Section 2.2) and academic literature (Section 2.3), Section 3 will then survey some of the work on Risks 1.0 and 2.0 in our field, computer science. Reporters are accusing us of pivoting when they want to hear what we are doing to address Risks 2.0 (addictive, dangerous, and deadly), and we respond with a discussion of our recent progress on Risks 1.0 (bias and fairness).

Section 4 attempts to identify root causes. It has been suggested that the combination of machine learning and social media has created a Frankenstein Monster that takes advantage of human weaknesses. We cannot put our phones down, even though we know it is bad for us (and bad for society). Our attempts to build toxicity classifiers (Section 3) and moderation (Section 5) are not effective, given incentives (Section 6). We should not blame consumers of misinformation for their gullibility, or suppliers of misinformation (including adversaries) for taking advantage of the opportunities. There would be less toxicity without market makers creating a market for misinformation and fanning the flames.

Finally, after a discussion of history in Section 7, we will end with constructive suggestions in Section 8. In much of the work that we survey, there tends to be more discussion of problems than solutions. While that may be somewhat depressing, we are pleasantly surprised to see so much pushback from so many directions: governments, users, content providers, academics, consumer groups, advertisers, and employees. Given the high stakes, as well as the challenges, we will need all the help we can get from as many perspectives as possible.Footnote a

We are even more optimistic about the long term. While trafficking in misinformation may have been insanely profitable thus far, as evidenced by stock market caps, the long-term outlook is less bullish. Recent layoffs at Twitter and Facebook suggest the misinformation business may not continue to be as insanely profitable as it has been. At the end of the day, just as the lawlessness of the Wild West did not last long, this too shall pass. Chaos may be insanely profitable in the short term, but in the long term, chaos is bad for business (and many other parties).

As computer scientists, we will survey a diverse set of perspectives in this paper and avoid the temptation to editorialize and advocate our own views. We apologize in advance for so many quotes, citations, and footnotes. We want to make it clear that we are not experts in all these fields, nor do we claim to be entitled to a position on these questions. Our goals are more modest than that. We want to survey criticisms that are out there and suggest that our field should work on a response.

That said, we will suggest that we need more work on both Risks 1.0 (bias and fairness) and Risks 2.0 (addictive, dangerous, and deadly). Thus far, there has been quite a bit of work in our field on Risks 1.0. We would like to see more work on Risks 2.0.

2. Risks 1.0 and Risks 2.0

2.1. What happened, and was it our fault?

We will start with a brief discussion of trouble around the world. It might seem that these issues are unrelated, but we fear that there may be a common root cause, and we may have contributed to the problem. Machine learning and social media have been implicated in much of this trouble. Correlated risks are more dangerous than uncorrelated risks. It may even be possible to strengthen claims from correlation to causality, as will be discussed in Section 4.2.

Much has been written about big data and Responsible AI (Zook et al. Reference Zook, Barocas, Boyd, Crawford, Keller, Gangadharan, Goodman, Hollander, Koenig, Metcalf, Narayanan, Nelson and Pasquale2017; McNamee Reference McNamee2020). The term, net neutrality, was introduced in Wu (Reference Wu2003). Tim Wu has also written about many related issues such as attention theft.Footnote b

Cathy O’Neil (Reference O’Neil2016) warned us that machine learning is a risk to democracy. Machine learning algorithms are being used to make lots of important decisions like who gets a loan and who gets out of jail. Many of these algorithms are biased and unfair (though perhaps not intentionally so by design). We will refer to these risks as Risks 1.0.

After Hillary Clinton lost the election to Donald Trump, she asked, “What happened?” (Clinton Reference Clinton2017). There has been considerable discussion of the usual suspects: her emails,Footnote c Wikileaks,Footnote d the RussiansFootnote e , Footnote f (Aral Reference Aral2020) and the director of the FBIFootnote g (Comey Reference Comey2018). Section 4 will suggest the root cause is actually less malicious, but more insidious.

Shortly after Clinton’s book, Madeleine Albright (1937–2022), secretary of state of the United States from 1997 to 2001, warned us about the rise of fascism around the world (Albright and Woodward Reference Albright and Woodward2018). A review of her bookFootnote h starts with: A seasoned US diplomat is not someone you’d expect to write a book with the ominous title Fascism: A Warning. This review continues with her response to a question about authoritarian leaders creating an anti-democratic spiral [underlining added]:

But are we witnessing an anti-democratic spiral? I think so. Some people have said my book is alarmist, and my response is always, “It’s supposed to be.” We ought to be alarmed by what’s happening. Demagogic leaders are taking advantage of all these various factors and using it to divide people further. We should absolutely be alarmed by that.

2.2. What is the press saying?

Two new books, The Chaos Machine (Fisher Reference Fisher2022) and Like, Comment, Subscribe (Bergen Reference Bergen2022), raise additional risks to public health/safety/security (henceforth, Risks 2.0) and suggest a connection to social media.

Bergen’s book is more about YouTube,Footnote i with more emphasis on domestic issues in America, especially from a perspective inside YouTube/Google. Fisher’s book is more about FacebookFootnote j than YouTube, with more emphasis on international trouble, from the perspective of a journalist who has covered trouble around the world with his colleague, Amanda Taub. Fisher’s book covers much of their reporting in the New York Times,Footnote k , Footnote l , Footnote m , Footnote n , Footnote o , Footnote p , Footnote q , Footnote r a newspaper in the United States.

A review of Bergen’s bookFootnote s emphasizes the reference to Frankenstein, as well as misinformation [underlining added]:

Bergen, a writer for Bloomberg Businessweek, begins the book with a quote from Mary Shelley’s “Frankenstein” and it’s easy to see why. From outsized YouTube personalities to misinformation campaigns, YouTube oftentimes comes across as the creature whose makers have lost control.

Fisher’s book mentions trouble around the world: Myanmar,Footnote t Sri Lanka,Footnote u opposition to vaccines,Footnote v climate change denial,Footnote w mass shootings, GamerGate,Footnote x Pizzagate,Footnote y QAnon,Footnote z right wing politics in Germany (AfD)Footnote aa , Footnote ab and America (MAGA),Footnote ac Charlottesville,Footnote ad the January 6th Insurrection,Footnote ae and more. Social media has been implicated in much of this trouble, as well as troubles that are not mentioned in Fisher’s book.Footnote af

A review of Fisher’s bookFootnote ag points out that Fisher is a careful journalist and does not explicitly “assume causality,” though causality is strongly implied (as will be discussed in Section 4.2) [underlining added]:

Fisher, a New York Times journalist who has reported on horrific violence in Myanmar and Sri Lanka, offers firsthand accounts from each side of a global conflict, focusing on the role Facebook, WhatsApp and YouTube play in fomenting genocidal hate. Alongside descriptions of stomach-churning brutality, he details the viral disinformation that feeds it, the invented accusations, often against minorities, of espionage, murder, rape and pedophilia. But he’s careful not to assume causality where there may be mere correlation.

There is considerable discussion of these topics on the internet in videos, blogs, podcasts, and more.Footnote ah , Footnote ai , Footnote aj , Footnote ak These topics are also discussed in a popular movie on Netflix, The Social Dilemma.Footnote al , Footnote am Frontline Footnote an has a documentary with a similar title, The Facebook Dilemma.Footnote ao

2.3. Academic literature

Fisher and Bergen are journalists. Academics provide a different perspective. There are many academic papers suggesting connections between social media and:

1. addiction (Young Reference Young1998; Griffiths Reference Griffiths2000; Kuss and Griffiths Reference Kuss and Griffiths2011a; Kuss and Griffiths Reference Kuss and Griffiths2011b; Andreassen et al. Reference Andreassen, Torsheim, Brunborg and Pallesen2012; Pontes and Griffiths Reference Pontes and Griffiths2015; Andreassen Reference Andreassen2015; van den Eijnden, Lemmens, and Valkenburg Reference van den Eijnden, Lemmens and Valkenburg2016; Andreassen et al. Reference Andreassen, Billieux, Griffiths, Kuss, Demetrovics, Mazzoni and Pallesen2016; Andreassen, Pallesen, and Griffiths Reference Andreassen, Pallesen and Griffiths2017; Courtwright Reference Courtwright2019),

2. misinformation (Lazer et al. Reference Lazer, Baum, Benkler, Berinsky, Greenhill, Menczer, Metzger, Nyhan, Pennycook, Rothschild, Schudson, Sloman, Sunstein, Thorson, Watts and Zittrain2018; Broniatowski et al. Reference Broniatowski, Jamison, Qi, Alkulaib, Chen, Benton, Quinn and Dredze2018; Schackmuth Reference Schackmuth2018; Vosoughi, Roy, and Aral Reference Vosoughi, Roy and Aral2018; Johnson et al. Reference Johnson, Velásquez, Restrepo, Leahy, Gabriel, El Oud, Zheng, Manrique, Wuchty and Lupu2020; Suarez-Lledo et al. Reference Suarez-Lledo and Alvarez-Galvez2021),

3. polarization/homophily Footnote ap , Footnote aq (McPherson, Smith-Lovin, and Cook Reference McPherson, Smith-Lovin and Cook2001; De Koster and Houtman Reference De Koster and Houtman2008; Colleoni, Rozza, and Arvidsson Reference Colleoni, Rozza and Arvidsson2014; Bakshy, Messing, and Adamic Reference Bakshy, Messing and Adamic2015; Barberá Reference Barberá2015; Kurka, Godoy, and Zuben Reference Kurka, Godoy and Zuben2016; Allcott and Gentzkow Reference Allcott and Gentzkow2017; Fourney et al. Reference Fourney, Rácz, Ranade, Mobius and Horvitz2017; Ferrara Reference Ferrara2017; Bail et al. Reference Bail, Argyle, Brown, Bumpus, Chen, Hunzaker, Lee, Mann, Merhout and Volfovsky2018; Grinberg et al. Reference Grinberg, Joseph, Friedland, Swire-Thompson and Lazer2019; Rauchfleisch and Kaiser Reference Rauchfleisch and Kaiser2020; Kaiser and Rauchfleisch Reference Kaiser and Rauchfleisch2020; Baptista and Gradim Reference Baptista and Gradim2020; Zhuravskaya, Petrova, and Enikolopov Reference Zhuravskaya, Petrova and Enikolopov2020),

4. riots/genocide (Zeitzoff Reference Zeitzoff2017; Hakim Reference Hakim2020),

5. cyberbullying (Zych, Ortega-Ruiz, and Rey Reference Zych, Ortega-Ruiz and Rey2015; Hamm et al. Reference Hamm, Newton, Chisholm, Shulhan, Milne, Sundar, Ennis, Scott and Hartling2015; Paluck, Shepherd, and Aronow Reference Paluck, Shepherd and Aronow2016),

6. suicide, depression, eating disorders, etc. (Luxton, June, and Fairall Reference Luxton, June and Fairall2012; O’Dea et al. Reference O’Dea, Wan, Batterham, Calear, Paris and Christensen2015; Choudhury et al. Reference Choudhury, Kiciman, Dredze, Coppersmith and Kumar2016; Primack et al. Reference Primack, Shensa, Escobar-Viera, Barrett, Sidani, Colditz and James2017; Robinson et al. Reference Robinson, Cox, Bailey, Hetrick, Rodrigues, Fisher and Herrman2016),

7. and insane profits (Oates Reference Oates2020).

Many of these topics are discussed in many other places, as well (Ihle Reference Ihle2019; Aral Reference Aral2020).

2.4. Summary of risks/trouble

Much of the trouble above is associated with misinformation. It is natural to try to fix the problem by going after bias and misinformation with debiasing, fact-checking,Footnote ar and machine learning (see footnote bd), but that may not work if misinformation is a consequence of some other underlying root cause and/or unfortunate incentives. Debiasing runs into criticism from the NLP community: removing bias may not reduce inequality (Senthil Kumar et al. Reference Senthil Kumar, Chandrabose and Chakravarthi2021), and Awareness is better than blindness (Caliskan, Bryson, and Narayanan Reference Caliskan, Bryson and Narayanan2017).

To make matters worse, misinformation is creating correlated risks. Correlated risks are worse than uncorrelated risks.Footnote as Social media helps various small groups find one another; tweets are not i.i.d.Footnote at (Himelboim, McCreery, and Smith Reference Himelboim, McCreery and Smith2013; Barberá Reference Barberá2015; Kurka et al. Reference Kurka, Godoy and Zuben2016). YouTube recommendations are also not i.i.d. (Kaiser and Rauchfleisch Reference Kaiser and Rauchfleisch2020). With these new social media technologies, it is no longer necessary for conspirators to conspire with one another explicitly the way they used to do in face-to-face meetings, and over the phone.
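To illustrate why correlated risks are worse, the following minimal sketch (ours, not taken from the cited work) computes the variance of a total of n risks under two simplifying assumptions: every risk has the same standard deviation, and every pair of risks has the same correlation. The function name `variance_of_total` and the parameters `sigma` and `rho` are hypothetical, chosen only for this illustration.

```python
def variance_of_total(n: int, sigma: float, rho: float) -> float:
    """Variance of the sum of n risks.

    Assumes (for illustration only) that every risk has standard deviation
    `sigma` and that every pair of risks has the same correlation `rho`.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    # A common pairwise correlation cannot be lower than -1/(n-1).
    lower = -1.0 if n == 1 else -1.0 / (n - 1)
    if not lower <= rho <= 1.0:
        raise ValueError("rho is outside the valid range for n risks")
    # n variance terms plus n*(n-1) covariance terms.
    return n * sigma**2 + n * (n - 1) * rho * sigma**2


if __name__ == "__main__":
    for rho in (0.0, 0.1, 0.5):
        print(f"rho={rho}: variance of total = {variance_of_total(100, 1.0, rho):.1f}")
```

Under these assumptions, with 100 risks of unit variance, the variance of the total is 100 when the risks are uncorrelated and 1090 when the pairwise correlation is only 0.1. Independent risks tend to average out; correlated risks compound.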

3. What are we doing about Risks 1.0 and Risks 2.0?

There is considerable work on Trustworthy computing,Footnote au Responsible AIFootnote av , Footnote aw , Footnote ax and ethicsFootnote ay , Footnote az (Blodgett et al. Reference Blodgett, Barocas, Daumé III and Wallach2020; Rogers, Baldwin, and Leins Reference Rogers, Baldwin and Leins2021; Church and Kordoni Reference Church and Kordoni2021). There is a documentary on PBS, Coded Bias Documentary — Facial Recognition and A.I. Bias, directed by Shalini Kantayya.Footnote ba The following quotes are from a summary of this documentary:Footnote bb

Over 117 million people in the US has their face in a facial-recognition network that can be searched by the police.

Racism is becoming mechanized and robotized.

Power is being wielded through data collection, through algorithms, through surveillance.

Some of the leading figures in this field participated in an ACM Panel Discussion: “From Coded Bias to Algorithmic Fairness: How do we get there?”Footnote bc

Many of these concerns are relevant to our field. There is a considerable body of work in the ACL community on bias (Mitchell et al. Reference Mitchell, Wu, Zaldivar, Barnes, Vasserman, Hutchinson, Spitzer, Raji and Gebru2019; Blodgett et al. Reference Blodgett, Barocas, Daumé III and Wallach2020; Bender et al. Reference Bender, Gebru, McMillan-Major and Shmitchell2021), fake news detection,Footnote bd hate speech Footnote be (Schmidt and Wiegand Reference Schmidt and Wiegand2017; Davidson et al. Reference Davidson, Warmsley, Macy and Weber2017), offensive language (Zampieri et al. Reference Zampieri, Nakov, Rosenthal, Atanasova, Karadzhov, Mubarak, Derczynski, Pitenis and Çöltekin2020), abusive language (Waseem et al. Reference Waseem, Davidson, Warmsley and Weber2017), and more.

Many classifiers can be found on HuggingFaceFootnote bf and elsewhere.Footnote bg Unfortunately, as will be discussed in Section 6.1, these classifiers are unlikely to reduce toxicity given current incentives in the social media business to maximize shareholder value.

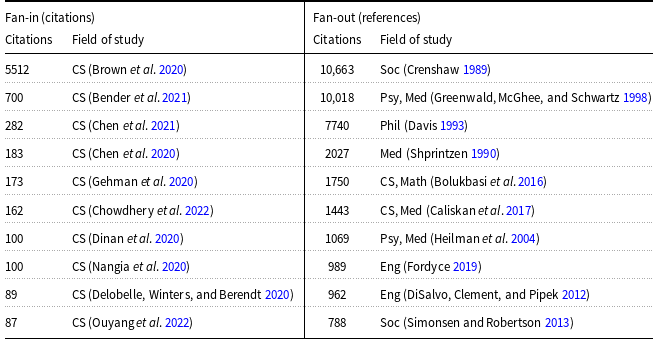

Table 1 was computed from Blodgett et al. (Reference Blodgett, Barocas, Daumé III and Wallach2020), a highly critical survey of work on bias in our field. They categorized 146 papers and concluded: the vast majority of these papers do not engage with the relevant literature outside of NLP.

Table 1. The top 10 papers by citations. The fan-out (right) are more interdisciplinary (and more cited)

Table 1 uses Semantic ScholarFootnote bh to provide additional evidence supporting this conclusion. The table shows 10 papers that cite the survey (fan-in) and 10 papers that are cited by the survey (fan-out). Both columns are limited to just the top 10 papers by citations, since there are too many papers to show (according to Semantic Scholar, 446 papers cite the survey and 236 are cited by the survey).

The Semantic Scholar API was also used to estimate fields of study. Note the differences between fan-out (right) and fan-in (left), both in terms of citation counts as well as fields of study. One of the points of the survey is that work in our field should engage more with the relevant (high-impact) literature in other fields. While there is considerable work in our field on bias, our work has relatively little impact beyond computer science.
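The selection step behind Table 1 can be sketched as follows. This is a minimal illustration, not the script used for the paper. It assumes that the fan-in and fan-out papers have already been retrieved as dictionaries with `title`, `citationCount`, and `fieldsOfStudy` keys (key names modeled on the Semantic Scholar API, stated here as an assumption). The helper names `top_k_by_citations` and `field_counts` and the toy records are hypothetical.

```python
from collections import Counter
from typing import Any

Paper = dict[str, Any]


def top_k_by_citations(papers: list[Paper], k: int = 10) -> list[Paper]:
    """Return the k most-cited papers, treating a missing count as 0."""
    return sorted(papers, key=lambda p: p.get("citationCount") or 0, reverse=True)[:k]


def field_counts(papers: list[Paper]) -> Counter:
    """Count how often each field of study appears across the papers."""
    counts: Counter = Counter()
    for paper in papers:
        # fieldsOfStudy may be missing or None for some records.
        counts.update(paper.get("fieldsOfStudy") or [])
    return counts


if __name__ == "__main__":
    # Toy records for illustration only; not real papers or real counts.
    fan_out = [
        {"title": "hypothetical cited paper A", "citationCount": 5000,
         "fieldsOfStudy": ["Sociology"]},
        {"title": "hypothetical cited paper B", "citationCount": 1200,
         "fieldsOfStudy": ["Psychology", "Computer Science"]},
        {"title": "hypothetical cited paper C", "citationCount": None,
         "fieldsOfStudy": None},
    ]
    for paper in top_k_by_citations(fan_out, k=2):
        print(paper["citationCount"], paper["title"])
    print(field_counts(fan_out).most_common())
```

Applying the same two helpers to the fan-in list and to the fan-out list gives the two columns of a table like Table 1, along with a rough comparison of fields of study on each side.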

3.1. Reporters are accusing our field of pivoting

In addition to concerns about engaging with other fields, we would also like to see more work addressing Risks 2.0. Some journalists are accusing the tech community of pivoting when they want to talk about Risks 2.0 (addictive, dangerous, and deadly), and we respond with a discussion of recent progress on work addressing Risks 1.0 (bias and fairness) [underlining added]:

But Entin and Quiñonero had a different agenda. Each time I tried to bring up these topics, my requests to speak about them were dropped or redirected. They only wanted to discuss the Responsible AI team’s plan to tackle one specific kind of problem: AI bias, in which algorithms discriminate against particular user groups. An example would be an ad-targeting algorithm that shows certain job or housing opportunities to white people but not to minorities. Footnote bi

This criticism was followed by an assertion that they (and we) should be prioritizing Risks 2.0 [underlining added]:

By the time thousands of rioters stormed the US Capitol in January, organized in part on Facebook… it was clear... the Responsible AI team had failed to make headway against misinformation and hate speech because it had never made those problems its main focus…

That criticism was followed by an assertion that they were not prioritizing Risks 2.0 because of incentives [underlining added]:

The reason is simple. Everything the company does and chooses not to do flows from a single motivation: Zuckerberg’s relentless desire for growth.

There is a growing concern that much of this criticism could also be applied to the NLP community. There is a real danger that the court of public opinion may not view our work on Risks 1.0 as part of the solution and might even see our work as part of the problem. We need to make progress on both Risks 1.0 and Risks 2.0.

The books mentioned above (Fisher Reference Fisher2022; Bergen Reference Bergen2022) have quite a bit to say about Risks 2.0. Many academics are mentioned (e.g., Chaslot, DiResta, Farid, Kaiser, Müller, Rauchfleisch, Schwarz), but there is relatively little discussion of our toxicity classifiers. Our classifiers may not have the impact we would hope because there are few incentives for social media companies to reduce toxicity, as will be discussed in Section 6.

4. Root causes

How does fake news spread? It is often suggested that fake news is spread by malicious bots (Bessi and Ferrara Reference Bessi and Ferrara2016; Ferrara Reference Ferrara2017) and malicious adversariesFootnote bj (Aral Reference Aral2020), perhaps via APIs (Ng and Taeihagh Reference Ng and Taeihagh2021), but the books mentioned above suggest an alternative mechanism. It is suggested that the use of machine learning to maximize engagement may have (accidentally) created a Frankenstein Monster that spreads fake news more effectively than real newsFootnote bk (Vosoughi et al. Reference Vosoughi, Roy and Aral2018).

4.1. A Frankenstein monster

What do the social media companies have to do with all this trouble? The suggestion is that a number of companies have been working over a number of years on machine learning algorithms for recommending content (Davidson et al. Reference Davidson, Liebald, Liu, Nandy, Van Vleet, Gargi, Gupta, He, Lambert, Livingston and Sampath2010; Covington, Adams, and Sargin Reference Covington, Adams and Sargin2016). They may or may not have intended to create a malicious Frankenstein monster, but either way, they eventually stumbled on a remarkably effective use of persuasive technology (Fogg Reference Fogg2002), Pavlovian conditioning (Rescorla Reference Rescorla1988; Bitterman Reference Bitterman2006) and Skinner’s intermittent variable reinforcement (Skinner Reference Skinner1953; Skinner Reference Skinner1965; Skinner Reference Skinner1986) to take advantage of human weaknesses. Just as casinos take advantage of addicted gamblers, recommender algorithms (Gillespie Reference Gillespie2014) know that it is impossible for us to satisfy our cravings for likes. We cannot put our phones down, and stop taking dozens of dopamine hits every day, even though we know it is bad for us (and bad for society)Footnote bl , Footnote bm (Jacobsen and Forste Reference Jacobsen and Forste2011; Junco Reference Junco2012; Junco and Cotten Reference Junco and Cotten2012).

Sean Parker, who had become Facebook’s first president at the age of 24 years, put it this way [underlining added]:

we are unconsciously chasing the approval of an automated system designed to turn our needs against us (Fisher Reference Fisher2022) (p. 31)

Fisher’s book (Fisher Reference Fisher2022) (pp. 24–25) suggests a connection between Napster’s strategy of exploiting a weakness in the music industry and Facebook’s strategy of exploiting a weakness in human nature, which they refer to as the social-validation feedback loop [underlining added]:

Parker had cofounded Napster, a file-sharing program … that… damaged the music business… Facebook’s strategy, as he described it, was not so different from Napster’s. But rather than exploiting weaknesses in the music industry, it would do so for the human mind… “How do we consume as much of your time and conscious attention as possible?” … To do that, he said, “We need to sort of give you a little dopamine hit every once in a while, because someone liked or commented on a photo or a post or whatever. And that’s going to get you to contribute more content, and that’s going to get you more likes and comments.” He termed this the “social-validation feedback loop” … “exploiting a vulnerability in human psychology.” He and Zuckerberg “understood this” from the beginning, he said, and “we did it anyway.”

Maximizing engagement brings out the worst in people, with significant risks for public health, public safety, and national security [underlining added]:

Either unable or unwilling to consider that its product might be dangerous, Facebook continued expanding its reach in Myanmar and other developing and under-monitored countries. It moored itself entirely to a self-enriching Silicon Valley credo that [Google’s Eric] Schmidt had recited on that early visit to Yangon: “The answer to bad speech is more speech. More communication, more voices.” (Fisher Reference Fisher2022) (p. 38)

Guillaume Chaslot worked on YouTube’s algorithm but was fired because he wanted to make the algorithm less toxic (and less profitable). He has since become an outspoken critic of maximizing engagement [underlining added]:Footnote bn , Footnote bo

YouTube was exploiting a cognitive loophole known as the illusory truth effect. (Fisher Reference Fisher2022) (p. 125)

Chaslot’s mention of “the illusory truth effect” is a reference to the literature on truth effectsFootnote bp (Dechêne et al. Reference Dechêne, Stahl, Hansen and Wänke2010; Fazio et al. Reference Fazio, Brashier, Payne and Marsh2015; Unkelbach et al. Reference Unkelbach, Koch, Silva and Garcia-Marques2019). There is a well-known tendency to believe false information to be correct after repeated exposure. The illusory truth effect plays a significant role in such fields as election campaigns, advertising, news media, and political propaganda.Footnote bq

Eli Pariser coined the term “filter bubble” circa 2010.Footnote br The Frankenstein monster is creating polarization by giving each of us a personalized view that we are likely to agree with, leading to confirmation bias. He gave a TED Talk on filter bubbles in 2011 [underlining added]:

As web companies strive to tailor their services (including news and search results) to our personal tastes, there’s a dangerous unintended consequence: We get trapped in a “filter bubble” and don’t get exposed to information that could challenge or broaden our worldview… this will ultimately prove to be bad for us and bad for democracy. Footnote bs

In summary, the root cause of much of the trouble mentioned above is the market maker and the business case, not the suppliers and consumers of misinformation (or even malicious adversaries like the Russian Internet Research Agency).Footnote bt Trafficking in misinformation is so insanely profitable (at least in the short term) that it is in the market maker’s interest to do so, despite risks to public health/safety/security. Suppliers and consumers of misinformation (and adversaries) would not do what they are doing if the market makers did not create the market and fan the flames.

4.2. Causality

Although Fisher does not assume causality, as discussed in Section 2.2, he provides considerable evidence connecting the dots between social media and violence [underlining added]:

The country’s leaders [in Sri Lanka], desperate to stem the violence, blocked all access to social media. It was a lever they had resisted pulling, reluctant to block platforms that some still credited with their country’s only recent transition to democracy, and fearful of appearing to reinstate the authoritarian abuses of earlier decades. Two things happened almost immediately. The violence stopped; without Facebook or WhatsApp driving them, the mobs simply went home. And Facebook representatives, after months of ignoring government ministers, finally returned their calls. But not to ask about the violence. They wanted to know why traffic had zeroed out. (Fisher Reference Fisher2022) (p. 175)

Fisher and Taub provide a second example of causality in footnote o, where they refer to Müller and Schwarz (Reference Müller and Schwarz2021) as “a landmark study,” providing strong evidence for causality [underlining added]:

This may be more than speculation. Little Altena exemplifies a phenomenon long suspected by researchers who study Facebook: that the platform makes communities more prone to racial violence. And, now, the town is one of 3,000-plus data points in a landmark study that claims to prove it.

The abstract of Müller and Schwarz (Reference Müller and Schwarz2021) does not mince words: there is a strong assertion of causality [underlining added]:

We show that anti-refugee sentiment on Facebook predicts crimes against refugees… To establish causality, we exploit exogenous variation in major Facebook and internet outages, which fully undo the correlation between social media and hate crime… Our results suggest that social media can act as a propagation mechanism between online hate speech and violent crime. (Müller and Schwarz Reference Müller and Schwarz2021)

A third argument for causality involves misinformation in Sri Lanka. This misinformation infected all but the elderly, who are immune to the problem because they are less exposed to Facebook [underlining added]:

When I asked Lal and the rest of his family if they believed the posts were true, all but the elderly, who seemed not to follow, nodded. (Fisher Reference Fisher2022) (p. 167)

4.3. An example of trouble: Vaccines and minority rule

Much has been written about misinformation and vaccines in Nature (Johnson et al. Reference Johnson, Velásquez, Restrepo, Leahy, Gabriel, El Oud, Zheng, Manrique, Wuchty and Lupu2020) and Chapter 1 of Fisher’s book, and elsewhere (Suarez-Lledo et al. Reference Suarez-Lledo and Alvarez-Galvez2021). Apparently, large majorities support vaccines, and yet, there are schools with low vaccination rates. Misinformation is creating a serious (correlated) risk to public health [underlining added]:Footnote bu , Footnote bv

It was 2014… and DiResta had only recently arrived in Silicon Valley… she began to investigate whether the anti-vaccine anger she’d seen online reflected something broader. Buried in the files of California’s public-health department, she realized, were student vaccination rates for nearly every school in the state… What she found shocked her. Some of the schools were vaccinated at only 30 percent…” She called her state senator’s office to ask if anything could be done to improve vaccination rates. It wasn’t going to happen, she was told. Were vaccines really so hated? she asked. No, the staffer said. Their polling showed 85 percent support for a bill that would tighten vaccine mandates in schools. But lawmakers feared the extraordinarily vocal anti-vaccine movement… seemed to be emerging from Twitter, YouTube, and Facebook… Hoping to organize some of those 85 percent of Californians who supported the vaccination bill, she started a group—where else? — on Facebook. When she bought Facebook ads to solicit recruits, she noticed something curious. Whenever she typed “vaccine,” or anything tangentially connected to the topic, into the platform’s ad-targeting tool, it returned groups and topics that were overwhelmingly opposed to vaccines. (Fisher Reference Fisher2022) (pp. 13–14)

Similar mechanisms may explain why the minority has such a good chance to control all three branches of the US government (presidency, congress, and the courts) in the near future. Is it possible that the anti-vaccination movement is strong, not because of the facts/science, or the number of supporters, but because of social media? There are some scary precedents where minority rule ended badly.Footnote bw

4.4. Summary of root causes and precedents

Many of these risks are not new. There has been a long tradition of misinformation, propagandaFootnote bx and hype (Aral Reference Aral2020). The “Big Lie” used to refer to Goebbels.Footnote by Mark Twain is credited with the aphorism that a lie can travel halfway around the world while the truth is putting on its shoes (Jin et al. Reference Jin, Wang, Zhao, Dougherty, Cao, Lu and Ramakrishnan2014). There are examples of protest movements that went viral long before Facebook and the Arab Spring.Footnote bz

What is new is the speed and connectivity. With modern technology, a lie can travel faster than ever before.

5. Moderation: An expensive nonsolution

Facebook and YouTube have expensive cost centers that attempt to clean up the mess, but they cannot be expected to keep up with better-resourced profit centers that are pumping out toxic sludge as fast as they can [underlining added]:

Some had joined the company thinking they could do more good by improving Facebook from within than by criticizing from without. And they had been stuck with the impossible job of serving as janitors for the messes made by the company’s better-resourced, more-celebrated growth teams. As they fretted over problems like anti-refugee hate speech or disinformation in sensitive elections, the engineers across the hall were redlining user engagement in ways that, almost inevitably, made those problems worse. (Fisher Reference Fisher2022) (p. 261)

Facebook outsources much of the clean-up effort to under-resourced third parties [underlining added]:

After a few weeks had passed [after a violent incident in Sri Lanka], we asked Facebook how many Sinhalese-speaking moderators they’d hired. The company said only that they’d made progress. Skeptical, Amanda scoured employment websites in nearby countries. She found a listing, in India, for work moderating an unnamed platform in Sinhalese. She called the outsourcing firm through a translator, asking if the job was for Facebook. The recruiter said that it was. They had twenty-five Sinhalese openings, every one unfilled since June 2017 — nine long months earlier. Facebook’s “progress” had been a lie. (Fisher Reference Fisher2022) (p. 177)

Much has been written about moderation in Bergen (Reference Bergen2022), Fisher (Reference Fisher2022), and elsewhere.Footnote ca , Footnote cb Moderation is unlikely to work, given the lack of incentives [underlining added]:

With little incentive for the social media giants to confront the human cost to their empires—a cost borne by everyone else, like a town downstream from a factory pumping toxic sludge into its communal well—it would be up to dozens of alarmed outsiders and Silicon Valley defectors to do it for them. (Fisher Reference Fisher2022) (pp. 11–12)

Zuckerberg posted a blog on moderationFootnote cc and reward curves. Engagement (and profits) increase as content comes closer and closer to the line of acceptability, but if content crosses the line, then there will be no engagement after it is censored.

Interestingly, there is less toxicity in China, perhaps because of differences in reward curves. The penalties can be severe in China for coming close to the line, and there is more uncertainty about where the line is. In California, liability lawsuits have been effective in convincing electric companies to prevent forest fires.Footnote cd Similar methods might convince social media companies to address toxicity. Zuckerberg’s blog mentions many suggestions, but not increases in penalties and/or liabilities.
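The reward-curve argument can be made concrete with a toy model. The sketch below is illustrative only: the exponential engagement curve, the normally distributed line of acceptability, and the names `engagement`, `expected_reward`, and `best_position` are assumptions introduced here, not taken from Zuckerberg's post or from Fisher. It compares two regimes: one where crossing a known line merely zeroes out engagement, and one where the line is uncertain and crossing it carries a penalty.

```python
import math

def engagement(x: float) -> float:
    """Assumed engagement for content at position x, where 1.0 is the line of acceptability."""
    return math.exp(3.0 * x)  # assumed shape: rises steadily as content nears the line

def expected_reward(x: float, line_sd: float = 0.0, penalty: float = 0.0) -> float:
    """Expected reward when the line sits at 1.0 on average, with uncertainty line_sd.

    Content that crosses the line is removed (no engagement) and costs `penalty`.
    """
    if line_sd == 0.0:
        p_cross = 1.0 if x >= 1.0 else 0.0
    else:
        # P(line <= x) for a normally distributed line (an assumption of this sketch)
        p_cross = 0.5 * (1.0 + math.erf((x - 1.0) / (line_sd * math.sqrt(2.0))))
    return (1.0 - p_cross) * engagement(x) - p_cross * penalty

def best_position(line_sd: float = 0.0, penalty: float = 0.0) -> float:
    """Grid search over positions 0.00 .. 0.99 for the highest expected reward."""
    grid = [i / 100 for i in range(100)]
    return max(grid, key=lambda x: expected_reward(x, line_sd, penalty))

print(best_position())                            # known line, no penalty
print(best_position(line_sd=0.2, penalty=100.0))  # uncertain line, large penalty
```

Under these assumptions, the first call selects the grid point closest to the line, while the second selects a position well short of it. This is the sense in which penalties and uncertainty could change the reward curve.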

5.1. Jacob, a whistleblower

Even if Facebook had been able to hire enough moderators to keep up with better-resourced profit centers pumping out toxic sludge, moderation would remain an impossible task (Fisher Reference Fisher2022) (pp. 4–6) [underlining added]:

At the other end of the world, a young man I’ll call Jacob, a contractor…, had formed much the same suspicions as my own. He had raised every alarm he could. His bosses had listened with concern, he said, even sympathy. They’d seen the same things he had. Something in the product they oversaw was going dangerously wrong…

Jacob recorded his team’s findings and concerns to send up the chain. Months passed. The rise in online extremism only worsened.

Jacob first reached me in early 2018… Facebook, on learning what I’d acquired, invited me to their sleek headquarters, offering… corporate policymakers available to talk.

5.2. I know it when I see it

Moderators are supposed to follow written rules. It is inevitable that rules become more and more complicated over time.Footnote ce It might be an impossible task to define rules to cover all imaginable cases across languages, countries, and cultures. The US Supreme Court tried to define obscenity but eventually ended up with the famous non-definition: I know it when I see it.Footnote cf

Context often matters. Innocent videos can become not-so-innocent when seen by a different audience from a different perspective. For example, footnote r describes some examples of pedophiles taking advantage of innocent videos of children. Given this reality, it may not be possible for moderators to know it when they see it.Footnote cg

5.3. Twitter is perhaps more open to moderation

According to Fisher (Reference Fisher2022) pp. 219–220 and other sources,Footnote ch there was a time when Twitter may have been relatively open to addressing toxicity, even if doing so could have led to a reduction in engagement/profits [underlining added]:

At Twitter, Dorsey… was shifting toward… deeper changes… that the Valley had long resisted… instead of turbocharging its algorithms or retooling the platform to surface argument and emotion, as YouTube and Facebook had done…, Dorsey announced… social media was toxic… The company… would reengineer its systems to promote “healthy” conversations rather than engaging ones.

Unfortunately, the effort failed.Footnote ci Twitter is smaller than Facebook and YouTube and less profitable, perhaps because Twitter is less committed to the business plan of maximizing engagement (and toxicity). There is less discussion of Twitter in Fisher (Reference Fisher2022): there are 500 mentions of Facebook, 465 mentions of YouTube, and just 169 mentions of Twitter.

It is unclear what will happen to Twitter after the recent acquisition. There have been suggestions that it needs to think more about its long-term strategy:

I don’t think Elon [Musk] has a plan for: this should be a nicer place to be. Because what you need to do is to go from 229M monetizable daily users to 2B. Footnote cj

It would be nice if Twitter was a nice place to be. That seems unlikely to happen, especially given recent layoffs (including people working on moderation),Footnote ck and changes to user verification policies.Footnote cl , Footnote cm , Footnote cn

6. Incentives

The problem is that trafficking in misinformation is so insanely profitable.Footnote co , Footnote cp We cannot expect social media companies to regulate themselves.Footnote cq Most social media companies have an obligation to maximize shareholder value, under normal assumptions.Footnote cr It is easier for nonprofits such as Wikipedia and ScratchFootnote cs to address toxicity because nonprofits are not expected to maximize shareholder value.

Competition is forcing a race to the bottom, where everyone has to do the wrong thing. If one company decides to be generous and do the right thing, they will lose out to a competitor that is less generous.Footnote ct

In an unintended 2015 test of this [race to the bottom], Ellen Pao, still Reddit’s chief, tried something unprecedented: rather than promote superusers, Reddit would ban the most toxic of them. Out of tens of millions of users, her team concluded, only about 15,000, all hyperactive, drove much of the hateful content. Expelling them, Pao reasoned, might change Reddit as a whole. She was right, an outside analysis found. With the elimination of this minuscule percentage of users, hate speech overall dropped an astounding 80 percent among those who remained. Millions of people’s behavior had shifted overnight. It was a rare success in combating a problem that would only deepen on other, larger platforms, which did not follow Reddit’s lead. They had no interest in suppressing their most active users, much less in acknowledging that there might be such a thing as too much time online. Fisher (Reference Fisher2022) p. 189. [underlining added]

This race to the bottom is described in a segment on the CBS television show, 60 Minutes, titled “Brain Hacking.”Footnote cu This segment leads with Tristan Harris,Footnote cv who makes similar points in a TED Talk,Footnote cw at StanfordFootnote cx and elsewhere.Footnote cy

If social media companies do not want to reduce toxicity, then it is unlikely to happen. Asking social media companies to reduce toxicity is like the joke about therapists and light bulbs:

Question: How many therapists does it take to change a light bulb?

Answer: Just one — but the light bulb has to really want to change. Footnote cz

The profits are so large that going cold turkey could have serious consequences not only for the companies but also for the national (and international) economy. Of the top 10 stocks by market cap, more than half are technology stocks, and some of their core businesses involve trafficking in misinformation.

6.1. Non-solutions

A number of solutions are unlikely to work given these incentives:

1. Toxicity classifiers (as discussed in Section 3)

2. Just say noFootnote da

Suppose we were given a magic toxicity classifier that just worked. Given these incentives, the social media company should use the classifier in the reverse direction. That is, rather than use the classifier to minimize toxicity, the social media company should maximize toxicity (in order to maximize profits).
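The point about direction can be illustrated with a few lines of code. This is a minimal sketch, assuming (as the argument above does) that toxicity drives engagement. The function `rank_feed` and the word-list scorer `toy_toxicity` are hypothetical stand-ins introduced here for illustration, not a real classifier or any platform's ranking code. The same scores serve both goals; only the sort direction changes.

```python
from typing import Callable, List

def rank_feed(posts: List[str], toxicity: Callable[[str], float],
              maximize: bool = False) -> List[str]:
    """Order posts by toxicity score: least toxic first, or most toxic first if maximize is True."""
    return sorted(posts, key=toxicity, reverse=maximize)

# Stand-in scorer for illustration only; a real system would call a trained classifier.
HOSTILE_WORDS = {"idiot", "hate", "liar"}

def toy_toxicity(post: str) -> float:
    """Fraction of words in the post that appear in the (hypothetical) hostile word list."""
    words = post.lower().split()
    return sum(w.strip(".,!?") in HOSTILE_WORDS for w in words) / max(len(words), 1)

posts = [
    "Lovely weather today.",
    "You are a liar!",
    "I hate you, idiot liar!",
]
healthy_feed = rank_feed(posts, toy_toxicity)                  # least toxic first
engaging_feed = rank_feed(posts, toy_toxicity, maximize=True)  # most toxic first
```

The classifier itself is neutral; the incentive determines which of the two feeds gets shipped.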

It is also unreasonable to ask companies to cut off their main source of revenue, just as we cannot expect tobacco companies to sell fewer cigarettes. The CBS television show, 60 Minutes, ran similar stories on whistle-blowers in tobacco companiesFootnote db and social media.Footnote dc In both cases, the companies appeared to know more than they were willing to share about risks to public health and public safety.

6.2. It is easier to say no to noncore businesses

It is hard for a company to shut down its core business. Thus, it may be difficult for Facebook and YouTube to shut down their core business in social media. Microsoft, on the other hand, is different, because Microsoft is not a social media company. Hany FaridFootnote dd explained the difference this way:

“YouTube is the worst,” he said. Of what he considered the four leading web companies—Google/YouTube, Facebook, Twitter, and Microsoft—the best at managing what he’d called “the poison” was, he believed, Microsoft. “And it makes sense, right? It’s not a social media company,” he said. “But YouTube is the worst on these issues” Fisher (Reference Fisher2022), p. 198.

China also differentiates Microsoft from the others. Of the four companies Farid called out, Microsoft is the only one that is not blocked in China. Many apps are blocked in many countries,Footnote de , Footnote df though there are some interesting exceptions.Footnote dg

7. History

7.1. Precedents and unsuccessful attempts to just say no

There is a long tradition of prioritizing profits ahead of public health and public safety. Consider the Opium Wars and the role of the East India Company and the British Empire in this conflict. According to Imperial twilight: The opium war and the end of China’s last golden age (Platt Reference Platt2018) (p. 393 and note 11 on p. 503), the term “opium wars” was coined by The Times, the conservative paper in England, in a strongly worded editorial. The conservatives were opposed to the opium trade because of the risk to their core businesses in tea and textiles. History remembers the conservatives’ sarcastic name for the conflict, even though the conservatives lost the debate in Parliament.

When the first author worked at AT&T, the company attempted to say no to 976 numbers when it realized that these numbers were being used by iffy businesses in pornography and various scams. AT&T viewed those businesses the way The Times viewed opium: not profitable enough to justify risks to more important core businesses. AT&T also valued its brand and would not risk it for short-term gains.

AT&T’s attempt to end 976 numbers involved a change of numbers, as well as a change in tariffs. The new 900 numbers were tariffed as a joint venture, where AT&T was responsible for billing and transport, and the other company was responsible for content. As a joint venture, AT&T could opt out if it did not approve of the business. The old 976 numbers were tariffed like sealed box cars, where AT&T was prohibited from breaking the seal. Even if AT&T knew what was inside those box cars, it was required by the tariff to ship the unpleasant cargo.

Unfortunately, the effort was ineffective. While 900 numbers provided AT&T with a legal right to opt out of iffy businesses, there were so many iffy businesses that AT&T was unable to keep up with the problem. The problem eventually became someone else’s problem when the internet came along and proved to be a superior technology for iffy businesses.Footnote dh

7.2. What happened to Our Idealism?

It is hard to remember these days, but there was a time about a decade ago when most of us thought social media technology would make the world a better place.

I want to remind us of the awe-inspiring power of the Hype Machine to create positive change in our world. But I have to temper that optimism by noting that its sources of positivity are also the sources of the very ills we are trying to avoid… This dual nature makes managing social media difficult. Without a nuanced approach, as we turn up the value, we will unleash the darkness. And as we counter the darkness, we will diminish the value. (Aral Reference Aral2020) (pp. 356–357)

What happened to our optimism?

1. The Arab SpringFootnote di (Howard et al. Reference Howard, Duffy, Freelon, Hussain, Mari and Maziad2011; Fuchs Reference Fuchs2012; Smidi and Shahin Reference Smidi and Shahin2017) was followed by the Arab Winter.Footnote dj , Footnote dk

2. What happened to “Hope and change?” Technology helped elect Obama in 2008 and Trump in 2016.Footnote dl Why was 2016 different from 2008?

3. “Don’t be evil”Footnote dm became less idealistic (“Move fast and break things”),Footnote dn more profit driven (“Tech Rules our Economy”)Footnote do and chaotic (Taplin Reference Taplin2017).

The details are different in each case. Consider Obama’s use of technology in 2008. In that case, it is useful to appreciate how long it took to deploy broadband. We tend to think that the roll-out happened quickly, but actually, it took decades. Providers were connecting about 7M households per year in the United States. About half of the 100M households had broadband in 2008. Since it is cheaper to wire up houses in urban areas, the half with broadband in 2008 overlapped with Obama’s base. By 2016, the roll-out was largely completed, eliminating that advantage.

In addition, and more seriously, many of the root causes in Fisher’s book became important between 2008 and 2016. Trump benefited in 2016 by maximizing engagement and trafficking in misinformation (McNamee Reference McNamee2020). Many of the details behind Trump’s victory involve Cambridge Analytica and data scraped from FacebookFootnote dp , Footnote dq , Footnote dr (Wylie Reference Wylie2019). At first, we thought social media technology would benefit positions we agreed with, but more realistically, these forces favor polarization and extremism (O’Callaghan et al. Reference O’Callaghan, Greene, Conway, Carthy and Cunningham2015; Allcott and Gentzkow Reference Allcott and Gentzkow2017) (Fisher Reference Fisher2022) (p. 152).

More generally, when people (and companies) are young, there are more possibilities for growth (and optimism for the future). But as people and companies grow up, there are fewer opportunities for growth, and more downsides. It is natural for the youth to be anti-establishment (“move fast and break things”), and for the establishment to be more risk averse and more realistic and less idealistic.

The corporation is a legal fiction in which companies are treated like people. But there is more to the analogy than that. Start-up companies are like teenagers. After a while, they become middle-aged, and wish they were young again. Companies eventually become senior citizens. Seniors are not as agile as they used to be. Growth stocks eventually become value stocks. Social media will eventually grow up and become a utility.

8. Constructive suggestions

What can we do about this nightmare? We view the current chaos like the Wild West. Just as that lawlessness did not last long because it was bad for business, so too, in the long run, the current chaos will be displaced by more legitimate online businesses.

What can we do in the short term? Many of the books mentioned above (O’Neil Reference O’Neil2016; Aral Reference Aral2020; Fisher Reference Fisher2022; Bergen Reference Bergen2022) have more to say about the problem than the solution. For an example of how we can be part of the solution,Footnote ds read Chapter 5 of Zucked (McNamee Reference McNamee2020), Mr. Harris and Mr. McNamee Go to Washington. (There is a condensed version of McNamee’s book on Democracy Now!)Footnote dt , Footnote du

As an early investor in Facebook, Roger McNamee has connections to the Facebook leadership. He tried to use those connections to raise awareness within Facebook. When that failed, he published an op-edFootnote dv and worked with Tristan Harris on the TED Talk mentioned in footnote cw. When those efforts failed to raise enough awareness to make meaningful progress, Harris and McNamee went to Washington, and were more successful there, as described in Chapter 6 of McNamee’s book, Congress Gets Serious.

McNamee seems to be having more success in government than with the Facebook leadership. His efforts may or may not succeed, but either way, we respect his persistence, as well as his emphasis on constructive solutions. He wrote a piece for law-makers with a title that emphasizes fixes: How to Fix Facebook—Before It Fixes Us.Footnote dw This piece was not only effective with law-makers, but it also reached Soros, who gave a speech at Davos along similar lines.Footnote dx , Footnote dy The text of Soros’s remarks can be found in Appendix 2 of McNamee (Reference McNamee2020). Facebook tends to ignore such criticisms, but they are not ignoring Soros.Footnote dz

McNamee (p. 231) describes a simple project involving word associations. This project could be a good exercise for students in our classes. He suggests that Facebook’s brand suffered since the 2016 election and provides evidence involving associations with pejorative words such as: scandal, breach, investigation, fake, Russian, alleged, critical, false, leaked, racist. It should be relatively easy for students in our classes to use word associations and deep nets (BERT) to track sentiment toward various brands as a function of time.
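As a starting point for such an exercise, the sketch below tracks how often a brand name co-occurs with the pejorative words listed above, year by year. It is a deliberately simple counting baseline, not the BERT-based approach; a deep net could replace the word-list test later. The function name `pejorative_rate_by_year`, the brand "Acme", and the toy headlines are all made up for illustration and are not real data.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Pejorative words taken from the list above (lowercased).
PEJORATIVE = {"scandal", "breach", "investigation", "fake", "russian",
              "alleged", "critical", "false", "leaked", "racist"}

def pejorative_rate_by_year(docs: Iterable[Tuple[int, str]], brand: str) -> Dict[int, float]:
    """For each year, the share of brand-mentioning texts that also contain a pejorative word."""
    mentions = defaultdict(int)
    hits = defaultdict(int)
    for year, text in docs:
        tokens = set(re.findall(r"[a-z]+", text.lower()))
        if brand.lower() in tokens:
            mentions[year] += 1
            if tokens & PEJORATIVE:
                hits[year] += 1
    return {year: hits[year] / mentions[year] for year in sorted(mentions)}

# Made-up placeholder headlines about a fictional brand; students would substitute a real corpus.
toy_docs = [
    (2015, "Acme launches new photo feature"),
    (2015, "Acme reports strong growth"),
    (2017, "Acme faces investigation over data breach"),
    (2017, "Acme denies fake accounts scandal"),
    (2017, "Acme opens new office"),
    (2017, "Weather is nice this week"),  # no brand mention, so it is ignored
]
rates = pejorative_rate_by_year(toy_docs, "Acme")
print(rates)
```

A rising rate over time would be consistent with the kind of brand damage McNamee describes. A more careful study would need a representative corpus and a baseline for comparison brands.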

8.1. Pushback from many perspectives

As mentioned above, we are pleasantly surprised to see so much pushback from so many directions: governments, users, content providers, academics, consumer groups, advertisers and employees. Given the high stakes, as well as the challenges, we will need all the help we can get from as many different perspectives as possible:

1. Pushback from government(s): (See Section 8.3) Regulation, anti-trust, bans, taxes, fines, liability, data privacy, educationFootnote ea

2. Pushback from users and their friends and family (including parents, children and peers)Footnote eb , Footnote ec (Allcott et al. Reference Allcott, Braghieri, Eichmeyer and Gentzkow2020)

3. Pushback from investorsFootnote ed (McNamee Reference McNamee2020)

4. Pushback from content providersFootnote ee

5. Pushback from mediaFootnote ef (Bergen Reference Bergen2022; Fisher Reference Fisher2022)

6. Pushback from academics (Aral Reference Aral2020)

7. Pushback from consumer groups,Footnote eg , Footnote eh activists,Footnote ei , Footnote ej and consultants.Footnote ek , Footnote el

8. Pushback from advertisersFootnote em (Fisher Reference Fisher2022) (p. 311)

9. Pushback from employees (see Section 8.2)

10. Economic auctions: Google’s Ad Auction finds an equilibrium satisfying the needs of three parties:Footnote en readers, writers, and advertisers. YouTube’s “audience first” strategyFootnote eo prioritizes the audience ahead of other parties. Perhaps they would have more success with an auction that addresses the needs of more parties. The market maker should not favor one party over the others: You’re Not the Customer; You’re the Product.Footnote ep

There is considerable discussion of many of the suggestions above, though so far, there are relatively few examples that are as successful as face recognition. Following concerns in Buolamwini and Gebru (Reference Buolamwini, Gebru, Friedler and Wilson2018), there are limits on the use of face recognition technology involving a combination of legislationFootnote eq , Footnote er and voluntary actions.Footnote es , Footnote et

8.2. Pushback from employees

Pushback from employees is already happening and may be more effective than most of the suggestions above.

Employees are writing books (Martinez Reference Martinez2018) and participating in documentaries such as The Social Dilemma, mentioned in Section 2.2. Tristan Harris, for example, has been mentioned several times above.

There are a number of quotes from employees in Fisher (Reference Fisher2022):

“Can we get some courage and actual action from leadership in response to this behavior?” a Facebook employee wrote on the company’s internal message board as the riot unfolded. “Your silence is disappointing at the least and criminal at worst.” (Fisher Reference Fisher2022) (p. 325)

Polls of employees confirm this sentiment:Footnote eu

“When I joined Facebook in 2016, my mom was so proud of me,” a former Facebook product manager told Wired magazine. “I could walk around with my Facebook backpack all over the world and people would stop and say, ‘It’s so cool that you worked for Facebook.’ That’s not the case anymore.” She added, “It made it hard to go home for Thanksgiving.” Footnote ev (Fisher Reference Fisher2022) (p. 248)

There have been a number of other examples of pushback from employees in the news recently, starting with Uber.Footnote ew More recently, Facebook employees wrote an open letter to Zuckerberg.Footnote ex Even more recently, Google has been in the news.Footnote ey , Footnote ez , Footnote fa , Footnote fb , Footnote fc , Footnote fd

8.3. Regulation

There must be a way to make it less insanely profitable to traffic in misinformation. Regulators should “Follow the money”Footnote fe and “take away the punch bowl.”Footnote ff The risks to public health, public safety, and national security are too great (Oates Reference Oates2020).

Regulation can come in many forms: anti-trust, censorship, bans, rules about data privacy, taxes, and liabilities. Europe has been leading the way on regulation (Section 8.3.1), especially when compared to America (Section 8.3.2).

8.3.1. Regulation in the European Union

Regulation is taken very seriously in Europe. As mentioned above, there are strong data privacy laws such as the GDPR,Footnote fg and companies have been fined.Footnote fh There are also rules to combat fake news, hate speech, and misinformation such as the Network Enforcement Act (Netzwerkdurchsetzungsgesetz), known colloquially as the Facebook Act.Footnote fi , Footnote fj Even stronger regulation is under discussion.Footnote fk Many people are involved in these discussions, including members of our field.

8.3.2. Less regulation in the United States

There is less regulation in the United States than in Europe [underlining added]:

Some agencies, such as the Food and Drug Administration or the Department of Transportation, have been working for years to incorporate AI considerations into their regulatory regimes. In late 2020, the Trump Administration’s Office of Management and Budget encouraged agencies to consider what regulatory steps might be necessary for AI, although it generally urged a light touch. Footnote fl

There is a link from the final phrase, light touch, to a strong criticism of the lack of regulation of AI in the United States under the Trump administration. This criticism ends with:

Yet there is a real risk that this document becomes a force for maintaining the status quo, as opposed to addressing serious AI harms. Footnote fm

In the United States, there has been more regulation of health care and energy than Artificial Intelligence (Goralski and Górniak-Kocikowska Reference Goralski and Górniak-Kocikowska2022; Munoz and Maurya Reference Munoz and Maurya2022), though there is an effort to increase regulation of AI under the Biden administration.Footnote fn There are also efforts at the state level to regulate AI,Footnote fo privacy,Footnote fp self-driving cars,Footnote fq and facial recognition and biometrics.Footnote fr

Congress may take action based on anti-trust considerations:Footnote fs

The effects of this significant and durable market power are costly. The Subcommittee’s series of hearings produced significant evidence that these firms wield their dominance in ways that erode entrepreneurship, degrade Americans’ privacy online, and undermine the vibrancy of the free and diverse press. The result is less innovation, fewer choices for consumers, and a weakened democracy.

There are some strong advocates of anti-trust.Footnote ft That said, even the threat of anti-trust action can be effective.Footnote fu On the other hand, anti-trust will take time, as pointed out in Chapter 12 of Aral (Reference Aral2020).

Realistically, it is unlikely that regulation will succeed in America as long as one party or the other believes that the status quo is in its best interest. As discussed in Section 4.3, trafficking in misinformation enables minority rule.Footnote fv With help from social media, it is likely that all three branches of the US government (executive branch, Congress, and courts) will be captured by less than 50% of the voters.Footnote fw

8.3.3. Data privacy

There is considerable discussion of data privacy in McNamee (Reference McNamee2020). Doctors and lawyers are not allowed to sell personal data (p. 226). So too, social media companies should be liable for inappropriate disclosures, as would be expected in many industries: medicine, banking, etc. McNamee advocates for a fiduciary rule; companies would be more careful if consumers had the right to sue.

McNamee also advises social media companies to cooperate with privacy laws, but that is unlikely to happen [underlining added]:

the target industry is usually smart to embrace the process early, cooperate, and try to satisfy the political needs of policy makers before the price gets too high. For Facebook and Google, the first “offer” was Europe’s General Data Protection Regulation (GDPR). Had they embraced it fully, their political and reputational problems in Europe would have been reduced dramatically, if not eliminated altogether. For reasons I cannot understand, both companies have done the bare minimum to comply with the letter of the regulation, while blatantly violating the spirit of it. (McNamee Reference McNamee2020) (p. 221)

The American companies should show more respect to the regulators in Europe. As discussed in Section 8.3.1, there have already been a few fines, and there will be more.

Much has been written about data privacy in different parts of the world. It is said that there is relatively little privacy in China, but the PIPLFootnote fx in China is similar to the GDPR in Europe and the CCPAFootnote fy in California. Some companies and some countries are more careful with data than others. The first author has worked for a number of companies. In his experience, his employer in China (Baidu) is more careful than his employers in America. The penalties for inappropriate disclosures can be severe in China. The American government seems to be able to get what it wants.Footnote fz Other governments may be similar. It is not clear how users can defend themselves from a government or a sophisticated adversary.Footnote ga One could avoid the use of cell phones, and connections to the internet, as is standard practice in a SCIF,Footnote gb but it is hard to imagine that most of us would be willing to do that.

9. Conclusions: Trafficking in misinformation is insanely profitable in the short term, but bad for business in the long term

We have discussed Risks 1.0 (fairness and bias) and Risks 2.0 (addictive, dangerous, and deadly). The combination of machine learning and social media has created a Frankenstein Monster that uses persuasive technology, the illusory truth effect, Pavlovian conditioning, and Skinner’s intermittent variable reinforcement to take advantage of human weaknesses and biases. We cannot put our phones down, even though we know it is bad for us and bad for society. The result is insanely profitable, at least in the short term.

Much of the trouble mentioned above is caused by the market maker and the business strategy, not the suppliers and consumers of misinformation, or even malicious adversaries. We should not blame consumers of misinformation for their gullibility, or suppliers of misinformation (including adversaries) for taking advantage of the opportunities. Without the market makers creating a market for misinformation, and fanning the flames, there would be much less toxicity. Regulators can help by making it less insanely profitable to traffic in misinformation. “Follow the money” and “take away the punch bowl.”

There has been considerable discussion of these issues in our community. There are a number of toxicity classifiers on HuggingFace. Unfortunately, given short-term incentives to maximize shareholder value (and maximize engagement), it is unlikely that such classifiers could be effective without first convincing the social media companies that it is in their interest to reduce toxicity. Moreover, as discussed in Section 3.1, there is a risk that work on Risks 1.0 (toxicity classifiers) could be seen as an attempt to pivot away from Risks 2.0 (addictive, dangerous, and deadly). We need to address both Risks 1.0 and Risks 2.0.

We are more optimistic about the long term. Assuming that markets are efficient, rational, and sane, at least in the long term at steady state, then insane profits cannot continue for long. There are already hints that the short-term business case may be faltering at Twitter (as discussed in Section 5.3) and at Facebook.Footnote gc , Footnote gd , Footnote ge , Footnote gf , Footnote gg , Footnote gh , Footnote gi , Footnote gj , Footnote gk You know it must be bad for social media companies when The Late Show with Stephen Colbert is making jokes at their expense.Footnote gl The telephone monopoly was broken up soon after national television made jokes at its expense.Footnote gm As discussed in Section 7.1, AT&T valued its brand and would not risk the brand for short-term gains. Social media companies should be more risk-averse with their brand.

Just as the lawlessness of the Wild West did not last long, this too shall pass. The current chaos is not good for business (and many other parties). We anticipate a sequel to “How the West Was Won”Footnote gn entitled “How the Web Was Won,” giving a whole new meaning to: WWW.