Filtered partial differential equations: a robust surrogate constraint in physics-informed deep learning framework

Published online by Cambridge University Press: 15 November 2024

Abstract

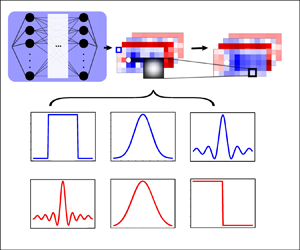

Embedding physical knowledge into neural network (NN) training has attracted wide interest. However, when applied to complex real-world problems, most existing methods still rely strongly on the quantity and quality of the observation data. Furthermore, NNs often struggle to converge when the solution of the governing equation is very complex. Inspired by large eddy simulation in computational fluid dynamics, we propose an improved method based on filtering. We analyse the causes of the difficulties in physics-informed machine learning and propose a surrogate constraint for the original physical equations, the filtered partial differential equation (FPDE), to reduce the influence of noisy and sparse observation data. In the noise and sparsity experiments, the proposed FPDE models (those optimized with FPDE constraints) are more robust than the conventional PDE models. The experiments demonstrate that the FPDE model can obtain a solution of the same quality as the baseline with 100 % higher noise and only 12 % of the baseline's observation data. In addition, two sets of real measurement data are used to show the improvement that FPDE brings in real cases. The final results show that the FPDE model still gives more physically reasonable solutions when the equations are incomplete and when the data are extremely sparse and noisy. The proposed FPDE constraint helps to merge real-world experimental data into physics-informed training, and it works effectively in two real-world experiments: simulating cell movement in scratches and blood velocity in vessels.
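The filtering operation that the abstract borrows from large eddy simulation can be illustrated on its own. The sketch below is not the authors' implementation: it assumes a periodic one-dimensional field, a Gaussian filter kernel and synthetic Gaussian noise, and the helper names `gaussian_kernel` and `filter_field` are hypothetical. It shows only why a spatial filter damps observation noise while leaving a smooth signal almost unchanged; in the paper the filter is applied to the governing equations to form the surrogate constraint, which is not reproduced here.

```python
import numpy as np


def gaussian_kernel(sigma_pts: float, half_width: int) -> np.ndarray:
    """Discrete Gaussian filter kernel, normalised so the weights sum to 1."""
    offsets = np.arange(-half_width, half_width + 1)
    weights = np.exp(-0.5 * (offsets / sigma_pts) ** 2)
    return weights / weights.sum()


def filter_field(u: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Filter a periodic 1-D field by circular convolution with the kernel."""
    half_width = len(kernel) // 2
    # Wrap the ends so the filter sees a periodic domain.
    padded = np.concatenate([u[-half_width:], u, u[:half_width]])
    return np.convolve(padded, kernel, mode="valid")


# Synthetic example (assumed, not taken from the paper): a smooth signal
# observed with additive Gaussian noise.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 2.0 * np.pi, 256, endpoint=False)
u_true = np.sin(x)
u_noisy = u_true + 0.3 * rng.standard_normal(x.size)

kernel = gaussian_kernel(sigma_pts=4.0, half_width=12)
u_filtered = filter_field(u_noisy, kernel)

rms_raw = np.sqrt(np.mean((u_noisy - u_true) ** 2))
rms_filtered = np.sqrt(np.mean((u_filtered - u_true) ** 2))
print(f"RMS error raw: {rms_raw:.3f}, filtered: {rms_filtered:.3f}")
```

The filter width (`sigma_pts`) sets the trade-off: a wider kernel removes more noise but also attenuates more of the small-scale structure in the true field.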

- Type: JFM Papers

- Copyright: © The Author(s), 2024. Published by Cambridge University Press
