1. Introduction

The physical realizations of sounds are often conditioned by the surrounding segmental context in connected speech. For instance, it is typical in English for coronals to be perceived as assimilated to the following consonant or deleted: /t/ in must be is not perceived and the phrase is thus heard as [mʌsbi] (Brown 1977). Extensive studies within the framework of Articulatory Phonology have discovered that the occurrence of such coarticulation is due to substantial overlap of adjacent gestures in speech, and that the gesture that is not perceptually available is in fact not deleted but hidden by adjacent gestures (e.g., Browman and Goldstein 1990, Barry 1991, Nolan 1992, Recasens et al. 1993, Zsiga 1994, Byrd 1996a, Gafos 2002, Davidson and Stone 2003). Many non-native speech errors are thus assumed to arise from failing to apply the appropriate degree of overlap in the target language (Solé 1997; Davidson 2003, 2006, 2010; Zsiga 2003, 2011; Colantoni and Steele 2008). As part of the ongoing process of understanding speech production, the current study examines how three factors (i.e., place of articulation, frequency, and speech rate) affect the degree of overlap in both native (L1) and non-native (L2) speech. In particular, I investigate surface forms of stop-stop coarticulation spanning across words (C1#C2) produced by English and Mandarin speakers.

The reasons for choosing the two languages are as follows. Mandarin has both unaspirated (/p, t, k/) and aspirated stops (/pʰ, tʰ, kʰ/) (Cheng 1966). One major difference between Mandarin and English is that all Mandarin stops are voiceless, while English stops may be voiceless or voiced, although the six stops in each language are sometimes transcribed with the same symbols [p, b, t, d, g, k]. Another major difference is that Mandarin has strong phonotactic constraints on syllable shape, categorically prohibiting word-final stops and stop-stop clusters (Cheng 1966, Lin 2001), while English is well known for allowing heavy syllables, with up to three consonants in onset position and four in coda position (Harris 1994, Shockey 2008). In addition, stressed segments in English usually show fewer coarticulatory effects than unstressed segments, which display more lenited characteristics (e.g., reduced duration, deletion) (Öhman 1967, Crystal 1976, Avery and Ehrlich 1992, Cummins and Port 1998). The duration of each vowel or syllable in Mandarin, however, is relatively stable, so that each syllable receives roughly equal timing across a sentence (Grabe and Low 2002, Lin and Wang 2007, Mok and Dellwo 2008). There is no distinctive vowel reduction or cross-word coarticulation in Mandarin, with few exceptions (e.g., r-retroflexion) (Cheng 1966). Due to these differences between the two languages, Mandarin speakers are found to have substantial difficulty in acquiring English clusters (e.g., Anderson 1987, Weinberger 1987, Broselow and Finer 1991, Hansen 2001, Chen and Chung 2008).

Most studies investigate coda production and only a few have dealt with English cross-word coarticulation produced by Mandarin learners (e.g., Tajima et al. 1997, Chen and Chung 2008). Chen and Chung discovered that the lower the English proficiency of the Mandarin learners, the longer the duration of the consonant clusters that they produced. However, it remains poorly understood how cross-word coordination is implemented in English by Mandarin speakers. Moreover, there is extensive evidence that three factors systematically determine the overlap degree in native speech: place of articulation, frequency, and speech rate. This study aims to combine these factors concurrently to develop a more comprehensive account of what affects consonantal coarticulation in both L1 and L2 speech.

2. English cluster coarticulation

This section discusses English cluster coarticulation, first in native speech and then in non-native speech.

2.1 English cluster coarticulation in native speech

Previous research findings suggest that English consonant clusters have approximately 20% to 60% overlap (Catford 1977, Barry 1991, Zsiga 1994, Byrd 1996a). Three primary factors that have been shown to have systematic effects on gestural overlap of English clusters are discussed in detail below.

The most consistent result involves the place of articulation of C1 and C2 in stop-stop sequences. Earlier instrumental studies have discovered that in most sequences containing two adjacent English stops, either within words or across words (VC1C2V or VC1#C2V), the closure for C1 is not released until the closure for C2 is formed (Catford 1977, Hardcastle and Roach 1979, Ladefoged 2001). Hardcastle and Roach (1979) report that in only 32 cases out of 272 was C1 released before the onset of the second closure. In English homorganic clusters, the lack of a release results in the whole sequence being interpreted as a long consonant rather than as a sequence of two segments, involving “a prolongation of the articulatory posture” (Catford 1977: 210). In heterorganic clusters, the lack of a release is more evident when C2 is more anterior than C1 than vice versa: fewer acoustic releases are produced in back-front clusters (e.g., coronal-labial, dorsal-coronal, or dorsal-labial) than in front-back sequences (e.g., labial-coronal, coronal-dorsal, or labial-dorsal) (Henderson and Repp 1982, Byrd 1996a, Zsiga 2000, Davidson 2011; see different results in Ghosh and Narayanan 2009). For example, Henderson and Repp found that 58% of C1s in [-C1C2-] (e.g., napkin, abdomen) were released, and release percentages were strongly conditioned by the place of articulation of C2 in the sequence. An average of 16.5% of C1s followed by labial C2s were released, compared to 70% of those followed by alveolar C2s and 87.5% of those followed by velar C2s. That is, C1 was released more often when followed by a “back” consonant. Similar results were reported in Zsiga (2000: 78), who concluded that in English, differences in place of articulation “seem to account for release patterns in a straightforward way: clusters are more likely to have an audible release if C1 is further forward than C2”.
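The three place-order categories referred to in this literature can be made concrete with a small sketch. The Python below is illustrative only: the front-to-back ranking (labial, coronal, dorsal) follows the examples cited above, while the names PLACE, RANK, and classify_cluster are hypothetical and do not come from any of the cited studies.

```python
# Place of articulation for the six English stops.
PLACE = {
    "p": "labial", "b": "labial",
    "t": "coronal", "d": "coronal",
    "k": "dorsal", "g": "dorsal",
}

# Assumed front-to-back ordering of the vocal tract (lower = more anterior).
RANK = {"labial": 0, "coronal": 1, "dorsal": 2}


def classify_cluster(c1: str, c2: str) -> str:
    """Classify a C1#C2 stop-stop sequence by the order of its places."""
    r1, r2 = RANK[PLACE[c1]], RANK[PLACE[c2]]
    if r1 == r2:
        return "homorganic"
    # C1 more anterior than C2 is front-back; the reverse is back-front.
    return "front-back" if r1 < r2 else "back-front"


def count_by_category() -> dict:
    """Count how the 36 ordered stop-stop pairs fall into each category."""
    counts = {"homorganic": 0, "front-back": 0, "back-front": 0}
    for c1 in PLACE:
        for c2 in PLACE:
            counts[classify_cluster(c1, c2)] += 1
    return counts
```

Under these assumptions, a sequence such as /t#k/ is classified as front-back and /k#t/ as back-front, matching the examples from Hardcastle and Roach discussed in this section.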

In addition to affecting release patterns, this place order effect (POE) determines the degree of overlap. Hardcastle and Roach (1979) found that the interval between the onset of the C1 closure and the onset of the C2 closure was significantly shorter for /Vt#kV/ compared to /Vk#tV/ sequences, suggesting more overlap in /t#k/ (also see Henderson and Repp 1982, Barry 1991, Zsiga 1994). Byrd (1996a) discovered that the sequence overlap and C1 overlap were both much greater for [d#g] than for [g#d]. C2 also started much later relative to C1 for [g#d] than for [d#g], suggesting greater latency in [g#d]. The POE (more releases and more overlap in front-back clusters than in sequences of reversed order) is also evident in other languages (for Taiwanese, see Peng 1996; for Georgian, see Chitoran et al. 2002; for Russian, see Kochetov et al. 2007; for Greek, see Yip 2013).

Different syllable positions might result in different sequence timing and thus confound the POE. Existing analyses have demonstrated that English stop sequences have more timing variants and allow more overlap in codas than in onsets (Byrd 1996b, Browman and Goldstein 2000, Nam and Saltzman 2003, Byrd and Choi 2010). Speakers also display a greater amount of overlap across word boundaries than within (Sproat and Fujimura 1993, Byrd 1996b, Fougeron and Keating 1997, Zsiga 2000). However, more overlap across words does not mean the POE would be stronger in such positions. Davidson and Roon (2008) hypothesized that both consonants in C1#C2 are longer than their counterparts in #C1C2 because of the intervening word boundary. Their analysis confirmed that both monolingual Russian and bilingual Russian-English speakers produced #C1əC2 with the longest duration, followed by C1#C2 sequences, then by #C1C2 sequences. The follow-up study of Davidson (2011) on English found that neither English word-final clusters (CC#) nor clusters across words (C#C) allow more overlap in back-front clusters than in front-back clusters; only the word-internal clusters ([-CC-]) did. She posited that a coordination relation across words is not stable enough for a significant place order effect to emerge.

The second variable that could affect sequence timing is word frequency. A word frequency effect on English word reduction is attested in previous research, and these studies agree in finding that more frequent words have a variety of phonetically reduced characteristics (e.g., shorter durations, reduced vowels, deleted codas, more tapping, palatalization, etc.; see Fosler-Lussier and Morgan 1999, Gregory et al. 1999, Bybee 2000, Jurafsky et al. 2001, Bell et al. 2009). Bybee found that the schwa in frequent words like memory is more likely to delete than the schwa in the nearly identical, but infrequent, mammary. Bybee's data also showed a significant relationship of word frequency with rate of /t, d/ deletion. The most frequent word told had /d/ deleted in 68% of tokens, while the least frequent meant never had the /t/ deleted. Comparably, the results in Jurafsky et al. (2001) indicated that /t, d/ are deleted more often in high-frequency words than in low-frequency words and that, if they are not deleted, their durations are significantly shorter in high-frequency than in low-frequency words. As much of the research deals with word-final deletion, there is a lack of research on how word frequency affects English cluster coordination (but see Yanagawa 2006, Davidson 2011). A notable exception is the study of Bush (2001), showing that palatalization of /tj/ and /dj/ sequences in English conversation occurs only between pairs of words that occur together most frequently (e.g., did you, don't you, would you).

One question that needs addressing is this: does frequency affect degree of overlap in consonant sequences, and if so, how? More specifically, to what extent are sound patterns like the POE evident in high-frequency versus low-frequency words? One way to answer this question is to test speakers’ production of non-words. This idea has its root in research that claims that speakers possess implicit knowledge, and that this internalized working system would be able to select the right form in new cases (Berko 1958, Ernestus and Baayen 2003, Pater and Tessier 2006, Wilson 2006). The application of native phonological knowledge, however, may be incomplete (see Fleischhacker 2005, Zuraw 2007, Zhang and Lai 2010, Zhang et al. 2011).

Another variable that has been shown to affect consonantal coarticulation is speech rate (see Munhall and Löfqvist 1992, Byrd and Tan 1996, Tjaden and Weismer 1998, Fosler-Lussier and Morgan 1999, Jurafsky et al. 2001). These studies indicate that greater fluency is associated with segment shortening, such that the faster the speech rate, the more overlap occurs. For example, Munhall and Löfqvist found that glottal openings were blended into one single movement at fast rate when producing adjacent alveolar fricatives and alveolar stops. Similarly, Byrd and Tan reported that faster speech was associated with reduced magnitude in C1#C2. Individual consonants were shortened in duration as speaking rate increased, regardless of the place or manner of articulation of the consonants.

Other studies have reported inconsistent results. In the study of Zsiga (1994), comparison of cluster overlap in slow and fast tokens of the same utterance was not indicative of a direct relationship. The interaction of consonant and rate was “rarely significant” (1994:54) (also see Dixit and Flege 1991, Kochetov et al. 2007). The assumption that speaking rate change induces varying amounts of overlap has been further discouraged by a number of studies outlining substantial variability among speakers (Kuehn and Moll 1976, Ostry and Munhall 1985, Allen et al. 2003, Tsao et al. 2006). The study by Shaiman et al. (1995) showed that the timing between the jaw closing and the upper lip lowering gestures of a Vowel + Bilabial Stop sequence became significantly shorter as speakers moved from normal to fast speech. However, this effect was not consistent across speakers. They concluded that how speech rate changes were implemented varied across individual speakers (see Lubker and Gay 1982, Barry 1992, Nolan 1992, Tjaden and Weismer 1998).

Previous research has shown the influence of three factors (i.e., place of articulation, frequency, and speech rate) on consonantal coarticulation in English. A concurrent examination is needed because it remains to be seen, first, whether the POE will show up in cross-word positions in English; second, how frequency (ranging from high- to low- to extremely low-frequency) affects cluster coordination; and third, whether speech rate changes (phrases read in isolation vs. phrases embedded in conversation) affect overlap degree. More importantly, no previous studies have examined lexical frequency in combination with the POE and overlap degree in L1 and L2 speech. The current study remedies the lack of comparable data by testing speakers’ productive extension with non-words in both L1 and L2 speech. In the next section, I discuss studies reporting on L2 production of English clusters.

2.2 English cluster coarticulation in non-native speech

Due to the extensive overlap in English clusters, most L2 learners encounter difficulty in coordinating adjacent gestures in an appropriate way, such that an insufficient overlap pattern arises (e.g., schwa insertion occurs, see Major 1987; Broselow and Finer 1991; Davidson 2003, 2006; Zsiga 2003, 2011; Colantoni and Steele 2008; Sperbeck 2010), or inappropriate assimilation occurs (see Weinberger 1994, Solé 1997, Cebrian 2000). Solé found that Catalan speakers produced one glottal gesture for a whole cluster, when the cluster members differ in voicing (e.g., [sn-] in snail becomes [zn-]), while this anticipatory effect is not shown in English speakers. Solé argued that the observed anticipatory effect in the Catalan subjects was derived from difficulty in changing the fossilized articulatory habits that govern anticipatory voicing in their L1 (for Hungarian regressive voicing assimilation transfer in English, see Altenberg and Vago 1983; for Polish regressive voicing assimilation in English, see Rubach 1984).

Interestingly, Cebrian (2000) found Catalan subjects failed to apply the L1 rule of regressive voicing assimilation at word boundaries in English; for example, Swiss girl did not undergo this rule. Cebrian then proposed the Word Integrity Principle, positing a tendency to preserve segments within one word, which implied a failure to coarticulate segments across word boundaries (also see Weinberger's (1994) Recoverability Principle). Such a principle predicts no specific coordination relationship ensuring overlap across words, so that gestures across words produced by L2 speakers would drift apart. This hypothesis is confirmed by Zsiga (2003), who found that both English and Russian speakers preferred little overlap at word boundaries when producing L2 clusters. Meanwhile, the carryover of L1 is shown to be strong in Zsiga's study. English speakers produced Russian clusters with fewer C1 releases and greater overlap than Russian speakers, carrying over from the English patterns. Russian speakers produced English clusters with more C1 releases and smaller overlap, comparable to their articulatory habits in Russian. This finding is in line with other studies discussing L1 transfer that gives rise to timing misalignment in L2 (e.g., Sato 1987, Tajima et al. 1997, Chen and Chung 2008).

Aside from the Word Integrity and Recoverability Principles, a number of studies have relied on Markedness Theory to account for L2 speech errors in producing clusters, particularly in three aspects: voicing (see Broselow and Finer 1991, Major and Faudree 1996, Broselow et al. 1998, Hansen 2001), cluster size (Anderson 1987, Weinberger 1994, Hansen 2001), and sonority sequencing (Broselow and Finer 1991, Eckman and Iverson 1993, Carlisle 2006, Cardoso 2008). For example, Spanish learners of English in Carlisle (2006) were found to modify /#sn/ more than /#sl/ clusters, which is due to /#sn/ being more marked than /#sl/ according to the sonority distance. However, the extent to which sonority sequencing can account for L2 consonant cluster processing has been called into question. Davidson et al. (2004) reported that English speakers have varying accuracy when producing Polish /zm/ and /vn/ clusters (63% vs. 11%), although both have the same sonority distance. In a follow-up study, Wilson et al. (2014) discovered that other phonetic details (e.g., voicing) can be equally important for predicting L2 production patterns.

In sum, the reviewed studies on L2 gestural mistiming of English clusters suggest that a purely articulatorily motivated account is not sufficient. Rather, theories of word integrity, L1 influence, and markedness are all active in explaining L2 cluster production (see Davidson 2011 for a review). Given the conflicting results found in L2 studies, as well as the limited number of languages that have been studied, it is important to extend our cross-linguistic comparisons.

2.3 Research questions

Overall, three primary research questions concerning the degree of overlap in English clusters are raised.

First, does place of articulation (i.e., homorganic, front-back, and back-front clusters) affect English and Mandarin speakers similarly in their English stop-stop coarticulation? The general assumption is that the native Mandarin (NM) group will deviate from the native English (NE) group. I raise two hypotheses, listed in (1).

(1)

a. The NE group will have fewer releases and more overlap than the NM group in producing homorganic clusters.

b. The NE group will show the POE, but the NM group will not.

Second, does frequency affect English and Mandarin speakers similarly in their English stop-stop coarticulation? This gives rise to the hypotheses in (2).

(2)

a. Both groups will have fewer releases and more overlap in meaningful high-frequency words than in meaningful low-frequency words.

b. The NE group will show the POE in both real words and non-words, while the NM group will not show the POE in non-words.

Finally, do changes in speech rate affect English and Mandarin speakers similarly in their English stop-stop coarticulation?

3. Experiments

To answer the three research questions raised above, two studies were designed in which English and Mandarin speakers produced a set of clusters at slow and fast speech rates.

3.1 Participants

The experiment included two groups: one group of 25 native Mandarin speakers who use English as a second language (ESL), and a group of 15 native English speakers. For each group, a questionnaire was administered to collect background information (e.g., age, gender, knowledge of additional languages, etc.) prior to the experiment. To control within-group homogeneity, Mandarin volunteers were selected only if they scored at or above 70% on TOEFL or IELTS. There were 14 females and 11 males with an average age of 27 in the NM group (23–40 years old). To control regional dialect influence, only speakers who had been born and raised in British Columbia, Canada, served as the NE group. The NE group consisted of 15 native English speakers, including nine females and six males with an average age of 26.3 years old (19–40 years old). Participation in this study was completely voluntary. All participants reported no hearing or speech problems. Participants were told that they were taking part in a speech study comparing native versus non-native production, but were given no other details until after the recording session was complete.

3.2 Materials

The central criteria for building the data were to consider first, place of articulation (i.e., homorganic, front-back, and back-front clusters); second, frequency (i.e., ranging from high- to low- to extremely low-frequency); and third, speech rate (reading phrases and spontaneous speech). Three lists were designed to incorporate these criteria.

List 1 contained 24 items, yielding a C1#V, V#C2 context (see Zsiga 2003). Each stop appeared as onset and coda twice, to calculate the baseline closure duration where no consonant-consonant coarticulation occurs. List 2 included 144 disyllabic items with all stop-stop combinations, yielding a VC1#C2V environment. Seventy-two real words were chosen, including 36 compounds (noun-noun combinations, e.g., soup pot) and 36 non-compounds (e.g., keep pace). Another 72 phrases were designed as corresponding non-words. By corresponding, I mean that the vowel contexts of real words and non-words were matched as closely as possible (e.g., peep pate vs. keep pace), and their initial consonants and codas agreed in voicing and manner (e.g., /d/ in dak kit agrees with /b/ in back kick in voicing, and they are both obstruents). That is, two aspects of lexical frequency were considered: high-frequency versus low-frequency items in real words (e.g., take care vs. dock gate), and real words versus non-words (e.g., keep pace vs. peep pate). By doing this, we can make inferences about the ability of speakers to generalize from known forms to less familiar forms, then to novel forms. To control the stress effect, all test syllables were monosyllabic words (CVC). Using these 144 disyllabic phrases, List 3 included 144*4 dialogues combining four kinds of focus contrasts where C1 and C2 were embedded in a target sentence (more in 3.3).

3.3 Procedure

A pre-test was conducted to assess Mandarin participants’ English proficiency in addition to the self-reported TOEFL/IELTS scores. The NM group was asked to speak about a topic for two to three minutes; the question was “What do you feel the most passionate about?” (see Lin and Wang 2007). The accentedness of the monologue was judged by two native English speakers with a Likert scale from one to seven (Likert 1932), where a score of one indicates native-like and a score of seven indicates heavy accent. No participants received scores higher than six (i.e., extremely heavy accent). The mean rating was 3.38/7 for Judge 1 and 3.06/7 for Judge 2, suggesting that the NM group was perceived to be moderately accented, where 3.5 is the midpoint of the scale. The Pearson correlation test shows that the two judges’ scores are significantly correlated with one another [r = .58, p < .002]. I am confident that the NM group in this study represents intermediate or better proficiency.
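The agreement check between the two judges can be illustrated with a minimal sketch of the Pearson coefficient. The function name pearson_r and the rating lists are hypothetical placeholders, not the study's data, and the sketch computes only r, not the associated p-value.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return sxy / math.sqrt(sxx * syy)


# Placeholder accentedness ratings (1 = native-like, 7 = heavy accent);
# these values are invented for illustration only.
judge1 = [2, 3, 4, 5, 3, 2]
judge2 = [2, 2, 4, 4, 3, 3]
r = pearson_r(judge1, judge2)
```

A value of r closer to 1 indicates that speakers rated as more accented by one judge also tended to be rated as more accented by the other.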

The experiment itself was composed of four tasks, administered using E-prime 2.0 Professional (version 2.0.1.97). I first needed to confirm that the participants did make a distinction between high- and low-frequency words. Therefore, Task 1 asked participants to rate word familiarity on all 144 items on a seven-point Likert scale, where one indicates least familiar and seven indicates extremely familiar. Prior to Task 1, participants were reminded to make judgements based on orthography rather than sound because some non-words might sound like real words in English (e.g., tik doun).

E-prime collected a total of 5760 familiarity responses from Task 1 (40 participants * 144 stimuli items). Table 1 summarizes the lexical judgment scores for the two groups with standard deviations (SD) in parentheses. The results show that for the NE group, the average score for the 72 real words was 5.66, and for the non-words 1.06. For the NM group, the average score for the meaningful items was 4.74, while for the non-words 1.16. Table 1 also includes the results of two-tailed t-tests, showing that both groups significantly distinguished real words from non-words. The categorical frequency effect was thus validated.

Table 1: Summary of lexical frequency ratings for both groups

Task 2 asked participants to read the 24 phrases contained in List 1 aloud, clearly. Task 3 asked participants to read the 144 items contained in List 2 aloud, in natural speech. Prior to Task 3, the participants were instructed to produce the words as naturally as possible; the researcher also explicitly told participants to utter all the items in an English way when they ran into words they did not know. In Task 3, stimuli were marked by underlining the appropriate lexical stress in both real words and non-words (e.g., soup pot, foop pok). Each phrase appeared once in Task 2 and once in Task 3, requiring participants to repeat each phrase three times in each task.

To further study the effect of speech rates, a fourth task was included. Task 4 asked participants to answer questions based on a given dialogue. Specifically, using the 144 disyllabic phrases in Task 3, Task 4 was designed as having 144*4 dialogues. Each dialogue included a question and a given answer. Participants were asked to read the question silently, then produce the given answer aloud. The answers were designed to elicit four sentential stress patterns: focused versus unfocused, unfocused versus focused, focused versus focused, and unfocused versus unfocused. Only the embedded clusters were subject to analysis in Task 4.

The experiment was self-paced. Participants were presented with a new item only after they had indicated that they were ready by pressing the keyboard. All tested materials in each task were in a randomized order generated by E-prime 2. The data were collected using a head-mounted microphone with a fixed distance from the mouth. All participants were recorded in the phonetic laboratory of the Department of Linguistics at the University of Victoria. All .wav files were recorded at 44.1 kHz/16 bits.

Speech rate was calculated by using a speech rate script, measuring how many syllables were produced per second (De Jong and Wempe 2009). The results are summarized in Table 2 below (SD in parentheses). The table indicates that the NE group produced 1.48 syllables per second at the word level and 2.22 syllables per second at the sentence level, and the NM group produced 1.28 and 1.76 syllables per second, respectively. Also included in the table are the results of the two-tailed t-tests, which indicate that both groups had a significantly faster speech rate at the sentence level than at the word level. The Speech Rate effect was thus validated for both groups.

Table 2: Speech rate summary for both groups

3.4 Data analysis

A total of 29,760 sound files were acoustically analyzed (40 participants * (24 in Task 2 + 144 in Task 3 + 576 in Task 4)) using Praat software (Boersma and Weenink 2009). Duration features were extracted from the manually labelled segments using a duration script developed for Praat. To exclude disfluent tokens, any phrase in which there was a period of silence of 750 ms or more in C1#C2 was discarded. A total of 58 tokens were excluded based on this criterion to avoid skewing the data (0.2% of the total collected).
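The token count and the exclusion criterion can be checked with a short sketch. The constant and function names are hypothetical; the figures (40 participants, 24 + 144 + 576 items, the 750 ms cutoff, 58 excluded tokens) are taken from the paragraph above, and the sketch does not reproduce the Praat duration script.

```python
N_PARTICIPANTS = 40
# Items per production task, as described in the text.
ITEMS_PER_TASK = {"task2": 24, "task3": 144, "task4": 144 * 4}
SILENCE_CUTOFF_MS = 750  # silence of this length or longer marks a disfluent token


def total_tokens() -> int:
    """Total number of sound files collected across Tasks 2 to 4."""
    return N_PARTICIPANTS * sum(ITEMS_PER_TASK.values())


def is_disfluent(silence_ms: float) -> bool:
    """True if the silent period inside C1#C2 meets the exclusion criterion."""
    return silence_ms >= SILENCE_CUTOFF_MS


def exclusion_rate(n_excluded: int, n_total: int) -> float:
    """Percentage of tokens discarded."""
    return 100.0 * n_excluded / n_total
```

With these definitions, total_tokens() reproduces the 29,760 files reported, and exclusion_rate(58, total_tokens()) rounds to the reported 0.2%.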

In the present study, C1#C2 articulatory relationships were measured through two dependent variables. The first dependent variable was release percentage. A cluster of C1#C2 was counted as released if C1's burst of energy was visible on a spectrogram. In Task 3, the middle token was subject to analysis when the release identification agreed with the first or the third token; otherwise, I chose the third token to analyze. All the data were coded by the author. Another trained researcher coded 507 sound files that were randomly chosen from Task 3 (8.8% of the total collected in Task 3). Twenty tokens did not agree in release identifications (mainly quasi-release vs. no release; for quasi-release, see Davidson 2011), making the disagreement 3.9% (20/507) between judges. The inter-coder reliability was 96.1%, suggesting high consistency. Release percentage was then calculated as the number of tokens that had released C1 divided by the total tokens. The second variable was closure duration ratio, computed for each phrase for each speaker. The ratio is defined as “the mean duration of the C1#C2 cluster divided by the sum of the mean closure durations of C1b and C2b occurring intervocalically” (Zsiga 2003:441), so that a smaller value indicates a larger degree of overlap.
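The two dependent variables and the coding checks described above reduce to simple arithmetic, sketched below. All function names are hypothetical, the release labels are assumed to be plain strings, and the durations in the usage note are invented for illustration; this is not the script used in the study.

```python
def pick_token_index(labels):
    """Choose which of three repetitions to analyze (0-based index).

    The middle token is used when its release label matches the first
    or the third token; otherwise the third token is used.
    """
    first, middle, third = labels
    if middle == first or middle == third:
        return 1
    return 2


def release_percentage(released_flags):
    """Percentage of tokens whose C1 shows a visible release burst."""
    return 100.0 * sum(released_flags) / len(released_flags)


def percent_agreement(n_tokens, n_disagreements):
    """Simple inter-coder agreement as a percentage of double-coded tokens."""
    return 100.0 * (n_tokens - n_disagreements) / n_tokens


def closure_duration_ratio(cluster_ms, c1_baseline_ms, c2_baseline_ms):
    """Mean C1#C2 closure duration over the summed intervocalic baselines.

    A smaller ratio indicates a larger degree of overlap.
    """
    return cluster_ms / (c1_baseline_ms + c2_baseline_ms)
```

For instance, percent_agreement(507, 20) rounds to the 96.1% reported above, and a hypothetical cluster of 120 ms with two 80 ms baseline closures yields a ratio of 0.75.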

4. Results

Based on the experimental design, both release percentage and duration ratio were analyzed using four-way repeated measures ANOVAs with a 3*2*2*2 design. The three within-subjects factors were PLACE OF ARTICULATION (POA, 3 levels: homorganic, front-back, and back-front clusters), FREQUENCY (2 levels: real words vs. non-words) and SPEECH RATE (2 levels: word level vs. sentence level). GROUP was the between-subjects factor (2 levels: the NE group vs. the NM group). Table 3 below provides the overall statistics with significant effects in bold. For release percentage, two main effects and two two-way interactions were significant (POA; FREQUENCY; POA by GROUP; SPEECH RATE by GROUP); for duration ratio, one main effect and one two-way interaction were significant (FREQUENCY; FREQUENCY by GROUP). In the following subsections, I divide and collapse the data to address each research question in turn.

Table 3: Summary of statistical results (significant effects in bold)

4.1 Place of articulation

The first research question addresses whether place of articulation (i.e., homorganic, front-back, and back-front clusters) affects English and Mandarin speakers similarly in their English stop-stop coarticulation.

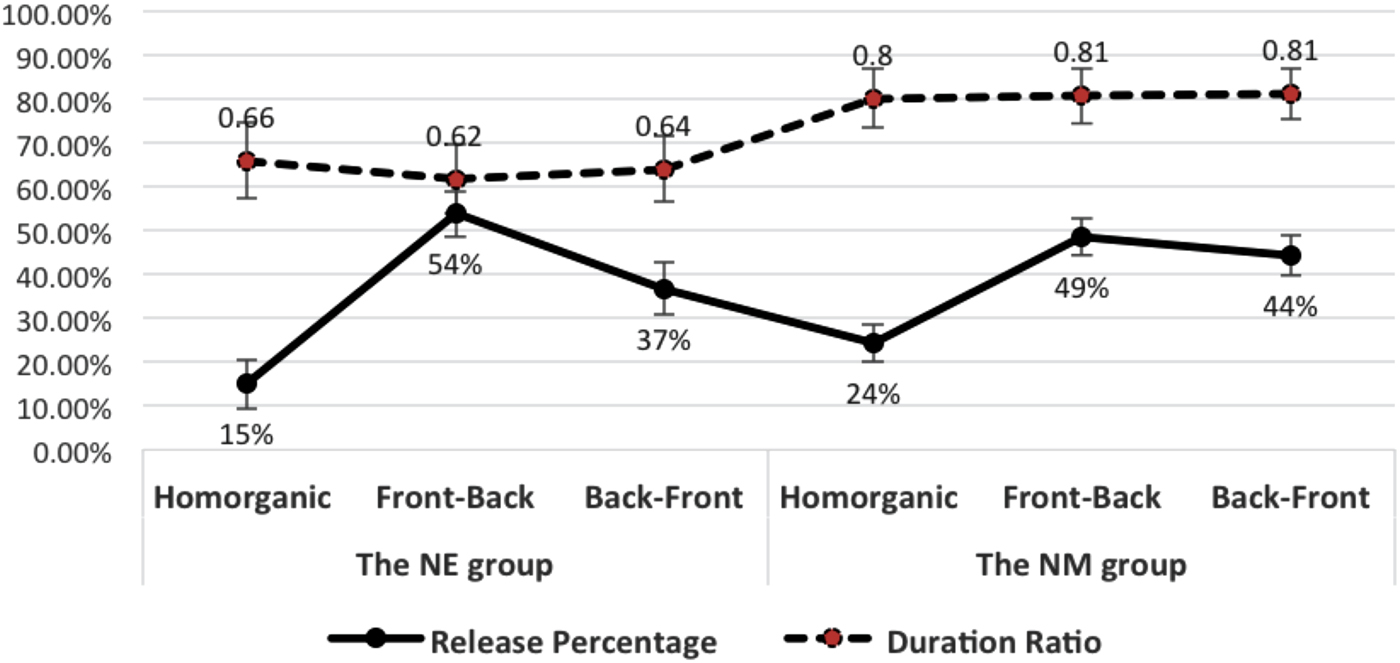

The statistical results indicate that POA has a significant effect on release percentage but not on duration ratio for either group. The overall POA effect averaged over both speech rates on release and duration ratio patterns for each group is plotted in Figure 1 below, which shows a similar release trend for both groups. This trend is visible in that both groups had the highest release percentage in front-back clusters, followed by back-front clusters, then homorganic clusters (i.e., front-back > back-front > homorganic, where the symbol “>” indicates “more releases than”). The NE group had the most overlap in front-back clusters, followed by back-front clusters, then homorganic clusters (i.e., front-back >> back-front >> homorganic, where the symbol “>>” indicates “more overlap than”); the NM group did not exhibit much difference in producing the three kinds of clusters.

Figure 1: POA effect on release percentage and duration ratio for both groups

Multiple comparisons, with Bonferroni corrections, were conducted to further examine group differences. In terms of hypothesis (1a), despite the visible trend that the NE group had fewer releases than the NM group, the overall group differences were not statistically significant in releasing homorganic clusters at either speech rate [both p > .072]. Similarly, the NE group did not have significantly more overlap than the NM group in producing homorganic clusters at either speech rate [both p > .163]. In terms of hypothesis (1b), the results showed, on the one hand, that the NE group released significantly more often in front-back than in back-front clusters at both speech rates [both p < .005], while the NM group did not distinguish the two types of clusters significantly at either speech rate [both p > .37]. On the other hand, the analyses indicated that neither of the groups had significantly more overlap in front-back than in back-front clusters at either speech rate [all p > .849].
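For readers unfamiliar with the correction, the sketch below shows how a Bonferroni adjustment operates on a family of comparisons. The p-values and the family size are hypothetical, since the number of comparisons per family is not stated here, and the function name is illustrative.

```python
def bonferroni(p_values, alpha=0.05):
    """Multiply each p-value by the family size (capped at 1) and test it."""
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]
    return [(p_adj, p_adj < alpha) for p_adj in adjusted]

# Hypothetical raw p-values for a family of three comparisons.
results = bonferroni([0.001, 0.02, 0.4])
significant = [flag for _, flag in results]
```

Under this adjustment, a raw p-value of .02 in a family of three no longer falls below .05, which is why corrected comparisons are more conservative than uncorrected ones.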

These findings do not support hypothesis (1a), although the trend was in line with the prediction. Hypothesis (1b) was partially supported, since the predicted release pattern of the POE was statistically evident in L1 speech but not in L2 speech. However, hypothesis (1b) was not supported in that the predicted overlap order of the POE was not statistically shown in either group.

4.2 Lexical Frequency

The second research question asked whether and how lexical frequency determines the cluster realizations: Will English and Mandarin speakers exhibit the same frequency effect on overlap degree?

The results showed that for the 72 real words, the NE group released 31% and produced an average duration ratio of 0.61 (i.e., 39% overlap); the NM group released 36% and produced an average duration ratio of 0.73 (i.e., 27% overlap). For the 72 real words, Pearson two-tailed correlation tests were conducted to examine whether there was a correlation between lexical frequency and release percentage, and between lexical frequency and overlap degree. The results showed that lexical frequency and release percentage were not correlated in either of the groups [both p > .657], while the frequency rating and the closure duration ratio were significantly negatively correlated for both groups [both p < .003]. This result suggested that the more frequent the item was, the shorter the closure duration was (i.e., the greater the overlap). Hypothesis (2a) was therefore partially supported: frequency was significantly correlated with duration overlap, but not with release percentage.
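The correlation test relating frequency ratings to closure duration ratios can be illustrated with a plain implementation of Pearson's r. The ratings and ratios below are hypothetical values chosen to be perfectly linear, so the coefficient comes out very close to -1; they are not the study's data, and the function name is illustrative.

```python
import math

def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical familiarity ratings and closure duration ratios per item.
ratings = [1, 2, 3, 4, 5]
ratios = [0.9, 0.8, 0.7, 0.6, 0.5]
r = pearson_r(ratings, ratios)  # negative: higher rating, smaller ratio
```

A negative coefficient corresponds to the pattern reported above: more frequent items have smaller ratios and therefore more overlap.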

As for hypothesis (2b), Table 4 summarizes the release percentages for both groups averaged over speech rates, subcategorized by the cluster type in both lexical contexts (SD in parentheses). The table shows that the NE group released 31% in real words and 40% in non-words, while the NM group released 35% and 43%, respectively. The main effect of FREQUENCY was significant on release percentage, indicating that both groups released significantly less often in real words than in non-words.

Table 4: FREQUENCY effect on release percentage for both groups

Although both groups showed the same release trend of front-back > back-front > homorganic clusters regardless of lexical status, the statistical analyses found that the NE group had significantly more releases in front-back than in back-front clusters for real words as well as for non-words at both speech rates [all p < .002], while the NM group did not statistically distinguish the two types in real words or non-words at either speech rate [all p > .614]. This result indicates that the NE group had a consistent and strong POE not subject to the FREQUENCY effect.

The main effect of FREQUENCY was also significant on the duration ratio, with both groups having significantly smaller overlap in non-words than in real words. To further explore the significant interaction of FREQUENCY * GROUP, Table 5 summarizes the descriptive statistics of duration ratios in each cluster type averaged over speech rates produced by both groups.

Table 5: FREQUENCY effect on duration ratio for both groups

The table shows that the NE group had the same overlap order across lexical conditions. This pattern is visible: front-back clusters had the most overlap, followed by back-front clusters, then homorganic clusters in both lexical environments (i.e., front-back >> back-front >> homorganic). The NM group had inconsistent organizations. In real words, the NM group had the most overlap in homorganic clusters, followed by front-back and then back-front clusters (i.e., homorganic >> front-back >> back-front). In non-words, back-front clusters allowed more overlap than front-back clusters (i.e., homorganic >> back-front >> front-back). The analyses indicated that neither of the groups had significantly more overlap in front-back than in back-front clusters in either lexical context at either speech rate [all p > .8]. Hypothesis (2b) was therefore not supported.

Post hoc analyses showed that group duration ratio differences were significant in non-words [F(1, 38) = 4.121, p < .049] but not in real words [F(1, 38) = 2.728, p > .107]. These significant differences came from different organizational patterns in nonsense front-back and back-front clusters [both p < .037]. This finding suggests that both groups coordinated the real words in a similar manner; however, the NM group failed to coordinate the non-words, mainly nonsense front-back and back-front clusters, in the same fashion that the NE group did. These findings are of considerable significance as they show that unlike the NE group, the NM group was unable to extract phonetic details and apply their phonetic knowledge to novel words.

4.3 Speech rate

The third research question asked whether gestural overlap increases when speech rate is increased. Table 6 provides the descriptive statistics, including the mean release percentage and mean duration ratio for each group at each speech rate (SD in parentheses). The table shows that the NE group released more often at the fast rate than at the slow rate (37% vs. 33%) while the NM group released less often at the fast rate (35% vs. 43%).

Table 6: SPEECH RATE effect on release percentage and duration ratio for both groups

As shown in Table 3, the main effect of SPEECH RATE was not significant for duration ratio. In order to further examine how gestural overlap is implemented to differing degrees by individual speakers, the performance of each participant was examined. A breakdown of each participant's data indicates that there are two types of speakers: those that had more overlap in fast speech (19 speakers, nine NE and 10 NM) and those that had less overlap in fast speech (21 speakers, six NE and 15 NM). For the first type, participants’ release percentage was significantly correlated with the duration ratio in slow speech [r = .56, n = 19, p < .014], while no significant correlation was found between release percentage and duration ratio in fast speech [r = .26, n = 21, p > .277]. For the second type, speakers’ release percentage was significantly correlated with the duration ratio regardless of speech rates [both p < .036]. These results showed that predetermined gestural patterns were not simply accelerated by faster speech rates.
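The per-speaker breakdown can be sketched as a simple split on the direction of change in duration ratio between rates. The speaker labels and ratios below are hypothetical, and the treatment of ties (counted as not having more overlap) is an assumption, since ties are not discussed in the text.

```python
def split_by_rate_effect(ratios):
    """Split speakers by whether overlap increases at the fast rate.

    ratios maps a speaker label to (slow_ratio, fast_ratio); a smaller
    closure duration ratio means more overlap.
    """
    more_overlap, less_overlap = [], []
    for speaker, (slow, fast) in ratios.items():
        if fast < slow:
            more_overlap.append(speaker)
        else:
            less_overlap.append(speaker)  # ties land here by assumption
    return more_overlap, less_overlap

# Hypothetical mean duration ratios for four speakers (slow, fast).
sample = {
    "NE01": (0.66, 0.60),
    "NE02": (0.62, 0.70),
    "NM01": (0.82, 0.78),
    "NM02": (0.79, 0.85),
}
more, less = split_by_rate_effect(sample)
```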

To sum up, a breakdown of speakers suggests a large amount of speech freedom: speakers could either have more or less gestural overlap at faster rates. The prediction related to the third research question is thus not supported; instead, individual differences are strongly shown in the current study.

5. Summary and discussion

This study investigates how gestural overlap is conditioned by three possible influences: place of articulation, frequency, and speech rates. The data raise some important questions about the nature of coordination, how it is implemented and how it is stored cognitively.

The overall results showed that release percentage and closure duration ratio were significantly positively correlated [r = .47, n = 40, p < .002], that is, the greater the release percentage, the greater the closure duration ratio (i.e., the smaller the gestural overlap). This effect was in fact due to the NE group [r = .58, n = 15, p < .02]. The NM group did not have a significant correlation [r = .37, n = 25, p > .07]. This result indicates that when the NE group had more releases, they had longer closure durations of C1#C2, resulting in less overlap. However, this systematicity was not shown in non-native speech. The coordination of English clusters by the NM group seems fairly random.

On average, 35% of C1s were released in the NE group and 39% of C1s were released in the NM group. The English speakers’ release percentage falls within the range predicted by previous studies (Ghosh and Narayanan Reference Ghosh and Narayanan2009, Davidson Reference Davidson2011). The NE group's overlap is also congruent with the overlap range suggested in previous research (Barry Reference Barry1991, Zsiga Reference Zsiga1994, Byrd Reference Byrd1996a). Not surprisingly, the NE group's overlap degree was almost twice that of the NM group (36% vs. 19%).

5.1 Place of articulation

This study included all stop-stop combinations across words to examine the effect of POA. The main effect of POA was found to be significant on release percentage, but not on closure duration ratio.

The fact that both groups had fewer releases in homorganic than in heterorganic clusters (see Figure 1) is not unexpected, in light of existing cross-linguistic findings, which suggest fewer releases in clusters where the adjacent consonants share the same place of articulation than in clusters where the adjacent consonants have different articulation places (Elson Reference Elson1947, Clements Reference Clements1985, Pouplier Reference Pouplier2003, Zsiga Reference Zsiga2003). Even in L2 speech, this prolongation is strongly evident (Zsiga Reference Zsiga2003). The articulatory rationale behind this, as Pouplier (Reference Pouplier2003) and Goldstein et al. (Reference Goldstein, Pouplier, Chen, Saltzman and Byrd2007) argue, is that the consonants in heterorganic clusters, which use different subsets of articulators, are largely independent of each other; the constriction gestures are therefore compatible and can be produced concurrently. For homorganic clusters that share the same constriction gesture, the consonantal gestures are in competition and can only be manipulated sequentially. The results from both groups in this study suggest a universally grounded articulatory constraint, such that speakers, regardless of their native language, tend not to release C1 in homorganic clusters.

In the current study, the results showed that the NE group had a closure duration ratio of 0.66 (34% overlap) and the NM group had 0.8 (20% overlap) in producing homorganic clusters. This finding provides strong evidence for the blending hypothesis of Browman and Goldstein (Reference Browman and Goldstein1989). Browman and Goldstein discussed gestural blending in terms of place compromise: the location of the constriction should fall somewhere in between C1 and C2 when they share the same articulatory tier, as reflected by the acoustic consequences corresponding to the influence of both consonants. Following this idea, I argue for temporal blending between adjacent gestures when they share the same articulator, which is reflected as the timing influence of both consonants. Specifically, if homorganic clusters were geminate sequences, they would have a single gesture, and the other gesture would be deleted. However, the current study found that the two groups shortened the closure duration of each stop and produced an overlap relation of 34% and 19%, respectively. If one gesture were deleted, a 0.5 closure duration ratio would be expected. Given that both groups yielded overlap figures less than 50%, I conclude that they did not delete a gesture entirely; rather, they shortened each gesture, resulting in less than 50% overlap. The hypothesis of temporal blending is supported by other studies such as Byrd (Reference Byrd, Elenius and Branderud1995), and Munhall and Löfqvist (Reference Munhall and Löfqvist1992). Byrd discovered that two lingual gestures are canonically present in geminated sequences (C1#C2), and that the coproduced movement for C1#C2 is longer than the non-coproduced movement for a single gesture.
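The arithmetic behind this argument can be written out as follows. The symbols are introduced here for illustration only: R is the closure duration ratio, D with a subscript is a mean closure duration, and the deletion case assumes that the surviving single closure and both intervocalic closures have the same duration D.

```latex
\begin{align*}
R &= \frac{D_{C_1\#C_2}}{D_{C_1} + D_{C_2}}, & \text{overlap} &= 1 - R \\
R_{\text{deletion}} &= \frac{D}{D + D} = 0.5 & &(D_{C_1\#C_2} = D_{C_1} = D_{C_2} = D) \\
R_{\text{NE}} &= 0.66, & \text{overlap}_{\text{NE}} &= 1 - 0.66 = 0.34 \\
R_{\text{NM}} &= 0.80, & \text{overlap}_{\text{NM}} &= 1 - 0.80 = 0.20
\end{align*}
```

Both observed ratios lie above the 0.5 value expected under deletion, which is the basis for concluding that each gesture was shortened rather than removed.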

In this study, the NE and NM speakers exhibited a trend of the POE: more releases and more overlap in front-back than in back-front clusters. This result is consistent with crosslinguistic studies of the POE (Hardcastle and Roach Reference Hardcastle, Roach, Hollien and Hollien1979, Henderson and Repp Reference Henderson and Repp1982, Zsiga Reference Zsiga1994, Byrd Reference Byrd1996a, Peng Reference Peng1996, Chitoran et al. Reference Chitoran, Goldstein, Byrd, Gussenhoven and Warner2002, Kochetov et al. Reference Kochetov, Pouplier, Son, Trouvain and Barry2007, Davidson Reference Davidson2011). However, findings here were somewhat mixed: only the release pattern was statistically shown in native speech, and the overlap order was not statistically shown in either group.

We have to consider the fact that the consonant clusters used in this study were in boundary positions. Gestures in such cross-word positions stand in a substantially looser relationship than those formed within words in English, where speakers are likely controlling a more stable pattern (Byrd and Saltzman Reference Byrd and Saltzman1998, Cebrian Reference Cebrian2000, Davidson Reference Davidson2011). A loose coordination of consonant clusters would result in greater splittability (e.g., excrescent vowels) (Zuraw Reference Zuraw2007), longer duration in C1#C2 (Davidson and Roon Reference Davidson and Roon2008), and gradient overlap degree (Zsiga, Reference Zsiga, Connell and Arvaniti1995). Under this assumption, gestures are more likely to drift apart when in a boundary position. The findings in the current study appear to show both the POE and the juncture effects. Both groups exhibited the trend of the POE; but the POE was only partially shown in L1 speech. The significant release pattern of the POE in the NE speech indicates that a constriction transition from front to back articulators is strongly favored and articulatorily grounded even in an English boundary position where gestures are relatively loosely coordinated. Compared to NE speakers, NM speakers were more likely to eliminate contact across words, showing a tendency of preserving each word as a unit. What these results suggest is weaker juncture effects in native speech than in non-native speech. Considering that no significant overlap orders emerged in either group, future research is needed to further explore the relation between the POE and the juncture effects with different measurements of overlap.

5.2 Cognitive processing

This study considered two aspects of frequency in order to assess its role in cluster realization: high-frequency versus low-frequency items in real words (e.g., take care vs. dock gate), and the real words (i.e., high-frequency) versus the non-words (i.e., extremely low-frequency) (e.g., keep pace vs. peep pate).

In terms of the gradient effect (i.e., high vs. low frequency items in real words), the results found no significant correlation between frequency and release percentage in either group, but a significant correlation between frequency and overlap for both groups. Both groups rated real words such as take care and look good highly familiar; these had up to 50% overlap. Words such as hug pug and dock date were rated as much less familiar, and showed only up to 20% overlap. This result suggested a frequency-matching behavior for both groups: more reductions occur for more frequent words in meaningful items. This finding is in accordance with existing research on the effect of lexical frequency in sound patterns (Gregory et al. Reference Gregory, Raymond, Bell, Fosler-Lussier, Jurafsky, Billings, Boyle and Griffith1999; Bybee, Reference Bybee, Barlow and Kemmer2000, Reference Bybee2007; Bush Reference Bush, Bybee and Hopper2001). Gregory et al. report that tapping of a word-final /t/ or /d/ in an intervocalic context is highly affected by the probabilistic variable “mutual information”, which measures the likelihood that two words will occur together. As mutual information increases, reflecting a stronger cohesion between the two words, the likelihood of tapping increases. In this study, as lexical frequency increases, the overlap increases.

Moreover, both groups showed frequency-matching behavior by essentially differentiating real words from non-words. The categorical FREQUENCY effect was found to be significant on both release percentage and closure duration ratio. Both groups had significantly fewer releases and more overlap in clusters with real words than with non-words (e.g., keep pace >> peep pate). The NE group had 39% overlap in producing real words and 33% in non-words averaged over both speech rates; the NM group had 24% and 15% overlap, respectively.

The group differences were observed in the interaction of Frequency * POA. In the current study, NE speakers’ treatment of real words versus non-words exhibited a high degree of consistency. In terms of release percentage, the NE group had significantly more releases in front-back than in back-front clusters in both lexical contexts. In terms of duration ratio, the trend of the NE group was to organize the three cluster types in the same fashion (i.e., front-back >> back-front >> homorganic) in both lexical environments. The results indicated that NE speakers maintained a stable timing pattern in new forms even when they slightly modified the degree of overlap in non-words. This observed consistency supports previous studies showing that native speakers have the ability to identify regularities and systematic alternations, and extend their phonological knowledge to unfamiliar environments (see Berko Reference Berko1958, Wilson Reference Wilson2006, Zhang and Lai Reference Zhang and Lai2010). English speakers’ ability to articulate using their knowledge in this study further suggests that they have productively internalized the phonological process. As Wilson (Reference Wilson2006: 946) comments, speakers have “detailed knowledge of articulatory and perceptual properties”. It is obvious that their grammatical systems can make reference to that knowledge.

The rich and detailed representation found in the NE group also essentially corroborates a fundamental hypothesis of Articulatory Phonology: phonological contrasts are represented in terms of articulatory gestures, not discrete features (Browman and Goldstein Reference Browman and Goldstein1989, Reference Browman, Goldstein, Clements, Kingston and Beckman1990, Reference Browman and Goldstein1992; Goldstein et al. Reference Goldstein, Byrd, Saltzman and Arbib2006). One implication of the gestural unit hypothesis is that this kind of representation is apparently what speakers make use of for their lexicon. The findings reported here suggest a step further: the stored gestural units are available for native speakers to reconstruct and redeploy combinatorially in new contexts. If these units could not be reorganized, the English speakers in this study would coordinate non-words differently from real words, perhaps having a different overlap order among the three cluster types. Yet in non-words, they still followed the pattern of front-back >> back-front >> homorganic. It seems that native speech not only encodes gestural organizations manifested in the lexicon, it also allows for the recombination of new possibilities with the same structure (also see Kita and Özyürek Reference Kita and Özyürek2003).

In contrast, there is no evidence that Mandarin speakers have acquired non-native phonological knowledge; rather, how they organize L2 clusters seems to be largely dependent on familiarity with lexical knowledge. The NM group had different overlap orders in different lexical conditions. They produced front-back >> back-front in real words, and back-front >> front-back in non-words. The former pattern of the NM group was consistent with that of the NE group, showing that the two groups had similar organizations with familiar lexical items. However, the NM group's responses for non-words showed that they did not generalize beyond memorized items. This response shows that Mandarin speakers were not sensitive to fine-grained phonetic details in English and thus were unable to reorganize English gestural units that they were familiar with, at least within the confines of the difficult task of reading and orthographically decoding the non-words in L2. As a result, L2 speakers have a limited repertoire for reconstructing new possibilities within L2 phonotactic constraints.

5.3 Stable pattern

This study not only examined how coordination is implemented and how sound patterns are stored in L1 and L2 speech, it also investigated whether changes in speech rate induce greater overlap. The validation analyses established that both groups produced more syllables per second at the fast rate (i.e., Task 4) than at the slow rate (i.e., Task 3); however, the effect of SPEECH RATE was not significant on either release percentage or duration ratio. The results showed that the NE group released more often when speech rate increased (33% vs. 37%), and had slightly more overlap at the slow rate (36.3% vs. 35.9%). Meanwhile, the NM group released less often when speech rate increased (43% vs. 35%), and had slightly more overlap at the fast rate (18% vs. 19%). The results suggest that the SPEECH RATE effect was opposite for the two groups.

Further analyses indicated that individual speakers differed in having either more or less overlap at the fast rate than at the slow rate, pointing towards a speaker-dependent effect. Nine NE speakers and ten NM speakers had more overlap in fast speech, while six NE speakers and 15 NM speakers had less overlap in fast speech. Worth noting is that there was a larger proportion in the NE group (9/15) who had more overlap in faster speech rate than in the NM group (10/25). This finding is consistent with the only study, to my knowledge, that has examined the speech rate effect on overlap produced by Mandarin speakers, Chen and Robb (Reference Chen and Robb2004). They found that Mandarin speakers produced English with a slower speaking rate and articulation rate compared to American speakers. Their analyses also indicated that the Mandarin speakers inserted significantly more pauses between syllables. Comparably, the current study found that the NM group tended to have a longer duration (i.e., smaller overlap) than the NE group even when their general speech rate increased.

The overall results indicate a stable pattern along with speaker variations in both slow and fast speech rates, which is consistent with other work showing individual differences in this regard (e.g., Kuehn and Moll Reference Kuehn and Moll1976, Ostry and Munhall Reference Ostry and Munhall1985, Zsiga Reference Zsiga1994, Tsao et al. Reference Tsao, Weismer and Iqbal2006). I outline here three possible accounts for the lack of correlation between increased overlap and increased speech rate.

One view is to consider the phonetic nature of stops. As Kessinger and Blumstein (Reference Kessinger and Blumstein1997) point out, stops have intrinsic limitations in their production. Specifically, stops involve a pressure build-up behind the constriction and a rapid release of the oral closure. As seen from the acoustic consequences, stops have a rapid change and an abrupt amplitude increase at their release when preceding a vowel (Stevens Reference Stevens1980). These physical gestures must occur to implement the obstruent manner of articulation in either slow or fast speech. With this obstruent manner, stops are less vulnerable than other consonants (e.g., affricates) in that changes in speaking rate are less likely to affect them. In fact, acoustic studies have shown that speaking rate changes affect the duration of glides and affricates, but do not affect stops (Miller and Baer Reference Miller and Baer1983, Shinn Reference Shinn1984). Also, changes in speech rates do not induce differing voice onset time of English stops (Kessinger and Blumstein Reference Kessinger and Blumstein1997; but see different results in Pind, Reference Pind1995 and Theodore et al. Reference Theodore, Miller, DeSteno, Trouvain and Barry2007).

An alternative view is that the rate is simply not fast enough to have exerted much influence. Tjaden and Weismer (Reference Tjaden and Weismer1998) suggested that the increase in speech rate that participants self-produced may not have been great enough to shift coordination patterns. The transition between adjacent gestures cannot be affected if the rate change is not large enough. The speech organs would rather remain relatively stable during the course of production. Lindblom (Reference Lindblom and MacNeilage1983) showed that speakers do not execute extreme displacements or velocities in coarticulation and vowel reduction; they neither hyper-articulate, nor constantly whisper or mumble. Instead, speakers prefer behaviours that minimize motoric demands.

An additional view is that people always tacitly find alternative means to reach target values without compromising a steady speech rhythm. For example, Moisik and Esling (Reference Moisik, Esling, Lee and Zee2011) discovered that speakers raise their larynx to supplement the raising pitch value supposedly induced by a voiced target without laryngeal constriction. Ellis and Hardcastle (Reference Ellis, Hardcastle, Ohala, Hasegawa, Ohala, Graville and Bailey1999) showed that individual speakers may exploit either categorical or gradient assimilation when producing /n#k/. The EPG data showed that two speakers never exhibited tongue-palate contact in the velar region during the /n/, whereas four other speakers consistently produced /ŋ/. Two more speakers alternated between complete assimilation and no assimilation. Only two speakers showed a range of tongue-palate contact from full alveolar closure to partial velar contact to total velar closure. Ellis and Hardcastle (Reference Ellis, Hardcastle, Ohala, Hasegawa, Ohala, Graville and Bailey1999: 2428) concluded that speakers’ preferred strategies “can be fundamentally different”. In the present study, the fact that speakers had either more or less overlap when speech rate increased suggested that gesture coordination was self-controlled; speakers could easily modify the overlap pattern without compromising a steady speech pattern.

6. Conclusion

This study extends our understanding of gestural overlap in both L1 and L2 speech. Although articulatory contact was not directly measured, a detailed examination of two-stop combinations was conducted. I tested the effects of three factors concurrently on the amount of overlap in English stop-stop sequences across words in both L1 and L2 speech: place of articulation, frequency, and speech rate. The first two were found to affect the coordination of gestural units. The POE was only partially shown in L1 speech, but not shown at all in L2 speech. I discussed the implications and proposed to account for the results in terms of juncture effects where gestures become larger and longer, weakening the POE. I also found that the NE group extended the release pattern of the POE into non-words and had consistent overlap orders across lexical conditions; in contrast, the NM group deviated significantly from the NE group especially in coordinating nonsense front-back and back-front clusters, suggesting that they cannot extend their L2 phonetic awareness (if any) into unfamiliar phonological production. The consistency of the NE group showed that the POE is learnable in native processing, indicating that sound patterns are registered in memory along with their phonetic details. Further analyses indicated that a faster speech rate did not induce more overlap in either group, indicating that overlap was not simply accelerated at faster rates.

Appendix: Testing material

List 1: 24 Items of /C1#V/ and /V#C2/

List 2: 144 items of C1#C2