NOMENCLATURE

- AR: Augmented Reality
- HMD: Head-Mounted Display
- IA: Immersive Analytics
- VA: Visual Analytics
- VR: Virtual Reality

1.0 INTRODUCTION

Virtual reality (VR) is rapidly being hailed as the new paradigm for interactive visualisation of data. Its ability to fuse visual, audio, and haptic sensory feedback in a computer-generated simulation environment is deemed to have tremendous potential. While the phrase virtual reality has been used for decades in the context of computer-aided visualisation, today it is synonymous with head-mounted displays(Reference Sutherland1) (HMDs) or headsets(Reference Oculus2,Reference Nokuo and Sumii3). Although still in a nascent stage, HMDs have demonstrated their usefulness in the computer gaming, education, fashion and real-estate industries, with countless more application areas currently being pursued, including information visualisation in aerospace(Reference García-Hernández, Anthes, Wiedemann and Kranzlmüller4). One potentially promising application is aerospace design, a complex, multi-disciplinary, multi-objective and multi-dimensional problem, where technologies that offer faster design cycle times, with potentially greater efficiency gains, can be real game-changers. However, as the aerospace community usually works with state-of-the-art computational tools and sophisticated computer-aided design packages, there are tremendous hurdles in getting the community to embrace VR. Furthermore, at this stage, it is not precisely clear what the benefits are in migrating to a VR-based design framework. Thus, what is required by the aerospace design community is an initial sketch of an immersive VR aerospace design environment: the AeroVR, a computer-generated environment that leverages the full visual, audio, and haptic sensory frameworks afforded by VR technology.

The focus of this paper is to explore how aerospace design workflows can benefit from VR. To aid our effort, we use ideas from parameter-space dimension reduction(Reference Constantine5,Reference Cook6,Reference Seshadri, Yuchi, Parks and Shahpar7). This topic has recently received considerable attention from both the applied mathematics and computational engineering communities, where the aim has been to reduce the cost of expensive computer parameter studies, that is, optimisation, uncertainty quantification, and more generally design of experiments. In Section 2, we present some of the key theoretical ideas that underpin dimension reduction. This is followed in Section 3 by a presentation of the VR aerospace design environment including its function and system structures, task analysis, and interaction features. Section 4 then describes a verification of the interface with respect to usability and expressiveness. Section 5 summarises the contributions and outlines future work.

This work represents one of the first forays of virtual reality in aerodynamic design—a field where state-of-the-art computer aided design (CAD) tools are quite competent. Given the nascent stage of VR technology, the objective of this paper is to gauge its potential and lay down some of the key building blocks for future digital twinning and aerospace design efforts. In the years to come, the AeroVR concept will have to be carefully evaluated and compared with existing CAD tools through, for example, a series of controlled experiments to demonstrate superiority in executing design tasks. This latter task is beyond the scope of an isolated paper and will require years of collaborative research involving industry and academia. Our work here is the first step in that direction.

2.0 PARAMETER-SPACE DIMENSION REDUCTION

Consider a function $f(\textbf{x})$ where $f: \mathbb{R}^{d} \rightarrow \mathbb{R}$. Here f represents our chosen quantity of interest (qoi), the desired output of a computational model. This qoi can be the lift coefficient of a wing or indeed the efficiency of a turbomachinery blade. Let $\textbf{x} \in \mathbb{R}^{d}$ be a vector of design parameters. Now when $d \leq 2$, visualising the design space of f is trivial: one simply needs to run a design of experiment and view the results as a scatter plot. However, when $d \geq 3$ visualising the design space becomes difficult. One way forward is to approximate f, with

$$f(\textbf{x}) \approx g(\textbf{\textit{U}}^{T} \textbf{x}), \tag{1}$$

where $g: \mathbb{R}^{m} \rightarrow \mathbb{R}$ and $\textbf{\textit{U}} \in \mathbb{R}^{d \times m}$, with $m \leq d$. We call the subspace associated with the span of $\textbf{\textit{U}}$ its ridge subspace and $g(\textbf{\textit{U}}^{T} \textbf{x})$ its ridge approximation. Further, we assume that the columns of $\textbf{\textit{U}}$ are orthonormal, i.e. $\textbf{\textit{U}}^T \textbf{\textit{U}} = \textbf{\textit{I}}$. The above definitions imply that the gradient of f is nearly zero along directions that are orthogonal to the subspace of $\textbf{\textit{U}}$. In other words, if we replace $\textbf{x}$ with $\textbf{x} + \textbf{h}$ where $\textbf{\textit{U}}^T \textbf{h} = 0$, then $f(\textbf{x} + \textbf{h}) = g(\textbf{\textit{U}}^T (\textbf{x} + \textbf{h})) = f(\textbf{x})$. Visualising $f_i$ along the coordinates of $\textbf{\textit{U}}^{T} \textbf{x}_{i}$ for all designs i can provide extremely powerful inference; such scatter plots are called sufficient summary plots. To clarify, let $\textbf{u}_i = \textbf{\textit{U}}^{T} \textbf{x}_{i}$ where $\textbf{u} \in \mathbb{R}^{m}$. Let us assume that $m=1$, in which case we can collapse all the $\textbf{x}_{i}$ to $\textbf{u}_{i}$ via $\textbf{\textit{U}}$ into a 1D scatter plot. Here $\textbf{u}_{i}$ values would lie along the horizontal axis, while $f_i$ values would be plotted along the vertical axis. Such a sufficient summary plot can be useful for characterising and understanding the relationship between f and $\textbf{x}$. We offer practical examples of this recipe in the forthcoming section.
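To make the construction of a sufficient summary plot concrete, the following is a minimal Python sketch under stated assumptions: the ridge direction `U`, the profile `g`, and the sample sizes are hypothetical choices made purely for illustration and are not taken from the case study. It builds an exact ridge function, computes the coordinates $\textbf{u}_i = \textbf{\textit{U}}^{T} \textbf{x}_{i}$ for the $m=1$ case, and checks that perturbations $\textbf{h}$ with $\textbf{\textit{U}}^T \textbf{h} = 0$ leave f unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
d, N = 5, 200  # hypothetical dimension and number of designs

# Hypothetical ridge direction U (d x 1) with a unit-norm column, so U^T U = I.
U = rng.standard_normal((d, 1))
U /= np.linalg.norm(U)

def g(u):
    """Hypothetical 1D profile; any scalar function of u would serve."""
    return np.sin(2.0 * u) + 0.5 * u**2

def f(X):
    """Exact ridge function f(x) = g(U^T x), evaluated for each row of X."""
    return g(X @ U).ravel()

# Design of experiment: N samples drawn uniformly from [-1, 1]^d.
X = rng.uniform(-1.0, 1.0, size=(N, d))
f_vals = f(X)

# Horizontal-axis coordinates of the sufficient summary plot: u_i = U^T x_i.
u_vals = (X @ U).ravel()

# Perturb every design by a vector h that is orthogonal to the ridge subspace.
H = rng.standard_normal((N, d))
H -= (H @ U) @ U.T  # remove the component of each row along U

# f is unchanged because U^T (x + h) = U^T x.
assert np.allclose(f(X + H), f_vals)
```

Plotting `u_vals` against `f_vals` (for example with a scatter plot) would then trace out the one-dimensional profile `g`, which is what makes such a plot informative when the ridge structure holds.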

2.1 Techniques for dimension reduction

Techniques for estimating $\textbf{\textit{U}}$ build on ideas from sufficient dimension reduction(Reference Cook6) and more recent works such as active subspaces(Reference Constantine5) and polynomial(Reference Hokanson and Constantine8,Reference Constantine, Eftekhari, Hokanson and Ward9) and Gaussian(Reference Seshadri, Yuchi and Parks10) ridge approximations. While our work in this paper is invariant to the specific parameter-space dimension reduction technique utilised, we briefly detail a few ideas within ridge approximation. Our high-level objective is to solve the optimisation problem

$$\underset{\textbf{\textit{U}}, \, \alpha}{\text{minimise}} \; \left\| f(\textbf{x}) - g_{\alpha}(\textbf{\textit{U}}^{T} \textbf{x}) \right\|_{2}^{2} \tag{2}$$

over the space of matrix manifolds $\textbf{\textit{U}}$ and the coefficients (or hyperparameters) $\alpha$ associated with the parametric function g. This is a challenging optimisation problem and it is not convex. In Seshadri et al(Reference Seshadri, Yuchi and Parks10) the authors assume that g is the posterior mean of a Gaussian process (GP) and iteratively solve for the hyperparameters associated with the GP, whilst optimising $\textbf{\textit{U}}$ using a conjugate gradient optimiser on the Stiefel manifold (see Absil et al(Reference Absil, Mahony and Sepulchre11)). In Constantine et al(Reference Constantine, Eftekhari, Hokanson and Ward9) the authors set g to be a polynomial and iteratively solve for its coefficients, using standard least squares regression, whilst optimising over the Grassmann manifold to estimate the subspace $\textbf{\textit{U}}$. It should be noted that these techniques are motivated by the need to break the curse of dimensionality. In other words, one would like to estimate both g and $\textbf{\textit{U}}$ for a d-dimensional, scalar-valued function f without requiring a large number of computational simulations.

The dimension reduction strategy we pursue in this paper is based on active subspaces(Reference Constantine5), a computational heuristic tailored for identifying subspaces that can be used for the approximation in (2). Broadly speaking, active subspaces requires the approximation of a covariance matrix $\textbf{\textit{C}} \in \mathbb{R}^{d \times d}$

$$\textbf{\textit{C}} = \int_{\mathcal{X}} \nabla f \!\left(\textbf{x} \right) \nabla f \!\left(\textbf{x} \right)^{T} \boldsymbol{\rho} \!\left(\textbf{x} \right) d\textbf{x}, \tag{3}$$

where $\nabla f \!\left(\textbf{x} \right)$ represents the gradient of the function f and $\boldsymbol{\rho}$ is the probability density function that characterises the input parameter space $\mathcal{X} \subset \mathbb{R}^{d}$. The matrix $\textbf{\textit{C}}$ is symmetric positive semi-definite and as a result it admits the eigenvalue decomposition

$$\textbf{\textit{C}} = \textbf{\textit{W}} \boldsymbol{\Lambda} \textbf{\textit{W}}^{T}, \qquad \textbf{\textit{W}} = \left[ \textbf{\textit{W}}_{1} \;\; \textbf{\textit{W}}_{2} \right], \tag{4}$$

where the first m eigenvectors $\textbf{\textit{W}}_{1} \in \mathbb{R}^{d \times m}$, with $m \ll d$ selected based on the decay of the eigenvalues $\boldsymbol{\Lambda}$, are on average directions along which the function varies more, compared to the directions given by the remaining $\!\left(d-m \right)$ eigenvectors $\textbf{\textit{W}}_{2}$. Readers will note that the notion of computing eigenvalues and eigenvectors of an assembled covariance matrix is analogous to principal components analysis (PCA). However, in (3) our covariance matrix is based on the average outer product of the gradient, while in PCA it is simply the average outer product of samples, i.e. $\textbf{x} \textbf{x}^{T}$. Now, once the subspace $\textbf{\textit{W}}_{1}$ has been identified, one can approximate f via

$$f(\textbf{x}) \approx g(\textbf{\textit{W}}_{1}^{T} \textbf{x}), \tag{5}$$

in other words we project individual samples $\textbf{x}_{i}$ onto the subspace $\textbf{\textit{W}}_{1}$. Moreover, as the function (on average) is relatively flat along directions $\textbf{\textit{W}}_{2}$, we can approximate f using the directions encoded in $\textbf{\textit{W}}_{1}$.
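As an illustration of the active subspace computation, the sketch below assumes that gradient samples are available and replaces the integral defining the covariance matrix with a simple Monte Carlo average over uniformly drawn samples; this sampling scheme, the test function, and the helper name `active_subspace` are assumptions made for illustration, not the procedure used in the cited work. The reduced dimension `m` is passed in by hand, as it would be after inspecting the eigenvalue decay.

```python
import numpy as np

def active_subspace(grads, m):
    """Split the eigenvectors of the gradient covariance into W1 and W2.

    grads : (N, d) array whose rows are gradient samples of f.
    m     : number of leading eigenvectors to keep (chosen by the user).
    """
    n_samples = grads.shape[0]
    # Monte Carlo estimate of C: the average outer product of the gradient.
    C = grads.T @ grads / n_samples
    # eigh returns eigenvalues in ascending order; reverse to descending.
    eigvals, eigvecs = np.linalg.eigh(C)
    order = np.argsort(eigvals)[::-1]
    eigvals, W = eigvals[order], eigvecs[:, order]
    return eigvals, W[:, :m], W[:, m:]

# Hypothetical test function f(x) = exp(0.3 a^T x), which varies only along a.
rng = np.random.default_rng(1)
d, N = 6, 500
a = np.array([3.0, -1.0, 0.0, 2.0, 0.0, 0.5])
X = rng.uniform(-1.0, 1.0, size=(N, d))
# Analytical gradient: grad f(x) = 0.3 * exp(0.3 a^T x) * a.
grads = 0.3 * np.exp(0.3 * (X @ a))[:, None] * a[None, :]

eigvals, W1, W2 = active_subspace(grads, m=1)
# For this test function W1 is parallel to a (up to sign), and the
# remaining eigenvalues are zero up to round-off.
```

For a function with this structure a single dominant eigenvalue is expected; for a real simulation the decay is typically less clean and the choice of `m` becomes a judgement call.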

But how do we compute (3), as for a given f we may not necessarily have access to its gradients? In Ref. (Reference Seshadri, Shahpar, Constantine, Parks and Adams12), the authors construct a global quadratic model to a 3D Reynolds Averaged Navier Stokes (RANS) simulation of a turbomachinery blade and then analytically estimate its gradients. We detail their strategy below as we adopt the same technique for facilitating parameter-space dimension reduction.

Assume we have N input-output pairs $\left\{\textbf{x}_{i}, f_{i} \right\}_{i=1}^{N}$ obtained by running a suitable design of experiment (see Ref. (Reference Pukelsheim13)) within our parameter space. We assume that the samples $\textbf{x}_{i} \in \mathbb{R}^{d}$ are independent and identically distributed and that they admit a joint distribution given by $\rho \!\left(\textbf{x} \right)$. Here we will assume that $\rho \!\left(\textbf{x} \right)$ is uniform over the hypercube $\mathcal{X} = [-1, 1]^{d}$. We fit a global quadratic model to the data,

$$f(\textbf{x}) \approx \frac{1}{2} \textbf{x}^{T} \textbf{\textit{A}} \textbf{x} + \textbf{c}^{T} \textbf{x} + d, \tag{6}$$

using least squares. This yields values for the coefficients $\textbf{\textit{A}}, \textbf{c}$ and the constant d. Then, we estimate the covariance matrix in (3) using

$$\hat{\textbf{\textit{C}}} = \int_{\mathcal{X}} \left( \textbf{\textit{A}} \textbf{x} + \textbf{c} \right) \left( \textbf{\textit{A}} \textbf{x} + \textbf{c} \right)^{T} \rho \!\left(\textbf{x} \right) d\textbf{x}. \tag{7}$$

Following the computation of the eigenvectors of $\hat{\textbf{\textit{C}}}$, one can then generate sufficient summary plots that are useful for subsequent inference and approximation.
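The quadratic recipe can be sketched in a few lines of Python. The code below is an illustrative sketch rather than the authors' implementation: it assumes the quadratic model takes the form $\frac{1}{2} \textbf{x}^{T} \textbf{\textit{A}} \textbf{x} + \textbf{c}^{T} \textbf{x} + d$ with symmetric $\textbf{\textit{A}}$, so that its gradient is $\textbf{\textit{A}} \textbf{x} + \textbf{c}$, and it uses the fact that for a uniform density on $[-1, 1]^{d}$ the mean of $\textbf{x}$ is zero and the mean of $\textbf{x} \textbf{x}^{T}$ is $\textbf{\textit{I}}/3$, which gives the closed form $\hat{\textbf{\textit{C}}} = \frac{1}{3} \textbf{\textit{A}} \textbf{\textit{A}}^{T} + \textbf{c} \textbf{c}^{T}$. The function names and the synthetic data are hypothetical.

```python
import numpy as np

def fit_quadratic(X, f_vals):
    """Least-squares fit of f(x) ~ 0.5 x^T A x + c^T x + d0, with A symmetric."""
    n_samples, d = X.shape
    iu, ju = np.triu_indices(d)                 # index pairs with i <= j
    quad = X[:, iu] * X[:, ju]                  # monomials x_i * x_j
    V = np.hstack([quad, X, np.ones((n_samples, 1))])
    coeffs, *_ = np.linalg.lstsq(V, f_vals, rcond=None)
    n_quad = iu.size
    A = np.zeros((d, d))
    A[iu, ju] = coeffs[:n_quad]
    # Off-diagonal: A_ij = A_ji = coefficient; diagonal: A_ii = 2 * coefficient.
    A = A + A.T
    c = coeffs[n_quad:n_quad + d]
    d0 = coeffs[-1]
    return A, c, d0

def quadratic_covariance(A, c):
    """Closed form of the gradient covariance for rho uniform on [-1, 1]^d."""
    return A @ A.T / 3.0 + np.outer(c, c)

# Synthetic, noise-free data from a known quadratic (hypothetical values).
rng = np.random.default_rng(2)
d, N = 4, 200
B = rng.standard_normal((d, d))
A_true = B + B.T
c_true = rng.standard_normal(d)
X = rng.uniform(-1.0, 1.0, size=(N, d))
f_vals = 0.5 * np.einsum("ni,ij,nj->n", X, A_true, X) + X @ c_true + 1.5

A, c, d0 = fit_quadratic(X, f_vals)
C_hat = quadratic_covariance(A, c)

# Eigenvectors in descending eigenvalue order; keep the first two directions.
eigvals, W = np.linalg.eigh(C_hat)
eigvals, W = eigvals[::-1], W[:, ::-1]
coords = X @ W[:, :2]  # horizontal-axis coordinates of a 2D summary plot
```

A quadratic in d variables has $d(d+1)/2 + d + 1$ coefficients, so the least-squares problem is only well posed when N is at least that large; for $d = 25$ this amounts to 351 coefficients.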

We apply this quadratic recipe and show the sufficient summary plots for a 3D turbomachinery blade in Section 3, both in a standard desktop environment and in virtual reality. This comparison—the central objective of this paper—is motivated by the need to explore the gains VR technologies can afford in aerospace design. That said, prior to delving into our chosen case study, an overview of existing immersive visual technologies and their associated frameworks is in order.

3.0 SUPPORTING AEROSPACE DESIGN IN VR

Visual analytics (VA), a phrase first coined by Thomas et al(Reference Thomas and Cook14), has two ingredients: (1) an interactive visual interface(Reference Thomas and Cook14); and (2) analytical reasoning(Reference Thomas and Cook14). Recent advances and breakthroughs in the development of VR and augmented reality (AR) have spun off another branch of research known as Immersive Analytics(Reference Chandler, Cordeil, Czauderna, Dwyer, Glowacki, Goncu, Klapperstueck, Klein, Marriott, Schreiber and Wilson15) (IA). IA seeks to understand how the latest wave of immersive technologies can be leveraged to create more compelling, more intuitive and more effective visual analytics frameworks. There are still numerous hurdles to overcome before VR-based data analytics tools become widespread. Issues associated with any type of 3D interface, for example potential occlusion effects(Reference Shneiderman16), high computing power demands and specialised (often costly) hardware, have to be resolved, or at least minimised. Moreover, interaction techniques have to facilitate a user’s understanding of the visualisation and avoid becoming a distraction. Finally, certain guidelines have to be incorporated to mitigate the risk of simulation sickness(Reference Kennedy, Lane, Berbaum and Lilienthal17,Reference Ruddle18) symptoms that can manifest during or immediately after the use of a VR headset.

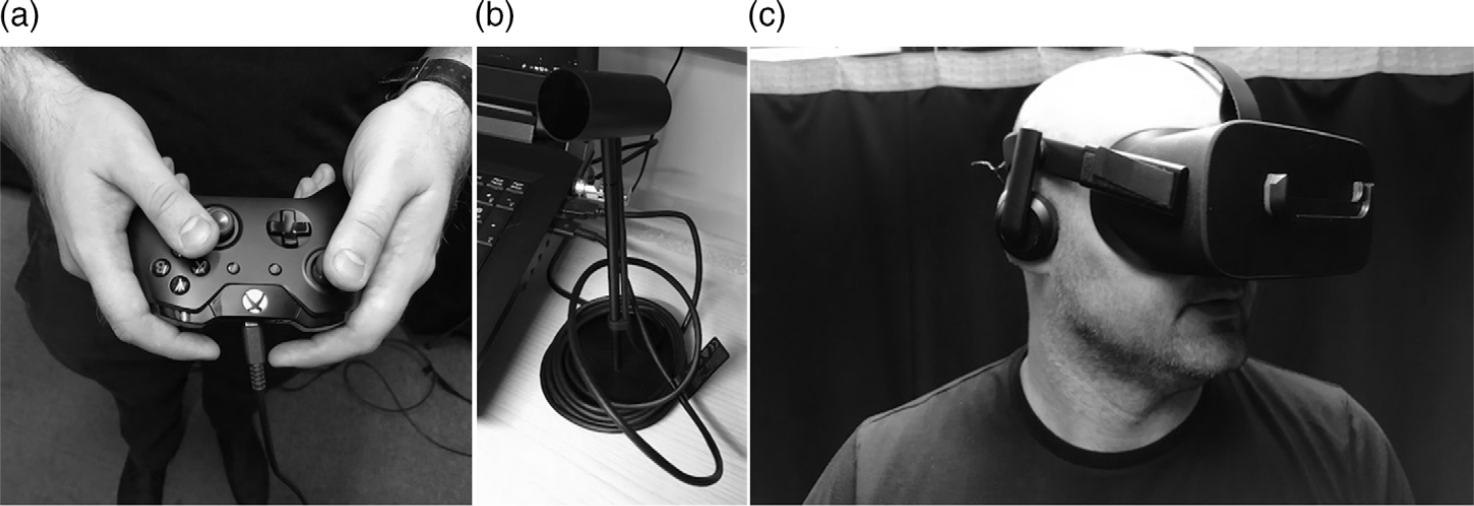

Many interaction techniques and devices have been conceived that can be used separately or in combination for interaction and control of a VR environment. In this paper we use the standard off-the-shelf Xbox(19) controller shown in Fig. 1(a) that comes prepackaged with the Oculus Rift(Reference Oculus2) bundle (see Fig. 1(b) and (c)). This controller style has achieved very high adoption in the gaming industry; its design is ergonomic and easy to learn. As one example of wider adoption, the US Navy recently adopted the use of an Xbox controller to operate the periscope on nuclear-powered submarines. Footnote *

Figure 1. Hardware interfaces in VR: (a) Xbox controller; (b) Oculus Rift’s motion sensor; (c) Oculus Rift VR headset.

Here, we present a VR aerospace design environment with a focus on dimension reduction. One of the earliest examples of research into using VR for aerospace design can be found in Hale(Reference Hale20). García-Hernández et al(Reference García-Hernández, Anthes, Wiedemann and Kranzlmüller4) pointed out that VR technology is starting to gain ground in aerospace design and listed a range of aerospace research topics in which VR already is, or can be, successfully applied. These include, among others, spacecraft design optimisation (e.g. Mizell(Reference Mizell21) discusses the use of VR and AR in aircraft design and manufacturing whereas Stump et al(Reference Stump, Yukish, Simpson and O’Hara22) used IA to aid a satellite design process) and aerodynamic design, in which 3D scatter plots are already in use (see Jeong et al(Reference Jeong, Chiba and Obayashi23)). Other applications include the use of haptic feedback (e.g. Savall et al(Reference Savall, Borro and Matey24) describe the REVIMA system for maintainability simulation and Sagardia et al(Reference Sagardia, Hertkorn, Hulin, Wolff, Hummell, Dodiya and Gerndt25) present a VR-based system for on-orbit servicing simulation), collaborative environments (e.g. Roberts et al(Reference Roberts, Garcia, Dodiya, Wolff, Fairchild and Fernando26) introduce an environment for space operation and science whereas Clergeaud et al(Reference Clergeaud, Guillaume and Guitton27) discuss the implementation of IA tools used in the aerospace context with Airbus Group), aerospace simulation (e.g. Stone et al(Reference Stone, Panfilov and Shukshunov28) discuss the evolution of aerospace simulation that uses immersive technologies), telemetry and sensor data visualisation (e.g. see Wright et al(Reference Wright, Hartman and Cooper29), Lecakes et al(Reference Lecakes, Russell, Mandayam, Morris and Schmalzel30) or Russell et al(Reference Russell, Lecakes, Mandayam, Morris, Turowski and Schmalzel31)), or planetary exploration (e.g. see Wright et al(Reference Wright, Hartman and Cooper29)). García-Hernández et al(Reference García-Hernández, Anthes, Wiedemann and Kranzlmüller4) suggest that three elements are especially promising for a VR-based approach: (1) integration of multiple 2D graphs for 3D data(Reference García-Hernández, Anthes, Wiedemann and Kranzlmüller4); (2) 3D parallel coordinates(Reference García-Hernández, Anthes, Wiedemann and Kranzlmüller4) (see Tadeja et al(Reference Tadeja, Kipouros and Kristensson32,Reference Tadeja, Kipouros and Kristensson33)); and (3) visualisation of complex graphs(Reference García-Hernández, Anthes, Wiedemann and Kranzlmüller4). In this paper, we loosely follow (1), but with a key difference: we use subspace-based dimension reduction to generate the 3D graphs for high-dimensional data.

3.1 Applications in design

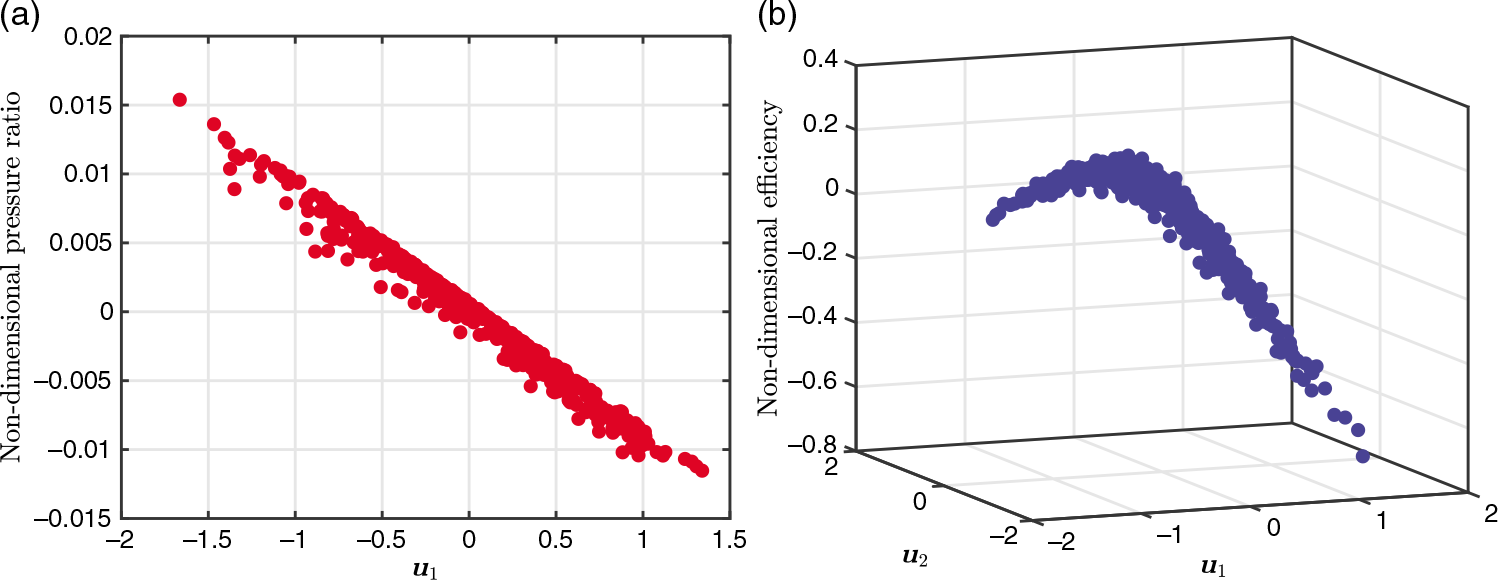

Our dimension reduction results and case study are based on the work undertaken in Seshadri et al(Reference Seshadri, Shahpar, Constantine, Parks and Adams12). Here the authors study the 25D design space of a fan blade using the quadratic active subspaces recipe detailed in Section 2. Towards this end, we used a design of experiment with $N=548$ 3D RANS computations with different designs; the design space used in this study included five degrees of freedom specified at five spanwise locations. These degrees of freedom comprised an axial displacement, a tangential displacement, a rotation about the blade’s centroidal axis, leading edge recambering and trailing edge recambering, specified at 0, 25, 50, 75 and $100 \%$ span. Thus, we obtained values of the efficiency and pressure ratio for each design vector $\textbf{x}_{i}$. These are two important output quantities of interest in the design of a blade. By studying the eigenvalues and eigenvectors of the covariance matrix for these two objectives the authors were able to discover a 1D ridge approximation for the pressure ratio of a fan and a 2D ridge approximation for the efficiency. These sufficient summary plots are shown in Fig. 2. There are a few important remarks to make regarding these plots.

Figure 2. Sufficient summary plots of (a) pressure ratios; (b) efficiency, for a range of different computational designs for a turbomachinery blade, obtained from a design of experiment study. Based on work in Seshadri et al(Reference Seshadri, Shahpar, Constantine, Parks and Adams12).

For the pressure ratio sufficient summary plot, shown in Fig. 2(a), the horizontal axis is the first eigenvector of the covariance matrix associated with the pressure ratio, $\textbf{u}_{1}$. For the efficiency sufficient summary plot, shown in Fig. 2(b), the two horizontal axes are the first two eigenvectors of the covariance matrix associated with the efficiency $[\textbf{u}_{1}, \textbf{u}_{2}]$. It is important to note that the subspaces associated with efficiency and pressure ratio are distinct.

The sufficient summary plots above permit us to identify and visualise low-dimensional structure in the high-dimensional data. More specifically, these plots can be used in the design process as they permit engineers to make the following inquiries:

• What linear combination of design variables is the most important for increasing/decreasing the pressure ratio?

• How do we increase the efficiency?

• What are the characteristics of designs that satisfy a certain pressure ratio?

• What are the characteristics of designs that satisfy the same efficiency?

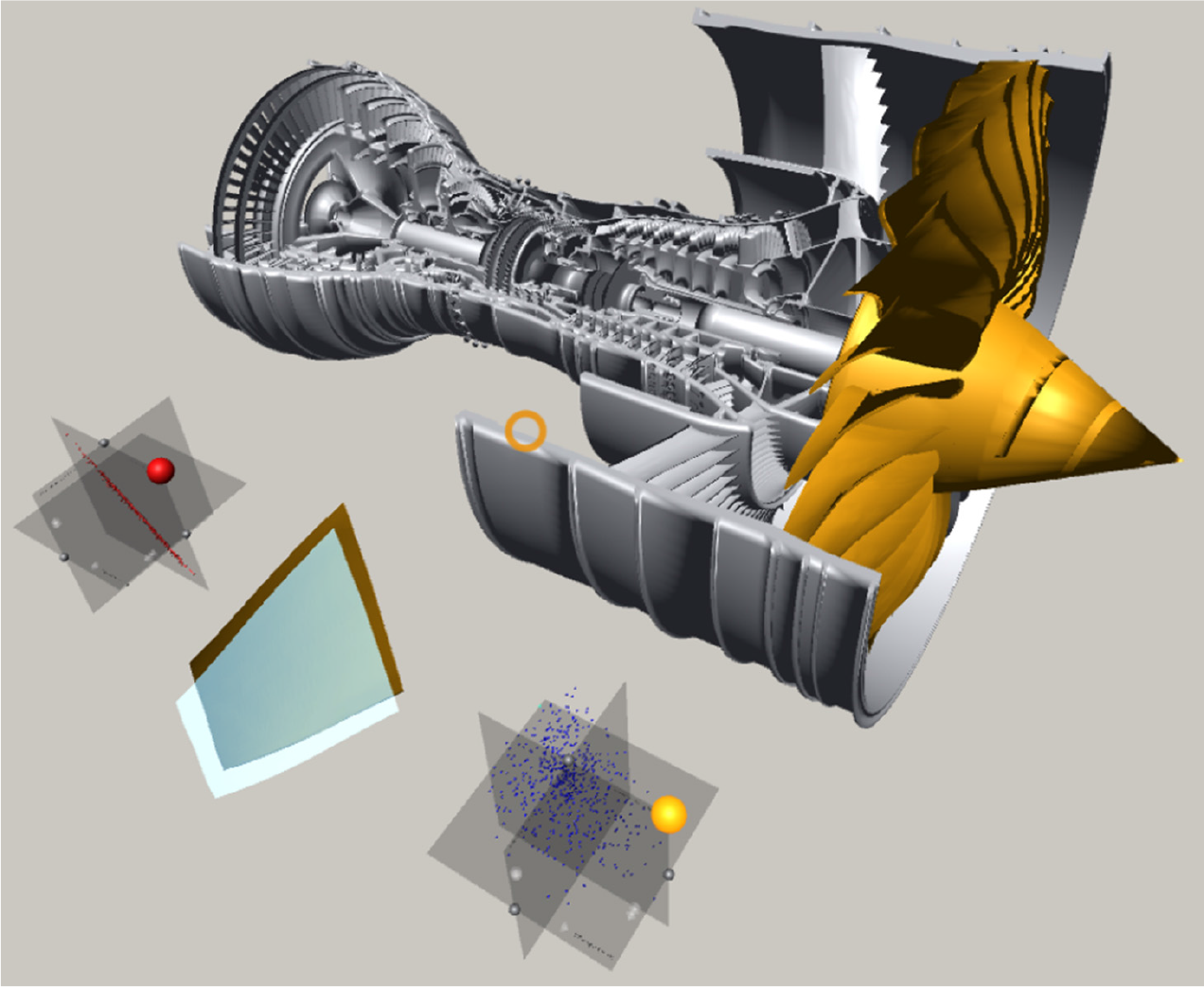

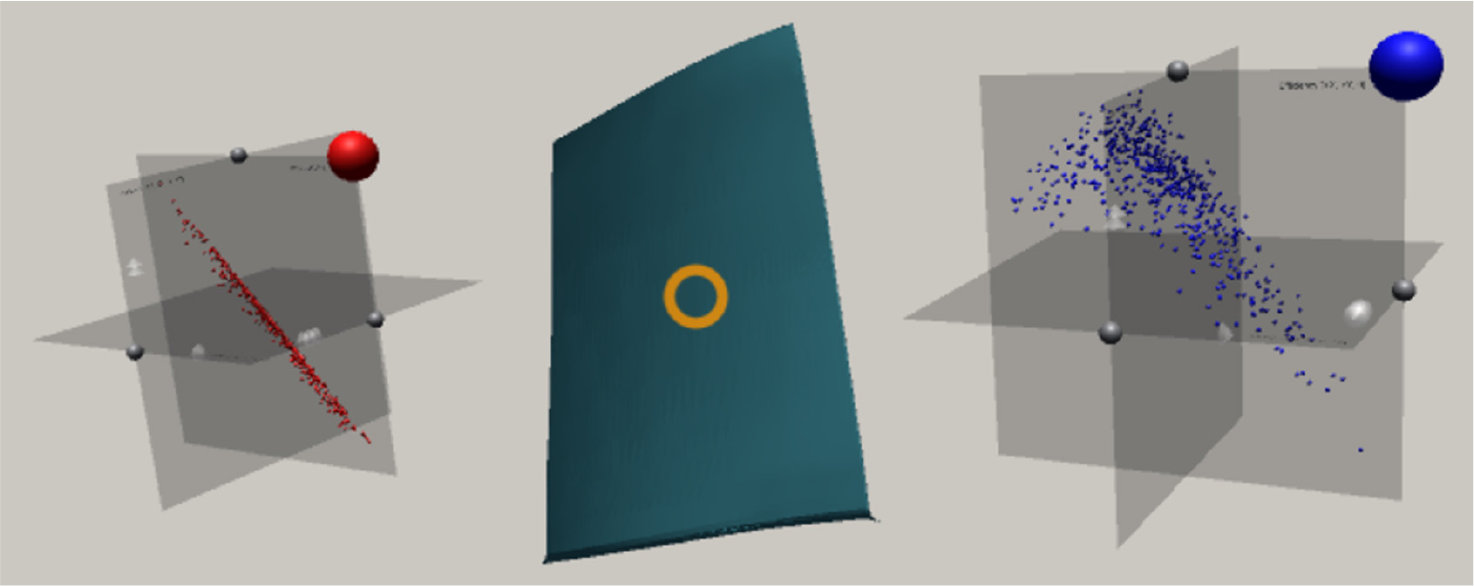

We use these sufficient summary plots in a bespoke VR environment (see Fig. 3). Our high-level objective is to ascertain whether it is possible to leverage tools in VR in conjunction with parameter-space dimension reduction to facilitate better design decision-making and inference. To achieve this goal, we seamlessly integrate the aforementioned sufficient summary plots with the 3D geometric design of the blade, i.e.

In other words, as the user selects a different design—by selecting a suitable level of performance from the sufficient summary plots—they visualise the geometry of the blade that yields that performance. Moreover, they should be able to compare this geometry with that of the nominal design. We clarify and make precise these notions in the forthcoming subsections.

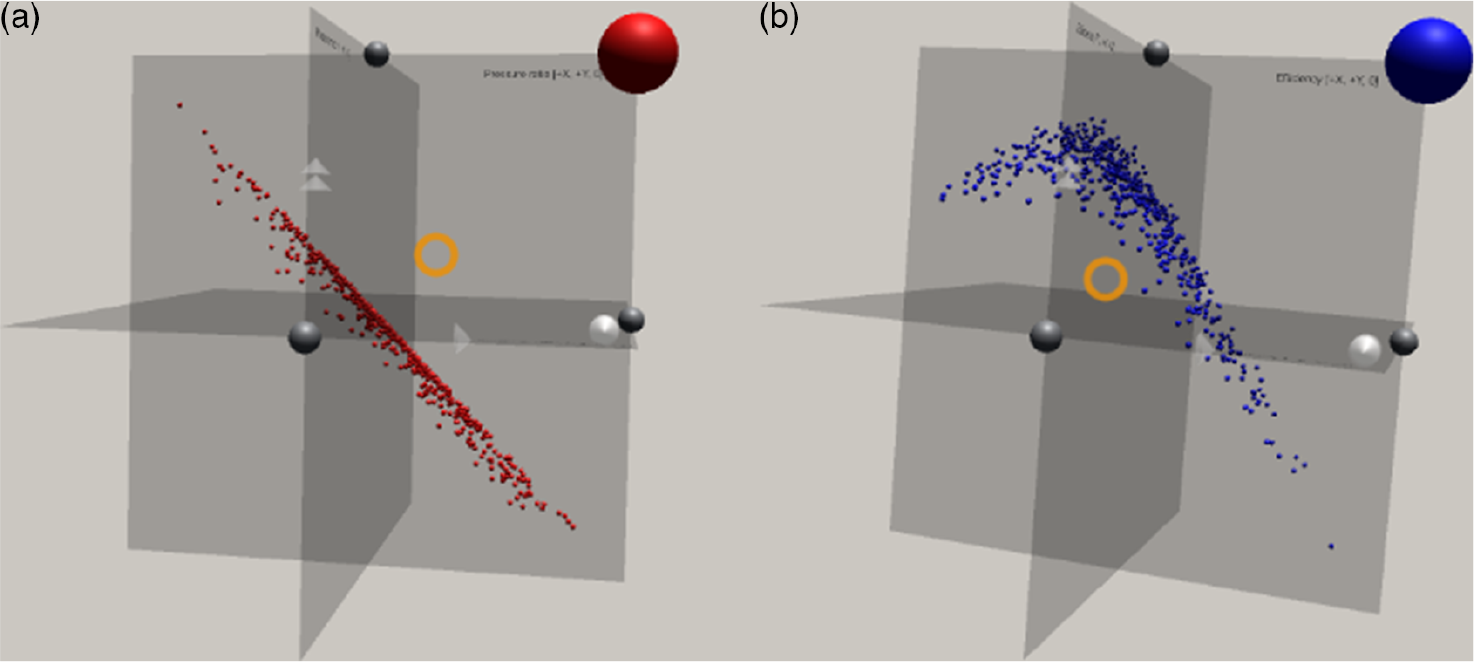

Figure 3. Sufficient summary plots of (a) pressure ratios; (b) efficiency presented in Fig. 2 as seen by the user in the AeroVR environment. Based on work in Seshadri et al(Reference Seshadri, Shahpar, Constantine, Parks and Adams12).

3.2 Function structures

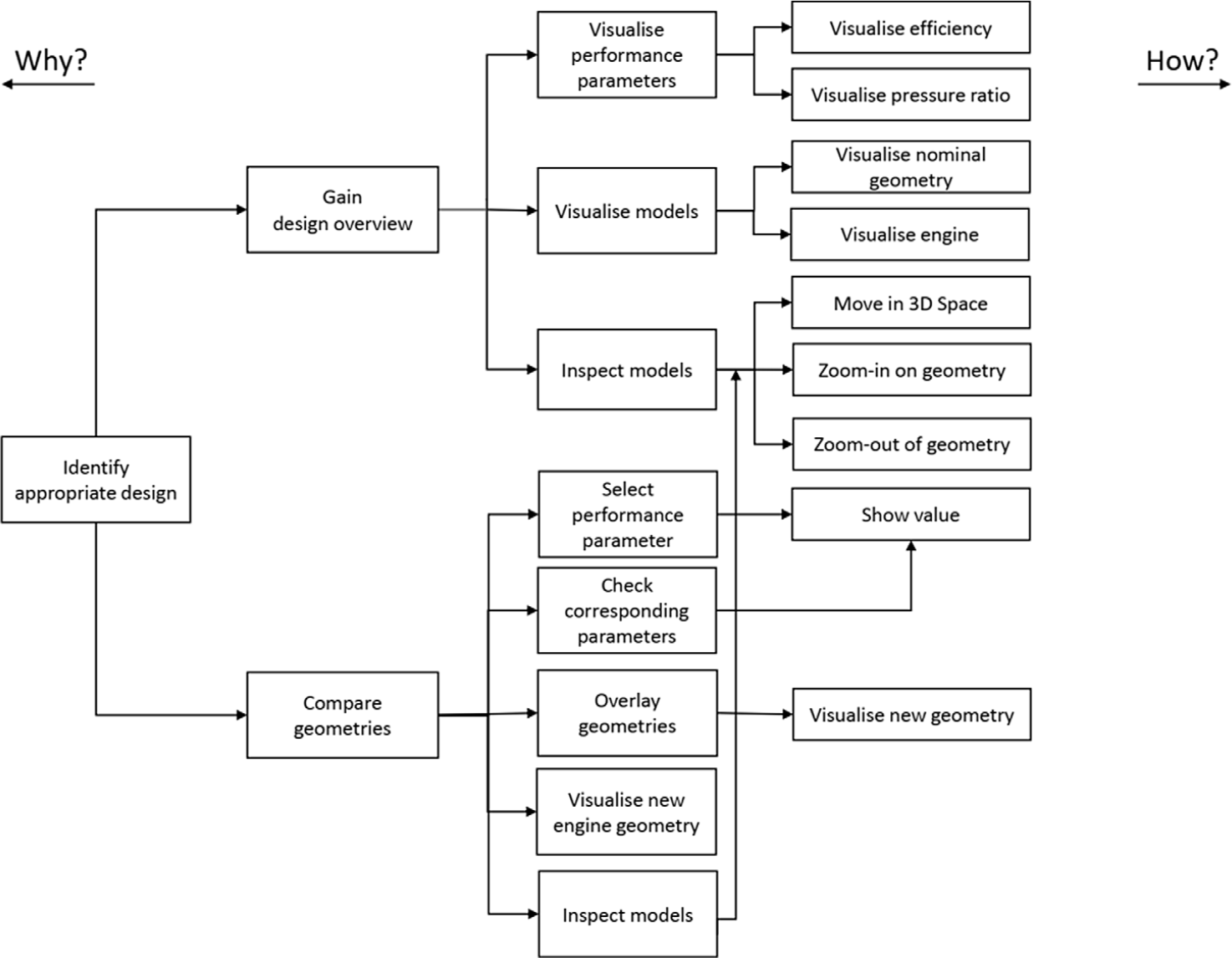

We model the function structures of the system using the Function Analysis System Technique(Reference Shefelbine, Clarkson, Farmer and Eason34) (FAST). Figure 5 shows the function structures of the VR visualisation environment for an aerospace design workflow with dimension reduction. The FAST diagram in Fig. 5 models the level of abstraction on the horizontal axis and the function sequence on the vertical axis.

Figure 4. The user’s field-of-view: The right-hand plot can, for example, show the lift coefficients whereas the left-hand plot can contain the drag coefficient values. The nominal blade geometry is visualised in the middle between the two plots. The orange circle is a cross-hair indicating where the user is currently looking.

Figure 5. A functional model of the interactive system.

3.3 System structure

We model the system’s internal structure by observing the internal flow of signals between the individual system elements. The visualisation consists of four distinguishable parts: (a) the user, who is responsible for all the actions of the system once the data have been loaded and visualised, (b) efficiency and pressure-ratio 3D scatter plots, (c) blade model, and (d) engine geometry model. The signals are usually bi-directional and can introduce a chaining effect. For instance, when the user gazes over an interactive object, which is internally facilitated by ray-tracing, the object highlights itself, that is, the user receives a return feedback signal in the form of a visual cue. Moreover, selection of a data point on the scatter plot, through an implementation of the linking & brushing interaction technique, leads to a selection of the mapped data point on the other scatter plot and visualisation of a new geometry overlapping with the nominal shape. The signal flow analysis is presented in Fig. 6. The main signal flows are decomposed into:

(a) The user: The user interacts with the system using a combination of gaze-tracking and ray-tracing. This works as follows. Gaze-tracking is achieved with the help of a cross-hair in the middle of the user’s field of view, placed a certain, fixed distance along the camera’s forward direction. Rays extending from the cross-hair are constantly checked for intersection with other interactive objects, i.e. data points on the scatter plots. If such an intersection occurs, the object automatically highlights, providing a signal to the user that it can be interacted with. The way in which the user directly receives signals from other parts of the visualisation is unidirectional, that is, a user’s action results in a visual response. The user interacts with other objects through this gaze-driven cross-hair (shown in orange, see Figs 6 and 8) as well as through actions invoked with a tap of a button (see Fig. 1).

Figure 6. The diagram shows how the signals are flowing within the system between its four main components: (a) the user grouped together with a controller used for user input; (b) a set of performance parameters visualised as the 3D scatter plots, in this case, efficiency and pressure-ratio 3D scatter plots; (c) blade geometry model; and (d) complete engine geometry model.

Figure 7. The Xbox controller: (a) shows the top view with the left-hand joystick [J] used to control the 2D movement on the $\textit{X}\text{-}\textit{Z}$ plane whereas (b) shows the front view with the two triggers [T] responsible for vertical movement along the Y axis. The other action buttons indicated in (a) have the following meanings: [R] for reload of the visualisation; [L] for loading next dataset; [A] for selection of an interactive item; and [X] for moving or rotating the scatter plots.

Figure 8. By selecting any data point on any plot, the user can immediately observe the blade’s geometry associated with this particular design. Moreover, the user can observe and compare the differences between the nominal and perturbed geometries as the former is kept rendered as a semi-transparent shape overlaying the latter. As users can freely manoeuver in 3D space they can visually inspect the entire blade from any direction and zoom in on any of its parts, as shown in (a–f).

Figure 9. The entire visualisation as it is seen by the user with the complete engine model in the back and the two 3D scatter plots and the nominal blade geometry (in blue) in front. The hub with a series of blades is also shown (in blue).

Figure 10. The same view as in Fig. 9 with a single data point selected on one of the scatter plots (the one on the bottom-right). The nominal blade geometry was rendered as semi-transparent shape with a new geometry superimposed on top of it. The engine hub with a new series of blades is also shown (in tan).

(b) 3D scatter plots: The scatter plots receive signals from the user through a mixture of gaze-tracking and ray-tracing inputs combined with the tap of a button on the controller. This is reflected back to the user by, for example, highlighting scatter plot elements, such as data points or movement selectors, while they are being gazed at, or changing their color after selection. In turn, this action invokes unidirectional changes in the visualised blade geometry and the turbofan engine.

(c) Blade geometry model: The blade geometry visualisation receives signals from both scatter plots by the user performing a selection of a data point on any of the plots, which automatically visualises the new blade geometry. Moreover, even though the user cannot directly influence the geometry, by using the movement and manoeuvering techniques in the system, the user can inspect the geometry by zooming in on its internal and external surfaces. Hence the relation between the scatter plots and the blade is unidirectional, whereas the relation between the user and the blade model is bidirectional. Furthermore, once the new blade has been visualised, the visualisation of the hub with blades in the engine model simultaneously changes as well. This can be thought of as another unidirectional relation as it cannot happen the other way around.

(d) Engine geometry model: Once the new geometry shape is selected by the user, the blade row with the series of blades embedded in the engine model is automatically replaced. This change is immediately visible to the user providing visual feedback.
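The gaze-based picking described in (a) can be illustrated with a standard ray-sphere intersection test. The following is a minimal sketch, not the AeroVR implementation: the function names, the marker radius and the use of plain tuples are assumptions made for illustration only.

```python
import math


def ray_hits_sphere(origin, direction, centre, radius):
    """Return the distance along the ray to the first hit, or None on a miss."""
    # Normalise the gaze direction so the returned value is a true distance.
    norm = math.sqrt(sum(d * d for d in direction))
    d = [c / norm for c in direction]
    # Vector from the ray origin (the camera) to the sphere centre.
    oc = [c - o for c, o in zip(centre, origin)]
    # Length of the projection of that vector onto the ray.
    t_mid = sum(a * b for a, b in zip(oc, d))
    # Squared distance from the sphere centre to the ray line.
    dist_sq = sum(a * a for a in oc) - t_mid * t_mid
    if dist_sq > radius * radius:
        return None
    half_chord = math.sqrt(radius * radius - dist_sq)
    t_near, t_far = t_mid - half_chord, t_mid + half_chord
    if t_far < 0:
        return None  # the sphere lies entirely behind the viewer
    return t_near if t_near >= 0 else t_far


def pick_gazed_point(origin, direction, points, radius=0.05):
    """Return the index of the closest marker under the cross-hair, or None.

    The radius is a hypothetical marker size, not a value from the paper.
    """
    best_index, best_t = None, math.inf
    for i, centre in enumerate(points):
        t = ray_hits_sphere(origin, direction, centre, radius)
        if t is not None and t < best_t:
            best_index, best_t = i, t
    return best_index
```

Returning only the nearest hit means that a marker hidden behind another one is not highlighted, which is one plausible way of keeping the highlighted object unambiguous.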

3.4 Task analysis

From the limitations imposed by the current state and understanding of the VR environment and from our own analysis of the system achieved by the FAST analysis (see Fig. 4) we identified two primary, high-level tasks:

T1—Gaining design overview: The system should permit the user to easily gain an overview of the entire design space, i.e. the possible blade geometries together with their associated performance parameters.

T2—Compare geometries: The system should permit the user to easily compare the nominal blade geometry with the one associated with a particular set of performance parameters.

These two main tasks (i.e. T1 and T2) were supported and augmented by a number of low-level tasks:

T3—Movement and interaction: Due to the spatial nature of the 3D immersive workspace provided by the VR environment, this task has a dominant and a supporting role with respect to all the other tasks. Movement, manoeuvring and interaction are achieved through gaze-tracking and with the help of a gamepad controller. All movement facilitated by either the joystick [J] or triggers [T] (see Fig. 7) takes place with respect to the user’s gaze (see the orange cross-hair in Fig. 6). Moreover, the user can interact with an object by gazing at it and tapping a button (see Fig. 7). Zooming in or out on a part of the visualisation is also achievable by the user’s movement in the virtual space.

T4—Visualisation of performance parameters: The performance parameters, such as the efficiency and pressure ratio used in our case, are visualised as interactive 3D scatter plots floating in 3D space. These can be freely moved and rotated about each of the main axes, and they implement the linking & brushing interaction technique, i.e. changes in one scatter plot are simultaneously reflected on the other scatter plot and on the blade and engine visualisations. This task mainly supports T1.

T5—Visualisation of blades and engine models: The nominal blade geometry and associated engine visualisation are immediately visible at the start of the visualisation. Once a new geometry is selected, the nominal blade is rendered semi-transparent and the shape of the new blade is superimposed over it. Moreover, the hub with a row of blades is substituted with the new geometries in the engine model.

T6—Models inspection: The inspection of the changes in the engine visualisation and the blade geometry itself can be made through the T3 task.

3.5 Visualisation framework

The visualisation framework is built using Unity3D—one of the most widely used game engines with built-in VR development support. Both of the two mainstream VR headsets provide supporting packages developed natively for Unity3D, which substantially speeds up the development process. This software is built on top of the Unity VR Samples pack (35) and uses the Oculus Utilities for Unity (Reference Oculus36) package as well as parts of the Unity asset(37). In addition, we use the asset store available for the Unity3D game engine, which contains many VR-ready tools and supporting packages.

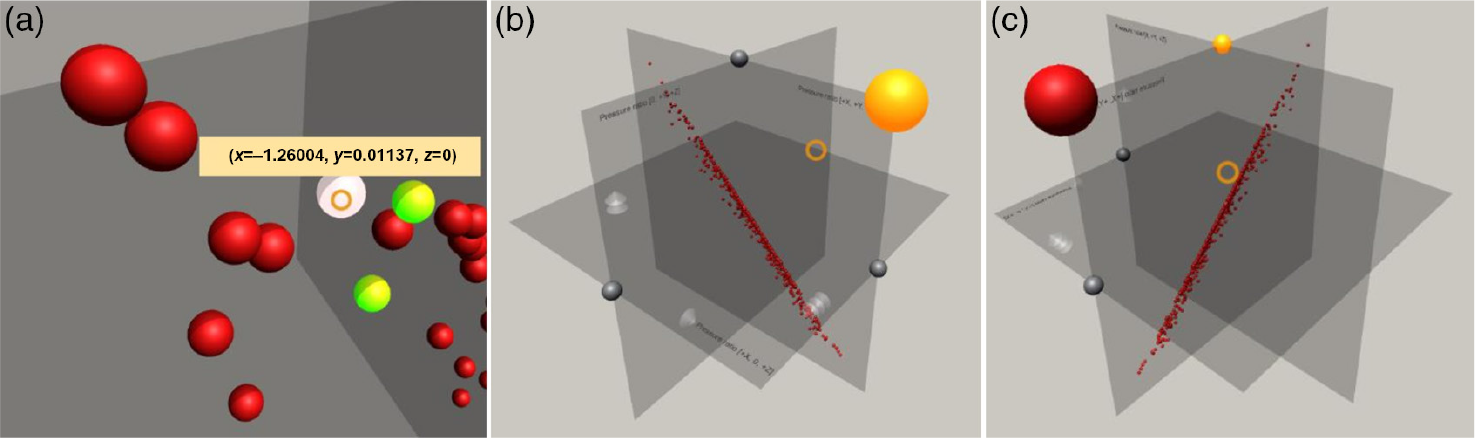

A survey by Wagner et al(Reference Wagner, Blumenstein, Rind, Seidl, Schmiedl, Lammarsch and Aigner38) highlights that game engines “do not support any data exploration”(Reference Wagner, Blumenstein, Rind, Seidl, Schmiedl, Lammarsch and Aigner38) techniques. In other words, these features have to be designed and implemented from scratch. To allow user interaction with data we use an Xbox Controller connected to the laptop via a USB cable (see Fig. 1(a)) in combination with gaze-tracking through a cross-hair which moves with the user’s head and is placed straight ahead of the camera (visualised as an orange cross-hair, see Figs 6, 8 and 11).

Figure 11. The scatter plot of the pressure ratios data (see Figs 2(a) and 3(a)) as it is seen by the user. Figure (a) shows a user’s gaze (orange cross-hair) hovering over a data point which instantly displays the associated values (e.g. its coordinates). Visible, formerly selected points (in green) are reflected on the other scatter plot and the associated geometries are also shown. Figure (b) shows the same plot from a distance. The highlighted spheres (in orange) are the movement selectors: If the user gazes at any point in space and taps the [X] button on the controller the plots will be translated towards that point in space. Figure (c) shows a rotation by $90^{\circ}$ towards the user along the Y-axis with the axis rotation selector highlighted (in orange). Only a single rotation selector can be active at once across all the scatter plots.

3.6 Interaction and movement

The interaction is designed around gaze-tracking in combination with the standard buttons on the Xbox(19) controller (see Fig. 7).

Supported interactions are mapped as follows:

• Left-hand joystick and [T] buttons (see Fig. 7): Triggers movement along the $\textit{X}\text{-}\textit{Z}$ plane and movement along the vertical axis respectively, right-hand [T] is assigned to “up” and left-hand [T] is “down”. The movement in the $\textit{X}\text{-}\textit{Z}$ plane is always with respect to the user’s gaze. This manoeuvring combination permits the user to move in any direction and in any position in 3D space. The user moves with constant velocity and with fluid movement to ensure the user is receiving continuous closed-loop visual feedback on their changing position in relation to the surroundings.

• Action button [A]: Selects an interactive element, such as a scatter plot rotation and movement selector, or a data point (see Fig. 11). Objects highlight themselves when the user’s gaze, as indicated by a cross-hair, is on them. Double-tapping on the [A] button selects the highlighted object.

• Button [X]: When tapped after the selection of a scatter plot point, it will re-position the point to a certain distance towards the user’s present gaze direction. Furthermore, if the rotation selector is active, selecting this button will initiate the scatter plot’s rotation over $90^{\circ}$ based on the current direction of the user’s gaze.

• Button [R]: Resets the visualisation and all its associated elements to their original state.

• Button [L]: This button loads the next dataset: a new set of performance parameters and associated blade geometries.
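The gaze-relative movement mapped to the joystick and triggers can be sketched as a per-frame position update. This is an illustrative sketch under stated assumptions, not the authors’ code: the function name, the yaw convention (rotation about the Y axis with 0 degrees looking along +Z), the frame time and the normalisation used to obtain a constant speed are all hypothetical.

```python
import math


def step_position(position, gaze_yaw_deg, stick_x, stick_y,
                  trigger_up, trigger_down, speed=1.0, dt=1.0 / 60.0):
    """Advance the user's position by one frame.

    stick_x and stick_y are joystick deflections in [-1, 1]; the triggers
    are booleans. All parameter names and defaults are assumptions.
    """
    yaw = math.radians(gaze_yaw_deg)
    # Gaze-relative basis vectors projected onto the X-Z plane.
    forward = (math.sin(yaw), 0.0, math.cos(yaw))
    right = (math.cos(yaw), 0.0, -math.sin(yaw))
    move = [stick_y * f + stick_x * r for f, r in zip(forward, right)]
    # Right-hand trigger moves up, left-hand trigger moves down.
    move[1] = float(trigger_up) - float(trigger_down)
    length = math.sqrt(sum(m * m for m in move))
    if length == 0.0:
        return tuple(position)
    # Normalise so the speed is constant regardless of stick deflection.
    scale = speed * dt / length
    return tuple(p + m * scale for p, m in zip(position, move))
```

Calling this function once per rendered frame gives the continuous, constant-speed motion described above; because the direction is recomputed from the current gaze every frame, turning the head while holding the joystick steers the path.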

3.7 Blade and engine visualisations

As alluded to previously, the central artifact in our VR environment is the geometry of the designs. Our virtual environment contains as many geometries as there are data points, resulting in a total of 548 stereolithography (STL) files. Hence, whenever a data point is selected on one of the plots, the accompanying shape is instantly visualised. To provide the user with a quick and effective way of comparing the new perturbed design with the nominal one, the nominal geometry is kept visible and rendered as a translucent object, as can be seen in Fig. 8. This solution, combined with unlimited movement dexterity, allows the user to visually inspect and observe any differences between the two geometries. Furthermore, by simply changing their position, or by tilting their head (thereby changing the rotational angle), the user can zoom in and out on any of the blade’s parts for a close inspection, as presented in Fig. 8(f).

The visualised engine model(Reference Shakal39) (see Fig. 9) consists of six independent parts including the hub with connected blades. When a new blade geometry is being investigated by the user, the blades visible in the engine are automatically replaced as well. Due to limitations imposed by the CAD model used, it was not possible to substitute the blades individually; thus only the entire hub with the series of attached blades could be replaced as a whole, which is signaled to the user by a change in the color of this entire part (see Fig. 10).

3.8 Sufficient summary plots

The key element in the framework is the sufficient summary plots; they are visualised as three fixed-size, axis-aligned translucent orthogonal rectangles. The data points are scaled so the values of their respective coordinates are within the range of the translucent surfaces. When any of the spheres denoting a data point is selected, the marker lights up and switches to a selection color (light green). Moreover, as we have a 1:1 mapping between the plots, the corresponding design on the other plot is selected. Furthermore, a number of semitransparent cones† were embedded into these sufficient summary plots to denote their axes: one for the X-axis, two for the Y-axis and three for the Z-axis. Choosing a shape with a pointed tip has an additional advantage: its orientation informs the user of the positive side of a given axis. Each selection can be reverted by double-tapping the [A] button while gazing over it.
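The scaling of data points to the extent of the translucent surfaces can be illustrated with a per-axis min-max normalisation. The text does not state which scaling is used, so the linear mapping below, the function names and the `plot_size` parameter are assumptions for illustration.

```python
def scale_to_plot(values, plot_size=1.0):
    """Map raw values linearly onto [0, plot_size] along one axis."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Degenerate axis: place every point in the middle of the range.
        return [plot_size / 2.0 for _ in values]
    return [(v - lo) / (hi - lo) * plot_size for v in values]


def scale_points(points, plot_size=1.0):
    """Scale a list of (x, y, z) tuples axis by axis."""
    columns = [scale_to_plot(list(axis), plot_size) for axis in zip(*points)]
    return list(zip(*columns))
```

Scaling each axis independently keeps every marker inside the fixed-size plot, at the cost of hiding differences in magnitude between axes, which is why the axis labels and on-demand values remain important.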

The 3D spheres in the plots were used to denote both the data points and various selectors’ markers. Using shape perception has a long-standing application history for VR-based visualisations. For instance, Ribarsky et al(Reference Ribarsky, Bolter and Van Teylingen40) used simple 3D shapes such as cones, spheres and cuboids in their system. They also highlight that glyphs with their intrinsic characteristics, such as “position, shape, color, orientation and so forth” (Reference Ribarsky, Bolter and Van Teylingen40) are very useful when visualising complex datasets.

3.8.1 Initial placement and re-positioning

The two scatter plots—one for pressure ratio and another for efficiency—are automatically positioned on both sides of the blade, which in turn is positioned in front of the initial user’s field of view; see Fig. 6. The plots are placed at the same, pre-configured distance from the user, at a roughly $45^{\circ}$ angle from the X-axis.

To ensure that the user does not feel constrained in a nearby region and to make better use of the virtually infinite 3D space provided by the VR environment, users are provided with the possibility of moving the scatter plots. The interaction occurs via gaze-tracking and the select & move metaphor. Every scatter plot has a color-coded interactive sphere, that is, a selector (see Fig. 11(b)), attached to it in the right-hand top corner. When the user’s gaze hovers over it, the selector is automatically highlighted and, if selected by double-tapping the [A] button, changes its color to orange (see Fig. 11(b)). If the user presses the [X] button while a selection is active, the scatter plot is re-positioned at a certain distance towards the point determined by the user’s current gaze. If the button is held, the plot will follow the cross-hair’s movement.

It is also possible to move both plots at once if several selectors are simultaneously active. In such a scenario, to keep the current relative position of these scatter plots, a barycentre $B=(x_B, y_B, z_B)$ of all these objects is calculated using the formula:

\begin{equation}B = (x_B, y_B, z_B) = \frac{1}{N}\left(\sum_{i=1}^{N} x_i,\ \sum_{i=1}^{N} y_i,\ \sum_{i=1}^{N} z_i\right),\end{equation}

where $N$ is the number of selected plots and $(x_i, y_i, z_i)$ are the coordinates of the plots’ individual centres. This point is moved along the forward vector from the camera in the same manner as in the case of a single scatter plot. Selected objects are then grouped together and displaced with respect to the new position of the barycentre whilst simultaneously keeping their internal (current position with respect to the local axes) and external (axis-alignment of surfaces) rotations.
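The group re-positioning can be sketched as follows: compute the barycentre of the selected plot centres, place it at a given distance along the camera’s forward vector, and apply the same translation to every selected plot. This is a sketch under assumptions: the function names and the `distance` parameter are hypothetical, and only translation is modelled.

```python
import math


def barycentre(centres):
    """Arithmetic mean of a list of (x, y, z) plot centres."""
    n = len(centres)
    return tuple(sum(c[k] for c in centres) / n for k in range(3))


def move_group(centres, camera_pos, camera_forward, distance):
    """Translate all centres so their barycentre lies `distance` along the gaze."""
    norm = math.sqrt(sum(f * f for f in camera_forward))
    target = tuple(p + distance * f / norm
                   for p, f in zip(camera_pos, camera_forward))
    b = barycentre(centres)
    offset = tuple(t - bk for t, bk in zip(target, b))
    # One shared translation: relative positions are preserved and no
    # rotation is applied to any plot.
    return [tuple(c[k] + offset[k] for k in range(3)) for c in centres]
```

With a single selected plot the barycentre coincides with that plot’s centre, so the same routine also covers the single-plot case described earlier.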

3.8.2 Scatter plot rotation

The scatter plot can be rotated in $90^{\circ}$ steps about one of the three main axes. This is achieved by double-tapping the [A] button while gazing over one of the three rotation selectors (see Fig. 11(b–c)). A further press of the [X] button will result in a rotation of all the plot’s components, including data points, rotation and movement selectors and axis cone markers. If users are situated in such a way that their gaze is located exactly in front of the active axis selector, the rotation will occur towards the direction provided by the camera’s forward vector. Similar to the movement, the plot’s orientation in the global coordinate system will not be affected.
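A rotation in $90^{\circ}$ steps about a main axis can be applied to every component of a plot without trigonometry, by swapping and negating coordinates relative to the plot’s centre. The sketch below assumes a right-handed, counter-clockwise sign convention and a hypothetical function name; the actual direction in the system depends on the user’s gaze, which is not modelled here.

```python
def rotate_quarter_turns(point, pivot, axis, turns=1):
    """Rotate `point` about `pivot` by turns * 90 degrees around 'x', 'y' or 'z'."""
    # Work in coordinates relative to the pivot (e.g. the plot's centre).
    x, y, z = (p - c for p, c in zip(point, pivot))
    for _ in range(turns % 4):
        if axis == "x":
            y, z = -z, y
        elif axis == "y":
            x, z = z, -x
        elif axis == "z":
            x, y = -y, x
        else:
            raise ValueError("axis must be 'x', 'y' or 'z'")
    return (x + pivot[0], y + pivot[1], z + pivot[2])
```

Applying the same call to data points, selectors and cone markers keeps all components of a plot consistent, and four successive quarter turns return every component exactly to its starting position.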

3.8.3 Relationships between the visualisation elements

The data points on one of the plots are correlated in a 1:1:1 (one-to-one-to-one) manner onto the other plot and vice-versa. Moreover, each of the data points is mapped onto one-and-only-one unique blade design. Therefore, whenever a marker is selected on one of the plots, the system will automatically highlight the corresponding data point on the other plot and switch the visualised blade onto the new, corresponding shape. Furthermore, the nominal shape will be kept as a translucent point of reference (see Fig. 8) that overlays the new design to show the user how, where, and to what degree, the new shape differs from the nominal one.
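The 1:1:1 relationship can be modelled by a single selection state keyed by design index and shared by both plots and the blade view. The class below is a minimal sketch with hypothetical names; it is not the system’s data model, and rendering is left out entirely.

```python
class LinkedSelection:
    """Selection state shared by both scatter plots and the blade view."""

    def __init__(self, n_designs):
        self.n_designs = n_designs
        self.selected = set()       # design indices highlighted on both plots
        self.shown_geometry = None  # None means only the nominal blade is shown

    def toggle(self, design_index):
        """Select or deselect a design, whichever plot the marker was picked on."""
        if not 0 <= design_index < self.n_designs:
            raise IndexError("unknown design index")
        if design_index in self.selected:
            self.selected.discard(design_index)
            if self.shown_geometry == design_index:
                self.shown_geometry = None
        else:
            self.selected.add(design_index)
            # The newest selection decides which perturbed blade overlays
            # the translucent nominal geometry.
            self.shown_geometry = design_index

    def highlighted(self):
        """Indices to highlight; identical for both plots by construction."""
        return sorted(self.selected)
```

Because both plots read the same set, a selection made on one plot cannot drift out of step with the other plot or with the displayed geometry.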

3.8.4 Labelling

Both the data points and the axes are automatically labeled. In case of the latter, the strings embedded into the edges of the semitransparent rectangles denoting the axes are read directly from the input text file (see Fig. 11(a)). The labelling of the data points is only visible once the user is hovering with his or her gaze over the marker (for example, a sphere) and disappears once the user looks at another point, or other parts of the visualisation (see Fig. 11(a)). Furthermore, the small box with the values associated with the point (in this case its coordinates) is always rotated towards the user and follows their gaze. It is rendered on top of any other visualisation elements as seen in Fig. 11(a).

4.0 INTERFACE VERIFICATION

The VR aerospace design environment in this paper is still at an early stage and the objective of this paper is not to present a complete solution but to demonstrate potential benefits of VR for aerospace design.

Here, we verify the fundamental usability of the system using two formative evaluation methods. First, we assess the usability of the system using Nielsen’s(Reference Nielsen41,Reference Nielsen42) guidelines. Second, we use the cognitive dimensions of notations framework(Reference Green43,Reference Green44) to reason about the expressiveness of the system.

4.1 Usability

• Visibility of system status: The system provides immediate feedback to users in response to their actions. For instance, whenever a user’s gaze hovers over an interactive object (such as, for example, movement and rotation selectors or a data point) it is instantly highlighted. In addition, once an object is selected, the object also changes its color in response. In addition, following the selection of a data point, its corresponding data points on the other plot are also simultaneously selected and the accompanying geometry is loaded automatically. This ensures the user remains synchronised with the system’s current state. Finally, whenever the user’s gaze is hovering over an object, a gaze-locked text is also displayed to the user, which reveals values associated with this particular data point.

• Match between the system and the real-world: First, the system uses the cross-hair concept, which is well known in the real-world, to focus and help guide the users’ gaze on objects placed directly behind or near it. Moreover, the 3D scatter plots were designed to immediately resemble their two- or three-dimensional desktop-based counterparts. In addition, a user initially observes all the main elements of the visualisation in the field-of-view, placed at roughly the same height and direction as they would be perceived in the real-world if they were visualised on standard computer displays.

• User control and freedom: The user can either load the new set of data or reload the entire visualisation with a click of a button. No direct “undo” and “redo” actions were implemented; however, users can always deselect any object or redo the last executed operation. Moreover, using a combination of the gaze-tracking and controller-based interaction, users can locate themselves at any position in 3D space.

• Consistency and standards and flexibility and efficiency of use: As the VR in its current, almost fully immersive form, is a fairly recent development, the technology itself, not to mention its main applicability areas or interaction design principles, is not yet fully understood. However, the system design is as consistent as possible, for instance, all interactive objects can be (de)selected using exactly the same method.

• Error prevention: Measures to prevent the user from errors, such as missing or broken input data (for example, geometries and data points), are directly built into the system. As there is a 1:1:1 mapping between the system elements (data points) on the two plots and the geometries, an entry missing any of these elements is omitted from the visualisation, and detailed information about the event is written to the log file. Hence, the main error-prone conditions are eliminated. In addition, the system incorporates certain constraints; for example, a user cannot have more than a single axis-rotation selector active at a time, as a new selection automatically deselects any previously activated selector. This prevents an error caused by the system being unable to recognise about which axis the scatter plot should be rotated.

• Help users recognise, diagnose, and recover from errors: There are not many errors that a user can commit, assuming the dataset is correct. The countermeasures against plotting incomplete data are built into the system. However, due to the nature of the visualisation, erroneous blade geometries or any anomalies in their shapes can be detected by the user in a close-up inspection made possible through the mixture of movement and manoeuvring in the 3D space. The same can be said about the 3D scatter plots, where the user is able to rotate them, see them from every direction, and zoom in and out on any data point using the same techniques.

• Recognition rather than recall and aesthetic and minimalist design: The blade’s geometry is visualised as a replication of its physical appearance in the real-world. Moreover, both plots use volumetric glyphs to denote the points, which resembles the 2D versions of scatter plots (see Fig. 11). Previously selected data points are also highlighted. Furthermore, the system was designed to be minimalistic: only the efficiency and pressure ratio plots together with the geometry visualisation are included to avoid overloading the user with information. Hence, for instance, the values of the data points are not initially visible; however, the scatter plots offer a possibility of gaining a high-level understanding of the data at a first glance. More detail of each individual point is available on demand when the gaze cursor hovers over a data marker.

• Help and documentation: A succinct single page documentation sheet describing the interaction techniques is provided to the users.
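The error-prevention behaviour described above, where an entry missing any of its three elements is omitted and logged, can be sketched as a simple consistency check at load time. The function name, the dictionary-based inputs and the logger name are assumptions for illustration; the actual file formats and log layout are not given in the text.

```python
import logging

logger = logging.getLogger("aerovr_sketch")  # hypothetical logger name


def complete_designs(efficiency, pressure_ratio, geometries):
    """Return the design ids present in all three inputs; log the others.

    Each argument is assumed to be a dict keyed by design id.
    """
    sources = (("efficiency", efficiency),
               ("pressure ratio", pressure_ratio),
               ("geometry", geometries))
    all_ids = set(efficiency) | set(pressure_ratio) | set(geometries)
    kept = []
    for design_id in sorted(all_ids):
        missing = [name for name, source in sources if design_id not in source]
        if missing:
            # Incomplete entries are skipped rather than partially plotted.
            logger.warning("Skipping design %s: missing %s",
                           design_id, ", ".join(missing))
        else:
            kept.append(design_id)
    return kept
```

Filtering once at load time means that every marker the user can gaze at is guaranteed to have a counterpart on the other plot and a geometry to display.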

4.2 Expressiveness

The expressiveness of the system is here analysed using the cognitive dimensions of notation framework(Reference Green43). This framework provides a vocabulary for analysing the possibilities and limitations of an interactive notational system. Below we articulate how the keywords in this vocabulary map onto the expressiveness of the system.

• Closeness of mapping: The visualised geometry is a detailed mapping of how the blade would look in the real-world.

• Consistency: All interactions are consistently designed and all interactive objects, such as selectors, have consistent interaction qualities.

• Diffuseness/terseness: The number of used symbols is minimised by only using sphere-like markers with their characteristics (such as color, size and relative placement) to denote all the interactive elements of the visualisation.

• Error-proneness: Errors are prevented using prevention mechanisms against error states, such as selection of multiple rotation-selectors.

• Hard mental operations: Cognitive load and mental demand are kept to a minimum with straightforward interaction methods and the use of comparative visualisations.

• Hidden dependencies: As there is a 1:1:1 mapping between the visualisation elements all the interdependencies are easily observed by the user since selection of a data point on one of the plots leads to a simultaneous selection of a corresponding data point on the other plot as well and the visualisation of the associated geometry.

• Juxtaposability and visibility: All three main parts of the visualisation, that is, the efficiency and pressure ratio plots together with the blade’s geometry, are initially placed next to each other. Furthermore, the plots can be freely rearranged in the space as a group or individually. Moreover, the selection of any data point in a plot is automatically mapped on to the other plot as well and the corresponding geometry is immediately visible. In addition, selected data points are clearly visible through change in color and luminosity.

• Premature commitment: The user’s workflow with the system is flexible and a user is free to initially inspect the nominal geometry, any or both plots, or to immediately select a data point.

• Progressive evaluation: Since all the user’s actions result in immediate visual feedback (closed-loop interaction) the progress of the visual analytics task can be evaluated by the user at any time.

• Role-expressiveness: The individual roles of the three components, that is, the efficiency and pressure-ratio plots and the blade’s geometry, are clear from the beginning, especially if the system is used by a domain expert.

5.0 CONCLUSIONS AND FUTURE WORK

The goal in this paper has been to introduce the AeroVR system—a novel VR aerospace design environment with a particular emphasis on dimension reduction. We have identified the main structures of the design environment and implemented a fully working system for commodity VR headsets. We have also verified the interface from two perspectives: usability and expressiveness.

The two main identified tasks were (i) gaining an overview of the design and (ii) comparing the nominal geometry with the one associated with specific performance parameters. The former was achieved through a mixture of visualisation (e.g. blade and engine geometry, and scatter plots) and lower-level tasks (e.g. movement and interaction). The latter was achieved through visualisation of the overlaying geometries: a semi-transparent nominal blade superimposed over the solid shape of a new design.

Moving forward, our goal is to undertake the complete 3D design of a turbomachinery component in VR. In addition to the sufficient summary plots, our goal is to incorporate characteristics of blade performance at multiple operating points and have reduced order models to estimate the performance characteristics of new designs.

In forthcoming years, the cost of the headset, and the required computing resources, will be further minimised with the introduction of the next generation of controllers, wireless headsets (e.g. Oculus Quest(Reference Oculus2)), and gesture-based (e.g. Leap Motion(45)) or speech-based interfaces. Simultaneously, these rapid advancements in hardware and software open up completely new possibilities in terms of interaction techniques, rapid information analysis and the amount of data that can be processed and visualised at once. The two most promising avenues of further development of the AeroVR are an investigation of which interaction techniques may bring the most benefit to the user and the integration of our system with a system operating on knowledge from domain experts, i.e. a knowledge-based system (Reference Jarke, Neumann, Vassiliou and Wahlster46,Reference Nalepa47). The former would include adding either controller-based laser-pointing or hand-tracking capabilities, or a combination thereof, depending on the particular user’s needs. The latter would require developing a knowledge-(data)base (Reference Jarke, Neumann, Vassiliou and Wahlster46) and integrating it with the interface provided by our system to build a knowledge-based system (Reference Jarke, Neumann, Vassiliou and Wahlster46,Reference Nalepa47).

ACKNOWLEDGEMENTS

This work was supported by studentships from the Engineering and Physical Sciences Research Council (EPSRC-1788814), and the Cambridge European & Trinity Hall Scholarship. The second author acknowledges the support of the United Kingdom Research and Innovation (UKRI) Strategic Priorities Fund, managed by the Engineering and Physical Sciences Research Council (EPSRC); grant number EP/T001569/1. The authors are grateful for all the generous support. The authors would also like to thank Timoleon Kipouros for his valuable suggestions.

SUPPLEMENTARY MATERIAL

To view supplementary material for this article, please visit https://doi.org/10.1017/aer.2020.49