Background

Psychiatric disorders can be treated with various psychotropic drugs. With a wide variety of drugs available, choosing the most appropriate one can be difficult, highlighting the importance of good evidence. Clinicians must be able to trust that there is strong evidence that the drug is effective. In the USA, drugs must be approved by the Food and Drug Administration (FDA) before they can be marketed. Although many aspects of a drug's profile must be considered in the approval process, the statistical evaluation of efficacy plays a central role. The FDA states that substantial evidence for efficacy is provided by ‘at least two adequate and well-controlled studies, each convincing on their own’ (U.S. Food and Drug Administration, 1998, p. 3). Occasionally, efficacy can also be established based on ‘data from one adequate, well-controlled clinical investigation’ (U.S. Food and Drug Administration, 1998, p. 3) or existing efficacy studies of closely related drugs, for example for modified-release variants of previously approved drugs (U.S. Food and Drug Administration, 1997, 1998; Wang et al., Reference Wang, Chang, Yang, Chang, Kuan, Tu, Hong and Hsu2019).

In some cases, the current statistical evaluation process may lead to suboptimal decisions. The assumption that at least two independent randomised controlled trials (RCTs), or even fewer, provide substantial evidence of drug efficacy has been questioned (Monden et al., Reference Monden, Roest, van Ravenzwaaij, Wagenmakers, Morey, Wardenaar and de Jonge2018). The FDA decision process does not systematically combine the information from positive (i.e. p < 0.05, rejecting the null hypothesis of no treatment effect) and negative (i.e. p > 0.05, not rejecting the null hypothesis) trials. As such, crucial information like the number of trials conducted before obtaining two positive trials is ignored (van Ravenzwaaij & Ioannidis, Reference van Ravenzwaaij and Ioannidis2017, Reference van Ravenzwaaij and Ioannidis2019). Instead, the Bayes factor (BF) has been suggested as a measure to quantify evidence holistically (Goodman, Reference Goodman1999; Monden et al., Reference Monden, de Vos, Morey, Wagenmakers, de Jonge and Roest2016, Reference Monden, Roest, van Ravenzwaaij, Wagenmakers, Morey, Wardenaar and de Jonge2018). In contrast to p values, BFs quantify evidence in favour of both the null hypothesis (i.e. no treatment effect) and the alternative hypothesis (i.e. a treatment effect) by comparing the relative likelihood of the observed data under either hypothesis (Gronau, Ly, & Wagenmakers, Reference Gronau, Ly and Wagenmakers2019; Jeffreys, Reference Jeffreys1961; Rouder, Speckman, Sun, Morey, & Iverson, Reference Rouder, Speckman, Sun, Morey and Iverson2009; van Ravenzwaaij & Ioannidis, Reference van Ravenzwaaij and Ioannidis2019). For instance, a BF 10 (where the subscript indicates that the BF quantifies the likelihood of the alternative hypothesis relative to the null hypothesis) of 30 indicates the observed data are 30 times more likely to have occurred under the alternative hypothesis than under the null hypothesis (this is considered strong evidence for the alternative hypothesis; Jeffreys, Reference Jeffreys1961). Alternatively, a BF 10 of 0.2 (or 1/5) indicates the observed data are five times more likely to have occurred under the null hypothesis than under the alternative hypothesis. Finally, a BF around 1 indicates equipoise (i.e. the data are about equally likely to have occurred under either hypothesis).

BFs may not only aid drug approval, but also drug prescription by clinicians. Besides effect sizes, which indicate the magnitude of the effect (Sullivan & Feinn, Reference Sullivan and Feinn2012), strength of evidence as quantified through BFs indicates how likely an effect (of any positive size) is to exist. Ideally, effect sizes should be clinically meaningful and the strength of evidence sufficiently large that the drug can be considered effective with relative certainty. There is a great body of literature regarding effect sizes of psychotropic drugs (Cipriani et al., Reference Cipriani, Furukawa, Salanti, Chaimani, Atkinson, Ogawa and Geddes2018; Cortese et al., Reference Cortese, Adamo, Giovane, Mohr-Jensen, Hayes, Carucci and Cipriani2018; Huhn et al., Reference Huhn, Nikolakopoulou, Schneider-Thoma, Krause, Samara, Peter and Leucht2019; Leucht, Helfer, Gartlehner, & Davis, Reference Leucht, Helfer, Gartlehner and Davis2015). However, little is known about the evidential strength of these drugs, which can differ despite homogeneous effect sizes. For example, in a previous study adopting a Bayesian framework, sertraline, fluoxetine, and desvenlafaxine had similar estimated effect sizes, but strength of evidence differed by a factor of two (Monden et al., Reference Monden, Roest, van Ravenzwaaij, Wagenmakers, Morey, Wardenaar and de Jonge2018). Especially in situations such as these, BFs can offer an important additional source of information.

Evidential strength might differ between psychotropic drug groups as well as within. Previous research has shown that there are clear differences among psychotropic drug groups in terms of effect size (Leucht et al., Reference Leucht, Helfer, Gartlehner and Davis2015; Turner, Knoepflmacher, & Shapley, Reference Turner, Knoepflmacher and Shapley2012). There are also indications that trial programmes differ between drug groups: for instance, drug approvals of antidepressants for anxiety disorders were generally supported by fewer trials than approvals of antidepressants for depression (Roest et al., Reference Roest, De Jonge, Williams, de Vries, Schoevers and Turner2015; Turner, Matthews, Linardatos, Tell, & Rosenthal, Reference Turner, Matthews, Linardatos, Tell and Rosenthal2008). Although, to the best of our knowledge, there is no formal policy towards different standards for drug approval, these differences may lead to differences in the typical strength of evidence for different drug groups. However, little is known about the extent to which such factors influence the typical strength of evidence for different drug groups.

The present study

The goal of this study is to examine whether there are systematic differences in the strength of evidence for efficacy at the time of approval between different groups of psychotropic drugs. We consider four major classes: antidepressants approved for depression, antidepressants approved for anxiety disorders, antipsychotics for schizophrenia, and attention deficit hyperactivity disorder (ADHD) medication. We examine whether the current evaluation process generally leads to psychotropic drugs supported by substantial evidence (at the time of approval). To determine whether there are systematic differences across drug groups in terms of strength of evidence, we compare them across the disorder groups and investigate whether trial programme characteristics (e.g. effect sizes and sample sizes) are related to the strength of evidence per drug within each drug group.

Method

This study involved publicly available trial-level data. No ethical approval was needed.

Protocol and registration

Study information, details regarding prior knowledge of the data, and the analysis plan were preregistered at OSF before data analysis but after knowledge of the data. Deviations from the preregistration are reported in the online Supplementary material.

Data sources

Data sources were not identified by a systematic search. Instead, we obtained data for psychotropic drugs approved by the FDA. We used data extracted for previous meta-analyses, supplemented by data extracted by ourselves. Data on antidepressants for depression were obtained from Turner et al. (Reference Turner, Matthews, Linardatos, Tell and Rosenthal2008) and de Vries et al. (Reference de Vries, Roest, De Jonge, Cuijpers, Munafò and Bastiaansen2018), on antidepressants for anxiety disorders from de Vries, de Jonge, Van Heuvel, Turner, and Roest (Reference de Vries, de Jonge, Van Heuvel, Turner and Roest2016) and Roest et al. (Reference Roest, De Jonge, Williams, de Vries, Schoevers and Turner2015), and on antipsychotics for schizophrenia from Turner et al. (Reference Turner, Knoepflmacher and Shapley2012). We extracted additional data on medications for ADHD, and antipsychotics for schizophrenia approved after publication of Turner et al. (Reference Turner, Knoepflmacher and Shapley2012) from FDA reviews. No additional data extraction was necessary for depression or anxiety disorders, as no new antidepressants were approved for these indications after previous publications. We followed data extraction procedures originally proposed by Turner et al. (Reference Turner, Matthews, Linardatos, Tell and Rosenthal2008) described in detail elsewhere (Turner et al., Reference Turner, Knoepflmacher and Shapley2012). In short, for each drug we retrieved the corresponding FDA reviews from the FDA's website. Within the Drug Approval package, data relevant to the FDA's determination of drug efficacy were examined. Clinical phase II/III trials pivotal in the endorsement decision of the drug were eligible for inclusion, regardless of their outcome. Efficacy data were extracted preferably from the statistical review, and from the medical review or team leader memos, if necessary. In total, we included data for 15 antipsychotics (Nr trials = 43, n treatment = 9937, n control = 4303), 16 antidepressants approved for depression (Nr trials = 105, n treatment = 14 042, n control = 9917), nine antidepressants approved for anxiety (Nr trials = 59, n treatment = 8745, n control = 6618), and 20 drugs approved for ADHD (Nr trials = 46, n treatment = 5705, n control = 3508).Footnote †Footnote 1 For anxiety, we focused on generalised anxiety disorder (GAD), obsessive compulsive disorder (OCD), panic disorder (PD), post-traumatic stress disorder (PTSD), and social anxiety disorder (SAD). Some drugs were approved for multiple anxiety disorders. Consequently, we included 21 endorsement decisions for anxiety, resulting in a total of 72 drug-disorder combinations.

We included data for all available short-term, placebo-controlled, parallel-group, and cross-over phase II/III clinical trials. We excluded studies concerned with relapse or discontinuation of the medication, long-term extension trials, and studies without a placebo control group, as these do not qualify as ‘well-controlled’ trials (U.S. Food and Drug Administration, 2020). We excluded data on non-approved sub-therapeutic dosages (i.e. not effective dosages), as we were concerned with the evidence load regarding dosages associated with a therapeutic effect.

Statistical analysis

Analysis was conducted in R (4.0.1), using the ‘BayesFactor’ (0.9.12–4.2) (Morey, Rouder, Jamil, & Morey, Reference Morey, Rouder, Jamil and Morey2015) and ‘metaBMA’ (Heck, Gronau, & Wagenmakers, Reference Heck, Gronau and Wagenmakers2017) packages.

Individual BF and effect size calculation

We calculated t statistics using sample size and p values. For parallel-group trials, we used independent samples t tests and for cross-over trials, we used paired samples t tests (Higgins et al., Reference Higgins, Thomas, Chandler, Cumpston, Li, Page and Welch2019). We used two-sided tests, in concordance with the FDA policy. Following Monden et al. (Reference Monden, Roest, van Ravenzwaaij, Wagenmakers, Morey, Wardenaar and de Jonge2018), we calculated a t statistic for all dose levels in fixed-dose trials with multiple drug arms, whereas one t value was calculated for flexible-dose trials with a single drug arm. When precise p values were unavailable, t statistics were calculated based on other information (e.g. mean differences) or imputed (see online Supplementary material). To determine the strength of evidence that an effect exists, we calculated Jeffreys–Zellner–Siow BFs (Rouder et al., Reference Rouder, Speckman, Sun, Morey and Iverson2009; van Ravenzwaaij & Etz, Reference van Ravenzwaaij and Etz2020). For each comparison, BFs were calculated using t statistics and sample size of the drug and placebo groups. We used a default Cauchy prior with location parameter zero and scale parameter $1/\sqrt 2$![]() (Bayarri, Berger, Forte, & García-Donato, Reference Bayarri, Berger, Forte and García-Donato2012; Consonni, Fouskakis, Liseo, & Ntzoufras, Reference Consonni, Fouskakis, Liseo and Ntzoufras2018). As the FDA follows two-sided tests with a check for direction, thus de facto performing a one-sided test, we truncated below zero, and calculated one-sided BFs (Senn, Reference Senn2008).

(Bayarri, Berger, Forte, & García-Donato, Reference Bayarri, Berger, Forte and García-Donato2012; Consonni, Fouskakis, Liseo, & Ntzoufras, Reference Consonni, Fouskakis, Liseo and Ntzoufras2018). As the FDA follows two-sided tests with a check for direction, thus de facto performing a one-sided test, we truncated below zero, and calculated one-sided BFs (Senn, Reference Senn2008).

To determine the size of an effect, we calculated the standardised mean difference (SMD). For parallel group trials, we calculated the corrected Hedges g. For cross-over trials, the uncorrected SMD was used.

Model-averaged Bayesian meta-analysis

We implemented Bayesian model-averaging (BMA; Gronau, Heck, Berkhout, Haaf, & Wagenmakers, Reference Gronau, Heck, Berkhout, Haaf and Wagenmakers2021), as neither a fixed-effect model (assuming the same underlying ‘true’ effect-size) nor a random-effect model (being overly complex for meta-analysis of only a handful of trials) was believed to be necessarily best-suited for the present data. Instead, we weighted the results from both models according to their posterior probability, thus fully acknowledging the uncertainty with respect to the choice between a fixed or random-effect model (Gronau et al., Reference Gronau, Van Erp, Heck, Cesario, Jonas and Wagenmakers2017; Hinne, Gronau, van den Bergh, & Wagenmakers, Reference Hinne, Gronau, van den Bergh and Wagenmakers2020).

To conduct a Bayesian meta-analysis, prior distributions were assigned to the model parameters (Gronau et al., Reference Gronau, Van Erp, Heck, Cesario, Jonas and Wagenmakers2017). For the standardised effect size, we used a default, zero-centred Cauchy distribution with scale parameter equal to $1/\sqrt 2$![]() (Morey et al., Reference Morey, Rouder, Jamil and Morey2015). For the one-sided hypothesis test, we used the same distribution with values below zero truncated. For the between-study heterogeneity parameter τ in random-effect models, we used an informed prior distribution based on an analysis of 14 886 meta-analyses from the Cochrane Database of Systematic Reviews (Turner, Davey, Clarke, Thompson, & Higgins, Reference Turner, Davey, Clarke, Thompson and Higgins2012, Reference Turner, Jackson, Wei, Thompson and Higgins2015), namely a log normal distribution with mean μ = − 2.12 and standard deviation s.d. = 1.532. We performed one Bayesian meta-analysis per endorsement decision. This yielded pooled estimates of both effect size and strength of evidence for the effects (i.e. efficacy of a certain drug for a specific mental disorder), in the form of model-averaged BFs (BF BMA). A total of 63 Bayesian meta-analyses were performed. For nine drug-disorder combinations supported by a single two-arm trial, we did not conduct a Bayesian meta-analysis, but used the individual BF and effect size instead.Footnote 2 Hence, results are reported for 72 drug-disorder combinations.

(Morey et al., Reference Morey, Rouder, Jamil and Morey2015). For the one-sided hypothesis test, we used the same distribution with values below zero truncated. For the between-study heterogeneity parameter τ in random-effect models, we used an informed prior distribution based on an analysis of 14 886 meta-analyses from the Cochrane Database of Systematic Reviews (Turner, Davey, Clarke, Thompson, & Higgins, Reference Turner, Davey, Clarke, Thompson and Higgins2012, Reference Turner, Jackson, Wei, Thompson and Higgins2015), namely a log normal distribution with mean μ = − 2.12 and standard deviation s.d. = 1.532. We performed one Bayesian meta-analysis per endorsement decision. This yielded pooled estimates of both effect size and strength of evidence for the effects (i.e. efficacy of a certain drug for a specific mental disorder), in the form of model-averaged BFs (BF BMA). A total of 63 Bayesian meta-analyses were performed. For nine drug-disorder combinations supported by a single two-arm trial, we did not conduct a Bayesian meta-analysis, but used the individual BF and effect size instead.Footnote 2 Hence, results are reported for 72 drug-disorder combinations.

The resulting BF BMA were used to describe the proportion of well-supported endorsement decisions. We used different thresholds to quantify ‘substantial’ evidence. A BF 10 between 1/3 and 3 is interpreted as ambiguous evidence, while a BF 10 between 3 and 10 provides moderate, a BF 10 between 10 and 30 strong, and a BF 10 above 30 very strong evidence for the treatment effect (Jeffreys, Reference Jeffreys1961). Importantly, these thresholds are used for demonstrative purposes and we did not aim for just another hard threshold such as p < 0.05 (see Gelman, Reference Gelman2015).

Sensitivity analysis

To study the impact of the choices we made for the prior distributions on outcomes, we performed a sensitivity analysis by setting parameter estimates varied from $r = ( 1/6) \times \sqrt 2$![]() to $r = ( 3/2) \times \sqrt 2$

to $r = ( 3/2) \times \sqrt 2$![]() . Additionally, we inspected the differences between fixed-effect and random-effect models and how excluding imputed values or cross-over trials impacted the results. Details can be found in the online Supplementary material.

. Additionally, we inspected the differences between fixed-effect and random-effect models and how excluding imputed values or cross-over trials impacted the results. Details can be found in the online Supplementary material.

Results

Proportion of studies supported by substantial evidence

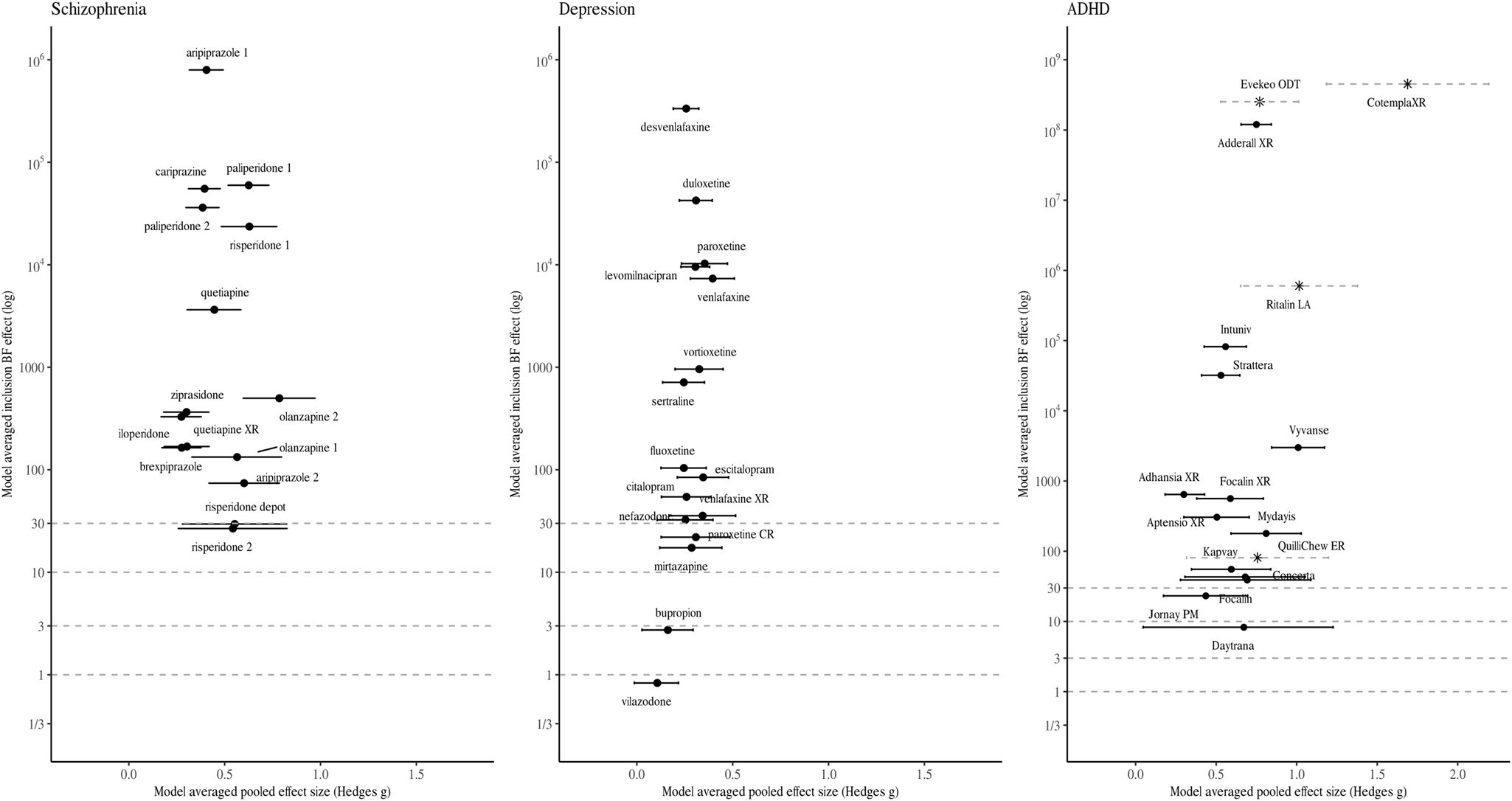

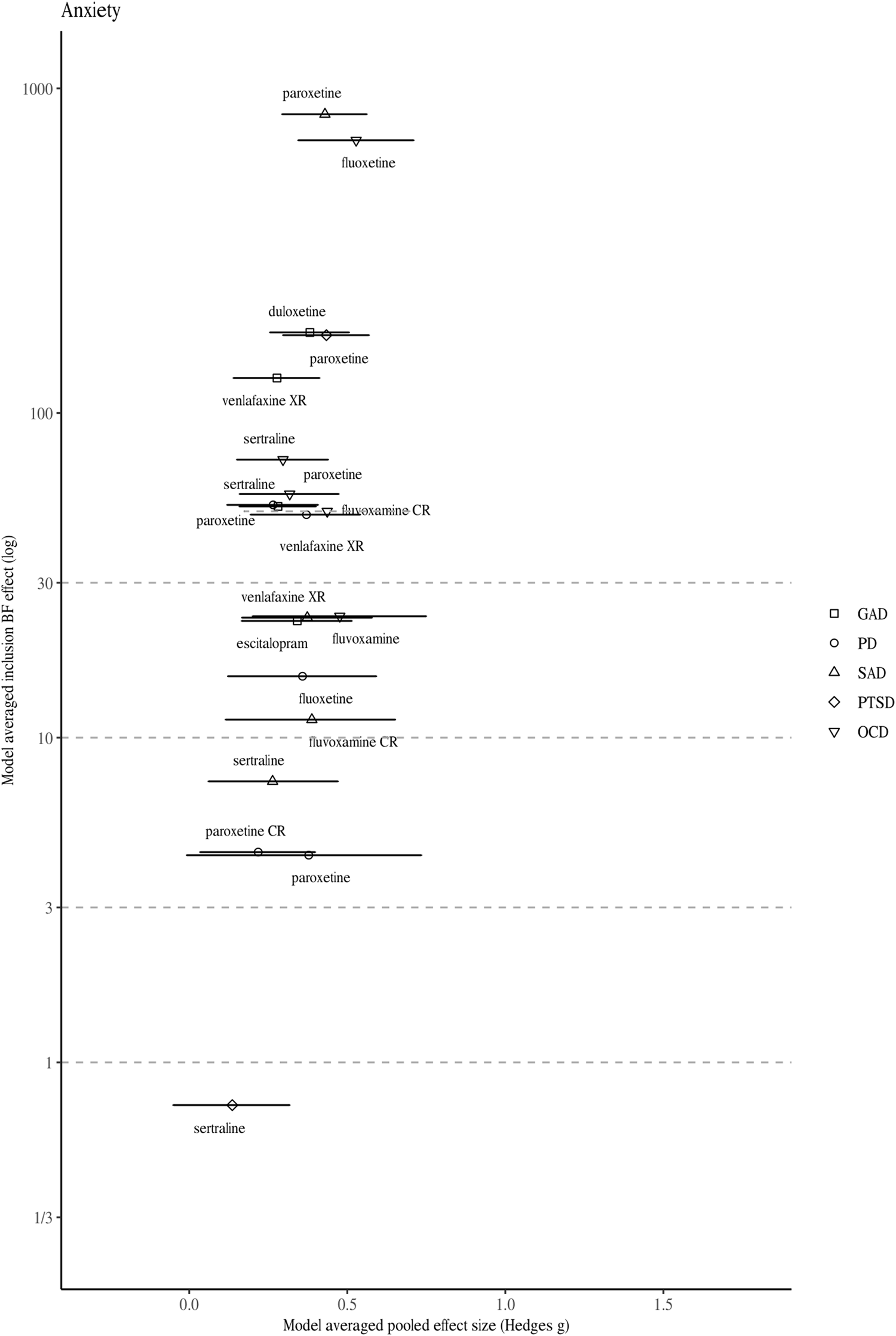

Figures 1 and 2 visualise the results of the BMA meta-analyses for each of the disorder groups. Tables including detailed information for all analyses performed can be found in the online Supplementary material. Overall, three (4.2%) BF BMAs indicated ambiguous evidence (⅓ < BF BMA < 3): sertraline approved for PTSD (BF BMA = 0.7), vilazodone (BF BMA = 0.5), and bupropion approved for depression (BF BMA = 2.7). Four (5.6%) meta-analytic BF BMAs indicated only modest evidence for a treatment effect (3 < BF BMA < 10): Daytrana for ADHD (BF BMA = 8.3), sertraline approved for SAD (BF BMA = 7.3), and paroxetine (BF BMA = 4.4) and paroxetine CR (BF BMA = 4.4) for PD. Ten (13.9%) BF BMAs showed moderately strong evidence for treatment effects (10 < BF BMA < 30), including five antidepressants approved for anxiety, two antidepressants for depression, two antipsychotics, and one ADHD medication. The majority of drugs (76.3%) were supported by strong pro-alternative evidence (BF BMA > 30).

Fig. 1. Model-averaged meta-analytic BFs and pooled effect estimates. Error bars represent 95% highest density interval. Note that the x- and y-axis has different dimensions for medication approved for ADHD. For some drug BFs and effect size correspond to a single trial (indicated by a Asterix and dotted line depicting the 95% confidence intervals). Numbers are used to differentiate drugs with the same non-proprietary name (1 = Abilify, 2 = Aristada, 3 = Zyprexa, 4 = Zyprexa Relprevv, 5 = Invega, 6 = Invega Sustenna, 7 = Risperdal, 8 = Perseris kit).

Fig. 2. Model-averaged meta-analytic BFs and pooled effect estimates for drugs approved for anxiety disorders. Symbols refer to approvals for different indications. Error bars represent 95% highest density interval. For one drug BFs and effect size correspond to a single trial (indicated by a dotted line depicting the 95% confidence intervals).

Differences in strength of evidence across disorders

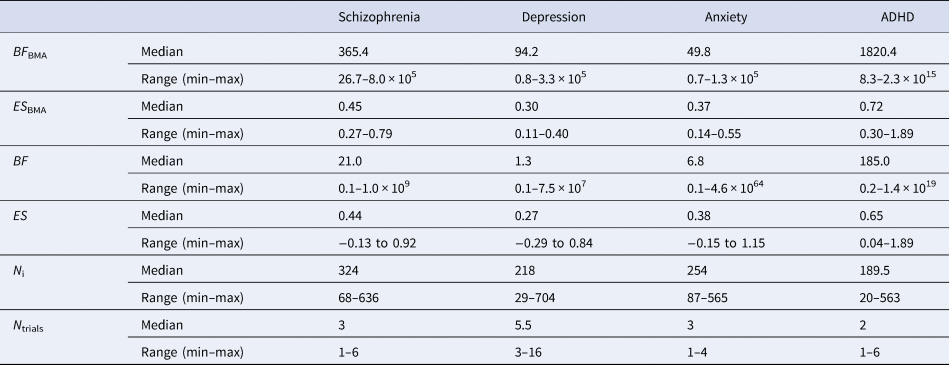

Detailed results (i.e. meta-analytic BFs and pooled effect sizes, individual trial BFs and effect sizes, sample sizes, and number of trials) are presented in online Supplementary Table S1. Summary results are presented in Table 1. Because the distributions of BFs were heavily right-skewed, we report the median instead of the mean.

Table 1. Overview of meta-analytic BFs (BF BMA) and pooled effect sizes per drug (ES BMA), individual trial BFs (BF) and effect sizes (ES), sample size for individual trials (N i), and number of trials (N trials) across the four disorder groups

Although the median meta-analytic BF of each disorder group indicated ‘very strong evidence’, the strength of evidence differed between disorders. The highest median strength of evidence was found for ADHD (BF BMA = 1820.4), followed by antipsychotics for schizophrenia (BF BMA = 365.4). Median strength of evidence was lower for antidepressants for depression (BF BMA = 94.2) and for anxiety (BF BMA = 49.8). Similarly, variability in BF BMA differed between disorders. The largest variance was found in ADHD (8.3–2.3 × 1015), followed by schizophrenia (26.7–8.0 × 105). For antidepressants approved for depression (0.8–3.3 × 105) and anxiety (0.7–1.3 × 105), the range was the smallest.

Individual BFs and trial characteristics

Median individual trial BFs are presented in online Supplementary Table S1 (for a visual representation, see online Supplementary Figs S1 through S4). Individual BFs differed (i.e. BFs corresponding to individual trials) between the four disorder groups. The median individual BF was the highest for ADHD (BF = 185.0), followed by antipsychotics (BF = 21.0) and anxiety (BF = 6.8). The median individual BF was the lowest for depression (BF = 1.3). Trial strength of evidence varied for all disorder groups (see Table 1).

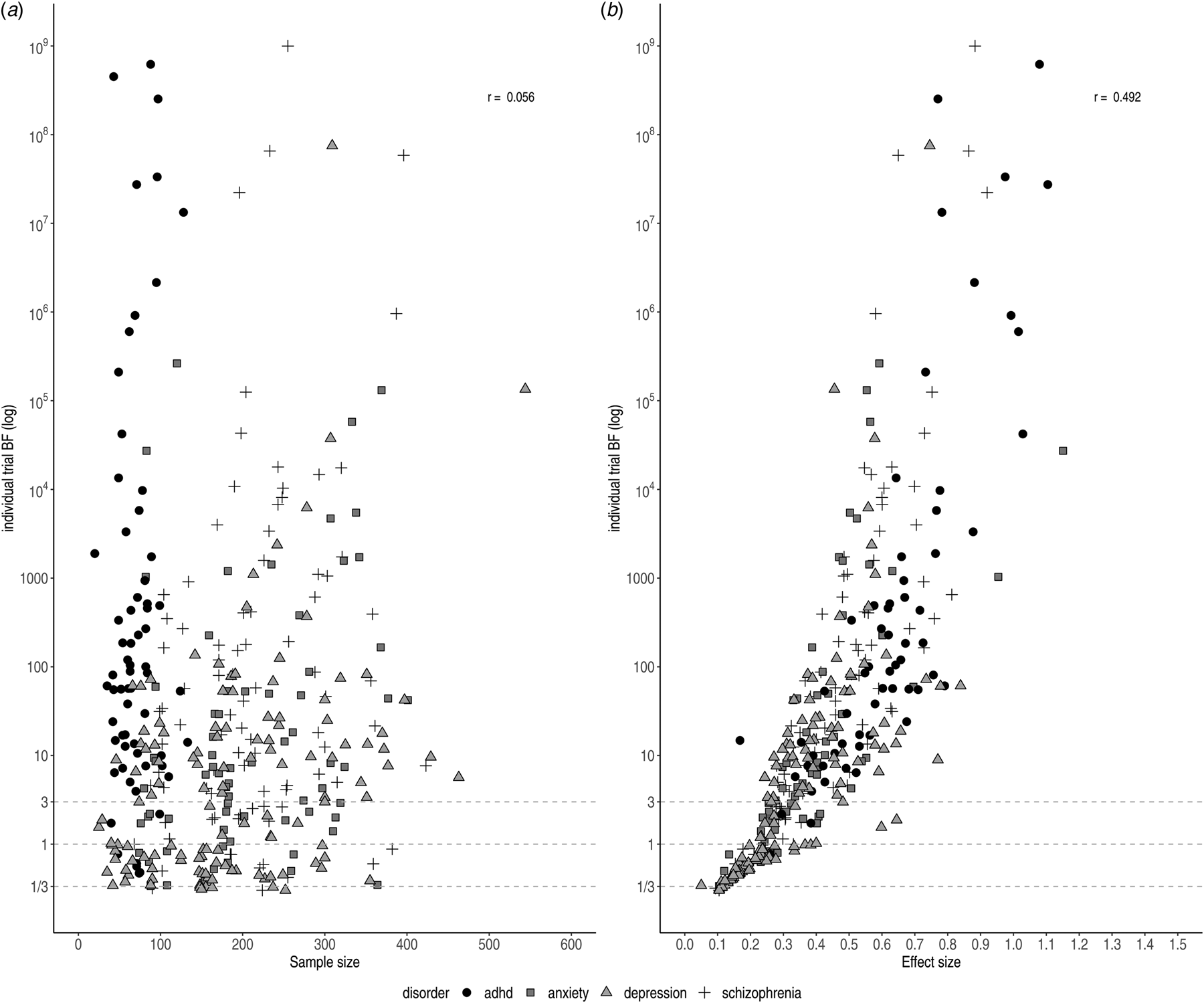

The relationships between individual BFs and both effect size and sample size are shown in Fig. 3. The scatterplot of the effect sizes and individual BFs shows a positive association (r log(BF),ES = 0.492), whereas the scatterplot of sample sizes and individual BFs does not show a clear pattern (e.g. the highest BF for ADHD trials had the smallest sample size; r log(BF),N = 0.056), although this may be due to confounding with other factors (such as effect size).

Fig. 3. Individual BFs on a log scale plotted against sample size (left) and effect size (right)). Symbols and shading indicate the four different disorder groups.

We observed variation in sample size for trials concerning ADHD medication (20–563). This can be partially explained by the inclusion of cross-over trials, which by design have comparatively small sample sizes. Trials were generally randomised, controlled, parallel group trials (parallel-group RCTs); however, crossover trials were sometimes performed for drugs approved for the treatment of ADHD. Smaller sample sizes did not necessarily correspond to ambiguous evidence. For instance, two cross-over trials for ADHD indicated very strong pro-alternative evidence with small sample sizes (n = 20, ES = 1.74, BF = 189.4 and n = 39, BF = 3.70 × 1014). This was the case for parallel trials for depression and schizophrenia, as well (n = 66, ES = 0.84, BF = 61.1 and n = 104, ES = 0.73, BF = 163.7).

The lowest individual BFs were found for depression (BF = 1.3), which might be explained by the small effect sizes (ES = 0.27). As illustrated in Fig. 3a, antidepressants for depression commonly displayed the smallest effect sizes, corresponding to the smallest individual BFs. In contrast, antipsychotics and ADHD medication showed larger effect sizes (ES = 0.44 and ES = 0.65, respectively) corresponding to stronger evidential strength.

Meta-analytic BFs and trial characteristics

The very strong evidence obtained for most ADHD medications appeared to be primarily due to high individual BFs, as the number of trials for each ADHD drug was very small. In contrast, very strong evidence for depression was generally achieved through a large number of trials, despite small studies and very low individual BFs. Larger numbers of trials corresponded to a greater proportion of trials being deemed questionable or negative by the FDA. For example, for paroxetine for depression, 16 trials were mentioned, of which nine were deemed questionable or negative. Our Bayesian re-analysis suggested evidence for an additional trial to be ambiguous. Nonetheless, the meta-analytic BF suggested very strong pro-alternative evidence for the drug to treat depression (BF BMA = 10 267.8).

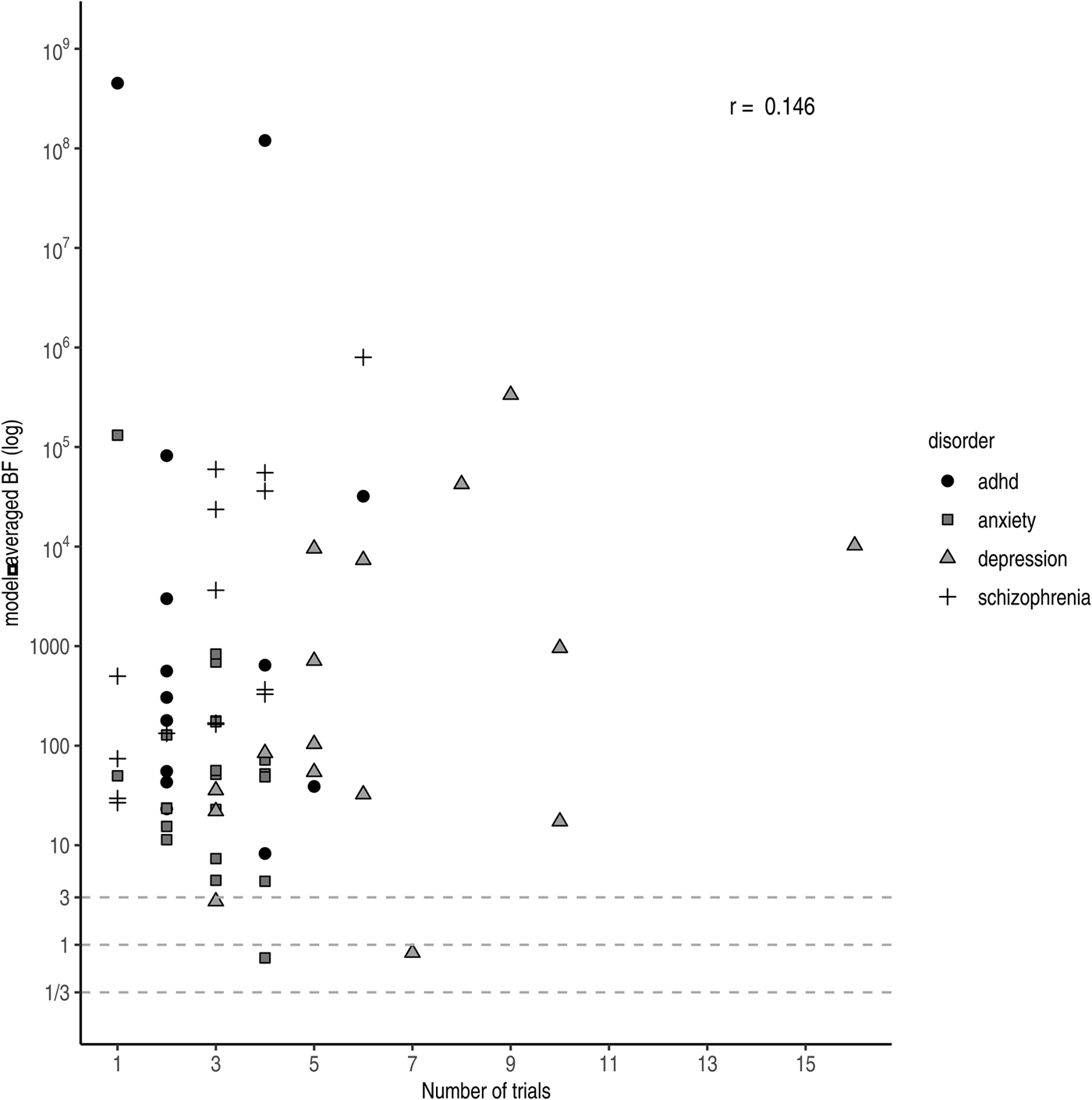

Under a Bayesian framework, more trials (i.e. more data) equal more evidence for the more probable hypothesis. In other words, with accumulating data the evidential strength (i.e. the BF) tends to point towards either zero (in case the null hypothesis is true) or infinity (in case the alternative hypothesis is true). However, we do not observe this relationship across drugs in practice (r log(BF),Nr. trials = 0.146 see Fig. 4). A likely explanation is that more trials are run for drugs with lower effect sizes to compensate. Trials concerning antidepressants approved for anxiety were slightly higher powered (i.e. had larger effect sizes and sample sizes) compared to those for antidepressants approved for depression. Nonetheless, only a few trials were performed per drug resulting in weaker evidence at the drug level compared to the other three disorder groups. For antipsychotics, we observed substantial pro-alternative evidence across the board, while the number of trials was comparable to those of antidepressants for anxiety. However, similar to ADHD medication, the individual studies were on average well-powered (i.e. medium effect size and large sample size), resulting in higher individual BFs and consequently stronger evidence at the drug level.

Fig. 4. Model-averaged BFs on a log scale plotted against the number of performed trials. Symbols and shading indicate the four different disorder groups.

Sensitivity analysis

Results from the sensitivity analyses are summarised in online Supplementary Table S2. Importantly, the qualitative interpretation did not change for different choices of model and/or scale parameter.

Discussion

Even though approval of psychotropic drugs is based on the same guideline and processed through the same pathway and by the same group within the FDA, we detected large differences in evidential strength and trial programmes. Although efficacy for the majority of psychotropic drugs was supported by very strong evidence at the time of approval, we observed substantial variation in the strength of evidence between approved psychotropic drugs: ADHD medication was supported by extreme evidence, whereas evidence for antidepressants for both depression and anxiety was considerably lower and more frequently classified as weak or moderate.

Differences in evidential strength might be partly explained by differences in trial programmes. For instance, ADHD drugs typically had very large effect sizes, resulting in extreme evidence for efficacy despite comparatively fewer and smaller trials. All else being equal, larger effect sizes correspond to larger t values, which in turn correspond to larger BFs. A potential drawback here is that the drug is tested on too few people to effectively gather evidence to rely on for safety. As most ADHD drugs are variants of methylphenidate, this may be considered acceptable. However, one might wonder: if a drug is considered different enough that a new approval application with new trials is needed to establish efficacy, is it reasonable to assume that safety will be the same?

In contrast, for depression in particular we saw clinical trial programmes with comparatively many trials and participants, meaning that there is much more experience with the drug at the time of approval. Evidence for efficacy, however, is considerably lower compared to ADHD and schizophrenia. The most likely explanation for this finding is that effect sizes for antidepressants were generally smaller than for other drug groups. Alternatively, heterogeneous samples for depression and anxiety, due to more ambiguous diagnostic criteria, might have contributed to larger between-study variation and thus lower evidential strength.

Using a Bayesian approach allowed us to identify cases in which psychotropic drugs were approved with moderate or even ambiguous evidence for its efficacy. Approximately, a quarter of all meta-analytic BFs fell within this tier (i.e. BF BMA < 30). In a few instances, drugs were approved despite ambiguous statistical evidence (i.e. 1/3 < BF BMA < 3). Sometimes approval was based on other considerations. For example, bupropion SR (sustained-release) was approved based on bio-equivalence with immediate-release bupropion, despite negative efficacy trials for bupropion SR (U.S. Food and Drug Administration ‘Bupropion SR’, 1996). Other times, negative or ‘failed’ trials were not included in the efficacy determination. For example, for vilazodone, three of five trials were considered ‘failed’, as the active comparator did not separate from placebo. The FDA has a history of ignoring failed trials because they supposedly lack assay sensitivity, the ability to differentiate an effective treatment from a less effective or ineffective one (Chuang-Stein, Reference Chuang-Stein2014). Although other considerations certainly play a role in the approval process, the example of vilazodone illustrates how the FDA's current practice of determining efficacy using two independent statistically significant trials (regardless of the number of additional negative trials) can lead to inconsistent decision making in practice. Under the Bayesian framework, endorsement of this drug would not have been recommended.

How BFs could aid evidence-based treatment choices

For the purpose of drug development and endorsement, Bayesian meta-analysis offers several advantages over classical, frequentist meta-analysis, suggested by the FDA (U.S. Department of Health and Human Services et al., 2017). Although frequentist meta-analysis is well-equipped to estimate the size of a treatment effect and its uncertainty (van Ravenzwaaij & Ioannidis, Reference van Ravenzwaaij and Ioannidis2019), it cannot differentiate between the absence of evidence (uncertainty regarding the effect) and evidence of absence (e.g. evidence for effect = 0; a similar argument was made by Monden et al., Reference Monden, Roest, van Ravenzwaaij, Wagenmakers, Morey, Wardenaar and de Jonge2018). This is especially important in the context of failed or negative trials, which could either indicate insufficient data or non-effectiveness of the drug. If the problem is merely the absence of evidence, the sponsor might perform additional trials to prove efficacy, whereas non-approval should be issued when evidence of absence has been demonstrated.

Bayesian meta-analysis yields both pooled effect sizes and evidential strength. Effect size estimates from the current analysis are similar to those from previous meta-analyses. Combining pre- and post-marketing studies, effect sizes for methylphenidate for ADHD, antipsychotics, and antidepressants approved for depression were estimated to be 0.77, 0.51, and 0.38, respectively (Leucht et al., Reference Leucht, Helfer, Gartlehner and Davis2015). These estimates are slightly larger than ours (i.e. 0.72, 0.45, and 0.30, respectively), which may be because we included unpublished, negative trials. Moreover, our effect size estimates are similar to previous network meta-analyses, which aimed to compare the efficacy and safety profiles between antipsychotics (Huhn et al., Reference Huhn, Nikolakopoulou, Schneider-Thoma, Krause, Samara, Peter and Leucht2019), antidepressants for depression (Cipriani et al., Reference Cipriani, Furukawa, Salanti, Chaimani, Atkinson, Ogawa and Geddes2018), and ADHD medication (Cortese et al., Reference Cortese, Adamo, Giovane, Mohr-Jensen, Hayes, Carucci and Cipriani2018). For example, estimates for antipsychotics ranged from 0.27 to 0.89 (Huhn et al., Reference Huhn, Nikolakopoulou, Schneider-Thoma, Krause, Samara, Peter and Leucht2019; here: 0.27–0.79) and the pooled effect size for antidepressants was 0.30 (Cipriani et al., Reference Cipriani, Furukawa, Salanti, Chaimani, Atkinson, Ogawa and Geddes2018; here: 0.30).

Our study adds novel information to previous research by using BFs to estimate the strength of evidence. The network meta-analyses conclude with rankings based on efficacy and safety data. We offer additional insight into the strength of evidence for efficacy. Sometimes, our rankings align, lending further support to the efficacy of the drug. For example, based on effect size, Huhn et al. (Reference Huhn, Nikolakopoulou, Schneider-Thoma, Krause, Samara, Peter and Leucht2019) ranked risperidone in the top tier and our analysis additionally indicates very strong support for the treatment effect. In other cases, BFs advise caution. For example, based on effect size, Huhn et al. (Reference Huhn, Nikolakopoulou, Schneider-Thoma, Krause, Samara, Peter and Leucht2019) ranked olanzapine highly, whereas our analysis places it in the lower quarter in comparison with the other drugs. Our analysis suggests that all else being equal, risperidone should be preferred over olanzapine. Additionally, BFs can help to refine rankings based on efficacy and safety data. For example, Cipriani et al. (Reference Cipriani, Furukawa, Salanti, Chaimani, Atkinson, Ogawa and Geddes2018) performed a network meta-analysis pooling efficacy and safety data for antidepressants for depression. Based on relatively high response rates and relatively low dropout rates, they recommended – among others – mirtazapine and paroxetine. Here, paroxetine is supported by extreme evidence, whereas mirtazapine has the third lowest evidential strength. All else being equal, paroxetine should be preferred over mirtazapine. For the purpose of drug prescription, BFs offer a valuable source of information for clinicians. Prescription and use of psychotropic drugs has steadily increased over the past few decades (Ilyas & Moncrieff, Reference Ilyas and Moncrieff2012; Olfson & Marcus, Reference Olfson and Marcus2009; Stephenson, Karanges, & McGregor, Reference Stephenson, Karanges and McGregor2013). With a wide variety of drugs available, choosing the most appropriate one can be difficult, highlighting the importance of good evidence. Next to safety and patient-specific concerns, considerations regarding effect size and evidential strength play a central role. Commonly, strength of evidence is assessed by qualitative or subjective criteria. The American Psychological Association (APA) considers evidential strength for their recommendations by reviewing the available literature and assessing risk of bias, the degree to which reported effects are unidirectional, directness of the outcome measure, quality of the control condition, and precision of the estimate (e.g. width of a 95% confidence interval; American Psychological Association, 2019; American Psychological Association, 2017). Although these considerations are certainly meaningful, implementing them in clinical practice can be unsystematic, easily influenced by the rater, and might fail to effectively quantify strength of evidence (i.e. the likelihood of the treatment effect existing). For example, the APA recommends sertraline for the treatment of PTSD and argues that this decision is supported by the moderate strength of evidence. In contrast, our analysis suggests no evidence for a treatment effect of sertraline at the time of approval for PTSD.

Moreover, BFs offer a valuable source of information when effect sizes are highly comparable between drugs. For instance, the APA concludes that many antidepressants are equally effective (American Psychological Association, 2019) and makes no clear recommendation which one to prefer. In these cases, BF could be used as an additional criterion, as antidepressants vary substantially in evidential strength (see also Monden et al., Reference Monden, Roest, van Ravenzwaaij, Wagenmakers, Morey, Wardenaar and de Jonge2018). For example, leaving aside non-efficacy considerations, but considering both the effect size and evidential strength, one might choose venlafaxine or paroxetine over sertraline or citalopram, two very commonly prescribed antidepressants (Moore & Mattison, Reference Moore and Mattison2017; although we acknowledge that safety/tolerability considerations may alter this choice). This advantage still holds if effect sizes vary from medium to large. For example, for ADHD drugs, Cotempla XR, Evekeo ODT, and Adderall clearly demonstrated the highest evidential strength with comparable effect size and might be preferred over the others.

Strengths and limitations

Adopting a Bayesian framework enabled us to capture differences in evidential strength between disorders and drug groups. Nonetheless, the results should be considered in light of a few limitations. First, although we used information from the FDA-registered trials, limiting the influence of reporting bias, we were confined to data from approved drugs and pre-marketing studies. Consequently, we cannot speak to the process and statistical evidence of non-approval, or strength of evidence after post-marketing studies. As such, the current results only reflect the evidential strength at the time of approval but are not necessarily accurate reflections of the current state of evidence. Second, some values were unavailable and had to be imputed, which might have introduced extra noise. Nonetheless, imputation did not seem to be associated with increased between-study heterogeneity as indicated by comparable posterior probabilities of the random-effect model between drugs for which test statistics were available and drugs for which test statistics were imputed. Finally, Bayesian analysis is dependent on the choice of prior. Although this is an often-heard critique, we mostly restricted our analyses to default priors to ensure comparability of our results across drug groups. Furthermore, our sensitivity analysis indicated that different choices for the scale parameter of the prior did not change interpretation of the BF qualitatively in the present analysis.

The main strength of our study is that, to our knowledge, we have performed the first large-scale comparison of evidential strength between several disorders. Previously, Bayesian methods have been proposed for and discussed in the context of the drug development and endorsement (Burke, Billingham, Girling, & Riley, Reference Burke, Billingham, Girling and Riley2014; Cipriani et al., Reference Cipriani, Furukawa, Salanti, Chaimani, Atkinson, Ogawa and Geddes2018; Huhn et al., Reference Huhn, Nikolakopoulou, Schneider-Thoma, Krause, Samara, Peter and Leucht2019; Monden et al., Reference Monden, de Vos, Morey, Wagenmakers, de Jonge and Roest2016, Reference Monden, Roest, van Ravenzwaaij, Wagenmakers, Morey, Wardenaar and de Jonge2018; van Ravenzwaaij & Ioannidis, Reference van Ravenzwaaij and Ioannidis2019; Woodcock, Temple, Midthun, Schultz, & Sundlof, Reference Woodcock, Temple, Midthun, Schultz and Sundlof2005). In recent years, Bayesian network meta-analyses specifically became increasingly popular in medical sciences (Hamza et al., Reference Hamza, Cipriani, Furukawa, Egger, Orsini and Salanti2021; Holper, Reference Holper2020; Huhn et al., Reference Huhn, Nikolakopoulou, Schneider-Thoma, Krause, Samara, Peter and Leucht2019). Our study differs from previous studies that were concerned with efficacy and tolerability, but that either did not address evidential strength or did not compare evidential strength across different psychological disorders. Here, we provided an overview of the evidential standard for psychotropic drugs at the time of FDA approval and demonstrated how psychotropic drugs differ in their evidential strength, using BFs.

Conclusion

Taken together, the present analysis offers interesting insights into the evidential strength within and across different psychotropic drugs. We observed large differences in evidential strength and trialling between disorders. Although the majority of re-analysed drugs was supported by substantial evidence, we also observed cases where the current approval process led to endorsement despite ambiguous statistical evidence. Moreover, evidential strength differed greatly between drugs and across disorder groups. Lower evidential support for efficacy was observed more frequently for antidepressants. Differences in evidential strength might be a consequence of different standards in trialling. The BF as a measure of evidential strength might offer a valuable, additional source of information and helps to set up a consistent and transparent standard for evaluating strength of evidence of efficacy in the approval process of psychotropic drugs.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/S0033291721003950.

Data

The datasets generated and analysed during the current study are available at OSF, https://osf.io/364t5.

Acknowledgements

We would like to acknowledge the Center for Information Technology of the University of Groningen for their support and for providing access to the Peregrine high performance computing cluster. Rei Monden was partially supported by the Clinical Investigator's Research Project in Osaka University Graduate School of Medicine.

Author contributions

Initial planning and preregistration were drafted by DvR, YAdV, and MMP, and RM and JAB provided feedback. YAdV and MMP collected the additional data. MMP performed data analysis and drafted the manuscript under supervision of DvR and YAdV. All co-authors provided detailed feedback. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Financial support

This project is funded by an NWO Vidi grant to D. van Ravenzwaaij (016.Vidi.188.001).

Conflict of interest

None.