Introduction

Acute respiratory diseases (ARD) represent a significant concern to the US military, and thus to national security, as they are responsible for between 12 000 and 27 000 missed training days among recruits annually [1]. The US Army defines ARD syndromically: a soldier is considered to have ARD if he or she presents to a medical treatment facility with an oral temperature >100.5 °F, recent signs or symptoms of acute respiratory tract inflammation, and either a limited-duty profile or removal from duty altogether. As a result, viral and bacterial pathogens, as well as non-pathogenic conditions, can all result in ARD. Adenoviruses, influenza viruses, respiratory syncytial viruses and human coronaviruses make up the majority of viral contributors to ARD, while Streptococcus pneumoniae, Streptococcus pyogenes (‘strep’) and Mycoplasma pneumoniae are among the major bacterial etiologic agents of ARD [Reference Gray2, Reference Sanchez3].

Basic Combat Training (BCT) is a 10-week training programme designed to prepare recruits for service in the Army. It includes both physical training and practical training exercises, and culminates in a physical fitness test that all recruits must pass in order to graduate. Recruits at BCT have been shown to be at higher risk of ARD than their civilian counterparts [Reference Gray2, Reference Sanchez3]. This excess risk has been attributed to crowded barracks [Reference Breese, Stanbury and Upham4] and other environmental challenges [Reference Russell5], as well as to stress from physically and psychologically demanding conditions [Reference Sanchez3, Reference Breese, Stanbury and Upham4, Reference Gleeson6, Reference Cohen, Tyrrell and Smith7]. Four forts currently serve as BCT sites: Fort Jackson (South Carolina), Fort Benning (Georgia), Fort Leonard Wood (Missouri) and Fort Sill (Oklahoma). Fort Jackson is the largest BCT installation, training approximately 35 000 recruits annually.

In the 1960s, before the rollout of the adenovirus vaccine, approximately 80% of trainees would contract a respiratory infection during BCT. The majority of those infections were attributed to adenovirus types 4 and 7 [Reference Dudding8]. Of those who contracted a respiratory infection, nearly 20% were hospitalised [Reference Breese, Stanbury and Upham4].

To address this burden, live, enteric-coated oral adenovirus vaccines against types 4 and 7 (AdV-4 and -7, referred to collectively here as the ‘old vaccine’) were used routinely year-round beginning in 1971 [Reference Gray2]. The sole manufacturer of AdV-4 and -7 ceased production in 1994 because of low public demand for the product. The final doses were shipped in 1996 and were distributed to Army recruits only during the winter months until the stockpile was depleted in 1999 [Reference Clemmons9]. As a result of the phase-out, ARD rates, particularly adenovirus-associated ARD rates, increased at BCT sites [Reference Breese, Stanbury and Upham4]. The increased disease burden cost the Army an estimated $10–$26 million annually in medical costs and lost training time. In 2011, live, enteric-coated oral vaccines against types 4 and 7 were approved for use in military personnel [Reference Radin10]. These ‘new vaccines’ were derived from strains and stocks used by the original manufacturer, and all protocols and logistical procedures were kept as close as possible to those used in the old vaccine manufacturing line [Reference Hoke and Snyder11]. The subsequent year-round administration of the new vaccines resulted in drastic, sustained declines in ARD at all BCT installations [Reference Clemmons9, Reference Radin10].

Benzathine penicillin G (BPG, trade name ‘Bicillin’) is a high-dose antibiotic injection administered to military recruits upon accession to BCT. Prophylactic use of BPG is intended to reduce the impact of bacterial infections imported by recruits upon entry to BCT, in the hope of preventing further spread [Reference Morris and Rammelkamp12]. Multiple studies have suggested that BPG and other antibiotic prophylaxis have positive effects on recruit health and ARD rates [Reference Davis and Schmidt13, Reference Gray14]. Injection with 1.2 million units of BPG has been shown to provide protection against infection with strep and other bacterial pathogens for up to 6 weeks [Reference Morris and Rammelkamp12], although other studies suggest that the duration may be shorter [Reference Kassem15]; one found that protection may last less than 2 weeks in Navy recruits [Reference Broderick16]. Generally, BPG prophylaxis is given once to all Army recruits upon entry to the 10-week BCT programme, and additional doses are not administered after protection wanes.

Although it has been Army policy to administer BPG to new recruits since the 1950s, administration has varied. For reasons that are not clear, Fort Jackson has never given BPG prophylaxis. In addition, multiple BPG manufacturing problems resulted in relatively minor, localised supply shortages, some of which were associated with increases in ARD burden. One of the most extensive BPG shortages occurred in April–July 2016 and affected all BCT installations. Fort Benning chose not to resume routine prophylaxis after the shortage ended and has not administered BPG since March 2016. Because BPG use varied over time and across sites in a pattern that differed from the variation in adenovirus vaccine administration, these data provide an opportunity to conduct an observational study of the impact of BPG use on ARD.

Here we fit, k-fold cross-validate and externally validate random forest (RF) and Poisson regression (PR) models of weekly ARD counts in Army recruits at BCT installations from January 1991 to April 2017, in order to evaluate the role of BPG prophylaxis in reducing ARD incidence. We compared the performance of the RF with that of the PR in predicting all-cause ARD, and quantified the relative importance of BPG and other covariates in this prediction. We hypothesised that BPG availability would be significantly associated with ARD; specifically, that BPG would have a moderately protective effect on ARD incidence, and that its effect size would be smaller than that of the new adenovirus vaccine.

Methods

Data

As mentioned above, a trainee is classified as having ARD if he or she presents to a medical treatment facility with an oral temperature >100.5 °F, recent signs or symptoms of acute respiratory tract inflammation, and either a limited-duty profile or removal from duty altogether. Weekly counts of all-cause ARD among recruits at the four BCT installations from January 1991 to April 2017, together with recruit population size and training type, were obtained from the Army ARD Surveillance Program [Reference Lee, Eick and Ciminera17]. Training type was either BCT or One Station Unit Training (OSUT), a longer programme that combines BCT and advanced individual training (AIT) so that both are completed in succession at the same installation, rather than completing BCT at one installation and travelling to a second installation for AIT. BCT installations are required to report weekly ARD counts to the Army Public Health Center as part of the ARD Surveillance Program. Data on BPG prophylaxis and adenovirus vaccine availability (both the old and new vaccines) were extracted from the literature and cross-referenced against ARD Surveillance reports and Army memoranda.
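Because the outcome is defined syndromically, the case definition can be read as a simple boolean rule applied to each presentation. The sketch below expresses that rule and a weekly count in Python. The record type and its field names are hypothetical and illustrative only; they are not taken from the ARD Surveillance Program's data dictionary.

```python
from dataclasses import dataclass
from typing import Iterable


# Hypothetical record type; field names are illustrative, not the
# surveillance program's actual schema.
@dataclass
class Presentation:
    oral_temp_f: float          # oral temperature in degrees Fahrenheit
    resp_inflammation: bool     # recent signs/symptoms of acute respiratory tract inflammation
    limited_duty_profile: bool  # placed on a limited-duty profile
    removed_from_duty: bool     # removed from duty altogether


def meets_ard_definition(p: Presentation) -> bool:
    """Return True if a presentation satisfies the syndromic ARD definition."""
    fever = p.oral_temp_f > 100.5  # strict inequality, as in the definition
    duty_impact = p.limited_duty_profile or p.removed_from_duty
    return fever and p.resp_inflammation and duty_impact


def weekly_ard_count(presentations: Iterable[Presentation]) -> int:
    """Count presentations in one installation-week that meet the definition."""
    return sum(meets_ard_definition(p) for p in presentations)
```

For example, a presentation at exactly 100.5 °F does not qualify, because the temperature criterion is a strict inequality; nor does a febrile presentation without respiratory signs.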

Analysis

Data cleaning and analysis were implemented using R version 3.2.1 (R Core Team 2014). Both an RF model and a PR model were used in a k-fold cross-validation framework (k = 5), with 3 years of data (one from each ‘era’ of adenovirus vaccine availability) held out for external validation. In k-fold cross-validation, the data are partitioned into k subsets (‘folds’) of roughly equal size. The model is trained on k − 1 folds and used to predict the values in the remaining, held-out fold. The process is repeated so that each fold serves once as the test set, and a predicted value is generated for every observation in the original dataset. Performance was evaluated by comparing the mean absolute error (MAE) across folds within the same model, and within each fold across models [Reference Arlot and Celisse18]. We chose k = 5 to conserve computational resources while providing an 80/20 split between the training and testing sets in each iteration. The superiority of the RF relative to the PR was calculated as

$$\text{Superiority} = \frac{\mathrm{MAE}_{\mathrm{PR}} - \mathrm{MAE}_{\mathrm{RF}}}{\mathrm{MAE}_{\mathrm{PR}}} \times 100\% \qquad (1)$$

and the statistical significance of the difference in MAE was determined with a Student's t test adjusted for sample overlap [Reference Bouckaert and Frank19].
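The cross-validation loop and the MAE comparison can be sketched in a few lines. This is a minimal illustration in Python, not the study's R code. It assumes that folds are formed by random shuffling (the text does not state how weeks were assigned to folds), and `fit` stands for any hypothetical training function that returns a predictor. The `superiority` function implements the relative MAE reduction of equation 1.

```python
import random
from statistics import mean


def kfold_indices(n, k=5, seed=0):
    """Randomly partition indices 0..n-1 into k folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def mae(observed, predicted):
    """Mean absolute error between paired observations and predictions."""
    return mean(abs(o - p) for o, p in zip(observed, predicted))


def superiority(mae_rf, mae_pr):
    """Percentage reduction in MAE of the RF relative to the PR."""
    return 100.0 * (mae_pr - mae_rf) / mae_pr


def cross_validated_maes(y, X, fit, k=5, seed=0):
    """Train on k - 1 folds, predict the held-out fold; return one MAE per fold.

    `fit(X_train, y_train)` is a hypothetical callable that must return a
    function mapping one row of X to a predicted count.
    """
    folds = kfold_indices(len(y), k, seed)
    maes = []
    for test in folds:
        test_set = set(test)
        train = [i for i in range(len(y)) if i not in test_set]
        predict = fit([X[i] for i in train], [y[i] for i in train])
        maes.append(mae([y[i] for i in test], [predict(X[i]) for i in test]))
    return maes
```

As a check on the formula, the rounded external-validation MAEs reported in the Results (4.9 for the RF and 6.2 for the PR) give `superiority(4.9, 6.2)` of about 20.97%.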

RF procedures and prediction metrics were run using the randomForest [Reference Liaw and Wiener20] and forecast [Reference Hyndman21] R packages, respectively. An RF is a non-linear ensemble of decision trees, each built from a bootstrapped sample of the original dataset; the observations not drawn into a given bootstrap sample constitute the out-of-bag sample on which that tree's performance is tested [Reference Breiman22–Reference Cutler24]. Each decision tree is a hierarchical partition of its bootstrapped data, grown by choosing, at each split, a highly discriminative variable from a randomly drawn subset of the full covariate set [Reference Breiman23]. Each level of a categorical variable was entered into the model as a separate binary variable in order to minimise bias in the selection of variables to split on [Reference Strobl25]. The percentage increase in mean squared error (MSE) was used as the measure of variable importance to assess the relative predictive ability of each covariate in the model, including BPG.
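The idea behind an MSE-based importance score can be illustrated with permutation importance: shuffle one covariate to break its association with the outcome, re-predict, and measure how much the error grows. The randomForest package computes its %IncMSE by permuting each variable in every tree's out-of-bag sample and, by default, scaling the result by its standard error. The sketch below is a simpler, model-agnostic analogue (an assumption, not a reimplementation): it permutes columns of a single evaluation set and reports the literal percentage increase over the baseline MSE. `predict` is any hypothetical function mapping a NumPy array of covariates to predictions.

```python
import numpy as np


def percent_increase_mse(predict, X, y, n_repeats=10, seed=0):
    """Permutation importance expressed as % increase in MSE over baseline.

    For each column j, shuffle X[:, j], re-predict, and average
    100 * (MSE_permuted - MSE_baseline) / MSE_baseline over n_repeats shuffles.
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    baseline = np.mean((y - predict(X)) ** 2)
    importance = np.empty(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])  # break the link with y
            mse = np.mean((y - predict(X_perm)) ** 2)
            increases.append(100.0 * (mse - baseline) / baseline)
        importance[j] = np.mean(increases)
    return importance
```

A covariate that the model ignores scores exactly zero, while a covariate the model relies on heavily can score well above 100%, which is the scale on which the importance values in the Results are reported.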

PR was used to estimate incidence rate ratios (IRRs) and corresponding confidence intervals (CIs) for BPG and each of the other covariates on weekly ARD counts. Trainee population size was included in this model as an offset rather than as a fixed effect, in order to adjust for the fact that a larger population at risk is expected to produce more cases.
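A Poisson model with a log-population offset has the form log E[cases] = log(population) + Xβ, so exp(β) for each covariate is an IRR. The sketch below fits such a model by iteratively reweighted least squares (IRLS) with NumPy and returns IRRs with Wald 95% CIs. It is an illustration of the technique, not the study's R code. It assumes the first column of `X` is an intercept and that the offset is the natural log of `exposure` (here, trainee population).

```python
import numpy as np


def poisson_irr(X, y, exposure, max_iter=50, tol=1e-10):
    """Fit log(E[y]) = log(exposure) + X @ beta by IRLS.

    Returns (irr, lower, upper): exp(beta) and Wald 95% CI bounds.
    Assumes column 0 of X is an intercept column of ones.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    offset = np.log(np.asarray(exposure, dtype=float))  # coefficient fixed at 1
    beta = np.zeros(X.shape[1])
    beta[0] = np.log(y.sum() / np.exp(offset).sum())  # start at the crude rate
    for _ in range(max_iter):
        eta = offset + X @ beta
        mu = np.exp(eta)
        z = (eta - offset) + (y - mu) / mu  # working response, offset removed
        XtW = X.T * mu                      # Poisson/log-link weights are mu
        new_beta = np.linalg.solve(XtW @ X, XtW @ z)
        converged = np.max(np.abs(new_beta - beta)) < tol
        beta = new_beta
        if converged:
            break
    mu = np.exp(offset + X @ beta)
    cov = np.linalg.inv((X.T * mu) @ X)     # inverse Fisher information
    se = np.sqrt(np.diag(cov))
    return np.exp(beta), np.exp(beta - 1.96 * se), np.exp(beta + 1.96 * se)
```

With two groups of 2000 person-weeks each, where the exposed group has 136 rather than 200 cases, the fitted IRR for the exposure indicator is 0.68, that is, a 32% lower incidence rate.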

The RF model was then re-run on the external validation set, and variable importance measures were extracted and compared with the average importance measures from the cross-validation. Similarly, a PR model was fit to the external validation set. The MAEs for the RF and PR models were compared using equation 1.
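The external validation set consists of one calendar year drawn from each adenovirus vaccine era. The sketch below shows one way to draw such an era-stratified holdout and split weekly records accordingly. The era-to-year mapping is hypothetical and for illustration only: real era boundaries (installation-specific stockpile depletion, the 2011 reintroduction) do not align neatly with calendar years, and the study's actual holdout years are not reproduced here.

```python
import random

# Hypothetical, illustrative era cut-points; not the study's definitions.
ILLUSTRATIVE_ERAS = {
    "old vaccine": range(1991, 1999),
    "shortage": range(1999, 2011),
    "new vaccine": range(2011, 2018),
}


def era_stratified_holdout(years_by_era, seed=0):
    """Pick one year at random from each era; return (holdout, training) years."""
    rng = random.Random(seed)
    holdout = sorted(rng.choice(sorted(years)) for years in years_by_era.values())
    held = set(holdout)
    training = sorted(
        year for years in years_by_era.values() for year in years if year not in held
    )
    return holdout, training


def split_weekly_rows(rows, holdout_years):
    """Split (year, week, count) rows into training and external-validation sets."""
    held = set(holdout_years)
    train = [row for row in rows if row[0] not in held]
    test = [row for row in rows if row[0] in held]
    return train, test
```

Stratifying by era ensures that the external validation set contains weeks with the old vaccine, with no vaccine and with the new vaccine, so that the models are not evaluated only under one vaccine regime.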

We then ran PR separately on the entire training dataset (used in the cross-validation) to obtain IRR point estimates and corresponding CIs for BPG and other covariates. These IRR estimates allowed us to test the hypothesis that BPG would have a significant protective effect on the rate of all-cause ARD, and to compare the magnitude of its effect with those of the adenovirus vaccines.

Results

Prediction

Cross-validation (22.5 years)

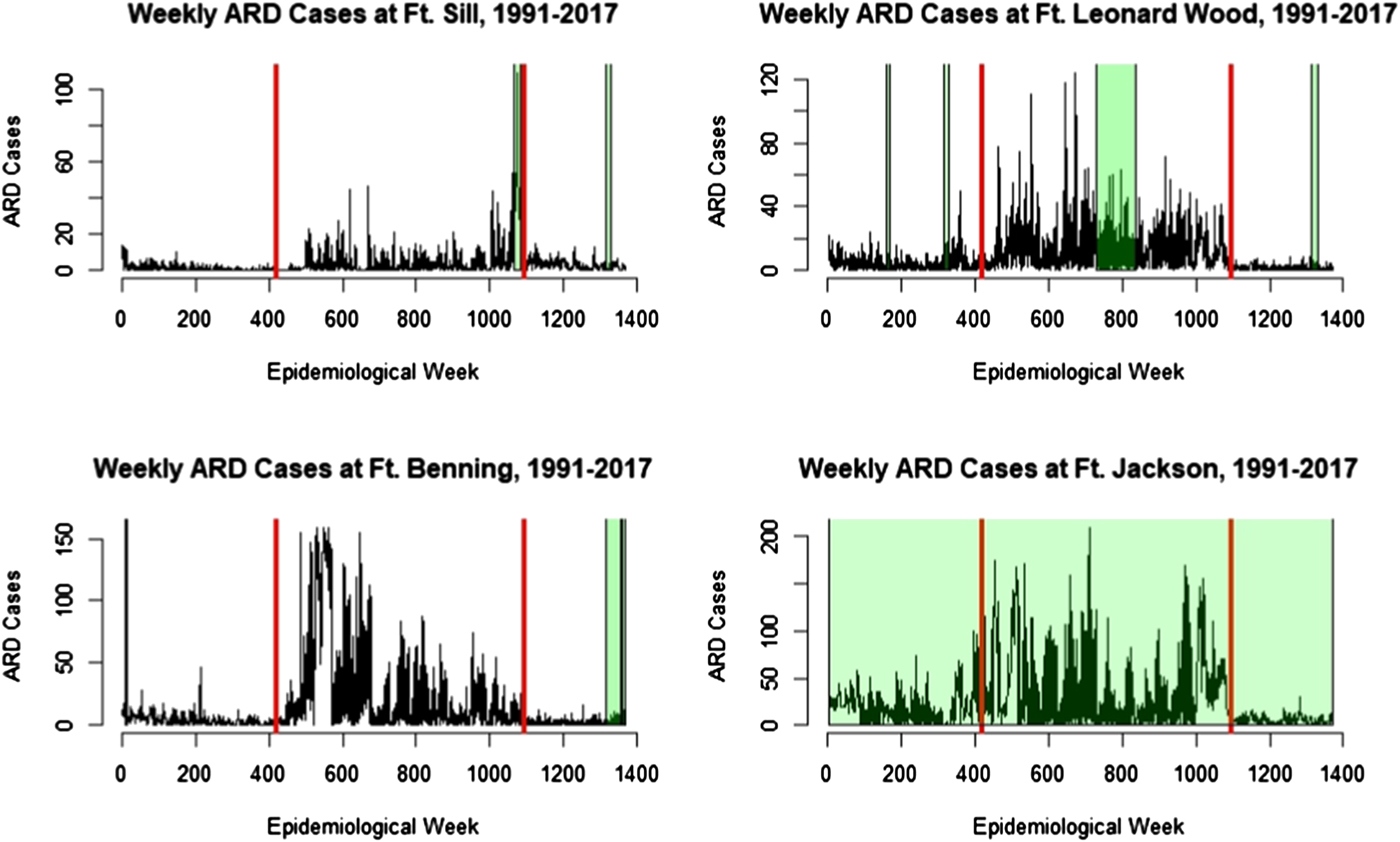

Figure 1 presents the raw time series (1991–2017) for the four BCT installations.

Fig. 1. Time series of ARD cases from 1991 to 2017 at Fort Sill (top left), Fort Leonard Wood (top right), Fort Benning (bottom left) and Fort Jackson (bottom right). The period between the red bars is the adenovirus vaccine shortage, with the time preceding the left bar representing the ‘old’ vaccine era, and the ‘new’ vaccine era to the right of the right bar. Green shading indicates BPG prophylaxis shortage. Fort Jackson has never used BPG for prophylaxis.

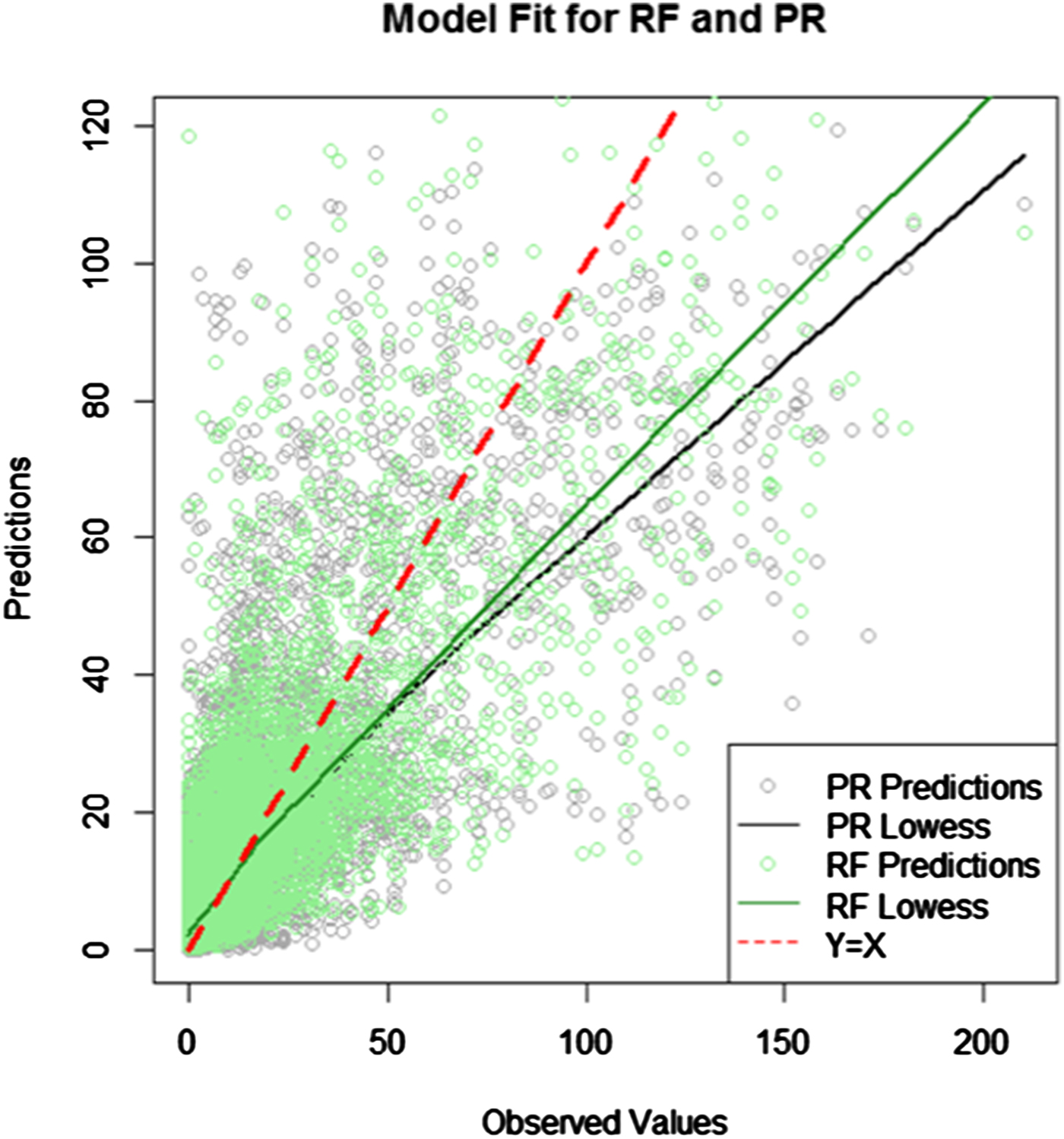

The predicted values from the RF and PR fivefold cross-validation analyses are overlaid on the original time series in Figure 2. Prediction accuracy for the RF and PR k-fold cross-validation analyses is presented in Table 1. The RF model explained on average 65.2% of the variance in the data, and this estimate was consistent across testing folds, ranging from 63.8% to 66.5%. The RF model had a lower MAE than the PR, both on average and in each individual fold, with average MAEs of 6.5 (range 6.3–6.7) and 7.3 (range 7.1–7.7) for the RF and PR models, respectively. From equation 1, the average superiority of the RF model over the PR model was estimated at 11.6% (range 10.9–12.1% across folds). This difference was statistically significant (P < 0.001).
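The ‘variance explained’ figure can be computed from out-of-fold predictions using the common definition 100 × (1 − MSE / Var(y)); the randomForest package reports an analogous quantity for its out-of-bag predictions. The Python sketch below assumes this definition (with the population variance of the observed counts) and summarises per-fold values as a mean and range, the form in which they are reported above. It is illustrative and does not reproduce the study's per-fold values.

```python
from statistics import fmean, pvariance


def percent_variance_explained(observed, predicted):
    """100 * (1 - MSE / Var(y)), using the population variance of observed."""
    mse = fmean((o - p) ** 2 for o, p in zip(observed, predicted))
    return 100.0 * (1.0 - mse / pvariance(observed))


def summarise_folds(values):
    """Return (mean, min, max) of a per-fold metric."""
    return fmean(values), min(values), max(values)
```

Perfect predictions give 100%, and predicting every week with the overall mean gives 0%; values can fall below zero for a model that does worse than the mean.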

Fig. 2. Predicted and observed values from the fivefold cross-validation.

Table 1. Comparing prediction accuracy for fivefold cross-validation by mean absolute error for random forest and Poisson regression

Variable importance, measured as the percentage increase in MSE for each independent variable when left out of the model, is presented for each fold of the RF model in Table 2. Notably, the new adenovirus vaccine was the most important variable in all five folds, with an average increase in MSE of 133%. Trainee class size in hundreds and the old adenovirus vaccine were the second and third most important variables, with mean increases in MSE of 83% and 75%, respectively. The month of January and training type were the next most important predictors, with increases in MSE of 39% and 35%, respectively. Excluding the indicator of BPG use increased MSE by an average of 21.2%.

Table 2. Variable importance (% increase mean squared error when left out) for random forest model (k-fold cross-validation) using trainee class size

External validation

Both the PR and RF models were used to predict ARD case counts in an external validation set consisting of 3 years, one from each adenovirus vaccine ‘era’. Figure 3 overlays the predicted values from each of the two models on the observed data. The RF model again outperformed the PR, by 20.9%, with MAE values of 4.9 and 6.2 for the RF and PR, respectively (data not shown).

Fig. 3. Predicted and observed ARD case counts in external validation set.

Table 3 presents the average variable importance measures for the covariates from the cross-validated RF model, and those from when the same RF model was applied to the external validation set. The five most important variables in the cross-validation analysis were also the five most important variables in the validation analysis: the new adenovirus vaccine availability, trainee population size, availability of the old adenovirus vaccine, the month of January and training type. In both analyses, BPG availability was ranked as the ninth most important variable.

Table 3. Comparison of variable importance between the cross-validation set and the external validation set from RF models

Inference on BPG effectiveness

IRRs from the PR model fit to the training dataset used in the cross-validation procedure are presented in Table 4. BPG prophylaxis availability was significantly protective against all-cause ARD (IRR = 0.68, 95% CI 0.67–0.70). Similarly, both the old and new adenovirus vaccines were significantly protective against ARD compared with the adenovirus vaccine shortage, with IRRs of 0.39 (95% CI 0.38–0.39) and 0.11 (95% CI 0.10–0.11), respectively. Compared with Fort Jackson, Forts Benning and Leonard Wood were associated with significantly higher ARD incidence (IRR = 1.17, 95% CI 1.13–1.20 and IRR = 1.29, 95% CI 1.26–1.33, respectively), whereas Fort Sill was associated with significantly lower incidence (IRR = 0.67, 95% CI 0.65–0.69). BCT training was a significant risk factor compared with OSUT. Furthermore, each 100-person increase in trainee population size was associated with a 3% increase in the ARD incidence rate.

Table 4. Incidence rate ratios for independent variables in the Poisson regression model fit to the training data (22.5 years) with a population offset

Discussion

In this study, we sought to build a predictive model for all-cause ARD and to assess how important BPG and other covariates are to its performance. We hypothesised that BPG would be significantly associated with all-cause ARD; specifically, that it would be moderately predictive of, and significantly protective against, all-cause ARD. We also hypothesised that the magnitudes of its association and predictive ability would be lower than those of the new adenovirus vaccine.

The RF model explained on average over 65% of the variance in the data, and outperformed the PR model in k-fold cross-validation by 11.6% (Table 1). In the external validation, the RF model's predictive accuracy was over 20% better than that of the PR model.

In the PR fit to the entire training dataset, BPG was associated with a 32% reduction in ARD incidence (IRR = 0.68; 95% CI 0.67–0.70). In the RF k-fold cross-validation, BPG was the seventh most important variable, with a 21% increase in MSE. This suggests that while BPG availability is not highly predictive of all-cause ARD, it does have a significant protective effect. This result is consistent with a previous study which found that BPG prophylaxis was associated with broad and persistent protection against not only group A streptococcal infections, but ARD in general [Reference Gunzenhauser26].

It is well established that the introduction of the new adenovirus vaccine was associated with substantial declines in ARD [Reference Clemmons9, Reference Radin10]. Our finding that the new adenovirus vaccine was associated with an 89% lower incidence rate compared with periods when no adenovirus vaccine was available further supports its effectiveness. The old adenovirus vaccine, which was thought to be effective [Reference Russell27] but not to the same degree as the new vaccine [Reference Clemmons9], was also statistically significantly protective against ARD, with a 61% lower incidence rate compared with weeks when no adenovirus vaccine was available.
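The percentage reductions quoted in this discussion follow directly from the IRRs: the percentage change in incidence rate is (IRR − 1) × 100, so an IRR of 0.68 corresponds to a 32% reduction. Under the log-linear PR model, an IRR for a one-unit change also compounds multiplicatively over several units. The short Python sketch below encodes both relationships; the five-unit example is a hypothetical illustration that assumes the log-linear form holds over that range.

```python
def percent_change_from_irr(irr):
    """Percentage change in incidence rate implied by an IRR (negative = reduction)."""
    return 100.0 * (irr - 1.0)


def irr_for_k_units(irr_per_unit, k):
    """Under a log-linear Poisson model, the IRR for a k-unit increase is irr**k."""
    return irr_per_unit ** k
```

Applied to the reported IRRs, 0.68 (BPG), 0.39 (old vaccine) and 0.11 (new vaccine) give changes of −32%, −61% and −89%. For trainee population size, an IRR of 1.03 per 100 trainees implies `irr_for_k_units(1.03, 5)` of about 1.16 for a hypothetical increase of 500 trainees.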

Trainee population size in hundreds was the second most important variable in the RF model, with an 84% mean increase in MSE across the five folds. This result was expected, since larger trainee class sizes mean that more people are at risk of contracting ARD. In the Poisson model, log trainee population size in hundreds was included as an offset in order to constrain its effect on the remaining variables' coefficient estimates; an offset enters the model with its coefficient fixed at 1, so the remaining coefficients describe effects on the per-trainee incidence rate.

Temporal covariates such as the months of January and February were also important variables, associated with average increases in MSE of 42% and 25%, respectively. In the PR model, January and February were both statistically significant protective factors for ARD (IRR = 0.53 and 0.81, respectively). January follows what is usually an extended winter holiday, during which trainees are permitted to leave the installation. Leaving the psychologically and physically stressful BCT environment may temporarily improve immune function upon their return [Reference Dudding8]. An additional explanation could be that the summer months are when the most recruits receive BCT, so more transmission is expected then than in other months.

Fort Leonard Wood and Fort Benning were both associated with significantly higher ARD incidence than Fort Jackson (IRRs = 1.29 and 1.17, with 95% CIs of 1.26–1.33 and 1.13–1.20, respectively). Conversely, Fort Sill was associated with significantly lower ARD incidence (IRR = 0.67, 95% CI 0.65–0.69). Each installation may have a different baseline risk for the various pathogens that make up ARD, owing to differing environmental conditions across sites and to the protocol for assigning new recruits to BCT sites. Recruits from different parts of the country may have different immune profiles and thus may be more or less susceptible to infection with pathogens vulnerable to BPG.

Limitations

This study is subject to a number of limitations. First, our BPG variable reflects the availability of routine prophylaxis; periods without routine prophylaxis do not mean that BPG was unavailable or was not used reactively to control an ongoing outbreak. Situations in which BPG was used to control an outbreak were not explicitly captured in the ARD Surveillance Program, and in such cases only those in the affected trainee class or barracks, not necessarily all trainees at the installation, would receive the antibiotic. Similarly, adenovirus vaccine availability does not necessarily reflect use. Administration may have varied in the few years between the final adenovirus vaccine shipments and the depletion of the installations' respective stockpiles.

Second, ARD has a syndromic case definition that encompasses both viral and bacterial respiratory pathogens. BPG is a narrow-spectrum antibiotic, effective against only specific bacteria, including some streptococci and Treponema. Meanwhile, the majority of ARD in this population is thought to be caused by viral pathogens [Reference Gray2, Reference Sanchez3]. BPG effectiveness in a given week is therefore a function of the composition of respiratory pathogens circulating in the recruit population, which is not captured by the ARD Surveillance Program. Military treatment facilities are encouraged to test at least 60% of recruits with ARD for strep, but actual strep testing compliance rates are around 30%. We opted to use ARD rather than a strep-specific outcome because of low, and potentially differential, testing compliance across installations and over time.

In addition to strep surveillance, overall ARD surveillance practices are known to differ by installation and over time. Differences in surveillance between installations are controlled for explicitly in our model, but the effect of surveillance bias cannot be completely accounted for. As a result, care should be taken when making between-installation comparisons. Within-installation temporal variation in surveillance (such as in response to an outbreak) was not controlled for in the model. The only temporal variation taken into account was month, not week, season or year. Taking week of the year into account would have required estimating an additional 52 parameters, which would have been computationally intensive and would have substantially decreased our statistical power. Year was not included in the analysis so that the resulting models could then be used to forecast ARD for prospective outbreak detection. Season was not included because within-season variation in weather patterns, as well as overall seasonal trends, should have been captured by the finer monthly scale.

The results of this work suggest that BPG prophylaxis reduces the rate of all-cause ARD by 32%. A randomised controlled trial testing the effectiveness of different dosing regimens of this intervention in reducing ARD would provide additional data on its modes of action and overall effectiveness.

Acknowledgements

The authors would like to acknowledge the Acute Respiratory Diseases Surveillance Program at Army Public Health Center, as well as the epidemiologists and preventive medicine doctors at each of the Basic Combat Training installations.