Impact Statement

Digital twin construction (DTC) is a new mode for managing production in construction that leverages the data streaming from a variety of site monitoring technologies and artificially intelligent functions to provide accurate status information and to proactively analyze and optimize ongoing design, planning, and production. DTC applies Building Information Modeling technology and processes, lean construction thinking, the Digital Twin Concept, and AI to formulate a data-centric mode of construction management. The paper introduces DTC for the first time, providing thorough definitions of its core information concepts and its data-processing workflows.

1. Introduction

The “digital twin” concept for data-centric management of a physical system has emerged over the past decade in the domains of manufacturing, production, and operations (Tao et al., Reference Tao, Sui, Liu, Qi, Zhang, Song, Guo, Lu and Nee2019a). Digital twins are generally understood as up-to-date digital representations of the physical and functional properties of a system, which may be a physical instrument (e.g., an aircraft engine), a social construct (e.g., a stock market), a biological system (e.g., a medical patient), or a composite system (such as a construction project, with aspects of physical products and social systems). Digital twins are considered by some to represent a step in the evolution of manufacturing, capable of facilitating the implementation of Industry 4.0 principles (Rosen et al., Reference Rosen, Von Wichert, Lo and Bettenhausen2015; Uhlemann et al., Reference Uhlemann, Lehmann and Steinhilper2017).

Although there is no commonly agreed conceptualization or definition of the term (Kritzinger et al., Reference Kritzinger, Karner, Traar, Henjes and Sihn2018; Gerber et al., Reference Gerber, Nguyen and Gaetani2019), numerous organizations have defined digital twins in terms of their functions and characteristics. According to Tao et al. (Reference Tao, Sui, Liu, Qi, Zhang, Song, Guo, Lu and Nee2019a), digital twins have three main elements: a physical artefact, a digital counterpart, and the connection that binds the two together. The connection is the exchange of data, information, and knowledge between the physical and virtual counterparts, enabled by the development of advanced sensing (e.g., computer vision), internet of things (e.g., interconnected assets), high-speed networking (e.g., 5G internet), and advanced analytics (e.g., machine learning) technologies (Rosen et al., Reference Rosen, Von Wichert, Lo and Bettenhausen2015; Gerber et al., Reference Gerber, Nguyen and Gaetani2019).
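The three elements can be made concrete with a minimal sketch. The class names, fields, and the update rule below are illustrative assumptions and are not taken from Tao et al.; the sketch only shows a digital counterpart whose state changes solely through observations carried over the connection from the physical artefact.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DigitalCounterpart:
    """Digital representation of one physical artefact (hypothetical schema)."""
    artefact_id: str
    state: dict = field(default_factory=dict)
    last_updated: Optional[datetime] = None


@dataclass
class Observation:
    """One reading carried over the connection from the physical side."""
    artefact_id: str
    property_name: str
    value: float
    observed_at: datetime


def apply_observation(twin: DigitalCounterpart, obs: Observation) -> bool:
    """Update the counterpart; reject misrouted or out-of-date readings."""
    if obs.artefact_id != twin.artefact_id:
        return False
    if twin.last_updated is not None and obs.observed_at < twin.last_updated:
        return False
    twin.state[obs.property_name] = obs.value
    twin.last_updated = obs.observed_at
    return True


# Usage: a sensed value on a (hypothetical) slab updates its counterpart.
slab = DigitalCounterpart("slab-03")
reading = Observation("slab-03", "concrete_temp_c", 21.5,
                      datetime(2020, 1, 1, 12, 0, tzinfo=timezone.utc))
apply_observation(slab, reading)
```

Rejecting readings older than the last update is one simple way to keep the representation "up-to-date"; a fuller treatment would track freshness per property rather than per artefact.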

In the academic and popular literature of the built environment, many authors use the term digital twin simply (and naively) as a synonym for Building Information Modeling (BIM) models generated in design and construction. Others perceive “digital twins” as digital representations of buildings, bridges, and so forth, maintained for the purpose of their operation and maintenance and based largely on the BIM models produced through their design and construction (Aengenvoort and Krämer, Reference Aengenvoort, Krämer, Borrmann, König, Koch and Beetz2018; Borrmann et al., Reference Borrmann, König, Koch, Beetz, Borrmann, König, Koch and Beetz2018; Arup, 2019). For example, in addition to listing five differentiators between BIM models and digital twins, Khajavi et al. (Reference Khajavi, Motlagh, Jaribion, Werner and Holmstrom2019) observed that the use of digital twins of buildings is restricted to building operation. In this work, we adopt the Centre for Digital Built Britain’s definition of digital twins in infrastructure: a digital twin is “a realistic digital representation of assets, processes or systems in the built or natural environment” (Bolton et al., Reference Bolton, Butler, Dabson, Enzer, Evans, Fenemore, Harradence, Keaney, Kemp, Luck, Pawsey, Saville, Schooling, Sharp, Smith, Tennison, Whyte, Wilson and Makri2018, p. 10).

This paper develops the core concepts for development and implementation of a data-driven planning and control workflow for the design and construction of buildings and civil infrastructure that is founded on digital twin information systems. We call this “DTC” to characterize the concept and distinguish it from traditional and lean modes of planning and controlling production in the construction industry. The design and construction phases present specific challenges in terms of compilation and operation of an effective digital twin. As project production systems (Ballard and Howell, Reference Ballard and Howell2003), all significant construction projects require intense collaboration among large groups of independent designers, consultants, contractors, suppliers, and public agencies. Each collaborator generates information about the product and the process of construction. They use a wide variety of digital tools with multiple data formats that are generally not interoperable (Sacks et al., Reference Sacks, Eastman, Lee and Teicholz2018, Ch. 3). The federated building models that construction delivery teams compile are not digital twins: they reflect the as-designed and as-planned states of a project, but neither the as-built nor the as-performed states, and they are not updated as the physical state changes. As-built BIM models capture the completed state of construction works, but they are prepared when buildings are delivered to clients, and they exclude the process information. The temporary nature of project sites makes monitoring the building’s components and the actions of equipment and workers challenging.

Yet digital twins for construction are highly desirable because effective decision-making concerning production planning and detailed product design during construction, based on well-informed and reliable “what-if” scenario assessments, can greatly reduce the waste that is inherent in construction (Formoso et al., Reference Formoso, Soibelman, De Cesare and Isatto2002; Horman and Kenley, Reference Horman and Kenley2005; Gonzalez et al., Reference Gonzalez, Alarcon, Mundaca, Pasquire and Tzortzopoulos2007; Ogunbiyi et al., Reference Ogunbiyi, Goulding and Oladapo2014). This idea has spawned broad areas of research, such as Automated Project Performance Control (APPC) (Navon and Sacks, Reference Navon and Sacks2007), Construction 4.0 (Oesterreich and Teuteberg, Reference Oesterreich and Teuteberg2016), and construction applications of technologies for acquisition of as-built geometry, including, for example, photogrammetry and laser scanning (Brilakis et al., Reference Brilakis, Lourakis, Sacks, Savarese, Christodoulou, Teizer and Makhmalbaf2010; Yang et al., Reference Yang, Park, Vela and Golparvar-Fard2015; Han and Golparvar-Fard, Reference Han and Golparvar-Fard2017). Likewise, a plethora of startup companies have emerged in recent years whose raison d’etre is to automate data acquisition from construction sites. However, these have been isolated efforts, with no guiding principles, plans, or concepts for the role they must play in a coherent “digital twin” whole. This is the gap that we address.

The goal therefore is to derive a coherent, comprehensive, and feasible workflow for planning and control of design and construction of buildings and other facilities using digital twin information systems. The methodology is conceptual analysis. The purpose of conceptual analysis is to establish “the conceptual clarity of a theory through careful clarifications and specifications of meaning” (Laudan, Reference Laudan1978). Together with systematic observation/experimentation and quantification/mathematization, conceptual analysis forms an important part of the scientific method (Machado and Silva, Reference Machado and Silva2007) and is focused on breaking down the concepts into elementary parts and studying their interdependencies (Beaney, Reference Beaney and Zalta2018). “These actions include but are not limited to assessing the clarity or obscurity of scientific concepts, evaluating the precision or vagueness of scientific hypotheses, assessing the consistency or inconsistency of a set of statements and laws, and scrutinizing arguments and chains of inferences for unstated but crucial assumptions or steps” (Machado and Silva, Reference Machado and Silva2007). The scope of the analysis was restricted to consideration of the design and construction phase, with emphasis on work performed on-site.

The paper begins with a review of the key management processes and digital tools that have evolved in the spheres of design, planning, and production control of construction projects: BIM, lean project production systems, APPC, and Construction 4.0. Building on the background review of these concepts, their benefits, and their limitations, we define the requirements for a holistic digital twin mode of design and construction. Then, we formulate a set of ontological and epistemological dimensions of digital twins for construction, which we summarize in a set of core concepts. Working from the requirements and the core concepts, we derive a workflow framework for a comprehensive construction management system based on digital twin information systems.

2. Background

The workflow for construction centered on digital twin information systems builds on the foundations of computing in construction, of construction monitoring technologies and methods, and of lean thinking applied to construction planning and control. While each of these research streams has yielded important advances, they have remained largely separate from one another. Digital twins offer the conceptual solution to joining these strands in an effective closed loop production control system. We review each of these areas briefly, concluding each with discussion of the ways in which they underpin the new paradigm of construction using digital twins.

2.1. Lean construction

Lean construction prioritizes achievement of smooth production flows with minimal variation and thus minimal waste of resources. Koskela’s Transformation-Flow-Value theory added Flow and Value conceptualizations of production in construction to the traditional Transformation view (Koskela, Reference Koskela2000), which obscures processes by encapsulating activities in discrete “black-boxes.” As such, lean construction provides the principles for an effective model of production planning and control that can exploit the information generated by the monitoring and interpretation aspects of the digital twin to optimize workflows.

The primary flow is the flow of work itself, which is usually embodied as locations in a building (Kenley and Seppänen, Reference Kenley and Seppänen2010; Sacks, Reference Sacks2016). The key supporting flows are those of workers, materials, equipment, and design information. Any interruption of a supporting flow will disrupt the primary workflow. Planning must therefore be proactive: it requires increasingly detailed, iterative planning actions that identify and remove constraints so that tasks are ready for assignment to crews and for execution. Planning depends on the availability of increasingly detailed process status information, which well-designed monitoring technologies can provide if they are embedded in a suitable digital twin information system framework.
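The constraint screening described above can be illustrated with a short sketch. It assumes a deliberately simple data model (the `Task` fields and the function names are hypothetical): a task is treated as ready only when its work location is free and every one of the four supporting flows named in the text has been confirmed.

```python
from dataclasses import dataclass

# The four supporting flows named in the text.
SUPPORTING_FLOWS = ("workers", "materials", "equipment", "design_information")


@dataclass
class Task:
    task_id: str
    location: str
    # Flow name -> True if that prerequisite is currently confirmed.
    flow_status: dict


def open_constraints(task: Task) -> list:
    """Return the supporting flows not yet confirmed for the task."""
    # A flow with no status record is treated as an open constraint.
    return [f for f in SUPPORTING_FLOWS if not task.flow_status.get(f, False)]


def is_ready(task: Task, location_free: bool) -> bool:
    """A task is ready only if its location is free and no constraint is open."""
    return location_free and not open_constraints(task)


# Usage: materials and design information are still outstanding.
task = Task("T1", "L2-east", {"workers": True, "materials": False, "equipment": True})
print(open_constraints(task))
```

Treating a missing status record as an open constraint is a conservative choice: the absence of monitoring data is not taken as evidence that a prerequisite has been met.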

Conversely, complete and accurate information concerning the current status of production flows is essential for effective implementation of lean production planning and control systems, such as the Last Planner® system (LPS) (Ballard, Reference Ballard2000). This is one of the key potential synergies of lean construction and BIM (Sacks et al., Reference Sacks, Koskela, Dave and Owen2010).

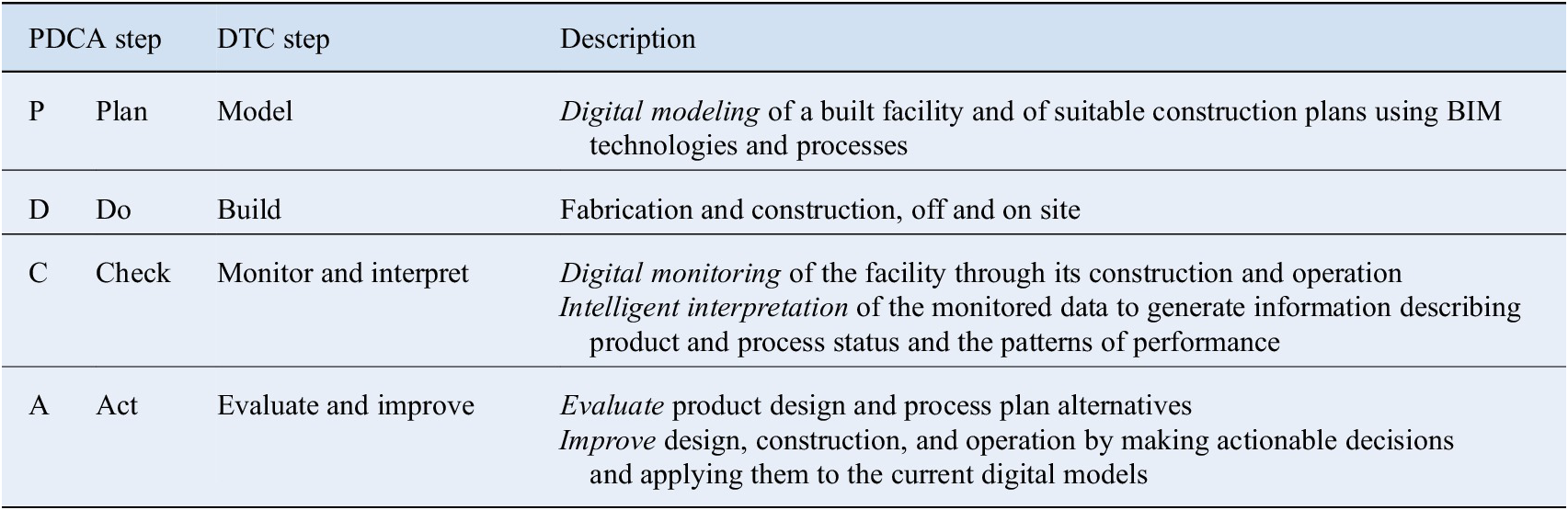

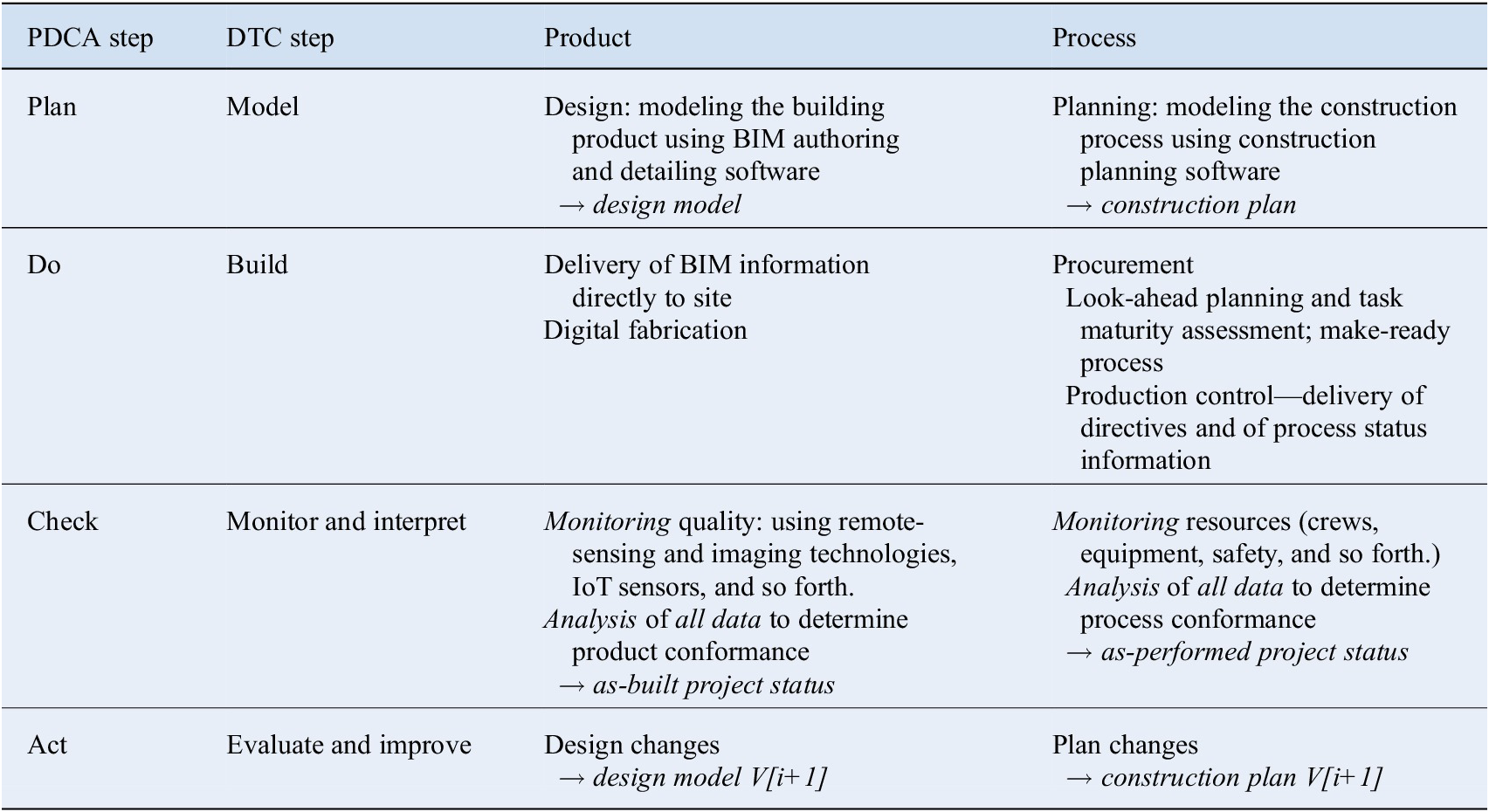

The Plan-Do-Check-Act (PDCA) cycle of production control (Deming, Reference Deming1982), a continuous improvement technique embraced within lean construction, is of direct relevance for construction with digital twin information systems. Modern construction planning and control can be understood as a sequence of concentric PDCA cycles because production in construction is recognized as executed in short-term project production systems amenable to continuous improvement (Koskela, Reference Koskela1992; Forbes and Ahmed, Reference Forbes and Ahmed2011). The LPS itself embodies PDCA cycles at the levels of master planning, phase planning, look ahead planning, and weekly work planning. One of the key challenges to effective implementation of LPS is that the “Check” step requires comprehensive and accurate information about the degree of fulfilment of the constraints to any activity (including the status of all supporting flows). Obtaining this information is especially difficult at the look ahead level (Hamzeh et al., Reference Hamzeh, Zankoul and Rouhana2015), and it is here that automated monitoring can be of particular benefit.

Accordingly, construction using digital twins should implement monitoring feedback loops at varying scales of cycle time—from monitoring activities to determine conformance to major project master plan milestones, to near real-time cycles of monitoring material deliveries, locations of workers and equipment, and so forth. It must also provide prognostic capabilities to extrapolate from current conditions and evaluate the expected emergent outcomes of planned alternative management actions, supporting proactive planning and control.

2.2. Building Information Modeling

BIM encompasses the workflows and the technology for digital, object-oriented modeling of construction products and processes (Sacks et al., Reference Sacks, Eastman, Lee and Teicholz2018). BIM platforms were developed in response to the need for effective IT tools for design, and the processes have evolved to fulfil the need for digital prototyping in construction, allowing testing of both design and production aspects before construction. Many practitioners see BIM as the core technology enabling construction of digital twins.

However, while so-called “as-built” BIM or “Facility Management” (FM-BIM) models (Teicholz, Reference Teicholz2013) provide information about the status of buildings when commissioned, they fall short of the digital twin concept of continuously updated representation of the current state of a facility. “As-built” models are generally compiled reactively, following execution, and their purpose is to provide owners with models for the operation and maintenance phase—called the “asset information model (AIM)” in ISO 19650 (ISO/DIS 19650, 2018). They are not intended to provide the short cycle time feedback needed for project control.

Furthermore, the simulation and analysis tools available for use with BIM are designed for prediction in design, not in project execution. Applications for structural engineering, for ventilation and thermal performance, for lighting and acoustics, all provide predictions of future performance of the built product. Critical path method tools for master planning are used with BIM models to perform “4D CAD” analysis of project schedules, but these are inappropriate for production control (Kenley and Seppänen, Reference Kenley and Seppänen2010, p. 5; Sacks, Reference Sacks2016).

While BIM tools provide excellent product design representations, they lack features essential for construction with digital twins:

- their geometry representations use object-oriented vector graphics, which is less than ideal for incorporating the raster graphics of point clouds acquired through scanning;

- the object models of BIM systems lack the schema components for representing the construction process aspects.

Furthermore, tools for short cycle predictive analysis of process outcomes, such as those developed in research using agent-based simulations, are lacking.

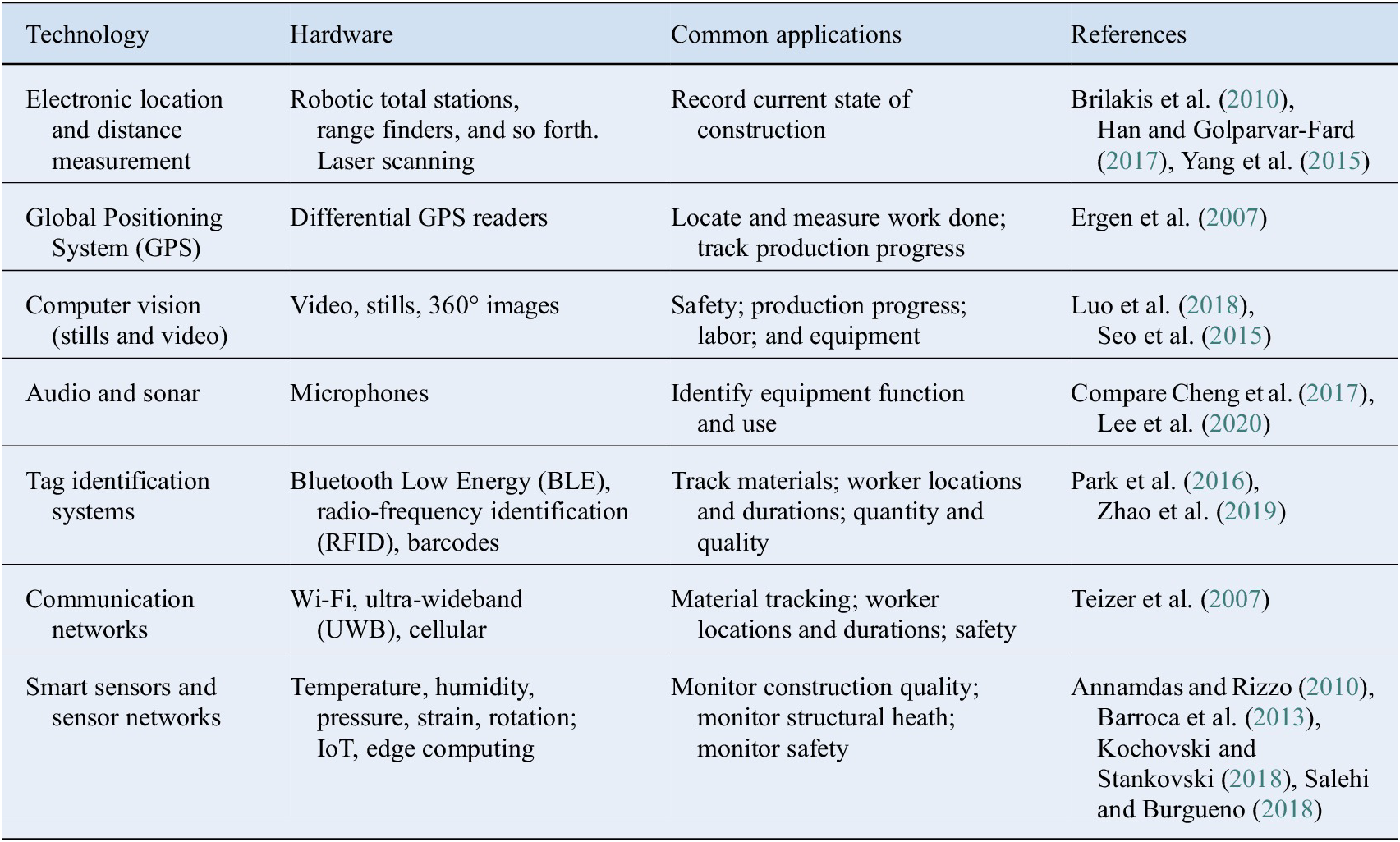

2.3. Construction monitoring technologies and applications

The stream of monitored data that flows from the physical artefact to the digital processes is an essential component of the connection between physical and digital twins. In current traditional construction practice, people monitor the progress of construction work largely by direct observation and measurement. This manual work is time-consuming and error-prone (Costin et al., Reference Costin, Pradhananga and Teizer2012; Zhao et al., Reference Zhao, Seppänen, Peltokorpi, Badihi and Olivieri2019). Researchers have proposed and tested many technological solutions for automatically monitoring construction work, and some have recently become commercially available and been applied in practice. Table 1 summarizes some of the key technologies investigated for monitoring construction activity.

Table 1. Data acquisition technologies applied to monitoring construction.

A striking feature of the commercial applications of monitoring technologies (such as those listed in Table 1) to date in construction is that the information gleaned is generally used in isolated fashion. Almost all have a single subject focus, such as performance of the tower cranes, movement of the workers, or physical progress of the works. There are very few cases of integrated use of more than one technology. Systems installed to monitor delivery of materials are used only to authorize accounts; monitored worker access gates only serve security and safety functions, and so forth.

What is lacking is a cohesive, integrated approach to production control in which multiple monitoring systems inform a project database, which can then support various management functions. Some notable exceptions prove the rule: for example, some collision alert systems employ separate technologies to locate heavy machinery (e.g., GPS) and workers (e.g., computer vision), merging the data to generate actionable information (Seo et al., Reference Seo, Han, Lee and Kim2015; Fang et al., Reference Fang, Ding, Zhong, Love and Luo2018).

Navon (Reference Navon2005) proposed a system termed “APPC” (Navon, Reference Navon2005; Navon and Sacks, Reference Navon and Sacks2007), as shown in Figure 1. The key idea was that activities could be monitored to feed a database that captured the as-built state of the building under construction. That model could be compared with the as-designed and as-planned information to determine discrepancies, which would inform the next round of control. However, this system had two major drawbacks. First, it applied the reactive “thermostat model” of control (Hofstede, Reference Hofstede1978). As the flow aspect of production theory in construction reveals, achieving smooth and predictable flows requires proactive filtering of production constraints in advance of assignment of tasks to crews for execution (Ballard, Reference Ballard2000). Reactive correction, applied only once actual performance is found to deviate from planned performance, comes too late to correct a project’s direction. The second drawback is that it monitored construction activities, neglecting the feeding flows of materials, labor, equipment, information, and locations. This reflects the “black-box” transformation view of production in construction and the absence of consideration of the flow view. Proactive management requires monitoring the prerequisite flows of materials, of locations, of labor, of equipment, and of information that are essential for evaluating the status of constraints, a key aspect of the lean “make-ready” process.

Figure 1. APPC management model (Navon and Sacks, Reference Navon and Sacks2007). Copyright: Elsevier. Note: this figure has been reproduced with the permission of the copyright holder and is not included in the Creative Commons license applied to this article. For other reuse, please contact the copyright holder.

Thus an effective mode of construction control using a digital twin must incorporate (a) technologies for monitoring the feeding flows of activities as well as the activities themselves and (b) data processing technologies capable of merging data from multiple streams to compile comprehensive and accurate status information.
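Requirement (b) can be sketched as follows. This is an illustrative simplification, not a proposed implementation: it assumes that every monitoring system emits time-ordered readings on a common clock and that the most recent reading for a given subject and attribute is taken as its current status. The `Reading` fields and the source names are hypothetical.

```python
import heapq
from typing import Iterable, NamedTuple


class Reading(NamedTuple):
    timestamp: float   # seconds on a clock assumed common to all sources
    source: str        # hypothetical source name, e.g. "gps" or "camera"
    subject: str       # what the reading is about
    attribute: str
    value: object


def merge_streams(*streams: Iterable[Reading]) -> dict:
    """Merge time-sorted streams; keep the latest value per (subject, attribute)."""
    status = {}
    # heapq.merge yields readings in timestamp order across all streams,
    # provided each individual stream is already sorted by timestamp.
    for r in heapq.merge(*streams, key=lambda r: r.timestamp):
        status[(r.subject, r.attribute)] = (r.value, r.source, r.timestamp)
    return status


# Usage: three independent sources report on a delivery and a crane.
deliveries = [Reading(10.0, "delivery_scan", "rebar-lot-7", "on_site", True)]
positions = [Reading(5.0, "gps", "crane-1", "zone", "A"),
             Reading(20.0, "gps", "crane-1", "zone", "B")]
vision = [Reading(15.0, "camera", "crane-1", "zone", "A")]
status = merge_streams(deliveries, positions, vision)
```

"Latest reading wins" is the simplest possible fusion rule. Resolving conflicting readings from sources of differing reliability would need a more careful policy than this sketch provides.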

2.4. Construction 4.0

Industry 4.0 is the idea that automated production operations can be networked together, enabling direct communication and thus coordination among them, along the value stream, resulting in highly autonomous production processes. The concept defines “a model of the ‘smart’ factory of the future where computer-driven systems monitor physical processes, create a virtual copy of the physical world and make decentralised decisions based on self-organisation mechanisms” (Smit et al., Reference Smit, Kreutzer, Moeller and Carlberg2016).

Many authors have suggested that the same concept is applicable to the construction industry (e.g., García de Soto et al., Reference García de Soto, Agustí-Juan, Joss and Hunhevicz2019; Klinc and Turk, Reference Klinc and Turk2019; Sawhney et al., Reference Sawhney, Riley and Irizarry2020). Broadly speaking, “Construction 4.0” is a framework that includes extensive application of BIM for design and for construction, industrial production of prefabricated parts and modules, use of cyber-physical systems (including robotics) where possible, digital monitoring of the supply chain and work on construction sites, and data analytics (big data, AI, cloud computing, blockchain, and so forth).

However, it is apparent from the descriptions that the understanding of Construction 4.0 falls short with respect to the twin ideas of automation and autonomy of production processes that are central to the conceptualization of Industry 4.0. The driving principle of interconnectedness and autonomy, of systems that make decentralized yet fully coordinated decisions along automated supply chains and production operations, is absent. In addition, production in construction is still far from achieving even partial automation of operations, which, as the focus of Industry 3.0, is a prerequisite for Industry 4.0. As such, Construction 4.0 offers inspiration, but it does not provide a coherent, comprehensive, and actionable paradigm that can be used as a blueprint for implementation.

2.5. Digital twins in the built environment

With few exceptions, the digital twin concept has been applied in the built environment to date primarily to the operation and maintenance phases. Governmental and other public clients have increasingly recognized that the information provided through use of BIM in the procurement process of infrastructure assets has value in that it can provide the cornerstone for information systems for optimal operation of individual assets, of systems of assets, and indeed of systems of systems (Bew, Reference Bew and Eynon2016; Gurevich and Sacks, Reference Gurevich and Sacks2020). The procedures for defining requirements and for delivery of asset information using BIM for the purpose of asset and system operation and maintenance are prescribed by ISO 19650 (ISO/DIS 19650, 2018). In this context, most of the self-declared implementations of digital twins are limited to exploiting BIM as information stores and for visualizing information (Teicholz, Reference Teicholz2013; Bonci et al., Reference Bonci, Carbonari, Cucchiarelli, Messi, Pirani and Vaccarini2019).

The UK definition of a “National Digital Twin” (NDT)—an interconnected ecosystem of digital twins, each modeling a component, a system, or a system of systems of buildings and infrastructure, connected via securely shared data—reflects the view of the nature of digital twins in the built environment (Enzer et al., Reference Enzer, Bolton, Boulton, Byles, Cook, Dobbs, El Hajj, Keaney, Kemp, Makri, Mistry, Mortier, Rock, Schooling, Scott, Sharp, West and Winfield2019). A set of nine guiding principles, called the Gemini Principles, has been formulated to guide development of the UK’s NDT (Bolton et al., Reference Bolton, Butler, Dabson, Enzer, Evans, Fenemore, Harradence, Keaney, Kemp, Luck, Pawsey, Saville, Schooling, Sharp, Smith, Tennison, Whyte, Wilson and Makri2018). While some of the principles are applicable to development of the core concepts for DTC (requirements for value creation, provision of insight, security, quality, federation, and curation), others are specific to digital twins in the public domain (public good in perpetuity, openness, and evolution). They do not provide the specificity of function that is needed to delineate the requirements for DTC.

In construction, digital twins are a new phenomenon. Boje et al. (Reference Boje, Guerriero, Kubicki and Rezgui2020) reviewed the literature on BIM for construction applications and analyzed digital twin uses in adjacent fields to identify gaps and to formulate and define a “construction digital twin (CDT).” They propose the development of a CDT in three generations. The first generation is described as an enhanced version of BIM on construction sites to date; the second generation introduces semantics, describing CDTs as “enhanced monitoring platforms with limited intelligence where a common web language framework is deployed to represent the DT with all its integrated IoT devices, thus forming a knowledge base”; and in the third generation, “the apex of the DT implementation possible to date represents a fully semantic DT, leveraging acquired knowledge with the use of AI-enabled agents. Machine learning, deep learning, data mining, and analysis capabilities are required to construct a self-reliant, self-updatable, and self-learning DT.”

Despite Boje et al.’s (Reference Boje, Guerriero, Kubicki and Rezgui2020) thorough review of the state-of-the-art of research and implementation of existing construction applications of BIM, and their consideration in relation to DT developments in other industries, these definitions are neither rigorously derived nor articulated. The key limitations are (a) that the formulation of a CDT begins with the conceptual understanding that the CDT is a progression or evolution of the BIM models and (b) that they lack a sound conceptualization of the construction process itself. The latter limitation is most significant: DTC is a holistic mode of construction management, whereas CDT is seen as a technology to support construction as it is currently practiced. The former limitation is a result of the narrower viewpoint.

3. Introducing DTC

The background review portrays both technological and production management innovations that are making inroads in the construction industry. Recent advances in BIM and lean construction, and specifically commercial innovations in construction monitoring technologies, make it possible to consider a mode of planning and control for design and construction that integrates the new technologies and principles to achieve closed loop systems. This was hitherto impractical due to the need to manually collect status information, interpret it, and derive actionable knowledge. Yet as things stand, the innovations pioneered by Construction Tech startup companies are largely applied with a single technology in isolation from other data streams, with little or no integration within any coherent production management paradigm. Lean construction offers a sound basis for process integration but its methods are information and resource intensive and difficult to maintain without supporting information technology. Thus although innovations in lean construction, in BIM, and in monitoring technologies are each significant in their own right, there is a need for a unifying conceptual framework that defines how construction can be managed and executed, one that harnesses these disparate innovations to make effective use of the data and methods they provide.

One may also view the current situation and the potential of recent advances in technology through the lens of the digital twin concept as defined for the built environment by the “Gemini Principles” document (Bolton et al., Reference Bolton, Butler, Dabson, Enzer, Evans, Fenemore, Harradence, Keaney, Kemp, Luck, Pawsey, Saville, Schooling, Sharp, Smith, Tennison, Whyte, Wilson and Makri2018). Inter alia, this document states “What distinguishes a digital twin from any other digital model is its connection to the physical twin.” Thus, unlike BIM models, a digital twin for construction not only replicates the physical twin, it is also connected to it, automatically updated as the physical twin changes. Advances in monitoring technologies and in the software required to interpret the data they acquire make this possible. By extension, this offers the opportunity to define a new mode for planning and controlling design and production in construction that comprehensively and coherently integrates the disparate innovations.

DTC starts with the recognition that the real-time information streaming from the construction project enables a closed loop model of construction control that has not been possible to date. The PDCA cycle provides the necessary process structure for closed loop production control. Table 2 lists the constituent steps of the PDCA cycle in terms appropriate for DTC. The most significant difference between this approach and current construction control is manifested in the “Check” phase. If the copious amounts of data that can be collected using a variety of technologies, from both supply chains and the construction site, can be effectively interpreted to produce accurate and comprehensive information automatically and within short cycle times, then it should be possible to leverage that information, together with the information contained in the project BIM models, to evaluate alternative product designs and production plans.

Table 2. Constituent components of the DTC workflow with correspondence to the PDCA cycle.

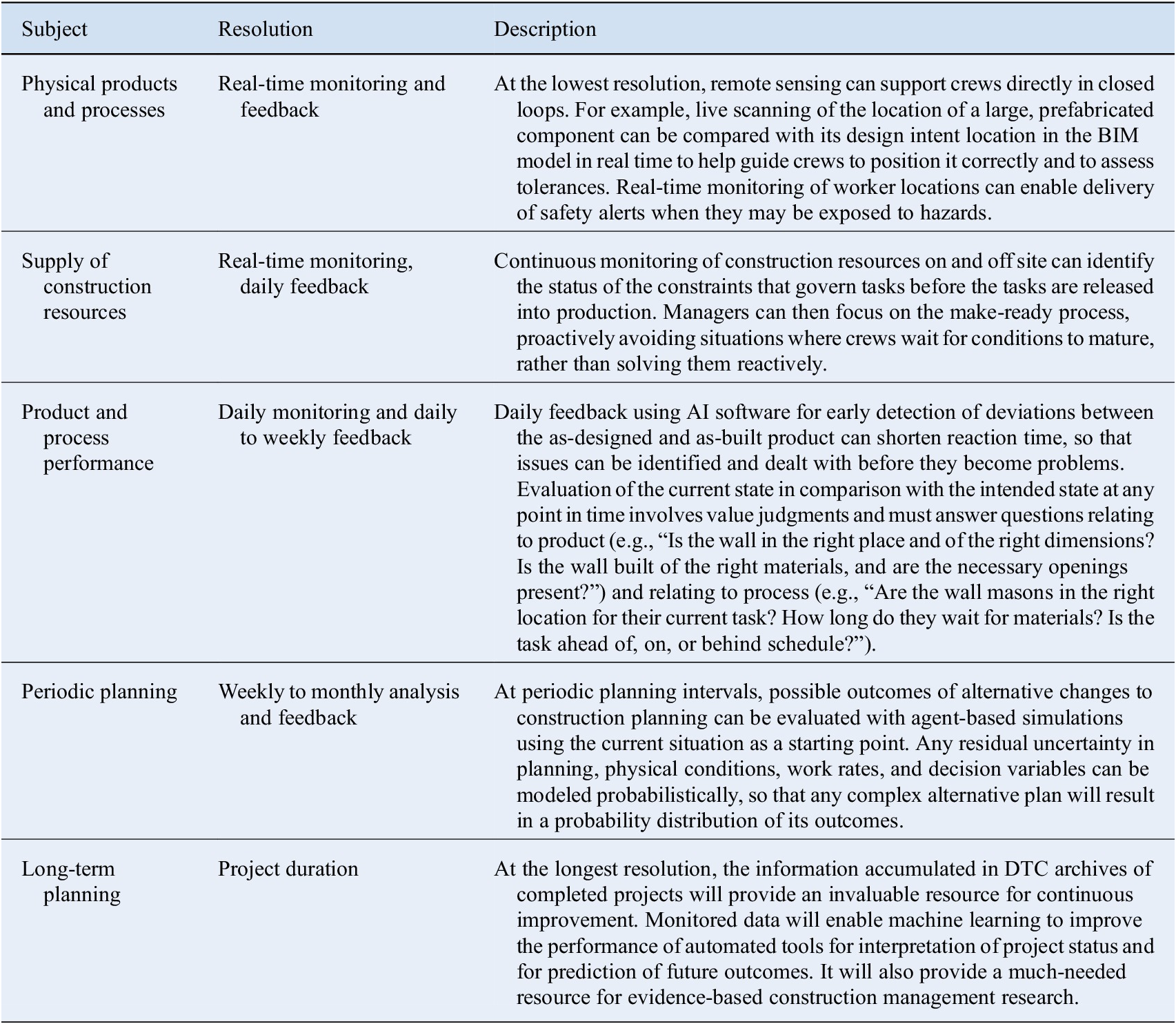

The benefits of such “data-centric” construction management arise from the significantly better situational awareness that it can provide construction managers and workers at all levels, making construction management more proactive than reactive. Situational awareness is not limited to comprehensive understanding of the current state; it encompasses knowledge of the probable consequences of decisions concerning future action, gleaned from extrapolation using digital simulations and other analysis tools (Endsley, Reference Endsley2016). Such foresight applies in concentric PDCA loops at different time scales, as shown in Table 3.

Table 3. Concentric PDCA monitoring and control loops.

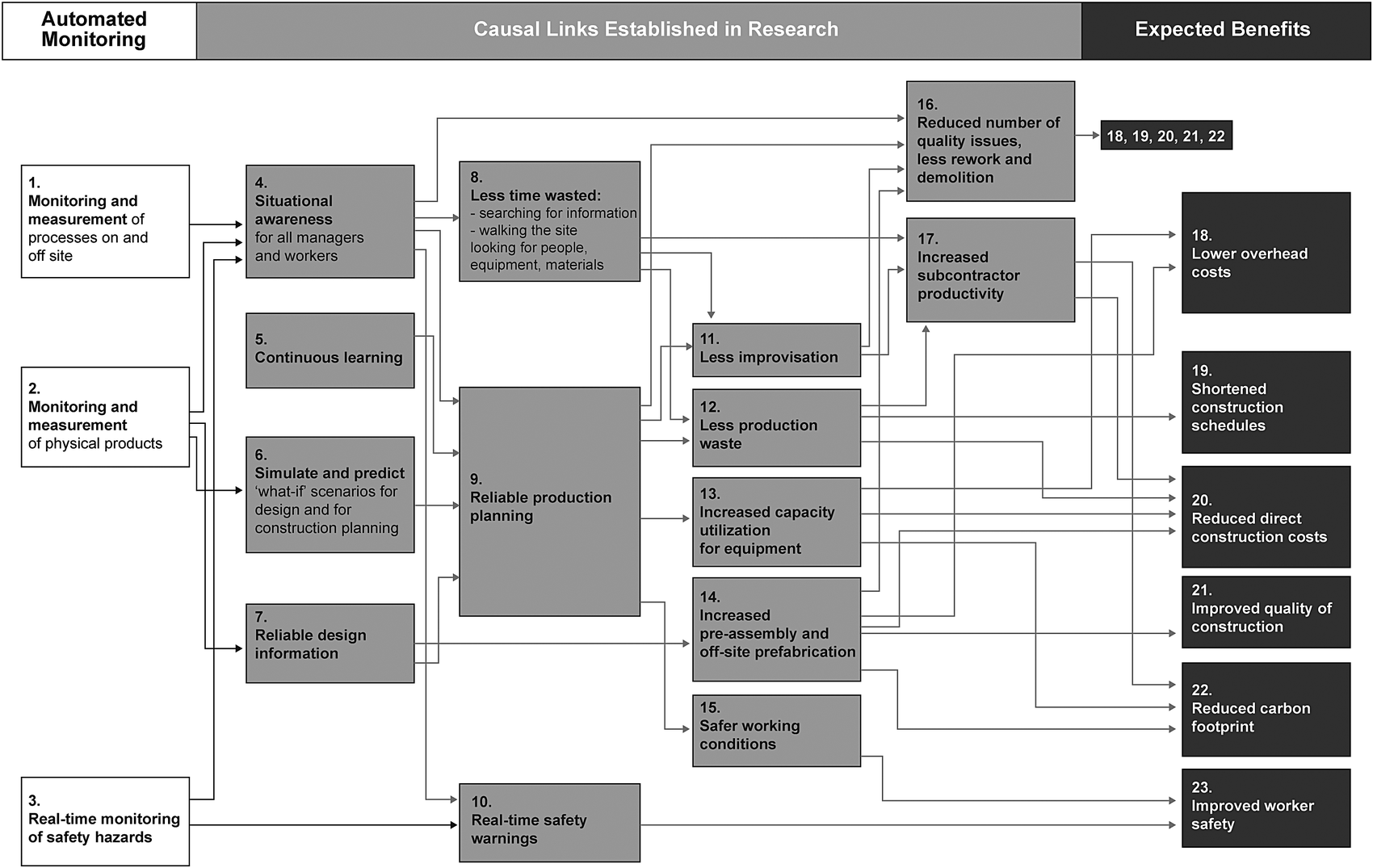

Figure 2 outlines possible causal links between improved situational awareness, on the left-hand side, and the potential benefits, on the right-hand side, assuming DTC. The left-most boxes (1–3) represent automated monitoring; the right-most boxes (18–23) are the expected benefits. The boxes in between reflect causal links established in research. Some are self-explanatory; others are detailed in the following sentences. Continuous learning (box 5) involves exploiting the information accumulated in archives of digital twins of completed projects to support learning for better planning, estimating, and managing future projects. Reliable design information (box 7) reflects the benefit of accurate modeling of site conditions acquired from monitoring systems. Reliable production planning (box 9) is achieved by scheduling only mature work, work that can be done right first time, safely, based on reliable process status information. The amount of “making-do” that is common when tasks are not mature is also reduced, for the same reason (box 11), as is the amount of production waste (less waiting of work for workers; fewer unoccupied locations; less excess work in progress inventory; less waiting of workers for work, materials, space, design information, or preceding tasks; less material wasted due to wrong delivery locations or times) (box 12). Increased productivity for crews (box 17) translates in the medium- to long-term into lower bids from subcontractors.

Figure 2. Possible causal relationships between monitoring, situational awareness, production practice, and desired outcomes expected in DTC.

4. Core Information and Process Concepts

What are the principles that guide design of a system workflow for DTC? Clearly, information and communication technologies play an important role in the development and implementation of digital twins (Tao et al., Reference Tao, Zhang and Nee2019b). However, the design of new digital twin processes and technologies for construction requires holistic thinking: the ontological and epistemological dimensions of digital twins for construction, the basic information and technology elements, the relationships between them, and their individual and collective functions must be clarified. This is the subject of this section.

Ontologically, a digital twin is a categorization of different information entities of production. Fujimoto considered manufacturing a flow of design information, in which productive assets are seen as information carriers, embodying design information (Fujimoto, Reference Fujimoto2007, Reference Fujimoto2019). Design information, based on the axiomatic design theory, is structured information of customer characteristics, functional requirements, and design parameters as design solutions and production processes (Suh, Reference Suh2001). Largely, the information entities can be classified as belonging to virtual or physical, product or process, and intended or realized aspects.

Epistemologically, digital twins are used by people to design and plan production systems and to generate new knowledge by comparing monitored data against the designed and planned. This is manifested in the PDCA cycle, embodied in the different functions of production management, including production system design, production system operation (planning, execution, and control), and production system improvement (Koskela, Reference Koskela2020). Modeling, simulation, and analysis facilitate learning as predicted outcomes can be compared with actual outcomes.

Below, we identify four dimensions that define the conceptual space for the information used in the DTC workflow. By stating these dimensions explicitly and then working from them to design the workflow, we seek to ensure that the resulting systems, once implemented, will provide the full breadth of functionality needed to support DTC.

4.1. Physical–virtual dimension

Information is generated and exists in both the virtual and in the physical worlds. People generate virtual information in design and in planning to represent intent, that is, to guide action that transforms things in the physical world. Physical information is inherent in the building or infrastructure, in the components and in the relationships between them, and in the flows and actions of the resources that construct buildings and infrastructure (workers, equipment, and material). Information is implicitly present in the length of a window, the elevation of a floor, the material of a beam, and the physical relationship of “structural support” inherent between a column and a slab it supports. These can be monitored or measured, resulting in digital copies, which are virtual information.

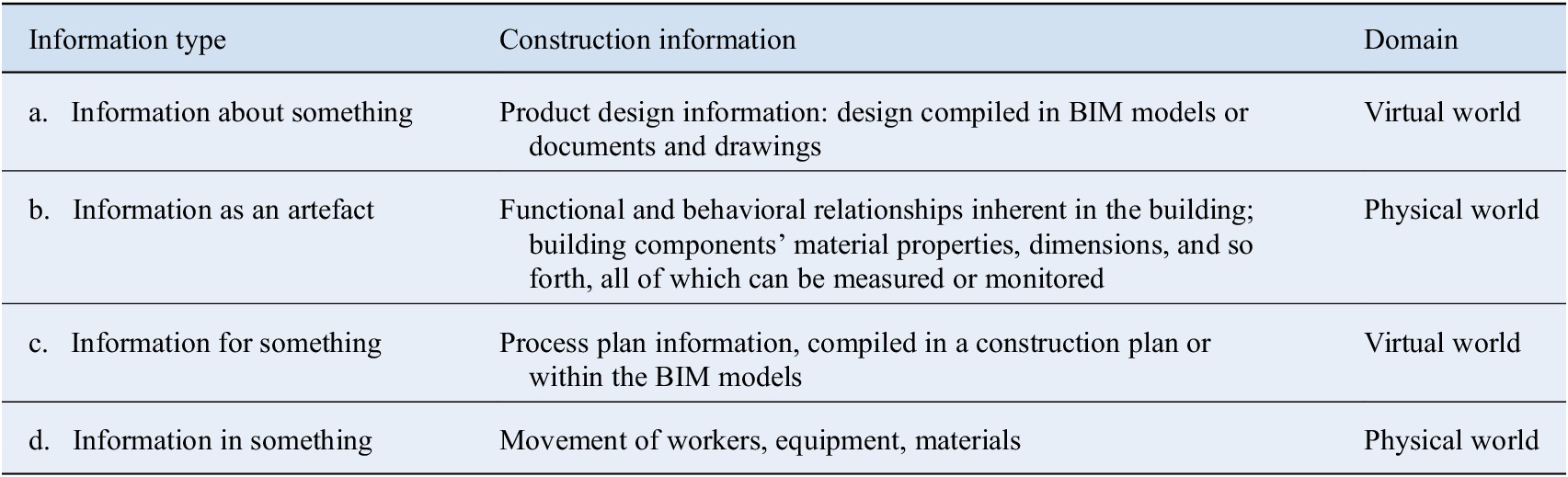

Floridi (Reference Floridi2013) defines four types of information: (a) Information about something, (b) information as an artefact, (c) information for something, and (d) information in something. Table 4 lists the four information types and provides examples from construction. Design and planning information is generated by people and exists in the virtual world. These are types (a) and (c). The building itself embodies information of type (b), which exists in the physical world. Likewise, the movements of resources employed in construction embody physical world information but of type (d).

Table 4. Digital and physical construction information according to Floridi’s four information types.

The digital twin must contain or represent all of the virtual information, comprising both the sets of intended states that exist in people’s minds and the sets of actual states that reflect the physical information as it develops through time. When something is changed in the physical world, the current virtual copy of the physical information no longer represents the physical world correctly, unless and until it is refreshed through renewed monitoring or measurement, and this has important implications for sampling frequency.

4.2. Product–process dimension

Information in the digital twin describes both construction product and construction process. The product information is stored in the design BIM model’s objects, their properties, and their relationships. The process information is stored in the construction plans, including construction methods, schedules (tasks, activities, resources), budgets, and so forth. Speaking broadly, the term Project Information Model (PIM) defined in ISO 19650 (ISO/DIS 19650, 2018) can and should include both product and process. Whereas most BIM tools only provide product modeling (Sacks et al., Reference Sacks, Eastman, Lee and Teicholz2018), management BIM applications, such as VICO (VICO, 2016) and Visilean (Visilean, 2018), incorporate both product and process aspects. Recognizing the dual aspects of product and process enables a more detailed elicitation of the PDCA cycle for DTC, as shown in Table 5.

Table 5. Detailed construction phase Plan-Do-Check-Act (PDCA) cycle and the constituent digitalized parts of the DTC workflow, showing both product and process aspects.

4.3. Intent–status dimension

Information describing product and process changes through time. Design and planning decisions expressing intent are made along a timeline as designers and planners propose, test, and refine their formulations. Information representing the physical world changes as work is done, and materials are transformed into products and as resources flow through construction processes. As such, each item of information must be associated with a timestamp or version descriptor. Fujimoto and others’ information flow-based view of manufacturing emphasizes the fact that information progresses and changes through time. Indeed, a design-information view of manufacturing “is broadly defined as firms’ activities that create and control flow of value-carrying design information […] through various productive resources deployed in factories, development centres, sales facilities, and so on” (Fujimoto, Reference Fujimoto2007, Reference Fujimoto2019).

The present time (or the “status time,” i.e., most recent time when the physical information was monitored and recorded) divides past states from future states. However, it is more practical to classify future states of a design as the design intent, and past states of a product as the product status, because past versions of a design, whether executed or not, become “past perfect” reflections of a formerly intended future state.

a. All information about the future state (ex-ante) of a building is expressed in the design and in the construction plan for the parts of the building that have not yet been built. We will call this information the Project Intent Information (PII), representing the as-designed and as-planned aspects of the project. As time progresses, versions of this “future state” are stored. Thus we may have an as-designed model version that was a valid future-looking view at any specific time in the past; likewise, we may store an as-planned construction plan that must be associated with the as-designed model at the same point in time.

b. All information about the past state (ex-post) of a building and its construction process records what was done and how it was done. This is the as-built product and as-performed process information. For example, the location and exact geometry of a wall as it stands after construction are its as-built information, whereas the start and end times and the number of hours a mason worked to build the wall are its as-performed information. Here too, we store multiple versions of the state of a building project over time, and each version is time-stamped with the date and time at which it was measured. We call this information the Project Status Information (PSI).
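The versioning described in items (a) and (b) can be sketched as a small time-indexed store. The Python below is only an illustration under assumed names: `InfoRecord`, `VersionedStore`, the property fields, and all dates and values are hypothetical and are not part of the DTC definition. Each record is tagged as intent (PII) or status (PSI) and as product or process, and carries a timestamp so that the version valid at any past time can be retrieved.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class InfoRecord:
    """One timestamped version of information about a project element (hypothetical schema)."""
    element_id: str
    kind: str          # "intent" (PII) or "status" (PSI)
    aspect: str        # "product" or "process"
    timestamp: datetime
    properties: dict


@dataclass
class VersionedStore:
    """Keeps every version that is added; nothing is overwritten."""
    records: list = field(default_factory=list)

    def add(self, record: InfoRecord) -> None:
        self.records.append(record)

    def as_of(self, element_id: str, kind: str, aspect: str,
              when: datetime) -> Optional[InfoRecord]:
        """Return the latest version recorded at or before `when`, if any."""
        candidates = [
            r for r in self.records
            if r.element_id == element_id and r.kind == kind
            and r.aspect == aspect and r.timestamp <= when
        ]
        return max(candidates, key=lambda r: r.timestamp, default=None)


# Illustrative data only: two design versions of a wall, then its measured state.
store = VersionedStore()
store.add(InfoRecord("wall-01", "intent", "product", datetime(2020, 1, 10), {"length_m": 4.00}))
store.add(InfoRecord("wall-01", "intent", "product", datetime(2020, 2, 1), {"length_m": 4.20}))
store.add(InfoRecord("wall-01", "status", "product", datetime(2020, 3, 5), {"length_m": 4.19}))
store.add(InfoRecord("wall-01", "status", "process", datetime(2020, 3, 5), {"mason_hours": 6.5}))

intent_in_january = store.as_of("wall-01", "intent", "product", datetime(2020, 1, 20))
latest_status = store.as_of("wall-01", "status", "product", datetime(2020, 4, 1))
```

Querying the intent as of late January returns the first design version, even though a later version exists, which is the sense in which a past as-designed model remains a valid forward-looking view for its own point in time.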

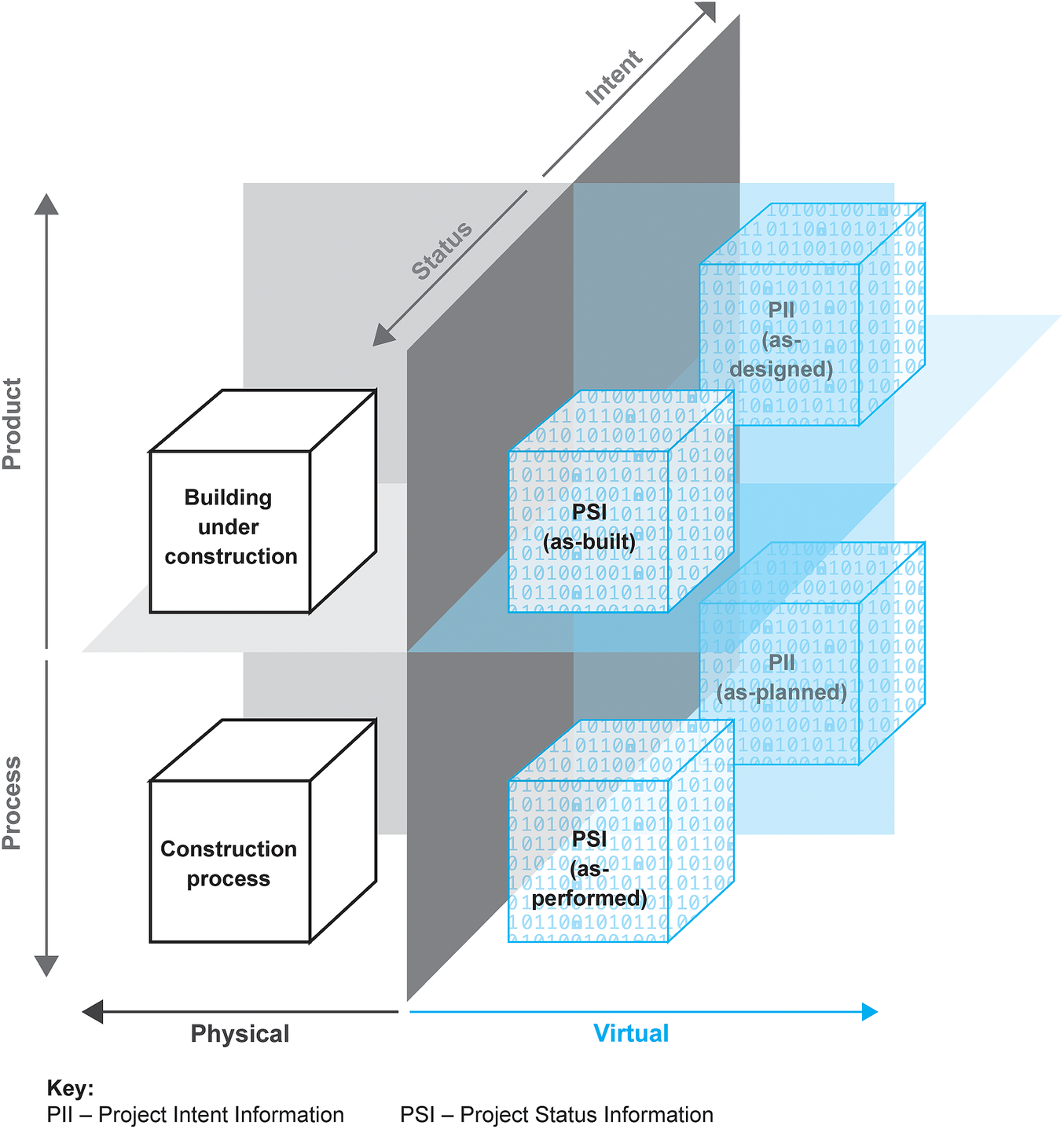

We consider all the information, both planned future versions and measured past versions, to be part of the digital twin for construction. Figure 3 lays out the information aspects of the domain within the three-dimensional space defined by the digital–physical, the product–process, and the intent–status dimensions. The two left-hand “boxes” represent the physical twin, while the four on the right-hand side are the conceptual components of the digital twin, including both PII and PSI.

Figure 3. Information aspects of the domain within the three-dimensional space defined by the digital–physical, the product–process, and the ex-ante and ex-post dimensions.

Figure 4 shows a simpler “top view” version of the same space, highlighting the physical–virtual and the status–intent dimensions. In this view, a physical “mock-up” or scale model of the design intent is shown in the top-left quadrant.

Figure 4. Top view of the three-dimensional space, showing the virtual–physical and the status–intent regions. The PII, PSI, and the site each have both product and process aspects. A physical mock-up or model is shown in the top-left quadrant.

4.4. Data–information–knowledge–decisions

Digital twins generate, derive, store, and manipulate data, information, and knowledge to support decision-making about product design and process plan before and during construction. Up to this point, the discussion has been limited to information. To conceptualize digital twins for construction accurately, we must define our use of the terms data, information, and knowledge. Distinguishing among these is essential because the terms determine our understanding of the semantics and of the degree of uncertainty inherent in the different aspects of the DTC workflows.

Broadly speaking, information is compiled by interpreting data, information is processed to create knowledge, and knowledge supports decisions and actions (Kitchin, Reference Kitchin2014). Despite broad interest in the fields of information and communication technologies, there is no consensus about the nature and meaning of data, information, and knowledge (Castells, Reference Castells2010; Adriaans, Reference Adriaans, Zalta, Nodelman and Allen2018). Floridi (Reference Floridi2013), for example, argued that because information is used “metaphorically and at different levels of abstraction, the meaning is unclear.”

Kitchin (Reference Kitchin2014) reasoned that data refer to those elements that are “taken” (derived from the Latin “capere”), for example, “extracted through observations, computations, experiments, and record keeping” (Borgman, Reference Borgman2012). “As such, data are inherently partial, selective, and representative, and the distinguishing criteria used in their capture has consequence” (Kitchin, Reference Kitchin2014). In our context, the data are collected by capturing the signals from sensors and other monitoring equipment on the construction site and collecting data from the information systems of the companies engaged in construction. Data from any one source are often limited in scope, incomplete or flawed. Numerous data sources are needed to derive some reliable and useful piece of information. For example, one may need a laser scan of a wall, a set of images of the wall, data from and about the workers who built the wall, delivery data for the materials used to build it, to determine a wall’s location (both absolute and relative to other components), the amount of time workers spent in its vicinity and their productivity, the dimensions of the wall, or the materials used in its fabrication.

The data in and of themselves have little value: they must be interpreted, processed, and compared with other data and other information, to allow deduction and induction of useful information (Floridi, Reference Floridi2013). Value from information is captured through the information life-cycle: occurrence (discovering, authoring), transmission (networking, retrieving), processing and management (collecting, validating, indexing, classifying), and usage (monitoring, modeling, explaining, forecasting, learning) (Floridi, Reference Floridi, Zalta, Nodelman and Allen2019). Through the latter three stages, processing, management, and usage of information, knowledge can be created.

Knowledge is understood in different ways too, depending on the particular viewpoints, assumptions, and prescriptions (Kitchin, Reference Kitchin2014). In the philosophy of science, the field of epistemology is dedicated to understanding how knowledge is created and disseminated through systems of human inquiry (Steup, Reference Steup, Zalta, Nodelman and Allen2018). The question is about the relationship between the world of ideas (e.g., theories, concepts, and models) and the external world (e.g., physical phenomena, practices, observations). From the traditional perspective, functions of knowledge include description, explanation, and prediction of the behavior of phenomena (Losee, Reference Losee2001). However, in the context of productive sciences, knowledge also has a prescriptive function (de Figueiredo and da Cunha, Reference de Figueiredo, da Cunha and Kock2007). That is, production theories must also guide the improvement of practices and provide means of validation (Koskela, Reference Koskela2000).

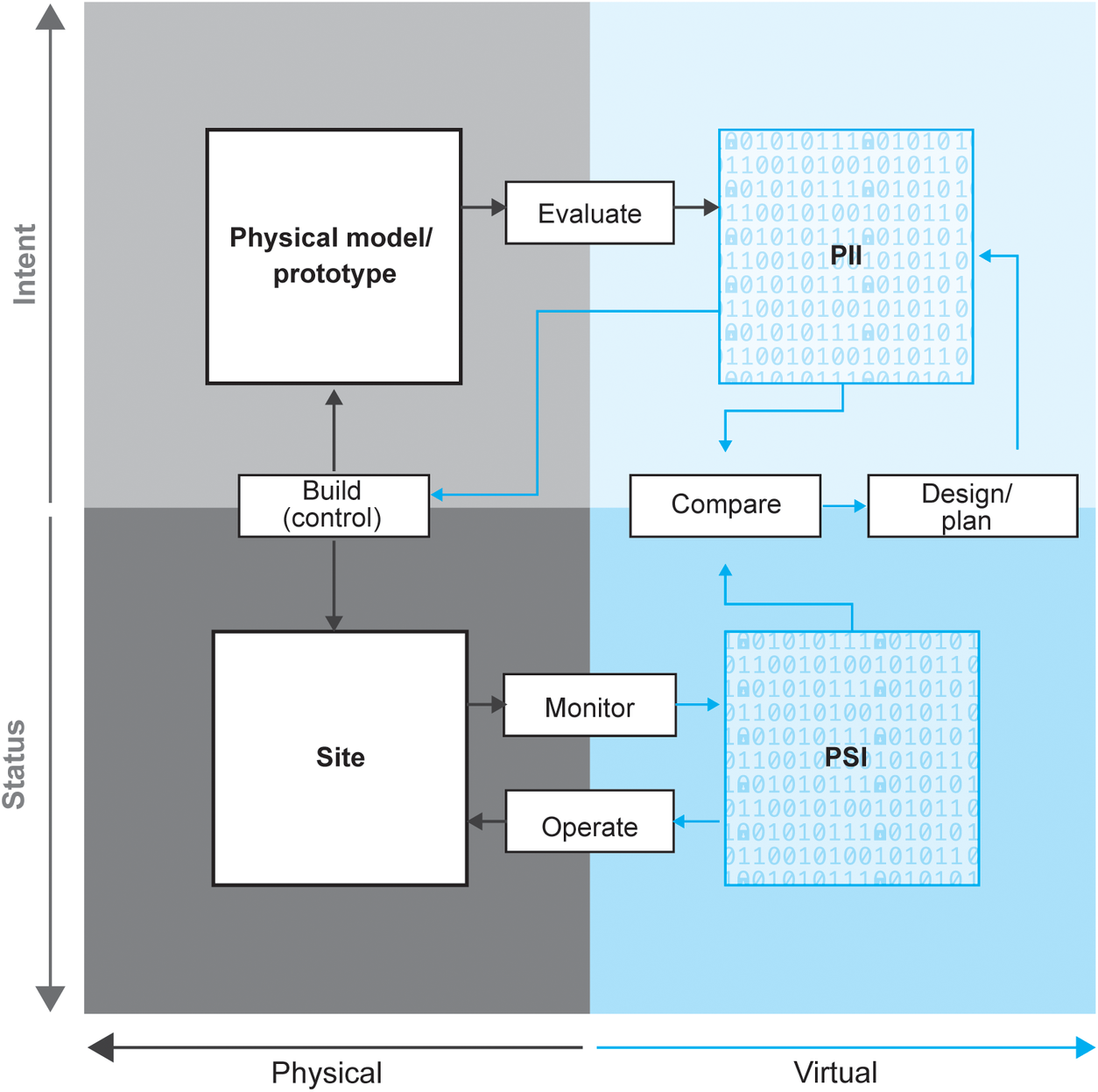

Thus, just as a CDT will require software methods to derive reliable information from data, so will it require software modules to perform value judgments using the information and so derive new knowledge about conformance to design or plan. These modules compare as-built and as-performed information with as-planned and as-designed information (i.e., as shown in Figures 5 and 6, comparing PSI with PII). For example, from information about the wall discussed above, one might conclude that it was not built in the right location or that it was built using the wrong materials or that it required excessive time. This knowledge supports decision-making to change future designs or plans if needed.

Figure 5. DTC workflow process.

Figure 6. Lifecycle of the physical and digital building twins.

5. DTC Information System

Working from the core information and process concepts, we propose a DTC information system workflow. Figure 5 lays out the DTC workflow, and Figure 6 defines the different aspects of information that serve the workflow. The following paragraphs describe the system’s workflow and its components (information stores, information processing functions, and monitoring technologies) according to three concentric control workflow cycles.

5.1. Model, build, monitor and interpret, evaluate, and improve cycle

At the start of any construction project, designers work from a project brief to design a product, such as a building, to fulfil the owner’s functional requirements. This is closely followed, in iterative fashion, by planning the construction. These functions are represented by the Design product, Plan process activity at the top left of Figure 5. The information generated is PII. Designers and planners apply a variety of specialized engineering simulation and analysis software (in the Predict performance activity in the figure), which use the PII and codified design knowledge to predict the likely performance of their design and their plans. The results are knowledge about the behavior of the building, collectively called Project Intent Knowledge (PIK), and the designers use this knowledge to refine their designs through multiple iterations.

Figure 6 depicts the accumulation of “as-designed” and “as-planned” information over time, from left to right, with the triangles at the bottom of the figure. This activity, generating both product and process information, continues to add detail to designs and plans through the construction phase and ends with completion of construction. Multiple versions of both PII and PIK are generated, and each is recorded with an appropriate version identifier. There are many ways to design and implement storage for the PII and the PIK—these will be discussed in the following section. Using the metaphor of human twins, we call this information the Foetal Digital Twin in Figure 6.

The construction phase begins with the earlier of the start of prefabrication of components off site or the start of construction activity on site. This is the birth of the physical twin, and at this time, monitoring begins to accumulate the data and the information that constitute the PSI. Contractors use the PII to guide procurement of materials and off-site components and to control construction of the building (Build activity in Figure 5). The supply chain off site and the building works on site embody the physical information that defines the status of the project. Continuing the human twins metaphor, the building under construction, the physical processes executed, and the construction resources and equipment are the Child Physical Twin. Throughout this period, a variety of monitoring technologies are applied to capture the status of the product and of the process (“Monitor”), and they generate raw monitored data (Figure 5).

As construction progresses and data accumulate, the Interpret function applies complex event processing (CEP) to deduce what was done and what resources were consumed in doing it (Buchmann and Koldehofe, Reference Buchmann and Koldehofe2009). The information it generates describes the “as-built” and the “as-performed” state of the project, that is, the PSI. Events may be classified using rule inferencing or machine learning algorithms. The input includes not only the raw monitoring data from multiple streams but also the PII information and any extant PSI from previous cycles. It may also draw on external information archived in historic building twins. The PII provides direct clues as to what was expected and physically locates intended components, thus narrowing the search space. Similarly, the PSI provides context along the timeline of product and process. The historic digital twin information supports machine learning or case-based reasoning, which may occur offline. In the human twins metaphor, the PSI is the Child Digital Twin (Figure 6).
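The way in which the PII narrows the search space for interpretation can be illustrated with a deliberately simple rule. The Python sketch below is a stand-in for one inference rule, not an implementation of CEP or of any published method: the function name `match_observation`, the dictionary layout, the search radius, and all coordinates are assumptions made for illustration. An observation from monitoring is matched only against intended components of the same type that lie within a search radius of where the PII says they should be.

```python
import math


def match_observation(observation, intended_components, search_radius_m):
    """Match one monitored observation to the nearest intended component of the same type.

    Returns the component ID, or None when no intended component of that type
    lies within the search radius (a candidate anomaly for later evaluation).
    """
    best_id, best_distance = None, search_radius_m
    for component_id, component in intended_components.items():
        if component["type"] != observation["type"]:
            continue  # the PII rules out components of a different type
        distance = math.dist(observation["position"], component["position"])
        if distance <= best_distance:
            best_id, best_distance = component_id, distance
    return best_id


# Hypothetical intended components (PII) with plan positions in metres.
intended = {
    "col-A1": {"type": "column", "position": (0.0, 0.0)},
    "col-A2": {"type": "column", "position": (6.0, 0.0)},
    "wall-W1": {"type": "wall", "position": (3.0, 0.0)},
}

matched = match_observation({"type": "column", "position": (5.8, 0.1)}, intended, 0.5)
unmatched = match_observation({"type": "column", "position": (20.0, 20.0)}, intended, 0.5)
```

In this sketch the first observation resolves to `col-A2`, while the second finds no intended column nearby and returns `None`; a real Interpret function would combine many such rules or learned classifiers across multiple data streams.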

Next in Figure 5, a specialized Evaluate Conformance function compares the actual to the intended, that is, the PSI to the PII. These are value judgments because in every case they must use some threshold value to determine whether the degree of discrepancy between PSI and PII is acceptable or requires remediation. For example, the actual width of a concrete column may be less than its nominal design width, but that may be acceptable if the deviation is within the predefined allowable tolerance. While conformance evaluation can be automated using various AI methods, it is likely that this function would solicit user input for value judgments in many instances and would draw on standard design knowledge. The output of this function is knowledge about the project’s status, termed Project Status Knowledge (PSK). Appropriate data visualization tools are needed to communicate the project status, deviations from design intent or production plan, and any other anomalies (not shown in the figure).
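The column example can be written out as a minimal sketch of a threshold-based conformance check. The names `ConformanceResult` and `evaluate_conformance`, the element ID, and the numerical values are hypothetical; in practice the tolerance would come from standards, specifications, or user input rather than being hard-coded.

```python
from dataclasses import dataclass


@dataclass
class ConformanceResult:
    """A piece of status knowledge (PSK) about one measured property (hypothetical structure)."""
    element_id: str
    property_name: str
    deviation: float
    conforms: bool


def evaluate_conformance(element_id, property_name, intended, actual, tolerance):
    """Compare an as-built value (PSI) with its as-designed value (PII).

    The tolerance is the threshold that turns a measured deviation
    into a value judgment about acceptability.
    """
    deviation = actual - intended
    return ConformanceResult(element_id, property_name, deviation,
                             abs(deviation) <= tolerance)


# Illustrative numbers only: a column designed 400 mm wide,
# with an assumed allowable tolerance of 10 mm.
result = evaluate_conformance("column-C12", "width_mm", 400.0, 394.0, 10.0)
out_of_tolerance = evaluate_conformance("column-C12", "width_mm", 400.0, 385.0, 10.0)
```

The first measurement is 6 mm under the design width and is judged acceptable; the second is 15 mm under and would be flagged for remediation or for a user's decision.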

At this stage, control of the process reverts to the designers and the construction planners, who can propose changes to the product design or the production plan in response to the status knowledge, thus completing the PDCA cycle. They can use the same specialized engineering simulation and analysis software (Predict Performance in Figure 5) to predict the likely outcomes of any possible changes, and thus to compare and select among alternative options regarding changes to the design, to the construction plan, or to both. Any options selected for implementation are added to the PII, generating new versions. The revised PII continues to drive the construction itself, and the planning and control cycle is repeated until completion of the project.

At the end of a project, all the accumulated information and knowledge (PII, PIK, PSI, and PSK) are archived (Figure 5). In traditional construction practice, at this time, a set of asset information is methodically extracted and prepared for handover to the client, for purposes of operation and maintenance. This is a subset of the information available. It commonly includes the as-built product information (a building’s architecture, structure, and its mechanical, electrical and plumbing systems) but excludes product design information and all of the construction process information. No information on activities, resources, construction equipment, or temporary site works is carried through to the asset model. The deliverables to the client, as shown in Figure 6, are the building itself (the Adult Physical Twin in the figure) and the AIM (the Adult Digital Twin in the figure). In contrast with current practice, under DTC, the design information, process information, and any construction phase product information excluded from the asset information may be archived for future use in long-term system feedback. To enable exploitation of this aspect of the digital twin archive, access to this information would need to be assured for any parties who may be employed to design, build, renovate, or maintain this or similar buildings in the future.

5.2. Real-time feedback for safety and quality control

The next PDCA cycle is the real-time feedback cycle, in which information is fed directly from the PSI to managers and workers on site (Figure 5). This includes quality and safety monitoring, where workers must be alerted to any deviation from design intent or from safe behavior during their ongoing operations. Technologies that sound alarms to alert workers to imminent potential collisions or falls belong to this category (e.g., Cheng and Teizer, Reference Cheng and Teizer2013). The use of laser scanning to guide the precise positioning of steel cable anchor inserts in bridge piers as they are placed using a crane, prior to casting concrete, is an example of real-time feedback for quality control (Eastman et al., Reference Eastman, Teicholz, Sacks and Liston2011).
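As a rough illustration of the alerting logic in this cycle, the sketch below checks tracked worker positions against hazard positions and reports any pair closer than a warning radius. It is not the method of the cited studies: the function name `proximity_alerts`, the identifiers, the planar coordinates, and the 6 m radius are all assumptions chosen for the example.

```python
import math


def proximity_alerts(workers, hazards, warning_radius_m):
    """Return (worker_id, hazard_id, distance_m) for every worker inside the warning radius."""
    alerts = []
    for worker_id, (wx, wy) in workers.items():
        for hazard_id, (hx, hy) in hazards.items():
            distance = math.hypot(wx - hx, wy - hy)
            if distance <= warning_radius_m:
                alerts.append((worker_id, hazard_id, distance))
    return alerts


# Hypothetical positions in metres, as might be reported by location tracking.
workers = {"worker-7": (12.0, 5.0), "worker-9": (40.0, 22.0)}
hazards = {"excavator-2": (15.0, 9.0)}

alerts = proximity_alerts(workers, hazards, warning_radius_m=6.0)
```

Here only `worker-7`, who is 5 m from the excavator, triggers an alert. A deployed system would also need to account for movement direction, speed, and sensor latency, none of which this sketch attempts.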

5.3. Long-term feedback for design and planning

Finally, a broad PDCA cycle exists where each project is viewed as a design-construction event, after which the digital building twin information is archived and subsequently used for organization- or industry-level learning (“check”), with improved action following in subsequent projects. The learning will manifest in a variety of ways:

• DTC archives provide a source for labeled data to support training of machine learning applications, such as classifiers for CEP of monitored data. A caveat to such use is that it requires some or all of the raw monitored data, which may or may not be archived.

• Design intent information encapsulated in BIM models, together with associated records of the outcomes of performance simulations (the PIK), may be used as a resource for case-based design. Ideally, postoccupancy evaluations can be obtained from adult digital twins to further enrich learning, whether across a project portfolio or in support of academic research.

• Researchers may use the wealth of data recorded in DTC archives, subject to appropriate guarantees of privacy and security, to pursue empirical, evidence-based research on subjects such as production management strategies, supply chain performance, construction safety, labor productivity, and a host of other topics. The availability of massive, accurate, reliable, and accessible data sets represents a complete change for construction researchers who currently struggle to collect meagre data sets from active construction sites.

6. Discussion

In the previous section, we outlined a DTC information system workflow, detailing the activities and the information processes. Here, we reflect on the differences between DTC and traditional construction planning and control. DTC is data-centric, whereas traditional modes are data-informed; DTC lays out a comprehensive mode of planning and control, whereas BIM standards are restricted to defining information flows; BIM has well-defined file-based object-oriented information constructs, whereas DTC data and information storage will likely require object-based graph networks stored using cloud services. We also discuss commercial aspects of DTC development, including the interaction between CDTs and other digital twin systems and a possible platform business model for delivering DTC services. Finally, we consider possible barriers to DTC implementation and detail the research and development needed moving forward.

6.1. Data-centric construction

The key difference between DTC and current construction management practice is that DTC is data-centric. PII and knowledge, data streams from monitoring technologies, and PSI and knowledge are all generated within the DTC system and available to support decision-making in a set of concentric closed-loop PDCA cycles. Appropriate curation of data, information, and knowledge makes it accessible to AI tools for interpretation, analysis, simulation, and prediction. Some of these tools will augment or automate functions currently implemented by people (such as planning, coordinating, communicating, measuring, checking, and inspecting). Others will implement functions that are currently too labor-intensive or complex for people to do and therefore often ignored by current construction management practice (such as fine-detailed monitoring of equipment and work or optimization of construction schedules).

In this regard, current modes of construction management, including those that employ BIM, differ from DTC in two significant ways: (a) project participants generate information in silos with distinct ownership and control boundaries, and share information only when needed; (b) information gathering concerning the current state of construction is almost entirely manual. There is little opportunity for application of analytics algorithms that require machine-readable information and/or time-series data. These modes can be considered data-informed or data-engaged. In an initial evolutionary step, DTC is likely to be data-driven, where data scientists support teams in using data analysis to make day-to-day operational decisions. Once these practices are consolidated, data science will be used to evaluate, improve, and innovate on a strategic level, thus fulfilling the value of data-centric construction.

The four dimensions of the DTC workflow identified in this work can support definition of the data collection schemes to ensure the integrity and extensiveness of data-centric construction. Data standardization will be essential for each dimension because the streams of monitored data are drawn from multiple technologies and will require formal syntactic and semantic formats to ensure that the datasets are compatible with the DTC data processing workflow.

6.2. Comparing DTC and BIM workflows

ISO 19650 (ISO/DIS 19650, 2018) is an international standard that formalizes information flow processes for building and civil engineering works when using BIM. It has gained acceptance throughout the world as the governing paradigm for information management in construction. Part 2 of ISO 19650 prescribes collection of the information for the execution of a construction project in a PIM, which incorporates the building models (BIM), the project execution plans, and all information collected during project execution. As such, the PIM encapsulates the PII, PIK, PSI, and PSK defined in the context of the DTC, but it does not distinguish among them.

A second (and more significant) difference between DTC and the ISO 19650 BIM information process is that whereas ISO 19650 lays out a mode of information flow, DTC prescribes a comprehensive mode of construction planning and control. The boundaries of DTC in Figure 5 incorporate not only the information components but also the information processing components themselves.

The scope of the DTC, like that of the PIM, is restricted to the design and construction phase, distinguishing between the “child” and “adult” forms of the digital twin (see Figure 6). ISO 19650 defines an AIM, which is extracted from the PIM when a building is handed over to its owner and contains the information required for operation and maintenance. In the DTC mode, the information needed to prepare the foundations of a digital twin for operation and maintenance is drawn primarily from the PSI.

6.3. Database structure

The design of the database structure for the DTC data, information, and knowledge is a highly unconstrained problem and many alternative configurations are possible. Whereas current modes of data storage in construction projects almost exclusively consider file-based storage, object-based graph networks stored using cloud services are likely to be preferable for DTC. The reason is that aspects of PII, PIK, PSI, and PSK may overlap and share common resources and data at the object or property levels. Some examples:

(a) An architect designs a double-swing door and models it as an instance of a door class with appropriate property values in a BIM model (product PII). Once the owner approves the door design, it is digitally signed by setting a meta-data property to “approved for construction” (process PII). The contractor uses the BIM model for procurement, and a value of “purchased” is set for the instances’ status property set, together with a timestamp of the transaction—this is process PSI. Later, an inspector (or a smart software agent) compares the door installed to the design intent and confirms that the door installed meets the design intent and sets an “approved” value and timestamp for the inspection status property set—this is PSK. In this example, both the intent and the status information and knowledge are associated with the same single BIM element instance.

(b) Consider the same example of a door design intent expressed as a BIM element instance. However, due to the large size of the door, the contractor decides to procure and install the door in two parts. This necessitates modeling of two new instances of the door “as-procured” (later to be designated “as-built”). Assuming that the two parts fulfil the original design intent, this is not considered a change and no update of the intent information is necessary. In this case, the PII and PSI are modeled as different object instances. Comparison of the PII element with the two PSI elements will yield the determination of fulfilment (or not) of the design intent, thus filling in PSK as before, but this time in the distinct “as-built” instances. In this example, the intent and the status information are associated with separate BIM element instances.

(c) Where file-based storage is used, it will be more common for the general contractor to generate their own BIM models for construction. These may be modeled from scratch or initially copied from the design intent models and enriched with details, but in any case, the PII and the PSI are stored separately. While individual building objects can be mapped between PII and PSI models using common IDs or by location, they carry their own property sets, with potentially overlapping values that may be changed independently.

(d) During initial design, or during construction, an engineer prepares a construction plan using 4D simulation software and an optimization engine. The input to the plan includes the planned tasks (process PII) and their related BIM elements (product PII). The output of each run of the analysis is a set of predicted outcomes (process PIK). In a file-based system, each task would be replicated in each output file. In a graph-based system, each task object could more simply be associated with a result property set for each outcome, with the property set labeled with an appropriate version number.

Each of these examples represents a different configuration of the data storage. The set is not exhaustive, and numerous additional configurations and permutations are likely. Other research suggests that property graph representations with late-binding schema objects appear to be the most appropriate (Sacks et al., Reference Sacks, Girolami and Brilakis2020).
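As a minimal sketch of configuration (b), the following Python fragment stores one design-intent (PII) door and two as-built (PSI) parts as nodes in a toy property graph, compares them, and writes the resulting inspection status (PSK) onto the as-built instances. The node layout, the `fulfils` edge label, the property names, the "rejected" value, and the width-sum rule are all illustrative assumptions; none of them is taken from a BIM schema or from the DTC definition.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A graph node; `cluster` is 'PII' or 'PSI' here (assumed labels)."""
    node_id: str
    cluster: str
    props: dict = field(default_factory=dict)


class PropertyGraph:
    """Toy in-memory property graph: nodes plus labeled directed edges."""

    def __init__(self):
        self.nodes = {}
        self.edges = []  # (source_id, label, target_id)

    def add_node(self, node):
        self.nodes[node.node_id] = node
        return node

    def add_edge(self, source_id, label, target_id):
        self.edges.append((source_id, label, target_id))

    def sources(self, label, target_id):
        """Return the nodes that point at `target_id` through `label` edges."""
        return [self.nodes[src] for src, lbl, tgt in self.edges
                if lbl == label and tgt == target_id]


def check_fulfilment(graph, intent_id, tolerance_mm=5):
    """Compare as-built parts with the intent and record PSK on the parts.

    Hypothetical rule: the part widths must sum to the intended width
    within `tolerance_mm`.
    """
    intent = graph.nodes[intent_id]
    parts = graph.sources("fulfils", intent_id)
    built_width = sum(part.props["width_mm"] for part in parts)
    fulfilled = abs(built_width - intent.props["width_mm"]) <= tolerance_mm
    for part in parts:
        # The inspection property set plays the role of PSK in this sketch.
        part.props["inspection"] = {
            "status": "approved" if fulfilled else "rejected"
        }
    return fulfilled


graph = PropertyGraph()
graph.add_node(Node("door-01", "PII", {"width_mm": 1800}))
for part_id, width in (("door-01-left", 900), ("door-01-right", 898)):
    graph.add_node(Node(part_id, "PSI", {"width_mm": width}))
    graph.add_edge(part_id, "fulfils", "door-01")

fulfilled = check_fulfilment(graph, "door-01")
print(fulfilled, graph.nodes["door-01-left"].props["inspection"])
```

The intent node is left untouched, which mirrors the observation in (b) that no update of the intent information is needed when the as-built parts fulfil the design.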

6.4. System of systems

The digital twin of a construction project will function within a network of digital twins. In an eventual implementation, twins of construction equipment (a tower crane, for example) send information to and receive information from the construction project twin. Similarly, the construction project twin itself communicates with higher-order twins, such as those of the local transport network and a concrete batching plant, to determine expected arrival times of concrete mixers. Construction projects intersect and interact with their surrounding infrastructure with multiple system interdependencies, many of which may be negotiated via their digital twins (Whyte et al., Reference Whyte, Fitzgerald, Mayfield, Coca, Pierce and Shah2019).

The value of such integration lies in the potential to reduce waste in the supply chain through optimization across a portfolio of projects in a local region, rather than local project optimization. For example, flattening the peak demand for ready-mixed concrete by coordinating concrete casting tasks across projects has been shown to improve planning reliability and performance across portfolios of projects (Arbulu et al., Reference Arbulu, Koerckel, Espana and Kenley2005). Exploiting information regarding transport network patterns would further enhance the reliability of planning.
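To illustrate why coordination across a portfolio can flatten peak demand, the sketch below assigns concrete pours from several projects to days within each pour's allowed window, always choosing the day with the lowest load so far. The greedy rule, the pour data, and all names are assumptions made for illustration only; this is not the method reported by Arbulu et al.

```python
def level_pours(pours, horizon_days):
    """Greedy leveling: place each pour on the least-loaded day in its window.

    `pours` is a list of (name, volume_m3, earliest_day, latest_day) tuples
    with 0-based day indices. Larger pours are placed first.
    """
    load = [0.0] * horizon_days
    schedule = {}
    for name, volume, earliest, latest in sorted(pours, key=lambda p: -p[1]):
        day = min(range(earliest, latest + 1), key=lambda d: load[d])
        load[day] += volume
        schedule[name] = day
    return schedule, load


# Hypothetical pours from three projects (A, B, C) over a three-day horizon.
pours = [
    ("A-slab", 120.0, 0, 2),
    ("B-slab", 100.0, 0, 2),
    ("C-walls", 60.0, 0, 2),
    ("A-columns", 40.0, 1, 2),
]

# Baseline: every project pours on its earliest possible day.
uncoordinated = [0.0] * 3
for _, volume, earliest, _ in pours:
    uncoordinated[earliest] += volume

schedule, load = level_pours(pours, horizon_days=3)
print("uncoordinated peak:", max(uncoordinated))
print("coordinated peak:", max(load), schedule)
```

A production system would replace the greedy rule with a proper optimization and would draw the pour windows from each project's plan rather than from hard-coded tuples.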

6.5. Platform business model

In many industries, platform business models have proved to be effective for offering products or services with great variety while benefiting from the economies of scale of the underlying platforms. In construction, general contractors, such as the “Tier 1” contractors in the UK, essentially function as platform organizations. They provide management and coordination services with a lean core of management and administrative staff but rely on subcontracted supply chain partners to provide construction personnel, equipment, and materials. However, their growth is constrained by the need to provide core management, which has limited capacity and is difficult to scale. Application of the platform business model in building construction has been proposed (Mosca et al., Reference Mosca, Jones, Davies, Whyte and Glass2020), including adoption of platform organizations in the context of construction management services delivered by startup companies as “software-as-a-service” solutions (e.g., Laine et al., Reference Laine, Alhava, Peltokorpi and Seppänen2017), and product platform models in house building (Jansson et al., Reference Jansson, Johnsson and Engström2014).

The basis for DTC is a system that integrates monitoring hardware, cloud data storage, and sophisticated information processing capabilities. Implementing, maintaining, and operating a DTC system will require larger scales of resources than most general contractors can muster. However, the basic components of the DTC are invariant with construction project type, and it is therefore ideally suited to provision to multiple construction projects as an integrated hardware/software service. As such, DTC could be delivered in a platform business model, in which a large DTC platform company provides all of the management, planning, and production control infrastructure for general contractors.

Over time, a platform DTC provider could concentrate purchasing from the supply chain because the service would already aggregate the day-to-day coordination of subcontractors and supplier deliveries across multiple projects. According to the Portfolio-Project-Operations model (Sacks, Reference Sacks2016), the ability to coordinate operations across a large portfolio would allow a DTC platform provider to achieve significantly better production flow than general contractors can when managing isolated projects.

The deepening transformative impact of digital information on project delivery models in construction is already clearly evident (Whyte, Reference Whyte2019). Against this background, it is quite likely that fulfilment of the DTC mode will also change existing commercial practices by facilitating a platform economy in the construction industry. DTC platform companies will offer optimized design and construction management services that surpass the current capabilities of general contractors, and they will control the most valuable part of projects: the data and the information. In theory, this could lead not only to a reduction of production management personnel on construction sites but also to the redundancy of traditional gatekeeper organizations (in this case, the classical general contractor), completely changing the way in which design and construction are managed.

6.6. Barriers to implementation

Implementing DTC will require overcoming numerous barriers: technical, sociological, organizational, and commercial. DTC is fundamentally dependent on advanced data and information processing software, such as machine learning tools (to automate CEP and for aspects of computer vision, including point cloud processing), optimization and search algorithms (for analyzing and exploring potential forward-looking construction plans), and other AI tools. In earlier work, the authors identified two key areas of research needed to make progress with integration of Construction Tech and BIM: semantic enrichment of BIM models, and property graph representations of BIM models that are accessible for machine learning (Sacks et al., Reference Sacks, Girolami and Brilakis2020). Many researchers are currently tackling these problems (e.g., Wagner et al., Reference Wagner, Bonduel, Pauwels and Rüppel2020; Werbrouck et al., Reference Werbrouck, Pauwels, Bonduel, Beetz and Bekers2020).

Existing construction management systems and practices, and particularly the workforce skilled in their implementation, may also prove difficult to change. This has been the experience of innovations such as lean construction and BIM (Zomer et al., Reference Zomer, Neely, Sacks and Parlikad2020). DTC is likely to face similar obstacles. The deep organizational fragmentation that characterizes the construction industry, with multiple layers of subcontracting forming ad-hoc project-specific organizations, is not conducive to the deep process change that is necessary. Traditional commercial practices in construction, particularly with regard to risk management, have hindered adoption of technological innovations in the past. Analysis of these factors has indicated that it is most likely that Construction Tech startup companies, with access to venture capital and to workers with the requisite AI skills, will be better positioned to innovate in the change to DTC than traditional construction companies (Sacks et al., Reference Sacks, Girolami and Brilakis2020).

6.7. Future research

Much research and development work is needed to progress the DTC workflow paradigm, particularly in the areas of (a) interpretation of multiple data streams to derive status information, (b) design of suitable data storage mechanisms, (c) potential interactions between project digital twins and the digital twins of construction resources within a project, on the one hand, and digital twins of systems in the surrounding environments, on the other hand, (d) applicability of AI tools in this data-centric mode of construction management, and (e) commercial and organizational business models.

Development of methods and of tools to derive status information will require researchers skilled in sensing technologies, construction management, and data science. Extensive work will be needed to identify and develop appropriate algorithms and applications for data collection, data labeling, CEP, process simulations, optimization, and testing. Edge computing may be needed to achieve real-time processing of some data streams, and processing may require inferencing as well as deduction. Current research streams concerning acquisition, interpretation, and semantic enrichment of information from images and point clouds are essential components.

Formal classification of the data, information, and knowledge at the high level of abstraction presented in this paper is necessary for development of the analytical and AI tools that can exploit it. In such a data-centric system, the semantics inform consumers (people or software agents) of the intent and the level of confidence of the information. On the other hand, the classifications do not constrain the variety of possible paths for technical implementation of data storage mechanisms for the digital twin. The possible alternative schemes include: file-based services in which each of PII, PIK, PSI, and PSK is stored in a distinct file set; object-based services in which BIM elements encapsulate both PII and PSI in common objects with differing attributes or property sets; object-based services in which BIM elements are replicated for PII and for PSI; and linked data services in which information of all kinds is stored in property graphs, with metadata for each instance defining intent, version, and timestamp. The results of other research suggest that the last of these schemes appears to be the most appropriate, particularly for AI applications including pattern recognition and machine learning.
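The idea of attaching metadata (cluster, version, timestamp) to each stored instance can be sketched as follows. In this hedged Python example, a planned task carries one PII property set and two versioned PIK result sets from successive simulation runs, and a small query returns the most recent version for a given cluster. The class names, field names, identifiers, and values are hypothetical and are introduced only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class Cluster(Enum):
    """The four information clusters named in the text."""
    PII = "PII"
    PIK = "PIK"
    PSI = "PSI"
    PSK = "PSK"


@dataclass
class PropertySet:
    """A property set attached to an element, labeled with its metadata."""
    element_id: str
    cluster: Cluster
    version: int
    timestamp: datetime
    values: dict


def latest(store, element_id, cluster):
    """Return the highest-version property set for an element and cluster,
    or None if nothing of that kind has been stored."""
    matches = [p for p in store
               if p.element_id == element_id and p.cluster is cluster]
    return max(matches, key=lambda p: p.version, default=None)


# Hypothetical store: one planned task and two simulation outcomes.
store = [
    PropertySet("task-42", Cluster.PII, 1,
                datetime(2020, 3, 1, tzinfo=timezone.utc),
                {"planned_duration_days": 5}),
    PropertySet("task-42", Cluster.PIK, 1,
                datetime(2020, 3, 2, tzinfo=timezone.utc),
                {"predicted_duration_days": 7}),
    PropertySet("task-42", Cluster.PIK, 2,
                datetime(2020, 3, 9, tzinfo=timezone.utc),
                {"predicted_duration_days": 6}),
]

newest = latest(store, "task-42", Cluster.PIK)
print(newest.version, newest.values)
print(latest(store, "task-42", Cluster.PSK))
```

Because each result set is a separate labeled record rather than a copy of the task, earlier predictions remain available for comparison without duplicating the task itself.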

Interactions between digital twins are essential because DTC must function within a multisystem context: construction projects are embedded in the broader built environment, function within local economies, and apply multiple resources (from contractors to equipment, large and small), all of which are likely to have their own digital twin representations. Research into system interactions, communications protocols, and information reliability will be necessary. At their core, all digital twins are reliant on data from the physical reality. This raises questions as to how formal assimilation, analysis, and computation of data are to be achieved in a consistent manner. Likewise, the underlying theory and guiding mathematical principles for coupling individual digital twins and systems of digital twins to achieve a coherent representation remain an open question that will, together with data assimilation, require novel research in applied mathematics, theoretical and computational statistics, and computing science. This will form future programs of investigation that will be treated in subsequent publications.

Proposing and evaluating alternative directives to optimize production control require AI routines to formulate alternatives and to predict their possible outcomes by extrapolation from the current state. This is likely to require expertise in deep learning, algorithms, agent-based simulation, and other data science skills, as well as thorough understanding of lean construction and production theory, which implies the need for multidisciplinary research teams.

Finally, new business models will be needed to deliver the technical aspects of DTC, and new business models will emerge as a result of their application. These aspects will require researchers of construction management, organizational sciences, and others.

7. Conclusion

The contributions of this paper include depiction of the workflow framework for a comprehensive DTC information system and detailed review of the research and development needed to fulfil it. DTC is a data-centric mode of construction management in which information and monitoring technologies are applied in a lean closed loop planning and control system. This work applied conceptual analysis to derive the core information and process concepts that will define future development of DTC systems for the design and construction phases of buildings and infrastructure facilities. The paper contributes to and extends the existing understanding of digital twins in the construction industry, viewing the DTC information systems as part and parcel of the transformation of production management from reactive to proactive.

DTC should not be viewed simply as a logical progression from BIM or as an extension of BIM tools integrated with sensing and monitoring technologies. Instead, DTC is a comprehensive mode of construction that prioritizes closing the control loops by basing management decisions on information that is reliable, accurate, thorough, and timely. That information is provided in two key ways: (a) continuous monitoring of the status of design information, supply chains, and conditions on site, coupled with CEP to deduce current status, and (b) extensive use of data analytics and engineering simulations to evaluate the probable outcomes of alternative design and planning decisions. Thus, decisions are made within a context of situational awareness. Eventually, people may increasingly rely on software agents that recommend courses of action and allow them to direct work autonomously: coordinating delivery of materials, delivering design information, coordinating construction schedules with trades, filtering tasks for readiness, and instructing crews to commence tasks. BIM and monitoring technologies play a role in modeling building information and acquiring raw data, respectively, but they are subsumed in a system that exploits data, information, and knowledge to provide comprehensive situational awareness.

The DTC process incorporates four distinct Plan-Do-Check-Act cycles at different time resolutions, from real-time feedback from monitoring technologies to workers for safety and quality control, to long-term feedback from archived project digital twin information through machine-learning and case-based reasoning to ongoing projects. The DTC information system comprises five conceptual information clusters of project information: PII, PIK, PSI, PSK, and monitoring data. Application of the PDCA cycle as a control mechanism reflects the understanding of construction projects as temporary production systems to which lean continuous improvement practices can and should be applied.

Section 6.7 detailed key aspects of the research and development needed to bring DTC to fruition. Among the key areas are: data fusion to interpret multiple data streams and derive status information; suitable data storage mechanisms, protocols, and algorithms for maintaining consistency among diverse digital twins; data science methods and algorithms for monitoring, interpretation, simulation and optimization; and appropriate business models.

Acknowledgment

Figure 1 was reprinted from Navon R and Sacks R (2007) Assessing research issues in Automated Project Performance Control (APPC). Automation in Construction 16(4), 474–484, with permission from Elsevier.

Funding Statement