Introduction

Diagnostic accuracy relies on proficiency in basic physical examination skills. Despite recognition of its importance, medical education has been criticized for a perceived decline in physical examination skills among recent graduates.[1] A solid grasp of fundamental ophthalmologic skills is essential for both primary and specialty care physicians, as ophthalmologic problems account for 2%-3% of primary care consultations and nearly one-fifth of emergency consultations.[2,3] Moreover, basic knowledge of ophthalmology is required in the management of patients with certain neurologic, rheumatologic and infectious conditions.[4] Thus, enhancing primary care physicians’ skills in performing accurate fundus examinations may improve the medical care given to such patients.[5]

Undergraduate training in ophthalmology is limited. In a survey by Noble et al.[4] of 386 first-year residents who had recently graduated from a Canadian medical school, 76.2% reported receiving 1 week or less of overall exposure to ophthalmology during medical school. The same survey suggests that most medical students enter residency without confidence in their ophthalmology knowledge and skill set.[4] Not surprisingly, most primary care physicians view their undergraduate ophthalmology education as inadequate, which may translate into a lack of confidence in performing funduscopic assessments.[2,4,6-8]

A number of teaching methods have been used to train and evaluate students in direct ophthalmoscopy, but their effectiveness is limited. Previous techniques have included practice on fellow students and funduscopic examination of patients with verbal confirmation of pathologic findings.[9] These techniques do not allow for repeated, careful exposure to various pathologies, and an observer cannot verify what the student actually visualizes.[9-12] Simulation, as a method of teaching difficult-to-master diagnostic skills, offers a highly realistic training experience, the ability to vary the clinical presentation to meet the needs of the learner and, most importantly, unlimited repetitive practice that supports retention of knowledge. Implementing such a tool with trainees may reduce reliance on specialists for routine ophthalmoscopic evaluation.

The goal of this study was to evaluate whether a hands-on training session with an ophthalmoscopy simulator improves primary care trainees’ identification of normal funduscopic anatomy and their accuracy in recognizing pathological states of the fundus.

Participants and Methods

This was a randomized controlled, single-center study. Approval from The Hospital for Sick Children Research Ethics Board was obtained before commencing the study. Subjects were eligible if they were completing postgraduate medical training in medicine or pediatric neurology at postgraduate levels 1-3. Residents were excluded if they were unable to complete the full study session of ~1 hour or had previously completed residency-level training in ophthalmology. An e-mail recruitment letter was sent to residents within the Department of Pediatrics at the Hospital for Sick Children, Toronto, Ontario, Canada. Each resident participated in two direct funduscopy sessions: the pre-intervention assessment and the immediate post-intervention assessment. The pre-intervention assessment included a needs assessment questionnaire, e-mailed to each participant before the study and scored on a 5-point Likert scale. Data collected included participants’ academic institution/university, medical school, postgraduate year (PGY) of training, area of practice, and the amount and adequacy of didactic teaching and clinical exposure to ophthalmology during medical school and residency.

Before the pre-intervention assessment, residents provided consent and were randomized to the intervention or control group by the study coordinator (TB), who assigned each participant an ID number using randomizer.org. Even numbers were assigned to the control group and odd numbers to the intervention group. Funduscopy skills were assessed using 20 fundus images, in which participants were asked to identify common ophthalmic anatomy and pathologies using OphthoSim™ (OtoSim Inc., Toronto, ON, Canada), a direct ophthalmoscopy simulator that allows funduscopy practice on a realistic model of the eye with exposure to both normal funduscopic anatomy and various pathological states of the fundus. Assessments were performed through the simulator’s undilated pupil, as this more closely reflects the real-world environment in which physicians work. A brief 15-minute teaching session on how to hold and maneuver an ophthalmoscope on the simulator was provided to participants in both arms to ensure comfort and ease of use.
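The allocation rule described above (a randomly generated ID, then even numbers to control and odd numbers to intervention) can be sketched as follows. This is a hypothetical illustration only: the function name `allocate_by_parity`, the ID range and the seed are assumptions, and the settings actually used on randomizer.org are not reported. Note that allocating randomly drawn IDs by parity does not force equal group sizes.

```python
import random
from typing import Dict, Optional


def allocate_by_parity(n_participants: int, id_max: int,
                       seed: Optional[int] = None) -> Dict[int, str]:
    """Hypothetical sketch: draw unique random IDs, then allocate by ID parity.

    Even IDs -> control, odd IDs -> intervention (the rule described in the study).
    The ID range (1..id_max) and the seed are assumptions, not study settings.
    """
    if n_participants > id_max:
        raise ValueError("ID range is smaller than the number of participants")
    rng = random.Random(seed)  # seeded so the allocation list can be regenerated for auditing
    ids = rng.sample(range(1, id_max + 1), n_participants)  # unique IDs in random order
    return {pid: ("control" if pid % 2 == 0 else "intervention") for pid in ids}


allocation = allocate_by_parity(19, 100, seed=2017)
n_control = sum(group == "control" for group in allocation.values())
print(n_control, "control /", len(allocation) - n_control, "intervention")
```

Because group sizes depend on how many even and odd IDs happen to be drawn, a block-randomized list would be one way to guarantee balance; that alternative is mentioned only for comparison and is not what the study describes.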

Images of various normal and pathological conditions of the eye were projected inside the simulator for participants to view. A total of 20 de-identified funduscopic images were selected from The Hospital for Sick Children patient database. The selected images included both common retinal pathologies, such as optic disc edema, and less common pathologies, such as a cherry-red spot. Multiple-choice question (MCQ) tests were designed by the co-investigators and underwent quality assurance review by an ophthalmologist (AA).

Between the two assessments, each participant completed a 15-minute self-study session. The intervention group used the simulator to study images of normal and pathological fundus anatomy, accompanied by a text description of what they were seeing, while the control group viewed randomly chosen non-funduscopic images (e.g., a flower, New York City). An instructor was available to answer questions and troubleshoot technical issues as necessary. The self-study session was followed by a second examination in which participants used the simulator to identify common pathologies in 20 unique fundus images. At the completion of the post-intervention assessment, all participants attended an informal feedback session consisting of a didactic PowerPoint presentation outlining the funduscopic images that were tested, their key features and clinical pearls. After study completion, the slides were e-mailed to each participant for future reference, along with a follow-up survey asking for feedback on the utility of the training session and the experience with the hands-on simulator.

The funduscopic images used for each assessment were randomized and unique; no image was repeated. The breakdown of funduscopic findings presented to participants was as follows: 20% normal fundus anatomy, 10% retinal hemorrhages, 10% optic pallor, 10% macular star, 10% papilledema, 5% retinal detachment, 5% Roth spot, 5% retinoblastoma, 5% chorioretinal coloboma, 5% cotton-wool spots, 5% cherry-red spot, 5% arteriovenous nicking and 5% increased cup-to-disc ratio. Participants were given 1 minute per image to recognize the pathology or its absence.
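As an arithmetic check, the listed shares sum to 100% and translate into whole numbers of images on a 20-image test: 4 normal images, 2 images for each 10% category and 1 image for each 5% category. The sketch below assumes every 20-image assessment followed this breakdown exactly; the helper name `images_per_category` is illustrative.

```python
# Category shares as listed in the study (percent of each 20-image test).
BREAKDOWN_PCT = {
    "normal fundus anatomy": 20,
    "retinal hemorrhages": 10,
    "optic pallor": 10,
    "macular star": 10,
    "papilledema": 10,
    "retinal detachment": 5,
    "Roth spot": 5,
    "retinoblastoma": 5,
    "chorioretinal coloboma": 5,
    "cotton-wool spots": 5,
    "cherry-red spot": 5,
    "arteriovenous nicking": 5,
    "increased cup-to-disc ratio": 5,
}


def images_per_category(breakdown, n_images=20):
    """Convert percentage shares into whole image counts, rejecting inconsistent input."""
    total = sum(breakdown.values())
    if total != 100:
        raise ValueError(f"shares sum to {total}%, not 100%")
    counts = {}
    for category, pct in breakdown.items():
        count, remainder = divmod(pct * n_images, 100)
        if remainder:
            raise ValueError(f"{category}: {pct}% of {n_images} is not a whole number of images")
        counts[category] = count
    return counts


counts = images_per_category(BREAKDOWN_PCT)
for category, count in counts.items():
    print(f"{count} x {category}")
```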

Outcomes

The primary outcome of the study was the difference in mean multiple-choice test scores between the pre- and post-intervention assessments. We also assessed participants’ subjective experiences, perceived benefit, and criticisms and comments regarding OphthoSim™ in terms of ease of use, esthetic appeal and user interface.

Data Collection

Study data were collected and managed using REDCap electronic data capture tools hosted at The Hospital for Sick Children.[13] REDCap (Research Electronic Data Capture) is a secure, web-based application designed to support data capture for research studies, providing (1) an intuitive interface for validated data entry; (2) audit trails for tracking data manipulation and export procedures; (3) automated export procedures for seamless data downloads to common statistical packages; and (4) procedures for importing data from external sources.

Analysis

Statistical analysis was performed by SAG, who was blinded to group assignment. Standard unpaired t-tests were used, with p<0.05 considered statistically significant. Because the sample was small, effect sizes were also calculated using Cohen’s d (small d=0.2; medium d=0.5; large d=0.8). Confidence intervals (CIs) are reported as 95% CIs in the form (95% CI: lower-upper). Descriptive statistics were used for survey results.
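The effect-size and t-test calculations named above can be reproduced from summary statistics. The sketch below assumes the pooled-SD form of Cohen’s d and the equal-variance (Student’s) unpaired t-test, and assumes 9 participants in the intervention group; the study does not state which variants were used. Under these assumptions, the intervention group’s reported pre- and post-intervention means and SDs from the Results give d≈1.51 and t≈3.21 on 16 degrees of freedom, in line with the reported d=1.5.

```python
import math


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled SD, as used by the equal-variance (Student's) unpaired t-test."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def student_t(mean1, sd1, n1, mean2, sd2, n2):
    """Unpaired t statistic and degrees of freedom, assuming equal variances."""
    se = pooled_sd(sd1, n1, sd2, n2) * math.sqrt(1 / n1 + 1 / n2)
    return (mean1 - mean2) / se, n1 + n2 - 2


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d: difference in means divided by the pooled SD."""
    return (mean1 - mean2) / pooled_sd(sd1, n1, sd2, n2)


# Intervention group, post vs pre (means and SDs from the Results section;
# n=9 at each time point is an assumption, not stated in this section).
d = cohens_d(12.78, 2.05, 9, 9.89, 1.76, 9)
t, df = student_t(12.78, 2.05, 9, 9.89, 1.76, 9)
print(f"d = {d:.2f}, t = {t:.2f}, df = {df}")  # d = 1.51, t = 3.21, df = 16
```

Converting t into a p-value requires the t distribution, which the Python standard library does not provide; SciPy’s `scipy.stats.ttest_ind_from_stats` (with `equal_var=True`) performs the same test directly from means, SDs and group sizes. Treating pre and post scores as independent samples mirrors the unpaired test described here; a paired test would need within-participant differences, which are not reported.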

Results

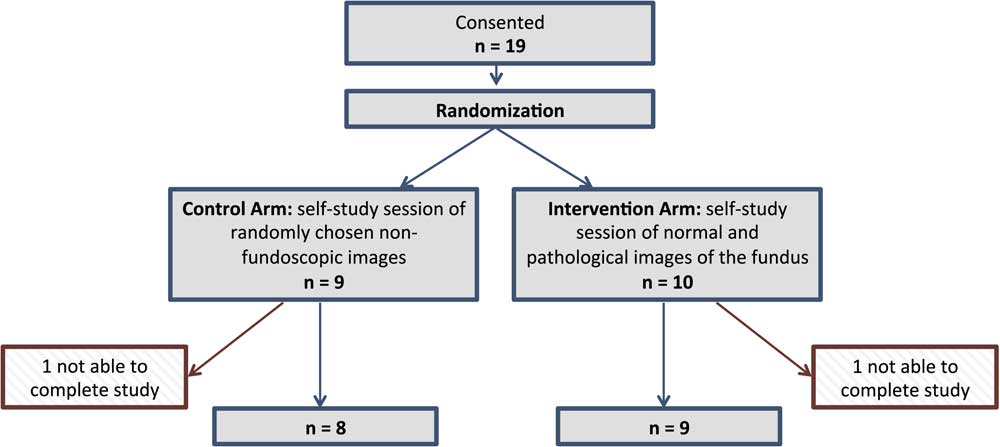

In total, 19 participants provided consent. One participant dropped out from each group, resulting in a 90% (17/19) retention rate (Figure 1). The study sample included 15 pediatrics residents (PGY-1=4; PGY-2=8; PGY-3=3) and two pediatric neurology residents (PGY-2) (Table 1). The largest share of participants, 47% (8/17), completed their undergraduate medical education in Ontario, 24% (4/17) elsewhere in Canada and 29% (5/17) outside of Canada.

Figure 1 Study design.

Table 1 Demographic data

PGY=postgraduate year.

Pre-Intervention Survey Results

In total, 65% (11/17) of the residents reported that they received minimal or no formal teaching in ophthalmology during their undergraduate medical studies. Although elective opportunities in ophthalmology were offered during their medical school training, only 18% (2/11) took part in them. All residents reported no or minimal didactic (17/17) and clinical (16/16) exposure to ophthalmology during their postgraduate medical education.

Table 2 summarizes self-reported comfort with recognizing funduscopic pathology. On a 5-point Likert-type scale (1=“not at all comfortable”, 2=“slightly comfortable”, 3=“neutral”, 4=“comfortable”, 5=“very comfortable”), respondents indicated how comfortable they felt recognizing funduscopic pathology and performing the technical skill well enough to yield clinically important information. No residents reported comfort with any funduscopic pathology except papilledema (2/16 [12.5%] comfortable; 11/16 [68.8%] slightly comfortable) and retinal hemorrhages (1/16 [6.3%] comfortable; 2/16 [12.5%] slightly comfortable). Overall, 80% were not at all comfortable recognizing or managing most common retinal abnormalities. Residents also reported low comfort with direct funduscopy skills (1/17 [6%] comfortable; 10/17 [59%] slightly comfortable), and over one-third (35%, 6/17) were not comfortable using an ophthalmoscope. Most residents (71%, 12/17) did not own an ophthalmoscope, and ophthalmoscope ownership (Table 1) did not correlate with self-reported ease of using the ophthalmoscope (r=0.061, p=0.817).
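The text does not state how the ownership correlation was computed. One common approach, assumed here, is Pearson’s r with ownership coded 0/1 against the 1-5 Likert comfort rating; with a dichotomous variable this is equivalent to the point-biserial correlation. The function name `pearson_r` is illustrative, and the data in the usage lines are invented toy values, not study data.

```python
import math


def pearson_r(x, y):
    """Pearson correlation; with x coded 0/1 this equals the point-biserial r."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)


# Toy example (invented values, NOT study data): 1 = owns an ophthalmoscope,
# 0 = does not, paired with the same resident's 1-5 Likert comfort rating.
owns = [1, 0, 0, 1]
comfort = [3, 2, 1, 4]
print(round(pearson_r(owns, comfort), 3))  # 0.894
```

Because Likert ratings are ordinal, a rank-based coefficient such as Spearman’s rho is a reasonable alternative; the study does not say which was used.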

Table 2 Residents’ comfort levels with recognizing ocular pathology

* As one participant did not complete this question, percentages are reported for n=16.

Intervention Results

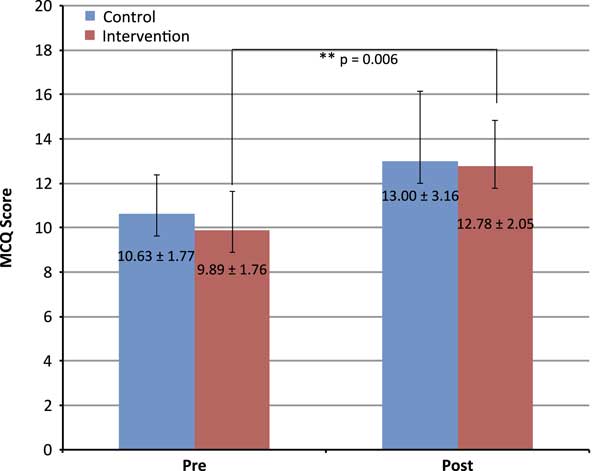

On the pre-intervention multiple-choice evaluation, pediatric trainees correctly identified a mean of 10.24±1.75 (95% CI: 9.34-11.14) of the 20 images. Pre-intervention scores did not differ significantly between the control (10.63±1.77; 95% CI: 9.14-12.10) and intervention (9.89±1.76; 95% CI: 8.53-11.24) groups (p=0.405), indicating a similar baseline level of skill in the two groups. After a single 15-minute self-study session, post-intervention scores also did not differ significantly between the control (13.00±3.16; 95% CI: 10.36-15.64) and intervention (12.78±2.05; 95% CI: 11.20-14.35) groups (p=0.864). However, the intervention group’s scores improved significantly from pre- to post-intervention (p=0.006), with a large effect size (Cohen’s d=1.5), whereas the control group’s scores did not change significantly (p=0.091) (Figure 2).
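The reported intervals are consistent with the usual t-based 95% CI for a mean, mean ± t(0.975, n−1) × SD/√n. The sketch below recomputes them from the reported means and SDs, assuming 8 participants in the control group and 9 in the intervention group; these group sizes are not stated in this section but are consistent with the 17 completers and with the reported intervals. Critical t values are taken from standard t tables, and small differences in the second decimal reflect rounding of the reported means and SDs.

```python
import math

# Two-sided 95% critical values of Student's t (from standard t tables),
# keyed by degrees of freedom; only the df needed here are included.
T_CRIT_95 = {7: 2.365, 8: 2.306, 16: 2.120}


def ci95(mean, sd, n):
    """95% CI for a mean: mean +/- t(0.975, n-1) * sd / sqrt(n)."""
    t_crit = T_CRIT_95[n - 1]  # KeyError if this df is not in the small table above
    half_width = t_crit * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


# (label, mean, SD, n); n=8 control and n=9 intervention are assumptions.
summaries = [
    ("all residents, pre", 10.24, 1.75, 17),
    ("control, pre", 10.63, 1.77, 8),
    ("intervention, pre", 9.89, 1.76, 9),
    ("control, post", 13.00, 3.16, 8),
    ("intervention, post", 12.78, 2.05, 9),
]
for label, mean, sd, n in summaries:
    lo, hi = ci95(mean, sd, n)
    print(f"{label}: {mean:.2f} (95% CI: {lo:.2f}-{hi:.2f})")
```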

Figure 2 Pediatric residents pre-intervention and immediate post-intervention multiple-choice question (MCQ) scores out of 20, representing diagnostic accuracy.

Neither self-reported comfort with direct funduscopy nor self-reported performance on the multiple-choice quizzes correlated significantly with pre-intervention funduscopy performance among pediatric residents (r=0.459, p=0.064).

Finally, 71% (12/17) of participants indicated on the post-intervention survey that they would prefer hands-on training with the simulator over other teaching methods as a means of learning and gaining confidence in their direct ophthalmoscopy skills.

Discussion

Our results suggest that a single, brief independent learning session using an ophthalmoscopy simulator improves diagnostic accuracy in postgraduate pediatric trainees. In addition to significantly improving their test scores on the simulator, residents allocated to the intervention group reported improved confidence in performing funduscopy and enhanced knowledge.

The results of our pre-intervention survey suggest levels of background training and confidence in direct funduscopy among our postgraduate trainees similar to those reported previously in other international, US and Canadian centers.[2,4-7] In 2007, Wu et al.[14] found that internists, residents and medical students reported the lowest self-confidence in performing a nondilated funduscopic examination among all physical examination skills. Two years later, Noble et al.[4] reported that 64% of Canadian medical school graduates described a lack of exposure to ophthalmology in their undergraduate medical curriculum. Although this training gap has been recognized for almost 10 years, our survey results suggest that curricular changes in medical education have not yet adequately addressed it.

Although scores improved following a single 15-minute self-study session in both the control and treatment arms, the improvement was statistically significant only in the group receiving the intervention. Ophthalmoscopy is a complex task: the examiner must obtain an adequate view of the fundus while simultaneously interpreting what is seen.[11] Our teaching session allowed all trainees to handle and practice using the ophthalmoscope on the simulator, but only the intervention group was given dedicated fundus images to study on the simulator. Because both cohorts practiced with the instrument, the motor skills involved in direct funduscopy likely improved in both groups, which may have allowed a better view of the retina and contributed to the higher post-intervention multiple-choice scores in both groups. Participants who studied pathologic images on the simulator improved more than those who did not. A recent study by Kelly et al.[9] favored fundus photography over direct ophthalmoscopy in the clinical setting, a conclusion at odds with our findings. The ability to recognize retinal disease patterns is not useful if the retina cannot be seen in the first place, and direct funduscopy is a skill acquired through training and practice. This is further supported by Roberts et al.,[15] who found that doctors were more likely to diagnose a fundal abnormality correctly from a slide than from actual funduscopy, suggesting that the difficulty lies in technique rather than knowledge.

Referring every patient who needs a fundus examination to ophthalmology is neither feasible nor realistic in practice, and it can also delay important investigations such as lumbar puncture, which should be performed only after increased intracranial pressure has been ruled out.

This study is subject to several limitations. First, our sample of 17 pediatric residents at a single institution is small; future studies would be strengthened by enrolling more participants from a range of primary care specialties to improve generalizability. Second, because performance was assessed only immediately after the intervention, we cannot confirm whether the intervention led to sustained improvements in clinical skills or retained learning. The skill decay often observed among trainees with limited exposure suggests that continued practice is needed to reach a threshold that leads to sustained knowledge. Ideally, a future study would evaluate whether skills gained using this simulator are sustained over time. Third, it is unclear whether improved scores on the simulator will translate into better identification of abnormalities in clinical practice, an important question for future studies. Finally, our evaluation was limited to the simulator and did not include examination of real patients, which limits our ability to determine whether this training actually improved hands-on clinical skills.

Conclusion

In conclusion, our findings suggest that one brief session including independent study on a direct funduscopy simulator can significantly improve funduscopic clinical skills. The use of an ophthalmoscopy simulator is a novel addition to traditional learning methods for postgraduate pediatric residents. Future studies should evaluate whether skills gained using this simulator are sustained over time, whether the technique improves identification of pathology in real patients, and whether it leads to concomitant improvements in patient care and health services delivery.

Acknowledgments

The authors are grateful to Vito Forte, Paolo Campisi and Brian Carrillo for advice and support regarding use of the OphthoSim™ device and assistance with set-up and technical support. The authors thank all the residents who took the time out of their schedules to participate in this study.

Disclosures

EK and EAY received funding from the Mary Jo Haddad Innovation Fund. EAY receives funding from the MS Society of Canada, the National MS Society, Ontario Institute for Regenerative Medicine, Stem Cell Network, SickKids Foundation, Centre for Brain and Mental Health, and Canadian Institutes of Health Research. She has served on the scientific advisory board for Juno Therapeutics and is a relapse adjudicator for ACI. She has also received a speaker’s honorarium from Novartis and unrestricted funds from Teva for an educational symposium. TB, AA, AA and SG have nothing to disclose.

Statement of Authorship

EK conceptualized and designed the study, drafted the initial manuscript and approved the final manuscript as submitted. SAG carried out the initial analyses and interpretation of data, reviewed and revised the manuscript, and approved the final manuscript as submitted. TB recruited participants and helped carry out the acquisition of data, critically reviewed the manuscript and approved the final manuscript as submitted. AA helped with the study design, reviewed and revised the manuscript, and approved the final manuscript as submitted. AA helped with the study design and approved the final manuscript as submitted. EAY conceptualized and designed the study, reviewed and revised the manuscript, and approved the final manuscript as submitted. All authors approved the manuscript as submitted and agree to be accountable for all aspects of the work.

Financial Support

This study was funded in part by the Mary Jo Haddad Innovation Fund.

Supplementary Material

To view the supplementary materials for this article, please visit https://doi.org/10.1017/cjn.2017.291