Introduction

On January 6th, 2021, violent Trump supporters encouraged by the sitting president stormed the Capitol in an attempt to overturn the legitimate results of the 2020 election. The insurrection represented the stunning culmination of the relentlessly conspiratorial and mendacious presidency of Donald Trump. Through repeated social media posts, speeches, and appearances on conservative media, Trump and his allies repeated baseless claims about the election, including that his losses in swing states were due to votes from “…dead people, below age people, illegal immigrants,” Footnote 1 as well as to specific voting machines that switched votes away from Trump. Footnote 2

Beliefs that the 2020 election was fraudulent are held not only by those who descended upon Washington. Nearly a year after the events of January 6th, 71% of Republicans said that Joe Biden’s election victory was “probably not” or “definitely not” legitimate (Nteta 2021). That Trump supporters would endorse false beliefs surrounding the 2020 election is not surprising, given the consistent mendacity of Trump and his allies and the election result itself: individuals who perceive themselves as “losing” are more likely to endorse conspiratorial beliefs (Uscinski and Parent 2014). Beyond elections, Trump persistently lied about matters both trivial and serious, including the size of his inauguration crowd, the path of Hurricane Dorian, and the threat of the coronavirus. The dissemination of blatantly false information had serious consequences for both public health and the health of American democracy. Trump supporters were more hesitant about the COVID-19 vaccine and less likely to become vaccinated (Fridman, Gershon, and Gneezy 2021), and exposure to Trump tweets questioning the legitimacy of democratic elections significantly decreased trust and confidence in elections among Trump supporters (Clayton et al. 2021).

Nevertheless, there may be reason to suspect that the endorsement of these misperceptions (alternatively called false or mistaken beliefs) captured by such survey research is overblown (Bullock and Lenz 2019). Misperceptions are understood as “…factual beliefs that are false or contradict the best available evidence in the public domain” (Flynn, Nyhan, and Reifler 2017). Footnote 3 First, common measures of support for false beliefs (such as simple agree/disagree questions) may inflate estimates of mass conspiratorial beliefs due to acquiescence bias (Clifford, Kim, and Sullivan 2020). Second, survey respondents may indicate agreement with false beliefs not because they truly believe them, but because they intend to signal their commitment to their partisan identity (Schaffner and Luks 2018). Still, research in other contexts suggests such expressive responding is minimal at best (Berinsky 2018), implying these (misinformed) beliefs may be genuinely held.

Put simply, I address the following question: to what degree are misperceptions surrounding American democracy and the COVID-19 pandemic genuinely held rather than a function of expressive responding? I examine this tension by directly testing the resiliency of misperceptions surrounding the “Big Lie” and the coronavirus pandemic. Specifically, I conduct two survey experiments wherein respondents are randomly assigned to receive treatments theorized to reduce expressive responding (Prior, Sood, and Khanna 2015; Yair and Huber 2021): accuracy pressures and response substitution treatments. In doing so, I assess whether the high percentage of Republicans who profess belief in the “Big Lie” reflects genuine or expressive responding.

I find that methods designed to increase accurate survey responses are at best ineffective in reducing indicated support for mistaken beliefs surrounding the 2020 election and the COVID-19 pandemic among Independents, Republicans broadly, and Trump supporters specifically. At worst, these methods increase support for misinformation. Specifically, the response substitution treatment increased Republican respondents’ overall score on a misperception battery surrounding COVID-19 and the 2020 election.

Partisan motivated reasoning and expressive responding

Studies consistently show that respondents are largely uninformed or misinformed about major political issues (Hochschild and Einstein 2015). These misinformed or inaccurate beliefs have numerous sources. Do respondents genuinely believe inaccurate things about politics (that is, are they misinformed), or are they instead using survey responses to express something about their own identity commitments? First, mistaken beliefs about the 2020 election and the coronavirus pandemic can be understood as genuine beliefs caused by partisan motivated reasoning, in which individuals seek to process information in a manner congruent with their pre-existing identities and issue positions.

Because election losses are psychically costly (Bolsen, Druckman, and Cook 2014), believing that one’s side actually won is attractive to strong partisans, especially when they have clear incentives to process information in a directionally motivated manner (Nyhan 2021). Importantly, while partisan motivated reasoning may cause respondents to answer inaccurately, they are not misrepresenting their opinions.

A second possible reason individuals may endorse inaccurate statements surrounding the 2020 election and the COVID-19 pandemic is expressive responding. In this formulation, mistaken beliefs are not “truly” held by respondents. Rather, individuals offer responses designed to signal their identity to the surveyor. For instance, Schaffner and Luks (2018) find that Trump supporters were more likely to claim that the visibly smaller crowd at Trump’s inauguration was in fact larger, even when shown photographic evidence, in order to reaffirm their identity commitments.

Determining whether support for conspiratorial beliefs is genuine or a function of expressive responding has serious consequences for survey measurement and for democracy. If misperceptions peddled by Trump and the Republican Party are relatively stable and deeply held, they will be resistant to directional pressures and explicit corrections (Jolley and Douglas 2017). By contrast, if the endorsement of mistaken beliefs is partially a function of expressive responding, reported support for such beliefs can be reduced through “accuracy-oriented” pressures.

I test two treatments designed to encourage accuracy-oriented processing: “accuracy appeals,” which stress the importance of accurate, honest responses for the validity of the survey, and “response substitution” treatments, which allow individuals to signal their identity commitments prior to the question of interest (Gal and Rucker 2011; Yair and Huber 2021). Footnote 4 Both treatments are designed specifically to reduce expressive responding, encouraging respondents to provide an accurate response rather than a response they wish were true.

General accuracy appeals (or honesty pressures) are expected to increase accurate responding by requiring individuals to affirm that they will answer truthfully, thereby increasing attention and cognitive engagement (Prior, Sood, and Khanna 2015). By contrast, “response substitution” treatments are hypothesized to reduce inaccurate responses by giving individuals the option to signal their partisan identity before answering the questions of interest. Without that opportunity, respondents may signal their identity through related but distinct questions (thus “substituting” one response for another); with it, they can be satisfied that they have already affirmed their identity to the researcher. Previous work shows that allowing individuals to signal their partisan identity before answering questions reduces expressive responding on both political (Yair and Huber 2021) and non-political items (Nicholson et al. 2016).

Study design and hypotheses

I measure the effect of these two treatments on expressive responding surrounding the 2020 election and the coronavirus pandemic through two pre-registered survey experiments. I collected two separate samples, one of Republicans and one of Independents, through CloudResearch, a participant sourcing platform that draws on existing panels of online respondents. Footnote 5 This focus on Republicans and Independents should not be taken as implying that only these populations support conspiracy theories. Rather, belief in misperceptions depends on both the characteristics of the mistaken belief itself and the sociopolitical context in which the question is asked (Enders et al. 2022); because the misperceptions studied here show significant partisan asymmetry, I focus on Republicans and Independents.

Once recruited, respondents first completed a standard pre-stimulus demographic battery, including partisan identification and self-placement on an ideological scale. Footnote 6 I then randomly assigned individuals to one of three groups: control, accuracy pressure, and response substitution (full treatment texts in Table D.1). The first treatment group receives an accuracy pressure, asking individuals to attest that they will answer honestly. In the second treatment group (response substitution), individuals can “signal” their commitment to Donald Trump as well as their opinions about the coronavirus before the questions of interest are posed. The main dependent variables are a set of political knowledge questions surrounding the 2020 election and the coronavirus pandemic. Footnote 7 To reduce the acquiescence bias introduced by simple agree/disagree formats, I use the three-option measure of conspiratorial beliefs designed by Clifford, Kim, and Sullivan (2020), in which respondents choose between an accurate explanation, an inaccurate explanation, or a “not sure” option. Footnote 8

I selected six inaccurate statements through two pilot studies (discussed in Appendix H). Four survey items address specific falsehoods surrounding the 2020 election: that Donald Trump was the actual victor of the 2020 election (Election Winner); that Antifa was responsible for the January 6th riots (Antifa); that rigged voting machines changed individual votes (Votes Changed); and that votes cast by illegal immigrants were responsible for Trump’s narrow losses in key swing states (Immigrant Vote).

I also ask respondents about a belief that has become similarly polarized through elite Republican rhetoric: that the COVID-19 vaccine is unsafe and ineffective (COVID). Footnote 9 Finally, as a “baseline,” I include a general, non-partisan misperception that did not vary across partisan groups in pilot testing: that the moon landing was faked (Moon Landing). Within the battery of inaccurate statements, I also embed a similarly formatted attention check.

From the above discussion, I develop a set of pre-registered Footnote 10 and testable hypotheses about the relationship between partisan identity and misperceptions surrounding the 2020 election and the COVID-19 vaccine. I first hypothesize:

H1: Treatments designed to encourage accuracy-oriented processing will decrease Republicans’ endorsement of false beliefs surrounding the 2020 election and coronavirus pandemic.

I also examine the effectiveness of techniques designed to reduce expressive responding on political Independents. Expressive responding is hypothesized to occur when individuals gain some value from signaling their identity commitments via survey responses – this should be less likely for Independents, who self-consciously choose not to identify as Republicans. However, given that Independent “leaners” behave similarly to avowed partisans, the treatments may have similar effects. I therefore propose my second hypothesis Footnote 11 :

H2: Accuracy pressures and response substitution treatments will reduce the endorsement of misperceptions among Independents.

Results

I collected a sample of 1876 Republicans on February 3rd, 2022, through CloudResearch’s Prime Panel, allowing me to assess expressive responding among Republicans broadly and Trump supporters specifically. Footnote 12 Of the 1876 completed interviews, 380 were dropped due to attention check failures, short completion times, or responses indicating that the respondent was outside the sample of interest (i.e., Democrats or Joe Biden voters), leaving 1496 observations.

The six individual DVs (Votes Changed, Immigrant Vote, Election Winner, Antifa, COVID, and Moon) were transformed into dummies, where one indicates endorsement of the inaccurate statement and zero indicates endorsement of either the empirically true statement or the “not sure” option. In line with the pre-analysis plan, I conduct a confirmatory factor analysis on the proposed Big Lie variable (composed of Votes Changed, Immigrant Vote, Election Winner, and Antifa), as well as on an Omnibus variable that adds the COVID variable to the four Big Lie variables. Footnote 13
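The recoding step above can be sketched in a few lines of Python. This is a minimal illustration, assuming each three-option item is stored as one of the hypothetical codes `"inaccurate"`, `"accurate"`, or `"not_sure"` under hypothetical item names; the replication files may code these differently. The sketch builds simple additive counts for the Big Lie and Omnibus items rather than reproducing the confirmatory factor analysis described in the text.

```python
# Hypothetical codes for the three response options on each item.
INACCURATE, ACCURATE, NOT_SURE = "inaccurate", "accurate", "not_sure"

# Hypothetical item names mirroring the variable labels in the text.
BIG_LIE_ITEMS = ("votes_changed", "immigrant_vote", "election_winner", "antifa")
OMNIBUS_ITEMS = BIG_LIE_ITEMS + ("covid",)


def to_dummy(answer: str) -> int:
    """Return 1 if the inaccurate statement was chosen, else 0 (accurate or 'not sure')."""
    if answer not in (INACCURATE, ACCURATE, NOT_SURE):
        raise ValueError(f"unexpected response code: {answer!r}")
    return 1 if answer == INACCURATE else 0


def additive_count(answers: dict, items: tuple) -> int:
    """Number of misperceptions endorsed among `items` (a simple sum, not a CFA score)."""
    return sum(to_dummy(answers[item]) for item in items)


if __name__ == "__main__":
    respondent = {
        "votes_changed": "inaccurate",
        "immigrant_vote": "accurate",
        "election_winner": "inaccurate",
        "antifa": "not_sure",
        "covid": "inaccurate",
        "moon": "accurate",
    }
    print(additive_count(respondent, BIG_LIE_ITEMS))
    print(additive_count(respondent, OMNIBUS_ITEMS))
```

Treating "not sure" the same as the accurate answer makes each dummy a strict measure of endorsement, which matches the coding rule stated in the text.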

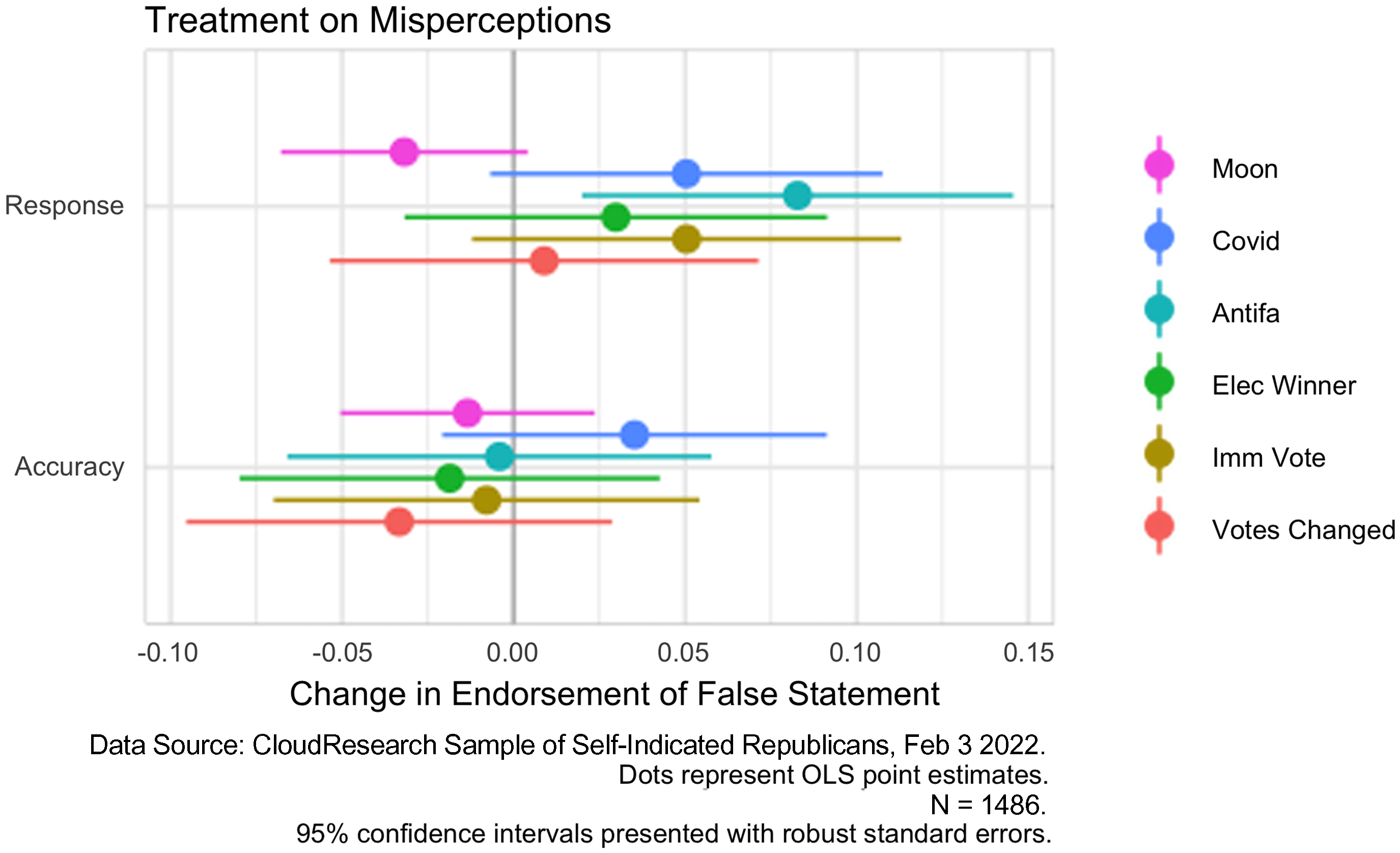

Figure 1 shows the effect of the treatment variables, Response and Accuracy, on support for the dependent factor variables (Big Lie and Omnibus), estimated using OLS regression with robust standard errors. Contrary to expectations, neither treatment is associated with a significant decrease in support for mistaken beliefs surrounding COVID-19 and the 2020 election. In fact, although accuracy pressures appear to have no effect, the response substitution treatment significantly increased endorsement of misperceptions. Moving from the control group to the response substitution group is associated with a 0.23-point increase in the number of mistaken beliefs endorsed on a five-point scale (approximately 4.6% of the scale’s range).
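For readers who want to see the estimator, the following is a minimal sketch of OLS with heteroskedasticity-robust standard errors on two treatment dummies, with control as the omitted category. It assumes the HC1 variant (the text says only “robust standard errors”), no demographic controls, and hypothetical arm labels, and it runs on toy data rather than the study’s data.

```python
import numpy as np


def ols_hc1(y, X):
    """OLS coefficients and HC1 robust standard errors.

    X must already contain an intercept column. HC1 (the sandwich estimator
    scaled by n / (n - k)) is an assumption; the article does not name the variant.
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    xtx_inv = np.linalg.inv(X.T @ X)
    beta = xtx_inv @ (X.T @ y)
    resid = y - X @ beta
    # "Meat" of the sandwich: sum over i of e_i^2 * x_i x_i'.
    meat = (X * (resid ** 2)[:, None]).T @ X
    cov = (n / (n - k)) * xtx_inv @ meat @ xtx_inv
    return beta, np.sqrt(np.diag(cov))


def treatment_design(arms):
    """Intercept plus dummies for the two treatment arms ('control' is omitted)."""
    arms = np.asarray(arms)
    return np.column_stack([
        np.ones(len(arms)),
        (arms == "response").astype(float),
        (arms == "accuracy").astype(float),
    ])


if __name__ == "__main__":
    # Toy data: a misperception count per respondent, four respondents per arm.
    arms = ["control"] * 4 + ["response"] * 4 + ["accuracy"] * 4
    y = [1, 2, 1, 2, 2, 3, 2, 3, 1, 2, 1, 2]
    beta, se = ols_hc1(y, treatment_design(arms))
    for name, b, s in zip(("intercept", "response", "accuracy"), beta, se):
        print(f"{name:>9}: {b:.3f} (SE {s:.3f})")
```

Because control is the omitted category, each treatment coefficient is read as a shift relative to control. As a check on the arithmetic in the text, a 0.23-point shift on a scale whose maximum is five corresponds to 0.23 / 5 = 0.046, or about 4.6% of the scale.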

Figure 1. Effect of Treatment on Factor Misperceptions (Republicans).

I next turn to the effect of the treatments on each statement, shown in Figure 2. The patterns are similar to those for the factor variables. The accuracy pressure treatment does not significantly change the likelihood that an individual endorses any of the six inaccurate statements. However, the response substitution treatment is associated with a significant increase of approximately 8.6 percentage points for the Antifa variable. While the response substitution treatment does not attain conventional significance for any of the other inaccurate statements (Immigrant Vote, Election Winner, Votes Changed, or COVID), the estimated effects are uniformly positive, which produces the significant effect of the treatment on the factor variables.

Figure 2. Effect of Treatment on Individual Misperceptions (Republicans).

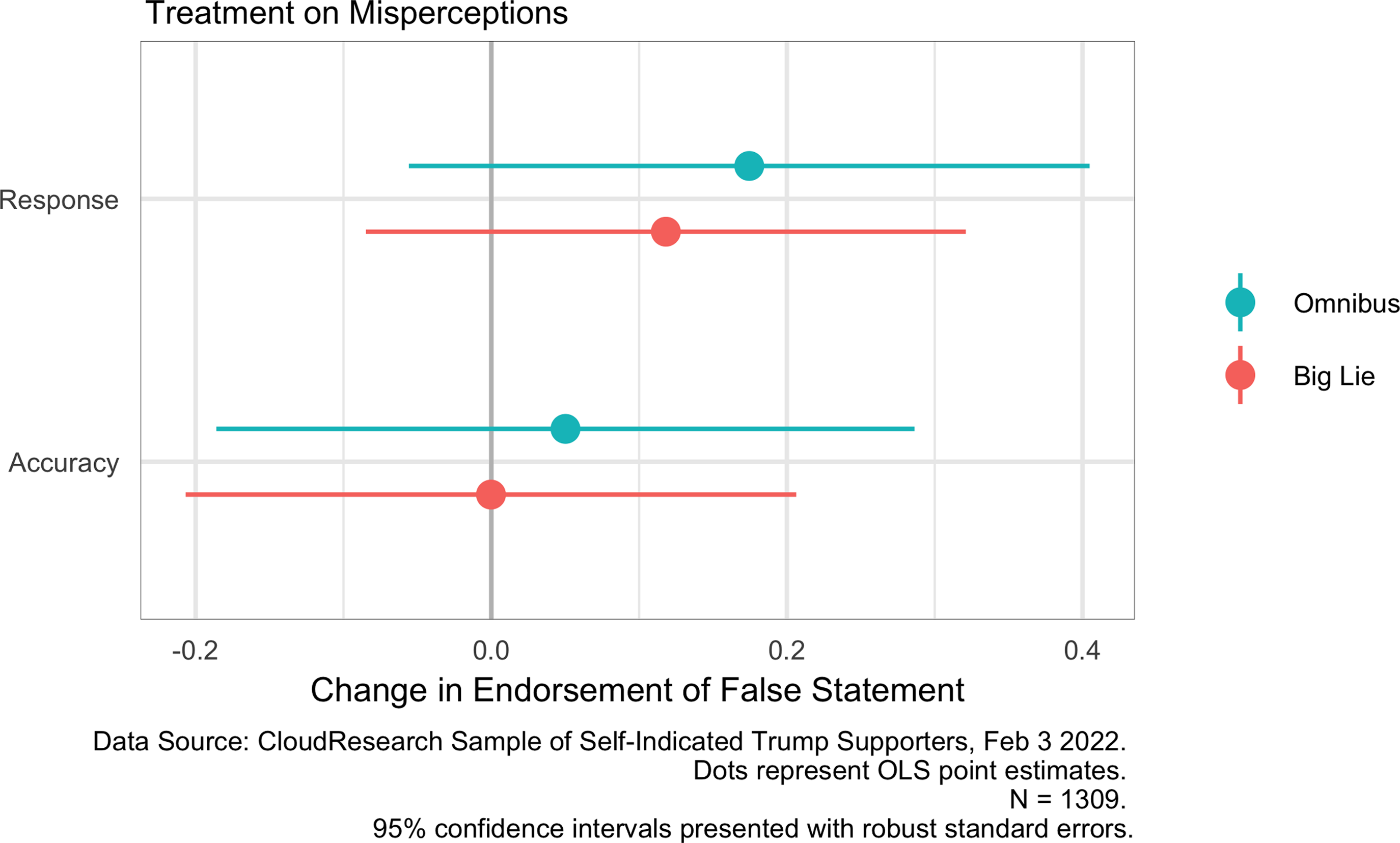

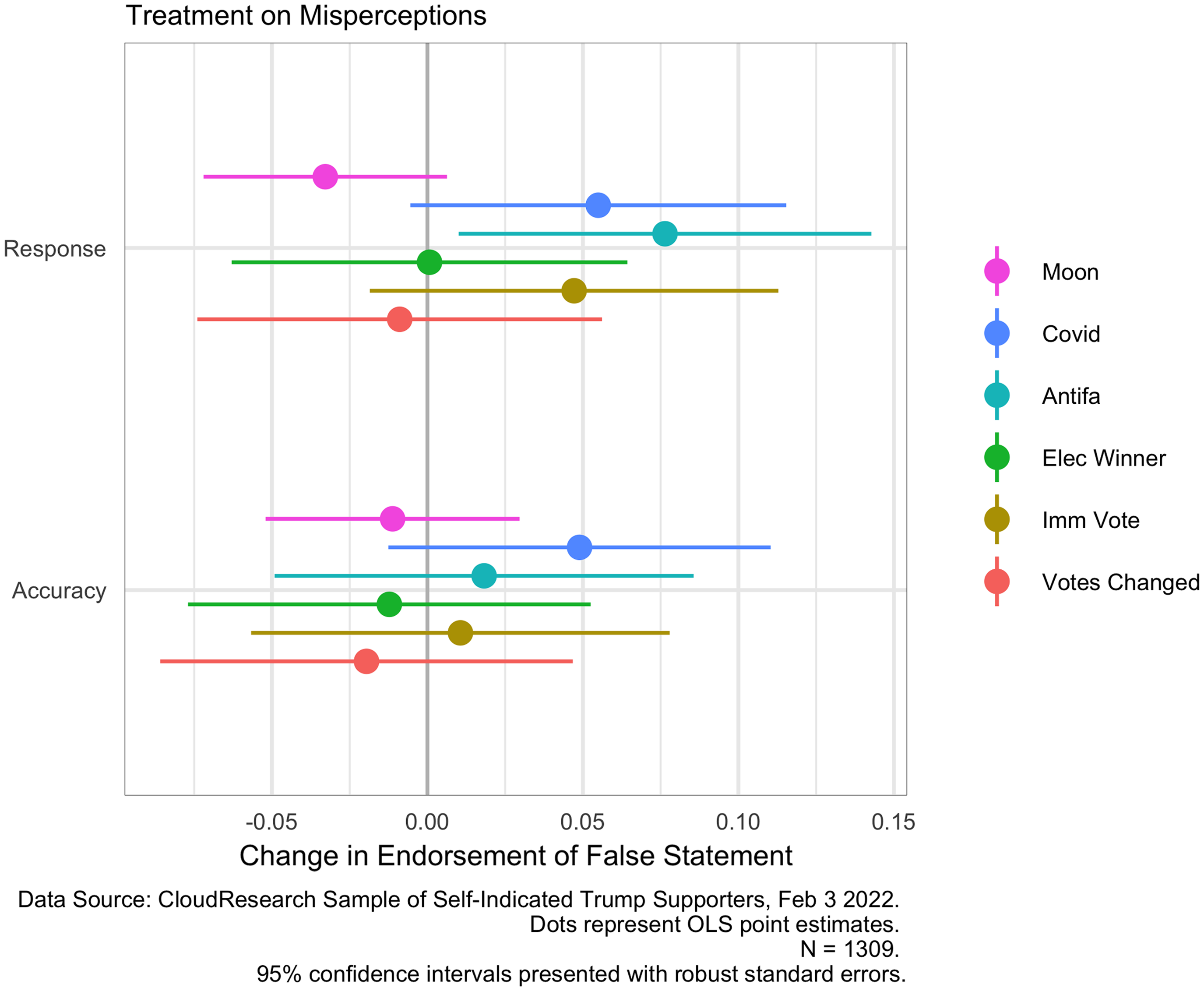

I then re-estimate the main models for a subsample of Republicans who may be especially likely to engage in expressive responding: Trump supporters. Footnote 14 The results of these regressions are shown in Figures 3 and 4. In contrast to the findings for the Republican sample writ large, neither treatment significantly changes support for either factor variable (Big Lie or Omnibus). Still, the individual variables (see Appendix A.8) show a similar underlying pattern: the response substitution treatment is significantly and positively associated (7.6 percentage points) with the belief that Antifa was responsible for the January 6th insurrection.

Figure 3. Effect of Treatment on Factor Misperceptions (Trump Supporters).

Figure 4. Effect of Treatment on Individual Misperceptions (Trump Supporters).

Independents

To assess H2, I collected a sample of self-identified Independents Footnote 15 using CloudResearch’s MTurk Toolkit from March 7th to 11th (N = 1221). After removing individuals outside the sample of interest, those who failed attention checks, and those with completion times under two minutes, I analyze the remaining 592 usable responses.
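The exclusion rules described above can be expressed as a simple filter. This is a minimal sketch with a hypothetical record type and field names; only the two-minute threshold comes from the text, and how the study operationalized “outside the sample of interest” is reduced here to a single assumed boolean flag.

```python
from dataclasses import dataclass

# Threshold from the text: responses completed in under two minutes are dropped.
MIN_DURATION_SECONDS = 120


@dataclass
class SurveyResponse:
    # Hypothetical fields; the replication data may name or code these differently.
    respondent_id: int
    in_target_sample: bool        # assumed flag, e.g. self-identified Independent
    passed_attention_check: bool
    duration_seconds: float


def prune(responses):
    """Keep responses that are in the target sample, pass the attention check,
    and took at least two minutes to complete."""
    return [
        r for r in responses
        if r.in_target_sample
        and r.passed_attention_check
        and r.duration_seconds >= MIN_DURATION_SECONDS
    ]


if __name__ == "__main__":
    toy = [
        SurveyResponse(1, True, True, 300.0),   # kept
        SurveyResponse(2, False, True, 300.0),  # outside target sample
        SurveyResponse(3, True, False, 300.0),  # failed attention check
        SurveyResponse(4, True, True, 95.0),    # under two minutes
    ]
    print([r.respondent_id for r in prune(toy)])
```

Applying all three rules in one pass keeps each exclusion criterion visible and makes the retained count easy to audit against the reported N.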

The modeling strategy is identical to that for the Republican sample: I first create two factor variables (Big Lie and Omnibus) and regress each on the two treatment variables (Response and Accuracy). Models are also estimated for each of the individual variables (Votes Changed, Immigrant Vote, Election Winner, Antifa, COVID, and Moon). Figures 5 and 6 show the effect of the treatment variables on the dependent variables, estimated using OLS regression with robust standard errors and standard demographic controls. Neither treatment significantly shifts endorsement of any misperception, factor or individual, at conventional levels of significance. Footnote 16

Figure 5. Effect of Treatment on Misperceptions (Independents).

Figure 6. Effect of Treatment on Misperceptions (Independents).

Alternate hypothesis: priming

It is possible that the response substitution treatment did not function as intended because it primed respondents’ identities as Trump supporters. To test this, I conduct an additional, non-pre-registered analysis regressing recalled vote for Trump on the experimental treatment groups for the Republican sample. Because the experimental groups are randomly assigned, they should not be significantly associated with reported vote for Donald Trump (measured post-treatment). By contrast, if the treatment primes partisan identity, assignment to the response substitution treatment should be associated with an increased likelihood of reporting a vote for Trump. I find this to be the case: the response substitution treatment is associated with an approximately 4.2 percentage point increase in reported Trump vote (see Appendix A.9).
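The logic of this check can be sketched as follows. With a binary outcome and only treatment dummies on the right-hand side, the OLS (linear probability model) coefficient on each dummy equals the difference in the share reporting a Trump vote between that arm and the control arm. This minimal sketch assumes no covariates (the specification in Appendix A.9 may include them), uses hypothetical arm labels, and runs on toy data.

```python
from statistics import mean


def arm_differences(votes_by_arm, baseline="control"):
    """Difference in the share of reported Trump votes (coded 1/0) between each
    arm and the baseline arm. With only arm dummies as regressors, these equal
    the linear probability model coefficients on those dummies."""
    base_share = mean(votes_by_arm[baseline])
    return {
        arm: mean(votes) - base_share
        for arm, votes in votes_by_arm.items()
        if arm != baseline
    }


if __name__ == "__main__":
    toy = {
        "control": [1, 1, 0, 1],
        "response": [1, 1, 1, 1],
        "accuracy": [1, 0, 1, 1],
    }
    print(arm_differences(toy))
```

Under successful randomization, these differences should be near zero in expectation; a systematic positive difference for one arm is what the priming account predicts.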

Discussion and conclusion

Democracies are systems in which parties lose elections. For democracies to flourish, however, parties must not only lose elections but also admit that they lost fair and square. These findings suggest that for large swaths of Republicans, this is no longer the case. Incentives designed to limit expressive responding (accuracy pressures and response substitution treatments) were universally ineffective in reducing support for inaccurate beliefs surrounding the 2020 election and the COVID-19 pandemic. In fact, among Republicans, response substitution treatments actually increased endorsement of misperceptions.

There are two possible interpretations of this pattern of findings. First, the treatments may be ineffective because respondents genuinely hold these mistaken beliefs, leaving no expressive responding to reduce. Alternatively, the experimental treatments may simply have failed to work as intended. The accuracy pressure should theoretically reduce expressive responding by increasing attention to the task. An exploratory (non-pre-registered) analysis shows that median response times were longer in the accuracy pressure treatment for both Republicans (250 vs. 224 s) and Independents (224 vs. 209 s); however, a two-sample Brown–Mood median test shows that the difference was significant only for the Republican sample. Footnote 17 Thus, there is some evidence that these pressures did increase respondents’ cognitive engagement, at least among Republicans.
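A two-sample Brown–Mood median test can be written with the standard library alone. This minimal sketch follows a common convention (also the default in SciPy’s `scipy.stats.median_test`) of counting values equal to the pooled median as “at or below” and applying a Yates continuity correction to the 2×2 table; whether the study used the same conventions is an assumption. The durations in the usage block are toy values, not study data.

```python
import math


def brown_mood_median_test(a, b, yates=True):
    """Two-sample Brown–Mood (Mood's) median test.

    Returns (chi-square statistic, p-value, pooled median, 2x2 table), where the
    table rows are [above median, at or below median] and columns are [a, b].
    """
    a, b = list(a), list(b)
    pooled = sorted(a + b)
    n = len(pooled)
    mid = n // 2
    grand_median = pooled[mid] if n % 2 else (pooled[mid - 1] + pooled[mid]) / 2
    table = [
        [sum(x > grand_median for x in a), sum(x > grand_median for x in b)],
        [sum(x <= grand_median for x in a), sum(x <= grand_median for x in b)],
    ]
    row_totals = [sum(r) for r in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("degenerate table: an empty sample or all values on one side")
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            deviation = abs(table[i][j] - expected)
            if yates:
                deviation = max(deviation - 0.5, 0.0)  # continuity correction
            chi2 += deviation ** 2 / expected
    # Survival function of a chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value, grand_median, table


if __name__ == "__main__":
    control_secs = [180, 200, 215, 230, 235, 260]
    accuracy_secs = [210, 245, 255, 265, 270, 300]
    chi2, p, med, table = brown_mood_median_test(control_secs, accuracy_secs)
    print(f"pooled median={med}, table={table}, chi2={chi2:.3f}, p={p:.3f}")
```

The test compares only how many observations in each group fall above the pooled median, so it is robust to the long right tail typical of survey completion times.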

There are two further considerations regarding the effectiveness of the response substitution treatment. First, it is possible that expressive responding is motivated not by positive feelings toward Donald Trump, but by negative feelings toward Joe Biden or the Democrats. Future research should address this possibility by allowing individuals to express negative opinions toward the outgroup. Second, response substitution treatments may have primed individuals’ partisan identities, thus driving the observed backfire effect. I find suggestive evidence for this: assignment to the response substitution treatment (which explicitly references Trump) increases the likelihood that respondents report having voted for Trump in the post-treatment battery.

Why might the response substitution treatment have served as a prime in this experiment but not in others (e.g., Nicholson et al. 2016; Yair and Huber 2021)? First, beliefs surrounding the Big Lie may be so strongly held and so closely tied to political identities that “signaling” them is insufficient to diminish partisan attachment to these misperceptions. Alternatively, specific design choices may explain the divergent results. For instance, Yair and Huber (2021) first showed respondents a photo of individuals along with information about their partisan identification, then asked respondents to comment on their values, and finally asked an open-ended question about their perceived attractiveness. In this experiment, respondents were first asked about their opinions on Donald Trump and the coronavirus through multiple-choice questions, then introduced to a set of misperceptions and asked to indicate their level of support for these statements. It is possible that response substitution treatments work only when individuals can respond after exposure to the topic of interest, and/or when they can do so through open-ended questions. Future research could directly test these possibilities to determine when response substitution treatments are most effective.

Nonetheless, the fact that two treatments shown to reliably reduce expressive responding did not reduce endorsement of misperceptions surrounding the 2020 election fits with a larger body of research (Graham and Yair 2022; Theodoridis and Cuthbert 2022) finding that respondents are sincere when answering questions about misinformation surrounding the Big Lie. Across these studies, which use a series of pre-registered survey experiments that together employ accuracy pressures, response substitution treatments, list experiments, and financial experiments, evidence for expressive responding is extremely limited. Overall, the consistent absence of evidence for expressive responding surrounding the Big Lie across multiple studies, treatments, samples, and points in time suggests these anti-democratic beliefs are strongly held.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/XPS.2022.33.

Data availability statement

The data, code, and any additional materials required to replicate all analyses in this article are available at the Journal of Experimental Political Science Dataverse within the Harvard Dataverse Network, at: doi.org/10.7910/DVN/KTTNGM.

Conflicts of interest

The author declares no conflict of interest.

Ethics statement

This project was approved by the University of Florida Institutional Review Board (#202102817). The author affirms that the research conducted adheres to the American Political Science Association’s Principles and Guidance for Human Subjects Research.