Privileged attention to biological motion, including body movement and face movement (e.g., gaze direction and facial expression), is assumed to be a prerequisite for identifying others’ affective and mental states in typical development (Blake & Shiffrar, 2007; Pavlova, 2012; Todorova et al., 2019). However, this visual attention to biological motion can be reduced or absent in people with autism spectrum disorder (ASD) (Klin et al., 2009; Mason et al., 2021), a lifelong and highly prevalent neurodevelopmental disorder characterized by pronounced impairments in social communication and the presence of restricted interests and repetitive behaviors (American Psychiatric Association, 2013). A reduction in this visual attention possibly contributes to autistic people’s impairment in understanding body and facial movements, resulting in the emergence of social dysfunction (Kaiser & Shiffrar, 2009; Pavlova, 2012). Hence, studies need to delineate the mechanism underlying reduced visual attention to biological motion in autistic people.

Much previous work has studied people’s visual attention to body movement using point-light animations, which portray biological motion with points located on the major joints. Studies using such a design have found that autistic people, even toddlers as young as 15 months, demonstrated a reduced visual preference for biological motion over scrambled and non-biological motion, and shorter looking times to biological motion than non-autistic people (Klin et al., 2009; Mason et al., 2021; Todorova et al., 2019). Besides body movement, face movement (e.g., movements of the eyes or mouth and emotional expression), another form of human biological motion, also plays a critical role in social interactions by providing a wealth of dynamic information about people’s interests and mental states. For example, gaze shifting provides information about other people’s focus of interest and communicative intention (Alexandra et al., 2007; Bayliss et al., 2006; Soussignan et al., 2015). Attending to mouth or lip movements along with produced language can help speech perception, especially in noisy environments (Banks et al., 2015; Begau et al., 2021). Facial expression, the most complicated face movement, requiring movements of many facial features such as the eyes and mouth, signals the affective states of others (Dawel et al., 2017). Given the close relationship between face movement and social signals, it is intriguing to examine how autistic people perceive and understand face movements.

Most studies in this field have focused on autistic people’s attention to communication-related face movements when participants were watching actors speaking or engaging with them (e.g., Feng et al., 2021; Jones & Klin, 2013; Shic et al., 2020; Wang et al., 2020). Autistic children have been found to be less likely to follow others’ gazes (Dawson et al., 2004; Wang et al., 2020) and to look less at the eyes or mouth than non-autistic children when a face with a direct gaze was speaking (Feng et al., 2021; Shic et al., 2020). Even infants later diagnosed with ASD showed less eye-looking time than non-autistic infants when they watched an actor engaging with them (Jones & Klin, 2013). However, these studies used stimuli with high communicative intent where gaze and speech cues were communication-related, obscuring the separate contributions of basic face movement and social salience to guiding attention. Therefore, it is unclear what drives autistic people’s limited attention to a speaker or engagement with a person’s eyes or mouth. One possibility may lie in the insensitivity to social saliency in ASD, given autistic people’s diminished activation of social brain areas involved in processing human voices, gaze, emotion, and intention (Abrams et al., 2019; Chevallier et al., 2012; Pelphrey et al., 2004, 2011; Pitskel et al., 2011). Alternatively, limited attention to moving eyes and mouth in ASD might be determined by an insensitivity to basic face movements. In the perception of body movement, people should first identify simple point-light displays of body movement, and then extract higher-order information from these displays, such as emotional content (Hubert et al., 2007; Parron et al., 2008). Attention to communication-related face movement may also have two hierarchical levels: people should first detect basic face movements and then extract the social information of those movements. As such, autistic people’s reduced visual attention to face movement may be due to their insensitivity in guiding their attention to the basic face movement. In other words, if autistic people have reduced visual attention to the basic face movement, it likely leads to dysfunction in subsequent processing. The current study aimed to test whether autistic people showed reduced visual attention to the simple open-closed movement of the eyes or mouth, rather than to complicated face movements carrying higher-order social meanings (e.g., joint attention and facial expression).

To achieve this goal, we needed to minimize the communicative intention of the face movement, rather than using faces with gaze shifting to an object or an articulating mouth as stimuli. Our study used face stimuli with eyes blinking or mouth moving silently (the actor continuously opened and closed their eyes or mouth). By comparing attention to the eyes or mouth of the dynamic face with attention to the same regions of the same static face, we could test whether autistic people could guide their attention to basic face movement. Our study used a free-viewing eye-tracking task in which participants were only required to view the videos without any additional task. Such a free-viewing task, without complex verbal instructions and relying on eye movements as the response modality, could be administered to participants across a wide age range. We further assessed the age effect on visual attention to basic face movement in a large sample (277 participants in total) ranging from preschoolers to teenagers (3–17 years old). This is essential, given concerns that some inconsistent findings in the ASD literature stem from small sample sizes as well as variable and limited age ranges across studies.

Considering that autistic people can usually identify simple point-light displays of body movement (Hubert et al., 2007), we hypothesized that biological movement would increase attention to social areas that are moving (eyes blinking and mouth moving) in comparison to attention to those areas in a static image, for both autistic and non-autistic participants. Concerning the age effect, little has been reported about changes in face scanning or attention to biological motion in ASD compared to the non-ASD group over childhood. Some meta-analyses suggested that diminished social attention remains constant across development (from infancy to adulthood) in autistic people (Chita-Tegmark, 2016; Frazier et al., 2017). A recently published paper found that autistic children showed less preference for biological motion than non-autistic children, and the magnitude of the preference was stable across development (6 to 30 years old; Mason et al., 2021). Based on these findings, we further hypothesized that autistic participants would show less attention to basic face movement than non-autistic participants, and that this difference would persist across ages.

Method

Participants

A total of 292 children and adolescents aged 3 to 17 years, including 157 with ASD and 135 non-autistic participants, completed the eye-tracking task. All children were Han Chinese. Twelve autistic and three non-autistic participants were excluded from our analysis due to poor eye movement data quality (see “Data Analysis” section for details), resulting in 277 participants contributing valid data. This final sample included 145 autistic children and adolescents (126 boys) and 132 non-autistic children and adolescents (106 boys). Autistic participants were recruited from a psychiatric hospital. They were evaluated by two professional child psychiatrists to obtain a clinical diagnosis of ASD according to DSM-5 (American Psychiatric Association, 2013) criteria before participating in this study. However, information about whether they had received an earlier diagnosis was not available. When they participated in this study, the participants were receiving, or had previously received, systematic interventions to improve their social skills, with 91% receiving applied behavior-analytic interventions. The exclusion criteria were the following: (1) other common mental disorders, such as schizophrenia, mood disorder, anxiety disorders, attention deficit hyperactivity disorder, and tic disorders (participants with developmental delays or cognitive impairment were not excluded), as determined by clinical evaluation, including clinical interviews, caregiver reports of participants’ history, and psychiatric evaluations (e.g., a semi-structured interview based on the Kiddie Schedule for Affective Disorders and Schizophrenia); (2) physical diseases that affected eye-tracking; and (3) use of psychotropic drugs in the past month. Non-autistic children and adolescents were recruited from local communities by online advertisements as well as from local schools. These non-autistic children and adolescents did not have any mental disorders. The other exclusion criteria were the same as for the autistic group. The chronological age of the two groups was not matched (M_ASD = 6.81 years, SD_ASD = 2.90 vs. M_non-ASD = 7.98 years, SD_non-ASD = 3.22), as shown by the Welch two-sample t-test conducted in R software (R Core Team, 2015), t (264.52) = −3.15, p = .002. Figure S1(A) in the supplementary material shows the age distribution for the two groups. Therefore, age was included as a covariate in our data analysis.
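The age comparison above can be approximately reproduced from the reported summary statistics alone. The following is a minimal sketch, assuming only the rounded group means, standard deviations, and final sample sizes given in the text; the function name `welch_t` is illustrative and not part of the authors' R analysis. Because the inputs are rounded, the result should be close to, but not identical with, the reported t (264.52) = −3.15.

```python
import math

def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1 mean
    v2 = sd2 ** 2 / n2  # squared standard error of group 2 mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# Rounded summary statistics from the text: ASD (n = 145) vs. non-ASD (n = 132).
t, df = welch_t(6.81, 2.90, 145, 7.98, 3.22, 132)
print(f"t({df:.2f}) = {t:.2f}")
```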

Ethical considerations

The authors assert that all procedures contributing to this work comply with the ethical standards of the relevant national and institutional committees on human experimentation and with the Helsinki Declaration of 1975, as revised in 2008. The study was approved by the sponsoring university’s Ethical Committee. All participants’ parents provided written consent, and the children or adolescents provided oral consent if they could before the experiment.

Materials

The stimuli consisted of three videos depicting the same Han Chinese female model (23 years old with light makeup) with the eyes blinking continuously, the mouth reading meaningless numbers silently, and the upper body remaining motionless for seven seconds (Figure 1 and Movies 1–3 in the supplementary materials). The videos were silent, with a resolution of 1280 × 720 pixels, and were presented in the center of a 17-inch LCD monitor (1366 × 768 pixels resolution). The video frame rate was 25 frames/second.

Figure 1. One frame of the stimuli video and sample AOIs (Areas of interest; red = eye area, green = face area, blue = mouth area). Participants did not see the AOIs during the experiment. The model represented in the figure approved her images and videos for publication.

A Tobii Pro X3-120 eye tracker (Tobii Technology, Stockholm, Sweden; sampling rate: 120 Hz) was used to record children’s eye movement, and Tobii Studio software (Tobii Technology, Stockholm, Sweden) was used to present the stimuli.

Procedures

Participants sat about 60 cm away from a monitor. For children who were too young to keep quiet and still, parents were asked to sit behind them (out of view of the eye tracker) and remain silent. Before the experiment, participants were asked to pass a five-point calibration procedure. They had to fixate on the five red calibration points appearing sequentially in the center and four corners of the screen. We visually checked the calibration results provided by Tobii Studio and started the experiment only after each participant’s calibration error was smaller than 1.5° visual angle.

After the calibration, participants viewed 12 randomly presented videos/trials, with each video type (blinking, moving mouth, and static) repeated four times. The intertrial interval was 1000–2000 ms with a black screen. The Tobii eye tracker recorded children’s eye movements during the whole experiment.

Eye movement data analysis

Data preprocessing

Fixation and sample data were exported from the Tobii Studio software. Fixations were calculated based on the I-VT fixation filter (Olsen, 2012) with the following parameter settings in the Tobii Studio software: (1) Missing sample data (validity code > 0) that had a gap shorter than 75 ms were filled in using linear interpolation. Figure S2 in the supplementary material shows the histogram of the interpolation proportion. It was higher for the ASD group (M = 9.77%, SD = 8.93%) than the non-ASD group (M = 5.75%, SD = 7.19%), as revealed by a Wilcoxon rank sum test, W = 13,138, p < .001. (2) The gaze sample was averaged across the two eyes when data validity was high for both (validity code = 0) and included one eye if validity was low for the other eye (validity code > 0). (3) The velocity threshold was set at 30°/s. (4) Fixations close spatially and temporally (<50 pixels, <75 ms) were merged to prevent longer fixations from being separated into shorter fixations because of data loss or noise. (5) Fixations shorter than 60 ms were discarded. Areas of interest (AOIs, see Figure 1) were defined around the eyes (a rectangle of 7.51° × 1.97° visual angle), mouth (a rectangle of 4.44° × 1.97° visual angle), and face contour (an ellipse with semiaxes of 8.41° and 10.92° visual angle). The definition of these AOIs followed previous studies of face scanning in autism (e.g., Shic et al., 2014; Wang, Hoi, Wang, Song, et al., 2020; Wang, Hoi, Wang, Lam, et al., 2020).
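The core of a velocity-threshold (I-VT) classification can be illustrated with a short sketch. This is a deliberately simplified illustration, not the Tobii Studio implementation: it covers only the 30°/s velocity threshold and the 60 ms minimum duration, and it omits gap interpolation, eye averaging, and fixation merging. It also assumes gaze positions are already expressed in degrees of visual angle and that timestamps are strictly increasing; the function name `ivt_fixations` and the synthetic data are hypothetical.

```python
import math

def ivt_fixations(samples, velocity_threshold=30.0, min_duration=0.060):
    """Classify gaze samples into fixations by point-to-point velocity.

    samples: list of (t_seconds, x_deg, y_deg), sorted by time.
    Returns a list of (start_t, end_t) fixation intervals.
    """
    fixations = []
    start = end = None
    for i in range(1, len(samples)):
        t0, x0, y0 = samples[i - 1]
        t1, x1, y1 = samples[i]
        velocity = math.hypot(x1 - x0, y1 - y0) / (t1 - t0)  # deg/s
        if velocity < velocity_threshold:
            if start is None:
                start = t0  # a new fixation begins
            end = t1
        elif start is not None:
            fixations.append((start, end))  # a saccade closes the fixation
            start = None
    if start is not None:
        fixations.append((start, end))
    # Discard fixations shorter than the minimum duration.
    return [(s, e) for s, e in fixations if e - s >= min_duration]

# Synthetic 120 Hz data: two stable periods and one very short one,
# separated by 5-degree jumps that exceed the velocity threshold.
dt = 1 / 120
positions = [(0.0, 0.0)] * 12 + [(5.0, 0.0)] * 12 + [(10.0, 0.0)] * 3
samples = [(i * dt, x, y) for i, (x, y) in enumerate(positions)]
fix = ivt_fixations(samples)
print(fix)
```

In this synthetic example the final three-sample period lasts well under 60 ms, so only the first two stable periods survive as fixations.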

To ensure data quality, we considered trials with more than 65% missing gaze data and trials in which participants did not look at the face AOI as unreliable and excluded them. After excluding these trials, we further identified outliers, defined as ≥ 1.5 times the interquartile range, based on the total face-looking time. Finally, after excluding these outliers, each participant was required to retain at least one trial per face condition to be included in the final sample. If a participant’s data did not fulfill this criterion, this participant was excluded from further analyses. Twelve (7.6%) autistic and three (2.2%) non-autistic participants were removed for insufficient data, resulting in 145 autistic and 132 non-autistic participants. In this final sample, 76 (4.4%) trials of the ASD group and 17 (1.1%) trials of the non-ASD group were removed for not looking at the face AOI. An additional three (0.2%) trials of the ASD group and 15 (0.9%) trials of the non-ASD group were removed as outliers. Including these outliers did not change the statistical significance of the effects reported in the results section. The average number of valid trials was 10 for the ASD group and 11 for the non-ASD group.
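The trial-level exclusion steps can be sketched as follows. The text does not specify exactly how the interquartile-range rule was applied, so this sketch assumes the common Tukey-fence reading (values at or beyond 1.5 × IQR below the first quartile or above the third quartile count as outliers) and applies it to whatever pool of trials is passed in. The field names `missing` and `face_time` and the function `filter_trials` are hypothetical.

```python
import statistics

def filter_trials(trials, max_missing=0.65, k=1.5):
    """Drop unreliable trials, then drop face-looking-time outliers.

    trials: list of dicts with 'missing' (proportion of missing gaze data)
    and 'face_time' (total looking time on the face AOI, in seconds).
    """
    # Step 1: exclude trials with too much missing data or no face looking.
    valid = [t for t in trials
             if t["missing"] <= max_missing and t["face_time"] > 0]
    if len(valid) < 2:
        return valid  # quartiles are undefined for fewer than two trials
    # Step 2: Tukey fences on total face-looking time (assumed reading).
    times = [t["face_time"] for t in valid]
    q1, _, q3 = statistics.quantiles(times, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [t for t in valid if low < t["face_time"] < high]

trials = [
    {"missing": 0.10, "face_time": 5.0},
    {"missing": 0.05, "face_time": 5.2},
    {"missing": 0.20, "face_time": 4.8},
    {"missing": 0.15, "face_time": 5.1},
    {"missing": 0.10, "face_time": 4.9},
    {"missing": 0.30, "face_time": 0.5},   # unusually short face looking
    {"missing": 0.80, "face_time": 5.0},   # too much missing data
    {"missing": 0.10, "face_time": 0.0},   # never looked at the face
]
kept = filter_trials(trials)
print(len(kept), "trials kept")
```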

Overall proportional eye-looking and mouth-looking time

We calculated the proportional eye- or mouth-looking time by dividing the total fixation duration on the AOIs of the eyes or mouth by the total fixation duration on the AOI of the face, respectively. To test whether the proportional eye-looking or mouth-looking time varied for different groups (ASD and non-ASD) and conditions (blink, moving mouth, and static), we used an ANOVA with Condition as the within-subject variable, Group as the between-subject variable, and Age as a covariate to be controlled. Taking advantage of a relatively broad chronological age range, we further evaluated whether Age interacted with Group and Condition. Age was mean-centered across the overall sample in the above and following models.
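The proportional looking-time measure defined above is a simple ratio, sketched here for a single trial. The sketch assumes that each fixation has already been labeled with the AOIs it falls in, and that the eye and mouth AOIs lie inside the face AOI so that an eye fixation also counts as a face fixation; the data layout and the function name `proportional_looking_time` are hypothetical.

```python
def proportional_looking_time(fixations, aoi):
    """Fixation time on `aoi` divided by fixation time on the face AOI.

    fixations: list of dicts with 'duration_ms' and 'aois' (set of AOI names).
    Returns None when the participant never fixated the face.
    """
    face_total = sum(f["duration_ms"] for f in fixations if "face" in f["aois"])
    if face_total == 0:
        return None
    aoi_total = sum(f["duration_ms"] for f in fixations if aoi in f["aois"])
    return aoi_total / face_total

fixations = [
    {"duration_ms": 400, "aois": {"face", "eyes"}},
    {"duration_ms": 200, "aois": {"face", "mouth"}},
    {"duration_ms": 400, "aois": {"face"}},   # on the face, outside eyes and mouth
    {"duration_ms": 500, "aois": set()},      # off the face; ignored in both sums
]
eye_prop = proportional_looking_time(fixations, "eyes")
mouth_prop = proportional_looking_time(fixations, "mouth")
print(eye_prop, mouth_prop)
```

Normalizing by face-looking time rather than by trial duration means that time spent looking away from the face does not lower the eye or mouth proportions.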

Temporal course of eye-looking and mouth-looking time

While overall looking time is an intuitive and easily interpretable metric, it fails to describe the dynamic nature of social attention. Therefore, such an approach may not tell the moment-to-moment difference between groups and conditions in viewing patterns. It was unclear whether the differences were driven by consistent effects over time or local effects confined to particular times.

To examine how the looking time changed over time, we used a data-driven method based on a moving-average approach with a cluster-based permutation test to control the family-wise error rate (Maris & Oostenveld, 2007; Wang et al., 2020). For each trial, we segmented the sample data into epochs of 0.25 s (30 samples) with a step of 1/120 s (one sample). We calculated the eye-looking/mouth-looking time in each epoch, resulting in a time series of eye-looking/mouth-looking time. We then conducted an ANOVA for each time epoch with Condition as the within-subject variable, Group as the between-subject variable, and Age as a covariate to be controlled. We also allowed Age to interact with Group and Condition to test the effects of Age. As adjacent epochs likely exhibit the same effect, we controlled the family-wise error rate using the cluster-based permutation test.
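The epoching step can be sketched as a sliding window over per-sample AOI flags. This minimal sketch assumes a full 7 s trial recorded at 120 Hz with no dropped samples (840 samples) and a binary flag per sample indicating whether gaze was on the AOI; the function name `sliding_looking_time` and the synthetic trial are hypothetical.

```python
def sliding_looking_time(on_aoi, window=30, step=1, sample_rate=120):
    """Looking time (in seconds) on an AOI within each sliding epoch.

    on_aoi: list of 0/1 flags, one per gaze sample.
    window: epoch length in samples (30 samples = 0.25 s at 120 Hz).
    step: shift between consecutive epochs, in samples.
    """
    return [
        sum(on_aoi[i:i + window]) / sample_rate
        for i in range(0, len(on_aoi) - window + 1, step)
    ]

# Synthetic trial: gaze on the AOI for the first half, off it afterwards.
on_aoi = [1] * 420 + [0] * 420
series = sliding_looking_time(on_aoi)
print(len(series), series[0], series[-1])
```

With a one-sample step, consecutive epochs share 29 of their 30 samples, which is why neighboring epochs are strongly correlated and a cluster-based correction is appropriate.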

The statistical analyses of eye/mouth-looking time were conducted in R software (R Core Team, 2015). We used the “aov_ez” function in the “afex” package to run the ANOVAs. When interaction effects were found, we used the “emmeans” and “emtrends” functions in the “emmeans” package to perform post hoc tests with the default Tukey method for adjusting p-values for multiple comparisons. Moreover, we used the “clusterlm” function with default parameter settings in the “permuco” package (Frossard & Renaud, 2021) to conduct the temporal course analysis.
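The logic of a cluster-mass permutation test can be illustrated with a much simpler case than the authors' model. The sketch below is not the “clusterlm” procedure: it assumes a plain two-group comparison at each time point (a squared Welch t statistic standing in for the ANOVA F), a fixed hypothetical threshold of 4.41, and permutation of group labels. All function names and the synthetic data are illustrative.

```python
import random
import statistics

def t_squared(a, b):
    """Squared Welch t statistic for two lists of values (F-like)."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    if va + vb == 0:
        return 0.0
    return (statistics.mean(a) - statistics.mean(b)) ** 2 / (va + vb)

def cluster_masses(stats, threshold):
    """Sum the statistic within each run of adjacent supra-threshold points."""
    masses, current = [], 0.0
    for s in stats:
        if s > threshold:
            current += s
        elif current > 0:
            masses.append(current)
            current = 0.0
    if current > 0:
        masses.append(current)
    return masses

def cluster_permutation_test(group_a, group_b, threshold, n_perm=200, seed=0):
    """Return the largest observed cluster mass and its permutation p-value.

    group_a, group_b: lists of per-participant time series of equal length.
    """
    rng = random.Random(seed)
    n_time = len(group_a[0])

    def max_mass(a, b):
        stats = [t_squared([p[i] for p in a], [p[i] for p in b])
                 for i in range(n_time)]
        return max(cluster_masses(stats, threshold), default=0.0)

    observed = max_mass(group_a, group_b)
    pooled = group_a + group_b
    exceed = 0
    for _ in range(n_perm):
        shuffled = pooled[:]
        rng.shuffle(shuffled)  # reassign participants to groups at random
        if max_mass(shuffled[:len(group_a)], shuffled[len(group_a):]) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)

# Synthetic data: group A is shifted upward during time points 5 to 12.
noise = random.Random(1)
def make_group(effect, n=10, n_time=20):
    return [[(effect if 5 <= i < 13 else 0.0) + noise.gauss(0, 0.3)
             for i in range(n_time)] for _ in range(n)]

group_a, group_b = make_group(1.0), make_group(0.0)
observed, p = cluster_permutation_test(group_a, group_b, threshold=4.41)
print(observed, p)
```

Comparing each observed cluster against the distribution of the largest cluster mass under permutation is what controls the family-wise error rate across the many overlapping epochs.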

Gaze difference map

The above AOI approaches were based on the prior hypothesis that the two groups may differ in eye/mouth-looking time. We further used a data-driven approach to reveal group differences in any part of the face region (in pixel space) without the AOI restriction. In this approach, we used z-tests to compare the two groups’ proportional looking time heatmaps at the pixel level for each condition. The cluster-based permutation test was used to control the family-wise error rate (Maris & Oostenveld, 2007). See the detailed method in the supplementary material.
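A pixel-wise group comparison of this kind can be sketched as a map of two-sample z statistics. The exact procedure is described in the supplementary material, which is not reproduced here, so the following is only an assumed form: each participant contributes one proportional looking-time heatmap, and the statistic at each pixel is the difference in group means divided by its standard error. The function name `z_map` and the tiny example maps are hypothetical.

```python
import math
import statistics

def z_map(maps_a, maps_b):
    """Pixel-wise two-sample z statistic (group A minus group B).

    maps_a, maps_b: lists of per-participant heatmaps, each a 2-D list
    of the same shape.
    """
    rows, cols = len(maps_a[0]), len(maps_a[0][0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            a = [m[r][c] for m in maps_a]
            b = [m[r][c] for m in maps_b]
            se = math.sqrt(statistics.variance(a) / len(a)
                           + statistics.variance(b) / len(b))
            # Pixels with no variability get z = 0 instead of dividing by zero.
            out[r][c] = (statistics.mean(a) - statistics.mean(b)) / se if se > 0 else 0.0
    return out

# Tiny 1 x 2 "heatmaps": groups differ at the first pixel only.
maps_a = [[[0.20, 0.5]], [[0.30, 0.5]], [[0.25, 0.5]]]
maps_b = [[[0.50, 0.5]], [[0.60, 0.5]], [[0.55, 0.5]]]
z = z_map(maps_a, maps_b)
print(z)
```

Negative values mark pixels where group B looked proportionally more than group A; adjacent supra-threshold pixels would then be grouped into clusters for the permutation test.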

Results

Biological motion increased visual attention to the eyes, but it was weaker in autistic than non-autistic participants

Overall proportional eye-looking time

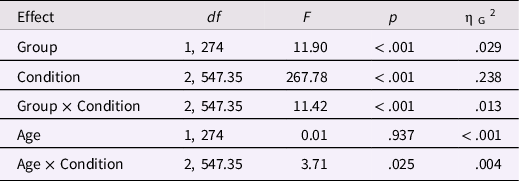

The results are shown in Figure 2a and Figure 2b. Table S1 in the supplementary material provides the mean and standard deviation of overall proportional eye-looking time for different groups and conditions. When Age was controlled (Table 1 presents full statistical results), the ANOVA showed that both the main effects of Group and Condition were significant, F (1, 274) = 11.90, p < .001, ηG² = .029, and F (2, 547.35) = 267.78, p < .001, ηG² = .238, respectively. The interaction between Group and Condition was also significant, F (2, 547.35) = 11.42, p < .001, ηG² = .013. Post hoc tests revealed that autistic participants looked less at the eyes than non-autistic participants in the blink and static conditions, t (274) = −4.29, p < .001 and t (274) = −3.11, p = .002, respectively, but not in the moving-mouth condition, t (274) = −0.44, p = .663. Post hoc tests also revealed a ranking order among the three conditions for both the autistic and non-autistic groups: participants looked at the eyes longest in the blink condition, and they looked at the eyes longer in the static than in the moving-mouth condition, all ps < .001.

Figure 2. Overall proportional eye-looking time and mouth-looking time. Boxplots showing the overall proportional eye-looking time (a) and mouth-looking time (c) of the autistic and non-autistic groups in viewing different types of faces (blinking, moving mouth, and static). Autistic participants looked less at the eyes than non-autistic participants when viewing a face blinking continuously and a static face, but not a face with a moving mouth. Both groups’ eye-looking time ranked in descending order from viewing the blinking face, to the static face, to the mouth-moving face. Autistic participants looked less at the mouth than non-autistic participants when viewing a face with a moving mouth, but not a face blinking continuously or a static face. Both groups’ mouth-looking time ranked in descending order from viewing the mouth-moving face, to the static face, to the blinking face. Each point represents one participant’s data. Error bars represent one standard error. The linear relationship between age and eye-looking time (b) as well as mouth-looking time (d) of the autistic and non-autistic groups in viewing different types of faces. Participants’ eye-looking time and mouth-looking time did not change significantly as age increased. Dots represent participants’ data, lines indicate model fits, and shaded regions indicate 95% confidence intervals.

Table 1. ANOVA results of the main and interaction effects of group and condition when age was added as a covariate for the eye-looking time

When Age was added to the model to interact with Group and Condition, the same results were found (Table 2). Age only interacted with Condition. We compared the linear relationship (i.e., the slope of the model) between age and overall proportional eye-looking time to zero for the three conditions. None of the slopes was significant: t (273) = −1.27, p = .205, for the blink condition; t (273) = 0.73, p = .469, for the moving-mouth condition; and t (273) = 1.03, p = .305, for the static condition. We next determined which pairs of slopes differed significantly. We found that the slopes for the blink and static conditions were statistically different, t (273) = −2.65, p = .023, but the other two pairs were not significant: blinking versus moving mouth, t (273) = −2.04, p = .106; static versus moving mouth, t (273) = 0.54, p = .850.

Table 2. ANOVA results of the main and interaction effects of group, condition, and age for the eye-looking time

Temporal course of eye-looking time

Figure 3a shows the autistic and non-autistic groups’ eye-looking time across stimulus presentation time in viewing different types of faces. When Age was controlled, one cluster was significant for the main effect of Group, the cluster-mass F statistic (i.e., summed value of F in the cluster) was 27,930.13, p < .001; one cluster was significant for the main effect of Condition, the cluster-mass F statistic was 52,995.22, p < .001. Additionally, one cluster was significant for the Group × Condition effect, the cluster-mass F statistic was 8,934.91, p < .001. Figure 3b shows significant time periods for different effects.

Figure 3. Temporal course of eye-looking time and mouth-looking time. Eye-looking time (a) and mouth-looking time (c) across stimulus presentation time of the autistic and non-autistic groups in viewing different types of faces. Shaded areas indicate one standard error. Statistical results of the temporal course of eye-looking time (b) and mouth-looking time (d) when Age was controlled. The dotted horizontal lines represent the threshold set to the 95th percentile of the F statistic. The red parts show significant time periods. The plots show the F statistic on the vertical y-axis against the time epoch on the horizontal x-axis. From top to bottom: effects of Group, Condition, Age, and interaction between Group and Condition across time.

When Age was added to the model to interact with Group and Condition, the same results were found (Figure S3 in the supplementary material). Specifically, one cluster was significant for the main effect of Group, the cluster-mass F statistic was 27,889.46, p < .001; one cluster was significant for the main effect of Condition, the cluster-mass F statistic was 50,905.40, p < .001. Additionally, one cluster was significant for the Group × Condition effect, the cluster-mass F statistic was 8,806.49, p < .001. There were no other significant effects.

The temporal course results confirmed the overall looking time results, and provided additional information that the effects of Group and Condition were not restricted to a particular time period.

Biological motion increased visual attention to the mouth, but it was weaker in autistic than non-autistic participants

Overall proportional mouth-looking time

The results are shown in Figure 2c and Figure 2d. Table S2 provides the mean and standard deviation of overall proportional mouth-looking time for different groups and conditions. When Age was controlled (Table 3 presents full statistical results), the ANOVA showed that both the main effects of Group and Condition were significant, F (1, 274) = 5.47, p = .020, ηG² = .011, and F (1.49, 409.50) = 348.01, p < .001, ηG² = .363, respectively. The interaction between Group and Condition was also significant, F (1.49, 409.50) = 9.26, p < .001, ηG² = .015. Post hoc tests revealed that autistic participants looked less at the mouth than non-autistic participants in the moving-mouth condition, t (274) = −3.32, p = .001, but not in the blink and static conditions, t (274) = 0.01, p = .994 and t (274) = −0.82, p = .410, respectively. Post hoc tests also revealed a ranking order among the three conditions for both the autistic and non-autistic groups: participants looked at the mouth longest in the moving-mouth condition, and they looked at the mouth longer in the static than in the blink condition, all ps < .05.

Table 3. ANOVA results of the main and interaction effects of group and condition when age was added as a covariate for the mouth-looking time

The same results were found when Age was added to the model to interact with Group and Condition (Table 4). No Age effects were significant.

Table 4. ANOVA results of the main and interaction effects of group, condition, and age for the mouth-looking time

Temporal course of mouth-looking time

Figure 3c shows the autistic and non-autistic groups’ mouth-looking time across stimulus presentation time in viewing different types of faces. When Age was controlled, one cluster was significant for the main effect of Group, the cluster-mass F statistic was 16,760.79, p < .001; one cluster was significant for the main effect of Condition, the cluster-mass F statistic was 111,466.6, p < .001. Additionally, one cluster was significant for the Group × Condition effect, the cluster-mass F statistic was 10,523.53, p < .001. Figure 3d shows significant time periods for different effects.

The same results were found when Age was added to the model to interact with Group and Condition (Figure S4 in the supplementary material). Specifically, one cluster was significant for the main effect of Group, the cluster-mass F statistic was 16,723.89, p < .001; one cluster was significant for the main effect of Condition, the cluster-mass F statistic was 108,326.70, p < .001. Additionally, one cluster was significant for the Group × Condition effect, the cluster-mass F statistic was 10,714.34, p < .001. There were no other significant effects.

The temporal course results confirmed the overall looking time results, and provided additional information that the effects of Group and Condition were not restricted to a particular time period.

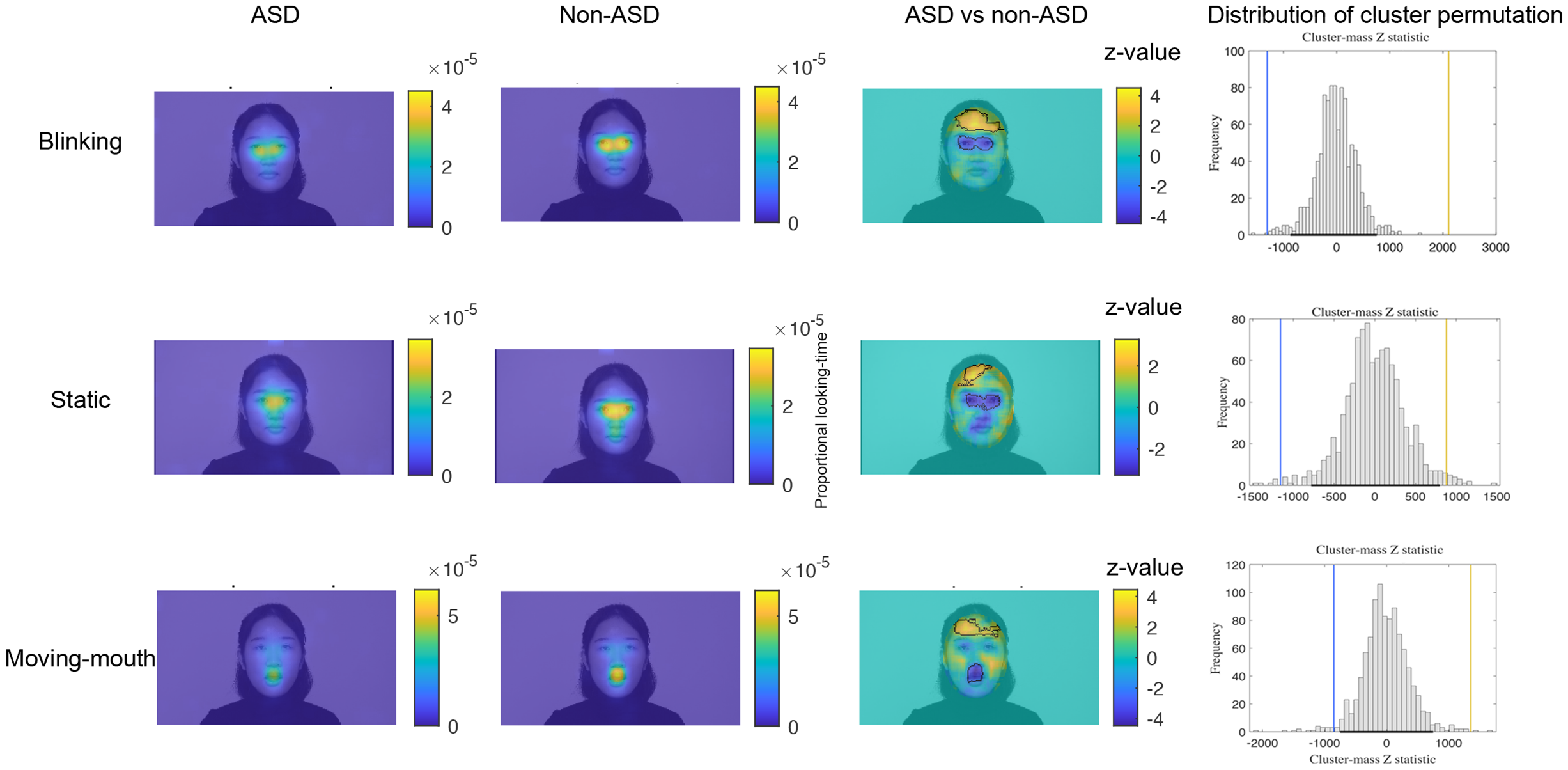

Autistic participants looked more at the forehead than non-autistic participants

The first two columns of Figure 4 show the proportional looking time heatmaps for the ASD and non-ASD groups in the three conditions: ASD and non-ASD groups mainly focused on the face region, especially the eyes, mouth, and nose. Thus, we applied a mask to only model the pixels within the face region since sparse outlines outside the face are likely to generate erroneous estimation. By comparing the group differences directly, we found that two clusters were significant for each condition (the third and fourth columns of Figure 4). The yellow (warmer) color cluster denotes greater proportional looking time by autistic participants, and the blue (colder) color cluster denotes greater proportional looking time by non-autistic participants. Specifically, autistic participants looked less at the eyes than non-autistic participants in the blinking and static conditions. Autistic participants also looked less at the mouth than non-autistic participants in the moving-mouth condition. These results replicated the results of overall proportional looking time. Furthermore, we found that autistic participants looked more at the forehead than non-autistic participants in all three conditions.

Figure 4. Results of the gaze difference map. The first two columns show the proportional looking time heatmaps for the ASD and non-ASD groups in three conditions. Warmer colors (yellow) denote greater proportional looking time. The third column shows the group difference maps with z values. Warmer colors (yellow) denote greater proportional looking time by autistic than non-autistic participants, and colder colors (blue) denote greater proportional looking time by non-autistic than autistic participants. Significant regions are outlined with black lines. The fourth column shows the cluster-based permutation approach to correcting for multiple comparisons. The horizontal black line indicates the region between the upper and lower 2.5% (corresponding to a significance level of 0.05, two-tailed) of the distribution generated by the permutation test. The vertical lines indicate the positions of the cluster-mass Z statistic (i.e., summed values of z in the cluster) for the original groups relative to the distribution of the permuted data. The color of the lines corresponds to the color of the clusters shown in the third column.

Supplementary analyses

We matched the two groups’ age and gender using the “matchit” function with default parameters in the “MatchIt” package. This matched sample included 125 autistic children and adolescents (106 boys) and 125 non-autistic children and adolescents (102 boys). Figure S1(B) shows the age distribution for the two matched groups. We reanalyzed the overall proportional eye-looking and mouth-looking time with the matched sample. The results exactly mirrored the results from the unmatched sample. Full results are presented in the Supplemental Material.
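For readers unfamiliar with matching, the general idea of one-to-one matching without replacement can be sketched as follows. This is an illustration only and is not claimed to reproduce the behavior of the “MatchIt” package: it assumes that each participant already has a single matching score (for example, an estimated propensity score based on age and gender), and it pairs participants greedily in the order given. The function name greedy_match is hypothetical.

```python
def greedy_match(treated, controls):
    """Pair each treated score with the closest unused control score (1:1).

    `treated` and `controls` are lists of precomputed matching scores
    (an assumption of this sketch). Returns (treated_index, control_index)
    pairs; each control is used at most once.
    """
    available = dict(enumerate(controls))
    pairs = []
    for t_idx, t_score in enumerate(treated):
        if not available:  # no unused controls remain
            break
        c_idx = min(available, key=lambda i: abs(available[i] - t_score))
        pairs.append((t_idx, c_idx))
        del available[c_idx]
    return pairs
```

Participants left unpaired are dropped from the matched sample, which is why a matched sample can be smaller than the full sample.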

Discussion

We examined eye- and mouth-looking times while participants viewed blinking, mouth-moving, and static faces in a large sample of autistic and non-autistic children and adolescents across a wide developmental range (3–17 years old). We found that both autistic and non-autistic participants modulated their visual attention depending on the presence of basic face movements. However, autistic participants displayed a weaker modulation pattern than non-autistic participants. Furthermore, this modulation effect was stable across ages.

Specifically, both autistic and non-autistic participants increased their attention to the moving region. That is, they increased eye-looking time when viewing the blinking face and increased mouth-looking time when viewing the mouth-moving face. However, autistic participants still looked at the eyes less than non-autistic participants when viewing the blinking and static faces, but not the mouth-moving face; they looked at the mouth less than non-autistic participants when viewing the mouth-moving face, but not the blinking and static faces. This atypical scanning pattern emerged as soon as the face stimulus appeared and persisted throughout stimulus presentation. Together, these results suggest that autistic participants could modulate their visual attention according to the basic face movements, but they did not increase their attention to the moving region to the same extent as non-autistic participants did. These results are consistent with findings that autistic people show more atypical gaze behaviors than non-autistic people when viewing dynamic social stimuli (Chevallier et al., Reference Chevallier, Parish-Morris, Mcvey, Rump, Sasson, Herrington and Schultz2015; Shic et al., Reference Shic, Wang, Macari and Chawarska2020).

The finding that autistic participants attended less to the eyes or mouth than non-autistic participants when viewing dynamic faces also replicates earlier work (Feng et al., Reference Feng, Lu, Wang, Li, Fang, Chen and Yi2021; Jones & Klin, Reference Jones and Klin2013; Shic et al., Reference Shic, Wang, Macari and Chawarska2020; Wang et al., Reference Wang, Hoi, Wang, Song, Li, Lam, Fang and Yi2020). Poor eye contact and diminished attention to the mouth have played a central role in the social difficulties of ASD (Feng et al., Reference Feng, Lu, Wang, Li, Fang, Chen and Yi2021; Tanaka & Sung, Reference Tanaka and Sung2016). Thus, it is important to understand the mechanism underlying reduced visual attention to the eyes and mouth in autistic people. Earlier work using dynamic stimuli with high communicative intent found that autistic people were less likely to look at the face, eyes, or mouth when actors were speaking or engaging with participants (Feng et al., Reference Feng, Lu, Wang, Li, Fang, Chen and Yi2021; Shic et al., Reference Shic, Wang, Macari and Chawarska2020; Wang et al., Reference Wang, Hoi, Wang, Song, Li, Lam, Fang and Yi2020). In our study, participants viewed a face silently opening and closing its eyes or mouth, a movement with minimal social meaning. The results showed that autistic participants looked less at the basic face movement than non-autistic participants, which could influence downstream social processing. For example, if autistic people look less at an interactor’s eyes, they may fail in joint attention. Therefore, the reduced tendency in ASD to attend to complicated face movements with higher-order social meanings could be due to autistic people’s insensitivity in guiding attention to the basic face movements. Additionally, complicated face movements such as facial expressions require monitoring many basic face movements. Failing to attend to these movements could affect autistic people’s information integration and subsequent emotional understanding. As such, our study implies that interventions targeting attention to basic face movement may be particularly efficient for advancing social function in ASD. However, the amount of looking time needed to gain enough facial information remains unclear and could be the focus of future studies.

Reduced looking at face movement in ASD raises an interesting question: what captured autistic participants’ attention more than non-autistic participants’? Since no prior regions were assumed, we used a data-driven method that compared the two groups’ looking time heatmaps pixel by pixel. The results replicated the AOI-based analysis and additionally revealed that autistic participants looked more at the forehead than non-autistic participants. When we checked our stimuli, we found a light reflection on the model’s forehead (Figure 1 and Movie 1–3 in the supplementary materials), which might have attracted the autistic participants’ attention more than the non-autistic participants’. If this assumption is valid, one possible explanation for autistic people’s diminished attention to face movement is that they are more likely to be attracted by distractors in the environment (Keehn et al., Reference Keehn, Nair, Lincoln, Townsend and Müller2016), considering that they rely more on bottom-up salient information for attention orienting than non-autistic people (Amso et al., Reference Amso, Haas, Tenenbaum, Markant and Sheinkopf2014). Other potential mechanisms should also be tested in the future. For example, autistic people’s basic eye movement mechanics differ from those of non-autistic people; for instance, their saccades are characterized by reduced accuracy and high variability in accuracy across trials (Schmitt et al., Reference Schmitt, Cook, Sweeney and Mosconi2014). This less precise looking may affect their looking times and patterns when viewing face movement. Future studies could additionally use a visually guided saccade task to measure basic eye movement (Schmitt et al., Reference Schmitt, Cook, Sweeney and Mosconi2014) to test this hypothesis. Another possibility is that autistic people may simply attend less to motion overall, whether social or nonsocial. However, this hypothesis is unlikely since autistic children prefer to attend to dynamic geometric movies and repetitive movements compared to non-autistic children (Pierce et al., Reference Pierce, Conant, Hazin, Stoner and Desmond2011; Wang et al., Reference Wang, Hu, Shi, Zhang, Zou, Li, Fang and Yi2018). It would still be valuable for future research to establish whether the results presented here reflect social viewing of face dynamics rather than viewing of movement in general, by including a condition that allows passive viewing of dynamic nonsocial stimuli.

Taking advantage of our participants’ relatively broad age range, we also evaluated the age effects on attention to the basic face movement. We observed no significant continuous age effects in the autistic or non-autistic groups, consistent with the presence of a developmentally stable and persistent reduction in social attention in ASD (Chita-Tegmark, Reference Chita-Tegmark2016; Frazier et al., Reference Frazier, Strauss, Klingemier, Zetzer, Hardan, Eng and Youngstrom2017; Mason et al., Reference Mason, Shic, Falck-Ytter, Chakrabarti, Charman, Loth, Tillmann, Banaschewski, Baron-Cohen, Bölte, Buitelaar, Durston, Oranje, Persico, Beckmann, Bougeron, Dell’Acqua, Ecker and Moessnang2021). This stability is noteworthy since it excludes the possibility that autistic people may have a delay in developing attention to basic face movement. If there were such a delay, autistic participants should catch up with the non-autistic participants as they age, or the attention level of older autistic children should be comparable to that of younger non-autistic children. However, this is not the case here. Whether this weaker attention to basic face movement in autistic people is innate or a consequence of early experience is still unclear. A longitudinal study tracking participants from infancy to adulthood is recommended to clarify this issue further.

Several other potential future directions could be considered. First, we only tested the basic face movement effect on gaze behavior without considering the social saliency effect of face movement. Although previous studies have used socially interactive faces (e.g., an actress looking at the camera and speaking to the viewer) to increase social saliency (Shic et al., Reference Shic, Wang, Macari and Chawarska2020), they also inevitably included basic face movement. One method for testing a pure social saliency effect is to compare upright and inverted interactive faces, given that both types of faces are identical in terms of basic face movement but differ in social meaning. Second, consistent tools for measuring IQ or developmental level across ages were unavailable due to the participants’ broad age range. Therefore, the lack of an IQ measure is a limitation of our study. Although a meta-analysis indicated that IQ had no impact on gaze atypicality in ASD (Frazier et al., Reference Frazier, Strauss, Klingemier, Zetzer, Hardan, Eng and Youngstrom2017), it is still crucial to ensure that the observed group differences were not due to IQ differences but to ASD itself. Third, our study excluded participants with anxiety disorder and attention deficit hyperactivity disorder, which have a high comorbidity rate with ASD (Khachadourian et al., Reference Khachadourian, Mahjani, Sandin, Kolevzon, Buxbaum, Reichenberg and Janecka2023). Thus, our study only described a subset of autistic participants. Further research should be carried out to obtain a more fine-grained understanding of visual attention to face movement across the full ASD population. Fourth, as experience with faces can influence face scanning (Quinn et al., Reference Quinn, Lee and Pascalis2019), future studies should collect and control for participants’ experience with faces (e.g., neighborhood size, school size, and types of activities that would influence their social experiences with faces). Lastly, we could not test the intervention effect since all autistic participants had a history of interventions aimed at improving their social skills. The observed group difference might be larger if autistic participants without an intervention history were compared with non-autistic participants. It is also possible that those interventions might not affect visual attention in this kind of social free-viewing task, where participants do not necessarily have a motivation for increased visual engagement with a social partner. These possibilities could be addressed using a waiting-list control design in the future.

In conclusion, this large-sample study demonstrated that autistic children and adolescents could modulate their visual attention according to basic face movements, and that the attention captured by basic face movements was stable from 3 to 17 years of age. However, the modulation effect was weaker in autistic participants than in non-autistic participants. Our findings further our understanding of the mechanism underlying visual attention to face movement and highlight the potential of enhancing attention to basic face movement to benefit social function in autistic people.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/S0954579423000883.

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities (grant number 2021NTSS60); Key-Area Research and Development Program of Guangdong Province (grant number 2019B030335001); Clinical Medicine Plus X - Young Scholars Project in Peking University; Humanities and Social Sciences Youth Foundation of Ministry of Education of China (grant number 22YJC190022); and the National Natural Science Foundation of China (grant numbers 31871116, 62176248, 32271116, and 32200872). We are grateful to the individuals and families who participated in this study.

Author contribution

Qiandong Wang and Xue Li contributed equally to this work.

Competing interests

None.