Impact statement

Across underserved communities in the United States and globally, there are significant gaps in access to quality mental health services. Task-sharing is a promising strategy for bridging these gaps; yet, innovative approaches are required to scale up the use of task-sharing. A key objective of this formative study was to develop a digital platform for training non-specialist providers without prior experience in mental healthcare to deliver a brief psychosocial intervention for depression. Importantly, following a systematic design process, this project resulted in the development of two courses: one called Foundational Skills covering the skills to become an effective counselor and the second called Behavioral Activation covering the skills for addressing adult depression. Additionally, participant feedback collected throughout this project helps to ensure that the training content will be relevant and acceptable for further pilot testing and implementation within the target community settings in Texas. This project represents an initial critical step towards adapting and implementing task-sharing models to increase access to proven psychosocial interventions for mental health problems in underserved settings in the United States.

Introduction

Depression is a leading cause of disability in the United States, affecting roughly 8% of adults in any given year (Olfson et al., Reference Olfson, Blanco and Marcus2016). The resulting impact on individuals, their families and communities is substantial in the form of lost economic opportunity, caregiver distress and increased risk of unemployment, homelessness, poverty, suicide and premature mortality (Walker et al., Reference Walker, McGee and Druss2015). Alarmingly, less than one-third of adults with depression in the United States receive any treatment (Olfson et al., Reference Olfson, Blanco and Marcus2016). For patients who do receive treatment for depression, the vast majority – about 87% – are prescribed antidepressant medications, while only about 23% receive psychosocial interventions such as psychotherapy (Olfson et al., Reference Olfson, Blanco and Marcus2016). This is a major concern because in addition to strong evidence supporting the clinical effectiveness of psychosocial interventions (Cuijpers et al., Reference Cuijpers, Van Straten and Warmerdam2007; Cuijpers et al., Reference Cuijpers, van Straten, van Schaik and Andersson2009; Cuijpers et al., Reference Cuijpers, Pineda, Quero, Karyotaki, Struijs, Figueroa, Llamas, Furukawa and Muñoz2021), patients overwhelmingly express a preference for psychosocial interventions over pharmacological treatment (McHugh et al., Reference McHugh, Whitton, Peckham, Welge and Otto2013). However, a critical challenge facing health systems is determining how best to scale up access to preferred psychosocial interventions given the formidable barrier of the shortage of specialist mental health providers (Dinwiddie et al., Reference Dinwiddie, Gaskin, Chan, Norrington and McCleary2013; Lê Cook et al., Reference Lê Cook, Doksum, C-N, Carle and Alegría2013; HRSA, 2016; Larson et al., Reference Larson, Patterson, Garberson and Andrilla2016; Andrilla et al., Reference Andrilla, Patterson, Garberson, Coulthard and Larson2018). Innovative approaches are needed to expand access to proven psychosocial interventions, such as behavioral activation (Dimidjian et al., Reference Dimidjian, Barrera, Martell, Muñoz and Lewinsohn2011), to address the significant gaps in access to depression care while recognizing the preferences of patients.

An important innovation in depression care supported by scientific evidence attesting to its effectiveness involves the delivery of proven psychosocial interventions by frontline providers without specialized training in mental health care delivery (Singla et al., Reference Singla, Kohrt, Murray, Anand, Chorpita and Patel2017; Cuijpers et al., Reference Cuijpers, Karyotaki, Reijnders, Purgato and Barbui2018; Raviola et al., Reference Raviola, Naslund, Smith and Patel2019). This approach, commonly referred to as ‘task-sharing’ is supported by over 100 randomized controlled trials conducted across diverse settings globally, including low-income and middle-income countries (LMICs) where there are significant shortages in mental health providers (Barbui et al., Reference Barbui, Purgato, Abdulmalik, Acarturk, Eaton, Gastaldon, Gureje, Hanlon, Jordans and Lund2020). Research also supports the effectiveness of task-sharing models for delivering mental health care in higher-income countries such as the United States (Hoeft et al., Reference Hoeft, Fortney, Patel and Unützer2018; Singla et al., Reference Singla, Lawson, Kohrt, Jung, Meng, Ratjen, Zahedi, Dennis and Patel2021), where many individuals similarly lack access to effective mental health services. In the United States, challenges with access are further exacerbated as mental health providers are inequitably distributed, with significant workforce shortages in rural areas (Larson et al., Reference Larson, Patterson, Garberson and Andrilla2016; Andrilla et al., Reference Andrilla, Patterson, Garberson, Coulthard and Larson2018) and gaps in access that are particularly pronounced for underserved racial and ethnic minority groups (Dinwiddie et al., Reference Dinwiddie, Gaskin, Chan, Norrington and McCleary2013; Lê Cook et al., Reference Lê Cook, Doksum, C-N, Carle and Alegría2013).

Despite robust evidence supporting task-sharing models, there remain several challenges to scaling up and sustaining these approaches. One such challenge is the lack of scalable methods to train and ensure the clinical skills and competencies of non-specialist providers so that they can effectively deliver evidence-based psychosocial interventions. Digital technologies have emerged as promising tools for training non-specialist providers, as reflected in recent studies from Pakistan and India (Rahman et al., Reference Rahman, Akhtar, Hamdani, Atif, Nazir, Uddin, Nisar, Huma, Maselko and Sikander2019; Muke et al., Reference Muke, Tugnawat, Joshi, Anand, Khan, Shrivastava, Singh, Restivo, Bhan, Patel and Naslund2020; Nirisha et al., Reference Nirisha, Malathesh, Kulal, Harshithaa, Ibrahim, Suhas, Manjunatha, Kumar, Parthasarathy and Manjappa2023). Digital training programs can reduce costs associated with travel, classroom space, and time and availability of expert trainers while offering participants the freedom to learn and acquire new skills at their own pace (Sissine et al., Reference Sissine, Segan, Taylor, Jefferson, Borrelli, Koehler and Chelvayohan2014). Online training programs are widely used in the United States for health worker training, typically in the context of continuing education and programs focused on basic skills for responding to the needs of patients with mental disorders (Sinclair et al., Reference Sinclair, Kable, Levett-Jones and Booth2016; Jackson et al., Reference Jackson, Quetsch, Brabson and Herschell2018; Dunleavy et al., Reference Dunleavy, Nikolaou, Nifakos, Atun, Law and Tudor Car2019). Training for non-specialist providers, such as certification for community health workers or programs focused on chronic disease management, are often available online or in a hybrid format consisting of a combination of remote and in-person training (National Association of Community Health Workers, 2024; National Community Health Worker Training Center, 2024; Yeary et al., Reference Yeary, Ounpraseuth, Wan, Graetz, Fagan, Huff-Davis, Kaplan, Johnson and Hutchins2021; Zheng et al., Reference Zheng, Williams-Livingston, Danavall, Ervin and McCray2021). It will be important to consider how to expand on the current widespread use and acceptability of remote training in the United States for specifically supporting non-specialist providers with gaining the skills to deliver evidence-based psychosocial interventions for depression.

This study aimed to design and develop a digital program for training non-specialist providers in the United States to deliver an evidence-based psychosocial intervention for depression. Specifically, we replicated prior efforts to digitize training content for use in rural India (Muke et al., Reference Muke, Shrivastava, Mitchell, Khan, Murhar, Tugnawat, Shidhaye, Patel and Naslund2019; Khan et al., Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020; Muke et al., Reference Muke, Tugnawat, Joshi, Anand, Khan, Shrivastava, Singh, Restivo, Bhan, Patel and Naslund2020; Naslund et al., Reference Naslund, Tugnawat, Anand, Cooper, Dimidjian, Fairburn, Hollon, Joshi, Khan and Lu2021), to adapt a program for training non-specialist providers in the delivery of a behavioral activation intervention for depression in underserved settings in Texas, United States. There are significant gaps in access to quality mental health care in Texas, which is made more complex to solve due to workforce challenges, given that over 80% of the state’s 254 counties were designated as ‘Mental Health Professional Shortage Areas’ (Meadows Mental Health Policy Institute, 2016). Furthermore, our study adds to a growing number of efforts aimed at adapting task-sharing models for reaching underserved patient populations in the United States (Belz et al., Reference Belz, Vega Potler, Johnson and Wolthusen2024; Kanzler et al., Reference Kanzler, Kunik and Aycock2024; Mensa-Kwao et al., Reference Mensa-Kwao, Sub Cuc, Concepcion, Kemp, Hughsam, Sinha and Collins2024). We describe the stepwise process for the creation and digitization of the digital training program, followed by initial usability testing with a combination of non-specialist providers and experienced mental health providers, to collect feedback on the content to inform further refinements to the digital training curriculum.

Methods

Ethics

Institutional Review Boards at Harvard Medical School, Boston, Massachusetts and the Baylor Scott & White Health System, Dallas, Texas approved all study procedures.

Evidence-based psychosocial intervention for depression

We adapted content from the Healthy Activity Program (HAP), an evidence-based intervention for depression developed and evaluated in India (Chowdhary et al., Reference Chowdhary, Anand, Dimidjian, Shinde, Weobong, Balaji, Hollon, Rahman, Wilson and Verdeli2016; Patel et al., Reference Patel, Weobong, Weiss, Anand, Bhat, Katti, Dimidjian, Araya, Hollon and King2017). We selected HAP given its proven effectiveness in community settings (Patel et al., Reference Patel, Weobong, Weiss, Anand, Bhat, Katti, Dimidjian, Araya, Hollon and King2017; Weobong et al., Reference Weobong, Weiss, McDaid, Singla, Hollon, Nadkarni, Park, Bhat, Katti and Anand2017), and because it has been adapted for delivery by non-specialist providers across settings in India (Shidhaye et al., Reference Shidhaye, Murhar, Gangale, Aldridge, Shastri, Parikh, Shrivastava, Damle, Raja and Nadkarni2017; Shidhaye et al., Reference Shidhaye, Baron, Murhar, Rathod, Khan, Singh, Shrivastava, Muke, Shrivastava and Lund2019), Nepal (Walker et al., Reference Walker, Khanal, Hicks, Lamichhane, Thapa, Elsey, Baral and Newell2018; Jordans et al., Reference Jordans, Luitel, Garman, Kohrt, Rathod, Shrestha, Komproe, Lund and Patel2019), Uganda (Rutakumwa et al., Reference Rutakumwa, Ssebunnya, Mugisha, Mpango, Tusiime, Kyohangirwe, Taasi, Sentongo, Kaleebu and Patel2021) and Eswatini (Putnis et al., Reference Putnis, Riches, Nyamayaro, Boucher, King, Walker, Burger, Southworth, Mwanjali and Walley2023). Furthermore, HAP employs behavioral activation, a proven and cost-effective intervention that has been widely used for treating depression (Cuijpers et al., Reference Cuijpers, Van Straten and Warmerdam2007; Stein et al., Reference Stein, Carl, Cuijpers, Karyotaki and Smits2021).

The intervention manuals for HAP are available open access from Sangath (see https://www.sangath.in/), a non-governmental organization in India, and have been previously digitized for training community health workers in rural India (Brahmbhatt et al., Reference Brahmbhatt, Rathod, Joshi, Khan, Teja, Desai, Chauhan, Shah, Bhatt, Venkatraman and Tugnawat2024; Khan et al., Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020; Muke et al., Reference Muke, Tugnawat, Joshi, Anand, Khan, Shrivastava, Singh, Restivo, Bhan, Patel and Naslund2020). Given the success in adapting HAP for delivery by non-specialist providers across diverse settings, there is high potential to adapt this program for a US-based context. HAP consists of two manuals, one covering core skills to be an effective counselor (called Foundational Skills) and the second covering specific skills to deliver the intervention for adult depression (called Behavioral Activation). The intervention is recommended as a first-line treatment for depression in the World Health Organization’s (WHO) Mental Health Gap Action Program (mhGAP), which provides a roadmap for scaling up access to mental health care in low-resource settings (WHO, 2010). The intervention is delivered over 6–8 sessions and consists of content focused on psychoeducation, activity monitoring, activity structuring and scheduling, problem-solving, activation of social networks and behavioral assessments (Chowdhary et al., Reference Chowdhary, Anand, Dimidjian, Shinde, Weobong, Balaji, Hollon, Rahman, Wilson and Verdeli2016).

Stepwise development of the digital training platform

The development of the digital training platform modeled an approach previously employed in designing digital programs for community health workers in rural India (Khan et al., Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020; Shrivastava et al., Reference Shrivastava, Singh, Khan, Choubey, Haney, Karyotaki, Tugnawat, Bhan and Naslund2023; Tyagi et al., Reference Tyagi, Khan, Siddiqui, Kakra Abhilashi, Dhurve, Tugnawat, Bhan and Naslund2023). This stepwise process draws from the educational literature, informed by the ADDIE (Analyze, Design, Develop, Implement and Evaluate) framework for instructional design (Obizoba, Reference Obizoba2015), and by principles in human-centered design (Black et al., Reference Black, Berent, Joshi, Khan, Chamlagai, Shrivastava, Gautam, Negeye, Iftin and Ali2023). The ADDIE framework offers a systematic approach for designing and evaluating a training curriculum and instructional content, and has been employed to support the development of online health worker training programs (Patel et al., Reference Patel, Margolies, Covell, Lipscomb and Dixon2018). Specifically, we closely followed the systematic development approach previously employed in India and described in detail by Khan et al. (Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020), whereby feedback can ensure the balance of fidelity and usability (Khan et al., Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020). For instance, feedback collected from experts is necessary to ensure alignment with the evidence-based intervention manuals while feedback collected from the target audience of frontline non-specialist providers can help to ensure that the content is relevant and acceptable (Khan et al., Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020). We developed the digital training content sequentially, beginning with Foundational Skills and then Behavioral Activation to ensure user feedback captured during the development of the first course could guide the development of the second course. Figure 1 illustrates this process, which involved gathering feedback and insights from content experts, non-specialist providers and health workers with experience delivering mental health care. These steps are detailed in the sections that follow.

Figure 1. Overview of the stepwise approach to the development of the digital training program for frontline providers in Texas.

Step 1: Blueprinting

Consistent with prior development of digital training programs (Khan et al., Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020), the initial step involved creating a course ‘blueprint’ outlining the key learning objectives and core competencies covered in the training content. The blueprint serves as a course syllabus, outlining the overarching modules for the course and covering broad topics with a breakdown of the specific lessons and learning objectives covered within each module. The blueprint was developed through careful review of the training content while ensuring that key learning objectives for each module (taken from the HAP manuals) aligned with the skills and competencies that learners must ideally master following completion of the training program (Coderre et al., Reference Coderre, Woloschuk and Mclaughlin2009). The blueprint was developed by two members of our team with clinical experience in mental health care and delivery of psychosocial interventions for depression, including clinical psychology and nursing. Next, the blueprint was finalized through review by three licensed clinical psychologists with extensive experience in the delivery of psychological interventions and training frontline providers. One of these expert reviewers was also involved in the early evaluation of behavioral activation for treating depression and in the development of HAP and therefore could offer an in-depth understanding of the content. Table 1 summarizes the final blueprint for the Foundational Skills and Behavioral Activation courses.

Table 1. Digital curriculum ‘blueprint’ for foundational skills and behavioral activation courses

Step 2: Scripting

Using the course blueprint as a guide, content from the manuals was written and adapted into scripts for the development of a series of short videos covering the lesson content. This process also involved cultural adaptation of the intervention content. For instance, the HAP manuals had previously been developed for use in India; therefore, cultural adaptations consisted of making the content relevant for a US context, such as changing terms used in role-play scenarios, such as ‘market’ to ‘shopping mall,’ ‘tea stall’ to ‘coffee shop,’ or ‘temple’ to ‘church’ or another community location. The use of short videos was informed by prior studies developing digital training programs for non-specialist providers, where videos spanning 3–8 min were most engaging without causing fatigue (Khan et al., Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020; Tyagi et al., Reference Tyagi, Khan, Siddiqui, Kakra Abhilashi, Dhurve, Tugnawat, Bhan and Naslund2023). The videos followed two format types in each course: (1) didactic instructional videos and (2) role-plays between patients and counselors. In didactic videos, the training content is presented to learners by counselors with extensive experience utilizing these skills, who discuss the definitions and examples associated with clinical concepts. The scripts created for role-play videos illustrated the implementation of various clinical skills in practice by showing realistic counselor-patient interactions, with a focus on key aspects of the therapeutic encounter. Examples include how counselors provide psychoeducation, manage crises (e.g., suicidal ideation), motivate change and problem-solve barriers with patients to homework completion. The video scripts included dialog and screen directions, describing where the scene takes place, props used and other production-related instructions for use by the director and video production team. The scripting process involved creating a list of the different actors needed to play various characters in the videos, including the counselors, patients or family members/significant others, and the characteristics of these actors (such as age, sex, race/ethnicity and other notes) and when they appear in each lesson. The screen directions were used to reinforce learning concepts, such as keywords appearing on the screen when the counselor is demonstrating a particular skill in the role-play. All scripts were written and/or adapted by our team, before inviting expert feedback from the three licensed clinical psychologists who partnered on this project. All videos developed as part of this training involved the use of actors, as opposed to actual clinicians or patients. This was mainly to streamline and standardize the production process given the need to adhere to the scripted content and project timeline.

Step 3: Video production and digital content creation

Our team reached out to multiple video production companies and selected two companies based on the criteria of quality and timeline. One company was hired to support video production for the Foundational Skills course and the second company was hired to support video production for the Behavioral Activation course. Our team worked closely with the video production companies to review and edit the scripts, collect necessary props (e.g., worksheets, brochures, etc.), and select actors to play the different characters (e.g., counselors, patients, family members/significant others, etc.). The videos generally range in duration from 3–8 min to facilitate access from a smartphone device and to retain learner engagement. Production time for each course required approximately 6 months, consisting of 2 months for pre-production, 1 month for filming, and 3 months for video/sound editing, and allowing for 3 rounds of review and feedback. Actors were used in all the videos to represent patients and counselors, as this allowed for expedited development and production.

To reinforce learning, the videos were supplemented with digital content, including brief text summaries of key points in each video. Knowledge checks via multiple-choice questions served to reinforce concepts and were interspersed throughout each lesson to help learners stay engaged. The correct responses to the knowledge check questions are available for learners at the end of each module. Supplemental materials were provided at the end of each module, including articles, YouTube videos, graphics or strategies curated from reputable sources (e.g., American Psychological Association, Centers for Disease Control), peer-reviewed academic research papers, intervention worksheets or text summaries created by our team. These supplemental materials were initially reviewed internally by members of our team with expertise in clinical psychology, before undergoing review by the 3 external experts who previously had reviewed the project blueprint described above. Drafting, reviewing, and finalizing the digital content through expert review was completed concurrently with the video production phase.

Step 4: Uploading digital content to the learning management system

The digital platform used to host the course content (i.e., the videos and other digital content) is referred to as a Learning Management System (LMS). The LMS allows learners to navigate the course content and offers features including content management tools that allow for the creation or upload of content and assigning it to specific individuals or groups, assessments and testing that allow learners to complete questionnaires and progress through the course, mobile optimization that ensures access to the content on a smartphone app, and reporting to enable tracking learners’ progress completing the course. Before the digital content can be uploaded onto the LMS, it must first be formatted using an e-learning authoring tool. We used the Articulate authoring tool to combine the digital content (i.e., videos, audio, text and graphics) for export as SCORM (Sharable Content Object Reference Model) files for uploading onto the LMS. The authoring tool facilitates formatting written content, adding knowledge check questions and incorporating supplemental materials. An important advantage of using the Articulate authoring tool to generate SCORM files is that these files can be uploaded to most available LMS platforms, allowing us to seamlessly move the digital course to other platforms. This is an important consideration for scaling up such training programs, as the digital content could be uploaded to an organization’s existing LMS platform which could support future uptake of the training. In this project, we used the Cornerstone On Demand (CSOD) Learning Management System mainly because it is a widely used platform in many health systems across the United States and includes a cloud-based server which can accommodate a large number of users accessing the training program content. The full lesson content was finalized in Articulate and uploaded to the LMS for final review and user testing.

Step 5: User testing of the digital training platform

We invited health workers and trainees with varying levels of experience to test the first iterations of the digital content. The goal was to assess the usability of the digital training, to identify and address potential challenges with the platform and engaging with the content, and to solicit recommendations for additional features or materials that could be included. We employed a convenience sampling approach to recruit participants from contact lists of the project collaborators. Recruitment involved sending out informational emails with details about the project and inviting interested individuals to reach out to learn more and to participate in the training. We prioritized reaching health workers and other individuals with prior experience delivering mental health care. This was to ensure that we received feedback from a target group that could draw from their own prior training and clinical experiences to offer insights for guiding modifications and improvements to the content. Participants did not receive any payment for completing the training.

Participants completed written informed consent, followed by a brief questionnaire consisting of demographic details and prior work experience and training in mental health care and were then instructed to complete the digital course. We informed participants that our team was available at any time by email or by phone during regular work hours in the event there were any concerns encountered during the training, such as questions about materials or technical challenges. Participants were then invited to join a focus group discussion over the Zoom teleconferencing platform to share their feedback. A member of our team facilitated the focus group discussions using a semi-structured interview guide (see Supplemental Material) to collect participants’ impressions about the training, their experiences, and comments on the ease of navigation, interacting with the content, wording of the content, ability to access the platform on various digital devices (i.e., smartphones, tablet and laptop), structure and layout of the course and relevance and usefulness of the training for their current work. Participants were also requested to provide suggestions about ways to improve the course. Moreover, this formative research process provided the opportunity to address technical issues such as difficulties related to logging into the platform or connectivity issues.

Qualitative data analysis

The focus group discussions were audio-recorded and transcribed by two research assistants. A third research assistant reviewed the transcripts and listened to the audio recordings to ensure accuracy and that no key points were omitted. Our analysis was guided by a framework analysis approach (Gale et al., Reference Gale, Heath, Cameron, Rashid and Redwood2013), which involves a content analysis technique often used in applied qualitative research and allows for the inclusion of pre-determined topic areas (Ward et al., Reference Ward, Furber, Tierney and Swallow2013). We selected this methodology to leverage the qualitative data for better understanding the usability of the digital training platform while capturing insights from participants that could inform improvements to the training format and content. Two members of our team read the transcripts and independently coded participant feedback according to three broad categories reflecting the digital platform navigation, the course layout and the instructional content covered in the course. Our interview guide had questions covering these three broad topic areas. After reading and coding the transcripts, these two team members met to review each other’s coding and to reach a consensus on a summary of the key points reflected in participants’ comments about the training program and content. Next, they presented the broad summary to the larger research team to discuss the different recommendations, make decisions about what recommendations could be addressed, and consider the best approach for improving the layout and functionality of the training program in preparation for further evaluation and implementation.

Results

Digital training program

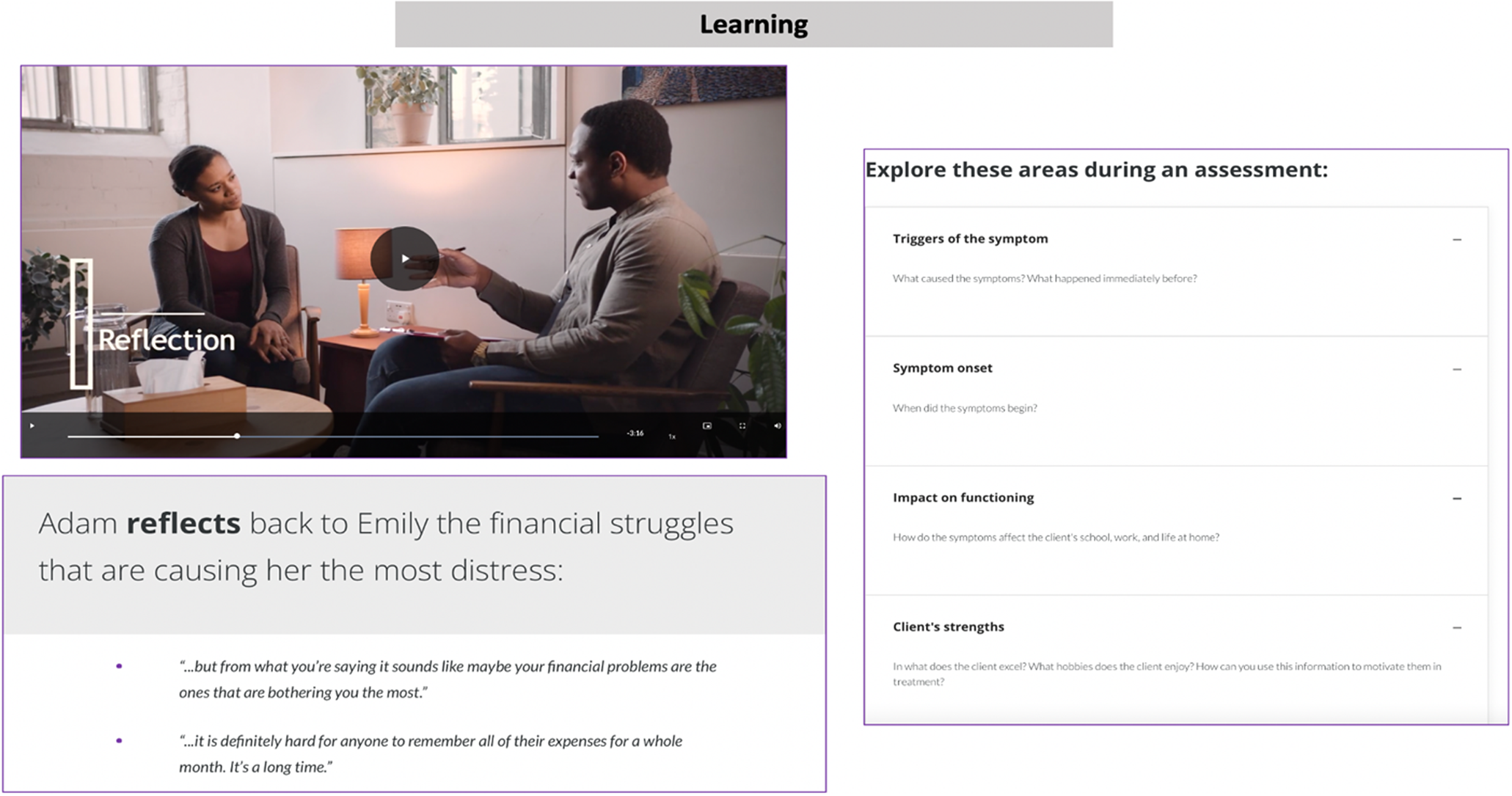

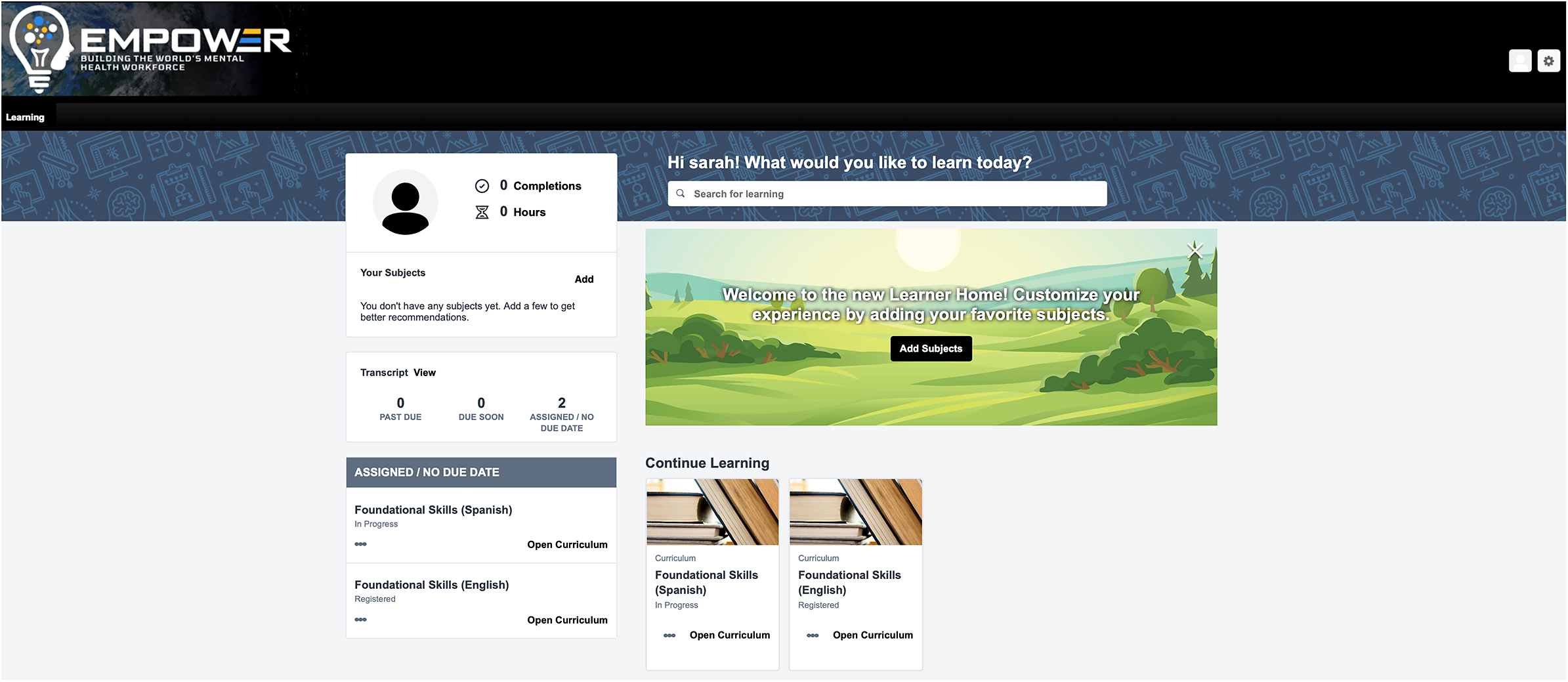

The development process required approximately 6 months for each course (12 months in total). The final digital training content was uploaded to the CSOD LMS, accessible from a smartphone app or web browser, where a course administrator could manage the course, track participant progress and send notifications. The final training consisted of 8 modules for Foundational Skills and 8 modules for Behavioral Activation, as outlined in Table 1. Each module covers a broad topic and consists of a series of short lessons that align with the specific learning objectives for that overarching module. For Foundational Skills, there are 8 modules covering 37 lessons, each using a combination of video lectures and role-plays (53 videos in total) and includes a series of assessment questions covering the content presented in the videos. Similarly, for Behavioral Activation, there are 8 modules consisting of 34 lessons with video lectures and role-plays (55 videos in total) and supplemented with assessment questions to reinforce the key concepts and learning objectives. See sample content presented in Figure 2, as well as an illustration of the user interface on the CSOD platform in Figure 3.

Figure 2. Sample screenshot showing content from one of the modules from the Foundational Skills course.

Figure 3. Learning management system user interface.

The videos ranged in duration from 3 to 8 min. Various approaches were used to make the video-based content interesting and engaging, including the use of illustrative cutaways of counselor-patient interactions in clinical scenarios, presentation of text on the screen to reinforce core concepts, assessment questions including multiple-choice questions, drag-and-drop response options, and flashcards to accompany the videos and to support knowledge comprehension. Role-play videos were a critical component of the training program to offer a demonstration of the use of specific counseling skills and interaction between patients and counselors during delivery of counseling sessions. The time required to complete both courses, along with assessment questions and activities embedded in the digital platform is approximately 20-40 h (about 10-20 h for each course). We determined the time to complete the course by having student interns who were not involved in designing the course, to ensure no prior exposure to the content and record the time necessary to fully complete all the course materials. The course materials are accessed sequentially, meaning the modules need to be completed in order and access to the Behavioral Activation course is enabled after completing the Foundational Skills course. However, learners have the option to revisit modules and retake any assessment questions or activities as often as they like.

Usability testing

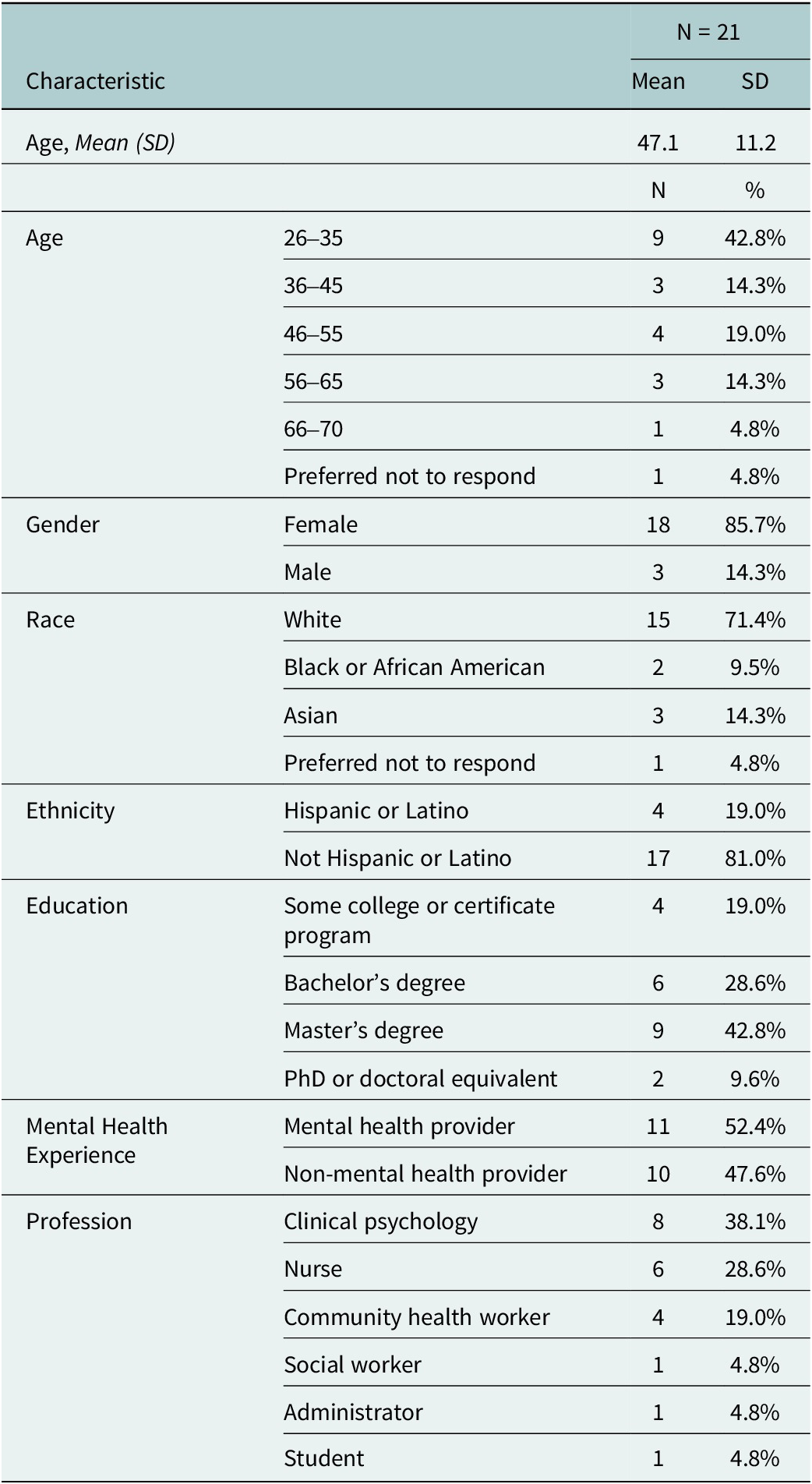

We contacted 75 individuals through email invitations to try out initial versions of the digital training. Of these individuals, 26 consented and agreed to take the course. In total, 21 participants (out of 26; 81%) completed Foundational Skills, before joining a 1-h focus group discussion. The demographic characteristics of these 21 participants are summarized in Table 2. Over half (N = 11; 52%) of participants were mental health providers, consisting largely of individuals with experience in clinical psychology. All 21 participants who completed Foundational Skills were invited to complete Behavioral Activation, of which 12 completed the course (out of 21; 57%), and 9 joined a second 1-h focus group discussion. There were 4 focus groups for Foundational Skills (N = 21 participants) and 2 focus groups for Behavioral Activation (N = 9 participants). There was about a 1-month delay between completing Foundational Skills and starting Behavioral Activation to allow our team time to complete the first round of focus group discussions and to integrate participant feedback accordingly.

Table 2. Participant demographic characteristics

Qualitative findings

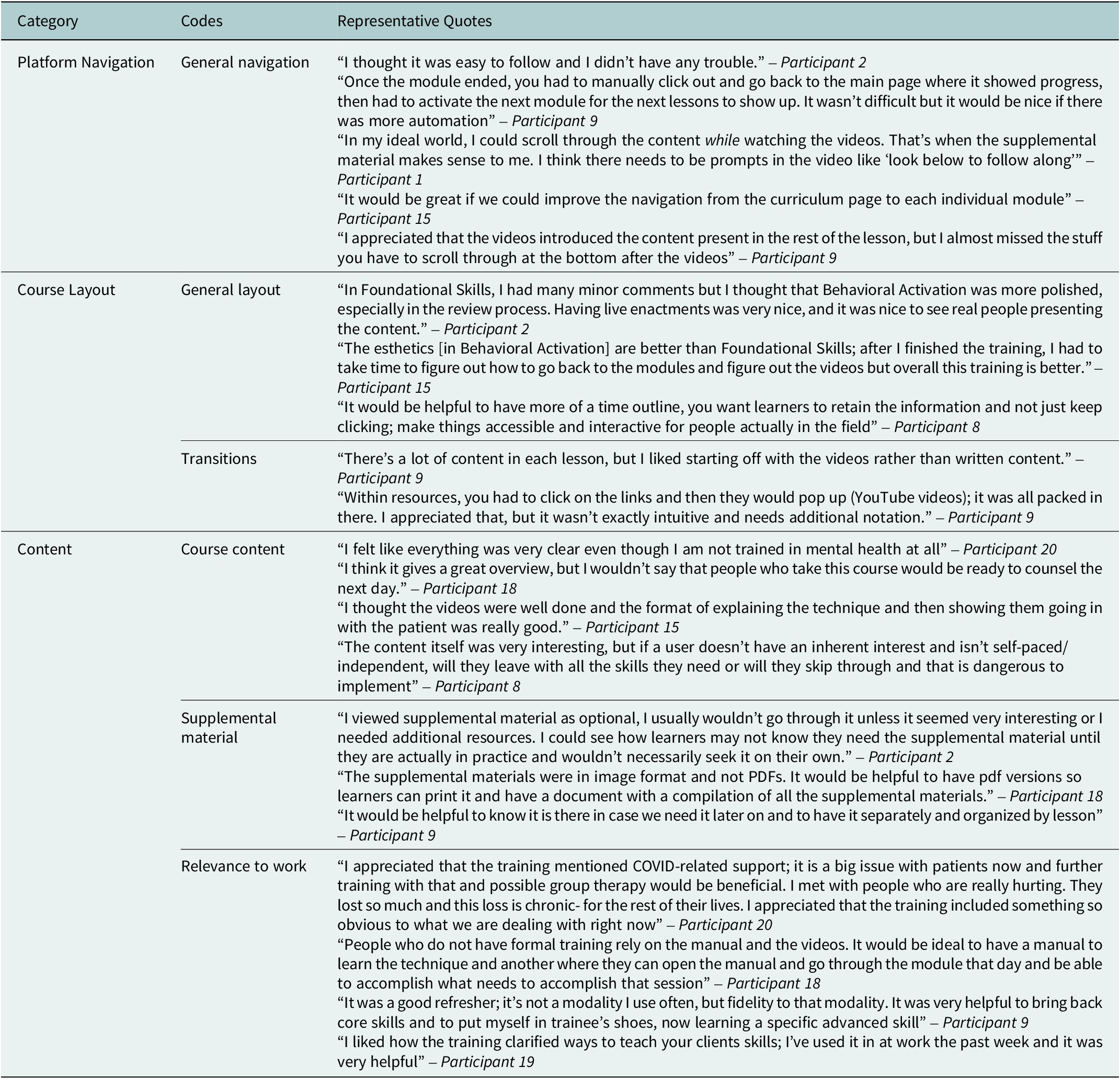

Participant feedback from focus group discussions was grouped into three overarching topic areas to inform modifications to the digital training, including: platform navigation, course layout and content. Participants offered specific suggestions and recommendations for areas for improvement related to the user-friendliness and course content. Participants were generally impressed with the courses and indicated that they found the content useful, engaging and professionally designed. Participant feedback on Foundational Skills is summarized in Table 3, and participant feedback on Behavioral Activation is summarized in Table 4. For course layout, among participants who completed both courses, they tended to prefer Behavioral Activation over Foundational Skills because of the inclusion of more interactive features, such as being able to scroll through the content, rather than having to click each time for new content to appear on the screen. For navigation, participants did not appear to encounter any challenges and expressed satisfaction with the ease with which they could progress through the material. For the content, participants enjoyed the real-life role-play scenarios between counselors and patients, reflected across both courses. Participants consistently mentioned that they had not previously encountered courses of similar design and comprehensiveness.

Table 3. Summary of participant feedback on the Foundational Skills course

Table 4. Summary of participant feedback on the Behavioral Activation course

Participants offered suggestions for improving the courses, such as including a roadmap at the beginning that incorporates the goals of the course and a tracker showing the time remaining so that participants could anticipate the time required while completing the training. There were also recommendations to include additional modules with role-play scenarios when patients or counselors encounter a problem during a counseling session, more variation in the types of knowledge assessment questions after each lesson (i.e., adding matching or short answer response questions) and ensuring consistency in the difficulty level as some of the modules were considered easier to complete relative to others. Participants also highlighted that the opportunity to engage in active learning would be helpful, with the use of checklists that they could fill out while navigating the content and including a manual with a glossary of clinical terms. Participants also made stylistic suggestions such as adding subtitles for the videos, which were considered useful to read along with the course, titles for modules and supplementary materials after each module rather than at the end of the course. Participants commented on their encounters with various technical issues such as the videos abruptly ending or technical glitches that made it difficult to navigate and complete the training. Participants’ recommendations and resulting modifications to the digital training are summarized in Table 5.

Table 5. Summary of participant recommendations and resulting modifications aimed at improving the digital training program

Discussion

This study involved the step-wise development of a digital program for training non-specialist providers in the delivery of a brief behavioral activation intervention for depression in the United States. Use of digital programs for training non-specialist providers offers important advantages for achieving scale, by overcoming barriers to participating in more costly classroom-based instruction or in-person workshops (Khanna and Kendall, Reference Khanna and Kendall2015), and allowing participants to complete the training at their own pace. While using technology for the training of health workers is not new, there have been few efforts aimed at leveraging fully remote digital programs to develop skills and competencies of non-specialist providers in the delivery of psychosocial interventions in community settings (Frank et al., Reference Frank, Becker-Haimes and Kendall2020). If we conceptualize training programs on a spectrum of least to most scalable, fully remote digital training programs represent some of the most scalable because the work is ‘front-loaded’ to create a course that later requires minimal changes (e.g., context-specific guidelines can be tailored to facilitate implementation). Our findings add to a limited, yet growing, number of studies of sustainable approaches for building the mental health workforce in US settings, including studies involving a combination of web-based and peer-led training programs (German et al., Reference German, Adler, Frankel, Stirman, Pinedo, Evans, Beck and Creed2018), technology-enhanced training in cognitive behavioral therapy (Kobak et al., Reference Kobak, Wolitzky-Taylor, Craske and Rose2017), and online training in a psychosocial intervention augmented with an online learning collaborative supported by expert clinicians (Stein et al., Reference Stein, Celedonia, Swartz, DeRosier, Sorbero, Brindley, Burns, Dick and Frank2015). We collected participant feedback to inform modifications to the training platform and content, which can potentially improve overall usability and overcome challenges with existing online training programs, such as low user engagement, high attrition and limited acquisition of new knowledge and skills (Santos et al., Reference Santos, Tagai, Wang, Scheirer, Slade and Holt2014; Conte et al., Reference Conte, Held, Pipitone and Bowman2021; Hill et al., Reference Hill, Langley, Kervin, Pesut, Duggleby and Warner2021).

While we replicated prior digital training development work reported in India (Khan et al., Reference Khan, Shrivastava, Tugnawat, Singh, Dimidjian, Patel, Bhan and Naslund2020; Shrivastava et al., Reference Shrivastava, Singh, Khan, Choubey, Haney, Karyotaki, Tugnawat, Bhan and Naslund2023; Tyagi et al., Reference Tyagi, Khan, Siddiqui, Kakra Abhilashi, Dhurve, Tugnawat, Bhan and Naslund2023), our study adds to a small but growing number of studies aimed at leveraging and adapting successful global mental health efforts from LMICs for advancing the use of task-sharing models in the US (Belz et al., Reference Belz, Vega Potler, Johnson and Wolthusen2024). Despite robust evidence in support of task-shared mental health interventions in LMICs (Barbui et al., Reference Barbui, Purgato, Abdulmalik, Acarturk, Eaton, Gastaldon, Gureje, Hanlon, Jordans and Lund2020), there remain few reports describing the systematic process for adapting these programs for use within a US context, as well as a lack of rigorous evaluation studies focused on underserved communities in the US. Therefore, our study represents a novel contribution to the field, and this formative work opens the door to new avenues to scale up proven psychosocial interventions in communities facing rising mental health burden and significant workforce shortages.

Our study complements the mounting emphasis on considering frontline non-specialist providers such as community health workers as being essential for bridging gaps in access to mental health services (McBain et al., Reference McBain, Eberhart, Breslau, Frank, Burnam, Kareddy and Simmons2021). Importantly, non-specialist providers are oftentimes ideally positioned to address social disparities, meet the needs of vulnerable patient groups and even reach patients in settings outside the formal health system (Knowles et al., Reference Knowles, Crowley, Vasan and Kangovi2023). This is critical in the context of mental health services, where concerns pertaining to stigma, mistrust of the health system, and barriers due to socioeconomic status, culture or language create significant challenges for addressing the mental health needs of at-risk communities (Wong et al., Reference Wong, Collins, Cerully, Seelam and Roth2017; Pescosolido et al., Reference Pescosolido, Halpern-Manners, Luo and Perry2021). Non-specialist providers, such as community health workers, can play a key role in addressing mental health challenges in historically underserved communities, as reflected in a preliminary study consisting of qualitative interviews with community health workers in Southern Texas near the US-Mexico border region (Garcini et al., Reference Garcini, Kanzler, Daly, Abraham, Hernandez, Romero and Rosenfeld2022). The community health workers emphasized barriers to addressing mental health concerns in their communities, including limited training opportunities and emphasized the need for needs-based training programs to build their skills in mental health care (Garcini et al., Reference Garcini, Kanzler, Daly, Abraham, Hernandez, Romero and Rosenfeld2022). Therefore, the training program developed in this study could bridge this gap and support capacity building of community health workers working in underserved settings.

Limitations

Several limitations of this study warrant consideration. While we achieved the goal of recruiting both specialist and non-specialist providers, the small sample size and reliance on a convenience sampling approach make it difficult to generalize our findings. While the training was designed for use with non-specialist providers, over half of the participants in this study identified as mental health providers. As such, it will be critical to ensure further engagement of non-specialist providers, such as community health workers, to better understand the acceptability of the training and potential for integration and uptake as part of their current work responsibilities. The focus on evaluating the usability of the digital training represents another limitation, as it is not possible to confirm whether the digital training is effective in developing participants’ skills and competencies to deliver the depression intervention in practice. There was also high attrition, where of the 21 participants who completed the Foundational Skills course, only 12 went on to complete the Behavioral Activation course, raising concerns about the feasibility of the training and potential for uptake. The collection of additional usability metrics could help to better understand the reasons for discontinuing the training and inform opportunities to promote engagement or offer tailored support to learners. Ultimately, we acknowledge that the high attrition could be the result of the significant time commitment required to complete the training. We also observed dropout between courses, which could be further attributable to the roughly 1-month lag between completing Foundational Skills and starting Behavioral Activation, thereby suggesting that participants who went on to complete both courses were likely highly motivated and interested in the content, and therefore, may have expressed more positive feedback about the training. This highlights an important area for our team to consider as we seek to roll out the training, as the time required will likely emerge as a barrier to implementation. Frontline non-specialist providers generally have limited time availability and already experience heavy workloads and without additional compensation or being provided adequate time in their workplace, there will be challenges to integrating this training in health systems in the US and elsewhere.

We emphasized the importance of capturing user feedback about the usability of the training, making sure to recruit a combination of non-specialist and specialist providers. However, we recognize that non-specialist providers were not engaged in the early stages of scripting the content and reviewing the blueprint. This is partly because the content was adapted from the digital training developed and implemented in India, which had already undergone an extensive process of engaging community health workers in the development process, as well as time and funding constraints. To mitigate this limitation, we will pilot-test the training with a group of target non-specialist providers, and make additional modifications to the content as needed. One advantage of the digital platform is the flexibility to make modifications to the content and program layout. It is also important to note that while the training was adapted for use in a US context, our goal was to ensure that the training could be generalizable for use in as many settings as possible, meaning that the content is not tailored to specific cultural or demographic groups or specific geographic areas. For instance, an important future direction will be to evaluate the relevance of this training for use within rural settings and capture the perspectives of a wide range of non-specialist providers, which could cover nearly anyone without formal training in mental health care, about the relevance of this current program in their work. For example, in addition to community health workers, suitable participants for completing this training program could include members of congregations of churches, veteran serving organizations, college students, and ordinary people in community settings who may be ideally positioned to respond to the mental health needs of others.

Future directions

It is important to note that this course represents only an initial step as part of training non-specialist providers and that it would be unlikely that after completing such a course someone would be prepared to deliver care without first having a chance to practice these skills under the supervision of an experienced clinician. This is a critical observation, and it is essential to recognize that this is the first step in a broader journey towards achieving competence and ensuring progression from learning the content, to practicing the skills in real-world settings, and ultimately, mastering content and delivering high-quality care and improving patient outcomes (Patel et al., Reference Patel, Naslund, Wood, Patel, Chauvin, Agrawal, Bhan, Joshi, Amara and Kohrt2022). Access to ongoing supervision and opportunities to practice the newly learned content and skills must be considered a necessary extension to the current digital training program. For instance, newly trained non-specialist providers will need to apply what they have learned with support from experienced mental health clinicians, followed by independently seeing patients while engaging in routine supervision. Supervision of non-specialist providers in the delivery of psychosocial interventions, which can be completed remotely or in-person is essential to ensure quality, identify challenging cases for referral and offer additional opportunities for learning, further developing skills and knowledge and avoid the risk of burnout and exhaustion (Singla et al., Reference Singla, Ratjen, Krishna, Fuhr and Patel2020; Singla et al., Reference Singla, Fernandes, Savel, Shah, Agrawal, Bhan, Nadkarni, Sharma, Khan, Lahiri, Tugnawat, Lesh, Patel and Naslund2024). Future studies are needed to examine such questions by linking learner milestones, engagement and knowledge scores to clinical competencies evidenced in performance metrics (e.g., role-play-based supervision and real-patient interactions) and care metrics (e.g., patient outcomes) (Singla et al., Reference Singla, Nino, Zibaman, Andrejek, Hossain, Cohen, Dalfen, Dennis, Kim and La Porte2023).

There are additional critical steps to build on this formative research, beginning with the need to ensure that the training program is effective and can support the development of skills and achieving competency, which could be assessed using a standardized approach such as EQUIP (Kohrt et al., Reference Kohrt, Pedersen, Schafer, Carswell, Rupp, Jordans, West, Akellot, Collins and Contreras2025). With widespread consensus that non-specialist providers, such as community health workers, are ideally positioned to reach underserved communities in the US experiencing significant mental health disparities (Barnett et al., Reference Barnett, Gonzalez, Miranda, Chavira and Lau2018a), including racial and ethnic minority groups (Barnett et al., Reference Barnett, Lau and Miranda2018b), there is a need to further explore the relevance of this training in these communities. For instance, given that this study was focused in Texas, a key next step will be considering the relevance of the training for reaching Hispanic communities near the US-Mexico border, and determining what additional cultural, linguistic and contextual adaptations to the training content are needed. Furthermore, rigorous evaluation of the costs required to deploy the training and to successfully support non-specialist providers with acquiring the skills and knowledge to deliver behavioral activation represents an essential future research direction. Understanding the cost implications will be critical for informing health systems and other key stakeholders about the resources required to build workforce capacity to address depression and to sustain these efforts in practice.

Open peer review

To view the open peer review materials for this article, please visit http://doi.org/10.1017/gmh.2025.5.

Data availability statement

All de-identified data in this study is available from the authors upon request.

Author contribution

JAN wrote the first draft, oversaw the project activities and made revisions to the manuscript based on feedback from co-authors. NC, ST, MA, SW, AP, and SR supported the project activities, data collection, analysis and interpretation and contributed to the writing and revision of the manuscript. KF, BM and KS led participant recruitment at the study sites, supported data collection and contributed to revising the manuscript. BR, KG and RB contributed to revising the manuscript. AK and VP supported the acquisition of funding for this work, provided oversight of the project activities, and contributed to revising the manuscript. KS provided oversight for the project activities and contributed to revising the manuscript. All authors approved the contents of the final manuscript and decided to submit for publication.

Financial support

This study was supported by grant funding from the Surgo Foundation, a gift from NM Impact Ltd., and the Lone Star Prize awarded by Lyda Hill Philanthropies. This project was also supported by a grant from the National Institute of Mental Health (U19MH113211–01). The funders played no role in the conduct of the study, analysis and interpretation of the findings and decision to publish.

Competing interest

The authors report no conflicts of interest.

Ethics statement

The institutional review boards at Harvard Medical School, Boston, Massachusetts and the Baylor Scott & White Health System, Dallas, Texas, approved all study procedures and human subjects activities in this project.