1 Introduction

Making accurate estimates of how long others will take to complete a task is important for effective decision making across a variety of settings. For example, a major focus of the foundational work in organizational behavior and management was to precisely define and time the steps in industrial processes (e.g., Lowry, Maynard & Stegemerten, 1940). This approach was feasible for the manufacturing assembly line but is difficult to implement in today’s workplace, where no two tasks might be the same, and completing each task may require different skills. Thus, traditional measurement approaches are often infeasible in modern workplaces, and managers must instead rely on judgment-based estimates of project completion time as the primary inputs for planning and resource allocation decisions (Halkjelsvik & Jørgensen, 2012).

People making completion time judgments often do so based on limited objective information, and therefore their estimates are potentially vulnerable to contextual influences. In this paper, we investigate how completion time estimates for other people, a crucial input into planning, budgeting, and resource-allocation decisions, are influenced by externally imposed time limits on the task. Across our studies, people estimated more time to complete a task when the time limit was longer. While estimating longer completion times for longer deadlines can constitute a normative inference in some settings, we find that judgments of the time that others will take are overly sensitive to time limits, even when the time limits are non-informative and irrelevant, contributing to inaccurate estimates. For example, we find that the effect persists even when the time limits are determined at random (and so could not provide information) or are not known by the other person completing the task (and so could not affect motivation or completion times).

The effect of deadlines that we report in this paper is extremely robust and may be driven by more than one psychological mechanism. We propose and isolate a novel scope perception account, which explains the observed behavior and is distinct from other mechanisms. In particular, our scope perception account predicts that longer time limits increase the subjective scope of the work. This cognition-based account makes predictions distinct from those of a motivation-based account, implied by prior research, in which managers believe that shorter (longer) time limits increase (decrease) workers’ pace. We also distinguish scope perception from other potential decision heuristics, including anchoring, truncation of estimates by available time, and differences in anticipated work quality. We test the time limit bias and the scope perception account in five studies (as well as in seven additional studies reported in the Online Supplemental Studies Appendix), using lay subjects and experienced managers, familiar and novel tasks, hypothetical scenarios, and consequential settings.

2 Predictions of task completion time

Making duration judgments of prospective, non-experienced events, such as predicting a task completion time, is a particularly difficult task. A large body of work has shown that duration judgments of non-experienced events are susceptible to contextual factors and are therefore often biased (see Roy, Christenfeld & McKenzie, 2005, and Halkjelsvik & Jørgensen, 2012, for reviews). For example, prospective duration judgments of non-experienced events have been found to be affected by many factors, including arousal and vividness (Ahn, Liu & Soman, 2009; Caruso, Gilbert & Wilson, 2008), details of task description (Kruger & Evans, 2004), valence of the pending outcome (Bilgin & LeBoeuf, 2010), completion motivation (Buehler, Griffin & MacDonald, 1997; Byram, 1997), measurement units (LeBoeuf & Shafir, 2009), and perceived contraction of objective duration (Zauberman, Kim, Malkoc & Bettman, 2009).

Furthermore, even prior experience with the task might not improve duration judgments. Retrospective duration judgments are often biased by contextual factors, including the interval between the occurrence of the event and the time of judgment (Neter, 1970), and the availability of cognitive cues and resources (Block, 1992; Zauberman, Levav, Diehl & Bhargave, 2010). These biases result in inaccurate recall and make it difficult for decision-makers to learn from (Meyvis, Ratner & Levav, 2010) and utilize (Buehler, Griffin & Ross, 1994; Gruschke & Jørgensen, 2008) prior experience. Consequently, experienced decision-makers might perform no better than novices when making completion time estimates. The inherent subjectivity of time estimation can also make decision-makers overconfident (Klayman, Soll, González-Vallejo & Barlas, 1999) and can bias their estimates even when they are deciding on a familiar task (Jørgensen, Teigen & Moløkken, 2004).

The malleability of prospective judgments and the difficulty of learning from prior experience make time estimation susceptible to the use of judgment heuristics and sensitive to salient characteristics of the decision environment. Indeed, research has shown that decision-makers tend to rely on a more heuristic-based approach when they are thinking about expending resources in the form of time (vs. money; Saini & Monga, 2008). Could time limits, which are a salient and pervasive characteristic of many decision environments, trigger heuristic thinking and affect estimates of task completion times?

A decision-maker’s estimates of task completion times for the self and for others could be affected by very different psychological processes. In particular, prior literature has suggested that estimates of one’s own completion time are often explained by motives and biases specific to reasoning about the self, such as presentation motives (Leary, 1996), attributional motives (Jones & Nisbett, 1971), motivated reasoning (Kunda, 1990), and strategic behavior (König, 2005; Thomas & Handley, 2008; Locke, Latham, Smith, Wood & Bandura, 1990). The key unanswered question we investigate is whether time limits also influence estimates for other people, where these egocentric motives might be weaker or even non-existent.

3 Predictions of task completion time for others under external time limits

Several factors can influence how a person’s predictions of other people’s task completion times may vary under different external time limits. First, external time limits can reveal relevant information. When informed people use their knowledge to set a reasonable time limit, taking into account the work that needs to be done, the time limit itself can be a valid signal of the scope of the work. For example, the time limit could reflect either the upper bound on how long the task would be allowed to take or the limit-setters’ knowledge about how long it typically does take. Therefore, external time limits might exert a normative influence on people’s completion time estimates in some settings.

However, even in settings where time limits are potentially informative, the sources of the time limit and the associated goals in setting the limit may vary. For example, time limits may reflect the needs or requirements of a client or third party. In particular, a manager may face deadlines dictated by a client that either requires the work to be done faster than usual or allows the work to take significantly longer than necessary. As a result, time limits can differ from typical completion times. Therefore, the informativeness of the time limits might be particularly difficult to assess via intuitive judgment in those complex work environments where no two situations are exactly alike and the time limit is typically the result of a complex multi-party negotiation.

Second, people may base their estimates on their beliefs about the effects of time limits on others’ motivation. For example, people may believe that others will work more slowly when more time is available to them (e.g., “Parkinson’s Law,” Parkinson, 1955). Some lab studies have found evidence that people may, in fact, take more time to complete a task when the time limit is experimentally manipulated to be longer (Aronson & Landy, 1967; Brannon, Hershberger & Brock, 1999; Bryan & Locke, 1967; Jørgensen & Sjøberg, 2001, as reported in Halkjelsvik & Jørgensen, 2012), though the necessary conditions for this effect are not well understood. Although not tested in this literature, beliefs about others taking more time than needed might be stronger when wages are paid based on the time taken. In such settings, decision-makers might expect that others will “slack,” i.e., strategically take longer so as to earn higher total wages, particularly when the external deadlines are longer (Goswami & Urminsky, 2020).

Relatedly, people could also incorporate into their estimates a variety of other lay beliefs about how time limits affect others’ motivation. For example, the goal-gradient tendency for people to work more slowly earlier in the process, when task completion goals are farther away (Gjesme, 1975; Hull, 1932; Kivetz, Urminsky & Zheng, 2006), may be exacerbated by longer deadlines. Conversely, a shorter deadline might prompt people to work at a faster pace. A longer deadline can also lead to more procrastination, rather than more time spent on the task itself (Ariely & Wertenbroch, 2002), leading to longer overall completion times.

Third, people could also be inadvertently influenced by time limits and anchor on the deadlines, even when the deadlines are not informative (e.g., when they are told that the deadlines are random). Alternatively, people could deliberately truncate or censor their beliefs about the distribution of completion times when generating estimates.

Fourth, it is possible that external time limits will not affect people’s estimates at all, beyond providing an upper bound on the possible time others could take. This would be consistent with prior research in other domains that has demonstrated insensitivity to important cues, particularly when they are evaluated in isolation, without a frame of reference (Hsee, Loewenstein, Blount & Bazerman, 1999; Hsee, 1996).

Next, we introduce a novel additional possibility – the scope perception bias – based on an overgeneralized inference, which makes unique predictions about the effect of external time limits on judgments of others’ performance.

4 Scope Perception Bias

We hypothesize that people may judge the time taken to complete a task based on their perceived scope of work, and their judgments of scope may, in turn, be influenced by time limits. A longer external time limit might lead people to infer a task that is larger in scope, and accordingly, estimate it to have a longer completion time.

We propose that this “Scope Perception” effect of deadlines could be an over-learned response. People learn from their experience that “bigger” tasks often have “longer” deadlines. This directional relationship between time limits and task scope is so prevalent that people might then over-generalize it to a bidirectional association. When this happens, longer external time limits might spontaneously make beliefs about a bigger task scope salient. Longer time limits could also bring to mind aspects of task scope such as greater complexity, difficulty, number of intermediate steps required to complete the task, effort required, thoroughness, and so on. Such inferences, in turn, could result in a higher estimate of task completion time when time limits are longer.

Extant research has suggested that over-learned responses are indeed quite common in everyday judgments. For example, decision-makers have been found to infer physical distance from clarity (Brunswik, 1943), frequency of occurrence from valuation (Dai, Wertenbroch & Brendl, 2008), and the perceived value of a service from the time it took to provide that service (Yeung & Soman, 2007). When judging an attribute that is less accessible and about which they have less information (e.g., physical distance, frequency of occurrence, value of a service, task completion time), decision-makers might automatically substitute a more salient attribute for the less accessible one (Kahneman & Frederick, 2002).

Thus, irrespective of whether the time limit is normatively informative, people may more readily imagine a scope of work consistent with the time limit, based on an over-learned association between time limit and amount of work. To test this, in some studies we construct experimental situations in which the decision-maker knows that the time limit is uninformative about the task (e.g., because it is determined by a coin flip or by other non-informative external factors). We take this approach in order to identify any bias in predictions of others’ task completion times that results from longer or shorter time limits. However, we expect the psychological process we discuss here to also occur, along with more straightforward inferential processes, in situations where time limits could be diagnostic.

In order to provide a strong test of the scope perception hypothesis, we investigate whether time limits affect estimates even in the extreme case when workers are themselves unaware of the time limit while completing the task, and the time limits are known only to the decision-maker. According to our proposed account, time limits would activate an over-learned association in the decision-maker’s mind, even in this special case when the decision-maker is aware that such time limits cannot have a causal effect on workers’ motivation or pace of work. We predict that decision-makers would still be influenced by the time limits, even in this case.

We also distinguish scope perception from a standard anchoring-and-adjustment process. Standard anchoring involves anchor-consistent information coming to mind more readily (Chapman & Johnson, 1994; Strack & Mussweiler, 1997), along with subsequent adjustment (Simmons, LeBoeuf & Nelson, 2010). Therefore, if decision-makers indeed anchor on the external time limits and insufficiently adjust from these limits in generating their estimates, the estimates should be clustered around the anchors (Sussman & Bartels, 2018). Our proposed scope perception account, in contrast, is based on general inferences about task length and does not predict that responses will be clustered around the time limit. We will use this approach in Study 1, directly comparing the effect of time limits to the effect of anchors, to test whether people’s estimates are better described by the scope perception account or by a standard anchoring process. Finally, we will directly test the scope perception account both by measuring the perceived scope of work and by manipulating people’s beliefs about the strength of the underlying association between time limits and scope.
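To make the clustering prediction concrete, the Python sketch below computes one simple clustering index: the share of estimates falling within a fixed tolerance of the anchor or time-limit value. The function name `share_near_anchor`, the 1-minute tolerance, and both lists of estimates are hypothetical illustrations; this is not the measure used by Sussman and Bartels (2018) or in our analyses.

```python
def share_near_anchor(estimates, anchor, tolerance=1.0):
    """Return the fraction of estimates within +/- tolerance (minutes) of anchor.

    Illustrative clustering index only; the tolerance is an arbitrary choice.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    near = sum(1 for e in estimates if abs(e - anchor) <= tolerance)
    return near / len(estimates)


# Hypothetical estimates (minutes) for a 15-minute anchor / time limit.
anchoring_like = [14.0, 15.0, 15.0, 16.0, 12.0, 15.0]  # bunched at the anchor
scope_like = [5.0, 6.0, 8.0, 7.0, 4.0, 9.0]            # shifted, not bunched

print(share_near_anchor(anchoring_like, 15.0))  # 5 of 6 within 1 minute
print(share_near_anchor(scope_like, 15.0))      # none within 1 minute
```

An anchoring-and-adjustment process would be expected to produce a high value of such an index, whereas the scope perception account allows estimates to shift with the time limit without bunching at it.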

The rest of the paper is organized as follows. We first measure the accuracy of judges’ estimates by comparing consequential judgments to actual time taken by real workers to complete a task under a time limit, and contrast the results with predictions of alternative accounts, including anchoring (Study 1). In pre-registered Study 2, we use a hypothetical scenario to test the robustness of the scope perception effect to workers’ awareness of the time limit and test a competing motivational-belief account. In pre-registered Study 3, we generalize the findings to situations when managers make multiple estimates and we provide evidence that perceived scope mediates the effect of deadlines on completion time judgments. Next, we further test the proposed scope perception mechanism by manipulating the underlying association between time limits and scope (Study 4). We examine the generalizability of our findings in a field survey with experienced managers (Study 5).

5 Study 1: The joint effect of time limits on workers’ times and judges’ estimates

5.1 Method

In Phase 1, participants in a research laboratory (n=116) were assigned the role of workers and were all asked to solve the same digital jigsaw puzzle. Each worker was randomly assigned to one of three between-subject time limit conditions: either having unlimited time, 5 minutes (shorter time limit), or 15 minutes (longer time limit) to complete the puzzle. The times were chosen based on pre-testing, which indicated that nearly all workers were able to solve the puzzle in 5 minutes.

The workers were paid a flat fee of $3 in all three conditions, regardless of how long they took to solve the puzzle. They were informed about their compensation and time limit before starting the timed puzzle. To make sure that participants did not think that they might have to keep waiting until their assigned time limit was up even after solving the puzzle, they were explicitly told that they could either move on to participate in another study or leave the lab after they completed the puzzle. Therefore, the design precluded any perverse incentives workers might have had to delay task completion (e.g., in order to earn more from the jigsaw puzzle task).

The jigsaw puzzle was administered using a computer interface from the online puzzle site jigzone.com. The interface showed a timer which started counting immediately after the first piece was moved, and which stopped and continued displaying the final time once all the pieces were in place. All participants solved the same puzzle, but each participant started off with a different random arrangement of the puzzle pieces. As participants moved the puzzle pieces on the screen, the pieces snapped together whenever two pieces fit. As a result, it was not possible to solve the puzzle incorrectly, and quality of outcome was held constant across workers. After each worker finished the puzzle, the completion time was recorded from the digital interface by a research assistant.

In Phase 2, a separate sample of online participants (n=602) was assigned the role of judges and provided with detailed information about Phase 1, including two pictures of the puzzle that the workers had solved. The first picture showed a typical initial layout with the pieces randomly spread out, and the second showed the way the puzzle looked when it had been solved. All judges were told that each worker was randomly assigned to have no restriction on the maximum time they could take, a maximum time of 5 minutes (the shorter time limit), or a maximum time of 15 minutes (the longer time limit) to solve the puzzle. Judges were further informed that all of the workers were paid a flat fee of $3 for their work, and the materials emphasized that workers could not choose or influence their time limit. Given that workers’ wages were fixed and the same under all the time limits, the possibility that workers would intentionally work slower to earn more was not a relevant factor in the judges’ decisions.

Judges were told that they would be answering a few questions about estimating the time it took people to finish solving the puzzle, and were randomly assigned to a 2 (shorter vs. longer) × 2 (time limit vs. anchor) design. In the time limit conditions, judges were asked to predict the task completion time for an average worker either under the 5-minute time limit (shorter time-limit condition) or under the 15-minute time limit (longer time-limit condition). After making their estimate, judges in the time-limit conditions were asked to also make an estimate based on the other time limit, on a separate screen. Judges also estimated what percentage of workers in each condition solved the puzzle in 3 minutes or less, to examine the distributional beliefs about task completion times.

Judges in the anchoring conditions were instead asked to consider a typical worker for whom there was no maximum time and answered whether they believed the worker would spend more or less than either 5 minutes (low anchor condition) or 15 minutes (high anchor condition) working on the puzzle. The same judges were then asked to predict the task completion time of an average worker with no restriction on the maximum time.

Judges in all conditions were told that they could earn a bonus of up to $1 based on how accurately they predicted the task completion time. Specifically, for every minute their estimate deviated from the actual average time in their time limit condition, 10 cents were deducted from the maximum bonus amount (i.e., a linear incentive for accuracy). After judges made their estimates, they were asked a few follow-up questions about their beliefs about the completion time, their assessment about workers’ feelings while they were solving the puzzle, and their familiarity with jigsaw puzzles.
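The linear accuracy incentive can be written as bonus = max(0, $1.00 − $0.10 × |estimate − actual mean|). The Python sketch below assumes two details that the description leaves open: that fractional minutes are penalized proportionally and that the bonus cannot fall below zero. The function and parameter names are our own.

```python
def accuracy_bonus(estimate_min, actual_mean_min,
                   max_bonus=1.00, penalty_per_min=0.10):
    """Linear accuracy bonus in dollars.

    Assumptions (not stated in the study description): fractional minutes are
    penalized proportionally, and the bonus is floored at zero.
    """
    deviation = abs(estimate_min - actual_mean_min)
    return max(0.0, max_bonus - penalty_per_min * deviation)


# An estimate of 3.49 minutes when the actual mean was 2.24 minutes (1.25 min off)
print(f"${accuracy_bonus(3.49, 2.24):.3f}")   # $0.875
# Estimates more than 10 minutes off earn nothing under the zero floor
print(accuracy_bonus(20.0, 2.24))             # 0.0
```

Under this scheme, every minute of error costs the same amount, so a judge maximizing expected bonus should report the median of their belief distribution rather than the mean.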

5.2 Results

Workers’ Completion Times. All workers solved the puzzle within the allotted time. On average, workers took a similar length of time (in minutes) to solve the puzzle in all three conditions (M Shorter=2.24; M Longer=2.75; M Unlimited Time=2.23; F(2,113)=1.92, p=.153). Post-hoc tests indicated that workers completed the task directionally faster under the shorter time limit and with unlimited time than under the longer time limit (M Shorter vs. M Longer: p=.118; M Shorter vs. M Unlimited: p=.988; M Longer vs. M Unlimited: p=.148). Therefore, even when time limits were three times longer (compared to the 5-minute limit) or absent altogether, the actual time taken by the workers was not greatly affected by the time limit. Given that workers in all conditions earned a flat fee of $3 irrespective of how much time it took them to solve the puzzle, they arguably did not face meaningfully different incentives for working at different speeds across the conditions.

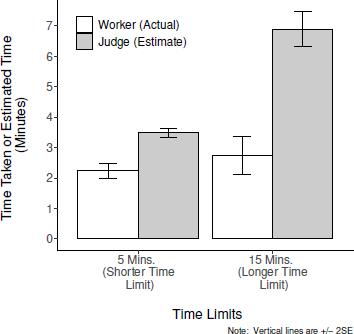

Judges’ Time Estimates. Judges’ first estimates in the time limit conditions did not accurately predict the workers’ times. Judges’ average estimates were significantly higher than the actual workers’ times in each of the two time limit conditions (Shorter Time: M Judges=3.49 vs. M Workers=2.24, t(187)=7.86, p<.001; Longer Time: M Judges=6.89 vs. M Workers=2.75, t(191)=6.86, p<.001). More importantly, the over-prediction increased with longer time limits (interaction F(1, 378)=21.61, p<.001; see Figure 1).
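As a descriptive check on the size of this interaction, the Python sketch below computes over-prediction (judges’ mean minus workers’ mean) in each time limit condition from the cell means reported above, and then the difference between the two. This difference-of-differences only restates the reported means; it does not reproduce the F-test, which requires the individual-level data.

```python
# Cell means in minutes, as reported for Study 1
judge_means = {"shorter": 3.49, "longer": 6.89}
worker_means = {"shorter": 2.24, "longer": 2.75}


def overprediction(condition):
    """Judges' mean estimate minus workers' mean actual time (minutes)."""
    return judge_means[condition] - worker_means[condition]


gap_shorter = overprediction("shorter")   # 1.25 minutes
gap_longer = overprediction("longer")     # 4.14 minutes
interaction = gap_longer - gap_shorter    # 2.89 minutes

print(f"over-prediction, 5-minute limit:  {gap_shorter:.2f} min")
print(f"over-prediction, 15-minute limit: {gap_longer:.2f} min")
print(f"difference of differences:        {interaction:.2f} min")
```

In these terms, judges’ mean over-prediction under the 15-minute limit was roughly 3.3 times as large as under the 5-minute limit.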

Figure 1: Time taken by workers and time estimated by judges as a function of time limits (Study 1).

Could these observed results be explained by the judges anchoring on the time limits and insufficiently adjusting? In the anchoring conditions, judges’ estimated task completion times fell both below and above the anchor value (35% and 7% of judges gave estimates higher than the anchor in the 5-minute and 15-minute conditions, respectively), suggesting that the anchoring manipulation was successful. In contrast with estimates in the time limit conditions, estimates in the anchoring conditions were clustered closer to the anchor value (consistent with insufficient adjustment) and were therefore higher, on average, than the estimates in the time limit conditions (M Anchoring=8.49 vs. M TimeLimit=5.22, t(600)=2.42, p=.016). Specifically, estimates in the shorter anchor condition were significantly higher than in the equivalent shorter time limit condition (M Shorter, Anchoring=9.07 vs. M Shorter=3.49, t(300)=2.12, p=.035). A similar, marginally significant difference was observed when comparing the longer anchoring condition and the corresponding time limit condition (M Longer, Anchoring=7.87 vs. M Longer=6.89, t(298)=1.84, p=.066). These results suggest that the way time limits affect people’s estimates is distinct from a standard anchoring-and-adjustment process, and that a different psychological mechanism accounts for the observed findings in the time limit conditions.

5.3 Discussion

The results of Study 1 provide an initial demonstration of a time limit bias: people estimate more time for others to complete a task when there is a longer time limit, compared to a shorter time limit, even when actual completion times do not substantially differ. We find similar but directionally weaker results within-subjects (see Online Appendix C), suggesting that judges updated their beliefs based on a new time limit. This basic effect of external time limits on completion time estimates is highly robust, and we have consistently replicated it (e.g., see also Studies S1 and S2 in Online Appendix D). Furthermore, the evidence suggests that standard anchoring on the numerical amounts used in the time limits yields different estimates and, therefore, cannot fully explain the observed time limit bias. We further rule out the role of incidental anchors in Study S3 in Online Appendix D.

5.3.1 Other Alternative Accounts

As noted, estimating more time based on a longer time limit can sometimes be a valid inference. This is not the case in Study 1, however, where judges were informed that the workers’ time limits were assigned at random. A follow-up question confirmed that nearly all the judges (96%) correctly recalled that workers were randomly assigned to the different time limits (rather than on the basis of matching workers to time limits based on their proficiency or skill, for example) in all the conditions. The difference in the judges’ estimates between the two time-limit conditions remains even after excluding the judges who failed this comprehension test.

This bias does not seem to be attributable to judges’ lack of attention, lack of relevant experience with jigsaw puzzles, or beliefs about workers’ motivation. In Phase 2, the amount of time judges took to read the instructions did not moderate the effect of time limits on judges’ estimates. The results did not differ significantly based on judges’ self-reported experience with jigsaw puzzles, although in this study, judges with higher self-reported knowledge of jigsaw puzzles showed marginally lower sensitivity to time limits (p=.068). Judges’ beliefs about differences across the time limit conditions, either in how accountable workers felt to finish the puzzle as soon as possible or in workers’ task goals (to finish quickly or to take longer and enjoy it), likewise could not explain the difference in estimates.

An important alternative explanation of the observed results is that judges hold a belief that people work more slowly when they have more time (“Parkinson’s Law”). Although this might be a reasonable heuristic in some situations, Study 1 suggests that, at a minimum, judges in this situation were over-applying the heuristic, since the time limit did not substantially affect the actual time spent by the workers. Furthermore, if judges based their estimates on a lay theory about different rates of work under different time limits, it would have to be a lay theory that did not fully account for the workers’ incentive to complete the puzzle quickly, regardless of the time limit, given the fixed payment and outside option of completing other studies.

To further test this possibility, we asked the judges about their general beliefs regarding whether people work at a slower pace, a faster pace, or the same pace when more time is available. The majority (63%) of judges stated that people take more time when more time is available. However, whether or not a judge expressed this belief in Parkinson’s Law made no difference to the effect of time limits on their completion time estimates (interaction of time limit condition and measured beliefs, p=.710). This suggests that beliefs about differences in rates of work due to time limits do not explain the results.

Another potential explanation of our results is that judges in both the shorter and longer time limit conditions had the same underlying distribution of completion times in mind, but simply truncated all the higher estimates to the deadline when the time limit was shorter. To test this “truncation account” (Huttenlocher, Hedges & Bradburn, 1990), we compared the proportion of workers that judges expected to finish in up to 3 minutes in the longer vs. shorter time limit conditions. Because 3 minutes is below both deadlines, truncating estimates at the deadline would leave this proportion unchanged, so under the truncation account the two proportions should be the same. However, judges estimated that significantly fewer workers would complete the puzzle in up to 3 minutes in the longer time limit condition than in the shorter time limit condition (M Shorter=51% vs. M Longer=34%, t(301)=5.28, p<.001), suggesting that they had very different distributions of task completion times in mind for the two time limit conditions.
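The logic of this test can be illustrated with a small simulation. If judges held a single believed distribution and simply capped every estimate at the deadline, only values above the deadline would change, so the share of estimates at or below 3 minutes would be identical under the 5- and 15-minute limits. The Python sketch below assumes a hypothetical believed distribution; the helper names are our own.

```python
def truncate(times, limit):
    """Cap each believed completion time at the deadline (truncation account)."""
    return [min(t, limit) for t in times]


def share_at_most(times, cutoff):
    """Fraction of completion times at or below cutoff (minutes)."""
    return sum(1 for t in times if t <= cutoff) / len(times)


# One hypothetical believed distribution of unconstrained completion times
believed = [1.5, 2.0, 2.5, 3.0, 4.0, 6.0, 8.0, 12.0, 18.0, 25.0]

short_limit = truncate(believed, 5.0)
long_limit = truncate(believed, 15.0)

print(share_at_most(short_limit, 3.0))  # 0.4
print(share_at_most(long_limit, 3.0))   # 0.4, unchanged by truncation
```

Whatever believed distribution is used, truncation cannot produce the 51% vs. 34% gap that judges reported, because the cutoff lies below both deadlines.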

A separate distributional-heuristic account is that judges may have started with the same believed distribution of unlimited times in all conditions, but eliminated from consideration all times greater than the time limit, possibly coding them as failures to complete the task and therefore not qualifying for inclusion. In Study S4 (see Online Appendix D), we investigate this “censoring account” by having judges explicitly indicate the proportion of workers in each of a set of time ranges, including the proportion they believed did not complete the task within the assigned time limit. Computing the estimated completion time as a weighted average of the midpoints of the time bins, using the reported proportions as weights, we replicate the results of Study 1. This result suggests that censoring accounts cannot explain our findings.
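The estimated completion time in Study S4 is a weighted average of bin midpoints, with the reported proportions as weights. The Python sketch below implements that computation for a hypothetical set of bins under a 5-minute limit. How the “did not complete” category enters the calculation is not specified here; for illustration only, we assume it is excluded and the remaining proportions are renormalized to sum to one. The bins and proportions are invented.

```python
def weighted_mean_completion(bins):
    """Weighted average of bin midpoints.

    bins: iterable of (lower_min, upper_min, proportion) for workers judged to
    have finished. Proportions are renormalized, so they need not sum to 1
    (e.g., after excluding a 'did not complete' share).
    """
    bins = list(bins)
    total = sum(p for _, _, p in bins)
    if total <= 0:
        raise ValueError("proportions must sum to a positive value")
    return sum((lo + hi) / 2 * p for lo, hi, p in bins) / total


# Hypothetical judge response for the 5-minute limit; the remaining 0.05
# was assigned to 'did not complete' and is left out here (our assumption).
finished_bins = [
    (0, 1, 0.10),
    (1, 2, 0.30),
    (2, 3, 0.30),
    (3, 4, 0.15),
    (4, 5, 0.10),
]

print(f"{weighted_mean_completion(finished_bins):.2f} minutes")
```

Treating the non-completion share differently (for example, assigning it a value at or above the deadline) would change the estimate, so this sketch should not be read as the exact computation used in Study S4.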

Why did the time limits bias judges’ estimates? Most judges understood the instructions and knew that workers were randomly assigned to different time limits, and therefore that time limits did not have any informational value. However, it is still possible that judges, acting as amateur psychologists, were trying to predict the impact of the time limits on workers’ behavior. When workers had more time to complete the job, they could have procrastinated by daydreaming or could have simply worked at a slower pace (although doing so would be non-optimal given the wage scheme). This might result in workers taking more time under longer deadlines. In the next study, we directly test this account by eliciting judges’ estimates in a hypothetical scenario using the extreme case of a non-influential time limit: one that workers are actually unaware of and that therefore could not affect their motivation.

6 Study 2: Estimating completion times with irrelevant time limits

6.1 Method

We ran a pre-registered study (http://aspredicted.org/blind.php?x=kv2g7m) in which online participants (n=358) played the role of judges and estimated the time to complete a hypothetical jigsaw puzzle. Judges were assigned to one of three between-subjects conditions: unlimited time, shorter (30 minutes) time limit or longer (45 minutes) time limit. Judges read a hypothetical scenario, in which a 100-piece jigsaw puzzle was administered to an initial group of people, and every person took less than 28 minutes to solve the puzzle (see Online Appendix A), with no time limit mentioned.

In the scenario, judges were told that the same puzzle was then administered to a new worker from the same population. This worker either had unlimited time or had been randomly assigned, based on a coin flip, to one of two timed conditions: a maximum time of 30 minutes (shorter time) or a maximum time of 45 minutes (longer time). By telling judges that similar workers had all completed the task within both of the time limits used in the study, we controlled for accounts based on beliefs that workers would have difficulty completing the puzzle in the time available (such as truncation or censoring). However, because we told judges only the maximum time taken by prior workers, judges still needed to make inferences about project scope to estimate the average time workers took.

In this study, the scenario was designed so that the time limits logically could not affect the worker’s behavior. Specifically, judges in the time limit conditions read that the worker did not know about the time limit and had simply been instructed to complete the work at his or her own pace. Judges were then asked to estimate the average task completion time. If the time limit bias persists in this scenario, it cannot be explained by judges taking into account the effects of giving time limits to workers (e.g., revealing private information to workers or shifting their motivation).

Of course, the ability of this study to rule out these alternative explanations depends on judges having read and understood these key details in the instructions. To address whether the results could be explained by judges failing to understand that the deadlines in the study were arbitrary and irrelevant, we asked judges a battery of six comprehension check questions at the end of the survey (Online Appendix A). In particular, judges were asked to recall the two external time limits used in the study, whether workers under both time limits worked on the same jigsaw puzzle, whether workers were randomly assigned to one of the two time limits, the number of pieces in the jigsaw puzzle, the maximum time taken by a similar group of workers who had attempted the same puzzle in the past, and, finally, whether the current group of workers believed that they had unlimited time to work on the puzzle. We test the robustness of the results by restricting the analysis to judges who passed this comprehensive set of checks.

6.2 Results

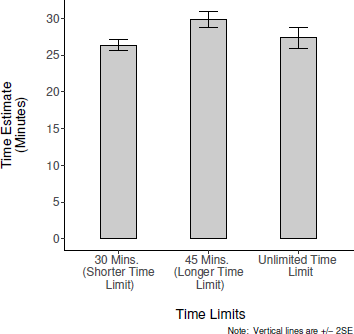

An omnibus test revealed that the estimates differed significantly across the three conditions (F(2,355)=9.40, p<.001).Footnote 6 In the unlimited time (control) condition, judges estimated an average completion time of 27.35 minutes. In the irrelevant time limit conditions, judges estimated a significantly higher task completion time when the time limit was longer (M Shorter=26.36 minutes vs. M Longer=29.84, t(232)=5.28, p < .001; see Figure 2).

Figure 2: Estimated time for task completion in different irrelevant time limit conditions (Study 2)

We checked whether the results could be explained by judges failing to register the details of the situation, particularly the fact that the worker did not know about any time limit. We repeated the analysis using only the judges who passed all six comprehension checks at the end of the survey (n=198). This subset of judges with perfect recall also estimated a longer task completion time under the longer deadline (M Shorter=27.05 minutes vs. M Longer=28.66, t(131)=3.74, p < .001).Footnote 7 This indicates that the results cannot be attributed to judges misunderstanding the instructions, a misunderstanding that could otherwise have made the time limits seem normatively informative.

6.3 Discussion

We once again replicated the effect of time limits on estimates, this time using an extreme case in which the time limits are completely irrelevant. We also replicated the effect of irrelevant time limits on planning in a completely different, consequential budgeting game conducted in a classroom setting (Study S5, Online Appendix D). The overestimation of task completion times under longer time limits, even in contexts where judges understood that workers could not be affected by external time limits (as shown by the effect holding among judges who passed the comprehension checks), suggests that the effect of time limits on estimates cannot be explained by beliefs about differences in workers’ rate of work, quality of work, the distribution of task completion times, or information signaling. In other words, the results are not an artifact of judges misunderstanding or failing to recall that the deadlines in the study were normatively uninformative.

Furthermore, because all judges in Study 2, regardless of condition, were exposed to the same timing information (both the shorter and longer time limits, along with the 28-minute maximum time taken by a prior group to solve the same puzzle), this study provides further evidence that the effect of deadlines cannot be explained even by incidental anchoring.

Why do even randomly determined time limits that are unknown to workers lead to differences in judgments about workers’ task completion times? In people’s experience, more effortful tasks often have longer time limits, and people may overgeneralize this relationship, perceiving a task with a longer time limit as having a larger scope of work even when the time limit is irrelevant. Such overlearned associations can become a decision heuristic that is triggered by relevant cues in the decision environment; for example, a time limit can trigger an associated sense of the scope of work. According to this scope perception account, the effect of time limits on estimated completion time is driven by this subjective perception of the work to be done, rather than by beliefs about how quickly workers are trying to work.

In the next study, we again test whether the effect of time limits on estimates persists when judges are told that workers do not know about the time limits. We also conduct a confirmatory test of the proposed causal role of perceived scope in completion time estimates by measuring perceived scope and using mediation analysis. Finally, we examine the generalizability of our findings by extending the test to a situation in which decision-makers make multiple estimates for different tasks of the same kind.

7 Study 3: Role of perceived task scope on completion time estimates

7.1 Method

Online participants (n=347) acting as judges took part in a pre-registered scenario study (https://aspredicted.org/blind.php?x=5u3fi9) in which they predicted the completion times for two jigsaw puzzles. Judges were briefed about jigsaw puzzles, the online interface used to administer the puzzles, and a sample of participants who had worked on puzzles in the past. Specifically, judges were told that every participant had worked on only one puzzle and that no participant took more than 30 minutes to solve any puzzle. Unlike in Study 2, in which judges were told nothing about how participants were paid, we explicitly told judges that all participants were paid a fixed fee for their work.

In the new scenario, a sample of participants drawn from the same population was asked to solve a jigsaw puzzle. Based on a coin toss, these workers were randomly assigned to one of two time-limit conditions: 35 minutes (shorter time limit) or 50 minutes (longer time limit). Judges were then randomly assigned to one of two levels of the experiment’s only between-subjects factor. Half of the judges were told that the participants knew about their assigned time limit before they started working. The other half were told that participants were not informed about the time limits, which existed only for administrative convenience: a worker, instructed to work at his or her own pace, who had not finished the puzzle when the “assigned” time limit expired was asked to stop working.

Judges answered a series of nine comprehension questions about the experimental setup before they could proceed to make their estimates (see Online Appendix A). The comprehension questions were presented on the same page as the instructions, so every judge could answer them correctly by attending to the information provided. Judges then estimated the completion time, in minutes, for two different jigsaw puzzles, one described with the shorter time limit and the other with the longer time limit. The specific puzzles and the order of time limits were counterbalanced.

After making their completion time estimates, judges answered two questions that captured their beliefs about the relative scope of work for the two puzzle tasks associated with different time limits. Specifically, they rated which puzzle had more pieces on a bipolar scale anchored on the two tasks, with a neutral midpoint. They also reported how much work they thought it would take to solve each puzzle, using two separate slider scales (endpoints labeled 1=a little work and 100=a lot of work). Judges then reported how interesting and enjoyable they thought participants might have found the two puzzles (using the bipolar scale described earlier). Finally, judges answered a few questions about their knowledge of jigsaw puzzles, demographic questions, and an attention check question.

7.2 Results

Given the repeated-measures design, we used hierarchical regression to analyze the results of this study. When time limits were known to the workers, as in Study 1, we replicated the effect even though judges made multiple estimates and were told that the time limits were arbitrary. In particular, judges estimated a longer completion time when the externally assigned time limit was longer (M Shorter=21.85 minutes vs. M Longer=24.59; β=2.75, t=5.21, p<.001).

Although the tasks were similar, judges encountered different jigsaw puzzles. This mimics real-world situations in which managers oversee projects that are similar in type but differ in their specific details. The robustness of the time limit bias to this within-subjects design suggests that the bias can persist even in contexts where the evaluability of time limits is high (e.g., where managers make estimates for different tasks with different deadlines).

Furthermore, replicating the results of Study 2, the effect persisted even when judges were told that workers did not know about the time limits (M Shorter=20.74 minutes vs. M Longer=22.91; β=2.17, t=4.29, p<.001). In fact, the magnitude of the time limit bias did not significantly differ depending on whether or not the workers knew about the time limits (interaction β=0.58, t=0.79, p=.428), further confirming that judges’ beliefs about how deadlines affect workers’ behavior were not responsible for our findings.Footnote 8 Given that there was no difference, the remaining analyses use the combined data.

Consistent with our hypothesis that longer deadlines increase the perceived scope of work, judges estimated that a task with a longer deadline would have significantly more pieces (M=+0.15; mid-point test vs. M=0: t(346)=2.46, p=.014). Furthermore, judges’ ratings of the number of pieces significantly mediated the effect of time limit on completion time estimates (bootstrapped 95% CI=[0.06, 0.38]; see Online Appendix C). On the slider scales, judges also believed that a task with a longer time limit would entail marginally more work to solve (M Longer=60.89 vs. M Shorter=59.13; β=1.77, t=1.83, p=.067), and these beliefs about task scope significantly mediated the effect of time limit on completion time estimates (bootstrapped 95% CI=[0.06, 0.57]). A composite z-score index of the overall perceived scope of work, computed from these two measures, partially mediated the effect of time limit on completion time estimates (bootstrapped 95% CI=[0.18, 0.69]). By contrast, time limits did not affect judges’ ratings of whether workers found a task more interesting and enjoyable (M=-0.009; mid-point test vs. M=0: t(346)=0.10, p=.920).

7.3 Discussion

Although judges were told that the time limits were arbitrarily determined by a coin toss, they were influenced by time limits when making repeated judgments for similar tasks. In particular, they estimated a significantly longer completion time for a task that was associated with a longer deadline.

As in Study 2, judges answered a battery of comprehension questions (nine in this study; see Online Appendix A), but here the questions were asked before judges made their estimates. All results held when we restricted the analysis to judges who passed all the comprehension questions (n=203; see Online Appendix C).Footnote 9 This indicates that the results are not driven by a subset of judges who misunderstood that the time limits were irrelevant and then made reasonable inferences from them.

Instead, the results are consistent with the scope perception account. In particular, a longer deadline led to perceptions of a larger scope of work, which in turn mediated the causal effect of time limits on completion time estimates. We replicated the effect of time limits on scope perception in Study S6 by having judges estimate the number of puzzle pieces as a measure of task scope. Furthermore, in Study S7 (Studies S6 and S7 are both reported in Online Appendix D), we show that the effect of time limits on both task scope (as measured by puzzle pieces) and estimated completion time is debiased when participants are given sufficiently detailed scope-relevant information: the full distribution of completion times in the absence of a time limit.

Although the findings are robust, the scope perception account points to a testable moderator of the time limit effect. If relatively longer time limits prompt the perception of a larger scope of work because of an overgeneralized association between available time and scope, weakening belief in that association may reduce the effect. In the next study, we test this prediction by either confirming (i.e., suggesting that task scope and time limits are related) or questioning (i.e., suggesting that task scope and time limits need not be related) judges’ overlearned beliefs about the association between available time and task scope before they made their estimates. This kind of belief manipulation has previously been used to investigate psychological mechanisms that rely on subjective beliefs and confidence (Briñol, Petty & Tormala, 2006; Ülkümen, Thomas & Morwitz, 2008).

8 Study 4: Time estimation with belief manipulation

8.1 Method

Online participants (n=317) played the role of judges in a 2 (time limit: shorter vs. longer) × 2 (lay belief: confirming vs. questioning) between-subjects experiment. As in Study 1, judges read about real workers who had completed a 20-piece jigsaw puzzle under a randomly assigned 5-minute or 15-minute time limit, and they saw pictures of the actual puzzle. They were also told that all workers, regardless of time limit, received a fixed payment of $3.00 for their work.

Judges were then informed that they would be asked to estimate the time it took workers to finish solving the puzzle. Before making their estimates, judges were presented with additional information, manipulated between-subjects. In the confirming-lay-belief condition, judges read:

Recent studies in industrial and organizational psychology indicate that tasks that are larger in scope usually have longer time limits. Tasks smaller in scope, on the other hand, usually have shorter time limits.

In the questioning-lay-belief condition, they read:

Recent studies in industrial and organizational psychology indicate that task scope might be unrelated to time limits. Therefore, tasks that are larger in scope need not have longer time limits, and vice-versa.

Judges then proceeded to make their estimates for a typical worker who had completed the puzzle with a maximum time of either 5 minutes or 15 minutes, manipulated between-subjects.

After indicating their estimates, judges answered five comprehension questions about the two time limits, how workers were assigned to a time limit, whether workers were paid differently depending on the assigned time limit, and whether workers could potentially earn more money by working longer (see Online Appendix A). Finally, they answered a few questions about their knowledge of jigsaw puzzles, demographic questions, and an attention check question.

8.2 Results

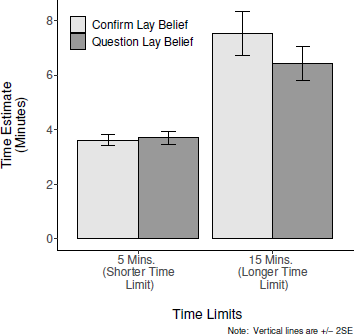

The experimental conditions affected judges’ estimates of task completion time (F(3, 313)=55.45, p<.001). The lay-belief manipulation had no discernible effect on judges’ estimates in the shorter time limit conditions (M Shorter, Question=3.70 vs. M Shorter, Confirm=3.62, t(155)<1, p=.624). However, in the longer time limit conditions, judges’ estimates were significantly lower in the questioning lay-belief condition than in the confirming lay-belief condition (M Longer, Question=6.43 vs. M Longer, Confirm=7.53, t(158)=2.21, p=.028). As a result, the sensitivity of time estimates to the time limit was weaker in the questioning condition (Δ=2.73, t(156)=7.38, p<.001) than in the confirming condition (Δ=3.90, t(157)=10.55, p<.001). The two-way interaction was significant (F(1, 313)=5.02, p=.025; see Figure 3), demonstrating that prompting judges to question the overgeneralized belief reduced (but did not eliminate) the time limit bias.
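The interaction reported above is a difference of differences: the effect of the longer time limit in the confirming condition minus its effect in the questioning condition. The short sketch below recomputes these contrasts from the rounded cell means reported above; the helper name `time_limit_effect` is hypothetical. Because the reported means are rounded, the simple effects can differ from the reported Δ values in the second decimal (e.g., 3.91 here versus 3.90), and the sketch does not reproduce the test statistics, which require the raw data.

```python
def time_limit_effect(means, belief):
    """Simple effect of the longer vs. shorter time limit within one belief condition."""
    return means[("longer", belief)] - means[("shorter", belief)]


# Cell means (minutes) as reported for Study 4.
cell_means = {
    ("shorter", "question"): 3.70,
    ("shorter", "confirm"): 3.62,
    ("longer", "question"): 6.43,
    ("longer", "confirm"): 7.53,
}

delta_question = time_limit_effect(cell_means, "question")
delta_confirm = time_limit_effect(cell_means, "confirm")
interaction = delta_confirm - delta_question  # difference of differences

print(round(delta_question, 2), round(delta_confirm, 2), round(interaction, 2))
# 2.73 3.91 1.18
```

A positive contrast means the time limit moved estimates more when judges’ belief in the time-scope association was confirmed than when it was questioned.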

Figure 3: Estimated time for task completion under different time limits when beliefs are manipulated (Study 4).

8.3 Discussion

The results of Study 4 provide further support for the scope perception account, demonstrating that beliefs about the association between time limits and task scope play a role in the effect of time limits on completion time estimates. When judges read information casting doubt on the association between time limits and task scope, the effect of time limits on estimates was reduced, specifically in the longer time limit condition. Note that the manipulation in this study was subtle, in that it merely prompted participants to doubt a potential link between task deadline and task scope (e.g., “might be unrelated,” “need not have”). Presumably, the manipulation weakened judges’ lay beliefs without necessarily eliminating them, particularly among participants who held strong beliefs before the manipulation. The results suggest that weakening the underlying association reduces the overgeneralized response and, with it, the bias in estimation due to time limits. This study therefore provides additional evidence that scope perception beliefs underlie the time limit bias observed in this paper.

Furthermore, the moderation by believed associations demonstrated in this study is incompatible with other alternative accounts. The results cannot be explained by anchoring and adjustment, which would predict an effect of similar size in both belief conditions. The difference across conditions is also inconsistent with the effect arising because participants did not read the instructions carefully. As an additional test, as in Studies 2 and 3, we examined the robustness of the results among the subset of judges who correctly answered a battery of five comprehension questions. The substantive conclusions remained unchanged among this subset (n=257; see Online Appendix C), further confirming that the results are not due to judges misunderstanding the instructions regarding the situation facing the workers.

Thus far, we have investigated the effect of time limits on estimates using a single setting (jigsaw puzzles) that provides strong experimental control and using a population that generally has limited experience in making completion time estimates for workers. In the final study, we generalize our findings to a population of experienced managers making estimates about a relatively familiar task in a more naturalistic setting.

9 Study 5: Scope perception bias among experienced managers

9.1 Method

In a field survey, 203 actual managers of small-to-medium businesses (under 100 employees) who were responsible for deciding their companies’ printing needs were recruited from a paid online panel to answer questions in a vignette study included in a larger marketing research survey. After completing survey questions about their use of office printing services, participants read a scenario in which they were asked to imagine that they had hired a third-party vendor to send out customized mailers as part of a direct-marketing campaign. The vendor would use its own list of potential customers and customize the mailers based on other information it had about those individuals. The scenario specified that, after the vendor finalized the list of people to target from its database, it usually took four weeks to customize the mailers before sending them out.

The study employed a 2 (longer vs. shorter time limit) × 2 (time estimate vs. scope estimate) design. Participants were randomly assigned to either the shorter (4 weeks) or longer (6 weeks) time limit conditions. In the longer time limit condition, the scenario further elaborated that they had just come across an industry report which suggested that direct mail was less effective during late summer, and they had therefore informed the vendor about delaying the mailing by two weeks so that the mailers instead went out in early fall. We reiterated that this was a last-minute decision, made after the list of potential target customers had already been finalized and that, because of this change, the vendor now had six weeks to customize the mailers before sending them out. This manipulation introduces a difference in deadlines between the two conditions while holding constant the project’s scope.

The managers were randomly assigned to estimate either the number of weeks it would take the vendor to prepare the customized mailers (time-estimate condition) or the number of mailers that would be prepared (scope-estimate condition; between-subjects). All participants then estimated the typical worker’s rate (the number of mailers prepared by a worker in a day). Estimates were elicited using an ordinal measure with six numerical ranges (see Online Appendix A for the instructions used in the study). The judges also indicated the number of prospective customers they thought were within the mailing area of the direct-marketing campaign, whether they had any prior experience with direct-marketing campaigns, and their zip code. We merged in a census-based estimate of population density for each participant’s zip code.

9.2 Results

An ordinal regression (used because responses were elicited on an ordered scale) showed that participants in the time-estimate condition (n=101) thought the vendor would take longer when the time limit in the scenario was longer (interpolated means in weeks: M Shorter=1.62 vs. M Longer=2.73; β=2.07, z=5.21, p <.001). This generalizes our prior finding of an effect of time limits on time estimates to a different setting and to experienced decision-makers. In particular, it demonstrates that a non-informative longer time limit leads experienced judges to expect that the same project, in a setting familiar to them, will take longer to complete.

We elicited beliefs about the rate of work in all the conditions by asking participants to estimate the number of mailers prepared per worker per day (unlike Study 3 where the rate was imputed). Participants’ estimated rate of work did not differ significantly between the two time limit conditions (M Shorter=398.00 vs. M Longer=313.72; β=0.36, z=1.01, p=.311). Furthermore, in a multivariate ordinal regression predicting project completion time, we find that longer time limits yielded significantly higher completion time estimates (β=2.11, z=5.26, p <.001) controlling for the effect of estimated rate (β=0.0004, z=0.859, p=.390).Footnote 10 This suggests that the differences in the managers’ time estimates under different deadline conditions cannot be explained by their belief that workers would work slower when more time was available.

To more directly test the scope of work account, the participants in the scope-estimate conditions (n=102) rated the total number of mailers they thought would be prepared by the vendor, which was not specified in the scenario. Interpolating within the ordered levels representing ranges, the average estimated number of mailers was approximately 12,000 when the time limit was shorter, compared with 17,000 when two additional weeks were available. In an ordinal regression, we find that the estimated amount of work (i.e., number of mailers) in the longer time limit condition was marginally higher than in the shorter time limit condition (β=0.69, z=1.82, p=.069).

As in the time-estimate condition, the reported rate of work did not differ significantly by time limit (M Shorter=464 vs. M Longer=356, z=0.99, p=.322). A multivariate ordinal regression reveals that the managers estimated a larger scope for the completed project (more mailers sent) when the time limit was longer (β=1.06, z=2.57, p=.010), controlling for the effect of estimated rate (β=0.002, z=4.53, p<.001). This suggests that the scope perception effect is distinct from the effect of beliefs that workers would adjust their pace to the available time. In addition, as expected, the effect of time limits on the reported rate of work did not differ between the time-estimate and scope-estimate conditions (interaction: β=0.025, z=0.051, p=.959).

Thirty-five percent of the participants reported that they had prior personal experience running direct-marketing campaigns, just like the one we had described in the experimental scenario. Personal experience with direct mail did not moderate any of the results (see Online Appendix C).

9.3 Discussion

In Study 5, using a field survey with experienced managers, we first replicated our previous finding that judges predict a longer task completion time when the deadline is longer, even when the change in deadline is due to completely incidental reasons. Furthermore, we find that a direct measure of the scope of work (i.e., the number of mailers sent) did vary with the time limit. Importantly, this effect was distinct from the effect of beliefs about how time limits affect the rate of work. Controlling for the measured rate of work, participants estimated a significantly larger amount of work when the deadline was longer, consistent with the scope perception account.

10 General Discussion

Across the five studies in this paper (along with seven additional studies in the Appendix), we consistently find that people estimate longer task completion times for others when more time is available to complete a task (meta-analytic d=1.247, see Online Appendix E), even when the time limits are irrelevant, contributing to overestimation. Judges’ estimates were overly influenced by time limits, relative to actual workers’ times, even when judges were paid for the accuracy of their estimates (Study 1). The effect of time limits persisted when judges were told that the time limits were not known to the workers (Study 2, pre-registered). This finding is inconsistent with a lay motivational theory, in which time estimates are based on beliefs about how time limits affect workers’ pace. Indeed, the bias in time estimation could not be explained by either imputed or directly elicited beliefs about the rate of work (Study 5). Our studies also rule out alternative accounts such as direct inferences from time limits, truncation, or censoring of the completion time distribution.
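Pooled effect sizes such as the meta-analytic d values reported in this section are commonly obtained by inverse-variance weighting. The sketch below shows a fixed-effect version using the standard large-sample variance of Cohen’s d for two independent groups. It is an illustration under assumptions, not the procedure in Online Appendix E, which may differ (for example, by using a random-effects model or by handling within-subjects studies differently); the helper names and the study inputs are hypothetical.

```python
import math


def d_variance(d, n1, n2):
    """Large-sample sampling variance of Cohen's d for two independent groups."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))


def fixed_effect_pooled_d(studies):
    """Inverse-variance weighted mean of d; studies are (d, n1, n2) tuples."""
    weights = [1 / d_variance(d, n1, n2) for d, n1, n2 in studies]
    pooled = sum(w * d for w, (d, _, _) in zip(weights, studies)) / sum(weights)
    standard_error = math.sqrt(1 / sum(weights))
    return pooled, standard_error


# Hypothetical inputs: three studies with different effect sizes and sample sizes.
pooled, se = fixed_effect_pooled_d([(1.1, 80, 80), (1.4, 60, 60), (0.9, 120, 120)])
print(f"d = {pooled:.3f}, 95% CI = [{pooled - 1.96 * se:.3f}, {pooled + 1.96 * se:.3f}]")
```

Inverse-variance weights give larger, more precise studies more influence on the pooled estimate; a random-effects model would add a between-study variance component to each weight.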

In everyday life, time limits are often associated with task scope because time limits are endogenous: deadlines are set, at least in part, on the basis of an estimate of the task’s scope. Therefore, when judgments are affected by time limits in practice, we typically cannot tell whether this reflects a scope perception bias or a reasonable inference. Our experiments, by contrast, were designed to distinguish between the two and isolate any bias in estimation. Specifically, we informed judges that the time limits were randomly assigned (and therefore exogenous; Studies 1–4). Even when time limits are exogenous, judges could reasonably believe that workers will pace themselves to finish the work within the assigned time limit. To address this normative inference, we told judges that workers did not know about the time limit (i.e., the time limit was the point at which workers would have been stopped, without their prior knowledge; Studies 2 and 3). The time-limit bias also replicated among the subset of judges who passed a comprehensive set of comprehension checks (Studies 2–4). Therefore, the findings cannot be attributed to judges’ failure to understand the instructions in our experiments.

Instead, we find direct evidence for a scope-of-work explanation of the time-limit bias, in which longer time limits affect people’s perceptions of task scope (meta-analytic d=0.324, see Online Appendix E), even when there is no effect on the rate of work. Judges estimate a task to be objectively larger (e.g., more puzzle pieces, more difficult) when it has a longer time limit, even when the time limit is chosen at random and judges make judgments for multiple similar tasks (Study 3, pre-registered). Moreover, in Study 3, the larger perceived scope of work mediates the relationship between time limits and estimated completion time, consistent with the proposed scope perception mechanism. We also find that weakening beliefs about an association between time limits and task scope reduces the time limit bias (Study 4), providing further evidence that believed associations underlie the observed misestimation. Finally, experienced managers estimate that a direct-mail campaign involves sending more pieces of mail when the deadline is exogenously extended (Study 5).

Our results are consistent with recent research showing that incidental, externally imposed deadlines can affect people’s perceptions of the difficulty of their own tasks, with downstream consequences (Zhu et al., 2018). In our conceptualization, task difficulty is another aspect of task scope that can be affected by external deadlines. Notably, some of our tests of the time-limit bias (e.g., Studies 1 and 4) were particularly conservative because judges were given information relevant to the scope of the task (e.g., they saw a picture of the puzzle and knew the number of pieces). In these studies, the effect of scope perception on time estimation is limited to a subjective judgment of task difficulty, potentially incorporating factors such as the similarity of the puzzle pieces and the difficulty of sorting through them to find matching pieces. This is analogous to a manager who has information about the objective, quantifiable parameters of a deliverable but whose time estimates may still depend on a subjective assessment, shaped by the time limit, of how difficult the task will be for workers. When objective measures of task scope are absent, as is increasingly the case as work tasks become more complex, we expect estimates to be even more sensitive to time limits via the scope perception bias.

Given the robustness of the effects described in this paper, the time-limit bias is likely multiply determined. In particular, while anchoring and adjustment (Epley & Gilovich, 2001) alone is not sufficient to explain our findings (e.g., it was tested directly in Study 1 and excluded by showing all participants the same time limits in Studies 2–4), anchoring could contribute to the phenomenon we document in practice. Some prior research has suggested that anchors can influence judgments across modalities by priming a general sense of magnitude (Critcher & Gilovich, 2008; Oppenheimer, LeBoeuf, & Brewer, 2008; Wilson et al., 1996), such that after encountering a large number, people make higher estimates of unrelated magnitudes. Notably, in Study 5, longer time limits increased only the judgments predicted by the scope account and did not increase judgments indiscriminately (e.g., judgments of the rate of work), a pattern inconsistent with a cross-modal anchoring account.

In most of our studies (Studies 1, 3, and 4), we used scenarios in which all workers were paid a fixed fee. This provides a conservative test of the effect of time limits on completion time estimates: if managers know that workers have no incentive to delay task completion, their estimates should be less affected by time limits. In the real world, however, workers are sometimes paid time-metered fees (e.g., per minute or per hour). Such payment schemes might perversely motivate workers to work longer to earn more money, and judges anticipating such behavior might predict longer completion times when time limits are longer. In these settings, the accuracy of judges’ estimates would depend on how well calibrated they are about workers’ behavior, and a pattern resembling the time-limit bias reported in this paper could arise for this entirely different reason. Our aim in this paper was to identify and isolate one particular psychological route, scope perception, through which time limits can bias completion time estimates.

11 Limitations and future research

The current paper examines a theoretical account of how deadlines might affect completion time judgments for others. To do so, we used stylized settings and vignette scenarios with relatively short deadlines, small incentives, and participants with little experience making such judgments. We deliberately used the controlled jigsaw puzzle setting in most of our studies because it helped rule out important confounds (e.g., the quality of the final output), but this choice raises questions about generalizability. Study 5 provides initial evidence that the time-limit bias generalizes to a setting outside the lab among experienced managers. The scope perception bias may help explain why managers over-value flat-fee contracts (versus per-unit-time contracts) for hiring temporary employees when the available time limit is longer (Goswami & Urminsky, 2020). Similarly, managers might be overly impressed by workers’ completion times when an exogenously determined time limit (e.g., one set by a client) is longer, and then judge the same performance as poor when the time limit is shorter, potentially affecting promotion, compensation, and performance appraisal decisions (Levy & Williams, 2004).

Our findings on how deadlines affect estimates of other people’s task completion times also raise the question of whether deadlines similarly affect predictions of one’s own completion times. Indeed, recent research has shown that incidental longer deadlines affect resource allocation decisions for personal goals when the means and the outcomes are not well defined (Zhu et al., 2018). Our results further suggest that deadlines may affect procrastination, planning, and goal pursuit via the scope perception bias and the resulting beliefs about one’s own completion times. Interestingly, Zhu et al. (2018) find that experience with the task attenuates the effect of deadlines, whereas we find no moderating influence of measured experience or task knowledge (Studies 1–4) or of repeated choices (Study 3). This highlights the importance of systematically studying how experience moderates the effect of deadlines on decisions for the self versus others.

Future research could also examine what kind of scope information is sufficient to eliminate the estimation bias induced by time limits. Studies 2 and 3 suggest that merely telling people the maximum time available to complete a task does not eliminate the bias. Likewise, in Study 4, calling into question the association between time limits and task scope significantly reduced but did not eliminate the bias. We believe that more detailed scope-relevant information is likely necessary to counter people’s pre-existing beliefs about the relationship between time limits and scope that drive the observed bias. Study S7 (Online Appendix) provides preliminary support for this view: providing judges with a complete distribution of hypothetical task completion times eliminated the scope perception effect. Given the pervasiveness of deadlines in the real world and the evidence that scope-relevant judgments are biased by deadlines, investigating interventions that reduce the time-limit bias in consequential settings is a promising direction for future research.

“Parkinson’s Law,” the idea that work expands such that people take more time when more time is available (Parkinson, 1955), has been highly influential despite limited empirical evidence. In this paper, we propose and provide evidence for a parallel Parkinson’s Law of the Mind: people’s conceptualization of a task expands with the time available, such that beliefs about both the project scope and the time required grow with longer time limits, even when neither the actual work done nor the time taken is affected.