1 Introduction

User-centered design, often referred to as human-centered design, is an approach to design that puts the intended users at the center of the product development process (Martens et al. Reference Martens, Rinnert and Andersen2018; Sanders Reference Sanders2002; Seshadri et al. Reference Seshadri, Joslyn, Hynes and Reid2019; Vredenburg et al. Reference Vredenburg, Mao, Smith and Carey2002). This is a well-documented approach that has been used by numerous companies to improve the desirability, viability, and/or feasibility of their products (Boy Reference Boy2017; Isa & Liem Reference Isa and Liem2021). Prototyping is a factor in the success of companies, and it is an essential part of user-centered design (Ahmed et al. Reference Ahmed, Irshad and Demirel2021; Akasaka et al. Reference Akasaka, Mitake, Watanabe and Shimomura2022; Faludi et al. Reference Faludi, Yiu and Agogino2020). Prototypes are valuable in new product development as they allow for communication between stakeholders, creating the space to express ideas and feedback (Lauff et al. Reference Lauff, Kotys-Schwartz and Rentschler2018; Menold et al. Reference Menold, Jablokow and Simpson2017; Bobbe et al. Reference Bobbe, Opeskin, Lüneburg, Wanta, Pohlmann and Krzywinski2023; Gill et al. Reference Gill, Sanders and Shim2011). Testing the prototype should occur with end users to develop an ideal final solution (Hess & Fila Reference Hess and Fila2016; Surma-aho et al. Reference Surma-aho, Björklund and Hölttä-Otto2022), as it is imperative to understand their specific needs and wants of the product (Chang-Arana et al. Reference Chang-Arana, Surma-aho, Hölttä-Otto and Sams2022; Gericke et al. Reference Gericke, Eckert and Stacey2022; Liao et al. Reference Liao, Tanner and MacDonald2020). This research focuses on children as the intended end user for physical toy products.

Historically, children did not test products developed for them; instead, adults tested the products on their behalf (Druin Reference Druin2002; Markopoulos et al. Reference Markopoulos, Read, MacFarlane and Hoysniemi2008). A literature review on usability testing with children (Banker & Lauff Reference Banker and Lauff2022) identified that more research is needed on how children understand prototypes and give feedback during physical prototype testing.

It is important for designers to create models that end users will understand so that they can give better feedback (Kim et al. Reference Kim, Lee and Kim2006). Knowing how to help children understand a prototype supports informed decisions about how the prototype is made (Codner & Lauff Reference Codner and Lauff2023). This paper looks at children’s feedback, specifically trying to understand how that feedback changes through prototype iterations. The guiding research question is, “How does children’s feedback change based on the different fidelities of prototypes?” This research uses a semester-long project focused on designing wooden toys for the company PlanToys. The course had three prototype milestones that correspond with low-, medium-, and high-fidelity prototype models. The fidelity of the models was further organized in terms of form and function: form relates to the visual and aesthetic aspects of the model (looks-like model), and function relates to the functional and interactive elements in the model (works-like model).

2 Aims and significance

The goal of this qualitative research study is to begin to understand how the fidelity of prototypes, separated into form and function, influences feedback from children during testing. If we understand the relationship between the fidelity of prototypes and children’s feedback, we can make more informed decisions about a prototype’s fidelity based on the designer’s or researcher’s goals for the testing session.

The significance of this research is that it adds new knowledge about how a specific type of end user (children) gives feedback on prototypes as they evolve throughout the design process. This research helps designers better prepare prototypes for testing with children by matching desired types of feedback with the appropriate fidelity of the model.

3 Background

3.1 Role of prototypes in design process

Prototypes are communication tools used to visualize concepts, explain and update information to stakeholders, negotiate requirements, and persuade influential stakeholders (Lauff et al. Reference Lauff, Knight, Kotys-Schwartz and Rentschler2020; Deininger et al. Reference Deininger, Daly, Lee, Seifert and Sienko2019a). While prototypes are communicating the concepts to stakeholders and designers are learning from those interactions, the prototypes are also demonstrating that milestones in the projects have been met and that the components work together as expected, both aesthetically and functionally (Hess & Summers Reference Hess and Summers2013; Jensen et al. Reference Jensen, Özkil and Mortensen2016; Petrakis et al. Reference Petrakis, Wodehouse and Hird2021). Sanders and Stappers (Reference Sanders and Stappers2014) explained in their comparison of probes, toolkits, and prototypes, that probes and toolkits are used at the “fuzzy front end” of design while prototyping occurs in the “traditional design development process.” During this phase, prototypes help to communicate product ideas and concepts as well as demonstrating aesthetic and functional qualities (Camburn et al. Reference Camburn, Viswanathan, Linsey, Anderson, Jensen, Crawford, Otto and Wood2017; Deininger et al. Reference Deininger, Daly, Sienko, Lee and Kaufmann2019b; Eckert et al. Reference Eckert, Wynn, Maier, Albers, Bursac, Chen, Clarkson, Gericke, Gladysz and Shapiro2017; Lauff et al. Reference Lauff, Kotys-Schwartz and Rentschler2018; Petrakis et al. Reference Petrakis, Hird and Wodehouse2019). Beyond understanding and refining the current concept of the product, prototypes can also be used to explore new and novel solutions to design problems (Camburn et al. Reference Camburn, Viswanathan, Linsey, Anderson, Jensen, Crawford, Otto and Wood2017; Petrakis et al. Reference Petrakis, Hird and Wodehouse2019). 
In summary, prototypes serve many purposes in the design process, including to learn, communicate, refine, integrate, demonstrate, explore, and elicit requirements (Hannah et al. Reference Hannah, Michaelraj and Summers2008; Petrakis et al. Reference Petrakis, Hird and Wodehouse2019).

3.2 Prototype fidelity

Prototypes exist along a spectrum, from low-fidelity to high-fidelity. Rueda et al. (Reference Rueda, Hoto, Conejero, Holzinger, Ziefle, Hitz and Debevc2013) define fidelity as the resolution, or the refinement and detail, of the model. Low-fidelity prototyping can save money because it often uses simple, readily available, and low-cost materials such as cardboard, paper, or foam (Lauff et al. Reference Lauff, Kotys-Schwartz and Rentschler2018; Lim et al. Reference Lim, Stolterman and Tenenberg2008). These low-fidelity materials are often the quickest way to make a physical embodiment of the design concept (Isa & Liem Reference Isa and Liem2014; Stompff & Smulders Reference Stompff and Smulders2015). High-fidelity prototypes are often made of the same or similar materials as the final product and are made to accurately represent the final specifications of the product (Isa & Liem Reference Isa and Liem2014; Lauff et al. Reference Lauff, Kotys-Schwartz and Rentschler2018). Because high-fidelity prototypes take longer and are more expensive to produce, they often occur towards the end of the design process when most of the design decisions have been made. Isa and Liem (Reference Isa and Liem2021) discovered that “high- and low-fidelity prototypes can be applied at the same time and iteratively throughout all stages of the co-creation and design process.” Low- and high-fidelity prototypes each serve a purpose, whether to create a model quickly or to show the distinct details of the product concept. However, fidelity can cause bias in the users’ perception. Rueda et al. (Reference Rueda, Hoto, Conejero, Holzinger, Ziefle, Hitz and Debevc2013) determined that the more attractive a physical prototype was, the more critical the users were toward the prototype. Users also gave more verbal feedback on paper prototypes than on high-fidelity digital prototypes (Walker et al. Reference Walker, Takayama and Landay2002).
Fidelity is a crucial factor for the designers to consider, not only for their own needs of time and money but also for getting actionable feedback from users (Virzi Reference Virzi1989).

3.3 Prototype testing with children

Druin (Reference Druin2002) found that children can fill different roles in the design process: design partner, informant, tester, and user. The most frequent role seen in product development is tester, where the children interact with a prototype and then give feedback for future iterations. It is important that the intended end user for a product is included in testing, so that the designer can understand the specific challenges and relationship the users would have with the product (Prescott & Crichton Reference Prescott and Crichton1999; Sauer et al. Reference Sauer, Seibel and Rüttinger2010). Children are a unique set of users, with their own needs, likes, dislikes, and life experiences (Markopoulos et al. Reference Markopoulos, Read, MacFarlane and Hoysniemi2008; Snitker Reference Snitker2021). Historically, adults tested prototypes on behalf of children, which may have left some of the children’s needs, thoughts, and opinions out of the design. If the testing situation is safe, then testing with children can take place in the field or lab, in large groups or individually, and with multiple types of research methods (Banker & Lauff Reference Banker and Lauff2022; Barendregt & Bekker Reference Barendregt and Bekker2003; Khanum & Trivedi Reference Khanum and Trivedi2012).

While methods for testing with children have been well studied, literature on how to prepare prototypes for testing with children is still lacking. Hershman et al. (Reference Hershman, Nazare, Qi, Saveski, Roy and Resnick2018) found that creating paper prototypes with buttons that turn lights on makes the prototype more interesting and believable as a virtual interface. Another study by Sim et al. (Reference Sim, Cassidy and Read2013) found that game designers could successfully evaluate mobile game prototypes made from paper with children aged 7-9. These examples demonstrate that paper prototypes (i.e., low-fidelity prototypes) can be used to gain valuable insights, and they show how to prepare prototypes when evaluating digital products with children. However, the research does not discuss the specifics of the children’s feedback or how to prepare physical product prototypes for children. This paper focuses on filling this gap by evaluating children’s feedback on physical products varying in fidelity.

4 Theoretical frameworks

4.1 Prototypes as critical design objects

Lauff et al.’s (Reference Lauff, Knight, Kotys-Schwartz and Rentschler2020) work discusses prototypes as critical design objects that act as a language between humans. Like most languages, a prototype changes meaning according to who is using it and how it is used. For example, a CEO will view a prototype within a specific context and awareness of the company, while an end user will view a prototype within the context of their interpretation of the end use of the product. Continuing with the development of prototypes as a form of communication, Lauff et al. (Reference Lauff, Knight, Kotys-Schwartz and Rentschler2020) discussed the notion of “encoding and decoding” messages through prototypes. A representative image of this is shown in Figure 1. In Lauff et al.’s work, several social contexts were considered. The focus of this paper, however, is on the interaction of prototypes, intended end users (in the context of this work, children), and the researcher or designer. In this paper, we are looking specifically at the stage in which children re-encode the prototype with their own thoughts and ideas, and then at the feedback they give to the designer. The re-encoding stage still has several nuances to be uncovered, specifically how fidelity affects it. Additionally, Deininger et al. (Reference Deininger, Daly, Lee, Seifert and Sienko2019a) discuss how the quality and fidelity of a prototype can influence various stakeholder perceptions, making this a relevant and important topic to study.

Figure 1. The interaction of designers and children when encoding and decoding prototypes.

4.2 Prototyping for X

To incorporate user-centered design practices into prototyping, Menold et al. (Reference Menold, Jablokow and Simpson2017) developed a prototyping framework called prototyping for X (PFX). In this work, Menold et al. used human-centered design’s three-lens model of innovation, in which innovation occurs when a product is desirable, feasible, and viable. A model of this is shown in Figure 2. In previous prototyping frameworks, researchers did not often emphasize these user-centered design practices or evaluate their impact in realistic design settings. In PFX, Menold et al. (Reference Menold, Jablokow and Simpson2017) created a holistic prototyping framework that addresses not only technical development and resource management, but also user satisfaction, perceived value, and manufacturability. In our research, the goal is to contribute to this prototyping for X framework by expanding its context to a specific group of users, children, and by seeing what else besides desirability, viability, and feasibility can come from testing. The better we understand the fidelity at which prototypes produce valuable feedback, the more efficient the process of producing an innovative product becomes.

Figure 2. The Human-Centered Design model for innovative product development.

5 Methods

5.1 Design context: Course, teams, and design brief

The data for this research was collected from a cross-disciplinary, upper-level, project-based studio course at a large R1 university in North America. This course is 15 weeks long and begins in the middle of January and ends in early May. The data for this study was collected in Spring 2022. The students in this course were asked to design a wooden toy for the company, PlanToys. The design brief was as follows: “Students must design an innovative physical product that fits within one of these five categories: Reimagined Classic Toys, Educational Toys, Grow-with-the-Child Toys, Tech x Wooden Toys, and Toys for Better Aging.”

The class had a final total of 45 students who were divided into 9 teams of 5 students. Students come from a variety of backgrounds, including product design, mechanical engineering, computer science, and business. Teams are formed by distributing skills in the first two weeks of the semester (Kudrowitz & Davel Reference Kudrowitz, Davel, Bruyns and Wei2022). In this paper, we use fruit pseudonyms for the teams (e.g., Plum Team or Mango Team).

This class is structured like a design consultancy, where these interdisciplinary teams pitch their concepts/prototypes to the sponsor after each main milestone to determine the next path forward. Additionally, each team is paired with two industry mentors to give feedback and guidance on their process and progress on a weekly basis. Grading for the class is also completed by these industry advisors, with overall structure and final input from the course instructor. While this is a university course project, the students are expected to treat this like a design consultancy project, which includes the expectation to actively recruit target end users to engage in front-end research and multiple rounds of prototype testing. The learnings from these tests are then reported at each milestone presentation. As such, this class and research study used self-reported prototype testing feedback from the design teams in the data analysis. We recognize this has limitations, which are reported in Section 5.4.

5.2 Research context – Prototype testing sessions

The teams were required to test each prototype with three different types of stakeholders: the intended end user, a caretaker, and an expert on a topic relating to play, children, toys, or product design. The students were tasked with a series of prototype checkpoints, or design iterations, throughout the semester. Each prototype testing session with a stakeholder happened independently. Teams were required to recruit their own stakeholders and hold their own testing session outside of class time. Guidelines and local resources were given to teams to identify appropriate stakeholders. Teams started with their top concept sketches and then created 5-6 low-fidelity models (Checkpoint 1). At Checkpoint 2, they continued two of the previous 5-6 concepts forward, making two prototypes with the goal of creating fully functional models at this checkpoint. At Checkpoint 3, the students created one final looks-like and works-like prototype based on one of their previous two concepts. This timeline is visualized with prototype examples in Figure 3 from the Plum Team. Eight of the teams created 8 prototypes and one team created 9 prototypes throughout the semester, resulting in 73 prototypes created for the entire class.

Figure 3. Visualization of the prototype checkpoints throughout the course for one representative team (Plum team). The blue arrow indicates the concept that made it from the first to the third checkpoint; this is an example of the data used for the analysis in this paper for each of the 8 teams.

Even though 73 prototypes were created in the course over three checkpoints, testing-session data on only 23 of the prototypes was used in this research study. One team’s data was removed because they chose to design for the elderly, not children. Additionally, the decision was made to look at the evolution of one concept over the semester and to remove prototypes that did not make it further in the course. This can be seen in Figure 3, where 4 of the 5 prototypes in Checkpoint 1 did not make it through to Checkpoint 3. Therefore, for each of the 8 teams whose data continued into analysis, 3 prototypes were used in this study, resulting in 24 data points. One team (Apple) did not fill out the data form for Checkpoint 3, which left 23 unique data points for the models tested with children.
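The counts in this section can be checked with a few lines of arithmetic. The sketch below only restates numbers given in the text; the variable names are illustrative and are not part of the study's materials.

```python
# Counts stated in Section 5.2; variable names are illustrative only.
teams_total = 9
prototypes_per_team = [8] * 8 + [9]  # eight teams built 8 prototypes, one built 9
total_prototypes = sum(prototypes_per_team)

teams_excluded = 1   # the team that designed for the elderly
checkpoints = 3      # one tracked concept per team at each checkpoint
teams_analyzed = teams_total - teams_excluded
tracked_prototypes = teams_analyzed * checkpoints

missing_surveys = 1  # Apple team, Checkpoint 3
data_points = tracked_prototypes - missing_surveys

print(total_prototypes, teams_analyzed, tracked_prototypes, data_points)
```

Running the sketch reproduces the 73 prototypes built in the course, the 24 prototypes tracked across the 8 analyzed teams, and the 23 data points that remain once the missing survey is accounted for.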

For the prototype testing sessions at each checkpoint, an example of stakeholders recruited for Plum Team in Figure 3 would include: (1) children between the ages of 4-7 as the intended end user of their products, (2) parents of children between the ages of 4-7 as the intended caregiver (and likely consumer of the product), and (3) product designers that have experience with toy development as the experts. For this paper, we only used data from the children testing sessions.

For the 8 teams used in this study, the teams self-reported the age range for the children who engaged in their testing sessions. This age range question can be seen in Appendix A – Prototyping Survey. Three teams tested with children between 4-7 years old and five teams tested with children between 7-11 years old. This is all the information that we have about the children in these testing sessions, as it was up to the teams to determine the appropriate age range for their product and then recruit those participants.

5.3 Data collection

The researchers submitted an IRB application prior to the start of data collection (STUDY00014959), and the study was deemed not human subjects research. This classification is due to the structure of the study, where we used historical class data on testing prototypes; this data did not include details on the student teams or the prototype testers aside from their age range. More details on this can be found in the Limitations (Section 5.4).

The data collected from this course included prototype testing feedback that was documented by each team in a Google Form after each testing session. For each session of testing, the students were given an 18-question survey via Google Forms to complete (see Appendix A). This survey included questions relating to the participant’s feedback and the student’s own perspective of the testing session (Turner Reference Turner2014). For the eight teams with a final product designed for children, the feedback given toward the prototypes that were an iteration of the final concept was reviewed. Eight feedback surveys were reviewed at each of Checkpoints 1 and 2, and 7 were reviewed at Checkpoint 3, totaling 23 for the class. The Apple team did not fill out a survey report at Checkpoint 3, which is also noted in the limitations of this study.

5.3 Data analysis

5.3.1 Determining prototype fidelity

The guiding research question is “How does children’s feedback change based on the different fidelities of prototypes?” To begin to answer this question, the teams were first ranked using audience feedback, collected at the class’s final presentation after Checkpoint 3, on how well each prototype looked and worked like a real product (Figure 4). Prototype fidelity is often discussed in terms of “works like” (function) and “looks like” (form) (Hallgrimsson Reference Hallgrimsson2012). The audience consisted of 130 reviewers who submitted their responses via a Google Form: the course’s volunteer industry lab instructors (n = 18), university faculty (n = 6), local industry members (n = 9), client representatives (n = 4), students (n = 45), and family/friends of the teams (n = 48). Knowing these rankings allows us to draw relationships between the patterns of fidelity, feedback, and success. This comparison is emphasized within the larger research study.

Figure 4. Survey results (n = 130) evaluating the final prototype at checkpoint 3 (1 = Very Poor, 5 = Excellent).

After determining the ranking of the teams, we determined the function and form fidelities of the teams’ prototypes (Figure 5). In this figure, we plot all three checkpoint prototypes for the teams in terms of both form fidelity (x-axis) and function fidelity (y-axis). Table 1 reports fidelity by team, form and function, and checkpoint. Since prototype fidelity is a multi-faceted topic, we decided to further refine overall prototype fidelity by looking at both form fidelity and function fidelity at every checkpoint. In this paper, we refer to form as a “looks-like” model and function as a “works-like” model, which is a well-established framework within the product design industry (Hallgrimsson Reference Hallgrimsson2012).

Figure 5. Scatterplot of the form and function fidelities of the prototypes analyzed in this paper.

Table 1. Summary of form and function fidelity ratings for each team at each checkpoint

To determine the function fidelity, which is less subjective, a 5-point Likert scale was used to evaluate the models. A score of 1 meant the prototype did not work (low-fidelity function) and a score of 5 meant all the intended functions worked well on the prototype (high-fidelity function). The two researchers on this project determined the function fidelity of each prototype based on witnessing the prototypes in action and interacting with the models. The graduate researcher reviewed the models using this 5-point scale and then met with the primary advisor to review and discuss these scores until there was agreement between the two researchers. These two perspectives acted as a checks-and-balances system. This is an effective approach for qualitative data analysis (Miles & Huberman Reference Miles and Huberman1994).

For the form fidelity, which is more subjective, a 5-point Likert scale was also used. A score of 1 means the prototype is a low-quality looks-like model (low-fidelity form). A score of 5 means the prototype is a high-quality looks-like model (high-fidelity form). Looks-like models contain multiple factors ranging from size and scale, color, silhouette and visual hierarchy, materials and finish, and text and icons to overall construction quality (Hallgrimsson Reference Hallgrimsson2012). Because of this complex and subjective nature of the form, we opted to use a panel of product design industry experts (n = 9) to rate the form fidelity for this study. These experts are very familiar with the class and attend lab sessions every week of the semester to work with the teams. They have seen and interacted with all the prototypes in person. The form fidelity of each prototype was then calculated as the average of the 9 responses.
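As an illustration of the averaging step, the minimal sketch below computes a form fidelity score from nine panel ratings. The rating values and the function name `form_fidelity` are hypothetical and invented for this example; they are not data or tooling from the study.

```python
from statistics import mean

# Hypothetical 1-5 Likert ratings from nine panelists for one prototype
# (illustrative values only, not data from the study).
expert_ratings = [3, 4, 3, 4, 4, 3, 5, 4, 3]


def form_fidelity(ratings):
    """Average panel ratings after checking they sit on the 1-5 scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be between 1 and 5")
    return mean(ratings)


score = form_fidelity(expert_ratings)
print(round(score, 2))
```

Averaging treats the Likert points as equally spaced, which is a simplification; a median could be substituted where that assumption seems too strong.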

5.3.2 Analyzing prototype survey responses

There were four survey question responses reviewed for this analysis (see Appendix A); the first two questions included categories to select from and the last two were open-ended responses that were qualitatively coded (Anderson Reference Anderson2010; Vaismoradi et al. Reference Vaismoradi, Turunen and Bondas2013):

1. What category did you receive feedback in regarding the prototype?
2. What category did you receive feedback in regarding prototyping for X?
3. What nonverbal feedback did you receive?
4. What verbal feedback did you receive?

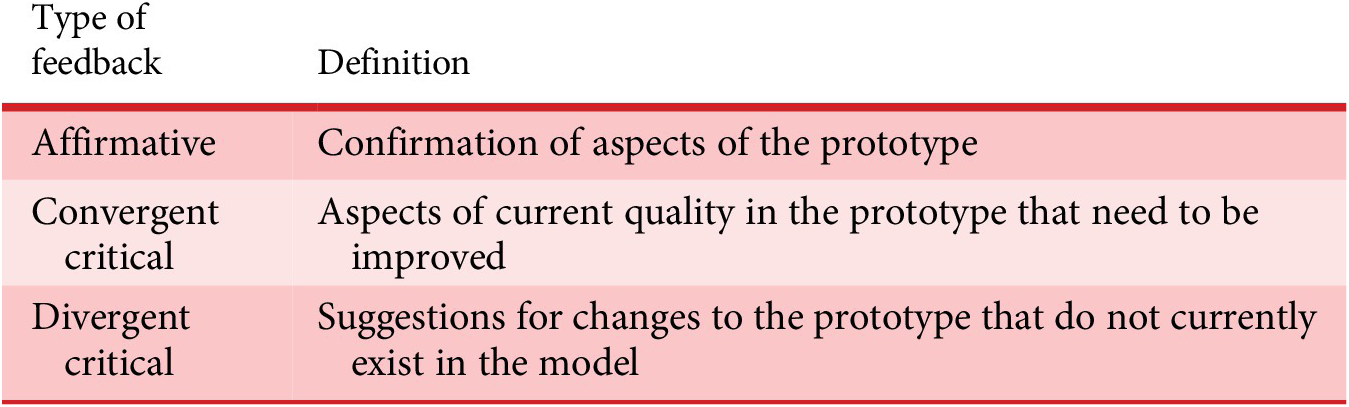

The categories for the feedback regarding the prototype (Q1) include function, form, interaction, size, color, material, emotional, safety, play value, marketability, and “other,” where students could fill in a response. These categories were decided by the researchers in collaboration with the course professor, and then revised as more categories surfaced. Edits to the categories were allowed until the students’ first checkpoint testing sessions. Students could choose as many categories as applied. The categories for the feedback regarding prototyping for X (Q2) include viability, feasibility, and desirability (Menold et al. Reference Menold, Jablokow and Simpson2019). Again, students could choose as many categories as applied. The category responses were then analyzed across the checkpoints and the teams for similarities and differences. Verbal and nonverbal feedback was reported textually in 146 open-ended responses averaging around 69 words per response. These responses were first organized by form or function and then analyzed in terms of affirmative, convergent critical, and divergent critical feedback as defined in Table 2. Affirmative refers to confirmation of aspects of the prototypes. Convergent critical refers to aspects of the current model quality that need to be improved. Divergent critical refers to suggestions for changes to the prototype that currently do not exist in the model.

Table 2. Types of feedback defined

These categories of feedback were constructed from the Disconfirmed Expectations Ontology (DEO) developed by Koca et al. (Reference Koca, Karapanos and Brombacher2009) and the convergence-divergence design process models by Jones (Reference Jones1992). Other researchers, such as Pieterse et al. (Reference Pieterse, Artieda, Koca and Yuan2010), have used this framework to sort user reviews from online sources into the categories demonstrated in the DEO framework. Mader and Eggink (Reference Mader and Eggink2014) define the convergent phase of the design process as “the process of reducing the design space” and the divergent phase as an “opening up” of the design space. The framework used for analyzing feedback in this research is a combination of both DEO and convergent-divergent. The feedback can be divided into generally positive or negative feedback. However, instead of using the terms positive/negative, the terms used are affirmative/critical. The phrasing is different to better align with how feedback is discussed in the product design process, such as “I like. I wish…,” but it is based on the same principles (Kelley & Kelley Reference Kelley and Kelley2013).

The critical (“negative”) feedback found was then divided into convergent or divergent, demonstrating how the feedback relates to the direction of the design space. For example, divergent critical feedback would expand the solution space more broadly and in a new direction. Convergent critical feedback suggests an improvement in quality on the aspects that are already present in the prototype. Affirmative feedback is feedback that confirms an aspect should stay the same, remaining convergent on the prototypes. When analyzing the responses, feedback was considered affirmative when it encouraged the designer to keep a characteristic the same in the next iteration.

To determine which of these categories the feedback falls into, a content analysis coding process took place (Vaismoradi et al. Reference Vaismoradi, Turunen and Bondas2013). The feedback responses were read by the lead researcher multiple times, and then the phrases were analyzed by whether they had a “positive” or “negative” connotation. The feedback that was positive was initially coded as affirmative. The “negative” feedback was initially coded as critical feedback and was then further coded as “convergent” or “divergent” in relation to the product development. The feedback that stated something was “unliked” or that the user thought “something did not work well” was considered “convergent critical feedback.” The feedback that made suggestions for “more,” to “add,” to do something “instead of” something else, or that had an “idea” was coded as “divergent critical feedback.” The feedback in the three categories was then analyzed for recurring themes relating to the fidelity of the prototype. The themes of feedback will be discussed in relation to the prototype fidelity, specifically regarding form and function fidelity, to answer our research question, “How does children’s feedback change based on the different fidelities of prototypes?” The results are presented in the following Results section, and then these themes will be further discussed in the Discussion section.
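The decision rules described above can be summarized in a short sketch. The coding in this study was done manually by the lead researcher, so the code below is only a hypothetical illustration of the rule order. The function name `code_feedback`, the substring matching of cue words, and the example phrases are all assumptions made for this sketch.

```python
# Divergent cues quoted in the paragraph above. Matching them by substring
# is an assumption of this sketch, not the study's manual procedure.
DIVERGENT_CUES = ("more", "add", "instead of", "idea")


def code_feedback(phrase, connotation):
    """Return a feedback code for one phrase.

    `connotation` is the human reader's own judgment of the phrase:
    either "positive" or "negative".
    """
    if connotation == "positive":
        return "affirmative"
    if connotation != "negative":
        raise ValueError("connotation must be 'positive' or 'negative'")
    text = phrase.lower()
    if any(cue in text for cue in DIVERGENT_CUES):
        return "divergent critical"
    return "convergent critical"


# Hypothetical phrases, invented for illustration only.
examples = [
    ("She loved the bright colors", "positive"),
    ("The wheels did not work well", "negative"),
    ("He wanted to add a second ramp", "negative"),
]
codes = [code_feedback(phrase, judged) for phrase, judged in examples]
print(codes)
```

Substring matching is deliberately crude: a cue such as "add" would also match "saddle", which is one reason a human reader, rather than a keyword rule, makes the final call in content analysis.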

5.4 Limitations

When considering the results and discussion, it is important to know that there are limitations to this research. The first limitation is the data set size. The size of the class and total number of teams limited the overall prototype data collection (9 teams, each with one evolving concept tracked across 3 prototypes). One team (Apple team) did not complete the survey for their Checkpoint 3 prototype and one team designed toys for the elderly, so their data was removed. Therefore, there were only 23 prototype testing sessions analyzed in total. This is not a large enough quantity of data from which to make inferences about future prototype feedback from children (Whitehead & Whitehead Reference Whitehead and Whitehead2016).

The second limitation is that the data came from student self-reported prototype testing sessions. Because the researchers were not present during the students’ testing sessions, the interpretations are based only on the feedback that was documented and presented in the survey by the students. Students were given best practices for conducting prototype testing with children, but ultimately the teams decided how to conduct their tests. This approach is in line with how industry professionals would collect and document feedback about prototype testing.

The third limitation is that we did not collect specific demographic details about the students or the children. Since this study was IRB exempt, we did not collect data on these populations. Instead, we used teams’ self-reported forms that only included the age range.

The fourth limitation is that the prototype fidelity evaluation occurred in two different ways, which could influence the ratings given. Form fidelity is more subjective, and therefore, a panel of product design experts (n = 9) was surveyed for their input on the models. Form can include aspects like size and scale, color, silhouette and visual hierarchy, materials and finish, text and icons, and overall construction quality. Function fidelity is more objective, and therefore, was determined by two researchers evaluating these physical models.

The fifth limitation is that this research was conducted by one primary graduate student researcher, and we did not have a secondary researcher code the data to provide an interrater reliability score. The graduate researcher brought data and examples to weekly meetings with the primary advisor, which served as a check on the validity of the findings (Miles & Huberman 1994). Despite these limitations, the research provides insights into prototype testing with children and some directions for future research.

6 Results

6.1 Categories of feedback

First, we analyzed the various categories of feedback the students reported in the Google Form. The results of these responses are shown in Figures 6 and 7. Both figures show the categories of feedback across the top. In Figure 6, these categories are function, form, interaction, size, color, material, emotional, safety, and play value; in Figure 7, they are feasibility, desirability, and viability. Under each category of feedback there are three boxes, which represent the three prototype checkpoints throughout the project. The teams are ordered by ranking, which makes it easier to compare a team’s overall success with the kinds of feedback it received. When a box is filled in with the associated team color, the team marked this as an area where they received feedback. When a box is blank, the team did not get feedback in this category at that checkpoint. For example, the Plum team received feedback at all three checkpoints in all the prototype categories except material, safety, and marketability. In the category for “material” the team received feedback at Checkpoint 2. For the other two categories (safety and marketability), they did not receive any feedback at any checkpoint.

Figure 6. The survey results of the question “What category did you receive feedback regarding the prototype?”

Figure 7. The survey results of the question “What category did you receive feedback in regarding prototyping for X?”

Teams rarely received feedback from children regarding material, safety, and marketability. However, as part of our larger study, these prototypes were also tested with parents/guardians and product design experts to compare the feedback across stakeholder types. That feedback is not reported here, but it did include more from these categories. For material, the Lemon and Apricot teams received feedback at Checkpoint 1 and the Plum and Raspberry teams at Checkpoint 2. The Cherry and Apricot teams were the only teams to report safety feedback at Checkpoint 1. None of the teams reported marketability feedback. Play value, form, interaction, and function were reported by the most teams, with play value and form each being reported 18 out of 23 times and interaction and function reported 19 and 20 times, respectively. Emotional and color feedback were reported 10 and 11 times, respectively. Size feedback was reported 7 times overall. The total number of feedback reports was 41 at Checkpoint 1, 39 at Checkpoint 2, and 29 at Checkpoint 3. A wider variety of feedback was given at Checkpoint 1, which in general involved lower fidelity models, and the number of feedback categories then decreased over the other two checkpoints.
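The counts reported above can be cross-checked with a few lines of arithmetic. The following Python sketch is a reader’s consistency check, not part of the study’s analysis, and its variable names are illustrative. The totals for material (4) and safety (2) are not stated directly in the text; they are inferred here from the teams listed, which is an assumption.

```python
# Reader's consistency check on the Figure 6 counts reported in the text.
# Names are illustrative; this is not the authors' analysis code.

SESSIONS_ANALYZED = 23  # prototype testing sessions analyzed in total

# Number of sessions in which each category of feedback was reported.
category_counts = {
    "function": 20,
    "interaction": 19,
    "play value": 18,
    "form": 18,
    "color": 11,
    "emotional": 10,
    "size": 7,
    "material": 4,       # assumption: Lemon, Apricot (CP1) + Plum, Raspberry (CP2)
    "safety": 2,         # assumption: Cherry, Apricot (CP1) and no other reports
    "marketability": 0,
}

# Total number of feedback reports at each checkpoint, as stated in the text.
checkpoint_totals = {1: 41, 2: 39, 3: 29}

total_by_category = sum(category_counts.values())
total_by_checkpoint = sum(checkpoint_totals.values())


def share_of_sessions(count: int, sessions: int = SESSIONS_ANALYZED) -> float:
    """Fraction of analyzed sessions in which a category was reported."""
    return count / sessions


print(total_by_category, total_by_checkpoint)
for name, count in sorted(category_counts.items(), key=lambda kv: -kv[1]):
    print(f"{name:14s} {count:2d}  {share_of_sessions(count):.0%}")
```

Both totals come to 109, so the per-category and per-checkpoint figures agree under this reading of the material and safety counts.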

Regarding prototyping for X (Figure 7), the teams rarely received feedback relating to viability (occurring once, at Checkpoint 3 with the highest fidelity prototype model) and often received feedback relating to desirability (21 times overall). Teams reported feedback on feasibility 10 times. As part of a larger study, these prototypes were also tested with parents/guardians and product design experts to compare the feedback across stakeholder types. That feedback is not reported here, but it did include more on the viability of the design. Because the Apple team did not fill out the final survey, their Checkpoint 3 responses are not included in Figures 6 and 7.

6.2 Affirmative feedback

Affirmative feedback is feedback that is generally positive and emphasizes that a specific quality of the prototype or the whole prototype is “good” and should be carried forward into the next iteration. The terms/phrases reported in the children’s feedback that were understood to be affirmative included: like, cute, better, cool, pretty, good, excited, fun, smiling, perfect, laughing, interest, focused, understood quickly, enjoyed, spent more time, loved, works well, and easy. For affirmative feedback on form, we found none for prototypes in the lower form fidelity range of 1 to 2.9 out of 5. Examples of affirmative feedback relating to form are “These animals are really cute.” (Apple team, 3.4 form fidelity) and “The cloud at the top of the mountain was cool.” (Lemon team, 3.1 form fidelity).

Affirmative feedback regarding function appeared to have less connection with the level of fidelity. One interesting insight was that affirmative feedback on function was often in contradiction with the intended function of the model. At a function fidelity of 2, the lowest classified function fidelity of the prototypes, the Strawberry team received feedback on their prototype (Figure 8) from the children stating, “I like how it [the train] bounces. It can go so fast [on the track]!” Even though the function did not work well as intended, the children were still able to express a positive interaction with their interpreted function in the model. The design team intended for the train to glide seamlessly along a magnetic track; however, the children created their own function of bouncing the train up and down along the track to create a “fun ride”. This affirmative feedback on the function also acts as divergent critical (perpendicular) feedback in that it suggested a new direction to the design team that was not intended to be present in the design, but this can only be learned by comparing it to the team’s initial design goals. We classify this as affirmative feedback since the children made affirming statements about the design, regardless of whether those interpretations were correct.

Figure 8. Strawberry team’s first checkpoint prototype of a magnetic levitating toy train on buildable wooden train tracks (form fidelity = 2.8/5 and function fidelity = 2/5).

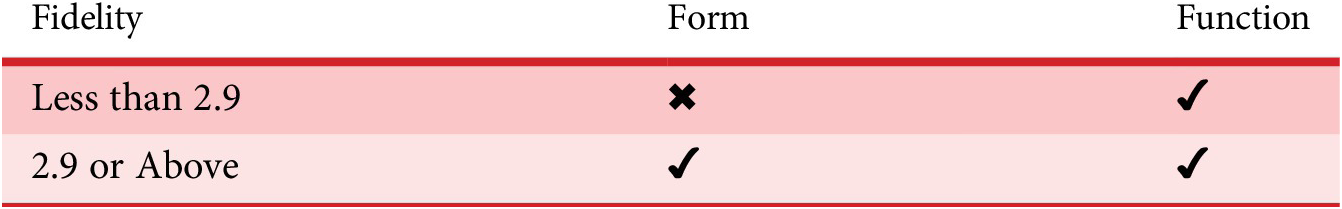

Table 3 displays whether form or function received affirmative feedback and in which range of fidelities. In Table 3, a checkmark indicates that affirmative feedback was given (function fidelity less than 2.9/5 and both form and function fidelity above 2.9/5), while an X indicates that affirmative feedback was not given (form fidelity less than 2.9/5). The cutoff at 2.9 was chosen because that was the lowest fidelity at which affirmative feedback on form was given and because it is close to the middle of the range. This cutoff was kept for all comparisons so that the tables are directly comparable. The pattern in Table 3 could indicate that children’s affirmative feedback may be impacted by the fidelity of a prototype in terms of form, but less so in terms of function.

Table 3. Affirmative feedback occurrence

6.3 Convergent critical feedback

Convergent critical feedback is related to areas for improvement on aspects that are already present in the prototype, typically because the quality of the prototype was lacking regarding form or function. Convergent critical feedback specifically refers to aspects that need to be designed or built better.

The convergent critical feedback the children gave on aspects of form did not vary much between low-fidelity and high-fidelity prototypes. Regarding size, fidelity appeared to have little effect on whether the children gave feedback. The child testers made it clear to the Strawberry team that the prototype with a form fidelity of 2.8 (Figure 8) was “kinda heavy.” At Checkpoint 2, the Raspberry team (3.7 form fidelity) also received feedback that the prototype was heavy (Figure 9e). The Cherry team, at Checkpoint 3 with a form fidelity of 4 (Figure 9a), found that the prototype “was a little big for their small hands.”

Figure 9. Examples of Convergent Critical Feedback Prototypes listed from left to right: (a) Cherry team’s final Checkpoint 3 prototype of a musical rainbow toy, (b) Lemon team’s first checkpoint prototype of wooden topographic building blocks, (c) Lime team’s second checkpoint prototype of wooden stackable game of food objects, (d) Lime team’s final Checkpoint 3 prototype of refined wooden stackable game of food objects, and (e) Raspberry team’s Checkpoint 2 prototype of a musical rotating xylophone.

Confusion on form was present at all three checkpoints. At Checkpoint 1 (Figure 9b), the children stated “the sizes and different colors were slightly confusing” regarding the Lemon team’s topography block set (2.9 form fidelity). The children did not understand the reference to topography and the specific use of those colors and shapes. At Checkpoint 2 (Figure 9c), the Lime team found that the children “could identify all the food but the chicken” (4.9 form fidelity), and at Checkpoint 3 (4.8 form fidelity) (Figure 9d) the children had “confusion on what some of the sandwich fillings were in terms of form.”

Feedback relating to sound was also given at two very distinct fidelities. One child tester, on the Strawberry team’s first prototype that had a form fidelity of 2.8 (Figure 8), stated “The noise it makes is not nice.” At Checkpoint 3 with a form fidelity of 4 (Figure 9a), the Cherry team received feedback that “[the children] wished the drums sounded better.” The children’s convergent critical feedback related to form therefore appears to have little relationship with the fidelity of the prototype.

Regarding function, the teams received convergent critical feedback at all fidelity levels. The type of feedback changed between checkpoints, depending on the team and the specific function in their models. For the five lowest ranked teams overall, the function fidelity at each stage was lower than that of the three highest ranked teams. By the last stage, the children were still reporting the same issues as before for these less successful teams. For example, for the Strawberry team, at Checkpoint 2 (function fidelity of 2.1, Figure 10a), the children noted, “It works very well with a section of track, but the train cannot go all the way around.” At Checkpoint 3 (function fidelity of 3.8, Figure 10b), the students observed “grimaces showing the problem of pushing the train at the junction points between the pieces of tracks.” These two examples of feedback demonstrate that the problem of a difficult track persisted into the next prototype, meaning the team did not address that feedback from the prior model.

Figure 10. Examples of Convergent Critical Feedback Prototypes from left to right: (a) Strawberry team’s second checkpoint prototype of a magnetic levitating toy train on train tracks, (b) Strawberry team’s final prototype of a magnetic levitating toy train on train tracks, (c) Lemon team’s second checkpoint prototype of a marble sorting game, and (d) Lemon team’s final prototype of a marble sorting game.

The top three highest ranked teams overall saw changes in the convergent critical feedback by the last stage, suggesting that the prototypes evolved throughout the design process. For example, with the Lime team, at Checkpoint 2 (function fidelity of 4.3, Figure 9c), a specific part of the stacking game, the avocado, “caused some challenge.” At Checkpoint 3 (function fidelity of 4.7, Figure 9d), the children “were a bit confused by the end goal and how you won the game.” The children reported different convergent critical feedback at each stage. Table 4 shows that children gave convergent critical feedback at all fidelities; a checkmark indicates that convergent critical feedback was given, for both form and function fidelity, below and above 2.9/5.

Table 4. Convergent critical feedback occurrence

6.4 Divergent critical feedback

Divergent critical feedback suggests a change beyond what is present in the prototype, whereas convergent critical feedback suggests improving qualities already in the prototype. This type of feedback was found to go in two different directions, parallel or perpendicular (Figure 11). Divergent critical feedback that is “parallel” includes suggestions that express a desire for more of what is already present in the prototype. In Figure 11, this is represented by the star symbol in prototype 1 (top left) evolving to multiple stars in prototype 2 (top right), thus more of the same thing being requested in the design. Divergent critical feedback that is “perpendicular” includes suggestions that introduce ideas beyond what is presented in the prototype. In Figure 11, this is represented by the same initial star symbol in prototype 1 (bottom left) evolving into a new geometric shape in prototype 2 (bottom right), thus something beyond what was initially shown in the design.

Figure 11. Illustration of how divergent critical feedback in terms of “parallel” (top) and “perpendicular” (bottom) feedback affects the prototype. Moving left to right you see a hexagon shape with a star symbol which represents prototype 1. Next to this is feedback from a person indicated with a person icon. Lastly, the type of feedback requested is shown in prototype 2 image for both parallel and perpendicular feedback.

For higher form fidelity models, the divergent critical feedback asked for more of the ideas already present. At Checkpoint 2 (4.8 form fidelity), the Plum team received the suggestions for “more colors,” “more colors of the same shape,” and “more smaller shapes.” Because the prototype was a set of acrylic tangrams with puzzle cards, it was built around colors and shapes (Figure 9). Again, at Checkpoint 3 (4.7 form fidelity), the Plum team received similar suggestions: “Make more shapes and add more colors.” and “More familiar shapes like hearts or diamonds.” At Checkpoint 3 with a form fidelity of 4, the Cherry team received feedback that the children “wanted different mallets.” While the children did suggest changes, the changes did not go beyond the ideas present in these higher fidelity models.

At Checkpoint 1, the Lime team already had a high form fidelity model with a fidelity of 4.5, then 4.9 at Checkpoint 2, and, finally, 4.8 at Checkpoint 3. This team designed a stackable sandwich toy that consisted of various wooden foods. The divergent critical feedback this team received was related to adding ingredients. At Checkpoint 1, they received the suggestion to “add the other half of the tomato.” The prototype had half of a tomato but not the other half needed to make a whole tomato. At Checkpoint 3, the team reported that the children “had ideas of ingredients to add.” While these ingredients were different from the foods that were present in the prototype, they were still building on the idea that was present and not introducing new concepts to the design.

When divergent critical feedback was reported for lower fidelity form models, this feedback tended to be “perpendicular” in nature (Figure 11), meaning that the feedback includes ideas beyond what is presented in the prototype. At Checkpoint 1, the Strawberry team’s feedback from the children included this suggestion, “It would be even cooler if there were lights on the magnets.” The prototype was a low-fidelity prototype (rated 2.8) of a magnetically levitating train. There were no lights or indication of lights on the prototype. The child was able to project their own ideas onto the model. In the same feedback on that model, there was also the suggestion to make the train red. The students had not added color to their model beyond the original colors of the materials. Again, the children were able to ideate what was not yet present in the model. For the Lemon team’s topographic toy in Checkpoint 1 (rated 2.9 for form fidelity), the children suggested that “the lands [to] be natural colors like green or white for winter.” While this suggestion relates to an aspect that was already present on the prototype, color, it also relates to another idea, the change of seasons. At this checkpoint, the prototype did not reference seasonal changes, only topographic changes. Relating to form, the children provided divergent critical feedback that goes beyond the concepts present in the existing prototypes when the prototype is lower fidelity. Receiving perpendicular divergent critical feedback on lower form fidelity prototypes from children could be beneficial when the goal of a testing session is ideating with children during the conceptual stage, since divergent thinking is valued. The more children are involved in the design process, such as being informants or design partners (Druin 2002), the better the setup should be to allow them to express their ideas freely. Giving the children a blank slate, such as a lower fidelity form prototype, allows them to see their own ideas on the prototype, which can help them generate ideas to share.

For function-related divergent critical feedback, the feedback found was perpendicular, branching out from the original function. All fidelities of models received feedback on more ways to play or use the toy. However, the high-fidelity models received feedback on more uses in addition to feedback on the intended use. For their Checkpoint 1 model (rated 4), the Plum team reported, “[the children] also started their own imagination with the pieces.” This function of using their imagination to play with the pieces varied from the intended function, which was to solve the puzzle card. At Checkpoint 3 (rated 4.8), they received similar feedback, “[The children] liked to make their own shapes out of the blocks.” Again, the children were adding another function to the product. The Lime team received similar feedback on their sandwich stacker prototype at Checkpoint 2 when their user stated, “They have a play kitchen and would like to be able to play with the food there too.” The user wanted to use the product in multiple formats. At Checkpoint 2, with a function fidelity of 4.7, the Raspberry team’s user understood how to move and play with the pieces but then they also “discovered a new way to spin” the toy. At Checkpoint 3, with a function fidelity of 4.6, the children wanted to add to the game play by adding a spinner, stating that “the spinner would take the game to the next level.” The children understood the base level of the game and wanted to expand on it. These examples of feedback demonstrate that the children understood the original functions of the prototypes and were able to add to those uses.

For low function fidelity prototypes, the divergent critical feedback involving the function was that the children created completely different functions than what was intended. Looking specifically at the Lemon team, at Checkpoints 1 and 2, the children missed the intended function and developed their own function with the parts in front of them. At Checkpoint 1, with a function fidelity of 5, “they thought it was a puzzle and not a building set.” The children changed the function not because they thought it would also be fun to do a puzzle, but because that was how they interpreted the prototype, missing the intentions of the designers. At Checkpoint 2, with a function fidelity of 3.3, regarding the mountain game with various parts that could be moved around as part of the gameplay, “[the children] didn’t have any kind of strategy in mind–they just thought it was fun to fill all the holes.” Again, the children did not understand the intended function of gameplay. Because they did not realize there was a specific way to play, they created their own function. The Apple team had technical issues at Checkpoint 2. The goal of the prototype (rated 2.4) was to be a train that played specific songs when different characters with computer chips were inserted into the conductor’s seat. When the team conducted their tests with the user, only the physical functions worked. The children played with the animals “in more areas than the conductor seat.” The children were adding functions, namely the free play of the characters and train cars; however, they were not able to interact with the intended function because it was not present in the model. This is an example of how low function fidelity may cause the intended function to be missed, after which the child creates their own definition for the use of the prototype. Table 5 is a summary of the fidelity ranges in which each type of divergent critical feedback was given.
In Table 5, a checkmark indicates that divergent feedback was given (parallel feedback on form fidelity above 2.9/5, perpendicular feedback on form fidelity less than 2.9/5, and perpendicular feedback on function fidelity less than and above 2.9/5), while an X indicates that divergent feedback was not given (parallel feedback on form fidelity less than 2.9/5, perpendicular feedback on form fidelity above 2.9/5, and parallel feedback on function fidelity less than and above 2.9/5).

Table 5. Parallel or perpendicular divergent critical feedback occurrence

Table 6 is a summary of the results as an answer to the research question, “How does children’s feedback change based on the different fidelities of prototypes?” The results are organized by type of feedback as well as whether it related to form or function.

Table 6. Summary of findings related to types of feedback (affirmative, convergent critical, divergent critical) and prototype fidelity (form and function)
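
The checkmark/X pattern described for Tables 3 to 5 can be read as a small lookup. The following Python sketch is illustrative only and is not part of the study’s analysis: the names `EXPECTED`, `fidelity_band`, and `feedback_observed` are hypothetical, the 1 to 5 score range is taken from the ratings quoted in the text, and placing a score of exactly 2.9 in the lower band is an assumption, since the tables only describe scores “less than” and “above” 2.9/5.

```python
# Illustrative lookup of the checkmark/X pattern described for Tables 3-5.
# All names are hypothetical; this is not the authors' analysis code.

CUTOFF = 2.9  # fidelity cutoff (out of 5) used for all comparisons in the text

# (feedback type, fidelity dimension, band) -> was this feedback observed?
EXPECTED = {
    # Table 3: affirmative feedback
    ("affirmative", "form", "low"): False,
    ("affirmative", "form", "high"): True,
    ("affirmative", "function", "low"): True,
    ("affirmative", "function", "high"): True,
    # Table 4: convergent critical feedback
    ("convergent", "form", "low"): True,
    ("convergent", "form", "high"): True,
    ("convergent", "function", "low"): True,
    ("convergent", "function", "high"): True,
    # Table 5: divergent critical feedback, parallel
    ("divergent_parallel", "form", "low"): False,
    ("divergent_parallel", "form", "high"): True,
    ("divergent_parallel", "function", "low"): False,
    ("divergent_parallel", "function", "high"): False,
    # Table 5: divergent critical feedback, perpendicular
    ("divergent_perpendicular", "form", "low"): True,
    ("divergent_perpendicular", "form", "high"): False,
    ("divergent_perpendicular", "function", "low"): True,
    ("divergent_perpendicular", "function", "high"): True,
}


def fidelity_band(score: float) -> str:
    """Map a fidelity score to a band.

    Assumption: a score of exactly 2.9 falls in the lower band.
    """
    if not 1 <= score <= 5:
        raise ValueError("fidelity scores are expected to lie between 1 and 5")
    return "low" if score <= CUTOFF else "high"


def feedback_observed(kind: str, dimension: str, score: float) -> bool:
    """Return whether this kind of feedback was observed in the study's data."""
    return EXPECTED[(kind, dimension, fidelity_band(score))]


# Strawberry team, Checkpoint 1: form fidelity 2.8, function fidelity 2.
print(feedback_observed("affirmative", "form", 2.8))
print(feedback_observed("affirmative", "function", 2.0))
print(feedback_observed("divergent_perpendicular", "form", 2.8))
```

Laid out this way, only the form rows change between the lower and higher bands, while the function rows are the same in both. The lookup describes what was observed in this data set and should not be read as a prediction for other studies.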

7 Discussion

7.1 Affirmative feedback and fidelity

From our results, we learned that the children did not give affirmative feedback on prototypes with a form fidelity lower than 2.9 out of 5. Not getting affirmation from the user could be discouraging to designers. However, this should not be a reason for designers to avoid using low form fidelity prototypes. These low-fidelity prototypes are usually quick renditions of an idea that can communicate the intentions of the designer to the stakeholder. In our study, we saw no connection between function fidelity and affirmative feedback on function. Children gave affirmative feedback on the functions of the models; even when they misunderstood the intended function, they still enjoyed the function as they understood it. Putting more time into making a higher fidelity form could postpone receiving feedback that could have a greater impact on the direction of the design. It is important that designers, especially new designers, have realistic expectations of affirmative feedback. This is an area for continued future research, specifically how form fidelity impacts the feedback received, along with developing heuristics and guidelines to prepare designers for building and testing prototypes.

Fidelity may not have affected the amount of ‘Affirmative Feedback’ relating to function, because children are often able to create functional interactions with any object as a way of making it “fun” and “entertaining” for them. Even if a child does not understand the intended function, they are often able to create their own function in the design, and thus be affirmative about that “made-up” function. Children find it humorous when something goes wrong, such as something falling or breaking (Loizou 2005; Loizou & Kyriakou 2016). If a prototype is broken or working in a different way than the designer intended, the child may still find it entertaining and thus give affirmative feedback on the function. Designers should be aware of this possible bias toward the absurd, understanding that affirmative feedback on function may not mean the prototype works well, but just that children found it entertaining. In this case, it may be good to have a second testing participant, such as a parent, to understand whether the prototype is entertaining in its concept or in its faults. Fidelity may not have affected the amount of ‘Convergent Critical Feedback’ relating to form and function because no matter how well the prototype is designed, if the child testing it identifies a problem, it will need to be fixed.

7.2 Divergent critical feedback and fidelity

For prototypes with lower form fidelity, children in this study were more likely to give divergent feedback, including new ideas. Because a prototype with lower form fidelity looks less complete, there is space for children to project their own ideas onto the prototype. If the designer is at a stage where they are not down-selecting and converging, but are instead still diverging in the conceptual stage, then they may seek ideas from their users, in this case the children, during prototype testing. To encourage more ideas from children, it would be helpful to create a prototype that has a lower form fidelity. Because the researchers were unable to control the formal qualities that were presented in the prototypes, further research could isolate the various form qualities (e.g., color, size). Such research could give further insight into which qualities inhibit or encourage creativity from the children in their feedback relating to prototyping.

Divergent critical feedback relating to function was given at all levels of fidelity; however, there were differences in how the feedback was given. When divergent critical feedback was given for higher function fidelity, the feedback was given in addition to feedback on the intended function, demonstrating that the children understood the original function. When this kind of feedback was given for lower function fidelity prototypes, the children only gave feedback on the non-intended use. If the designer’s goal in the testing session is to get feedback on the intended function, it would be helpful to create the function at a higher fidelity. If the designer wants to see how the children would naturally use the object without the bias of a specific function, it may be helpful to create a lower function fidelity model.

7.3 Purposeful prototyping

Prototypes as critical design objects allow for communication between stakeholders (Lauff et al. 2020; Deininger et al. 2019a). In this research, we looked at how children as stakeholders give feedback to design teams through prototypes. Understanding at what fidelity children are more likely to give specific types of feedback allows for more purposeful prototyping, or Prototyping for X, by the design team (Menold et al. 2017).

For example, if a design team wants to test for feasibility, this research shows that more teams received feasibility feedback at Checkpoint 1. Prototyping for feasibility is the “practice of creating prototypes that test the technical functionality of the design” (Menold et al. 2017). When we asked the students if they received feedback in the category ‘Feasibility,’ four teams reported receiving feedback at Checkpoint 1 while only two teams reported it at Checkpoints 2 and 3. The students could have been specifically seeking to understand the feasibility of the prototype during the first checkpoint testing session. Following the design concept of “form follows function” (Lambert 1993; Sullivan 1896), the expected start for the students would have been to make sure the technical functionality works in the design. If this is the pattern the students were aiming to follow, it would have been beneficial for the students to know earlier in the design process whether the concept works so that they could either fix the problem in the next iteration or refine the prototype in other aspects, such as form. Each team should consider their goals and what they hope to learn from the children as the stakeholder before designing their prototype.

8 Conclusions

Children are a specific population of end user, and products developed for children should address their specific needs. One way to find these needs is to test prototypes with children. This paper begins to answer the question, “How does children’s feedback change based on the different fidelities of prototypes?” In this research, we looked at both fidelity as the product evolved chronologically through three prototyping checkpoints (low, medium, and high) as well as fidelity in terms of form and function. Through this analysis we learned children’s feedback can be affected by form and function fidelity of prototypes. For affirmative feedback, children are more affirmative toward products with higher form fidelity; relating to function, children are affirmative throughout the range of fidelities, even when those functions may be misunderstood. Critical feedback that suggests improving the current aspects of the prototype is given for form and function at all fidelities. Critical feedback that suggests additions be made to the prototype is also given at all fidelities. However, involving form, higher fidelity models are more likely to receive feedback that asks for more of the same aspects that are already present. Lower fidelity models are more likely to receive feedback to add aspects that are not yet present in the model. Lastly, a wider variety of feedback was given for Checkpoint 1, which was in general lower fidelity models, and then the amount of feedback categories decreased throughout the other two checkpoints. Teams received the most feedback from children on categories: play value, form, interaction, function, color, size, desirability, and feasibility and received the least feedback on categories: materials, safety, marketability, and viability.

In summary, children give helpful feedback that can guide the future direction of a product designed for them; the type of feedback given (affirmative, convergent critical, divergent critical) and categories of feedback (i.e. desirability, feasibility, viability) can change based on the fidelity of the prototype. Understanding the design team’s intent, “Prototyping for X”, along with understanding how children’s feedback changes with different fidelities can benefit designers in the planning and preparation of their models along with the analysis of their prototype testing sessions.

Acknowledgements

We would like to thank Dr. Barry Kudrowitz for allowing us to use his course for data collection. We would like to thank the students in the class who provided us with their prototypes and feedback after each iteration. We would like to thank the course lab instructors who gave feedback on prototype fidelity.

Financial support

This research was supported in part by the Kusske Design Initiative (KDI) and the Imagine Grant at the University of Minnesota – Twin Cities.

Appendix A

Prototype testing questionnaire – Google Form for End User

Please fill out this form after you have performed testing with your prototype. This survey needs to be filled out for EACH prototype that is created on your team and for EACH stakeholder group tested with at that stage.

1. Email Address
2. Team Color
3. Prototype Name
4. Which session of prototyping testing is this? Select one.
   a. Stage 1 - Low fidelity prototypes (models due March 3rd)
   b. Stage 2 - Medium fidelity prototypes (models due March 31st)
   c. Stage 3 - High fidelity prototype (models due May 5th)
5. Which stakeholder group are you testing your prototype with during this testing session? Select one.
   a. End user (i.e. child, elderly)
   b. Parent or Caretaker
   c. Expert (i.e. play/design/toys/industry)
6. What is your age group of your end user? Select all that apply.
   a. 0-2 years
   b. 2-4 years
   c. 4-7 years
   d. 7-11 years
   e. 11-14 years
   f. 14-18 years
   g. 18-60 years
   h. 60+ years
7. How many participants of this stakeholder group did you test with?
8. Where did testing take place?
9. What was the duration of the testing session?
10. Describe your prototype at this stage (materials, functionality, colors, size, etc.). (Example: Our prototype is made of cardboard, attached by hot glue, some stickers were added to serve as “functions,” and it is about 10x10x8 inches.)
11. Submit a picture of the prototype. Upload PDF file.
12. What were you hoping to learn from this testing session?
13. How long did the participant(s) interact with the product? Estimate in minutes for each person you tested with and list the times below. (For example, if you tested with two children please list: (1) child 1 for 10 minutes and (2) child 2 for 15 minutes.)
14. Which categories did you receive feedback in regarding the prototype? Select all that apply. If none of these categories apply, please select “Other” and type the category (or categories) that does apply.
   a. Function
   b. Form
   c. Interaction
   d. Size
   e. Color
   f. Material
   g. Safety
   h. Emotional
   i. Play value
   j. Marketability
   k. Other: ______
15. Which category did you receive feedback in regarding the future product? Select all that apply. If none of these categories apply, please select “Other” and type the category (or categories) that does apply.
   a. Feasibility
   b. Desirability
   c. Viability (includes Marketability)
   d. Other: ______
16. What verbal feedback did you receive? Please provide examples or a summary.
17. What nonverbal feedback did you receive? Nonverbal can be gestures, facial expression, and other body language. Please provide examples or a summary.
18. What is your plan continuing forward? How will you implement the participant’s feedback?

-

19. How helpful did you find the feedback from the participant?

-

a. 1: Not Helpful

-

b. 2

-

c. 3

-

d. 4

-

e. 5: Very Helpful

-

-

20. Why did you choose that rating for helpfulness?