INTRODUCTION

Hospitalized patients are at risk for bloodstream infections (BSIs), especially within intensive-care units (ICUs). Most BSIs originate from central vascular catheters (CVCs) [Reference Crnich and Maki1]; they prolong hospitalization and increase both healthcare costs and attributable mortality [Reference Rosenthal2]. The efficacy of nosocomial infection surveillance programmes has been demonstrated in both Western Europe and the United States [Reference Haley3, Reference Gastmeier4]. The Centers for Disease Control and Prevention (CDC) Intravenous Guidelines (introduced in the 1980s and updated in 2002) [Reference O'Grady5] are used throughout the United States and in other countries. Intravenous (i.v.) fluids are at high risk of contamination during set-up, admixture preparation and administration [Reference Macias6, Reference Maki, Anderson and Shulman7], and there is an additional risk of extrinsic contamination whenever the system is vented, as is mandatory with open infusion systems.

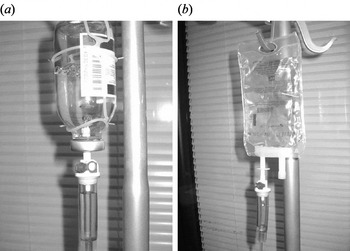

There are two types of i.v. infusion containers (open and closed infusion systems, Fig. 1) in use worldwide [Reference Rosenthal8, Reference Rosenthal and Maki9]. Open i.v. infusion containers are rigid (glass, burette) or semi-rigid plastic containers that must admit air to empty (air filter or needle). Closed i.v. infusion containers are fully collapsible plastic containers that do not require or use any external vent (air filter or needle) to empty the solution, and injection ports are self-sealing.

Fig. 1. Open and closed intravenous (i.v.) infusion containers. (a) Open i.v. infusion container: glass container with air filter. (b) Closed i.v. infusion container: fully collapsible plastic container without air filter.

In general, closed systems are being incorporated into standard practice to prevent healthcare-associated infections (HAIs), e.g. catheter-associated urinary tract infections (CAUTI) [Reference Epstein10], ventilator-associated pneumonia (VAP) [Reference Tablan11], surgical site infection [Reference Mangram12], and central vascular catheter-associated bloodstream infections (CVC-BSI) [Reference Rosenthal8, Reference Rosenthal and Maki9]. Outbreaks of infusion-related BSI traced to contamination of infusate in open infusion systems have been reported in numerous countries [Reference Macias6, Reference Maki13–Reference Matsaniotis17], and other studies have shown that extrinsic, or in-use, contamination plays the most important role in bacterial contamination of the infusion system [Reference Kilian18, Reference McAllister, Buchanan and Skolaut19]. A study in Argentina of the clinical impact and cost-effectiveness of closed i.v. infusion containers, compared with semi-rigid plastic open i.v. infusion containers, showed that switching from the open to the closed container reduced the BSI rate by 64%; however, the time to onset of CVC-BSI was not determined [Reference Rosenthal and Maki9].

We report here the results of a prospective, sequential study undertaken to determine the impact of switching from an open (glass) to a closed, fully collapsible, plastic i.v. infusion container (Viaflo®, Baxter S.p.A, Italy) on the rate of CVC-BSI, and on the time to its onset, in four ICUs in Italy.

METHODS

Setting

The study was conducted in four ICUs at Sacco Hospital, a university hospital in Milan, Italy. Sacco has an active infection control programme staffed by a physician trained in infectious diseases and two infection control nurses. Three of the four ICUs operate at the highest level of complexity, providing treatment for medical, surgical and trauma patients. The hospital ethics committee approved the protocol.

Data collection

Patients who had a CVC in place for ⩾24 h were enrolled from each of the study ICUs. A trained nurse prospectively recorded the following on case-report forms: each patient's gender, average severity-of-illness score (ASIS) on ICU entry [Reference Emori20], device utilization, antibiotic exposure, and all active infections identified while in the ICU. The decision to obtain blood cultures was made independently by each patient's physicians. Standard laboratory methods were used to identify microorganisms recovered from positive blood cultures.

Definitions

United States CDC National Nosocomial Infections Surveillance (NNIS) System definitions were used to define device-associated infections: CVC-BSI (laboratory-confirmed BSI, LCBI; and clinical primary nosocomial sepsis, CSEP). These definitions are given below.

Laboratory-confirmed BSI

Criterion 1

Patient had a recognized pathogen cultured from one or more percutaneous blood cultures, and the pathogen cultured from the blood was not related to an infection at another site. With common skin commensals (e.g. diphtheroids, Bacillus spp., Propionibacterium spp., coagulase-negative staphylococci, or micrococci), the organism was cultured from two or more blood cultures drawn on separate occasions.

Criterion 2

Patient had at least one of the following signs or symptoms: fever (>38°C), chills, or hypotension not considered to be related to an infection at another site.

Clinical primary nosocomial sepsis

Patient had at least one of the following clinical signs, with no other recognized cause: fever (>38°C), hypotension (systolic pressure <90 mmHg) or oliguria (<20 ml/h), and either blood cultures were not obtained or no organisms were recovered from them. There was no apparent infection at any other site, and the physician instituted treatment for sepsis [Reference Garner21].
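LCBI Criterion 1 and the CSEP definition above are, in effect, decision rules. A minimal Python sketch of how they might be encoded is shown below. It assumes a hypothetical `Episode` record (the field names are ours, not part of the NNIS definitions) and treats "infection at another site" as a single yes/no flag. Criterion 2 is deliberately not encoded. This illustrates the rules as worded here and is not a validated surveillance tool.

```python
from dataclasses import dataclass, field

# Common skin commensals named in Criterion 1 (matched case-insensitively).
SKIN_COMMENSALS = {
    "diphtheroids",
    "bacillus spp.",
    "propionibacterium spp.",
    "coagulase-negative staphylococci",
    "micrococci",
}


@dataclass
class Episode:
    """Hypothetical record of one suspected BSI episode."""
    # Organism name -> number of positive percutaneous blood cultures
    # drawn on separate occasions.
    positive_cultures: dict = field(default_factory=dict)
    infection_at_other_site: bool = False  # simplification: one flag for all sites
    fever: bool = False                    # >38 degrees C
    hypotension: bool = False              # systolic pressure <90 mmHg
    oliguria: bool = False                 # <20 ml/h
    other_recognized_cause: bool = False
    treated_for_sepsis: bool = False


def meets_lcbi_criterion_1(ep: Episode) -> bool:
    """Recognized pathogen in >=1 culture, or a skin commensal in >=2 separate
    cultures, not related to an infection at another site."""
    if ep.infection_at_other_site:
        return False
    for organism, n_cultures in ep.positive_cultures.items():
        needed = 2 if organism.lower() in SKIN_COMMENSALS else 1
        if n_cultures >= needed:
            return True
    return False


def meets_csep(ep: Episode) -> bool:
    """Qualifying sign with no other recognized cause, no organism recovered
    (or no cultures taken), no apparent infection elsewhere, and sepsis
    treatment instituted by the physician."""
    has_sign = ep.fever or ep.hypotension or ep.oliguria
    no_organism = not any(n > 0 for n in ep.positive_cultures.values())
    return (has_sign and not ep.other_recognized_cause and no_organism
            and not ep.infection_at_other_site and ep.treated_for_sepsis)
```

For example, a single positive culture of coagulase-negative staphylococci does not meet Criterion 1 in this sketch, whereas two separate positive cultures do.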

Open infusion container

Rigid (glass, burette) or semi-rigid plastic containers that must admit air to empty (air filter or needle).

Closed infusion container

Fully collapsible plastic containers that do not require or use any external vent (air filter or needle) to empty the solution, and the injection ports are self-sealing.

Investigational products

Baxter Viaflo® (Baxter S.p.A), a fully collapsible plastic bag, was used during the closed period. Commercially available glass containers (open infusion system) were used during the open period.

Study design

Active surveillance for CVC-BSI and for compliance with infection control practices continued throughout the study using CDC NNIS methodologies, definitions and criteria [Reference Emori20]. The study consisted of a lead-in period followed by the open and closed container periods. The lead-in period was designed to measure the baseline incidence of CVC-BSI and to standardize hand hygiene (HH) and CVC care compliance. The open and closed container periods each lasted 12 months and covered the same calendar months (March 2004–February 2005 and March 2005–February 2006, respectively).

Protocol-specified target HH and CVC care compliance was set at ⩾70% and ⩾95%, respectively. We assessed HH compliance [Reference Rosenthal22], placement of gauze on CVC insertion sites [Reference Rosenthal23, Reference Higuera24], condition of the gauze dressing (absence of blood, moisture and gross soiling; occlusive coverage of the insertion site) [Reference Rosenthal23, Reference Higuera24], and documentation of the date of CVC insertion. A research nurse observed healthcare workers (physicians, nurses and paramedical staff) twice weekly across all work shifts and recorded the findings on a standard form.

Data analysis

Outcomes measured during the open and closed periods included the incidence density rate of CVC-BSI (number of cases/1000 CVC days) and the time to first CVC-BSI. χ2 tests for dichotomous variables and t tests for continuous variables were used to analyse baseline differences between periods. Unadjusted relative risks (RRs), 95% confidence intervals (CIs) and P values were determined for all primary and secondary outcomes. Time to first BSI was analysed using a log-rank test and presented graphically using Kaplan–Meier curves. In addition, simple life-table conditional probabilities are presented graphically to help explain the changing risk of infection over time (Fig. 2). A Cox proportional hazards analysis was performed to estimate the hazard ratio between periods. No formal test of the proportional hazards assumption was performed; however, the plot of the estimated survival functions suggested that the assumption was not severely violated.
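To illustrate the log-rank comparison of time to first BSI, the sketch below implements the standard two-sample log-rank statistic in plain Python. The function name and input layout (follow-up in CVC days plus an event flag, with catheter removal or ICU discharge treated as censoring) are assumptions for this example; the statistical software used in the study is not stated.

```python
import math


def log_rank_test(times_a, events_a, times_b, events_b):
    """Two-sample log-rank test for time to first BSI.

    times_*  : follow-up in CVC days (to first BSI or to censoring).
    events_* : 1 if a first BSI occurred at that time, 0 if censored.
    Returns (chi-square statistic with 1 df, two-sided P value).
    """
    # Pool both groups, tagging each patient with group 0 (A) or 1 (B).
    data = [(t, e, 0) for t, e in zip(times_a, events_a)]
    data += [(t, e, 1) for t, e in zip(times_b, events_b)]
    event_times = sorted({t for t, e, _ in data if e})

    observed_minus_expected = 0.0  # for group A
    variance = 0.0
    for t in event_times:
        n_a = sum(1 for ti, _, g in data if ti >= t and g == 0)
        n_b = sum(1 for ti, _, g in data if ti >= t and g == 1)
        n = n_a + n_b
        d = sum(1 for ti, e, _ in data if ti == t and e)
        d_a = sum(1 for ti, e, g in data if ti == t and e and g == 0)
        # Expected events in A if both groups share the same hazard at time t.
        observed_minus_expected += d_a - d * n_a / n
        if n > 1:
            # Hypergeometric variance of d_a given the margins at time t.
            variance += d * (n_a / n) * (n_b / n) * (n - d) / (n - 1)

    chi2 = observed_minus_expected ** 2 / variance if variance > 0 else 0.0
    # Upper tail of a chi-square with 1 df equals P(|Z| > sqrt(chi2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value
```

Two groups with identical follow-up and events give a statistic of 0 and P=1; the test only compares whole survival curves, which is why the hazard ratio is estimated separately with a Cox model.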

Fig. 2. Cumulative probability of first bloodstream infection (BSI) displayed by days on central vascular catheter (CVC) for closed vs. open containers. Numbers at risk: closed (day 0=507, day 4=505, day 7=123, day 10=30, day 12=10); open (day 0=558, day 4=553, day 7=116, day 10=28, day 12=7).
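Fig. 2 plots the cumulative probability of a first BSI, i.e. 1 − S(t) from a Kaplan–Meier estimate. A minimal product-limit sketch is given below; the function name and inputs are hypothetical, and ties follow the usual convention that patients censored on an event day are still counted as at risk on that day.

```python
def kaplan_meier_cumulative_incidence(times, events):
    """Product-limit (Kaplan-Meier) estimate of the cumulative probability of a
    first BSI, 1 - S(t), evaluated at each distinct event time.

    times  : CVC days to first BSI or to censoring (e.g. catheter removal).
    events : 1 = first BSI at that time, 0 = censored.
    """
    survival = 1.0
    curve = []
    for t in sorted({t for t, e in zip(times, events) if e}):
        # Patients still catheterized and BSI-free just before day t.
        at_risk = sum(1 for ti in times if ti >= t)
        d = sum(1 for ti, e in zip(times, events) if ti == t and e)
        survival *= 1 - d / at_risk
        curve.append((t, 1 - survival))
    return curve
```

The "numbers at risk" in the caption correspond to `at_risk` in this sketch, evaluated at the listed days.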

RESULTS

During the study, 1173 patients were enrolled: 608 during the open period and 565 during the closed period. Patients in the two periods did not differ significantly in demographics, underlying illness, length of stay, device utilization or antibiotic usage, except for ASIS score, abdominal surgery and stroke (Table 1).

Table 1. Patient demographics, underlying illness, length of stay, CVC-device utilization and antibiotic usage during the two study periods

RR, Relative risk; CI, confidence interval; COPD, chronic obstructive pulmonary disease; ICU, intensive care unit; CVC, central vascular catheter.

HH compliance exceeded 70% in both periods (73·2% and 86·6% during the open and closed periods, respectively; RR 1·18, 95% CI 1·15–1·22). The rate of gauze presence at the CVC site was 98·3% and 98·8% during the open and closed periods, respectively (RR 1·01, 95% CI 1·00–1·01), and the rate of correct gauze condition was 95·8% and 97·0% during the same periods (RR 1·01, 95% CI 1·01–1·02).
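The RRs above are ratios of two proportions (closed over open period), so their point estimates can be checked from the reported percentages; for HH compliance, 86·6/73·2 ≈ 1·18. The sketch below also computes a log-scale (Katz) Wald 95% CI. That interval method is our assumption, since the paper does not state which was used, and the underlying numbers of observations are not reported here, so any counts passed to the function should be treated as hypothetical.

```python
import math
from statistics import NormalDist


def risk_ratio(x1, n1, x0, n0, level=0.95):
    """Risk ratio (x1/n1) / (x0/n0) with a log-scale Wald (Katz) confidence interval.

    x1, n1: events and total in the comparison group (e.g. closed period).
    x0, n0: events and total in the reference group (e.g. open period).
    """
    rr = (x1 / n1) / (x0 / n0)
    # Standard error of log(RR) by the delta method.
    se = math.sqrt(1 / x1 - 1 / n1 + 1 / x0 - 1 / n0)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return rr, rr * math.exp(-z * se), rr * math.exp(z * se)


# Point estimate from the reported HH compliance percentages (closed vs. open).
print(round(86.6 / 73.2, 2))  # 1.18
```

The CI width depends on the number of observed opportunities, which is why two comparisons with similar percentages can have very different intervals.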

Both the incidence density rate of CVC-BSI and the percentage of patients with CVC-BSI were significantly lower in the closed than in the open period (Table 2). The distribution of microorganisms is given in Table 3.
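The incidence density rate is the number of CVC-BSI cases divided by total CVC days, scaled to 1000 CVC days. A minimal sketch of that calculation, plus a rate ratio with a log-scale Wald CI under a Poisson assumption, is shown below. The CI method is our assumption and the example counts are hypothetical, not the study's data.

```python
import math
from statistics import NormalDist


def incidence_density(cases, device_days, per=1000):
    """CVC-BSI incidence density: cases per `per` CVC days."""
    return cases / device_days * per


def rate_ratio(cases1, days1, cases0, days0, level=0.95):
    """Rate ratio (group 1 vs. group 0) with a log-scale Wald CI,
    treating case counts as Poisson."""
    rr = (cases1 / days1) / (cases0 / days0)
    se = math.sqrt(1 / cases1 + 1 / cases0)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return rr, rr * math.exp(-z * se), rr * math.exp(z * se)
```

For example, 10 hypothetical cases over 2500 CVC days give 4·0 cases per 1000 CVC days; halving the cases over the same CVC days gives a rate ratio of 0·5.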

Table 2. Incidence of CVC-associated BSI (LCBI and CSEP), CAUTI, VAP, and mortality during the two study periods

CVC, Central vascular catheter; BSI, bloodstream infection; LCBI, laboratory-confirmed bloodstream infections; CSEP, clinical primary nosocomial sepsis; CAUTI, catheter-associated urinary tract infection; VAP, ventilator-associated pneumonia; RR, relative risk; CI, confidence interval.

Table 3. Microbial profile of CVC-associated BSI (LCBI) during the two study periods

CVC, Central vascular catheter; BSI, bloodstream infection; LCBI, laboratory-confirmed bloodstream infections.

We compared the timing of the first CVC-BSI between the open and closed i.v. infusion container periods (Fig. 2). The majority (70%) of patients had a CVC in place for ⩽4 days. In the closed period, the conditional probability of a first CVC-BSI remained relatively constant (from 0·8% at days 1–3 to 1·4% at days 7–9), whereas in the open period it increased (from 2% at days 1–3 to 5·8% at days 7–9). Overall, the hazard of acquiring a CVC-BSI was 61% lower in the closed period (Cox proportional hazards ratio 0·39, P=0·0043). There was no statistically significant difference between the two periods in the incidence of CAUTI or VAP, or in mortality (Table 2).
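The conditional probabilities quoted above (e.g. 0·8% at days 1–3) are life-table quantities: the probability of a first BSI within an interval among patients who entered that interval still catheterized and infection-free. The sketch below computes them in 3-day intervals. The actuarial half-interval adjustment for censored patients is our assumption, as the paper describes only "simple life-table conditional probabilities", and the function name is hypothetical.

```python
def life_table(times, events, width=3, max_day=12):
    """Conditional probability of a first BSI in each interval (start, start + width]
    of CVC days, given that the patient entered the interval still at risk.

    times  : CVC days to first BSI or to censoring.
    events : 1 = first BSI, 0 = censored.
    Returns rows of (first_day, last_day, entering, events, conditional_probability).
    """
    rows = []
    for start in range(0, max_day, width):
        end = start + width
        # Still catheterized and BSI-free at the start of the interval.
        entering = sum(1 for t in times if t > start)
        events_in = sum(1 for t, e in zip(times, events) if start < t <= end and e)
        censored_in = sum(1 for t, e in zip(times, events) if start < t <= end and not e)
        # Actuarial assumption: censored patients are at risk for half the interval.
        effective = entering - censored_in / 2
        q = events_in / effective if effective > 0 else 0.0
        rows.append((start + 1, end, entering, events_in, q))
    return rows
```

With integer CVC days and `width=3`, the first three rows correspond to days 1–3, 4–6 and 7–9, the intervals used in the text.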

DISCUSSION

Central venous access for administration of large volumes of i.v. fluid, medications or blood products, or for haemodynamic monitoring, is commonly required for critically ill patients. Unfortunately, the use of CVCs carries a substantial risk of BSI [Reference Rosenthal8, Reference Ramirez25–Reference Leblebicioglu33]. Studies have shown that CVC-BSI increases length of stay, cost and attributable mortality [Reference Rosenthal2, Reference Stone, Braccia and Larson34, Reference Higuera35]. In Mexico, Higuera et al. found that CVC-BSI resulted in an extra 6 days of stay and an extra cost of US$11 560 [Reference Higuera35], while in Argentina, Rosenthal et al. reported an extra 12 days and an extra cost of US$4888 for CVC-BSI [Reference Rosenthal2]. A recent meta-analysis of cost studies published in the preceding 5 years found that the average cost of one BSI was US$36 441 (range US$1822–107 156) [Reference Stone, Braccia and Larson34]. Most importantly, CVC-BSIs appear to be associated with increased mortality: Pittet and colleagues reported an attributable mortality of 25% [Reference Pittet and Wenzel36, Reference Pittet, Tarara and Wenzel37]. Yet CVC-BSIs are largely preventable [Reference O'Grady5, Reference Jarvis38], and randomized trials have documented the efficacy of simple interventions, including, but not limited to, mandating the use of maximal barrier precautions during CVC insertion [Reference Raad39]. Implementation of infection control programmes (outcome and process surveillance plus education and performance feedback) has also been shown to reduce rates of CVC-BSI [Reference Rosenthal23, Reference Higuera24].

Most epidemics of infusion-related BSI have been a direct consequence of contamination of infusate or catheter hubs [Reference Crnich and Maki1]. Intrinsic contamination of parenteral fluids (microorganisms introduced during manufacture) is now considered very rare in North America [Reference Crnich and Maki1]. Widespread use of closed infusion systems has also reduced the risk of extrinsic contamination of infusate during administration in the hospital setting.

Many hospitals throughout the world still use open infusion systems. In this study, a glass, open i.v. infusion container was associated with a high rate of CVC-BSI, whereas switching to a fully collapsible, closed i.v. infusion container significantly reduced the BSI rate. To evaluate the effect of time on CVC-BSI, the probability of developing a CVC-BSI was assessed in 3-day intervals during each period. The 2002 CDC guidelines [Reference O'Grady5] recommend against routinely replacing CVCs at fixed intervals. In the present study, the BSI rate during the closed period remained constant and reached levels reported by the NNIS system, whereas the probability of a BSI during the open period increased significantly over time and exceeded NNIS levels. Because the risk of BSI did not rise with catheter duration when the closed system was used, the CDC recommendation against routine catheter replacement can be followed with this system.

When comparing CVC-BSI results between studies, it is important to display and assess the distribution of CVC duration across patients; otherwise, cross-study comparisons can be misleading. For example, when the hazard of BSI is not constant over time, a study in which most patients have 2–4 CVC days and a study in which most patients have 10–12 CVC days would observe very different CVC-BSI rates, even if the total number of CVC days, the patient population and all other characteristics of study design and conduct were identical.
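The point about non-constant hazards can be made concrete with a small worked example. The sketch assumes a hypothetical daily hazard of first BSI, that every patient in a study keeps the catheter for the same number of days, and that CVC days are counted in full whether or not a BSI occurs. Under those assumptions the number of patients cancels, so two studies with the same total CVC days (e.g. 300 patients × 4 days vs. 100 patients × 12 days) differ only in catheter duration. The hazard values are illustrative and are not estimates from this study.

```python
def expected_rate_per_1000(daily_hazard, days_per_patient):
    """Expected first-BSI rate per 1000 CVC days when every patient keeps the CVC
    for `days_per_patient` days (hypothetical model, CVC days counted in full).

    daily_hazard(d) is the probability of a first BSI on day d given none earlier.
    """
    bsi_free = 1.0
    for d in range(1, days_per_patient + 1):
        bsi_free *= 1 - daily_hazard(d)
    # Expected first BSIs per patient divided by CVC days per patient;
    # the number of patients cancels out of the ratio.
    return 1000 * (1 - bsi_free) / days_per_patient


def increasing_hazard(day):
    """Hypothetical hazard rising by 0.2 percentage points per CVC day."""
    return 0.002 * day


def constant_hazard(day):
    """Hypothetical constant hazard of 0.5% per CVC day."""
    return 0.005


if __name__ == "__main__":
    # Same total CVC days in each study: 300 x 4 = 100 x 12 = 1200.
    for name, hazard in [("increasing", increasing_hazard), ("constant", constant_hazard)]:
        rate_short = expected_rate_per_1000(hazard, 4)
        rate_long = expected_rate_per_1000(hazard, 12)
        print(f"{name}: 4-day study {rate_short:.1f}, 12-day study {rate_long:.1f} per 1000 CVC days")
```

With the increasing hazard, the 4-day study yields roughly 5 BSIs per 1000 CVC days and the 12-day study roughly 12, whereas with the constant hazard both are close to 5.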

Although no difference in mortality was found between the two periods, the study had low power to detect such a difference, because the sample size was small relative to a mortality endpoint, there were few BSI-related deaths, and follow-up was short relative to a mortality endpoint.

The best approach to minimize bias is a single-stage, blinded, randomized study. This type of study mitigates the potential effect of changes over time (e.g. in reporting classifications, diagnostic techniques, seasonality, staffing and educational programmes) as well as of baseline differences such as demographics and disease severity. It was not practical to conduct this type of study because the interventional products were structurally different and healthcare workers would readily have discerned the difference. To minimize the effect of confounding factors, the following controls were implemented. No new infection control interventions, training programmes, products or technologies were introduced during the study periods, and all investigators, key study personnel, classifications and diagnostic techniques remained constant throughout the entire study. The time effect was mitigated by using equal 12-month periods covering all seasons of the year. A lead-in period was used to standardize HH and CVC care compliance.

In addition, the following analyses indicate that confounding effects were minimal. CAUTI and VAP rates were analysed to determine whether any change in the ICUs might have affected other healthcare-associated infections. We found a significant reduction in the CVC-BSI rate but no reduction in the rates of CAUTI or VAP. Thus, the change to a closed infusion container was associated specifically with a reduced CVC-BSI rate rather than with a general reduction in HAIs. Although HH compliance increased between the open and closed container periods, there is no published evidence showing that HH compliance above 70% is associated with a further reduction in the CVC-BSI rate. In addition, nurse-to-patient ratios and bed occupancy rates were comparable over both study periods. The effect of baseline differences was minimal, since the two groups had similar baseline distributions. Effects of baseline covariates were also examined (data not shown); exploratory analyses showed that no baseline variable, either individually or collectively, affected the overall conclusion.

In a second-level general teaching hospital in Mexico, Munoz et al. [Reference Munoz15] cultured running i.v. infusions and found a 29·6% contamination rate during a baseline period. By contrast, a multi-centre cross-sectional study in the same country reported a 2% contamination rate, and its authors identified lapses in aseptic technique and breaks in the infusion system during injection of i.v. medications as risk factors for in-use contamination [Reference Macias6]. The CDC HICPAC guideline for prevention of CVC-BSI recommends limiting manipulation of, and entry into, running infusions, and states that anyone handling or entering an infusion should first wash their hands or wear clean gloves [Reference O'Grady5].

Our findings raise questions about the safety of all open i.v. infusion containers (rigid glass, burette or semi-rigid plastic containers). We have shown that adopting a closed i.v. infusion container can prevent cases of CVC-BSI. Many hospitals still use open rigid or semi-rigid i.v. fluid containers, which must be vented to allow ambient air entry and fluid egress. Switching to closed, non-vented, fully collapsible bags could substantially reduce rates of CVC-BSI.

ACKNOWLEDGEMENTS

Baxter S.p.A. Italy sponsored this study at Sacco Hospital, Milan, Italy, and provided Baxter Viaflo® products. We acknowledge the many healthcare professionals at Sacco Hospital who helped make this study possible.

DECLARATION OF INTEREST

Baxter Healthcare provided financial support to Dr Rosenthal to serve as the infection control coordinator for this study.