1. Introduction

Psychometric investigation of cognitive ability and speed has a long and rich history (e.g., Carroll, 1993; Gulliksen, 1950; Luce, 1986; Thorndike et al., 1926). In their 1926 monograph, Thorndike et al. stated that “level”, “extent”, and “speed” are three distinct aspects in any measure of performance: While both “level” and “extent” are manifested by the correctness of answers and thus can be collectively translated to ability in modern terminology, “the speed of producing any given product is defined, of course, by the time required” (Thorndike et al., 1926, p. 26). The prevalence of computerized test administration and data collection in recent years has facilitated the acquisition of response-time (RT) data at the level of individual test items. In parallel, the past few decades have witnessed a rapid proliferation of psychometric models for item responses and RT (see De Boeck & Jeon, 2019; Goldhammer, 2015, for reviews), which in turn gave rise to broader investigations into the relationship between response speed and accuracy in various substantive domains (see Lee & Chen, 2011; Kyllonen & Zu, 2016; von Davier et al., 2019, for reviews).
Empirical findings suggest that response speed not only constitutes part of proficiency or informs the construct to be measured but also signals secondary test-taking behaviors such as rapid guessing (Deribo et al., 2021; Wise, 2017), use of preknowledge (Qian et al., 2016; Sinharay, 2020; Sinharay & Johnson, 2020), and lack of motivation (Finn, 2015; Thurstone, 1937; Wise & Kong, 2005).

Characterizing individual differences in ability and speed with item responses and RT data is in essence a factor analysis problem (Molenaar et al., 2015a, b). The two-factor simple-structure model proposed by van der Linden (2007) is arguably the most popular modeling option to date: Item responses and log-transformed RT variables are treated as two non-overlapping clusters of observed indicators for the ability and speed/slowness factors, respectively, and the two latent factors jointly follow a bivariate normal distribution (see Fig. 2 of Molenaar et al., 2015a, for a path-diagram representation). A notable merit of the simple-structure factor model is its plug-and-play nature: Analysts can separately apply standard item response theory (IRT) models for discrete responses (e.g., the one-, two-, three-, or four-parameter logistic [1-4PL] models; Birnbaum, 1968; Barton & Lord, 1981) and standard factor analysis models for the continuous log-RT variables (e.g., the linear-normal factor model; Jöreskog, 1969), and then simply let the two latent factors covary. Despite its succinctness and popularity, the simple-structure model may fit empirical data poorly. A widely endorsed interpretation of the lack of fit is that the two interdependent latent factors cannot fully explain the dependencies among item-level responses and RT variables.
Based on this rationale, numerous diagnostics for residual dependencies and remedial modifications of the simple-structure model have been proposed in the recent literature (e.g., Bolsinova, De Boeck, & Tijmstra, 2017; Bolsinova & Maris, 2016; Bolsinova & Molenaar, 2018; Bolsinova, Tijmstra, & Molenaar, 2017; Bolsinova & Tijmstra, 2016; Glas & van der Linden, 2010; Meng et al., 2015; Ranger & Ortner, 2012; van der Linden & Glas, 2010).
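As a concrete illustration of the simple structure in van der Linden's (2007) model, the sketch below simulates data in which every response loads only on ability and every log-RT only on speed. It rests on assumptions of our own: a 2PL model for the responses (one of the 1-4PL options mentioned above), normal log-RTs with mean $\beta_j - \tau_i$ and standard deviation $1/\alpha_j$ (a common lognormal RT parameterization), unit factor variances, and made-up item parameters. Only the Python standard library is used.

```python
import math
import random


def simulate_simple_structure(n_persons, a, b, alpha, beta, rho, seed=0):
    """Simulate item responses and log-RTs from a two-factor simple-structure model.

    Ability theta and speed tau are standard bivariate normal with correlation rho.
    Responses follow a 2PL model in theta only; log-RTs are normal with mean
    beta_j - tau and standard deviation 1 / alpha_j, so they depend on tau only.
    """
    rng = random.Random(seed)
    responses, log_rts = [], []
    for _ in range(n_persons):
        theta = rng.gauss(0.0, 1.0)
        # Conditional construction gives corr(theta, tau) = rho.
        tau = rho * theta + math.sqrt(1.0 - rho ** 2) * rng.gauss(0.0, 1.0)
        responses.append([
            1 if rng.random() < 1.0 / (1.0 + math.exp(-a_j * (theta - b_j))) else 0
            for a_j, b_j in zip(a, b)
        ])
        log_rts.append([
            rng.gauss(beta_j - tau, 1.0 / alpha_j)
            for alpha_j, beta_j in zip(alpha, beta)
        ])
    return responses, log_rts


# Illustrative (made-up) parameters for two items.
resp, lrt = simulate_simple_structure(
    5000, a=[1.0, 1.5], b=[0.0, 0.5], alpha=[2.0, 2.0], beta=[4.0, 3.5], rho=-0.2
)
```

Because each response depends on $\theta$ alone and each log-RT on $\tau$ alone, all dependence between the two clusters of indicators is carried by `rho`; this is precisely the restriction that the residual-dependency diagnostics cited above put to the test.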

Augmenting standard IRT models with a measurement component for item-level RT may result in more precise ability scores, which is often highlighted as a practical benefit of RT modeling in educational assessment (Bolsinova & Tijmstra, 2018; van der Linden et al., 2010). Under a simple-structure model with bivariate normal factors, the degree to which item-level RT improves scoring precision is dictated by the strength of the inter-factor correlation (see Study 1 of van der Linden et al., 2010). However, near-zero correlation estimates between ability and speed have sometimes been encountered in real-world applications (e.g., Bolsinova, De Boeck, & Tijmstra, 2017; Bolsinova, Tijmstra, & Molenaar, 2017; Lee & Jia, 2014; van der Linden, Scrams, & Schnipke, 1999). When this happens, analysts are inclined to conclude either that item-level RT is not useful for ability estimation at all, or that a less parsimonious factor structure is needed to enhance the utility of RT for scoring purposes (e.g., allowing the log-RT variables to cross-load on the ability factor; Bolsinova & Tijmstra, 2018).
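Why the inter-factor correlation governs the potential gain can be seen from a simplified calculation (our own illustration, not the computation in van der Linden et al., 2010). Assume standardized, jointly normal ability $\theta$ and speed $\tau$ with correlation $\rho$, and a speed score $\hat\tau = \tau + e$ with independent normal error and reliability $r$. Then $\mathrm{Cov}(\theta, \hat\tau) = \rho$ and $\mathrm{Var}(\hat\tau) = 1/r$, so conditioning on the speed score reduces the variance of $\theta$ from 1 to $1 - \rho^2 r$. The function name below is hypothetical.

```python
def ability_variance_given_speed(rho, reliability=1.0):
    """Var(theta | speed score) for standardized, jointly normal variables.

    Assumes (theta, tau) is standard bivariate normal with correlation rho and
    that the speed score is tau_hat = tau + e with independent normal error e,
    where reliability = Var(tau) / Var(tau_hat). Because Cov(theta, tau_hat) = rho
    and Var(tau_hat) = 1 / reliability, the normal conditioning formula gives
    Var(theta | tau_hat) = 1 - rho**2 * reliability.
    """
    if not -1.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [-1, 1]")
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must lie in [0, 1]")
    return 1.0 - rho ** 2 * reliability


for rho in (0.0, -0.2, -0.5, -0.8):
    print(f"rho = {rho:+.1f}: Var(theta | tau) = {ability_variance_given_speed(rho):.2f}")
```

The loop prints conditional variances of 1.00, 0.96, 0.75, and 0.36: with $|\rho| = 0.2$, even a perfectly measured speed factor removes only 4% of the prior variance of ability, which is why near-zero correlation estimates make RT look uninformative for scoring.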

Indeed, van der Linden’s (2007) model could be overly restrictive for analyzing item responses and RT data. We, however, do not want to rush to the conclusion that it is the simple factor structure that should be blamed and abandoned. Other parametric assumptions, such as link functions, linear or curvilinear dependencies, and the distributions of latent traits and error terms, are also part of the model specification and may contribute to the misfit as well. A fair evaluation of the tenability and usefulness of a simple factor structure demands a version of the model with minimal parametric assumptions beyond the simple factor structure itself, which we refer to as a semiparametric simple-structure model. Should the semiparametric model still fail to fit the data adequately, we would no longer hesitate to give up on the simple factor structure.

Fortunately, the major components of a semiparametric simple-structure factor analysis have already been developed in the existing literature. They are:

1. A semiparametric (unidimensional) IRT model for dichotomous and polytomous responses (Abrahamowicz & Ramsay, 1992; Rossi et al., 2002);

2. A semiparametric (unidimensional) factor model for continuous log-RT variables (Liu & Wang, 2022); and

3. A nonparametric copula density estimator for ability and speed/slowness with fixed marginals (Kauermann et al., 2013; Dou et al., 2021).

As a side remark, we are aware of alternative semiparametric approaches that can be used for each of the above three components: for example, the monotonic polynomial logistic model for item responses (Falk & Cai, 2016a, b), the proportional hazards model (Kang, 2017; Ranger & Kuhn, 2012; Wang et al., 2013b) and the linear transformation model (Wang et al., 2013a) for item-level RT, and the finite normal mixture model (Bauer, 2005; Pek et al., 2009) and the Davidian curve model (Woods & Lin, 2009; Zhang & Davidian, 2001; Zhang et al., 2021) for the joint distribution of latent traits. However, we focus on methods based on smoothing splines in the current analysis. Moreover, the simultaneous incorporation of flexible models for all three components of a simple-structure model appears to be novel in the RT modeling literature. Compared to, e.g., Wang et al. (2013a) and Wang et al. (2013b), in which semiparametric models were applied only to the RT data, our model is more flexible and thus more likely to reveal sophisticated dependency patterns in a joint analysis of item responses and RT data.

By retrospectively analyzing a set of mathematics testing data from the 2015 Programme for International Student Assessment (PISA; OECD, 2016), we revisit the following research questions that have only been partially answered previously through parametric simple-structure models:

(1) Is a simple factor structure sufficient for a joint analysis of item responses and RT?

(2) How strongly are math ability and general processing speed associated in the population of respondents?

(3) To what extent can processing speed improve the precision of ability estimates under a simple-structure model?

It is worth mentioning that the data set was previously analyzed by Zhan et al. (2018) using a variant of van der Linden’s (2007) simple-structure model with testlet effects: A higher-order cognitive diagnosis model with testlet effects was used for item responses, a linear-normal factor model was used for log-transformed RT, and the (higher-order) ability and speed factors were assumed to be bivariate normal. Zhan et al. (2018) reported an estimated inter-factor correlation of $-0.2$ and hence concluded that the association between speed and ability is weak. We are particularly interested in whether their conclusion still holds after abandoning inessential parametric assumptions other than the simple factor structure.

The rest of the paper is organized as follows. We first provide a technical introduction to the proposed semiparametric procedure in Sect. 2: The three components of the semiparametric simple-structure model are formulated in Sects. 2.1 and 2.2, penalized maximum likelihood (PML) estimation and empirical selection of penalty weights are outlined in Sect. 2.3, and bootstrap-based goodness-of-fit assessment and inference are described in Sects. 2.4 and 2.5. Descriptive statistics for the 2015 PISA mathematics data and our analysis plan are summarized in Sect. 3, followed by a detailed report of results in Sect. 4. The paper concludes with a discussion of the broader implications of our findings and the limitations of our method.

2. Methods

2.1. Unidimensional Semiparametric Factor Models

Let $Y_{ij}\in\mathcal{Y}_j\subset\mathbb{R}$ be the $j$th manifest variable (MV) observed for respondent $i$: $Y_{ij}$ represents either a discrete response to a test item or a continuous item-level RT. In our semiparametric factor model, the distribution of $Y_{ij}$ is characterized by the following logistic conditional density (Footnote 1) of $Y_{ij} = y\in\mathcal{Y}_j$ given a unidimensional latent variable (LV; also known as latent factor, latent trait, etc.) $X_i = x\in\mathcal{X}\subset\mathbb{R}$:

$$f_j(y\,|\,x) = \frac{\exp g_j(x, y)}{\int_{\mathcal{Y}_j}\exp g_j(x, y')\,\mu_j(\mathrm{d}y')}, \tag{1}$$

in which the normalizing integral with respect to the dominating measure $\mu_j$ on $\mathcal{Y}_j$ is assumed to be finite. Equation 1 defines a valid conditional density as it is non-negative and integrates to unity with respect to $y$ for a given $x$. However, the bivariate function $g_j:\mathcal{X}\times\mathcal{Y}_j\rightarrow\mathbb{R}$ is not identifiable: Adding any univariate function $h(x)$ of $x$ to $g_j(x, y)$ does not change the value of Eq. 1, because the factor $\exp h(x)$ cancels between the numerator and the normalizing integral (Gu, 1995, 2013). To impose the necessary identification constraints, we rewrite $g_j$

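by the functional analysis of variance (fANOVA) decomposition

$$g_j(x, y) = g_j^y(y) + g_j^{xy}(x, y) \tag{2}$$

and require that

$$g_j^y(y_0) = 0, \quad g_j^{xy}(x_0, y) = g_j^{xy}(x, y_0) = 0 \quad \text{for all } x\in\mathcal{X},\ y\in\mathcal{Y}_j, \tag{3}$$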

for some reference levels $x_0\in\mathcal{X}$ and $y_0\in\mathcal{Y}_j$. Equation 3 is referred to as the side conditions; $x_0$ and $y_0$ can be set arbitrarily within their respective domains (see Liu & Wang, 2022, for more detailed comments). The univariate component $g_j^y$ and the bivariate component $g_j^{xy}$ are functional parameters to be estimated from observed data.
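The normalization in Eq. 1 and the identification issue that motivates the side conditions can be checked numerically. The sketch below is an illustration under our own assumptions: it discretizes the dominating measure $\mu_j$ with quadrature nodes and weights, takes $\mathcal{Y}_j = [0, 1]$ for the continuous case, and uses hypothetical choices of $g_j$ (`g_cont`, `g_shifted`, `g_binary`). It confirms that the density integrates to one, that adding a function of $x$ alone leaves it unchanged, and that for a dichotomous item under the counting measure a $g_j$ linear in $x$ reproduces a 2PL-type item response function.

```python
import math


def conditional_density(g, x, nodes, weights):
    """Return y -> exp{g(x, y)} / sum_k w_k exp{g(x, y_k)}, a discretized Eq. 1.

    The dominating measure mu_j is represented by quadrature `nodes` and
    `weights`: midpoint weights approximate Lebesgue measure on [0, 1], while
    unit weights on a finite set give the counting measure.
    """
    shift = max(g(x, y) for y in nodes)  # guards exp() against overflow
    norm = sum(w * math.exp(g(x, y) - shift) for y, w in zip(nodes, weights))
    return lambda y: math.exp(g(x, y) - shift) / norm


# Hypothetical g for a continuous MV on [0, 1]: the mode of Y drifts upward with x.
def g_cont(x, y):
    return -8.0 * (y - 0.3 - 0.4 * x) ** 2


# Adding any function of x alone leaves Eq. 1 unchanged (non-identifiability).
def g_shifted(x, y):
    return g_cont(x, y) + math.sin(5.0 * x) + x ** 3


n = 200
mid_nodes = [(k + 0.5) / n for k in range(n)]
mid_weights = [1.0 / n] * n
f1 = conditional_density(g_cont, 0.7, mid_nodes, mid_weights)
f2 = conditional_density(g_shifted, 0.7, mid_nodes, mid_weights)
print(sum(w * f1(y) for y, w in zip(mid_nodes, mid_weights)))  # 1 up to rounding
print(f1(0.4), f2(0.4))  # equal up to rounding


# Dichotomous item under the counting measure on {0, 1}: linear g gives a 2PL-type IRF.
def g_binary(x, y):
    return y * 2.0 * (x - 0.5)


f_bin = conditional_density(g_binary, 0.8, [0, 1], [1.0, 1.0])
print(f_bin(1), 1.0 / (1.0 + math.exp(-2.0 * (0.8 - 0.5))))
```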

Let $\boldsymbol{\psi}_j:\mathcal{Y}_j\rightarrow\mathbb{R}^{L_j}$ be a collection of $L_j$ basis functions defined on the support of $Y_{ij}$, and let $\boldsymbol{\varphi}:\mathcal{X}\rightarrow\mathbb{R}^K$ be a collection of $K$ basis functions defined on the support of $X_i$. We then approximate the functional parameters by basis expansion. In particular, we set the univariate component

$$g_j^y(y) = \boldsymbol{\alpha}_j^\top\boldsymbol{\psi}_j(y), \tag{4}$$

in which the coefficient vector $\boldsymbol{\alpha}_j\in\mathbb{R}^{L_j}$ satisfies

$$\boldsymbol{\alpha}_j^\top\boldsymbol{\psi}_j(y_0) = 0. \tag{5}$$

Similarly, the bivariate component is expressed as

$$g_j^{xy}(x, y) = \boldsymbol{\psi}_j(y)^\top\mathbf{B}_j\boldsymbol{\varphi}(x), \tag{6}$$

in which the coefficient matrix $\mathbf{B}_j\in\mathbb{R}^{L_j\times K}$ satisfies

$$\boldsymbol{\psi}_j(y_0)^\top\mathbf{B}_j = \mathbf{0}^\top, \quad \mathbf{B}_j\boldsymbol{\varphi}(x_0) = \mathbf{0}. \tag{7}$$

The linear constraints imposed on the coefficients $\boldsymbol{\alpha}_j$ and $\mathbf{B}_j$ (Eqs. 5 and 7) guarantee that the side conditions (Eq. 3) are satisfied.
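One way to satisfy Eqs. 5 and 7 in practice is sketched below. It assumes, as the continuous-data case below does, a cubic B-spline basis with equally spaced knots on $[0, 1]$ for both $\boldsymbol{\psi}_j$ and $\boldsymbol{\varphi}$. The number of intervals, the reference levels $x_0 = y_0 = 0.5$, the random coefficients, and the use of orthogonal projections to enforce the linear constraints are our illustrative choices, not necessarily how the authors parameterize the constrained coefficients (a null-space reparameterization would be another option). The projection $P_{\mathbf u}\mathbf{B}P_{\mathbf w}$ satisfies both parts of Eq. 7 because $\mathbf{u}^\top P_{\mathbf u} = \mathbf{0}^\top$ and $P_{\mathbf w}\mathbf{w} = \mathbf{0}$.

```python
import random


def bspline_basis(x, n_intervals=4, degree=3):
    """Cox-de Boor evaluation of all B-spline basis functions at x in [0, 1].

    Knots are equally spaced with (degree + 1)-fold boundary knots, giving
    n_intervals + degree basis functions.
    """
    inner = [i / n_intervals for i in range(n_intervals + 1)]
    knots = [0.0] * degree + inner + [1.0] * degree
    # Degree-0 indicators; the right endpoint x = 1 joins the last interval.
    b = [1.0 if knots[i] < knots[i + 1]
         and (knots[i] <= x < knots[i + 1] or (x == 1.0 and knots[i + 1] == 1.0))
         else 0.0
         for i in range(len(knots) - 1)]
    for k in range(1, degree + 1):
        nxt = []
        for i in range(len(knots) - k - 1):
            val = 0.0
            if knots[i + k] > knots[i]:
                val += (x - knots[i]) / (knots[i + k] - knots[i]) * b[i]
            if knots[i + k + 1] > knots[i + 1]:
                val += (knots[i + k + 1] - x) / (knots[i + k + 1] - knots[i + 1]) * b[i + 1]
            nxt.append(val)
        b = nxt
    return b


def project_out(vec, direction):
    """Remove the component of vec along direction, so the result is orthogonal to it."""
    scale = sum(v * d for v, d in zip(vec, direction)) / sum(d * d for d in direction)
    return [v - scale * d for v, d in zip(vec, direction)]


def constrain_matrix(B, u, w):
    """Return P_u B P_w, which satisfies u^T (P_u B P_w) = 0 and (P_u B P_w) w = 0."""
    rows = [project_out(row, w) for row in B]                 # B P_w
    cols = [project_out(list(col), u) for col in zip(*rows)]  # P_u (B P_w), by column
    return [list(row) for row in zip(*cols)]


def dot(p, q):
    return sum(a * b for a, b in zip(p, q))


x0, y0 = 0.5, 0.5                        # hypothetical reference levels
psi, phi = bspline_basis, bspline_basis  # same basis for Y and X, so L_j = K
u, w = psi(y0), phi(x0)
K = len(u)

rng = random.Random(1)
alpha = project_out([rng.uniform(-1, 1) for _ in range(K)], u)  # Eq. 5
B = constrain_matrix(
    [[rng.uniform(-1, 1) for _ in range(K)] for _ in range(K)], u, w
)  # Eq. 7


def g_y(y):
    return dot(alpha, psi(y))  # Eq. 4


def g_xy(x, y):
    return dot(psi(y), [dot(row, phi(x)) for row in B])  # Eq. 6
```

With these constraints in place, `g_y(y0)`, `g_xy(x0, y)`, and `g_xy(x, y0)` all vanish up to rounding, which is exactly the content of the side conditions in Eq. 3.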

Continuous Data When both

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$X_i\in {\mathcal {X}}$$\end{document}

![]() and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$Y_{ij}\in {\mathcal {Y}}_j$$\end{document}

and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$Y_{ij}\in {\mathcal {Y}}_j$$\end{document}

![]() (equipped with the Lebesgue measure

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\mu _j$$\end{document}

(equipped with the Lebesgue measure

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\mu _j$$\end{document}

![]() ) are continuous random variables defined on closed intervals, Eq. 1 corresponds to the semiparametric factor model considered by Liu and Wang (Reference Liu and Wang2022). Without loss of generality, let

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$${\mathcal {X}}= {\mathcal {Y}}_j = [0, 1]$$\end{document}

) are continuous random variables defined on closed intervals, Eq. 1 corresponds to the semiparametric factor model considered by Liu and Wang (Reference Liu and Wang2022). Without loss of generality, let

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$${\mathcal {X}}= {\mathcal {Y}}_j = [0, 1]$$\end{document}

![]() . In fact, any closed interval can be rescaled to the unit interval via a linear transform: If

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$z\in [a, b]$$\end{document}

. In fact, any closed interval can be rescaled to the unit interval via a linear transform: If

\documentclass[12pt]{minimal}

\usepackage{amsmath}

$z\in [a, b]$, $a < b$, then $(z - a)/(b - a)\in [0, 1]$. To approximate smooth functional parameters supported on unit intervals or squares, we use the same cubic B-spline basis with equally spaced knots (De Boor, 1978) for both $\boldsymbol{\psi}_j$ and $\boldsymbol{\varphi}$ (and thus $L_j = K$). It is sometimes desirable to force the MV to be stochastically increasing as the LV increases. Liu and Wang (2022) considered a simple approach to impose likelihood-ratio monotonicity, which reduces to the following linear inequality constraints on the coefficient matrix $\mathbf{B}_j$:

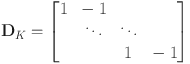

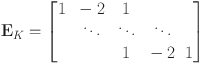

In Eq. 8, $\mathrm{vec}(\cdot)$ denotes the vectorization operator, and $\mathbf{D}_K$ is a $(K - 1)\times K$ first-order difference matrix. We also set $\mathbf{D}_1 = 1$ by convention.
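The Python sketch below checks likelihood-ratio monotonicity for a given coefficient matrix. It is not the authors' code.

The sketch assumes that Eq. 8 has the Kronecker form $(\mathbf{D}_K\otimes\mathbf{D}_{L_j})\,\mathrm{vec}(\mathbf{B}_j)\ge\mathbf{0}$. It also assumes that the rows of $\mathbf{B}_j$ index $\boldsymbol{\psi}_j$ and the columns index $\boldsymbol{\varphi}$. By the identity $\mathrm{vec}(\mathbf{A}\mathbf{X}\mathbf{C}) = (\mathbf{C}^\top\otimes\mathbf{A})\,\mathrm{vec}(\mathbf{X})$, this is equivalent to requiring every entry of $\mathbf{D}_{L_j}\mathbf{B}_j\mathbf{D}_K^\top$ to be non-negative. In other words, every second-order mixed difference of the spline coefficients must be non-negative. The assumed form agrees with the convention $\mathbf{D}_1 = 1$, under which it collapses to $\mathbf{D}_K\boldsymbol{\beta}_{j1}\ge\mathbf{0}$ when $L_j = 1$.

The function names and the sign convention of the difference matrix are illustrative. A helper for the rescaling $(z - a)/(b - a)$ is included as well.

```python
def rescale(z, a, b):
    """Map z in [a, b], a < b, to (z - a) / (b - a) in [0, 1]."""
    if not a < b:
        raise ValueError("need a < b")
    if not a <= z <= b:
        raise ValueError("z must lie in [a, b]")
    return (z - a) / (b - a)


def diff_matrix(k):
    """(k - 1) x k first-order difference matrix; [[1.0]] when k == 1 (convention D_1 = 1).

    Assumed sign convention: row r has -1 in column r and +1 in column r + 1,
    so D c >= 0 means the entries of c are non-decreasing.
    """
    if k == 1:
        return [[1.0]]
    return [[(-1.0 if c == r else (1.0 if c == r + 1 else 0.0)) for c in range(k)]
            for r in range(k - 1)]


def matmul(A, B):
    """Plain-Python matrix product of nested lists."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def satisfies_lr_monotonicity(B, tol=0.0):
    """Check D_L B D_K^T >= 0 entrywise, equivalent to (D_K kron D_L) vec(B) >= 0.

    B is an L x K coefficient matrix (rows: psi basis, columns: phi basis).
    """
    L, K = len(B), len(B[0])
    M = matmul(matmul(diff_matrix(L), B), transpose(diff_matrix(K)))
    return all(v >= -tol for row in M for v in row)


if __name__ == "__main__":
    print(rescale(2.5, 1.0, 4.0))  # 0.5
    # B[l][k] = l * k has all mixed differences equal to 1.
    print(satisfies_lr_monotonicity([[0.0, 0.0, 0.0], [0.0, 1.0, 2.0], [0.0, 2.0, 4.0]]))  # True
    print(satisfies_lr_monotonicity([[0.0, 1.0], [1.0, 0.0]]))  # False
    # L = 1 (dichotomous case): reduces to D_K beta >= 0, i.e., non-decreasing beta.
    print(satisfies_lr_monotonicity([[0.0, 1.0, 3.0]]))  # True
```

The product $\mathbf{D}_{L_j}\mathbf{B}_j\mathbf{D}_K^\top$ is used instead of an explicit Kronecker product. It avoids forming an $(L_j - 1)(K - 1)\times L_jK$ matrix and gives the same set of inequalities.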

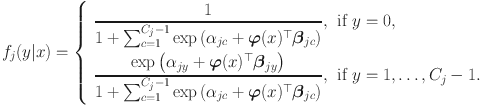

Discrete Data. When $\mathcal{Y}_j = \{0, \dots, C_j - 1\}$ and $\mu_j$ is the associated counting measure, let $y_0 = 0$, $L_j = C_j - 1$, and $\boldsymbol{\psi}_j(y) = (\psi_{j1}(y), \dots, \psi_{j,C_j-1}(y))^\top$ such that $\psi_{jk}(y) = 1$ if $y = k$ and 0 if $y\ne k$. Then our generic model (Eqs. 1 and 2) reduces to Abrahamowicz and Ramsay’s (1992) multi-categorical semiparametric IRT model for unordered polytomous responses. When $C_j = 2$ (i.e., dichotomous data), it is further equivalent to the semiparametric logistic IRT model proposed by Ramsay and Winsberg (1991) and Rossi et al. (2002). This is because the basis expansions (i.e., Eqs. 4 and 6) simplify to $g_j^y(y) = \alpha_{jy}$ and $g_j^{xy}(x, y) = \boldsymbol{\varphi}(x)^\top\boldsymbol{\beta}_{jy}$ for $y = 1, \dots, C_j - 1$, in which $\boldsymbol{\beta}_{jy}^\top$ denotes the $y$th row of $\mathbf{B}_j$. Meanwhile, $g_j^y(0) = 0$ and $g_j^{xy}(x, 0)\equiv 0$ hold as part of the side conditions. The conditional density (e.g., Eq. 1) then becomes the item response function (IRF).

As in the continuous case, we only consider $\mathcal{X} = [0, 1]$ and take $\boldsymbol{\varphi}$ to be a cubic B-spline basis defined by a sequence of equally spaced knots. Similar to Eq. 8 in the continuous case, we may impose likelihood-ratio monotonicity on the conditional density through linear inequality constraints on $\mathbf{B}_j$. These reduce to $\mathbf{D}_K\boldsymbol{\beta}_{j1}\ge \mathbf{0}$ when $C_j = 2$ (i.e., dichotomous items).
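The discrete-case IRF can be sketched in Python as follows. The sketch assumes that the generic conditional density in Eq. 1 is proportional to $\exp\{g_j^y(y) + g_j^{xy}(x, y)\}$ with respect to $\mu_j$. Under the expansions and side conditions above, this yields the baseline-category logit

$$P(Y_{ij} = y \mid X = x) = \frac{\exp(\alpha_{jy} + \boldsymbol{\varphi}(x)^\top\boldsymbol{\beta}_{jy})}{\sum_{k=0}^{C_j-1}\exp(\alpha_{jk} + \boldsymbol{\varphi}(x)^\top\boldsymbol{\beta}_{jk})},$$

with $\alpha_{j0} = 0$ and $\boldsymbol{\beta}_{j0} = \mathbf{0}$. Because Eq. 1 is not restated here, treat this form as an assumption.

The basis $\boldsymbol{\varphi}$ is evaluated by the Cox-de Boor recursion on a clamped knot vector. The boundary knots are repeated four times and the interior knots are equally spaced on [0, 1]. The clamping and the names `bspline_basis` and `irf` are illustrative choices.

```python
import math


def bspline_basis(x, n_basis, degree=3):
    """Values of n_basis clamped B-splines of the given degree at x in [0, 1].

    Interior knots are equally spaced and the boundary knots 0 and 1 are repeated
    degree + 1 times (an assumed knot layout). Uses the Cox-de Boor recursion.
    """
    n_interior = n_basis - degree - 1
    if n_interior < 0:
        raise ValueError("n_basis must be at least degree + 1")
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    inner = [i / (n_interior + 1) for i in range(1, n_interior + 1)]
    t = [0.0] * (degree + 1) + inner + [1.0] * (degree + 1)
    # Degree 0: indicators of the half-open spans [t_i, t_{i+1});
    # x = 1 is assigned to the last non-empty span.
    vals = [1.0 if t[i] < t[i + 1] and (t[i] <= x < t[i + 1] or (x == 1.0 and t[i + 1] == 1.0))
            else 0.0 for i in range(len(t) - 1)]
    for d in range(1, degree + 1):
        nxt = []
        for i in range(len(vals) - 1):
            left = right = 0.0
            if t[i + d] > t[i]:
                left = (x - t[i]) / (t[i + d] - t[i]) * vals[i]
            if t[i + d + 1] > t[i + 1]:
                right = (t[i + d + 1] - x) / (t[i + d + 1] - t[i + 1]) * vals[i + 1]
            nxt.append(left + right)
        vals = nxt
    return vals


def irf(x, alpha, B, n_basis):
    """P(Y = y | x) for y = 0, ..., C - 1 under a baseline-category logit (y = 0 is the reference).

    alpha: intercepts alpha_{j1}, ..., alpha_{j,C-1}; B: rows beta_{j1}, ..., beta_{j,C-1}.
    """
    phi = bspline_basis(x, n_basis)
    eta = [0.0] + [a + sum(p * b for p, b in zip(phi, row)) for a, row in zip(alpha, B)]
    top = max(eta)  # subtract the maximum before exponentiating, for numerical stability
    w = [math.exp(e - top) for e in eta]
    total = sum(w)
    return [v / total for v in w]


if __name__ == "__main__":
    print(round(sum(bspline_basis(0.37, 8)), 12))  # 1.0 (partition of unity)
    # Dichotomous item with non-decreasing beta_{j1}, so D_K beta_{j1} >= 0.
    beta1 = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0]
    probs = [irf(g / 10, [0.0], [beta1], 6)[1] for g in range(11)]
    print(all(p < q for p, q in zip(probs, probs[1:])))  # True
```

With a non-decreasing coefficient vector (so that $\mathbf{D}_K\boldsymbol{\beta}_{j1}\ge\mathbf{0}$), the spline $\boldsymbol{\varphi}(x)^\top\boldsymbol{\beta}_{j1}$ is non-decreasing by a standard property of B-splines. The dichotomous IRF is therefore non-decreasing as well, which the last lines check on a grid.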

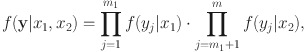

2.2. Simple Factor Structure and Latent Variable Density

Consider a battery of $m_1$ continuous MVs and $m_2$ discrete MVs, and write $m = m_1 + m_2$. We typically have $m_1 = m_2 = m/2$ when the discrete responses and continuous RT variables are observed for the same set of items.

From now on, denote by $Y_{i1}, \dots, Y_{i,m_1}$ the base-10 log-transformed RTs, each rescaled to [0, 1], and by $Y_{i,m_1+1}, \dots, Y_{im}$ the corresponding responses. Let $X_{i1}, X_{i2}\in [0, 1]$ be the slowness (see Footnote 2) and ability factors for respondent $i$, respectively.

A simple factor structure requires that the item responses $Y_{i,m_1+1}, \dots, Y_{im}$ are conditionally independent of the slowness factor $X_{i1}$ given the ability factor $X_{i2}$. Symmetrically, the log-RT variables $Y_{i1}, \dots, Y_{i,m_1}$ are required to be independent of $X_{i2}$ given $X_{i1}$. We also make the local independence assumption that is standard in factor analysis (McDonald, 1982): $Y_{i1}, \dots, Y_{im}$ are mutually independent conditional on $X_{i1}$ and $X_{i2}$.

Further, let $\mathbf{Y}_i = (Y_{i1}, \dots, Y_{im})^\top$ collect all the MVs produced by respondent $i$. The simple structure and local independence assumptions imply that the conditional density of $\mathbf{Y}_i$ given $X_{i1} = x_1$ and $X_{i2} = x_2$, evaluated at $\mathbf{y}$, factors into two products. The first is the product of the $m_1$ item-level log-RT densities conditional on $x_1$; the second is the product of the $m_2$ item response probabilities conditional on $x_2$. Here $\mathbf{y} = (y_1, \dots, y_m)^\top \in [0, 1]^{m_1}\times {\mathcal{Y}}_{m_1 + 1}\times \cdots \times {\mathcal{Y}}_{m}$ and $x_1, x_2\in [0, 1]$.
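On the log scale, the factorization becomes simple bookkeeping. In the Python sketch below, each item is represented by a hypothetical callable. The callable returns the item's conditional density (log-RT items) or probability (responses) at a given latent value. The toy densities are placeholders chosen only so that each integrates or sums to one; they are not estimates from any model.

```python
import math
from typing import Callable, Sequence

# Hypothetical interface: f(y, x) returns the conditional density (log-RT item)
# or probability (response item) of value y given latent value x.
ItemDensity = Callable[[float, float], float]


def respondent_loglik(y: Sequence[float], x1: float, x2: float,
                      densities: Sequence[ItemDensity], m1: int) -> float:
    """Log of the joint conditional density of y given (x1, x2).

    Items 0, ..., m1 - 1 are log-RT variables and depend on the slowness factor x1 only;
    items m1, ..., m - 1 are responses and depend on the ability factor x2 only.
    """
    if len(y) != len(densities):
        raise ValueError("need one density per manifest variable")
    total = 0.0
    for j, (yj, f) in enumerate(zip(y, densities)):
        x = x1 if j < m1 else x2  # simple factor structure
        total += math.log(f(yj, x))  # local independence: product of densities -> sum of logs
    return total


def toy_rt_density(y: float, x: float) -> float:
    """Placeholder density on y in [0, 1]; integrates to 1 for every x in [0, 1]."""
    return 1.0 + 0.5 * (2.0 * y - 1.0) * (2.0 * x - 1.0)


def toy_response_prob(y: float, x: float) -> float:
    """Placeholder Bernoulli probability with P(Y = 1 | x) = x."""
    return x if y == 1 else 1.0 - x


if __name__ == "__main__":
    ll = respondent_loglik([0.9, 0], x1=0.2, x2=0.7,
                           densities=[toy_rt_density, toy_response_prob], m1=1)
    print(math.isclose(ll, math.log(0.76) + math.log(0.3)))  # True
```

Because each item reads only its own factor, the response items contribute nothing that depends on $x_1$, and the log-RT items contribute nothing that depends on $x_2$. This is the structure the simple factor assumption encodes.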

For convenience in approximating functional parameters, both $X_{i1}$ and $X_{i2}$ are assumed to follow a Uniform[0, 1] distribution marginally. However, uniformly distributed LVs are less attractive for substantive interpretation. Adopting the strategy of Liu and Wang (2022), we define $X_{id}^* = \Phi^{-1}(X_{id})$, $d = 1, 2$, where $\Phi^{-1}$ is the standard normal quantile function. The transformed LVs are marginally $\mathcal{N}(0, 1)$ variates, in agreement with the standard formulation in parametric factor analysis.

To capture the potentially complex association between latent slowness and ability, we employ a nonparametric estimator for the copula density (Sklar, 1959; Nelsen, 2006) of $(X_{i1}, X_{i2})^\top$, denoted $c(x_1, x_2)$. A copula density is non-negative and has uniform marginals: That is, $\int_0^1 c(x_1, x_2)\,dx_1 = 1$ for every $x_2\in[0, 1]$ and $\int_0^1 c(x_1, x_2)\,dx_2 = 1$ for every $x_1\in[0, 1]$. $c$ is in fact the joint density of

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$(X_{i1}, X_{i2})^\top $$\end{document}

![]() since both

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$X_{i1}$$\end{document}

since both

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$X_{i1}$$\end{document}

![]() and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$X_{i2}$$\end{document}

and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$X_{i2}$$\end{document}

![]() are marginally uniform. In the light of Sklar’s theorem, the joint density of the transformed

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$(X_{i1}^*, X_{i2}^*)^\top $$\end{document}
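As a minimal illustration of the normal-score transformation, the Python sketch below draws hypothetical LVs on the unit square with uniform marginals, applies $\Phi^{-1}$ coordinatewise, and prints the marginal mean and variance of the transformed scores, which should be close to 0 and 1 regardless of the dependence between the two coordinates. The Clayton copula used to generate the dependence, the value `theta=2.0`, the seed, and the helper names (`sample_clayton_pair`, `normal_scores`) are assumptions made for illustration only; in the text the copula density is estimated nonparametrically rather than assumed to be Clayton.

```python
import random
from statistics import NormalDist, fmean, pvariance

STD_NORMAL = NormalDist(0.0, 1.0)
EPS = 1e-12  # keeps draws strictly inside (0, 1) so that inv_cdf stays finite


def open_unit(rng: random.Random) -> float:
    """Draw from Uniform(0, 1), excluding 0.0 (random() may return 0.0)."""
    x = rng.random()
    while x == 0.0:
        x = rng.random()
    return x


def sample_clayton_pair(theta: float, rng: random.Random) -> tuple[float, float]:
    """Hypothetical uniform LVs (X1, X2) with Clayton(theta) dependence,
    drawn by inverting the conditional distribution of X2 given X1."""
    u = open_unit(rng)
    w = open_unit(rng)
    v = (u ** -theta * (w ** (-theta / (1.0 + theta)) - 1.0) + 1.0) ** (-1.0 / theta)
    return u, min(max(v, EPS), 1.0 - EPS)  # clamp floating-point rounding at the boundary


def normal_scores(pair: tuple[float, float]) -> tuple[float, float]:
    """X*_d = Phi^{-1}(X_d), d = 1, 2."""
    return STD_NORMAL.inv_cdf(pair[0]), STD_NORMAL.inv_cdf(pair[1])


rng = random.Random(2024)
uniform_lvs = [sample_clayton_pair(theta=2.0, rng=rng) for _ in range(20000)]
scores = [normal_scores(p) for p in uniform_lvs]

for d in (0, 1):
    col = [s[d] for s in scores]
    print(f"X*_{d + 1}: mean = {fmean(col):+.3f}, variance = {pvariance(col):.3f}")
```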

are marginally uniform. In the light of Sklar’s theorem, the joint density of the transformed

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$(X_{i1}^*, X_{i2}^*)^\top $$\end{document}

![]() can be calculated by

can be calculated by

in which

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\phi $$\end{document}

![]() and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\Phi $$\end{document}

and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\Phi $$\end{document}

![]() are the density and distribution functions of

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$${{{\mathcal {N}}}}(0, 1)$$\end{document}

are the density and distribution functions of

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

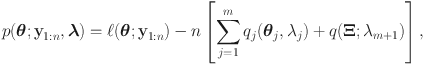

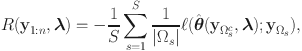

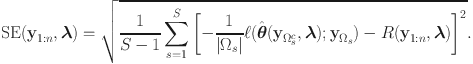

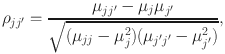

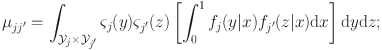

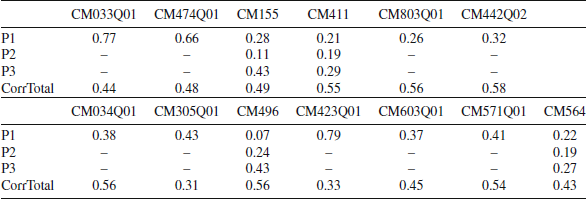

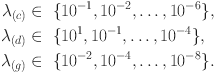

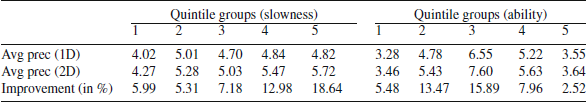

\begin{document}$${{{\mathcal {N}}}}(0, 1)$$\end{document}
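The change-of-variables formula above can be checked numerically. The following sketch, an illustration under stated assumptions rather than the estimator used here, evaluates $c\bigl(\Phi(x_1^*), \Phi(x_2^*)\bigr)\,\phi(x_1^*)\,\phi(x_2^*)$ for any supplied copula density. Two parametric copulas act as hypothetical stand-ins for the nonparametric estimate of $c$: with a Gaussian copula the result should coincide with the bivariate normal density, and with a Clayton copula it should still integrate to approximately 1 over a wide grid. All function names, the correlation `rho = 0.6`, `theta = 2.0`, and the grid settings are illustrative choices.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist(0.0, 1.0)


def transformed_density(copula_density, x1: float, x2: float) -> float:
    """Joint density of (X1*, X2*) at (x1, x2): c(Phi(x1), Phi(x2)) * phi(x1) * phi(x2)."""
    u1, u2 = STD_NORMAL.cdf(x1), STD_NORMAL.cdf(x2)
    return copula_density(u1, u2) * STD_NORMAL.pdf(x1) * STD_NORMAL.pdf(x2)


def gaussian_copula_density(rho: float):
    """Gaussian copula density with correlation rho (used only as a check)."""
    def c(u1: float, u2: float) -> float:
        a, b = STD_NORMAL.inv_cdf(u1), STD_NORMAL.inv_cdf(u2)
        q = (2 * rho * a * b - rho ** 2 * (a ** 2 + b ** 2)) / (2 * (1 - rho ** 2))
        return math.exp(q) / math.sqrt(1 - rho ** 2)
    return c


def clayton_copula_density(theta: float):
    """Clayton copula density for theta > 0 (a hypothetical non-Gaussian example)."""
    def c(u1: float, u2: float) -> float:
        s = u1 ** -theta + u2 ** -theta - 1.0
        return (1 + theta) * (u1 * u2) ** (-theta - 1) * s ** (-2 - 1 / theta)
    return c


def bivariate_normal_pdf(x1: float, x2: float, rho: float) -> float:
    """Standard bivariate normal density with correlation rho."""
    q = (x1 ** 2 - 2 * rho * x1 * x2 + x2 ** 2) / (1 - rho ** 2)
    return math.exp(-q / 2) / (2 * math.pi * math.sqrt(1 - rho ** 2))


# Check 1: a Gaussian copula must reproduce the bivariate normal density.
rho = 0.6
c_gauss = gaussian_copula_density(rho)
for x1, x2 in [(0.0, 0.0), (1.2, -0.4), (-1.5, -1.0)]:
    print(transformed_density(c_gauss, x1, x2), bivariate_normal_pdf(x1, x2, rho))

# Check 2: with a non-Gaussian copula the result is still a density.
# Midpoint rule on [-6, 6]^2; the mass outside this square is negligible.
c_clay = clayton_copula_density(2.0)
n = 240
h = 12.0 / n
grid = [-6.0 + h * (k + 0.5) for k in range(n)]
total = sum(transformed_density(c_clay, a, b) for a in grid for b in grid) * h * h
print(f"integral over [-6, 6]^2 = {total:.4f}")
```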