1 Introduction

Primitive recursion has a solid foundation in a variety of different fields. In the categorical setting, it can be seen in the structures of algebras. In the logical setting, it corresponds to proofs by induction. And in the computational setting, it can be phrased in terms of languages and type theories with terminating loops, like Gödel’s System (Gödel, Reference Gödel1980). The latter viewpoint of computation reveals a fine-grained lens with which we can study the subtle impact of the primitive combinators that capture different forms of recursion. For example, the recursive combinators given by Mendler (Reference Mendler1987, Reference Mendler1988) yield a computational complexity for certain programs when compared to encodings in System (Böhm & Berarducci, Reference Böhm and Berarducci1985; Girard et al., Reference Girard, Lafont and Taylor1989). Recursive combinators have desirable properties—like the fact that they always terminate—which make them useful for the design of well-behaved programs (Meijer et al., Reference Meijer, Fokkinga and Paterson1991; Gibbons, Reference Gibbons2003), also for optimizations made possible by applying those properties and theorems (Malcolm, Reference Malcolm1990).

The current treatment of the dual of primitive recursion—primitive corecursion—is not so fortunate. Being the much less understood of the two, corecursion is usually only viewed in light of this duality. Consequently, corecursion tends to be relegated to a notion of coalgebras (Rutten, Reference Rutten2019), because only the language of category theory speaks clearly enough about their duality. This can be seen in the study of corecursion schemes, where coalgebraic “anamorphisms” (Meijer et al., Reference Meijer, Fokkinga and Paterson1991) and “apomorphisms” (Vos, Reference Vos1995; Vene & Uustalu, 1998) are the dual counterparts to algebraic “catamorphisms” (Meertens, Reference Meertens1987; Meijer et al., Reference Meijer, Fokkinga and Paterson1991; Hinze et al., 2013) and “paramorphisms” (Meertens, Reference Meertens1992). Yet the logical and computational status of corecursion is not so clear. For example, the introduction of stream objects is sometimes described as the “dual” to the elimination of natural numbers (Crole, Reference Crole2003; Sangiorgi, Reference Sangiorgi2011), but how is this so?

The goal of this paper is to provide a purely computational and logical foundation for primitive corecursion based on classical logic. Specifically, we will express different principles of corecursion in a small core calculus, analogous to the canonical computational presentation of recursion (Gödel, Reference Gödel1980). Much of the early pioneering work in this area was inspired directly by the duality inherent in (co)algebras and category theory (Hagino, 1987; Cockett & Spencer, Reference Cockett and Spencer1995). In contrast, we derive the symmetry between recursion and corecursion through the mechanics of programming language implementations, formalized in terms of an abstract machine. This symmetry is encapsulated by the syntactic and semantic duality (Downen et al., Reference Downen, Johnson-Freyd and Ariola2015) between data types—defined by the structure of objects—and codata types—defined by the behavior of objects.

We begin in Section 2 with a review of the formalization of primitive recursion in terms of a foundational calculus: System T (Gödel, Reference Gödel1980). We point out the impact of evaluation strategy on different primitive recursion combinators, namely the recursor and the iterator:

In call-by-value, the recursor is just as (in)efficient as the iterator.

In call-by-name, the recursor may end early; an asymptotic complexity improvement.

Section 3 presents an abstract machine for both call-by-value and call-by-name evaluation and unifies both into a single presentation (Ariola et al., 2009; Downen & Ariola, 2018b).The lower-level nature of the abstract machine explicitly expresses how the recursor of inductive types, like numbers, accumulates a continuation during evaluation, maintaining the progress of recursion. This is implicit in the operational model of System T. The machine is shown correct, in the sense that a well-typed program will always terminate and produce an observable value (Theorem 3.2), which in our case is a number.

Section 4 continues by extending the abstract machine with the primitive corecursor for streams. The novelty is that this machine is derived by applying syntactic duality, corresponding to de Morgan duality, to the machine with recursion on natural numbers, leading us to a classical corecursive combinator with multiple outputs modeled as multiple continuations. This corecursor is classical in the sense that it abstracts binds first-class continuations using control effects in a way that corresponds to multiple conclusions in classical logic (à la

![]() $\lambda\mu$

-calculus (Parigot, 1992) and the classical sequent calculus (Curien & Herbelin, 2000)). From de Morgan duality in the machine, we can see that the corecursor relies on a value accumulator; this is logically dual to the recursor’s return continuation. Like recursion versus iteration, in Section 5 we compare corecursion versus coiteration: corecursion can be more efficient than coiteration by letting corecursive processes stop early. Since corecursion is dual to recursion, and call-by-value is dual to call-by-name (Curien & Herbelin, 2000; Wadler, Reference Wadler2003), this improvement in algorithmic complexity is only seen in call-by-value corecursion. Namely:

$\lambda\mu$

-calculus (Parigot, 1992) and the classical sequent calculus (Curien & Herbelin, 2000)). From de Morgan duality in the machine, we can see that the corecursor relies on a value accumulator; this is logically dual to the recursor’s return continuation. Like recursion versus iteration, in Section 5 we compare corecursion versus coiteration: corecursion can be more efficient than coiteration by letting corecursive processes stop early. Since corecursion is dual to recursion, and call-by-value is dual to call-by-name (Curien & Herbelin, 2000; Wadler, Reference Wadler2003), this improvement in algorithmic complexity is only seen in call-by-value corecursion. Namely:

In call-by-name, the corecursor is just as (in)efficient as the coiterator.

In call-by-value, the corecursor may end early; an asymptotic complexity improvement.

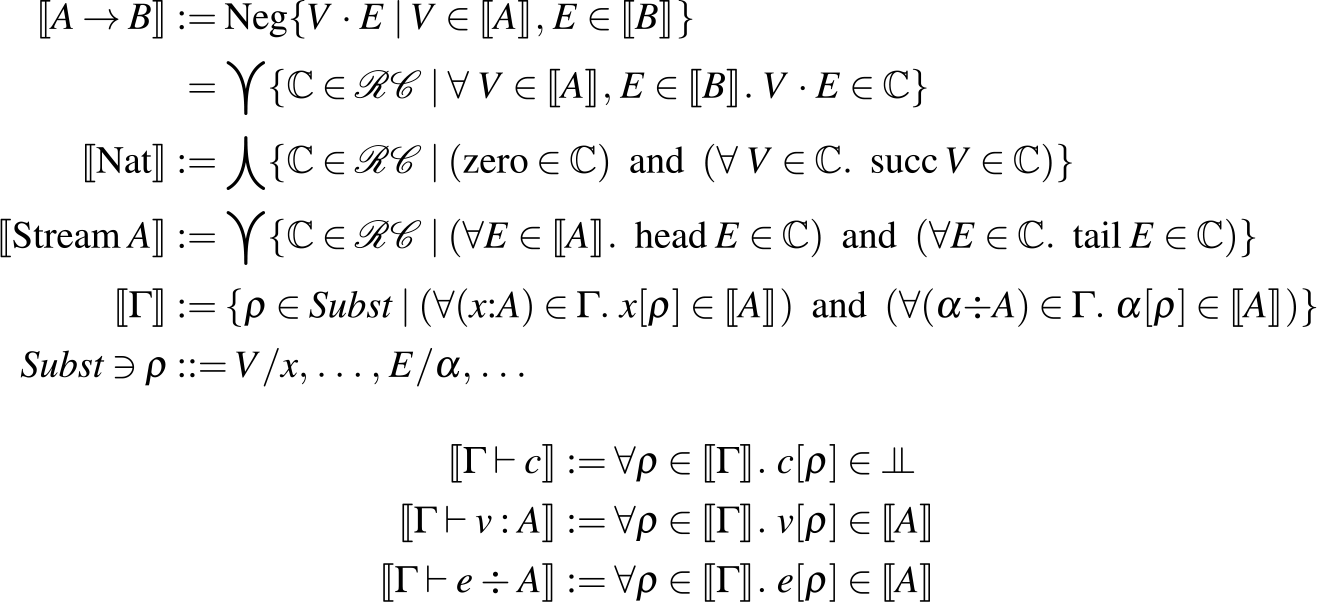

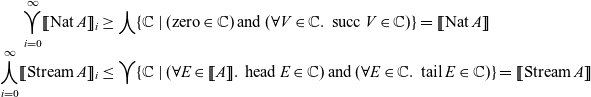

Yet, even though we have added infinite streams, we don’t want to ruin System T’s desirable properties. So in Section 6, we give an interpretation of the type system which extends previous models of finite types (Downen et al., Reference Downen, Johnson-Freyd and Ariola2020, Reference Downen, Ariola and Ghilezan2019) with the (co)recursive types of numbers and streams. The novel key step in reasoning about (co)recursive types is in reconciling two well-known fixed point constructions—Kleene’s and Knaster-Tarski’s—which is non-trivial for classical programs with control effects. This lets us show that, even with infinite streams, our abstract machine is terminating and type safe (Theorem 6.14).

In summary, we make the following contributions to the understanding of (co)recursion in programs:

We present a typed uniform abstract machine, with both call-by-name and call-by-value instances, that can represent functional programs that operate on both an inductive type (Figs. 4 and 6) and a coinductive type (Figs. 7 to 8).

In the context of the abstract machine (Section 4.2), we show how the primitive corecursion combinator for a coinductive type can be formally derived from the usual recursor of an inductive type using the notion of de Morgan duality in classical logic. This derivation is made possible by a computational interpretation of involutive duality inherent to classical logic (Fig. 9 and Theorem 4.2).

We informally analyze the impact of call-by-value versus call-by-name evaluation on the algorithmic complexity of different primitive combinators for (co)inductive types. Dual to the fact that primitive recursion is more efficient than iteration (only) in call-name (Section 3.5), we show that classical corecursion is more efficient than intuitionistic coiteration (only) in call-by-value (Section 5).

The combination of primitive recursion and corecursion is shown to be type safe and terminating in the abstract machine (Theorem 6.14), even though it can express infinite streams that do not end. This is proved using an extension of bi-orthogonal logical relations built on a semantic notion of subtyping (Section 6).

Proofs to all theorems that follow are given in the appendix. For further examples of how to apply classical corecursion in real-world programming languages, and for an illustration of how classicality adds expressive power to corecursion, see the companion paper (Downen & Ariola, 2021).

2 Recursion on natural numbers: System T

We start with Gödel’s System T (Gödel, Reference Gödel1980), a core calculus which allows us to define functions by structural recursion. Its syntax is given in Fig. 1. It is a canonical extension of the simply typed

![]() $\lambda$

-calculus, whose focus is on functions of type

$\lambda$

-calculus, whose focus is on functions of type

![]() $A \to B$

, with ways to construct natural numbers of type

$A \to B$

, with ways to construct natural numbers of type

![]() $\operatorname{Nat}$

. The

$\operatorname{Nat}$

. The

![]() $\operatorname{Nat}$

type comes equipped with two constructors

$\operatorname{Nat}$

type comes equipped with two constructors

![]() $\operatorname{zero}$

and

$\operatorname{zero}$

and

![]() $\operatorname{succ}$

, and a built-in recursor, which we write as

$\operatorname{succ}$

, and a built-in recursor, which we write as

![]() $\operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}}{} \{\operatorname{zero} \to N \mid \operatorname{succ} x \to y.N'\}$

. This

$\operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}}{} \{\operatorname{zero} \to N \mid \operatorname{succ} x \to y.N'\}$

. This

![]() $\operatorname{\mathbf{rec}}$

-expression analyzes M to determine if it has the shape

$\operatorname{\mathbf{rec}}$

-expression analyzes M to determine if it has the shape

![]() $\operatorname{zero}$

or

$\operatorname{zero}$

or

![]() $\operatorname{succ} x$

, and the matching branch is returned. In addition to binding the predecessor of M to x in the

$\operatorname{succ} x$

, and the matching branch is returned. In addition to binding the predecessor of M to x in the

![]() $\operatorname{succ} x$

branch, the recursive result—calculated by replacing M with its predecessor x—is bound to y.

$\operatorname{succ} x$

branch, the recursive result—calculated by replacing M with its predecessor x—is bound to y.

Fig. 1. System T:

![]() $\lambda$

-calculus with numbers and recursion.

$\lambda$

-calculus with numbers and recursion.

The type system of System T is given in Fig. 2. The var,

![]() ${\to}I$

and

${\to}I$

and

![]() ${\to}E$

typing rules are from the simply typed

${\to}E$

typing rules are from the simply typed

![]() $\lambda$

-calculus. The two

$\lambda$

-calculus. The two

![]() ${\operatorname{Nat}}I$

introduction rules give the types of the constructors of

${\operatorname{Nat}}I$

introduction rules give the types of the constructors of

![]() $\operatorname{Nat}$

, and the

$\operatorname{Nat}$

, and the

![]() ${\operatorname{Nat}}E$

elimination rule types the

${\operatorname{Nat}}E$

elimination rule types the

![]() $\operatorname{Nat}$

recursor.

$\operatorname{Nat}$

recursor.

Fig. 2. Type system of System T.

System T’s call-by-name and call-by-value operational semantics are given in Fig. 3. Both of these evaluation strategies share operational rules of the same form, with

![]() $\beta_\to$

being the well-known

$\beta_\to$

being the well-known

![]() $\beta$

rule of the

$\beta$

rule of the

![]() $\lambda$

-calculus, and

$\lambda$

-calculus, and

![]() $\beta_{\operatorname{zero}}$

and

$\beta_{\operatorname{zero}}$

and

![]() $\beta_{\operatorname{succ}}$

defining recursion on the two

$\beta_{\operatorname{succ}}$

defining recursion on the two

![]() $\operatorname{Nat}$

constructors. The only difference between call-by-value and call-by-name evaluation lies in their notion of values V (i.e., those terms which can be substituted for variables) and evaluation contexts (i.e., the location of the next reduction step to perform). Note that we take this notion seriously and never substitute a non-value for a variable. As such, the

$\operatorname{Nat}$

constructors. The only difference between call-by-value and call-by-name evaluation lies in their notion of values V (i.e., those terms which can be substituted for variables) and evaluation contexts (i.e., the location of the next reduction step to perform). Note that we take this notion seriously and never substitute a non-value for a variable. As such, the

![]() $\beta_{\operatorname{succ}}$

rule does not substitute the recursive computation

$\beta_{\operatorname{succ}}$

rule does not substitute the recursive computation

![]() $\operatorname{\mathbf{rec}} V \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to N \mid \operatorname{succ} x \to y. N' \}$

for y, since it might not be a value (in call-by-value). The next reduction step depends on the evaluation strategy. In call-by-name, this next step is indeed to substitute

$\operatorname{\mathbf{rec}} V \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to N \mid \operatorname{succ} x \to y. N' \}$

for y, since it might not be a value (in call-by-value). The next reduction step depends on the evaluation strategy. In call-by-name, this next step is indeed to substitute

![]() $\operatorname{\mathbf{rec}} V \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to N \mid \operatorname{succ} x \to y. N' \}$

for y, and so we have:

$\operatorname{\mathbf{rec}} V \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to N \mid \operatorname{succ} x \to y. N' \}$

for y, and so we have:

So call-by-name recursion is computed starting with the current (largest) number first and ending with the smallest number needed (possibly the base case for

![]() $\operatorname{zero}$

). If a recursive result is not needed, then it is not computed at all, allowing for an early end of the recursion. In contrast, call-by-value must evaluate the recursive result first before it can be substituted for y. As such, call-by-value recursion always starts by computing the base case for

$\operatorname{zero}$

). If a recursive result is not needed, then it is not computed at all, allowing for an early end of the recursion. In contrast, call-by-value must evaluate the recursive result first before it can be substituted for y. As such, call-by-value recursion always starts by computing the base case for

![]() $\operatorname{zero}$

(whether or not it is needed), and the intermediate results are propagated backwards until the case for the initial number is reached. So call-by-value allows for no opportunity to end the computation of

$\operatorname{zero}$

(whether or not it is needed), and the intermediate results are propagated backwards until the case for the initial number is reached. So call-by-value allows for no opportunity to end the computation of

![]() $\operatorname{\mathbf{rec}}$

early.

$\operatorname{\mathbf{rec}}$

early.

Fig. 3. Call-by-name and Call-by-value Operational semantics of System T.

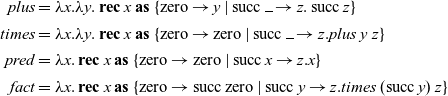

Example 2.1. The common arithmetic functions

![]() $\mathit{plus}$

,

$\mathit{plus}$

,

![]() $\mathit{times}$

,

$\mathit{times}$

,

![]() $\mathit{pred}$

, and

$\mathit{pred}$

, and

![]() $\mathit{fact}$

can be written in System T as follows:

$\mathit{fact}$

can be written in System T as follows:

\begin{align*} \mathit{plus} &= \lambda x. \lambda y. \operatorname{\mathbf{rec}} x \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to y \mid \operatorname{succ} \rule{1ex}{0.5pt} \to z. \operatorname{succ} z \} \\ \mathit{times} &= \lambda x. \lambda y. \operatorname{\mathbf{rec}} x \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} \rule{1ex}{0.5pt} \to z. \mathit{plus}~y~z \} \\ \mathit{pred} &= \lambda x. \operatorname{\mathbf{rec}} x \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \} \\ \mathit{fact} &= \lambda x. \operatorname{\mathbf{rec}} x \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{succ}\operatorname{zero} \mid \operatorname{succ} y \to z. \mathit{times}~(\operatorname{succ} y)~z \}\end{align*}

\begin{align*} \mathit{plus} &= \lambda x. \lambda y. \operatorname{\mathbf{rec}} x \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to y \mid \operatorname{succ} \rule{1ex}{0.5pt} \to z. \operatorname{succ} z \} \\ \mathit{times} &= \lambda x. \lambda y. \operatorname{\mathbf{rec}} x \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} \rule{1ex}{0.5pt} \to z. \mathit{plus}~y~z \} \\ \mathit{pred} &= \lambda x. \operatorname{\mathbf{rec}} x \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \} \\ \mathit{fact} &= \lambda x. \operatorname{\mathbf{rec}} x \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{succ}\operatorname{zero} \mid \operatorname{succ} y \to z. \mathit{times}~(\operatorname{succ} y)~z \}\end{align*}

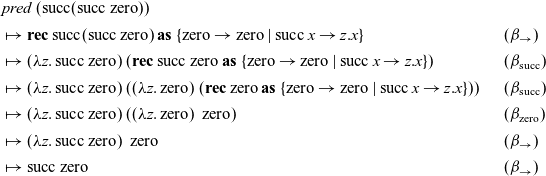

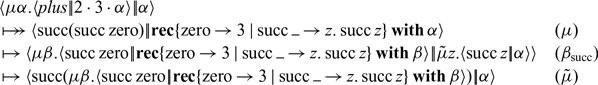

Executing

![]() $pred~(\operatorname{succ}(\operatorname{succ}\operatorname{zero}))$

in call-by-name proceeds like so:

$pred~(\operatorname{succ}(\operatorname{succ}\operatorname{zero}))$

in call-by-name proceeds like so:

\begin{align*} & \mathit{pred}~(\operatorname{succ}(\operatorname{succ}\operatorname{zero})) \\ &\mapsto \operatorname{\mathbf{rec}} \operatorname{succ}(\operatorname{succ}\operatorname{zero}) \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \} &&(\beta_\to) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero}) ~ (\operatorname{\mathbf{rec}} \operatorname{succ}\operatorname{zero} \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \}) &&(\beta_{\operatorname{succ}}) \\ &\mapsto \operatorname{succ}\operatorname{zero} &&(\beta_\to)\end{align*}

\begin{align*} & \mathit{pred}~(\operatorname{succ}(\operatorname{succ}\operatorname{zero})) \\ &\mapsto \operatorname{\mathbf{rec}} \operatorname{succ}(\operatorname{succ}\operatorname{zero}) \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \} &&(\beta_\to) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero}) ~ (\operatorname{\mathbf{rec}} \operatorname{succ}\operatorname{zero} \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \}) &&(\beta_{\operatorname{succ}}) \\ &\mapsto \operatorname{succ}\operatorname{zero} &&(\beta_\to)\end{align*}

However, in call-by-value, the predecessor of both

![]() $\operatorname{succ} \operatorname{zero}$

and

$\operatorname{succ} \operatorname{zero}$

and

![]() $\operatorname{zero}$

is computed even though these intermediate results are not needed in the end:

$\operatorname{zero}$

is computed even though these intermediate results are not needed in the end:

\begin{align*} & \mathit{pred}~(\operatorname{succ}(\operatorname{succ}\operatorname{zero})) \\ &\mapsto \operatorname{\mathbf{rec}} \operatorname{succ}(\operatorname{succ}\operatorname{zero}) \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \} &&(\beta_\to) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero}) ~ (\operatorname{\mathbf{rec}} \operatorname{succ}\operatorname{zero} \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \}) &&(\beta_{\operatorname{succ}}) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero}) ~ ((\lambda z.\operatorname{zero}) ~ (\operatorname{\mathbf{rec}} \operatorname{zero} \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \})) &&(\beta_{\operatorname{succ}}) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero})~ ((\lambda z.\operatorname{zero}) ~ \operatorname{zero}) &&(\beta_{\operatorname{zero}}) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero}) ~ \operatorname{zero} &&(\beta_\to) \\ &\mapsto \operatorname{succ}\operatorname{zero} &&(\beta_\to)\end{align*}

\begin{align*} & \mathit{pred}~(\operatorname{succ}(\operatorname{succ}\operatorname{zero})) \\ &\mapsto \operatorname{\mathbf{rec}} \operatorname{succ}(\operatorname{succ}\operatorname{zero}) \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \} &&(\beta_\to) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero}) ~ (\operatorname{\mathbf{rec}} \operatorname{succ}\operatorname{zero} \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \}) &&(\beta_{\operatorname{succ}}) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero}) ~ ((\lambda z.\operatorname{zero}) ~ (\operatorname{\mathbf{rec}} \operatorname{zero} \operatorname{\mathbf{as}} \ \{ \operatorname{zero} \to \operatorname{zero} \mid \operatorname{succ} x \to z.x \})) &&(\beta_{\operatorname{succ}}) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero})~ ((\lambda z.\operatorname{zero}) ~ \operatorname{zero}) &&(\beta_{\operatorname{zero}}) \\ &\mapsto (\lambda z. \operatorname{succ}\operatorname{zero}) ~ \operatorname{zero} &&(\beta_\to) \\ &\mapsto \operatorname{succ}\operatorname{zero} &&(\beta_\to)\end{align*}

In general,

![]() $\mathit{pred}$

is a constant time (O(1)) function over the size of its argument when following the call-by-name semantics, which computes the predecessor of any natural number in a fixed number of steps. In contrast,

$\mathit{pred}$

is a constant time (O(1)) function over the size of its argument when following the call-by-name semantics, which computes the predecessor of any natural number in a fixed number of steps. In contrast,

![]() $\mathit{pred}$

is a linear time (O(n)) function when following the call-by-value semantics, where

$\mathit{pred}$

is a linear time (O(n)) function when following the call-by-value semantics, where

![]() $\mathit{pred}~(\operatorname{succ}^n\operatorname{zero})$

executes with a number of steps proportional to the size n of its argument because it requires at least n applications of the

$\mathit{pred}~(\operatorname{succ}^n\operatorname{zero})$

executes with a number of steps proportional to the size n of its argument because it requires at least n applications of the

![]() $\beta_{\operatorname{succ}}$

rule before an answer can be returned.

$\beta_{\operatorname{succ}}$

rule before an answer can be returned.

3 Recursion in an abstract machine

In order to explore the lower-level performance details of recursion, we can use an abstract machine for modeling an implementation of System T. Please suggest whether the spelling of the term ’redex’ in the sentence ’Unlike the reduction step’ is OK.Unlike the operational semantics given in Fig. 3, which requires a costly recursive search deep into an expression to find the next redex at every step, an abstract machine explicitly includes this search in the computation itself which can immediately resume from the same position as the previous reduction step. As such, every step of the machine can be applied by matching only on the top-level form of the machine state, which models the fact that a real implementations in a machine does not have to recursively search for the next reduction step to perform, but can identify and jump to the next step in a constant amount of time. Thus, in an abstract machine instead of working with terms one works with configurations of the form:

where M is a term also called a producer and E is a continuation or evaluation context, also called a consumer. A state, also called a command, puts together a producer and a consumer, so that the output of M is given as the input to E. We first present distinct abstract machines for call-by-name and call-by-value, we then smooth out the differences in the uniform abstract machine.

3.1 Call-by-name abstract machine

The call-by-name abstract machine for System T is based on the Krivine machine (Krivine, 2007), which is defined in terms of these continuations (representing evaluation contexts, for example,

![]() $\operatorname{\mathbf{tp}}$

represents the empty, top-level context) and transition rules:

Footnote 1

$\operatorname{\mathbf{tp}}$

represents the empty, top-level context) and transition rules:

Footnote 1

![]() $^{\kern-1pt,}$

Footnote 2

$^{\kern-1pt,}$

Footnote 2

Refocusing rules:

\begin{align*} \langle {M~N} |\!| {E} \rangle &\mapsto \langle {M} |\!| {N \cdot E} \rangle \\ \langle {\operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}} \{\dots\} } |\!| {E} \rangle &\mapsto \langle {M} |\!| {\operatorname{\mathbf{rec}} \{\dots\} \operatorname{\mathbf{with}} E} \rangle\end{align*}

\begin{align*} \langle {M~N} |\!| {E} \rangle &\mapsto \langle {M} |\!| {N \cdot E} \rangle \\ \langle {\operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}} \{\dots\} } |\!| {E} \rangle &\mapsto \langle {M} |\!| {\operatorname{\mathbf{rec}} \{\dots\} \operatorname{\mathbf{with}} E} \rangle\end{align*}

Reduction rules:

The first two rules are refocusing rules that move the attention of the machine closer to the next reduction building a larger continuation:

![]() $N \cdot E$

corresponds to the context

$N \cdot E$

corresponds to the context

![]() $E[\square~N]$

, and the continuation

$E[\square~N]$

, and the continuation

![]() $\operatorname{\mathbf{rec}} \{\operatorname{zero} \to N \mid \operatorname{succ} x \to y.N'\} \operatorname{\mathbf{with}} E$

corresponds to the context

$\operatorname{\mathbf{rec}} \{\operatorname{zero} \to N \mid \operatorname{succ} x \to y.N'\} \operatorname{\mathbf{with}} E$

corresponds to the context

![]() $E[\operatorname{\mathbf{rec}} \square \operatorname{\mathbf{as}} \{\operatorname{zero} \to N \mid \operatorname{succ} x \to y.N'\} ]$

. The latter three rules are reduction rules which correspond to steps of the operational semantics in Fig. 3. While the distinction between refocusing and reduction rules is just a mere classification now, the difference will become an important tool for generalizing the abstract machine into a more uniform presentation later in Section 3.3.

$E[\operatorname{\mathbf{rec}} \square \operatorname{\mathbf{as}} \{\operatorname{zero} \to N \mid \operatorname{succ} x \to y.N'\} ]$

. The latter three rules are reduction rules which correspond to steps of the operational semantics in Fig. 3. While the distinction between refocusing and reduction rules is just a mere classification now, the difference will become an important tool for generalizing the abstract machine into a more uniform presentation later in Section 3.3.

3.2 Call-by-value abstract machine

A CEK-style (Felleisen & Friedman, 1986), call-by-value abstract machine for System T—which evaluates applications

![]() $M_1~M_2~\dots~M_n$

left-to-right to match the call-by-value semantics in Fig. 3—is given by these continuations E and transition rules:

$M_1~M_2~\dots~M_n$

left-to-right to match the call-by-value semantics in Fig. 3—is given by these continuations E and transition rules:

Refocusing rules:

\begin{align*} \langle {M~N} |\!| {E} \rangle &\mapsto \langle {M} |\!| {N \cdot E} \rangle \\ \langle {V} |\!| {R \cdot E} \rangle &\mapsto \langle {R} |\!| {V \circ E} \rangle \\ \langle {V} |\!| {V' \circ E} \rangle &\mapsto \langle {V'} |\!| {V \cdot E} \rangle \\ \langle {\operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}} \{\dots\}} |\!| {E} \rangle &\mapsto \langle {M} |\!| {\operatorname{\mathbf{rec}} \{\dots\} \operatorname{\mathbf{with}} E} \rangle \\ \langle {\operatorname{succ} R} |\!| {E} \rangle &\mapsto \langle {R} |\!| {\operatorname{succ} \circ E} \rangle \\ \langle {V} |\!| {\operatorname{succ} \circ E} \rangle &\mapsto \langle {\operatorname{succ} V} |\!| {E} \rangle\end{align*}

\begin{align*} \langle {M~N} |\!| {E} \rangle &\mapsto \langle {M} |\!| {N \cdot E} \rangle \\ \langle {V} |\!| {R \cdot E} \rangle &\mapsto \langle {R} |\!| {V \circ E} \rangle \\ \langle {V} |\!| {V' \circ E} \rangle &\mapsto \langle {V'} |\!| {V \cdot E} \rangle \\ \langle {\operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}} \{\dots\}} |\!| {E} \rangle &\mapsto \langle {M} |\!| {\operatorname{\mathbf{rec}} \{\dots\} \operatorname{\mathbf{with}} E} \rangle \\ \langle {\operatorname{succ} R} |\!| {E} \rangle &\mapsto \langle {R} |\!| {\operatorname{succ} \circ E} \rangle \\ \langle {V} |\!| {\operatorname{succ} \circ E} \rangle &\mapsto \langle {\operatorname{succ} V} |\!| {E} \rangle\end{align*}

Reduction rules:

where R stands for a non-value term. Since the call-by-value operational semantics has more forms of evaluation contexts, this machine has additional refocusing rules for accumulating more forms of continuations including applications of functions (

![]() $V \circ E$

corresponding to

$V \circ E$

corresponding to

![]() $E[V~\square]$

) and the successor constructor (

$E[V~\square]$

) and the successor constructor (

![]() $\operatorname{succ} \circ E$

corresponding to

$\operatorname{succ} \circ E$

corresponding to

![]() $E[\operatorname{succ}~\square]$

). Also note that the final reduction rule for the

$E[\operatorname{succ}~\square]$

). Also note that the final reduction rule for the

![]() $\operatorname{succ}$

case of recursion is different, accounting for the fact that recursion in call-by-value follows a different order than in call-by-name. Indeed, the recursor must explicitly accumulate and build upon a continuation, “adding to” the place it returns to with every recursive call. But otherwise, the reduction rules are the same.

Footnote 3

$\operatorname{succ}$

case of recursion is different, accounting for the fact that recursion in call-by-value follows a different order than in call-by-name. Indeed, the recursor must explicitly accumulate and build upon a continuation, “adding to” the place it returns to with every recursive call. But otherwise, the reduction rules are the same.

Footnote 3

3.3 Uniform abstract machine

We now unite both evaluation strategies with a common abstract machine, shown in Fig. 4. As before, machine configurations are of the form

which put together a term v and a continuation e (often referred to as a coterm). However, both terms and continuations are more general than before. Our uniform abstract machine is based on the sequent calculus, a symmetric language reflecting many dualities of classical logic (Curien & Herbelin, 2000; Wadler, Reference Wadler2003). An advantage of this sequent-based system is that the explicit symmetry inherent in its syntax gives us a language to express and understand the implicit symmetries that are hiding in computations and types. This utility of a symmetric language is one of the key insights of our approach, which will let us syntactically derive a notion of corecursion which is dual to recursion.

Fig. 4. Uniform, recursive abstract machine for System T.

Unlike the previous machines, continuations go beyond evaluation contexts and include

![]() ${\tilde{\mu}}{x}. \langle {v} |\!| {e} \rangle$

, which is a continuation that binds its input value to x and then steps to the machine state

${\tilde{\mu}}{x}. \langle {v} |\!| {e} \rangle$

, which is a continuation that binds its input value to x and then steps to the machine state

![]() $\langle {v} |\!| {e} \rangle$

. This new form allows us to express the additional call-by-value evaluation contexts:

$\langle {v} |\!| {e} \rangle$

. This new form allows us to express the additional call-by-value evaluation contexts:

![]() $V \circ E$

becomes

$V \circ E$

becomes

![]() ${\tilde{\mu}}{x}. \langle {V} |\!| {x \cdot E} \rangle$

, and

${\tilde{\mu}}{x}. \langle {V} |\!| {x \cdot E} \rangle$

, and

![]() $\operatorname{succ} \circ E$

is

$\operatorname{succ} \circ E$

is

![]() ${\tilde{\mu}}{x}. \langle {\operatorname{succ} x} |\!| {E} \rangle$

. Evaluation contexts are also more restrictive than before; only values can be pushed on the calling stack. We represent the application continuation

${\tilde{\mu}}{x}. \langle {\operatorname{succ} x} |\!| {E} \rangle$

. Evaluation contexts are also more restrictive than before; only values can be pushed on the calling stack. We represent the application continuation

![]() $M \cdot E$

with a non-value argument M by naming its partner—the generic value V—with y:

$M \cdot E$

with a non-value argument M by naming its partner—the generic value V—with y:

![]() $ {\tilde{\mu}}{y}. \langle {M} |\!| {{\tilde{\mu}}{x}. \langle {y} |\!| {x \cdot E} \rangle} \rangle$

.

Footnote 4

$ {\tilde{\mu}}{y}. \langle {M} |\!| {{\tilde{\mu}}{x}. \langle {y} |\!| {x \cdot E} \rangle} \rangle$

.

Footnote 4

The refocusing rules can be subsumed all together by extending terms with a dual form of

![]() ${\tilde{\mu}}$

-binding. The

${\tilde{\mu}}$

-binding. The

![]() $\mu$

-abstraction expression

$\mu$

-abstraction expression

![]() $\mu{\alpha}. \langle {v} |\!| {e} \rangle$

binds its continuation to

$\mu{\alpha}. \langle {v} |\!| {e} \rangle$

binds its continuation to

![]() $\alpha$

and then steps to the machine state

$\alpha$

and then steps to the machine state

![]() $\langle {v} |\!| {e} \rangle$

. With

$\langle {v} |\!| {e} \rangle$

. With

![]() $\mu$

-bindings, all the refocusing rules for call-by-name and call-by-value can be encoded in terms of

$\mu$

-bindings, all the refocusing rules for call-by-name and call-by-value can be encoded in terms of

![]() $\mu$

and

$\mu$

and

![]() ${\tilde{\mu}}$

.

Footnote 5

It is also not necessary to do these steps at run-time, but can all be done before execution through a compilation step. The target language of this compilation step becomes the syntax of the uniform abstract machine, as shown in Fig. 4. This language does not include anymore applications

${\tilde{\mu}}$

.

Footnote 5

It is also not necessary to do these steps at run-time, but can all be done before execution through a compilation step. The target language of this compilation step becomes the syntax of the uniform abstract machine, as shown in Fig. 4. This language does not include anymore applications

![]() $M~N$

and the recursive term

$M~N$

and the recursive term

![]() $\operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}} \{\dots\}$

. Also, unlike System T, the syntax of terms and coterms depends on the definition of values and covalues;

$\operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}} \{\dots\}$

. Also, unlike System T, the syntax of terms and coterms depends on the definition of values and covalues;

![]() $\operatorname{succ} V$

,

$\operatorname{succ} V$

,

![]() $\operatorname{\mathbf{rec}} \{\dots\} \operatorname{\mathbf{with}} E$

, and call stacks

$\operatorname{\mathbf{rec}} \{\dots\} \operatorname{\mathbf{with}} E$

, and call stacks

![]() $V \cdot E$

are valid in both call-by-name and call-by-value, just with different definitions of V and E. Yet, all System T terms can still be translated to the smaller language of the machine, as shown in Fig. 5. General terms also include the

$V \cdot E$

are valid in both call-by-name and call-by-value, just with different definitions of V and E. Yet, all System T terms can still be translated to the smaller language of the machine, as shown in Fig. 5. General terms also include the

![]() $\mu$

- and

$\mu$

- and

![]() ${\tilde{\mu}}$

-binders described above:

${\tilde{\mu}}$

-binders described above:

![]() $\mu{\alpha}. c$

is not a value in call-by-value, and

$\mu{\alpha}. c$

is not a value in call-by-value, and

![]() ${\tilde{\mu}}{x}. c$

is not a covalue in call-by-name. So for example,

${\tilde{\mu}}{x}. c$

is not a covalue in call-by-name. So for example,

![]() $\operatorname{succ} (\mu{\alpha}. c)$

is not a legal term in call-by-value, but can be translated instead to

$\operatorname{succ} (\mu{\alpha}. c)$

is not a legal term in call-by-value, but can be translated instead to

![]() $\mu{\beta}. \langle {\mu{\alpha}. c} |\!| {{\tilde{\mu}}{x}. \langle {\operatorname{succ} x} |\!| {\beta} \rangle} \rangle$

following Fig. 5. Similarly

$\mu{\beta}. \langle {\mu{\alpha}. c} |\!| {{\tilde{\mu}}{x}. \langle {\operatorname{succ} x} |\!| {\beta} \rangle} \rangle$

following Fig. 5. Similarly

![]() $x \cdot {\tilde{\mu}}{y}. c$

is not a legal call stack in call-by-name, but it can be rewritten to

$x \cdot {\tilde{\mu}}{y}. c$

is not a legal call stack in call-by-name, but it can be rewritten to

![]() ${\tilde{\mu}}{z}. \langle {\mu{\alpha}.\langle {z} |\!| {x \cdot \alpha} \rangle} |\!| {{\tilde{\mu}}{y}. c} \rangle$

.

${\tilde{\mu}}{z}. \langle {\mu{\alpha}.\langle {z} |\!| {x \cdot \alpha} \rangle} |\!| {{\tilde{\mu}}{y}. c} \rangle$

.

Fig. 5. The translation from System T to the uniform abstract machine.

As with System T, the notions of values and covalues drive the reduction rules in Fig. 4. In particular, the

![]() $\mu$

and

$\mu$

and

![]() ${\tilde{\mu}}$

rules will only substitute a value for a variable or a covalue for a covariable, respectively. Likewise, the

${\tilde{\mu}}$

rules will only substitute a value for a variable or a covalue for a covariable, respectively. Likewise, the

![]() $\beta_\to$

rule implements function calls, but taking the next argument value off of a call stack and plugging it into the function. The only remaining rules are

$\beta_\to$

rule implements function calls, but taking the next argument value off of a call stack and plugging it into the function. The only remaining rules are

![]() $\beta_{\operatorname{zero}}$

and

$\beta_{\operatorname{zero}}$

and

![]() $\beta_{\operatorname{succ}}$

for reducing a recursor when given a number constructed by

$\beta_{\operatorname{succ}}$

for reducing a recursor when given a number constructed by

![]() $\operatorname{zero}$

or

$\operatorname{zero}$

or

![]() $\operatorname{succ}$

. While the

$\operatorname{succ}$

. While the

![]() $\beta_{\operatorname{zero}}$

is exactly the same as it was previously in both specialized machine, notice how

$\beta_{\operatorname{zero}}$

is exactly the same as it was previously in both specialized machine, notice how

![]() $\beta_{\operatorname{succ}}$

is different. Rather than committing to one evaluation order or the other,

$\beta_{\operatorname{succ}}$

is different. Rather than committing to one evaluation order or the other,

![]() $\beta_{\operatorname{succ}}$

is neutral: the recursive predecessor (expressed as the term

$\beta_{\operatorname{succ}}$

is neutral: the recursive predecessor (expressed as the term

![]() $\mu{\alpha}.\langle {V} |\!| {\operatorname{\mathbf{rec}}\{\dots\}\operatorname{\mathbf{with}}\alpha} \rangle$

on the right-hand side) is neither given precedence (at the top of the command) nor delayed (by substituting it for y). Instead, this recursive predecessor is bound to y with a

$\mu{\alpha}.\langle {V} |\!| {\operatorname{\mathbf{rec}}\{\dots\}\operatorname{\mathbf{with}}\alpha} \rangle$

on the right-hand side) is neither given precedence (at the top of the command) nor delayed (by substituting it for y). Instead, this recursive predecessor is bound to y with a

![]() ${\tilde{\mu}}$

-abstraction. This way, the correct evaluation order can be decided in the next step by either an application of

${\tilde{\mu}}$

-abstraction. This way, the correct evaluation order can be decided in the next step by either an application of

![]() $\mu$

or

$\mu$

or

![]() ${\tilde{\mu}}$

reduction.

${\tilde{\mu}}$

reduction.

Notice one more advantage of translating System T at “compile-time” into a smaller language in the abstract machine. In the same way that the

![]() $\lambda$

-calculus supports both a deterministic operational semantics along with a rewriting system that allows for optimizations that apply reductions to any sub-expression, so too does the syntax of this uniform abstract machine allow for optimizations that reduce sub-expressions in advance of the usual execution order. For example, consider the term

$\lambda$

-calculus supports both a deterministic operational semantics along with a rewriting system that allows for optimizations that apply reductions to any sub-expression, so too does the syntax of this uniform abstract machine allow for optimizations that reduce sub-expressions in advance of the usual execution order. For example, consider the term

![]() $\operatorname{\mathbf{let}} f = (\lambda{x}. (\lambda{y}. y)~x) \operatorname{\mathbf{in}} M$

.

Footnote 6

Its next step is to substitute the abstraction

$\operatorname{\mathbf{let}} f = (\lambda{x}. (\lambda{y}. y)~x) \operatorname{\mathbf{in}} M$

.

Footnote 6

Its next step is to substitute the abstraction

![]() $(\lambda{x}. (\lambda{y}. y)~x)$

for f in M, and every time M calls

$(\lambda{x}. (\lambda{y}. y)~x)$

for f in M, and every time M calls

![]() $(f~V)$

we must repeat the steps

$(f~V)$

we must repeat the steps

![]() \begin{align*} (\lambda{x}. (\lambda{y}. y)~x)~V \mapsto (\lambda{y}. y)~V \mapsto V\end{align*}

again. But applying a

\begin{align*} (\lambda{x}. (\lambda{y}. y)~x)~V \mapsto (\lambda{y}. y)~V \mapsto V\end{align*}

again. But applying a

![]() $\beta$

reduction under the

$\beta$

reduction under the

![]() $\lambda$

bound to f, we can instead optimize this expression to

$\lambda$

bound to f, we can instead optimize this expression to

![]() $\operatorname{\mathbf{let}} f = (\lambda{x}. x) \operatorname{\mathbf{in}} M$

. With this optimization, when M calls

$\operatorname{\mathbf{let}} f = (\lambda{x}. x) \operatorname{\mathbf{in}} M$

. With this optimization, when M calls

![]() $(f~V)$

it reduces as

$(f~V)$

it reduces as

![]() $(\lambda{x}. x)~V \mapsto V$

in one step. This same approach applies to the uniform abstract machine, too, because all elimination forms like

$(\lambda{x}. x)~V \mapsto V$

in one step. This same approach applies to the uniform abstract machine, too, because all elimination forms like

![]() $\square~N$

and

$\square~N$

and

![]() $\operatorname{\mathbf{rec}} \square \operatorname{\mathbf{as}} \{\dots\}$

have been compiled to continuations of the form

$\operatorname{\mathbf{rec}} \square \operatorname{\mathbf{as}} \{\dots\}$

have been compiled to continuations of the form

![]() $N \cdot \alpha$

and

$N \cdot \alpha$

and

![]() $\operatorname{\mathbf{rec}}\{\dots\}\operatorname{\mathbf{with}}\alpha$

that we can use to apply machine reductions. For example, the translation of

$\operatorname{\mathbf{rec}}\{\dots\}\operatorname{\mathbf{with}}\alpha$

that we can use to apply machine reductions. For example, the translation of

![]() $\operatorname{\mathbf{let}} f = (\lambda{x}. (\lambda{y}. y)~x) \operatorname{\mathbf{in}} M$

is quite large, but we can optimize away several

$\operatorname{\mathbf{let}} f = (\lambda{x}. (\lambda{y}. y)~x) \operatorname{\mathbf{in}} M$

is quite large, but we can optimize away several

![]() $\mu$

and

$\mu$

and

![]() ${\tilde{\mu}}$

reductions—which are responsible for the administrative duty of directing information and control flow—in advance inside sub-expressions to simplify the translation to:

Footnote 7

${\tilde{\mu}}$

reductions—which are responsible for the administrative duty of directing information and control flow—in advance inside sub-expressions to simplify the translation to:

Footnote 7

where

![]() $\mathrel{\to\!\!\!\!\to}$

denotes zero or more reduction steps

$\mathrel{\to\!\!\!\!\to}$

denotes zero or more reduction steps

![]() $\mapsto$

applied inside any context. From there, we can further optimize the program by simplifying the application of

$\mapsto$

applied inside any context. From there, we can further optimize the program by simplifying the application of

![]() $\lambda{y}. y$

in advance, by applying the

$\lambda{y}. y$

in advance, by applying the

![]() $\beta_\to$

rule inside the

$\beta_\to$

rule inside the

![]() $\mu$

s binding

$\mu$

s binding

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

and the

$\beta$

and the

![]() $\lambda$

binding x like so:

$\lambda$

binding x like so:

This way, we are able to still optimize the program even after compilation to the abstract machine language, the same as if we were optimizing the original

![]() $\lambda$

-calculus source code.

$\lambda$

-calculus source code.

Intermezzo 3.1 We can now summarize how some basic concepts of recursion are directly modeled in our syntactic framework:

– With inductive data types, values are constructed and the consumer is a recursive process that uses the data. Natural numbers are terms or producers, and their use is a process which is triggered when the term becomes a value.

– Construction of data is finite and its consumption is (potentially) infinite, in the sense that there must be no limit to the size of the data that a consumer can process. We can only build values from a finite number of constructor applications. However, the consumer does not know how big of an input it will be given, so it has to be ready to handle data structures of any size. In the end, termination is preserved because only finite values are consumed.

– Recursion uses the data, rather than producing it.

$\operatorname{\mathbf{rec}}$

is a coterm, not a term.

$\operatorname{\mathbf{rec}}$

is a coterm, not a term.– Recursion starts big and potentially reduces down to a base case. As shown in the reduction rules, the recursor breaks down the data structure and might end when the base case is reached.

– The values of a data structures are all independent from each other but the results of the recursion potentially depend on each other. In the reduction rule for the successor case, the result at a number n might depend on the result at

$n-1$

.

$n-1$

.

3.4 Examples of recursion

Restricting the

![]() $\mu$

and

$\mu$

and

![]() ${\tilde{\mu}}$

rules to only binding (co)values effectively implements the chosen evaluation strategy. For example, consider the application

${\tilde{\mu}}$

rules to only binding (co)values effectively implements the chosen evaluation strategy. For example, consider the application

![]() $(\lambda z. \operatorname{succ}\operatorname{zero})~((\lambda x. x)~\operatorname{zero})$

. Call-by-name evaluation will reduce the outer application first and return

$(\lambda z. \operatorname{succ}\operatorname{zero})~((\lambda x. x)~\operatorname{zero})$

. Call-by-name evaluation will reduce the outer application first and return

![]() $\operatorname{succ}\operatorname{zero}$

right away, whereas call-by-value evaluation will first reduce the inner application

$\operatorname{succ}\operatorname{zero}$

right away, whereas call-by-value evaluation will first reduce the inner application

![]() $((\lambda x.x)~\operatorname{zero})$

. How is this different order of evaluation made explicit in the abstract machine, which uses the same set of rules in both cases? First, consider the translation of

$((\lambda x.x)~\operatorname{zero})$

. How is this different order of evaluation made explicit in the abstract machine, which uses the same set of rules in both cases? First, consider the translation of

![]() $[\![ (\lambda z. x)~((\lambda x. x)~y) ]\!]$

:

$[\![ (\lambda z. x)~((\lambda x. x)~y) ]\!]$

:

This is quite a large term for such a simple source expression. To make the example clearer, let us first simplify the administrative-style

![]() ${\tilde{\mu}}$

-bindings out of the way (using applications of

${\tilde{\mu}}$

-bindings out of the way (using applications of

![]() ${\tilde{\mu}}$

rules for to substitute

${\tilde{\mu}}$

rules for to substitute

![]() $\lambda$

-abstractions and variables in a way that is valid for both call-by-name and call-by-value) to get the shorter term:

$\lambda$

-abstractions and variables in a way that is valid for both call-by-name and call-by-value) to get the shorter term:

To execute it, we need to put it in interaction with an actual context. In our case, we can simply use a covariable

![]() $\alpha$

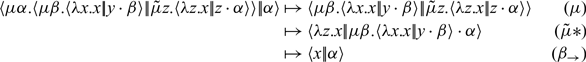

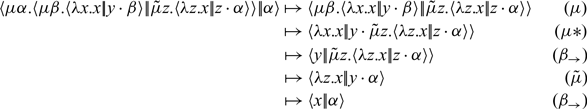

. Call-by-name execution then proceeds from the simplified as:

$\alpha$

. Call-by-name execution then proceeds from the simplified as:

And call-by-value execution of the simplified proceeds as:

The first two steps are the same for either evaluation strategy. Where the two begin to diverge is in the third step (marked by a

![]() $*$

), which is an interaction between a

$*$

), which is an interaction between a

![]() $\mu$

- and a

$\mu$

- and a

![]() ${\tilde{\mu}}$

-binder. In call-by-name, the

${\tilde{\mu}}$

-binder. In call-by-name, the

![]() ${\tilde{\mu}}$

rule takes precedence (because a

${\tilde{\mu}}$

rule takes precedence (because a

![]() ${\tilde{\mu}}$

-coterm is not a covalue), leading to the next step which throws away the first argument, unevaluated. In call-by-value, the

${\tilde{\mu}}$

-coterm is not a covalue), leading to the next step which throws away the first argument, unevaluated. In call-by-value, the

![]() $\mu$

rule takes precedence (because a

$\mu$

rule takes precedence (because a

![]() $\mu$

-term is not a value), leading to the next step which evaluates the first argument, producing y to bind to z that eventually gets thrown away.

$\mu$

-term is not a value), leading to the next step which evaluates the first argument, producing y to bind to z that eventually gets thrown away.

Consider the System T definition of

![]() $\mathit{plus}$

from Example 2.1, which is expressed by the machine term

$\mathit{plus}$

from Example 2.1, which is expressed by the machine term

The application

![]() $\mathit{plus}~2~3$

is then expressed as

$\mathit{plus}~2~3$

is then expressed as

![]() $ \mu{\alpha}. \langle {\mathit{plus}} |\!| { } \rangle {2 \cdot 3 \cdot \alpha}$

, which is obtained by reducing some

$ \mu{\alpha}. \langle {\mathit{plus}} |\!| { } \rangle {2 \cdot 3 \cdot \alpha}$

, which is obtained by reducing some

![]() $\mu$

- and

$\mu$

- and

![]() ${\tilde{\mu}}$

-bindings in advance. Putting this term in the context

${\tilde{\mu}}$

-bindings in advance. Putting this term in the context

![]() $\alpha$

, in call-by-value it executes (eliding the branches of the

$\alpha$

, in call-by-value it executes (eliding the branches of the

![]() $\operatorname{\mathbf{rec}}$

continuation, which are the same in every following step) like so:

$\operatorname{\mathbf{rec}}$

continuation, which are the same in every following step) like so:

Notice how this execution shows how, during the recursive traversal of the data structure, the return continuation of the recursor is updated (in blue) to keep track of the growing context of pending operations, which must be fully processed before the final value of 5 (

![]() $\operatorname{succ}(\operatorname{succ} 3)$

) can be returned to the original caller (

$\operatorname{succ}(\operatorname{succ} 3)$

) can be returned to the original caller (

![]() $\alpha$

). This update is implicit in the

$\alpha$

). This update is implicit in the

![]() $\lambda$

-calculus-based System T, but becomes explicit in the abstract machine. In contrast, call-by-name only computes numbers as far as they are needed, otherwise stopping at the outermost constructor. The call-by-name execution of the above command proceeds as follows, after fast-forwarding to the first application of

$\lambda$

-calculus-based System T, but becomes explicit in the abstract machine. In contrast, call-by-name only computes numbers as far as they are needed, otherwise stopping at the outermost constructor. The call-by-name execution of the above command proceeds as follows, after fast-forwarding to the first application of

![]() $\beta_{\operatorname{succ}}$

:

$\beta_{\operatorname{succ}}$

:

Unless

![]() $\alpha$

demands to know something about the predecessor of this number, the term

$\alpha$

demands to know something about the predecessor of this number, the term

![]() $\mu{\beta}.\langle {\operatorname{succ}\operatorname{zero}} |\!| {\operatorname{\mathbf{rec}}\{\dots\}\operatorname{\mathbf{with}}\beta} \rangle$

will not be computed.

$\mu{\beta}.\langle {\operatorname{succ}\operatorname{zero}} |\!| {\operatorname{\mathbf{rec}}\{\dots\}\operatorname{\mathbf{with}}\beta} \rangle$

will not be computed.

Now consider

![]() $\mathit{pred}~(\operatorname{succ}(\operatorname{succ}\operatorname{zero}))$

, which can be expressed in the machine as:

$\mathit{pred}~(\operatorname{succ}(\operatorname{succ}\operatorname{zero}))$

, which can be expressed in the machine as:

In call-by-name, it executes with respect to

![]() $\alpha$

like so:

$\alpha$

like so:

Notice how, after the first application of

![]() $\beta_{\operatorname{succ}}$

, the computation finishes in just one

$\beta_{\operatorname{succ}}$

, the computation finishes in just one

![]() ${\tilde{\mu}}$

step, even though we began recursing on the number 2. In call-by-value instead, we have to continue with the recursion even though its result is not needed. Fast-forwarding to the first application of the

${\tilde{\mu}}$

step, even though we began recursing on the number 2. In call-by-value instead, we have to continue with the recursion even though its result is not needed. Fast-forwarding to the first application of the

![]() $\beta_{\operatorname{succ}}$

rule, we have:

$\beta_{\operatorname{succ}}$

rule, we have:

3.5 Recursion versus iteration: Expressiveness and efficiency

Recall how the recursor performs two jobs at the same time: finding the predecessor of a natural number as well as calculating the recursive result given for the predecessor. These two functionalities can be captured separately by continuations that perform shallow case analysis and iteration, respectively. Rather than including them as primitives, both can be expressed as syntactic sugar in the form of macro-expansions in the language of the abstract machine like so:

\begin{align*}\begin{aligned} & \operatorname{\mathbf{case}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} x &&\to w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}&:=\!\!\begin{aligned} &\operatorname{\mathbf{rec}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} x &&\to \rule{1ex}{0.5pt}\,. w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}&~~\begin{aligned} & \operatorname{\mathbf{iter}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} &&\to x.w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}&:=\!\!\begin{aligned} &\operatorname{\mathbf{rec}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} \rule{1ex}{0.5pt} &&\to x.w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}\end{align*}

\begin{align*}\begin{aligned} & \operatorname{\mathbf{case}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} x &&\to w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}&:=\!\!\begin{aligned} &\operatorname{\mathbf{rec}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} x &&\to \rule{1ex}{0.5pt}\,. w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}&~~\begin{aligned} & \operatorname{\mathbf{iter}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} &&\to x.w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}&:=\!\!\begin{aligned} &\operatorname{\mathbf{rec}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} \rule{1ex}{0.5pt} &&\to x.w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}\end{align*}

The only cost of this encoding of

![]() $\operatorname{\mathbf{case}}$

and

$\operatorname{\mathbf{case}}$

and

![]() $\operatorname{\mathbf{iter}}$

is an unused variable binding, which is easily optimized away. In practice, this encoding of iteration will perform exactly the same as if we had taken

$\operatorname{\mathbf{iter}}$

is an unused variable binding, which is easily optimized away. In practice, this encoding of iteration will perform exactly the same as if we had taken

![]() $\operatorname{\mathbf{iter}}$

ation as a primitive.

$\operatorname{\mathbf{iter}}$

ation as a primitive.

While it is less obvious, going the other way is still possible. It is well known that primitive recursion can be encoded as a macro-expansion of iteration using pairs. The usual macro-expansion in System T is:

\begin{align*} \begin{aligned} & \operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}} \\ &\quad \begin{alignedat}{2} &\{~ \operatorname{zero} &&\to N \\ &\mid \operatorname{succ} x &&\to y. N' \} \end{alignedat} \end{aligned} &:= \begin{aligned} &\operatorname{snd}~ ( \operatorname{\mathbf{iter}} M \operatorname{\mathbf{as}} \\ &\qquad\quad \begin{alignedat}{2} &\{~ \operatorname{zero} &&\to (\operatorname{zero}, N) \\ &\mid \operatorname{succ} &&\to (x,y).\, (\operatorname{succ} x, N') \} ) \end{alignedat} \end{aligned}\end{align*}

\begin{align*} \begin{aligned} & \operatorname{\mathbf{rec}} M \operatorname{\mathbf{as}} \\ &\quad \begin{alignedat}{2} &\{~ \operatorname{zero} &&\to N \\ &\mid \operatorname{succ} x &&\to y. N' \} \end{alignedat} \end{aligned} &:= \begin{aligned} &\operatorname{snd}~ ( \operatorname{\mathbf{iter}} M \operatorname{\mathbf{as}} \\ &\qquad\quad \begin{alignedat}{2} &\{~ \operatorname{zero} &&\to (\operatorname{zero}, N) \\ &\mid \operatorname{succ} &&\to (x,y).\, (\operatorname{succ} x, N') \} ) \end{alignedat} \end{aligned}\end{align*}

The trick to this encoding is to use

![]() $\operatorname{\mathbf{iter}}$

to compute both a reconstruction of the number being iterated upon (the first component of the iterative result) alongside the desired result (the second component). Doing both at once gives access to the predecessor in the

$\operatorname{\mathbf{iter}}$

to compute both a reconstruction of the number being iterated upon (the first component of the iterative result) alongside the desired result (the second component). Doing both at once gives access to the predecessor in the

![]() $\operatorname{succ}$

case, which can be extracted from the first component of the previous result (given by the variable x in the pattern match (x,y)).

$\operatorname{succ}$

case, which can be extracted from the first component of the previous result (given by the variable x in the pattern match (x,y)).

To express this encoding in the abstract machine, we need to extend it with pairs, which look like (Wadler, Reference Wadler2003):

In the syntax of the abstract machine, the analogous encoding of a

![]() $\operatorname{\mathbf{rec}}$

continuation as a macro-expansion looks like this:

$\operatorname{\mathbf{rec}}$

continuation as a macro-expansion looks like this:

\begin{align*}\begin{aligned} &\operatorname{\mathbf{rec}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} x &&\to y.w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}&:=\begin{aligned} &\operatorname{\mathbf{iter}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to (\operatorname{zero}, v) \\ \mid& \operatorname{succ} &&\to (x,y). (\operatorname{succ} x, w) \} \end{alignedat} \\ &\operatorname{\mathbf{with}}{} \operatorname{snd} E\end{aligned}\end{align*}

\begin{align*}\begin{aligned} &\operatorname{\mathbf{rec}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to v \\ \mid& \operatorname{succ} x &&\to y.w \} \end{alignedat} \\ &\operatorname{\mathbf{with}} E\end{aligned}&:=\begin{aligned} &\operatorname{\mathbf{iter}} \begin{alignedat}[t]{2} \{& \operatorname{zero} &&\to (\operatorname{zero}, v) \\ \mid& \operatorname{succ} &&\to (x,y). (\operatorname{succ} x, w) \} \end{alignedat} \\ &\operatorname{\mathbf{with}}{} \operatorname{snd} E\end{aligned}\end{align*}

Since the inductive case w might refer to both the predecessor x and the recursive result for the predecessor (named y), the two parts must be extracted from the pair returned from iteration. Here we express this extraction in the form of pattern matching, which is shorthand for:

Note that the recursor continuation is tasked with passing its final result to E once it has finished. In order to give this same result to E, the encoding has to extract the second component of the final pair before passing it to E, which is exactly what

![]() $\operatorname{snd} E$

expresses.

$\operatorname{snd} E$

expresses.

Unfortunately, this encoding of recursion is not always as efficient as the original. If the recursive parameter y is never used (such as in the

![]() $\mathit{pred}$

function), then

$\mathit{pred}$

function), then

![]() $\operatorname{\mathbf{rec}}$

can provide an answer without computing the recursive result. However, when encoding

$\operatorname{\mathbf{rec}}$

can provide an answer without computing the recursive result. However, when encoding

![]() $\operatorname{\mathbf{rec}}$

with

$\operatorname{\mathbf{rec}}$

with

![]() $\operatorname{\mathbf{iter}}$

, the result of the recursive value must always be computed before an answer is seen, regardless of whether or not y is needed. As such, redefining

$\operatorname{\mathbf{iter}}$

, the result of the recursive value must always be computed before an answer is seen, regardless of whether or not y is needed. As such, redefining

![]() $\mathit{pred}$

using

$\mathit{pred}$

using

![]() $\operatorname{\mathbf{iter}}$

in this way changes it from a constant time (O(1)) to a linear time (O(n)) function. Notice that this difference in cost is only apparent in call-by-name, which can be asymptotically more efficient when the recursive y is not needed to compute N’, as in

$\operatorname{\mathbf{iter}}$

in this way changes it from a constant time (O(1)) to a linear time (O(n)) function. Notice that this difference in cost is only apparent in call-by-name, which can be asymptotically more efficient when the recursive y is not needed to compute N’, as in

![]() $\mathit{pred}$

. In call-by-value, the recursor must descend to the base case anyway before the incremental recursive steps are propagated backward. That is to say, the call-by-value

$\mathit{pred}$

. In call-by-value, the recursor must descend to the base case anyway before the incremental recursive steps are propagated backward. That is to say, the call-by-value

![]() $\operatorname{\mathbf{rec}}$

has the same asymptotic complexity as its encoding via

$\operatorname{\mathbf{rec}}$

has the same asymptotic complexity as its encoding via

![]() $\operatorname{\mathbf{iter}}$

.

$\operatorname{\mathbf{iter}}$

.

3.6 Types and correctness

We can also give a type system directly for the abstract machine, as shown in Fig. 6. This system has judgments for assigning types to terms as usual:

![]() $\Gamma \vdash v : A$

says v produces an output of type A. In addition, there are also judgments for assigning types to coterms (

$\Gamma \vdash v : A$

says v produces an output of type A. In addition, there are also judgments for assigning types to coterms (

![]() $\Gamma \vdash e \div A$

says e consumes an input of type A) and commands (

$\Gamma \vdash e \div A$

says e consumes an input of type A) and commands (

![]() $\Gamma \vdash c \ $

says c is safe to compute, and does not produce or consume anything).

$\Gamma \vdash c \ $

says c is safe to compute, and does not produce or consume anything).

Fig. 6. Type system for the uniform, recursive abstract machine.

This type system ensures that the machine itself is type safe: well-typed, executable commands don’t get stuck while in the process of computing a final state. For our purposes, we will consider “programs” to be commands c with just one free covariable (say

![]() $\alpha$

), representing the initial, top-level continuation expecting a natural number as the final answer. Thus, well-typed executable commands will satisfy

$\alpha$

), representing the initial, top-level continuation expecting a natural number as the final answer. Thus, well-typed executable commands will satisfy

![]() $\alpha\div\operatorname{Nat} \vdash c \ $

. The only final states of these programs have the form

$\alpha\div\operatorname{Nat} \vdash c \ $

. The only final states of these programs have the form

![]() $\langle {\operatorname{zero}} |\!| {\alpha} \rangle$

, which sends 0 to the final continuation

$\langle {\operatorname{zero}} |\!| {\alpha} \rangle$

, which sends 0 to the final continuation

![]() $\alpha$

, or

$\alpha$

, or

![]() , which sends the successor of some V to

, which sends the successor of some V to

![]() $\alpha$

. But the type system ensures more than just type safety: all well-typed programs will eventually terminate. That’s because

$\alpha$

. But the type system ensures more than just type safety: all well-typed programs will eventually terminate. That’s because

![]() $\operatorname{\mathbf{rec}}$

-expressions, which are the only form of recursion in the language, always decrement their input by 1 on each recursive step. So together, every well-typed executable command will eventually (termination) reach a valid final state (type safety).

$\operatorname{\mathbf{rec}}$

-expressions, which are the only form of recursion in the language, always decrement their input by 1 on each recursive step. So together, every well-typed executable command will eventually (termination) reach a valid final state (type safety).

Theorem 3.2 (Type safety & Termination of Programs). For any command c of the recursive abstract machine, if

![]() $\alpha\div\operatorname{Nat} \vdash c \ $

then

$\alpha\div\operatorname{Nat} \vdash c \ $

then

![]() $c \mathrel{\mapsto\!\!\!\!\to} \langle {\operatorname{zero}} |\!| {\alpha} \rangle$

or

$c \mathrel{\mapsto\!\!\!\!\to} \langle {\operatorname{zero}} |\!| {\alpha} \rangle$

or

![]() $c \mathrel{\mapsto\!\!\!\!\to} \langle {\operatorname{succ} V} |\!| {\alpha} \rangle$

for some V.

$c \mathrel{\mapsto\!\!\!\!\to} \langle {\operatorname{succ} V} |\!| {\alpha} \rangle$

for some V.

The truth of this theorem follows directly from the latter development in Section 6, since it is a special case of Theorem 6.14.

Intermezzo 3.3 Since our abstract machine is based on the logic of Gentzen’s sequent (Gentzen, 1935), the type system in Fig. 6 too can be viewed as a term assignment for a particular sequent calculus. In particular, the statement

![]() $v : A$

corresponds to a proof that A is true. Dually

$v : A$

corresponds to a proof that A is true. Dually

![]() $e \div A$

corresponds to a proof that A is false, and hence the notation, which can be understood as a built-in negation

$e \div A$

corresponds to a proof that A is false, and hence the notation, which can be understood as a built-in negation

![]() $-$

in

$-$

in

![]() $e : -A$

. As such, the built-in negation in every

$e : -A$

. As such, the built-in negation in every

![]() $e \div A$

(or

$e \div A$

(or

![]() $\alpha \div A$

) can be removed by swapping between the left- and right-hand sides of the turnstyle (

$\alpha \div A$

) can be removed by swapping between the left- and right-hand sides of the turnstyle (

![]() $\vdash$

), so that

$\vdash$

), so that

![]() $e \div A$

on the right becomes

$e \div A$

on the right becomes

![]() $e : A$

on the left, and

$e : A$

on the left, and

![]() $\alpha \div A$

on the left becomes

$\alpha \div A$

on the left becomes

![]() $\alpha : A$

on the right. Doing so gives a conventional two-sided sequent calculus as (Ariola et al., 2009; Downen & Ariola, 2018b), where the rules labeled L with conclusions of the form

$\alpha : A$

on the right. Doing so gives a conventional two-sided sequent calculus as (Ariola et al., 2009; Downen & Ariola, 2018b), where the rules labeled L with conclusions of the form

![]() $x_i : B_i, \alpha_j \div C_j \vdash e \div A$

correspond to left rules of the form

$x_i : B_i, \alpha_j \div C_j \vdash e \div A$

correspond to left rules of the form

![]() $x_i:B_i \mid e : A \vdash \alpha_j:C_j$

in the sequent calculus. In general, the three forms of two-sided sequents correspond to these three different typing judgments used here (with

$x_i:B_i \mid e : A \vdash \alpha_j:C_j$

in the sequent calculus. In general, the three forms of two-sided sequents correspond to these three different typing judgments used here (with

![]() $\Gamma$

rearranged for uniformity):

$\Gamma$

rearranged for uniformity):

\begin{align*} x_i : B_i, \overset{i}\dots, \alpha_j \div C_j, \overset{j}\dots \vdash v : A \end{align*}

corresponds to

\begin{align*} x_i : B_i, \overset{i}\dots, \alpha_j \div C_j, \overset{j}\dots \vdash v : A \end{align*}

corresponds to

\begin{align*} x_i : B_i, \overset{i}\dots \vdash v : A \mid \alpha_j : C_j, \overset{j}\dots \end{align*}

.

\begin{align*} x_i : B_i, \overset{i}\dots \vdash v : A \mid \alpha_j : C_j, \overset{j}\dots \end{align*}

. \begin{align*} x_i : B_i, \overset{i}\dots, \alpha_j \div C_j, \overset{j}\dots \vdash e \div A \end{align*}

corresponds to

\begin{align*} x_i : B_i, \overset{i}\dots, \alpha_j \div C_j, \overset{j}\dots \vdash e \div A \end{align*}

corresponds to

\begin{align*} x_i : B_i, \overset{i}\dots \mid e : A \vdash \alpha_j : C_j, \overset{j}\dots \end{align*}

.

\begin{align*} x_i : B_i, \overset{i}\dots \mid e : A \vdash \alpha_j : C_j, \overset{j}\dots \end{align*}

. \begin{align*} x_i : B_i, \overset{i}\dots, \alpha_j \div C_j, \overset{j}\dots \vdash c \ \end{align*}

corresponds to

\begin{align*} x_i : B_i, \overset{i}\dots, \alpha_j \div C_j, \overset{j}\dots \vdash c \ \end{align*}

corresponds to

\begin{align*} c : (x_i : B_i, \overset{i}\dots \vdash \alpha_j : C_j, \overset{j}\dots) \end{align*}

.

\begin{align*} c : (x_i : B_i, \overset{i}\dots \vdash \alpha_j : C_j, \overset{j}\dots) \end{align*}

.

4 Corecursion in an abstract machine

Instead of coming up with an extension of System T with corecursion and then define an abstract machine, we start directly with the abstract machine which we obtain by applying duality. As a prototypical example of a coinductive type, we consider infinite streams of values, chosen for their familiarity (other coinductive types work just as well), which we represent by the type

![]() $\operatorname{Stream} A$

, as given in Fig. 7.

$\operatorname{Stream} A$

, as given in Fig. 7.

Fig. 7. Typing rules for streams in the uniform, (co)recursive abstract machine.

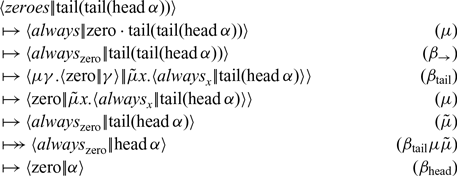

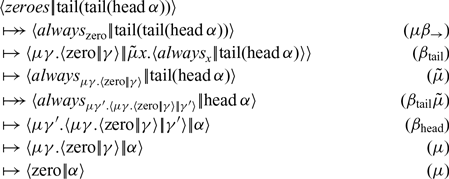

The intention is that

![]() $\operatorname{Stream} A$

is roughly dual to

$\operatorname{Stream} A$

is roughly dual to

![]() $\operatorname{Nat}$

, and so we will flip the roles of terms and coterms belonging to streams. In contrast with

$\operatorname{Nat}$

, and so we will flip the roles of terms and coterms belonging to streams. In contrast with

![]() $\operatorname{Nat}$

, which has constructors for building values,

$\operatorname{Nat}$

, which has constructors for building values,

![]() $\operatorname{Stream} A$

has two destructors for building covalues. First, the covalue

$\operatorname{Stream} A$

has two destructors for building covalues. First, the covalue

![]() $\operatorname{head} E$

(the base case dual to

$\operatorname{head} E$

(the base case dual to

![]() $\operatorname{zero}$

) projects out the first element of its given stream and passes its value to E. Second, the covalue

$\operatorname{zero}$

) projects out the first element of its given stream and passes its value to E. Second, the covalue

![]() $\operatorname{tail} E$

(the coinductive case dual to

$\operatorname{tail} E$

(the coinductive case dual to

![]() $\operatorname{succ} V$

) discards the first element of the stream and passes the remainder of the stream to E. The corecursor is defined by dualizing the recursor, whose general form is:

$\operatorname{succ} V$

) discards the first element of the stream and passes the remainder of the stream to E. The corecursor is defined by dualizing the recursor, whose general form is:

Notice how the internal seed V corresponds to the return continuation E. In the base case of the recursor, term v is sent to the current value of the continuation E. Dually, in the base case of the corecursor, the coterm e receives the current value of the internal seed V. In the recursor’s inductive case, y receives the result of the next recursive step (i.e., the predecessor of the current one), whereas in the corecursor’s coinductive case,

![]() $\gamma$

sends the updated seed to the next corecursive step (i.e., the tail of the current one). The two cases of the recursor match the patterns

$\gamma$

sends the updated seed to the next corecursive step (i.e., the tail of the current one). The two cases of the recursor match the patterns

![]() $\operatorname{zero}$

(the base case) and

$\operatorname{zero}$

(the base case) and

![]() $\operatorname{succ} x$

(the inductive case). Analogously, the corecursor matches against the two possible copatterns: the base case is

$\operatorname{succ} x$

(the inductive case). Analogously, the corecursor matches against the two possible copatterns: the base case is

![]() $\operatorname{head}\alpha$

, and the coinductive case is

$\operatorname{head}\alpha$

, and the coinductive case is

![]() $\operatorname{tail}\beta$

. So the full form of the stream corecursor is:

$\operatorname{tail}\beta$

. So the full form of the stream corecursor is:

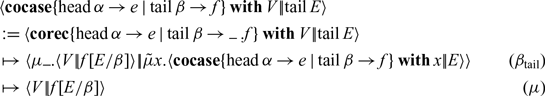

The uniform abstract machine is given in Fig. 8, where we highlight the extensions. Note how the corecursor generates (on the fly) the values of the stream using V as an incremental accumulator or seed, saving the progress made through the stream so far. In particular, the base case

![]() $\operatorname{head}\alpha \to e$

matching the head projection just passes the accumulator to e, which (may) compute the current element and send it to

$\operatorname{head}\alpha \to e$