1. Introduction

Accessing health information has never been easier. In the European Union (EU), the share of households with internet access is 90% (Eurostat, 2020). Internet users can be estimated at more than half the world population (World Bank, 2017). More than 1 billion health-related queries are made daily on Google (Murphy, 2019).

Similarly, it has never been easier to publish content online: either on individual websites or on social media platforms, where billions of people are registered to share and comment on user-generated content. Speech has never been cheaper (Hasen, 2017), which means that there have never been as many people speaking to an audience at the same time. With online virality being triggered by feelings of anxiety, practical value and interest (Berger and Milkman, 2013), the increased spread of false information on health topics should be an unsurprising consequence of the current digital landscape. For example, the top 100 health stories published in 2018 were shared 24 million times, reaching billions of readers (Teoh, 2018).

The COVID-19 pandemic triggered an infodemic (Ahmed et al., 2020), described by the World Health Organization (WHO) as an abundance of information, some accurate and some not, in digital and physical environments during a disease outbreak (WHO, 2022). The COVID-19 infodemic grew stronger on the back of multiple social media platforms (Banerjee and Meena, 2021), such as Facebook, YouTube, Instagram (Quinn et al., 2021) and Twitter (Massaro et al., 2021). These platforms' competition for our attention in the past few decades (Wu, 2016) made them central hubs for information flow – and therefore the places where misinformation flourishes the most.

COVID-19-related misinformation created visible and measurable health consequences, from harmful self-medication to mental health problems (Tasnim et al., 2020), to heightened concerns around vaccine hesitancy (Razai et al., 2021). Social media amplified rumours and good intentions based on false information about the origins, causes, effects and solutions for the virus. COVID-19 misinformation stemmed mostly from reconfigurations of actual information, with less of it being entirely fabricated (Brennen et al., 2021).

To tackle the infodemic, social media platforms adopted and enforced stricter content rules, from labelling to nudging, blocking monetisation on certain topics and outright erasing and banning content and accounts.

Researchers have called on governments to intervene further by creating appropriate legislation that can help prevent the spread of misinformation (Cuan-Baltazar et al., 2020). Public pressure for action has been mounting (Walker, 2020).

Based on existing state interventions, two models of legislative approach can be discerned. One targets content deemed ‘illegal’ by establishing stricter rules for social media platforms, forcing them to block or delete it within a short time frame. This is the case, for example, of Germany and its Network Enforcement Act (Wagner et al., 2020).

The other model targets ‘harmful content’, which may include content that is fake but not illegal, provided it can cause harm. This is the case of Russia and its Law on Information, Information Technologies and Protection of Information (Stanglin, 2019).

In both cases, as well as in other government attempts to address the infodemic problem, it becomes apparent that regulating misinformation from a state's perspective is not without perils and always involves some form of speech regulation, censure and truth-monitoring. In the context of a pandemic, however, these concerns may often clash with many essential public health strategies. Misinformation hinders people's perception of the risks and the rules involved in dealing with a pandemic. This can have a major impact on the health and safety of populations, which naturally raises legal and ethical concerns, some of which we aim to address in this paper.

As such, we will begin by examining briefly the health misinformation scenario before COVID-19. We will then look at how the COVID-19 infodemic introduced a shift in the discussion around the topic of misinformation and how digital intermediaries responded to it.

We will examine in more detail the balance to be struck between a sound public policy against misinformation and the importance of protecting the freedom of the press, specifically in the context of the case law of the European Court of Human Rights.

Finally, we will look at the ethical dimension of this problem, with the goal of providing some clarity on what is at stake.

2. Health misinformation before COVID-19

When the Oxford Dictionary elected ‘post-truth’ as word of the year, in 2016, it meant to reflect on 12 months of growing public distrust of any information provided by the establishment, including politicians, mainstream media and big corporations, ushered in by the American election and Brexit (BBC News, 2016).

Similarly, the Collins English Dictionary, in 2017, chose as word of the year the term ‘fake news’ (BBC News, 2017), a term that has by now lost its original meaning as a proxy for false information and has become instead a label to discredit any politically uncomfortable or inconvenient news.

Mentioning these two anecdotes has the sole purpose of establishing the point in time where misinformation – an old phenomenon by any account (Mansky, 2018) – became a mainstream concern.

We believe, as others have pointed out, that ‘fake news’, as a term, should be retired from scientific discourse, due to its now latent ambiguity (Sullivan, 2017). In fact, ‘fake news’ has been by now widely adopted in public discourse to refer to any news that casts a negative light on a subject. Any news item has become easily dismissible by labelling it ‘fake news’, regardless of the accuracy or truthfulness of the reporting.

In this paper, the terms ‘disinformation’ and ‘misinformation’ (stricto sensu) will be used to refer, respectively, to incorrect and false information spread with, or without, a deceitful intention. As ‘intention’ is, in most cases, hard to ascertain, ‘misinformation’ (lato sensu) will be the preferred term to refer to the larger phenomenon of spreading false information, regardless of intention.

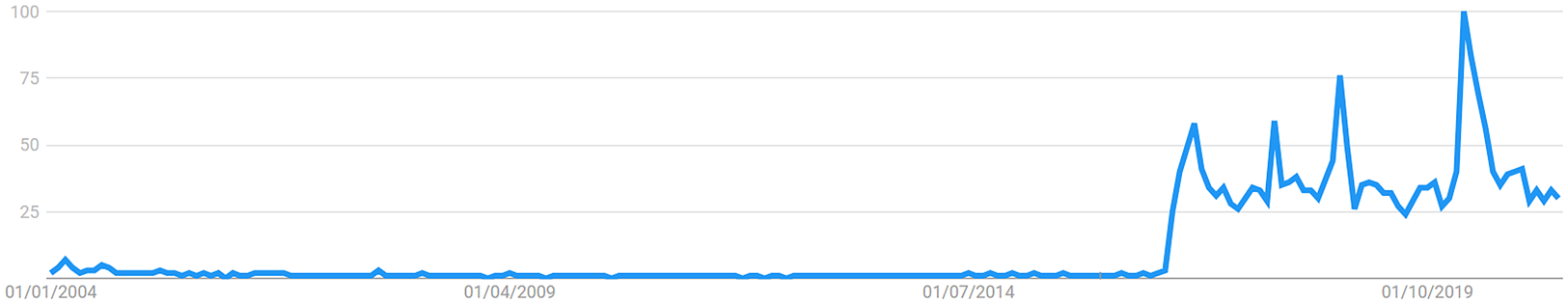

However, the term ‘fake news’ retains its usefulness for certain purposes. For example, ‘fake news’ can be used as a proxy to ascertain mainstream interest or attention on the larger topic of misinformation. In fact, and confirming the anecdotal evidence introduced by the abovementioned ‘word of the year’ awards, an analysis of the popularity of the term ‘fake news’ on Google Trends – a tool developed by Google that enables users to search and compare the popularity of a certain ‘search query’ in a certain period (Google Support, 2020) – confirms that the period covering the end of 2016 and the beginning of 2017 is when the term became consistently more popular.

Figure 1 is taken from a Google Trends search for the term ‘fake news’, across all categories, worldwide, from 2004 (the earliest possible date) to the most recent date (March 2021).

Figure 1. Google Trends search for the term ‘fake news’, across all categories, worldwide, from 2004 (the earliest possible date) to the most recent date.

The numbers on the y-axis represent, on a scale from 0 to 100, the search interest relative to the highest point on the chart for the selected region and time. A value of 100 is the peak popularity of the term.
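The rescaling described above can be illustrated with a minimal sketch. It assumes only what the text states, namely that each value is expressed relative to the highest point in the selected window; how Google derives the underlying figures is not described here, and the function name `relative_interest` and the sample numbers are hypothetical, introduced purely for illustration.

```python
def relative_interest(raw_values):
    """Rescale a series so that its highest point becomes 100.

    Illustrative only: mirrors the 0-100 relative scale described in the
    text, not Google's actual processing of search data.
    """
    peak = max(raw_values)
    if peak == 0:
        # No interest anywhere in the window: every point stays at 0.
        return [0 for _ in raw_values]
    return [round(100 * value / peak) for value in raw_values]


# Hypothetical monthly figures, invented for this example.
sample = [4, 20, 40, 10]
print(relative_interest(sample))  # [10, 50, 100, 25]
```

Because every point is divided by the peak of the chosen window, a value of 50 means half the peak interest, not any absolute number of searches; changing the region or time range changes the peak and therefore every other value on the chart.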

As per this graph, and despite the sudden increase in relative popularity of the term during and right after the Brexit Referendum and the 2016 US Presidential Election, its peak popularity came at a later date.

That date was March 2020, the month when the WHO declared COVID-19 a pandemic (BBC News, 2020), and the whole world finally acknowledged that a global life-changing event was taking place.

Before that date, research around online health misinformation was largely focused on health-related conspiracy theories, such as those promoting vaccine hesitancy, deemed by the WHO as one of the top 10 threats to global health in 2019 (Wang et al., 2019).

There was some general research around health misinformation signalling what was already a problem with sizable dimensions, albeit less visible. A study found that 40% of the health-related stories published between 2012 and 2017 in Poland contained falsehoods (Waszak et al., 2018). Misinformation extended to a wide range of topics, including the health effects of alcohol consumption, tobacco and marijuana smoking, eating habits, obesity and physical exercise.

One of the most visible faces of health misinformation before COVID-19 was vaccine hesitancy, which had already been signalled by the WHO as one of the main threats to global health (World Health Organisation, 2019). An article published in The Lancet by Wakefield et al. (1998), claiming a connection existed between the measles, mumps and rubella vaccine and autism in young children, sparked what came to be known as the anti-vaccination movement. Even though the paper was later discredited by the UK General Medical Council's Fitness to Practise Panel on 28 January 2010, and retracted by The Lancet shortly after, media reported extensively on the topic following publication of the first report, and continued to do so even after the emergence of new studies discrediting the initial one (BBC News, 1998; Fraser, 2001).

The endorsement of anti-vaccination sentiment by celebrities may have further contributed to the increasing popularity of the idea that vaccines were dangerous (Martinez-Berman et al., 2020) and a conspiracy was afoot to vaccinate the world's population for evil purposes.

Other more recent epidemics, such as Zika (Carey et al., 2020) and Ebola (Vinck et al., 2019), also elicited research around the impact of online health misinformation. Epidemics are fertile ground for misinformation as they tend to generate an abundance of information, produced quickly based on real-time events, without the benefit of time. Populations are usually highly invested in the topic, due to its sensitivity and urgency, and are more prone to believe and share false content (Salvi et al., 2021).

Nevertheless, widespread health misinformation with large-scale consequences is a recent phenomenon, which explains the lack of specific initiatives designed to tackle it. The COVID-19 infodemic changed that.

3. The COVID-19 infodemic

As articulated above, COVID-19 signified an important shift in legal debate surrounding both disinformation and misinformation. The ability for false and misleading information online to undermine public health and hamper state responses has exacerbated anxieties surrounding the veracity of digital information. Since the outbreak, disinformation and misinformation have proliferated online. Dubious stories about military ‘lockdowns’ and falsities surrounding bogus potential cures have amplified socio-legal anxieties surrounding digital platforms. This led the WHO to classify the deluge of rapidly spread falsehoods as an ‘infodemic’ (World Health Organisation, 2020). During the height of the pandemic, EU institutions such as the European Commission cautioned that ‘disinformation in the health space’ is now ‘thriving’, and urged citizens to avoid spreading ‘unverified’ claims online (European Commission, 2020a, 2020b).

The COVID-19 infodemic has spurred new challenges in the global fight against disinformation and misinformation, particularly from a public health perspective. False health narratives and their amplification online can ‘engender ill-informed, dangerous public decisions’ (Nguyen and Catalan, 2020). While pre-eminent sources of health misinformation during the pandemic raise novel challenges, the techniques used to spread falsehoods predate COVID-19. Malicious actors who target voters with predatory disinformation campaigns leveraged similar techniques to stifle responses to the pandemic. In this connection, there is a crossover between health misinformation in the pandemic and broader campaigns to influence elections that have attracted scrutiny in recent years. Vaccine disinformation feeds on pre-existing ‘QAnon’ conspiracies (Santos Rutschman, 2020). This acceleration of misinformation during the pandemic has led to renewed and intensified scrutiny of the legislative responses to disinformation from European institutions (Vériter et al., 2020). The current environment has also raised fresh concerns surrounding the liability of technological intermediaries for misinformation. As highlighted, not all harmful content is unlawful, and this is a quagmire that is directly linked to the novel challenges surrounding contemporary disinformation. This is discussed below. Notwithstanding the new challenges that arose during the infodemic, it must be pointed out that the prevailing conspiracies surrounding the origins of COVID-19, and misinformation surrounding vaccines, ultimately reflect a new variant of an existing problem. In the EU, the European Commission has pursued a legislative agenda on disinformation since 2018.
In light of the evolving nature of health misinformation in the midst of the pandemic, it is both timely and necessary to examine the novel aspects of health misinformation, and whether the current legal framework for disinformation is fit for purpose to meet this evolving challenge.

3.1 A new variant of an old problem

When considering the infodemic and its relevance to the current pandemic, this concept refers not only to the factual veracity of information but also to the overabundance of information. This is directly linked to the powerful role of technological intermediaries that have now become important vectors of democratic communication. The distortion of information to deceive citizens can be traced back to the invention of the printing press. However, new technologies change how citizens receive information in a public health context. While the concept of an infodemic is nothing new, the scale and speed of information distribution on dominant technological platforms mean that the contemporary manifestation raises novel challenges. This is particularly relevant when considering misinformation related to COVID-19 vaccines. Public scepticism of vaccinations long predates the contemporary infodemic. As outlined above, this is particularly acute when examining misinformation surrounding the debunked link between the MMR vaccine and autism (DeStefano and Shimabukuro, 2019). Just as spurious vaccine claims predate COVID-19, the adverse effects of such conspiracies have been widely documented in a public health context. Contemporary outbreaks of measles in concentrated areas have been attributed to vaccine scepticism, while public scepticism surrounding the Zika vaccine hampered public health responses in areas where the virus was most pronounced (Dredze et al., 2016; Dyer, 2017).

While current misinformation surrounding COVID-19 is by no means unprecedented, the information distribution system of digital platforms means that citizens who are predisposed to consuming misinformation can do so more easily. This has been examined through an abundance of literature that probes how technology transforms political communication into a process that is automated, personalised and algorithmically driven. This is intrinsically linked to broader changes in how audiovisual communications are transmitted. Broadcasting itself has evolved from being a medium ‘where advertising is placed in order to reach as much of the population as possible’ to a medium which is ‘increasingly dependent on the ability to target effectively’ (Lovett and Peress, 2010). In this connection, the rise of ‘disintermediated access to information’ has paved the way for information distribution to become ‘increasingly personalized’, both in terms of how messages are conveyed and their content (Del Vicario et al., 2017). This precarious digital environment has elicited particular interest as governmental and scientific stakeholders attempt to inform citizens about the efficacy of COVID-19 vaccines. The rapid speed and efficiency of digital communication also make it difficult for researchers to keep track of the ever-expanding scope of conspiracy theories.

Such conspiratorial narratives are broad in scope, ranging from unsubstantiated claims that the ‘pandemic was engineered to control society or boost hospital profits’ to claims that the risks of vaccines outweigh their potential benefits (Tollefson, 2021). The WHO has raised concerns surrounding how the ‘overabundance of information’ related to vaccines can be spread ‘widely and at speed’ online (World Health Organisation, 2021). In spite of this necessary recognition, the WHO has advised citizens who encounter false claims to report them to the social media companies on which they are found. For this reason, it is necessary to understand how effectively major technological intermediaries are currently responding to the ever-expanding plethora of misinformation claims in this critical public health area. In auditing this coordinated response, the existing legal framework and its limitations must be dissected.

3.2 Responses by digital intermediaries

In response to the proliferation of health misinformation during the pandemic, the reaction from key technological stakeholders has been partially satisfactory but ultimately inconsistent. Their particular position as special-purpose sovereigns (Balkin, 2018) with regulatory powers over major information platforms demanded decisive responses. Anecdotal instances of proactive measures taken by platforms have been documented. For example, WhatsApp's message forwarding function was temporarily limited at the height of health misinformation during the outbreak. This was due to the efficient manipulation of the forwarding function as part of broader techniques to sow uncertainty and fuel mistrust in authoritative media sources across Europe (Elías and Catalan-Matamoros, 2020; Roozenbeek et al., 2020). As Ricard and Medeiros highlight, misinformation on WhatsApp was particularly ‘weaponised’ in Brazil, wherein malicious actors leveraged misinformation to ‘minimize the severity of the disease, discredit the social isolation measures intended to mitigate the course of the disease's spread and increase the distrust of public data’ (Ricard and Medeiros, 2020).

While independent measures to limit message forwarding are an example of proactive steps adopted by platforms in response to health misinformation, platforms in general have ultimately displayed a continued ‘reluctance to act recursively’ in the face of bogus claims during the pandemic (Donovan, 2020). The characterisation is unsurprising when assessing how the EU's intermediary liability regime treats misinformation as harmful but nonetheless lawful content. Unlike other well-established content categories such as terrorist content, child pornography and copyright infringement, misinformation is not unlawful. Accordingly, this means that platforms have far more discretion in adopting rules and implementing minimum safeguards to mitigate health misinformation as opposed to other categories of content that are unlawful. Due to this discretion, an argument often raised in debates on misinformation regulation is that such regulation could infringe constitutionally protected rights to freedom of expression (Craufurd Smith, 2019). This point is addressed in more detail below.

The quagmire of harmful but lawful content, and the inconsistent measures to address misinformation and disinformation more generally, have manifested through the piecemeal results of the current self-regulatory scheme in the EU. The European Commission has pushed the legislative agenda for disinformation since 2018. The most concrete development in this area has been the Commission's development of a self-regulatory Code of Practice on Disinformation. In a nutshell, digital signatories including Twitter, Facebook and Google adopt voluntary commitments to prevent the spread of false information on their platforms. While the Commission has praised some of the efforts by signatories, it has pointed out important flaws that are directly attributable to the inherent limitations of the self-regulatory model. For example, platforms vary widely in their definitions of paid-for content, and in their criteria for assessing the presence of inauthentic behaviour online. Moreover, many platforms have obscured the provision of relevant data to researchers in a manner that falls short of meeting ‘the needs of researchers for independent scrutiny’ (European Commission, 2020a, 2020b). Platforms that have developed ‘repositories of political ads’ can still unilaterally ‘alter or restrict’ repository access for researchers. This has led the Commission to criticise the ‘episodic and arbitrary’ manner in which platforms under the self-regulatory model have provided access to information that is relevant to identifying misinformation and disinformation. The limitations of this model, and the consequences of having an EU legislative framework that lacks cohesion and consistency, have been severely exposed by the proliferation of health misinformation during the pandemic.

Arguably driven by the shortcomings of the 2018 Code, the Commission published a revised Code in 2022 and has complemented this with binding obligations for Very Large Online Platforms in several provisions of the incoming Digital Services Act (DSA). It is highly likely that developments in the 2022 Code will encourage platforms to provide more granular detail surrounding their actions to combat misinformation. For example, the new Code includes more specific commitments surrounding how platforms adopt specific policies aimed at combatting deceptive content and how effective these policies are (European Commission, 2022). Moreover, the fact that many Code signatories will now be required under the DSA to identify and mitigate ‘significant systemic risks’ is likely to encourage more consistent efforts to combat health misinformation. Crucially, however, these new developments carry threats to freedom of expression. For example, the DSA leaves extensive discretion for platforms to define and mitigate risks but also may compel platforms to take down forms of misinformation that have been deemed illegal by national laws that do not uphold key European human rights standards surrounding the right to access information (Ó Fathaigh et al., 2021). Considering these developments, it is vital that policy makers understand the application of the right to freedom of expression in the public health context.

4. Right to health and freedom of speech

When considering how legislation can be used to insulate the public from health misinformation, the relevant legal safeguards to freedom of expression must be acknowledged and dissected (Noorlander, 2020). As outlined above, existing efforts to curtail false information online have been carried out through a self-regulatory model, and this has resulted in measures adopted by digital platforms that have been arbitrary and haphazard (Noorlander, 2020). As a consequence, there is growing pressure on governments and EU institutions to develop and enforce binding legal rules in this area (Radu, 2020). In light of this pressure, it is critical to understand how legislation in this area must navigate the protective legal contours of freedom of expression. As outlined above, concerns surrounding how misinformation legislation could cause a chilling effect on freedom of expression are particularly important to address because this type of harmful and misleading content is often not unlawful under EU or domestic laws.

European standards surrounding freedom of expression – and legitimate limitations to this right – must be derived from the framework of Article 10 of the European Convention on Human Rights (ECHR) and associated case law of the European Court of Human Rights (ECtHR). This framework not only informs national laws that affect the responsibilities of technological intermediaries but also influences EU fundamental rights protections through the EU Charter. This is an instrument that is used to inform the above-mentioned Code of Practice on Disinformation and the DSA.

It should be pointed out that under Article 10, there is no general obligation for speech to be truthful, nor is there any specific requirement that information and ideas must be imparted in an accurate and factual manner (McGonagle, 2017). It must also be highlighted that the ECHR is an instrument that is addressed to states and not directly to technological intermediaries. However, ECHR standards surrounding freedom of expression under Article 10 are crucial as a tool that can be used to inform national legislation in Europe that may impose responsibilities on platforms to remove – or otherwise obscure access to – health misinformation. Moreover, Article 10 of the ECHR is a protection that not only applies to national laws in all EU Member States but also informs EU disinformation policy surrounding intermediaries. Freedom of expression is not an unlimited right under the ECHR. Article 10 explicitly lays out scope for limitations within its first and second paragraphs. Importantly, Article 10(2) lays out criteria for when freedom of expression can be limited in line with numerous legitimate aims. One of the aims under this limitation clause is the ‘protection of health or morals’. The ECtHR has assessed the protection of public health as a justification for limiting freedom of expression. Through this aspect of the Court's commentary, important lessons on the scope for limiting freedom of expression on public health grounds can be extracted.

Threaded throughout the ECtHR's commentary on Article 10 is the principle that citizens must have access to information and ideas that are in the ‘public interest’. This principle stems from the Court's early jurisprudence. In Sunday Times vs The United Kingdom, the Court found an Article 10 violation for the first time. The applicant was a newspaper that had published articles documenting the birth defects associated with the prescription drug thalidomide, and the actions being litigated at the time by the families of affected children. At the time that the reporting was occurring, the manufacturers of the drug had entirely withdrawn thalidomide from the British market. The topic had also been the subject of extensive parliamentary debate in Britain at the time. In 1972, the Divisional Court of the Queen's Bench granted an injunction on the grounds that publication of a forthcoming article on the matter could amount to contempt of court. The ECtHR found that the granting of an injunction against the newspaper could not be regarded as having been an interference that was ‘necessary in a democratic society’. Crucially, the Court held that the public interest of open debate on the subject of the thalidomide drug outweighed the purported justification for the UK's interference with freedom of expression. A similar reasoning was applied in the more recent case of Open Door and Well Woman vs Ireland. Here, the ECtHR found a violation of freedom of expression under Article 10 when the Irish Supreme Court issued two injunctions against advocacy groups. Injunctions had been issued to prevent Open Door Counselling Ltd and the Dublin Well Woman Centre from providing women with information related to the provision of abortion services.
Although abortion was unlawful in Ireland at the time, the information did not concern the procurement of abortion services in Ireland, and instead focused on abortion services legally obtainable in other jurisdictions. The ECtHR disagreed with the Supreme Court's decision to uphold the injunctions, arguing that the restrictions were disproportionate to the aims sought, and constituted an unjustifiable impediment on the receipt of impartial and accurate health information.

While in the above decisions the ECtHR reasoned that citizens should have unrestricted access to information relevant to public health, the Court has also reasoned that freedom of expression can be limited by states on the grounds of protecting public health. In particular, the Strasbourg Court has explicitly rejected the idea that freedom of expression encompasses a right to disseminate false and misleading information that is veiled as legitimate medical and scientific information. In the admissibility decision of Vérités santé pratique Sarl vs France, the applicant was a company that published a health magazine. The magazine adopted a ‘critical approach to health matters’ and informed its readership on ‘alternative types of treatment’. The applicant had been denied renewal of its registration with the Joint Committee on Press Publications and Press Agencies (CPPAP). The refusal was based on the grounds that unverified medical information had been published in the magazine. Specifically, the magazine ‘discredited the conventional treatment given to patients with serious illnesses such as cancer or hypertension’. The ECtHR found the application manifestly ill-founded. Crucially, the Court reiterated that Article 10 does not guarantee ‘unlimited freedom of expression’ and that public health grounds can be used to justify certain restrictions, provided that restrictions satisfy the longstanding and robust requirements that the ECtHR has fleshed out in its rich Article 10 jurisprudence. That is, interference must be legally prescribed, in pursuit of a legitimate aim, and circumscribed in a manner that is necessary in a democratic society. Importantly, the Court has recognised that the legitimate aims under Article 10(2) can apply in contexts where online platforms may be held liable for identifiably harmful content.
For example, in the Grand Chamber decision of Delfi AS vs Estonia, the ECtHR not only accepted that a commercially operated online news portal could be held liable for user comments that harmed ‘the reputation and rights of others’ but also opined that harmful content posted online could cause more lasting damage. Thus, while the application of Article 10 and limitations to freedom of expression primarily affects public authorities, this right also has an important application to private entities that mediate public discourse and play a role in facilitating or restricting access to potentially harmful content.

The Court views domestic restrictions in line with the margin of appreciation doctrine. This means that states have a level of discretion on regulating communications, depending on the nature of the communications. Importantly, the Court has addressed this in the context of dubious public health communications. In the Grand Chamber decision of Mouvement Raëlien Suisse vs Switzerland, the Court accepted that restrictions imposed by Swiss authorities preventing the applicant company from continuing a billboard campaign had been justified and proportionate. The campaign involved the promotion of human cloning, sensual meditation and geniocracy. The Grand Chamber upheld the restriction on the billboard campaign, noting that it could be considered ‘commercial speech’ rather than a genuine contribution to debate in the public interest. In Palusinski vs Poland, the Court rejected the admissibility of an Article 10 application where the applicant had been convicted for publishing a book that enticed readers to consume certain narcotics. Finding that the book in question had effectively endorsed the use of certain narcotics, and that the penalty applied by the Polish authorities had not been disproportionate, the Court rejected the applicant's argument that a violation of freedom of expression had occurred.

As evidenced in these decisions, the ECtHR has explicitly accepted that freedom of expression can be limited, and that the protection of public health can be considered a legitimate aim for domestic authorities to limit freedom of expression. Importantly, however, any restrictions imposed must be prescribed by law, pursue a legitimate aim and be necessary in a democratic society. Legislation to curb health misinformation, including vaccine misinformation, must be justifiable and proportionately executed. If these important requirements are not satisfied, it is highly likely that regulatory efforts to flatten the curve of health misinformation will run contrary to freedom of expression.

5. The ethics of health misinformation regulation

The regulation of health misinformation has a complicated ethical dimension. There are two competing interests – to ensure that individuals are not influenced to make decisions that are not in their best interests and which they might not otherwise have made, and to ensure that information can be shared without the threat of it being censored merely because some find it undesirable or unpalatable. It is very important to consider how these interests should be balanced as a matter of law, but it is just as important to consider how they are balanced as a matter of ethics. Whether a regulatory objective can be achieved is distinct from whether it should be achieved. As the analysis above made clear, human rights law generally supports the choice of governments to control the sharing of information in order to protect public health, yet some lawful public health regulations might still impose burdens that are ethically unacceptable in an enlightened democratic society.

Thus, it is important to examine the ethics of health misinformation regulation, and raise ethical concerns when they are warranted – failure to do so is an abdication of the responsibility borne by all professionals who work in the health field to contribute accurately to public health debates and highlight inadequacies where they might exist (O'Mathúna, Reference O'Mathúna2018; Robinson, Reference Robinson2018; Wu and McCormick, Reference Wu and McCormick2018). Moreover, since misinformation has become a tool in the political struggle to control social reality (Farkas and Schou, Reference Farkas and Schou2018), the question of how to combat the generation and dissemination of health misinformation has become a sensitive one that is imbued with political significance. The choice to regulate health misinformation or not is accompanied by an implicit political declaration, which can have real practical effects upon wider health policy. Thus, the matter of whether these choices are made ethically must be carefully considered, to avoid a wider degradation of the moral foundations of health policy.

Scholars have made persuasive arguments for employing a pragmatist ethical approach to the regulation of misinformation (Stroud, Reference Stroud2019), and we contend that these arguments apply equally well to the regulation of health misinformation. This is because preventing the spread of health misinformation is a ‘wicked problem’ (Head, Reference Head2008). It is complex, since there are multiple types and sources of health misinformation. It is ongoing, since health misinformation will persist as long as scientists continue to make advances in public health protection (Dubé et al., Reference Dubé, Vivion and MacDonald2015) and as long as there are vested interests in health policy. It is also resistant to being ‘solved’, not only because people are induced by confirmation bias to seek out evidence that confirms their beliefs when they are told that those beliefs are based on misinformation (Meppelink et al., Reference Meppelink, Smit, Fransen and Diviani2019), but also because media platforms and providers, while willing to work with governments to prevent the worst kinds of misinformation, will contest attempts to restrict their editorial independence (Schweikart, Reference Schweikart2018).

Designing a suitable response to a complex and nuanced problem such as how to regulate health misinformation requires an equally complex and nuanced ethical analysis. A pragmatist ethical approach – in which the key insights from major ethical theories are applied without dogmatically relying on any particular one – is most desirable in this situation. Applying any one ethical theory to the problem cannot be appropriate since the complexity of the issue means that such theories could easily be applied in different manners to reach contradictory conclusions.

From a consequentialist perspective, one could argue that regulating the provision of health information to the public may end up depriving the public of knowledge that is helpful in addition to removing information that is unhelpful, and that negative psychological, health and democratic outcomes may outweigh any benefits of regulation (Grill and Hansson, Reference Grill and Hansson2005). However, the fact that discussion of an issue might lead to negative consequences should not be a reason to avoid that discussion – for every instance of mistrust and stifling of democratic debate that could be raised, there is an opposing democratic gain to be made in raising awareness of issues that might otherwise have been hidden from public view (Brown, Reference Brown2019).

From a deontological perspective, it could be argued that by regulating false health information governments are manipulating the public (Rossi and Yudell, Reference Rossi and Yudell2012), interfering in the autonomy of individuals to engage with health information on their own terms, and disrespecting the intrinsic moral worth of the human capacity for rationality, which would be unacceptable. However, it is equally possible to argue the opposite: that regulating false health information facilitates autonomous decision-making (Ruger, Reference Ruger2018; Stroud, Reference Stroud2019).

From the perspective of virtue ethics and capability theory (Alexander, Reference Alexander2016), the regulation of health information to prevent the spread of misinformation could have the effect of limiting and restricting online and other freely accessible channels and technologies that increase health literacy and help to address health inequity (Kickbusch et al., Reference Kickbusch, Pelikan, Apfel and Tsouros2013) – the resulting restriction of access to health information generally would have a disproportionate effect upon disadvantaged populations, which would be inconsistent with virtuous behaviour, the promotion of human health capabilities and ideas of social justice. However, once again, the opposite argument could be made. Since governments are uniquely empowered to take actions to improve public health, refusing the opportunity to do so could be considered irresponsible (Rossi and Yudell, Reference Rossi and Yudell2012).

To produce a suitably nuanced ethical analysis of health misinformation, a pragmatist approach that draws upon multiple theories is necessary. A detailed exposition of such an approach is beyond the scope of this paper, and must be the subject of further detailed research. Here, we have the more modest ambition of highlighting the necessity of analysing the ethics of health misinformation regulation, and the challenges inherent in this analysis. We suggest a pragmatist approach; however, it is not the only multi-principled ethical framework that might be suitable. The five virtues of medical practitioners – trustworthiness, integrity, discernment, compassion and conscientiousness (Beauchamp and Childress, Reference Beauchamp and Childress2013) – may also offer a framework for ethical analysis that accommodates the complexity of health misinformation regulation. At the very least, proposed interventions should be scrutinised from multiple ethical perspectives to build up a holistic picture that policymakers should then use to decide whether, on balance, the intervention is ethically legitimate. The use of ethical evidence in this manner should be an equal part of the policymaking process, alongside legal and political assessments.

6. Conclusions

Misinformation in general, and health misinformation in particular, is a matter of genuine concern due to its political, social and individual implications. Directly and indirectly, instances of misinformation are credited with causing important events with tragic consequences. The COVID-19 infodemic, and the way it is believed to be impacting the success of public health policies such as the mandatory use of protective equipment, the practice of social distancing and mass vaccination, has likely contributed, and will continue to contribute, to avoidable deaths.

This context fosters understandable calls for broad State regulation of misinformation and of the digital intermediaries seen as its conduits. Such initiatives are ongoing, and legislative acts have been rolled out or announced in many countries and at the EU level. The goal of this paper was not to review particular legislative initiatives, or to assess the impact and efficacy of measures implemented by digital intermediaries, but to reflect on the high stakes involved in regulating health misinformation from both a legal and an ethical point of view.

Freedom of speech, for all its undeniable relevance, is not an unlimited right, and as we have shown, the ECtHR's case law provides a framework under which limitations based on public health considerations can be deemed acceptable. These limitations must be justifiable and proportionate to the purpose at hand. In times of crisis, such as during a pandemic, the proportionality of certain policies should be considered carefully.

The regulation of digital intermediaries in order to force them to take action on their users' content, as a way of implementing anti-health-misinformation policies, should also take into consideration the same checks and balances. Individuals' protections against abusive behaviour from digital intermediaries are still diminished, and State regulation of freedom of expression via such entities should not be used to achieve a result that would have been inadmissible if done directly.

Equally important when considering how to approach regulation of health misinformation are the ethical considerations that must support and inform responsible policymaking in this area. Public discourse on this topic is often polarised between free speech maximalists, who do not see restrictions as fair even for public health reasons, and regulation maximalists, who aim to eradicate misinformation even at the expense of some legitimate speech. It is of utmost importance to acknowledge the complexity of the ethical values at stake. In fact, most ethical theories can be used to defend both the imperative of regulating speech to avoid health misinformation and the imperative to protect speech by allowing health misinformation. This should warrant careful consideration when discussing potential avenues for regulation.

The wicked nature of this problem suggests that a regulatory silver bullet is unlikely to be found. Policymakers should acknowledge the complexity of this matter and avoid doing either too little at the expense of people's health and safety, or too much at the expense of our freedom of speech and our free access to information.

Although the goal of this article was not to distinguish and review potential policy pathways, our findings strongly suggest that solutions more focused on empowering individuals – such as media literacy initiatives, quality journalism, fact-checking and credibility indicators – are less likely to raise freedom of expression concerns (Marecos and Duarte, Reference Marecos, Duarte and De Abreu2022). Naturally, exploring these types of intervention does not negate or short-circuit the need to engage in ethical analysis. It may, however, ease some of the more acute ethical conflicts to the point that governments can be more confident that they are addressing health misinformation in a morally acceptable manner.