The Quality Assurance Group (QAG) was established in 2000 by the International Network of Agencies for Health Technology Assessment (INAHTA) (Reference Hailey1) to define and address issues concerning harmonization of the health technology assessment (HTA) methods used among INAHTA's fifty-seven public sector member agencies.

Although comprehensive standardization of methods is unlikely to be applicable to all types of HTA products, it could potentially reduce duplication of effort across member agencies. Existing HTA products could be identified and simply adapted to the local setting, and/or updated, rather than independently produced. Greater standardization in HTA methods, through the specification of desirable approaches to conducting different types of HTA, might facilitate this adaptation.

Harmonization efforts with respect to producing and adapting HTAs have been undertaken by other organizations, most notably by the member agencies of the European Network for Health Technology Assessment (EUnetHTA), some of which are members of INAHTA (Reference Kristensen, Lampe and Chase2;Reference Kristensen, Makela and Neikter3). Varying types of HTA products are recognized as part of the proposed harmonization process, but no formal definition has been applied to the different products. Instead, the focus has been on identifying and addressing key aspects in specific modules (e.g., safety domain, effectiveness domain, economic evaluation domain) and then applying or adapting modules as needed to meet local requirements (Reference Milne4).

There have been doubts raised with respect to how successful harmonization efforts have been to date. Hutton et al. (2008) have indicated that, although there is some degree of agreement between researchers and practitioners on the principles of HTA, there is still a lack of agreement on methodology details and evidence standards (Reference Hutton, Trueman and Facey5). Trueman et al. (2009), when comparing HTAs on drug-eluting stents across jurisdictions, found that “although there is a common core data set considered by most of the agencies, differences in the approach to HTA, heterogeneity of studies, and the limited relevance of research findings to local practice meant that the core data set had only limited influence on the resulting recommendations” (Reference Trueman, Hurry, Bending and Hutton6). Localizing factors (e.g., local delivery of health care) are always going to affect policy decisions, and so it would be surprising if harmonization in HTA resulted in a harmonization of decision making. What is concerning, however, is that the approaches to the HTA, that is, the methods used to evaluate the common core data set, could contribute to the lack of harmonization between HTAs.

HTAs are produced to be responsive to the decision maker and so the approach to the HTA will necessarily be affected by resource and time constraints. However, if it is clear at the outset that a full HTA, for example, will always include an assessment of the safety, effectiveness, economic evaluation, and organizational domains; that certain specific elements will be presented in each of those domains; and that these specific elements will be addressed to a minimum standard, then adaptation of modules from an HTA across jurisdictions and regions is more likely to occur.

During the 2000 Annual Meeting of INAHTA, it was determined that methodologies for producing HTA products varied among member organizations. The following year (2001), a draft checklist was presented that could help both HTA developers and the users of HTA (such as policy makers and individuals within other HTA organizations) to identify what methods had been used during the conduct of the HTA, and thus whether the content would meet their needs (Reference Hailey1). During a revision of this checklist in 2007, it became apparent that what might be considered a “rapid review” by some agencies would be classified as a “full HTA” by others, and that some agencies were calling mini-HTAs “rapid reviews,” and vice versa. The QAG agreed that, before the methods for performing HTAs could be internationally harmonized, an understanding of the types and components of HTA products performed by each member organization was required. Simply, the QAG sought to classify all HTA products so that like-products could be compared under the same appellation.

To assist this adaptation, a greater understanding of how different agencies are conceptualizing and producing HTAs and communicating the results of their HTAs to policy makers was needed. The first step to achieving this was to survey the INAHTA membership about the target audience, content and methods used to develop their different HTA products.

The ultimate aim would be to develop (i) some commonly accepted definitions for the different HTA products and (ii) benchmarks for different HTA products to achieve the QAG's goals of sharing knowledge, promoting collaboration, and reducing duplication of effort in HTA.

METHODS

The initial paper-based survey was drafted by one of the authors (TM) with input from the QAG and was disseminated in May 2010. In terms of the survey structure and content, twelve closed and open (free text) questions were used for each HTA product. Agencies were asked to identify all of their HTA products and to describe the methods and skills used to create them (Supplementary Table 1, which can be viewed online at http://dx.doi.org/10.1017/S0266462314000543). The core HTA domains, as defined by Busse et al. (Reference Busse, Orvain and Velasco7), were used to classify the content for each HTA product.

HTA products were categorized by two co-authors using a stepped approach, that is, first, according to the provided product label and, when that was not sufficiently explanatory, according to the purpose of the product as described by the agency. It was decided that the primary analysis would involve the most commonly produced HTA products across the agencies. These included full HTA reports, mini-HTAs, policy briefs, horizon scanning reports, and rapid reviews.

In 2013, a Web-based follow-up survey was constructed in Survey Monkey® and distributed to members. It consisted of twenty-two questions about the five common HTA product types (Supplementary Table 2, which can be viewed online at http://dx.doi.org/10.1017/S0266462314000543). Respondents were able to skip questions if they were not relevant. Descriptive analyses and graphs were generated using Excel 2007® (Microsoft™).

On the basis of the 2013 survey results, definitions were finalized for three of the product types (full HTA reports, mini-HTAs, and rapid reviews) and presented at the HTAi and INAHTA meetings in June 2013. A final three-question survey was circulated to member agencies seeking agreement with the proposed definitions (Supplementary Table 3, which can be viewed online at http://dx.doi.org/10.1017/S0266462314000543). Consensus was pre-defined as reaching agreement by more than 70 percent of respondents.
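The pre-defined consensus rule can be written as a one-line check. The sketch below is illustrative only: the function name and the agreement counts in the usage note are hypothetical, and only the "more than 70 percent" threshold comes from the text. Integer arithmetic is used so that exactly 70 percent is not counted as consensus.

```python
def consensus_reached(agree: int, respondents: int, threshold_percent: int = 70) -> bool:
    """Return True when strictly more than threshold_percent of respondents agree.

    The comparison uses integers (agree * 100 > threshold * respondents)
    to avoid floating-point edge cases at exactly 70 percent.
    """
    if respondents <= 0:
        raise ValueError("respondents must be a positive integer")
    if not 0 <= agree <= respondents:
        raise ValueError("agree must be between 0 and respondents")
    return agree * 100 > threshold_percent * respondents
```

For example, with a hypothetical 24 of 33 respondents agreeing, `consensus_reached(24, 33)` is true, whereas 23 of 33 (just under 70 percent) would not meet the rule.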

RESULTS

The paper-based 2010 survey achieved a response rate of 40 percent (21/53), despite active promotion at the annual meeting and by the INAHTA Board. The Web-based 2013 follow-up survey had a response rate of 68 percent (38/56), while the short “agreement” survey had a response rate of 59 percent (33/56). Between 2010 and 2013, INAHTA had 53 to 56 member agencies. Of these agencies, 45 responded to at least one of the surveys (Supplementary Table 4, which can be viewed online at http://dx.doi.org/10.1017/S0266462314000543). Approximately half of the respondents were from European countries, with the remainder spread throughout Oceania, Africa, Asia, and the Americas. The results of the 2013 surveys are reported throughout (given the better response rates), although the 2010 survey results are reported for unique questions.
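The reported response rates follow directly from the counts given above. As a minimal check, the sketch below recomputes them; the dictionary keys and the function name are labels chosen here for illustration, while the counts are those stated in the text.

```python
# (responses received, agencies surveyed) as reported in the text
surveys = {
    "2010 paper-based survey": (21, 53),
    "2013 web-based follow-up survey": (38, 56),
    "2013 agreement survey": (33, 56),
}

def response_rate(responses: int, surveyed: int) -> int:
    """Response rate as a whole-number percentage."""
    return round(100 * responses / surveyed)

rates = {name: response_rate(r, n) for name, (r, n) in surveys.items()}

for name, pct in rates.items():
    print(f"{name}: {pct} percent")
# 2010 paper-based survey: 40 percent
# 2013 web-based follow-up survey: 68 percent
# 2013 agreement survey: 59 percent
```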

Purpose and Type of HTA Product

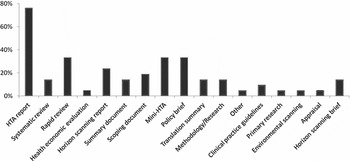

Overall, seventy named products were described by respondents in the 2010 survey. Products that were not clearly designated as a full HTA, mini-HTA, rapid review, or policy brief were reported by a third of agencies as including all nine, or all of the first five, core HTA domains (Reference Busse, Orvain and Velasco7), domains that are often associated with full HTA reports. Thus, it was confirmed that the product label may not always be indicative of product content. Seventeen different product types were identified (Figure 1).

Figure 1. Percentage of responding INAHTA agencies reporting the production of at least one of the below products (2010 survey).

In both the 2010 and 2013 surveys, agencies reported that their most common product was a full HTA report (16/21, 76 percent; and 30/38, 79 percent, respectively). However, the publication of short, tailored HTA products increased, and in some cases doubled, over the same period: rapid reviews were produced by 7/21 (33 percent) agencies in 2010, compared with 25/38 (66 percent) of agencies in 2013; mini-HTAs were published by 7/21 (33 percent) in 2010, compared with 23/36 (64 percent) in 2013; and policy briefs were disseminated by 7/21 (33 percent) of agencies in 2010, as opposed to 16/36 (44 percent) in 2013.
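The statement that production of some product types roughly doubled can be checked from the reported counts. The sketch below recomputes each percentage and the ratio of the 2013 proportion to the 2010 proportion; the variable and function names are illustrative, and the ratio is purely descriptive because the two surveys had different respondents.

```python
from fractions import Fraction

# product type -> ((agencies producing, respondents) in 2010, same in 2013)
production = {
    "full HTA report": ((16, 21), (30, 38)),
    "rapid review": ((7, 21), (25, 38)),
    "mini-HTA": ((7, 21), (23, 36)),
    "policy brief": ((7, 21), (16, 36)),
}

def summarize(counts_2010, counts_2013):
    """Return (percent in 2010, percent in 2013, ratio of the two proportions)."""
    p10 = Fraction(*counts_2010)
    p13 = Fraction(*counts_2013)
    return round(100 * p10), round(100 * p13), round(float(p13 / p10), 2)

summary = {name: summarize(a, b) for name, (a, b) in production.items()}

for name, (pct10, pct13, ratio) in summary.items():
    print(f"{name}: {pct10} percent -> {pct13} percent (x{ratio})")
```

On these figures, rapid reviews and mini-HTAs come out at close to twice their 2010 proportion, policy briefs at about 1.3 times, and full HTA reports are essentially unchanged.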

The intended audience of the more common HTA product types varied according to 2010 respondents and in some cases there were multiple audiences intended for a product. According to the agencies, policy makers were the most common target of full HTA reports (88 percent), rapid reviews (86 percent) and policy briefs (63 percent), but were less commonly the target of mini-HTAs (56 percent). Mini-HTAs were primarily aimed at health professionals.

Duration and Frequency of HTA Production

Agencies in 2013 reported that the average timeframes to produce different types of HTA were 8.8 months for an HTA report, 2.9 months for a rapid review, 4 months for a mini-HTA, 2.8 months for a policy brief, and 2.8 months for a horizon scanning report. As these averages could be skewed by a small number of large organizations with large output, median durations and interquartile ranges are also given in Figure 2.

Figure 2. Production time-frames by HTA product type (2013 survey).

Among agencies that created at least one HTA product, the most frequently produced was a rapid review, with 40 percent of agencies (n = 25) producing more than ten a year. Mini-HTAs were produced by 21 percent of agencies at a rate of more than ten per year, with another 21 percent producing five per year (n = 24). Full HTA reports were commonly produced but, consistent with the complexity of a full HTA, the output was less frequent. Most agencies reported publication of between two and four full HTAs each year.

Core Domains Included in HTA Products

According to the 2013 survey, the four most common HTA products are all likely to contain a description of the technology, an overview of its current use, and information on its safety and effectiveness (Figure 3). More than 90 percent of INAHTA agency respondents indicated that these domains are present in full HTAs. In addition, 97 percent of agencies include economic evaluations in their full HTAs—86 percent using economic modeling and 89 percent providing financial (budget) impact analyses. Eighty-one percent of agencies also include economic evaluations in their mini-HTAs but the proportion using economic modeling is much smaller (38 percent), with most of these agencies providing financial impact analyses (67 percent). The other difference between full HTAs and mini-HTAs is that the full version is much more likely to include information on the organizational, ethical, social and legal core domains (Figure 3). Of these, the organizational domain is addressed frequently in HTA reports. The social and legal domains are the least likely to be included in any HTA product type.

Figure 3. The likelihood that an agency always includes a “core domain” in the specified HTA products.

Patient and consumer involvement in HTA product development appears to decrease as the length of time available to create a product decreases. Forty-three percent of agencies producing full HTA reports had no patient or consumer involvement, as compared to 58 percent for mini-HTAs, 70 percent for rapid reviews, 77 percent for policy briefs, and 92 percent for horizon scanning reports.

Approaches Used in HTA Products

Approximately half of agencies reported adapting common HTA products from those produced by other agencies, with the exception of horizon scanning reports. Similarly, 61–79 percent of agencies reported updating existing full HTA reports, mini-HTAs, and rapid reviews, whether produced by their agency or another agency. Original (primary) data were collected by some agencies, usually to inform full HTA reports (46 percent) and mini-HTAs (24 percent).

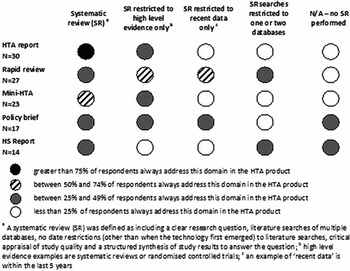

There was reasonable consensus across agencies with respect to the methods of evidence synthesis used in full HTA reports. Ninety-three percent of agencies would undertake a comprehensive systematic literature review (as defined in Figure 4), while 30 percent would also consider restricting the review to high level evidence only (e.g., systematic reviews or randomized controlled trials). The least robust methods, including not performing a systematic review, restricting the review to recent data only, or limiting the literature search to one or two databases, were rarely considered (<10 percent of agencies) (Figure 4). Agencies indicated they would use similar approaches to identify and synthesize information for mini-HTAs but would be slightly more willing to undertake the less robust methodologies (albeit still less than 25 percent).

Figure 4. The likelihood that an agency will include a type of systematic literature review in an HTA product type.

As can be seen in Figure 4, there was a greater heterogeneity of approaches when undertaking rapid reviews. Agencies were more likely to consider riskier review methods that could miss key information or allow bias to be introduced. Twenty-two percent of agencies would not undertake a systematic review of any type for rapid reviews.

Evidence Ascertainment

All agencies indicated that a complete search strategy would always be reported in their full HTA reports, with enough detail that the search could be replicated correctly in one database. For mini-HTAs, this would be done by 66 percent of agencies. This was less common for agencies doing rapid reviews (44 percent) and horizon scanning reports (42 percent). The databases searched by agencies writing full and mini-HTA reports were very similar. Agencies performing rapid reviews would also search similar databases, with the exception of those dealing exclusively with economics literature. More than 75 percent of agencies writing full and mini-HTA reports and rapid reviews used the HTA database, the Cochrane Library, Medline, or PubMed. Embase was more commonly used by agencies writing full HTA reports (83 percent), than mini-HTAs (74 percent), or rapid reviews (65 percent). The EuroScan database (79 percent) and PubMed (86 percent) were the main sources of information for those writing horizon scanning reports.

More than half of agencies used seven sources of information for their full HTA reports, mini-HTAs, and horizon scanning reports, and six sources of information for rapid reviews.

Extraction and Appraisal of Evidence

All agencies (n = 30) indicated that a critical appraisal tool or checklist was used some or all of the time to ascertain the quality of studies included in full HTA reports. Similar results were obtained for agencies undertaking mini-HTAs (96 percent, n = 24). Critical appraisal was not undertaken by a substantial proportion of agencies producing rapid reviews (33 percent, n = 27), policy briefs (66 percent, n = 16) and horizon scanning reports (43 percent, n = 14).

Evidence tables are always presented by 83 percent, 67 percent, and 41 percent of agencies, respectively, in their full HTA reports, mini-HTAs, or rapid reviews. Data elements included by agencies in these evidence tables were very similar, irrespective of HTA product type. The least common domains appear to be the clinical importance and applicability (external validity) of study results. It may be that this is done narratively, when the findings from the evidence are interpreted within the local policy context.

Proposing Product Type Definitions

On the basis of the results of the 2013 survey, definitions were proposed for three of the product types: full HTA reports, mini-HTAs, and rapid reviews. It was decided that a content definition for policy briefs would be unrealistic given that they tend to be secondary products that could summarize the results of any other HTA product. A content definition for horizon scanning reports was also considered unrealistic as they also vary widely in scope according to the available evidence base.

The INAHTA members were asked to indicate their agreement with the proposed content definitions (see the Discussion section) for the three product types, in a final survey (Supplementary Table 3). Consensus was achieved as more than 70 percent of respondents agreed with the definitions proposed for HTA reports, mini-HTAs, and rapid reviews.

DISCUSSION

One of the aims of the INAHTA Quality Assurance Group (QAG) is to create a shared international understanding of HTA. It has become apparent that a barrier to discussing and quality assuring the methods used for HTA products is that there is no common naming convention or categorization of HTA product types.

Our survey results indicate that the proportion of HTA agencies producing short, tailored products has doubled over a 4-year period. Seventy distinct HTA products and seventeen product types were already being produced by twenty-one HTA agencies in 2010. The range of products and product types available suggests that agencies are appropriately developing products that target specific policy-maker audiences and address the preferences of policy makers for different types of information. Both macro and micro perspectives on a health technology are often needed (Reference Andradas, Blasco, Valentin, Lopez-Pedraza and Garcia8).

It is clear that the names attributed to HTA products can often describe what the product is used for rather than what the product contains. Consequently, two similar products could be given entirely different names primarily because one is aimed at policy makers and the other at clinicians.

Some organizations have produced their own definitions for different HTA products. For example, DACEHTA first developed mini-HTAs and defined them as a management and decision support tool or checklist with the primary purpose of informing the introduction of new health technologies within the hospital setting. This is consistent with the fact that the primary audience of mini-HTAs in our 2010 survey was health professionals. The original aim of a mini-HTA was to cover the components of a full HTA report but in an abbreviated format so that the assessment could be completed within a short time frame (9). However, it is apparent from the current survey that some organizations consider that an HTA within a short timeframe is a rapid review, while others produce mini-HTAs that are intended for policy makers and are simply full HTA reports minus the social, ethical, legal, and organizational considerations.

The HTA adaptation toolkit produced by EUnetHTA proposes a modularized approach for adapting an HTA to the local setting (Reference Milne4). However, this would be simpler if it is clear as to the type of HTA that is being adapted, and also if the HTA product type is associated with a standard approach and accepted methods. Milne et al. (2011) state, “Clearly, the more information, data and explanation provided in the HTA report for adaptation, the easier and more comprehensive the adaptation process. Thus, the toolkit would be best used as an aid to adapting more comprehensive HTA reports. However, it can also be used to adapt information and data from “rapid reviews” and “mini-HTAs” but the user will need to be aware of the purpose, and potential limitations, of the original report.”

Accepted Definitions

The QAG surveys have provided valuable insight into the HTA products developed by INAHTA member agencies. The definitions proposed and accepted (below) for the most common of these products were based solely on the results of the surveys and thus reflect the methods and approaches that are currently used by INAHTA agencies. In the statements below, the asterisk denotes the HTA Glossary (htaglossary.net) definition of a systematic review.

1. An HTA Report will:

• Always
    ° describe the characteristics and current use of the technology
    ° evaluate safety and effectiveness issues
    ° determine the cost-effectiveness of the technology, e.g., through economic modeling (when it is appropriate)
    ° provide information on costs/financial impact, and
    ° discuss organizational considerations.
• Always conduct a comprehensive systematic literature review* or a systematic review of high level evidence.
• Always critically appraise the quality of the evidence base.
• Optionally address ethical, social and legal considerations.

2. A Mini-HTA will:

• Always
    ° describe the characteristics and current use of the technology
    ° evaluate safety and effectiveness issues, and
    ° provide information on costs/financial impact.
• Always conduct a comprehensive systematic literature review* or a systematic review of high level evidence.
• Always critically appraise the quality of the evidence base.
• Optionally address organizational considerations.

3. A Rapid Review will:

• Always
    ° describe the characteristics and current use of the technology, and
    ° evaluate safety and effectiveness issues.
• Often conduct a review of only high level evidence or of recent evidence and may restrict the literature search to one or two databases.
• Optionally critically appraise the quality of the evidence base.
• Optionally provide information on costs/financial impact.
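The "always" elements of the three definitions above can be read as nested sets of requirements. The sketch below encodes them that way and returns the most demanding definition that a product's content satisfies. It is an illustrative reading only, not the IPT Mark verification procedure: the element names, the most-demanding-first ordering, and the function name are assumptions made here, and the cost-effectiveness element should be treated as present when an agency has judged it not appropriate to the technology.

```python
# Elements every defined product type must always contain
CORE = frozenset({"technology_description", "safety_effectiveness"})

# "Always" requirements per product type, ordered from most to least demanding.
# Element names are hypothetical labels for the items in the definitions.
REQUIREMENTS = [
    ("HTA report", CORE | {
        "cost_effectiveness",      # counts as met if judged not appropriate
        "costs_financial_impact",
        "organizational",
        "systematic_review",       # comprehensive, or of high level evidence
        "critical_appraisal",
    }),
    ("mini-HTA", CORE | {
        "costs_financial_impact",
        "systematic_review",
        "critical_appraisal",
    }),
    ("rapid review", CORE),
]

def product_type(elements):
    """Return the most demanding definition whose 'always' elements are all present.

    Returns None when even the rapid review requirements are not met.
    """
    present = set(elements)
    for name, required in REQUIREMENTS:
        if required <= present:
            return name
    return None
```

Under this reading, a product that covers only the two core elements maps to a rapid review, and adding cost information, a systematic review, and critical appraisal moves it to a mini-HTA. Optional elements (for example, ethical, social and legal considerations) do not change the result.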

The impetus toward the development of minimum standards in HTA reflects, to some extent, similar moves by the Cochrane Collaboration (Reference Chandler, Churchill, Higgins, Lasserson and Tovey10) and the Guidelines International Network (Reference Mlika-Cabanne, Harbour, de Beer, Laurence, Cook and Twaddle11) towards minimum standards and consistency in their products. It is important that all producers of HTA concur in their understanding of at least the common HTA product types. These definitions can guide future development of products by newer agencies and assist the large proportion of INAHTA agencies that adapt or update other agencies' products for their local context.

It was apparent from the survey results that HTA reports have the greatest rigor and complexity of all products and provide the most information. Mini-HTAs maintain the rigor but have a restricted scope and provide less information than full HTA reports. Rapid reviews, on the other hand, have the least rigor and the smallest scope, a likely consequence of the truncated timeline for production. Although timelines did vary substantially between agencies, rapid reviews tend to take an average of 1 month less to produce than mini-HTAs. Therefore, if the policy decision is important, it would appear that the less risky alternative (i.e., in terms of ensuring conclusions are fully informed) would be to wait a little longer for the mini-HTA to be produced. For decisions that have serious policy implications, the least risky strategy would be to commission a full HTA report. However, if the intended audience of an HTA is only seeking information, prioritizing activities or determining options, rather than formulating a fixed policy, then a rapid review might be the most cost-effective option.

INAHTA Product Type Mark

The name or title of an HTA product can be as varied as the 70 identified for the 2010 survey, but if the product type is unambiguously defined then stakeholders will have a clear understanding of the approaches used in the HTA and this may inform collaborative or adaptive arrangements or simply make the policy maker aware of the benefits or limitations inherent in the chosen product type.

To operationalize these agreed definitions, the INAHTA QAG received Board approval to develop an INAHTA Product Type (IPT) Mark that indicates conformity with the INAHTA defined product type. INAHTA members create many more products than are encompassed by the agreed three product types, but the IPT Marks can at least be used for these common ones. The IPT Mark will be available for download from the members-only section of the INAHTA Web site (www.inahta.org) and can be inserted by member agencies on the front cover or inside front cover of their documents. This will help readers determine at a glance, irrespective of language and naming conventions, the content and methods used in the HTA product.

There are two IPT Mark options for each product type: one that indicates the product type designation has been independently verified, and another that does not mention independent verification. The two options were provided in the event that an INAHTA member cannot find another independent member agency to verify that the document meets the INAHTA definition (e.g., due to language considerations).

Study Limitations

The applicability of the 2010 survey results is limited by the poor response rate, despite the response being similar to other surveys done of INAHTA agencies (Reference Hailey12;Reference Watt, Cameron and Sturm13). However, the more comprehensive survey undertaken in 2013 had a good response rate and so the findings are more likely to be applicable to non-responding INAHTA agencies. Forty-five INAHTA agencies participated overall, and this is a significant proportion of the 53–56 agencies that were members between 2010 and 2013.

The extent to which the survey results obtained from not-for-profit or public sector HTA agencies, a requirement for INAHTA membership, are generalizable to all HTA agencies is uncertain. While the jurisdictional context in which HTA is produced and the resources available necessarily affect the content and approach to HTA, this should not be a deterrent to providing guidance and frameworks for creating HTA (Reference Hailey1). The agencies that responded to the survey came from both developing and developed economies and included representatives from Europe, southern Africa, the Middle East, Asia, Oceania, and the Americas (North, Central, and South).

POLICY IMPLICATIONS OF THIS RESEARCH

HTA products differ across agencies, both in nomenclature and the methods used to create them. However, contextual differences and regional nuances which are important to individual agencies and the policy makers they target, as well as the proliferation of HTA products, will present a barrier to achieving the harmonization required to adapt or translate HTA findings across agencies and countries. On analyzing the survey results, it is obvious that certain products created by INAHTA agencies are produced with similar broadly defined methods and address similar core domains. However, the product label for an HTA is not always indicative of the product content. Evidence-based working definitions for each of the three common product types have been proposed and accepted by INAHTA members. The use of an IPT Mark to distinguish between these three product types, without requiring a name change of individual products or an understanding of the language, will assist with harmonization and quality assurance. This will enable easier identification, adaptation and risk assessment of HTA products by both HTA users and producers.

SUPPLEMENTARY MATERIAL

Supplementary Table 1: http://dx.doi.org/10.1017/S0266462314000543

Supplementary Table 2: http://dx.doi.org/10.1017/S0266462314000543

Supplementary Table 3: http://dx.doi.org/10.1017/S0266462314000543

Supplementary Table 4: http://dx.doi.org/10.1017/S0266462314000543

CONTACT INFORMATION

Tracy Merlin, BA(Hons Psych), MPH, Adv Dip PM, Associate Professor and Director of Adelaide Health Technology Assessment (AHTA)

David Tamblyn, BHlthSc, MPH, Senior Research Officer

Benjamin Ellery, BHlthSc, GDPH, Research Officer

Adelaide Health Technology Assessment (AHTA), Discipline of Public Health, Mail Drop DX 650545, School of Population Health, University of Adelaide, Adelaide, South Australia, 5005, Australia

CONFLICTS OF INTEREST

We have no financial relationships with any of the agencies providing the evidence-base for this manuscript.