THE OPTIMAL MALLIAVIN-TYPE REMAINDER FOR BEURLING GENERALIZED INTEGERS

Published online by Cambridge University Press: 09 August 2022

Abstract

We establish the optimal order of Malliavin-type remainders in the asymptotic density approximation formula for Beurling generalized integers. Given  $\alpha \in (0,1]$ and

$\alpha \in (0,1]$ and  $c>0$ (with

$c>0$ (with  $c\leq 1$ if

$c\leq 1$ if  $\alpha =1$), a generalized number system is constructed with Riemann prime counting function

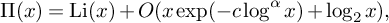

$\alpha =1$), a generalized number system is constructed with Riemann prime counting function  $ \Pi (x)= \operatorname {\mathrm {Li}}(x)+ O(x\exp (-c \log ^{\alpha } x ) +\log _{2}x), $ and whose integer counting function satisfies the extremal oscillation estimate

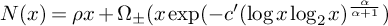

$ \Pi (x)= \operatorname {\mathrm {Li}}(x)+ O(x\exp (-c \log ^{\alpha } x ) +\log _{2}x), $ and whose integer counting function satisfies the extremal oscillation estimate  $N(x)=\rho x + \Omega _{\pm }(x\exp (- c'(\log x\log _{2} x)^{\frac {\alpha }{\alpha +1}})$ for any

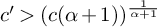

$N(x)=\rho x + \Omega _{\pm }(x\exp (- c'(\log x\log _{2} x)^{\frac {\alpha }{\alpha +1}})$ for any  $c'>(c(\alpha +1))^{\frac {1}{\alpha +1}}$, where

$c'>(c(\alpha +1))^{\frac {1}{\alpha +1}}$, where  $\rho>0$ is its asymptotic density. In particular, this improves and extends upon the earlier work [Adv. Math. 370 (2020), Article 107240].

$\rho>0$ is its asymptotic density. In particular, this improves and extends upon the earlier work [Adv. Math. 370 (2020), Article 107240].
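As a rough numerical illustration (not taken from the paper), the sketch below evaluates the threshold $(c(\alpha+1))^{1/(\alpha+1)}$ for $c'$ and compares the logarithms of the two remainder sizes. It assumes $\log_2 x$ denotes the iterated logarithm $\log\log x$, drops all implied $O$-constants and the additive $\log_2 x$ term in $\Pi(x)$, and works with $\log x$ directly so that huge $x$ never overflows. The function names are hypothetical. For $\alpha = 1$ the threshold is $\sqrt{2c}$, so the prime remainder is of size $x^{1-c}$ while the integer remainder is of size $x\exp(-c'\sqrt{\log x \log_2 x})$.

```python
import math

# Hypothetical helpers illustrating the constants in the abstract.
# Assumption: log_2 x is the iterated logarithm log(log x); implied
# O-constants and the additive log_2 x term in Pi(x) are ignored.


def threshold_constant(alpha: float, c: float) -> float:
    """Return (c*(alpha+1))**(1/(alpha+1)); the estimate holds for every c' above it."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must lie in (0, 1]")
    if c <= 0 or (alpha == 1 and c > 1):
        raise ValueError("need c > 0, and c <= 1 when alpha == 1")
    return (c * (alpha + 1)) ** (1 / (alpha + 1))


def log_prime_remainder(log_x: float, alpha: float, c: float) -> float:
    """log of x*exp(-c*(log x)**alpha), the size of the Pi(x) - Li(x) bound."""
    return log_x - c * log_x ** alpha


def log_integer_remainder(log_x: float, alpha: float, c_prime: float) -> float:
    """log of x*exp(-c'*(log x * log_2 x)**(alpha/(alpha+1))), the N(x) oscillation size."""
    if log_x <= 1:
        raise ValueError("need log x > 1 so that log_2 x > 0")
    return log_x - c_prime * (log_x * math.log(log_x)) ** (alpha / (alpha + 1))


if __name__ == "__main__":
    alpha, c = 1.0, 0.5
    c_star = threshold_constant(alpha, c)  # sqrt(2c) = 1.0 for these values
    log_x = 1.0e4                          # i.e. x = e^10000, kept in log scale
    print("threshold for c':", c_star)
    print("log prime remainder:  ", log_prime_remainder(log_x, alpha, c))
    print("log integer remainder:", log_integer_remainder(log_x, alpha, 1.01 * c_star))
```

With these sample values the integer-remainder exponent lies much closer to $\log x$ than the prime-remainder exponent, reflecting that the oscillation of $N(x)$ can be far larger than the error term in $\Pi(x)$.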

- Type: Research Article
- Information: Journal of the Institute of Mathematics of Jussieu, Volume 23, Issue 1, January 2024, pp. 249-278
- Copyright: © The Author(s), 2022. Published by Cambridge University Press

Footnotes

F. Broucke was supported by the Ghent University BOF-grant 01J04017. G. Debruyne acknowledges support by Postdoctoral Research Fellowships of the Research Foundation–Flanders (grant number 12X9719N) and the Belgian American Educational Foundation. The latter allowed him to do part of this research at the University of Illinois at Urbana-Champaign. J. Vindas was partly supported by Ghent University through the BOF-grant 01J04017 and by the Research Foundation–Flanders through the FWO-grant 1510119N.
