1. Introduction

Part-of-speech (POS) tagging is one of the building blocks of modern natural language processing (NLP). POS tagging automatically assigns grammatical class types to words in a sentence, such as noun, pronoun, verb, adjective, adverb, conjunction, and punctuation. Various approaches exist for automatic POS tagging; however, the deep neural network approach has achieved state-of-the-art accuracy for resource-rich languages. POS tagging plays a vital role in various text processing tasks, such as named entity recognition (NER) (Aguilar et al. 2019), machine translation (Niehues and Cho 2017), information extraction (IE) (Bhutani et al. 2019), question answering (Le-Hong and Bui 2018), and constituency parsing (Shen et al. 2018). Therefore, a well-performing POS tagger is indispensable in developing a successful NLP system for a language.

Bodo (Boro) is a morphologically rich Tibeto-Burman language, mainly spoken in Assam, a state in northeastern India. The Bodoland Territorial Region, an autonomous region in Assam, uses it as its official language. The Devanagari script is used to write Bodo. As per the 2011 Indian census, Bodo has about 1.5 million speakers, making it the 20th most spoken language in India among the 22 scheduled languages. Although most Bodo speakers are ethnic Bodos, the Assamese, Rabha, Koch Rajbongshi, Santhali, Garo, and Muslim communities in the Bodoland Territorial Region also speak the language.

However, NLP systems for Bodo are at a very nascent stage. The study of fundamental NLP tasks in the Bodo language, such as lemmatization, dependency parsing, language identification, language modeling, POS tagging, and NER, has yet to start. While word embeddings or language models (LMs) play a crucial role in a deep learning approach, we observe that no existing pretrained language model covers the Bodo language. As a result, we could not find any downstream NLP tools developed using a deep learning method.

With about 1.5 million Bodo speakers, there is a pressing need to develop NLP resources for the Bodo language. Motivated by this research gap, we present the first LM for the Bodo language, BodoBERT, based on the BERT architecture (Devlin et al. 2018). We prepare a monolingual training corpus for the LM by collecting and acquiring text from various sources. We then develop a POS tagger for the Bodo language using BodoBERT.

We explore different sequence labeling architectures to obtain the best POS tagging model. We conduct three experiments to train the POS tagging models: (a) fine-tuning based, (b) conditional random field (CRF) based, and (c) bidirectional long short-term memory (BiLSTM)-CRF based. Among the three, the BiLSTM-CRF-based POS tagging model performs better than the others.

The Bodo language uses the Devanagari script, which the Hindi language also uses. Therefore, we conducted the POS tagging experiment with LMs that were trained on Hindi to compare their performance with that of BodoBERT. We cover LMs such as Fasttext, Byte Pair Embeddings (BPE), Contextualized Character Embedding (FlairEmbedding), MuRIL, XLM-R, and IndicBERT. We employ two different methods to embed the words in a sentence when training POS tagging models with the BiLSTM-CRF architecture: the Individual and Stacked methods. In the individual method, the model trained using BodoBERT achieves the highest F1 score of 0.7949. After that, we experiment with the stacked method to combine BodoBERT with other LMs. The highest F1 score in the stacked method reached 0.8041. We believe this is not only the first neural network-based POS tagging model for the language but also the first POS tagger for Bodo. Our contributions can be summarized as follows:

- We propose a LM for the Bodo language based on the BERT framework. To the best of our knowledge, this is the first LM for Bodo.
- We compare different POS tagging models for Bodo built with state-of-the-art sequence tagging frameworks: CRF, fine-tuned LMs, and BiLSTM-CRF.
- We compare the POS tagging performance of different LMs (Fasttext, BPE, XLM-R, FlairEmbedding, IndicBERT, and MuRIL) using the Individual and Stacked embedding methods.
- We make the top-performing Bodo POS tagging model and BodoBERT publicly available.

This paper is organized as follows. Section 2 describes related work on POS tagging for similar languages. The details of the pretraining of BodoBERT and the Bodo corpus used in training are presented in Section 3. Section 4 presents the experiments carried out to develop the neural network POS tagger. The section also describes the annotated dataset and the POS tagset used in the experiments. In Section 5, we present the results of all three models using different sequence tagging architectures. Finally, we conclude the paper in Section 6.

2. Related work

This section presents related works about POS tagging in different languages. In our literature study, we do not find any prior work on language modeling for Bodo language. Furthermore, to the best of our knowledge, there is no previous work on POS tagging for Bodo language. Therefore, we discuss recent research works reported on neural network-based POS tagging in various low-resource languages belonging to the northeastern part of India.

Pathak et al. (2022) proposed an Assamese POS tagger based on a deep learning (DL) approach. Various DL architectures are used to develop the POS model, including CRF, bidirectional long short-term memory (BiLSTM) with CRF, and gated recurrent unit (GRU) with CRF. The BiLSTM-CRF model achieved the highest tagging F1 score of 86.52. Pathak et al. (2023) presented an ensemble POS tagger for Assamese, in which two DL-based taggers and a rule-based tagger are combined. The ensemble model achieved an F1 score of 0.925 in POS tagging. Warjri et al. (2021) presented a POS-tagged corpus for the Khasi language. The paper also presented a DL-based POS tagger for the language using different architectures, including BiLSTM, CRF, and character-based embedding with BiLSTM. The top-performing tagger achieved an accuracy of 96.98% using BiLSTM with CRF. Alam et al. (2016) proposed a neural network-based POS tagging system for Bangla using a BiLSTM and CRF architecture. They also used a pretrained word embedding model during training. The model achieved an accuracy of 86.0%. Pandey et al. (2022) reported work on POS tagging of the Mizo language. They employed classical LSTM and quantum-enhanced long short-term memory (QLSTM) in training and reported a tagging accuracy of 81.86% using the LSTM network. Kabir et al. (2016) presented their research on a POS tagger for the Bengali language using a DL-based approach. The tagger achieves an accuracy of 93.33% using a deep belief network (DBN) architecture. Other works have been reported over the years on POS tagging for a variety of languages, including Assamese, Mizo, Manipuri, Khasi, and Bengali. However, they are based on traditional methods such as rule-based and HMM-based models.

3. BodoBERT

The transformer-based LM BERT achieves state-of-the-art performance on many NLP tasks. However, the success of BERT models on downstream NLP tasks is mostly limited to high-resource languages such as English, Chinese, Spanish, and Arabic. The original BERT model was trained on English (Devlin et al. 2018). After that, multilingual pretrained models were released for 104 languages. However, the Bodo language is not covered, and we could not find any pretrained LM that covers it. This motivates us to develop a LM for Bodo using the vanilla BERT (Devlin et al. 2018) architecture.

3.1. Bodo raw corpus

A large monolingual corpus is required to train a well-performing LM like BERT. Moreover, training BERT is a computationally intensive task that requires substantial hardware resources. On the other hand, obtaining a decent monolingual raw corpus for Bodo has been an enduring problem for the NLP research community. Although Bodo has a rich oral literary tradition, it had no standard writing script until 2003. After a long history of the Bodo script movement, the Bodo language was recognized as a scheduled language of India by the Government of India, and the Devanagari script was officially adopted for writing.

We have curated the corpus after acquiring it from the Linguistic Data Consortium for Indian Languages (LDC-IL) (Ramamoorthy et al. 2019; Choudhary 2021). The text of the raw corpus comes from different domains such as Aesthetics (Culture, Cinema, Literature, Biographies, and Folklore), Commerce, Mass media (Classified, Discussion, Editorial, Sports, General news, Health, Weather, and Social), Science and Technology (Agriculture, Environmental Science, Textbook, Astrology, and Mechanical Engineering), and Social Sciences (Economics, Education, Political Science, Linguistics, Health and Family Welfare, History, Textbook, Law, etc.). We also acquired another corpus from the work of Narzary et al. (2022). The final consolidated corpus has 1.6 million tokens and 191k sentences.

3.2. BodoBERT model training

The architecture of the BodoBERT model is based on a multilayer bidirectional transformer framework (Vaswani et al. 2017). We use the BERT framework (Devlin et al. 2018) to train the LM using the masked LM and next-sentence prediction tasks. A WordPiece tokenizer (Wu et al. 2016) with a vocabulary of 50,000 tokens is used for the embeddings. The BERT model architecture is described in the guide "The Annotated Transformer," and the implementation details are provided in "Tensorflow code and BERT model".
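The masked LM objective mentioned above can be illustrated with a minimal sketch. The masking rates below (15% of tokens selected; of those, 80% replaced by a mask symbol, 10% by a random token, 10% left unchanged) are the defaults described by Devlin et al. (2018); the paper does not state which rates were used for BodoBERT, so they are assumptions here, as are the function name and the toy tokens.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (inputs, labels) for masked-LM training.

    labels[i] holds the original token at positions the model must predict
    and None at positions that are ignored by the loss.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK)               # replace with the mask symbol
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # replace with a random token
            else:
                inputs.append(tok)                # keep the token unchanged
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels

# Toy placeholder tokens, not real Bodo text.
tokens = [f"t{i}" for i in range(20)]
inputs, labels = mask_tokens(tokens, vocab=tokens)
```

Positions with a label of None are passed through untouched, so the model only receives a training signal at the selected positions.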

The model is trained with six transformer blocks, a hidden size of 768, and six self-attention heads. There are approximately 103 M parameters. The model was trained on an Nvidia Tesla P100 GPU (3584 CUDA cores) for 300K steps with a maximum sequence length of 128 and a batch size of 64. We used the Adam optimizer (Kingma and Ba 2014) with a warm-up over the first 3000 steps to a peak learning rate of 2e-5. The pretraining took approximately seven days to complete.
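The warm-up described above can be sketched as a simple schedule function. The linear warm-up to 2e-5 over 3000 steps and the 300K total steps come from the text; the linear decay to zero after the warm-up is an assumption (it is the usual choice in BERT training code, but the paper does not state the decay shape).

```python
def learning_rate(step, peak_lr=2e-5, warmup_steps=3000, total_steps=300_000):
    """Linear warm-up to peak_lr, then (assumed) linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)

# Learning rate at a few points of the schedule.
for step in (0, 1500, 3000, 150_000, 300_000):
    print(step, learning_rate(step))
```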

4. Bodo POS tagger

Part-of-speech tagging belongs to the sequence labeling tasks. There are different stages in developing a DL-based POS tagger. This section presents various stages of development of the Bodo POS tagger.

4.1. Annotated dataset

A DL-based method requires a large, properly annotated corpus to train a POS tagging model. The performance of a tagging model depends upon the quality of the annotated dataset. However, annotated corpora are rarely available in the public domain, and annotating a corpus manually is a tedious and time-consuming task. Therefore, it is challenging to build a deep learning model for a low-resource language. In our literature study, we could find only one Bodo annotated corpus: the Bodo Monolingual Text Corpus (ILCI-II, 2020b). The corpus was tagged manually by language experts as part of the project Indian Languages Corpora Initiative Phase-II (ILCI Phase-II), initiated by the Ministry of Electronics and Information Technology (MeitY), Government of India, and Jawaharlal Nehru University, New Delhi. The corpus contains approximately 30k sentences and 240k tokens from different domains. The statistics of the annotated dataset are reported in Table 1. The corpus is tagged according to the Bureau of Indian Standards (BIS) tagset. We use this dataset in all our experiments to train neural taggers for Bodo.

Table 1. Statistics of Bodo POS annotated dataset

We prepare the tagged dataset in the CoNLL-2003 (Sang and De Meulder 2003) column format, in which each line contains one word and its corresponding POS tag, separated by a tab. An empty line represents a sentence boundary in the dataset. The sample column format is shown in Table 2.

Table 2. Dataset format
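As a minimal sketch of how the column format described above can be read, the function below splits tab-separated word/tag lines into sentences at empty lines. The function name and the placeholder words (w1, w2, ...) are hypothetical; the tags are taken from the BIS tag names that appear elsewhere in this paper.

```python
def read_conll(text):
    """Parse 'word<TAB>tag' lines; an empty line ends the current sentence."""
    sentences, current = [], []
    for line in text.splitlines():
        if not line.strip():
            if current:              # close the sentence at a blank line
                sentences.append(current)
                current = []
            continue
        word, tag = line.strip().split("\t")
        current.append((word, tag))
    if current:                      # last sentence may lack a trailing blank line
        sentences.append(current)
    return sentences

sample = "w1\tN_NN\nw2\tV_VM\n\nw3\tN_NNP\nw4\tJJ\nw5\tN_NN\n"
for sentence in read_conll(sample):
    print(sentence)
```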

For training, we first randomized the dataset; after that, the dataset was divided into 80:10:10 train, development, and test sets, respectively.
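The shuffle-and-split step can be sketched as follows. The 80:10:10 ratio is from the text; the fixed random seed and the function name are assumptions added so the example is reproducible.

```python
import random

def split_dataset(sentences, seed=42):
    """Shuffle the sentences, then split them 80:10:10 into train/dev/test."""
    items = list(sentences)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10   # integer arithmetic avoids float rounding
    n_dev = len(items) // 10
    train = items[:n_train]
    dev = items[n_train:n_train + n_dev]
    test = items[n_train + n_dev:]   # remainder goes to the test set
    return train, dev, test

train, dev, test = split_dataset(range(30_000))
print(len(train), len(dev), len(test))  # 24000 3000 3000
```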

4.2. POS tagset

The TDIL dataset is tagged according to the Bureau of Indian Standards (BIS) tagset, which is considered the national standard for annotating Indian languages. The dataset contains eleven (11) top-level categories that include 34 tags. The complete tagset used in our experiment is reported in Table 3.

Table 3. Tagset used in the dataset

4.3. Language model

Language models are a crucial component of a deep learning-based model. These models are typically trained on a large unlabeled text corpus to capture both semantic and syntactic meaning. The size of the training corpora (Bojanowski et al. 2017) impacts the quality of the language models. In our literature survey, we could not find any other LM that covers the Bodo language. The lack of available text corpora is one of the main factors behind this. Consequently, BodoBERT is the first LM that has ever been trained on Bodo text. Therefore, for our comparative experiment, we consider pretrained language models for Hindi, as it shares the same written script, Devanagari, with Bodo.

Table 4 provides the details of the LMs that are used for training the Bodo POS tagging model. A brief description of these models is given below.

Table 4. Training data size of different language models

FastText embedding (Bojanowski et al. 2017) uses a subword embedding technique and the skip-gram method. It is trained on character $n$-grams of words to capture the internal structure of a word. Therefore, it can produce word vectors for out-of-vocabulary (OOV) words by using the subword information from the previously trained model.
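To make the character n-gram idea concrete, the sketch below extracts the n-grams of a word after adding boundary markers, in the style described by Bojanowski et al. (2017). The range of 3 to 6 characters is the default reported in that paper; the function name is hypothetical, and the sketch works on Unicode code points, so for Devanagari text combining marks count as separate characters.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Return the character n-grams of `word`, with '<' and '>' as boundaries."""
    marked = f"<{word}>"  # boundary markers distinguish prefixes and suffixes
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(marked) - n + 1):
            grams.append(marked[i:i + n])
    return grams

print(char_ngrams("where", 3, 3))  # ['<wh', 'whe', 'her', 'ere', 're>']
```

An OOV word can then be represented by combining the vectors of its n-grams, which is what allows the model to embed words it has never seen.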

Byte-Pair embeddings (Heinzerling and Strube 2018) are precomputed at the subword level. They embed a word by splitting it into subwords or character sequences and looking up the precomputed subword embeddings. They can therefore handle unknown words and infer meaning from them.

Flair embedding (Akbik, Blythe, and Vollgraf 2018) is a type of character-level embedding. It is a contextualized word embedding that captures word semantics in context, meaning that a word has different vector representations under different contexts. The embedding method is based on recent advances in neural language modeling (Sutskever, Vinyals, and Le 2014; Karpathy, Johnson, and Fei-Fei 2015) that allow sentences to be modeled as distributions over sequences of characters instead of words (Sutskever, Martens, and Hinton 2011; Kim et al. 2016).

Multilingual Representations for Indian Languages (MuRIL) (Khanuja et al. 2021) is a multilingual language model based on the BERT architecture. It is pretrained on 17 Indian languages.

XLM-R (Conneau et al. 2020) uses self-supervised training techniques in cross-lingual understanding, a task in which a model is trained in one language and then used with other languages without additional training data.

IndicBERT (Kakwani et al. 2020) is based on Fasttext-based word embeddings and ALBERT-based language models for 11 languages, trained on the IndicCorp dataset.

4.4. Experiment on POS models

In this section, we describe the experiments conducted to develop POS tagging models using different LMs, including BodoBERT. The experiments can be divided into three phases. In the first phase, we employ three different sequence labeling architectures that have been shown to perform well in other languages: fine-tuning the BodoBERT model for POS tagging, CRF (Lafferty, McCallum, and Pereira 2001), and BiLSTM-CRF (Hochreiter and Schmidhuber 1997; Rei, Crichton, and Pyysalo 2016). The pretrained BodoBERT is used to obtain the embedding vectors of the words in the sentences during training with the CRF and BiLSTM-CRF (Huang, Xu, and Yu 2015) architectures. It is observed that the BiLSTM-CRF-based model outperforms the other two models.

Therefore, the BiLSTM-CRF architecture is used in the second phase to develop POS tagging models employing different language models, including BodoBERT.
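The role of the CRF layer in the CRF and BiLSTM-CRF taggers can be illustrated with a small Viterbi decoding sketch: rather than choosing the best tag for each word independently, a linear-chain CRF picks the tag sequence with the highest combined emission and transition score. All scores below are made-up illustrative numbers, not values from the trained models; in a BiLSTM-CRF tagger the emission scores would come from the BiLSTM and the transition scores would be learned.

```python
def viterbi(emissions, transitions, tags):
    """Return the highest-scoring tag sequence for a linear-chain model.

    emissions: list over tokens of {tag: score}
    transitions: {(previous_tag, current_tag): score}
    """
    # best[t][tag] = best score of any path that ends in `tag` at position t
    best = [{tag: emissions[0][tag] for tag in tags}]
    back = []  # back[t-1][tag] = best previous tag for `tag` at position t
    for t in range(1, len(emissions)):
        scores, pointers = {}, {}
        for cur in tags:
            prev = max(tags, key=lambda p: best[-1][p] + transitions[(p, cur)])
            scores[cur] = best[-1][prev] + transitions[(prev, cur)] + emissions[t][cur]
            pointers[cur] = prev
        best.append(scores)
        back.append(pointers)
    # Follow the back-pointers from the best final tag.
    path = [max(tags, key=lambda tag: best[-1][tag])]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]

tags = ["N_NN", "V_VM"]
emissions = [
    {"N_NN": 2.0, "V_VM": 0.5},
    {"N_NN": 1.0, "V_VM": 1.2},
    {"N_NN": 0.3, "V_VM": 2.0},
]
transitions = {
    ("N_NN", "N_NN"): 0.5, ("N_NN", "V_VM"): 1.0,
    ("V_VM", "N_NN"): 0.2, ("V_VM", "V_VM"): -1.0,
}
print(viterbi(emissions, transitions, tags))  # ['N_NN', 'N_NN', 'V_VM']
```

In this toy example, choosing the best tag per token would label the second token V_VM, but the penalty on the V_VM to V_VM transition makes the sequence N_NN, N_NN, V_VM score higher overall.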

Apart from Bodo, we also conducted the same experiment on Assamese, another low-resource language. Existing Assamese pretrained LMs are used for the experiment. To train the Assamese POS model, we acquired a dataset (ILCI-II, 2020a) from TDIL, which was tagged manually by language experts. The POS dataset contains 35k sentences from different domains and 404k words. The Assamese annotated dataset also follows the BIS tagset and contains 41 tags in 11 top-level categories.

In the third phase, we further experiment with the Stacked embedding method to develop the Bodo POS tagging model. The Stacked method allows us to learn how well one embedding performs when combined with others during the training process. The top-performing LM in the second phase (BodoBERT) is selected for further training. In the Stacked method, each of the other LMs is combined with BodoBERT to obtain the final word embedding vector.
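A stacked embedding can be thought of as the concatenation of the vectors that each embedding produces for the same token, which is how stacked embeddings are commonly implemented in sequence labeling frameworks. The sketch below uses two toy stand-in embedders: the 768-dimensional size matches the BodoBERT hidden size given in Section 3.2, while the 100-dimensional second embedding and all function names are hypothetical.

```python
def stacked_embed(token, embedders):
    """Concatenate the vectors that each embedder produces for `token`."""
    vector = []
    for embed in embedders:
        vector.extend(embed(token))
    return vector

# Toy stand-ins for real embedding models (assumptions for illustration only).
def toy_bodobert(token):
    return [0.1] * 768   # hidden size of BodoBERT

def toy_subword(token):
    return [0.2] * 100   # hypothetical dimension of a second embedding

vector = stacked_embed("w1", [toy_bodobert, toy_subword])
print(len(vector))  # 868
```

The downstream BiLSTM-CRF then receives the longer concatenated vector as the representation of each word.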

Compared to the best individual method, the stacked embedding approach using BiLSTM-CRF improves the performance score for POS tagging by around 2–7%. The model with BodoBERT $+$ BytePairEmbedding attains the highest F1 score of 0.8041. The results of the experiment are listed in Table 5. The POS model architecture is illustrated in Figure 1. In all experiments, the Flair framework is used to train the sequence tagging model.

Table 5. POS tagging performance in the stacked method using BiLSTM-CRF architecture

Figure 1. Block diagram of POS tagging model.

We explored different hyperparameters to optimize the configuration under our hardware constraints. After that, we use the same set of hyperparameters in all three experiments. We use a fixed mini-batch size of 32 to account for memory constraints. Training is stopped early if there is no improvement in accuracy on the validation data; the learning rate annealing factor is used to trigger early stopping.
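One common way to couple learning rate annealing with early stopping, sketched below, is to shrink the learning rate whenever the validation score has not improved for a number of epochs and to stop once the learning rate falls below a minimum. This is an assumption about how the mechanism works in this setting; the initial learning rate, annealing factor, patience, and minimum learning rate shown are hypothetical values, not the ones used in the paper.

```python
def train_with_annealing(dev_scores, lr=0.1, anneal_factor=0.5,
                         patience=3, min_lr=1e-4):
    """Simulate plateau-based annealing over a list of per-epoch dev scores.

    Returns a list of (epoch, learning_rate) pairs for the epochs that ran.
    """
    best, bad_epochs = float("-inf"), 0
    history = []
    for epoch, score in enumerate(dev_scores, start=1):
        if score > best:
            best, bad_epochs = score, 0
        else:
            bad_epochs += 1
        if bad_epochs > patience:      # plateau: shrink the learning rate
            lr *= anneal_factor
            bad_epochs = 0
        history.append((epoch, lr))
        if lr < min_lr:                # learning rate too small: stop early
            break
    return history

scores = [0.70, 0.75] + [0.75] * 8
print(train_with_annealing(scores, min_lr=0.06))
```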

5. Result and analysis

In this section, we present an analysis of our experiment and the performance of taggers. We evaluated the performance of the three sequence tagging methods: CRF, Fine-tuning of BodoBERT, and BiLSTM-CRF by measuring the micro F1 score. The weighted average of the tagging performance is also reported.
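The two summary metrics can be sketched as follows. Micro F1 pools true positives, false positives, and false negatives over all tags, while the weighted average takes the per-tag F1 scores and weights each by the number of gold occurrences of that tag (its support). The function name and the toy gold/predicted tags are illustrative assumptions.

```python
from collections import Counter

def f1_scores(gold, pred):
    """Return (micro F1, support-weighted average F1) for two tag lists."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1   # predicted tag p was wrong
            fn[g] += 1   # gold tag g was missed

    def f1(t, f_pos, f_neg):
        denom = 2 * t + f_pos + f_neg
        return 2 * t / denom if denom else 0.0

    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    support = Counter(gold)
    weighted = sum(
        support[tag] * f1(tp[tag], fp[tag], fn[tag]) for tag in support
    ) / len(gold)
    return micro, weighted

gold = ["N_NN", "N_NN", "N_NN", "V_VM", "V_VM", "JJ"]
pred = ["N_NN", "N_NN", "V_VM", "V_VM", "V_VM", "N_NN"]
micro, weighted = f1_scores(gold, pred)
print(round(micro, 4), round(weighted, 4))  # 0.6667 0.6
```

When every token receives exactly one tag, as in POS tagging, micro F1 coincides with token-level accuracy, whereas the weighted average is pulled down by tags that are frequently confused.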

Table 6 gives an overview of the tagging performance, in F1 score, of the three models. We observe that the BiLSTM-CRF-based model with BodoBERT performs best, with an F1 score of 0.7949 and a weighted average F1 score of 0.7898. In contrast, the fine-tuning-based and CRF-based tagging models achieve F1 scores of 0.7754 and 0.7583, respectively. The performance comparison of the different LMs is reported in Table 7. We observe that BodoBERT outperforms all the other pretrained models. The Flair Embedding model achieves almost similar tagging performance, with an F1 score of 0.7885.

We also cover Assamese, a similar low-resource language spoken in the same region. The same experimental setup is used for the experiment. This gives us an overview of how the models work on a similar language with an annotated dataset of almost the same size. The highest F1 score achieved for Assamese is 0.7293, using IndicBERT. This is approximately 7 points (0.07 in F1) lower than the highest score of the Bodo POS tagging model. The difference could be due to the different number of tags in the tagsets used for Assamese (41 tags) and Bodo (34 tags).

Data augmentation experiment: The overall best-performing model (BodoBERT $+$ BytePairEmbedding) is employed to annotate a new set of sentences from another corpus. The corpus is taken from the Bodo corpus created by Narzary et al. (2022). The annotated dataset is further manually corrected. A new dataset of 10k has been added to the existing training dataset. To evaluate the performance of the model when the training dataset is increased, the same architecture (BodoBERT $+$ BytePairEmbedding in BiLSTM-CRF) is employed in further training the POS model with the same set of parameters. The test and dev sets are kept the same. It is observed that the model performance is enhanced by approximately 1–2%. The model achieves an F1 score of 0.8494.

Table 6. Performance of POS tagging model in different methods

Table 7. The F1 score of different language models in POS tagging task on Bodo and Assamese language

The tag-wise precision, recall, and F1 scores, along with the support and the consolidated micro, macro, and weighted scores, are reported in Table 8. The learning curve of training and validation for the best-performing model is shown in Figure 2.

Table 8. Tag-wise performance of best-performing Bodo POS tagging model

Figure 2. Learning curve of BodoBERT $+$ BytePairEmbeddings based POS model.

The learning curve implies that the dataset needs improvement, as the training dataset does not provide sufficient information to learn the sequence tagging problem relative to the validation dataset used to evaluate it. Nevertheless, this is the first POS tagging model for the Bodo language, and it can serve as the de facto baseline for Bodo POS tagging.

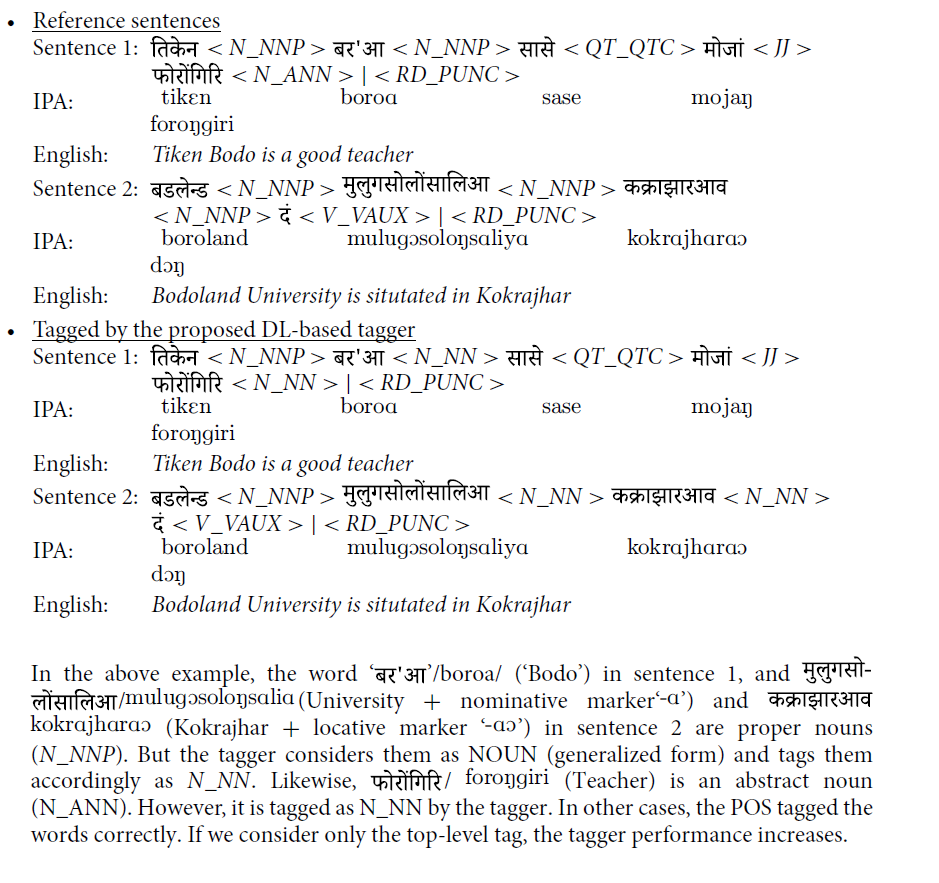

The reference sentences and their corresponding tagged results from the proposed tagger are given below.

The reported highest score is arguably lower than the state-of-the-art score on resource-rich languages. This could be due to a variety of factors.

1. The size of the annotated corpus may not be adequate.
2. The training data size of BodoBERT may not be sufficient to capture the linguistic features of the language.
3. The LM BodoBERT may need more improvement in capturing the linguistic characteristics of the Bodo language.
4. The assignment of the tags to the words in the dataset may need some correction.

Observation from confusion matrix: The confusion matrix of the top-performing Bodo POS tagging model is reported in Figure 3. The confusion matrix covers the top twelve POS tags in terms of frequency counts in the test set. The diagonal entries of the matrix represent the correctly predicted tags, and the off-diagonal entries represent incorrectly classified tags.

Figure 3. Confusion matrix of BodoBERT $+$ BytePairEmbeddings POS model.
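A confusion matrix restricted to the most frequent tags, as described above, can be computed with a short sketch like the following. Rows are gold tags and columns are predicted tags, so the diagonal counts correct predictions. The function name and the toy tag lists are assumptions for illustration; pairs involving a tag outside the top-k set are simply skipped.

```python
from collections import Counter

def confusion_matrix(gold, pred, top_k=12):
    """Return (tags, matrix) over the top_k most frequent gold tags."""
    top_tags = [tag for tag, _ in Counter(gold).most_common(top_k)]
    index = {tag: i for i, tag in enumerate(top_tags)}
    matrix = [[0] * len(top_tags) for _ in top_tags]
    for g, p in zip(gold, pred):
        if g in index and p in index:   # ignore tags outside the top_k set
            matrix[index[g]][index[p]] += 1
    return top_tags, matrix

gold = ["N_NN", "N_NN", "N_NN", "V_VM", "V_VM", "JJ"]
pred = ["N_NN", "N_NN", "V_VM", "V_VM", "V_VM", "N_NN"]
tags, matrix = confusion_matrix(gold, pred, top_k=2)
print(tags)    # ['N_NN', 'V_VM']
print(matrix)  # [[2, 1], [0, 2]]
```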

It is observed that common errors occur in Noun (N_NN), Proper Noun (N_NNP), Locative Noun (N_NST), Verb (V_VM), Adjective (JJ), and Adverb (RB) in the taggers. We can draw the following observations from the confusion matrix.

- The most common error in the dataset is intra-class confusion, i.e., confusing Noun (N_NN) with Proper Noun (N_NNP) and Noun (N_NN) with Locative Noun (N_NST). This might have occurred due to the similarity in the attached features of nouns, proper nouns, and locative nouns.
- In some instances, the tagger incorrectly predicts a noun when its class type changes to an adjective in a sentence. This happens when the word describes another noun, e.g., 'Bodo language'; in this case, although Bodo is a proper noun, it is being used to describe the noun 'language'. Therefore, it becomes an adjective.
- Sometimes, it is difficult to determine the correct tag: JJ or N_NN, V_VM or N_NN, and RB or N_NN. As a result, the tagger often incorrectly assigns N_NN in place of JJ, V_VM, and RB.
- Furthermore, Bodo has no orthographic convention for distinguishing proper nouns, such as the capitalization used in English. Therefore, it is difficult for a machine to differentiate a proper noun from other nouns.

6. Conclusion

In this work, we presented a LM, BodoBERT, for the Bodo language based on BERT architecture.

We developed a POS tagging model employing three distinct sequence tagging architectures using BodoBERT. Applying the BodoBERT LM to POS tagging yielded two outcomes: first, an evaluation of the pretrained BodoBERT's performance on a downstream task in different architectures; second, a model for POS tagging in the Bodo language. We also compared the performance of BodoBERT to that of other LMs using two methods: Individual and Stacked. The stacked method improves the performance of the POS tagging model. In our experiment, the model that uses BodoBERT and BytePairEmbeddings together in the stacked method performs best.

Despite the fact that the Bodo POS tagger is unable to attain state-of-the-art accuracy in comparison to resource-rich languages, we feel that our POS model can serve as a baseline for future studies. Our contributions may be useful to the research community in terms of using the LM BodoBERT and the POS tagging model for various downstream tasks.