Trials and developmental research

Substantial trial funding is a major research investment and should maximise its scientific output. The first priority is naturally to test the effectiveness of interventions, but we argue that, when appropriately designed, trials in developmental psychiatry can and should also be used to illuminate basic science. Academic and funding traditions can sometimes pull basic science and intervention research apart; this use of trials offers a potentially more integrated approach to clinical research, giving added value to expensive trials.

Developmental intervention

The classic view of treatment – as an episode of care for a discrete disorder, leading to reversal or removal of pathology – rarely applies to developmental disorders. Reference Green1 Treatments here are rarely definitive or short term. They often need to be phased over a much longer period and aim to alter the developmental course of a disorder, either its primary progression (insofar as this is tractable) or its secondary sequelae. Research into the many and varying influences on the course of disorders has led such interventions to become increasingly complex and multimodal. Such an intervention can be conceptualised as a kind of 'developmental perturbation' in the longitudinal course of a complex disorder.

New trial designs

Testing such interventions poses significant challenges for trial design, 2 but also creates opportunities. For instance, so-called 'hybrid' clinical trial designs Reference Howe, Reiss and Yuh3 judiciously add elements from longitudinal association studies to the classic randomised controlled trial. The experimental element of the trial allows hypotheses regarding proximal mediators or moderators of treatment effect to be tested; the longitudinal element adds repeated-measures analysis of proposed risk and protective factors, so that the two arms of the trial in effect become parallel longitudinal cohort studies. In principle, such hybrid trials can be used to study questions as diverse as causal effects in complex disorders, gene–environment interactions and the timing of the effects of risk or protective factors in development.

The idea of combining the best elements of randomised intervention trials with the statistical and econometric methods characteristic of observational studies has also been advocated in the social sciences. Bloom Reference Bloom4 argues that by combining the two approaches investigators 'can capitalise on the strengths of each approach to mitigate the weaknesses of the other'. Building on ideas first proposed by Boruch, he advocates methods for estimating the effects of treatment actually received from randomised trials in which not everyone receives the treatment they are offered.
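As a rough illustration of estimating the effect of treatment received, the Python sketch below applies one simple method of this kind, often called Bloom's 'no-show' adjustment: the intention-to-treat effect is divided by the difference in treatment uptake between the arms. It assumes that nobody in the control arm receives treatment, that being offered treatment affects outcome only through receiving it and, for simplicity, that the treatment effect is the same for everyone. The function name `bloom_estimate` and all simulated numbers are hypothetical.

```python
import numpy as np


def bloom_estimate(z, d, y):
    """Effect of treatment received, using Bloom's 'no-show' adjustment.

    z: 1 if randomised to be offered treatment, 0 if randomised to control
    d: 1 if treatment was actually received (assumed 0 for all controls)
    y: outcome
    """
    z, d, y = (np.asarray(a, dtype=float) for a in (z, d, y))
    itt = y[z == 1].mean() - y[z == 0].mean()      # intention-to-treat effect
    uptake = d[z == 1].mean() - d[z == 0].mean()   # difference in receipt between arms
    return itt / uptake


# Hypothetical simulated trial: about half of those offered treatment take it up,
# and uptake depends on an unmeasured prognostic variable u.
rng = np.random.default_rng(1)
n = 20_000
z = rng.integers(0, 2, n)                    # randomised offer
u = rng.normal(size=n)                       # unmeasured variable linked to uptake
d = z * (u + rng.normal(size=n) > 0)         # no treatment without an offer
y = 2.0 * d + 1.5 * u + rng.normal(size=n)   # simulated effect of receipt is 2.0

naive = y[d == 1].mean() - y[d == 0].mean()  # 'as treated' comparison
print(round(bloom_estimate(z, d, y), 2), round(naive, 2))
```

The naive 'as treated' comparison is inflated because those who take up treatment tend to have higher values of `u`; the adjusted estimate should lie close to the simulated effect of 2.0.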

Causal inference in analysis

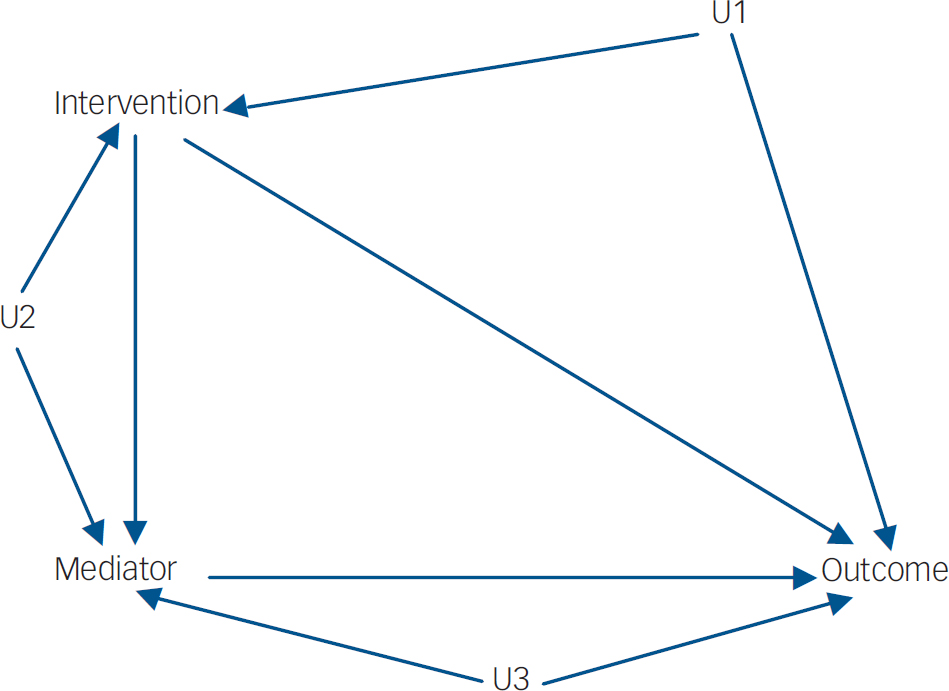

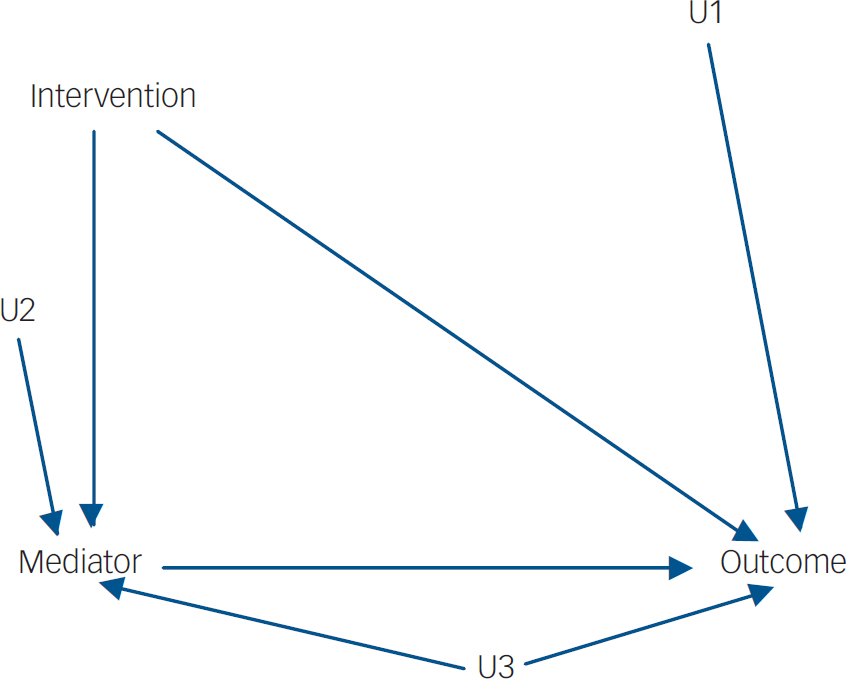

Since the late 1980s there have been exciting developments in both statistics (particularly medical statistics) and econometrics in the use of so-called 'causal inference' methods to model the influence of post-randomisation covariates (levels of treatment adherence, surrogate endpoints and other potential mediators) on final outcome. Reference Angrist, Imbens and Rubin5,Reference Dunn and Bentall6 When the possible causal influence of an intervention on outcome is assessed from observational data, there is always the possibility of an unmeasured variable (U1, say; Figs 1 and 2) that is associated with receipt of the intervention and also has a causal effect on the outcome. The variable U1 is known as a hidden or unmeasured confounder in the epidemiological literature and as a hidden selection effect in econometrics. In the presence of U1, straightforward methods of estimating the effect of the intervention on outcome (through some form of regression model, for instance) will give biased results. When a potential mediator is involved the situation is considerably more complex: there may be hidden confounding between intervention and mediator (U2) and also between mediator and outcome (U3). The great strength of randomisation is that it breaks the link between these hidden confounders and receipt of the intervention, removing confounding of the intervention–outcome relationship (making valid intention-to-treat estimates possible) and of the intervention–mediator relationship. Hence neither U1 nor U2 is a problem any longer. The effects of U3 (what Howe et al Reference Howe, Reiss and Yuh3 call mediated confounding), however, remain. It is the possible (indeed very likely) existence of U3 that is the major challenge to valid inference from trials, including inferences regarding developmental causality. Typically U3 is implicitly assumed to be absent, Reference Kraemer, Fairburn and Agras7 and the vast majority of investigators using methods such as those first introduced by Baron & Kenny Reference Baron and Kenny8 seem to be blissfully unaware of this threat to the validity of their results.
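The threat posed by U3 can be shown with a small simulation. This is a sketch under assumed linear effects with made-up coefficients, and the helper `ols` is hypothetical. Randomisation keeps the intention-to-treat estimate valid, but a Baron & Kenny-style regression of outcome on both randomised arm and mediator is biased by U3: with these values it converges to a direct effect of about 0 and a mediator effect of about 1.5, suggesting complete mediation when the simulated direct effect is 0.5 and the simulated mediator effect is 1.0.

```python
import numpy as np


def ols(y, *covariates):
    """Ordinary least squares; returns [intercept, coefficient, ...]."""
    X = np.column_stack([np.ones_like(y), *covariates])
    return np.linalg.lstsq(X, y, rcond=None)[0]


rng = np.random.default_rng(2)
n = 50_000
z = rng.integers(0, 2, n).astype(float)   # randomised arm
u3 = rng.normal(size=n)                   # unmeasured mediator-outcome confounder (U3)
m = 1.0 * z + u3 + rng.normal(size=n)     # mediator: simulated effect of z on m is 1.0
y = 0.5 * z + 1.0 * m + 1.0 * u3 + rng.normal(size=n)  # direct effect 0.5, m -> y 1.0

itt = ols(y, z)[1]                # total (ITT) effect, valid: 0.5 + 1.0 * 1.0 = 1.5
_, direct, m_to_y = ols(y, z, m)  # Baron & Kenny-style regression, biased by u3
print(round(itt, 2), round(direct, 2), round(m_to_y, 2))
```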

Fig. 1 Without randomisation (observational study). The confounders (U1, U2 and U3) may be correlated.

Fig. 2 With randomisation (randomised trial). U1 and U2 are no longer confounders.

The key to addressing this threat to validity comes from recent statistical developments that make it possible to evaluate both the direct and the indirect (mediated) effects of a randomised intervention on outcome in the presence of mediated confounding. The solution involves finding baseline variables (called instrumental variables or instruments) that have a strong influence on the mediator (and hence on the outcome), that are not themselves associated with U3, and that can be assumed a priori to influence outcome only via the mediator (i.e. complete mediation). Further technical details can be found elsewhere. Reference Angrist, Imbens and Rubin5,Reference Dunn and Bentall6
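A minimal sketch of this idea in Python follows. It assumes linear effects and a single baseline instrument `x` that affects the mediator, is unrelated to U3 and has no direct effect on outcome, and it uses two-stage least squares as the estimation method (one common choice, not necessarily the one used in the works cited). The function name and all simulated values are hypothetical.

```python
import numpy as np


def two_stage_least_squares(y, exog, endog, instruments):
    """2SLS point estimates: [intercept, exogenous coefficient(s), endogenous coefficient].

    Stage 1 regresses the endogenous mediator on the exogenous covariates and the
    instruments; stage 2 regresses y on the exogenous covariates and the stage-1
    fitted values.
    """
    ones = np.ones_like(y)
    stage1 = np.column_stack([ones, exog, instruments])
    endog_hat = stage1 @ np.linalg.lstsq(stage1, endog, rcond=None)[0]
    stage2 = np.column_stack([ones, exog, endog_hat])
    return np.linalg.lstsq(stage2, y, rcond=None)[0]


rng = np.random.default_rng(3)
n = 50_000
z = rng.integers(0, 2, n).astype(float)   # randomised arm
x = rng.normal(size=n)                    # baseline instrument, independent of u3
u3 = rng.normal(size=n)                   # unmeasured mediator-outcome confounder (U3)
m = 1.0 * z + 0.8 * x + u3 + rng.normal(size=n)
y = 0.5 * z + 1.0 * m + u3 + rng.normal(size=n)  # direct effect 0.5, m -> y 1.0; x acts only via m

_, direct, m_to_y = two_stage_least_squares(y, exog=z, endog=m, instruments=x)
print(round(direct, 2), round(m_to_y, 2))
```

Only the point estimates are meaningful here: the ordinary standard errors from the second stage ignore that the mediator was itself estimated, so a dedicated instrumental-variable routine would be needed for inference.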

Linking methodological developments with clinical questions

The challenge for designers of clinical trials is to identify real-world clinical analogues of these instrumental variables within a trial design, which can then be used to test relevant aspects of both treatment process and developmental theory simultaneously. For instance, parent-mediated treatments of child disorder are common in developmental psychiatry. The final aim of such interventions is to improve child functioning, but the immediate focus is on working with the parent to improve parent–child interaction. This parent–child interaction (an aspect of the child's non-shared environment) is the hypothesised mediator of change in the primary target child outcome (say, behaviour disorder). However, the interaction is also likely to be influenced by pre-treatment parental variables such as personality or social functioning. In some disorders such parental variables may have a direct effect on child outcome through shared genetic effects, but in the majority of cases they will affect the result of treatment (child functioning) largely or solely through their effect on the parent–child interaction. The mediation effect of the parent–child interaction is then said to be moderated by the pre-treatment parental trait. Measurement of this parental variable can therefore serve two simultaneous and related purposes: first, as a real-world factor in the child outcome of treatment; and second, as a variable that may fulfil the statistical conditions for use as an instrumental variable to deal with U3, as described above. Including such variables allows the trial analysis to define the causal roots of a treatment effect more precisely: the instrumental variable (in this case parental functioning) is not simply a covariate against which the rest of the analysis is undertaken, but is entered into a more complex causal model.
Few trials of parent-mediated treatments report measurement of relevant parental variables, even though these are theoretically relevant to causal effects; however, a recent trial that did measure them Reference Kazdin and Whitley9 found that they helped to explain variation in treatment effect. Developmental psychopathology research has been hampered by difficulties in untangling the causal relationships between parental functioning, parent–child interaction and child functioning. Such designs could help address these causal questions in a powerful and novel way.
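The parent-mediated treatment example can be sketched in the same spirit. Here a hypothetical baseline parental functioning score moderates the effect of treatment on parent–child interaction, and the treatment-by-trait product term serves as the instrument, while the trait itself stays in the model as a covariate so that it may have a direct effect on child outcome (for instance through shared genes). The sketch assumes linear effects, a parental trait unrelated to U3 and no treatment-by-trait term in the child outcome model; variable names and values are illustrative only. With exactly one instrument per regressor, the instrumental-variable estimate reduces to solving W'Xb = W'y.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 50_000
z = rng.integers(0, 2, n).astype(float)  # randomised to parent-mediated treatment or control
parent = rng.normal(size=n)              # hypothetical baseline parental functioning score
u3 = rng.normal(size=n)                  # unmeasured confounder of interaction and child outcome
e_m = rng.normal(size=n)
e_y = rng.normal(size=n)

# Mediator (parent-child interaction): the treatment effect depends on the parental trait
interaction = 0.5 * z + 0.3 * parent + 0.8 * z * parent + u3 + e_m
# Child outcome: the trait may act directly (e.g. via shared genes), but the
# treatment-by-trait product affects the child only through the interaction
child = 0.3 * z + 0.4 * parent + 1.0 * interaction + u3 + e_y

ones = np.ones(n)
X = np.column_stack([ones, z, parent, interaction])  # regressors; interaction is endogenous
W = np.column_stack([ones, z, parent, z * parent])   # instruments: product term replaces the mediator
coef = np.linalg.solve(W.T @ X, W.T @ child)         # just-identified IV estimate
_, direct, parent_direct, mediated = coef
print(np.round(coef[1:], 2))
```

Keeping the parental trait as a covariate is the design choice that lets it act directly on the child; the identifying assumption moves to the product term, which must have no direct effect on child outcome.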

A second area in which this approach has been applied productively is the investigation of the effects of process variables such as therapeutic alliance. Reference Green10 A worked example of this kind of analysis of therapeutic alliance in a trial is set out in Dunn & Bentall. Reference Dunn and Bentall6

Implications for clinical trials

Key criteria for the kind of trial in which developmental questions can be tested were identified by Howe et al: Reference Howe, Reiss and Yuh3

(a) the intervention must be theory-based and clearly constructed;

(b) the proximal target of the intervention should be a variable known from developmental theory to be a likely candidate for an important developmental process worth testing, which implies that the developmental theory behind a particular research question must be mature;

(c) the intervention must have been shown in pilot studies to be able to change this intermediate mediating variable as well as the outcome; and

(d) sampling for the trial needs to be consistent with the theories to be tested in the developmental aspect of the trial.

It is self-evident that funding for such a design must be adequate and that there needs to be active collaboration between designers of clinical trials, statisticians, and developmental scientists in the design phase.

Concluding remarks

There is potential synergy between methodological developments in causal analysis, the need for trials that better model the process and outcome of complex interventions, 2 and basic science research in developmental psychiatry. Clinical decision-making and scientific studies alike rely on implicit procedures for establishing causal relationships. New causal analytic methods in trials may lead to a better understanding of how interventions have their effect, while simultaneously allowing basic science hypotheses to be tested. These considerations could inform future studies of developmental interventions and suggest that funding for clinical trials should not necessarily be considered separately from funding for basic science research.

Acknowledgements

G.D. is a member of the UK Mental Health Research Network Methodology Research Group. Methodological research funding for both G.D. and J.G. is provided by the UK Medical Research Council (grant numbers G0600555/G0401546).
