The increasing cost of research and pressure on universities to raise their profiles may be contributing to the large number of observational studies reported in mass media. Headlines blaming individual foods or nutrients for chronic diseases or, in contrast, implying that eating a particular food could prolong life or drastically cut disease risk, seem all too common but can be misleading, in part due to the way they are communicated by scientists(Reference Woloshin, Schwartz and Casella1).

A retrospective analysis(Reference Sumner, Vivian-Griffiths and Boivin2) which matched academic press releases from twenty leading UK universities to their associated peer-reviewed papers and news stories found that misrepresentation of research by academics contributed towards misleading media stories. Out of 462 press releases, 40 % contained exaggerated advice, 33 % implied causation beyond that reported in the actual study, while 36 % overstated the potential human benefits from animal studies. Not unexpectedly, exaggeration within press releases drove exaggeration within media stories. However, it was notable that hyperbole did not appear to improve the uptake of news, suggesting this is an unnecessary reputational risk for academics.

A worrying finding from this media analysis was that press releases which inflated associations from observational studies into ‘cause and effect’ scenarios were twenty times more likely to be repeated in the media, potentially leading to public misconceptions about the benefits and risks of particular foods or nutrients. It is well known that data dredging or using observational datasets to answer research questions for which they were not designed can lead to statistically significant findings that may be spurious or clinically irrelevant(Reference Ioannidis3). However, the media also bear some responsibility as it has been observed that journalists underreport findings from randomised trials, emphasise bad news from observational studies, and ignore research from developing countries(Reference Bartlett, Sterne and Egger4).

The unfortunate consequence is that misleading findings from observational studies could drive changes to dietary guidelines, or influence policy and future research plans, ending in erroneous conclusions being amplified by meta-analyses(Reference Satija, Yu and Willett5). Some academic commentators(Reference Ioannidis3,Reference Young and Karr6) have noted that randomised controlled trials (RCTs) set up to test the conclusions from prospective cohort studies rarely corroborate the latter's findings, and can even find conflicting results.

Perhaps, the time has come to establish exactly what the role of observational studies should be in the armoury of nutrition evidence, as well as debating how guidance can be tightened to improve methodological design, limitation of bias, statistical planning, reporting and peer review. This was the topic of a Nutrition Society member-led meeting which sought to examine the strengths and limitations of observational studies and the role of systematic reviews in helping to limit bias and summarise high-quality evidence. The appropriate use of nutrition epidemiology for dietary guidelines, public policy and media dissemination was also explored. The aim of this report is to provide a summary of the meeting and offer best practice recommendations on planning and conducting nutritional epidemiological studies, as well as communicating their findings in mass media.

What's wrong with epidemiology and how can we fix it?

The first speaker, Dr Darren Greenwood, a medical statistician from the University of Leeds, summarised different ways that the results from observational studies could be misused. He proposed that inconsistency is a feature of nutrition science, as it is with other health research, but unfortunately this can erode public trust leading to claims that diet experts are ‘always changing their minds’.

Inconsistent media headlines about diet and nutrition, often based on inconsistent research results, are common. According to a survey of 1576 researchers published in Nature (Reference Baker7), more than 70 % of scientists across different disciplines have tried and failed to reproduce another researcher's experiments, whereas more than half have failed to reproduce their own experiments. However, in nutrition science we should not be surprised at this.

The main reason for inconsistency between observational studies in nutrition is chance. Most studies are based on a specific sample of people from a population of interest and are not universally representative. Hence, there will be some uncertainty and the findings will not relate to everyone. Indeed, there is often more uncertainty than anticipated – rather like aiming darts at a bullseye but not hitting it every time, despite the darts being scattered closely around the centre.

In research, unlike the bullseye on a dart board, it is not known where the correct answer lies. All that can be observed is where the darts land. One mitigation, however, is to examine the confidence intervals (CIs) around estimates from a study to reveal the level of uncertainty. This provides a good feel for how much those darts are scattering, and there is often a lot of scatter – indicated by wider CIs – so this uncertainty should be considered when interpreting and communicating results.
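To make the link between sample size and scatter concrete, the following is a minimal sketch that computes a 95 % CI for a relative risk using the standard log-normal approximation. The counts are hypothetical and chosen only for illustration; they do not come from any study cited here.

```python
import math

def relative_risk_ci(cases_exposed, n_exposed, cases_unexposed, n_unexposed, z=1.96):
    """Return (rr, lower, upper) using the log-normal approximation."""
    rr = (cases_exposed / n_exposed) / (cases_unexposed / n_unexposed)
    # Standard error of log(RR) for cumulative-incidence data
    se_log_rr = math.sqrt(
        1 / cases_exposed - 1 / n_exposed
        + 1 / cases_unexposed - 1 / n_unexposed
    )
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper

# Hypothetical cohorts with identical underlying risks (15 % v. 10 %)
small = relative_risk_ci(30, 200, 20, 200)
large = relative_risk_ci(3000, 20000, 2000, 20000)

for label, (rr, lo, hi) in (("small", small), ("large", large)):
    print(f"{label}: RR = {rr:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

Both cohorts give the same point estimate, but the smaller cohort's interval spans 1, so it cannot distinguish an increased risk from no association, whereas the larger cohort's interval is narrow. Neither interval says anything about bias.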

Other sources of inconsistency between observational studies relate to the range of methods and exposures used. These include different dietary assessment tools, incompatible food definitions, different ranges of intake, different inclusion/exclusion criteria, different comparators, varied lengths of follow-up and whether disease outcomes are self-reported or independently verified. An example is coffee and risk of pancreatic cancer which was investigated by several prospective cohort studies and assessed by the World Cancer Research Fund/American Institute for Cancer Research in 2018(8). Even with similar intakes of coffee (more than three, or more than four cups daily), relative risks ranged from 0⋅37 to 2⋅87 creating a confusing picture of risk/benefit.

A specific challenge for nutritional epidemiology is estimating habitual diet which is notoriously hard to measure and may introduce potential biases that can distort results. As well as ensuring that participants are able to accurately recall intakes from the past, and adding quantification to dietary assessment tools (e.g. food weights or pictures), we also need to take into account which foods typically co-exist (e.g. eggs and bacon, or toast and butter) and how they may interact. Furthermore, an effect related to a decreased intake of a known food may, in fact, relate to the increased intake of an unknown food used in substitution (e.g. lower meat and higher pulses).

These sources of variation are causes of measurement error. If the error is entirely random, this can end up diluting the results, but it is rarely random. There is often some systematic nature to it, resulting in distortion of results in either direction, e.g. underreporting of energy intake or overreporting of foods perceived to be healthier.
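The contrast between random and systematic error can be illustrated with a small simulation. This is a sketch under stated assumptions: the true diet–outcome slope of 0.5, the error variances and the 30 % proportional underreporting are all hypothetical values chosen for illustration.

```python
import random

def slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx

rng = random.Random(42)
n = 20000
true_slope = 0.5  # hypothetical true association

true_intake = [rng.gauss(0, 1) for _ in range(n)]
outcome = [true_slope * t + rng.gauss(0, 1) for t in true_intake]

# Random error: reported intake = true intake + independent noise
noisy_intake = [t + rng.gauss(0, 1) for t in true_intake]

# Systematic error: everyone reports 70 % of what they actually ate
underreported_intake = [0.7 * t for t in true_intake]

slope_true = slope(true_intake, outcome)
slope_noisy = slope(noisy_intake, outcome)
slope_under = slope(underreported_intake, outcome)

print(f"true intake:       {slope_true:.2f}")
print(f"random error:      {slope_noisy:.2f}")
print(f"underreporting:    {slope_under:.2f}")
```

With equal variances for true intake and random error, the estimated slope is roughly halved (dilution towards the null), whereas proportional underreporting inflates the slope per reported unit. Real dietary measurement error is usually a mixture of both and is harder to characterise than in this sketch.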

Another way in which results can be exaggerated is by cherry-picking those that support a pre-determined view, often inadvertently. This can lead to some results being systematically less likely to be submitted for publication, for example those that conflict with an established view. The greater the number of exposures included in the analysis, or the different ways of looking at the same exposure or different outcomes, the more likely we are to obtain false-positive results.
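The effect of testing many exposures or outcomes can be quantified with a short calculation. The sketch below assumes that the tests are independent and that no true associations exist, which is a simplification; the 5 % significance threshold is the conventional default rather than a value taken from the meeting.

```python
def prob_at_least_one_false_positive(n_tests, alpha=0.05):
    """Chance of one or more 'significant' results when every null is true."""
    return 1 - (1 - alpha) ** n_tests

for n_tests in (1, 10, 50):
    p = prob_at_least_one_false_positive(n_tests)
    print(f"{n_tests:>3} tests: {p:.0%} chance of at least one false positive")
```

Under these assumptions, ten independent tests already carry about a 40 % chance of at least one spurious finding.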

Observational studies are more prone to bias than RCTs. Their design and potentially lower cost enable far larger sample sizes, which can yield very precise, even statistically significant, results, but precision does not remove bias. Confounding, where a dietary factor is correlated with another variable that is the true cause of the outcome so that the apparent dietary association is misleading, is an established weakness of observational studies. Yet, identifying which characteristics to adjust for is not trivial. Some characteristics, such as socio-economic status, are difficult to measure and several are hard to disentangle from diet. At the same time, we need to avoid adjusting for characteristics that lie on the causal pathway between the dietary exposure and the outcomes of interest, e.g. adjusting for energy intake when studying associations between a particular food/beverage and risk of obesity.

Finally, we need to consider how to present and interpret results from nutritional epidemiology. When reporting a relative risk (the risk of an event in the exposed group divided by the risk in the non-exposed group), it is advisable to establish the context of the underlying risk and quantify what the relative risk means in terms of health (i.e. by stating the absolute risk). This is especially important in large prospective cohort studies which have the size to enable even very modest dietary associations to reach statistical significance. Hence, it is important to ask if this could be the result of bias, and whether the absolute increase in risk represents a true public health concern.
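A short worked example shows why the same relative risk can mean very different things in absolute terms. The baseline risks and the relative risk of 1.2 below are hypothetical and serve only to illustrate the arithmetic.

```python
def absolute_risk_summary(baseline_risk, relative_risk):
    """Translate a relative risk into absolute terms for a given baseline risk."""
    exposed_risk = baseline_risk * relative_risk
    risk_difference = exposed_risk - baseline_risk
    return {
        "exposed_risk": exposed_risk,
        "risk_difference": risk_difference,
        "extra_cases_per_1000": risk_difference * 1000,
    }

# Same relative risk, two hypothetical baseline risks
common = absolute_risk_summary(baseline_risk=0.05, relative_risk=1.2)
rare = absolute_risk_summary(baseline_risk=0.0005, relative_risk=1.2)

print(f"common outcome: {common['extra_cases_per_1000']:.1f} extra cases per 1000")
print(f"rare outcome:   {rare['extra_cases_per_1000']:.1f} extra cases per 1000")
```

A ‘20 % increased risk’ corresponds to ten extra cases per 1000 people when the baseline risk is 5 %, but only one extra case per 10 000 when the baseline risk is 0.05 %.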

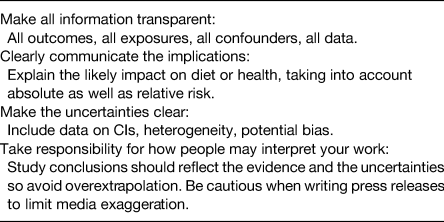

It is widely accepted that correlation or association does not imply causation, so we should not over-extrapolate or exaggerate the strength of evidence from observational studies. There is a lot of uncertainty in nutritional epidemiology, and we need to be transparent about this when disseminating results. As outlined in Table 1, uncertainties should be made explicit, particularly in press releases, as this would be one way to reduce the misuse of observational studies in the media.

Table 1. Best practice recommendations for disseminating observational studies

Know your limits! Taking a closer look at observational diet–disease associations

Building on the first presentation, Professor Janet Cade, Head of the Nutritional Epidemiology Group at the University of Leeds, explored how we can use observational studies effectively to examine links between diet and disease risk, and avoid common pitfalls. She quoted Professor Richard Peto who said: ‘Epidemiology is so beautiful and provides such an important perspective on human life and death, but an incredible amount of rubbish is published’(Reference Taubes9). But why is this, and what should nutritionists look for when designing or appraising observational studies?

First, it is important to acknowledge the benefits of observational studies and why we use them. RCTs are rightly viewed as the gold standard of evidence because, unlike epidemiology, they provide evidence for causal links, but they are not appropriate in several cases. For example, it would be prohibitively expensive and potentially unethical to change participants' diets for decades to investigate links between diet and disease, or mortality. In contrast, longitudinal observational studies can follow up participants for decades. The expense of running RCTs means that they typically have sample sizes in the tens or hundreds, which are unlikely to be representative of populations. However, observational studies can recruit thousands, or hundreds of thousands, of participants who are more representative of overall populations. In some cases, use of a placebo can be unethical, e.g. the question of whether or not parachutes save lives has been discussed philosophically(Reference Yeh, Valsdottir and Yeh10), or it is impossible to identify an appropriate placebo since the introduction of one food may mean the absence of another. In contrast, observational studies can track real-life clustering of dietary and lifestyle variables, and examine their associations with risk. This may be why the number of publications from observational studies is rising dramatically.

Secondly, we should be aware of the different types of observational studies and their relative strengths and weaknesses. These include: ecological studies which have the most limitations and are only hypothesis generating since they provide blunt estimates of whole country dietary intake and disease risk; cross-sectional studies where a ‘snapshot’ of a population is taken but there is a considerable likelihood of confounding; case control studies where the baseline diets/lifestyles of the group developing a disease are compared with those who remained free of this disease, but the retrospective nature is open to bias; and prospective cohort studies which enable a distinct population to be followed longitudinally and which are viewed as the highest quality design within observational studies, although the risk of confounding is still high.

Thirdly, the limitations of observational studies need to be recognised. Although these can only provide a best guess estimate of an unknown true value, trust in the estimate can improve if there is: (a) a strong methodology which includes a sample that is as representative as possible of the target population; (b) an objective, validated method of data collection (especially for dietary assessment); (c) an objective measurement of the outcome variable; and (d) planned statistical analysis.

However, for public health or clinical significance, the quality of the data is more important than the population size. Large studies, such as the UK Biobank survey of more than 500 000 people, can generate several statistically significant findings on the basis of sheer size, but the dietary survey methodology is limited and would not be expected to provide a suitable standard of information for diet policy or patient advice. Other limitations of observational studies include control of confounding, unrepresentative populations, non-response, missing data, reporting bias or selective reporting, publication bias, data fishing rather than following a pre-determined hypothesis, and use of multiple testing in statistical analyses.

Despite these limitations and criticisms of self-reported observational data, we should continue to collect this information to support the development and evaluation of nutrition policy. New methods, especially making use of novel technologies and biomarkers, could help reduce measurement error. It is also possible to adjust for measurement error in statistical analysis.

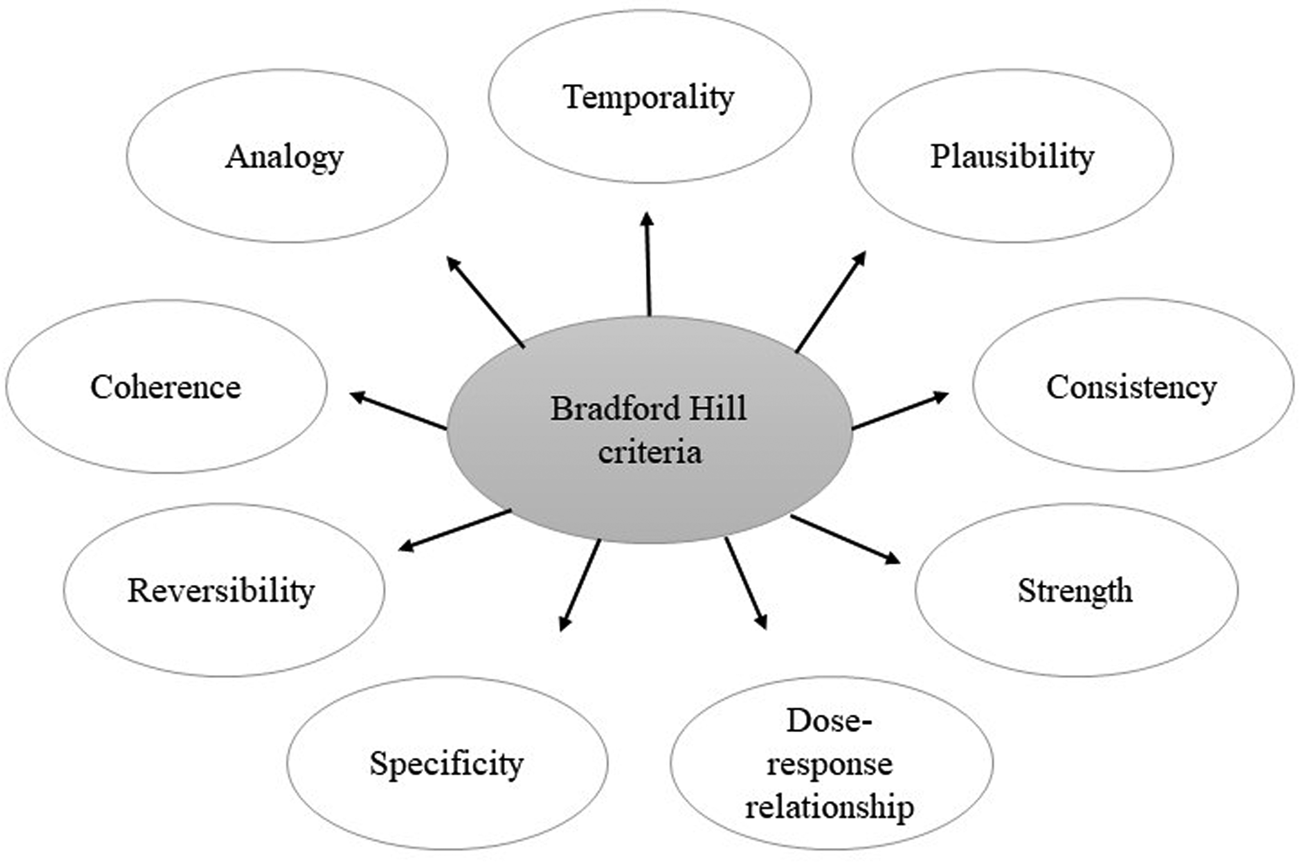

Thus, when considering findings from observational studies in relation to public health, it is necessary to consider the wider evidence landscape rather than allowing results from observational studies to dominate, regardless of their size. One example is β-carotene intake and CVD mortality where conclusions from historic observational studies and RCTs contradicted one another, with observational studies showing benefit whereas RCTs – albeit using very high dose interventions – suggested an increased risk of mortality(Reference Smith and Ebrahim11). Interestingly, more recent studies(Reference Aune, Keum and Giovannucci12) tend towards null findings, whether they are observational or RCT. One reason for this may be differences in outcomes between studies since one may measure disease outcome (incidence) while another may record mortality from disease which is influenced by medical care as well as aetiological factors. Another reason may be differences in the way confounders are identified, measured and accounted for in statistical analyses. Regardless of the quality of the observational study, it must be remembered that these are not appropriately designed to determine cause and effect. The Bradford Hill criteria are a useful model for thinking about cause and effect from a dietary perspective (Fig. 1).

Fig. 1. Pictorial representation of Bradford Hill criteria(Reference Hill13).

One may speculate that, if this model had been used by Professor Ancel Keys when developing his theory on saturated fat and CHD, it is possible that dietary advice would not have been dominated by a low-saturated/low-fat message for the past 70 years. Keys' Seven Countries Study(Reference Smit and van Duin14) was designed to support his hypothesis, but evidence on mortality risk from later studies has been inconsistent(Reference Harcombe, Baker and Cooper15). Regardless of the merits of low-fat diets, it is nevertheless clear that Professor Keys' theory was not tested beyond observational studies – a less than ideal situation which may have led to unintended health consequences given questions about the benefits v. risks of high-carbohydrate diets(16).

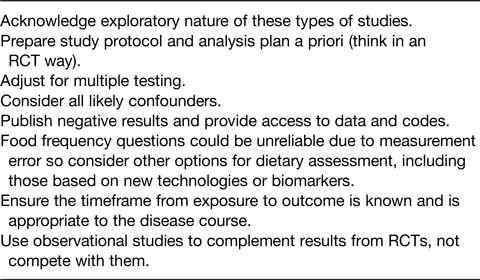

Thus, there are limitations with all types of diet/disease studies, not just observational types, so one must avoid ‘throwing out the baby with the bathwater’. When evaluating existing observational studies, or when developing methodology for future studies, it is helpful to develop a critical approach by using a checklist (Table 2) to assess quality, precision of dietary assessment, risk of bias, timeframe between exposures and outcomes, peer review, and plausibility of association given the wider evidence base. Observational studies should complement, not compete with, RCTs, which is why there remains a place for them in diet–disease research in spite of their limitations.

Table 2. Checklist for planning observational studies

Combining the evidence from observational studies

Since individual observational studies have limitations and are subject to bias, combining findings from several studies – for example, within systematic reviews – can compound bias unless care is taken in the selection of studies and overall interpretation. This was the topic of the presentation by Dr Lee Hooper, Reader in Research Synthesis, Nutrition & Hydration at Norwich Medical School. Dr Hooper has authored several influential systematic reviews of diet/disease interactions, for example Cochrane reviews on saturated fat and CVD(Reference Hooper, Martin and Jimoh17).

The whole point of systematic reviews (with or without meta-analysis) is to provide a concise summary of the best, and least biased, evidence to answer a particular research question. In the field of nutrition, we are seeking to understand what we should be eating, and in which amounts, to reduce the risk of chronic disease (as well as deficiency and toxicity) and improve health. This means that establishing causation is necessary and, of course, that is more in the domain of RCTs than observational studies. Hence systematic reviews of prospective cohort studies would be ranked lower in the evidence hierarchy than systematic reviews of RCTs.

Grading of Recommendations, Assessment, Development and Evaluation (GRADE)(Reference Siemieniuk and Guyatt18) is a useful approach to rating the certainty of evidence and works by assuming high-quality evidence initially for RCTs and low-quality evidence initially for observational studies. These ratings can then be downgraded in response to risk of bias, imprecision, inconsistency, indirectness or publication bias of the evidence, or upgraded in high-quality observational studies for a large effect size, a dose–response gradient, or plausible residual confounding that would be expected to weaken rather than explain the observed effect.
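The rating logic described above can be sketched as a simple step function. This is a deliberately simplified illustration and not an implementation of the GRADE method itself: the function name, the one-level-per-concern rule and the integer counts are assumptions made for the example, and in practice each domain requires expert judgement and may move the rating by more than one level.

```python
# Certainty levels ordered from lowest to highest
LEVELS = ["very low", "low", "moderate", "high"]

# Starting points as described in the text
START = {"rct": "high", "observational": "low"}

def grade_certainty(study_design, downgrades=0, upgrades=0):
    """Hypothetical helper: move one level per concern or strength, within bounds."""
    index = LEVELS.index(START[study_design])
    index = index - downgrades + upgrades
    index = max(0, min(index, len(LEVELS) - 1))
    return LEVELS[index]

# RCT evidence with serious risk of bias and imprecision
print(grade_certainty("rct", downgrades=2))
# Cohort evidence with a clear dose-response gradient
print(grade_certainty("observational", upgrades=1))
```

Even in this simplified form, the asymmetry is visible: observational evidence needs positive reasons to rise above ‘low’, whereas trial evidence needs identified concerns to fall below ‘high’.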

Traditionally, observational studies would be used to generate hypotheses which are then tested in RCTs. If both trials and prospective cohort studies are valid for assessing relationships between diet and disease, their findings should align. However, there are numerous cases in practice where conclusions from prospective cohort studies are not corroborated by RCTs and, in about 10 % of cases, the findings are statistically significantly in the opposite direction(Reference Young and Karr6).

One example is the evidence on β-carotene and lung cancer where a large RCT had to be stopped early as it found a 28 % increase in lung cancer risk and a 17 % increase in death following randomisation to the β-carotene supplementation. The trial was conducted because the majority of prospective and retrospective observational studies at the time reported that low β-carotene was associated with an increased lung cancer risk(Reference Omenn19). It is now clear that several of these studies did not fully adjust for smoking which independently correlates with poor diets as well as an increased risk of lung cancer, so is a strong confounder of the relationship. Additionally, low carotenoid intake or status may be a marker for low consumption of fruit and vegetables rather than the causal factor(Reference Gallicchio20). Indeed, healthy lifestyles tend to cluster, so that a person eating more fruit and vegetables is more likely to be physically active, not smoke, take alcohol in moderation, be better educated, have a lower fat intake and higher vitamin and mineral intake, have a higher income and greater social capital. This makes it difficult to isolate the causative associations of any one lifestyle, particularly in the case of dietary factors. Thus, unless we interpret findings cautiously, and in the context of the wider evidence base, observational studies can badly mislead us, even when combined in systematic reviews.
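The way in which an unadjusted confounder such as smoking can manufacture an association may be illustrated with invented numbers. In the sketch below, all counts are hypothetical: within each smoking stratum, low β-carotene intake carries no excess risk, but smokers are both more likely to have low intakes and more likely to develop lung cancer. The Mantel–Haenszel estimator is used here as one standard way of adjusting for a stratification variable; it is not the method used in the studies cited.

```python
def risk_ratio(cases_exp, n_exp, cases_unexp, n_unexp):
    """Risk in the exposed group divided by risk in the unexposed group."""
    return (cases_exp / n_exp) / (cases_unexp / n_unexp)

def mantel_haenszel_rr(strata):
    """Stratum-adjusted risk ratio; each stratum is (a, n1, c, n0)."""
    numerator = 0.0
    denominator = 0.0
    for a, n1, c, n0 in strata:
        total = n1 + n0
        numerator += a * n0 / total
        denominator += c * n1 / total
    return numerator / denominator

# (cases with low intake, n low intake, cases with high intake, n high intake)
strata = {
    "smokers": (80, 800, 20, 200),      # 10 % risk regardless of intake
    "non_smokers": (2, 200, 8, 800),    # 1 % risk regardless of intake
}

# Crude analysis ignores smoking and pools everyone
a = sum(s[0] for s in strata.values())
n1 = sum(s[1] for s in strata.values())
c = sum(s[2] for s in strata.values())
n0 = sum(s[3] for s in strata.values())
crude_rr = risk_ratio(a, n1, c, n0)

stratum_rrs = {name: risk_ratio(*s) for name, s in strata.items()}
adjusted_rr = mantel_haenszel_rr(strata.values())

print(f"crude RR:    {crude_rr:.2f}")
print(f"adjusted RR: {adjusted_rr:.2f}")
```

The crude analysis suggests that low intake nearly triples risk, while the stratum-specific and adjusted estimates show no association at all. Real confounders are rarely measured this cleanly, which is why residual confounding remains a concern even after adjustment.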

An important point is that systematic reviews are scientific investigations in their own right that use methodological and statistical techniques to limit bias and random error. These include a registered protocol so that key decisions are pre-planned, a comprehensive literature search, explicit and reproducible inclusion criteria, careful assessment of risk of bias within primary studies, thoughtful synthesis of results (often in meta-analysis) that includes exploration of heterogeneity and sensitivity analyses and finally, objective interpretation of the results.
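The synthesis step can be sketched with a fixed-effect, inverse-variance meta-analysis of relative risks, together with Cochran's Q and the I² statistic as a basic check of heterogeneity. The three study results below are hypothetical, and a fixed-effect model is assumed purely for brevity; a real review would pre-specify the model and would often prefer a random-effects approach when heterogeneity is expected.

```python
import math

def pool_fixed_effect(studies, z=1.96):
    """Pool (rr, lower, upper) tuples on the log scale with inverse-variance weights."""
    log_rrs = []
    weights = []
    for rr, lower, upper in studies:
        # Recover the standard error of log(RR) from the reported 95 % CI
        se = (math.log(upper) - math.log(lower)) / (2 * z)
        log_rrs.append(math.log(rr))
        weights.append(1 / se ** 2)

    total_weight = sum(weights)
    pooled_log = sum(w * y for w, y in zip(weights, log_rrs)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)

    # Cochran's Q and I-squared describe between-study inconsistency
    q = sum(w * (y - pooled_log) ** 2 for w, y in zip(weights, log_rrs))
    df = len(studies) - 1
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    return {
        "rr": math.exp(pooled_log),
        "lower": math.exp(pooled_log - z * pooled_se),
        "upper": math.exp(pooled_log + z * pooled_se),
        "i_squared": i_squared,
    }

# Hypothetical studies: two small ones with striking results, one large one
studies = [(1.80, 0.90, 3.60), (0.70, 0.35, 1.40), (1.05, 0.95, 1.16)]
pooled = pool_fixed_effect(studies)
print(f"pooled RR = {pooled['rr']:.2f} "
      f"(95% CI {pooled['lower']:.2f} to {pooled['upper']:.2f}), "
      f"I2 = {pooled['i_squared']:.0f}%")
```

Because the weights reflect precision, the large study dominates and the striking results from the small studies largely cancel out. Pooling only narrows the interval around whatever the included studies estimate, so any shared bias is carried into the summary.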

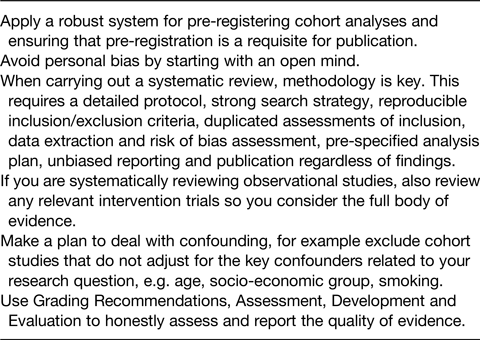

We have observed that confounding is one problem when attempting to gain an unbiased overview of observational studies, but it is not the only one. Others are dietary intake measurement bias (which is complex and merits its own dedicated meeting), selective reporting of results and publication bias. These last two result from a lack of pre-registration of observational studies and their protocols which, if implemented widely as is now the case for RCTs, would enable researchers to identify neutral or even negative, unpublished analyses of observational studies and data gaps. Pre-registration also ensures that pre-planned statistical methods, sub-groupings and outcomes are openly published, which avoids the temptation to manipulate these, or omit uncomfortable or uninteresting (non-statistically significant!) data from publications.

Currently, there remains a risk of data trawling where tens or hundreds of associations are examined in large databases but only the small number of positive associations is published. As the negative or null associations tend to remain hidden, this can skew the conclusions of future systematic reviews of observational studies towards false positives. Splitting dietary data into sub-groups and conducting multiple post-hoc statistical tests can also produce spurious ‘significant’ findings, but this may be avoided by pre-determining the statistical approach within the protocol.
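A small simulation illustrates how selective publication of positive findings can distort the literature. Everything here is hypothetical: 1000 exposure–outcome pairs are simulated with no true association, each with the same assumed standard error, and only statistically significant positive associations are ‘published’.

```python
import math
import random

rng = random.Random(7)
n_associations = 1000
se = 0.2  # assumed standard error of each log relative risk

# Every true log RR is 0, so all variation is sampling error
estimates = [rng.gauss(0.0, se) for _ in range(n_associations)]

# 'Published' results: significant at the 5 % level and in the positive direction
published = [e for e in estimates if e / se > 1.96]

mean_all = sum(estimates) / len(estimates)
mean_published = sum(published) / len(published)

print(f"all analyses:       mean RR = {math.exp(mean_all):.2f}")
print(f"published analyses: mean RR = {math.exp(mean_published):.2f} "
      f"({len(published)} of {n_associations})")
```

The full set of analyses averages out at a relative risk close to 1, yet every ‘published’ result has a relative risk of about 1.5 or more, simply because that is the threshold for significance at this precision. A systematic review that can only find the published subset would inherit this distortion, which is the case for pre-registration made above.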

An example of selective reporting is provided in a study where researchers selected fifty ingredients randomly from cookbooks and searched the literature to determine whether they had been linked with cancer risk in observational studies, finding that 80 % had been associated with either an increased or decreased cancer risk(Reference Schoenfeld and Ioannidis21). Positive associations were more likely to be reported in the abstracts than null associations, making them easier to find. In addition, the relative risks were often large for individual observational studies but were generally null when combined in meta-analyses.

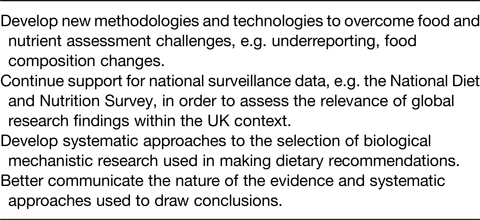

Although well-conducted systematic reviews can attempt to overcome bias in individual studies, poor-quality systematic reviews can accentuate the bias, increasing the chance of misleading conclusions. A recent study(Reference Zeraatkar22) found that, when assessing systematic reviews in nutrition, only 20 % were pre-registered, 28 % did not report a replicable search strategy, 44 % did not consider dose–response associations where appropriate, and just 11 % used an established method such as Grading of Recommendations, Assessment, Development and Evaluation to evaluate the certainty of evidence. This suggests that published systematic reviews in nutrition are often of poor quality and may be misleading. However, there are ways that we can improve the quality of the evidence on nutrition and health as summarised in Table 3.

Table 3. Reducing bias when conducting systematic reviews of observational studies

Development of dietary guidelines: how can we best weigh up the evidence?

Just as the Nutrition Society aims to advance ‘the scientific study of nutrition and its application to the maintenance of human and animal health’, the ultimate goal of nutritional epidemiology and related systematic reviews is to inform more effective policies and public health advice which, in turn, should deliver dietary and health improvements. This topic was presented by Professor Christine Williams OBE, a trustee of the Academy of Nutrition Sciences and an Honorary Fellow of the Nutrition Society.

Effective dietary prevention of non-communicable diseases requires rigorous evidence of a high certainty showing clear causal relationships between diet and disease risk. In addition, conclusions should be based on the totality of the evidence. This is important as individual studies can sometimes provide conflicting conclusions – a point often made by the media when they claim that ‘experts are always changing their minds on diet’. As an example of this, two systematic reviews which concluded that there were no links between saturated fat consumption and heart disease risk(Reference Chowdhury, Warnakula and Kunutsor23,Reference Siri-Tarino, Sun and Hu24) were published at a similar time and received widespread media coverage, as well as criticism from other academics. One of the papers required several corrections(Reference Chowdhury, Warnakula and Kunutsor25) but the overall conclusion was not withdrawn.

To overcome potential selection bias which can arise from consideration only of highly cited papers, expert bodies tend to use standardised frameworks to select, evaluate and score all relevant peer-reviewed studies which adhere to their pre-defined inclusion criteria. A position paper from the Academy of Nutrition Sciences(Reference Williams, Ashwell and Prentice26) examined methods used to assess evidence by key expert groups, including the UK's Scientific Advisory Committee on Nutrition, the World Cancer Research Fund, the European Food Safety Authority and the National Academies of Sciences, Engineering, and Medicine. Common approaches were found to involve systematic selection and assessment of the evidence, interrogation of the evidence for strength and adherence to causal criteria, for example biological plausibility, and finally methods of grading evidence. This lengthy process includes discussion and a collective judgement of the assembled evidence. Care is taken to avoid premature conclusions since dietary advice to whole populations must be unlikely to cause harm to vulnerable or susceptible sub-groups.

Although RCTs of surrogate risk markers (e.g. cholesterol and blood pressure) may be used to assess potential links between diet and disease outcomes, and such data are widely used by expert groups, it is problematic to use their findings in isolation. Such studies do not have morbidity or mortality as their end points so the full impact of diet on the pathway from exposure to disease cannot be assessed. Most expert groups synthesise data from RCTs looking at surrogate risk markers with population studies, such as prospective cohort studies, on the longer-term effects of diet on disease end points or mortality risk. In several cases, the quantity and quality of evidence is insufficient which is why expert groups may be unable to make specific, or strong, recommendations about how particular diets impact on disease risk or prevalence.

However, the success of any study, whether RCT or prospective cohort study, is hindered by changes to background diets, dropouts and shifts in the nutritional composition of foods that can take place over the duration of long-term studies. This can affect the consistency of findings from different studies conducted at different times. For example, average butter and whole milk consumption in the UK was five times lower in 2010 than that in the 1970s(27). Hence, studies reported in the 1970s on the impact of these dietary components are likely to report different findings from those conducted in the early 21st century, and this has proven to be the case for studies of saturated fats and CVD. Both RCTs and prospective cohort studies may be subject to recruitment bias, e.g. more participation from older subjects from affluent countries, which makes it difficult to generalise results to populations from other countries, or to different age groups.

The limitations of prospective cohort studies were discussed by a number of the speakers, in particular the challenge of drawing conclusions of causality from their findings. However, systematic reviews and meta-analyses can help to overcome some of these difficulties. Combining study data and the use of statistical methods to assess bias and confounding creates opportunity for analysis of effect sizes (strength), consistency, specificity and dose–response, which are the key criteria for causality as defined by Bradford Hill(Reference Hill13). These analyses form an important part of an expert group's work and can add considerable confidence to the overall conclusion.

Neither prospective cohort studies nor RCTs can directly assess biological plausibility, which is why the Academy of Nutrition Sciences, in their position paper(Reference Williams, Ashwell and Prentice26), recommended inclusion of evidence from mechanistic studies when considering diet–disease interactions (see Table 4 for a summary of their recommendations). Large numbers of studies can help to understand whether dose–response relationships exist which can indicate higher possibility of a plausible biological relationship.

Table 4. Recommendations of the Academy of Nutrition Sciences(Reference Williams, Ashwell and Prentice26)

Where data are available, causal evidence on consistency or dose–response can be used to grade the strength of the evidence and, ultimately, inform recommendations. In its third report, the World Cancer Research Fund considered the strength of the evidence on body fatness and post-menopausal breast cancer, and evidence on red meat and colorectal cancer. For breast cancer, thirty-five prospective cohort studies were combined into a meta-analysis producing a summary association of 1⋅12 (1⋅09–1⋅15)(8) while, for colorectal cancer, eleven prospective cohort studies were combined producing a summary association of 1⋅22 (1⋅06–1⋅39)(28). The relationship between body fatness and post-menopausal breast cancer was graded ‘Convincing’ (the highest grading) for reasons including: a significant finding for overall relative risk in the combined data; significant findings in the majority of the thirty-five prospective cohort studies for increases in BMI of 5 kg/m2; a linear dose–response relationship over a wide range of body fatness; and the existence of sound, plausible biological mechanisms.

In contrast, for the relationship between red meat and colorectal cancer, only two of eleven prospective cohort studies were individually significant with one study influencing the overall findings, but the non-linear dose–response relationship was significant. These data were downgraded to ‘Probable’ (second highest grading) because the individual data were less consistent, but a plausible mechanism was considered a sound basis for the proposed relationship(28). Hence, in the above examples, expert bodies carefully considered the overall findings from a body of evidence, including study strength, consistency and likelihood of a dose–response before coming to a conclusion.

Even when expert groups have a clear view of a diet/disease association and are confident about biological mechanisms, other aspects need to be considered to contextualise the risk/benefit. These include the prevalence of the disease, the applicability of evidence to the population of interest, the effect size of the diet/disease association and intake levels of the candidate food/nutrient (both as current estimates and potential for change). At the end of this process, the expert group will be able to publish a risk/benefit assessment, but policy makers and government are ultimately responsible for taking this forward into dietary recommendations and policy changes, which are in the realm of risk management.
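The point about disease prevalence can be made concrete with a small arithmetic sketch. The Python snippet below applies the same relative risk to two baseline risks and reports the implied number of additional cases per 100 000 people. The baseline risks are hypothetical values chosen purely for illustration and are not taken from any study cited here; the calculation also assumes, for simplicity, that the relative risk is causal and applies uniformly across the population.

```python
def extra_cases_per_100k(baseline_risk, relative_risk):
    """Additional cases per 100 000 people implied by a relative risk.

    baseline_risk is the risk in the unexposed group, as a proportion.
    Assumes the relative risk is causal and applies uniformly.
    """
    return baseline_risk * (relative_risk - 1.0) * 100_000

relative_risk = 1.22  # summary association quoted earlier for red meat

# Hypothetical baseline risks: a rare and a common condition.
for baseline_risk in (0.001, 0.05):
    extra = extra_cases_per_100k(baseline_risk, relative_risk)
    print(f"baseline risk {baseline_risk:.3f}: "
          f"about {extra:.0f} extra cases per 100 000")
```

With these illustrative inputs, an identical relative risk corresponds to about 22 extra cases per 100 000 for the rare condition and about 1100 for the common one, which is why a relative risk alone is a weak basis for a headline or a recommendation.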

The role of mechanistic data needs to be explored further. Clearly, it is an important part of the evidence mix since prospective cohort studies, and systematic reviews of these, cannot be used alone to determine cause and effect. However, there is a lack of consensus on whether plausible biological mechanisms should be considered essential for making dietary recommendations, and what criteria should be applied to these to ensure they have acceptable rigour, relevance and freedom from selection and publication bias. Currently, mechanistic studies sit at the base of the evidence pyramid but perhaps their importance needs further consideration.

Have I got the message right? Communicating with consumers via the media

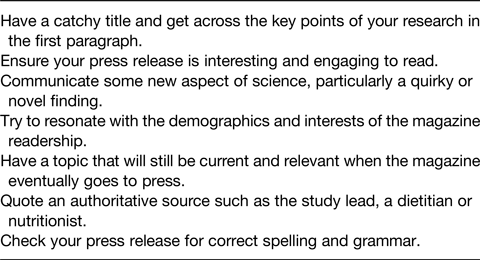

Lucy Gornall, Health and Fitness Editor for TI Media, picked up the thread of better communication and provided insight into how mass media respond to press releases about scientific studies and translate them into stories for the general public. She emphasised that journalists want to receive press releases from scientists and are keen to run health- and diet-related stories.

The needs of journalists differ depending on whether they are writing for news media, which are short-lead and issue focused, or magazines, which have longer lead times (typically 4–5 months) and are based on feature articles. Both of these formats can be print or digital. Feature articles rarely use a study as a headline but will incorporate one or more studies, plus expert comments, into a broader wellbeing article. News articles will tend to be more sensational, particularly the headlines, and normally focus on one story from a press release. Hence, scientists need to keep their target journalists in mind when crafting appropriate press releases.

A typical magazine editor will receive numerous emails daily, so a successful press release needs to stand out with a catchy headline and key statistics in the first paragraph. Journalists will select studies that are most relevant to the demographics of their audience, are published in reputable journals, and have a large sample size and a believable message, especially if related to disease risk.

Press releases need to be carefully written as journalists will tend not to read the full paper nor contact the authors unless they have specific questions. Hence, releases should be checked for errors, including those related to spelling and grammar, to avoid mistakes being transferred into articles. Editors will pay more attention to releases that fit with their titles so scientists can improve their chances of a good media response by being aware of regular health features run by a magazine, or issues that are important to the target readership.

Occasionally, studies are misreported and, in such cases, authors should approach the Section Editor to request a correction. Print media is far harder to change than digital, and scientists may need to accept that news moves on so quickly that a print correction is often not worth pursuing. Digital content can easily be changed and has more longevity, so it is worthwhile requesting a correction. In summary, Table 5 suggests ways to improve media uptake of research studies.

Table 5. How to increase media attention for your research

Learning summary

This meeting provided a valuable learning experience on how to design better observational studies by setting out hypotheses and methods beforehand, considering potential bias and confounding, reporting all of these transparently and communicating the results responsibly. Weaknesses and limitations of observational studies should also be acknowledged. Speakers then covered best practice for combining the results of observational studies into systematic reviews and meta-analyses to minimise bias and ensure that the wider evidence-base contributes towards the development of effective public health advice and policies (summarised in Tables 1–4).

Media coverage of scientific studies is beneficial to academics since it may result in greater numbers of citations(Reference Phillips, Kanter and Bednarczyk29) but it is important to avoid misleading the public by exaggerating the results of studies or implying cause and effect where this is not evidenced (see Table 5). Studies(Reference Adams, Challenger and Bratton30,Reference Sumner, Vivian-Griffiths and Boivin31) suggest that press releases which contain cautious claims and caveats about correlational findings can still achieve attention in the mass media. Hence, the primary responsibility for accurate dissemination of studies lies with academics, not journalists.

Acknowledgements

The author acknowledges the excellent contributions of Dr Darren Greenwood, Professor Janet Cade, Dr Lee Hooper, Professor Christine Williams OBE and Lucy Gornall, and thanks them for their assistance in preparing this report. She also wishes to thank Nutrition Society staff for helping to organise the meeting.

Financial Support

The Nutrition Society funded the online hosting of the meeting, enabling participants to attend for free. Neither the speakers nor the organiser (CR) received any payment or expenses.

Conflict of Interest

None.

Authorship

The author had sole responsibility for all aspects of preparation of this paper.