1 Introduction

Dependently typed languages such as Coq, Agda, Idris, and F* allow programmers to write full-functional specifications for a program (or program component), implement the program, and prove that the program meets its specification. These languages have been widely used to build formally verified high-assurance software including the CompCert C compiler (Leroy, Reference Leroy2009), the CertiKOS operating system kernel (Gu et al., Reference Gu, Koenig, Ramananandro, Shao, Wu, Weng, Zhang and Guo2015; Gu et al., Reference Gu, Shao, Chen, Wu, Kim, SjÖberg and Costanzo2016), and cryptographic primitives (Appel, Reference Appel2015) and protocols (Barthe et al., Reference Barthe, Grégoire and Zanella-Béguelin2009).

Unfortunately, even these machine-verified programs can misbehave when executed due to errors introduced during compilation and linking. For example, suppose we have a program component S written and proven correct in a source language like Coq. To execute S, we first compile S from Coq to a component T in OCaml. If the compiler from Coq to OCaml introduces an error, we say that a miscompilation error occurs. Now T contains an error despite S being verified. Because S and T are not whole programs, T will be linked with some external (i.e., not verified in Coq) code C to form the whole program P. If C violates the original specification of S, then we say a linking error occurs and P may contain safety, security, or correctness errors.

A verified compiler prevents miscompilation errors, since it is proven to preserve the run-time behavior of a program, but it cannot prevent linking errors. Note that linking errors can occur even if S is compiled with a verified compiler, since the external code we link with, C, is outside of the control of either the source language or the verified compiler. Ongoing work on CertiCoq (Anand et al., Reference Anand, Appel, Morrisett, Paraskevopoulou, Pollack, Bélanger, Sozeau and Weaver2017) seeks to develop a verified compiler for Coq, but it cannot rule out linking with unsafe target code. One can develop simple examples in Coq that, once compiled to OCaml and linked with an unverified OCaml component, jump to an arbitrary location in memory—despite the Coq component being proven memory safe. We provide an example of this in the supplementary materials.

To rule out both miscompilation and linking errors, we could combine compiler verification with type-preserving compilation. A type-preserving compiler preserves types, representing specifications, into a target typed intermediate language (IL). The IL uses type checking at link time to enforce specifications when linking with external code, essentially implementing proof-carrying code (Necula, Reference Necula1997). After linking in the IL, we have a whole program, so we can erase types and use verified compilation to machine code. To support safe linking with untyped code, we could use gradual typing to enforce safety at the boundary between the typed IL and untyped components (Ahmed, Reference Ahmed2015; Lennon-Bertrand et al., Reference Lennon-Bertrand, Maillard, Tabareau and Tanter2022). Applied to Coq, this technique would provide significant confidence that the executable program P is as correct as the verified program S.

We consider this particular application of type preservation important for compiler verification and for protecting the trusted computing base when working in a dependently typed language, but type preservation has other long studied applications. Type-preserving compilers can provide a lightweight verification procedure using type-directed proof search (Chlipala, Reference Chlipala2007), can be better equipped to handle optimizations and changes than a certified compiler (Tarditi et al., Reference Tarditi, Morrisett, Cheng, Stone, Harper and Lee1996; Chen et al., Reference Chen, Hawblitzel, Perry, Emmi, Condit, Coetzee and Pratikaki2008), and can support debugging internal compiler passes (Peyton Jones, Reference Peyton Jones1996).

Unfortunately, dependent-type preservation is difficult because compilation that preserves the semantics of the program may disrupt the syntax-directed typing of the program. This is particularly problematic with dependent types since expressions from a program end up in specifications expressed in the types. As the compiler transforms the syntax of the program, the expressions in types can become “out of sync” with the expressions in the compiled program.

To preserve dependent typing, the type system of the IL needs access to the semantic equivalences relied upon by compilation. Past work has used strong axioms, including parametricity and impredicativity, to recover these equivalences (Bowman et al., Reference Bowman, Cong, Rioux and Ahmed2018). However, this approach does not support all dependent type system features, particularly predicative universe hierarchies (which are anti-impredicative) or ad hoc polymorphism and type quotation (which is anti-parametric) (Boulier et al., Reference Boulier, Pédrot and Tabareau2017). Dependently typed languages, such as Coq, restrict impredicative quantification as it is inconsistent with other features and axioms, such as excluded middle and large elimination. Restricting impredicativity restricts type-level abstraction, so such languages often add a predicative universe hierarchy—abstractions over types live in a higher universe than the parameter, and so on. Higher universes enable recovering the ability to abstract over types and the types of types, etc., which is useful for generic programming and abstract mathematics. This means reliance on parametricity and impredicativity restrict which source programs can be compiled and thus cannot be applied in practice. To scale to a realistic dependently typed language such as Coq, we must encode these semantic equivalences in the type system without restricting core features.

To preserve dependent types, the compiler IL must encode the semantic equivalences relied upon by compilation. In this work, we do this using extensionality, which allows the type system to consider two types (or two terms embedded in a type) definitionally equivalent if the program contains an expression representing a proof that the two types (or terms) are equal. Using this feature, the translation can insert hints for the type system about which terms it can assume to be equivalent and provide proofs of those facts elsewhere to discharge these assumptions. This approach scales to higher universes and other features that prior work could not handle and does so without relying on parametricity or impredicativity in the target IL. Relying on extensionality has one key downside: decidable type checking becomes more complex. We discuss how to mitigate this downside in Section 7.

We present a dependent-type-preserving translation to A-normal form (ANF), a compiler intermediate representation that makes control flow explicit and facilitates optimizations (Sabry & Felleisen, Reference Sabry and Felleisen1992; Flanagan et al., Reference Flanagan, Sabry, Duba and Felleisen1993). The source of this translation is ECC, the Extended Calculus of Constructions with dependent elimination of booleans and natural numbers. ECC represents a significant subset of Coq. The translation supports all core features of dependency, including higher universes, without relying on parametricity or impredicativity, in contrast to prior work (Bowman et al., Reference Bowman, Cong, Rioux and Ahmed2018; Cong & Asai Reference Cong and Asai2018a). This ensures that the translation works for existing dependently typed languages and that we can reuse existing work on ANF translations, such as join-point optimization. This work provides substantial evidence that dependent-type preservation can, in theory, scale to the practical dependently typed languages currently used for high-assurance software.

Our translation targets our typed IL ![]() $\mathrm{CC}_{e}^{A}$, the ANF-restricted extensional Calculus of Constructions.

$\mathrm{CC}_{e}^{A}$, the ANF-restricted extensional Calculus of Constructions. ![]() $\mathrm{CC}_{e}^{A}$ features a machine-like semantics for evaluating ANF terms, and we prove correctness of separate compilation with respect to this machine semantics. To support the type-preserving translation of dependent elimination for booleans,

$\mathrm{CC}_{e}^{A}$ features a machine-like semantics for evaluating ANF terms, and we prove correctness of separate compilation with respect to this machine semantics. To support the type-preserving translation of dependent elimination for booleans, ![]() $\mathrm{CC}_{e}^{A}$ uses two extensions to ECC: it records propositional equalities in typing derivations and applies equality reflection to access these equalities. These extensions make type checking undecidable. We discuss how to recover decidability in Section 7.

$\mathrm{CC}_{e}^{A}$ uses two extensions to ECC: it records propositional equalities in typing derivations and applies equality reflection to access these equalities. These extensions make type checking undecidable. We discuss how to recover decidability in Section 7.

Our ANF translation is useful as a compiler pass, but it also provides insights into dependent-type preservation and ANF translations. The target IL is designed to express and check semantic equivalences that the translation relies on for correctness. This lesson likely extends to other translations in a type-preserving compiler for dependent types.

We also develop a new proof architecture for reasoning about the ANF translation. This proof architecture is useful when ANF is achieved through a translation rather than a reduction system. Our translation is indexed by a continuation, a program with a hole, to build up the translated term. Thus, the type of the translated term can only be determined when the hole is filled with a well-typed term. Mirroring the translation, we use the type of the continuation to build up a proof of type-preservation.

This paper includes key definitions and proof cases; extended figures and proofs are available in Appendix 1.

2 Main ideas

ANF 101. A-normal form (ANF)Footnote 1 is a syntactic form that makes control flow explicit in the syntax of a program (Sabry & Felleisen, Reference Sabry and Felleisen1992; Flanagan et al., Reference Flanagan, Sabry, Duba and Felleisen1993). ANF encodes computation (e.g., reducing an expression to a value) as a sequence of primitive intermediate computations composed through intermediate variables, similar to how all computation works in an assembly language.

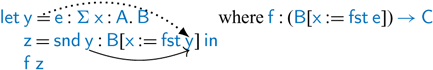

For example, to reduce ![]() , which projects the second element of a pair, we need to describe the evaluation order and control flow of each language primitive. We evaluate

, which projects the second element of a pair, we need to describe the evaluation order and control flow of each language primitive. We evaluate ![]() to a value, and then, we project out the second component. ANF makes control flow explicit in the syntax by decomposing

to a value, and then, we project out the second component. ANF makes control flow explicit in the syntax by decomposing ![]() into the series of primitive computations that the machine must execute, sequenced by

into the series of primitive computations that the machine must execute, sequenced by ![]() . Roughly, we can think of the ANF translation

. Roughly, we can think of the ANF translation ![]() as

as ![]() . However,

. However, ![]() could also be a deeply nested expression in the source language. In general, the ANF translation reassociates all the intermediate computations from

could also be a deeply nested expression in the source language. In general, the ANF translation reassociates all the intermediate computations from ![]() so there are no nested

so there are no nested ![]() expressions, and we end up with

expressions, and we end up with ![]() .

.

Once in ANF, it is simple to formalize a machine semantics to implement evaluation. Each ![]() -bound computation

-bound computation ![]() is some primitive machine step, performing the computation

is some primitive machine step, performing the computation ![]() and binding the value to

and binding the value to ![]() . In this way, control flow has been compiled into data flow. The machine proceeds by always reducing the left-most machine step, which will be a primitive operation with values for operands. For a lazy semantics, we can instead delay each machine step and begin forcing the inner-most body (right-most expression).

. In this way, control flow has been compiled into data flow. The machine proceeds by always reducing the left-most machine step, which will be a primitive operation with values for operands. For a lazy semantics, we can instead delay each machine step and begin forcing the inner-most body (right-most expression).

Why Translation Disrupts Typing and How to Fix it. The problem with dependent-type preservation has little to do with ANF itself and everything to do with dependent types. Transformations which ought to be fine are not because the type theory is so beholden to details of syntax. This is essentially the problem of commutative cuts in type theory (Herbelin, Reference Herbelin2009; Boutillier, Reference Boutillier2012). Transformations that change the structure (syntax) of a program can disrupt dependencies. By dependency, we mean an expression ![]() whose type and evaluation depends on a sub-expression

whose type and evaluation depends on a sub-expression ![]() . We call a sub-expression such as

. We call a sub-expression such as ![]() depended upon. These dependencies occur in dependent elimination forms, such as application, projection, and if expressions. Transforming a dependent elimination can disrupt dependencies.

depended upon. These dependencies occur in dependent elimination forms, such as application, projection, and if expressions. Transforming a dependent elimination can disrupt dependencies.

For example, the dependent type of a second projection of a dependent pair ![]() is typed as follows:

is typed as follows:

Notice that the depended upon sub-expression ![]() is copied into the type, indicated by the solid line arrow. Dependent pairs can be used to define refinement types, such as encoding a type that guarantees an index is in bounds:

is copied into the type, indicated by the solid line arrow. Dependent pairs can be used to define refinement types, such as encoding a type that guarantees an index is in bounds: ![]() . Then, the second projection

. Then, the second projection ![]() represents an explicit proof about first projection. Unfortunately, transforming the expression

represents an explicit proof about first projection. Unfortunately, transforming the expression ![]() can easily change its type.

can easily change its type.

For example, suppose we have the nested expression ![]() , and we want to

, and we want to ![]() -bind all intermediate computations (which, incidentally, ANF does).

-bind all intermediate computations (which, incidentally, ANF does).

This is not well typed, even with the following dependent-![]() rule (treated as syntactic sugar for dependent application):

rule (treated as syntactic sugar for dependent application):

The problem now is the dependent elimination ![]() is

is ![]() -bound and then used, changing the type to

-bound and then used, changing the type to ![]() . This means the equality

. This means the equality ![]() is missing,Footnote 2

but is needed to type check this term (indicated by the dotted line arrow). This fails since

is missing,Footnote 2

but is needed to type check this term (indicated by the dotted line arrow). This fails since ![]() expects

expects ![]() , but is applied to

, but is applied to ![]() . The typing rule essentially forgets that, by the time

. The typing rule essentially forgets that, by the time ![]() happens, the machine will have performed the step of computation

happens, the machine will have performed the step of computation ![]() , forcing

, forcing ![]() to take on the value of

to take on the value of ![]() , so it ought to be safe to assume that

, so it ought to be safe to assume that ![]() in the types. When type checking in linearized machine languages, we need to record these machine steps throughout the typing derivation.

in the types. When type checking in linearized machine languages, we need to record these machine steps throughout the typing derivation.

The above explanation applies to all dependent eliminations of negative types, such as dependently typed functions and compound data structures (modeled as ![]() $\Pi$ and

$\Pi$ and ![]() $\Sigma$ types).

$\Sigma$ types).

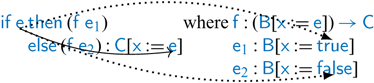

An analogous problem occurs with dependent elimination of positive types, which include data types eliminated through branching such as booleans with ![]() expressions. In the dependent typing rule for

expressions. In the dependent typing rule for ![]() , the types of the branches learn whether the predicate of the

, the types of the branches learn whether the predicate of the ![]() is either

is either ![]() . This allows the type system to statically reflect information from dynamic control flow.

. This allows the type system to statically reflect information from dynamic control flow.

Now consider an ![]() expression and a function

expression and a function ![]() . The expression

. The expression ![]() is well typed, but if we want to push the application into the branches to make

is well typed, but if we want to push the application into the branches to make ![]() behave a little more like goto (like the ANF translation does), this result is not well typed.

behave a little more like goto (like the ANF translation does), this result is not well typed.

This transformation fails when type checking the applications of ![]() to the branches

to the branches ![]() and

and ![]() . The function

. The function ![]() is expecting an argument of type

is expecting an argument of type ![]() but is applied to arguments of type

but is applied to arguments of type ![]() and

and ![]() . The type system cannot prove that

. The type system cannot prove that ![]() is equal to both

is equal to both ![]() and

and ![]() . In essence, this transformation relies on the fact that, by the time the expression

. In essence, this transformation relies on the fact that, by the time the expression ![]() executes, the machine has evaluated

executes, the machine has evaluated ![]() to

to ![]() (and, analogously,

(and, analogously, ![]() to

to ![]() in the other branch), but the type system has no way to express this.

in the other branch), but the type system has no way to express this.

We design a ![]() type system in which we can express these intuitions and recover type preservation of these kinds of transformations. We use two extensions to ECC: one for negative types (

type system in which we can express these intuitions and recover type preservation of these kinds of transformations. We use two extensions to ECC: one for negative types (![]() $\Pi$ and

$\Pi$ and ![]() $\Sigma$ in ECC) and one for positive types (booleans and natural numbers in ECC).

$\Sigma$ in ECC) and one for positive types (booleans and natural numbers in ECC).

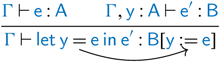

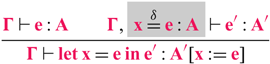

For negative types, it suffices to use definitions (Severi & Poll Reference Severi and Poll1994), a standard extension to type theory that changes the typing rule for ![]() to thread equalities into sub-derivations and resolve dependencies. The relevant typing rule is:

to thread equalities into sub-derivations and resolve dependencies. The relevant typing rule is:

The highlighted part, ![]() , is the only difference from the standard dependent typing rule. This definition is introduced when type checking the body of the

, is the only difference from the standard dependent typing rule. This definition is introduced when type checking the body of the ![]() and can be used to solve type equivalence in sub-derivations, instead of only in the substitution

and can be used to solve type equivalence in sub-derivations, instead of only in the substitution ![]() in the “output” of the typing rule. While this is an extension to the type theory, it is a standard extension that is admissible in any Pure Type System (PTS) (Severi & Poll Reference Severi and Poll1994) and is a feature already found in dependently typed languages such as Coq. With this addition, the transformation of

in the “output” of the typing rule. While this is an extension to the type theory, it is a standard extension that is admissible in any Pure Type System (PTS) (Severi & Poll Reference Severi and Poll1994) and is a feature already found in dependently typed languages such as Coq. With this addition, the transformation of ![]() type checks in the target language by recording the definition

type checks in the target language by recording the definition ![]() while type checking the body of a

while type checking the body of a ![]() expression.

expression.

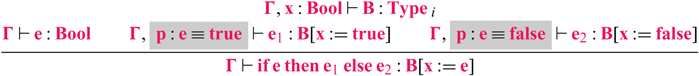

For positive types, we record a propositional equality between the term being eliminated and its value. For booleans, we need the following typing rule for ![]() .Footnote 3

.Footnote 3

The two highlighted portions of the rule are modifications over the standard typing rule. This rule introduces a propositional equality ![]() between the term

between the term ![]() that appears in the calling context’s type to the value known in the branches. This represents an assumption that

that appears in the calling context’s type to the value known in the branches. This represents an assumption that ![]() and

and ![]() are equal in the type system and allows pushing the context surrounding the

are equal in the type system and allows pushing the context surrounding the ![]() expression into the branches.

expression into the branches.

Like definitions, propositional equalities thread “machine steps” into the typing derivations of ![]() and

and ![]() . In contrast, these equalities are accessed through an additional type equivalence rule

. In contrast, these equalities are accessed through an additional type equivalence rule ![]() , which states that an equivalence holds between two terms if some proof of equality exists between them.

, which states that an equivalence holds between two terms if some proof of equality exists between them.

Our earlier example ![]() expression now type checks using the modified typing rule for

expression now type checks using the modified typing rule for ![]() and

and ![]() . We thread the equivalence

. We thread the equivalence ![]() (respectively

(respectively ![]() ) which allows

) which allows ![]() to be applied to

to be applied to ![]() (respectively

(respectively ![]() ) at the correct type.

) at the correct type.

Equality reflection is not a perfect solution, as adding this type equivalence rule can introduce undecidable type checking. While equality reflection allows definitions like ![]() to be subsumed by a propositional equality

to be subsumed by a propositional equality ![]() , we use definitions when sufficient (such as for negative types) to avoid undecidable type checking. We discuss how to recover decidability of

, we use definitions when sufficient (such as for negative types) to avoid undecidable type checking. We discuss how to recover decidability of ![]() $\mathrm{CC}_{e}^{A}$ in Section 7; however, this would distract us from the ANF translation in the present work.

$\mathrm{CC}_{e}^{A}$ in Section 7; however, this would distract us from the ANF translation in the present work.

Formalizing Type-Preserving ANF Translation. Despite these simple extensions to ![]() $\mathrm{CC}_{e}^{A}$, formalizing the ANF type-preservation argument is still tricky. In the source, looking at an expression such as

$\mathrm{CC}_{e}^{A}$, formalizing the ANF type-preservation argument is still tricky. In the source, looking at an expression such as ![]() , we do not know whether the expression is embedded in a larger context. To formalize the ANF translation, it helps to have a compositional syntax for translating and reasoning about the types of an expression and the unknown context.

, we do not know whether the expression is embedded in a larger context. To formalize the ANF translation, it helps to have a compositional syntax for translating and reasoning about the types of an expression and the unknown context.

To make the translation compositional, we index the ANF translation by a target language (non-first-class) continuation ![]() representing the rest of the computation in which a translated expression will be used.Footnote 4 A continuation

representing the rest of the computation in which a translated expression will be used.Footnote 4 A continuation ![]() is a program with a hole (single linear variable)

is a program with a hole (single linear variable) ![]() $[\cdot]$ and can be composed with a computation

$[\cdot]$ and can be composed with a computation ![]() , written

, written ![]() , to form a program

, to form a program ![]() . Keeping continuations non-first-class ensures that continuations must be used linearly and avoids control effects, which cause inconsistency with dependent types (Barthe & Uustalu, Reference Barthe and Uustalu2002; Herbelin, 2005). In ANF, there are only two continuations: either

. Keeping continuations non-first-class ensures that continuations must be used linearly and avoids control effects, which cause inconsistency with dependent types (Barthe & Uustalu, Reference Barthe and Uustalu2002; Herbelin, 2005). In ANF, there are only two continuations: either ![]() $[\cdot]$ or

$[\cdot]$ or ![]() . Using continuations, we define ANF translation for

. Using continuations, we define ANF translation for ![]() $\Sigma$ types and second projections as follows. We use

$\Sigma$ types and second projections as follows. We use ![]() as shorthand for translating

as shorthand for translating ![]() with an empty continuation,

with an empty continuation, ![]() .

.

This allows us to focus on composing the primitive operations instead of reassociating ![]() bindings.

bindings.

For compositional reasoning, we develop a type system for continuations. The key typing rule is the following.

The type ![]() of continuations describes that the continuation must be composed with the term

of continuations describes that the continuation must be composed with the term ![]() of type

of type ![]() , and the result will be of type

, and the result will be of type ![]() . Note that this type allows us to introduce the definition

. Note that this type allows us to introduce the definition ![]() via the type, before we know how the continuation is used.Footnote 5 We discuss this rule further in Section 4, and how continuation typing is not an extension to the target type theory.

via the type, before we know how the continuation is used.Footnote 5 We discuss this rule further in Section 4, and how continuation typing is not an extension to the target type theory.

The key lemma to prove type preservation is the following.

Lemma 2.1 ![]()

The intuition behind this lemma shows that type preservation follows if every time we construct a continuation ![]() , we show that

, we show that ![]() is well typed. The translation starts with an empty continuation

is well typed. The translation starts with an empty continuation ![]() , which is trivially well typed. We systematically build up the translation of

, which is trivially well typed. We systematically build up the translation of ![]() in

in ![]() by recurring on sub-expressions of

by recurring on sub-expressions of ![]() and building up a new continuation

and building up a new continuation ![]() . If each time we can show that this

. If each time we can show that this ![]() is well typed, then by induction, the whole translation is type preserving. This lemma is indexed by an additional environment

is well typed, then by induction, the whole translation is type preserving. This lemma is indexed by an additional environment ![]() and answer type

and answer type ![]() . Intuitively, this is because it is the continuation

. Intuitively, this is because it is the continuation ![]() , not the expression

, not the expression ![]() , that dictates the final answer type

, that dictates the final answer type ![]() of the translation, and the environment will provide some additional equalities and definitions in

of the translation, and the environment will provide some additional equalities and definitions in ![]() under which we can type check the transformed

under which we can type check the transformed ![]() .

.

3 Source: ECC with definitions

Our source language, ECC, is Luo’s Extended Calculus of Constructions (ECC) (Luo, Reference Luo1990) extended with dependent elimination of booleans, natural numbers with the recursive eliminator, and definitions (Severi & Poll, Reference Severi and Poll1994). This language is a subset of CIC and is based on the presentation given in the Coq reference manualFootnote 6; Timany & Sozeau, (Reference Timany and Sozeau2017) give a recent account of the metatheory for the CIC, including all the features of ECC. We typeset ECC in a ![]() . We present the syntax of ECC in Figure 15. ECC extends the Calculus of Constructions (CC) (Coquand & Huet, Reference Coquand and Huet1988) with

. We present the syntax of ECC in Figure 15. ECC extends the Calculus of Constructions (CC) (Coquand & Huet, Reference Coquand and Huet1988) with ![]() $\Sigma$ types (strong dependent pairs) and an infinite predicative hierarchy of universes. There is no explicit phase distinction; that is, there is no syntactic distinction between terms, which represent run-time expressions, and types, which classify terms. However, we usually use the meta-variable

$\Sigma$ types (strong dependent pairs) and an infinite predicative hierarchy of universes. There is no explicit phase distinction; that is, there is no syntactic distinction between terms, which represent run-time expressions, and types, which classify terms. However, we usually use the meta-variable ![]() to evoke a term and the meta-variables

to evoke a term and the meta-variables ![]() and

and ![]() to evoke a type. The language includes one impredicative universe,

to evoke a type. The language includes one impredicative universe, ![]() , and an infinite hierarchy of predicative universes

, and an infinite hierarchy of predicative universes ![]() . The syntax of expressions

. The syntax of expressions ![]() includes names

includes names ![]() , universes

, universes ![]() , dependent function types

, dependent function types ![]() , functions

, functions ![]() , application

, application ![]() , dependent pair types

, dependent pair types ![]() , dependent pairs

, dependent pairs ![]() , first

, first ![]() and second

and second ![]() projections of dependent pairs, dependent let

projections of dependent pairs, dependent let ![]() , the boolean type

, the boolean type ![]() , the boolean values

, the boolean values ![]() , dependent if

, dependent if ![]() , the natural number type

, the natural number type ![]() , the natural number values

, the natural number values ![]() and

and ![]() , and the recursive eliminator for natural numbers

, and the recursive eliminator for natural numbers ![]() . For brevity, we omit the type annotation on dependent pairs, as in

. For brevity, we omit the type annotation on dependent pairs, as in ![]() . Note that

. Note that ![]() bound definitions do not include type annotations; this is not standard, but type checking is still decidable (Severi & Poll, Reference Severi and Poll1994), and it simplifies our ANF translation. As is standard, we assume uniqueness of names and consider syntax up to

bound definitions do not include type annotations; this is not standard, but type checking is still decidable (Severi & Poll, Reference Severi and Poll1994), and it simplifies our ANF translation. As is standard, we assume uniqueness of names and consider syntax up to ![]() $\alpha$-equivalence.

$\alpha$-equivalence.

The following table summarizes the judgments for ECC and the new notation introduced, in particular the notation for definitions in contexts and substitution of variables for expressions.

In Figure 2, we give the reductions ![]() for ECC, which are entirely standard. We extend reduction to conversion by defining

for ECC, which are entirely standard. We extend reduction to conversion by defining ![]() to be the reflexive, transitive, compatible closure of reduction

to be the reflexive, transitive, compatible closure of reduction ![]() $\triangleright$. The conversion relation is used to compute equivalence between types, but we can also view it as the operational semantics for the language. We define

$\triangleright$. The conversion relation is used to compute equivalence between types, but we can also view it as the operational semantics for the language. We define ![]() as the evaluation function for whole programs using conversion, which we use in our compiler correctness proof.

as the evaluation function for whole programs using conversion, which we use in our compiler correctness proof.

Fig. 1: ECC syntax.

Fig. 2: ECC dynamic semantics (excerpt).

In Figure 3, we define definitional equivalence (or just equivalence) ![]() as conversion up to

as conversion up to ![]() $\eta$-equivalence. We use the notation

$\eta$-equivalence. We use the notation ![]() for equivalence, eliding the environment when it is obvious or unnecessary. We also define cumulativity (subtyping)

for equivalence, eliding the environment when it is obvious or unnecessary. We also define cumulativity (subtyping) ![]() , to allow types in lower universes to inhabit higher universes.

, to allow types in lower universes to inhabit higher universes.

Fig. 3: ECC equivalence and subtyping (excerpt).

We define the type system for ECC in Figure 4, which is mutually defined with well-formedness of environments. The typing rules are entirely standard for a dependent type system. Note that types themselves, such as ![]() have types (called universes), and universes also have types which are higher universes. In [AX-PROP], the type of

have types (called universes), and universes also have types which are higher universes. In [AX-PROP], the type of ![]() is

is ![]() , and in [Ax-Type], the type of each universe

, and in [Ax-Type], the type of each universe ![]() is the next higher universe

is the next higher universe ![]() . Note that we have impredicative function types in

. Note that we have impredicative function types in ![]() , given by [Prod-Prop]. For this work, we ignore the

, given by [Prod-Prop]. For this work, we ignore the ![]() versus

versus ![]() distinction used in some type theories, such as Coq’s, although adding it causes no difficulty. Note that the rules for second projection, [Snd], let, [Let], if, [If], and the eliminator for natural numbers [ElimNat] substitute sub-expressions into the type system. These are the key typing rules that introduce difficulty in type-preserving compilation for dependent types.

distinction used in some type theories, such as Coq’s, although adding it causes no difficulty. Note that the rules for second projection, [Snd], let, [Let], if, [If], and the eliminator for natural numbers [ElimNat] substitute sub-expressions into the type system. These are the key typing rules that introduce difficulty in type-preserving compilation for dependent types.

Fig. 4: ECC typing (excerpt).

4 Target: ECC with ANF support

Our target language, ![]() $\mathrm{CC}_{e}^{A}$, is a variant of ECC with a modified typing rule for dependent

$\mathrm{CC}_{e}^{A}$, is a variant of ECC with a modified typing rule for dependent ![]() that introduces propositional equalities between terms and equality reflection for accessing assumed equalities. The type system of

that introduces propositional equalities between terms and equality reflection for accessing assumed equalities. The type system of ![]() $\mathrm{CC}_{e}^{A}$ is not particularly novel as its type theory is adapted from the extensional Calculus of Constructions (Oury, Reference Oury2005). While

$\mathrm{CC}_{e}^{A}$ is not particularly novel as its type theory is adapted from the extensional Calculus of Constructions (Oury, Reference Oury2005). While ![]() $\mathrm{CC}_{e}^{A}$ supports ANF syntax, the full language is not ANF-restricted; it has the same syntax as ECC. We do not restrict the full language because maintaining ANF while type checking adds needless complexity, especially when checking for equality since conversion often breaks ANF. Instead, we show that our compiler generates only ANF restricted terms in

$\mathrm{CC}_{e}^{A}$ supports ANF syntax, the full language is not ANF-restricted; it has the same syntax as ECC. We do not restrict the full language because maintaining ANF while type checking adds needless complexity, especially when checking for equality since conversion often breaks ANF. Instead, we show that our compiler generates only ANF restricted terms in ![]() $\mathrm{CC}_{e}^{A}$ and define a separate ANF-preserving machine-like semantics for evaluating programs in ANF. We typeset

$\mathrm{CC}_{e}^{A}$ and define a separate ANF-preserving machine-like semantics for evaluating programs in ANF. We typeset ![]() $\mathrm{CC}_{e}^{A}$ in a

$\mathrm{CC}_{e}^{A}$ in a ![]() ; in later sections, we reserve this font exclusively for the ANF restricted

; in later sections, we reserve this font exclusively for the ANF restricted ![]() $\mathrm{CC}_{e}^{A}$. This ability to break ANF locally to support reasoning is similar to the language

$\mathrm{CC}_{e}^{A}$. This ability to break ANF locally to support reasoning is similar to the language ![]() $F_J$ of Maurer et al. (Reference Maurer, Downen, Ariola and Peyton Jones2017), which does not enforce ANF syntactically, but supports ANF transformation and optimization with join points.

$F_J$ of Maurer et al. (Reference Maurer, Downen, Ariola and Peyton Jones2017), which does not enforce ANF syntactically, but supports ANF transformation and optimization with join points.

We can imagine the compilation process as either (1) generating ANF syntax in ![]() $\mathrm{CC}_{e}^{A}$ from ECC or (2) as first embedding ECC in

$\mathrm{CC}_{e}^{A}$ from ECC or (2) as first embedding ECC in ![]() $\mathrm{CC}_{e}^{A}$ and then rewriting

$\mathrm{CC}_{e}^{A}$ and then rewriting ![]() $\mathrm{CC}_{e}^{A}$ terms into ANF. In Section 5, we present the compiler as process (1), a compiler from ECC to ANF

$\mathrm{CC}_{e}^{A}$ terms into ANF. In Section 5, we present the compiler as process (1), a compiler from ECC to ANF ![]() $\mathrm{CC}_{e}^{A}$. In this section, we develop most of the supporting metatheory necessary for ANF as intra-language equivalences and process (2) may be a more helpful intuition.

$\mathrm{CC}_{e}^{A}$. In this section, we develop most of the supporting metatheory necessary for ANF as intra-language equivalences and process (2) may be a more helpful intuition.

We give the ANF syntax for ![]() $\mathrm{CC}_{e}^{A}$ in Figure 5(a). We impose a syntactic distinction between values

$\mathrm{CC}_{e}^{A}$ in Figure 5(a). We impose a syntactic distinction between values ![]() which do not reduce, computations

which do not reduce, computations ![]() which eliminate values and can be composed using continuations

which eliminate values and can be composed using continuations ![]() , and configurations

, and configurations ![]() which represent the machine configurations executed by the ANF machine semantics. We add the identity type

which represent the machine configurations executed by the ANF machine semantics. We add the identity type ![]() and

and ![]() to enable preserving dependent typing for

to enable preserving dependent typing for ![]() expressions and the join-point optimization, further described in Section 6. We do not require an elimination form for identity types; they are instead used via the restricted form of equality reflection in the equivalence judgment. A continuation

expressions and the join-point optimization, further described in Section 6. We do not require an elimination form for identity types; they are instead used via the restricted form of equality reflection in the equivalence judgment. A continuation ![]() is a program with a hole and is composed

is a program with a hole and is composed ![]() with a computation

with a computation ![]() to form a configuration

to form a configuration ![]() . For example,

. For example, ![]() . Since continuations are not first-class objects, we cannot express control effects. Note that despite the syntactic distinctions, we still do not enforce a phase distinction—configurations (programs) can appear in types. Finally in Figure 5(b), we give the full non-ANF syntax, denoted by meta-variables

. Since continuations are not first-class objects, we cannot express control effects. Note that despite the syntactic distinctions, we still do not enforce a phase distinction—configurations (programs) can appear in types. Finally in Figure 5(b), we give the full non-ANF syntax, denoted by meta-variables ![]() . As done with ECC, we usually use the meta-variable

. As done with ECC, we usually use the meta-variable ![]() to evoke a term and the meta-variables

to evoke a term and the meta-variables ![]() to evoke a type.

to evoke a type.

Fig. 5: ![]() $\mathrm{CC}_{e}^{A}$ syntax.

$\mathrm{CC}_{e}^{A}$ syntax.

To summarize, the meaning of the judgments of ![]() $\mathrm{CC}_{e}^{A}$ is the same as ECC. However,

$\mathrm{CC}_{e}^{A}$ is the same as ECC. However, ![]() $\mathrm{CC}_{e}^{A}$ has additional syntax for the identity type.

$\mathrm{CC}_{e}^{A}$ has additional syntax for the identity type.

We give the new typing rules in Figure 6. The rules for the identity type are standard. The key change in ![]() $\mathrm{CC}_{e}^{A}$ is in the typing rule for

$\mathrm{CC}_{e}^{A}$ is in the typing rule for ![]() . The typing rule for

. The typing rule for ![]() introduces a propositional equality into the typing environment for each branch. These record a machine step: the machine will have reduced

introduces a propositional equality into the typing environment for each branch. These record a machine step: the machine will have reduced ![]() to

to ![]() before jumping to the first branch and reduced

before jumping to the first branch and reduced ![]() to

to ![]() before jumping to the second branch. This is necessary to support the type-preserving ANF transformation of

before jumping to the second branch. This is necessary to support the type-preserving ANF transformation of ![]() .

.

Fig. 6: ![]() $\mathrm{CC}_{e}^{A}$ typing (excerpt).

$\mathrm{CC}_{e}^{A}$ typing (excerpt).

We require a new definition of equivalence to support extensionality, as shown in Figure 7. The rule ![]() is used to determine two terms are equivalent if there is a proof of their equality. In particular, we use this rule to access equalities in the context such as those introduced in the typing rule for

is used to determine two terms are equivalent if there is a proof of their equality. In particular, we use this rule to access equalities in the context such as those introduced in the typing rule for ![]() . We add congruence rules for all syntactic forms including the identity type. The rules

. We add congruence rules for all syntactic forms including the identity type. The rules ![]() Footnote 7 and

Footnote 7 and ![]() state that equivalence is preserved under substitution and the standard reduction defined for ECC. We also include

state that equivalence is preserved under substitution and the standard reduction defined for ECC. We also include ![]() $\eta$-equivalence as defined for ECC and

$\eta$-equivalence as defined for ECC and ![]() $\eta$-equivalences for booleans.

$\eta$-equivalences for booleans.

Fig. 7: ![]() $\mathrm{CC}_{e}^{A}$ equivalence (excerpt).

$\mathrm{CC}_{e}^{A}$ equivalence (excerpt).

Fig. 8: Composition of configurations.

To ensure reduction preserves ANF, we define composition of a continuation ![]() and a configuration

and a configuration ![]() , Figure 24, typically called renormalization in the literature (Sabry & Wadler Reference Sabry and Wadler1997; Kennedy Reference Kennedy2007). In ANF, all continuations are left associated, so the standard definition of substitution does not preserve ANF, unlike in alternatives such as CPS or monadic form. Note that

, Figure 24, typically called renormalization in the literature (Sabry & Wadler Reference Sabry and Wadler1997; Kennedy Reference Kennedy2007). In ANF, all continuations are left associated, so the standard definition of substitution does not preserve ANF, unlike in alternatives such as CPS or monadic form. Note that ![]() $\beta$-reduction takes an ANF configuration

$\beta$-reduction takes an ANF configuration ![]() but would naßvely produce

but would naßvely produce ![]() . Substituting the term

. Substituting the term ![]() , a configuration, into the continuation

, a configuration, into the continuation ![]() could result in the non-ANF term

could result in the non-ANF term ![]() . When composing a continuation with a configuration,

. When composing a continuation with a configuration, ![]() , we unnest all continuations so they remain left associated. Note that these definitions rely on our uniqueness-of-names assumption.

, we unnest all continuations so they remain left associated. Note that these definitions rely on our uniqueness-of-names assumption.

Digression on composition in ANF. In the literature, the composition operation ![]() is usually introduced as renormalization, as if the only intuition for why it exists is “well, it happens that ANF is not preserved under

is usually introduced as renormalization, as if the only intuition for why it exists is “well, it happens that ANF is not preserved under ![]() $\beta$-reduction.” It is not mere coincidence; the intuition for this operation is composition, and having a syntax for composing terms is not only useful for stating

$\beta$-reduction.” It is not mere coincidence; the intuition for this operation is composition, and having a syntax for composing terms is not only useful for stating ![]() $\beta$-reduction, but useful for all reasoning about ANF. This should not come as a surprise—compositional reasoning is useful. The only surprise is that the composition operation is not the usual one used in programming language semantics, that is, substitution. In ANF, as in monadic normal form, substitution can be used to compose any expression with a value, since names are values and values can always be replaced by values. But substitution cannot just replace a name, which is a value, with a computation or configuration. That would not be well typed. So how do we compose computations with configurations? We can use

$\beta$-reduction, but useful for all reasoning about ANF. This should not come as a surprise—compositional reasoning is useful. The only surprise is that the composition operation is not the usual one used in programming language semantics, that is, substitution. In ANF, as in monadic normal form, substitution can be used to compose any expression with a value, since names are values and values can always be replaced by values. But substitution cannot just replace a name, which is a value, with a computation or configuration. That would not be well typed. So how do we compose computations with configurations? We can use ![]() , as in

, as in ![]() , which we can imagine as an explicit substitution. In monadic form, there is no distinction between computations and configurations, so the same term works to compose configurations. But in ANF, we have no object-level term to compose configurations or continuations. We cannot substitute a configuration

, which we can imagine as an explicit substitution. In monadic form, there is no distinction between computations and configurations, so the same term works to compose configurations. But in ANF, we have no object-level term to compose configurations or continuations. We cannot substitute a configuration ![]() into a continuation

into a continuation ![]() , since this would result in the non-ANF (but valid monadic) expression

, since this would result in the non-ANF (but valid monadic) expression ![]() . Instead, ANF requires a new operation to compose configurations:

. Instead, ANF requires a new operation to compose configurations: ![]() . This operation is more generally known as hereditary substitution (Watkins et al., Reference Watkins, Cervesato, Pfenning and Walker2003), a form of substitution that maintains canonical forms. So we can think of it as a form of substitution, or, simply, as composition.

. This operation is more generally known as hereditary substitution (Watkins et al., Reference Watkins, Cervesato, Pfenning and Walker2003), a form of substitution that maintains canonical forms. So we can think of it as a form of substitution, or, simply, as composition.

In Figure 9, we present the call-by-value (CBV) evaluation semantics for ANF ![]() $\mathrm{CC}_{e}^{A}$ terms. The semantics is only for the run-time evaluation of configurations; during type checking, we continue to use the conversion relation defined in Section 3. The semantics is essentially standard, but recall that

$\mathrm{CC}_{e}^{A}$ terms. The semantics is only for the run-time evaluation of configurations; during type checking, we continue to use the conversion relation defined in Section 3. The semantics is essentially standard, but recall that ![]() $\beta$-reduction produces a configuration

$\beta$-reduction produces a configuration ![]() which must be composed with the existing continuation

which must be composed with the existing continuation ![]() . To maintain ANF, the natural number eliminator for the

. To maintain ANF, the natural number eliminator for the ![]() case must first let-bind the intermediate application and recursive call before plugging the final application into the existing continuation

case must first let-bind the intermediate application and recursive call before plugging the final application into the existing continuation ![]() .

.

Fig. 9: ![]() $\mathrm{CC}_{e}^{A}$ evaluation.

$\mathrm{CC}_{e}^{A}$ evaluation.

4.1 Dependent continuation typing

The ANF translation manipulates continuations ![]() as independent entities. To reason about them, and thus to reason about the translation, we introduce continuation typing, defined in Figure 10. The type

as independent entities. To reason about them, and thus to reason about the translation, we introduce continuation typing, defined in Figure 10. The type ![]() of a continuation expresses that this continuation expects to be composed with a term equal to the configuration

of a continuation expresses that this continuation expects to be composed with a term equal to the configuration ![]() of type

of type ![]() and returns a result of type

and returns a result of type ![]() when completed. Normally,

when completed. Normally, ![]() is equivalent to some computation

is equivalent to some computation ![]() , but it must be generalized to a configuration

, but it must be generalized to a configuration ![]() to support typing

to support typing ![]() expressions. This type formally expresses the idea that

expressions. This type formally expresses the idea that ![]() is depended upon in the rest of the computation. The [K-Bind] rule ensures we have access to the specific

is depended upon in the rest of the computation. The [K-Bind] rule ensures we have access to the specific ![]() needed to type body of the

needed to type body of the ![]() continuation and is similar to the rule [T-Cont] used in the type-preserving CPS translation by Bowman et al., (Reference Bowman, Cong, Rioux and Ahmed2018). The rule [K-Empty] has an arbitrary term

continuation and is similar to the rule [T-Cont] used in the type-preserving CPS translation by Bowman et al., (Reference Bowman, Cong, Rioux and Ahmed2018). The rule [K-Empty] has an arbitrary term ![]() , since an empty continuation

, since an empty continuation ![]() $[\cdot]$ has no “rest of the program” that could depend on anything.

$[\cdot]$ has no “rest of the program” that could depend on anything.

Fig. 10: ![]() $\mathrm{CC}_{e}^{A}$ continuation typing.

$\mathrm{CC}_{e}^{A}$ continuation typing.

Intuitively, what we want from continuation typing is a compositionality property—that we can reason about the types of configurations ![]() (generally, for configurations

(generally, for configurations ![]() ) by composing the typing derivations for

) by composing the typing derivations for ![]() and

and ![]() . To get this property, a continuation type must express not merely the type of its hole

. To get this property, a continuation type must express not merely the type of its hole ![]() , but which term

, but which term ![]() will be bound in the hole. We see this formally from the typing rule [Let] (the same for

will be bound in the hole. We see this formally from the typing rule [Let] (the same for ![]() $\mathrm{CC}_{e}^{A}$ as for ECC in Section 3), since showing that

$\mathrm{CC}_{e}^{A}$ as for ECC in Section 3), since showing that ![]() is well typed requires showing that

is well typed requires showing that ![]() , that is, requires knowing the definition

, that is, requires knowing the definition ![]() . If we omit the expression

. If we omit the expression ![]() from the type of a continuation, we know there are some configurations

from the type of a continuation, we know there are some configurations ![]() that we cannot type check compositionally. Intuitively, if all we knew about

that we cannot type check compositionally. Intuitively, if all we knew about ![]() was its type, we would be in exactly the situation of trying to type check a continuation that has abstracted some dependent type that depends on the specific

was its type, we would be in exactly the situation of trying to type check a continuation that has abstracted some dependent type that depends on the specific ![]() into one that depends on an arbitrary

into one that depends on an arbitrary ![]() . We prove that our continuation typing is compositional in this way, Lemma 4.1 (Continuation Cut).

. We prove that our continuation typing is compositional in this way, Lemma 4.1 (Continuation Cut).

Note that the result of a continuation type cannot depend on the term that will be plugged in for the hole, that is, for a continuation ![]() does not depend on

does not depend on ![]() . To see this, first note that the initial continuation must be empty and thus cannot have a result type that depends on its hole. The ANF translation will take this initial empty continuation and compose it with intermediate continuations

. To see this, first note that the initial continuation must be empty and thus cannot have a result type that depends on its hole. The ANF translation will take this initial empty continuation and compose it with intermediate continuations ![]() . Since composing any continuation

. Since composing any continuation ![]()

![]() with any continuation

with any continuation ![]() results in a new continuation with the final result type

results in a new continuation with the final result type ![]() , then the composition of any two continuations cannot depend on the type of the hole.

, then the composition of any two continuations cannot depend on the type of the hole.

To prove that continuation typing is not an extension to the type system—that is, is admissible—we prove Lemmas 4.1 and 4.3, that plugging a well-typed computation or configuration into a well-typed continuation results in a well-typed term of the expected type.

We first show Lemma 4.1 (Continuation Cut), which is simple. This lemma tells us that our continuation typing allows for compositional reasoning about configurations ![]() whose result types do not depend on

whose result types do not depend on ![]() .

.

Lemma 4.1 (Continuation Cut) ![]()

Proof. By cases on ![]() .

.

Case:

, trivial.

, trivial.-

Case:

We must show that ![]() , which follows directly from [Let] since, by the continuation typing derivation, we have that

, which follows directly from [Let] since, by the continuation typing derivation, we have that ![]() .

.

Continuation typing seems to require that we compose a continuation ![]() syntactically with

syntactically with ![]() , but we will need to compose with some

, but we will need to compose with some ![]() . It is preferable to prove this as a lemma instead of building it into continuation typing to get a nicer induction property for continuation typing. The proof is essentially that substitution respects equivalence.

. It is preferable to prove this as a lemma instead of building it into continuation typing to get a nicer induction property for continuation typing. The proof is essentially that substitution respects equivalence.

Lemma 4.2 (Continuation Cut Modulo Equivalence) If ![]()

![]() , and

, and ![]() , then

, then ![]() .

.

Proof. By cases on the structure of ![]() .

.

Case:

Trivial.

Trivial.-

Case:

It suffices to show that: If ![]() then

then ![]() .

.

Note that anywhere in the derivation ![]() that

that ![]() is used, it must be used essentially as:

is used, it must be used essentially as: ![]() . We can replace any such use by

. We can replace any such use by ![]()

![]() to construct a derivation for

to construct a derivation for ![]() .

.

Some presentations of context typing (Gordon, Reference Gordon1995)Footnote 8, in non-dependent settings, use a rule like the following.

This suggests we could define continuation typing as follows.

That is, instead of adding separate rules [K-Empty] and [K-Bind], we define a well-typed continuation to be one whose composition with the expected term in the hole is well typed. Then, Lemma 4.1 (Continuation Cut) is definitional rather than admissible. This rule is somewhat surprising; it appears very much like the definition of [Cut], except the computation ![]() being composed with the continuation comes from its type, and the continuation remains un-composed in what we would consider the output of the rule.

being composed with the continuation comes from its type, and the continuation remains un-composed in what we would consider the output of the rule.

The presentations are equivalent, but it is less clear how [K-Type] is related to the definitions we wish to focus on. It is exactly the premises of [K-Bind] that we need to recover type-preservation for ANF, so we choose the presentation with [K-Bind]. However, the rule [K-Type] is more general in the sense that the continuation typing does not need any changes as the definition of continuations change.

The final lemma about continuation typing is also the key to why ANF is type preserving for ![]() . The heterogeneous composition operations perform the ANF translation on

. The heterogeneous composition operations perform the ANF translation on ![]() expressions by composing the branches with the current continuation, reassociating all intermediate computations. The following lemma states that if a configuration

expressions by composing the branches with the current continuation, reassociating all intermediate computations. The following lemma states that if a configuration ![]() is well typed and equivalent to another (well-typed) configuration

is well typed and equivalent to another (well-typed) configuration ![]() under an extended environment

under an extended environment ![]() , and the continuation

, and the continuation ![]() is well typed with respect to

is well typed with respect to ![]() , then the composition

, then the composition ![]() is well typed. We include the additional environment

is well typed. We include the additional environment ![]() , as this environment contains information about new variables introduced through the composition with

, as this environment contains information about new variables introduced through the composition with ![]() , such as definitions and propositional equalities. We choose to include an explicit extra environment

, such as definitions and propositional equalities. We choose to include an explicit extra environment ![]() to avoid needing to prove tedious properties about individual environments. Thus, the proof is simple, except for the

to avoid needing to prove tedious properties about individual environments. Thus, the proof is simple, except for the ![]() case, which essentially must prove that ANF is type preserving for

case, which essentially must prove that ANF is type preserving for ![]() .

.

Lemma 4.3 (Heterogeneous Continuation Cut) If ![]()

![]() such that

such that ![]() and

and ![]() , then

, then ![]() .

.

Proof. By induction on ![]() .

.

Case:

, by Lemma 4.2.

, by Lemma 4.2.-

Case:

Our goal follows by the induction hypothesis applied to the sub-expression

if we can show (1)

if we can show (1)  and (2)

and (2)  under a context

under a context  . (1) follows by the following equations and transitivity of

. (1) follows by the following equations and transitivity of  $\equiv:$:

For (2), note that

$\equiv:$:

For (2), note that

from our premises.

from our premises.

-

Case:

These follow by the induction hypotheses applied to the sub-expressions

and

and  if we can show (1)

if we can show (1)  and (2)

and (2)  under a context

under a context  (analogously for

(analogously for  . For (1), note that

. For (1), note that  by [

by [ $\equiv$-Reflect] and the typing rule [Var].

For (2), note that

$\equiv$-Reflect] and the typing rule [Var].

For (2), note that

from our premises and weakening. By [

from our premises and weakening. By [ $\equiv$-Subst], we know

$\equiv$-Subst], we know  , which follows by [

, which follows by [ $\equiv$-Reflect] and the typing rule [Var].

$\equiv$-Reflect] and the typing rule [Var].

4.2 Metatheory

To demonstrate that the new typing rule for ![]() is consistent, we develop a syntactic model of

is consistent, we develop a syntactic model of ![]() $\mathrm{CC}_{e}^{A}$ in extensional CIC (eCIC). We also prove subject reduction for

$\mathrm{CC}_{e}^{A}$ in extensional CIC (eCIC). We also prove subject reduction for ![]() $\mathrm{CC}_{e}^{A}$. Subject reduction is valuable property for a typed IL to model various optimizations through the equational theory of the language, such as modeling inlining as

$\mathrm{CC}_{e}^{A}$. Subject reduction is valuable property for a typed IL to model various optimizations through the equational theory of the language, such as modeling inlining as ![]() $\beta$-reduction. If subject reduction holds, then all these optimizations are type preserving.

$\beta$-reduction. If subject reduction holds, then all these optimizations are type preserving.

4.2.1 Consistency

Our syntactic model essentially implements the new ![]() rule using the convoy pattern (Chlipala, Reference Chlipala2013), but leaves the rest of the propositional equalities and equality reflection essentially unchanged. Each

rule using the convoy pattern (Chlipala, Reference Chlipala2013), but leaves the rest of the propositional equalities and equality reflection essentially unchanged. Each ![]() expression

expression ![]() is modeled as an

is modeled as an ![]() expression that returns a function expecting a proof that the predicate

expression that returns a function expecting a proof that the predicate ![]() is equal to

is equal to ![]() in the first branch and

in the first branch and ![]() in the second branch. The function, after receiving that proof, executes the code in the branch. The model

in the second branch. The function, after receiving that proof, executes the code in the branch. The model ![]() expression is then immediately applied to refl, the canonical proof of the identity type. We could eliminate equality reflection using the translation of Oury (Reference Oury2005), but this is not necessary for consistency.

expression is then immediately applied to refl, the canonical proof of the identity type. We could eliminate equality reflection using the translation of Oury (Reference Oury2005), but this is not necessary for consistency.

The essence of the model is given in Figure 11. There is only one interesting rule, corresponding to our altered dependent if rule. The model relies on auxiliary definitions in CIC, including subst, if-eta1 and if-eta2, whose types are given as inference rules in Figure 11. Note that the model for ![]() $\mathrm{CC}_{e}^{A}$’s

$\mathrm{CC}_{e}^{A}$’s ![]() is not valid ANF, so it does not suffice to merely use the convoy pattern if we want to take advantage of ANF for compilation.

is not valid ANF, so it does not suffice to merely use the convoy pattern if we want to take advantage of ANF for compilation.

Fig. 11: ![]() $\mathrm{CC}_{e}^{A}$ model in eCIC (excerpt).

$\mathrm{CC}_{e}^{A}$ model in eCIC (excerpt).

We show this is a syntactic model using the usual recipe, which is explained well by Boulier et al. (Reference Boulier, Pédrot and Tabareau2017): we show the translation from ![]() $\mathrm{CC}_{e}^{A}$ to eCIC preserves equivalence, typing, and the definition of

$\mathrm{CC}_{e}^{A}$ to eCIC preserves equivalence, typing, and the definition of ![]() $\perp$ (the empty type). This means that if

$\perp$ (the empty type). This means that if ![]() $\mathrm{CC}_{e}^{A}$ were inconsistent, then we could translate the proof of

$\mathrm{CC}_{e}^{A}$ were inconsistent, then we could translate the proof of ![]() $\perp$ into a proof of

$\perp$ into a proof of ![]() in eCIC, but no such proof exists in eCIC, so

in eCIC, but no such proof exists in eCIC, so ![]() $\mathrm{CC}_{e}^{A}$ is consistent.

$\mathrm{CC}_{e}^{A}$ is consistent.

We use the usual definition of ![]() as

as ![]() , and the same in eCIC. It is trivial that the definition is preserved.

, and the same in eCIC. It is trivial that the definition is preserved.

Lemma 4.4 (Model Preserves Empty Type) ![]()

The essence of showing both that equivalence is preserved and that typing is preserved is in showing that the auxiliary definitions in Figure 11 exist and are well typed. We give these definitions in Coq in the supplementary materials, and the equivalences hold and are well typed without any additional axioms.

Lemma 4.5 (Model Preserves Equivalence) ![]()

Lemma 4.6 (Model Preserves Typing) ![]()

Consistency tells us that there does not exist a closed term of the empty type ![]() . This allows us to interpret types as specifications and well-typed terms as proofs.

. This allows us to interpret types as specifications and well-typed terms as proofs.

Theorem 4.7 (Consistency) There is no ![]() such that

such that ![]()

4.2.2 Syntactic metatheory

![]() $\mathrm{CC}_{e}^{A}$ satisfies all the usual syntactic metatheory properties, but the main property of interest for developing our typed IL is subject reduction. We present the proof of subject reduction, which follows the structure presented by Luo (Reference Luo1990).

$\mathrm{CC}_{e}^{A}$ satisfies all the usual syntactic metatheory properties, but the main property of interest for developing our typed IL is subject reduction. We present the proof of subject reduction, which follows the structure presented by Luo (Reference Luo1990).

Theorem 4.8 (Subject Reduction) ![]()

Proof. By induction on ![]() ■

■

The proof of subject reduction relies on some additional lemmas. In particular, the proof relies on subject reduction for single step reduction (in the [Red-Trans] case), and a lemma called Luo calls context replacement (in the [Red-Cong-Lam] and [Red-Cong-Let] cases).

Lemma 4.9 (Single Step Subject Reduction) ![]() .

.

Proof. By cases on ![]() . Most cases are straightforward, except for

. Most cases are straightforward, except for ![]() $\rhd_{\beta}$ and

$\rhd_{\beta}$ and ![]() $\rhd_{\zeta}$, which rely on Lemmas 4.12 and 4.13, respectively.

$\rhd_{\zeta}$, which rely on Lemmas 4.12 and 4.13, respectively.

Lemma 4.10 (Context Replacement) If ![]() and

and ![]() , then

, then ![]() .

.

Proof. By (mutual) induction on ![]() .

.

Because our typing contexts also contain definitions, we must also be able to replace a definition expression with an equivalent expression. This is required specifically for the [Red-Cong-Let] case in the proof of subject reduction.

Lemma 4.11 (Context Definition Replacement) If ![]() and

and ![]() , then

, then ![]() .

.

Proof. By (mutual) induction on ![]() .

.

Finally, the proof of single step subject reduction relies on a lemma Luo calls Cut, named after the cut rule in sequent calculus. We must also prove an additional similar lemma due to definitions in our context, specifically for the ![]() $\rhd_{\delta}$ case.

$\rhd_{\delta}$ case.

Lemma 4.12 (Cut) If ![]() and

and ![]() then

then ![]()

![]() .

.

Proof. By (mutual) induction on ![]() .

.

Lemma 4.13 (Cut With Definitions) ![]()

![]() .

.

Proof. By (mutual) induction on ![]() .

.

The proofs of both versions of context replacement and Cut are done by mutual induction, because typing in ![]() $\mathrm{CC}_{e}^{A}$ is mutually defined with well-formedness of contexts. Typing also relies on subtyping in the [Conv] case, subtyping relies on equivalence, and equivalence now relies on typing due to the equality reflection rule [

$\mathrm{CC}_{e}^{A}$ is mutually defined with well-formedness of contexts. Typing also relies on subtyping in the [Conv] case, subtyping relies on equivalence, and equivalence now relies on typing due to the equality reflection rule [![]() $\equiv$-Reflect]. The mutual reliance of all these judgments requires that these lemmas be proven by mutual induction. The lemma statements above are not the full statement of the lemma, which we omit for brevity, but the corollaries are of interest. For example, the full lemma definition for Lemma 4.10 is as follows:

$\equiv$-Reflect]. The mutual reliance of all these judgments requires that these lemmas be proven by mutual induction. The lemma statements above are not the full statement of the lemma, which we omit for brevity, but the corollaries are of interest. For example, the full lemma definition for Lemma 4.10 is as follows:

Lemma 4.14 (Context Replacement (full definition))

The full definitions for Lemmas 4.11, 4.12, and 4.13 are included in Appendix 1.

4.3 Correctness of ANF evaluation

In ![]() $\mathrm{CC}_{e}^{A}$, we have an ANF evaluation semantics for run time and a separate definitional equivalence and reduction system for type checking. We prove that these two coincide: running in our ANF evaluation semantics produces a value definitionally equivalent to the original term.

$\mathrm{CC}_{e}^{A}$, we have an ANF evaluation semantics for run time and a separate definitional equivalence and reduction system for type checking. We prove that these two coincide: running in our ANF evaluation semantics produces a value definitionally equivalent to the original term.

When computing definitional equivalence, we end up with terms that are not in ANF and can no longer be used in the ANF evaluation semantics. This is not a problem—we could always ANF translate the resulting term if needed—but can be confusing when reading equations. When this happens, we wrap terms in a distinctive boundary such as ![]() and

and ![]() . The boundary indicates the term is not normal, that is, not in A-normal form. The boundary is only meant to communicate with the reader; formally,

. The boundary indicates the term is not normal, that is, not in A-normal form. The boundary is only meant to communicate with the reader; formally, ![]() .

.

The heart of the correctness proof is actually naturality, a property found in the literature on continuations and CPS that essentially expresses freedom from control effects (e.g., (Thielecke Reference Thielecke2003) explain this well). Lemma 4.15 is the formal statement of naturality in ANF: composing a term ![]() with its continuation

with its continuation ![]() in ANF is equivalent to running

in ANF is equivalent to running ![]() to a value and plugging the result into the hole of the continuation

to a value and plugging the result into the hole of the continuation ![]() . Formally, this states that composing continuations in ANF is sound with respect to standard substitution. The proof follows by straightforward equational reasoning.

. Formally, this states that composing continuations in ANF is sound with respect to standard substitution. The proof follows by straightforward equational reasoning.

Lemma 4.15 (Naturality) ![]()

Proof By induction on the structure of ![]() .

.

Next we show that our ANF evaluation semantics are sound with respect to definitional equivalence. To do that, we first show that the small-step semantics are sound. Then, we show soundness of the evaluation function.

Lemma 4.16 (Small-step soundness) If ![]() .

.

Proof By cases on ![]() . Most cases follow easily from the ECC reduction relation and congruence, except for

. Most cases follow easily from the ECC reduction relation and congruence, except for ![]() $\mapsto_{\beta}$ which requires Lemma 4.15. We give representative cases.

$\mapsto_{\beta}$ which requires Lemma 4.15. We give representative cases.

Theorem 4.17 (Evaluation soundness) ![]() ■

■

Proof. By induction on the length n of the reduction sequence given by ![]() . Note that, unlike conversion, the ANF evaluation semantics have no congruence rules.

. Note that, unlike conversion, the ANF evaluation semantics have no congruence rules.

5 ANF translation

The ANF translation is presented in Figure 12. The translation is standard, defined inductively over syntax and indexed by a current continuation. The continuation is used when translating a value and is composed together “inside-out” the same way continuation composition is defined in Section 4. When translating a value such as ![]() , we plug the value into the current continuation and recursively translate the sub-expressions of the value if applicable. For non-values such as application, we make sequencing explicit by recursively translating each sub-expression with a continuation that binds the result and performs the rest of the computation.

, we plug the value into the current continuation and recursively translate the sub-expressions of the value if applicable. For non-values such as application, we make sequencing explicit by recursively translating each sub-expression with a continuation that binds the result and performs the rest of the computation.

Fig. 12: Naïve ANF translation.

We prove that the translation always produces syntax in ANF (Theorem 5.1). The proof is straightforward.

Theorem 5.1 (ANF) For all ![]() and

and ![]() ,

, ![]() for some

for some ![]() .

.

Our goal is to prove type preservation: if ![]() is well typed in the source, then

is well typed in the source, then ![]() is well typed at a translated type in the target. But to prove type preservation, we must also preserve the rest of the judgmental and syntactic structure that the dependent type system relies on. The type judgment is defined mutually inductively only with well-formedness of environments; all other judgments relied on are defined inductively. We proceed top-down, starting from our main theorem, in order to motivate where each lemma comes into play. The full proof of type preservation is omitted for brevity but is included in Appendix 1.

is well typed at a translated type in the target. But to prove type preservation, we must also preserve the rest of the judgmental and syntactic structure that the dependent type system relies on. The type judgment is defined mutually inductively only with well-formedness of environments; all other judgments relied on are defined inductively. We proceed top-down, starting from our main theorem, in order to motivate where each lemma comes into play. The full proof of type preservation is omitted for brevity but is included in Appendix 1.

Type-preservation is stated below. We do not prove it directly. Since the ANF translation is indexed by a continuation ![]() , we need a stronger induction hypothesis to reason about the type of the continuation

, we need a stronger induction hypothesis to reason about the type of the continuation ![]() .

.

Theorem 5.2 (Type Preservation) If ![]()

Proof. By Lemma 5.3, it suffices to show that ![]() , which follows by [K-Empty].

, which follows by [K-Empty].