Introduction

Originating from a designer's way of thinking, design thinking, as a methodology for finding and solving real-life, user-centric problems, has demonstrated its effectiveness in multiple areas. Existing literature suggests that design thinking in the K-12 context fosters 21st-century skills in students, such as creativity, collaboration, communication, metacognition, and critical thinking (Retna, 2016; Rusmann and Ejsing-Duun, 2022). Therefore, design thinking should be nurtured in school students. To instil design thinking in school education, a pedagogy needs to be developed in which useful instructions and evaluation techniques are formed, and delivery methods that are compatible with contemporary learning principles are formulated. Besides, to make the learning process purposeful and meaningful, factors such as the motivation and active engagement of the learner need to be addressed. Gamification is an approach that can enhance the effectiveness of educational activities through an engagement mechanism that helps learners remain interested, and hence can improve their performance by making their learning experience more enjoyable.

In the process of inculcating design thinking in school students, two Learning Objectives have been formulated: (1) to inculcate a creative mindset and (2) to support understanding of the abstract concepts underlying the design thinking process. To fulfil these objectives and create engagement in the learning process, a gamification approach has been adopted, and two gamified versions have been developed. The research objectives of this work are to assess the effectiveness of gamification:

• as a way of achieving the Learning Objectives and

• as a tool for inducing fun and engagement.

Based on these research objectives, two research questions are formed:

1. What is the effectiveness of the gamification proposed to achieve the learning objectives: L1. to inculcate a creative mindset and L2. to support understanding of the abstract concepts underlying the design process?

2. What is the effectiveness of the game elements in supporting fun and engagement?

To answer these research questions, the elements and principles of design, learning and games have been synthesized into a framework for a learning tool for school students. The framework includes a design thinking process called “IISC Design Thinking™” and its gamified version called “IISC DBox™”. The effectiveness of the framework as a learning tool has been evaluated in this work by conducting workshops that involved 77 school students.

In addition to presenting results from empirical studies for fulfilment of the above objectives, this paper also proposes an approach that can be used for identifying appropriate learning objectives, selecting appropriate game elements to fulfil these objectives, and integrating appropriate game elements with design and learning elements. The paper also proposes a general approach for assessing the effectiveness of a gamified version for attaining a given set of learning objectives.

The research paper is divided into seven sections. Section “Background” provides the literature review (i.e., the need for teaching DT in school education, the role of gamification in learning, and the contribution of existing design thinking games to the field of design education). Section “Research methodology” summarizes the research methodology; details of the research methodology are provided in Sections “Nurturing a mindset for creativity: formulation of L1 based on earlier literature” and “Nurturing design concepts before performing design activity steps: formulation of learning objective 2 to address limitations in V1”, which cover the formulation of the Learning Objectives and the development and testing of two alternative gamified versions. Section “Discussion” discusses research contributions. Section “Conclusions and future work” suggests directions for future work.

Background

Design is a process of finding problems from an existing situation and converting the situation into a preferred one by solving the problems (Simon, 1969). While design has been suggested and practised for over half a century as a means of teaching various critical skills not taught in conventional engineering education, its universal recognition as a tool for education across domains, especially in school education, seems more recent. In particular, design thinking (DT) is emerging as an effective, domain-agnostic tool for the development of a number of sought-after skills in the 21st century. Johansson-Sköldberg et al. (2013) defined DT as a simplified version of “designerly thinking” or a way of describing a designer's methods that is applied into an academic or practical discourse. It is an iterative process that involves identifying goals (needs), generating proposals to satisfy the goals, and improving both the goals and proposals (Chakrabarti, 2013). Design and the design thinking process (DTP) have been taught in undergraduate and post-graduate courses, especially in the domains of design, engineering, and management. The following section discusses the need to inculcate DTP in school education.

Need for teaching DT in school education

A number of skills have often been cited as some of the most significant ones needed for the workforce in the 21st century. For instance, according to the World Economic Forum (2016), complex problem-solving, critical thinking, and creativity are the three most important skills required for the future workforce. Similarly, the report by Finegold and Notabartolo (2010) identified critical and creative thinking, problem-solving, communication, collaboration, and flexibility and adaptability as the five most relevant skills for the 21st-century workforce. Furthermore, there is a coherence between the skills required from a workforce and the skills that students need to develop. Based on a number of surveys and studies, the Association of American Colleges and Universities (AAC&U) recommended that the same intellectual skills (i.e., critical and creative thinking, communication, teamwork, and problem solving) be taught from the beginning of education (AAC&U, 2007). As noted in the reports of the European Commission (European Commission, 2016) and Pacific Policy Research Center (Pacific Policy Research Center, 2010), creative thinking, collaboration, critical thinking, and problem solving are the critical 21st-century learning and innovation skills that young children need to be equipped with from early on in their life. However, in India, the importance of these skills has been emphasized only very recently [e.g., in the draft National Education Policy, India, 2019 (National Education Policy, 2019)], at a time when the current Indian education system is under serious criticism because of outdated curricula and teaching methodology. In the South Asian context, a large part of the school education system puts greater emphasis on the retention of knowledge, leaving less scope for the development of skills such as creativity, critical thinking, and problem solving (Bhatt et al., 2021a).
In addition, the assessment of learning in schools often focuses on memory-based learning and on testing a student's ability to reproduce content knowledge (National Education Policy India, 2016). Moreover, in classrooms, learning is receptive (where the teacher demonstrates, describes, or writes the teaching content and information is passed one way), and the assignments are typically solved individually (Bhatt et al., 2021a). This inhibits the development of collaboration and communication skills in students. The dominance of rote learning is not limited to India. For example, various studies (Safdar, 2013; Balci, 2019) show the dominance of rote learning in the education systems of countries like Turkey and Pakistan. Besides, as noted by Razzouk and Shute (2012), if schools continue to focus on increasing students’ proficiency in traditional subjects such as math and reading via didactic approaches, many students are left disengaged. As stated by Cassim (2013), complex problems cannot be easily solved by analytical thinking alone, which is the dominant mode of thought adopted in education. The above literature indicates that there is a substantial gap between what is offered by the current education system and the requirements of the future.

Design has often been regarded as a central element of engineering education. For example, the Moulton report on “Engineering Design Education” of 1976 laid great emphasis on design as a central focus of engineering education in the UK (McMahon et al., 2003). Design has also been included as an essential part of curricular goals for undergraduate engineering in the CDIO initiatives (Crawley et al., 2011).

Its importance as a generic tool for education across domains, however, seems more recent, and in the form commonly known as design thinking. For instance, Melles et al. (2012) noted the employment of design thinking to improve decision-making practices in various fields and applications such as healthcare systems and services, library system design, strategy and management, operations and organizational studies, and projects where social innovation and social impact matter. Lately, design thinking has been recognized as a useful approach for promoting 21st-century skills in school education. As noted by Scheer et al. (2012), DT is effective in fostering 21st-century learning through its application in complex interdisciplinary projects in a holistic and constructivist manner. DT complements mono-disciplinary thinking (Lindberg et al., 2010), encourages students to engage in collaborative learning, and provides opportunities to express opinions and to think in new ways (Carroll et al., 2010). Helping students to think like designers may better prepare them to deal with difficult situations and to solve complex problems in school, in their careers, and in life in general (Razzouk and Shute, 2012). As experts foresee, innovations that stem from creative thinking during the design process are key to economic growth (Roberts, 2006). Affinity for teamwork is one of the characteristics of DT that enables communication, as described by Owen (2005). With the use of Bloom's taxonomy, Bhatt et al. (2021a) identified the association between the instructions that are used in performing design thinking activities and the cognitive processes.
The results showed that following the instructions while performing design activities enabled higher-level cognitive processes such as applying, analyzing, evaluating, and creating; these can lead to the development of 21st-century skills. Rusmann and Ejsing-Duun (2021) reviewed research articles that investigated design thinking at the primary or secondary school levels, and identified the links between design thinking competencies and 21st-century skills such as collaboration, communication, metacognition, and critical thinking. The results imply that nurturing DTP should help students acquire these skills and instil a school of thought that would contribute to the development of the above skill set. Therefore, there is a need to inculcate DTP in school education.

Gamification and learning

Because of their ability to keep players engaged in the process, games are used in education to serve various purposes (e.g., to imbibe skills and knowledge, to train, to spread awareness). There are three types of game approaches used in education: serious games, gamification, and simulation games. “Gamification” uses elements of games (Deterding et al., 2011) and applies them to existing learning courses to fulfil learning objectives. A “serious game” is a full-fledged game “that does not have entertainment, enjoyment, or fun as their primary purpose” (Michael and Chen, 2005). In contrast, a “simulation game” is a simulation [representation of reality or some known process/phenomenon (Ochoa, 1969)] with game elements added to it (Crooltall et al., 1987).

The motive of gamification is primarily to alter a contextual learner behavior or attitude (Landers, 2014), improve performance, or maximize the required outcome. According to Kapp (2012), gamification can be adopted when one or more goals of an existing course or curriculum are to encourage learners, motivate action, influence behavior, drive innovation, help build skills, and acquire knowledge. Gamification is generally classified into structural gamification and content gamification (Kapp, 2012). Structural gamification is the application of game elements to propel learners through content with no alteration or changes to the content. In contrast, content gamification is the application of game elements, game mechanics, and game thinking to alter content to make it more game-like. Reeves and Read (2009) identified ten elements of a game, some or all of which can be adopted for the gamification of a course (i.e., self-representation with avatars, three-dimensional environments, narrative context, feedback, reputations, ranks, and levels, marketplaces and economies, competition under rules that are explicit and enforced, teams, parallel communication systems that can be easily configured, time pressure). Gamification can overcome significant problems like lack of learner motivation, lack of interactivity, or isolation, which lead to a high dropout rate in a course (Khalil and Ebner, 2014). The purpose of gamification can be served only when both of the following are satisfied: (a) the Learning Objectives and outcomes are well defined; and (b) efforts are spent to assess the effect of gamification on those learning outcomes (Morschheuser et al., 2018; Bhatt et al., 2021b).
Various empirical studies show that gamification has a positive effect on student interest, early engagement (Betts et al., 2013), and learning outcomes (e.g., retention rate) (Vaibhav and Gupta, 2014; Krause et al., 2015). Therefore, gamification can be a valuable technique for enhancing learning outcomes.

In the design context, Sjovoll and Gulden (2017) identified gamification as a typology of engagement that may elicit activities that lead to creative agency and subsequent enjoyment.

To identify various perspectives to be considered in game development, Cortes Sobrino et al. (2017) proposed a taxonomy for games with three categories (i.e., targeted user/public, the purpose of the game, and types of skill to be imbibed through the game), and classified 17 educational games of the Design Society Database. Later, Bhatt et al. (2021b) augmented the existing taxonomy by considering additional categories and proposed an extended taxonomy for aiding the development and evaluation of educational games. The authors found that the games developed and evaluated were either serious games or simulation games. This gives an opportunity to show how gamification can be developed and evaluated. Although this paper focuses on the use of gamification, it is worthwhile to mention the applications of related concepts such as “serious games” and “simulation games” in the field of design education.

Using the Serious Game Design Assessment (SGDA) framework (Mitgutsch and Alvarado, 2012), Ma et al. (2019) analyzed the existing innovation process (IP) game CONSORTiØ (Jeu, 2016), where the focus was on assessing the coherence of the game's purpose with the other design elements such as content and information, game mechanics, fiction and narrative, aesthetics and graphics, and framing. Furthermore, with the aim of transforming traditional innovation teaching, Ma et al. (2020) integrated eight general design frameworks for serious games, combined them with the specificities of innovation teaching, and proposed the Innovation Serious Games Design (ISGD) framework for the realization of teaching objectives.

The SGDA framework was used by Libe et al. (2020) to design a game to teach children a generic innovation process. Later, the game and its subsequent version were validated with third-grade and fifth-grade students; the reaction was measured at the end of the game (Libe et al., 2020; Boyet et al., 2021). The results showed that the children had fun and were willing to play the game again.

It is important to note that the SGDA and ISGD frameworks do not evaluate whether the game is effective for its designated purpose. Kirkpatrick's model (2006) is widely used for evaluating a game's effectiveness for its designated purpose. The model consists of four levels of evaluation: reaction, learning, behavior, and result. With the help of this model, Bhatt et al. (2021b) analyzed 20 games, classified them based on the levels of evaluation, and found that some existing games were not evaluated for their effectiveness on both learning and engagement. For example, the PDP game (Becker and Wits, 2014) and Innopoly (Berglund et al., 2011) only measure the effect of the game on learning but do not measure its ability to engage participants. The evaluation must be done in terms of the game's ability both to fulfil the learning goals and to engage participants in the learning process. This opens up scope for studying how to evaluate a game's effectiveness. In addition, existing games for DT lack an assessment framework (i.e., a method of assessing the process and outcomes generated by participants) and thus do not provide a complete pedagogical instrument. The above gaps provide an opportunity to develop and evaluate an educational gamified version that provides a pedagogical view in the field of design and innovation.

IISC design thinking and its gamified variants

By analyzing and distilling the essential steps from a large number of design processes and models developed over the last 50 years of design research, Bhaumik et al. (2019) developed a DTP model called “IISC”, discussed as follows. The IISC DTP model (developed at the Indian Institute of Science, Bangalore, India) consists of four generic, iterative stages: Identify, Ideate, Consolidate, and Select. Each stage is further divided into a number of activity steps (systematic procedures resulting in the overall design process) and instructions for carrying out each activity step (detailed information about collecting and processing relevant information and producing intended outcomes). The major activity steps within the four stages are given in Table 1. The IISC design steps direct learners to identify problems and user needs, create requirements, generate ideas, combine ideas into solutions, consolidate solutions, and evaluate solutions to select the most promising solution. In addition, within each stage of IISC, a list of design methods can be followed for carrying out the activity steps. For instance, methods like “brainstorming” or the “gallery method” can be used during the “Ideate” stage, while carrying out the step “generate ideas”. The IISC DTP model aims to aid designers or learners during designing to identify, formulate, and structure user-centric, real-life problems, and to solve these innovatively and effectively, the outcome of which can be a product, a process, a policy, or others.

Table 1. IISC DT stages and associated activity steps

There are many DT models, such as Stanford d.school (Design Thinking Bootleg, 2018), SUTD (Foo et al., 2017), and IDEO: Human Centered Design (Design Kit, 2015), that are made for practitioners (designers), aiming to assist in or provide a structure to design thinking. The DT model by Stanford d.school breaks design thinking down into five steps: (1) Empathize, (2) Define, (3) Ideate, (4) Prototype, and (5) Test. In this DT model, the first step is “Empathize”, which is only one of many ways of finding users’ problems, and therefore should be one of many possible means of carrying out the broad step of “Define”. Similarly, prototyping is one of many ways of consolidating, communicating, or testing a concept or a process, and thus should be one of many possible methodological options for the broad step “Test”. The “Identify” stage of the IISC DT model, therefore, consists of the activities “Empathize” and “Define”. Also, the step “prototyping” is considered as an activity and categorized under the broad stages of both “Consolidate” and “Select” in IISC DT. Besides, SUTD cards include methods like “house of quality”, “Finite Element Modeling”, “TRIZ”, etc. However, most of these design methods are relevant mainly in an engineering context and are more suitable for use by professional designers. Similarly, some of the methods proposed by IDEO are applicable only in the context of an organization. In contrast, IISC DT is a generic model for design thinking, not only for engineering or organizational applications. Rather than a cluster of formalized design methods, each stage of the IISC DT model consists of primitive, specific activities. These systematic/informal activities together externalize the DT process in a logical order in which the outcomes of a former activity step become input for a later one. Besides, the instructions of the activity steps are jargon-free and easily understandable by beginners.
Thus, it is appropriate for school education. Moreover, unlike most existing DT models, the IISC DT model provides, in addition to the stages, associated activity steps and design methods, a pedagogical framework (i.e., curriculum and instructional strategies). At different steps, it has evaluation criteria for assessing and marking as to how well the design process is carried out, and how good the resulting outcomes are. Therefore, IISC DT is intended to act as a complete learning tool for design thinking.

To keep participants engaged and make learning more effective, the authors, in their earlier work, adopted gamification as a technique to impart IISC DTP to school students, and developed a number of variants of the board game called IISC DBox (Bhatt et al., 2019; Bhaumik et al., 2019). The board game comprises level boards (corresponding to the IISC stages), activity cards (instructions to perform design activities), a workbook (for documenting the outcomes), performance evaluation sheets, and various game components (e.g., marker, dice, etc.). The selection process and significance of these key elements of the game are discussed elsewhere (Bhaumik et al., 2019). The IISC DBox variants differ in the game objectives, game resources, the mentor's training instructions, participants’ instructions, and feedback strategies and evaluation techniques. At the same time, design elements such as design stages and activities remain unchanged across all the game variants. The effectiveness of IISC DBox on the motivation and performance of participants (school students who have little or no prior exposure to design thinking) has been tested by conducting workshops. A number of variants of IISC DBox have then been evolved to overcome the limitations and enhance the effectiveness of the gamification used and the learning supported. The research work reported in this paper discusses the development, testing, and improvement of one such gamified variant of IISC DBox.

Research methodology

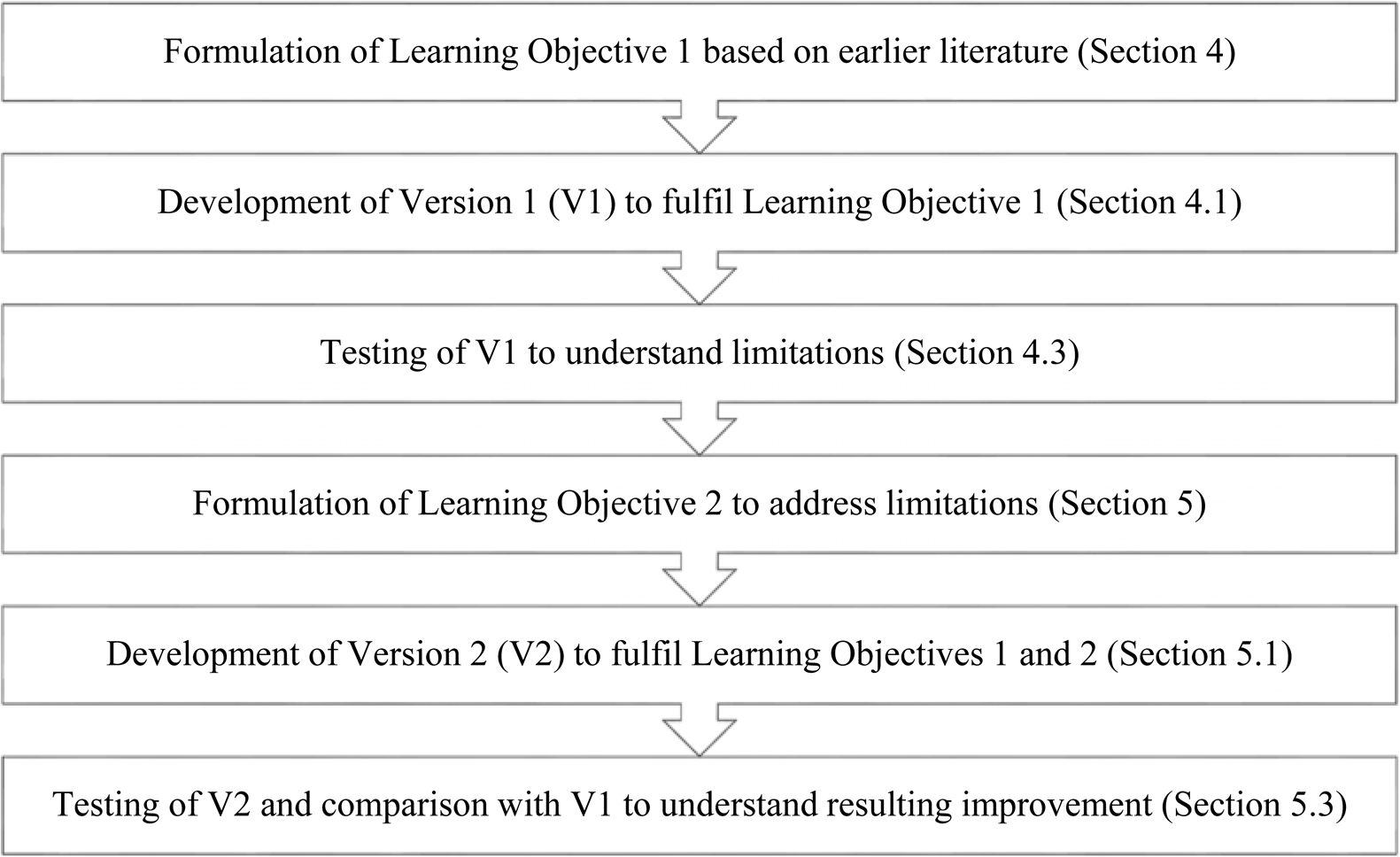

Constructing an effective pedagogy (curriculum, instructions, and assessment) is an evolutionary and iterative process. In this work, the authors integrated design thinking principles with learning theories and game elements in order to develop two gamified versions of a DTP, where one evolved from the other. The first version (henceforth referred to as V1) was developed with the following learning objective (henceforth called L1): to inculcate a creative mindset. V1 was empirically tested by conducting a design workshop with 20 school students from the 6th to the 12th standard. Based on the shortcomings identified, a second learning objective (L2) was introduced: to support understanding of the abstract concepts underlying the design process. With the above two as the learning objectives, the second gamified version (henceforth called V2) has been developed by introducing new game elements in the learning process. V2 has then been empirically tested by conducting two more workshops with 57 school students to assess the resulting improvement (referred to as Research Objectives 1 and 2, each corresponding to the assessment of fulfilment of Learning Objectives 1 and 2, see Section “Development of V2 to fulfil learning Objectives 1 and 2”). In addition, the overall effectiveness of gamification on learning DTP (Research Objective 3) and its effectiveness for inducing fun and engagement (Research Objective 4) have been evaluated. Both versions have been tested through evaluation of design outcomes, efficacy in the documentation process, and feedback from students and mentors. Table 2 shows the Learning Objectives, Research Objectives, and Research Questions for gamified versions V1 and V2. The overall research process, as delineated above, is pictorially represented in Figure 1; the sections in brackets point to where its specific parts are discussed and results presented.

Fig. 1. Research process.

Table 2. Learning Objectives (L), Research Objectives (R.O.), and Research Questions (R.Q.) for gamified version 1 (V1) and version 2 (V2)

Nurturing a mindset for creativity: formulation of L1 based on earlier literature

Various studies stress that creativity and divergent thinking are important outcomes of a DT course. The authors investigated the literature to seek measurable factors that are used to determine standards of DT course outcomes, favorable values of which confirm that predetermined standards are met and that performance has improved. To evaluate the creativity of a design process and its outcomes, various criteria have been proposed in the literature, such as fluency, flexibility, originality, and usefulness (Charyton and Merrill, 2009), or novelty and usefulness (Sarkar and Chakrabarti, 2011). Criteria like fluency and flexibility can be measured using the quantity and variety of ideas (Shah et al., 2003). In previous work, Bhatt et al. (2019) argued that fluency and flexibility could be related not only to solutions but also to other outcomes such as problems, requirements, concepts, or evaluations. For example, if the quantity and variety of identified problems are high, then the formulation and structure of the problem definition are likely to be better. Since consideration of such criteria leads to more innovative and creative outcomes, IISC DTP has creativity matrices containing a combination of the criteria novelty, fluency, flexibility, and need satisfaction (as a proxy for usefulness) for the evaluation of outcomes. Since the ability to produce desired outcomes (e.g., high flexibility, fluency, novelty, and need satisfaction) indicates effectiveness in learning, there is a need to nurture students' ability to produce outcomes that satisfy these criteria. Based on the above, the following is identified as a major Learning Objective in this work:

1. To develop a learner's mindset in producing outcomes in a way that the major criteria for creativity (flexibility, fluency, novelty, and need satisfaction) get satisfied.
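
As a rough illustration of how the quantity-based and variety-based criteria discussed above could be operationalized, the following minimal sketch counts distinct outcomes as fluency and distinct categories as flexibility. It is a simplification under stated assumptions and not the scoring procedure used in IISC DTP: the `Outcome` class, the `category` labels, and the sample ideas are hypothetical, and novelty and need satisfaction are not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One documented outcome (a problem, requirement, idea, ...)."""
    text: str
    category: str  # hypothetical label assigned by an evaluator


def fluency(outcomes):
    """Fluency as quantity: the number of distinct outcomes produced."""
    return len({o.text.strip().lower() for o in outcomes})


def flexibility(outcomes):
    """Flexibility as variety: the number of distinct categories covered."""
    return len({o.category for o in outcomes})


# Illustrative data only; not taken from the workshops.
ideas = [
    Outcome("Foldable water bottle", "storage"),
    Outcome("Bottle with built-in filter", "purification"),
    Outcome("Collapsible cup", "storage"),
    Outcome("foldable water bottle", "storage"),  # duplicate, counted once
]

print(fluency(ideas), flexibility(ideas))
```

The same two functions apply unchanged to problems or requirements, in line with the argument that these criteria are not limited to solutions.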

Development of V1 to fulfil Learning Objective 1

Fulfilling the above Learning Objective, it is argued, should improve the outcomes of DTP. According to the Octalysis framework for gamification by Chou (2019), the above objective falls under “development and accomplishment”, one of the eight core drives, and can be stimulated by game mechanics such as points, badges, fixed action rewards, and win prize. Literature suggests that rewards in a game should be used wisely. Extrinsic motivation in the form of tangible rewards can be detrimental for children (Deci et al., 1999) if the rewards are introduced for activities that can be driven by intrinsic motivation. Also, rewards should be separated from feedback, as combining them can result in a decrease in the intrinsic motivation and self-regulation activities of participants (Kulhavy and Wager, 1993). However, tasks like finding a large quantity and variety of problems from a habitat, or gathering as many needs as possible from different end-users, may not be intrinsically enjoyable (i.e., interesting) for a learner, which can result in them spending less time on these tasks. In such cases, as noted by Werbach and Hunter (2012), one may have to use extrinsic rewards as a fallback to change the learner's behavior. In addition, short-term rewards are more powerful than long-term benefits when it comes to influencing behavior (Kapp, 2012). Considering the above constraints, to accomplish Learning Objective 1 and to improve learning outcomes, the authors adopted gamification (i.e., use of game elements in the learning process) and developed DT game version V1 of IISC DBox, where predefined challenges with objectives and rewards (points) were kept as the mechanism for cultivating in school students the practice of finding/generating outcomes with high fluency, flexibility, novelty, and need satisfaction.
The authors introduced short-term reward elements in the form of “coins” and “diamonds” that would be given to participants after the accomplishment of an activity. The participant's efforts are recognized by giving these rewards, so as to create a sense of achievement for the participant. Table 3 shows the game mechanics and components that are used to fulfil this Learning Objective.

Table 3. Learning Objective 1 and the corresponding game mechanics and components

Description of game, components, and play

IISC DBox V1 is a gamification of IISC DTP. The game has been developed by integrating both game and learning elements such that enjoyment and learning happen concurrently. It is embodied in a board game that can be played in a group of players in a cooperative manner. The game also can be played with other groups in a competitive environment. Implementing gamification through a board game allows players to engage in a team, displays progress, and enables integration of the game elements with design activities. The game elements are selected such that they work together to contribute to the fulfilment of the Learning Objective. Currently, the game is played under the continuous observation of mentors in order to ensure that students understand and follow all the processes correctly.

After de-boxing (i.e., opening the box of) the game, students find four level boards, each with a different visual theme corresponding to one of the four stages of IISC DTP (see Fig. 2a). At each level, students aim to accomplish all the activity steps along the path and obtain as many points as possible by the end of the path. Each group uses an Avatar to indicate its position on the level board at any instant. The group traverses the path, which consists of the various design activity steps as well as a number of flags and check posts. The group must enact the activity step specified for the block it reaches in each move. As a result of the moves, the participants undertake a journey through the set of design activities that are necessary for them to complete the four stages of IISC DTP. Each design activity step card has a title and portions that provide an overall understanding of the step, an example, the instructions to be followed, and the desired outcomes (see Fig. 2b). After performing each activity step, participants document the outcomes in a workbook and show it to the mentor for evaluation and feedback. Based on its performance, a group is given rewards in the form of coins, but only for those activity steps in which the creative performance of participants needs to be improved (details of activity steps and desired outcomes are given in Appendix Table A.2). In addition, when a group reaches a check-post on the path, the mentor evaluates the intended outcomes, and students may have to revisit previously completed design activity steps in which their performance was not adequate. A move can also end on a block that represents a flag, which indicates a call for end-user evaluation and feedback. Thus, several iteration loops can result from a user or mentor evaluation (see Fig. 2c). Once all four stages have been satisfactorily completed, the game ends. 
The group with the maximum number of points at the end of the game is declared the winner. Table 4 enlists the game components and their purpose in the game.

Fig. 2. (a) Game board, (b) design activity card, and (c) process steps of earning rewards.

Table 4. Game components and purpose

A mentor's role is to ensure that the participants have correctly understood and performed all the processes and documented the design activities. At the end of each design activity, the mentor needs to evaluate the process, give content-related feedback, and reward the participant or group based on the assessment criteria. A mentor assessment sheet is provided, which comprises the four performance criteria to be considered: whether the activity was attempted, whether the activity was completed, the level of fluency achieved, and the level of flexibility achieved. Detailed information about the assessment criteria to be used by mentors, along with the proposed reward system, is given in Appendix Table A.1.

End-users are the owners of the problem on which a participant or group is working. A user's role is to evaluate the outcomes after the group performs certain design activities in the game. Users are given an assessment sheet through which they give feedback to the groups. Some of the questions in the user assessment sheet are given below: Do the enlisted requirements reflect the user's problems? Are these requirements prioritized as would have been done by the user? Does the solution/prototype satisfy the user's needs/problems? Do the conflicts identified by the user (have the potential to) get resolved in the final prototype? Detailed information about the assessment criteria to be used by end-users, along with the reward system to be used, is given in Appendix Table A.3.

Testing of V1 to understand limitations

If the learning goals are satisfied but the game is not enjoyable for the learner, it becomes an activity rather than a game. Thus, apart from a game's effectiveness in fulfilling its goals, its impact on the learner's experience (fun, engagement, etc.) also needs to be tested. To assess the effectiveness of IISC DBox V1, two Research Objectives were therefore formed: the first was to assess the effectiveness of gamification as a way of achieving Learning Objective 1 and improving the intended outcomes in this respect; the second was to assess the effectiveness of the gamification used as a tool for inducing fun and engagement.

Based on these two Research Objectives, the following are taken as the research questions for this study.

1. What is the effectiveness of the reward system to achieve desired outcomes so that the creativity criteria get satisfied?

2. What is the effectiveness of the reward system in terms of fun and engagement?

Methodology for empirical study

In order to understand the effectiveness of the gamification used in V1 for carrying out design activities for the intended outcomes, a workshop (W1) was carried out with 20 students of 6th standard to 12th standard (typically 12- to 18-year-olds) of diverse ages and backgrounds. As the completion of the IISC DTP course takes over 30 h, workshops were chosen as the means through which empirical studies could be conducted in a controlled environment, with a few days of full engagement rather than the usual, brief classroom sessions distributed over a regular semester in schools. Prior to the workshop, mentor training sessions were organized by the authors, where mentors were briefed about their role, the design activities, the assessment techniques, and the game rules. The mentors were from engineering and/or design fields and were pursuing master's or doctorate degrees. A mentor's role in the game was the following: (1) To ensure that students understood all the processes correctly, by teaching the rules of the game and, if required, the necessary skills/methods, and by clarifying doubts on the instructions or giving clarity on the tasks. (2) To ensure that students followed the process properly, and to evaluate their performance (Bhatt et al., Reference Bhatt, Bhaumik, Moothedath Chandran and Chakrabarti2019). Thus, a mentor's role was merely that of facilitating the process and keeping the learning student-centric. The workshop was held for 5 days. In the workshop, there were five groups of four students each, with a mentor for each group guiding them through gamified IISC DTP using V1. The students were divided into groups based on their age. Students from each group understood the game, followed the instructions, and performed the design activities under the continuous observation of the assigned mentor. Each group worked on a different habitat. Each group had autonomy to select its habitat, users, and their problems. 
As groups worked on different habitats and problems, there was no public grading and thus less opportunity for social comparison. Completing all DT activities and game objectives by each group took 30 h on average.

Methodology for analysis

For addressing the first research question, the performance of each group was evaluated and examined at the various activity steps of the DT process (either by the mentor or by a user). At the end of the workshop, students from each group gave a 20-minute, oral presentation to a jury of experts on the selected problem and the proposed solution, followed by a discussion. Each of the final design solutions, communicated in the form of a prototype, a poster, and an oral presentation, was evaluated by four design experts or experienced faculty members. The criteria used for the assessment were the following:

• Is the selected problem significant to solve?

• Have the groups identified enough problems?

• Does the problem have any existing, satisfactory solution?

• Is the solution proposed feasible, novel, and likely to solve the problem?

Detailed information about expert assessment criteria is given in Appendix Table A.4. The total score for each group was calculated by aggregating the mentors’, users’, and experts’ evaluations. Weightages were given to the experts’ and the mentors’ and users’ evaluations in a ratio of 4:1. The total score was normalized to a scale of 100. Under the evaluation scheme used, a group completing the game could obtain a minimum score of 48 and a maximum score of 100, depending on its performance during the game. Based on these lower and upper limits, the mean score that could be obtained by a group is 74 (the mean of 48 and 100). This mean score of 74 was therefore taken as one of the baseline criteria for assessing the effectiveness of the reward scheme on the achievement of the desired outcomes. For addressing the second research question, a feedback questionnaire was given to each student and each mentor at the end of the workshop, and the data obtained from these forms were analyzed. Interviews of mentors were also conducted at the end of the workshop. The questions asked during the interviews related to what was liked and disliked about the game and the activities used, and what suggestions for improving the game, if any, the mentors had.
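The scoring arithmetic described above can be illustrated with a short Python sketch. It is only a minimal sketch under stated assumptions: the function name `weighted_total`, the reading of the 4:1 ratio as experts' evaluation versus the combined mentors' and users' evaluation, and the assumption that both inputs are already expressed on a 0–100 scale are illustrative choices, not details confirmed by the evaluation scheme in the appendices. The input values are hypothetical.

```python
# Illustrative sketch only; names, scale, and the reading of the 4:1 ratio are assumptions.

MIN_SCORE = 48.0   # minimum obtainable score stated in the text
MAX_SCORE = 100.0  # maximum obtainable score stated in the text

def baseline_score(min_score: float = MIN_SCORE, max_score: float = MAX_SCORE) -> float:
    """Midpoint of the obtainable range, used in the text as the baseline."""
    return (min_score + max_score) / 2.0

def weighted_total(expert_score: float, mentor_user_score: float,
                   expert_weight: float = 4.0, mentor_user_weight: float = 1.0) -> float:
    """Weighted average of two scores that are assumed to lie on a 0-100 scale."""
    total_weight = expert_weight + mentor_user_weight
    return (expert_weight * expert_score + mentor_user_weight * mentor_user_score) / total_weight

if __name__ == "__main__":
    # Hypothetical scores for one group, not data from the study.
    total = weighted_total(expert_score=80.0, mentor_user_score=60.0)
    print(total, total > baseline_score())
```

With the hypothetical inputs shown, the weighted total is compared against the midpoint of the obtainable range, which is how the baseline criterion is used in the text.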

Results

Details of the processes followed, and outcomes produced by school students while using IISC DTP V1, and reactions of students and mentors after using this version were captured for evaluating its potential for fulfilling Learning Objective 1 and for inducing fun and engagement; the results are discussed here in brief; the detailed results are presented elsewhere (Bhatt et al., Reference Bhatt, Bhaumik, Moothedath Chandran and Chakrabarti2019). By combining scores given to evaluation of the outcomes and the process, the average score obtained by the groups was 76.2. This score is higher than the baseline criterion (i.e., mean score of 74); this means that the reward system as a game element helped these groups to achieve the desired outcomes. These results are used again in the Section “Comparison of the two versions of IISC DBox” for comparison purposes. In addition, feedback data revealed that the inclusion of rewards and surprise factors led to a high level of engagement with the gamified design thinking process (Bhatt et al., Reference Bhatt, Bhaumik, Moothedath Chandran and Chakrabarti2019). Feedback data also revealed that the students found the game interesting and enjoyable (Bhatt et al., Reference Bhatt, Bhaumik, Moothedath Chandran and Chakrabarti2019).

Delineating inefficacy in V1 of IISC DBox

While the overall results indicated substantial promise of IISC DTP as a problem finding and solving tool for school students, the efficiency of the tool needed to be understood in detail. For this, the students’ performance in each stage of the DTP, as well as the final outcomes (e.g., identified problems, enlisted requirements, generated ideas), were evaluated. After the students performed a specific design activity, the outcomes generated were written in a workbook and checked by the mentors; feedback on the outcomes and suggestions for correction, if any, were provided to the students. The workbook consists of a template for each activity step that guides the students in documenting their outcomes. The rationale for using a workbook, in which the students wrote down these specifics, was to gain a deeper insight into the specific steps in which the students might have struggled with the approach and the tool. Evaluation of these intermediate, documented outcomes revealed the difficulties the students faced and the inefficiency this brought into their processes of learning. Evaluation of these outcomes, by the authors, led to identification of common patterns of mistakes, and a classification of the mistakes within each design activity step. Post-assessment of the workbooks revealed that the students committed frequent mistakes during a particular set of activities, some of which are listed below.

• Finding cause/root-cause of identified problems;

• Converting each problem statement into a need;

• Constructing desired “process steps” for an existing process; and

• Checking compatibility among the generated ideas.

Sometimes, the students misunderstood the instructions given for carrying out certain activities and performed them incorrectly. For example, when students were asked to state the desired situation and its environment for a given problem, over 50% of the students stated either a solution to the problem, a reason behind the problem, or an irrelevant desired situation. For instance, one of the problems that the students identified was “people find it difficult to catch buses”; for this, the desired situation they formulated was “There should be bus route boards on buses” (i.e., a solution). Similarly, when the students were asked to state desired “process steps” (e.g., soaking, washing, rinsing, drying for the process of cleaning utensils), many students stated the means (i.e., solution) of achieving the processes. Assessments indicated that the students often did not learn the content (terminologies and concepts) provided in the design activity cards. Feedback collected from mentors’ interviews revealed that a major reason behind such mistakes was a lack of clear, conceptual understanding of the activities. Though such mistakes could be rectified through the mentor's assessment and immediate feedback, this made the understanding of the process more dependent on the knowledge of the mentors. This, however, was against a primary objective of the proposed DTP, which was to reduce reliance on mentors (i.e., minimal mentor intervention). Having students understand the tasks and perform the required activity with minimum intervention from the mentors was a challenge that needed to be overcome. Rather than jumping directly to further activities, it was felt that students should first have a thorough understanding of the concepts, vocabulary, and terminology provided in the design activity cards, as they first needed clarity on how each activity should be carried out.

Nurturing design concepts before performing design activity steps: formulation of Learning Objective 2 to address limitations in V1

Concepts are cognitive symbols (or abstract terms) that specify the features, attributes, or characteristics of a phenomenon in the real or phenomenological world that they are meant to represent and that distinguish the phenomenon from other related phenomena (Podsakoff et al., Reference Podsakoff, MacKenzie and Podsakoff2016). Each discipline has its common concepts, and people who belong to the discipline use these concepts in generalization, classification, and communication. For example, “need”, “requirement”, “demand”, “wishes”, “idea”, and “solution” are common concepts in the field of design. As these terms provide a common vocabulary, one must learn these concepts prior to performing design activities. Prior knowledge of these concepts should help learners follow the instructions provided in the DTP correctly, without difficulty in understanding. Prior knowledge and interest have been shown to have an additive effect on reading comprehension (Baldwin et al., Reference Baldwin, Peleg-Bruckner and McClintock1985). Furthermore, it is important to determine prior knowledge before one encounters new information (Ausubel et al., Reference Ausubel, Novak and Hanesian1968). As noted by Schmidt (Reference Schmidt1993), prior knowledge is the single most important factor influencing learning. Therefore, it is necessary to ascertain, before teaching, what the learner already knows. The authors realized that even though an understanding of the terminologies and concepts had already been embodied in the activity cards and in the instructions for performing activities, verifying whether the students had understood the terminologies and concepts was still necessary. This is missing in the current version, resulting in frequent mistakes being made during documentation. 
The above discussion shows the necessity of learning and evaluating the concepts of design prior to performing design activities, and the need for the proposed DTP and tool to provide a mechanism by which mistakes related to the common concepts can be prevented. From the above discussion, the following is identified as the second, major Learning Objective of the work reported in this paper:


2. To ensure that a learner has understood the abstract concepts underlying design correctly and performed design activities correctly.

Development of V2 to fulfil Learning Objectives 1 and 2

Fulfilling the above Learning Objective, it is argued, should improve the learning process of DTP. According to the Octalysis framework for gamification (Chou, Reference Chou2019), the second objective also falls under one of the eight core drives (i.e., empowerment of creativity and feedback) and can be stimulated by game mechanics such as milestone unlock and evergreen mechanics (where players can continuously stay engaged). In order to accomplish the above Learning Objective and to improve the learning process, the authors modified the gamification approach in V1 by introducing additional game elements in the learning process, and developed gamified V2 of IISC DBox.

Since the common concepts of design need to be learned prior to performing design activities, the terminology and the concepts are introduced as part of the game mechanics; this should support understanding of the instructions before performing the design activities and translating new knowledge into practice. Therefore, in the revised gamified version of IISC DBox (i.e., V2), participants can perform a design activity only after they have understood the concepts behind that activity. For the execution of the above game mechanics, “content unlocking” was used, with “clue cards”, “treasure boxes” with combination padlocks, and “activity cards” placed inside the treasure boxes as the game components. Design concept descriptions were given in the form of clue cards followed by multiple-choice questions (MCQ) about the concept. A combination of numbers representing the right set of answers is necessary to unlock the corresponding combination padlock of the treasure box, inside which the relevant design activity cards are concealed. Table 5 shows the game mechanics and components that are used to fulfil the second Learning Objective.
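The content-unlocking mechanic described above can be pictured with a small Python sketch. It is a hedged illustration, not a description of the physical game: the class `ClueCard`, the functions `combination_for` and `try_unlock`, and the encoding of each correct MCQ answer as one digit of the padlock combination are all assumptions made for the example.

```python
# Illustrative sketch only; the data model and the answer-to-digit encoding are assumptions.
from dataclasses import dataclass
from typing import Sequence, Tuple

@dataclass(frozen=True)
class ClueCard:
    concept: str                      # design concept explained on the card
    correct_options: Tuple[int, ...]  # assumed: number of the correct option for each MCQ

def combination_for(card: ClueCard) -> str:
    """Padlock combination formed from the correct option numbers, in question order."""
    return "".join(str(option) for option in card.correct_options)

def try_unlock(card: ClueCard, chosen_options: Sequence[int]) -> bool:
    """The treasure box opens only if every MCQ is answered correctly."""
    return tuple(chosen_options) == card.correct_options

if __name__ == "__main__":
    card = ClueCard(concept="need", correct_options=(2, 1, 3))  # hypothetical card
    print(combination_for(card))        # combination a group would dial
    print(try_unlock(card, [2, 1, 3]))  # all answers correct
    print(try_unlock(card, [2, 3, 3]))  # one wrong answer keeps the box locked
```

The point of the sketch is that access to the activity cards is gated on answering every concept question correctly, which is the property the mechanic relies on.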

Table 5. Learning Objective 2 and the corresponding game mechanics and components

Description of the modified game, new components, and play

IISC DBox V2 is the revised game version of V1, in which all the game components of V1 (e.g., maps, marker, flag, reward system, etc.) remain as they are. V2 comprises additional game components for the fulfilment of the second Learning Objective, as explained below: The design activity cards are now locked in a treasure box that is found on the existing path of a map. To obtain the design activity cards, students must unlock the treasure box. To unlock the treasure box, students have to understand the design concepts written on the clue cards and answer a set of corresponding questions. The descriptions of the new game components and their purposes are given in Table 6. Figure 3 shows the learning process for IISC DBox V2.

Fig. 3. IISC DBox: learning process.

Table 6. New game components and purpose

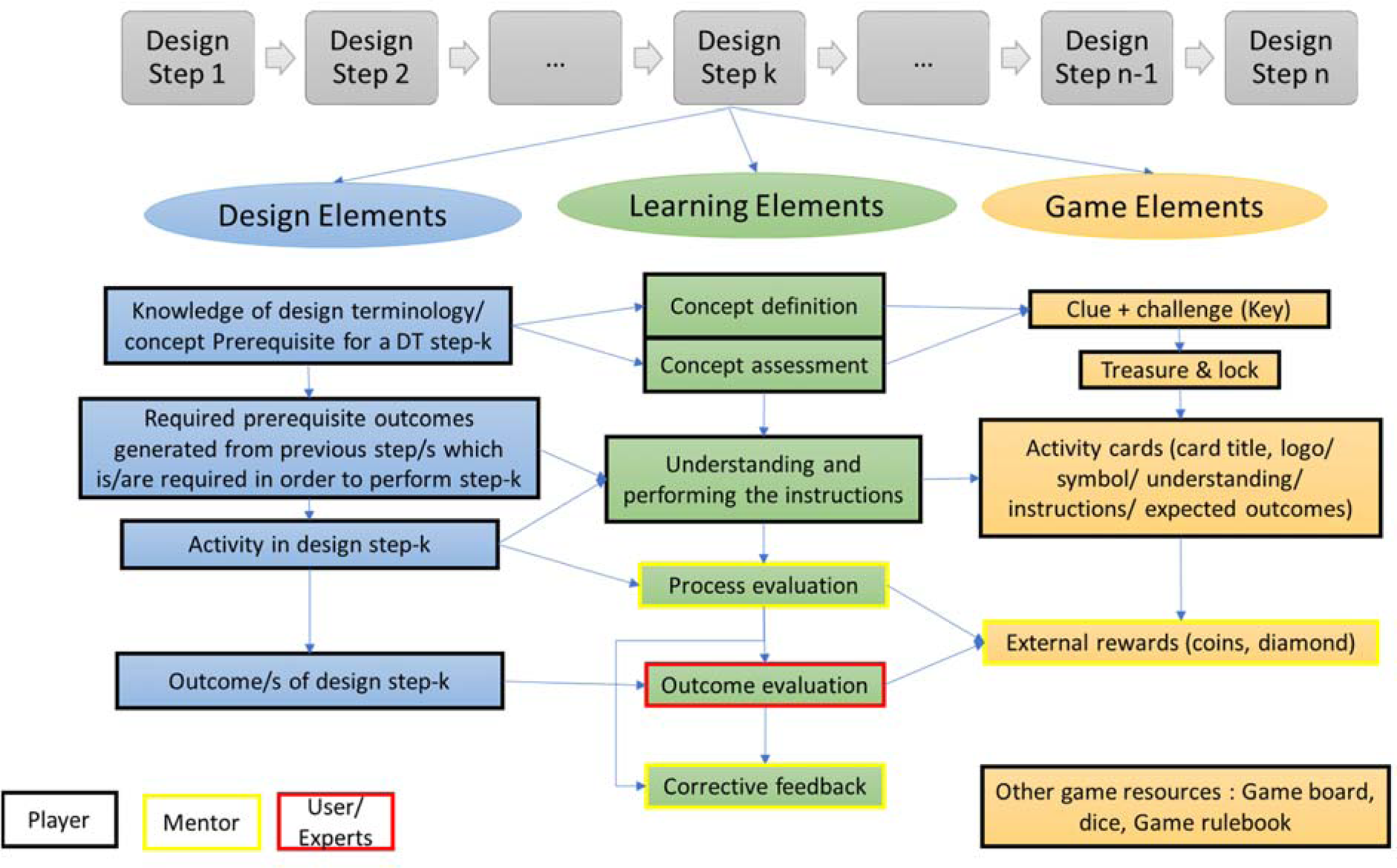

Figure 4 depicts the overall framework for learning DTP using the gamification tool. The interrelationship and compatibility among design, learning and game elements are explained as follows: As knowledge of the design terminology and concepts is a prerequisite for performing a design activity (i.e., a design element in Fig. 4), concept definition and assessment (i.e., learning elements) are presented in the form of clue, challenge (key), treasure, and lock (game elements in Fig. 4). Similarly, a design activity card (a design element) consists of title, illustration, understanding, instructions, and expected outcomes (learning elements). The process to be followed involves the following: first, students should understand and carry out the activity written in the instructions given in a design activity card; then mentors should carry out a process evaluation (a learning element); and then, end users should carry out an outcome evaluation with associated external rewards (a game element).

Fig. 4. Overall framework of learning DTP using a gamification tool.

Testing of V2 and comparison with V1 to assess resulting improvement

For testing of V2, Research Objective 1 is the same as that for V1: (1) to assess the effectiveness of gamification as a way of achieving Learning Objective 1 and improve the intended outcomes. Research Objective 2 was to assess the effectiveness of gamification as a way of achieving Learning Objective 2 and improve the learning process. Research Objective 3 was to assess the overall effectiveness of gamification on learning DTP. Research Objective 4 was to assess the effectiveness of game elements as a tool for inducing fun and engagement while achieving Learning Objectives. For this study, these four Research Objectives are translated into the following Research Questions (1–4) and hypotheses.

1. What is the effectiveness of the reward system to achieve the desired outcomes so that the creativity criteria get satisfied?

Hypothesis 1: The reward system provided helps achieve desired outcomes.

2. What is the effectiveness of the clues and challenges to achieve successful understanding of design concepts prior to performing design activity?

Hypothesis 2: The clues and challenges provided help students increase their understanding of the design concepts.

3. What is the overall effectiveness of gamification on learning DTP?

Hypothesis 3: The game elements help to learn DTP.

4. What is the effectiveness of the game elements (i.e., the reward system and the clues and challenges) in terms of fun and engagement?

Hypothesis 4: The game elements induce fun and engagement.

Methodology for empirical study

In order to assess the effectiveness of the modified gamification approach used in V2, two workshops (W2 and W3) were carried out with a total of 57 students, from 6th standard to 12th standard (12- to 18-year-olds), having diverse ages and backgrounds. In W2, there were nine groups (28 students); in W3, there were eight groups (29 students). In each workshop, each group had three to four students, and a mentor assigned to each group for guiding them through the gamified IISC DTP. The students were divided into groups based on their age (i.e., each group has students with similar age/standard). All students played version V2 of IISC DBox. Each group chose a different habitat for its study and developed solutions in the form of cardboard prototypes (Fig. 5). Other details of W2 and W3 (i.e., workshop duration, mentors’ training, autonomy in selecting habitat, users, and problems) were similar to those in W1 (see Section “Description of game, components, and play”).

Fig. 5. Solutions in the form of prototypes made by student groups (e.g., canteen, bus stop, lab, parking area).

Methodology for analysis

The authors used a triangulation technique to check whether the findings obtained from the outcomes’ analyses were consistent with the subjective experience of the students as well as mentors. The methodology used to answer the research questions is shown in Table 7.

Table 7. Methodology used to answer the research questions

As the reward system (points) was adopted as the mechanism for cultivating the practice of generating outcomes that satisfied the creativity criteria, Research Question 1 (to assess the effectiveness of the reward system) was addressed as follows. The total score received by each group, as an aggregation of the evaluation scores of design outcomes by the mentor, user, and expert, was kept as the criterion for validity. To test the hypothesis, the score received by each group was calculated and compared with the feedback received from mentors. The remaining details of the analysis used here are the same as those used for analyzing the outcomes from W1 (see Section “Methodology for analysis”).

The percentage of correct answers given by the students was used as the criterion to assess the students’ understanding of design concepts, and was used for answering Research Question 2 (to assess the effectiveness of the clues and challenges) and testing the associated hypothesis. For this, after the workshop, the outcomes written in the students’ workbooks were evaluated, and the average number of correctly interpreted statements across the groups was calculated and converted into the average percentage of correct answers across the groups. For the first six activity steps (see Appendix Table A.2), the number of problems identified by each group was counted. Then, the number of correctly interpreted statements (desired situations/needs) based on the identified problems was counted. The average number of correctly interpreted statements by the groups was then determined and converted into a percentage. Similarly, the number of groups that had correctly constructed the desired “process steps” for an existing process and correctly resolved the compatibility issues among the ideas they generated was counted. The average percentage of correctly interpreted statements documented in the workbooks during W1 was compared with that from W2 and W3 combined (since both workshops had the same intervention and similar groups). A significant improvement in the percentage of correctly interpreted statements across the workshops was taken as the criterion to test the associated hypothesis.
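The counting procedure above can be illustrated with a short Python sketch. It assumes, for illustration only, that a percentage is computed per group and then averaged across groups; the text does not state whether percentages were averaged per group or pooled across all statements. The function names and the counts are hypothetical.

```python
# Illustrative sketch only; per-group averaging is an assumption, and the counts are made up.
from typing import Iterable, Tuple

def percent_correct(correct: int, total: int) -> float:
    """Share of correctly interpreted statements for one group, in percent."""
    if total <= 0:
        raise ValueError("a group must have at least one identified problem")
    return 100.0 * correct / total

def average_percent_correct(groups: Iterable[Tuple[int, int]]) -> float:
    """Mean of the per-group percentages; each item is (correct, total)."""
    percentages = [percent_correct(correct, total) for correct, total in groups]
    if not percentages:
        raise ValueError("at least one group is required")
    return sum(percentages) / len(percentages)

if __name__ == "__main__":
    # Hypothetical workbook counts: (correctly interpreted statements, identified problems).
    workshop_groups = [(6, 10), (9, 12)]
    print(average_percent_correct(workshop_groups))
```

The same function could be applied separately to the groups of each workshop to obtain the two averages that are compared.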

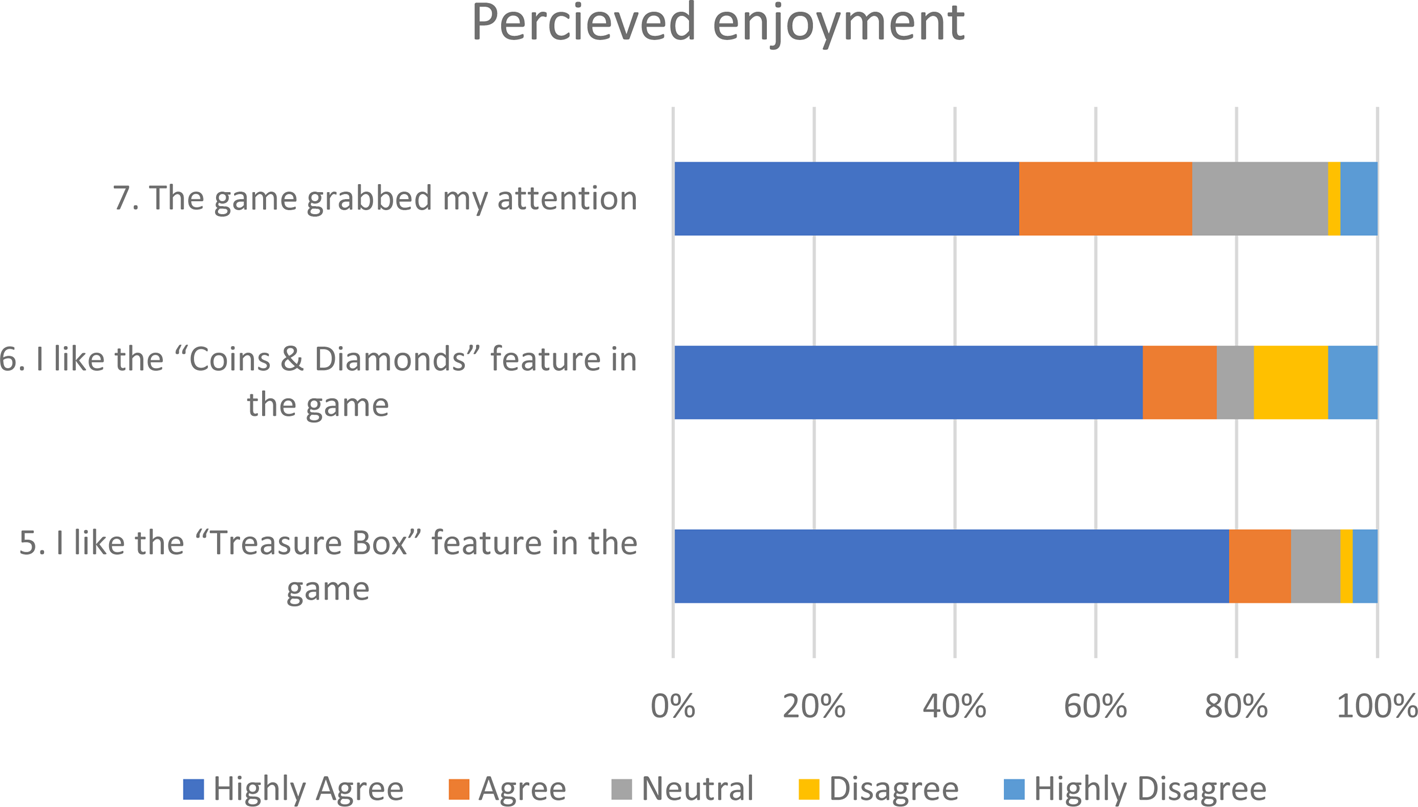

For addressing Research Questions 2, 3, and 4, a student feedback questionnaire (using a five-point Likert scale from highly disagree to highly agree) was shared with each student at the end of the workshop. The questions asked are given in Table 8. Answers to questions 1 and 2 capture the students’ perception about the effect of clues and challenges on their performance, and improvement of their skills (supporting RQ 2). Answers to questions 3 and 4 capture the students’ perception about the relevance of the game in learning and its effect on knowledge (supporting RQ 3). Answers to questions 5, 6, and 7 capture students’ perception about relevant features of the game and the ability of the game to grab attention (supporting RQ 4). Data collected from the questionnaires were aggregated, converted into percentage points, and analyzed using a stacked bar chart.
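The aggregation of questionnaire responses into percentage points can be sketched as follows. This is a minimal illustration: it assumes responses are coded 1 to 5 from “highly disagree” to “highly agree”, and the function name `likert_percentages` and the sample responses are hypothetical. The resulting per-category percentages are the kind of values a stacked bar chart would display for one question.

```python
# Illustrative sketch only; the 1-5 coding and the sample responses are assumptions.
from collections import Counter
from typing import Dict, Sequence

LABELS = ["highly disagree", "disagree", "neutral", "agree", "highly agree"]

def likert_percentages(responses: Sequence[int]) -> Dict[str, float]:
    """Percentage of responses falling in each of the five Likert categories."""
    if not responses:
        raise ValueError("at least one response is required")
    counts = Counter(responses)
    total = len(responses)
    return {
        label: 100.0 * counts.get(code, 0) / total
        for code, label in enumerate(LABELS, start=1)
    }

if __name__ == "__main__":
    # Hypothetical answers of four students to one question.
    print(likert_percentages([5, 4, 4, 3]))
```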

Table 8. Survey questionnaires for students with a five-point Likert scale along with the granularity varying from highly disagree (1), disagree (6), neutral (11), agree (16) to highly agree (21)

In addition, a mentor survey questionnaire (with a five-point Likert scale varying from highly disagree, disagree, neutral, agree to highly agree) was shared with each mentor at the end of the workshops (Appendix Table A.5). To validate hypotheses 1–4, an average value of the mentors’ responses greater than or equal to “Neutral” was taken as the test criterion. A statistical analysis (t-test) was performed on the empirical feedback data.
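The comparison of the mentors' average response with the neutral value can be illustrated with a one-sample t statistic. The sketch below assumes a one-sample test against the neutral value of 3 on a 1–5 coding, which the text does not state explicitly; the function name `one_sample_t` and the responses are hypothetical and are not the study's data. Only the t statistic and degrees of freedom are computed; obtaining a p-value would additionally require the t distribution, for example from a statistics library.

```python
# Illustrative sketch only; the one-sample design and the responses are assumptions.
import math
import statistics
from typing import Sequence, Tuple

def one_sample_t(responses: Sequence[float], popmean: float = 3.0) -> Tuple[float, int]:
    """Return the t statistic and degrees of freedom for H0: mean == popmean."""
    n = len(responses)
    if n < 2:
        raise ValueError("at least two responses are required")
    mean = statistics.mean(responses)
    sd = statistics.stdev(responses)       # sample standard deviation (n - 1)
    standard_error = sd / math.sqrt(n)
    return (mean - popmean) / standard_error, n - 1

if __name__ == "__main__":
    # Hypothetical responses from nine mentors on a 1-5 scale.
    hypothetical = [3, 4, 4, 5, 4, 3, 5, 4, 4]
    t_value, dof = one_sample_t(hypothetical)
    print(round(t_value, 4), dof)
```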

Results

How well these results address the research questions is discussed below.

Q 1. How effective is the reward system to achieve desired outcomes?

The assessment sheets collected from mentors, users, and experts were analyzed. Based on the point calculations, a weighted average score was awarded to each group. The average score obtained by the groups in each workshop exceeded 74 (the mean obtainable score) out of 100 points. Since a score exceeding this value indicates above-average performance, the result indicates an above-average impact. Appendix Tables A.2–A.4 provide details of the tasks and the associated evaluation scheme. The score reflects the students’ effort and engagement in various activities. The authors observed two reasons for the students’ engagement in the various activities: (1) The students were keen to absorb the design thinking mindset, an indication of their internal motivation. (2) The students were keen to earn more points (rewards) because of the competitive environment created by introducing a reward system. In either case, a higher score implies only an above-average degree of engagement; it does not reveal the reason for the engagement, that is, whether the engagement was due to internal motivation, the temptation of earning rewards, or both. This insight came from the mentors’ feedback, which revealed that it was the reward system (points and coins) that encouraged the students to achieve the desired outcomes (e.g., quantity and variety of problems, ideas, etc.). (The average value of the response (3.77) exceeded the neutral value of 3 on a scale of 1–5, where 1 represents “highly disagree” and 5 represents “highly agree”; sample size: 9; test statistic: t-test; p-value < 0.1; ref: Appendix Table A.5). The feedback also revealed that the goal of obtaining a higher number of points (i.e., coins and diamonds) motivated the students to spend additional effort (in finding and documenting a greater number of problems, generating a greater number of ideas, etc.). 
Furthermore, it is noted that the weighted average score awarded to each group is an aggregate of the evaluation score of all the processes followed and the outcomes produced by the group. We, therefore, argue that the score and the mentor's feedback together indicate that the reward system had a positive impact on the overall performance, and on achieving the desired outcomes. To demonstrate the effectiveness of the rewards system in achieving desired outcomes, a case study of a portion of the work done by one of the groups is given below.

Case study: A group of students from 8th standard (W2) selected a security cabin at the entrance of the university campus as their habitat of study. During their observation of the security cabin, the students identified a number of problems (e.g., absence of security guards, irregularity in identity and security check, lack of passive securities, and a broken and dysfunctional sitting arrangement). Similarly, while interacting and empathizing with users (e.g., security guards or university students), the students identified more issues faced by the users (e.g., the security guards get bored, there is no easy access to a lavatory, double shifts and night shifts make the guards tired, there is little time for rest, the work involves frequent standing and sitting positions, there is inadequate illumination and ventilation in security cabins, and students living in hostels on the campus are not happy with the security system because of thefts). The mentors’ feedback revealed that, in order to gain more reward points from mentor evaluation, the students put in more effort and gathered a larger number of problems (demonstrating greater fluency) with variety (demonstrating greater flexibility). Likewise, to obtain more points in end-user evaluation (e.g., from the security guards), the students ensured that the requirements they enlisted included the problems faced by the security guards. They also assigned greater priority to those requirements that were more important to the security guards (for example, a security guard should not get tired during working hours, should not get bored in the cabin, should be able to see ID cards easily, and should have adequate fresh air, lighting/illumination, and access to a lavatory). 
Similarly, to earn more points, the students generated solutions and prototyped these with a clear intent to satisfy the requirements that solved the problems faced by the security guards (e.g., changing the location of the sitting space so as to offer a better view of the surroundings, providing an entertainment system to reduce boredom, providing a convertible chair to support frequent standing and sitting, and a cabin with proper ventilation). The above case illustrates that the reward system encouraged the students to identify and generate desired outcomes and engaged them in the learning process.

Q 2. How effective are the clues and challenges to understand the design concepts?

Post-assessment of workbooks revealed that the frequency of mistakes committed by the students in the workshops (i.e., W2 and W3 for V2) reduced drastically compared with those in the workshop in the earlier study (W1 for V1). About 85% of the interpretations while converting a problem statement into a need statement during the design activities (i.e., observe habitat, persons and objects in use, to talk to persons, to empathize, to do task yourself) were found to be correct for the 17 groups in W2 and W3 combined, as opposed to 43% for the 5 groups in W1. Also, all the groups in W2 and W3 constructed the desired “process steps” for an existing process and correctly resolved the compatibility issues among the ideas generated. Furthermore, feedback obtained using the students’ questionnaire suggested that the students perceived the clues and challenges to have been effective (Feedback on questions 1 and 2, Fig. 6): about 77% highly agreed or agreed that the clues and challenges helped them perform the design activities, and about 90% highly agreed or agreed that their skills improved to overcome the challenges. Also, mentors’ feedback revealed that the clues and challenges provided through the clue card and treasure box in the game helped students increase their understanding of the design concepts (the average value of the response (4.33) exceeded the neutral value of 3 on a scale of 1–5, where 1 represents “highly disagree” and 5 represents “highly agree”; sample size: 9; test statistic: t-test; p-value < 0.01; ref: Appendix Table A.5).
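
The comparison of mentors’ mean response against the neutral value of 3 can be illustrated with a one-sample t-test. The sketch below is only an illustration under stated assumptions: the nine ratings are hypothetical (chosen so that their mean is about 4.33, and not taken from Appendix Table A.5), the function and variable names are ours, and a two-sided test at the 0.01 level is assumed because the text does not state the direction of the test. The critical value 3.355 is the standard two-sided tabulated value for 8 degrees of freedom.

```python
import math
import statistics

NEUTRAL = 3.0            # midpoint of the 1-5 agreement scale
T_CRIT_DF8_01 = 3.355    # two-sided critical t for alpha = 0.01, df = 8 (assumed test)


def one_sample_t(ratings, mu0=NEUTRAL):
    """Return the t statistic for H0: mean rating == mu0.

    Assumes the ratings are not all identical (non-zero standard deviation).
    """
    n = len(ratings)
    standard_error = statistics.stdev(ratings) / math.sqrt(n)
    return (statistics.mean(ratings) - mu0) / standard_error


# Hypothetical ratings from nine mentors; NOT the study's data.
example_ratings = [5, 4, 4, 5, 4, 4, 5, 4, 4]
t_value = one_sample_t(example_ratings)
significant = t_value > T_CRIT_DF8_01

print(f"mean={statistics.mean(example_ratings):.2f}, "
      f"t={t_value:.2f}, exceeds critical value: {significant}")
```

With a sample of nine, the test has 8 degrees of freedom, so a mean above 3 is reported as significant at the 0.01 level only when the t statistic exceeds the tabulated critical value.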

Fig. 6. Perceived use of game elements in learning.

We, therefore, argue that the workbook analysis, the students’ feedback, and the mentors’ feedback together indicate the effectiveness of clues and challenges in supporting understanding of the design concepts.

To demonstrate the effectiveness of the clues and challenges in understanding the design concepts, a case study of a portion of the work done by one of the groups is given below.

Case study (the same study as was used for explanation in RQ 1): After understanding the concept of “need” followed by assessment provided in the clue card, the group correctly converted each problem statement into a need. Similarly, after understanding the concept of “process steps” followed by an assessment given in the clue card, the group listed desired process steps correctly (e.g., directing traffic, monitoring entry of outsiders without getting tired, checking and validating identity with ease, and communicating effectively). The above shows that the mechanism of the treasure box and clue cards helps students understand the concepts and terminology before performing associated activities. In the absence of the treasure box and clue cards, it would be difficult to know whether the students understood the concepts correctly.

Q 3. How effective is the game to learn DTP?

The students perceived the game to have been useful for learning (Feedback on questions 3 and 4, Fig. 6): about 89% highly agreed or agreed that most of the game activities were related to the learning task, and about 88% highly agreed or agreed that it increased their knowledge. This implies that these students were able to relate game objectives with the learning task, and the game elements helped them improve their knowledge. Furthermore, mentors’ feedback revealed that the game elements (i.e., reward system and clue cards) helped students learn DTP (the average value of the response (4) exceeded the neutral value of 3 on a scale of 1–5, where 1 represents “highly disagree” and 5 represents “highly agree”; sample size: 9; test statistic: t-test; p-value < 0.01; ref: Appendix Table A.5). Based on the above, we conclude that the game is effective for learning DTP.

Q 4. What is the effectiveness of game elements (i.e., the reward system and the clues and challenges) in terms of fun and engagement?

The students perceived the gamification positively (Feedback on Question 7, Fig. 7): about 74% highly agreed or agreed that the game grabbed their attention. About 77% highly agreed or agreed that they liked the coins and diamonds features (Feedback on Question 6, Fig. 7), and about 88% highly agreed or agreed that they liked the treasure box feature (Feedback on Question 5, Fig. 7). Also, mentors’ feedback revealed that the game elements induced fun and engagement in the students (the average value of the response (3.88) exceeded the neutral value of 3 on a scale of 1–5, where 1 represents “highly disagree” and 5 represents “highly agree”; sample size: 9; test statistic: t-test; p-value < 0.01; ref: Appendix Table A.5). This indicates that the game elements were not only effective in fulfilling learning objectives but also induced fun and engagement in the students.

Fig. 7. Perceived use of game elements in enjoyment and engagement.

Comparison of the two versions of IISC DBox

To assess the effectiveness of the game, V2 is compared with V1, which serves as the benchmark. Table 9 lists the key criteria for comparison.

Table 9. Comparison of two versions of IISC DBox

Discussion

DTP in school education can be implemented in various ways: introducing DTP as a subject in a regular classroom, introducing a Massive Open Online Course (MOOC) on DTP, or conducting workshops on DTP. In all such settings, it is possible to apply gamification to support the achievement of learning objectives. Evaluation of the workbooks, outcomes, and feedback from students and mentors indicates that the game elements in IISC DBox significantly helped students perform the design activities and achieve desired outcomes while satisfying the creativity criteria. In addition, the game elements and the rules ensured learning in students, guided students in performing design activities, supported evaluation of the performance of the students, and created enjoyment in the students. We argue that gamification helped students to perform, learn, and enjoy the activities and thus can be an effective learning tool for school students.

As discussed in the section “IISC Design Thinking and its gamified variants”, gamification is generally classified into structural gamification and content gamification (Kapp, Reference Kapp2012). IISC DBox is an example of a gamified system that includes both structural and content gamification. Elements of structural gamification (i.e., reward system and leveling up) were used to fulfil L1. In contrast, elements of content gamification (i.e., challenges given in the clue cards and content unlocking) were used to fulfil L2.

Table 10 compares IISC DBox with other contemporary innovation and design games that have been developed and tested for school students. The comparison covers the following aspects: game approach, objective, framework used for game development, and evaluation approach. The table shows that, to fulfil their objectives, different researchers have used different game approaches: simulation game, serious game, and gamification. Differences also exist in the framework used to develop the games, the design or innovation stages for which the games were developed, the age group and number of participants, and the methods used to evaluate the effectiveness of the games. This table is intended to help researchers, educators, and game developers consider these aspects in their development and evaluation of innovation games.

Table 10. Comparison of innovation and design games developed for school students

This paper describes an approach to evaluating the performance of students in this open-ended course. In addition, the methodology used in the process (see Fig. 8) can be used as a general approach for developing and evaluating a gamified course in the field of innovation and design. The process proposed in the approach consists of the following steps: Identification of intended DT course outcomes to be delivered or identification of shortcomings in the learning process, formulation of learning objectives, selection of game elements based on the nature of learning objectives, integration of game elements into the existing learning process, and assessment of effectiveness of game elements for the fulfilment of formulated learning objectives. The proposed approach includes an additional step, assessment of effectiveness of game elements, which complements the existing game development frameworks such as SGDA and ISGD.

Fig. 8. Proposed approach for the development and evaluation of gamification.

However, the research approach used in the work has scope for further improvement. The rewards were present in both empirical studies. The effect of rewards was tested through the outcome evaluation supplemented with questionnaires to capture students’ and mentors’ opinions on the impact of rewards in the learning process. However, there is a need to assess the effect of rewards by comparing the performance with and without using rewards. This requires a more controlled experimental setup where the effect of external factors such as the variability of mentors and end-users can be minimized. In addition, to measure the amount of learning each student has acquired in DTP, there is a need to conduct pre- and post-tests along with the student's feedback.

Another issue is that the continuous evaluation of the performance of the groups relies on points assigned by a mentor and the users. Even though there are defined criteria for these assessments, the decisions of these evaluators still have subjective elements. This means that the role of mentors and users and their training are crucial for maintaining the quality of guidance and assessment. As the workshop continued for 5 days at a stretch and comprised rigorous activities, mentors also observed that students sometimes tended to rush through the activities and that interest in game elements reduced as the workshop advanced.

Conclusion and future work

The research reported in this paper contributes to the development of a gamified design pedagogy framework for school education. The specific aim of the work reported is to improve the learning efficacy of school students in design education. The paper focuses on fulfilling specific Learning Objectives: (1) To develop a learner's mindset in producing outcomes in a way that the major parameters for creativity (flexibility, fluency, novelty, and need satisfaction) get satisfied. (2) To ensure that the learner correctly understood the concepts and performed the activities accordingly. To fulfil the first Learning Objective, the authors developed a gamified version V1 of a DTP, where predefined challenges with objectives and reward system were introduced to cultivate the above design practice in school students. To fulfil the second Learning Objective, the authors developed a gamified version V2, where “content unlocking” was introduced as a mechanism for understanding instructions in the DTP before performing design activities and translating new knowledge into practice. The above objectives were evaluated by conducting workshops involving school students by asking the following research questions: (1) What is the effectiveness of reward system to achieve the desired outcomes so that the creativity criteria get satisfied? (2) What is the effectiveness of the clues and challenges to achieve successful understanding of design concepts prior to performing design activity? (3) What is the overall effectiveness of gamification on learning DTP? (4) What is the effectiveness of gamification of DTP in terms of fun and engagement? Results showed that the frequency of mistakes was reduced significantly in version V2 of the DTP compared with those made while using version V1. Results also suggest that these gamification efforts positively affected the learners’ reactions and outcomes. 
The results from feedback analyses further suggest that the game was able to grab the students’ attention, students found the game elements exciting, and that the game elements and activities were relevant and effective to learning. These were aligned with the evaluation of the final outcomes by experts. Overall, IISC DBox was found to induce fun, engage students while learning, and motivate them to learn important elements of DT, thus promising to be an effective tool for learning the problem finding and problem-solving processes in design.

The approach used in this work can be used for identifying appropriate learning objectives, selecting appropriate game elements to fulfil these objectives, integrating appropriate game elements with design and learning elements, and evaluating its effectiveness. This work, therefore, not only indicates the fulfilment of the Learning Objectives but also points to an approach for developing and evaluating a gamified version of a design thinking course that might be possible to adapt not only for school education but also for other domains (e.g., engineering, management).

Though the proposed gamification could be effective for learning DTP, there is scope for further improvement of the pedagogy, as indicated by the following questions that still need to be answered: Are enjoyment and learning the same for different age groups of participants? If not, then what are the factors that affect learning and enjoyment? Furthermore, which design activities are the most appropriate ones to teach the students of a particular age group or grade, what should be the depth and understanding of the design activity instructions for these different age groups, and which design methods should be developed and taught in school education along with the basic design activities? All of these require further investigation, and therefore are part of future work.

Acknowledgments