Introduction

The landscape of clinical trials has undergone significant changes in recent years [Reference Harmon, Noseworthy and Yao1]. Traditionally, pharmaceutical industry-sponsored trials were the primary drivers, setting the pace, resources, and technologies used in interventional trials [Reference Petrini, Mannelli, Riva, Gainotti and Gussoni2]. More recently, the clinical research enterprise has seen an increase in investigator-initiated trials, government-funded projects [Reference Nelson, Tse, Puplampu-Dove, Golfinopoulos and Zarin3], and interest from private nonprofit organizations [Reference Patterson, O’Boyle, VanNoy and Dies4]. These shifts present a unique challenge: maintaining regulatory compliance and adhering to best practices with limited informatics resources [Reference Charoo, Khan and Rahman5].

The adoption of electronic data capture (EDC) systems such as REDCap (Research Electronic Data Capture) is pivotal in this context. REDCap is a web-based software platform designed to support data capture for research studies, enhancing data integrity, participant engagement, and regulatory compliance [Reference Harris, Taylor, Thielke, Payne, Gonzalez and Conde6]. REDCap is available at no cost to academic, nonprofit, and government organizations and is currently in use at over 7200 institutions in 156 countries. Each REDCap partner is responsible for installing and maintaining the software platform and supporting local researchers [Reference Harris, Taylor and Minor7]. This decentralized approach allows institutions to tailor REDCap to their specific needs, but it also means that each site must validate its own environment. The global pandemic further accelerated the adoption of these platforms for remote data collection and consent, intensifying scrutiny of their regulatory compliance [Reference Petrini, Mannelli, Riva, Gainotti and Gussoni2]. Amid evolving FDA guidance and heightened regulatory scrutiny, institutions must adapt to rapid technological advancements [Reference Oakley, Worley and Yu8] while strictly adhering to compliance standards [Reference Mueller, Herrmann and Cichos9]. This requires robust data security, comprehensive audit trails, and strict data integrity protocols in accordance with regulations such as 21 CFR Part 11 [10,11]. While not every clinical trial requires 21 CFR Part 11 compliance, a growing number of studies led by REDCap Consortium members have needed to submit data to the FDA, for example in support of investigational new drug (IND) applications or marketing applications.

Efforts to validate REDCap software against FDA regulations began in 2009, and the decentralized nature of these efforts soon became apparent. Each institution seeking software validation independently devised and conducted its own compliance tests, producing a fragmented and often redundant validation landscape. This approach burdened individual institutions with repeated effort and resulted in inconsistent compliance standards across institutions. To address these challenges, the REDCap Consortium established the Regulatory and Software Validation Committee (RSVC) shortly after validation efforts began. The RSVC was tasked with harmonizing these efforts by developing a centralized set of best practices and standardized validation tests, reducing duplicated effort and ensuring that all institutions adhered to a consistent standard of compliance.

Despite these efforts, the validation documents and packages often lagged behind REDCap software releases. By the time a validation package was made available, newer versions of REDCap had been released, so the package was already outdated. This delay hindered institutions’ ability to remain compliant with the latest regulations and to leverage the full capabilities of newer software versions.

Recognizing the critical need for validation that keeps pace with software updates, the Vanderbilt University Medical Center (VUMC) REDCap team and key leaders of the RSVC devised and launched the Rapid Validation Process (RVP) in 2022. The project aims to develop a framework for producing guidance and a core set of software validation documentation with every semi-annual release of REDCap, which institutions using REDCap can adopt to bolster compliance. This effort leverages the community-driven nature of the REDCap Consortium to meet and exceed the latest regulatory expectations. The RVP is designed to support compliance with FDA 21 CFR Part 11 and emphasizes procedural rigor and testing efficiency.

Methods

We designed the RVP to leverage the REDCap Consortium’s collective expertise and to adapt dynamically to evolving regulatory requirements and software updates. REDCap has two release cycles: a monthly Standard feature release and a semi-annual Long-Term Support (LTS) release. The REDCap team supports the latest Standard and LTS versions with security patches and bug remediation until a newer version on the same track is available. REDCap partners typically choose one of the two release tracks to support their local research enterprise, although some run one server on the Standard release and another on LTS for trials requiring validated software documentation. Because system updates and testing often take several weeks or months, we chose to perform software validation for each LTS version. Figure 1 summarizes the validation process for each release. The REDCap team at VUMC releases each version with a change log and a User Requirement Specification (URS) document. These resources are essential for Consortium members to examine REDCap changes systematically, and VUMC supplements them with significant technical documentation, guidance, and other resources needed to maintain and validate the software across various environments. The VUMC team’s continuous contributions help all institutions stay compliant and use the full capabilities of REDCap. Using these materials, members of the REDCap Consortium first perform a systematic examination of REDCap changes, including a detailed analysis of change logs to identify modifications to core functionality and newly introduced features. We also review 21 CFR Part 11 regulations to determine whether any tests need to be rewritten or added.

Figure 1. REDCap Rapid Validation Process - this diagram outlines the collaborative validation process for REDCap’s Long-Term Support (LTS) releases. The REDCap team at Vanderbilt University Medical Center (VUMC) handles the software release and User Requirement Specification (URS). The Regulatory and Software Validation Committee (RSVC) performs the change log assessment, functional requirement specification (FRS), feature tests, and prepares the validation summary report, validation package guide, and certificate of validation, leading to the final validation package release. This collaboration highlights the unique partnership between VUMC and the REDCap Consortium, contrasting with traditional vendor-provided software validation.

We organized functional requirements into tiers based on whether the function is core, the system user role involved, and whether the function can be tested outside the production environment. These distinctions are crucial for prioritizing validation efforts and ensuring that essential features, particularly those affecting regulatory compliance and system integrity, undergo rigorous testing first and that their validation is available to the Consortium promptly after each software release. We employed a validation rubric that categorized functionalities by their criticality and relevance to overall system operations. The REDCap Consortium assesses new REDCap features against this rubric at the time of release.

• Tier A focuses on essential REDCap Control Center configuration settings and project setup features, which require immediate validation because of their critical nature. Many of the Tier B, C, and D tests depend on Tier A features working.

• Tier B addresses core EDC functionalities that are crucial for data entry and management.

• Tier C tests commonly used features that are not necessary for all REDCap projects.

• Tier D covers less commonly used features that are outside the Consortium’s core validation scope. Individual REDCap institutions may choose to write tests for these features. Tier D features may become Tier B or C features in the future as their use across the REDCap Consortium increases.
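One way to make the rubric concrete is to express it as an ordered set of rules. This Python sketch is a simplification built on assumptions: the attribute names (`control_center_or_setup`, `core_edc`, `commonly_used`, `site_dependent`) and the rule order are ours, not the Consortium's, and the example features and their tiers are illustrative only. Site dependence is checked first because features that rely on local infrastructure are assigned to Tier D for local testing.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    control_center_or_setup: bool  # configured in the Control Center or during project setup
    core_edc: bool                 # needed for core data entry and management
    commonly_used: bool            # widely used across Consortium projects
    site_dependent: bool           # relies on local infrastructure (e.g., email, authentication)


def assign_tier(f: Feature) -> str:
    """Map feature attributes to a tier, checking site dependence before criticality."""
    if f.site_dependent:
        # Cannot be validated centrally; left to local testing.
        return "D"
    if f.control_center_or_setup:
        return "A"
    if f.core_edc:
        return "B"
    if f.commonly_used:
        return "C"
    return "D"  # less commonly used features fall outside the core validation scope


# Illustrative assignments only; these are not the RVP's published tiers.
features = [
    Feature("System-level user settings", True, False, True, False),
    Feature("Saving data on a data entry form", False, True, True, False),
    Feature("Email alert delivery", False, False, True, True),
    Feature("Project dashboards", False, False, True, False),
    Feature("Rarely used visualization option", False, False, False, False),
]
for f in features:
    print(f"{assign_tier(f)}  {f.name}")
```

Encoding the rubric as explicit rules makes each tier decision reproducible when new features arrive, although real assignments involve judgment that a few booleans cannot capture.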

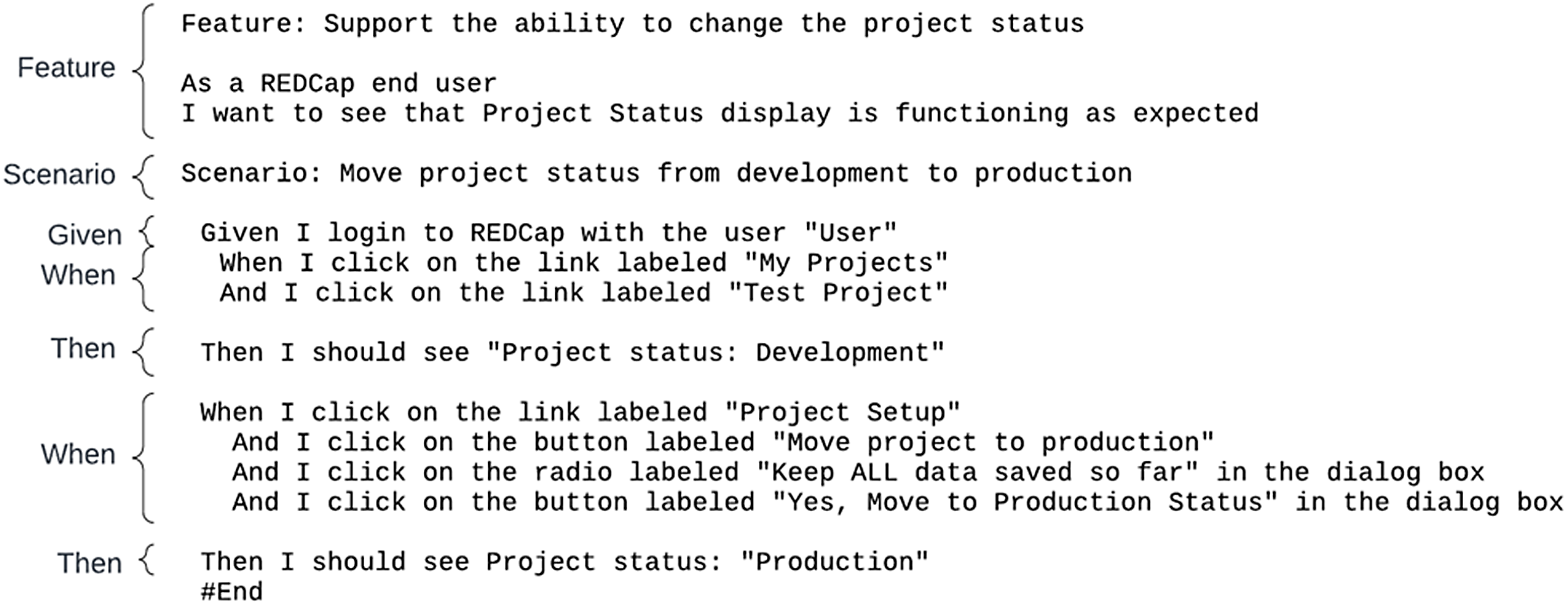

The RVP team uses Gherkin syntax to write feature tests [12]. Gherkin is a structured, plain-language syntax for test scripts that non-developers can read and that are also machine-readable, so the same scripts can support automated testing. VUMC provides a dedicated REDCap instance on which RSVC members perform these tests. Members record the time required to complete each test and report any failures or inconsistencies to the RVP team. This iterative feedback loop is critical for refining the tests and ensuring each script meets the intended validation criteria. After adjustments, the revised scripts are retested to confirm their effectiveness and accuracy. Example scripts are provided in Figure 2.

Figure 2. Example of Gherkin Syntax - this figure demonstrates the use of Gherkin syntax, employing keywords such as Feature, Scenario, Given, When, and Then. These keywords provide structure and meaning to executable specifications, allowing clear communication of testing requirements in plain language. The example illustrates how these steps are used to describe initial context, events, and expected outcomes in the REDCap Rapid Validation Process.
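To illustrate the structure that Figure 2 describes, the sketch below shows a Gherkin script in text form and how a program can read it. The script content is hypothetical, and `parse_feature` is a minimal parser for a small subset of Gherkin (Feature, Scenario, and step lines only), written for illustration; full parsers, such as the one maintained by the Cucumber project, handle the complete grammar.

```python
from dataclasses import dataclass, field

STEP_KEYWORDS = {"Given", "When", "Then", "And", "But"}


@dataclass
class Scenario:
    name: str
    steps: list = field(default_factory=list)  # (keyword, step text) pairs


def parse_feature(text):
    """Parse a small subset of Gherkin: Feature, Scenario, and step lines."""
    feature_name, scenarios, last_keyword = None, [], None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # skip blank lines and comments
            continue
        if line.startswith("Feature:"):
            feature_name = line[len("Feature:"):].strip()
        elif line.startswith("Scenario:"):
            scenarios.append(Scenario(line[len("Scenario:"):].strip()))
            last_keyword = None
        else:
            keyword, _, rest = line.partition(" ")
            if keyword not in STEP_KEYWORDS or not scenarios:
                raise ValueError(f"Unsupported line: {line!r}")
            if keyword in ("And", "But"):
                # And/But continue the type of the preceding step.
                if last_keyword is None:
                    raise ValueError(f"{keyword} cannot start a scenario")
                keyword = last_keyword
            last_keyword = keyword
            scenarios[-1].steps.append((keyword, rest.strip()))
    return feature_name, scenarios


# Hypothetical script written for illustration, not copied from the RVP package.
FEATURE_TEXT = """
Feature: Project creation
  Scenario: Create a new project
    Given I am logged in as a user with project creation rights
    When I create a project titled "RVP Demo"
    And I save the project
    Then I should see the Project Setup page
"""

name, scenarios = parse_feature(FEATURE_TEXT)
print(name)  # Project creation
for keyword, step_text in scenarios[0].steps:
    print(keyword, "|", step_text)
```

Each step line reads as a plain-English instruction for a human tester, while the fixed keywords give a program enough structure to separate context (Given), actions (When), and expected outcomes (Then).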

The RVP team compiles all test documentation, executed scripts, and a comprehensive validation report in the final stages of preparing the validation package. This package also includes a validation summary report that outlines the test outcomes, any issues encountered, and the corrective actions taken. This document serves as a formal record of the validation process and is essential for institutional compliance reviews. The completed package is then made available to all REDCap Consortium members through the REDCap Administrator Community of Practice website [Reference Harris, Taylor and Minor7,13], ensuring that all participating institutions have immediate access to the latest validated tools and resources.
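The validation summary report tallies test outcomes, issues encountered, and corrective actions. A minimal sketch of that aggregation follows, assuming each executed script is recorded with its tier, pass/fail status, the tester's recorded time, and a free-text note; the record layout (`ScriptResult`) and the data are hypothetical.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class ScriptResult:
    test_id: str
    tier: str
    passed: bool
    minutes: float    # time the tester recorded for executing the script
    note: str = ""    # issue encountered and corrective action, if any


def summarize(results):
    """Aggregate executed scripts into counts per tier, a failure list, and total hours."""
    by_tier = defaultdict(Counter)
    for r in results:
        by_tier[r.tier]["pass" if r.passed else "fail"] += 1
    return {
        "by_tier": {tier: dict(counts) for tier, counts in sorted(by_tier.items())},
        "failures": [(r.test_id, r.note) for r in results if not r.passed],
        "total_hours": round(sum(r.minutes for r in results) / 60, 2),
    }


# Hypothetical results; not data from any RVP release.
results = [
    ScriptResult("TEST-A-01", "A", True, 20),
    ScriptResult("TEST-A-02", "A", True, 15),
    ScriptResult("TEST-B-01", "B", False, 25, "Step wording ambiguous; script revised and retested"),
]
summary = summarize(results)
print(summary["by_tier"])      # {'A': {'pass': 2}, 'B': {'fail': 1}}
print(summary["total_hours"])  # 1.0
```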

When updating to a new version of REDCap, institutions have the option to either use the validation evidence provided by the RVP or run the provided test scripts locally. Regardless of the approach taken, institutions are responsible for conducting site-specific tests. These tests include developing their own functional requirements, executing local validations, and preparing a summary report. For instance, features such as email notifications or user authentication systems, which depend on local infrastructure, must be tested and validated at the institutional level.

The RVP team categorizes certain site-dependent features as Tier D to indicate that local testing is required. However, this category is not comprehensive, and it remains the responsibility of each institution to perform a gap analysis to identify any additional site-specific features that need validation. Institutions must document and address any local validation requirements to ensure full compliance with FDA 21 CFR Part 11.
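The gap analysis can be thought of as set arithmetic over feature inventories. This is a simplified sketch, assuming an institution can list the features it uses, the features covered by the central package, and the features flagged as site-dependent; the function name and all inventories are hypothetical. A site-dependent feature is returned even when the package covers it, mirroring the requirement that such features be validated locally.

```python
def gap_analysis(features_in_use, centrally_validated, site_dependent):
    """Return features an institution should validate locally (simplified sketch).

    A feature needs local validation if the institution uses it and either
    the central package does not cover it or it depends on local infrastructure.
    """
    in_use = set(features_in_use)
    not_covered = in_use - set(centrally_validated)
    local_only = in_use & set(site_dependent)
    return sorted(not_covered | local_only, key=str.casefold)


# Hypothetical inventories for one institution.
in_use = {"data entry", "email notifications", "LDAP authentication", "custom external module"}
validated = {"data entry", "data export", "audit trail", "email notifications"}
site_dependent = {"email notifications", "LDAP authentication"}

print(gap_analysis(in_use, validated, site_dependent))
# ['custom external module', 'email notifications', 'LDAP authentication']
```

The set operations only surface candidates; deciding how each gap is tested and documented remains the institution's responsibility.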

Results

Table 1 displays the tests by feature, starting with the most critical Control Center settings in Tier A and moving through Tier B’s core functionalities and Tier C’s widely used non-core features. Each feature underwent intensive testing to verify its functionality and reliability. The number of tests in each tier reflects our commitment to thoroughly vetting every essential aspect of the system.

Table 1. Overview of validation by tier for REDCap version 14.0

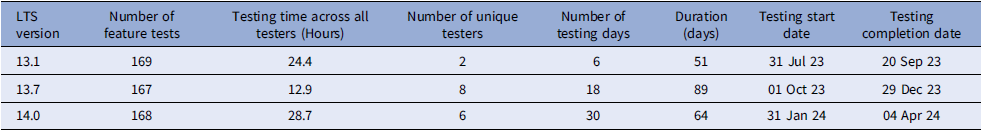

Since the initiation of the RVP, we have validated three LTS versions of REDCap. Table 2 summarizes the efficiency of, and the collaborative effort behind, our validation process across these LTS versions. We documented the number of tests conducted, the time spent conducting them, the personnel involved in testing, and the total calendar days from version release to test completion.

Table 2. Efficiency gains and collaborative effort

Calendar days for testing increased substantially from version 13.1 to 13.7 because new RSVC members were onboarded as testers, which enhanced the resilience of the validation effort and bolstered our collective expertise. However, the total time spent performing tests and the testing time per tester decreased. Major REDCap releases typically involve more feature changes and occur annually, with each such version number ending in “.1”; therefore, total testing time for versions 13.1 and 14.1 is higher than for 13.7. Nevertheless, the number of testing days decreased notably from 89 to 64, indicating a more streamlined approach that has enabled faster validation cycles and earlier readiness for deployment. Additionally, the time spent conducting tests was lower for version 14.1 (4.8 hours) than for version 13.1 (12.2 hours), indicating that the workload is more evenly distributed among Consortium members. As shown in Figure 3, the RVP decreased the overall time to validation package release compared with previous releases.
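The changes reported above can also be expressed as relative reductions. The short calculation below uses only the figures stated in the preceding paragraph (89 and 64 testing days; 12.2 and 4.8 hours spent conducting tests); `percent_reduction` is a helper written for illustration.

```python
def percent_reduction(before, after):
    """Relative decrease from `before` to `after`, expressed as a percentage."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100 * (before - after) / before


# Figures as reported in the text.
testing_days = percent_reduction(89, 64)   # number of testing days
test_hours = percent_reduction(12.2, 4.8)  # time spent conducting tests, 13.1 vs 14.1

print(f"Testing days: {testing_days:.1f}% fewer")  # Testing days: 28.1% fewer
print(f"Test hours: {test_hours:.1f}% fewer")      # Test hours: 60.7% fewer
```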

Figure 3. Days between REDCap release and validation package release to REDCap Consortium. Long-Term Support (LTS) versions without a validation package do not have a bar in this graph.

To date, 109 current or retired members from 94 unique institutions have participated in the development and maintenance of the RSVC validation materials. The validation packages for the three versions validated within the RVP were downloaded 276 times (12.4), 227 times (13.1), and 311 times (14.0).

Discussion

The REDCap Consortium’s RVP has advanced the standard for software validation in clinical research, emphasizing the critical role of community-driven endeavors in bolstering regulatory compliance. The strategic integration of function-based validation and preparations for future automation enhances REDCap’s ability to adapt swiftly to evolving technological and regulatory landscapes. Our methodology ensures that validation efforts are current, responsive, and aligned with the latest developments in REDCap’s software architecture. This continuous review process is vital for maintaining the integrity and compliance of the REDCap system, especially given the frequent updates and enhancements that characterize modern EDC systems. Ultimately, with each REDCap release the RVP produces documentation that greatly reduces the work institutions must do to test their software and enables administrators to provide that documentation to study teams in a timely manner.

The efficiency results show a validation process that has become more efficient and refined with each successive LTS version, and they tell a dual story. On the one hand, they underline the dedication behind a community-driven validation process in which each Consortium member plays a crucial role. On the other hand, they highlight the agility and adaptability of the RVP itself, which has evolved to meet rigorous and ever-changing regulatory standards. Under the Consortium’s stewardship, and with the REDCap Consortium support team at VUMC, REDCap has been rigorously tested and is well prepared to meet the varied and demanding challenges of clinical research. VUMC’s ongoing support includes the development and release of software updates and the provision of comprehensive validation documentation and user guides. This partnership highlights VUMC’s crucial role in maintaining the integrity and compliance of REDCap and underscores that, although REDCap is offered at no cost to eligible institutions, maintaining its compliance and operational efficiency requires considerable resources and effort from VUMC. These findings indicate that the RVP has achieved its objectives and laid the groundwork for more intuitive, structured, and efficient validation processes. The system’s integrity and compliance inspire confidence among users and regulatory authorities, and these accomplishments hold the promise of an even more resilient and adaptive system in the future.

Another innovation of the RVP is its tiered approach, which tests essential features first. This approach streamlines the validation process and allocates resources efficiently by focusing first on the functionalities that require immediate attention. It allows partners who rely heavily on specific tiers to implement changes swiftly and effectively, improving the system’s overall agility in adapting to new requirements.

Before the RVP, validation for each REDCap release was significantly slower, and documentation for a given LTS version was often completed only after newer versions had already been released. This delay hindered institutions from remaining compliant with the latest regulations and from fully leveraging the capabilities of newer software versions. The RVP model has proven much faster in calendar time than the pre-RVP process. Furthermore, the centralized efforts of the RSVC and RVP have significantly reduced the duplicative validation work that would otherwise be required at each individual institution. By providing a standardized validation package, the RSVC and RVP spare each institution from independently validating the core software features, conserving valuable resources and ensuring a consistent standard of compliance across all Consortium members.

The methodology supports a robust validation framework by integrating three crucial elements: systematic change reviews, structured testing based on feature criticality, and a structured scripting syntax (Gherkin) that lays the groundwork for automated tests. This framework can accommodate the diverse needs of the REDCap user community, from academic institutions to large-scale clinical research organizations, while ensuring compliance with stringent regulatory standards. This comprehensive approach underlines the Consortium’s commitment to maintaining REDCap as a reliable, efficient, and compliant tool for EDC in clinical research and sets a standard for validation practices in EDC systems. The methodologies we have implemented are intended not only to keep pace with technological advancements but also to anticipate future regulatory and operational challenges.

Although testing is not yet automated, our adoption of Gherkin syntax lays the foundation for future automated testing. Looking ahead, the Consortium is committed to implementing automated testing to streamline manual testing efforts, reduce human error, and accelerate the overall validation cycle. Once fully implemented, these syntax-driven test scripts will improve efficiency and accuracy and ensure consistency across Consortium implementations. Automated testing will enable us to run the full suite of feature test scripts with every monthly Standard REDCap release. Because features typically appear in the Standard release before they reach LTS, automated testing will allow us to adapt scripts and create tests for new features every month, which should further decrease testing time for each LTS release. Moreover, we plan to broaden the validation scope to address the diverse needs of the REDCap community, ensuring that our EDC solutions remain customizable and robust across a spectrum of research settings. This expansion will support a wider array of research needs and further enhance REDCap’s flexibility and applicability. Finally, while the RVP successfully implemented a process for validating REDCap software features, other aspects of compliance, such as installation qualification (server and networking setup) and process qualification (study data and process management documentation), are needed to achieve 21 CFR Part 11 standards. With the support of REDCap Consortium members and compliance experts from across the Clinical and Translational Science Awardee (CTSA) Network, we plan to publish a 21 CFR Part 11 Compliance Implementation Guide for REDCap. This guide will help new institutions validate their systems and guide their study teams toward full compliance.
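To show how Gherkin scripts could eventually become executable, the sketch below binds step text to Python functions through regular expressions, which is the general mechanism that behavior-driven development frameworks such as behave (Python) and Cucumber provide in full. Everything here is hypothetical: the `step` decorator and `run_step` helper are minimal stand-ins written for illustration, and the step functions act on an in-memory dictionary rather than a REDCap instance; real automation would drive a browser or an API against a dedicated test server.

```python
import re

# Registry of (compiled pattern, function) pairs: a minimal step-definition mechanism.
STEP_DEFINITIONS = []


def step(pattern):
    """Decorator registering a function for steps whose text fully matches `pattern`."""
    def register(func):
        STEP_DEFINITIONS.append((re.compile(pattern), func))
        return func
    return register


def run_step(text, context):
    """Run the first step definition whose pattern fully matches the step text."""
    for regex, func in STEP_DEFINITIONS:
        match = regex.fullmatch(text)
        if match:
            # Captured groups become arguments to the step function.
            return func(context, *match.groups())
    raise LookupError(f"No step definition matches: {text!r}")


# Hypothetical steps acting on an in-memory stand-in, not on REDCap itself.
@step(r'I create a project titled "(.+)"')
def create_project(context, title):
    context["projects"].append(title)


@step(r'the project "(.+)" should exist')
def project_exists(context, title):
    assert title in context["projects"], f"{title} not found"


context = {"projects": []}
run_step('I create a project titled "RVP Demo"', context)
run_step('the project "RVP Demo" should exist', context)
print(context["projects"])  # ['RVP Demo']
```

Because the step text stays identical to what human testers read, the same validated scripts could be executed manually today and by a runner later, which is the continuity the Gherkin choice is meant to preserve.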

Conclusion

The RVP’s achievements mark a significant milestone in the evolution of REDCap as a ubiquitous EDC system for the global research community. Through innovative strategies and a focus on future preparedness for automation, the project has streamlined validation efforts and ensured adherence to stringent regulatory standards, setting a new benchmark in the realm of EDC system validation.

Looking forward, the REDCap Consortium is dedicated to continually refining and expanding the validation framework. The focus on automating testing processes and expanding the validation scope will ensure that REDCap remains a reliable, compliant, and versatile tool for clinical research, aptly equipped to meet the challenges of an evolving regulatory environment. The RVP exemplifies the impactful role of community-driven initiatives in software validation. As we move forward, the consortium’s ongoing efforts to refine and adapt the validation process will ensure that REDCap remains a pivotal asset in modern clinical research, poised to address the challenges of tomorrow.

Acknowledgments

We gratefully acknowledge the contributions of the Regulatory and Software Validation Committee (RSVC), which is part of the REDCap Consortium, for their significant role in this work. Special thanks to Chris Battiston (Women’s College Toronto), Emily Merrill (Beth Israel Deaconess Hospital), Elinora Price (Yale New Haven Health System), Pamela Thiell (University of Alberta), Kaitlyn Hofmann (Thomas Jefferson University), and Stephanie Oppenheimer (Medical University of South Carolina) for their dedicated efforts and expertise.

We also extend our gratitude to the Vanderbilt Institute for Clinical and Translational Research (VICTR) at Vanderbilt University Medical Center (VUMC) for their invaluable support. This includes developing and releasing REDCap software updates, comprehensive validation documentation, and user guides. Their ongoing support underscores the critical resources and efforts required to maintain REDCap’s compliance and operational efficiency.

We also acknowledge the Division of Biomedical Informatics at Cincinnati Children’s Hospital Medical Center (CCHMC). Special thanks to Michael Wagner, PhD, and the CCHMC REDCap Team: Maxx Somers, Warren Welch, Audrey Perdew, and other administrators for their support.

Author contributions

The authors confirm the contribution to the paper as follows: Study conception and design (Theresa Baker, Teresa Bosler, Adam DeFouw, Alex Cheng, Paul Harris), data collection (Theresa Baker, Teresa Bosler, Adam DeFouw, Michelle Jones), analysis and interpretation of results (Theresa Baker, Teresa Bosler, Michelle Jones, Alex Cheng), and draft manuscript preparation (Theresa Baker, Teresa Bosler, Michelle Jones, Alex Cheng, Paul Harris). Theresa Baker takes responsibility for the manuscript as a whole.

Funding statement

Additional thanks to the Trial Innovation Network (TIN) under the National Center for Advancing Translational Sciences (NCATS) of the National Institutes of Health (NIH), and the Clinical Research Informatics and Service Program Initiative (CRISPI), funded by the NIH and led by Leidos Biomedical Research, Inc., under contract number 17X198, for their valuable contributions.

This work was supported by the National Center for Advancing Translational Sciences (NCATS) of the National Institutes of Health (NIH) under award numbers UL1TR000445 and UL1TR001425, Trial Innovation Center (TIC) 1U24TR004437-01, and Recruitment Innovation Center (RIC) 1U24TR004432-01.

Competing interests

The authors declare none.