1. Introduction

Several natural language schemes such as ontologies (Li and Ramani Reference Li and Ramani2007), controlled natural language descriptions (Chakrabarti et al. Reference Chakrabarti, Sarkar, Leelavathamma and Nataraju2005), documentation templates (Lee et al. Reference Lee, Kim, Huh, Cho, Park and Lee2013), argumentation approaches (Eng, Aurisicchio, and Bracewell Reference Eng, Aurisicchio and Bracewell2017), artefact representations (Sasajima et al. Reference Sasajima, Kitamura, Ikeda and Mizoguchi1996), process models (Gero and Kannengiesser Reference Gero and Kannengiesser2012) and function structures (Gericke and Eisenbart Reference Gericke and Eisenbart2017) have been adopted in design research to envisage, encode, evaluate and enhance the design process. While these schemes have significantly impacted the development of several knowledge-based applications in design research and practise, it was not until the development of computational (e.g., graphical processing units (GPUs), cloud computing services) and methodological (e.g., NLTK,Footnote 1 WordNetFootnote 2) infrastructures that these schemes were popularly utilised to process unstructured natural language text data and extract design knowledge from these. These infrastructures have led to the evolution of what is currently understood and recognised as a family of natural language processing (NLP) techniques.

A typical NLP methodology converts a text into a set of tokens such as meaningful terms, phrases and sentences that are often embedded as vectors for applying these to standard NLP tasks such as similarity measurement, topic extraction, clustering, classification, entity recognition, relation extraction and sentiment analysis. These tasks primarily rely upon prescriptive language tools (e.g., Stanford Dependency ParserFootnote 3), lexicon (e.g., ANEWFootnote 4) and descriptive language models (e.g., BERTFootnote 5).

The ability of NLP methodologies to process unstructured text opens several opportunities such as topic discovery (Liang et al. Reference Liang, Liu, Chen and Jiang2018), ontology extraction (Bouhana et al. Reference Bouhana, Zidi, Fekih, Chabchoub and Abed2015), document structuring (Morkos, Mathieson, and Summers Reference Morkos, Mathieson and Summers2014), search summarisation (Noh, Jo, and Lee Reference Noh, Jo and Lee2015), keyword recommendation (Zhang et al. Reference Zhang, Kwon, Kramer, Kim and Agogino2017) and text generation (Souza, Meireles, and Almeida Reference Souza, Meireles and Almeida2021), which enable design scholars and practitioners to support knowledge reuse (Li et al. Reference Li, Chen, Zheng, Jiang and Wang2021a), needs elicitation (Lin, Chi, and Hsieh Reference Lin, Chi and Hsieh2012), biomimicry (Shu Reference Shu2010; Selcuk and Avinc Reference Selcuk and Avinc2021) and emotion-driven design (Dong et al. Reference Dong, Zhu, Peng, Tian, Guo and Liu2021) in the design process. NLP has therefore become an imperative strand of design research, where the scholars have extensively proposed NLP-based tools, frameworks and methodologies that are aimed to assist the participants in the design process, who otherwise often rely upon organisational history and personal knowledge to make important decisions, for example, choosing a lubricant for shaft interface.

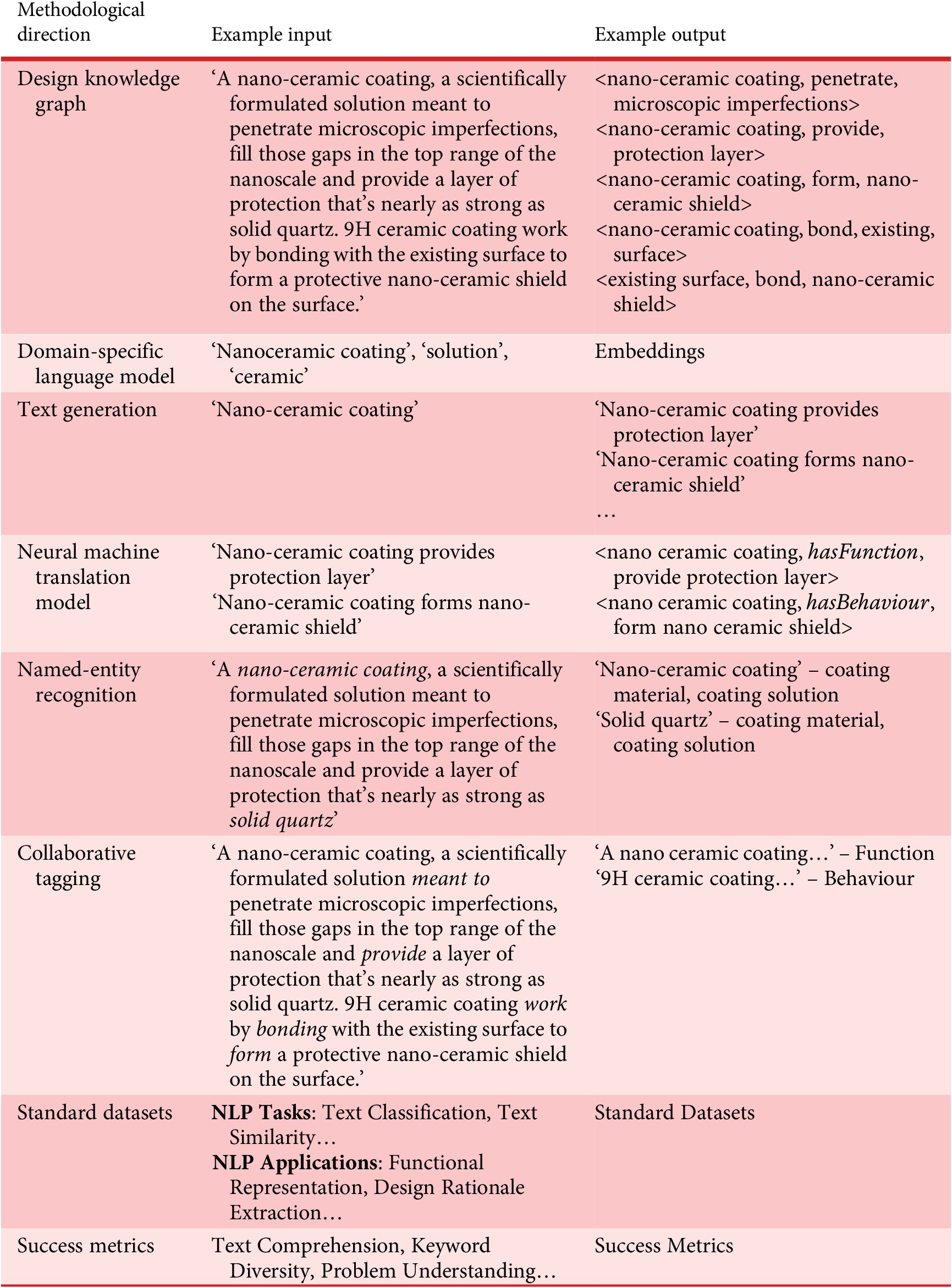

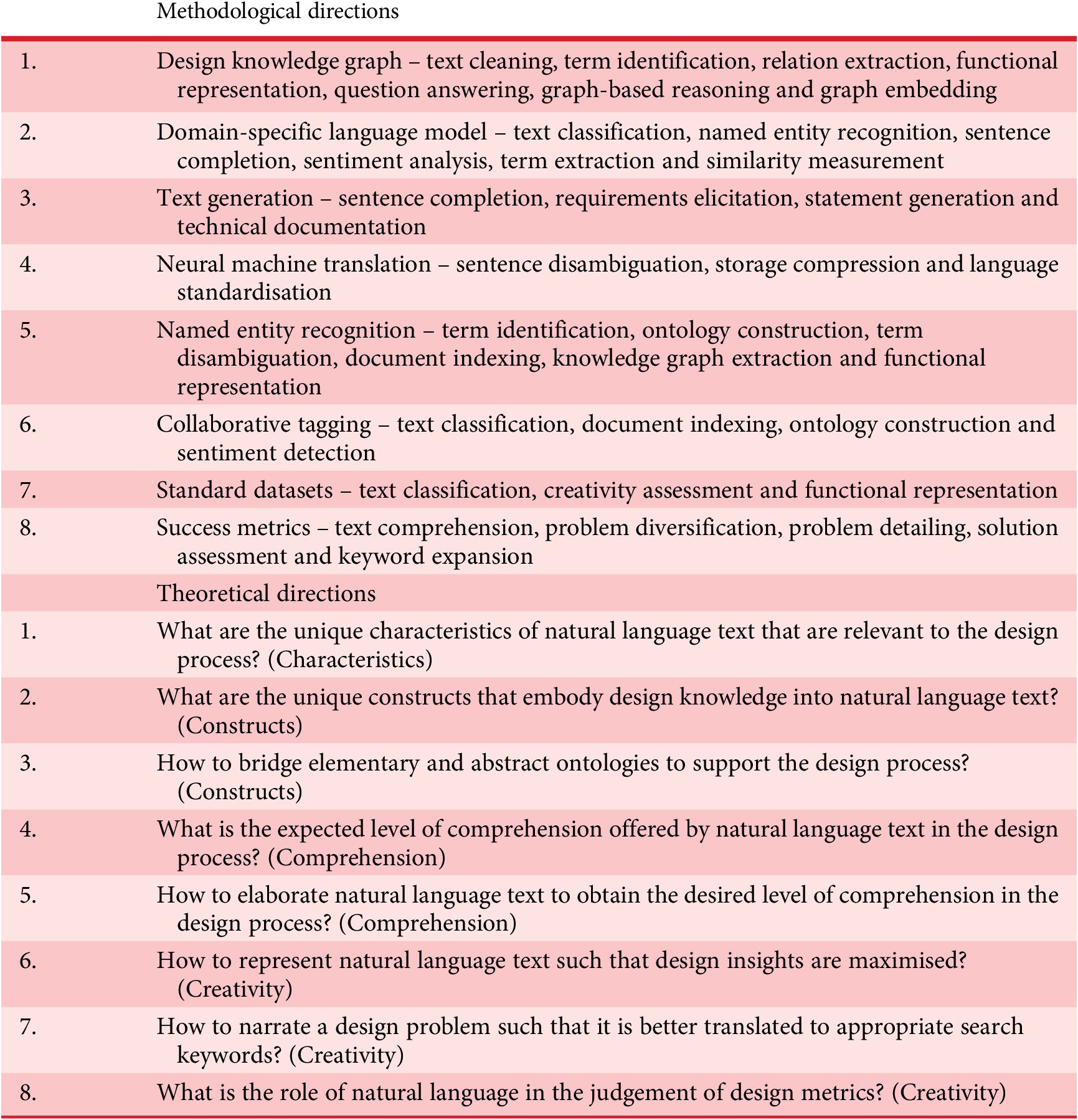

In this article, we review scholarly contributions that have applied as well as developed NLP techniques to process unstructured natural language text and thereby support the design process. Several motivations (as follows) have led to the effort of reviewing such contributions.

(i) To identify the methodological advancements that are necessary to bolster the performances of future NLP applications in-and-for design. For instance, the performances of parts-of-speech (POS) tagger and named entity recognition (NER) require significant improvement to process design documents. We have listed various possibilities of such methodological directions in Section 4.2.

(ii) To enhance theoretical understanding of the nature and role of natural language text in the design process. For example, it is still unclear as to which elements of design knowledge are necessary to be present in an artefact description so that it qualifies as adequate. We have asked several open questions along with necessary discussion to highlight such theoretical directions in Section 4.3.

(iii) To summarise a large body of NLP contributions into a single source. A variety of NLP applications to the design process are reported in journals outside the agreed scope of design research. Reviewing and summarising such contributions in this article could therefore be of importance. We have reviewed the contributions according to the type of text source in Section 3.

(iv) To create an NLP guide for developing applications to support the design process. For example, design methods like creating activity diagrams could be significantly benefited by NLP methodologies. We have indicated such cases in Section 4.1 using a design innovation process framework.

In line with the motivations described above, we adopt a heuristic approach (Section 2 and Appendix A) to retrieve 223 articles encompassing 32 academic journals. We review these articles in Section 3 according to the types of text sources and discuss these in Section 4 regarding applications and future directions.

2. Methodology

To retrieve the articles for our review, we use the Web of ScienceFootnote 6 portal, where we heuristically search the titles, abstracts and topics using a tentative set of keywords within design journals. Upon carrying out a frequency-based analysis of the preliminary results, we expand the keyword list as well as the set of design journals. We further expand our search to all journals that include NLP contributions to the design process. We then apply several filters and manually read through the titles, abstracts and full texts of a selected number of articles. In the end, we obtain 223 articles that we review in our work. We detail the search process in Appendix A. We have also uploaded the bibliometric data for all these articles on GitHub.Footnote 7

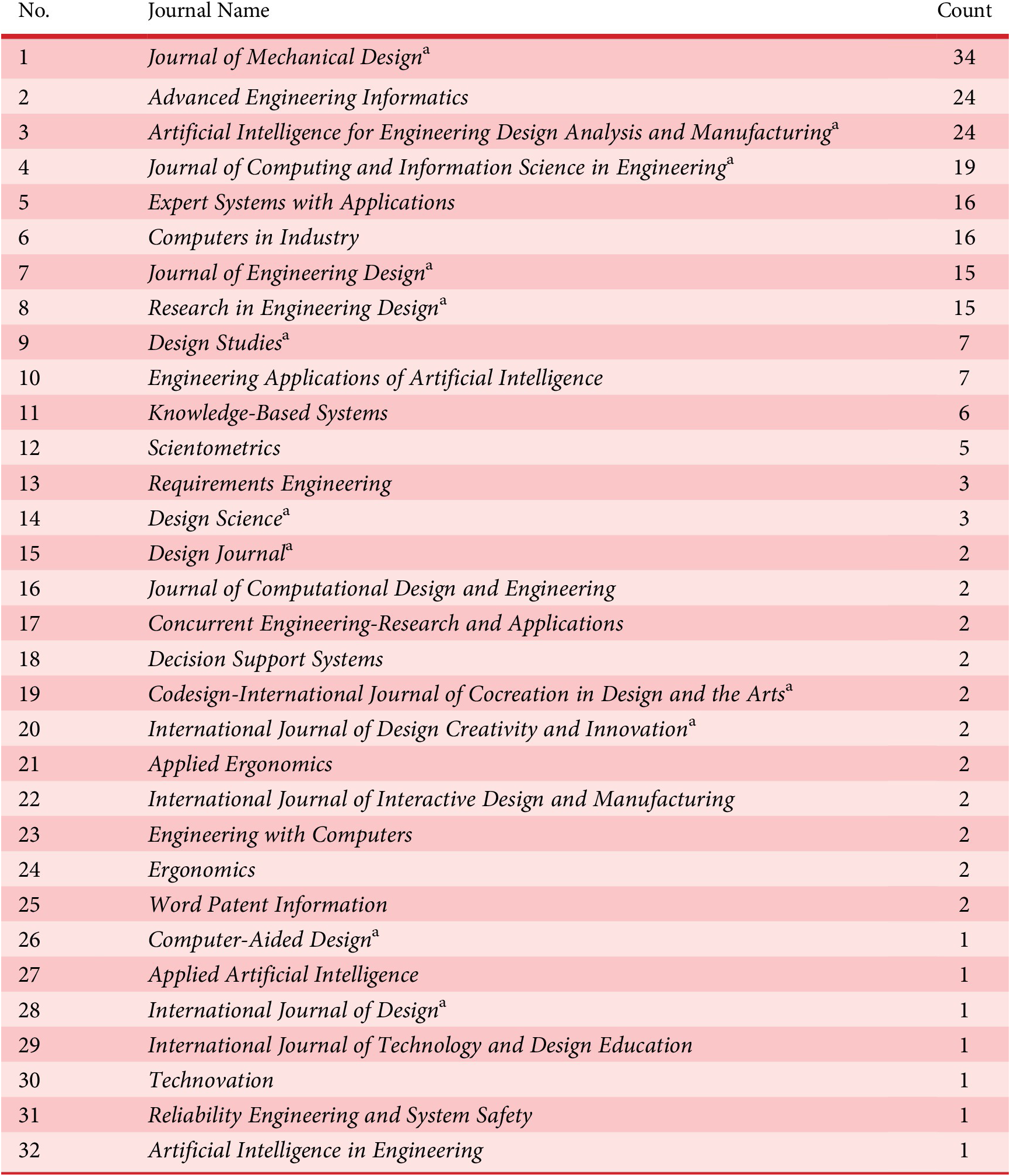

As shown in Table 1, the final set of papers is distributed across 32 journals. We have strategically chosen these journals such that these are primarily design-oriented and secondarily focused on general computer applications (e.g., Computers in Industry), artificial intelligence (e.g., Expert Systems with Applications) and technology related (e.g., World Patent Information). In addition, we have also included journals that focus on general design aspects such as ergonomics, requirements and safety.

Table 1. Article count w.r.t. journals

a Indicates the journals that we initially considered as those that fall within the scope of design.

As shown in the year-wise plot (Figure 1), there has been a steady increase in the number of contributions, which could be mainly due to the evolution of computational and methodological infrastructures.Footnote 8 While the contributions from the 1990s have been theoretically influential, the peak in the mid-2000s could be attributed to the popularity of biomimicry (Goel and Bhatta Reference Goel and Bhatta2004), ontologies (Romanowski and Nagi Reference Romanowski and Nagi2004), functional modelling (Bohm, Stone, and Szykman Reference Bohm, Stone and Szykman2005) and functional representation (Chandrasekaran Reference Chandrasekaran2005). Besides the year-wise plot, we report the 30 most frequent keywords as a word cloud in Figure 2, where we discard the generic keywords such as ‘design’ and ‘system’.

Figure 1. Article count w.r.t. the publication year. The data point at 2021 is applicable only until 19th September 2021.

Figure 2. Top 30 keywords w.r.t. frequency.

3. Review

In this section, we review the 223 articlesFootnote 9 thus selected using the methodology as described in Section 2 and Appendix A. To present the articles that we have reviewed, we considered the following categorisation schemes: 1) the types of natural language text data (e.g., internal reports, technical publications), 2) the types of NLP tasks (e.g., clustering, classification) and 3) the applications in the design process (e.g., brainstorming, problem formulation). Among these schemes, we adopt the types of text data because an NLP-based contribution is often associated with one text source data but combines a variety of NLP tasks and could be applied across different phases of the design process.

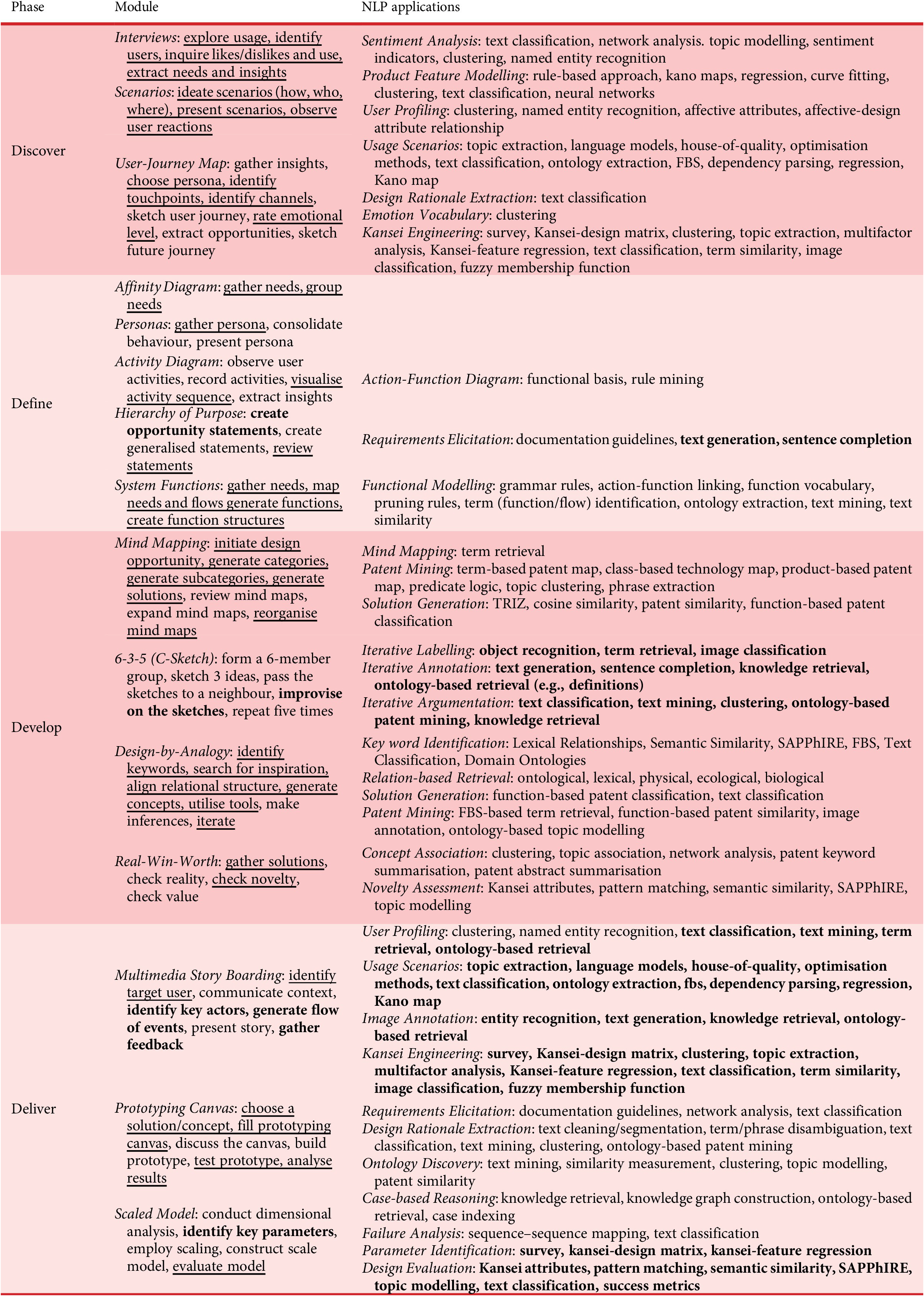

As shown in Figure 3, we map the categories of our scheme onto different phases of the design process as given in the model of the UK Design Business Council.Footnote 10 Among the types of text data sources as explained below, consumer opinions and technical publications are utilised in the design process, whereas the rest are generated in the design process.

• The internal reports are usually generated in the deliver phase of the design process, where the concepts are embodied and detailed into prototypes. These sources of natural language text often include the knowledge of failures, situations, logs, instructions, etc.

• The design concepts are generated during the develop phase, when the designers search, retrieve, associate and select concepts using various supports. The NLP contributions that we review under this category not only involve processing design concepts but also problem statements, keywords, supporting databases (e.g., AskNature), etc.

• The discourse transcripts constitute the recorded communication such as speech transcripts and emails that are obtained from organisational data or think-aloud experiments. These sources need not capture the communication that is pertinent to a particular phase but the design process as a whole.

• The technical publications that constitute patents, scientific articles and textbooks are considered external sources that are often utilised in the develop and deliver phases of the design process. Owing to the quality and quantity of text, these sources are best suited for the application of NLP tasks.

• The consumer opinions are external sources that are available in the form of product reviews and social media posts. These sources are predominantly utilised in the discover phase of the design process when the designers understand the usage scenarios and extract user needs.

• We categorise the miscellaneous contributions as ‘other sources’ that are not indicated in Figure 3.

Figure 3. Indication of natural language text data sources related to the design process, following the design process model from the UK Design Business Council.

3.1. Internal reports

Internal reports constitute over 80% of the knowledge in the industry (Ur-Rahman and Harding Reference Ur-Rahman and Harding2012) and are often present as product specifications, design rationale, design reports, drawing notes and logbooks (Li et al. Reference Li, Qin, Gao and Liu2014). Although conventional NLP methodologies like building classifiers (Sexton and Fuge Reference Sexton and Fuge2020) using internal reports are a recent phenomenon in design research, scholars have attempted to process internal reports and discover ontologies (Cavazza and Zweigenbaum Reference Cavazza and Zweigenbaum1994), since the early 90s.

Requirement extraction

Scholars initially aimed to extract design requirements as meaningful terms, phrases and segments from internal reports to reuse these in the design process. Such requirements shall also be derived from the past cases of failure in which violated constraints were recorded (Siddharth, Chakrabarti, and Ranganath Reference Siddharth, Chakrabarti and Ranganath2019a). As mentioned below, scholars initially encountered some challenges while extracting design requirements from internal reports.

Kott and Peasant (Reference Kott and Peasant1995, p. 94) observe that requirements in internal reports are incomplete, ambiguous, include inconsistent rationale and denote a wrong purpose. To mitigate some of these issues, they provide an example (1995, p. 103) as shown below to illustrate how lengthy requirements could be decomposed into short sentences.

The Loader shall provide the capability of handling HCU6/E pallets, ISO 40-foot containers, and Type V airdrop platforms. Loader shall be able to move forward with speed of at least 5 mph, the goal being 7 mph. An on-board maintenance diagnostic system shall be provided.

The Loader shall be able to perform the Loading function. The Load Type of the Loading function shall be any of: HCU6/E pallets, ISO 40-foot containers, and Type V airdrop platforms. The Loader shall be able to perform function Move Forward. The speed of Move Forward shall be at least 5 mph, the goal being 7 mph. The Loader shall include an On-Board Maintenance Diagnostic System.

Farley (Reference Farley2001, pp. 296, Reference Zhang, Luo, Li and Buis299) identifies that airtime faults (also called ‘snags’) include abbreviations (e.g., CHKD – checked, S0V – serviceable), acronyms, spelling errors (e.g., VLVE) and plural terms. While differing in structure and semantics (Kim, Bracewell, and Wallace Reference Kim, Bracewell and Wallace2007, p. 155), internal reports also include noisy terms (Menon, Tong, and Sathiyakeerthi Reference Menon, Tong and Sathiyakeerthi2005, p. 179), ‘plastic’ terms (e.g., ‘progress’, ‘planning’) and implicit phrases (e.g., ‘insufficient performance’) (Lough et al. Reference Lough, Van Wie, Stone and Tumer2009, p. 62). Kim, Bracewell, and Wallace (Reference Kim, Bracewell and Wallace2007, p. 162) suggest that acronyms (‘CNC’) and abbreviations (‘chkd’) shall be recognised in text using ontologies. To reduce ambiguity, Madhusudanan, Chakrabarti, and Gurumoorthy (Reference Madhusudanan, Chakrabarti and Gurumoorthy2016, p. 451) suggest that the anaphora (‘those’) shall be replaced with the corresponding entity in the previous sentence.

When co-ordination ambiguity exists in a sentence, for example, ‘slot widths and radii should conform to those of cutters’ (Kang et al. Reference Kang, Patil, Rangarajan, Moitra, Robinson, Jia and Dutta2019b, p. 2), it is unclear if the term ‘slot’ modifies ‘widths’ or ‘radii’. Here, Kang et al. (Reference Kang, Patil, Rangarajan, Moitra, Robinson, Jia and Dutta2019b, pp. 6, Reference Ameri, Kulvatunyou, Ivezic and Kaikhah7) suggest checking if the corresponding domain ontology includes (‘slot’, ‘hasProperty’, ‘radii’). To extract meaningful segments that are devoid of ambiguities, Madhusudanan, Chakrabarti, and Gurumoorthy (Reference Madhusudanan, Chakrabarti and Gurumoorthy2016, p. 452) measure coherence between sentences by integrating and extending WordNet-based similarity measures. To extract segments within a sentence, for example, ‘sharp corners should be avoided because they interfere with the metal flow’, Kang et al. (Reference Kang, Path, Rangarajan, Moitra, Jia, Robinson and Dutta2019a, p. 294) extract the italicised portion using domain concepts (e.g., corner) and attributes (e.g., isSharp). They also discard the unwanted portion using some rules (2019a, p. 295), for exasmple, the subordinate clause that occurs after a marker shall be discarded, except for ‘if’ or ‘unless’.

Ontology construction

To represent design rationale,Footnote 11 scholars have proposed a variety of prescriptive-generic ontologies (Ebrahimipour, Rezaie, and Shokravi Reference Ebrahimipour, Rezaie and Shokravi2010; Liu et al. Reference Liu, Liang, Kwong and Lee2010; Zhang et al. Reference Zhang, Luo, Li and Buis2013; Aurisicchio, Bracewell, and Hooey Reference Aurisicchio, Bracewell and Hooey2016; Siddharth, Chakrabarti, and Ranganath Reference Siddharth, Chakrabarti and Ranganath2019a) that build upon the fundamental idea of entity-relationship models (Taleb-Bendiab et al. Reference Tan, Wang, Yang, Chen, Huang, Sun and Liu1993). While generic ontologies are capable of capturing rationale from a variety of domains, the performances of these in terms of knowledge retrieval are expected to be low due to the level of abstraction. For example, a list of generic terms that represent ‘issue’ (Liu et al. Reference Liu, Liang, Kwong and Lee2010, p. 4) may not retrieve phrases that inherently or intricately communicate a design issue.

Domain-specific ontologies like QuenchML (Varde, Maniruzzaman, and Sisson Reference Varde, Maniruzzaman and Sisson2013) and Kodak Cover (Nanda et al. Reference Nanda, Thevenot, Simpson, Stone, Bohm and Shooter2007) overcome the limitations of generic ontologies while also being evolvable (Poggenpohl, Chayutsahakij, and Jeamsinkul Reference Poggenpohl, Chayutsahakij and Jeamsinkul2004), machine-readable (Biswas et al. Reference Biswas, Fenves, Shapiro and Sriram2008; Fenves et al. Reference Fenves, Foufou, Bock and Sriram2008) and semantically interoperable (Ding, Davies, and McMahon Reference Ding, Davies and McMahon2009). Scholars have therefore attempted to extract domain-specific ontologies from domain text sources.

Among domain-specific ontologies, Kim, Bracewell, and Wallace (Reference Kim, Bracewell and Wallace2007, p. 160) identify the following categories of relationships from aircraft engine repair notes: background, cause-effect, condition and contrast. Lough et al. (Reference Lough, Van Wie, Stone and Tumer2009, p. 33) understand from 117 risk statements that these are indicators of failure modes, performance, design and noise parameters. Using oil platform accident reports, Garcia, Ferraz, and Vivacqua (Reference Garcia, Ferraz and Vivacqua2009, pp. 430, 431) propose that concept relationships could be generalised as Is-a, Part-Of, Is-an-attribute-of, Causes, Time-Follows, Space-Follows and more. Hsiao et al. (Reference Hsiao, Ruffino, Malak, Tumer and Doolen2016, p. 147) populate 822 actions contained in 185 risk reports and identify that action could carry the attributes ‘purpose’ and ‘embodiment’, which are further categorised as ‘Approval’, ‘Gather_data’, ‘Coordinate’ and ‘Request’ (Hsiao et al. Reference Hsiao, Ruffino, Malak, Tumer and Doolen2016, p. 158).

Scholars have built ontologies by associating technical terms and segments using various similarity measures. Hiekata, Yamato, and Tsujimoto (Reference Hiekata, Yamato and Tsujimoto2010) use an existing ontology to associate word segments (component and malfunction) from 9604 shipyard surveyor reports. Lee et al. (Reference Lee, Kim, Huh, Cho, Park and Lee2013) mine the task data from shipbuilding transportation logs and cluster these using a variety of distances (e.g., Jaccard, Euclidean). Kang and Tucker (Reference Kang and Tucker2016) extract functions as topic vectors from 16 module descriptions (Pimmler and Eppinger Reference Pimmler and Eppinger1994) of an automotive control system. They propose that the cosine similarity between a pair of topic-vectors (function) quantifies the functional interaction between corresponding modules. Song et al. (Reference Song, Yoon, Lee and Park2017, pp. 265–269) construct a semantic network using iPhone Apps PlusFootnote 12 text data that includes 697 service documents indicating 66 feature elements and 95 feature keywords.

Arnarsson et al. (Reference Arnarsson, Frost, Gustavsson, Jirstrand and Malmqvist2021) use latent dirichlet allocation (LDA) to cluster the Doc2Vec-based embeddings of over 8000 Engineering Change Requests (ECRs) in a commercial vehicle manufacturer. Yang et al. (Reference Yang, Kim, Hur, Cho, Han and Seo2018) construct an ontology using 114,793 problem-solution records within preassembly reports inside an automotive manufacturer. They use the ontology to process (e.g., identify n-grams), structure and represent new text data in various forms (2018, p. 214) to facilitate the design and managerial decisions. Xu et al. (Reference Xu, Dang, Zhang and Chen2020) obtain the text data of 1844 problems and 1927 short-term remedies from a vehicle manufacturer. To link the problems and remedies, they transform the text using term frequency – inverse document frequency (TD-IDF) and perform K-means clustering for problems and short-term remedies, while also linking the clusters.

Design knowledge retrieval

While ‘knowledge retrieval’ could assume a broad meaning across different areas of research and practise, we mention this in reference to the methods that ‘retrieve’ terms, phrases and segments that include components, issues, constraints and interactions. The outputs of such retrieval methods shall be considered as ‘design knowledge’ if it is possible to re-represent these as <entity, relationship, entity> triples that form constituents of an artefact that is relevant to the design process.

For example, a segment extracted from a transistor patent (Saeroonter et al. Reference Saeroonter, Beak, Lee, Baeck, Shin, Jeyong and Dohyung2021, p. 8) – ‘an insulating material is deposited on the whole surface of the substrate having the first semiconductor layer’ shall be encoded into triples such as <insulating material, is deposited, whole surface>, <whole surface, of, substrate> and < substrate, having, first semi-conducted layer> that form the constituents of the patent that shall be utilised as knowledge aid in the design process. To extract such relevant terms, phrases and segments, the scholars have adopted a couple of directions. First, using an ontology that shares the same domain as target text data so that relevant portions of the text are identified. Second, indexing the unstructured text data using a classification algorithm so that the search is restricted to the relevant portions.

To assist with case-based reasoning, Guo, Peng, and Hu (Reference Guo, Peng and Hu2013) build a domain ontology using 1000 injection moulding cases that were encountered in a Shenzhen-based company. They demonstrate using an Information-Content (IC) based similarity measure as to how the ontology aids in knowledge retrieval. For case-based retrieval, Akmal, Shih, and Batres (Reference Akmal, Shih and Batres2014) compare a variety of ontology-based similarity measures (e.g., Tversky’s Index, Dice’s Co-efficient) against numeric similarity measures (e.g., Wu-Palmer, Lin) to observe that the former deviated less from expert’s similarity scores. To retrieve CAD models using text inputs, Jeon et al. (Reference Jeon, Lee, Hahm and Suh2016) demonstrate how ontologies could be used as intermediaries. To assist CAD designers with design rule recommendations, Huet et al. (Reference Huet, Pinquie, Veron, Mallet and Segonds2021) create a knowledge graph around a design rule using relationships such as ‘has keyword’ (semantic context), ‘has material’ (engineering context) and ‘has employee’ (social context).

To relate phenomena and failure modes, Wang et al. (Reference Wang, Wu, Liu and Gao2010) extract a lightweight ontology from 400 aviation engine failure analysis reports and utilise the ontology to represent phenomena and failure modes as attribute-value vectors. They (2010, pp. 270, 271) then map the phenomena and failure mode vectors using an artificial neural network. To extract candidate components and responsibilities from the design rationale text, Casamayor, Godoy, and Campo (Reference Casamayor, Godoy and Campo2012) obtain sentences from IBM supported rationale suiteFootnote 13 and the UNICEN university repository.Footnote 14 Upon classifying the sentences as functional or nonfunctional using a semi-supervised approach, they extract verb phrases as candidate responsibilities and group these using the hierarchical clustering method to identify candidate components.

To understand the coupling between design requirements, Morkos, Mathieson, and Summers (Reference Morkos, Mathieson and Summers2014, p. 142) construct a bipartite network of terms and 374 requirements obtained from Toho (160) and Pierburg (214) manufacturing projects. They label a portion of these terms as ‘useful’ or ‘not useful’ and vectorise these using the network properties (29 features) and string length (1 feature). They train a neural network using the labelled dataset to classify the rest of the terms. Using the set of terms that are classified as ‘useful’, they reconstruct the bipartite network and retrain the classifier until the length of the list of terms is saturated (Morkos, Mathieson, and Summers Reference Morkos, Mathieson and Summers2014, p. 149).

To classify and index airtime faults, Tanguy et al. (Reference Tanguy, Tulechki, Urieli, Hermann and Raynal2016) train a support vector machines (SVM) classifier on 136,861 labelled documents that were obtained from the French Aviation Regulator – DGAC. To classify the causes of automotive issues, Xu, Dang, and Munro (Reference Xu, Dang and Munro2018) obtain titles and descriptions of 2420 issues from a Chinese automotive manufacturer. They retrieve cause-related phrases using a domain ontology and label these with the categories of the Fishbone diagram – Man, Machine, Material, Method and Environment. They use the labelled dataset to train a binary-tree-based SVM classifier.

To identify computer-supported collaborative technologies, Brisco, Whitfield, and Grierson (Reference Brisco, Whitfield and Grierson2020, p. 65) obtain Global Design Project text data from 104 students and classify the sentences into requirements, technologies and technology functionalities using RapidMinerStudio.Footnote 15 Lester, Guerrero, and Burge (Reference Lester, Guerrero and Burge2020, pp. 133–135) classify the chrome bug reportsFootnote 16 into requirements, decisions and alternatives using the Naïve Bayes algorithm to find that features selected using optimisation approaches (e.g., Ant colony) result in higher F-1 measure compared to document characteristics (e.g., TF-IDF).

To index manufacturing rules, Ye and Lu (Reference Ye and Lu2020) train a feedforward neural network with two hidden layers (128 and 32 neurons) using the embeddings of manufacturing rules and eight category labels. Song et al. (Reference Song, Lee, Choi and Kim2020) train a Bi-directional LSTM using 350 building regulation sentences to extract predicates and arguments. For example, in a design rule – ‘The roof height of the building must be 15 meters or less,’ the predicate is ‘be less’ and the arguments are ‘roof height’, ‘building’and ‘15 meters.’ To automatically extract design requirements, Fantoni et al. (Reference Fantoni, Coli, Chiarello, Apreda, Dell’Orletta and Pratelli2021) process tender documents of Hitachi Railway using a variety of ontologies and classify a sentence as a requirement if it includes certain keywords.

Summary

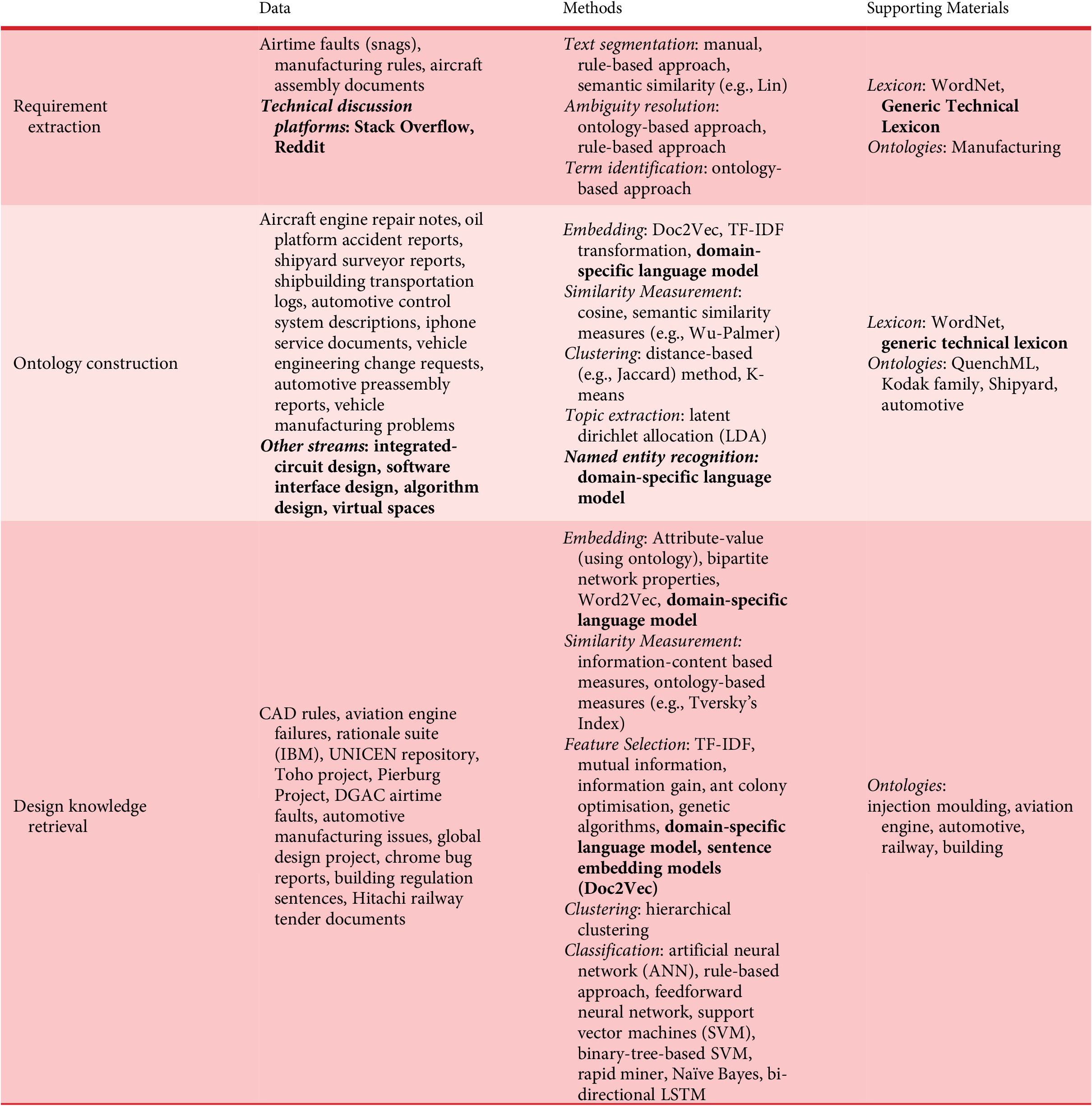

We summarise the NLP methodologies applied to internal reports in Table 2 according to data, methods, and supporting materials. We indicate the future possibilities of these in bold font. We use the same table format to summarise the literature review for the remaining types of data sources as well. Internal reports mainly include issues and remedies that are pertinent to a specific organisation or a domain. Scholars have used a variety of internal reports to process, extract ontologies, and classify sentences. They have also demonstrated how ontologies are used for effective knowledge retrieval.

Table 2. Summary of NLP methodologies and future possibilities (bolded) with internal reports

We can observe from the data column of Table 2 that internal reports have been utilised from a variety of domains: Aerospace, Shipbuilding and Automotive. Surprisingly, none of the methodologies has utilised data sources from the most popular ‘silicon-based streams such as Integrated Circuits, Software Architecture and Data Structures. While discussion platforms like Stack Overflow and Reddit may not be classified as those included within internal reports, they include the knowledge of issues and solutions that are found both in the industry and academia.

Upon training nearly 0.8 million labelled patent documents for a classification task, Jiang et al. (Reference Jiang, Hu, Magee and Luo2022) observe that the accuracy tends to be higher when the input feature vectors integrate text, image and meta information of the document compared to only-text and only-image feature vectors. Hence, the analysis of mere text in multimodal documents like transportation logs (Lee et al. Reference Lee, Kim, Huh, Cho, Park and Lee2013) may not reflect the entire design knowledge that is being communicated in these.

As understood from the list of data sources, the accessibility to internal reports is highly restricted. Although analyses of internal reports have a high probability of extracting design knowledge, these sources are also characterised by low information content, for example, 350 building regulation sentences (Song et al. Reference Song, Lee, Choi and Kim2020). While this caveat limits the performances of classifiers, the ontologies extracted from these also may not be comprehensive. Hence, it is necessary to aggregate various internal reports from a domain into a single source of natural language text, for example, NASA Memorandum on Space Mechanisms – lessons learned (Shapiro et al. Reference Shapiro, Murray, Howarth and Fusaro1995).

As far as the methods are concerned, although scholars have applied several state-of-the-art methods to perform NLP tasks such as classification and clustering, they are yet to utilise language models like BERT. While such language models are expected to perform poorly on domain documents (Fantoni et al. Reference Fantoni, Coli, Chiarello, Apreda, Dell’Orletta and Pratelli2021), it would be significantly useful to develop domain-specific language models, for example, BioBERT (Lee et al. Reference Lee, Jeon, Ahn and Kwon2020). These models shall be useful for obtaining embeddings and subsequent tasks such as Named Entity Recognition and Text Classification. Apart from the cost and resource limitations, training these language models also requires high amounts of text data that does not seem currently feasible with internal reports.

Term identification is a fundamental NLP problem that has not been given enough attention by design scholars apart from those that have utilised internal reports. The terms like ‘roller bearing’ reduce the ambiguity caused by individual words ‘roller’ and ‘bearing’. Since meaningful terms are made of two or more words (Tseng, Lin, and Lin Reference Tseng, Lin and Lin2007; Fantoni et al. Reference Fantoni, Apreda, Dell’Orletta and Monge2013), it is critically important to identify these before applying higher-level NLP tasks. Scholars have resorted to ontology-based approaches to identify these terms (Yang et al. Reference Yang, Kim, Hur, Cho, Han and Seo2018; Fantoni et al. Reference Fantoni, Coli, Chiarello, Apreda, Dell’Orletta and Pratelli2021). While ontology-based approaches are recommended over common-sense lexicon (e.g., WordNet), it is necessary to rely on domain-specific language models and generic- design- and technical-oriented lexicon to identify general terms (e.g., rough surface). Although such supports are hard to build, there has been recent progress in the literature that adopts patent databases to develop a generic lexicon (Sarica, Luo, and Wood Reference Sarica, Luo and Wood2020; Jang, Jeong, and Yoon Reference Jang, Jeong and Yoon2021).

Term disambiguation is another fundamental NLP problem, for example, the terms such as ‘cathodic protection anode bed’, ‘deep anode well’ and ‘deep ground bed’ are often used to refer to ‘cathodic protection well’ (Xu and Cai Reference Xu and Cai2021, p. 5). Since the ambiguity posed by these terms concerns the underlying meaning, the approach to resolve this issue should concern the measurement of semantic similarities among these terms. Gu et al. (Reference Gu, Xu, Wu, Yang and Ye2005, p. 108) resolve semantic conflicts between similar sentences, for example, ‘I will buy a bike’ and ‘I will buy a bicycle’ using a WordNet-based ontology – FloDL. Such a type of semantic conflict resolution is hardly relevant to industrial applications. While usage of a common-sense lexicon like WordNet is not recommended for such tasks, it is necessary to obtain true embeddings of these terms using domain-specific language models so that cosine similarity reflects ‘nearly’ actual similarity.

3.2. Design concepts

Often associated with the develop phase of the design process (as indicated in Figure 3), design concepts are generated through search, retrieval, association and selection. The NLP methodologies applied to these stages need not use only concept descriptions as primary text sources but also the problems, keywords, source of stimuli, etc.

Concept search

To formulate a comprehensive set of keywords to search for concepts, researchers have sought WordNet for identifying troponyms (‘prevent’ ➔ ‘inhibit’) (Cheong et al. Reference Cheong, Chiu, Shu, Stone and McAdams2011, p. 3; Linsey, Markman, and Wood Reference Linsey, Markman and Wood2012, pp. 3, Reference Akmal, Shih and Batres4), bridge verbs (Chiu and Shu Reference Chiu and Shu2007, p. 50), semantically distant verbs (Chiu and Shu Reference Chiu and Shu2012, pp. 272, Reference Yan, Zanni-Merk, Cavallucci and Collet291) and morphological nouns (Lee, Mcadams, and Morris Reference Lee, Mcadams and Morris2017, p. 5). Chakrabarti et al. (Reference Chakrabarti, Sarkar, Leelavathamma and Nataraju2005, pp. 119–121) provide a systematic approach to searching and retrieving biological stimuli based on the SAPPhIREFootnote 17 model. In the Action construct of the SAPPhIRE model, for instance, they propose that the search could be a combination of verb, noun and adjective. Rosa, Cascini, and Baldussu (Reference Rosa, Cascini and Baldussu2015) build upon the approach of Chakrabarti et al. (Reference Chakrabarti, Sarkar, Leelavathamma and Nataraju2005) by combining SAPPhIRE and Function-Behaviour-Structure to form a unified ontology for biomimicry.

To effectively search for concepts of biological species, Rosa et al. (Reference Rosa, Rovida, Vigano and Razzetti2011) develop a structured database of these and group these using high-level functions that are represented using <verb, noun, predicate> where the predicate is represented as <preposition, noun>. Vandevenne et al. (Reference Vandevenne, Verhaegen, Dewulf and Duflou2015, pp. 21, 22) use the k-nearest neighbours (k-NN) algorithm to index the AskNatureFootnote 18 database by classifying 1531 unique analogical transfer strategies into the following levels (2015, p. 25): group (e.g., move or stay put, modify), subgroup (e.g., attach, adapt) and function (e.g., temperature, compression). Chen et al. (Reference Chen, Tao, Li, Liu, Li and Tang2021) examine 20 AskNature pages to extract meaningful keywords and structure–function knowledge using, respectively, TF-IDF values and selected dependency patterns.

The above-stated contributions aim to enhance the concept search w.r.t. the biological domain. We also review some approaches that sought other domains. To improve the quality of concepts generated by architects (Segers, de Vries, and Achten Reference Segers, de Vries and Achten2005), De Vries et al. (Reference De Vries, Jessurun, Segers and Achten2005) integrate WordNet-based word graphs and a sketching canvas. To assist novice designers to form domain-specific keywords, Lin, Chi, and Hsieh (Reference Lin, Chi and Hsieh2012, p. 356) map user needs and domain concepts through the so-called ‘OntoPassages’ that were extracted using a domain ontology, which was built using 111 documents belonging to the National Center for Research on Earthquake Engineering, Taiwan.

To recommend a suitable design method for a problem description, Fuge, Peters, and Agogino (Reference Fuge, Peters and Agogino2014) obtain 886 case study descriptions and method labels from human-centred design (HCD) Connect. They use latent semantic analysis (LSA) to obtain the vectors of the descriptions and train the following classifiers using the labelled dataset: Random Forest, SVM, Logistic Regression and Naïve Bayes. To enhance problem definition, Chen and Krishnamurthy (Reference Chen and Krishnamurthy2020) facilitate human-AI collaboration in completing problem formulation mind maps with the help of ConceptNet and the underlying relationships.

Concept retrieval

The contributions in this section are primarily retrieval systems that are built under the assumption that the problem is well defined, and the search keywords are known beforehand. Chou (Reference Chou2014) and Yan et al. (Reference Yan, Zanni-Merk, Cavallucci and Collet2014) adopt the Su-field problem modelling approach to systematically obtain ideas through TRIZ and manually evaluate these using a fuzzy-linguistic scale. Kim and Lee (Reference Kim and Lee2017) integrate various design-by-analogy approaches into an interface called Bionic MIR that allows retrieval of biological systems based on physical, biological and ecological relations.

In a tool named Retriever, for a search keyword (e.g., chair) and a relation (e.g., ‘is used for’) from ConceptNetFootnote 19 categories, Han et al. (Reference Han, Shi, Chen and Childs2018a, pp. 467–469) retrieve three re-representations (e.g., chair, bench, sofa) and corresponding entities (e.g., leading a meeting, growing plants, reading a book) that are connected by the selected relation. In another tool named Combinator, for the same inputs, Han et al. (Reference Han, Shi, Chen and Childs2018b, p. 12/34) retrieve the related entity (noun, verb, adjective) and concatenate it with the search keyword, for example, ‘Handbag’ ➔ ‘Origami Handbag’.

To support the rapid retrieval of concepts, Goucher-Lambert et al. (Reference Goucher-Lambert, Gyory, Kotovsky and Cagan2020) employ LSA on a design corpus to identify a near or far concept from the current concept. They then provide the concept thus identified from the corpus as a stimulus to the designers for generating more concepts. To demonstrate retrieval of concepts using the C-K theory (Hatchuel, Weil, and Le Masson Reference Hatchuel, Weil and Le Masson2013), Li et al. (Reference Li, Chen, Zheng, Wang, Jiang and Jiang2020) extract a healthcare knowledge graph by mining SVO tripes of the form:  $ \underset{amod}{\underbrace{NP_{sub}}}\overset{nsubj}{\to}\underset{advmod}{\underbrace{VP}}\overset{dobj}{\to}\underset{amod}{\underbrace{NP_{obj}}} $ from 18,000 Chinese websites. They also populate an FBS-based ‘nursing bed’ knowledge graph using experts.

$ \underset{amod}{\underbrace{NP_{sub}}}\overset{nsubj}{\to}\underset{advmod}{\underbrace{VP}}\overset{dobj}{\to}\underset{amod}{\underbrace{NP_{obj}}} $ from 18,000 Chinese websites. They also populate an FBS-based ‘nursing bed’ knowledge graph using experts.

Concept association

Once the concepts are generated using the search and retrieval methods, it is necessary to group similar concepts, especially when a large number of concepts are crowdsourced. In this section, we review NLP contributions that associate concepts predominantly using graph-based approaches. Zhang et al. (Reference Zhang, Kwon, Kramer, Kim and Agogino2017, p. 2) group 930 concepts (described as paragraphs) that were obtained from a human-centred design courseFootnote 20 using Word2Vec and the hierarchical clustering algorithm. Ahmed and Fuge (Reference Ahmed and Fuge2018, p. 11,12/30) measure topic level association for 3918 ideas that were submitted to OpenIDEOFootnote 21 using a Topic Bison Measure, which indicates if a topic pair co-occurs in an idea as well as the proportions of the pair.

To examine the effectiveness of crowdsourced stimuli, Goucher-Lambert and Cagan (Reference Goucher-Lambert and Cagan2019) crowdsource concepts as three nouns and three verbs for 12 design problems and categorise these as near, far and medium stimuli based on the frequency and WordNet-based path similarity. He et al. (Reference He, Camburn, Liu, Luo, Yang and Wood2019) crowdsource text descriptions of thousands of ideas to future transportation systems via Amazon Mechanical Turk. They (2019, pp. 3, 4) form a coword network of these ideas and use MINRESFootnote 22 to extract core ideas from the network. Liu et al. (Reference Liu, Wang, Li and Liu2020, p. 6) summarise 1757 scientific articles (solutions to a transmission problem) by building Word2Vec-based semantic networks around the central keywords – {transmission, line, location, measurement, sensor and wave}. Camburn et al. (Reference Camburn, Arlitt, Anderson, Sanaei, Raviselam, Jensen and Wood2020a, Reference Camburn, He, Raviselvam, Luo and Wood2020b) utilise HDBSCANFootnote 23 for clustering crowdsourced concepts and TextRazorFootnote 24 for extracting entities and topics from these.

Concept selection

In this section, we review the contributions that have utilised NLP supports to evaluate and select concepts. These concepts primarily aim to measure one or more success metrics (e.g., novelty). Delin et al. (Reference Delin, Sharoff, Lillford and Barnes2007, pp. 125–129) use bipolar adjectives obtained from the British National Corpus (BNC) to rate concepts. Strug and Slusarczyk (Reference Strug and Slusarczyk2009) evaluate floor plan concepts using the frequently occurring patterns in the hypergraph representations of past floor plans. To understand the concept selection phenomenon, Dong et al. (Reference Dong, Sarkar, Yang and Honda2014) model the change of linguistic preferences using the Markov Process and calculate the transition probabilities. To calculate creativity, Gosnell and Miller (Reference Gosnell and Miller2015, pp. 4–6) tie 27 concepts with some adjectives and match these against the terms – ‘innovation’ and ‘feasibility’ using DISCO.Footnote 25

Chang and Chen (Reference Chang and Chen2015) obtain 108 ideas for future personal computers from DesignBoomFootnote 26 and mine the idea-related information using RapidMiner. They apply K-means clustering to group the ideas and Analytic Hierarchy Comparison to evaluate these. Siddharth, Madhusudanan, and Chakrabarti (Reference Siddharth, Madhusudanan and Chakrabarti2019b, pp. 3–5) measure the novelty of a concept by comparing it against all entries in a reference product database across SAPPhIRE constructs and using a WordNet-based similarity. To examine the success of ideas that were submitted to Kickstarter – a crowdfunding platform, Lee and Sohn (Reference Lee and Sohn2019) shortlist 595 ideas in the Software-Technology category. They apply LDA to extract the most important 50 topics from the text descriptions of these ideas. Using the 50 topics and the funding received by the ideas, they conduct a conjoint analysis to examine the contribution of a topic to the success of an idea (2019, pp. 107, 108).

Summary

As we have summarised in Table 3, the NLP contributions that are pertinent to design concepts assist concept search, retrieval, association and selection. Scholars have utilised a variety of knowledge bases to search and retrieve concepts, while also recommending novel ways to expand search keywords. Since crowdsourcing concepts have recently emerged as an alternative to traditional laboratory-based design studies, scholars have therefore found the need to associate and group the concepts for assessing these. The NLP applications to concept selection are still emerging as there exist many metrics and many ways to compute these.

Table 3. Summary of NLP methodologies and future possibilities (bolded) with design concepts.

While scholars have utilised both general and domain-specific text sources for searching concepts, it is also possible to explore more text sources such as Encyclopaedia and How and Stuff Works. One of the most consulted platforms – YouTube seems unexplored. Although being primarily a video-sharing platform, the descriptions, comments and captions on YouTube are still useful text sources for inspiration.

To retrieve suitable search keywords, in addition to NLP-centric approaches like dependency parsing and TF-IDF, it is necessary to construct design knowledge graphs for specific streams such as engineering, architecture and software. Such knowledge graphs are likely to recommend new terms as well as assist with text completion for queries. For example, if we begin to search for ‘bearing’ and next-word predictions are ‘lubricant’ and ‘load’, we could choose ‘bearing lubricant’ and leverage from next word predictions like ‘density’, ‘film’ and ‘material’. Common-sense knowledge graphs like Google (and YouTube) make predictions based on many senses of the word ‘bearing’ and do not return the words as we have indicated in the example.

WordNet and ConceptNet have been the main supporting pillars for concept search as well as retrieval, while generic ontologies such as FBS and SAPPhIRE have been utilised to largely channel the search and retrieval processes. Since creative concepts emerge from the marriage of diverse sets of domains, a common-sense lexicon like WordNet is still a preferable supporting material. Similarly, scholars can also use readily available search methods like Google APIs to retrieve results from sources such as YouTube and patent databases. However, while retrieving concepts from a domain-specific knowledge source, it makes sense to utilise the domain-specific ontologies for query formation.

Alongside ontologies, scholars could benefit from the embeddings of common-sense language models such as BERT and GPT-xFootnote 27 to obtain nearby search keywords, compare search results, etc. Since the concept search and retrieval are largely exploratory and preferably involve diverse domains, the usage of common-sense language models shall not limit the desired performance of the NLP applications. While the same applies to concept association as well, the scholars shall also utilise domain-specific language models if the design problem is quite domain-specific.

Concept selection involves one or more metrics such as novelty, value, feasibility, and so forth. Scholars could benefit from an affective lexicon to rate the design concepts and carry out systematic approaches to analyse and present the results. Since the theory behind these metrics is yet to be consolidated, the NLP applications are still in nascent stages. Scholars can only benefit from preliminary NLP tasks such as similarity measurement, frequency analysis and term retrieval to assist them with one or more steps in the concept selection process.

Design theorists could benefit from the NLP methods to examine how successful concepts are selected. For example, Arts, Hou, and Gomez (Reference Arts, Hou and Gomez2021) observe the causality between frequencies of unigrams, bigrams and trigrams and the likelihood of a patent getting an award, for example, Nobel, Lasker, Bower and A.M. Turing. Similarly, scholars could leverage the text descriptions of concepts that have been selected for awards like Red Dot (indicates novelty or surprise)and Malcolm Baldrige National Quality Award (indicates value). Moreover, to understand the actual value of a concept, scholars could also utilise the sales information. For instance, Argente et al. (Reference Argente, Baslandze, Hanley and Moreira2020) connect the number of product units sold from Nielsen’s Retail Scanner data with the ‘value’ of a patent.

3.3. Discourse transcripts

Design communication is often documented as discourse transcripts in protocol studies, think-aloud experiments and recorded design workshops. Starting with a design issue or a problem, designers communicate subissues, solutions, related artefacts, arguments and justifications. Identifying and analysing the set of concepts that arise during such communications allows scholars to reveal a variety of insights about the design process.

A sequence of closely-related concepts within a segment in discourse transcripts represents a period of coherent communication, which could affect the design outcomes in terms of success metrics such as novelty and feasibility (Dong Reference Dong2007). To identify such concepts, scholars have extracted nouns, phrases, segments and topics, and associated these using vector-based or corpus-based similarity measurement techniques. We review such NLP-based approaches that are currently preferable over traditional linkographs (Botta and Woodbury Reference Botta and Woodbury2013).

Concept identification

Scholars have adopted different approaches to extract key concepts such as topics, words, ontology-based entities and n-grams. Wasiak et al. (Reference Wasiak, Hicks, Newnes, Dong and Burrow2010, p. 58) analyse emails to discover topics such as functions, performance, features, operating environment, materials, manufacturing, cost and ergonomics. From the email exchanges in a traffic wave project, Lan, Liu, and Lu (Reference Lan, Liu and Lu2018, p. 7) map word-frequency vectors and topic vectors (tasks, timestamps, persons, organisations, locations, input/output, techniques/tools) using Deep Belief Network – DBN (Bengio Reference Bengio2009; Lan, Liu, and Feng Lu Reference Lan, Liu and Feng Lu2017).

Goepp et al. (Reference Goepp, Matta, Caillaud and Feugeas2019, p. 165) identify the following speech acts from email exchanges: Information, Explication, Evaluation, Description and Request. These speech acts were associated with a set of verbs, for example, ‘Explication’ was associated with ‘explain’ and ‘clarify’. To capture significant phrases that denote design changes, using the DTRS7 dataset, Ungureanu and Hartmann (Reference Ungureanu and Hartmann2021) extract n-grams (0 < n < 8) based on frequency analysis and examine how short terms progress to a variety of long terms; for example, ‘a little’ ➔ ‘a little bit bigger’, ‘a little splash of colour’ (2021, p. 12).

Design process characterisation

To characterise the design process, scholars have aggregated the concepts thus identified from discourse transcripts into a whole (e.g., a semantic network) and performed analyses as reviewed below.

To characterise coherence in design communication, Dong (Reference Dong2005, pp. 450, 451) obtain vector representations of emails and memos using LSA and measure the standard deviations of these w.r.t. their centroid (mean). A low standard deviation of the set of vector representations is considered to denote a high coherence in communication (Dong, Hill, and Agogino Reference Dong, Hill and Agogino2004, p. 381). In an alternative approach, Dong (Reference Dong2006, pp. 39, 40) identifies segments by linking noun sequences using lexical relationships obtained from WordNet.

Based on the word occurrences of design alternatives within a time interval, Ji, Yang, and Honda (Reference Ji, Yang and Honda2012) model the relationship between preferences using the Preference Transition Model and Utterance-Preference Model. Menning et al. (Reference Menning, Grasnick, Ewald, Dobrigkeit and Nicolai2018, pp. 139, 142) use cosine similarity between LSA vectors of consecutive discourse entities to measure coherence. To characterise the uncertainty of the design process, Kan and Gero (Reference Kan and Gero2018) measure the text entropy of the transcripts that were obtained from protocol studies.

Georgiev and Georgiev (Reference Georgiev and Georgiev2018) utilise 49 WordNet-based semantic similarity measures to build a noun-based semantic network of students’ and instructors’ conversations as given in the DTRS10 dataset. They plot the average semantic similarity, information content, polysemy and level of abstraction w.r.t. time for characterising the design communication. Casakin and Georgiev (Reference Casakin and Georgiev2021) train regression models to establish a relationship between these network properties and the following metrics of design outcomes: originality, feasibility, usability, creativity and value.

Summary

As we summarise in Table 4, the NLP applications built using discourse transcripts are limited in comparison with other types of text sources due to the least accessibility and information content. While emails do not strictly qualify as ‘transcriptions’ of design communication, the currently available data sources are mainly DTRS datasets. Scholars could additionally explore panel discussions, protocol studies and client interactions (e.g., architect and customer). The accessibility to such sources is crucial for the development of NLP applications regarding discourse transcripts.

Table 4. Summary of NLP methodologies and future possibilities (bolded) with discourse transcripts

Beyond the likelihood of obtaining one or more of these sources, the probability of extracting meaningful design knowledge from these is quite limited. For instance, the usage of frequent colloquial phrases like ‘sort of too big’ limits the possibility of applying NLP methods to these (Glock Reference Glock2009). Moreover, a transcription, unlike any text document, involves a timestamp associated with its parts. Several factors such as lack of context, poor grammar, colloquial language and time variation are beyond what state-of-the-art NLP could handle. Scholars could still conduct preliminary analyses as they have done so far in terms of segment identification and topic discovery. Such analyses could also be benefitted from common-sense language models because verbal communication involves many common-sense terms. If required, scholars may also utilise domain-specific ontologies to recognise the domain terms in their analyses.

3.4. Technical publications

Technical publications include over 92 million patents and a portion of over 174 million records that comprise textbooks, journal articles and conference proceedings.Footnote 28 Due to their coverage, size and accessibility, these sources carry a significant advantage over other sources in terms of knowledge aids for the design process. Moreover, since these sources are peer-reviewed and adhere to grammar and typographical norms, NLP tasks are well-suited to these (Wang, Lu, and Loh Reference Wang, Lu and Loh2015).

Patent documents

As mentioned in section ‘Design knowledge retrieval’, design knowledge that is extracted from the text could be in the form of terms, phrases and segments, that should represent one or more constituents of an artefact that is relevant to the design process. Moreover, if re-represented, such terms, phrases and segments of text must assume the form <entity, relationship, entity> to store these in a machine-readable form. Being a large body of technical inventions, patents offer a rich source of design knowledge that is also characterised by high information content, quality and technicality.

To extract design knowledge from patents, scholars have primarily utilised ontologies to channel their extraction approaches. To extract issue-related concepts and relationships (noun–noun, noun–adjectives), using a WordNet-based similarity, Liu et al. (Reference Liu, Liang, Kwong and Lee2010, pp. 4, 5) compare sentences in 46 patent abstracts against an ontology (list of terms) of issues, disadvantages and challenges. Moehrle and Gerken (Reference Moehrle and Gerken2012) use a domain ontology to extract bigrams and trigrams from 522 patents of SUBARU’s four-wheel drive. They use the terms thus extracted to measure patent–patent similarity using a variety of measures (2012, p. 817) such as Jaccard, Inclusion, Cosine and DSS-Jaccard.

Liang et al. (Reference Liang, Liu, Kwong and Lee2012) adopt a sentence graph approach and Issue-Solution-Artefact ontology to extract design rationale from 18,920 Inkjet Printer patents that were assigned to Hewlett–Packard (HP) and Epson. Using a similar dataset of inkjet printer patents, Liang et al. (Reference Liang, Liu, Chen and Jiang2018) develop the topic-sensitive content extraction (TSCE) model and verify the model by testing the effect of segment length, parameters, sample count and topic count. Fantoni et al. (Reference Fantoni, Apreda, Dell’Orletta and Monge2013) propose a heuristic approach to extract the terms that correspond to functions, behaviours and structures (FBS ontology) from patents. In function, for example, they consider the frequent combinations of verb–noun and verb–object.

To discover the structural form assumed by a collection of patents, Fu et al. (Reference Fu, Cagan, Kotovsky and Wood2013a) perform LSA on 100 randomly selected US patent documents. They consider only verbs (functions) and only nouns (surfaces) to perform two different LSAs and thereby obtain corresponding patent vectors. Using the cosine similarities, they discover the most optimal structural form – hierarchy using which they construct a patent network. They also label the clusters of patents with the closest terms (verbs or nouns).

Upon training the abstracts of 500,000 patents (CPC-F subsection) using Word2Vec, Hao, Zhao, and Yan (Reference Hao, Zhao and Yan2017) obtain the embeddings of 1700 function terms (e.g., grill, cascade) that are given by Murphy et al. (Reference Murphy, Fu, Otto, Yang, Jensen and Wood2014). They obtain a patent vector as a circular convolution (![]() $ \otimes $) of function terms that are present in the corresponding patent abstract. To support efficient retrieval of patent images, Atherton et al. (Reference Atherton, Jiang, Harrison and Malizia2018, pp. 247, 248) annotate images in USPTO with geometric features and functional interactions extracted from claims. Song and Fu (Reference Song and Fu2019) obtain three patent-word matrices using 1060 patents and three sets of words corresponding to components, behaviours and materials. They apply a nonnegative matrix factorisation algorithm to these matrices to extract significant topics.

$ \otimes $) of function terms that are present in the corresponding patent abstract. To support efficient retrieval of patent images, Atherton et al. (Reference Atherton, Jiang, Harrison and Malizia2018, pp. 247, 248) annotate images in USPTO with geometric features and functional interactions extracted from claims. Song and Fu (Reference Song and Fu2019) obtain three patent-word matrices using 1060 patents and three sets of words corresponding to components, behaviours and materials. They apply a nonnegative matrix factorisation algorithm to these matrices to extract significant topics.

While patents offer design knowledge in specific domains, due to the totality of technology space covered by the patent database, scholars have also attempted to construct WordNet-equivalent lexicon as well as engineering ontologies. Vandevenne et al. (Reference Vandevenne, Verhaegen, Dewulf and Duflou2016, p. 86) analyse titles and abstracts in a randomly drawn set of 155,000 patents from the EPO databaseFootnote 29 to discover that nouns are abstract (e.g., system, device) and are meaning enablers (e.g., temperature, pressure) that also point towards the product (e.g., valve, display).

To identify the primary users of technological inventions that are documented as patents, Chiarello et al. (Reference Chiarello, Cimino, Fantoni and Dell’Orletta2018) extract a generic list of users in terms of job positions, sports, hobbies, animals, patients and others. They identify these generic users in selected patentsFootnote 30 using a semi-automatic approach and annotate the sentences using these. They then feed the annotated dataset of sentences into SVM and multilayer perceptron for named entity recognition (NER).

Sarica, Luo, and Wood (Reference Sarica, Luo and Wood2020) obtain embeddings of over 4 million unique terms from the titles and abstracts across the US patent database. Using a web-based tool called TechNet,Footnote 31 they facilitate a search for these terms (Sarica et al. Reference Sarica, Song, Luo and Wood2021) and utilise the embeddings of these to construct a similarity network (Sarica and Luo Reference Sarica and Luo2021). To create an engineering alternative to WordNet, Jang, Jeong, and Yoon (Reference Jang, Jeong and Yoon2021) collect 34,823 automotive patents (IPC B60). They examine the dependency patterns in abstracts and claim to extract dependency relations that form the TechWord network. For the words in the network, they create TechSynset by capturing the WordNet synonyms and calculating the cosine similarity between BERT-based embeddings of individual pairs.

Scholars have demonstrated how patents could act as stimuli for generating concepts as well as indirect supports for problem-solving through TRIZ-based tools (Cascini and Rissone Reference Cascini and Rissone2004; Vincent et al. Reference Vincent, Bogatyreva, Bogatyrev, Bowyer and Pahl2006; Zanni-Merk, Cavallucci, and Rousselot Reference Zanni-Merk, Cavallucci and Rousselot2009; Prickett and Aparicio Reference Prickett and Aparicio2012). In the effort to discover patent network structures, Fu et al. (Reference Fu, Cagan, Kotovsky and Wood2013a) include a design problem in their LSAs to identify a starting point for navigating the patent network. Given a starting point in the network, Fu et al. (Reference Fu, Chan, Cagan, Kotovsky, Schunn and Wood2013b) consider patents at one and three hops as ‘near’ and ‘far’, respectively. They examine the effect of ‘near’ and ‘far’ patents on novelty and quality when these patents are given stimuli alongside the design problem.

To support patent retrieval, Murphy et al. (Reference Murphy, Fu, Otto, Yang, Jensen and Wood2014) adopt a Zipfian statistic approach to extract 1700 function (verbs) terms from 65,000 patent documents and organise these into primary, secondary and tertiary w.r.t. the functional basis (Stone and Wood Reference Stone and Wood2000). They index 2,75,000 patents using these functions that also act as query elements. To map design problems and patents via the Functional Basis, Longfan et al. (Reference Longfan, Yana, Yan and Cavallucci2020) train a semi-supervised learning algorithm based on Naïve Bayes and E-M algorithm using 1666 patents and the texts labelled with function categories. In another approach, they extract meaningful terms from patents using a frequency-based statistic (2020, p. 8) and cluster the patents according to the terms.

Although several approaches to searching and managing patents exist (Russo and Montecchi Reference Russo and Montecchi2011; Montecchi, Russo, and Liu Reference Montecchi, Russo and Liu2013; Dirnberger Reference Dirnberger2016), it is necessary to simplify the patent documents before utilising these as stimuli for generating concepts. To form keyword summaries of patent search results, Noh, Jo, and Lee (Reference Noh, Jo and Lee2015) conduct an experimental study to find that it is best to extract 130 keywords from abstracts using TF–IDF and Boolean expression strategies.

Sarica et al (Reference Sarica, Song, Luo and Wood2021) propose TechNet (Sarica, Luo, and Wood Reference Sarica, Luo and Wood2020) as a means to search and expand technical terms, which were extracted from the titles and abstracts in the patent database. To facilitate cross-domain term retrieval, Luo, Sarica, and Wood (Reference Luo, Sarica and Wood2021) organise the output of TechNet into various domains that are associated with a knowledge distance measure. Souza, Meireles, and Almeida (Reference Souza, Meireles and Almeida2021) train an LSTM-based sequence-to-sequence (abstract-title ➔ summary) neural network using 7000 patents for generating abstract summaries of patent documents. They group the summaries thus generated using a semantic similarity measure (Al-Natsheh et al. Reference Al-Natsheh, Martinet, Muhlenbach and Zighed2017) and subsequently identify patent clusters.

Patents not only document technological inventions but are also assigned to specific domains, companies, inventors and countries. Using such meta-data, scholars have developed technology maps for exploring design opportunities. Jin, Jeong, and Yoon (Reference Jin, Jeong and Yoon2015) extract meaningful terms from patents and use bag-of-words (BOW) approach to create patent vectors that form a technology map. Trappey et al. (Reference Trappey, Trappey, Peng, Lin and Wang2014) adopt a similar approach to patents and clinical reports that concern dental implants. Altuntas, Erdogan, and Dereli (Reference Altuntas, Erdogan and Dereli2020) use the same dental implant patents and obtain vectors of these using the patent-class matrix. They cluster the patent vectors using the following methods: E-M algorithm, self-organising map and density-based method.

To explore new design opportunities as well as to aid in idea generation, Luo, Yan, and Wood (Reference Luo, Yan and Wood2017) develop a technology space map using all CPC 3-digit classes and the co-citation proximity measures among these. They implement the map using support called InnoGPSFootnote 32 which provides several interactive features that are analogous to Google Maps. The support tool mainly allows the users to position themselves on the technology map, identify the closest domains and navigate the technology space map. Luo et al. (Reference Luo, Song, Blessing and Wood2018) conduct an experimental study to demonstrate how the total technology space map is useful for exploring ‘white space’ design opportunities related to artificial neural networks and spherical rolling robots.

To identify new technology opportunities relating to carbon-fibre heating fabric, Russo, Spreafico, and Precorvi (Reference Russo, Spreafico and Precorvi2020) download 16,743 patents and extract Subject–Action–Object triples where the Subject is ‘heating fibre’. Assuming that Action represents a function, they mine dependency patterns to extract applications (e.g., ‘applied as’, ‘used for’) and requirements (e.g., ‘enhance…’, ‘un…ability’) pertinent to the heating fibre technology. To explore new technology opportunities using products, Lee et al. (Reference Lee, Yoon, Kim, Kim, Kim, So and Kang2020) use the patent–product databaseFootnote 33 developed at the Korea Institute of Science and Technology Information (KISTI). They extract Word2Vec embeddings for products and technologies to create an exploration map that allows the identification of technologies closer to products and vice-versa. They also propose 10 indices to assess the performance of technology exploration.

To identify technology opportunities in 3G that could be leveraged in 4G, Zhang and Yu (Reference Zhang and Yu2020) extract effect phrases from the corresponding patents using a Bi-LSTM with a conditional random field layer. They label the words in the sentences using {Begin, Inside, Other} of an effect phrase and feed the labelled data into the neural network. They combine the effect phrases in a patent using a weighted TF-IDF vector and use topic clustering to group the patents. Depending on the number of patents on each topic, they calculate the technology opportunity score (2020, pp. 560, 561).

Textbooks and handbooks

Several design studies support the notion that exploring concepts from distant domains could lead to novel design solutions. Adhering to this consensus, Shu and colleagues have conducted analyses on a biological textbook (Purves et al. Reference Purves, Sadava, Orians and Heller2003) to understand the characteristics that support bio-inspiration. Shu (Reference Shu2010, p. 510) understands that the textbook includes candidates for design-by-analogy, for example, (‘bacteria’, ‘fill’, ‘pores of clothes’) ➔ ‘prevent dirt’. Cheong et al. (Reference Cheong, Chiu, Shu, Stone and McAdams2011, pp. 4, 5) identify that in the text, domain and common verbs co-occur, for example, ‘received and converted or transduced’.

To capture causally related biological functions, Cheong and Shu (Reference Cheong and Shu2014, pp. 1–4) locate and extract pairs of enabler-enabled functions using syntactic rules, for example, ‘Lysozymes destroy bacteria to protect animals’. Upon searching in the same textbook, Lee, Mcadams, and Morris (Reference Lee, Mcadams and Morris2017, pp. 5–7) identify morphological nouns that co-occur with the keywords in a single paragraph. For every noun, they calculate a modified TF-IDF metric (2017, pp. 5, 6) for usage in LSA.

The following articles describe approaches to extracting design knowledge from published handbooks. Hsieh et al. (Reference Hsieh, Lin, Chi, Chou and Lin2011) mine the Table of Contents, Definitions and Index from an Earthquake Engineering Handbook to develop a domain ontology. Kestel et al. (Reference Kestel, Kuegler, Zirngibl, Schleich and Wartzack2019) apply several text mining steps to the published document that describes the standard procedure for simulation of multibolted joints (VDI 2230 Part 2). They extract structured data with specific attributes (e.g., part, contact, load, relation) from the text and utilise these to build ontologies that are integrated with finite element analysis (FEA) tools.

Richter, Ng, and Fallah (Reference Richter, Ng and Fallah2019) obtain the design standards and guidelines for landfilling in different provinces of Canada. They conduct word-frequency analyses using metrics such as Gunning-Fox Index and Lexical Density. Xu and Cai (Reference Xu and Cai2021) mine 300 sentences from the underground utility accommodation policies in the departments of transportation such as Indiana and Georgia. They use utility-product and spatial ontologies to process and label the terms in the sentences with seven categories (2021, p. 7). They examine the POS and category patterns in these sentences to extract hierarchical knowledge structures.

Scientific articles

Unlike patents and books, the overall motive behind processing scientific articles is unclear, mainly due to a limited number of contributions. We, therefore, review these contributions as follows by explicitly stating the purpose beforehand. To summarise engineering articles by discovering their micro-and macro-structures, Zhan, Liu, and Loh (Reference Zhan, Liu and Loh2011, pp. 5, 6) train Naïve Bayes and SVM classifiers by labelling 1425 sentences from 246 research articles into one of the four categories: background, contribution, methodology, results and conclusions.

To identify the sentences that could aid in bio-inspiration, Glier, McAdams, and Linsey (Reference Glier, McAdams and Linsey2014, pp. 5–7) represent sentences from five biology journals using a feature vector of 1869 terms and label these as ‘useful’ and ‘not useful’ for bio-inspiration. They feed the labelled dataset into the following classifiers: SVM, k-NN and Naïve Bayes. To build a bridge between biological and engineering domains and thus aid bio-inspiration, Vandevenne et al. (Reference Vandevenne, Verhaegen, Dewulf and Duflou2016, p. 82) map product and organism aspects upon processing 155,000 EPO patents and 8011 papers from the Journal of Experimental Biology.

To create a generic engineering ontology, Shi et al. (Reference Shi, Chen, Han and Childs2017, pp. 4–6) develop a large semantic network called B-Link by extracting and combining entities from technical websites and articles, respectively, using ScrapyFootnote 34 and Elsevier APIKey.Footnote 35 To understand the evolution of typology in design research, education and practise, Won and Park (Reference Won and Park2021) collect 222 termsFootnote 36 from over 300 documents that include design publications, abstracts and discover that these terms have evolved from being object-based to concept-based.

To understand the definitions of contemporary technologies such as Artificial Intelligence and Industry 4.0, Giordano, Chiarello, and Cervelli (Reference Giordano, Chiarello and Cervelli2021) identify these terms in the sentences of Elsevier-Scopus abstracts and filter the cases where the neighbour of these terms adhere to a pattern, for example, ‘defined as’, ‘refer to’ (2021, p. 10). They further analyse the frequencies of the constituents of these sentences so filtered. To understand the field of product-service systems (PSS), Rosa et al. (Reference Rosa, Wang, Stark and Rozenfeld2021) develop a concept map by analysing 29 articles relating to the design of PSS.

Summary

We have summarised the NLP contributions that use technical publications in Table 5. Due to high accessibility, information content, quality and technical density, technical publications have been quite popular sources for developing NLP applications. The methodologies have also adopted state-of-the-art NLP methods while also utilising domain ontologies wherever applicable. Therefore, a little could be commented about the potential gaps in these contributions.

Table 5. Summary of NLP methodologies and future possibilities (bolded) with technical publications

Scholars could invest more effort into scientific articles (including conference proceedings) as the literature on patent analyses is extant. In addition, scholars could also report more analyses on full texts of patent documents, as a majority of contributions are limited to titles, abstracts and claims. Scholars could leverage the wealth of knowledge in these sources to create ontologies and knowledge graphs both at the generic and domain-specific levels. As a part of knowledge graph extraction, relation extraction shall adopt a rule-based approach in patent documents as the language is consistent across the entire database. In scientific articles, however, relation extraction requires prior named entity recognition as well as relation label prediction algorithms. Scholars could also immix patent documents and scientific articles in a particular domain to develop a domain-specific graph extraction tool.

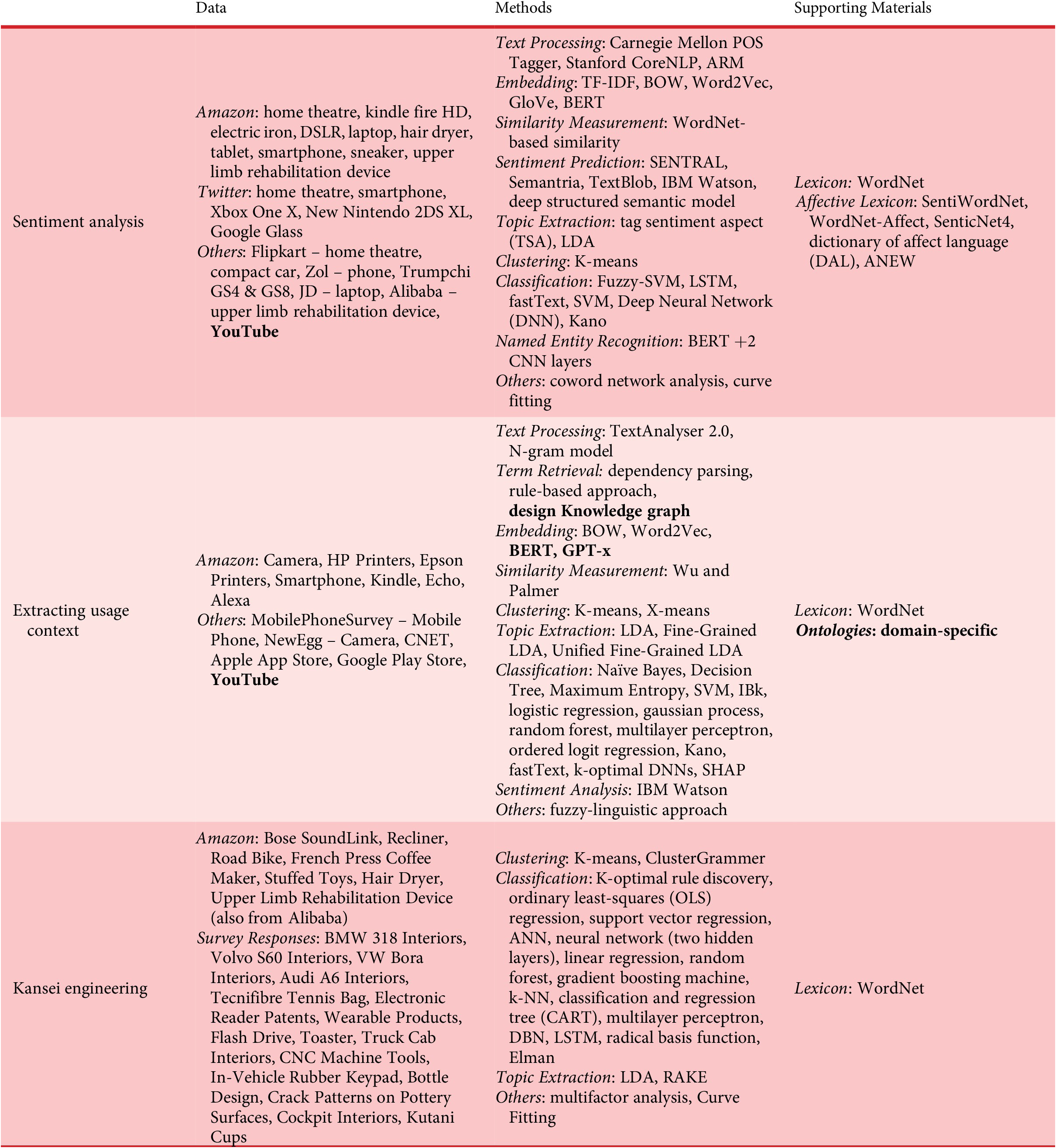

3.5. Consumer opinions

Available in plenty as a part of social media text and product reviews, consumer opinions are reflective of actual user experiences (Decker and Trusov Reference Decker and Trusov2010), product specifications, requirements and issues (Jin et al. Reference Jin, Liu, Ji and Kwong2019). Consumer opinions often include typographical errors (e.g., coooolll), alternative word forms (e.g., LOL), multilingual terms and grammatical errors. It is a challenge to remove symbols, hyperlinks, usernames, tags, artificially generated messages and misspelt words. Lim and Tucker (Reference Lim and Tucker2016, pp. 1, 2) posit that identifying product features in consumer opinions often involves challenges in term disambiguation (e.g., ‘researchers should really screen for this type of error’) and keyword recognition (‘… just as this court case is about to start, my iPhone battery is dying’).

To work around the above-mentioned issues, Tuarob and Tucker (Reference Tuarob and Tucker2015a) propose using Carnegie Mellon POS tagger that suits social media text. In addition, He et al. (Reference He, Camburn, Liu, Luo, Yang and Wood2019, p. 4) recommend using TextRazorFootnote 37 for identifying proper nouns like ‘Uber’ and ‘Manhattan’. While processing consumer opinions, Tuarob, Lim, and Tucker (Reference Tuarob, Lim and Tucker2018, p. 4) prefer not to perform stemming due to its negative effects on the performances of downstream NLP tasks. To improve the grammatical structure, Wang et al. (Reference Wang, Tian, Li, Wang, Barenji and Cheng2019a, pp. 456–458) suggest a few transformation rules, for example, sentence 1 (e.g., ‘very nice’) is prepended with subject and verb to obtain sentence 2 (e.g., ‘It is very nice’) if the former does not include these. In addition to these approaches to work around the issues with consumer opinions, scholars have incorporated distinct steps before performing sentiment analysis, capturing usage context and modelling user emotions.

Sentiment analysis

Sentiment analysis is an important application of NLP that uses ratings as well as an affective lexicon to determine the polarity and intensity of sentiment in a piece of text. The sentiment scores quantify the product favourability (Tuarob and Tucker Reference Tuarob and Tucker2015a, p. 5) and affective performances (Chang and Lee Reference Chang and Lee2018, pp. 450, 451). Obtaining true sentiment scores is often a challenge, given that 22.75% of a social media text is sarcastic (Tuarob, Lim, and Tucker Reference Tuarob, Lim and Tucker2018). In addition, Tuarob and Tucker (Reference Tuarob and Tucker2015b) identify that neutral words constitute over 53% and 48.6% of smartphone and automobile-related tweets. Since the sentiment score of a phrase may not often match that of a sentence, Chang and Lee (Reference Chang and Lee2018, p. 462) propose to adjust the sentiment score of a local context based on the polarity match with the whole sentence.

Sentiment analysis utilises product features (nouns) and sentiment indicators (adjectives, adverbs and verbs); for example, ‘The keyboard is fine but the keys are real slippery’ includes product features {keyboard, keys} and sentiment indicators {fine, slippery} (Tang et al. Reference Tang, Jin, Liu, Li and Zhang2019, pp. 1, 2). Sentiment analysis requires an affective lexicon like SentiWordnet (Baccianella, Esuli, and Sebastiani Reference Baccianella, Esuli and Sebastiani2010), Affective Space 2 (Cambria et al. 2015) and SenticNet 6 (Cambria et al. Reference Cambria, Li, Xing, Poria and Kwok2020). We review in the remainder of this section, the contributions that have conducted sentiment analyses on various design text sources.

Raghupathi et al. (Reference Raghupathi, Yannou, Farel and Poirson2015) compute sentiment scores of Home Theatre reviews from Twitter, Amazon and Flipkart using the SENTRAL algorithm and the dictionary of affect language (DAL). To predict sentiment scores, Zhou, Jiao, and Linsey (Reference Zhou, Jiao and Linsey2015, p. 4) feed a labelled dataset of Kindle Fire HD 7 reviews into the fuzzy-SVM algorithm along with a lexicon that is populated using ANEW (Bradley and Lang Reference Bradley and Lang1999). Jiang, Kwong, and Yung (Reference Jiang, Kwong and Yung2017, pp. 2, 4) extract nouns, adverbs, verbs and adjectives from electric iron reviews and utilise SentiWordNet (Baccianella, Esuli, and Sebastiani Reference Baccianella, Esuli and Sebastiani2010) to predict sentiment scores.

Zhou et al. (Reference Zhou, Jiao, Yang and Lei2017) compute sentiment scores of specific product features in Kindle Fire HD reviews using ANEW and classification based on a rough set. They augment the sentiment scores with a feature model that was constructed by extracting product features using ARM and combining these using WordNet-based similarity measures (e.g., Resnik, Leacock-Chodorow). Jiang et al. (Reference Jiang, Kwong, Park and Yu2018, p. 394) assess 1259 reviews of six compact cars using SemantriaFootnote 38 to obtain sentiment scores. Tuarob, Lim, and Tucker (Reference Tuarob, Lim and Tucker2018, pp. 6, 8) use TextBlobFootnote 39 to compute sentiment scores of tweets related to 27 smartphone models. They account for sarcasm using the analysis of a coword network, where nodes are ranked for likelihood, explicitness and relatedness.