Introduction

Health technology assessment (HTA) of tests differs from therapeutic interventions. One of the most important differences is that medical testing rarely improves health outcomes directly. Testing is usually part of a complex clinical pathway where test results guide treatment decisions, which include a variety of medical actions and processes. Medical tests have the potential to improve patient outcomes if improvements in accuracy (reductions in false positives and false negatives) are translated into more appropriate diagnoses (diagnostic yield) and more appropriate treatment (therapeutic yield). Treatment effectiveness ultimately determines the degree to which improvements in therapeutic yield will result in improved patient outcomes (Reference Fryback and Thornbury1). Medical tests may also improve patient outcomes by mechanisms other than accuracy. For example, tests may offer similar accuracy at reduced cost, simplify healthcare delivery thereby improving access, improve diagnostic confidence and improve diagnostic yield, reduce time to diagnosis, lower anxiety, reduce uncertainty or improve safety (Reference Ferrante di Ruffano, Hyde, McCaffery, Bossuyt and Deeks2).

Assessing test effectiveness, therefore, requires evaluation and comparison of complex test-treatment management strategies (Reference Bossuyt and Lijmer3–Reference Schünemann, Oxman and Brozek5). Direct (end-to-end) evidence of the effect of tests on patient outcomes as might be captured by a randomized controlled trial (RCT) is scarce (Reference Ferrante di Ruffano, Davenport, Eisinga, Hyde and Deeks6). In the absence of RCT evidence a “linked evidence approach” methodology has been advocated (Reference Merlin, Lehman, Hiller and Ryan7) to evaluate the clinical utility of medical tests using decision analytical modeling to integrate evidence (Reference Sutton, Cooper, Goodacre and Stevenson8) on each component of a test-treatment pathway.

The number of international HTA organizations with a remit including nonpharmacologic technologies has increased over the last decade (Reference Wilsdon and Serota9–Reference Garfield, Polisena and Spinner11). Concurrently, methodological research has defined the types of research required to evaluate medical tests (Reference Bossuyt, Irwig, Craig and Glasziou12;Reference Lijmer, Leeflang and Bossuyt13) and methods to undertake HTAs of tests have been developed. These include, the launch of the UK’s National Institute for Health and Care Excellence Diagnostics Assessment Programme (NICE DAP) in 2010 (14), and a programme of methods development for conducting HTAs of tests by the USA’s Agency for Healthcare Research and Quality (AHRQ) (15).

Existing reviews of international HTA methods have demonstrated that many organizations rely on established HTA systems set up for the evaluation of pharmaceuticals and apply these to tests with little or no modification (Reference Ciani, Wilcher and Blankart10;Reference Garfield, Polisena and Spinner11;Reference Oortwijn, Jansen and Baltussen16). Substantial variation in evidence requirements for HTA of tests across international organizations has also been noted (Reference Garfield, Polisena and Spinner11;Reference Shinkins, Yang, Abel and Fanshawe17). Reviews of UK National Institute of Health Research HTA reports (Reference Shinkins, Yang, Abel and Fanshawe17;Reference Novielli, Cooper, Abrams and Sutton18) have demonstrated that, whilst uptake of appropriate meta-analytic methods for test accuracy evidence has improved over the last decade, the extent to which these are incorporated in economic models remains variable, as do methods for ascertaining and using nontest accuracy evidence. No improvement was noted in consideration of the effects of threshold and dependency when evaluating test combinations (Reference Shinkins, Yang, Abel and Fanshawe17).

We are not aware of any reviews that have sought to summarize the methods used by HTA organizations to perform HTAs of tests. Mapping the extent, features, and variation in approach to HTA of tests is key to identifying and sharing good practice, preventing duplication of effort, and to informing an international research agenda.

Drawing on methods already used to identify and map international HTA activity for pharmaceuticals and medical devices (Reference Ciani, Wilcher and Blankart10;Reference Oortwijn, Jansen and Baltussen16) the aims of this review were to identify which international HTA agencies are undertaking evaluations of medical tests, summarize commonalities and differences in methodological approach, highlight examples of good practice as well as areas requiring methodological development.

Methods

We performed a descriptive analysis of publicly available guidance documents of international HTA organizations who evaluate medical tests. For organizations providing detailed test-specific methods guidance, this consisted of a thorough examination of methods and process documents. This was complemented by a shorter systematic interrogation of methods guides for all other organizations to identify additional innovative methods for HTAs of tests.

Identifying HTA Organizations

Organizations were identified through 5 international members lists: International Network of Agencies for Health Technology Assessment (INAHTA), Health Technology Assessment International, Red de Evaluación de Tecnologías Sanitarias de las Américas, Health Technology Assessment Asia, and European Network for Health Technology Assessment (EUnetHTA) (as of April 2020), by cross-reference to organizations included in two previous HTA surveys of medical devices (Reference Ciani, Wilcher and Blankart10) and surrogate outcomes (Reference Grigore, Ciani and Dams19), and through expert recommendations provided by the project’s advisory group. Organizations were eligible if active, or closed within the last 3 years, organized HTA at a national level, defined by the World Health Organization (WHO) as “institutionalized HTA” (20), and included diagnostic tests within their remit of health technologies. To determine eligibility, the role and activities of each organization were assessed using three sources of information: (i) information about each organization on HTA network Web sites (e.g., INAHTA); (ii) HTA organization’s Web site, generally in an “About Us” section or similar; and if both these approaches were unsuccessful; and (iii) by web searches using the organization’s name.

We excluded organizations whose remit did not include medical tests (e.g., All Wales Therapeutic and Toxicology Centre), but included agencies whose remit was less clearly stated. Organizations focussing entirely on evaluating population screening programmes were also excluded (e.g., UK National Screening Committee). We also excluded private research institutes, commercial organizations (e.g., manufacturers of health technologies), patient organizations, and university departments or other research groups undertaking HTA methods research, or producing HTAs not commissioned by an HTA organization.

Identifying Methods and Process Guides

Initially, documents were eligible for examination if they were easily located from an organization’s Web site and had the explicit aim of providing methodological guidance to reviewers undertaking HTAs for the organization. We excluded methodology papers or journal articles unless they were clearly identified as representing the HTA organization’s current methodology guidance. No language restrictions were applied. We restricted our examination to an organization’s most current version of methods guidance.

Web sites of included organizations were searched between March and July 2020 by visual inspection to locate relevant menus or page sections (e.g., “our methods”; “processes”). An automatic text translator was used for non-English language Web sites. If this process was unsuccessful, we used embedded search boxes with text strings: “guide,” “methods,” “methodology,” “process,” and “HTA.” We allocated a maximum of 1 hr searching time per HTA organization to find relevant documents.

Selecting Organizations with Detailed Test-Specific Guidance

Our pragmatic approach aimed to focus on a detailed examination of organizations that were likely to have the most thorough methods guidance for carrying out HTAs of tests. We identified all organizations that had at least one methods chapter specific to test evaluation. From this sample, we identified eligible organizations as those with the most detailed and informative methods guides and obtained any additional process documentation available on their Web sites.

Extraction and Analysis

We collected data in two stages. In Stage 1 we performed an in-depth examination of selected organizations’ process and methods documents. All description relating to diagnostic tests was extracted verbatim into a piloted extraction form in the following domains: topic referral, prioritization, and scoping processes (preevidence review); identification, selection, appraisal, and synthesis of evidence for clinical effectiveness reviews; methods for health economics evaluations; developing evidence into guidance; and stakeholder processes. A copy of the extraction form is provided in Supplementary File 1.

Each organization was extracted independently by at least two researchers. Succinct summaries of each organization’s approach were sent to a representative of each agency to validate and ensure the accuracy of our understanding. Responses were collated and discussed for inclusion. Six of seven organizations contacted agreed to have their responses published as part of our data extraction (Supplementary File 2). Approach to HTA of tests was summarized in the form of concordant and discordant themes across organizations and by in-depth discussion at team meetings. Examples of good practice were loosely defined as the most informative and useful methods for conducting any aspect of an HTA for evaluating tests, identified and mutually agreed through in-depth discussion.

In Stage 2, we performed a rapid interrogation of the methods guides from organizations without detailed HTA test methods guidance using the key themes generated in Stage 1. Methods documents were interrogated using common test-related terms, including “diagn,” “test,” “screen,” and “detect.” For documents containing at least one test-related term, documents were assessed for presence and quantity of reporting for each theme. Duplicate independent extraction of Stage 2 organizations was performed when test-related terms were present in the absence of test-specific methods, and to resolve extraction queries. All data are presented descriptively.

Results

Of 216 unique organizations, we included 41 with a remit for test evaluation and identified publicly available methods guidance (Figure 1). Experts recommended two non-HTA organizations (Grading of Recommendations, Assessment, Development and Evaluations [GRADE] (21) and WHO (22)) on the basis that they might provide important methodological innovations. The included organizations are listed in Supplementary Table 1.

Figure 1. Identification of international HTA organizations and documentation.

Stage 1: Key Organizations with Dedicated Sections on Test-Specific Methods

We identified 10 organizations with test-specific guidance sections. Three of these were not considered in Stage 1 but rather in our analysis of organizations in Stage 2. The reason for this for two organizations was that test-specific sections were limited to an overview of test accuracy challenges (Gesundheit Österreich GmbH [GOG] and Agency for Care Effectiveness [ACE]). The EUnetHTA Core Model (23) was also deferred to Stage 2 because it is an international collaboration for development and dissemination of standardized methods for HTA (24) rather than an organization undertaking HTA for a particular country and so strictly speaking not eligible.

The seven key organizations with test specific guidance sections were: AHRQ (15), Canadian Agency for Drugs and Technology in Health (CADTH) (25), Institut für Qualität und Wirtschaftlichkeit im Gesundheitswesen (IQWIG) (26), Medical Services Advisory Committee (MSAC) (27), NICE DAP (14), Statens beredning för medicinsk och social utärdering (SBU) (28), and Zorginstituut Nederland (ZIN) (29). Supplementary Table 2 summarizes the remit, capacity, and sources we used for these seven organizations. In addition, representatives from six of seven included organizations provided feedback on our methods summaries (Supplementary File 2). Methods guides were last updated between December 2011 (NICE DAP) and May 2021 (MSAC), including three during the project (MSAC, IQWiG, SBU). Three organizations produced test-specific methods guides (NICE DAP, AHRQ, ZIN), one a guide for nonpharmacologic interventions (MSAC), and three test-specific guidance as chapters and/or dedicated appendices within generic HTA methods guidance documents (SBU, IQWiG, CADTH). Although CADTH do perform clinical effectiveness reviews, their publicly available methods guide is for health economic evaluation only.

Thematic Analysis Findings

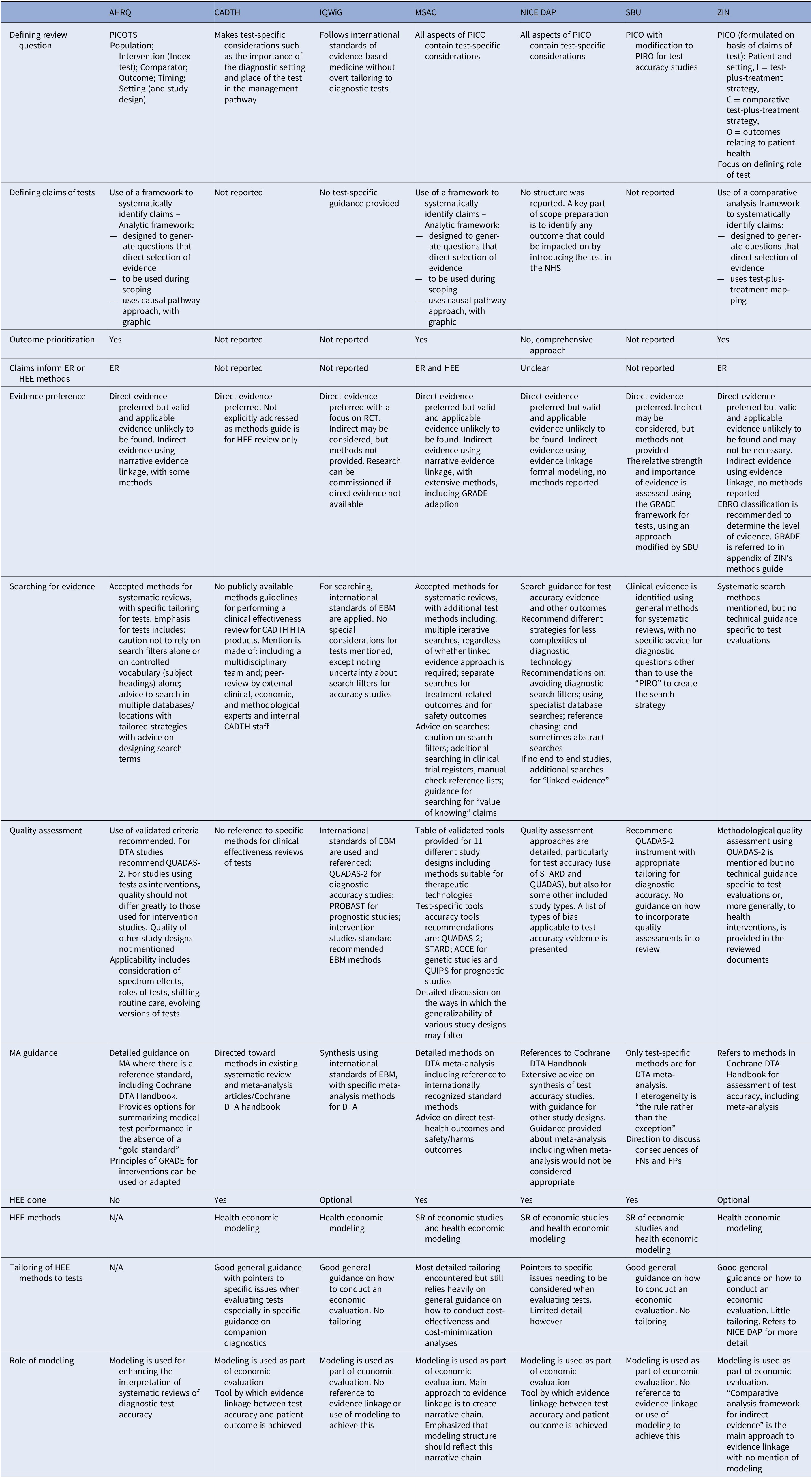

A summary of findings for Stage 1 organizations is provided in Table 1 and elaborated below.

Table 1. Summary of information on main themes in each key organization

ACCE, analytic validity, clinical validity, clinical utility and associated ethical, legal, and social implications; AHRQ, Agency for healthcare research and quality; CADTH, Canadian agency for drugs and technology in health; DTA, diagnostic test accuracy; EBM, evidence-based medicine; EBRO, evidence-based guideline development in the Netherlands; ER, evidence review; FN, false negative; FP, false positive; GRADE, Grading of recommendations, assessment, development and evaluations; HEE, health economic evaluation; HTA, health technology assessment; IQWiG, Institut für Qualität und Wirtschaftlichkeit im Gesundheitswesen; MA, meta-analysis; MSAC, Medical services advisory committee; N/A, not applicable; NICE DAP, National institute for health and care excellence diagnostics assessment programme; PICO, population, intervention, comparator, outcome; PIRO, population, intervention, reference standard, outcome; PROBAST, prediction model risk of bias assessment tool; PROSPERO, international prospective register of systematic reviews; QUADAS, quality assessment of diagnostic accuracy studies; RCT, randomized controlled trial; SBU, Statens beredning för medicinsk och social utärdering; STARD, standards for reporting of diagnostic accuracy; ZIN, Zorginstituut Nederland.

Claims of Tests

Establishing how a study test might be clinically effective is discussed to varying degrees by all organizations, who share the same philosophy of the primacy of patient health outcomes, and that tests impact these outcomes mainly indirectly through the consequences of test results. A shared key principle arising from this philosophy is that evidence of accuracy is on its own insufficient to demonstrate the test’s clinical effectiveness, since test results must also demonstrate a change to patient management, which is shown to change health outcomes.

The organizations differ, however, in how the claims of tests are used to guide the HTA process (Figure 2). Both AHRQ and MSAC use a structure to construct the claims of a test in a systematic manner, which is achieved using a graphic to map out the main components of patient care from testing to health outcomes, for each comparative management pathway. Both are adaptations of the USPSTF’s analytic framework (30), where a causal pathway approach is used to identify key differences in the care a patient will receive as a result of using different tests, which in turn generates review questions that direct the selection of evidence. ZIN use a “comparative analysis framework,” which employs a similar approach of mapping out “test-plus-treatment” pathways, and discerning important differences and consequences to patient health as a result of changing the test (ZIN).

Figure 2. Stage 1 organization approaches to defining the way in which a diagnostic test claims to impact on patient health. Green cells identify the majority view, gray cells indicate uncertainty in approach, and blue cells illustrate specifically reported approaches to identifying and using test claims in the HTA process. ER, effectiveness review; HEE, health economics evaluation; HTA, health technology assessment.

AHRQ, MSAC, and ZIN use the claims framework to identify and prioritize which outcomes should be evaluated as part of the HTA, and as such this process forms a central part of the scoping phase for each organization. MSAC provides detailed example frameworks with associated review questions, organized according to a categorization of claims, as well as conditions when it is appropriate to truncate the framework for a claim of noninferiority (no difference in health outcomes). Further detailed guidance is also given for different diagnostic comparisons (i.e., replacement, add-on, triage), as well as for various types of test, for example, multifactorial algorithms and machine learning/artificial intelligence tests.

NICE DAP conduct a thorough scoping process, in which outcomes are identified using pathways analysis and extensive stakeholder consultation, however, they do not actively prioritize those outcomes. Outcomes prioritization was not discussed by the remaining organizations (SBU, CADTH, IQWiG), however, all seven organizations use test-specific adaptations of the Population Intervention Comparator Outcome (PICO) structure, emphasizing test comparison, and all cite some additional requirements for tests, most commonly the role of the study test within existing care pathway. All organizations involve multiple stakeholders groups during question formulation, including clinical experts and patient/user representatives.

The degree to which the type of claim informs the method by which it is evaluated in an HTA was also found to vary, with an integrated approach described by ZIN, AHRQ and most clearly and thoroughly by MSAC. In all three, claims underlie the search for, selection and interpretation of evidence; AHRQ for example uses claims to inform quality assessment, particularly applicability, while organizations in whom claims do not appear to inform methods tend to use the PICO for this purpose (NICE DAP, SBU). For MSAC, a test’s claims underpin all subsequent HTA methods, from searching through to analysis, synthesis, and health economics. To achieve this, MSAC have developed a “hierarchy of claims” (MSAC, pp. 26–7), in which a health claim must always be made (that the “new” test results in superior or non-inferior patient health) but which also allows for claims of noninferiority to be accompanied by additional “nonhealth” claims, which MSAC terms “value of knowing” (MSAC, p. 18). The principal claim should relate to health, and although this is explicitly derived from the expected comparative accuracy of tests, methods to demonstrate noninferiority in test accuracy are not provided. We did note that MSAC’s advice for analyzing comparative accuracy was removed from their 2017 guidance (31).

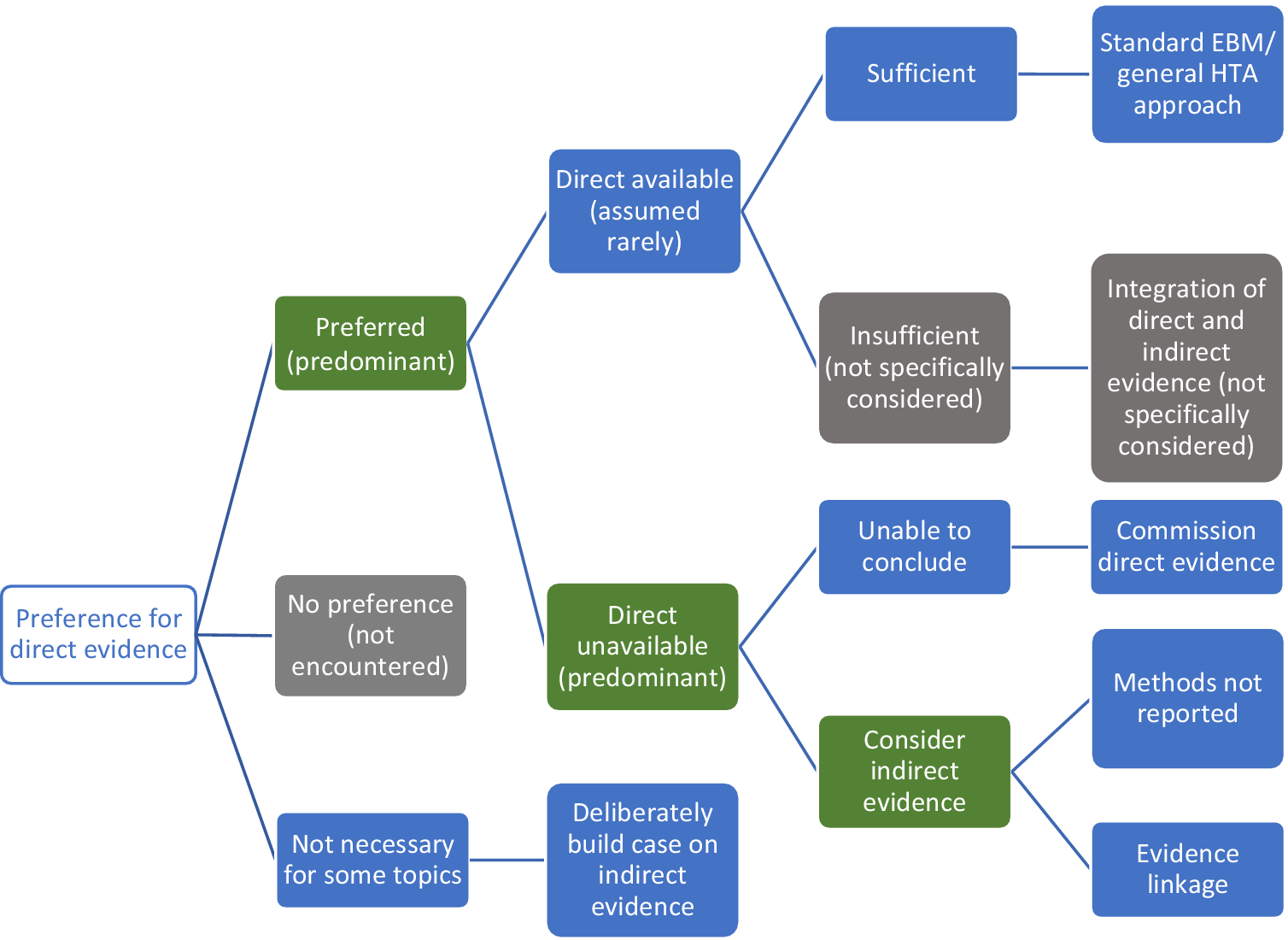

Direct and Indirect Evidence for the Clinical Effectiveness of Tests

Figure 3 summarizes the approaches Stage 1 organizations advocated for using direct and/or indirect evidence to investigate the effectiveness of diagnostic tests. All seven organizations state a clear preference for “direct” evidence, also referred to as “end-to-end” evidence (NICE DAP), “head-to-head effectiveness” (AHRQ) or “direct test to health outcomes evidence” (MSAC). This was consistently defined as comparisons of patient health outcomes following differing tests or testing strategies within a single primary study. The most common example given is of RCTs that randomize patients between tests and follow them through subsequent clinical management, after which health outcomes are measured.

Figure 3. Stage 1 organization approaches to using direct and indirect evidence to evaluate the effectiveness of diagnostic tests. Green cells identify the majority view, gray cells are not specifically mentioned but are plausible approaches, and blue cells illustrate specifically reported approaches to using direct and indirect evidence.

We found little test-specific guidance for how to assess and synthesize direct evidence. The most common recommendation is to follow international EBM methods for appraising and synthesizing RCT evidence of treatment interventions (AHRQ, MSAC, ZIN, IQWiG, NICE DAP), including the use of GRADE to assess the certainty/strength of the body of evidence (MSAC, SBU, AHRQ, ZIN). MSAC do supplement this treatment intervention method with three key additional components: examining the applicability of direct evidence, for which detailed guidance is given; a requirement to report how direct test-health outcomes evidence has been constructed; and the need to present evidence of test-related harms (both direct procedural harms and harms following subsequent management decisions) as a separate section of synthesis (MSAC, pp. 99–115).

There was a majority view that relying on direct evidence to inform an HTA is likely to be problematic, since these “test-plus-treatment RCTs” are uncommon (NICE DAP, MSAC, AHRQ, SBU, ZIN) and have particular challenges to their reliability and generalizability (AHRQ, MSAC, ZIN).

Handling Indirect Evidence

There does not appear to be a clear consensus on how to proceed when direct evidence is unavailable, insufficient, or unnecessary (Figure 3). Some organizations suggest that their main approach is to use direct evidence (IQWiG, SBU), and commission primary research when it is not available (IQWiG). Both SBU and IQWiG suggest they may also use “indirect” evidence, such as evidence of a test’s accuracy (or any other outcome that is intermediate between a test and health outcome), however, neither organization’s guidance provides explicit methods for how this should be used to determine clinical effectiveness.

The main alternative view to the use of direct evidence is “evidence linkage” (NICE DAP, AHRQ, MSAC, ZIN): test accuracy evidence (the proportion of individuals correctly classified as true positive and true negative) is linked with effectiveness evidence (the effects of appropriate treatment) and compared across different test-treatment strategies to provide an estimate of the likely overall effect of the test on a health outcome.

Two types of evidence linkage are identified, narrative and formal modeling. MSAC provides a detailed explanation of how to create a narrative chain of argument showing how different bodies of evidence confirm or challenge the likelihood that improvements in accuracy will lead to changes in clinical management and subsequently clinical outcomes. For MSAC, AHRQ, and ZIN, methods to select and assess indirect evidence are explicitly linked to a test’s claims, which enables an explicit examination of the adequacy of “links” between different types of evidence, such as between a test accuracy study and an impact to clinical decision-making study. This “transitivity” (MSAC) or “transferability” (AHRQ) of evidence forms a key method in both organizations, to which AHRQ add the recommendation to apply GRADE to each of these links in addition to grading the overall body of evidence, although they identify a lack of methods for doing so. MSAC outline specific adjustments to the standard GRADE approach for each of their three prespecified evidence links. ZIN provide an accessible stepped process for developing and conducting a comparative analysis (ZIN, pp. 28–9), while MSAC also present useful suggestions for producing an informative narrative synthesis, including explaining the summative benefits and harms for a hypothetical population from the point of undergoing testing.

The alternative approach to narrative evidence linkage is formal modeling of links between accuracy and effectiveness evidence (NICE DAP). There is limited detail on how this might be achieved and it is implied that methods for economic modeling, such as decision-analytic modeling, can be simply extended to create a linked evidence model of effectiveness and cost-effectiveness.

Aside from the narrative summary guidance provided by MSAC, methods for synthesizing indirect evidence were exclusively restricted to test accuracy meta-analysis (NICE DAP, SBU, IQWiG, CADTH, AHRQ). We also did not find reference to specific methods for judging the adequacy of linked cases, beyond notions of applicability, including how to deal with inconsistencies in the evidence base between different study types. Similarly, we did not find guidance for how to resolve inconsistencies in findings between direct and indirect evidence, nor how to assess the strength of such a mixed evidence base.

Searching for Evidence

All seven organizations acknowledge the importance of conducting comprehensive and systematic searches and presenting the results in a clear and transparent way. MSAC, NICE DAP, and AHRQ state that main databases and trial registries (e.g., MEDLINE, EMBASE), should be supplemented with other databases, reference checking, and contacting experts to identify additional titles and unpublished data. Some differences were noted. There is diversity concerning use of diagnostic search filters with NICE DAP, IQWiG, AHRQ, and MSAC advising caution against use, whilst AHRQ recommend further investigation of the option. Concerning search strategy NICE DAP recommend a 2-step approach first searching for direct evidence and then, if such evidence is not available or sufficient, conducting additional searches to inform a linked evidence analysis. MSAC set out a more iterative approach including the need for multiple searches depending on the test’s health claim and availability of direct “test-health outcomes” evidence. Notable additions include systematic and targeted searches to supplement direct evidence when it is found to be insufficient to answer the review question, to: assess the safety of tests; address each evidential link identified by the assessment framework, including separate searches for each change in management occurring as a result of the test; or to find evidence for exploring any “value of knowing” claims.

Quality Assessment

The importance of assessing the risk of bias (internal validity) and applicability (external validity) of the identified evidence in HTAs of tests is acknowledged by all key organizations with most focus being on the approach for test accuracy. The level of guidance provided varies. Risk of bias should be assessed using validated tools, most commonly (AHRQ, IQWiG, MSAC, NICE DAP, SBU, ZIN) Quality Assessment on Diagnostic Accuracy Studies-2 (QUADAS-2) is recommended for assessing diagnostic accuracy studies. NICE DAP and MSAC also suggest the use of STARD, without discussing the advantages and disadvantages of this approach. MSAC provides a valuable inventory of quality assessment tools for a range of different study designs, including RCTs and observational studies (MSAC, pp. 251–3), some of which are also recommended by other key organizations. For designs other than accuracy, the general assumption is that no adaptation of tools is required when the focus is tests, as in the case of RCT: “For trials of tests with clinical outcomes, criteria should not differ greatly from those used for rating the quality of intervention studies” (AHRQ). All key organizations recommend assessing the applicability of evidence, specific to the country and setting in which the test is to be used, and to review questions. Again, the level of guidance varies, from general recommendations related to the methodological quality assessment of individual studies (IQWiG) to more detailed treatment of the topic for all stages of the HTA process (MSAC, NICE DAP, and AHRQ).

Health Economic Evaluation

Six of the key organizations assess cost-effectiveness of tests although with different emphasis. MSAC is explicit that it is one part of the considerations, clinical need, comparative health gain and predicted use in practice, and financial impact being the others. IQWIG and ZIN imply that, although helpful, economic analysis is not always necessary, while at the other end of this spectrum CADTH and NICE DAP place emphasis on it. Where cost-effectiveness is used, the main approaches are to systematically review existing economic evaluations and to use model-based economic evaluation often estimating cost per QALY (quality-adjusted life year). They all provide good guidance on the general approach to conducting model-based evaluations, which could be applied irrespective of the technology under consideration. However, there is very little guidance on how general approaches need to be adapted to particular demands of evaluating tests, beyond offering pointers in the case of NICE DAP and CADTH. This gives the impression that there are a limited number of challenges which can be easily overcome. MSAC offers the greatest amount of detail but still relies heavily on general guidance on how to conduct cost-effectiveness and cost-minimization analyses.

Additional Contributions

We identified distinct additional contributions from all seven organizations, summarized in Supplementary Table 3. Notable methods include a framework to systematically identify the ethical aspects of any health technology (Reference Heintz, Lintamo and Hultcrantz32) (although no specific tailoring for tests) (SBU), a framework for assessing technical modifications of tests from ZIN, and guidance for specific types of diagnostic test, including genetic tests from AHRQ and MSAC, codependent tests from CADTH and MSAC and artificial intelligence/machine learning tests from MSAC.

Stage 2: Systematic Check of Test-Specific Methods in Other Organizations

We performed a rapid interrogation of manuals produced by 34 organizations not considered in Stage 1 (Figure 1). One document was jointly produced by four Austrian organizations (GOG, Austrian Institute for Health Technology Assessment, University for Health Sciences, Medical Informatics and Technology, Donau-Universität Krems) so there were 31 unique sources the findings from which are summarized in Table 2. Two organizations for whom we did not find methods manuals, referred to the EUnetHTA Core Model methods, considered in Stage 2, on their Web sites: Finnish Coordinating Center for Health Technology Assessment (https://www.ppshp.fi/Tutkimus-ja-opetus/FinCCHTA/Sivut/HTA.aspx) and Ministry of Health of the Czech Republic (https://www.mzcr.cz/category/metodiky-a-stanoviska/).

Table 2. Summary of information on organizations examined at Stage 2

a N = 31 Austrian organizations producing joint document counted as 1 organization.

b WHO document refers to the organization’s intranet for more information on test evaluation.

ACE, Agency for care effectiveness; AGENAS, Agenzia Nazionale per i Servizi Sanitari Regionali (National Agency for Regional Health Services); AOTMiT, Agencja Oceny Technologii Medycznych I Taryfikacji (Agency for Health Technology Assessment and Tariff System); AVALIA-T, Avaliación de Tecnoloxías Sanitarias de Galician (Galician Agency for Health Technology Assessment); CDE, Center for Drug Evaluation; C2H, Center for Outcomes Research and Economic Evaluation for Health; DEFACTUM, Social sundhed & arbejdsmarked (Social & Health Services and Labour Market); EUnetHTA, European network for health technology assessment; GOG, Gesunheit Österreich GmbH (Health Austria); GRADE, Grading of recommendations assessment, development and evaluation; HAS, Haute Autorité de Santé (National Authority for Health); HIQA, Health information and quality authority; HITAP, Health intervention and technology assessment program; HQO, Health quality Ontario; HTAIn, Health technology assessment in India; InaHTAC, Indonesia health technology assessment committee; KCE, Federaal Kenniscentrum/Centre fédéral d’expertise (Belgian Health Care Knowledge Centre); MAHTAS, Malaysian health technology assessment section; NECA, National Evidence-based healthcare collaborating agency, OSTEBA, Servicio de Evaluación de Tecnologías Sanitarias (Basque Office for Health Technology Assessment); STEP, Sentro ng Pagsusuri ng Teknolohiyang Pangkalusugan.

Most Stage 2 organizations referred to tests in at least one document, however, these tended to be short with few definitive methods. When present, methods were commonly limited to evaluating and synthesizing test accuracy studies (Avaliación de Tecnoloxías Sanitarias de Galician, Social sundhed & arbejdsmarked, Indonesia Health Technology Assessment Committee, Health Information and Quality Authority, Agenzia Nazionale per i Servizi Sanitari Regionali, GOG, Health Technology Assessment in India, Malaysian Health Technology Assessment Section, Sentro ng Pagsusuri ng Teknolohiyang Pangkalusugan, WHO). There were some exceptions. Detailed guidance was provided by EUnetHTA “Core Model (version 3)” (23) and associated documents provide test-specific methods for all components of an HTA, from scoping through to clinical and cost-effectiveness.

Stage 2 organizations rarely discussed how to discern a test’s claims. Those that did shared the same philosophy of a test’s predominantly indirect impact, achieved most commonly through differences in clinical decision making as a result of differing accuracy (ACE, GRADE, EUnetHTA). Test-specific methods were most commonly located in clinical effectiveness review sections, which were always focussed on test accuracy, with no tailoring of methods to evaluate direct evidence. A few organizations provided descriptions of linking evidence (ACE, EUnetHTA, GOG, Health Quality Ontario, GRADE), which may include decision-analytic modeling (GOG, EUnetHTA). Like MSAC, EUnetHTA emphasize the importance of evaluating safety as a type of outcome in its own right, distinguishing “reduced risk” (direct test harms) and “increased safety” (as a result of better accuracy). EUnetHTA are the only organization to distinguish safety from clinical effectiveness evidence, and although EUnetHTA highlight a likely overlap between the two, no methods are suggested for how to overcome the possibility of double-counting adverse consequences of inaccuracy. Alongside accuracy and diagnostic/therapeutic impact, EUnetHTA also define “other patient outcome[s],” such as knowledge and increased autonomy, which appear to be analogous to MSAC’s “value of knowing” outcomes. While EUnetHTA do not prioritize outcomes per se, they do describe the process of identifying the most important consequences (and hence outcomes) as “value-decisions” that demand transparency in how they are made.

Methods for health economic analysis infrequently referred to tests as the subject of evaluation and did not provide additional methods to those found in our Stage 1 assessment. EUnetHTA provide test-specific guidance for ethics analysis and for assessing the broader issues of organizational aspects, patients and social issues, and legal aspects, methods cited by MSAC as a key source for undertaking these investigations. Ethical analysis in particular constitutes a notable development in comparison to key organizations in Stage 1, detailing additional test-specific considerations including the aim of the test, the role of the test (differentiating between intended and likely actual role of the test), unintended implications (direct harms termed “risks” and harmful consequences of false test results), and normative issues in assessing effectiveness and accuracy (23).

Discussion

We present a first review of HTA organization methods for performing HTAs of tests.

Main Findings

In general, few test-specific methods are provided by HTA organizations that evaluate diagnostic tests, and when present these methods predominantly concern the evaluation of test accuracy evidence. A small number of organizations do provide more detailed tailoring of HTA methods for tests.

Within these key organizations, there is consistency in many respects. One is the need for the complexity of the diagnostic process to be fully incorporated into question definition, which favors the preeminence of patient health outcomes. Approaches to the searching, quality assessment, and meta-analysis of test accuracy studies is another. With the exception of dealing with test accuracy evidence, all HTA organizations tend to defer to general HTA approaches, designed to assess pharmaceuticals, rather than approaches specific to the HTA of tests.

There is also divergence. We found critical differences in methods used to identify the mechanisms by which a diagnostic intervention may impact health outcomes. Although prior methods research demonstrates these claims of tests are composed of numerous, interdependent mechanisms that are intrinsically based on the clinical setting (Reference Ferrante di Ruffano, Hyde, McCaffery, Bossuyt and Deeks2), most HTA organizations we examined did not provide methods for identifying the claims of the test intervention. While some key organizations provide an explicit method, consisting of a structured a priori framework, others rely on the scoping process to discover – but not prioritize – a test’s claims, and hence the evidence and outcomes required to assess clinical effectiveness. Here, good practice is showcased most clearly by MSAC who embed test claims as a foundation, informing the ensuing methods for evaluating clinical evidence and cost-effectiveness. Regardless of approach, all organizations frame differences in accuracy as the principal claim of a test’s impact to patient health.

Although all HTA groups recognize the primacy of direct evidence of the effect of a test on health outcomes, there are a range of views on how to proceed in its absence. At one extreme the emphasis is on encouraging the generation of direct evidence; at the other is an openness to all forms of evidence. Evidence linkage is a prominent solution, varying from narrative linkage to formal modeling, though it is unclear whether there is consistency in these approaches because most organizations do not clearly define them, and provide limited detail for how they should be performed. MSAC is the notable exception, describing a process by which evidence is sought with respect to each identified test claim, and a narrative chain of argument is created showing how improvements in test performance will be translated into improved health outcome, also incorporating harm and value of knowing.

Strengths and Weaknesses

Although this methods review is subjective, we have attempted to maximize objectivity by working to an a priori protocol, performing all steps in duplicate, and offering key (Stage 1) organizations the opportunity to check the face validity of summaries. We were exhaustive in our efforts to identify as many HTA organizations as possible, and to give equal opportunity for all of these to be considered eligible for detailed scrutiny as key organizations. We recognize a limitation of our research is an assumption that guidance reflects actual practice in relation to HTA of tests, whereas the reality may be that this guidance is not followed or is impractical to adopt by the organizations producing the individual HTAs. Further, our comparison of international HTA approaches may not fully account for the different contexts in which HTA is undertaken across different organizations. For example, some organizations have a remit to make recommendations for policy and reimbursement whereas others have a remit for evidence synthesis only. These contextual factors could have an impact on the level of detail and type of guidance offered.

Findings in Context

We are not aware of any other reviews that have examined methods for undertaking all phases of an HTA, for determining the clinical and cost-effectiveness of diagnostic tests. Our findings do mirror those of a previous comprehensive assessment of test-focused HTAs, which reviewed methods used to synthesize evidence informing economic decision models for HTAs of tests (Reference Shinkins, Yang, Abel and Fanshawe17). A key finding concerned the lack of a clear framework to identify and incorporate evidence into health economic models of tests. Despite being conducted 5 years later, our review found very few health economics methods were tailored specifically to tests, suggesting that this “gap” between clinical and cost-effectiveness may still remain. Further, our review suggests this gap may originate in failings to clearly delineate test claims at the outset of the HTA process.

Recommendations

For practice, we found some good materials which would be useful for guiding an organization new to HTAs of tests. As a comprehensive exposition of all aspects, we would particularly recommend the guidance by MSAC (27).

For research, aspects of divergence reflect areas where there is not yet consensus amongst the HTA community, and so indicate opportunities for methodological research. We would highlight further development of evidence linkage methods, particularly for managing and interpreting the heterogeneity of an indirect and linked evidence base. Another specific uncertainty concerns whether there is a difference between evidence linkage achieved by a health economic model alone and narrative evidence linkage combined with a model. We also noted absence of advice on how to evaluate noninferiority, with no guidance on how to identify, summarize and interpret comparative accuracy studies, which should be the ideal studies to assess noninferiority (Reference Takwoingi, Partlett, Riley, Hyde and Deeks33). A new tool, QUADAS-C, has recently been developed to specifically address risk of bias in comparative accuracy studies (Reference Yang, Mallett and Takwoingi34), and may be helpful in future guidance and updates. In addition, we would question whether sufficient attention has so far been paid to the complexities of identifying and interpreting direct evidence, and how a judgment is made that this evidence is sufficient or insufficient for decision making. Methods reviews of test-treatment RCTs have shown these studies are more susceptible to particular methodological challenges than RCTs of medicines (Reference Ferrante di Ruffano, Dinnes, Sitch, Hyde and Deeks35), suggesting that appraising their quality (both internal and external validity) may not be served well by the application of tools developed for treatment trials. Importantly, current guidance is silent on how to resolve inconsistencies between direct (end to end) evidence and indirect (linked) evidence (where both have been undertaken), which as MSAC note is the most likely scenario faced by reviewers of tests.

Conclusion

This methodological review gives an indication of the global state of the art with respect to HTA of tests. Our study reveals many important outstanding challenges, not least the general need for more extensive and detailed guidance outlining methods that are tailored specifically to evaluate diagnostic tests. Although the evaluation and synthesis of accuracy evidence is the most comprehensively and commonly provided methods topic, there is a need to incorporate more recent innovations, such as approaches for incorporating and interpreting comparative evidence of test accuracy. Although all HTA organizations are clearly focused on evaluating the benefits and harms of healthcare technologies to patient health, we found there is often a hiatus in methods guidance between evaluating diagnostic accuracy and assessing the end clinical effectiveness of diagnostic interventions. Organizations providing the most detailed guidance in this area tended to be those that incorporated the clearest methods for identifying and understanding how tests impact on patient health. We believe this observation underscores the centrality of test claims as a method for explicitly informing the selection, identification, interpretation, and synthesis of evidence used to deduce the clinical effectiveness of tests.

There has been a tendency in the past to deprioritise HTA of tests, and methods development relative to HTA of pharmacological interventions. However, the prominence of tests among health innovations (genetic testing and artificial intelligence applied to imaging) and the rapidly escalating costs of new tests argues that now is the time for priority to be given to methods development in this area. The recent confusion and uncertainty around evaluation of tests and testing strategies for COVID-19 reveals just how frail the current systems are for sanctioning the uptake of new tests into healthcare.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/S0266462323000065.

Acknowledgments

Representatives of HTA organizations for their useful feedback: Christine Chang and Craig Umscheid, on behalf of the Agency for Healthcare Research and Quality; Andrew Mitchell, on behalf of the Medical Services Advisory Committee; Joanne Kim and Cody Black, on behalf of the Canadian Agency for Drugs and Technologies in Health; Stefan Sauerland, on behalf of Institut für Qualität und Wirtschaftlichkeit im Gesundheitswesen; Thomas Walker, on behalf of the National Institute for Health and Care Excellence; and Susanna Axelsson, Jenny Odeberg, and Naama Kenan Modén, on behalf of Statens beredning för medicinsk och social utvärdering. Also Bogdan Grigore for his list of HTA organizations. Finally the MRC TEST Advisory Group for discussion of methods approach and comment on manuscript: Patrick Bossuyt, Katherine Payne, Bethany Shinkins, Thomas Walker, Pauline Beattie, Samuel Schumacher, Francis Moussy, and Mercedez Gonzalez Perez.

Funding statement

This work was funded by the Medical Research Council (grant number MR/T025328/1).

Conflicts of interest

The authors declare none.