Impact Statement

The accurate estimation of drag in aviation and shipping is of great economic value as it significantly affects energy expenditure and carbon emissions. The long-standing pursuit of a universal correlation between drag and topographical features of roughness has made remarkable progress in recent decades, yet it is still limited by the feasibility of demanding experiments and simulations. There exists no model which is generally applicable to any given rough surface. This positions machine-learning (ML) modelling as a promising cost-effective approach. Therefore, this study seeks to provide more insights into how different ML regression models perform in terms of the trade-off between capturing nonlinearity and training costs. The comprehensive analysis presented in this work aims to offer valuable insights for the future design of ML-based models in the field of drag prediction.

1. Introduction

Three-dimensional multi-scale surface irregularities are ubiquitous in industrial applications. The roughness imposes an increased resistance upon an overlying fluid flow, manifested as an increase in the measured drag. The increase in drag causes reduced energy efficiency, especially in turbulent flows. Examples include increased fuel consumption of cargo ships due to fouled hulls, reduced power output of eroded turbines in wind power plants and an increase in the power input required to maintain a constant flow rate in pipelines with non-smooth walls.

An efficient tool that can predict the drag induced by roughness would allow engineers and operators to optimize surface cleaning and treatment. However, as of today, there is no method for drag prediction that is both fast and accurate (Reference Yang, Zhang, Yuan and KunzYang et al. 2023b). There are accurate techniques that rely on towing tank experiments (Reference SchultzSchultz 2004), direct numerical simulations (Reference Thakkar, Busse and SandhamThakkar, Busse & Sandham 2016; Reference Forooghi, Stroh, Magagnato, Jakirlić and FrohnapfelForooghi et al. 2017; Reference Thakkar, Busse and SandhamThakkar, Busse & Sandham 2018) or large eddy simulations (Reference Chung and MckeonChung & Mckeon 2010). These methods measure the equivalent sand grain roughness ![]() $k_s$ that can be used to estimate the drag penalty for simple geometries (e.g. pipes) or incorporated into computational fluid dynamics software to evaluate the drag penalty on complex bodies (Reference Andersson, Oliveira, Yeginbayeva, Leer-Andersen and BensowAndersson et al. 2020; Reference De Marchis, Saccone, Milici and NapoliDe Marchis et al. 2020; Reference Kadivar, Tormey and McGranaghanKadivar, Tormey & McGranaghan 2023). However, towing tank experiments and computational techniques can be costly and time-consuming. The alternative approach is rough-wall modelling, where the objective is to predict

$k_s$ that can be used to estimate the drag penalty for simple geometries (e.g. pipes) or incorporated into computational fluid dynamics software to evaluate the drag penalty on complex bodies (Reference Andersson, Oliveira, Yeginbayeva, Leer-Andersen and BensowAndersson et al. 2020; Reference De Marchis, Saccone, Milici and NapoliDe Marchis et al. 2020; Reference Kadivar, Tormey and McGranaghanKadivar, Tormey & McGranaghan 2023). However, towing tank experiments and computational techniques can be costly and time-consuming. The alternative approach is rough-wall modelling, where the objective is to predict ![]() $k_s$ directly from the roughness topology. As discussed by Reference Yang, Zhang, Yuan and KunzYang et al. (2023b), models can be divided into correlation-type (Reference Flack and SchultzFlack & Schultz 2014; Reference Chan, Macdonald, Chung, Hutchins and OooiChan et al. 2015; Reference Forooghi, Stroh, Magagnato, Jakirlić and FrohnapfelForooghi et al. 2017), physics-based (Reference Yang, Sadique, Mittal and MeneveauYang et al. 2016) or data-driven regression methods (Reference Jouybari, Yuan, Brereton and MurilloJouybari et al. 2021; Reference Lee, Yang, Forooghi, Stroh and BagheriLee et al. 2022; Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. 2023a,Reference Yang, Zhang, Yuan and Kunzb; Reference Shin, Khorasani, Shi, Yang, Bagheri and LeeShin et al. 2024; Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. 2024). The accuracy, generalizability and complexity of the rough-wall models increase going from correlation-type to physics-based and finally to data-driven regression methods. In particular, while data-driven regression techniques have gained in popularity and show promise, they suffer primarily from lack of data that can be used for training.

$k_s$ directly from the roughness topology. As discussed by Reference Yang, Zhang, Yuan and KunzYang et al. (2023b), models can be divided into correlation-type (Reference Flack and SchultzFlack & Schultz 2014; Reference Chan, Macdonald, Chung, Hutchins and OooiChan et al. 2015; Reference Forooghi, Stroh, Magagnato, Jakirlić and FrohnapfelForooghi et al. 2017), physics-based (Reference Yang, Sadique, Mittal and MeneveauYang et al. 2016) or data-driven regression methods (Reference Jouybari, Yuan, Brereton and MurilloJouybari et al. 2021; Reference Lee, Yang, Forooghi, Stroh and BagheriLee et al. 2022; Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. 2023a,Reference Yang, Zhang, Yuan and Kunzb; Reference Shin, Khorasani, Shi, Yang, Bagheri and LeeShin et al. 2024; Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. 2024). The accuracy, generalizability and complexity of the rough-wall models increase going from correlation-type to physics-based and finally to data-driven regression methods. In particular, while data-driven regression techniques have gained in popularity and show promise, they suffer primarily from lack of data that can be used for training.

Over time, a sufficient amount of relevant roughness data will be accumulated, which can be used to develop efficient regression models for predicting the drag of rough surfaces. Regression models can directly process images or topographical maps of the roughness to predict ![]() $k_s$, thus replacing experiments and resolved simulations. Recent efforts have focused on relatively complex regression methods. Reference Jouybari, Yuan, Brereton and MurilloJouybari et al. (2021) adopted a multi-layer perceptron (MLP) and Gaussian processes regression to build a mapping from statistical surface measures to

$k_s$, thus replacing experiments and resolved simulations. Recent efforts have focused on relatively complex regression methods. Reference Jouybari, Yuan, Brereton and MurilloJouybari et al. (2021) adopted a multi-layer perceptron (MLP) and Gaussian processes regression to build a mapping from statistical surface measures to ![]() $k_s$. Both methods were trained on

$k_s$. Both methods were trained on ![]() $45$ labelled samples, achieving an accuracy of approximately

$45$ labelled samples, achieving an accuracy of approximately ![]() $10$ %. Realizing that the database size is the major bottleneck for fully exploiting the advantages of neural networks, Reference Lee, Yang, Forooghi, Stroh and BagheriLee et al. (2022) and Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. (2023a) employed transfer and active learning techniques, respectively. Specifically, Reference Lee, Yang, Forooghi, Stroh and BagheriLee et al. (2022) trained a MLP model on a small number of high-fidelity numerical simulations of synthetic irregularly rough surfaces to predict the roughness function,

$10$ %. Realizing that the database size is the major bottleneck for fully exploiting the advantages of neural networks, Reference Lee, Yang, Forooghi, Stroh and BagheriLee et al. (2022) and Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. (2023a) employed transfer and active learning techniques, respectively. Specifically, Reference Lee, Yang, Forooghi, Stroh and BagheriLee et al. (2022) trained a MLP model on a small number of high-fidelity numerical simulations of synthetic irregularly rough surfaces to predict the roughness function, ![]() $\Delta U^+$. However, the model was pre-trained using estimates of drag obtained from empirical correlations for over

$\Delta U^+$. However, the model was pre-trained using estimates of drag obtained from empirical correlations for over ![]() $10\ 000$ rough surfaces. Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. (2023a) used active learning, where the model automatically suggests the surface roughness that should be simulated and added to the database, to most effectively enhance the model performance. In a subsequent study by the same group, Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. (2024) examined models of varying complexity and found that those with reduced complexity achieved superior performance when trained and tested on specific roughness types. These findings indicate a nonlinear relationship between surface roughness and induced drag, which can be effectively explored by categorizing roughness types based on their statistical properties. Reference Shin, Khorasani, Shi, Yang, Bagheri and LeeShin et al. (2024) predicted drag using convolutional neural networks (CNNs) based on the raw topographical data of rough surfaces. Additionally, they interpreted their model to discover the drag-inducing mechanisms of roughness structures using a data-driven approach. It should be emphasized that drag prediction is a particularly demanding regression problem since each sample in the database used for training and testing is one direct numerical simulation (DNS) or experiment. Therefore, we are still far from having databases containing of the order of

$10\ 000$ rough surfaces. Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. (2023a) used active learning, where the model automatically suggests the surface roughness that should be simulated and added to the database, to most effectively enhance the model performance. In a subsequent study by the same group, Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. (2024) examined models of varying complexity and found that those with reduced complexity achieved superior performance when trained and tested on specific roughness types. These findings indicate a nonlinear relationship between surface roughness and induced drag, which can be effectively explored by categorizing roughness types based on their statistical properties. Reference Shin, Khorasani, Shi, Yang, Bagheri and LeeShin et al. (2024) predicted drag using convolutional neural networks (CNNs) based on the raw topographical data of rough surfaces. Additionally, they interpreted their model to discover the drag-inducing mechanisms of roughness structures using a data-driven approach. It should be emphasized that drag prediction is a particularly demanding regression problem since each sample in the database used for training and testing is one direct numerical simulation (DNS) or experiment. Therefore, we are still far from having databases containing of the order of ![]() $10^4$ samples, which is commonly used for developing neural networks.

$10^4$ samples, which is commonly used for developing neural networks.

Given that data-driven models show potential for rough-wall modelling, we assess the performance and limitations of increasingly complex regression methods. More specifically, this work compares linear regression, a kernel method based on support vector machine, MLP and a convolutional network. We have an order-of-magnitude larger database compared with earlier work (Reference Jouybari, Yuan, Brereton and MurilloJouybari et al. 2021; Reference Lee, Yang, Forooghi, Stroh and BagheriLee et al. 2022; Reference Yang, Stroh, Lee, Bagheri, Frohnapfel and ForooghiYang et al. 2023a,Reference Yang, Zhang, Yuan and Kunzb). Using a GPU-accelerated numerical solver (Reference Costa, Phillips, Brandt and FaticaCosta et al. 2021), we developed a DNS database of ![]() $O(10^3)$ samples which includes five types of irregular homogeneous roughness. However, our database is far from complete. There exists many roughness distributions, in particular, patchy inhomogeneous ones, that we do not consider. In addition – as we will demonstrate herein – the size of our database is still small for fully exploiting neural networks. Indeed, the purpose of this study is not to identify an optimal or universal regression technique, since this will depend on the training database and the specific application. Instead, our aim is to understand the advantages and limitations between different regression approaches for drag prediction.

$O(10^3)$ samples which includes five types of irregular homogeneous roughness. However, our database is far from complete. There exists many roughness distributions, in particular, patchy inhomogeneous ones, that we do not consider. In addition – as we will demonstrate herein – the size of our database is still small for fully exploiting neural networks. Indeed, the purpose of this study is not to identify an optimal or universal regression technique, since this will depend on the training database and the specific application. Instead, our aim is to understand the advantages and limitations between different regression approaches for drag prediction.

Alongside the actual technique used in regression, the choice of roughness features that constitute the model's input is another important aspect. For homogeneous roughness, the most common approach is to use statistics derived from the roughness height distribution, such as the peak or peak-to-trough height (Reference Flack and SchultzFlack & Schultz 2014; Reference Forooghi, Stroh, Magagnato, Jakirlić and FrohnapfelForooghi et al. 2017), skewness (Reference Jelly and BusseJelly & Busse 2018; Reference Busse and JellyBusse & Jelly 2023) and effective slopes (Reference Jelly, Ramaniand, Nugroho, Hutchins and BusseJelly et al. 2022), etc. Given that rough surfaces in engineering applications often exhibit heterogeneous, e.g. patchy, structures (Reference Sarakinos and BusseSarakinos & Busse 2023), and anisotropy (Reference Forooghi, Stroh, Magagnato, Jakirlić and FrohnapfelForooghi et al. 2017; Reference Jelly, Ramaniand, Nugroho, Hutchins and BusseJelly et al. 2022), using the entire surface topography as input data may be needed to capture these complexities. In this paper, we will discuss different model inputs, including statistical measures and the two-dimensional height distribution of a surface.

This paper is organized into four sections: § 2 describes the generation and statistical properties of the investigated database of rough surfaces. Model training and architecture details are outlined in § 3. The drag prediction results of the modes are presented and discussed in § 4. Finally, a discussion is provided in § 5.

2. Problem setting

2.1 Generation of irregular rough surfaces

The dataset in this study includes five categories of irregular, statistically homogeneous rough surfaces. The surfaces are represented as a height function (or maps), ![]() $k(x,z)$, which is a function of streamwise (

$k(x,z)$, which is a function of streamwise (![]() $x$) and spanwise (

$x$) and spanwise (![]() $z$) coordinates. Examples of topographies corresponding to each surface category are shown in the first column of table 1, which displays representative height maps along with their projections onto the

$z$) coordinates. Examples of topographies corresponding to each surface category are shown in the first column of table 1, which displays representative height maps along with their projections onto the ![]() $x$–

$x$–![]() $z$ plane. Rough surfaces of type

$z$ plane. Rough surfaces of type ![]() $Sk_0$ were generated using a Fourier-filtering algorithm based on the power spectrum method proposed by Reference Jacobs, Junge and PastewkaJacobs, Junge & Pastewka (2017). In this study, 271 distinct

$Sk_0$ were generated using a Fourier-filtering algorithm based on the power spectrum method proposed by Reference Jacobs, Junge and PastewkaJacobs, Junge & Pastewka (2017). In this study, 271 distinct ![]() $Sk_0$ surfaces were individually produced, each characterized by varying power spectrum amplitudes and random phase shifts in the Fourier modes. By cutting off the heights below the average of random

$Sk_0$ surfaces were individually produced, each characterized by varying power spectrum amplitudes and random phase shifts in the Fourier modes. By cutting off the heights below the average of random ![]() $Sk_0$ surfaces, we obtained the second type, i.e. the positively skewed roughness (

$Sk_0$ surfaces, we obtained the second type, i.e. the positively skewed roughness (![]() $Sk_+$) with mountainous topography. The negatively skewed surfaces (

$Sk_+$) with mountainous topography. The negatively skewed surfaces (![]() $Sk_-$) were generated in the opposite manner to

$Sk_-$) were generated in the opposite manner to ![]() $Sk_+$ and exhibit basins surrounded by flat regions. These three surface types are based on a prescribed skewness and are therefore isotropic. Two other types of anisotropic surfaces were generated using the algorithm from Reference Jelly and BusseJelly & Busse (2018). This algorithm generates surfaces by applying linear combinations of Gaussian random matrices using a moving average process. The correlation of discrete surface heights in the wall-parallel direction is governed by a predefined target correlation function. By adjusting key parameters such as the cutoff wavenumber for the circular Fourier filter, as well as the number of streamwise and spanwise points in the correlation function, we were able to create 406 distinct random anisotropic rough surfaces dominated by streamwise- and spanwise-preferential effective slopes. These randomly generated rough surfaces are illustrated by the bottom two rows in table 1, which have streamwise- and spanwise-preferential effective slopes (labelled as

$Sk_+$ and exhibit basins surrounded by flat regions. These three surface types are based on a prescribed skewness and are therefore isotropic. Two other types of anisotropic surfaces were generated using the algorithm from Reference Jelly and BusseJelly & Busse (2018). This algorithm generates surfaces by applying linear combinations of Gaussian random matrices using a moving average process. The correlation of discrete surface heights in the wall-parallel direction is governed by a predefined target correlation function. By adjusting key parameters such as the cutoff wavenumber for the circular Fourier filter, as well as the number of streamwise and spanwise points in the correlation function, we were able to create 406 distinct random anisotropic rough surfaces dominated by streamwise- and spanwise-preferential effective slopes. These randomly generated rough surfaces are illustrated by the bottom two rows in table 1, which have streamwise- and spanwise-preferential effective slopes (labelled as ![]() $\lambda _x$ and

$\lambda _x$ and ![]() $\lambda _z$, respectively).

$\lambda _z$, respectively).

Table 1. Examples of the five roughness types: the three-dimensional topography of each type and their two-dimensional projections on the ![]() $x$–

$x$–![]() $z$ plane are shown in the leftmost column. The number of samples of each type used in this study is given. The sample-averaged skewness and kurtosis

$z$ plane are shown in the leftmost column. The number of samples of each type used in this study is given. The sample-averaged skewness and kurtosis ![]() $\langle \rangle$ are provided to demonstrate whether a surface is Gaussian or not in terms of its height distribution. Anisotropy is examined by the mean ratio of effective slopes over the samples in two directions. ES, effective slope in the x or z direction.

$\langle \rangle$ are provided to demonstrate whether a surface is Gaussian or not in terms of its height distribution. Anisotropy is examined by the mean ratio of effective slopes over the samples in two directions. ES, effective slope in the x or z direction.

We adopted a number of statistical measures for parameterizing the surface topographies as listed in table 2. The left panel of the table displays the seven parameters that characterize the topographical information of the surface, such as the effective slopes that represent the frontal solidity of the rough surfaces. The range of the parameters for the rough surfaces investigated in this work is listed in table 5 in Appendix C. Reference Chung, Nicholas, Michael and KarenChung et al. (2021) provides a comprehensive summary of the physical significance of these statistical parameters. The centre column displays three statistical measures of the topography's height distribution, of which the skewness has been shown to have a notable influence upon turbulent kinetic energy (Reference Thakkar, Busse and SandhamThakkar et al. 2016), shear stress (Reference Jelly and BusseJelly & Busse 2018) and pressure drag (Reference Busse and JellyBusse & Jelly 2023). Following Reference Jouybari, Yuan, Brereton and MurilloJouybari et al. (2021), we also use additional parameters formed from pairs of ![]() $ES_x$,

$ES_x$, ![]() $ES_z$,

$ES_z$, ![]() $Skw$ and

$Skw$ and ![]() $Ku$. These take into account nonlinear effects in the model input, the significance of which will be discussed later.

$Ku$. These take into account nonlinear effects in the model input, the significance of which will be discussed later.

Table 2. The topographical statistics include ten ‘primary’ parameters and nine ‘pair’ parameters. The main features are divided into the ones bearing physical implications, i.e. crest height ![]() $k_c$, average height deviation

$k_c$, average height deviation ![]() $R_a$, effective slopes

$R_a$, effective slopes ![]() $ES_{x,z}$, porosity

$ES_{x,z}$, porosity ![]() $Po$, inclinations

$Po$, inclinations ![]() $inc_{x,z}$; and statistical parameters, i.e. root-mean-square height

$inc_{x,z}$; and statistical parameters, i.e. root-mean-square height ![]() $k_{rms}$, skewness

$k_{rms}$, skewness ![]() $Skw$ and kurtosis

$Skw$ and kurtosis ![]() $Ku$.

$Ku$.

2.2 Drag measurement

The drag penalty in turbulence from rough walls is commonly represented by the velocity deficit referred to as the roughness function ![]() $\Delta U^+=\Delta U/u_\tau$ (Reference HamaHama 1954), i.e. the friction-scaled downward offset of the mean velocity profile in the logarithmic layer. Here,

$\Delta U^+=\Delta U/u_\tau$ (Reference HamaHama 1954), i.e. the friction-scaled downward offset of the mean velocity profile in the logarithmic layer. Here, ![]() $u_\tau \equiv \sqrt {\tau _w/\rho }$ is the friction velocity,

$u_\tau \equiv \sqrt {\tau _w/\rho }$ is the friction velocity, ![]() $\tau _w$ is the wall shear stress and

$\tau _w$ is the wall shear stress and ![]() $\rho$ is the fluid density. Note that difference in the skin-friction coefficient

$\rho$ is the fluid density. Note that difference in the skin-friction coefficient ![]() $C_f$ between a smooth and a rough wall (at a matched

$C_f$ between a smooth and a rough wall (at a matched ![]() $Re_\tau$) is equivalent to the roughness function

$Re_\tau$) is equivalent to the roughness function ![]() $\Delta U^+$.

$\Delta U^+$.

To determine the drag for each generated surface, DNSs of turbulent channel flow at ![]() $Re_\tau =u_\tau \delta /\nu =500$ were conducted (here,

$Re_\tau =u_\tau \delta /\nu =500$ were conducted (here, ![]() $\delta$ is the half-channel height and

$\delta$ is the half-channel height and ![]() $v$ is viscosity). Considering the number of generated surfaces (1018), the simulations needed to be done in a cost-effective manner. For this reason, we employed the minimal-span channel approach of Reference Chung, Chan, MacDonald, Hutchins and OoiChung et al. (2015) and Reference MacDonald, Chung, Hutchins, Chan, Ooi and García-MayoralMacDonald et al. (2017), which has proven to a successful method for characterizing the hydraulic resistance of rough surfaces under turbulent flow conditions. The minimal-span approach exploits the fact that the flow retardation imposed by the roughness occurs close to it and this effect remains constant away from the roughness, manifesting as a downward shift in the logarithmic region of turbulent velocity profile,

$v$ is viscosity). Considering the number of generated surfaces (1018), the simulations needed to be done in a cost-effective manner. For this reason, we employed the minimal-span channel approach of Reference Chung, Chan, MacDonald, Hutchins and OoiChung et al. (2015) and Reference MacDonald, Chung, Hutchins, Chan, Ooi and García-MayoralMacDonald et al. (2017), which has proven to a successful method for characterizing the hydraulic resistance of rough surfaces under turbulent flow conditions. The minimal-span approach exploits the fact that the flow retardation imposed by the roughness occurs close to it and this effect remains constant away from the roughness, manifesting as a downward shift in the logarithmic region of turbulent velocity profile, ![]() $\Delta U^+$, and otherwise known as the roughness function (Reference ClauserClauser 1954; Reference HamaHama 1954). Therefore, the measure of drag we acquired from the DNS was

$\Delta U^+$, and otherwise known as the roughness function (Reference ClauserClauser 1954; Reference HamaHama 1954). Therefore, the measure of drag we acquired from the DNS was ![]() $\Delta U^+$. The size of the minimal channel in this work is

$\Delta U^+$. The size of the minimal channel in this work is ![]() $(L_x, L_y, L_z)=(2.4, 2, 0.8)\delta$. The simulations were conducted using the open-source code CaNS (Reference Costa, Phillips, Brandt and FaticaCosta et al. 2021) which solves the incompressible Navier–Stokes equations on three-dimensional Cartesian grids using second-order central finite differences. In these simulations, periodic boundary conditions were imposed along the streamwise (

$(L_x, L_y, L_z)=(2.4, 2, 0.8)\delta$. The simulations were conducted using the open-source code CaNS (Reference Costa, Phillips, Brandt and FaticaCosta et al. 2021) which solves the incompressible Navier–Stokes equations on three-dimensional Cartesian grids using second-order central finite differences. In these simulations, periodic boundary conditions were imposed along the streamwise (![]() $x$) and spanwise (

$x$) and spanwise (![]() $z$) directions, while a Dirichlet boundary condition was applied in the wall-normal (

$z$) directions, while a Dirichlet boundary condition was applied in the wall-normal (![]() $y$) direction.

$y$) direction.

To incorporate the generated rough surfaces into the simulations, we augmented CaNS with the volume-penalization immersed-boundary method (Reference Kajishima, Takiguchi, Hamasaki and MiyakeKajishima et al. 2001; Reference Breugem, van Dijk and DelfosBreugem, van Dijk & Delfos 2012). Specifics regarding the solver and numerical methods used may be found in the aforementioned references which we omit here to avoid repetition. To ensure, however, that the DNS framework was able to accurately account for the effect of the irregular rough surfaces, a validation was carried out against one of the rough-wall DNS cases of Reference Jelly and BusseJelly & Busse (2019). The results of the validation are gathered in Appendix A. The grid resolution in the ![]() $x$- and

$x$- and ![]() $z$-directions consisted of 302 and 102 points, respectively, corresponding to grid spacings of

$z$-directions consisted of 302 and 102 points, respectively, corresponding to grid spacings of ![]() $\Delta x^+ = 4.192$ and

$\Delta x^+ = 4.192$ and ![]() $\Delta z^+ = 4.137$, where the superscript

$\Delta z^+ = 4.137$, where the superscript ![]() $+$ denotes scaling by the viscous length scale

$+$ denotes scaling by the viscous length scale ![]() $\delta _\nu = \nu /u_\tau$. In the

$\delta _\nu = \nu /u_\tau$. In the ![]() $y$-direction, a hyperbolic tangent stretching function was used for the grid with the smallest grid spacing in wall units,

$y$-direction, a hyperbolic tangent stretching function was used for the grid with the smallest grid spacing in wall units, ![]() $y^+$, approximately 0.5. Velocity data were sampled at regular intervals, with the early stages of the simulations discarded to ensure sufficient convergence of the mean velocity. Details concerning requirements on the domain size and grid resolution that has to be satisfied when performing the minimal-span channel DNS of rough surfaces can be found in Reference Yang, Stroh, Chung and ForooghiYang et al. (2022).

$y^+$, approximately 0.5. Velocity data were sampled at regular intervals, with the early stages of the simulations discarded to ensure sufficient convergence of the mean velocity. Details concerning requirements on the domain size and grid resolution that has to be satisfied when performing the minimal-span channel DNS of rough surfaces can be found in Reference Yang, Stroh, Chung and ForooghiYang et al. (2022).

2.3 Parameter space

The input to the different models is presented by ![]() $\boldsymbol {x}=(x_1,\ldots, x_D)$, where

$\boldsymbol {x}=(x_1,\ldots, x_D)$, where ![]() $D$ is the number of input variables. For linear regression, support vector regression (SVR) and MLP, we used the primary and secondary statistical measures in table 2, resulting in an input vector of size

$D$ is the number of input variables. For linear regression, support vector regression (SVR) and MLP, we used the primary and secondary statistical measures in table 2, resulting in an input vector of size ![]() $D\,{=}\,10$ and

$D\,{=}\,10$ and ![]() $D\,{=}\,19$, respectively. For the CNN model, the roughness height map is used as the input, i.e.

$D\,{=}\,19$, respectively. For the CNN model, the roughness height map is used as the input, i.e. ![]() $D\,{=}\,n_zn_x\,{=}\,102\,{\times}\, 302$.

$D\,{=}\,n_zn_x\,{=}\,102\,{\times}\, 302$.

Before attempting any modelling for drag prediction, simply examining the distribution of input parameters with respect to the output provides insights into the relationship between them. Figure 1 shows the scatter distribution of four representative statistics (![]() $k^+_{rms}, Skw, ES_x, Ku$) with

$k^+_{rms}, Skw, ES_x, Ku$) with ![]() $\Delta U^+$. A notable degree of linearity between

$\Delta U^+$. A notable degree of linearity between ![]() $k^+_{rms}$ and

$k^+_{rms}$ and ![]() $\Delta U^+$ exists for the

$\Delta U^+$ exists for the ![]() $Sk_0$- and

$Sk_0$- and ![]() $Sk_+$-surfaces while it is less for the

$Sk_+$-surfaces while it is less for the ![]() $\lambda _x$ and

$\lambda _x$ and ![]() $\lambda _z$ surfaces (figure 1a). Similarly, the effective slopes shown in figure 1(c) show a certain degree of linearity with respect to

$\lambda _z$ surfaces (figure 1a). Similarly, the effective slopes shown in figure 1(c) show a certain degree of linearity with respect to ![]() $\Delta U^+$. However, the ‘cluster’ distributions seen for the skewness (figure 1b) and kurtosis (figure 1d) imply a nonlinear relationship that would need to be accounted for in any model for it to be more robust. Note that the negatively skewed roughness yields a much smaller

$\Delta U^+$. However, the ‘cluster’ distributions seen for the skewness (figure 1b) and kurtosis (figure 1d) imply a nonlinear relationship that would need to be accounted for in any model for it to be more robust. Note that the negatively skewed roughness yields a much smaller ![]() $\Delta U^+$ compared with other types of roughness. These surfaces are dominated by a pothole-like topography with few stagnation points. As a consequence, the viscous force contributes significantly to the total drag (Reference Busse and JellyBusse & Jelly 2023), in contrast to the other surface types where pressure drag is dominant. Finally, we note from figure 1 that, for our surfaces,

$\Delta U^+$ compared with other types of roughness. These surfaces are dominated by a pothole-like topography with few stagnation points. As a consequence, the viscous force contributes significantly to the total drag (Reference Busse and JellyBusse & Jelly 2023), in contrast to the other surface types where pressure drag is dominant. Finally, we note from figure 1 that, for our surfaces, ![]() $\Delta U^+$ falls into the range of 0.1 to 7.5, thus including both transitionally and fully rough regimes (Reference JiménezJiménez 2004). By including this range of roughness, the models need to learn both viscous and pressure drag components. Note that, while our database covers a continuous range in

$\Delta U^+$ falls into the range of 0.1 to 7.5, thus including both transitionally and fully rough regimes (Reference JiménezJiménez 2004). By including this range of roughness, the models need to learn both viscous and pressure drag components. Note that, while our database covers a continuous range in ![]() $k_{rms}$ and

$k_{rms}$ and ![]() $ES_x$, there are gaps in

$ES_x$, there are gaps in ![]() $Skw$ and

$Skw$ and ![]() $Ku$. This is a consequence of our surface generation approach for skewed roughness. A more continuous range of training data for training regression models will be considered in future work. However, we regard the size and span of parameters in the current database of primary importance for our purposes, which is a comparative study between different regression models.

$Ku$. This is a consequence of our surface generation approach for skewed roughness. A more continuous range of training data for training regression models will be considered in future work. However, we regard the size and span of parameters in the current database of primary importance for our purposes, which is a comparative study between different regression models.

Figure 1. Scatter distributions of ![]() $\Delta U^+$ and four representative statistics of each type of roughness: (a)

$\Delta U^+$ and four representative statistics of each type of roughness: (a) ![]() $k^+_{rms}$, (b)

$k^+_{rms}$, (b) ![]() $Skw$, (c)

$Skw$, (c) ![]() $ES_x$ and (d)

$ES_x$ and (d) ![]() $Ku$. The dashed straight lines in (a) highlight the linear relationship between

$Ku$. The dashed straight lines in (a) highlight the linear relationship between ![]() $\Delta U^+$ and

$\Delta U^+$ and ![]() $k^+_{rms}$ for merely zero and positively skewed surfaces while the ‘cluster’ distribution (circle lines) in (d) indicates a nonlinear relationship between

$k^+_{rms}$ for merely zero and positively skewed surfaces while the ‘cluster’ distribution (circle lines) in (d) indicates a nonlinear relationship between ![]() $\Delta U^+$ and

$\Delta U^+$ and ![]() $Ku$ prediction.

$Ku$ prediction.

To further quantify the correlation between ![]() $\Delta U^+$ and the input parameters, we show in figure 2 the correlation coefficient

$\Delta U^+$ and the input parameters, we show in figure 2 the correlation coefficient ![]() $\rho (x_i, x_j)$, defined as

$\rho (x_i, x_j)$, defined as

\begin{equation} \rho_{ij} = \frac{\displaystyle \sum(x_i-\langle x_i \rangle)(x_j-\langle x_j \rangle)}{\sqrt{\displaystyle \sum(x_i-\langle x_i \rangle)^2\sum(x_j-\langle x_j \rangle)^2}},\end{equation}

\begin{equation} \rho_{ij} = \frac{\displaystyle \sum(x_i-\langle x_i \rangle)(x_j-\langle x_j \rangle)}{\sqrt{\displaystyle \sum(x_i-\langle x_i \rangle)^2\sum(x_j-\langle x_j \rangle)^2}},\end{equation}

where ![]() $x_i=k^+_c, k^+_{rms}, Skw,\ldots,\Delta U^+$. The matrix in figure 2 visualizes the degree of linearity between the surface parameters (demarcated by the dashed triangle in figure 2a) and also between the surface parameters and

$x_i=k^+_c, k^+_{rms}, Skw,\ldots,\Delta U^+$. The matrix in figure 2 visualizes the degree of linearity between the surface parameters (demarcated by the dashed triangle in figure 2a) and also between the surface parameters and ![]() $\Delta U^+$ (bottom row in figure 2a). Coefficient values greater than 0.7 indicate a strong linear correlation between two variables. The matrices of the

$\Delta U^+$ (bottom row in figure 2a). Coefficient values greater than 0.7 indicate a strong linear correlation between two variables. The matrices of the ![]() $Sk_0$ and

$Sk_0$ and ![]() $Sk_+$ surfaces are overall similar, with the roughness height parameters (

$Sk_+$ surfaces are overall similar, with the roughness height parameters (![]() $k^+_c, k^+_{rms}, R^+_a$) and effective slopes (

$k^+_c, k^+_{rms}, R^+_a$) and effective slopes (![]() $ES_x, ES_z$) being strongly linearly correlated to

$ES_x, ES_z$) being strongly linearly correlated to ![]() $\Delta U^+$. This is in contrast to other surface types which manifest a nonlinear quality with respect to

$\Delta U^+$. This is in contrast to other surface types which manifest a nonlinear quality with respect to ![]() $\Delta U^+$. In particular, the

$\Delta U^+$. In particular, the ![]() $Sk_-$ surfaces exhibit a low degree of linearity both between parameters and parameter

$Sk_-$ surfaces exhibit a low degree of linearity both between parameters and parameter ![]() $\Delta U^+$. This indicates a more intricate mapping between the surface properties of pitted surfaces and their resulting drag. For all the surface types, the roughness height and effective slopes are the parameters that exhibit a common degree of linear correlation to

$\Delta U^+$. This indicates a more intricate mapping between the surface properties of pitted surfaces and their resulting drag. For all the surface types, the roughness height and effective slopes are the parameters that exhibit a common degree of linear correlation to ![]() $\Delta U^+$. It is worth noting that, for the surfaces generated by the prescribed skewness (types I, IV, V), a weaker correlation between skewness and

$\Delta U^+$. It is worth noting that, for the surfaces generated by the prescribed skewness (types I, IV, V), a weaker correlation between skewness and ![]() $\Delta U^+$ is observed. As illustrated in figure 1(b), the range of

$\Delta U^+$ is observed. As illustrated in figure 1(b), the range of ![]() $Skw$ for each type of surface is limited due to it being a prescribed (and hence controlled) parameter. This limited range precludes the possibility of revealing any relation between

$Skw$ for each type of surface is limited due to it being a prescribed (and hence controlled) parameter. This limited range precludes the possibility of revealing any relation between ![]() $Skw$ and

$Skw$ and ![]() $\Delta U^+$. This also applies to its correlation with other parameters, particularly for Gaussian surfaces.

$\Delta U^+$. This also applies to its correlation with other parameters, particularly for Gaussian surfaces.

Figure 2. Correlation coefficients ![]() $\rho$ of ten primary parameters and

$\rho$ of ten primary parameters and ![]() $\Delta U^+$ for each type of roughness. The circles in the bottom row show the linear correlation between

$\Delta U^+$ for each type of roughness. The circles in the bottom row show the linear correlation between ![]() $\Delta U^+$ and the parameters while the rest are the correlations between any two topographical parameters. Larger and darker circles represent stronger linear correlation between two variables. Those with

$\Delta U^+$ and the parameters while the rest are the correlations between any two topographical parameters. Larger and darker circles represent stronger linear correlation between two variables. Those with ![]() $|\rho _{ij}|>0.5$ are annotated.

$|\rho _{ij}|>0.5$ are annotated.

3. Predictive models

For training, we use a sequence of rough surfaces ![]() $\{\boldsymbol {x}_n\}$ together with their corresponding roughness functions

$\{\boldsymbol {x}_n\}$ together with their corresponding roughness functions ![]() $\{\Delta U^+_n\}$, where

$\{\Delta U^+_n\}$, where ![]() $n=1,\ldots, N$. The objective is to find the least complex model that accurately predicts the roughness function

$n=1,\ldots, N$. The objective is to find the least complex model that accurately predicts the roughness function ![]() $\Delta \tilde U^+$ for a new (i.e. ‘unseen’) rough surface,

$\Delta \tilde U^+$ for a new (i.e. ‘unseen’) rough surface, ![]() $\boldsymbol {x}$

$\boldsymbol {x}$

Here, ![]() $f$:

$f$: ![]() $\mathbb {R}^D\rightarrow \mathbb {R}$ represents different models obtained by solving a regression problem. We adopt the following approaches for creating the models: linear regression (LR), SVR utilizing kernel functions, MLP and CNN. Depending on the model, the inputs are either the statistical parameters listed in table 2 (LR, SVR, MLP) or the height maps bearing the roughness topography (CNN). We used 80 % of the total shuffled roughness data for training and validation with the remaining 20 % used for testing. A random sampling constituting 80 % of the development data is used for training, with each type of roughness comprising an equal fraction of this data. The data partitioning for training and testing is identical for all models.

$\mathbb {R}^D\rightarrow \mathbb {R}$ represents different models obtained by solving a regression problem. We adopt the following approaches for creating the models: linear regression (LR), SVR utilizing kernel functions, MLP and CNN. Depending on the model, the inputs are either the statistical parameters listed in table 2 (LR, SVR, MLP) or the height maps bearing the roughness topography (CNN). We used 80 % of the total shuffled roughness data for training and validation with the remaining 20 % used for testing. A random sampling constituting 80 % of the development data is used for training, with each type of roughness comprising an equal fraction of this data. The data partitioning for training and testing is identical for all models.

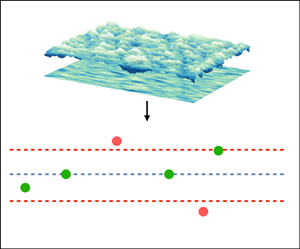

Figure 3 illustrates the process of the regression modelling. For the neural networks, Bayesian optimization (BO) was used for HP tuning due to the large parameter space. The LR and SVR models were tuned manually. Several measures are used to evaluate the model performance on the test data, including the mean absolute error

\begin{equation} {MAE} = \frac{1}{M}\sum_{i = 1}^M|\Delta U_{i}^ + - \Delta \tilde U_{i}^ + |,\end{equation}

\begin{equation} {MAE} = \frac{1}{M}\sum_{i = 1}^M|\Delta U_{i}^ + - \Delta \tilde U_{i}^ + |,\end{equation}and the mean absolute percentage error

\begin{equation} {MAPE} = \frac{1}{M}\sum_{i = 1}^M\left| \frac{\Delta U_{i}^ + - \Delta \tilde U_{i}^ + }{\Delta U_{i}^ + }\right|\times100.\end{equation}

\begin{equation} {MAPE} = \frac{1}{M}\sum_{i = 1}^M\left| \frac{\Delta U_{i}^ + - \Delta \tilde U_{i}^ + }{\Delta U_{i}^ + }\right|\times100.\end{equation}

Here, ![]() $M$ is the number of samples in the test data set,

$M$ is the number of samples in the test data set, ![]() $\Delta U_{i}^+$ is the reference drag value obtained from DNS and

$\Delta U_{i}^+$ is the reference drag value obtained from DNS and ![]() $\Delta \tilde U_{i}^+$ is the drag prediction obtained from the regression model (3.1). The above measures provide the absolute and relative accuracy of the regression model. The goodness-of-fit

$\Delta \tilde U_{i}^+$ is the drag prediction obtained from the regression model (3.1). The above measures provide the absolute and relative accuracy of the regression model. The goodness-of-fit ![]() $R^2$ measure is also reported.

$R^2$ measure is also reported.

Figure 3. Workflow of drag prediction. The four models are evaluated by MAE, MAPE and ![]() $R^2$. The model architectures of MLP and CNN are illustrated, wherein the hyperparameters (HPs) are determined using Bayesian optimization.

$R^2$. The model architectures of MLP and CNN are illustrated, wherein the hyperparameters (HPs) are determined using Bayesian optimization.

3.1 Linear regression

We begin with the LR model, which is the simplest of all models considered in this study. Such a model accounts for the linear correlation between the surface parameters and ![]() $\Delta U^+$, which were observed in figure 2. The model is defined as

$\Delta U^+$, which were observed in figure 2. The model is defined as

The weights ![]() $\boldsymbol {w}\in \mathbb {R}^{D\times 1}$ and the bias term

$\boldsymbol {w}\in \mathbb {R}^{D\times 1}$ and the bias term ![]() $b$ are found through a least-squares optimization of the model using the training data set

$b$ are found through a least-squares optimization of the model using the training data set

\begin{equation} E(\boldsymbol{w)} = \frac{1}{2}\sum_{i = 1}^N (\Delta U_{i}^ + - \Delta \tilde U_{i}^ + )^2. \end{equation}

\begin{equation} E(\boldsymbol{w)} = \frac{1}{2}\sum_{i = 1}^N (\Delta U_{i}^ + - \Delta \tilde U_{i}^ + )^2. \end{equation}

Figure 4(a) shows the drag prediction using LR on the test data samples. Using the ten primary surface-derived parameters (see table 2), the model has a MAPE of ![]() $=7.9\,\%$. Figure 4(b) shows the drag prediction obtained when using an extended number of input parameters that includes both primary and pair parameters. The extended-input model reduces the error by

$=7.9\,\%$. Figure 4(b) shows the drag prediction obtained when using an extended number of input parameters that includes both primary and pair parameters. The extended-input model reduces the error by ![]() $2\,\%$, along with a decrease in data scatter (improved

$2\,\%$, along with a decrease in data scatter (improved ![]() $R^2$). By including the pair parameters of roughness in the model input, we are incorporating nonlinear effects in the LR model. However, the choice of pair parameters in table 2 is arbitrary and we have chosen them to be similar to those of Reference Jouybari, Yuan, Brereton and MurilloJouybari et al. (2021).

$R^2$). By including the pair parameters of roughness in the model input, we are incorporating nonlinear effects in the LR model. However, the choice of pair parameters in table 2 is arbitrary and we have chosen them to be similar to those of Reference Jouybari, Yuan, Brereton and MurilloJouybari et al. (2021).

Figure 4. The ![]() $\Delta U^+$ predictions of LR versus those from DNS: model using (a) 10 primary statistics and (b) 19 statistics (i.e. including 9 pair-product parameters.)

$\Delta U^+$ predictions of LR versus those from DNS: model using (a) 10 primary statistics and (b) 19 statistics (i.e. including 9 pair-product parameters.)

3.2 Support vector regression

To increase the fidelity of the model, we now turn our attention to SVR, which allows for nonlinear regression through the use of kernel functions. Replacing the input vector ![]() $\boldsymbol {x}$ in (3.4) with a nonlinear mapping

$\boldsymbol {x}$ in (3.4) with a nonlinear mapping ![]() $\bar \phi (\boldsymbol {x})$, we will have

$\bar \phi (\boldsymbol {x})$, we will have

When using kernel functions, the weight vector ![]() $\boldsymbol {w}$ is given by a linear combination of the expansion basis

$\boldsymbol {w}$ is given by a linear combination of the expansion basis

\begin{equation} \boldsymbol{w} = \sum_{i = 1}^N a_i \bar \phi(\boldsymbol{x}_i).\end{equation}

\begin{equation} \boldsymbol{w} = \sum_{i = 1}^N a_i \bar \phi(\boldsymbol{x}_i).\end{equation}Inserting (3.7) into (3.6) results in

\begin{equation} \Delta \tilde U^ + (\boldsymbol{x}) = \sum_{i = 1}^N a_i \bar \phi(\boldsymbol{x}_i)^T\bar \phi(\boldsymbol{x}) + b = \sum_{i = 1}^N a_i k(\boldsymbol{x}_i, \boldsymbol{x}) + b,\end{equation}

\begin{equation} \Delta \tilde U^ + (\boldsymbol{x}) = \sum_{i = 1}^N a_i \bar \phi(\boldsymbol{x}_i)^T\bar \phi(\boldsymbol{x}) + b = \sum_{i = 1}^N a_i k(\boldsymbol{x}_i, \boldsymbol{x}) + b,\end{equation}

where ![]() ${k}(\boldsymbol {x}_{i}, \boldsymbol {x})$ is the kernel.

${k}(\boldsymbol {x}_{i}, \boldsymbol {x})$ is the kernel.

In the model above, the prediction requires ![]() $N$ function evaluations. Since,

$N$ function evaluations. Since, ![]() $N\in \mathcal {O}(10^3)$ is the number of training samples, kernel evaluations become inefficient for large datasets. Support vector regression sparsifies the kernel by including only support vectors in the expansion. To achieve this, instead of a least-squares minimization (3.5), one minimizes the

$N\in \mathcal {O}(10^3)$ is the number of training samples, kernel evaluations become inefficient for large datasets. Support vector regression sparsifies the kernel by including only support vectors in the expansion. To achieve this, instead of a least-squares minimization (3.5), one minimizes the ![]() $\epsilon$-sensitive cost function, defined as

$\epsilon$-sensitive cost function, defined as

This means that only errors larger than ![]() $\epsilon$ contribute to the cost function.

$\epsilon$ contribute to the cost function.

To determine the weights and the bias in (3.6), we minimize the regularized cost function

\begin{equation} E(\boldsymbol{w}) = C \sum_{j = 1}^N J(\Delta U^ + _{i}-\Delta \tilde U^ + _{i}) + \frac{1}{2}\|\boldsymbol{w}\|^2. \end{equation}

\begin{equation} E(\boldsymbol{w}) = C \sum_{j = 1}^N J(\Delta U^ + _{i}-\Delta \tilde U^ + _{i}) + \frac{1}{2}\|\boldsymbol{w}\|^2. \end{equation}

The second term is the regularization term that penalizes large weights, i.e. promoting flatness (i.e. achieving a smoother loss value). Note that, by convention, the regularization parameter ![]() $C$ appears in front of the first term. The key aspect of SVR is that, by using (3.9),

$C$ appears in front of the first term. The key aspect of SVR is that, by using (3.9), ![]() $a_j$ values in (3.8) are non-zero only for the training samples either lying on or above the boundary defined by

$a_j$ values in (3.8) are non-zero only for the training samples either lying on or above the boundary defined by ![]() $\epsilon$.

$\epsilon$.

The choice of kernel in this work for nonlinear mapping is the radial basis function (RBF)

where ![]() $\gamma =1/(N\sigma ^2)$ is the kernel coefficient and

$\gamma =1/(N\sigma ^2)$ is the kernel coefficient and ![]() $\sigma ^2$ is the variance of the training data. The input data

$\sigma ^2$ is the variance of the training data. The input data ![]() $\boldsymbol {x}$ are rescaled by the min–max normalization while the scaling of the target

$\boldsymbol {x}$ are rescaled by the min–max normalization while the scaling of the target ![]() $\Delta U^+$ is insignificant for prediction. The parameter

$\Delta U^+$ is insignificant for prediction. The parameter ![]() $C$ and kernel bandwidth

$C$ and kernel bandwidth ![]() $\varepsilon$ were tuned and the best performance was obtained for values of

$\varepsilon$ were tuned and the best performance was obtained for values of ![]() $C=0.1$ and

$C=0.1$ and ![]() $\epsilon =0.01$. We have presented a simplified formulation of the optimization problem associated with SVR here. We refer to Reference Cortes and VapnikCortes & Vapnik (1995) and Reference Smola and BernhardSmola & Bernhard (2002) for the complete formulation of the kernel in the optimization process, including the use of slack variables.

$\epsilon =0.01$. We have presented a simplified formulation of the optimization problem associated with SVR here. We refer to Reference Cortes and VapnikCortes & Vapnik (1995) and Reference Smola and BernhardSmola & Bernhard (2002) for the complete formulation of the kernel in the optimization process, including the use of slack variables.

3.3 Neural networks

While SVR has far greater capacity and fidelity than LR – due to mapping the input space onto a higher-dimensional space – it still requires the user to choose an appropriate expansion basis ![]() $\bar \phi (\boldsymbol {x})$. Neural networks can learn

$\bar \phi (\boldsymbol {x})$. Neural networks can learn ![]() $\bar \phi$ from a broad class of functions and form a composition of such functions using hidden layers. Neural networks often require more training data than SVR to generalize well and constitute a non-convex optimization problem. To explore neural networks for drag prediction, we consider MLP and CNN.

$\bar \phi$ from a broad class of functions and form a composition of such functions using hidden layers. Neural networks often require more training data than SVR to generalize well and constitute a non-convex optimization problem. To explore neural networks for drag prediction, we consider MLP and CNN.

3.3.1 Multi-layer perceptron

The MLP model is composed of multiple layers of neurons, where the neurons of two adjacent layers are connected by weights. The inputs are either the 10 primary statistics or the extended set of 19 statistics listed in table 2. The output, ![]() $\Delta \tilde U^+_{i}$, is composed from the nonlinear transfer functions of each layer. This is what enables an MLP to account for high degrees of nonlinearity. The objective is to identify the weights of a network such that the following loss function is minimized:

$\Delta \tilde U^+_{i}$, is composed from the nonlinear transfer functions of each layer. This is what enables an MLP to account for high degrees of nonlinearity. The objective is to identify the weights of a network such that the following loss function is minimized:

\begin{equation} E(\boldsymbol{w}) = \frac{1}{2}\sum_{i = 1}^N \|\Delta U_{i}^ + - \Delta \tilde U_{i}^ + \|^2 + \frac{\lambda}{2}\boldsymbol{w}^T\boldsymbol{w}.\end{equation}

\begin{equation} E(\boldsymbol{w}) = \frac{1}{2}\sum_{i = 1}^N \|\Delta U_{i}^ + - \Delta \tilde U_{i}^ + \|^2 + \frac{\lambda}{2}\boldsymbol{w}^T\boldsymbol{w}.\end{equation}

The loss function is composed of a sum of squared errors term and a regularization term. The weight vector ![]() $\boldsymbol {w}$ contains values between the neurons of adjacent layers of the network.

$\boldsymbol {w}$ contains values between the neurons of adjacent layers of the network.

We performed BO to determine the HPs of the MLP, including the number of layers, the number of neurons, the learning rate, the regularization term ![]() $\lambda$, the activation function and the initialization of the weights. The Gaussian process acts as a surrogate model to estimate the model performance and the HPs are updated after each evaluation of the loss function. The acquisition function directs the next search location in the given range of parameter space to find the optimal set of HPs. At each iteration, these HPs are evaluated by training the neural network, where the number of evaluations depends on the input dimension.

$\lambda$, the activation function and the initialization of the weights. The Gaussian process acts as a surrogate model to estimate the model performance and the HPs are updated after each evaluation of the loss function. The acquisition function directs the next search location in the given range of parameter space to find the optimal set of HPs. At each iteration, these HPs are evaluated by training the neural network, where the number of evaluations depends on the input dimension.

Using BO, we developed two architectures. The first one maintains a fixed number of layers with an optimized number of neurons. The second architecture has an optimized number of layers but a fixed number of neurons. Given that each layer learns different information from the previous input, the number of neurons or filters, in theory, should differ at each layer. After conducting a set of comparative trials for both architectures, we adopted the first architecture since it exhibited a slightly lower relative error. The final HPs for the two MLP models are displayed in table 3. Note that, to ensure consistent scaling, the inputs were rescaled by their respective standard deviations.

Table 3. The Bayesian-optimized HPs in ![]() $MLP_{10}$,

$MLP_{10}$, ![]() $MLP_{19}$ and CNN that are used for prediction in this work. ReLU, rectified linear unit.

$MLP_{19}$ and CNN that are used for prediction in this work. ReLU, rectified linear unit.

Figure 5(a) shows the training and validation losses for the MLP as a function of the number of epochs (i.e. iterations in the BO optimization process). The rapid decay of the training curve to a plateau after 100 epochs indicates a fast convergence. The validation curve – which represents the loss on a separate dataset not used for training – follows a similar initial decay followed by a plateau. This indicates that the model generalizes to unseen data relatively well.

Figure 5. Loss curves of training and validation in the Bayesian-optimized (a) ![]() $MLP_{10}$ and (b) CNN with leaning rate reschedule. Early stopping was employed during the neural network within the BO process to mitigate overfitting and expedite training.

$MLP_{10}$ and (b) CNN with leaning rate reschedule. Early stopping was employed during the neural network within the BO process to mitigate overfitting and expedite training.

3.3.2 Convolutional neural network

This regression model is a network with convolutional layers, i.e. a set of filters (or kernels) that are convoluted with the layer's input data. One key feature is that it has sparse connectivity between the neurons, allowing for the processing of very high-dimensional input data. In our case, the input is a two-dimensional function representing the height of the surface roughness. The objective of the CNN is to identify weights to minimize the loss function (3.12). We followed the same procedure used for the MLP to determine the architecture, i.e. the HPs were obtained using Bayesian optimization. The number of blocks, filters, kernel size, learning rate, activation function and weight initialization of the CNN are reported in table 3.

Figure 5(b) shows the training and validation losses for the CNN model. While the loss function of the training demonstrates a fast convergence, the corresponding validation curve shows large oscillations for the first 100 epochs, indicating overfitting of the unseen data. To improve CNN convergence, we implemented a learning rate schedule that reduces the rate by 0.1 every 100 epochs starting from epoch 100. Note that many other architectures are potentially more suitable for drag prediction. Our choice is, however, sufficient for comparative purposes.

3.3.3 Sensitivity analysis of training size

The size of training data is critical for truly exploiting the advantages of neural networks. While our dataset with over 1000 samples is, to the best of our knowledge, the largest such collection for rough-wall turbulence, it is still relatively small compared with what is commonly used for training neural networks in other applications. Therefore, we conducted a sensitivity analysis of the sample size for the training process. To ensure an even representation across different surface categories in parameter space, training samples are uniformly downscaled by the same proportion, as illustrated in figure 6(a). The depth of the new neural networks (NNs) trained using varying data fractions was kept the same as the initial MLP and CNN architectures, while the number of network units were optimized using BO.

Figure 6. (a) The sample coverage in ![]() $\Delta U^+-k^+_{rms}$ space at the fraction of 30 %. The reduced training samples consistently cover the full parameter space. (b) The MAPE of inference obtained from

$\Delta U^+-k^+_{rms}$ space at the fraction of 30 %. The reduced training samples consistently cover the full parameter space. (b) The MAPE of inference obtained from ![]() $LR_{10}$,

$LR_{10}$, ![]() $MLP_{10}$,

$MLP_{10}$, ![]() $SVR_{10}$ and CNN at different sample fractions. (c) The variation in the number of trainable parameters in each model at different sample fractions.

$SVR_{10}$ and CNN at different sample fractions. (c) The variation in the number of trainable parameters in each model at different sample fractions.

Figure 6(b) presents the relative prediction errors (MAPE) of identical test data using models trained with varying data fractions. The SVR achieves the lowest error and exhibits high robustness, as its predictions remain consistent for all training data fractions. The prediction by linear regression is also not affected by the data size but consistently yields the highest error among the models compared. As expected, the performance of NNs depends on the size of the training data. The MLP model converges with a 60 % fraction of the entire data, while the CNN model does not exhibit a clear convergence trend. Despite that, the best CNN, trained using the full dataset, achieves a low error of 4.6 %, which is comparable to SVR. Therefore, the CNN model has not yet achieved adequate generalizability to be employed for unseen data.

Figure 6(c) shows the variation of the number of trainable parameters (![]() $N_{train}$) for the models with different training data fractions. Unlike LR and SVR, where

$N_{train}$) for the models with different training data fractions. Unlike LR and SVR, where ![]() $N_{train}$ is fixed, the NN models exhibit a non-monotonic trend. The MLP seems to stabilize around an

$N_{train}$ is fixed, the NN models exhibit a non-monotonic trend. The MLP seems to stabilize around an ![]() $N_{train}$ of the order of

$N_{train}$ of the order of ![]() $10^5$ after reaching a fraction of 70 %, with roughly an order of magnitude fewer trainable parameters on average than the CNN. This value is an approximation of the optimal model capacity for learning the underlying mapping. In contrast, CNN models experience a significant drop by an order of magnitude at a fraction of 90 %, followed by an increase. This behaviour indicates an overfitting for CNN with the current volume of data, as was reflected by the loss in figure 5(b). The model evaluations in § 4 use predictions from models which were trained on the entire dataset.

$10^5$ after reaching a fraction of 70 %, with roughly an order of magnitude fewer trainable parameters on average than the CNN. This value is an approximation of the optimal model capacity for learning the underlying mapping. In contrast, CNN models experience a significant drop by an order of magnitude at a fraction of 90 %, followed by an increase. This behaviour indicates an overfitting for CNN with the current volume of data, as was reflected by the loss in figure 5(b). The model evaluations in § 4 use predictions from models which were trained on the entire dataset.

4. Drag prediction performance

4.1 Comparison of regression models

We now compare the performance of the four types of regression models. We trained LR, SVR and MLP using two sets of inputs: ten primary statistics and 19 pair statistics, as listed in table 2. We refer to these models as ![]() $LR_{10}$,

$LR_{10}$, ![]() $LR_{19}$, etc. The absolute error (MAE in (3.2)) and relative error (MAPE in (3.3)) obtained from the seven evaluated models trained on the entire roughness dataset are shown in figure 7(a). The LR trained using ten primary statistics displays the largest error in predicting

$LR_{19}$, etc. The absolute error (MAE in (3.2)) and relative error (MAPE in (3.3)) obtained from the seven evaluated models trained on the entire roughness dataset are shown in figure 7(a). The LR trained using ten primary statistics displays the largest error in predicting ![]() $\Delta U^+$. As previously observed in figure 4, by incorporating the nine additional pair parameters, some degree of nonlinearity becomes incorporated into the LR model and its error becomes reduced.

$\Delta U^+$. As previously observed in figure 4, by incorporating the nine additional pair parameters, some degree of nonlinearity becomes incorporated into the LR model and its error becomes reduced.

Figure 7. (a) Values of MAPE (%) (blue) and MAE (red) obtained from all models trained by the hybrid data. Left and right symbols correspond to 10 and 19 input parameters. All maximum errors correspond to negatively skewed surfaces (type: ![]() $Sk_-$). (b) Applying the trained model by the full dataset on each type of surface thus the corresponding mean errors. The data are slightly displaced on the horizontal axis for the same type of roughness to increase clarity.

$Sk_-$). (b) Applying the trained model by the full dataset on each type of surface thus the corresponding mean errors. The data are slightly displaced on the horizontal axis for the same type of roughness to increase clarity.

The SVR emerges as the optimal predictive model with an error of 4.4 %, the smallest of all models. The use of the extended input (![]() $SVR_{19}$) does not improve prediction compared with the

$SVR_{19}$) does not improve prediction compared with the ![]() $SVR_{10}$ model. This suggests that the chosen kernel function effectively captures the nonlinearity embedded within the input space. Moving on to the performance of MLP, we make two observations. First,

$SVR_{10}$ model. This suggests that the chosen kernel function effectively captures the nonlinearity embedded within the input space. Moving on to the performance of MLP, we make two observations. First, ![]() $MLP_{19}$ yields a MAE of around 5 %, which is slightly larger than the SVR model. Second, the input size has a small influence on the prediction performance, with

$MLP_{19}$ yields a MAE of around 5 %, which is slightly larger than the SVR model. Second, the input size has a small influence on the prediction performance, with ![]() $MLP_{19}$ performing slightly better than

$MLP_{19}$ performing slightly better than ![]() $MLP_{10}$. Although the network has a near-optimal performance, these observations imply that it has not fully captured the nonlinearity in the mapping from the inputs to the output. Presumably, a different network and/or larger database are required to reach the same level of performance as the SVR model.

$MLP_{10}$. Although the network has a near-optimal performance, these observations imply that it has not fully captured the nonlinearity in the mapping from the inputs to the output. Presumably, a different network and/or larger database are required to reach the same level of performance as the SVR model.

Finally, we observe that the best-performing CNN achieves comparable results to SVR. However, it requires significantly longer training time due to its vast number of trainable parameters (![]() $O(10^5)$). As mentioned in the previous section, to develop a generalizable CNN model a larger dataset is required. The local spatial topographical information in this specific case does not offer discernible advantage for solely predicting a single scalar value (

$O(10^5)$). As mentioned in the previous section, to develop a generalizable CNN model a larger dataset is required. The local spatial topographical information in this specific case does not offer discernible advantage for solely predicting a single scalar value (![]() $\Delta U^+$). However, CNN is inherently capable of learning hierarchical representations from grid-like data, which could potentially be advantageous when considering patchy, inhomogeneously distributed roughness where a statistical parameterization becomes non-trivial.

$\Delta U^+$). However, CNN is inherently capable of learning hierarchical representations from grid-like data, which could potentially be advantageous when considering patchy, inhomogeneously distributed roughness where a statistical parameterization becomes non-trivial.

4.2 Model performance for different surface categories

In addition to evaluating the prediction accuracy using test data from the full roughness database, further insight into the models is gained by assessing how accurately they predict different roughness types. As shown in figure 7(b), all predictive models demonstrate a comparable level of accuracy in predicting the Gaussian surfaces, i.e. ![]() $Sk_0$,

$Sk_0$, ![]() $\lambda _x$ and

$\lambda _x$ and ![]() $\lambda _z$. The average error of all models, including

$\lambda _z$. The average error of all models, including ![]() $LR_{10}$, is around 2 %–3 %. The largest errors are found for the negatively skewed roughnesses, which have a pit-dominated topography. Note that MAPE is normalized with

$LR_{10}$, is around 2 %–3 %. The largest errors are found for the negatively skewed roughnesses, which have a pit-dominated topography. Note that MAPE is normalized with ![]() $\Delta U^+$, resulting in large errors for small values of

$\Delta U^+$, resulting in large errors for small values of ![]() $\Delta U^+$, which is the case for

$\Delta U^+$, which is the case for ![]() $Sk_ - $. For example, applying

$Sk_ - $. For example, applying ![]() $LR_{10}$ to the

$LR_{10}$ to the ![]() $Sk_ - $ test data gives

$Sk_ - $ test data gives ![]() $\langle \Delta U^+ \rangle =0.60$ and

$\langle \Delta U^+ \rangle =0.60$ and ![]() $\langle \Delta \tilde U^+ \rangle =0.64$, resulting in a large

$\langle \Delta \tilde U^+ \rangle =0.64$, resulting in a large ![]() ${MAPE} = 25$ %. This is despite the difference between the DNS and predicted drag being relatively small. Considering that MAPE is a sensitive measure for small target values, we can confirm that the SVR models notably outperform the NNs, where the latter have an error of

${MAPE} = 25$ %. This is despite the difference between the DNS and predicted drag being relatively small. Considering that MAPE is a sensitive measure for small target values, we can confirm that the SVR models notably outperform the NNs, where the latter have an error of ![]() ${\sim }11\,\%$.

${\sim }11\,\%$.

In summary, we observe that SVR is consistently the most robust (figure 6) and efficient (figure 7) model in predicting the additional drag induced by homogeneously distributed irregular roughness. While the NN models have comparable performance, they lack robustness when trained on our dataset consisting of ![]() $\mathcal {O}(10^3)$ samples.

$\mathcal {O}(10^3)$ samples.

4.3 Key features for SVR prediction

We observed that SVR model's prediction performance did not benefit from extending the input with the pair parameters. In contrast, we have observed that the nonlinearity introduced by using the extended input improves the prediction performance of both the LR and MLP models, which indicates that these models are unable to fully learn the nonlinearity inherent in the data. In the following section, we attempt to provide insight into the capabilities of SVR.

4.3.1 Choice and interpretation of kernels

The SVR uses a kernel to map a low-dimensional input vector to a high-dimensional space where the relationship between the inputs and the output (e.g. ![]() $\Delta U^+$) can be mapped linearly. This feature allows SVR to implicitly take into account the pair parameters in table 2, even though the actual input,

$\Delta U^+$) can be mapped linearly. This feature allows SVR to implicitly take into account the pair parameters in table 2, even though the actual input, ![]() $\boldsymbol {x}$, used in the model (3.6) only contains the primary parameters. In Appendix B, we present a simple example using the kernel

$\boldsymbol {x}$, used in the model (3.6) only contains the primary parameters. In Appendix B, we present a simple example using the kernel

to illustrate how a nonlinear relation between primary surface statistics and ![]() $\Delta U^+$ is transformed to a linear relation between an extended-input vector and

$\Delta U^+$ is transformed to a linear relation between an extended-input vector and ![]() $\Delta U^+$.

$\Delta U^+$.

Leveraging kernels removes the arbitrariness that exists when manually selecting different combinations of the primary statistics. In fact, by constructing the kernel using radial basis functions, not only the pair parameters but an infinite number of products of the primary statistics are taken into account. To see how this works, consider the RBF kernel of (3.11) and assume that ![]() $N=1,D=1$ and

$N=1,D=1$ and ![]() $\gamma =1$, i.e.

$\gamma =1$, i.e.

\begin{equation} k(x_1,x) = {\rm e}^{-(x_1-x)^2} = {\rm e}^{ - x_1^2-x^2}\sum_{j = 0}^\infty (x_1x)^j. \end{equation}

\begin{equation} k(x_1,x) = {\rm e}^{-(x_1-x)^2} = {\rm e}^{ - x_1^2-x^2}\sum_{j = 0}^\infty (x_1x)^j. \end{equation}

We observe that the last term is an infinite sum containing products of ![]() $x$. More generally, the Gaussian kernel can be interpreted as a measure of similarity between the input vector of a new surface,

$x$. More generally, the Gaussian kernel can be interpreted as a measure of similarity between the input vector of a new surface, ![]() $\boldsymbol {x}$, and those of each surface in the training data set,

$\boldsymbol {x}$, and those of each surface in the training data set, ![]() $\boldsymbol {x}_i$. If

$\boldsymbol {x}_i$. If ![]() $\boldsymbol {x}$ is close to

$\boldsymbol {x}$ is close to ![]() $\boldsymbol {x}_i$ (in a Euclidean sense), then the corresponding term in the expansion (3.6) is large.

$\boldsymbol {x}_i$ (in a Euclidean sense), then the corresponding term in the expansion (3.6) is large.

4.3.2 Minimal SVR input space

We now move on to identify the smallest set of primary statistics that is needed in the input vector ![]() $\boldsymbol {x}$ for accurate drag prediction using SVR. There is no need to consider products of primary parameters, since SVR implicitly takes into account all such nonlinearities through the RBF kernel. Previous works (see e.g. Reference Chung, Nicholas, Michael and KarenChung et al. 2021) have highlighted that measures of height, effective slope and skewness are necessary to capture the drag increase from homogeneously distributed roughness. Indeed, it is likely that inputs in

$\boldsymbol {x}$ for accurate drag prediction using SVR. There is no need to consider products of primary parameters, since SVR implicitly takes into account all such nonlinearities through the RBF kernel. Previous works (see e.g. Reference Chung, Nicholas, Michael and KarenChung et al. 2021) have highlighted that measures of height, effective slope and skewness are necessary to capture the drag increase from homogeneously distributed roughness. Indeed, it is likely that inputs in ![]() $SVR_{10}$ and

$SVR_{10}$ and ![]() $SVR_{19}$ contain redundancy since, for example, the first three parameters (

$SVR_{19}$ contain redundancy since, for example, the first three parameters (![]() $k^+_c$,

$k^+_c$, ![]() $k^+_{rms}$,

$k^+_{rms}$, ![]() $R^+_a$) all represent the roughness height. The high correlations between these features, as seen in the triangle area demarcated in figure 2, further support this notion.

$R^+_a$) all represent the roughness height. The high correlations between these features, as seen in the triangle area demarcated in figure 2, further support this notion.

We first focus on the case where the input is ![]() $\boldsymbol {x}=(k^+_{rms}, ES_x, ES_z)$ and comprises only the vectors of the three parameters. A new SVR model,

$\boldsymbol {x}=(k^+_{rms}, ES_x, ES_z)$ and comprises only the vectors of the three parameters. A new SVR model, ![]() $SVR_3$, is trained on all types of roughness using these three parameters, followed by inference on the same testing data employed earlier. Figure 8(a) shows the diagonal spread of

$SVR_3$, is trained on all types of roughness using these three parameters, followed by inference on the same testing data employed earlier. Figure 8(a) shows the diagonal spread of ![]() $\Delta U^+$ (obtained from DNS) and

$\Delta U^+$ (obtained from DNS) and ![]() $\Delta \tilde U^+$ (predicted) for each category. A reasonably good agreement for Gaussian surfaces (

$\Delta \tilde U^+$ (predicted) for each category. A reasonably good agreement for Gaussian surfaces (![]() $Sk_0$,

$Sk_0$, ![]() $\lambda _x$, and

$\lambda _x$, and ![]() $\lambda _z$) is observed with a mean error of

$\lambda _z$) is observed with a mean error of ![]() ${\sim }4\,\%$. However, for

${\sim }4\,\%$. However, for ![]() $Sk_+$ surfaces, the prediction exhibits notable deviations from the DNS data, which cause the mean error to become

$Sk_+$ surfaces, the prediction exhibits notable deviations from the DNS data, which cause the mean error to become ![]() ${\sim }12\,\%$, in contrast to the significantly lower errors of

${\sim }12\,\%$, in contrast to the significantly lower errors of ![]() ${\sim }2.7\,\%$ obtained from

${\sim }2.7\,\%$ obtained from ![]() $SVR_{10}$ and

$SVR_{10}$ and ![]() $SVR_{19}$. Such outliers are also observed for

$SVR_{19}$. Such outliers are also observed for ![]() $Sk_-$. Furthermore, we tried to replace

$Sk_-$. Furthermore, we tried to replace ![]() $k^+_{rms}$ with

$k^+_{rms}$ with ![]() $k^+_c$ and

$k^+_c$ and ![]() $R^+_a$ for SVR training. While using the crest height,

$R^+_a$ for SVR training. While using the crest height, ![]() $k^+_c$, still gave a model with reasonable prediction accuracy, it was still inferior compared with models which used

$k^+_c$, still gave a model with reasonable prediction accuracy, it was still inferior compared with models which used ![]() $k^+_{rms}$ and

$k^+_{rms}$ and ![]() $R^+_a$. This finding suggests that

$R^+_a$. This finding suggests that ![]() $k^+_{rms}$ and

$k^+_{rms}$ and ![]() $R^+_a$ provide a more comprehensive quantification of the surface terrain than

$R^+_a$ provide a more comprehensive quantification of the surface terrain than ![]() $k^+_c$.

$k^+_c$.

Figure 8. The scatter distribution of ![]() $\Delta U^+$ vs

$\Delta U^+$ vs ![]() $\Delta \tilde U^+$ obtained from the new SVR. The model is trained with the reduced input space involving (a)

$\Delta \tilde U^+$ obtained from the new SVR. The model is trained with the reduced input space involving (a) ![]() $k^+_{rms}$,

$k^+_{rms}$, ![]() $ES_x$,

$ES_x$, ![]() $ES_z$ (b) and additional

$ES_z$ (b) and additional ![]() $Skw$. The error reduction is observed for

$Skw$. The error reduction is observed for ![]() $Sk_-$- and

$Sk_-$- and ![]() $Sk_+$-roughness, marked in bold.

$Sk_+$-roughness, marked in bold.